Recommended practices for implementing Elastic Storage Server and IBM Spectrum Scale use cases

This chapter provides general guidelines for using Elastic Storage Server (ESS) and IBM Spectrum Scale.

This chapter includes the following sections:

3.1 Introduction

A good understanding of the use cases and planning ahead help you avoid problems along the way with any system, and ESS and Spectrum Scale are no exception. IBM provides a large amount of relevant documentation on the web. It is good practice to spend enough time with that documentation, and to understand clearly what the system is going to be used for.

There are many attributes of IBM Spectrum Scale file systems to consider when you develop a solution. Consider the issues for a typical genomics system:

•Number of file systems: Multiple file systems are often requested, but each additional file system increases administrative overhead. Make sure that you actually need more than one IBM Spectrum Scale file system.

•Striping: IBM Spectrum Scale stripes data across all available resources. When you have multiple file systems, you might want to isolate resources. However, for most workloads it is preferable for IBM Spectrum Scale to stripe across all available resources.

To balance these considerations while still keeping the number of file systems manageable, Spectrum Scale provides the concept of a fileset within a file system. This feature is explained in this chapter.

3.2 Planning

For guidelines on basic data center planning, see Planning for the system at IBM Knowledge Center.

When you install an ESS, the first place to look is the Elastic Storage Server (ESS) product documentation at IBM Knowledge Center. It provides not only up-to-date documentation, but also bulletins and alerts that are valuable when you are running ESS in your environment.

Another excellent resource is the IBM Spectrum Scale Frequently Asked Questions and Answers (FAQ). This FAQ is generic for Spectrum Scale, not specific to ESS. However, it is a valuable resource for the common questions about the product.

Spectrum Scale uses a file system object that is called a fileset. A fileset is a subtree of a file system namespace that in many respects behaves like an independent file system. Filesets provide a means of partitioning the file system to allow administrative operations at a finer granularity than the entire file system:

•Filesets can be used to define quotas on both data blocks and inodes.

•The owning fileset is an attribute of each file. This attribute can be specified in a policy to control initial data placement, migration, and replication of the file's data.

•Fileset snapshots can be created instead of creating a snapshot of an entire file system.
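Because a fileset quota can limit data blocks and inodes separately, either limit can be reached first: a fileset with plenty of free space can still refuse new files once its inode limit is hit. The following Python sketch is a toy model of that behavior, not the Spectrum Scale quota implementation. The FilesetQuota class and its fields are hypothetical, only hard limits are modeled (no soft limits or grace periods), and KiB is an assumed unit.

```python
from dataclasses import dataclass


@dataclass
class FilesetQuota:
    """Hypothetical model of one fileset's hard quota on data blocks and inodes."""
    name: str
    block_limit_kib: int      # hard limit on data (assumed unit: KiB)
    inode_limit: int          # hard limit on the number of files and directories
    blocks_used_kib: int = 0
    inodes_used: int = 0

    def can_create(self, size_kib: int) -> bool:
        # A new file consumes one inode plus its data blocks; both limits must hold.
        return (self.inodes_used + 1 <= self.inode_limit
                and self.blocks_used_kib + size_kib <= self.block_limit_kib)

    def create(self, size_kib: int) -> None:
        if not self.can_create(size_kib):
            raise OSError(f"quota exceeded in fileset {self.name}")
        self.inodes_used += 1
        self.blocks_used_kib += size_kib


# A fileset with plenty of space but few inodes reaches its inode limit first.
scratch = FilesetQuota("scratch", block_limit_kib=1_000_000, inode_limit=2)
scratch.create(10)
scratch.create(10)
print(scratch.can_create(10))  # False: inode limit reached, blocks still free
```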

Spectrum Scale provides a powerful policy engine that can be used to process the entire file system in an efficient way. It can use any of the following attribute sets:

– POSIX attributes (UID, GID, filename, atime, and so on)

– Spectrum Scale attributes (fileset name, fileheat, and so on)

– Any custom attribute that the file system administrator defines through extended attributes.

All these attributes give the storage administrator an extremely flexible policy engine that is built into the file system. Keep this capability in mind when you plan for file systems. For more information, see Information lifecycle management for IBM Spectrum Scale at IBM Knowledge Center.
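To illustrate how a policy can combine the three attribute sets listed above, the following Python sketch mimics only the selection step of a hypothetical rule that picks cold files in one fileset for migration. It is not the Spectrum Scale policy language, which uses its own SQL-like rule syntax and is run with the mmapplypolicy command. The FileRecord type, the select_for_migration function, the user.keep extended attribute, and the paths are all illustrative assumptions.

```python
import time
from dataclasses import dataclass, field


@dataclass
class FileRecord:
    path: str
    uid: int                  # POSIX attribute
    atime: float              # POSIX access time, seconds since the epoch
    fileset: str              # Spectrum Scale attribute: owning fileset
    xattrs: dict = field(default_factory=dict)  # administrator-defined extended attributes


def select_for_migration(files, fileset, min_idle_days, now=None):
    """Return paths a hypothetical 'migrate cold data' rule would select:
    files in `fileset`, not accessed for `min_idle_days`, and not tagged
    with the hypothetical extended attribute user.keep=yes."""
    now = time.time() if now is None else now
    cutoff = now - min_idle_days * 86400
    return [
        f.path
        for f in files
        if f.fileset == fileset
        and f.atime <= cutoff
        and f.xattrs.get("user.keep") != "yes"
    ]


now = 1_700_000_000.0
files = [
    FileRecord("/gpfs/fs1/genomics/run1.bam", 1001, now - 90 * 86400, "genomics"),
    FileRecord("/gpfs/fs1/genomics/run2.bam", 1001, now - 1 * 86400, "genomics"),
    FileRecord("/gpfs/fs1/genomics/ref.fa", 1002, now - 400 * 86400, "genomics",
               {"user.keep": "yes"}),
    FileRecord("/gpfs/fs1/home/notes.txt", 1003, now - 90 * 86400, "home"),
]
print(select_for_migration(files, "genomics", 30, now=now))
# ['/gpfs/fs1/genomics/run1.bam']
```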

3.3 Guidelines

ESS as a solution based on IBM Spectrum Scale has multiple ways to access and interact with the data. The same best practices for Spectrum Scale apply to ESS:

•Pre-planning. Involve IBM technical presales in the discussion.

•Do not mix different types of storage in the same storage pool.

•Understand the workload, including the application's logical block size, I/O patterns, and so on.

•Plan. When you purchase your first ESS, you receive up to 100 free hours of an onsite IBM Lab Services consultant for planning and installation.

•Unless you have hard evidence not to, use the default settings.

•Network is key. For more information, see 3.4, “Networking”.

3.4 Networking

Networking is one of the most important factors when you use Spectrum Scale in general, and ESS, as part of the Spectrum Scale family, is no exception. You can never do enough network planning. Spectrum Scale runs over the network, so the performance that you get from the network directly determines the performance that you see from the file system.

On ESS, two network technologies are available to connect with the native Spectrum Scale protocol, called Network Shared Disk (NSD):

•Ethernet

•Remote Direct Memory Access (RDMA)

As a rule, regardless of the technology that is used, do not share the daemon (data) network with other traffic. This precaution is particularly important in Ethernet networks, because RDMA networks are typically designed to be flatter than Ethernet networks: they have fewer routers and switches, because they usually connect devices to a single switch instead of to separate switches.

IBM Spectrum Scale, including ESS as part of the same family, uses two networks: admin and daemon. Both can be defined on the same network. However, in some cases it might be beneficial to separate them, for example, to isolate the daemon network from other workloads.

3.4.1 Admin network

The Spectrum Scale admin network has these characteristics:

•Used for the execution of administrative commands.

•Requires TCP/IP.

•Can be the same network as the Spectrum Scale daemon network, or a different one.

•Establishes the reliability of Spectrum Scale.

3.4.2 Daemon network

The Spectrum Scale daemon network has these characteristics:

•Is used for communication between the mmfsd daemons on all nodes.

•Requires TCP/IP.

•In addition to TCP/IP, Spectrum Scale can be optionally configured to use RDMA for daemon communication. TCP/IP is still required if RDMA is enabled for daemon communication.

•Establishes the performance of Spectrum Scale: the bandwidth, latency, and reliability of the daemon network determine the performance that the file system can deliver.

Both networks must be reliable for the cluster to be reliable. If TCP/IP packets are lost, if latencies between the systems vary significantly, or if the network shows other undesirable anomalies, the Spectrum Scale cluster and its file systems suffer the consequences.
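Packet loss and latency variability can be quantified from simple probe results between two nodes. The following Python sketch is one way to summarize such results; it is not an IBM tool. The function name, its inputs, and the thresholds (any loss, or a coefficient of variation of round-trip time above 0.5) are hypothetical placeholders, not IBM-published values; set them from your own baseline.

```python
import statistics


def network_health(sent, received, rtts_ms, max_loss_pct=0.0, max_cv=0.5):
    """Summarize probe results between two nodes and list detected problems.

    sent/received: probe packet counts; rtts_ms: round-trip times of the replies.
    Thresholds are hypothetical placeholders, not vendor recommendations."""
    if sent <= 0 or not rtts_ms:
        raise ValueError("need at least one probe sent and one RTT sample")
    loss_pct = 100.0 * (sent - received) / sent
    mean_rtt = statistics.mean(rtts_ms)
    # Coefficient of variation: standard deviation relative to the mean latency.
    cv = statistics.pstdev(rtts_ms) / mean_rtt
    problems = []
    if loss_pct > max_loss_pct:
        problems.append(f"packet loss {loss_pct:.1f}%")
    if cv > max_cv:
        problems.append(f"variable latency (CV={cv:.2f})")
    return problems


print(network_health(100, 100, [0.10, 0.11, 0.10, 0.12]))  # []
print(network_health(100, 98, [0.1, 0.1, 2.0, 0.1]))
```

A relative measure such as the coefficient of variation is used so that the same threshold works for both low-latency RDMA links and slower Ethernet links.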

When you design the network, use a network that is as fast as the daemon network. If you are using RDMA, you can use IP over InfiniBand (IPoIB) for the IP part of the daemon network.

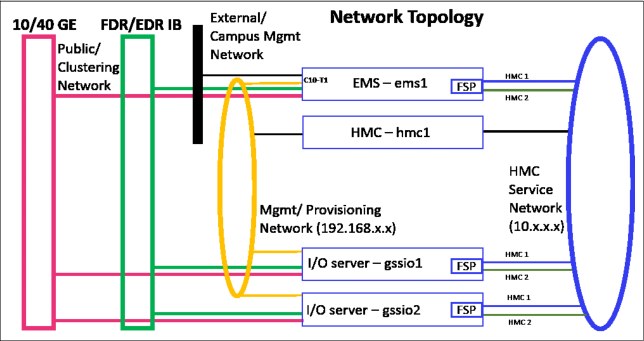

The admin network can use the same fast and reliable network as the daemon network. However, on ESS-only clusters, the system is delivered with at least one high-speed network and one management network as shown in the network diagram in Figure 3-1.

Figure 3-1 Second-generation ESS network diagram

The management network should be used in an ESS cluster as the admin network. The high-speed network, which is shown as 10/40 GE and FDR/EDR IB, should be used as the daemon network. For details about the ESS network requirements, see ESS networking considerations at IBM Knowledge Center.

In an existing cluster, use the mmlscluster command to see which networks are used for admin traffic and which for daemon traffic. Example 3-1 shows the output of the mmlscluster command when the two networks use different interfaces.

Example 3-1 mmlscluster with admin and daemon networks on different interfaces

# mmlscluster

GPFS cluster information

========================

GPFS cluster name: ITSO.stg.forum.fi.ibm.com

GPFS cluster id: 8075176174583977671

GPFS UID domain: ITSO.stg.forum.fi.ibm.com

Remote shell command: /usr/bin/ssh

Remote file copy command: /usr/bin/scp

Repository type: CCR

Node Daemon node name IP address Admin node name Designation

---------------------------------------------------------------------------------------------------------------------------------------

1 specscale01-hs 10.10.16.15 specscale01 quorum-manager-perfmon

2 specscale02-hs 10.10.16.16 specscale02 quorum-manager-perfmon

3 specscale03-hs 10.10.16.17 specscale03 quorum-manager-perfmon


Note: This example shows only the network output of mmlscluster and it is valid for all IBM Spectrum Scale products, including the ESS.

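When a cluster has many nodes, reading the node table by eye becomes tedious. The following Python sketch parses a node table laid out like the one in Example 3-1 and reports, per node, whether the daemon and admin node names differ. It assumes that exact column order (node number, daemon node name, IP address, admin node name, optional designation); the output format might differ between releases, so treat the parser as illustrative. Different host names usually, but not necessarily, mean different interfaces, so resolve the names to confirm.

```python
def admin_daemon_split(mmlscluster_output: str):
    """Map node number -> (daemon_name, admin_name, names_differ) from the node
    table of mmlscluster output, assuming the column layout of Example 3-1."""
    nodes = {}
    in_table = False
    for line in mmlscluster_output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[:3] == ["Node", "Daemon", "node"]:
            in_table = True          # header row found; node rows follow
            continue
        # Node rows start with a number; the designation column may be empty.
        if in_table and fields[0].isdigit() and len(fields) >= 4:
            daemon, admin = fields[1], fields[3]
            nodes[int(fields[0])] = (daemon, admin, daemon != admin)
    return nodes


sample = """\
 Node  Daemon node name  IP address   Admin node name  Designation
----------------------------------------------------------------------
   1   specscale01-hs    10.10.16.15  specscale01      quorum-manager-perfmon
   2   specscale02-hs    10.10.16.16  specscale02      quorum-manager-perfmon
   3   specscale03-hs    10.10.16.17  specscale03      quorum-manager-perfmon
"""
for node, (daemon, admin, split) in sorted(admin_daemon_split(sample).items()):
    print(node, daemon, admin, "separate names" if split else "same name")
```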

You might plan to extend the cluster with non-ESS parts. In that case, you must plan how the admin and daemon networks are going to interconnect with the ESS cluster nodes reliably and with enough performance. That plan must include the end-to-end design of those networks: switches, uplinks, hops, routing (if used), and firewall configuration (if used). Many of these planning topics are beyond the scope of a storage product.

To identify possible issues on the network, create a baseline for the network after all planning and deployment is complete. IBM Spectrum Scale includes tools to create this baseline. In particular, use the nsdperf command. For more information about how to run the nsdperf command to create a baseline, see Testing network performance with nsdperf.

Every time that you change something on the network, check the results against your baseline. Tuning is an ongoing process that does not end on the day of delivery. Systems evolve, as do workloads. Therefore, it is important to regularly monitor the network for changes in performance and resilience. These factors have a direct and critical impact on any networked service, including IBM Spectrum Scale and ESS as part of the Spectrum Scale family.
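Checking results against the baseline can be partly automated once you record per-link throughput from your nsdperf runs. The following Python sketch compares a new set of figures with a stored baseline and reports links that regressed or are missing. It does not run or parse nsdperf; the link names, the MB/s figures, and the 10% tolerance are hypothetical illustrations, not measurements or IBM guidance.

```python
def compare_to_baseline(baseline, current, tolerance=0.10):
    """Return links whose throughput fell more than `tolerance` (a fraction)
    below the baseline, or that are missing from the current run."""
    regressions = {}
    for link, base in baseline.items():
        measured = current.get(link)
        if measured is None:
            regressions[link] = "missing from current run"
        elif measured < base * (1 - tolerance):
            drop_pct = 100.0 * (base - measured) / base
            regressions[link] = f"{drop_pct:.0f}% below baseline"
    return regressions


# Hypothetical throughput figures in MB/s, keyed by "client->server" link.
baseline = {"client1->io1": 2200.0, "client1->io2": 2200.0, "ems1->io1": 4000.0}
current = {"client1->io1": 1500.0, "ems1->io1": 3950.0}
print(compare_to_baseline(baseline, current))
# {'client1->io1': '32% below baseline', 'client1->io2': 'missing from current run'}
```

Reporting missing links separately matters because a link that silently drops out of a test run is itself a change worth investigating.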

3.5 Initial configuration

When you set up ESS for the first time in your environment, review the sample ESS client configuration script for your non-ESS nodes (that is, nodes other than the ESS I/O nodes and the EMS).

3.5.1 Generic client script part of ESS

The script contains default Spectrum Scale configuration parameters for generic workloads. Review and adapt those parameters to fit your specific needs. For more information about Spectrum Scale parameters, see mmchconfig command at IBM Knowledge Center.

ESS includes the gssClientConfig.sh script, which is in the /usr/lpp/mmfs/samples/gss/ directory. It can be used to add client nodes to the ESS cluster.


Note: When you use the gssClientConfig.sh script, do a dry run by using the -D parameter to review the settings without committing them.


For more information about the gssClientConfig.sh script, see Adding IBM Spectrum Scale nodes to an ESS cluster at IBM Knowledge Center.

3.5.2 Ongoing maintenance (upgrades)

A proper planning phase is critical for a successful upgrade. An ESS update/upgrade changes multiple layers in the system, including but not limited to the following items:

•Operating system (OS)

– Kernel

– Libraries

•Device drivers and firmware levels

– Network stack

– Storage subsystem

– Node

– Enclosure

– Host bus adapter (HBA)

– Drives (SSD, HDD, and so on)

•IBM Spectrum Scale and IBM Spectrum Scale RAID


Note: Before any system upgrade, back up all important data to avoid the possible loss of critical data.


You might make protocol nodes part of the cluster. If so, plan for the IP address failovers that will occur and the consequences that they might have for the clients.

In addition to the components that the ESS upgrade involves, planning is needed for the components that ESS (and IBM Spectrum Scale) interacts with directly or indirectly. This list includes, but is not limited to, the following items:

•RDMA fabrics

•Applications and middleware

•Users

•Other

The upgrade process might cause brief NFS, SMB, and Performance Monitoring outages.

ESS upgrade guidelines

Familiarize yourself with the OS, device driver, firmware, IBM Spectrum Scale, and IBM Spectrum Scale RAID levels. You can find the supported levels in the release notes for IBM Elastic Storage Server (ESS) 5.3.1 under the “Package Information” section.


Note: Be aware that the links and information referred to here are related to ESS version 5.3.1. Always check the latest information in IBM Knowledge Center, which is the official documentation.

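A simple way to prepare for the upgrade is to list every component whose installed level differs from the level in the release notes. The following Python sketch does that comparison on two dictionaries that you fill in yourself. The component names are examples drawn from the layers listed earlier, and the level strings ("level-A" and so on) are deliberate placeholders, not real ESS package levels.

```python
def upgrade_gaps(installed, target):
    """List components whose installed level differs from the target level.

    `installed` and `target` map component names to level strings; the target
    values would be transcribed from the release notes' "Package Information"."""
    gaps = []
    for component in sorted(target):
        have = installed.get(component, "unknown")
        want = target[component]
        if have != want:
            gaps.append(f"{component}: {have} -> {want}")
    return gaps


# Placeholder levels only; substitute the values from your inventory and release notes.
installed = {"kernel": "level-A", "MOFED": "level-A", "Spectrum Scale": "level-B"}
target = {"kernel": "level-B", "MOFED": "level-A", "Spectrum Scale": "level-B",
          "HBA firmware": "level-C"}
for gap in upgrade_gaps(installed, target):
    print(gap)
```

Components that are missing from the inventory are reported as "unknown" rather than skipped, so that gaps in your own records show up in the plan.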

When you use RDMA, check the MOFED levels that ESS supplies against the fabric levels and firmware that are currently in use. Plan any fabric-level changes before you apply the ESS update/upgrade. For information about the supported Mellanox levels, see the Mellanox support matrix.

For application levels, contact the software vendor for recommendations if applicable.

IBM Spectrum Scale support matrix

Confirm the IBM Spectrum Scale version that is included in each ESS version. The ESS versions are listed in Planning for the system. Check that the versions on non-ESS nodes are compatible with the Spectrum Scale version that is delivered with the ESS. The best place to look for compatibility across versions is the IBM Spectrum Scale Frequently Asked Questions and Answers (FAQ).
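Once you have transcribed the compatible releases from the FAQ, flagging non-ESS nodes that fall outside them is a simple comparison. The following Python sketch assumes, for illustration only, that compatibility is expressed per major.minor release; the node names, version strings, and the supported set are placeholders, not FAQ data. Check the FAQ for the actual rules, which can be more detailed.

```python
def parse_version(v: str) -> tuple:
    """Turn a dotted version such as '5.0.2.1' into (5, 0, 2, 1) for comparison."""
    return tuple(int(part) for part in v.split("."))


def incompatible_nodes(node_versions, supported):
    """Return nodes whose (major, minor) release is not in `supported`.

    `supported` is a set of (major, minor) tuples that you transcribe yourself
    from the FAQ for the ESS level you plan to install (an assumption of this sketch)."""
    return sorted(
        node for node, v in node_versions.items()
        if parse_version(v)[:2] not in supported
    )


# Placeholder inventory of non-ESS nodes and their installed Spectrum Scale levels.
node_versions = {"protocol1": "5.0.2.1", "client7": "4.2.3.9", "afm-gw1": "5.0.1.2"}
print(incompatible_nodes(node_versions, {(5, 0)}))  # ['client7']
```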

For ESS-supported update/upgrade paths, see Supported upgrade paths at IBM Knowledge Center.

Preferred upgrade flow

For the ESS building blocks, see Upgrading Elastic Storage Server at IBM Knowledge Center.

This documentation gives you a starting plan for the upgrade process, but it covers only the ESS parts. The plan is not complete until you account for the other parts that interact with ESS and add them to an overall plan.

Your cluster might have other nodes that are not part of the ESS, such as the following node types:

•Protocol Nodes

•Active File Management (AFM) gateways

•Transparent Cloud Tiering (TCT)

•IBM Spectrum Protect™ nodes

•IBM Spectrum Archive™

See Upgrading a cluster containing ESS and protocol nodes at IBM Knowledge Center.

Final comments

This chapter is not intended to scare you about updates or upgrades. Instead, the goal is to make you aware that proper planning, as with anything in technology, is important for success. Planning becomes even more important as interactions between interconnected systems become more common and complex. Remember that when you do an ESS update or upgrade, you can always involve IBM to help you with it.
