GDPS Continuous Availability solution

In this chapter, we introduce the GDPS Continuous Availability solution. This solution aims to significantly reduce the time spent to recover systems in a disaster recovery situation, and enable planned and unplanned switching of workloads between sites.

7.1 Overview of GDPS Continuous Availability

In this section, we provide a high-level description of the GDPS Continuous Availability solution and explain where it fits in with the other GDPS products.

7.1.1 Positioning GDPS Continuous Availability

Business continuity features the following key metrics:

•Recovery time objective (RTO): How long can you afford to be without your systems?

•Recovery point objective (RPO): How much data can you afford to lose or re-create?

•Network recovery objective (NRO): How long does it take to switch over the network?

Multiple offerings are available in the GDPS family, all of which are covered in this book. The GDPS products other than GDPS Continuous Availability are continuous availability (CA) and disaster recovery (DR) solutions that are based on synchronous or asynchronous disk hardware replication.

To achieve the highest levels of availability and minimize the recovery time for planned and unplanned outages, various clients have deployed GDPS Metro multi-site workload configurations, which have the following requirements:

•All critical data must be Metro Mirrored and HyperSwap enabled.

•All critical CF structures must be duplexed.

•Applications must be Parallel Sysplex enabled.

However, the signal latency between sites can affect online workload throughput and batch duration. As a result, sites are typically separated by no more than approximately 20 km (12.4 miles) of fiber distance.

Therefore, the GDPS Metro multi-site workload configuration, which can provide an RPO of zero and an RTO as low as a few minutes, does not provide a solution if an enterprise requires that the distance between the active sites is much greater than 20 - 30 km (12.4 - 18.6 miles).

The GDPS products that are based on asynchronous hardware replication (GDPS XRC and GDPS GM) provide for virtually unlimited site separation. However, they do require that the workload from the failed site is restarted in the recovery site and this process typically takes 30 - 60 minutes. Therefore, GDPS XRC and GDPS GM cannot achieve the RTO of seconds that is required by various enterprises for their most critical workloads.

With the GDPS products that are based on hardware replication, it is not possible to achieve aggressive RPO and RTO goals while also providing the site separation that some enterprises require.

For these reasons, the Active/Active sites concept was conceived.

7.1.2 GDPS Continuous Availability sites concept

The Active/Active sites concept consists of having two sites that are separated by virtually unlimited distances, running the same applications, and having the same data to provide cross-site workload balancing and continuous availability and disaster recovery. This change is a fundamental paradigm shift from a failover model to a continuous availability model.

GDPS Continuous Availability (GDPS CA) does not use any of the infrastructure-based data replication techniques that other GDPS products rely on, such as Metro Mirror (PPRC), Global Mirror (GM), or z/OS Global Mirror (XRC).

Instead, GDPS Continuous Availability relies on both of the following methods:

•Software-based asynchronous replication techniques for copying the data between sites.

•Automation, primarily operating at a workload level, to manage the availability of selected workloads and the routing of transactions for these workloads.

The GDPS Continuous Availability product, which is a component of the GDPS Continuous Availability solution, acts primarily as the coordination point or controller for these activities. It is a focal point for operating and monitoring the solution and readiness for recovery.

Note: For simplicity, in this chapter we refer to both the solution and the product as GDPS Continuous Availability. We might also refer to the environment managed by the solution, and the solution itself, as GDPS CA.

What is a workload

A workload is defined as the aggregation of the following components:

•Software

User-written applications, such as COBOL programs, and the middleware runtime environment (for example, CICS regions, InfoSphere Replication Server instances and IBM Db2 subsystems).

•Data

A related set of objects that must preserve transactional consistency and optionally referential integrity constraints (for example, Db2 tables or IMS databases).

•Network connectivity

One or more TCP/IP addresses and ports (for example, 10.10.10.1:80), IBM MQ Queue managers, or SNA applids.
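The three components of a workload can be pictured as a simple record. The following Python sketch is illustrative only: the `Workload` and `Endpoint` types and all of the values are hypothetical names that are introduced here, and they do not reflect how GDPS Continuous Availability stores workload definitions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Endpoint:
    """A TCP/IP address and port through which transactions reach the workload."""
    host: str
    port: int


@dataclass
class Workload:
    """Hypothetical record that groups the three components of a workload."""
    name: str
    software: List[str] = field(default_factory=list)        # applications and middleware
    data: List[str] = field(default_factory=list)            # objects kept transactionally consistent
    endpoints: List[Endpoint] = field(default_factory=list)  # network connectivity


# Illustrative values only; none of these names come from a real configuration.
payroll = Workload(
    name="PAYROLL",
    software=["CICS region CICSPAY1", "Db2 subsystem DB2A"],
    data=["Db2 table PAYROLL.EMPLOYEE"],
    endpoints=[Endpoint("10.10.10.1", 80)],
)

print(payroll.endpoints[0])
```

Grouping the software, data, and connectivity under one name reflects why the workload, rather than an individual system, is the unit that is started, stopped, replicated, and routed.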

The following workload types are supported and managed in a GDPS Continuous Availability environment:

•Update or read/write workloads

These workloads run in what is known as the Active/Standby configuration. In this case, a workload that is managed by GDPS Continuous Availability is active in one sysplex and receives transactions that are routed to it by the workload distribution mechanism that is managed by the IBM Multi-site Workload Lifeline.

The workload also uses software replication to copy changed data to another instance of the workload that is running in a second sysplex where all the infrastructure components (LPARs, systems, middleware, and so on) and even the application are ready to receive work in what is termed a standby mode.

The updated data from the active instance of the workload is applied in real time to the database subsystem instance that is running in standby mode.

•Query or read-only workloads

These workloads are associated with update workloads, but they can be actively running in both sites concurrently. Workload distribution between the sites is based on policy options, and takes into account environmental factors, such as the latency for replication that determines the age (or currency) of the data in the standby site.

No data replication is associated with the query workload because no updates are made to the data. You can associate up to two query workloads with a single update workload.

•Crypto workload

These workloads provide the optional capability to keep cryptographic key material synchronized between sites in a GDPS Continuous Availability environment. There is only ever one crypto workload. It monitors the replication of changes to the VSAM data sets that are used by ICSF in the two sites, and alerts the operator to any problems with this replication.

Figure 7-1 shows these concepts for an update workload at a high level. Transactions arrive at the workload distributor, which is also known as the load balancer. Depending on the current situation, the transactions are routed to what is termed the currently active sysplex in the configuration for that particular workload.

Figure 7-1 GDPS Continuous Availability concept

The environment is constantly being monitored to ensure that workload is being processed in the active sysplex. If GDPS Continuous Availability detects that workload is not processing normally, a policy-based decision is made to automatically start routing work to the standby sysplex (rather than the currently active sysplex), or to prompt the operator to take some action. In a similar way, for query workloads, a policy that uses replication latency thresholds triggers GDPS Continuous Availability or other products in the solution to take some action.

Information is constantly being exchanged by the systems in the active and standby sysplexes, the GDPS controllers (one in each location), and the workload distribution mechanism to ensure that an accurate picture of the health of the environment is maintained to enable appropriate decisions from the automation.

In a planned manner, it is also possible to switch each workload from the currently active to the standby sysplex if the need arises, such as for routine maintenance.

Note: In this chapter, we sometimes refer to workloads that are managed by GDPS Continuous Availability as Active-Active workloads.

In your environment, you are likely to have some applications and data that you do not want to manage with, or that cannot be managed by GDPS Continuous Availability. For example, you might have an application that uses a data type for which software data replication is not available or is not supported by GDPS Continuous Availability.

You still need to provide high availability and disaster recovery for such applications and data. For this task, GDPS Continuous Availability provides for integration and co-operation with other GDPS products that rely on hardware replication and are independent of application and data type.

Specifically, special coordination is provided with GDPS Metro, which is described in 7.5, “GDPS Continuous Availability co-operation with GDPS Metro” on page 239, and with GDPS MGM, which is described in 7.6, “GDPS Continuous Availability disk replication integration” on page 241.

7.2 GDPS Continuous Availability solution products

The GDPS Continuous Availability architecture, which is shown at a conceptual level in Figure 7-2, consists of several products coordinating the monitoring and managing of the various aspects of the environment.

Figure 7-2 GDPS Continuous Availability architecture

This section describes the various products that are required for GDPS Continuous Availability and their role or function within the overall framework. The following products are briefly discussed:

•GDPS Continuous Availability

•IBM Z NetView for z/OS

•IBM Z NetView for z/OS Enterprise Management Agent (NetView agent)

•IBM Z NetView Monitoring for GDPS

•IBM Z Monitoring

•IBM System Automation for z/OS

•IBM Multi-site Workload Lifeline for z/OS

•Middleware, such as CICS, IMS, and Db2, to run the workloads

•Replication Software:

– IBM InfoSphere Data Replication for DB2 for z/OS

WebSphere MQ is required for Db2 data replication

– IBM InfoSphere Data Replication for VSAM for z/OS

CICS Transaction Server, CICS VSAM Recovery, or both, are required for VSAM replication

– IBM InfoSphere IMS Replication for z/OS

•Other optional components

IBM Tivoli OMEGAMON XE family of monitoring products for monitoring the various parts of the solution

For more information about a solution view that shows how the products are used in the various systems in which they run, see 7.3, “GDPS Continuous Availability environment” on page 213.

7.2.1 GDPS Continuous Availability product

The GDPS Continuous Availability product provides automation code that is an extension of many of the techniques that were tested in other GDPS products and in many client environments around the world for management of their mainframe continuous availability and disaster recovery requirements.

The following key functions are provided by GDPS Continuous Availability code:

•Workload management, such as starting or stopping all components of a workload in a specific sysplex.

•Replication management, such as starting or stopping replication for a specific workload from one sysplex to the other.

•Routing management, such as stopping or starting routing of transactions to one sysplex or the other for a specific workload.

•System and Server management, such as STOP (graceful shutdown) of a system; LOAD, RESET, ACTIVATE, DEACTIVATE the LPAR for a system; and capacity on-demand actions, such as CBU/OOCoD activation.

•Monitoring the environment and alerting for unexpected situations.

•Planned/Unplanned situation management and control, such as planned or unplanned site or workload switches.

•Autonomic actions, such as automatic workload switch (policy-dependent).

•Powerful scripting capability for complex/compound scenario automation.

•Co-operation with GDPS Metro to provide continuous data availability in the Active-Active sysplexes.

•Single point of control for managing disk replication functions when running GDPS MGM together with GDPS Continuous Availability to protect non Active-Active data.

•Monitoring of replication across GDPS CA-managed sysplexes of cryptographic data, which is stored by ICSF in VSAM data sets. If a switch occurs from one sysplex to another, all workloads that use ICSF cryptographic services can continue to run.

•Easy-to-use graphical user interface.

7.2.2 IBM Z NetView

The IBM Z NetView product is a prerequisite for GDPS Continuous Availability automation and management code. In addition to being the operating environment for GDPS, the NetView product provides additional monitoring and automation functions that are associated with the GDPS Continuous Availability solution.

Monitoring capability by using the NetView agent is provided for the following items:

•IBM Multi-site Workload Lifeline for z/OS

•IBM InfoSphere Data Replication for DB2 for z/OS

•IBM InfoSphere Data Replication for VSAM for z/OS

•IBM InfoSphere Data Replication for IMS for z/OS

NetView Agent

The IBM Z NetView Enterprise Management Agent (also known as TEMA) is used in the solution to pass information from the z/OS NetView environment to the Tivoli Enterprise Portal, which is used to provide a view of your enterprise. From this portal, you can drill down to more closely examine components of each system being monitored. The NetView agent requires IBM NetView Monitoring for Continuous Availability.

7.2.3 IBM NetView Monitoring for Continuous Availability

IBM NetView Monitoring for Continuous Availability is a suite of monitoring components to monitor and report on various aspects of a client’s IT environment. Several of the IBM NetView Monitoring components are used in the overall monitoring of aspects (such as monitoring the workload) within the GDPS Continuous Availability environment.

The specific components that are required for GDPS Continuous Availability are listed here.

Tivoli Enterprise Portal

Tivoli Enterprise Portal (portal client or portal) is a Java-based interface for viewing and monitoring your enterprise. Tivoli Enterprise Portal offers two modes of operation: desktop and browser.

Tivoli Enterprise Portal Server

Tivoli Enterprise Portal Server (portal server) provides the core presentation layer for retrieval, manipulation, analysis, and preformatting of data. The portal server retrieves data from the hub monitoring server in response to user actions at the portal client, and sends the data back to the portal client for presentation. The portal server also provides presentation information to the portal client so that it can render the user interface views suitably.

Tivoli Enterprise Monitoring Server

The Tivoli Enterprise Monitoring Server (monitoring server) is the collection and control point for performance and availability data and alerts that are received from monitoring agents (for example, the NetView agent). It is also responsible for tracking the online or offline status of monitoring agents.

The portal server communicates with the monitoring server, which in turn controls the remote servers and any monitoring agents that might be connected to it directly.

7.2.4 System Automation for z/OS

IBM System Automation for z/OS is a cornerstone of all members of the GDPS family of products. In GDPS Continuous Availability, it provides the critical policy repository function, in addition to managing the automation of the workload and systems elements. System Automation for z/OS also provides the capability for GDPS to manage and monitor systems in multiple sysplexes.

System Automation for z/OS is required on the Controllers and all production systems that are running Active-Active workloads. If you use an automation product other than System Automation for z/OS to manage your applications, you do not need to replace your entire automation with System Automation. Your automation can coexist with System Automation and an interface is provided to ensure that proper coordination occurs.

7.2.5 IBM Multi-site Workload Lifeline for z/OS

This product provides intelligent routing recommendations to external load balancers or IBM MQ Queue Managers for server instances that can span two sysplexes or sites. Support also is provided for workloads that target SNA applications that are running in an Active/Standby configuration across two sites. Finally, user exits are provided with which you can manage the routing of workloads that use unsupported connectivity methods.

The IBM Multi-site Workload Lifeline for z/OS product consists of Advisors and Agents. One Lifeline Advisor is active in the same z/OS image as the GDPS Primary Controller and assumes the role of primary Advisor. At most, one other Lifeline Advisor is active on the Backup Controller and assumes the role of secondary Advisor.

The two Advisors exchange state information so that the secondary Advisor can take over the primary Advisor role if the current primary Advisor is ended or a failure occurs on the system where the primary Advisor was active.

In addition, a Lifeline Agent is active on all z/OS images where workloads can run. All Lifeline Agents monitor the health of the images they are running on and the health of the workload. These Agents communicate this information back to the primary Lifeline Advisor, which then calculates routing recommendations.

For TCP/IP-based routing, external load balancers establish a connection with the primary Lifeline Advisor and receive routing recommendations through the open-standard Server/Application State Protocol (SASP) API, which is documented in RFC 4678.

For IBM MQ-based routing, the Lifeline Advisor and agents communicate with IBM MQ Queue managers to manage IBM MQ message traffic. Lifeline communicates with VTAM in the z/OS LPARs in which the workloads run to monitor and manage SNA-based traffic to the workloads.

Finally, user exits are started when routing decisions must be made for a workload that relies on connectivity methods that are not directly supported by IBM Multi-site Workload Lifeline.

The Lifeline Advisor also establishes a Network Management Interface (NMI) to allow network management applications (such as NetView) to retrieve internal data that the Advisor uses to calculate routing recommendations.

The Lifeline Advisors and Agents use configuration information that is stored in text files to determine what workloads must be monitored and how to connect to each other and external load balancers and IBM MQ Queue managers.

7.2.6 Middleware

Middleware components, such as CICS regions or Db2 subsystems, form a fundamental part of the Active/Active environment because they provide the application services that are required to process the workload.

To maximize the availability characteristics of the GDPS Continuous Availability environment, applications and middleware must be replicated across multiple images in the active and standby Parallel Sysplexes to cater for local high availability if components fail. Automation must be in place to ensure clean start, shutdown, and local recovery of these critical components. CICS/DB2 workloads that are managed by CPSM derive additional benefits in a GDPS Continuous Availability environment.

7.2.7 Replication software

Unlike in other GDPS solutions, where replication is based on mirroring the disk-based data at the block level by using hardware (such as Metro Mirror or Global Mirror) or a combination of hardware and software (z/OS Global Mirror, also known as XRC), replication in GDPS Continuous Availability is managed by software only. The following products are supported in GDPS Continuous Availability:

•IBM InfoSphere Data Replication for Db2 for z/OS (IIDR for Db2)

This product, also known widely as Q-rep, uses underlying IBM WebSphere MQ as the transport infrastructure for moving the Db2 data from the source to the target copy of the database. Transaction data is captured at the source site and placed in IBM MQ queues for transmission to a destination queue at the target location, where the updates are then applied in real time to a running copy of the database.

For large scale and update-intensive Db2 replication environments, a single pair of capture/apply engines might not be able to keep up with the replication. Q-rep provides a facility that is known as Multiple Consistency Groups (MCG) where the replication work is spread across multiple capture/apply engines, yet the time order (consistency) for the workload across all of the capture/apply engines is preserved in the target database. GDPS supports and provides specific facilities for workloads that use MCG with Db2 replication.

•IBM InfoSphere Data Replication for IMS for z/OS (IIDR for IMS)

IBM InfoSphere IMS Replication for z/OS is the product that provides IMS data replication and uses a capture and apply technique that is similar to the technique that is outlined for Db2 data. However, IMS Replication does not use IBM MQ as the transport infrastructure to connect the source and target copies. Instead, TCP/IP is used in place of IBM MQ through the specification of host name and port number to identify the target to the source and similarly to define the source to the target.

•IBM InfoSphere Data Replication for VSAM for z/OS (IIDR for VSAM)

IBM InfoSphere Data Replication for VSAM for z/OS is similar in structure to the IMS replication product, except that it is for replicating VSAM data. For CICS VSAM data, the sources for capture are CICS log streams. For non-CICS VSAM data, CICS VSAM Recovery (CICS VR) is required for logging, and is the source for replicating such data. Similar to IMS replication, TCP/IP is used as the transport for VSAM replication.

GDPS Continuous Availability provides high-level control capabilities to start and stop replication between identified source and target instances through scripts and window actions in the GDPS GUI.

GDPS also monitors replication latency and uses this information when deciding whether Query workloads can be routed to the standby site.

7.2.8 Other optional components

Other components can optionally be used to provide specific monitoring, as described in this section.

Tivoli OMEGAMON XE family

Other products, such as Tivoli OMEGAMON® XE on z/OS, Tivoli OMEGAMON XE for DB2, and Tivoli OMEGAMON XE for IMS, can be deployed to provide specific monitoring of products that are part of the Active/Active sites solution.

7.3 GDPS Continuous Availability environment

In this section, we provide a conceptual view of a GDPS Continuous Availability environment, plugging in the products that run on the various systems in the environment. We then take a closer look at how GDPS Continuous Availability works. Finally, we briefly discuss environments where Active-Active and other workloads coexist on the same sysplex.

Figure 7-3 GDPS Continuous Availability environment functional overview

The GDPS Continuous Availability environment consists of two production sysplexes (also referred to as sites) in different locations. For each update workload that is to be managed by GDPS Continuous Availability, at any time, one of the sysplexes is the active sysplex and the other acts as standby.

Figure 7-3 shows one workload, with only one active production system running this workload in one sysplex and one production system that is standby for this workload in the other sysplex. However, multiple cloned instances of the active and the standby production systems can exist in the two sysplexes.

When multiple workloads are managed by GDPS, a specific sysplex can be the active sysplex for one update workload, while it is standby for another. It is the routing for each update workload that determines which sysplex is active and which sysplex is standby for a workload. As such, in environments where multiple workloads exist, there is no concept of an active sysplex for the environment as a whole. There is only a sysplex that is currently active for a particular update workload.
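The per-workload nature of the active and standby roles can be sketched as a small lookup structure. This is a minimal illustration under stated assumptions: the `WorkloadRouting` class, the sysplex names PLEXA and PLEXB, and the workload names are all hypothetical, and the sketch does not represent GDPS or Lifeline internals.

```python
class WorkloadRouting:
    """Tracks, per update workload, which sysplex currently receives its transactions."""

    def __init__(self, sysplexes, active_for):
        self.sysplexes = tuple(sysplexes)    # the two production sysplexes (sites)
        self.active_for = dict(active_for)   # workload name -> sysplex that is routed to

    def role(self, sysplex, workload):
        """A sysplex is active for a workload only if updates are routed to it."""
        return "active" if self.active_for[workload] == sysplex else "standby"

    def switch(self, workload):
        """Reverse the roles of the two sysplexes for one workload only."""
        first, second = self.sysplexes
        current = self.active_for[workload]
        self.active_for[workload] = second if current == first else first
        return self.active_for[workload]


# Hypothetical configuration: each sysplex is active for one workload
# and standby for the other.
routing = WorkloadRouting(
    ("PLEXA", "PLEXB"),
    {"PAYROLL": "PLEXA", "ORDERS": "PLEXB"},
)

for name in ("PAYROLL", "ORDERS"):
    print(name, "PLEXA is", routing.role("PLEXA", name))
```

Because the role is derived from the routing entry of each workload, switching one workload leaves the roles for every other workload unchanged.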

The production systems (the active and the standby instances) are actively running the workload that is managed by GDPS. What makes a sysplex (and therefore the systems in that sysplex) active or standby is whether update transactions are being routed to that sysplex.

The SASP routers in the network, which are shown in Figure 7-3 on page 213 as the cloud under GDPS and LifeLine Advisor, control routing of transactions for a workload to one sysplex or the other. Although a single router is the minimum requirement, we expect that you configure multiple routers for resiliency.

The workload is actively running on the z/OS system in both sysplexes. The workload on the system that is active for that workload is processing update transactions because update transactions are being routed to this sysplex.

The workload on the standby sysplex is actively running, but is not processing any update transactions because update transactions are not being routed to it. It is waiting for work, and can process work at any time if a planned or unplanned workload switch occurs that results in transactions being routed to this sysplex. If a workload switch occurs, the standby sysplex becomes the active sysplex for the workload.

The workload on the standby sysplex can be actively processing query transactions for the query workload that is associated with an update workload. Replication latency at any time, with thresholds that you specify in the GDPS policy, determines whether query transactions are routed to the standby sysplex.

The GDPS policy indicates when the latency or the replication lag is considered to be too high (that is, the data in the standby sysplex is considered to be too far behind) to the extent that query transactions are no longer routed there, but are routed to the active sysplex instead. When query transactions are no longer being routed to the standby sysplex because the latency threshold was exceeded, another threshold, which you specify in the Lifeline configuration, indicates when it is acceptable to route query transactions to the standby sysplex again.

For example, your policy might indicate that query transactions for a workload are not routed to the standby sysplex if latency exceeds 7 seconds and that it is permitted to route to the standby sysplex after latency falls below 4 seconds. Latency is continually monitored to understand whether query transactions can be routed to the standby sysplex.
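The two thresholds in this example form a hysteresis band, which prevents routing from flapping when latency hovers near a single limit. The following Python sketch shows the idea by using the 7-second and 4-second values from the example. The `QueryRoutingGate` class and its parameter names are hypothetical and are not part of any GDPS or Lifeline interface.

```python
class QueryRoutingGate:
    """Decides whether query transactions may be routed to the standby sysplex."""

    def __init__(self, stop_above_s=7.0, resume_below_s=4.0):
        if resume_below_s > stop_above_s:
            raise ValueError("resume threshold must not exceed stop threshold")
        self.stop_above_s = stop_above_s      # stop routing when latency exceeds this
        self.resume_below_s = resume_below_s  # resume routing when latency falls below this
        self.standby_eligible = True

    def observe(self, latency_s):
        """Update the decision from one replication latency sample (in seconds)."""
        if self.standby_eligible and latency_s > self.stop_above_s:
            self.standby_eligible = False
        elif not self.standby_eligible and latency_s < self.resume_below_s:
            self.standby_eligible = True
        return self.standby_eligible


gate = QueryRoutingGate()
samples = [2.0, 6.5, 7.5, 5.0, 3.9, 6.0]
decisions = [gate.observe(s) for s in samples]
print(list(zip(samples, decisions)))
```

In this run, the 5.0-second sample still keeps queries away from the standby sysplex because latency has not yet fallen below 4 seconds, whereas the later 6.0-second sample is accepted because latency has not exceeded 7 seconds again.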

In addition to the latency control, you can specify a policy to indicate what percentage of the incoming query transactions are routed to the standby site or whether you want the conditions, such as latency and workload health, to dictate a dynamic decision on which of the two sysplexes query transactions are routed to at any time.

The workload is any subsystem that is receiving and processing updates or query transactions through the routing mechanism and uses the replicated databases.

On the active system, you see a replication capture engine. One or more such engines can exist, depending on the data being replicated. This software replication component captures all updates to the databases that are used by the workload that is managed by GDPS and forwards them to the standby sysplex.

On the standby sysplex, the counterpart of the capture engine is the apply engine. The apply engine receives the updates that are sent by the capture engine and immediately applies them to the database for the standby sysplex.

The data replication in a GDPS Continuous Availability environment is asynchronous (unlike some other GDPS products, which are based on synchronous replication). Therefore, the workload can perform a database update and this write operation can complete, independent of the replication process.

Replication requires sufficient bandwidth for transmission of the data being replicated. IBM has services that can help you determine the bandwidth requirements based on your workload.

If replication is disrupted for any reason, the replication engines, when restored, include logic to know where they left off and can transmit only those changes that are made after the disruption.
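The restart behavior can be illustrated with a toy capture and apply pair that tracks the last applied sequence number. This is a sketch under simplifying assumptions: the `CaptureEngine` and `ApplyEngine` classes, the integer sequence numbers, and the `link_up` callback are hypothetical, and real replication products track their restart position in their own way.

```python
class ApplyEngine:
    """Applies changes on the standby side and remembers how far it got."""

    def __init__(self):
        self.applied = []
        self.last_applied_seq = 0

    def apply(self, seq, change):
        if seq <= self.last_applied_seq:
            return  # already applied; ignore a retransmitted change
        self.applied.append(change)
        self.last_applied_seq = seq


class CaptureEngine:
    """Reads an ordered change log on the active side and forwards it."""

    def __init__(self, log):
        self.log = list(log)  # ordered (sequence number, change) pairs

    def send_from(self, restart_seq, target, link_up=lambda seq: True):
        """Send only the changes that follow restart_seq, until the link drops."""
        for seq, change in self.log:
            if seq <= restart_seq:
                continue
            if not link_up(seq):
                return  # disruption: stop and wait for a restart
            target.apply(seq, change)


log = [(1, "u1"), (2, "u2"), (3, "u3"), (4, "u4"), (5, "u5")]
capture, target = CaptureEngine(log), ApplyEngine()

# First attempt: the link fails just before change 3 is sent.
capture.send_from(0, target, link_up=lambda seq: seq < 3)
applied_before_restart = list(target.applied)

# After the link is restored, resume from where the target left off.
capture.send_from(target.last_applied_seq, target)
print(applied_before_restart, target.applied)
```

Resuming from the recorded position means that only changes 3 through 5 are transmitted after the disruption, and nothing is applied twice.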

Because the replication is asynchronous, no performance effect is associated with replication. For a planned workload switch, the switch can occur after all updates are drained from the sending side and applied on the receiving side.

For Db2 replication, GDPS provides extra automation to determine whether all updates have drained. This feature allows a planned switch of workloads that use Db2 replication to be completely automated.
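The ordering constraint for a planned switch (drain first, then route) can be sketched as follows. This is an illustration only: the `planned_switch` function and its arguments are hypothetical, and the initial step of stopping routing to the current active sysplex is an assumption that is made here so that the queue of pending updates can empty.

```python
from collections import deque


def planned_switch(routing, workload, standby, pending, apply):
    """Switch a workload to the standby sysplex without stranding any updates."""
    # 1. Assumed first step: stop routing update transactions for the workload,
    #    so that no new changes are captured.
    routing[workload] = None

    # 2. Drain: apply every captured update on the receiving side.
    while pending:
        apply(pending.popleft())

    # 3. Only after the drain completes, route transactions to the former standby.
    routing[workload] = standby
    return standby


# Hypothetical state: three captured updates are not yet applied on PLEXB.
routing = {"PAYROLL": "PLEXA"}
pending = deque(["u1", "u2", "u3"])
standby_db = []

planned_switch(routing, "PAYROLL", "PLEXB", pending, standby_db.append)
print(routing, standby_db)
```

Because routing resumes only after the queue is empty, the new active sysplex starts with every update that the former active sysplex committed, which is why a planned switch loses no data.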

For an unplanned switch, some data often is captured but not yet transmitted and therefore not yet applied on the target sysplex because replication is asynchronous. The amount of this data effectively translates to RPO.

With a correctly sized, robust transmission network, the RPO during normal operations is expected to be as low as a few seconds. You might also hear the term latency used with replication. Latency is another term that is used for the replication lag or RPO.
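One way to picture how stranded updates translate to an RPO is to measure the age of the oldest update that was committed at the source but not yet applied at the target. The following sketch is a simplified model: the `rpo_at` function, the integer sequence numbers, and the timestamps are hypothetical and are not how the replication products report latency.

```python
from datetime import datetime, timedelta


def stranded_updates(committed, last_applied_seq):
    """Return the committed (seq, commit_time) pairs that the target has not applied."""
    return [(seq, ts) for seq, ts in committed if seq > last_applied_seq]


def rpo_at(failure_time, committed, last_applied_seq):
    """Estimate the RPO as the age of the oldest stranded update at failure time."""
    stranded = stranded_updates(committed, last_applied_seq)
    if not stranded:
        return timedelta(0)
    oldest_commit = min(ts for _, ts in stranded)
    return failure_time - oldest_commit


# Illustrative timeline: four updates are committed, and two of them are applied.
t0 = datetime(2024, 1, 1, 12, 0, 0)
committed = [
    (1, t0),
    (2, t0 + timedelta(seconds=1)),
    (3, t0 + timedelta(seconds=3)),
    (4, t0 + timedelta(seconds=4)),
]
failure_time = t0 + timedelta(seconds=5)

rpo = rpo_at(failure_time, committed, last_applied_seq=2)
print(rpo.total_seconds())
```

In this timeline, updates 3 and 4 are stranded, and the oldest of them was committed 2 seconds before the failure, so the estimated RPO is 2 seconds.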

Although we talk about RPO, data is lost only if the original active site or the disks in this site where some updates were stranded are physically damaged so that they cannot be restored with the data intact. Following an unplanned switch to the standby site, if the former active site is restored with its data intact, any stranded updates can be replicated to the new active site at that time and no data is lost.

Also, specialized implementations of GDPS Continuous Availability, known as Zero Data Loss (ZDL) configurations, can be used in some environments to provide an RPO of zero. An RPO of zero is achievable even when the disks in the failed site are physically damaged. For more information about the ZDL configuration, see 7.7, “Zero Data Loss configuration” on page 243.

IBM MQ is shown on production systems and is required for Db2 replication. Either CICS or CICS VR is required on the production systems for VSAM replication.

On the production systems on both the active and standby sysplexes, you also see the monitoring and management products. NetView, System Automation, and the LifeLine Agent run on all production systems, monitoring the system, the workload on the system, and replication latency, and provide information to the active-active Controllers.

TCP/IP on the production systems is required in support of several functions that are related to GDPS Continuous Availability.

On the production systems, we show that you might have a product other than System Automation to manage your applications. In such an environment, System Automation is still required for GDPS Continuous Availability workload management. However, it is not necessary to replace your automation to use System Automation. A simple process for enabling the coexistence of System Automation and other automation products is available.

Not shown in Figure 7-3 on page 213 is the possibility of running other workloads that are not managed by GDPS Continuous Availability on the same production systems that run Active-Active workloads. For more information about other non-Active-Active workloads, see 7.3.2, “Considerations for other non-Active-Active workloads” on page 220.

Figure 7-3 on page 213 shows two GDPS Controller systems. At any time, one is the Primary Controller and the other is the Backup. These systems often are in each of the production sysplex locations, but they are not required to be collocated in this way.

GDPS Continuous Availability introduces the term Controller, as opposed to the Controlling System term that is used within other GDPS solutions. The function of the Primary Controller is to provide a point of control for the systems and workloads that are participating in the GDPS Continuous Availability environment for planned actions (such as IPL and directing which sysplex is the active sysplex for a workload) and for recovery from unplanned outages. The Primary Controller is also where the data that is collected by the monitoring aspects of the solution can be accessed.

Both controllers run NetView, System Automation and GDPS Continuous Availability control code, and the LifeLine Advisor. The Tivoli Monitoring components Tivoli Enterprise Monitoring Server and IBM Z NetView Enterprise Management Agent run on the Controllers. Figure 7-3 on page 213 shows that a portion of Tivoli Monitoring is not running on z/OS. The Tivoli Enterprise Portal Server component can run on Linux on IBM Z or on a distributed server.

Together with System Automation on the Controllers, you see the BCP Internal Interface (BCPii). On the Controller, GDPS uses this interface to perform hardware actions against the LPAR of production systems or the LPAR of the other Controller system, such as LOAD and RESET, and for performing hardware actions for capacity on demand, such as CBU or OOCoD activation.

Figure 7-3 on page 213 also shows the Support Element/Hardware Management Console (SE/HMC) local area network (LAN). This element is key for the GDPS Continuous Availability solution.

The SE/HMC LAN spans the IBM Z servers for both sysplexes in the two sites. This configuration allows for a Controller in one site to act on hardware resources in the other site. To provide a LAN over large distances, the SE/HMC LANs in each site are bridged over the WAN.

It is desirable to isolate the SE/HMC LAN on a network other than the client’s WAN, which is the network that is used for the Active-Active application environment and for connecting systems to each other. When the SE/HMC LAN is isolated on a separate network, Lifeline Advisor (which is responsible for detecting failures and determining whether a sysplex failed) can try to access the site that appears to have failed over both the WAN and the SE/HMC LAN.

If the site is accessible through the SE/HMC LAN but not the WAN, Lifeline can conclude that only the WAN failed, and not the target sysplex. Therefore, isolating the SE/HMC LAN from the WAN provides another check when deciding whether the entire sysplex failed and whether a workload switch is to be performed.
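The additional check that is described here can be sketched as a small decision function. This is a minimal Python illustration only: the function name and its two Boolean inputs are hypothetical and do not represent a Lifeline Advisor interface.

```python
def classify_outage(reachable_over_wan: bool, reachable_over_hmc_lan: bool) -> str:
    """Classify an apparent sysplex outage from two independent network checks.

    Hypothetical helper for illustration; not a Lifeline Advisor API.
    """
    if reachable_over_wan:
        return "sysplex available"
    if reachable_over_hmc_lan:
        # The site answers on the isolated SE/HMC LAN, so only the WAN failed.
        return "WAN failure only"
    # Neither path reaches the site: the sysplex itself might have failed.
    return "sysplex failure suspected"


for wan, hmc in [(True, True), (False, True), (False, False)]:
    print(wan, hmc, classify_outage(wan, hmc))
```

The second branch is the case that an isolated SE/HMC LAN makes visible. Without the separate network, a WAN failure and a sysplex failure cannot be told apart.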

7.3.1 GDPS Continuous Availability: A closer look

In this section, we examine more closely how GDPS Continuous Availability works by using an example of a GDPS Continuous Availability environment with multiple workloads (see Figure 7-4). In this example, we consider update workloads only. Extending this example with query workloads corresponding to one or more of the update workloads can be a simple matter.

Figure 7-4 GDPS Continuous Availability environment with multiple workloads: All active in one site

Figure 7-4 shows two sites (Site1 and Site2) and a Parallel Sysplex in each site: AAPLEX1 runs in Site1 and AAPLEX2 runs in Site2. Coupling facilities CF11 and CF12 serve AAPLEX1 structures. CF21 and CF22 serve AAPLEX2 structures.

Each sysplex consists of two z/OS images. The z/OS images in AAPLEX1 are named AASYS11 and AASYS12. The images in AAPLEX2 are named AASYS21 and AASYS22. Two GDPS Controller systems also are shown: AAC1 in Site1, and AAC2 in Site2.

Three workloads are managed by GDPS in this environment: Workload_1, Workload_2, and Workload_3. Workload_1 and Workload_2 are cloned, Parallel Sysplex-enabled applications that run on both z/OS images of each sysplex. Workload_3 runs in only a single image in each of the two sysplexes.

Now, the transactions for all three workloads are routed to AAPLEX1. The workloads are running in AAPLEX2, but they are not processing transactions because no transactions are routed to AAPLEX2.

AAPLEX1 is the source for data replication for all three workloads, and AAPLEX2 is the target. Also shown in Figure 7-4 are reverse replication links from AAPLEX2 towards AAPLEX1. This configuration indicates that if the workload is switched, the direction of replication can be and is switched.

If AASYS12 incurs an unplanned z/OS outage, all three workloads continue to run in AASYS11. Depending on the sizing of the systems, it is possible that AASYS11 does not feature sufficient capacity to run the entire workload.

Also, AASYS11 is now a single point of failure for all three workloads. In such a case where no workload failed but a possible degradation of performance and availability levels exists, you must decide whether you want to continue running all three workloads in AASYS11 until AASYS12 can be restarted or whether you switch one or more (or possibly all three) workloads to run in AAPLEX2 systems. You must prepare for these decisions; that is, a so-called pre-planned unplanned scenario.

If you decide to switch one or more workloads to run actively in AAPLEX2, you often use a pre-coded planned action GDPS script to perform the switch of the workloads you want. Switching a workload in this case requires the following actions, all of which are performed by a single GDPS command that is issued from a script or by selecting the appropriate action from the GDPS GUI:

1. Stop the routing of transactions for the selected workloads to AAPLEX1.

2. Wait until all updates for the selected workloads on AAPLEX1 are replicated to AAPLEX2.

3. Stop replication for the selected workloads from AAPLEX1 to AAPLEX2.

4. Start the routing of transactions for the selected workloads to AAPLEX2.

After such a planned action script is started, it can complete the requested switching of the workloads in a matter of seconds.
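The four steps above can be sketched in Python as follows. The `Workload` class, its fields, and the counter that stands in for replication latency are all hypothetical; the sketch models only the ordering of the steps, not how GDPS performs them.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Workload:
    """Hypothetical in-memory model of one Active/Standby workload."""
    name: str
    routed_to: Optional[str]          # sysplex that currently receives transactions
    replicating_from: Optional[str]   # source sysplex of the active replication
    pending_updates: int = 0          # updates not yet applied on the target
    log: List[str] = field(default_factory=list)


def planned_switch(wl: Workload, source: str, target: str) -> None:
    """Apply the four steps of a planned switch from source to target."""
    # 1. Stop the routing of transactions to the source sysplex.
    wl.routed_to = None
    wl.log.append(f"stop routing to {source}")
    # 2. Wait until all updates are replicated (modeled as draining a counter).
    while wl.pending_updates > 0:
        wl.pending_updates -= 1
    wl.log.append("replication drained")
    # 3. Stop replication from source to target.
    wl.replicating_from = None
    wl.log.append(f"stop replication {source} -> {target}")
    # 4. Start the routing of transactions to the target sysplex.
    wl.routed_to = target
    wl.log.append(f"start routing to {target}")


wl = Workload("Workload_2", routed_to="AAPLEX1",
              replicating_from="AAPLEX1", pending_updates=3)
planned_switch(wl, "AAPLEX1", "AAPLEX2")
print(wl.routed_to, wl.log)
```

Routing is stopped before the drain so that no new updates arrive on the source while the last updates are being replicated.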

As you can see, we do not stop the selected workloads in AAPLEX1. The workload does not need to be stopped for this particular scenario where we toggled the subject workloads to the other site to temporarily provide more capacity, remove a temporary single point of failure, or both.

We assumed in this case that AAPLEX2 had sufficient capacity available to run the workloads being switched. If AAPLEX2 did not have sufficient capacity, GDPS also can activate On/Off Capacity on Demand (OOCoD) on one or more servers in Site2 that are running the AAPLEX2 systems before routing transactions there.

Now, assume that you decide to switch Workload_2 to Site2, but you keep Site1/AAPLEX1 as the primary for the other two workloads. When the switch is complete, the resulting position is shown in Figure 7-5. In the figure, we assume that you also restarted in place the failed image, AASYS12.

Figure 7-5 GDPS Continuous Availability environment with different workloads active in different sites

The router cloud shows to which site the transactions for each of the workloads are routed. Based on routing, AAPLEX2 is now the active sysplex for Workload_2. AAPLEX1 remains the active sysplex for Workload_1 and Workload_3. Replication for the data for Workload_2 is from AAPLEX2 to AAPLEX1. Replication for the other two workloads is still from AAPLEX1 to AAPLEX2. You might hear the term dual Active/Active being used to describe this kind of environment, where both sites or sysplexes are actively running different workloads, but each workload is Active/Standby.

The example that we discussed was an outage of AASYS12 that runs only cloned instances of the applications for Workload_1 and Workload_2. In contrast, Workload_3 does not include any cloned instances and runs only on AASYS11.

An unplanned outage of AASYS11 results in a failure of Workload_3 in its current sysplex. This failure is detected and, based on your workload failure policy, can trigger an automatic switch of the failed workload to the sysplex that is standby for that workload.

However, if you do not want GDPS to perform automatic workload switch for failed workloads, you can select the option of an operator prompt. The operator is prompted whether GDPS is to switch the failed workload or not. If the operator accepts switching of the workload, GDPS performs the necessary actions to switch the workload. No pre-coded scripts are necessary for this kind of switch that results from a workload failure (automatic or operator confirmed). GDPS understands the environment and performs all the required actions to switch the workload.

Continuing with the same example where AASYS11 failed, which results in failure of Workload_3 in AAPLEX1, when GDPS performs the workload switch, AAPLEX2 becomes the active sysplex and AAPLEX1 is the standby. However, AAPLEX1 can serve only as standby when AASYS11 is restarted and Workload_3 is started on it.

Meanwhile, transactions are running in AAPLEX2 and updating the data for Workload_3. Until replication components of Workload_3 are restarted in AAPLEX1, the updates are not replicated from AAPLEX2 to AAPLEX1.

When replication components are restored on AAPLEX1, replication must be started for Workload_3 from AAPLEX2 to AAPLEX1. The replication components for Workload_3 on AAPLEX1 now resynchronize, and the delta updates that occurred while replication was down are sent across. When this process is complete, AAPLEX1 can be considered to be ready as the standby sysplex for Workload_3.

For an entire site/sysplex failure, GDPS provides capabilities that are similar to those for an individual workload failure. In this case, multiple workloads might be affected.

Similar to workload failure, a policy determines whether GDPS is to automatically switch workloads that fail as a result of a site failure or perform a prompted switch. The only difference here is that the policy is for workloads that fail as a result of an entire site failure whereas in the previous example, we discussed the policy for individual workload failure.

You can specify for each workload individually whether GDPS is to perform an automatic switch or prompt the operator. Also, you can select a different option for each workload (automatic or prompt) for individual workload failure versus site failure.
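One way to picture these per-workload settings is as a small policy table. In the following Python sketch, the table layout, the key names, and the values that are assigned to each workload are assumptions for illustration; they do not reflect how GDPS stores or names its policy.

```python
# Hypothetical policy table: one entry per workload, with a separate
# choice for an individual workload failure and for a site failure.
POLICY = {
    "Workload_1": {"workload_failure": "automatic", "site_failure": "prompt"},
    "Workload_2": {"workload_failure": "prompt", "site_failure": "prompt"},
    "Workload_3": {"workload_failure": "automatic", "site_failure": "automatic"},
}


def should_switch(workload: str, failure_type: str,
                  operator_accepts: bool = False) -> bool:
    """Return True if the workload is to be switched to its standby sysplex."""
    action = POLICY[workload][failure_type]
    if action == "automatic":
        return True
    # "prompt": the switch happens only if the operator accepts it.
    return operator_accepts


print(should_switch("Workload_1", "workload_failure"))
print(should_switch("Workload_1", "site_failure"))
print(should_switch("Workload_1", "site_failure", operator_accepts=True))
```

In this sketch, Workload_1 is switched automatically when only the workload fails, but waits for the operator when the whole site fails.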

For entire site or sysplex failures where multiple workloads are affected and switched, GDPS provides parallelization. The RTO for switching multiple workloads is much the same as switching a single workload.
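The effect of parallelization on elapsed time can be illustrated with a thread pool. In this Python sketch, `switch_workload` is a stand-in whose sleep models the duration of one switch; it is not a GDPS function, and the timing is arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def switch_workload(name: str) -> str:
    """Stand-in for one workload switch; the sleep models its duration."""
    time.sleep(0.1)
    return name


workloads = ["Workload_1", "Workload_2", "Workload_3"]

start = time.monotonic()
with ThreadPoolExecutor(max_workers=len(workloads)) as pool:
    # All switches are started together instead of one after the other.
    switched = list(pool.map(switch_workload, workloads))
elapsed = time.monotonic() - start

print(switched, f"{elapsed:.2f}s")
```

Because the three simulated switches overlap, the elapsed time is close to that of a single switch rather than the sum of all three.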

Unplanned workload switches are expected to take slightly longer than planned switches because GDPS must wait to ensure that the unresponsive condition of the systems or workloads is not because of a temporary stall that can soon clear itself (that is, a false alarm). This safety mechanism is similar to the failure detection interval for systems that are running in a sysplex where in the Active-Active case, the aim is to avoid unnecessary switches because of a false alert. However, after the failure detection interval expires and the systems or workloads continue to be unresponsive, the workload switches are fast and performed in parallel for all workloads that are switched.
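The role of the failure detection interval can be sketched as follows. The polling model, the function name, and the interval that is expressed as a number of polling ticks are assumptions that are made for this Python illustration.

```python
from typing import Iterable


def confirm_failure(health_samples: Iterable[bool], detection_interval: int) -> bool:
    """Return True only if the target stays unresponsive for the whole interval.

    health_samples: one Boolean per polling tick (True = responsive).
    detection_interval: consecutive unresponsive ticks that are required.
    Both parameters are illustrative assumptions.
    """
    unresponsive_ticks = 0
    for responsive in health_samples:
        if responsive:
            # A temporary stall cleared itself: reset and keep monitoring.
            unresponsive_ticks = 0
        else:
            unresponsive_ticks += 1
            if unresponsive_ticks >= detection_interval:
                return True
    return False


temporary_stall = confirm_failure([False, False, True, False], 3)
sustained_outage = confirm_failure([True, False, False, False], 3)
print(temporary_stall, sustained_outage)
```

Only the second sequence stays unresponsive for three consecutive ticks, so only it is confirmed as a failure that warrants a switch.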

In summary, GDPS Continuous Availability manages individual workloads. Different workloads can be active in different sites. What is not allowed is for a particular workload to be actively receiving and running transactions in more than one site at any time.

7.3.2 Considerations for other non-Active-Active workloads

In the same sysplex where Active-Active workloads are running, you might have other workloads that are not managed by GDPS Continuous Availability.

In such an environment where Active-Active and non-Active-Active workloads coexist, it is important to provide the necessary level of isolation for the Active-Active workloads and data. The data that belongs to the Active-Active workloads is replicated under GDPS Continuous Availability control and must not be used by non-managed applications.

Assume that a workload is active in Site1 and standby in Site2. Also, assume that a non-managed application is in Site1 that uses the same data that is used by your managed workload.

If you now switch your managed workload to Site2, the non-managed workload that is not included in the Active-Active solution scope continues to update the data in Site1 while the managed workload started to update the database instance in Site2. Such use of data that belongs to Active-Active workloads by non-managed applications can result in data loss, potential data corruption, and serious operational issues.
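The following Python sketch illustrates how an update can be lost in this scenario. The two dictionaries are hypothetical stand-ins for the database instances, and replication is simplified to overwriting the target value with the source value.

```python
# Two hypothetical database instances, one per site, initially in sync.
site1_db = {"balance": 100}
site2_db = dict(site1_db)

# The managed workload is switched to Site2 and updates the Site2 instance.
site2_db["balance"] += 50

# A non-managed application in Site1 keeps updating the Site1 instance.
site1_db["balance"] -= 30
value_before_replication = site1_db["balance"]

# Replication now flows from Site2 to Site1 and overwrites the Site1 value,
# so the update that was made by the non-managed application is lost.
site1_db.update(site2_db)

print("Site1 before replication:", value_before_replication)
print("Site1 after replication:", site1_db["balance"])
```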

For this reason, the data that belongs to Active-Active workloads must not be modified in any way by other applications. The simplest way to provide this isolation is to run Active-Active workloads and other workloads in different sysplexes.

We understand that it might not be easy or possible to provide sysplex-level isolation. In this case, if you isolated the Active-Active workloads and data, you might have other non-managed workloads and the data for such workloads coexisting in the same sysplex with Active-Active.

However, another technique in addition to GDPS Continuous Availability for the Active-Active workloads (perhaps hardware replication together with a solution such as GDPS Metro, GDPS GM, or GDPS XRC), must be used to protect the data and manage the recovery process for the non-Active-Active workloads.

GDPS Continuous Availability includes specific functions to cooperate and coordinate actions with GDPS Metro that is running on the same sysplex. GDPS Metro can protect the entire sysplex, not just the systems that are running the Active-Active workloads. For more information about this capability, see 7.5, “GDPS Continuous Availability co-operation with GDPS Metro” on page 239.

GDPS Continuous Availability also provides for integration of disk replication functions for a GDPS MGM configuration so that the GDPS Continuous Availability Controllers can act as a single point of management and control for GDPS Continuous Availability workloads and GDPS MGM replication. All data for all systems (for Active-Active and non Active-Active workloads) can be covered with GDPS MGM. For more information about this facility, see 7.6, “GDPS Continuous Availability disk replication integration” on page 241.

Because client environments and requirements vary, no “one size fits all” type of recommendation can be made. It is possible to combine GDPS Continuous Availability with various other hardware-replication-based GDPS products to provide a total recovery solution for a sysplex that houses Active-Active and other workloads.

If you cannot isolate your Active-Active workloads into a separate sysplex, discuss this issue with your IBM GDPS specialist, who can provide you with guidance that is based on your specific environment and requirements.

7.4 GDPS Continuous Availability functions and features

In this section, we provide a brief overview of the following functions and capabilities that are provided by the GDPS Continuous Availability product:

•GDPS graphical user interface (GUI)

•Standard Actions for system/hardware automation

•Monitoring and Alerting

•GDPS scripts

•GDPS application programming interfaces (APIs)

7.4.1 GDPS Continuous Availability graphical user interface

GDPS Continuous Availability is operated on the Controller systems by using an operator interface that is provided through a graphical user interface (GUI). The interface is intuitive and easy to use. Unlike other predecessor GDPS products, no 3270-based user interface is available with GDPS Continuous Availability.

Figure 7-6 Initial GUI window

The GUI window features the following sections:

1. A header bar that includes the following components:

– The name of the GDPS solution.

– An Actions button with which you can run commands that are relevant to the current window.

– A Systems button that is used to change to a different NetView instance.

– A TEP button that is used to access the Tivoli Enterprise Portal. In addition to providing a monitoring interface to the overall solution, the Tivoli Enterprise Portal allows you to set up specific situations for alerting of conditions, such as the replication latency exceeding a certain threshold.

The workload-related workspaces also can quickly show such things as the number of servers active in both sites and to where the routing is active. This information can be useful to correlate against the information that is shown in the GDPS web interface to confirm the status of any particular resources.

– A Help button.

– A button that indicates the logged-on user ID, from which you can change the refresh rate and the order of display of workloads, and log out.

2. An application menu, which provides access to Standard Actions, CPC Operations, Workload Management, Script management, SDF Alerts, CANZLOG, NetView, WTORs, Debug settings, and Dump settings.

3. The main area of the window, which is known as the Dashboard. It displays information about the state of replication of workloads between sysplexes.

4. The status summary area. It provides general information about the status of routing, replication, and switching, and the system and controllers, plus SDF Alerts and WTORs.

Most frames include a Help button to provide extensive help text that is associated with the information that is displayed and the selections that are available on that specific frame.

Note: Some windows that are provided as samples might not be the latest version of the window. They are intended to give you an idea of the capabilities that are available by using the GUI.

7.4.2 GDPS Standard Actions, CPC Operations, and Workload Management

GDPS Standard Actions, CPC Operations, and Workload Management are accessed by using the GUI from the icon that is shown in Figure 7-7 on page 223.

Figure 7-7 Accessing Standard Actions, CPC Operations, and Workload Management

GDPS Standard Actions

Figure 7-8 on page 224 shows the following GDPS Standard Actions that can be performed against the selected target system. The actions are available from the drop-down menu or by highlighting the target system and then clicking Actions:

•Manage Load Table

•Stop (graceful shutdown)

•Load

•Reset

•ReIPL

•Activate

•Deactivate

•IPL Info (modification and selection of Load Address and Load Parameters to be used during a subsequent LOAD operation)

Figure 7-8 Standard Actions frame showing available actions

Most of the GDPS Standard Actions require actions to be done on the HMC. The interface between GDPS and the HMC is through the BCP Internal Interface (BCPii). GDPS uses the BCPii interface that is provided by System Automation for z/OS.

When a specific Standard Action is selected by clicking the button for that action, more prompts and windows are displayed for operator actions, such as confirming that you want to perform the subject operation.

Although in this example we showed the use of GDPS Standard Actions to perform operations against the other Controller, in an Active-Active environment, you also use the same set of Standard Actions to operate against production systems in the environment.

If specific actions are performed as part of a compound workflow (such as planned shutdown of an entire site where multiple systems are stopped or the LPARs for multiple systems RESET and Deactivated), the operator often does not use the web interface. Instead, they perform the same tasks by using the GDPS scripting interface. For more information about GDPS scripts, see 7.4.6, “GDPS Continuous Availability scripts” on page 233.

The GDPS LOAD and RESET Standard Actions (available through the Standard Actions window or the SYSPLEX script statement) allow specification of a CLEAR or NOCLEAR operand. This feature provides operational flexibility to accommodate customer procedures, which eliminates the requirement to use the HMC to perform specific LOAD and RESET actions.

GDPS supports stand-alone dumps by using the GDPS Standard Actions window. The stand-alone Dump option can be used against any IBM Z operating system that is defined to GDPS. Customers that use GDPS facilities to perform HMC actions no longer need to use the HMC for stand-alone dumps.

CPC Operations

Under CPC operations, several tasks can be performed and information retrieved for a target CPC. The following tasks are available from a drop-down menu, which you access by right-clicking the target CPC or by highlighting the target CPC and then clicking Actions:

•Query the status of a CPC

•View records allows you to view installed temporary capacity upgrade records

•Capacity allows you to manage temporary capacity upgrade records

•Suspend a support element

•Resume a support element

Workload Management option

When you select Workload Management, a window that is similar to the window that is shown in Figure 7-9 opens, which provides a list of all your workloads. You can filter the list to see only a subset of the workloads. You also can perform several actions against selected workloads, such as start or stop on a selected sysplex, start or stop routing, start or stop replication, or force a switch.

Figure 7-9 Workload Management list of workloads

When you select a workload, a window that is similar to the window that is shown in Figure 7-10 opens. It is slightly different for different types of workload, but the general layout is the same.

Figure 7-10 View Workload window for a Db2 update workload

Information is provided concerning the workload and its routing situation, replication situation, and components. You can also start and stop a workload, routing, and replication.

7.4.3 GDPS Planned Actions, Batch Scripts, and Switch Scripts

GDPS Planned Actions, Batch Scripts, and Switch Scripts are accessed by using the icon in the GUI, as shown in Figure 7-11.

Figure 7-11 Accessing Planned actions, Batch scripts, and Switch scripts

GDPS provides facilities for scripting steps to be taken for certain planned and unplanned outages. Scripts can be run on the current Master only. The following GDPS Planned and Unplanned actions are available:

•Planned/Control

Planned Actions allows you to view and run scripts for planned scenarios such as site shutdown, site start, or CEC shutdown and start. These scripts can run at any time. Control scripts are started by the operator by using the GDPS UI from the Planned Actions Work Area. The intent of Control scripts is to provide an automated “workflow” of the steps that are required to process a planned outage scenario, and the return-to-normal scenarios applicable to these planned outages.

•Batch

These planned actions cannot be started from the GDPS UI, but only from some other planned event. For example, the starting event can be a job or messages that are triggered by a job scheduling application. Batch scripts can be started only from outside of GDPS by using the VPCEXIT2 batch interface exit.

•Switch

These unplanned actions are run as a result of a GDPS-detected workload or site failure. They cannot be activated manually. They are started automatically (if coded) as a result of an automatic or prompted workload or site switch action that was started by GDPS. The intent of Switch scripts is to complement the standard workload or site switch processing that is performed by GDPS (for example, to add capacity to the standby site).

For more information about scripts, see 7.4.6, “GDPS Continuous Availability scripts” on page 233.

7.4.4 SDF Alerts, CANZLOG, NetView, and WTORs

SDF Alerts, CANZLOG, the NetView command interface, and WTOR information are accessed by using the icon in the GUI, as shown in Figure 7-12.

Figure 7-12 Accessing SDF Alerts, CANZLOG, NetView, and WTORs

SDF Alerts

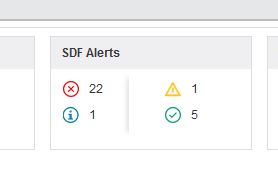

GDPS Continuous Availability Controller systems perform periodic monitoring of resources and conditions that are critical to, or important for, the healthy operation of the environment. For example, GDPS checks whether the workloads that are managed by GDPS are running on the active and standby sysplexes, whether the BCP Internal Interface is functional, whether connectivity from the Controller to the production systems is intact, what the current replication latency is, and so on. If GDPS discovers any exception situations, it raises Status Display Facility (SDF) alerts.

Status Display Facility (SDF) is the focal point for monitoring the GDPS Continuous Availability environment. You can view and manage SDF alerts by using the icon that is shown in Figure 7-12 on page 227. You can also use the SDF Alerts pane, which is always present at the bottom of the GUI window, as shown in Figure 7-13.

Figure 7-13 SDF Alerts pane

Either way, you see the window that is shown in Figure 7-14, from where you can view and reply to current SDF Alerts.

Figure 7-14 SDF Alerts window

If all is well and no alerts exist that indicate a potential issue with the environment, the status icons are displayed in green. If any SDF status icon is displayed in a color other than green, this status indicates that a real or a potential problem exists.

CANZLOG

Consolidated Audit, NetView, and z/OS Log (CANZLOG) captures anything that goes into the NetLog, and any syslog messages or commands that flow through the SSI in a z/OS system or LPAR. The active CANZLOG is a z/OS data space that is set up in the NetView module. It can be used in a monoplex or a sysplex environment.

When you select CANZLOG (as shown in Figure 7-12 on page 227), a pane that is similar to the pane that is shown in Figure 7-15 opens. From this pane, you can apply a filter to see only the messages that interest you. The log (or specified parts of it) also can be exported to the Syslog or Netlog.

Figure 7-15 CANZLOG

NetView Command Interface

Access the NetView Command Interface by using the icon that is shown in Figure 7-12 on page 227. A NetView console is presented, which features a NetView command line and Submit button underneath the console, as shown in Figure 7-16 on page 230. From here, you can enter NetView commands as normal.

Figure 7-16 NetView command interface

WTORs

Outstanding GDPS WTORs (Write to Operator) are indicated in the status pop-up messages at the lower right of the dashboard window, as shown in Figure 7-17.

Figure 7-17 WTOR notification pop-up message

You can access the WTORs by using the icon that is shown in Figure 7-12 on page 227 or the WTOR box, which is always present at the bottom of the GUI window, as shown in Figure 7-18.

Figure 7-18 WTOR box

7.4.5 Settings and Debug

Figure 7-19 Accessing Settings and Debug

Options

As shown in Figure 7-20, this window provides basic information about the following parameters:

•TEP URL

•Master List

•LifeLine Advisor Failure

•Topology

Figure 7-20 Options window

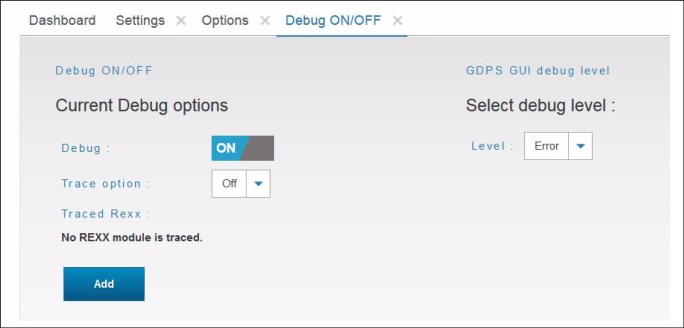

Debug ON/OFF

As a NetView based automation application, GDPS Continuous Availability uses the NetView log as the main repository for information logging. In addition to the NetView log, selected critical GDPS messages are sent to the z/OS system log.

The GDPS Debug facility enables logging in the NetView log, which provides more detailed trace entries that pertain to the operations that GDPS is performing. If you encounter a problem, you might want to collect debug information for problem determination purposes. If directed by IBM support, you might need to trace execution of specific modules. The GDPS debug facility also allows you to select the modules to be traced.

The Debug frame is shown in Figure 7-21.

Figure 7-21 Debug ON/OFF pane

7.4.6 GDPS Continuous Availability scripts

We reviewed the GDPS web interface, which provides powerful functions to help you manage your workloads and systems in the sites where they are running. However, the GDPS web interface is not the only means for performing these functions.

Nearly all of the functions that can be manually started by the operator through the web interface are also available through GDPS scripts. Other actions, such as activating capacity on demand (CBU or OOCoD), are not available through the web interface and are possible only by using GDPS scripts. In addition to the set of script commands that are supplied by GDPS, you can integrate your own REXX procedures and run them as part of a GDPS script.

A script is a procedure that is recognized by GDPS that pulls together into a workflow (or a list) one or more GDPS functions to be run one after the other. GDPS checks the result of each command and proceeds with the next command only if the previous command ran successfully. Scripts can be started manually through the GDPS windows (by using the Planned Actions interface), automatically by GDPS in response to an event (Unplanned Actions), or through a batch interface.

Scripts are easy to code. The use of scripts forces you to plan for the actions you must take for various planned and unplanned outage scenarios, and how to bring the environment back to normal. In this sense, when you use scripts, you plan even for an unplanned event and are not caught unprepared. This is an important aspect of GDPS. Scripts are powerful because they can use the full capability of GDPS.

The ability to plan and script your scenarios and start all of the GDPS functions provides the following benefits:

•Speed

A script runs the requested actions as quickly as possible. Unlike a human, it does not need to search for the latest procedures or the commands manual. It can check results fast and continue with the next statement immediately when one statement is complete.

•Consistency

If you look into most computer rooms immediately following a system outage, what would you see? Mayhem. Operators frantically scrambling for the latest system programmer instructions. All the phones ringing. Every manager within reach asking when the service will be restored. And every systems programmer with access vying for control of the keyboards. All this chaos results in errors because humans naturally make mistakes when under pressure. But with automation, your well-tested procedures run in the same way, time after time, regardless of how much you shout at them.

•Thoroughly thought-out and tested procedures

Because they behave in a consistent manner, you can test your procedures over and over until you are sure that they do everything that you want, in exactly the manner that you want. Also, because you must code everything and cannot assume a level of knowledge (as you might with instructions that are intended for a human), you are forced to thoroughly think out every aspect of the action the script is intended to undertake. Because of the repeatability and ease of use of scripts, they lend themselves more easily to frequent testing than manual procedures.

•Reduction of requirement for onsite skills

How many times have you seen disaster recovery tests with large numbers of people onsite for the test and many more standing by for a call? How realistic is this scenario? Can all of these people be onsite on short notice if a catastrophic failure occurred?

The use of GDPS automation and scripts removes the need for the numbers and the range of skills that enterprises traditionally needed to do complex or compound reconfiguration and recovery actions.

Planned Actions

Planned Actions are GDPS scripts that are started from the GUI by using the Planned Actions frame, as described in 7.4.3, “GDPS Planned Actions, Batch Scripts, and Switch Scripts”. GDPS scripts are procedures that pull together into a list one or more GDPS functions to be run sequentially. Scripted procedures that you use for a planned change to the environment are known as control scripts.

A control script that is running can be stopped if necessary. Control scripts that were stopped or that failed can be restarted at any step of the script. These capabilities provide a powerful and flexible workflow management framework.
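The sequential execution and the restart capability can be sketched with a small runner. This Python example is a simplified model: the `run_script` function, the `start_at` parameter, and the simulated LOAD failure are hypothetical, and the callables do not invoke GDPS.

```python
from typing import Callable, List, Tuple

# Each step pairs a statement with a callable that returns True on success.
# The callables are stand-ins; they do not invoke GDPS.
Step = Tuple[str, Callable[[], bool]]


def run_script(steps: List[Step], start_at: int = 0) -> int:
    """Run steps in order from start_at.

    Returns the index of the step that failed, or len(steps) if all
    remaining steps completed successfully.
    """
    for index in range(start_at, len(steps)):
        statement, action = steps[index]
        if not action():
            return index  # stop: later steps are not run
    return len(steps)


attempts = {"LOAD AASYS11": 0}


def flaky_load() -> bool:
    """Fail on the first call and succeed afterward (simulated)."""
    attempts["LOAD AASYS11"] += 1
    return attempts["LOAD AASYS11"] > 1


script = [
    ("STOP AASYS11", lambda: True),
    ("LOAD AASYS11", flaky_load),
]

failed_at = run_script(script)                      # stops at the failed step
finished = run_script(script, start_at=failed_at)   # restart at that step
print(failed_at, finished)
```

Restarting at the failed step avoids repeating the STOP that already completed, which is the benefit of being able to resume a script at any step.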

As a simple example, you can have a script that recycles a z/OS system. This action is performed if you apply maintenance to the software that requires a re-IPL of the system. The script runs the STOP standard action, which performs an orderly shutdown of the target system, followed by a LOAD of the same system.

However, it is possible that in your environment you use alternative system volumes. While your system runs on one set of system volumes, you perform maintenance on the other set. So, assuming that you are running on alternative SYSRES1 and you apply this maintenance to SYSRES2, your script also must point to SYSRES2 before it performs the LOAD operation.

As part of the customization that you perform when you install GDPS, you can define entries with names of your choice for the load address and load parameters that are associated with the alternative SYSRES volumes for each system. When you want to LOAD a system, you use a script statement to point to one of these pre-customized entries by using the entry name that you used when defining them to GDPS.
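The idea of named, pre-customized load entries can be pictured as a lookup table. In the following Python sketch, the table layout, the helper functions, and the load addresses and load parameters are made-up placeholders; only the system name and the ALTRES2 entry name come from the surrounding text.

```python
# Hypothetical load table: entry names are chosen at customization time.
# The load addresses and load parameters below are made-up placeholders.
LOAD_TABLE = {
    "AASYS11": {
        "ALTRES1": {"load_address": "1000", "load_parm": "1000A1"},
        "ALTRES2": {"load_address": "2000", "load_parm": "2000A2"},
    }
}

selected = {}  # entry that is currently selected for each system


def ipltype(system: str, mode: str) -> None:
    """Point the next LOAD of a system at a named, pre-customized entry."""
    if mode not in LOAD_TABLE[system]:
        raise KeyError(f"no load table entry {mode!r} for {system}")
    selected[system] = mode


def load(system: str) -> dict:
    """Return the load address and load parameters that a LOAD would use."""
    return LOAD_TABLE[system][selected[system]]


ipltype("AASYS11", "ALTRES2")
print(load("AASYS11"))
```

The script refers only to the entry name, so the device addresses can change in the customization without any change to the scripts that use them.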

Example 7-1 shows a sample script to perform this action. In this example, MODE=ALTRES2 points to the load address and load parameters that are associated with alternative SYSRES2 where you applied your maintenance.

Example 7-1 Sample script to re-IPL a system on an alternative SYSRES

COMM='Re-IPL system AASYS11 on alternate SYSRES2'

SYSPLEX='STOP AASYS11'

IPLTYPE='AASYS11 MODE=ALTRES2'

SYSPLEX='LOAD AASYS11'
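The idea of pointing to a pre-customized entry by name can be sketched as a simple lookup. The following Python fragment is a minimal illustration, not the GDPS implementation: the `LoadEntry` type, the `LOAD_ENTRIES` table, the `resolve_load_entry` function, and all load address and load parameter values are hypothetical.

```python
from typing import Dict, NamedTuple


class LoadEntry(NamedTuple):
    load_address: str
    load_parameters: str


# Hypothetical customization data: entry names chosen by the installation,
# each mapped to the load address and load parameters of one SYSRES set.
LOAD_ENTRIES: Dict[str, Dict[str, LoadEntry]] = {
    "AASYS11": {
        "ALTRES1": LoadEntry("1000", "1000A1M1"),
        "ALTRES2": LoadEntry("2000", "2000A2M1"),
    }
}


def resolve_load_entry(system: str, mode: str,
                       entries: Dict[str, Dict[str, LoadEntry]] = LOAD_ENTRIES) -> LoadEntry:
    """Return the load values that a named entry stands for."""
    try:
        return entries[system][mode]
    except KeyError:
        raise KeyError(f"no load entry {mode!r} defined for system {system!r}") from None
```

With this table, selecting `ALTRES2` for `AASYS11` yields the values for the volume set where the maintenance was applied, so the script itself never carries device addresses.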

Example 7-2 shows a sample script to switch a workload that uses Db2 or IMS replication from its current active site to its standby site.

Example 7-2 Sample script to switch a workload between sites

COMM='Switch WORKLOAD_1'

ROUTING='SWITCH WORKLOAD=WORKLOAD_1'

No target site is specified in the ROUTING SWITCH statement because GDPS is aware of where WORKLOAD_1 is active and switches it to the other site. The single ROUTING SWITCH statement performs the following actions:

•Stops routing of update transactions to the original active site.

•Waits for replication of the final updates in the current active site to drain.

•Starts routing update transactions to the former standby site, which now becomes the new active site for this workload.

•If a query workload is associated with this update workload, and if, for example, 70% of queries were being routed to the original standby site, the routing for the query workload is changed to send 70% of queries to the new standby site after the switch.

All of these actions are done as a result of running a single script with a single command. This feature demonstrates the simplicity and power of GDPS scripts.
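The sequence behind the switch can be sketched in a few lines. The following Python model is an illustration under stated assumptions, not GDPS logic: the `Workload` class, the `switch_workload` function, the `replication_drained` callback, and the site names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Workload:
    name: str
    active_site: str
    standby_site: str
    # Percentage of query traffic routed to each site.
    query_split: Dict[str, int]


def switch_workload(workload: Workload,
                    replication_drained: Callable[[], bool],
                    log: List[str]) -> None:
    """Model the four actions of a workload switch, in order."""
    old_active, old_standby = workload.active_site, workload.standby_site

    log.append(f"stop update routing to {old_active}")
    while not replication_drained():
        log.append("waiting for replication to drain")
    log.append(f"start update routing to {old_standby}")
    workload.active_site, workload.standby_site = old_standby, old_active

    # The query shares follow the roles: the share that the old standby
    # received now goes to the new standby (the former active site).
    workload.query_split = {
        old_active: workload.query_split[old_standby],
        old_standby: workload.query_split[old_active],
    }
    log.append(f"query routing now {workload.query_split}")
```

For a workload that is active in one site with 70% of queries routed to the standby site, the model swaps the roles and sends 70% of queries to the former active site, which matches the behavior in the last bullet.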

Our final script example shuts down an entire site, perhaps in preparation for disruptive power maintenance at that site. For this example, we use the configuration with three workloads, all active in Site1, as shown in Figure 7-22.

Figure 7-22 GDPS Continuous Availability environment sample for Site1 shutdown script

The following sequence is used to completely shut down Site1:

1. Stop routing transactions for all workloads to AAPLEX1.

2. Wait until all updates on AAPLEX1 are replicated to AAPLEX2. In this example, we assume that these workloads do not support the ROUTING SWITCH function (that is, they are not based on Db2 or IMS replication). Therefore, full automation for determining whether the data has drained is not available.

3. Stop replication from AAPLEX1 to AAPLEX2.

4. Activate On/Off Capacity on Demand (OOCoD) on the CECs that are running the AAPLEX2 systems and CFs (although not shown in Figure 7-22, we assume the CECs are named CPC21 and CPC22 for this example).

5. Start routing transactions for all workloads to AAPLEX2.

6. Stop the AASYS11 and AASYS12 systems.

7. Deactivate the system and CF LPARs in Site1.

The planned action script to accomplish the Site1 shutdown for this environment is shown in Example 7-3.

Example 7-3 Sample Site1 shutdown script

COMM='Switch all workloads to Site2 and Stop Site1'

ROUTING='STOP WORKLOAD=ALL SITE=AAPLEX1'

ASSIST='WAIT UNTIL ALL UPDATES HAVE DRAINED - REPLY OK WHEN DONE'

REPLICATION='STOP WORKLOAD=ALL FROM=AAPLEX1 TO=AAPLEX2'

OOCOD='ACTIVATE CPC=CPC21 ORDER=order#'

OOCOD='ACTIVATE CPC=CPC22 ORDER=order#'

ROUTING='START WORKLOAD=ALL SITE=AAPLEX2'

SYSPLEX='STOP SYSTEM=(AASYS11,AASYS12)'

SYSPLEX='DEACTIVATE AASYS11'

SYSPLEX='DEACTIVATE AASYS12'

SYSPLEX='DEACTIVATE CF11'

SYSPLEX='DEACTIVATE CF12'

These sample scripts demonstrate the power of the GDPS scripting facility. Simple, self-documenting script statements drive compound and complex actions. A single script statement can operate against multiple workloads or multiple systems. A complex procedure can be described in a script by coding only a handful of statements.
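Because each statement in the samples is a keyword followed by a quoted string, scripts of this shape are easy to inspect with ordinary tooling. The following Python sketch is a hypothetical helper for reviewing script text, not the GDPS script processor: the `parse_script` function and its tolerant handling of an optional equal sign and of typographic quotation marks are our own assumptions.

```python
import re
from typing import List, Tuple

# KEYWORD, an optional "=", then text in straight or typographic quotes.
_STATEMENT = re.compile(
    r"^\s*(\w+)\s*=?\s*['\u2018\u2019](.*)['\u2018\u2019]\s*$"
)


def parse_script(text: str) -> List[Tuple[str, str]]:
    """Split script text into (keyword, operand string) pairs.

    Blank lines are skipped; any other line that does not look like
    KEYWORD='...' raises ValueError.
    """
    statements = []
    for line in text.splitlines():
        if not line.strip():
            continue
        match = _STATEMENT.match(line)
        if match is None:
            raise ValueError(f"not a KEYWORD='...' statement: {line!r}")
        statements.append((match.group(1), match.group(2)))
    return statements
```

A reviewer might use such a helper to list, for example, every SYSPLEX statement in a script before the script is put into production.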

Another benefit of such a facility is the reduction in skill requirements to perform the necessary actions to accomplish the task at hand. For example, without scripts, the workload switch and the site shutdown scenarios (depending on your organizational structure within the IT department) might require database, application/automation, system, and network skills to be available to perform all of the required steps in a coordinated fashion.

Batch scripts

GDPS also provides a flexible batch interface to initiate scripts to make planned changes to your environment. These scripts, known as batch scripts, cannot be started from the GDPS GUI. Instead, they are started from some other planned event that is external to GDPS. For example, the starting event can be a job or messages that are triggered by a job scheduling application.

This capability, along with the Query Services that are described in “GDPS Continuous Availability Query Services” on page 238, provides a rich framework for user-customizable automation and systems management procedures.

Switch scripts

As described in 7.3.1, “GDPS Continuous Availability: A closer look” on page 217, if a workload or entire site fails, GDPS performs the necessary steps to switch one or more workloads to the standby site. This switching, which is based on the selected policy, can be completely automatic with no operator intervention or can occur after operator confirmation. However, in either case, the steps that are required to switch any workload are performed by GDPS and no scripts are required for this process.

Although GDPS performs the basic steps to accomplish switching of affected workloads, you might want GDPS to perform more actions that are specific to your environment along with the workload switch steps. One such example can be activating CBU for more capacity in the standby site.

Switch scripts are unplanned actions that run as a result of a workload failure or site failure that is detected by GDPS. These scripts cannot be activated manually. If you coded them, they are started automatically as a result of an automatic or prompted workload or site switch action that is started by GDPS. The intent of Switch scripts is to complement the standard workload or site switch processing that is performed by GDPS.

7.4.7 Application programming interfaces

GDPS provides two primary programming interfaces to allow other programs that are written by clients, independent software vendors (ISVs), and other IBM product areas to communicate with GDPS. These APIs allow clients, ISVs, and other IBM product areas to complement GDPS automation with their own automation code. The following sections describe the APIs provided by GDPS.

GDPS Continuous Availability Query Services

GDPS maintains configuration information and status information in NetView variables for the various elements of the configuration that it manages. GDPS Query Services is a capability that allows client-written NetView REXX programs to query the value for numerous GDPS internal variables. The variables that can be queried pertain to the GDPS environment (such as the version and release level of the GDPS control code), sites, sysplexes, and workloads that are managed by GDPS Continuous Availability.

In addition to the Query Services function, which is part of the base GDPS product, GDPS provides several samples in the GDPS SAMPLIB library to demonstrate how Query Services can be used in client-written code.

RESTful APIs

As described in “GDPS Continuous Availability Query Services”, GDPS maintains configuration information and status information about the various elements of the configuration that it manages. Query Services can be used by REXX programs to query this information.

The GDPS RESTful API also provides the ability for programs to query this information. Because it is a RESTful API, it can be used by programs that are written in various programming languages, including REXX, that are running on various server platforms.

In addition to querying information about the GDPS environment, the GDPS RESTful API allows programs that are written by clients, ISVs, and other IBM product areas to start actions against various elements of the GDPS environment. These actions include the following examples:

•Starting and stopping workloads

•Starting, stopping, and switching routing for one or more workloads

•Starting and stopping software replication

•IPLing and stopping systems

•Running scripts

•Starting GDPS monitor processing

These capabilities enable clients, ISVs, and other IBM product areas to provide an even richer set of functions to complement the GDPS functionality.

GDPS provides samples in the GDPS SAMPLIB library to demonstrate how the GDPS RESTful API can be used in programs.
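To show in general terms what a client program for a RESTful interface looks like, the following Python sketch builds (but does not send) HTTP requests by using only the standard library. It is not taken from the GDPS samples: the `build_request` function is hypothetical, the base URL and resource paths must come from the GDPS documentation, and the use of GET for queries and POST with a JSON body for actions is an assumption made for illustration.

```python
import json
from typing import Optional
from urllib.request import Request


def build_request(base_url: str, path: str,
                  action: Optional[dict] = None) -> Request:
    """Build an HTTP request for a query (no body) or an action (JSON body).

    base_url and path are supplied by the caller; no real GDPS resource
    names are assumed here.
    """
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    if action is None:
        # Assumption: status queries are plain GET requests.
        return Request(url, headers={"Accept": "application/json"}, method="GET")
    # Assumption: actions are sent as a JSON document in a POST request.
    body = json.dumps(action).encode("utf-8")
    headers = {"Accept": "application/json", "Content-Type": "application/json"}
    return Request(url, data=body, headers=headers, method="POST")
```

A real client passes the resulting request to `urllib.request.urlopen` together with whatever authentication the installation requires. Because only HTTP and JSON are involved, the same pattern carries over to any language and server platform.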

7.5 GDPS Continuous Availability co-operation with GDPS Metro

In an Active-Active environment, each of the sysplexes that are running the Active-Active workloads must be as highly available as possible. As such, we suggest that the Active-Active workloads are Parallel Sysplex enabled data sharing applications. Although this configuration eliminates a planned or unplanned system outage from being a single point of failure, disk data within each local sysplex is not protected by Parallel Sysplex alone.

To protect the data for each of the two sysplexes that comprise the GDPS Continuous Availability environment, these sysplexes can be running GDPS Metro with Metro Mirror replication and HyperSwap, which complement and enhance local high and continuous availability for the sysplex or sysplexes. For more information about the various capabilities that are available with GDPS Metro, see Chapter 3, “GDPS Metro” on page 53.

With GDPS Continuous Availability and GDPS Metro monitoring and managing the same production systems for a particular sysplex, certain actions must be coordinated. This coordination is necessary so that the GDPS controlling systems for the two environments do not interfere with each other and so that one environment does not misinterpret actions that are taken by the other environment.

For example, it is possible that one of the systems in the sysplex needs to be re-IPLed for a software maintenance action. The re-IPL of the system can be performed from a GDPS Continuous Availability Controller or by using GDPS Metro that is running on all systems in the same sysplex.

Assume that you start the re-IPL from the GDPS Continuous Availability Controller. Without coordination, GDPS Metro detects that this system is no longer active, interprets what was a planned re-IPL of a system as a system failure, and issues a takeover prompt.

The GDPS Continuous Availability co-operation with GDPS Metro provides coordination and serialization of actions across the two environments to avoid issues that can stem from certain common resources being managed from multiple control points. In our example, when you start the re-IPL from the Active-Active Controller, it communicates this action to the GDPS Metro controlling system.

The GDPS Metro controlling system then locks this system as a resource so that no actions can be performed against it until the Active-Active Controller signals completion of the action. This same type of coordination occurs regardless of whether the action is started by GDPS Continuous Availability or GDPS Metro.
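The effect of this serialization can be modeled with a small lock table. The following Python sketch illustrates the idea only and is not how GDPS implements it: the `ResourceLocks` class, the `on_system_inactive` function, and the owner names are hypothetical.

```python
from typing import Dict, Optional


class ResourceLocks:
    """Hypothetical lock table that is kept by one controlling system."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}

    def acquire(self, system: str, owner: str) -> bool:
        """Lock a system for an owner; refuse if it is already locked."""
        if system in self._owners:
            return False
        self._owners[system] = owner
        return True

    def release(self, system: str, owner: str) -> None:
        """Unlock a system; only the owner that locked it can do so."""
        if self._owners.get(system) != owner:
            raise PermissionError(f"{owner} does not hold the lock on {system}")
        del self._owners[system]

    def owner_of(self, system: str) -> Optional[str]:
        return self._owners.get(system)


def on_system_inactive(locks: ResourceLocks, system: str) -> str:
    """Decide how the peer environment reacts when a system goes inactive."""
    if locks.owner_of(system) is not None:
        return "ignore: planned action in progress"
    return "takeover prompt"
```

In this model, a re-IPL that is announced first acquires the lock, so the peer environment treats the inactive system as part of a planned action. Only an unannounced outage leads to a takeover prompt, and a second action against a locked system is refused until the lock is released.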

GDPS Continuous Availability can support coordination with GDPS Metro that is running in one or both of the Active-Active sites.

In Figure 7-23 on page 240, we show a GDPS Continuous Availability environment across two regions, Region A and Region B. SYSPLEXA in Region A and SYSPLEXB in Region B comprise the two sysplexes that are managed by GDPS Continuous Availability. Systems AAC1 and AAC2 are the GDPS Continuous Availability Controller systems. Also, each of these sysplexes is managed by an instance of GDPS Metro, with systems KP1A/KP2A being the GDPS Metro controlling systems for SYSPLEXA and KP1B/KP2B being the GDPS Metro controlling systems for SYSPLEXB.

The GDPS Continuous Availability Controllers communicate with each of the GDPS Metro controlling systems in both regions. It is this communication that makes the cooperation possible.

Figure 7-23 GDPS Continuous Availability co-operation with GDPS Metro

SYSPLEXA contains the data for the Active-Active workloads, and other data for applications that are running in the same sysplex but not managed by Active-Active. Also included is the system infrastructure data, which is also not managed by Active-Active.

All of this data that belongs to SYSPLEXA is replicated within Region A by using Metro Mirror and is HyperSwap-protected and managed by GDPS Metro. The Active-Active data is replicated through software to SYSPLEXB.

Similarly, another instance of GDPS Metro manages SYSPLEXB. The Active-Active data and any non-Active-Active data that belongs to SYSPLEXB are replicated through Metro Mirror and are HyperSwap protected within Region B.

Each of SYSPLEXA and SYSPLEXB can be running in a single physical site or across two physical sites within its respective region.

All of the data within both sysplexes is HyperSwap protected, meaning that a disk within a region is not a single point of failure and the sysplex can continue to function during planned or unplanned disk outages. HyperSwap is transparent to all applications that are running in the sysplex (assuming that the data for all applications is replicated with Metro Mirror). Therefore, it is also transparent to all of the subsystems in charge of running the Active-Active workloads, replicating the Active-Active data, and monitoring the Active-Active environment.