Shared Memory Communications

Shared Memory Communications (SMC) on IBM Z platforms is a technology that can improve throughput by accessing data faster with less latency, while reducing CPU resource consumption compared to traditional TCP/IP communications. Furthermore, applications do not need to be modified to gain the performance benefits of SMC.

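This chapter includes the following topics:

•SMC overview

•SMC over Remote Direct Memory Access

•SMC over Direct Memory Access

•Software support

•Reference material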

7.1 SMC overview

SMC is a technology that allows two peers to send and receive data by using system memory buffers that each peer allocates for its partner’s use. Two types of SMC protocols are available on the IBM Z platform:

•SMC - Remote Direct Memory Access (SMC-R)

SMC-R is a protocol for Remote Direct Memory Access (RDMA) communication between TCP socket endpoints in logical partitions (LPARs) in different systems. SMC-R runs over networks that support RDMA over Converged Ethernet (RoCE). It enables existing TCP applications to benefit from RDMA without requiring modifications. SMC-R provides dynamic discovery of the RDMA capabilities of TCP peers and automatic setup of RDMA connections that those peers can use.

•SMC - Direct Memory Access (SMC-D)

SMC-D implements the same SMC protocol that is used with SMC-R to provide highly optimized intra-system communications. Where SMC-R uses RoCE for communicating between TCP socket endpoints in separate systems, SMC-D uses Internal Shared Memory (ISM) technology for communicating between TCP socket endpoints in the same system. ISM provides adapter virtualization (virtual functions (VFs)) to facilitate the intra-system communications. Hence, SMC-D does not require any additional physical hardware (no adapters, switches, fabric management, or PCIe infrastructure). Therefore, significant cost savings can be achieved when using the ISM for LPAR-to-LPAR communication within the same IBM Z platform.

Important: Both SMC-R and SMC-D require an existing TCP link between the images that are configured to use the SMC protocol.

Both SMC protocols use shared memory architectural concepts, eliminating TCP/IP processing in the data path, yet preserving TCP/IP quality of service (QoS) for connection management purposes.
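
The buffer model described in this section can be pictured with a small toy sketch. It is only an analogy in ordinary process memory: the `Peer` class and its methods are hypothetical names chosen for illustration and are not part of any SMC implementation. Each peer allocates a buffer for its partner, and the partner places data directly into that buffer.

```python
class Peer:
    """Toy model of an SMC peer (hypothetical; not a real SMC API)."""

    def __init__(self, name, buffer_size=64):
        self.name = name
        # Buffer that this peer allocates for its partner to write into.
        self.receive_buffer = bytearray(buffer_size)
        self.bytes_available = 0

    def write_to(self, partner, payload):
        """Place payload directly into the partner's buffer.

        Stands in for an RDMA write (SMC-R) or an ISM write (SMC-D).
        """
        if len(payload) > len(partner.receive_buffer):
            raise ValueError("payload larger than the partner's buffer")
        partner.receive_buffer[:len(payload)] = payload
        partner.bytes_available = len(payload)

    def read(self):
        """Consume whatever the partner last wrote into this peer's buffer."""
        data = bytes(self.receive_buffer[:self.bytes_available])
        self.bytes_available = 0
        return data


client = Peer("client")
server = Peer("server")
client.write_to(server, b"hello")
received = server.read()
```

The point of the sketch is that the sender writes into memory owned by the receiver, so no per-packet TCP/IP processing sits in the data path.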

7.1.1 Remote Direct Memory Access

RDMA is primarily based on InfiniBand (IFB) technology. It has been available in the industry for many years. In computing, RDMA is direct memory access from the memory of one computer to that of another without involving either one's operating system (kernel). This permits high-throughput, low-latency network transfer, which is commonly used in massively parallel computing clusters.

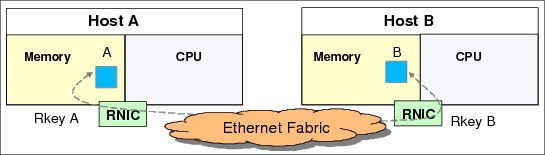

There are two key requirements for RDMA, as shown in Figure 7-1:

•A reliable lossless network fabric (LAN for Layer 2 in data center network distance)

•An RDMA capable NIC and Ethernet fabric

Figure 7-1 RDMA technology overview

RoCE uses existing Ethernet fabric (switches with Global Pause enabled as defined by the IEEE 802.3x port-based flow control) and requires RDMA-capable NICs (RNICs), such as 25GbE RoCE Express2, 10GbE RoCE Express2, and 10GbE RoCE Express features.

7.1.2 Direct Memory Access

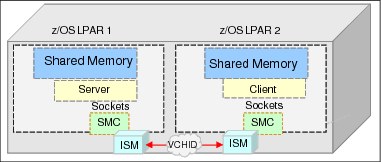

Direct Memory Access (DMA) uses a virtual PCI adapter that is called ISM rather than an RNIC as with RDMA. The ISM interfaces are associated with IP interfaces (for example, HiperSockets or OSA-Express) and are dynamically created, automatically started and stopped, and are auto-discovered.

The ISM does not use queue pair (QP) technology like RDMA. Therefore, links and link groups based on QPs (or other hardware constructs) are not applicable to ISM. Instead, the SMC-D protocol has a design concept of a logical point-to-point connection called an SMC-D link.

The ISM uses a virtual channel identifier (VCHID) similar to HiperSockets for addressing purposes. Figure 7-2 shows the SMC-D LPAR-to-LPAR communications concept.

Figure 7-2 Connecting z/OS LPARs in the same Z platform using ISMs

7.2 SMC over Remote Direct Memory Access

SMC-R is a protocol that allows TCP sockets applications to transparently use RDMA. SMC-R defines the concept of the SMC-R link, which is a logical point-to-point link using reliably connected queue pairs between TCP/IP stack peers over a RoCE fabric. An SMC-R link is bound to a specific hardware path, meaning a specific RNIC on each peer.

SMC-R is a hybrid solution (see Figure 7-3) for the following reasons:

•It uses a TCP connection to establish the SMC-R connection.

•Switching from TCP to “out of band” SMC-R is controlled by a TCP option.

•SMC-R information is exchanged within the TCP data stream.

•Socket application data is exchanged through RDMA (write operations).

•The TCP connection remains to control the SMC-R connection.

•This model preserves many critical existing operational and network management features of an IP network.

Figure 7-3 Dynamic transition from TCP to SMC-R
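
The transition described in the list above can be sketched as a simple decision sequence. This is a minimal illustration, not the protocol implementation: the `Endpoint` fields and the `negotiate` function are hypothetical names, and the two fallback branches (data stays on TCP) are an assumption that the list above does not spell out.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    smc_capable: bool    # hypothetical flag: peer signals SMC-R with the TCP option
    rdma_path_ok: bool   # hypothetical flag: RDMA setup over the RoCE fabric succeeds


def negotiate(client, server):
    """Return the ordered steps a connection goes through in this toy model."""
    steps = ["TCP connection established"]
    if not (client.smc_capable and server.smc_capable):
        # Assumed fallback: without the option on both sides, nothing changes.
        steps.append("SMC-R not signaled by both peers: data stays on TCP")
        return steps
    steps.append("SMC-R information exchanged within the TCP data stream")
    if not (client.rdma_path_ok and server.rdma_path_ok):
        # Assumed fallback: RDMA could not be set up.
        steps.append("RDMA setup failed: data stays on TCP")
        return steps
    steps.append("application data moves by RDMA writes; TCP connection remains for control")
    return steps


for step in negotiate(Endpoint("client", True, True), Endpoint("server", True, True)):
    print(step)
```

In every branch the TCP connection exists first, which is why IP-level features such as load balancers and filters continue to apply.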

The hybrid model of SMC-R uses the following key existing attributes:

•Follows standard IP network connection setup.

•Dynamically switches to RDMA.

•TCP connection remains active (idle) and is used to control the SMC-R connection.

•Preserves critical operational and network management IP network features:

– Minimal (or zero) IP topology changes.

– Compatibility with TCP connection-level load balancers.

– Preserves existing IP security model (for example, IP filters, policy, VLANs, or SSL).

– Minimal network administrative or management changes.

•Changing host application software is not required, so all host application workloads can benefit immediately.
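
To make the last point concrete, the following is an ordinary stream-socket program of the kind that would not need to change. It is a generic sketch that assumes nothing about SMC: on a typical workstation it simply runs over loopback TCP, and any switch to RDMA on an SMC-R enabled stack would happen below these calls.

```python
import socket
import threading


def echo_once(listener):
    """Accept one connection and echo back what it receives."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(conn.recv(1024))


# Plain TCP listener; port 0 lets the operating system pick a free port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

worker = threading.Thread(target=echo_once, args=(listener,))
worker.start()

# Plain TCP client: connect, send, receive.
with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"ping")
    reply = client.recv(1024)

worker.join()
listener.close()
```

Nothing in the program names RDMA, RoCE, or SMC, which is the sense in which the application is unaware of the transport underneath.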

7.2.1 SMC-R connectivity

IBM released with IBM z14 a new generation of RoCE, RoCE Express2. This generation includes 25GbE RoCE Express2 (FC 0430) and 10GbE RoCE Express2 (FC 0412) features. The previous generation, 10GbE RoCE Express (FC 0411), can be used as carry forward to the z14.

The key difference between the two generations is the number of VFs that are supported per port:

•The 10GbE RoCE Express (FC 0411) has two ports. On z14, z14 ZR1, z13, and z13s, the feature supports up to 31 VFs by using both ports. On zEC12 and zBC12, the feature supports only one port and no virtualization.

•The 25GbE and 10GbE RoCE Express2 features have two ports and can support up to 63 VFs per port (126 per feature) on z14 and 31 VFs per port (62 per feature) on z14 ZR1.
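
The per-feature totals quoted above follow from the per-port figures. A short sketch of the arithmetic, using only the numbers in the two bullets (the dictionary and function names are illustrative):

```python
# RoCE Express2 (25GbE and 10GbE): VFs per port, by platform.
ROCE_EXPRESS2_VFS_PER_PORT = {"z14": 63, "z14 ZR1": 31}
PORTS_PER_FEATURE = 2

# 10GbE RoCE Express: VFs per feature (both ports combined), by platform.
# 0 means no virtualization on that platform.
ROCE_EXPRESS_VFS_PER_FEATURE = {
    "z14": 31, "z14 ZR1": 31, "z13": 31, "z13s": 31,
    "zEC12": 0, "zBC12": 0,
}


def express2_vfs_per_feature(platform):
    """VFs available on one RoCE Express2 feature for the given platform."""
    return ROCE_EXPRESS2_VFS_PER_PORT[platform] * PORTS_PER_FEATURE


for platform in ROCE_EXPRESS2_VFS_PER_PORT:
    print(platform, express2_vfs_per_feature(platform))
```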

25GbE RoCE Express2

IBM z14 GA2 introduces the 25GbE RoCE Express2 feature (FC 0430), which is based on the existing RoCE Express2 generation hardware and provides two 25GbE physical ports. The feature requires 25GbE optics. The following maximum number of features can be installed:

•Eight in z14

•Four in z14 ZR1

For 25GbE RoCE Express2, the PCI Function IDs (FIDs) are now associated with a specific (single) physical port (that is, port 0 or port 1).

10GbE RoCE Express2

The 10GbE RoCE Express2 feature (FC 0412) is an RDMA-capable network interface card. It provides a technology refresh for RoCE on IBM Z. Most of the technology updates are related to internal aspects of the RoCE. It provides two physical 10 GbE ports (no change from the previous generation). The maximum number of features that can be installed is:

•Eight in z14

•Four in z14 ZR1

On z14, both ports are supported. For 10GbE RoCE Express2, the PCI Function IDs (FIDs) are now associated with a specific (single) physical port (that is, port 0 or port 1).

10GbE RoCE Express

The 10GbE RoCE Express feature (FC 0411) is an RDMA-capable network interface card. It is supported on the Z platform and installs in the PCIe I/O drawer. Each feature has one PCIe adapter. The maximum number of features that can be installed is:

•Eight in z14

•Four in z14 ZR1

•16 features in the z13, z13s, zEC12, and zBC12

On z14, z14 ZR1, z13, and z13s, both ports are supported. Only one port per feature is supported on zEC12 and zBC12. The 10GbE RoCE Express feature uses a short reach (SR) laser as the optical transceiver and supports use of a multimode fiber optic cable that terminates with an LC duplex connector. Both point-to-point connection and switched connection with an enterprise-class 10 GbE switch are supported.

On z14, z14 ZR1, z13, and z13s, the 10GbE RoCE Express feature can be shared across 31 partitions (LPARs). However, if the 10GbE RoCE Express feature is installed in a zEC12 or zBC12, the feature cannot be shared across LPARs, but instead is defined as reconfigurable.

Connectivity

25GbE RoCE Express2

The 25GbE RoCE Express2 feature requires an Ethernet switch with 25GbE support. The switch port must support 25GbE (negotiation down to 10GbE is not supported). The switches must meet the following requirements:

•Pause frame function enabled, as defined by the IEEE 802.3x standard

•Priority flow control (PFC) disabled

•No firewalls, no routing, and no intraensemble data network (IEDN)

The maximum supported unrepeated distance for the 25GbE RoCE Express2 feature, point-to-point, is 100 meters (328 feet).
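
The switch requirements above lend themselves to a simple pre-installation checklist. The following sketch encodes them as a validation function. The `SwitchPort` fields are hypothetical names for values that an administrator would collect by hand; they do not correspond to any switch vendor's API.

```python
from dataclasses import dataclass


@dataclass
class SwitchPort:
    speed_gbe: int               # configured port speed
    pause_frames_enabled: bool   # IEEE 802.3x pause frame function
    pfc_enabled: bool            # priority flow control
    routed_or_firewalled: bool   # any firewall or routing hop on the path
    on_iedn: bool                # port belongs to an intraensemble data network


def problems_for_25gbe(port):
    """List every requirement from the text that the port does not meet."""
    issues = []
    if port.speed_gbe != 25:
        issues.append("port must run at 25GbE; negotiation down to 10GbE is not supported")
    if not port.pause_frames_enabled:
        issues.append("pause frame function (IEEE 802.3x) must be enabled")
    if port.pfc_enabled:
        issues.append("priority flow control (PFC) must be disabled")
    if port.routed_or_firewalled:
        issues.append("no firewalls or routing are allowed on the path")
    if port.on_iedn:
        issues.append("IEDN is not supported")
    return issues


good = SwitchPort(25, True, False, False, False)
bad = SwitchPort(10, False, True, False, False)
print(problems_for_25gbe(good))   # empty list: all requirements met
print(len(problems_for_25gbe(bad)))
```

The same checks, minus the 25GbE speed rule, apply to the 10GbE features according to the requirements listed for them.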

A customer-supplied cable is required. The following types of cables can be used for connecting the port to the selected 25GbE switch or to the 25GbE RoCE Express2 feature on the attached IBM Z platform:

•OM3 50-micron multimode fiber optic cable that is rated at 2000 MHz-km, terminated with an LC duplex connector (up to 70 meters, or 229.6 feet)

•OM4 50-micron multimode fiber optic cable that is rated at 4700 MHz-km, terminated with an LC duplex connector (up to 100 meters, or 328 feet)

10GbE RoCE Express2 and 10GbE RoCE Express

The 10GbE RoCE Express2 and 10GbE RoCE Express features are connected to 10 GbE switches, which must meet the following requirements:

•Pause frame function enabled, as defined by the IEEE 802.3x standard

•Priority flow control (PFC) disabled

•No firewalls, no routing, and no intraensemble data network (IEDN)

The maximum supported unrepeated distance, point-to-point, is 300 meters (984 feet).

A customer-supplied cable is required. Three types of cables can be used for connecting the port to the selected 10 GbE switch or to the RoCE Express feature on the attached IBM Z platform:

•OM3 50-micron multimode fiber optic cable that is rated at 2000 MHz-km, terminated with an LC duplex connector (up to 300 meters, or 984 feet)

•OM2 50-micron multimode fiber optic cable that is rated at 500 MHz-km, terminated with an LC duplex connector (up to 82 meters, or 269 feet)

•OM1 62.5-micron multimode fiber optic cable that is rated at 200 MHz-km, terminated with an LC duplex connector (up to 33 meters, or 108 feet)
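
The cable limits for both feature speeds can be collected into one lookup table. This sketch uses only the distances listed in this section; the function name and key layout are illustrative.

```python
# Maximum unrepeated distance in meters, keyed by (port speed, cable type).
MAX_DISTANCE_M = {
    ("25GbE", "OM3"): 70,
    ("25GbE", "OM4"): 100,
    ("10GbE", "OM3"): 300,
    ("10GbE", "OM2"): 82,
    ("10GbE", "OM1"): 33,
}


def cable_ok(speed, cable, length_m):
    """True if the cable type is listed for that speed and the run is within its limit."""
    limit = MAX_DISTANCE_M.get((speed, cable))
    return limit is not None and length_m <= limit


print(cable_ok("25GbE", "OM4", 100))  # within the OM4 limit
print(cable_ok("25GbE", "OM3", 80))   # exceeds the 70 m OM3 limit
print(cable_ok("25GbE", "OM2", 10))   # OM2 is not listed for 25GbE
```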

7.3 SMC over Direct Memory Access

SMC-D is a protocol that allows TCP socket applications to transparently use ISM. It is a hybrid solution (see Figure 7-4) and has the following features:

•It uses a TCP connection to establish the SMC-D connection.

•The TCP connection can be either through OSA Adapter or IQD HiperSockets.

•A TCP option (SMCD) controls switching from TCP to out-of-band SMC-D.

•The SMC-D information is exchanged within the TCP data stream.

•Socket application data is exchanged through ISM (write operations).

•The TCP connection remains to control the SMC-D connection.

•This model preserves many critical existing operational and network management features of TCP/IP.

Figure 7-4 Dynamic transition from TCP to SMC-D

The hybrid model of SMC-D uses these key existing attributes:

•It follows the standard TCP/IP connection setup.

•The hybrid model switches to ISM (SMC-D) dynamically.

•The TCP connection remains active (idle) and is used to control the SMC-D connection.

•The hybrid model preserves the following critical operational and network management TCP/IP features:

– Minimal (or zero) IP topology changes.

– Compatibility with TCP connection-level load balancers.

– Preservation of the existing IP security model, such as IP filters, policies, virtual LANs (VLANs), and Secure Sockets Layer (SSL).

– Minimal network administration and management changes.

•Host application software is not required to change, so all host application workloads can benefit immediately.

•The TCP path can be either through an OSA-Express port or HiperSockets connection.

7.4 Software support

This section provides software support information for SMC-R and SMC-D.

Note: The SMC-R and SMC-D are hybrid protocols that have been designed and implemented to speed up TCP communication without making changes to applications.

SMC-R uses the RoCE Express features to provide direct memory access data transfer under the control of an established TCP/IP connection (OSA). SMC-D can also use an established TCP/IP connection over HiperSockets.

For Linux on Z, IBM is working with its Linux distribution partners to include support in future distribution releases. At the time of this writing (October 2018), SLES 12 SP3 includes support for Linux-to-Linux communication as “Tech Preview.”

For more information about RoCE features support, contact the distribution owners.

7.4.1 SMC-R

SMC-R with RoCE provides high-speed communications performance across physical processors. It helps all TCP-based communications across z/OS LPARs that are in different central processor complexes (CPCs).

These are some typical communication patterns:

•Optimized Sysplex Distributor intra-sysplex load balancing.

•IBM WebSphere Application Server Type 4 connections to remote IBM Db2, IBM Information Management System, and IBM CICS instances.

•IBM Cognos®-to-Db2 connectivity.

•CICS-to-CICS connectivity through Internet Protocol interconnectivity (IPIC).

Currently, IBM z/OS version 2.1 or later with program temporary fixes (PTFs) is the only OS that supports the SMC-R protocol with RoCE. Also, consider the following factors:

•No rollback to previous z/OS releases

•Requires IOCP 3.4.0 or later input/output configuration program

•More PTFs are required to support 25GbE RoCE Express2

z/VM V6.4 and later provide support for guest use of the RoCE Express feature of IBM Z. This feature allows guests to use RoCE for optimized networking. More PTFs are required to support 25GbE RoCE Express2.

7.4.2 SMC-D

SMC-D has the following prerequisites:

•z14, z14 ZR1, z13, or z13s with HMC/SE for ISM vPCI functions.

•At least two z/OS V2.2 or later LPARs in the same IBM Z platform with required service installed:

– SMC-D can only communicate with another z/OS V2.2 or later instance, and peer hosts must be in the same ISM PNet.

– SMC-D requires an IP Network with access that uses OSA-Express or HiperSockets, which has a defined PNet ID that matches the ISM PNet ID.

•If running as a z/OS guest under z/VM, z/VM V6.4 or later is required for guest access to RoCE (Guest Exploitation only).

Note: SMC (existing architecture) cannot be used in the following circumstances:

•Peer hosts are not within the same IP subnet and VLAN.

•TCP traffic requires IPSec or the server uses FRCA.
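
The restrictions in the note, together with the PNet ID requirement from the prerequisites, can be expressed as an eligibility check. This is a sketch under stated assumptions: the `Host` fields and the `smc_blockers` function are hypothetical names, and the sample addresses, VLAN number, and PNet IDs are made up for illustration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Host:
    ip_interface: str   # address with prefix length, for example "10.1.1.5/24"
    vlan_id: int
    pnet_id: str


def smc_blockers(local, peer, uses_ipsec=False, server_uses_frca=False):
    """Return the reasons, taken from the text, why SMC cannot be used."""
    issues = []
    local_net = ipaddress.ip_interface(local.ip_interface).network
    peer_net = ipaddress.ip_interface(peer.ip_interface).network
    if local_net != peer_net or local.vlan_id != peer.vlan_id:
        issues.append("peer hosts are not within the same IP subnet and VLAN")
    if local.pnet_id != peer.pnet_id:
        issues.append("peer hosts are not in the same PNet")
    if uses_ipsec:
        issues.append("TCP traffic requires IPSec")
    if server_uses_frca:
        issues.append("the server uses FRCA")
    return issues


a = Host("10.1.1.5/24", 100, "NETA")
b = Host("10.1.1.9/24", 100, "NETA")
c = Host("10.1.2.9/24", 100, "NETA")
print(smc_blockers(a, b))
print(smc_blockers(a, c))
```

An empty result means that none of the listed restrictions applies; it does not by itself confirm that the hardware and software prerequisites are met.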

IBM is working with its Linux distribution partners to include support in future Linux on IBM Z distribution releases.

7.5 Reference material

•IBM z/OS V2R2 Communications Server TCP/IP Implementation Volume 1: Base Functions, Connectivity, and Routing, SG24-8360

•IBM z13 Technical Guide, SG24-8251

•IBM z13s Technical Guide, SG24-8294

•IBM z14 Technical Guide, SG24-8451

•IBM z14 ZR1 Technical Guide, SG24-8651
