High availability

This chapter describes the enhancements to IBM PowerHA SystemMirror® for i and related high availability functions in IBM i 7.2.

This chapter describes the following topics:

For more information about the IBM i 7.2 high availability enhancements, see the IBM i Technology Updates developerWorks wiki:

6.1 Introduction to IBM PowerHA SystemMirror for i

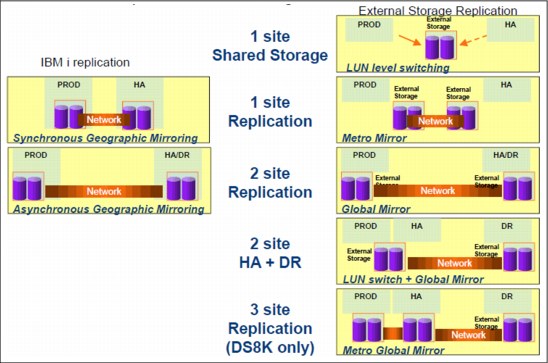

To realize a high availability (HA) and disaster recovery (DR) IBM i environment, IBM provides PowerHA SystemMirror for i. PowerHA SystemMirror for i provides a hardware-based (disk-level) data and application resiliency solution that is an integrated extension of the IBM i operating system and storage-management architecture, as shown in Figure 6-1.

PowerHA SystemMirror for i also supports IBM i replication (Geographic Mirroring) and external storage, such as IBM DS8000, IBM Storwize, and SAN Volume Controller replication. PowerHA SystemMirror for i offers solutions covering a simple data center to a multiple-site configuration, enables 24 x 7 operational availability, and provides automation for planned and unplanned outages.

Figure 6-1 PowerHA SystemMirror for i architecture

The replication technology of PowerHA SystemMirror for i is divided into five levels, as shown in Figure 6-2:

•Level 1 technology is one-site shared-storage technology. The solution in this level is called LUN-level switching. LUN-level switching supports DS8000, Storwize, and SAN Volume Controller.

•Level 2 is one-site replication technology. IBM i based replication at this level is realized by Synchronous Geographic Mirroring. External storage-based replication is realized by Metro Mirror. These two levels of technology are applied as a high availability solution for a simple data center.

•Level 3 of the replication technology is two-site replication. This level is achieved by asynchronous replication technology, namely Asynchronous Geographic Mirroring as an IBM i based technology and Global Mirror as an external storage-based technology.

•Level 4 is a two-site HA and DR configuration. This level is accomplished by combining Level 1 technology and Level 3 technology. On the production site, one storage system is shared by a production system and an HA standby system. When an outage of the production system occurs, the storage is switched to the HA standby system. When the production site shuts down, the DR standby system is promoted to the primary node by role swapping.

•Level 5 is three-site replication. This configuration is a combination of Level 2 and Level 3 technologies. By placing the HA standby system at a different site from the production site, a higher level of availability can be accomplished than by Level 4 technology. This level is supported only on DS8000.

Figure 6-2 PowerHA SystemMirror for i levels of replication technology
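The five levels can be summarized as a small lookup table. The following Python sketch is illustrative only: the `Level` class, its field names, and the `technologies` helper are hypothetical and are not part of any PowerHA interface. It records what the list above states and resolves Levels 4 and 5 into the lower levels that they combine. The resolved list is simply the union of the combined levels' technologies, so restrictions such as "DS8000 only" are kept as a separate note.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    number: int
    sites: int
    technologies: tuple = ()  # technologies named directly for this level
    combines: tuple = ()      # lower levels that this level is built from
    restriction: str = ""     # support note taken from the text


LEVELS = {
    1: Level(1, 1, ("LUN-level switching",)),
    2: Level(2, 1, ("Synchronous Geographic Mirroring", "Metro Mirror")),
    3: Level(3, 2, ("Asynchronous Geographic Mirroring", "Global Mirror")),
    4: Level(4, 2, combines=(1, 3)),
    5: Level(5, 3, combines=(2, 3), restriction="DS8000 only"),
}


def technologies(number):
    """Return every technology a level relies on, resolving combinations."""
    level = LEVELS[number]
    found = list(level.technologies)
    for lower in level.combines:
        found.extend(technologies(lower))
    return found


if __name__ == "__main__":
    for number in sorted(LEVELS):
        print(number, LEVELS[number].sites, technologies(number))
```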

For more information about PowerHA SystemMirror for i, see PowerHA SystemMirror for IBM i Cookbook, SG24-7994.

6.2 IBM PowerHA SystemMirror for i enhancements

This section describes the following IBM i 7.2 enhancements for IBM PowerHA SystemMirror:

6.2.1 PowerHA SystemMirror for i packaging

In IBM i 7.2, PowerHA SystemMirror for i has the following three editions:

•Enterprise Edition (5770-HAS Option 1)

•Standard Edition (5770-HAS Option 2)

•Express Edition (5770-HAS Option 3)

Enterprise Edition has a multiple-site DR solution, and Standard Edition is for a simple data center HA solution. Both of these editions are available in IBM i 7.1. In IBM i 7.2, Express Edition was added to the lineup of PowerHA SystemMirror for i.

6.2.2 DS8000 HyperSwap support with PowerHA for i 7.2 Express Edition

With PowerHA SystemMirror for i 7.2, the first stage of the Express Edition offering enables single-node, full-system IBM HyperSwap® with the DS8700 system or later. This capability provides customers with continuously available storage through planned or unplanned storage outage events.

HyperSwap provides an instant switching capability between DS8000 servers that use Metro Mirror. After HyperSwap is configured, switching occurs automatically, with no downtime, if there is a DS8000 outage. A command is also available for switching a storage node manually, which covers planned outage events on the DS8000 side, as shown in Figure 6-3.

Figure 6-3 PowerHA SystemMirror for i Express Edition with DS8000 HyperSwap

To configure PowerHA SystemMirror for i Express Edition with DS8000 HyperSwap, complete the following steps:

1. Create LUNs (Logical Unit Numbers) on the source DS8000 system.

2. Assign LUNs on the source DS8000 system to the IBM i system.

3. Create LUNs on the target DS8000 system.

|

Note: Do not assign target LUNs to the IBM i system until after Metro Mirror is configured on the DS8000 side.

|

4. Configure Metro Mirror between the source and target DS8000 systems.

5. Assign the LUNs on the target DS8000 system to the same IBM i system.

6. Verify the HyperSwap configuration with the Display HyperSwap Status (DSPHYSSTS) CL command.
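The ordering of these steps matters, in particular the rule that target LUNs are assigned only after Metro Mirror is configured. The following Python sketch shows one way to check a record of performed steps against the documented order. The step labels and the `order_problems` function are hypothetical names chosen for illustration; they do not correspond to DS8000 or IBM i commands.

```python
# Hypothetical labels for the six documented steps, in the documented order.
STEPS = [
    "create_source_luns",
    "assign_source_luns",
    "create_target_luns",
    "configure_metro_mirror",
    "assign_target_luns",
    "verify_with_dsphyssts",
]


def order_problems(performed):
    """Return messages for consecutive documented steps done in reverse order."""
    position = {step: index for index, step in enumerate(performed)}
    problems = []
    for earlier, later in zip(STEPS, STEPS[1:]):
        if earlier in position and later in position:
            if position[earlier] > position[later]:
                problems.append(f"{later} was done before {earlier}")
    return problems


if __name__ == "__main__":
    attempt = [
        "create_source_luns",
        "assign_source_luns",
        "create_target_luns",
        "assign_target_luns",      # too early: Metro Mirror is not configured yet
        "configure_metro_mirror",
        "verify_with_dsphyssts",
    ]
    print(order_problems(attempt))
```

The check compares only neighboring steps, which is enough to catch the case called out in the note.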

Figure 6-4 shows an example of the result of the DSPHYSSTS CL command. The direction of the arrows in the Copy Status column indicates the direction of mirroring between LUNs.

|

Display HyperSwap Status Y319BP17

10/15/14 12:18:04

Volume Copy Volume

Resource Identifier Status Resource Identifier

DMP366 IBM.2107-75TT911/3F00 ---> DMP004 IBM.2107-75BPF81/3D00

DMP006 IBM.2107-75TT911/3F01 ---> DMP005 IBM.2107-75BPF81/3D01

DMP013 IBM.2107-75TT911/3F02 ---> DMP007 IBM.2107-75BPF81/3D02

DMP369 IBM.2107-75TT911/3F03 ---> DMP009 IBM.2107-75BPF81/3D03

DMP371 IBM.2107-75TT911/3F04 ---> DMP010 IBM.2107-75BPF81/3D04

DMP373 IBM.2107-75TT911/3F05 ---> DMP011 IBM.2107-75BPF81/3D05

DMP376 IBM.2107-75TT911/3F06 ---> DMP012 IBM.2107-75BPF81/3D06

DMP393 IBM.2107-75TT911/3F07 ---> DMP014 IBM.2107-75BPF81/3D07

DMP380 IBM.2107-75TT911/3F08 ---> DMP016 IBM.2107-75BPF81/3D08

DMP395 IBM.2107-75TT911/3F09 ---> DMP017 IBM.2107-75BPF81/3D09

DMP384 IBM.2107-75TT911/3F0A ---> DMP018 IBM.2107-75BPF81/3D0A

DMP386 IBM.2107-75TT911/3F0B ---> DMP020 IBM.2107-75BPF81/3D0B

DMP388 IBM.2107-75TT911/3F0C ---> DMP021 IBM.2107-75BPF81/3D0C

DMP390 IBM.2107-75TT911/3F0D ---> DMP023 IBM.2107-75BPF81/3D0D

DMP392 IBM.2107-75TT911/3F0E ---> DMP025 IBM.2107-75BPF81/3D0E

More...

Press Enter to continue

F3=Exit F5=Refresh F11=View 2 F12=Cancel

|

Figure 6-4 Results of the Display HyperSwap Status (DSPHYSSTS) CL command

The Change HyperSwap Status (CHGHYSSTS) CL command that is shown in Figure 6-5 can be used to suspend and resume mirror operations between storage nodes and to switch a storage node manually. This command can also be used for planned outage events on the storage node side.

|

Change HyperSwap Status (CHGHYSSTS)

Type choices, press Enter.

Option . . . . . . . . . . . . . *STOP, *START, *SWAP

|

Figure 6-5 Change HyperSwap Status (CHGHYSSTS) CL command prompt

When you suspend mirroring by running the CHGHYSSTS CL command, the Copy Status column in the DSPHYSSTS panel changes from ‘--->’ to ‘-->’, as shown in Figure 6-6.

|

Display HyperSwap Status Y319BP17

10/15/14 12:19:11

Volume Copy Volume

Resource Identifier Status Resource Identifier

DMP366 IBM.2107-75TT911/3F00 --> DMP004 IBM.2107-75BPF81/3D00

DMP006 IBM.2107-75TT911/3F01 --> DMP005 IBM.2107-75BPF81/3D01

DMP013 IBM.2107-75TT911/3F02 --> DMP007 IBM.2107-75BPF81/3D02

DMP369 IBM.2107-75TT911/3F03 --> DMP009 IBM.2107-75BPF81/3D03

DMP371 IBM.2107-75TT911/3F04 --> DMP010 IBM.2107-75BPF81/3D04

DMP373 IBM.2107-75TT911/3F05 --> DMP011 IBM.2107-75BPF81/3D05

DMP376 IBM.2107-75TT911/3F06 --> DMP012 IBM.2107-75BPF81/3D06

DMP393 IBM.2107-75TT911/3F07 --> DMP014 IBM.2107-75BPF81/3D07

DMP380 IBM.2107-75TT911/3F08 --> DMP016 IBM.2107-75BPF81/3D08

DMP395 IBM.2107-75TT911/3F09 --> DMP017 IBM.2107-75BPF81/3D09

DMP384 IBM.2107-75TT911/3F0A --> DMP018 IBM.2107-75BPF81/3D0A

DMP386 IBM.2107-75TT911/3F0B --> DMP020 IBM.2107-75BPF81/3D0B

DMP388 IBM.2107-75TT911/3F0C --> DMP021 IBM.2107-75BPF81/3D0C

DMP390 IBM.2107-75TT911/3F0D --> DMP023 IBM.2107-75BPF81/3D0D

DMP392 IBM.2107-75TT911/3F0E --> DMP025 IBM.2107-75BPF81/3D0E

More...

Press Enter to continue

F3=Exit F5=Refresh F11=View 2 F12=Cancel

|

Figure 6-6 DSPHYSSTS CL command when mirroring is suspended
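If the panel text is captured to a file or string, the Copy Status column can be checked programmatically. The following Python sketch is a minimal illustration and not an IBM-supplied tool: it assumes that the panel rows look like the ones in Figure 6-4 and Figure 6-6, and it interprets only the two arrow forms that this section describes (‘--->’ while mirroring and ‘-->’ while suspended). The function names and the `STATUS` mapping are hypothetical.

```python
import re

# One data row: resource, volume identifier, copy status, resource, volume identifier.
ROW = re.compile(
    r"^(?P<src_resource>\S+)\s+(?P<src_volume>IBM\.\S+)\s+"
    r"(?P<status>\S+)\s+"
    r"(?P<tgt_resource>\S+)\s+(?P<tgt_volume>IBM\.\S+)$"
)

# Only the two arrow forms described in this section are interpreted.
STATUS = {"--->": "mirroring", "-->": "suspended"}


def parse_rows(panel_text):
    """Extract the volume pair rows from captured DSPHYSSTS panel text."""
    rows = []
    for line in panel_text.splitlines():
        match = ROW.match(line.strip())
        if match:
            row = match.groupdict()
            row["state"] = STATUS.get(row["status"], "unknown")
            rows.append(row)
    return rows


def suspended_resources(panel_text):
    """Return the source resource names whose copy status shows as suspended."""
    return [r["src_resource"] for r in parse_rows(panel_text) if r["state"] == "suspended"]


if __name__ == "__main__":
    sample = """Resource Identifier Status Resource Identifier
DMP366 IBM.2107-75TT911/3F00 ---> DMP004 IBM.2107-75BPF81/3D00
DMP006 IBM.2107-75TT911/3F01 --> DMP005 IBM.2107-75BPF81/3D01
"""
    print(suspended_resources(sample))
```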

HyperSwap can also be combined with IBM i Live Partition Mobility (LPM), as shown in Figure 6-7. By using HyperSwap with LPM, you can realize a minimal downtime solution to migrate from one server/storage combination to another.

Figure 6-7 HyperSwap with Live Partition Mobility

After the affinity between a Power Systems server and a DS8000 server is defined, moving a partition by LPM also triggers switching a DS8000 server by HyperSwap when appropriate. To define the affinity between a Power Systems server and a DS8000 server, run the Add HyperSwap Storage Desc (ADDHYSSTGD) CL command, as shown in Figure 6-8.

|

Add HyperSwap Storage Desc (ADDHYSSTGD)

Type choices, press Enter.

System Serial Number . . . . . . Character value

IBM System Storage device . . .

|

Figure 6-8 Add HyperSwap Storage Desc (ADDHYSSTGD) CL command prompt

For more information about how to configure and manage the PowerHA SystemMirror for i with a HyperSwap environment, see the following website:

6.2.3 Support for SVC/Storwize Global Mirror with Change Volumes

IBM PowerHA SystemMirror for i supports Global Mirror with Change Volumes (GMCV) of SAN Volume Controller and Storwize by applying a PTF. Here are the PTFs for each release of IBM PowerHA SystemMirror for i Version 7 supporting this enhancement:

•5770HAS V7R1M0 SI52687

•5770HAS V7R2M0 SI53476

GMCV was introduced in Version 6.3 for SAN Volume Controller and Storwize. Before this release, Global Mirror ensured a consistent copy on the target side, but the recovery point objective (RPO) was not tunable.

This solution requires enough bandwidth to support the peak workload. GMCV uses IBM FlashCopy® internally to ensure the consistent copy, but offers a tunable RPO, called a cycling period. GMCV can be appropriate when bandwidth is an issue, although if bandwidth cannot support the replication, the cycling period might need to be adjusted from seconds up to 24 hours.
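The relationship between bandwidth and the cycling period can be illustrated with simple arithmetic. The following Python sketch is a rough model only, not a sizing tool: it assumes that the amount of data changed during one cycle is already known, that the link rate is constant, and that a cycle is sustainable when the changed data can be sent within the cycling period. The function and constant names are hypothetical.

```python
# Upper end of the cycling period range mentioned in the text (24 hours).
MAX_CYCLE_SECONDS = 24 * 60 * 60


def transfer_seconds(changed_mb, link_mb_per_s):
    """Time needed to send one cycle's changed data over the replication link."""
    if link_mb_per_s <= 0:
        raise ValueError("link rate must be positive")
    return changed_mb / link_mb_per_s


def cycle_is_sustainable(changed_mb, link_mb_per_s, cycle_seconds):
    """True if the changed data can be sent within the chosen cycling period."""
    if not 0 < cycle_seconds <= MAX_CYCLE_SECONDS:
        raise ValueError("cycling period is outside the assumed range")
    return transfer_seconds(changed_mb, link_mb_per_s) <= cycle_seconds


if __name__ == "__main__":
    # Example figures are invented for illustration: 6000 MB changed, 20 MB/s link.
    print(transfer_seconds(6000, 20))
    print(cycle_is_sustainable(6000, 20, 300))
    print(cycle_is_sustainable(6000, 20, 120))
```

In practice, the amount of changed data itself grows with the cycling period, so a real estimate would need measured change rates for the workload.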

Configuring GMCV is done from the SAN Volume Controller / Storwize user interface or Global Mirror. After the required PTFs are applied, IBM PowerHA SystemMirror for i recognizes that GMCV is configured and manages the replication environment.

For more information about Global Mirror with Change Volumes, see IBM System Storage SAN Volume Controller and Storwize V7000 Replication Family Services, SG24-7574.

6.2.4 GUI changes in IBM Navigator for i

In IBM Navigator for i, the High Availability Solution Manager GUI and the Cluster Resource Services GUI were removed. Alternatively, the PowerHA GUI can be used for configuring and managing a high availability environment, as shown in Figure 6-9.

Figure 6-9 PowerHA GUI in IBM Navigator for i

6.2.5 Minimum cluster and PowerHA versions for upgrading nodes to IBM i 7.2

When upgrading an existing IBM i cluster node to IBM i 7.2, you must consider the version of the cluster and PowerHA. In IBM i 7.2, the cluster version is 8 and the PowerHA version is 3.0, as shown in Figure 6-10. The Change Cluster Version (CHGCLUVER) CL command can be used to adjust the cluster and PowerHA versions.

|

Display Cluster Information

Cluster . . . . . . . . . . . . . . . . : CLUSTER

Consistent information in cluster . . . : Yes

Current PowerHA version . . . . . . . . : 3.0

Current cluster version . . . . . . . . : 8

Current cluster modification level . . . : 0

Configuration tuning level . . . . . . . : *NORMAL

Number of cluster nodes . . . . . . . . : 2

Number of device domains . . . . . . . . : 0

Number of administrative domains . . . . : 0

Number of cluster resource groups . . . : 0

Cluster message queue . . . . . . . . . : *NONE

Library . . . . . . . . . . . . . . . : *NONE

Failover wait time . . . . . . . . . . . : *NOWAIT

Failover default action . . . . . . . . : *PROCEED

Press Enter to continue.

F1=Help F3=Exit F5=Refresh F7=Change versions F8=Change cluster

F12=Cancel F13=Work with cluster menu

|

Figure 6-10 Display Cluster Information CL command showing the cluster and PowerHA versions
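When checking several clusters, it can help to read the version fields out of captured panel text. The following Python sketch assumes that the text has the "label . . . : value" layout shown in Figure 6-10. It compares the values with the IBM i 7.2 levels stated in this section (cluster version 8 and PowerHA version 3.0). The function names are hypothetical, and the sketch does not run any CL command itself.

```python
import re

# "Some label . . . . : value" -> label and value; dot leaders are skipped.
FIELD = re.compile(r"^(?P<label>.*?)(?:\s\.)+\s*:\s*(?P<value>.*)$")


def parse_panel(panel_text):
    """Return a dict of label -> value for every dot-leader line in the panel."""
    fields = {}
    for line in panel_text.splitlines():
        match = FIELD.match(line.strip())
        if match:
            fields[match.group("label").strip()] = match.group("value").strip()
    return fields


def at_ibm_i_72_level(fields):
    """True if the panel reports the versions that this section gives for IBM i 7.2."""
    return (
        fields.get("Current cluster version") == "8"
        and fields.get("Current PowerHA version") == "3.0"
    )


if __name__ == "__main__":
    sample = """Cluster . . . . . . . . . . . . . . . . : CLUSTER
Current PowerHA version . . . . . . . . : 3.0
Current cluster version . . . . . . . . : 8
"""
    info = parse_panel(sample)
    print(info, at_ibm_i_72_level(info))
```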

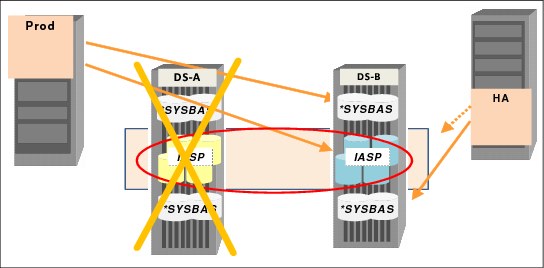

6.2.6 DS8000 IASP-based HyperSwap Technology Preview

In IBM i 7.2, the PowerHA SystemMirror for i product supports full system DS8000 HyperSwap as part of the Express Edition of PowerHA. This is not compatible with IASP-based replication because the HyperSwap relationship is defined at the system level, not at the ASP group level.

In a future IBM i 7.2 Technology Refresh, the HyperSwap relationship can be defined on an ASP within the partition, allowing SYSBAS and the IASPs to be in separate HyperSwap relationships.

A Technology Preview of the IBM PowerHA SystemMirror for i IASP-based HyperSwap function is now available to interested customers who meet the criteria.

|

Important: This function is available only through the Technology Preview program currently.

|

Figure 6-11 shows the general configuration of DS8000 IASP-based HyperSwap, where SYSBAS and IASP can be defined in separate HyperSwap relationships.

Figure 6-11 DS8000 with IASP-based HyperSwap

With this support, HyperSwap can provide near-zero downtime for storage outages. After HyperSwap is configured, the application I/O can automatically switch to the auxiliary storage system if the primary storage system fails, as shown in Figure 6-12.

Figure 6-12 HyperSwap automatically switches when a storage outage occurs

If there is an operating system outage or disaster, PowerHA does a vary off/vary on of the IASP and switches to the secondary site, as shown in Figure 6-13.

Figure 6-13 PowerHA vary off/vary on of the IASP and a switch of the connection to a secondary site

Live Partition Mobility (LPM) can be used with IASP-based HyperSwap to migrate a partition to the secondary server for a planned server outage or maintenance.

For more information about DS8000 HyperSwap with IASP Technology Preview, see the IBM PowerHA SystemMirror for i wiki:

6.3 Administrative domain

To maintain the consistency of objects in SYSBAS in a clustering environment, IBM i provides the administrative domain function. This section covers the following enhancements of the administrative domain in IBM i 7.2:

For more information about the IBM PowerHA SystemMirror for i enhancements in IBM i 7.2, see the IBM PowerHA SystemMirror for i wiki:

6.3.1 Increased administrative domain limit

Before IBM i 7.2, the supported number of monitored resources entries (MREs) was 25,000. In IBM i 7.2, the number of MREs is increased to 45,000.

To maintain MREs, you can use the Work with Monitored Resources (WRKCADMRE) CL command or the PowerHA GUI in IBM Navigator for i.

For more information about the WRKCADMRE CL command, see IBM Knowledge Center:

6.3.2 Synchronization of object authority and ownership

Object authority and ownership can be monitored and synchronized with the administrative domain. The supported object attributes of these entries are object owner, authority entry, authorization list, and primary group. If you add new MREs in the IBM i 7.2 administrative domain, these four attributes are added by default. For existing MREs that were added with *ALL attributes, these four attributes will be synchronized after upgrading the PowerHA version to 3.0.

MREs are marked as inconsistent until a source node for the new attributes is determined. If you want to synchronize these attributes immediately, run the Remove Admin Domain MRE (RMVCADMRE) CL command followed by the Add Admin Domain MRE (ADDCADMRE) CL command for the specific objects. Alternatively, use the PowerHA GUI in IBM Navigator for i, which enables selecting a source node when synchronizing, as shown in Figure 6-14.

Figure 6-14 Manage MRE options in the IBM Navigator for i PowerHA GUI

For more information about the RMVCADMRE and ADDCADMRE CL commands, see IBM Knowledge Center:

•Remove Admin Domain MRE (RMVCADMRE) CL command:

•Add Admin Domain MRE (ADDCADMRE) CL command:

6.4 Independent Auxiliary Storage Pools

This section describes the following enhancements that are related to Independent Auxiliary Storage Pools (IASPs) in IBM i 7.2:

6.4.1 DSPASPSTS improvements for better monitoring of vary-on time

The Display ASP Status (DSPASPSTS) CL command now can preserve and display up to 64 vary histories and show additional information, such as UDFS and STATFS. Figure 6-15 shows an example of the DSPASPSTS ENTRIES(*ALL) CL command, which lists several histories of varying on and off an IASP.

|

Display ASP Vary Status

ASP Device . . . . : 033 IASP01 Current time . . . : 00:00:22

ASP State . . . . : AVAILABLE Previous time . . : 00:00:32

Step . . . . . . . : / 35 Start date . . . . : 10/14/14

Step Elapsed time

Cluster vary job submission

Waiting for devices - none are present 00:00:00

Waiting for devices - not all are present 00:00:00

DASD checker 00:00:00

Storage management recovery

Synchronization of mirrored data

Synchronization of mirrored data - 2 00:00:00

Scanning DASD pages

More...

Press Enter to continue

-------------------------------------------------------------------------------

Display ASP Vary Status

ASP Device . . . . : 033 IASP01 Current time . . . : 00:00:24

ASP State . . . . : VARIED OFF Previous time . . : 00:00:30

Step . . . . . . . : / 5 Start date . . . . : 10/14/14

Step Elapsed time

Cluster vary job submission 00:00:00

Ending jobs using the ASP

Waiting for jobs to end

Image catalog synchronization 00:00:03

Writing changes to disk 00:00:21

Bottom

Press Enter to continue

|

Figure 6-15 Display ASP Vary Status CL command displaying records of previous varying operations
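To compare vary-on or vary-off runs, the per-step elapsed times can be pulled out of captured panel text. The following Python sketch assumes the layout shown in Figure 6-15, where a step name is followed by an hh:mm:ss elapsed time and steps without a time are left blank. The function names are hypothetical, and header lines that contain a colon before the time are deliberately ignored.

```python
import re

# A step line: free text without colons, then an hh:mm:ss elapsed time.
STEP = re.compile(r"^(?P<step>[^:]+?)\s+(?P<h>\d{2}):(?P<m>\d{2}):(?P<s>\d{2})$")


def step_times(panel_text):
    """Return (step name, elapsed seconds) for every step that shows a time."""
    times = []
    for line in panel_text.splitlines():
        match = STEP.match(line.strip())
        if match:
            seconds = (
                int(match.group("h")) * 3600
                + int(match.group("m")) * 60
                + int(match.group("s"))
            )
            times.append((match.group("step"), seconds))
    return times


def slowest_step(panel_text):
    """Return the (step, seconds) pair with the longest elapsed time, or None."""
    times = step_times(panel_text)
    return max(times, key=lambda item: item[1]) if times else None


if __name__ == "__main__":
    sample = """ASP State . . . . : VARIED OFF Previous time . . : 00:00:30
Step Elapsed time
Cluster vary job submission 00:00:00
Ending jobs using the ASP
Image catalog synchronization 00:00:03
Writing changes to disk 00:00:21
"""
    print(step_times(sample))
    print(slowest_step(sample))
```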

Figure 6-16 shows an example of displaying UDFS information by using the Display User-Defined FS (DSPASPSTS) CL command.

For more information about the DSPASPSTS CL command, see IBM Knowledge Center:

|

Display User-Defined FS

User-defined file system . . . : /dev/IASP01/qdefault.udfs

Owner . . . . . . . . . . . . : QSYS

Coded character set ID . . . . : 37

Case sensitivity . . . . . . . : *MONO

Preferred storage unit . . . . : *ANY

Default file format . . . . . : *TYPE2

Default disk storage option . : *NORMAL

Default main storage option . : *NORMAL

Creation date/time . . . . . . : 10/14/14 13:27:15

Change date/time . . . . . . . : 10/14/14 13:27:15

Path where mounted . . . . . . : /IASP01

Description . . . . . . . . . :

Bottom

Press Enter to continue.

F3=Exit F12=Cancel

|

Figure 6-16 Display UDFS information by using the DSPASPSTS CL command

6.4.2 Reduced UID/GID processing time during vary-on

In IBM i 7.2, the processing time for /QSYS.LIB objects is eliminated and the processing time for IFS objects is minimized during the vary-on of an IASP. In addition, the processing time for correcting the mismatch of UID and GID is also reduced.

|

Note: It is still considered a preferred practice to synchronize the UID/GID between cluster nodes by using the administrative domain to eliminate all UID/GID processing time during vary-on.

|

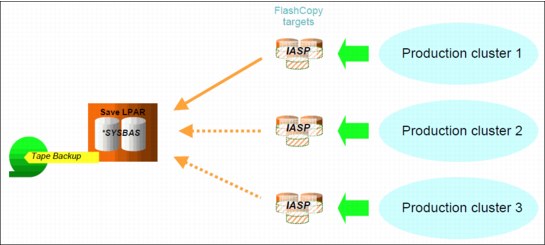

6.4.3 IASP assignment for consolidated backups

In IBM i 7.2, an existing IASP that is not in the cluster device domain can be attached to a partition. This enhancement provides a benefit to an environment that uses FlashCopy for minimizing a backup window.

Before IBM i 7.2, an IASP that was used as a FlashCopy target had to be attached to a partition within the cluster device domain. In an environment that is configured with multiple production clusters and uses FlashCopy in each cluster, each cluster required its own backup partition for attaching the FlashCopy target and performing the backup operation. With this enhancement, only one partition is needed as a backup partition for multiple different clusters, as shown in Figure 6-17.

Figure 6-17 Attach IASPs out of the cluster device domain to a single partition

|

Note: Only one IASP can be attached to a single backup partition at a time, and an IPL of that partition is required before a different IASP is attached.

|

Before attaching a different IASP to a partition, remove any existing IASPs and run the CFGDEVASP ASPDEV(*ALL) ACTION(*PREPARE) CL command that is shown in Figure 6-18 on that partition and then perform an IPL.

|

Configure Device ASP (CFGDEVASP)

Type choices, press Enter.

ASP device . . . . . . . . . . . *ALL Name, *ALL

Action . . . . . . . . . . . . . *PREPARE *CREATE, *DELETE, *PREPARE

|

Figure 6-18 CFGDEVASP ASPDEV(*ALL) ACTION(*PREPARE) CL command

After the IPL completes, the single backup partition can recognize a FlashCopy IASP when it is connected. Figure 6-19 shows the direct-attached IASP disk units panel of SST on the single backup partition. In Figure 6-19, disk unit DD003 is the target FlashCopy LUN that contains the IASP data from the production partition. By pressing Enter, the IASP, which consists of DD003, is configured.

|

Confirm Configure IASPs

Press Enter to confirm Configure IASPs.

Press F12=Cancel to return.

Serial Resource Hot Spare

ASP Unit Number Type Model Name Protection Protection

1 Unprotected

1 Y9D7RD3HCKHG 6B22 050 DMP002 Unprotected N

70 Unprotected

4002 50-050340F 2107 A81 DD003 Unprotected N

|

Figure 6-19 Direct-attached IASP disk units panel of SST

Figure 6-20 shows the Display Disk Configuration Status panel of SST, which shows that the IASP exists and that disk unit DD003 is assigned to it.

|

Display Disk Configuration Status

Serial Resource Hot Spare

ASP Unit Number Type Model Name Status Protection

1 Unprotected

1 Y9D7RD3HCKHG 6B22 050 DMP002 Configured N

70 Unprotected

4001 50-050340F 2107 A81 DD003 Configured N

|

Figure 6-20 Display Disk Configuration Status panel of SST

For more information about assigning multiple IASPs to a single backup partition, see IBM Knowledge Center:

6.5 Support for SAN Volume Controller and Storwize HyperSwap volumes

IBM i 7.2 now supports the HyperSwap function of the IBM SAN Volume Controller and of the IBM Storwize product family V5000 and V7000 hardware platforms.

The HyperSwap function in the SAN Volume Controller software works with the standard multipathing drivers that are available on a wide variety of host types that support Asymmetric Logical Unit Access (ALUA).

The SCSI protocol allows a storage device to suggest to a host the preferred port to submit I/O. By using the ALUA state for a volume, a storage controller can tell the host which paths are active and which paths are preferred.
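The idea of a storage-suggested preferred path can be sketched as a simple selection rule. The following Python example is a deliberately simplified model and not a multipath driver: it reduces each path to two flags (active and preferred), whereas real ALUA implementations report more detailed access states. The `Path` class and the `choose_path` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    port: str
    active: bool     # the path can currently carry I/O
    preferred: bool  # the storage controller suggests this path


def choose_path(paths):
    """Pick a usable path, favoring one that the storage marks as preferred."""
    usable = [p for p in paths if p.active]
    if not usable:
        return None
    # sorted() is stable, so preferred paths move to the front in original order.
    return sorted(usable, key=lambda p: not p.preferred)[0]


if __name__ == "__main__":
    paths = [
        Path("port-A", active=True, preferred=False),
        Path("port-B", active=True, preferred=True),
        Path("port-C", active=False, preferred=True),
    ]
    print(choose_path(paths))
```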

A quorum disk is required for the SAN Volume Controller HyperSwap configuration to provide two functions:

•Act as a tiebreaker in split-brain scenarios.

•Save critical configuration metadata.

The SAN Volume Controller quorum algorithm distinguishes between the active quorum disk and quorum disk candidates. There are three quorum disk candidates. At any time, only one of these candidates acts as the active quorum disk. All three quorum disks store configuration metadata, but only the active quorum disk acts as a tiebreaker for split-brain scenarios.
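The distinction between quorum disk candidates and the active quorum disk can be modeled in a few lines. The following Python sketch only restates the rules in the paragraph above (three candidates, one active, all three hold metadata, only the active one breaks ties). The `QuorumSet` class and the disk names are hypothetical, and the sketch does not model how SAN Volume Controller selects or replaces the active disk.

```python
class QuorumSet:
    """Three quorum disk candidates, exactly one of which is active."""

    def __init__(self, candidates, active):
        if len(candidates) != 3:
            raise ValueError("exactly three quorum disk candidates are expected")
        if active not in candidates:
            raise ValueError("the active quorum disk must be one of the candidates")
        self.candidates = tuple(candidates)
        self.active = active

    def metadata_holders(self):
        """All three candidates store configuration metadata."""
        return self.candidates

    def tiebreaker(self):
        """Only the active quorum disk acts as tiebreaker in a split-brain case."""
        return self.active


if __name__ == "__main__":
    quorum = QuorumSet(["mdisk0", "mdisk1", "mdisk2"], active="mdisk1")
    print(quorum.metadata_holders(), quorum.tiebreaker())
```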

Figure 6-21 shows the general setup of the Storwize HyperSwap implementation.

Figure 6-21 HyperSwap general setup

For more information, see IBM Spectrum Virtualize HyperSwap configuration, found at:
