This chapter addresses all the processes and services in the organization’s information security scope that should be implemented. Processes related to security awareness, training, and simulated attacks are addressed in the next chapter.

Security Governance

Security governance is how you support, manage, and shape your organization’s security efforts. It differs from security management: in “management” you implement, whereas in “governance” you oversee. Security governance is shaped by the organization’s business objectives and risk appetite, as well as by industry best practices, legislation, and the regulations that apply to the organization. It should not halt business processes in pursuit of an ideal security posture; its purpose is to help business functions become more secure and more resilient to threats and vulnerabilities.

Security governance includes information security processes and technologies, competent people to manage them, the roles and responsibilities of everyone in the organization, the organizational structure of the information security function, key risk indicator metrics, policies, and a set of other documentation, all of which should be bound by a formal program.

Policy management (exception management, self-assessment, annual review process of policies)

Compliance management (compliance to the organization policies, national regulations, cybersecurity frameworks, etc.)

Incorporation of information security to third-party risk management

Ability to track and manage information risks through a risk register

Providing targeted technical awareness and training

Capability mapping (ability to map organizational capabilities to requirements and information security threats)

Supporting risk management activities and residual risk management

Accountable (the boss): Senior business management such as CEO or CISO

Responsible (the doers): Mainly the information security function in the organization, plus the records and information management professionals, and people dealing with legal and compliance, business operations and management, risk management, data storage and archiving, and privacy in their areas of business

Consulted (the advisors): Includes the preceding professionals and auditors

Informed (the dependents): All employees

NIST 800-100² and ISO 27014:2020³ underline the importance of consistent participation in these roles for an effective information security program. In addition, these frameworks suggest that organizations should integrate information security into the planning of corporate strategies to have a sustainable information security program. There should also be an information security strategic plan for achieving the information security goals and objectives defined in the program. Strategic plans are supported by tactical plans (mid-term schedules for goals) and operational plans (short-term detailed plans).

Overall enterprise-wide information security and ensuring information security is an integral part of all business processes

Development of information security strategy

Approving information security-related documentation, such as policies, procedures, standards, and system architecture

Monitoring organization’s information security projects and activities, their effectiveness against security threats and vulnerabilities, by setting up KRIs and KPIs

Deciding risk-based information security improvement activities considering the current threat landscape, cost, and allocated budget

Executing and maintaining the information security strategy

Coordinating and communicating information security across the organization

Defining and managing the security services (including but not limited to network security, data security, endpoint security, incident response, and security event monitoring)

Developing information security-related documentation, such as policies, procedures, standards, and system architecture

Providing expert advice on all aspects of information security to business functions

Running information security awareness programs and supporting information security-related training

Monitoring effectiveness of security controls by establishing KRIs and KPIs

Reporting overall status of all activities to the overseeing body

The rules to enforce in the information security program are mentioned in a policy, which is then supported by procedures, standards, guidelines, and instruction manuals.

Policies and Procedures

An information security policy is a set of documented directives for maintaining the organization’s information security. It is expected to be approved by the board, and it shows top management’s commitment to information security. Policies are shaped by the organization’s risk appetite and strategic planning, laws, regulations, and business processes.

The information security policy should be aligned with other policies, such as IT policies, HR policies, general safety or physical security policies, and business processes. If not aligned, information security is seen as a burden in the organization, making it unsustainable.

Policies contain general, formal statements and should be supported by other documentation such as standards, procedures, baselines, and guidelines. All these documents should be communicated, and employees and third-party providers should be made aware of them upon hire and annually thereafter.

- Information Security Governance

Information Security Awareness and Training

Risk Management/Assessment

Compliance/Auditing

Acceptable Use of Technologies

Clean Desk

Ethics

Remote Working

- Data Protection

Data Classification and Labeling

Information and Intellectual Property Handling

Data Retention and Access

Records Management and Archiving

Data Privacy

- Endpoint Security

Software installation

Workstation Security

Server Security

Email Security

Mobile Device Security (BYOD, COPE)

Removable Media

- Application Security

Secure Software Development

Application Security Testing

- Network Security

Wired and Wireless Communication

Network Device Security

Network Configuration

Network Monitoring

Cryptographic Security and Key Management

Change and Patch Management

- Security Monitoring and Operations

Security Logging

Vulnerability Management

Penetration Testing

Threat Intelligence and Threat Hunting

- Incident Handling and Response

Cyber Crisis Management Plan

Response plan testing

Digital Forensics

- Recovery and Continuity

Business Continuity Planning

Disaster Recovery

- Identity and Access Management

Password Standards

Remote Access

Cloud Security

Cybersecurity and Risk Assessment

Risk management is how you treat your risks in the organization. Through risk assessments, you identify and evaluate risks. Based on your risk appetite and budget, you prioritize and treat them to decrease the risk by either minimizing the impact of the risk or decreasing the probability.

Broadly, a risk is any threat in which an event, action, or inaction adversely impacts the organization’s ability to sustain its business or achieve its objectives. It materializes through the exploitation of a vulnerability, which is a weakness or flaw in an asset or the lack of security controls.

The following is the general formula for risk.

Vulnerability: Passwords are vulnerable to brute-forcing via dictionary attacks.

Threat: A malicious actor can exploit the vulnerability to break into the system.

Likelihood: Determined by the combination of threat and vulnerability. If the environment is exposed to external attacks, the likelihood is higher.

Impact: When the malicious actor gains unauthorized access, the resources (primarily data) are prone to modification, deletion, or being stolen.
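A common convention, assumed here rather than taken from the text, scores a risk as likelihood multiplied by impact on ordinal scales. The sketch below applies that convention to the password example; the 1 to 5 scales, the rating thresholds, and the `Risk` class are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A risk scored on hypothetical 1-5 ordinal scales (an assumption)."""
    name: str
    likelihood: int  # 1 (rare) .. 5 (almost certain)
    impact: int      # 1 (negligible) .. 5 (severe)

    def score(self) -> int:
        # Assumed convention: risk = likelihood x impact.
        return self.likelihood * self.impact

    def rating(self) -> str:
        # Illustrative thresholds; each organization defines its own.
        s = self.score()
        if s >= 15:
            return "high"
        if s >= 6:
            return "medium"
        return "low"


# Password example: brute-forcing is plausible and the data loss is serious.
weak_passwords = Risk("Brute-forced passwords", likelihood=4, impact=4)
print(weak_passwords.score(), weak_passwords.rating())
```

Multiplying ordinal scores is a simplification that is easy to communicate; it is no substitute for the organization's own risk methodology.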

There are several ways to identify the endless possible threats, which are the causes of an unwanted incident that results in financial or material loss, damage, modification of assets or data, unauthorized access, denial of service, or disclosure of sensitive information. One way is threat modeling, which tries to identify, categorize, and analyze the loss, its probability, and the possible solutions to reduce the threat. It is especially useful when designing an application. The exercise asks what is being built (assets), what can go wrong (threats and threat agents), how to mitigate it (controls), and how to validate that the mitigation worked (testing).

One widely used threat-modeling approach is STRIDE, named after the initials of the six threat categories it asks about:

Spoofing: Can the attacker gain access by spoofed credentials, IP addresses, usernames, email addresses, MAC addresses, and so forth?

Tampering: Can there be any tampering of data, whether it is in use, in transit, or at rest?

Repudiation: Can the attacker avoid being audited or traced?

Information disclosure: Can sensitive information be disclosed to unauthorized parties?

Denial of service: Can the attacker harm the system so that it no longer responds to legitimate requests?

Elevation of privilege: Can the attacker elevate their privileges to a higher level so that they can access more content or have rights to do more?

Another approach is PASTA (Process for Attack Simulation and Threat Analysis), which proceeds in seven stages:

Stage 1: Define business, security, and compliance objectives (function of the application, number of users, data inputs and outputs, and BIA)

Stage 2: Define the technical scope of assets and components (including application components, application boundaries, network topology, design diagrams, protocols and services, data interactions)

Stage 3: Decompose the application (users, roles, responsibilities, data flows, ACLs)

Stage 4: Threat analysis (threat intelligence, list of threat agents, threat scenarios, and likelihood)

Stage 5: Vulnerability detection (any vulnerability in the application based on OWASP Top 10 or MITRE CWE)

Stage 6: Analyze and model attacks or attack enumeration (identification of attack surface, attack trees and vectors, possible exploitation points)

Stage 7: Risk/impact analysis and development of countermeasures (business impact of threats, any gaps in the security controls, calculation of residual risk, and mitigation actions)

Once threats, vulnerabilities, and impacts are identified, the risk becomes much easier to understand. Risk assessments then let you refine that understanding and prioritize actions.

The asset value (AV) assigns monetary values to your assets.

The exposure factor (EF), or loss potential, is the percentage of the asset’s value that would be lost if the risk materializes.

Single loss expectancy (SLE) (AV × EF) is the monetary value of a single loss.

The annualized rate of occurrence (ARO) estimates how many times per year the risk is expected to occur.

The annualized loss expectancy (ALE) (ARO × SLE) is the total cost of all instances of this loss in one year.

The cost of countermeasures includes licensing, installation, maintenance, support, annual repairs, and the cost of testing and evaluation.

The quantitative analysis ends with a cost-benefit review that compares the ALE with the cost of the countermeasure. If the countermeasure delivers an acceptable decrease in risk for its cost, you can implement it. Note that quantitative analyses still involve subjective judgment, because organizations need to assign monetary values to each threat, vulnerability, or loss.
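The quantities defined above can be worked through in a few lines. This is a minimal sketch with hypothetical figures; the net-benefit calculation (reduction in ALE minus the annual cost of the countermeasure) is a commonly used convention that is assumed here, not a formula given in the text.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = AV x EF, with EF expressed as a fraction between 0 and 1."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure_factor must be between 0 and 1")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = ARO x SLE."""
    return aro * sle


def countermeasure_net_benefit(ale_before: float, ale_after: float,
                               annual_cost: float) -> float:
    """Assumed convention: ALE reduction minus yearly countermeasure cost."""
    return ale_before - ale_after - annual_cost


# Hypothetical figures for illustration only.
sle = single_loss_expectancy(asset_value=200_000, exposure_factor=0.25)
ale_before = annualized_loss_expectancy(sle, aro=2)    # two incidents a year
ale_after = annualized_loss_expectancy(sle, aro=0.5)   # one every two years
net = countermeasure_net_benefit(ale_before, ale_after, annual_cost=30_000)

print(f"SLE={sle:,.0f} ALE before={ale_before:,.0f} "
      f"ALE after={ale_after:,.0f} net benefit={net:,.0f}")
```

A positive net benefit suggests the countermeasure pays for itself under these assumptions; the result is only as reliable as the AV, EF, and ARO estimates behind it.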

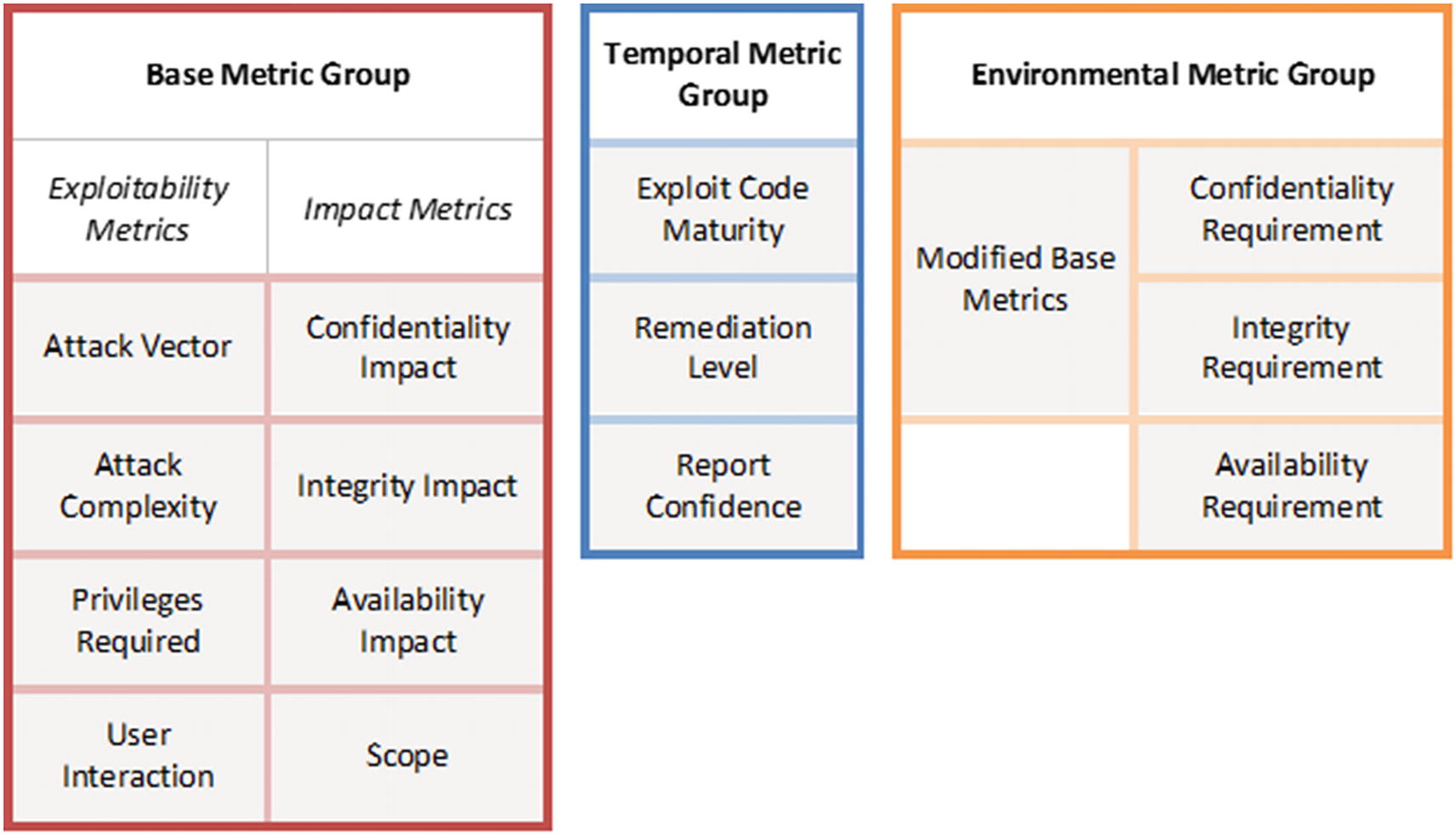

CVSS metric groups⁴

So how do you treat or manage your risks?

The answer for the preceding password example is straightforward: a stronger password policy with account lockout would decrease the likelihood of the risk. Brute-forcing is still possible, but if, for instance, the account is disabled automatically after six wrong attempts, the malicious actor’s further attempts become useless.
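As a minimal sketch of the lockout control in this example, the tracker below disables an account after six consecutive failed logins. The class and method names are hypothetical; a real system would also persist state, define an unlock procedure, and throttle by source address.

```python
from collections import defaultdict

MAX_FAILED_ATTEMPTS = 6  # threshold taken from the example in the text


class LockoutTracker:
    """Hypothetical in-memory account lockout counter."""

    def __init__(self, max_failed: int = MAX_FAILED_ATTEMPTS):
        self.max_failed = max_failed
        self._failed = defaultdict(int)  # consecutive failures per user
        self._locked = set()

    def is_locked(self, user: str) -> bool:
        return user in self._locked

    def record_attempt(self, user: str, success: bool) -> bool:
        """Record a login attempt and return True if the login is accepted."""
        if self.is_locked(user):
            # Once locked, even a correct password is rejected.
            return False
        if success:
            self._failed[user] = 0
            return True
        self._failed[user] += 1
        if self._failed[user] >= self.max_failed:
            self._locked.add(user)
        return False


tracker = LockoutTracker()
for _ in range(6):
    tracker.record_attempt("alice", success=False)
print(tracker.is_locked("alice"))
```

After the sixth failure the account stays locked, so a dictionary attack gains nothing from further guesses, which is how the control lowers likelihood rather than impact.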

There may be some actions your organization cannot handle or afford. In that case, you can accept the risk or transfer it. Nowadays, many insurance companies provide cyber-risk insurance policies, which can help transfer the risk.

- Reduction or mitigation (also known as treating the risk): By improving a security control or adding a new one, you decrease the risk’s likelihood and/or impact.

Patching of servers would eliminate the vulnerability.

Adding more perimeter controls, such as IPS, WAF, would prevent some attacks from happening.

Implementing multi-factor authentication would provide some protection against credential thefts.

Acceptance (also known as tolerate): Management decides that the risk is within the established risk acceptance criteria, so the organization accepts the risk.

Transfer: By transferring the risk or insuring it, the potential cost is undertaken by another entity or individual.

Deter: By implementing deterrents, you discourage people who aim to cause the loss, which eventually reduces the likelihood of the risk. Security cameras, guards, WAFs, and MFA are examples.

Avoidance: You select alternative technologies or options that carry less risk than the original one, or simply cancel the initiative. Choosing IPS instead of IDS is an example.

Reject: Pretend that the risk does not exist or simply ignore the risk. This is neither a formal risk management activity nor a recommended one.

If some risk remains after the treatment, it is called residual risk. Organizations should aim to keep residual risks minimal and preferably within the acceptable risk threshold.

Penetration Testing

A penetration test is a simulated attack against computing systems that mimics an actual attack to identify and validate vulnerabilities, configuration issues, and business logic flaws. It includes several discovery and attack types, including port scanning, fuzz testing, vulnerability scanning, and social engineering. It is part of the vulnerability management activities and should be conducted annually on all public-facing applications by accredited third-party companies or organizationally independent internal resources.

Vulnerability scanning is not penetration testing. The main difference is that penetration testing combines automated tools (which may include, but are not limited to, vulnerability scanners) with manual testing, interpretation of the results, and verification of identified issues. The penetration tester may choose not to use automated tools, but doing so increases the time spent on the project. Most vulnerability scans are safe tests; that is, they do not exploit the vulnerability, or at least they are non-intrusive. In penetration tests, by contrast, the tester can exploit the vulnerability to see how far they can go.

Most of the time, penetration testing is performed on applications. However, penetration testers can also use vulnerability scans of servers, endpoints, web applications, wireless networks, network devices, mobile devices, and other potential points of exposure. Hence, applications, networks, and devices can all be tested.

In a typical penetration test, the team first plans the activity, including information gathering and threat modeling. In this phase, the penetration tester understands the organization’s expectations, analyzes the business flows, collects data from various sources, and prepares the tools for the testing.

Testing follows the planning. The tester starts and stops the activity within the agreed scope and at the agreed times so that the organization does not miss other security-related incidents. The testing can be done in various ways, including black box, where the tester knows nothing about the system beyond publicly available information, or white box, where the tester is given extensive information, possibly even application credentials. White-box testing certainly decreases the time spent on planning and discovery, so the tester can focus on finding more issues in time-limited engagements. Organizations generally choose gray-box testing, which combines both: the tester is provided with some information, such as IP addresses or application names. Preferably, tests should not be performed on production systems but on pre-production systems where the setup is identical but the data is limited or anonymized. Note that some systems do not have a pre-production or testing environment, and sometimes testing must be performed on production systems to see the real impact of an attack. In these cases, organizations must ensure that testing does not affect the regular business flow.

During the penetration test execution, organizations should monitor their systems for any unintended changes. As mentioned, penetration tests can be intrusive. Likewise, the tester should notify the organization immediately if a certain service or application becomes unresponsive.

The last phase is the analysis and reporting, where the tester analyzes the results from various tools, validates if necessary, and then presents the report to the organization. Reporting should include an executive summary, the scope and time of the testing, the methodology used, any limitations encountered during the testing, and findings with descriptions, severity information, impacted devices, references, and possible solutions for remediation. The evidence (raw outputs of tools, screenshots, scan results, etc.) is sometimes added as appendixes. It should be noted that the processes for evidence retention and destruction must be documented and complied with.

Organizations must have the necessary means to track the findings discovered during a penetration test. After patching the vulnerabilities or changing the configurations, a retest is required to validate the remediation of the findings. This can be done by the same company or tester or by competent internal resources.

Offensive Security Certified Professional (OSCP)¹¹ or Offensive Security Experienced Penetration Tester (OSEP)¹²

Certified Ethical Hacker (CEH)¹³

Licensed Penetration Tester Master (LPT)¹⁴

Global Information Assurance Certification (GIAC) (e.g., GPEN,¹⁵ GWAPT,¹⁶ or GXPN¹⁷)

CREST Penetration Testing Certifications¹⁸

NCSC IT Health Check Service (CHECK) certification¹⁹

CompTIA PenTest+²⁰

Penetration testers should be guided by a strict code of conduct or ethics. They may access highly sensitive information, so they should understand and follow their professional and ethical responsibilities. This is also required to ensure the trustworthiness of the results.

Red Teaming

A red team is your contractual opponent. You try to defend your organization, assets, or information while the red team continuously attacks your systems and people. Although both aim to find issues and ultimately improve the organization’s security posture, the main differences between penetration testing and red teaming are the scope of the test and the number of people involved in each exercise. In penetration testing, one or two penetration testers generally try to find and exploit as many vulnerabilities as possible. Red teaming, however, involves more people planning, attacking, and exploiting. The scope is also different: penetration tests focus on a specific application or service, whereas red teaming can focus on the entire organization, including human factors, physical elements, and external third-party resources.

Even if an organization has found many vulnerabilities and issues during penetration testing and successfully remediated all of them, it may still be susceptible to red teaming exercises. Red teaming includes finding human vulnerabilities, so a red team member can, for example, use social engineering to have someone turn off one or more crucial security controls.

Red teaming exercises need a scope and goal that are defined and agreed upon by both parties. These are stated in the rules of engagement, where the boundaries of the attack are drawn, with defined exceptions, if any. Allowed and disallowed actions, approved targets, and restricted movements in the internal network should also be covered in the rules of engagement. For example, in a healthcare facility you would consider the attack successful if the red team finds PII data. Still, the team should not go further, as doing so would breach personal data privacy laws and regulations. Similarly, if the red team gains access to core banking systems with write privileges in a financial institution, you would not want to see forged transactions in your production environment. Therefore, these boundaries should be established in the rules of engagement.

It should also be noted that most red teaming exercises should be performed without the prior knowledge of the blue teams (the defenders) to assess their effectiveness in detecting the adversary actions.

Reconnaissance: Gather as much information as possible about the organization, potential targets, and threat surfaces.

Weaponization: Create a weapon, such as an email attachment with a malicious payload, to create remote access to the organization.

Delivery: Send the weapon to the target.

Initial exploitation and installation: If the payloads are successful, gain more ground to establish persistent access.

Internal Recon: Gather more information about the internal network to increase the possibility for lateral movement.

Lateral movement: Find possible ways to achieve the goal or objective.

Complete mission: When the goal is achieved, debrief, and report the findings with the vulnerable path.

In the exercise debrief, red team and blue team findings are compared, and missing monitoring or security controls are identified, which can be used for immediate remediation activities or prioritization of future investments in the organization.

Code Review and Testing

Code review is the examination of an application’s source code by a competent individual or team. The main goal is to detect quality problems in the application, such as logic errors, vulnerabilities, potential defects like backdoors, buffer overflows, or injections, and nonconformity with coding standards.

Code reviewers should be different from the code author(s) and should have coding experience so that they understand the syntax of the language used. Reviewers are also distinct from testers, since most functional testing does not analyze the code at all.

Code reviews can use static testing, where the source code is manually reviewed without executing the program, or dynamic testing, where the program is evaluated in its runtime environment by providing valid or fuzzed data and observing the outputs. The latter is preferred when the organization does not have the program’s source code.
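To illustrate dynamic testing with fuzzed data, here is a minimal sketch of a random fuzzing harness. The two target functions, the input alphabet, and the choice to treat `ValueError` as an expected rejection are all hypothetical; production fuzzers are coverage-guided and far more capable.

```python
import random


def parse_age(text: str) -> int:
    """Hypothetical function under test: parse a non-negative integer."""
    value = int(text)
    if value < 0:
        raise ValueError("age cannot be negative")
    return value


def first_char_upper(text: str) -> str:
    """Hypothetical buggy function: fails on empty input."""
    return text[0].upper()


def fuzz(target, runs: int = 1000, seed: int = 0):
    """Feed random strings to `target` and collect unexpected exceptions."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    alphabet = "0123456789-+ abc\x00"
    unexpected = []
    for _ in range(runs):
        length = rng.randint(0, 8)
        data = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            target(data)
        except ValueError:
            pass  # assumed to be the documented way of rejecting bad input
        except Exception as exc:  # anything else is a finding
            unexpected.append((data, exc))
    return unexpected


print(len(fuzz(parse_age)), "unexpected failures in parse_age")
print(len(fuzz(first_char_upper)), "unexpected failures in first_char_upper")
```

The harness only observes runtime behavior, so it works without reading the target's source, which is the situation the paragraph above describes.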

Code reviews can also be done for learning or knowledge transfer. In peer reviews, two developers review each other’s code. The junior developer can learn techniques, solution models, and the organization’s quality expectations from the senior developer’s code, and the senior developer can correct mistakes or deficiencies in the junior developer’s work.

Code reviews should be a part of the SDLC (software development life cycle) or the DevOps processes (or SecDevOps). There are numerous ways to automate the code review process in software development. Organizations should use these techniques to remediate coding errors before deploying into production. Flawed code is far more difficult and expensive to remediate after release to production.

Code reviews need a standard to follow: a coding guideline that lists the libraries or coding techniques to use, naming conventions, and other procedures. Organizations must establish their own standards or use industry-accepted coding frameworks, such as the Open Web Application Security Project (OWASP) guidelines.

OWASP Top Ten,²¹ which lists the top ten vulnerabilities found in most applications, along with prevention techniques

OWASP Code Review Guide²²

OWASP Security Testing Guides for web applications²³ and mobile apps²⁴

OWASP Security Knowledge Framework,²⁵ which provides a learning and training platform for developers

ZAP (also known as OWASP Zed Attack Proxy),²⁶ a web application scanner to detect vulnerabilities

Code reviews can be done manually or with automated tools. Automated tools can process a very large number of lines of code in a time-efficient manner; however, these tools can produce false positives and false negatives. This does not mean that manual review is perfect; some coding issues can always be overlooked. To minimize that, tool-assisted code review can be used, where an individual analyzes the code while the tool helps the reviewer by highlighting recent changes and affected libraries and by providing review tracking.

Compliance Scans

Compliance scans, often called security baseline checks, scan systems against a given set of configuration checks (baseline). The security baselines are the minimum configurations that every system must meet.

Compliance scans complement vulnerability scans and are essential to confirm systems hardening. Systems should be hardened before going live, and the way to ensure that they have the minimum intended level of security is through compliance scans.

Most vulnerability scanning management tools can perform compliance scans. The difference between vulnerability scans and compliance scans is that systems are scanned for known vulnerabilities in vulnerability scans. In contrast, systems configurations are checked against the security baseline defined for those systems in compliance scans.

Is the system’s password policy aligned with your standards (e.g., at least 14 characters, maximum age of 90 days, etc.)?

Are session timeouts set to at most 15 minutes?

Have remote communications been configured to be secure?

Are unused Apache server modules disabled?

Is the Oracle DB using the default public privileges?

Has Microsoft Edge been configured to disallow downloads or add-ons from untrusted networks?
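Checks like these boil down to comparing collected settings against a baseline. The sketch below assumes the system configuration has already been read into a dictionary; the setting names and the `BASELINE` structure are hypothetical, with thresholds borrowed from the examples above.

```python
import operator

# Hypothetical baseline: setting name -> (comparison, required value).
BASELINE = {
    "password_min_length": (operator.ge, 14),      # at least 14 characters
    "password_max_age_days": (operator.le, 90),    # at most 90 days
    "session_timeout_minutes": (operator.le, 15),  # at most 15 minutes
}


def check_compliance(config: dict, baseline: dict = BASELINE) -> list:
    """Return a list of findings; an empty list means the system is compliant."""
    findings = []
    for setting, (compare, required) in baseline.items():
        actual = config.get(setting)
        if actual is None:
            findings.append(f"{setting}: not configured")
        elif not compare(actual, required):
            findings.append(
                f"{setting}: found {actual}, "
                f"baseline requires {compare.__name__} {required}"
            )
    return findings


# Example system with a short password minimum and no session timeout.
server_config = {"password_min_length": 8, "password_max_age_days": 90}
for finding in check_compliance(server_config):
    print(finding)
```

Keeping the baseline as data rather than code makes it easy to swap in an industry benchmark or an organization-specific standard without touching the checker.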

Compliance scans can be performed on operating systems, server software (web servers, virtualization, database servers, DNS, email, authentication servers), cloud providers, mobile devices, network devices, desktop software (web browsers, productivity software, online conferencing tools) and multi-function devices. As long as the system allows reading the configurations, a baseline can be created from scratch by the organization or from industry-accepted best practices.

Vulnerability Scans

Vulnerability scans help organizations to understand how exposed their assets are to vulnerabilities. Therefore, to have a comprehensive assessment of their assets, it is crucial to ensure that vulnerability scans cover all organization assets, supported by an effective asset management process that can also help detect dormant assets.

Agent-based scans require the installation of a vulnerability scan agent in all assets to produce more detailed assessment results.

Since it is impossible to install vulnerability scan agents in some devices (e.g., routers, IP phones, CCTV, etc.), organizations must also conduct network-based vulnerability scans to assess these devices.

To ensure the effectiveness of network-based vulnerability scans, the vulnerability scanners must reach all networks on all ports. This means that firewalls must be configured to allow traffic from the vulnerability scanner to all networks on all ports, which makes the scanner a very attractive target since it can reach all the organization’s networks without any restrictions. Therefore, vulnerability scanners must be placed in an isolated network, ideally accessed only from a privileged access management (PAM) platform or through the vendor management console, and firewall rules should be scheduled to be active only during scan periods.

Another challenge of network-based vulnerability scans is that to produce more detailed results, scans must be authenticated. Vulnerability scanners must be integrated with PAM to ensure that these authentication credentials are not compromised or locally stored.

Network-based vulnerability scans should also assess the organization’s external perimeter. These scans can be run from an Internet-based scanner to test any access exposed to the Internet.

On-premises: Agents and network security scanners report to an on-premises control center where policies and vulnerability updates are distributed.

Cloud: Agents and network security scanners report to a cloud-based control center where policies and vulnerability updates are distributed.

Hybrid: Agents and network security scanners report to a cloud-based control center where policies and vulnerability updates are distributed. An additional on-premises control center synchronizes with the cloud control center. The on-premises control center can be used for reporting and for integration with other on-premises platforms like asset management or the risk register.

Information security professionals rely on standards like the Security Content Automation Protocol (SCAP) from NIST for the common classification and rating of vulnerabilities.

CVSS: Common Vulnerability Scoring System²⁷

CVE: Common Vulnerabilities and Exposures

CCE: Common Configuration Enumeration

CPE: Common Platform Enumeration

XCCDF: Extensible Configuration Checklist Description Format

OVAL: Open Vulnerability and Assessment language

CVSS: Common Vulnerability Scoring System

CVSS v3.0 has the following severity ratings based on the base score range.

CVSS v3.0 Ratings

| Severity | Base Score Range |
|---|---|
| None | 0.0 |
| Low | 0.1 – 3.9 |
| Medium | 4.0 – 6.9 |
| High | 7.0 – 8.9 |
| Critical | 9.0 – 10.0 |
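The severity bands can be expressed as a small lookup. This sketch uses the qualitative rating scale published in the CVSS v3.0 specification, in which Low starts at 0.1; the function name is hypothetical.

```python
def cvss_v3_severity(base_score: float) -> str:
    """Map a CVSS v3.0 base score (0.0-10.0, one decimal) to its severity."""
    if not 0.0 <= base_score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if base_score == 0.0:
        return "None"
    if base_score <= 3.9:
        return "Low"
    if base_score <= 6.9:
        return "Medium"
    if base_score <= 8.9:
        return "High"
    return "Critical"


for score in (0.0, 3.9, 5.3, 7.5, 9.8):
    print(score, cvss_v3_severity(score))
```

Because base scores are published with one decimal place, the upper bounds of each band can be compared directly without rounding.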

CVE: Common Vulnerabilities and Exposures

CVE defines a vulnerability as a weakness in the computational logic (e.g., code) found in software and hardware components that, when exploited, results in a negative impact on confidentiality, integrity, or availability. Mitigation of the vulnerabilities in this context typically involves coding changes but could also include specification changes or even specification deprecations (e.g., removal of affected protocols or functionality in their entirety).

A vulnerability’s unique CVE identifier is also associated with specific operating systems, databases, applications, and so forth.

Since the same vulnerability has the same CVE across the industry, it can be used across several platforms to search for details about that vulnerability.

CCE: Common Configuration Enumeration

CCE provides a unique identifier to security-related system configuration issues to facilitate and expedite the correlation of configuration information from several information sources and tools.

CPE: Common Platform Enumeration

Common Platform Enumeration (CPE) describes and identifies classes of applications, operating systems, and hardware devices from the organization’s assets. Unlike CVE, CPE is not a unique identifier of products or systems. CPE identifies abstract product classes.

Vulnerability scanning platforms can relate vulnerabilities (CVE) and configurations (CCE) to CPE, which can help system administrators understand how many specific classes (CPE) are exposed or misconfigured. This can then trigger a configuration management tool to verify whether the software is properly configured in compliance with the organization’s policies and adopted standards.

XCCDF: Extensible Configuration Checklist Description Format

Extensible Configuration Checklist Description Format (XCCDF) is a standardized language in XML format to specify security checklists, benchmarks, and configuration documentation, to replace the security hardening and analysis documentation written in a non-standardized way.

OVAL: Open Vulnerability and Assessment Language

Open Vulnerability and Assessment Language (OVAL) is an international information security standard for security testing procedures.

Vulnerability Scanning Procedures

Vulnerability scanning procedures must define the scanning frequency (daily, weekly, monthly, or quarterly) and the reporting frequency. Reports must reach the right people to conduct the appropriate mitigation actions.

The patch management process must also define mitigation SLAs according to the vulnerability severity (CVSS). A risk register must be maintained to keep track of the vulnerabilities that have exceeded the SLA thresholds.

Additionally, all new assets and applications must be subject to a vulnerability scan before connecting to the organization network or going live.
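The severity-based SLAs described above can be sketched as follows. The severity bands follow the CVSS v3.x qualitative rating scale; the SLA durations and the finding layout (`id`, `cvss`, `detected`) are hypothetical values chosen for illustration, since each organization defines its own.

```python
from datetime import date, timedelta

# CVSS v3.x qualitative severity bands as (lower bound, label).
SEVERITY_BANDS = [(9.0, "critical"), (7.0, "high"), (4.0, "medium"), (0.1, "low")]

# Hypothetical remediation SLAs in days; not taken from any standard.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


def severity(score: float) -> str:
    """Map a CVSS base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    for lower, label in SEVERITY_BANDS:
        if score >= lower:
            return label
    return "none"


def sla_breaches(findings, today: date) -> list:
    """Return findings whose remediation deadline has passed.

    Each finding is a dict with "id", "cvss", and "detected" (a date).
    Breached findings are candidates for the risk register.
    """
    breaches = []
    for finding in findings:
        label = severity(finding["cvss"])
        if label == "none":
            continue  # a score of 0.0 carries no SLA in this sketch
        due = finding["detected"] + timedelta(days=SLA_DAYS[label])
        if today > due:
            breaches.append({**finding, "severity": label, "due": due})
    return breaches
```

Running `sla_breaches` on each scan report gives the list of overdue vulnerabilities to track in the risk register.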

Firewalls and Network Devices Assurance

Since devices like firewalls, routers, and switches do not support vulnerability scanning agents, additional mechanisms must be implemented to detect vulnerabilities. These mechanisms must also verify configuration and rule compliance with corporate policies, which network-based vulnerability scans cannot identify.

Ensure that firewall rules are compliant with corporate policies and standards

Ensure configuration compliance with corporate standards and security baselines (e.g., CIS security baselines)

Detect vulnerabilities that the vulnerability scans cannot detect

Periodic reviews should be scheduled according to exposure level and inherent risk: public-facing, Internet-exposed, and perimeter devices should be reviewed more frequently than internal devices. This process confirms that network segmentation is properly implemented. It also identifies unused, shadowed, and redundant rules, and recertifies rules, which is a regulatory requirement in some countries and mandated by some standards (e.g., PCI DSS v3.2.1 requirement 1.1.7 states that organizations should review firewall and router rule sets at least every six months).
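The detection of shadowed and redundant rules mentioned above can be sketched as follows. This is a simplified model under stated assumptions: rules are evaluated top-down with first-match semantics, all addresses use the same IP version, and a rule matches either one port or any port. The `Rule` type and its fields are hypothetical, not a vendor format. Unused rules cannot be found this way, because that requires hit counters from the device.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str
    src: str              # source network in CIDR notation
    dst: str              # destination network in CIDR notation
    port: Optional[int]   # None means "any port"
    action: str           # "allow" or "deny"


def covers(earlier: Rule, later: Rule) -> bool:
    """True if every packet matched by `later` is also matched by `earlier`."""
    src_ok = ip_network(later.src).subnet_of(ip_network(earlier.src))
    dst_ok = ip_network(later.dst).subnet_of(ip_network(earlier.dst))
    port_ok = earlier.port is None or earlier.port == later.port
    return src_ok and dst_ok and port_ok


def review(rules) -> list:
    """Report rules that can never match because an earlier rule covers them.

    Returns (rule, kind, covering_rule) tuples, where kind is "redundant"
    when both rules take the same action and "shadowed" otherwise.
    """
    findings = []
    for index, later in enumerate(rules):
        for earlier in rules[:index]:
            if covers(earlier, later):
                kind = "redundant" if earlier.action == later.action else "shadowed"
                findings.append((later.name, kind, earlier.name))
                break
    return findings
```

A shadowed rule is usually the more serious finding, since the rule's intended action is silently overridden by an earlier rule.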

Security Operations Center

Like many other services, a security operations center (SOC) has three possible implementation models: in-house (on-premises), outsourced, and hybrid, which combines an on-premises SOC with one or more managed security service providers.

The best implementation model for each organization depends on the organization’s infrastructure size, the information security management system’s maturity, and available resources, among many other possible factors.

In addition to the dedicated managed security services provider, the hybrid model can also include services from other cloud-based providers like endpoint detection and response (EDR) software or secure DNS vendors that can provide alert services.

The SOC must ensure that all security platforms, servers, and endpoints are on-boarded to the SIEM, and that databases are on-boarded through a database activity monitoring (DAM) platform, since the SOC’s effectiveness depends on up-to-date and reliable internal and external information sources.

If the organization cannot run an internal SOC because it is too expensive or labor-intensive, or because the organization lacks the specific expertise, it can opt to outsource SOC operations to a managed security services provider (MSSP) that can provide constant real-time monitoring, response, and escalation services.

If the organization decides to implement an in-house or hybrid SOC, it must staff the SOC adequately, with appropriate roles.

Security analyst (tier one): Vulnerability monitoring, incident triage, and escalation

Security analyst (tier two): Incident investigation, response and recovery procedures to remediate impact

Threat hunter (tier three): Assesses the organization infrastructure based on the latest threat intelligence reports to identify malicious activities

Manager (tier four): Oversees the SOC team, reports findings, defines action plans and use cases, escalates incidents to the organization’s CISO

The SOC must be supported by the security engineering and architecture teams in the design, development, and maintenance of the security infrastructure.

Incident Response and Recovery

An information security incident is an event with a negative impact or potential impact on confidentiality, integrity, or availability of an organization’s systems due to malicious activities.

NIST SP 800-61, Computer Security Incident Handling Guide,29 defines a computer security incident as “a violation or imminent threat of violation of computer security policies, acceptable use policies, or standard security practices.”

Although security incident response and recovery fall under SOC responsibilities, the entire organization must support the process (e.g., IT, legal, HR departments) to minimize the incident impact and contain it in the quickest possible way.

The SOC might also need to liaise with and seek support from external parties, including software vendors, ISPs, MSSPs, law enforcement, and national or regulator computer security incident response teams (CSIRTs).

Figure: Incident response life cycle (Preparation; Detection & Analysis; Containment, Eradication & Recovery; Post-Incident Activity)

Preparation

Establish appropriate capabilities

Develop and implement incident response policy, plan, and procedures (such as information sharing and internal and external escalation mechanisms)

Identify relevant stakeholders who should participate in the process according to incident type and impact

Contacts for relevant stakeholders and stand-by teams

Incident reporting and escalation mechanisms (e.g., phone numbers, email addresses, online forms)

Secure storage facilities

Updated laptops and smartphones with the necessary analysis and forensic toolkit

Digital forensics and backup devices for evidence collection and preservation

Detection and Analysis

It is essential that the SOC monitors all security platforms, such as IPS, SIEM, DAM, EDR, and antivirus, to ensure prompt detection and a comprehensive understanding of each incident. This means that all technical security controls (EDR, antivirus, IPS, firewalls, honeypots, proxies, etc.) and security events from other systems (e.g., failed login attempts) must be monitored by the SOC.

Additionally, incidents can be triggered by other sources like users (e.g., phishing reports, suspicious emails) or threat intelligence services.

External/removable media

Attrition (e.g., a DDoS attack or a brute-force attack against an authentication mechanism)

Web

Email

Impersonation

Improper usage

Equipment loss or theft

Other (an attack that does not fit in any of the other categories)

After an incident is created, the SOC starts the analysis process. It determines whether the incident is a false positive or a real incident and, if it is real, classifies it by severity and attack vector so that it can be prioritized.

In case of a false positive, the rules that triggered the incident must be reviewed, and the case added to a knowledge base, to avoid future false positives.

An accurate networks and systems profile

A clear understanding of the normal behaviors (to detect potential anomalies)

An effective log retention policy

Event correlation

Time synchronization across all of the organization’s assets

A knowledge base built with information from previous cases

Expertise from other teams

During the analysis process, all collected evidence must be preserved. NIST SP 800-86, Guide to Integrating Forensic Techniques into Incident Response,30 provides more guidance on forensic techniques and on evidence collection and preservation.
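One common way to support evidence preservation is to record a cryptographic hash of every collected file at collection time and to re-verify the hashes later. The sketch below illustrates the idea with SHA-256; the manifest layout, the function names, and the `collector` field are assumptions for illustration, not a format prescribed by the NIST guides.

```python
import hashlib
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so that large disk images fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path, collector: str) -> dict:
    """Record who collected the evidence, when, and the hash of each file."""
    files = sorted(p for p in evidence_dir.rglob("*") if p.is_file())
    return {
        "collector": collector,
        "collected_at": datetime.now(timezone.utc).isoformat(),
        "files": {p.relative_to(evidence_dir).as_posix(): sha256_of(p) for p in files},
    }


def verify_manifest(evidence_dir: Path, manifest: dict) -> list:
    """Return the relative paths that are missing or whose hash has changed."""
    changed = []
    for rel, expected in manifest["files"].items():
        target = evidence_dir / rel
        if not target.is_file() or sha256_of(target) != expected:
            changed.append(rel)
    return changed


if __name__ == "__main__":
    # Small demonstration on a temporary directory with a placeholder file.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "memory.dmp").write_bytes(b"placeholder evidence")
        manifest = build_manifest(root, collector="analyst-1")
        print(verify_manifest(root, manifest))
```

In practice the manifest itself would also need to be stored securely (for example, signed or kept on write-once media) so that it cannot be altered together with the evidence.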

Containment, Eradication, and Recovery

The containment, eradication, and recovery procedures are shaped based on the nature and impact of the attack and are highly dependent on proper preparation.

Containment provides time to develop a remediation plan and can go from simply disconnecting a desktop from the network to shutting down a system.

Potential damage to and theft of resources

Need for evidence preservation

Service availability (e.g., network connectivity, services provided to external parties)

Time and resources needed to implement the strategy

Effectiveness of the strategy (e.g., partial containment, full containment)

Duration of the solution

After containing the incident, it might be necessary to eradicate all traces of it, for example by deleting malware, disabling breached user accounts, and identifying and mitigating all exploited vulnerabilities.

During recovery, systems are restored and confirmed to be functioning normally. The recovery process may also involve restoring from clean backups, rebuilding systems from scratch, replacing compromised files with clean versions, installing patches, changing passwords, and strengthening network security.

Comprehensive and accurate documentation, including up-to-date data flows and interdependencies, is essential to minimize the recovery effort.

Post-Incident Activity

The post-incident activities consist of reviewing the incident and identifying improvement opportunities (also known as lessons learned) using and preserving the collected data.

Exactly what happened? Review incident timeline.

What went wrong?

What could be done to prevent the incident?

How did the organization staff (including all staff, not only the responders) respond? For example, clicking a malicious email payload might indicate a lack of security awareness training.

What kind of information was required? And when? Was it available?

Were the analysis procedures effective?

What could be done to improve the process?

Could information sharing have helped to avoid or improve the response process?

Which measures should be implemented to avoid similar incidents?

The data collected during the incident can be used to build a risk scenario to add to the risk assessment and a use case to configure in the SIEM, which improves data correlation and response times.

The data collected during the incident should be kept in accordance with the organization’s retention policies and, where applicable, according to legal requirements.
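A SIEM use case of the kind mentioned above is, at its core, a correlation rule over normalized events. As a minimal sketch, the following function raises an alert when one source produces a burst of failed logins inside a sliding time window. The event layout `(timestamp, source, outcome)`, the threshold, and the window length are hypothetical; a real SIEM expresses such rules in its own rule language.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_bruteforce(events, threshold=5, window=timedelta(minutes=5)):
    """Yield (source, timestamp) when a source reaches `threshold` failed
    logins within `window`.

    `events` must be an iterable of (timestamp, source, outcome) tuples
    sorted by timestamp; this layout is an assumed normalized log format.
    """
    recent = defaultdict(deque)
    for timestamp, source, outcome in events:
        if outcome != "failure":
            continue
        queue = recent[source]
        queue.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while timestamp - queue[0] > window:
            queue.popleft()
        if len(queue) >= threshold:
            yield source, timestamp
            queue.clear()  # avoid re-alerting on the same burst
```

Thresholds taken from a real incident's timeline (how many attempts, over how long) make such a rule more likely to catch a repeat of the same attack without flooding analysts with false positives.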

Threat Hunting

Threat hunting, also known as cyber threat hunting, is the process in which SOC analysts actively look for undetected threats that might have secretly compromised the organization’s network, looking beyond known alerts and known malicious threats.

Attackers such as advanced persistent threats (APTs) can compromise the organization’s network and remain active and undetected for a long time, collecting data and stealing login credentials to move laterally to devices that have not yet been compromised.

The threat hunting process goes beyond the usual detection technologies, like security information and event management (SIEM) and EDR, by looking for hidden malware and patterns of suspicious activities. This can be done by combining several sources of information in addition to SIEM and EDR and correlating that information based on known use cases, the SOC analyst’s experience, artificial intelligence technologies, and threat intelligence, such as security advisories and alerts with indicators of compromise (IoCs) or indicators of attack (IoAs).

Intel-based hunting uses IoCs provided by threat intelligence alerts and advisories to hunt for threats. These IoCs can be hash values, IP addresses, domain names, or network or host artifacts provided by intelligence sharing platforms, such as computer emergency response teams (CERTs), and the SOC should look for them. For example, if an alert identifies a public IP address as an APT command-and-control server, the SOC should search for it in all logs, such as proxy logs, to check whether there were any attempts to access it. If there were, devices in the organization may have been compromised.

Hypothesis hunting is a proactive hunting model that uses a threat hunting library and playbooks aligned with the MITRE ATT&CK framework to detect APTs and malware in the organization’s infrastructure. This approach uses known attackers’ IoAs and tactics, techniques, and procedures (TTPs) to detect threats.

Custom hunting is based on the organization’s requirements and use cases and considers context factors such as geopolitical issues or activity-specific issues (e.g., finance, credit card, defense). It can include both the intel-based and hypothesis models.

When threat hunting detects malware or confirms malicious activity, an incident must be created and its mitigation managed according to the security incident management process.
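The intel-based model described above, searching logs for indicators taken from an advisory, can be sketched as follows. This minimal example handles only IP address and domain indicators; the proxy record layout (`client`, `dest_host`) is a hypothetical normalized schema, and the addresses and domains in the usage are reserved documentation values, not real indicators.

```python
import ipaddress


def load_iocs(lines):
    """Split raw indicator strings into IP addresses and lowercase domains.

    Blank lines and lines starting with "#" are ignored. Anything that is
    not a valid IP address is treated as a domain name (an assumption of
    this sketch; hash indicators would need separate handling).
    """
    ips, domains = set(), set()
    for raw in lines:
        value = raw.strip()
        if not value or value.startswith("#"):
            continue
        try:
            ips.add(ipaddress.ip_address(value))
        except ValueError:
            domains.add(value.lower().rstrip("."))
    return ips, domains


def hunt(proxy_records, ips, domains):
    """Return the proxy records whose destination matches an indicator."""
    hits = []
    for record in proxy_records:
        host = record["dest_host"].lower().rstrip(".")
        try:
            matched = ipaddress.ip_address(host) in ips
        except ValueError:
            # Match the listed domain itself or any of its subdomains.
            matched = any(host == d or host.endswith("." + d) for d in domains)
        if matched:
            hits.append(record)
    return hits
```

Each hit identifies a client that attempted to reach an indicator and is therefore a candidate for investigation under the incident management process.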

Threat Intelligence

Threat intelligence is prior knowledge of what may happen to your organization, assets, or information, so that you can prepare to prevent or mitigate attacks. Threat intelligence can provide useful information on the attacker’s modus operandi (also known as tactics, techniques, and procedures, or TTPs), capabilities, sources, resources, and motives. This information helps you build efficient and focused defenses to protect the organization.

There are three categories of threat intelligence: strategic, tactical, and operational. Strategic threat intelligence helps the organization understand the overall threat landscape. It is general information provided by security companies about the nation, the industry, or the organization. Tactical threat intelligence is more actionable. It may contain IoCs or fingerprints of malicious behavior, so that organizations can act on security devices such as proxies, email gateways, EDR, or IPS. Generally, these are malicious IP addresses, domain names, and hashes of malicious payloads. Operational threat intelligence is knowledge of potential cyberattacks or campaigns against the organization, along with possible attack vectors.

Threat intelligence information often comes from the dark or deep web, where cybercriminals, threat actors, or hacktivists discuss their next attack, try to find support for their next moves, or try to sell stolen data. Organizations do not need to access those places to get that information; instead, they hire specialized companies. Generally, subscription-based software-as-a-service platforms provide threat intelligence feeds tailored to the organization or its industry.

Establishing defenses before an actual incident is the main purpose of threat intelligence. However, it can also be used to decrease incident response time. Analysts can submit file hashes to numerous threat intelligence platforms to check whether the files have been flagged in previous cyber incidents. In addition, threat intelligence can help prioritize patching in larger organizations. For example, if the organization receives operational threat intelligence indicating that its public website is being targeted, the patching of those servers should be prioritized.
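The patch prioritization example above can be expressed as a simple ordering rule: assets named in current threat intelligence come first, and within each group the highest CVSS score comes first. The sketch below assumes a hypothetical patch record with `asset` and `cvss` fields; real prioritization would typically weigh more factors, such as asset criticality.

```python
def prioritize(pending, targeted_assets):
    """Order pending patches for deployment.

    Patches for assets in `targeted_assets` (assets named in threat
    intelligence) sort first; ties are broken by CVSS score, highest first.
    """
    return sorted(
        pending,
        key=lambda patch: (patch["asset"] not in targeted_assets, -patch["cvss"]),
    )
```

For example, with `targeted_assets={"www01", "www02"}`, a medium-severity patch on `www01` is scheduled ahead of a critical patch on an internal database server that is not currently being targeted.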

Threat intelligence data is also useful for risk management activities, particularly in estimating the likelihood of a certain threat. Moreover, many threat intelligence tools provide information on stolen customer data such as card details, names, IDs, and passwords. Organizations can act on this information by replacing the cards or forcing their end users or consumers to change their passwords. Similarly, organizations can assess the risk that third-party service providers introduce to their environment by using the cyber-risk scoring that threat intelligence platforms now offer.

In addition to commercial threat intelligence service providers, national CERTs also provide threat intelligence and expect other organizations to share it, so that awareness and preparedness can be built at a national level.

Organizations can also use online threat intelligence tools to check their digital footprint on the Internet. There are many ready-to-use31 and ready-to-install32 tools for such purposes.

Security Engineering

Security engineering incorporates and integrates security controls with all of the organization’s other information systems.

Asset Management

The asset management process maintains oversight of the organization’s IT assets inventory. This should be a continuous process to ensure that all devices are protected by security controls like antivirus, EDR, or vulnerability scan agents to provide reliable information on the organization’s assets, security events, and vulnerabilities.

Asset management and discovery platform
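The continuous check described above, confirming that every inventoried device is covered by the expected security controls, can be sketched as a set comparison between the asset inventory and the hosts each control console reports. The control names and the input layout are hypothetical; in practice the data would come from exports or APIs of the respective platforms.

```python
# Hypothetical policy: controls that every asset is expected to report to.
REQUIRED_CONTROLS = ("antivirus", "edr", "vuln_agent")


def coverage_gaps(inventory, control_reports):
    """Compare the asset inventory with what each control console sees.

    inventory: iterable of hostnames from the asset inventory.
    control_reports: dict mapping a control name to the set of hostnames
        that the control's console currently reports.

    Returns (missing, unknown): `missing` maps each inventoried host to the
    sorted list of controls it lacks, and `unknown` lists hosts seen by a
    control but absent from the inventory.
    """
    inventory = set(inventory)
    missing = {}
    for host in sorted(inventory):
        absent = sorted(
            control for control in REQUIRED_CONTROLS
            if host not in control_reports.get(control, set())
        )
        if absent:
            missing[host] = absent
    seen = set().union(*control_reports.values())
    unknown = sorted(seen - inventory)
    return missing, unknown
```

Both outputs matter: `missing` points to unprotected assets, while `unknown` points to devices that exist on the network but were never registered in the inventory.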

Media Sanitization

Media sanitization is another part of the asset management process. Organizations must implement an effective media sanitization policy that covers all decommissioned and broken media to prevent data leakage.

NIST SP 800-88,33 Guidelines for Media Sanitization, offers guidance on how organizations can sanitize media.

In their media sanitization policy, organizations must consider the asset classification and choose the right method according to the sensitivity of the information held on the asset.

Media with highly confidential information should be degaussed or physically destroyed, whereas media with non-sensitive information can simply be erased or overwritten. However, applying different sanitization methods might not be cost-effective and can be complex to manage. Therefore, some organizations opt to have only one highly effective sanitization method for the entire organization (e.g., physical destruction) or outsource this process.

Configuration and Patch Management

Network devices, security devices, and IT assets all depend on their configurations, which makes configuration fundamental to system hardening. Security professionals configure a device to be secure based on security baselines, organizational policies, or the business needs of an application. All these configurations should be handled through configuration management.

In large organizations, where thousands of servers and devices are in place, it is nearly impossible to configure them manually, one by one. Hence, organizations rely on automation through configuration management tools. These tools can detect the current configuration of a device, such as one firewall or many, and apply a security patch or even change the firewall configuration to add a new zone on all devices in one click. These tools also cover applications. Whenever a new release is required, the configuration management tool uploads the required package to the servers, stops the application services, replaces the files, and restarts the services on all servers in the cluster. After the services are up and running, it can send the provisioning scripts and collect their outputs.

Thanks to these tools, it is easier to track which systems have the latest configuration changes, which systems require changes, or which systems do not have a prerequisite package. In addition, some of these tools support workflows, which enable security professionals to track the actual approval processes of the changes, change windows, impacted or pending devices, and assess the impact easily.

A centralized configuration management tool for large organizations also helps with overall security. Monthly patches must be discovered and applied to all servers. Configuration management tools can detect missing patches, apply them within a defined time frame, and report any issues encountered during patching. Moreover, they are useful for setting up new virtual servers. When you need a new server, it can be deployed quickly from an already hardened OS template (an image).
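The tracking that these tools provide, knowing which systems match the required configuration and which need changes, comes down to comparing each system's actual settings with a baseline. The following is a minimal sketch of that comparison; the settings dictionaries and the `MISSING` marker are illustrative assumptions, and real tools collect the actual values through agents or remote connections.

```python
# Marker used when a baseline setting is absent on a host (illustrative).
MISSING = "<missing>"


def drift(baseline: dict, actual: dict) -> dict:
    """Return {setting: (expected, found)} for every deviation from baseline."""
    return {
        key: (expected, actual.get(key, MISSING))
        for key, expected in baseline.items()
        if actual.get(key, MISSING) != expected
    }


def fleet_report(baseline: dict, fleet: dict) -> dict:
    """Map each non-compliant host to its configuration drift.

    `fleet` maps a hostname to the settings collected from that host.
    Compliant hosts are omitted from the report.
    """
    report = {}
    for host, settings in fleet.items():
        deviations = drift(baseline, settings)
        if deviations:
            report[host] = deviations
    return report
```

A report like this can drive both remediation (pushing the baseline to drifted hosts) and the approval workflow for hosts that need a documented exception.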

Security Architecture

According to NIST, security architecture is “a set of physical and logical security-relevant representations (i.e., views) of system architecture that conveys information about how the system is partitioned into security domains and makes use of security-relevant elements to enforce security policies within and between security domains based on how data and information must be protected.

“The security architecture reflects security domains, the placement of security-relevant elements within the security domains, the interconnections and trust relationships between the security-relevant elements, and the behavior and interaction between the security-relevant elements. The security architecture, similar to the system architecture, may be expressed at different levels of abstraction and with different scopes.”34

In addition to security standards (device hardening, network devices, cryptographic, etc.) and security baselines, each organization must have a corporate security architecture to integrate all the technical security controls and clearly define the integration rules for every system.

The security architecture defines the corporate security standards (e.g., cryptography, databases, authentication methods, file sharing, network segmentation, and technologies) so that project managers and system administrators know exactly where each component of their systems fits, which technologies are authorized, and how to integrate each component with the remaining systems.

This process should also include the application security architecture (e.g., whether mobile application calls to third parties should be sent directly to the service provider or routed through the organization’s data center), including authentication methods (e.g., SAML or OAuth).

How will external parties connect from the Internet? (HTTPS, IPSec, SFTP?)

How will those parties authenticate? And which technologies should be used (directory services, authentication protocols, etc.)?

- How should the organization segment its perimeter and internal networks?

Critical, non-critical

Confidential, non-confidential

Cardholder, non-cardholder

Customer, partner, employee services

- How should this segmentation be implemented in communication devices?

Distinct devices per segment or perimeter zone?

- Which technology should be implemented for each layer? And how should it be hardened?

Front end

Middleware

Databases

Back office

Support Systems (DNS, Active Directory, file servers, internal portals, mail, etc.)

Legacy systems

How should virtualization platforms be implemented?

Which security controls should be implemented between each layer?

How will communication be done between layers?

- Which security controls should be implemented in each asset? And how are new assets on-boarded?

Hardening

Antivirus

Directory services integration

Policies

EDR

Vulnerability scanner agent

SIEM integration

DAM integration (for databases)

How and where should a web application firewall be implemented?

How and where should an intrusion prevention system be implemented?

How will all these systems be administered?

The security architecture should be a living process, reviewed frequently to define how new technologies should be on-boarded.