Automation is the application of machines to tasks once performed by human beings. Although the term mechanization is often used to refer to the simple replacement of human labor by machines, automation generally implies the integration of machines into a self-governing system. Automation has revolutionized those areas in which it has been introduced, and there is scarcely an aspect of modern life that has been unaffected by it.

Digital transformation and automation go hand in hand. Digital transformation is the act of aligning business processes with current, automation-based technology so that workflows across the whole enterprise become more convenient, better optimized, and less error-prone. In other words, it moves tasks from being siloed, department-specific, and manual to being streamlined, universally accessible, and strategically automated. It does this by introducing new software that extends the systems already in place and takes over many of the repetitive, monotonous functions that previously fell on multiple employees across multiple departments. Those handoffs were a major cause of business process lag times and chokepoints, as work stalled in departmental queues. With digital transformation technologies in place, employees spend less time doing things “the old way.” Supported by automated technology and streamlined, cross-department software, enterprises can redirect attention to higher-order work, resulting in a fine-tuned workflow that minimizes waste and maximizes resource productivity.

The world is moving toward automation, and in principle almost any rule-based enterprise task can be automated. However, the expected benefits of automation must always be weighed against its cost before making a decision. In simple terms, highly repeatable tasks are the strongest candidates for automation.

Different tools and technologies enable automation. Robotic process automation (RPA) is one such technology. The term refers to software tools that partially or fully automate human activities that are manual, rule-based, and repetitive. These tools work by replicating the actions of a person interacting with one or more software applications to perform tasks such as entering data, processing standard transactions, or responding to simple customer service queries.

Robotic process automation tools do not replace the underlying business applications. They simply automate tasks that human workers already perform manually in those applications.

One of the key benefits of robotic process automation is that the tools do not alter existing systems or infrastructure. Traditional automation tools typically interact with systems through application programming interfaces (APIs), which means writing integration code. That code raises concerns about quality assurance and maintenance, and it must be updated whenever the underlying applications change.

With robotic process automation, the repetitive task still has to be scripted or configured, and this requires a subject matter expert (SME) who understands how the work is done manually. In addition, the data sources and destinations need to be highly structured and unchanging, because robotic process automation tools cannot apply intelligence to handle errors or exceptions. Even with these considerations, robotic process automation offers tangible, concrete benefits. Studies by the London School of Economics suggest that RPA can deliver a return on investment of between 30% and 200% in the first year alone.1 Savings on this scale will prove hard to resist. Last year, Deloitte found that while only 9% of surveyed companies had implemented RPA, almost 74% planned to investigate the technology in the next 12 months.

The limitation of RPA is that it mimics human behavior in a static way and lacks a human’s ability to adapt to change. Artificial Intelligence (AI) is the next big technology that complements RPA, because it enables machines to mimic human functions such as learning and problem-solving. AI can learn from past data and perform tasks far more intelligently than RPA, which can perform only repetitive, rule-based tasks.

RPA and Artificial Intelligence are converging, and the cost savings and benefits are too compelling for companies to ignore. RPA, for example, can capture and interpret the actions performed in existing business process applications, such as claims processing or customer support. Once the “robot software” has learned these tasks, it can take over running them, and it does so far more quickly, accurately, and tirelessly than any human. Complementing RPA with AI algorithms adds a further benefit: the robot can learn from data and from its mistakes, improving its accuracy and performance over time.

A combination of RPA and AI can both reduce costs and give the business new insights on which to base intelligent decisions. Together, RPA and Artificial Intelligence can provide maximum automation capabilities for enterprises, which makes the combination one of the most important elements to consider during digital transformation. These benefits are amplified further in an IoT context, which we discuss in subsequent sections. Combining IoT with AI can create “smart machines” that simulate intelligent behavior to make well-informed decisions with little or no human intervention. The result is faster innovation, which can significantly boost productivity for the enterprises involved. The IoT and AI markets are developing rapidly and in tandem.

Robotic Process Automation

As discussed, robotic process automation (RPA) is a technology that lets users configure computer software, or a “robot,” to emulate and integrate the actions of a human interacting with digital systems to execute a business process. RPA robots use the user interface to capture data and manipulate applications just as humans do. They interpret screens, trigger responses, and communicate with other systems to perform a wide variety of repetitive tasks.

Many enterprises are turning to RPA to streamline operations and reduce costs. With RPA, businesses can automate mundane, rules-based business processes, which frees business users to devote more time to serving customers or to other higher-value work. RPA bots, sometimes described as virtual IT support teams, are taking over repetitive, mundane processes from humans. Enterprises are also supercharging their automation efforts by combining RPA with cognitive technologies such as machine learning, speech recognition, and natural language processing. This lets them automate higher-order tasks that in the past required human perception and judgment. These cognitive technologies fall under the umbrella of Artificial Intelligence.

The key difference between RPA and enterprise automation tools such as business process management (BPM) is that RPA uses software or cognitive robots, rather than human operators, to perform and optimize process operations. Unlike BPM, RPA is a quick and highly effective fix that does not require invasive integration or changes to underlying systems. This allows organizations to deliver efficiencies and cost savings rapidly, mainly by replacing human effort with software “robots.”

RPA is great for automation, but it has limitations to keep in mind. For example, because RPA usually interacts with user interfaces, even minor changes to those interfaces can break a process.
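The sketch below makes this brittleness concrete with a rule-based “bot” that fills a form by finding fields through their on-screen labels. It is a minimal sketch: the screen is modeled as a plain dictionary, and the names `fill_invoice_form`, `Vendor Name`, `Invoice Number`, and `Amount` are hypothetical. Real RPA tools expose their own selectors and APIs.

```python
# A screen is modeled as a mapping from visible field label to current value.
Screen = dict[str, str]

def fill_invoice_form(screen: Screen, record: dict[str, str]) -> Screen:
    """Copy each record value into the field whose label the bot was scripted to find."""
    # The bot's "script": which on-screen label receives which record key (hypothetical).
    label_map = {"Vendor Name": "vendor", "Invoice Number": "invoice_no", "Amount": "amount"}
    filled = dict(screen)
    for label, key in label_map.items():
        if label not in filled:
            # The bot cannot adapt: a renamed label means a broken process.
            raise LookupError(f"field {label!r} not found on screen")
        filled[label] = record[key]
    return filled

record = {"vendor": "Acme Ltd", "invoice_no": "INV-1001", "amount": "250.00"}
old_screen = {"Vendor Name": "", "Invoice Number": "", "Amount": ""}
print(fill_invoice_form(old_screen, record))

# After a minor UI change ("Amount" relabeled "Total Amount"), the same bot fails.
new_screen = {"Vendor Name": "", "Invoice Number": "", "Total Amount": ""}
try:
    fill_invoice_form(new_screen, record)
except LookupError as err:
    print("bot stopped:", err)
```

The data and the task did not change; only a label did. That is exactly the kind of change a person adapts to without thinking and a scripted bot does not.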

Artificial Intelligence

Artificial Intelligence (AI) is the “science of making machines smart.” Today, we can teach machines to perform many tasks that once required humans. We can give them the ability to see, hear, speak, write, and move.

AI subfields

Machine learning – Machine learning gives systems the ability to learn and improve automatically from experience without being explicitly programmed. It focuses on developing computer programs that can access data and use it to learn for themselves, improving their performance through exposure to data rather than by following explicitly programmed instructions. In practice, this means automatically spotting patterns in large amounts of data and using those patterns to make predictions.

Deep learning – This is a relatively new and very powerful technique built on a family of algorithms that process information in deep “neural” networks, where the output of one layer becomes the input to the next. Neural networks are computing systems loosely inspired by the biological neural networks in animal brains, and their data structures and functionality are designed to simulate associative memory. Deep learning algorithms have proved highly successful in, for example, detecting cancerous cells or forecasting disease, but with one major caveat: it is often very difficult to identify which factors a deep learning model uses to reach its conclusion.
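A tiny forward pass can illustrate how “the output of one layer becomes the input to the next.” The weights below are arbitrary illustrative numbers, not a trained model. The sketch uses plain Python instead of a deep learning framework so that each layer’s arithmetic is visible.

```python
import math

def relu(xs: list[float]) -> list[float]:
    """Rectified linear activation: negative values become 0."""
    return [max(0.0, x) for x in xs]

def dense(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def forward(x: list[float]) -> float:
    # Layer 1: 3 inputs -> 2 hidden units (illustrative weights).
    h1 = relu(dense(x, [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]))
    # Layer 2: the output of layer 1 (h1) is the input here -> 2 hidden units.
    h2 = relu(dense(h1, [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]))
    # Output layer: h2 -> a single score squashed into the range (0, 1).
    (logit,) = dense(h2, [[0.7, -0.3]], [0.0])
    return sigmoid(logit)

print(forward([1.0, 2.0, 3.0]))
```

Training a real network means adjusting these weights automatically from data. Because the final score is the product of many such stacked weighted sums, it is hard to trace which input factors drove a given conclusion.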

Computer vision – This is the ability of computers to identify objects, scenes, and activities in images. It uses techniques that decompose image analysis into manageable pieces, detect the edges and textures of objects in an image, and compare images with known objects for classification.
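Edge detection, one of the steps mentioned above, is commonly done by sliding small gradient kernels over an image. The sketch below applies the standard Sobel kernels to a tiny synthetic grayscale image in plain Python. It is a minimal illustration only. Production systems would normally rely on an image-processing library such as OpenCV.

```python
import math

GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to left-to-right intensity changes
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to top-to-bottom intensity changes

def sobel_magnitude(img: list[list[float]]) -> list[list[float]]:
    """Gradient magnitude for interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(GX[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            gy = sum(GY[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out

# A 5x6 image: dark left half (0), bright right half (9), i.e. one vertical edge.
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]
for row in sobel_magnitude(image):
    print([round(v) for v in row])
```

The interior rows print as `[0, 0, 36, 36, 0, 0]`. Large values appear only along the boundary between the dark and bright regions, and that boundary is the edge a later classification step would build on.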

Natural language/speech processing – This is the ability of computers to work with text and speech the way humans do. Examples include extracting meaning from text or speech, and generating text that is readable, stylistically natural, and grammatically correct.

Cognitive computing – A relatively new term, favored by IBM, cognitive computing applies knowledge from cognitive science to build an architecture of multiple AI subsystems, including machine learning, natural language processing, vision, and human-computer interaction, to simulate human thought processes with the aim of making high-level decisions in complex situations. According to IBM, the aim is to help humans make better decisions, rather than making the decisions for them.

Data Science

IoT concepts have matured in the last few years and continue to mature. There has been a growing focus on security, analytics at the edge, and the other technologies and platforms needed to make IoT projects successful. However, the element an IoT use case needs most in order to deliver results is data. In fact, the phrase “Internet of Things” omits what is perhaps the most important piece of the puzzle: the data itself.

IoT is all about data. Almost all enterprises collect huge amounts of data in order to base business decisions on it, and the more data they have, the more business insights they can generate. Using data science, enterprises uncover patterns in data that they did not know existed. For example, a driver can learn that they are at risk of an accident from data sent by the car in front of them. Data science is used extensively in such scenarios. Enterprises also use it to build recommendation engines, predict machine behavior, and much more. All of this is possible only when enterprises have enough data for algorithms to produce accurate results. At its core, data science applies Artificial Intelligence, through machine learning algorithms, to large data sets in order to make predictions and discover patterns.

The Link Between Artificial Intelligence and Data Science

The link between data science and Artificial Intelligence is very close. Data science helps AI systems solve problems by linking similar data for future use. Fundamentally, it allows them to find appropriate and meaningful information in huge pools of data faster and more efficiently. Without data science, AI has nothing to work with. AI is a collection of technologies that excel at extracting insights and patterns from large data sets and then making predictions based on that information.

One example is Facebook’s facial recognition system, which over time gathers a large amount of data about existing users and applies the same facial recognition techniques to new users. Another example is Google’s self-driving car, which gathers data from its surroundings in real time and processes it to make intelligent decisions on the road.

A typical non-AI system, such as tax accounting software, relies on human input to work. Its rules are hardcoded manually, and it follows those rules exactly to help prepare taxes. The system improves only when human programmers improve it. Machine learning tools, by contrast, can improve on their own: the machine assesses its own performance against new data and adjusts accordingly.
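The contrast between a hardcoded rule and a model that improves from new data can be shown with a deliberately small example. To keep the sketch short, it replaces the tax example with a hypothetical task: flagging a sensor reading as faulty. The “learning” here is a simple search for the threshold that misclassifies the fewest labeled examples. That is an illustrative assumption, not a production technique. The point is that the learned threshold changes when new data arrives, while the rule changes only when a programmer edits it.

```python
def rule_based_is_faulty(reading: float) -> bool:
    # Hardcoded by a programmer; it changes only if someone edits this line.
    return reading > 100.0

def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Pick the candidate threshold that misclassifies the fewest labeled examples."""
    candidates = sorted({x for x, _ in examples})

    def errors(t: float) -> int:
        return sum((x > t) != label for x, label in examples)

    return min(candidates, key=errors)

data = [(80.0, False), (85.0, False), (95.0, True), (99.0, True), (120.0, True)]
print(learn_threshold(data))   # 85.0

# New labeled readings arrive; re-learning moves the threshold with no code change.
data += [(88.0, False), (90.0, False)]
print(learn_threshold(data))   # 90.0
print(rule_based_is_faulty(95.0))  # False: the fixed rule misses a reading labeled faulty
```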

Importance of Quality Data

The key to AI success is high-quality data. AI can deliver superior results only if it learns from high-quality data that is specific to the use case being automated. For example, if you give an AI system mathematics-related data to solve a physics problem, the results will not be encouraging. The system will learn something, but probably not enough to answer your test questions correctly. As another example, if you train a computer vision system for autonomous vehicles with images of sidewalks mislabeled as streets, the results could be disastrous. Accurate results from machine learning algorithms require high-quality training data, and producing that data requires skilled annotators who carefully label the information you plan to use with your algorithm.

If the data is poor, AI will fail to provide the desired results, because AI uses machine learning to learn from that data, and a system that learns from bad data gives bad results. Organizations using AI must therefore devote substantial resources to ensuring they have enough high-quality data for their AI tools to deliver the desired results. This does not necessarily mean that enterprises need a fully functional, enterprise-level big data platform before embarking on their Artificial Intelligence journey. AI can work on a subset of quality data specific to the use case. Enterprises can create a data store that caters to that use case and use it to train their systems.
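Much of this effort goes into filtering out bad records before training. The sketch below shows one way to do that under several assumptions. Each IoT record is a dictionary with a `device_id`, a timestamp `ts`, a `temperature` in degrees Celsius, and a `label`. The plausible temperature range and the label set are illustrative values for a hypothetical furnace sensor.

```python
from collections import Counter

VALID_LABELS = {"normal", "overheat"}
TEMP_RANGE_C = (-40.0, 1500.0)  # assumed physical range of the hypothetical sensor

def clean(records: list[dict]) -> tuple[list[dict], Counter]:
    """Keep complete, in-range, validly labeled, non-duplicate records; count rejections."""
    kept: list[dict] = []
    rejected: Counter = Counter()
    seen: set[tuple] = set()
    for rec in records:
        temp = rec.get("temperature")
        if rec.get("device_id") is None or temp is None:
            rejected["missing_field"] += 1
        elif not TEMP_RANGE_C[0] <= temp <= TEMP_RANGE_C[1]:
            rejected["out_of_range"] += 1
        elif rec.get("label") not in VALID_LABELS:
            rejected["bad_label"] += 1
        elif (key := (rec["device_id"], rec.get("ts"))) in seen:
            rejected["duplicate"] += 1
        else:
            seen.add(key)
            kept.append(rec)
    return kept, rejected

raw = [
    {"device_id": "f1", "ts": 1, "temperature": 450.0, "label": "normal"},
    {"device_id": "f1", "ts": 1, "temperature": 450.0, "label": "normal"},    # duplicate
    {"device_id": "f2", "ts": 1, "temperature": None, "label": "normal"},     # missing value
    {"device_id": "f3", "ts": 1, "temperature": 9999.0, "label": "normal"},   # sensor glitch
    {"device_id": "f4", "ts": 1, "temperature": 1200.0, "label": "ovrheat"},  # mislabeled
]
kept, rejected = clean(raw)
print(len(kept), dict(rejected))
```

Counting rejections by reason, instead of silently dropping records, shows where the data pipeline needs attention. For example, a rising `bad_label` count points back to the annotation process.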

Today, almost every product on the market, whatever its specialty, claims to have AI features built in. This has caused considerable confusion about which tools to use for AI-driven use cases. However, tools whose primary capability is not AI or machine learning generally provide only generic AI features and can perform only limited AI tasks. Enterprises that want to apply Artificial Intelligence across a broad spectrum of IoT use cases need to look at IoT Cloud Platforms that can apply AI to IoT data for each specific use case. Azure IoT Platform is one such example.

Artificial Intelligence and IoT

We discussed in previous sections that RPA uses predefined rules to perform activities, and in recent years RPA has been highly successful in several use cases. Even some of the world’s hardest problems, such as those solved by NASA, have been tackled with traditional rules and theories. For example, to fly from Earth to Mars, NASA does not aim at where Mars is now. Instead, it aims at where Mars will be roughly nine months later, since the journey takes about that long. The rules NASA applies to predict where Mars will be are based on Newton’s laws of motion and gravitation, which are more than 300 years old. Newton’s theory works for all the planets in our solar system except Mercury. Because Mercury orbits so close to the sun, a small part of the precession of its orbit cannot be explained by Newton’s theory and is accounted for only by Einstein’s general theory of relativity. The stock market offers a similar case. A predefined rule such as “buy a stock when its short-term moving average crosses above its long-term moving average” works quite well.

Such predefined rules were the norm a few years ago, when AI was not yet part of enterprise strategy, but that is no longer the case. AI is becoming mainstream for most organizations, and more and more use cases are proving its benefits. Because of this renewed focus, enterprises have started moving away from traditional rules and rule-based systems. The new norm is: give me data and I will create models by learning from it, and the more I learn, the better the models I will create. This is what Artificial Intelligence is all about. For IoT use cases, Artificial Intelligence is amplifying benefits to such an extent that some enterprises are substantially improving their profit margins, while others are entering new business domains they had never considered before.
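The stock-market rule quoted above is a classic moving-average crossover, and it shows what a predefined rule looks like in code. The sketch below applies it to a short, made-up price series. The window lengths of 3 and 5 are arbitrary illustrative choices.

```python
from typing import Optional

def sma(prices: list[float], window: int) -> list[Optional[float]]:
    """Simple moving average; None until `window` prices are available."""
    return [
        None if i + 1 < window else sum(prices[i + 1 - window : i + 1]) / window
        for i in range(len(prices))
    ]

def buy_signals(prices: list[float], short: int = 3, long: int = 5) -> list[int]:
    """Indices where the short-term average crosses above the long-term average."""
    short_avg, long_avg = sma(prices, short), sma(prices, long)
    signals = []
    for i in range(1, len(prices)):
        if short_avg[i - 1] is None or long_avg[i - 1] is None:
            continue  # not enough history yet to compare averages
        if short_avg[i - 1] <= long_avg[i - 1] and short_avg[i] > long_avg[i]:
            signals.append(i)
    return signals

prices = [10.0, 9.0, 8.0, 7.0, 6.0, 7.0, 8.0, 9.0, 10.0]
print(buy_signals(prices))  # [7]: the day the 3-day average rises above the 5-day average
```

Every part of this rule, including the windows and the crossing condition, was chosen by a person. The AI alternative described above would instead try to learn from historical data which signals actually matter.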

IoT creates a lot of data. Devices send large volumes and varieties of information at high speed, which makes IoT data management very complex. We discuss IoT data management in detail in Chapter 11. Managing such complex data requires robust, tailored data architectures, policies, practices, and procedures that meet the needs of the IoT data life cycle. Traditional big data approaches and infrastructure are not sufficient, so during evaluation enterprises need to verify how IoT Cloud Platforms or IoT Gateways address IoT-specific data challenges.

As the number of IoT devices grows over time, say from 40 to 400,000 devices, how will the IoT data architecture accommodate them?

Much IoT data has a short shelf life, which means actions need to be taken right after devices generate it. For example, if a device records a very high temperature in a furnace, the furnace needs to be switched off immediately, because the temperature reading is useful only if action is taken at that moment. Enterprises need to evaluate the real-time processing and analysis capabilities that IoT product vendors provide.
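Acting on short-lived data usually means evaluating each reading as it arrives, instead of after it has been stored and queried. The sketch below assumes a hypothetical `switch_off` callback and an illustrative limit of 1,200 °C. In a real deployment, similar logic might run on an IoT gateway at the edge or in a stream-processing service.

```python
from typing import Callable, Iterable, Optional

MAX_TEMP_C = 1200.0  # illustrative limit, not a real furnace specification

def monitor(readings: Iterable[float],
            switch_off: Callable[[int, float], None]) -> Optional[int]:
    """Check each reading as it arrives; trigger shutdown on the first unsafe one."""
    for i, temp in enumerate(readings):
        if temp > MAX_TEMP_C:
            switch_off(i, temp)  # act immediately: the reading loses its value if acted on later
            return i
    return None

actions: list[tuple[int, float]] = []
trigger = monitor([980.0, 1100.0, 1250.0, 1300.0], lambda i, t: actions.append((i, t)))
print(trigger, actions)  # 2 [(2, 1250.0)]
```

Because `readings` is any iterable, the same function works on a list in a test and on a live generator of sensor values in production.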

Once IoT data is received, how will it be stored while ensuring there is enough space for new information?
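One common answer is a retention policy that keeps only the most recent readings in fast storage and moves older ones out automatically. The sketch below is a minimal in-memory version built on Python’s `collections.deque` with `maxlen`. The capacity of five readings is purely illustrative. The `archived` list is a hypothetical stand-in for cheaper long-term storage.

```python
from collections import deque

class RecentReadings:
    """Fixed-capacity store: appending beyond capacity evicts the oldest reading."""

    def __init__(self, capacity: int) -> None:
        self._buf: deque[float] = deque(maxlen=capacity)
        self.archived: list[float] = []  # stand-in for cheaper long-term storage

    def add(self, reading: float) -> None:
        if len(self._buf) == self._buf.maxlen:
            self.archived.append(self._buf[0])  # hand off the reading about to be evicted
        self._buf.append(reading)

    def latest(self) -> list[float]:
        return list(self._buf)

store = RecentReadings(capacity=5)
for r in range(8):
    store.add(float(r))
print(store.latest(), store.archived)  # [3.0, 4.0, 5.0, 6.0, 7.0] [0.0, 1.0, 2.0]
```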

How will inputs and outputs flow through devices without becoming clogged?

For IoT use cases where device data needs to be combined with non-device data (e.g., metadata about users and passwords), what solutions exist that can combine such disparate data to make it meaningful?
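At its core, combining device data with non-device data is a join on a shared key. The sketch below assumes that telemetry events and device metadata both carry a `device_id`. The field names, devices, and sites are hypothetical. Events with no matching metadata are returned separately instead of being dropped silently.

```python
telemetry = [
    {"device_id": "pump-7", "ts": 1, "vibration_mm_s": 4.1},
    {"device_id": "pump-9", "ts": 1, "vibration_mm_s": 11.3},
    {"device_id": "pump-x", "ts": 1, "vibration_mm_s": 2.0},   # not registered
]
metadata = {
    "pump-7": {"site": "Plant A", "owner": "maintenance-team-1"},
    "pump-9": {"site": "Plant B", "owner": "maintenance-team-2"},
}

def enrich(events: list[dict], meta: dict[str, dict]) -> tuple[list[dict], list[dict]]:
    """Join telemetry with metadata by device_id; return (enriched, unmatched) events."""
    enriched, unmatched = [], []
    for event in events:
        info = meta.get(event["device_id"])
        if info is None:
            unmatched.append(event)           # keep for investigation, do not drop
        else:
            enriched.append({**event, **info})  # merge device reading with its context
    return enriched, unmatched

enriched, unmatched = enrich(telemetry, metadata)
for e in enriched:
    print(e["site"], e["device_id"], e["vibration_mm_s"])
print("unmatched:", [e["device_id"] for e in unmatched])
```

A raw vibration value means little on its own. Once joined with its site and owner, it becomes something a maintenance team can act on.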

Lessons Learned in Applying AI in IoT Use Cases (Applied IoT)

Artificial Intelligence depends heavily on quality data to make correct predictions. Once an enterprise has enough quality data, AI works roughly as follows. A machine learning model is given a first set of data, performs computations on it, and predicts an outcome. If the outcome is wrong, the model adjusts its internal parameters (its “rule”), applies the adjusted rule to a second set of data, and predicts again. This cycle continues until the predictions are correct. The model is then tested repeatedly on further data to measure how accurate its predictions are. After the rule has been refined across thousands of data sets and large volumes of data, the result is a model that makes correct predictions. This is what machine learning is all about.
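The cycle described above can be sketched with gradient descent: predict, measure the error, adjust the parameters, repeat, and finally check accuracy on data the model has never seen. The sketch fits a one-variable linear model to synthetic data. The data-generating formula, learning rate, and epoch count are illustrative assumptions. In this setting, “adjusting the rule” means updating the numeric parameters `w` and `b`.

```python
import random

random.seed(0)
# Synthetic data from y = 3x + 2 plus noise; the model does not know these values.
data = [(x, 3 * x + 2 + random.gauss(0, 0.1)) for x in (i / 50 for i in range(100))]
random.shuffle(data)
train, held_out = data[:80], data[80:]   # keep unseen data aside to test accuracy

w, b, lr = 0.0, 0.0, 0.1
for epoch in range(500):
    for x, y in train:
        error = (w * x + b) - y   # predict, then measure how wrong the prediction is
        w -= lr * error * x       # adjust parameters to reduce the squared error
        b -= lr * error

mse = sum(((w * x + b) - y) ** 2 for x, y in held_out) / len(held_out)
print(f"w={w:.2f}, b={b:.2f}, held-out MSE={mse:.4f}")
```

The held-out mean squared error corresponds to the step of feeding data “multiple times again to determine the accuracy.” Measuring on data excluded from training avoids rewarding a model that has merely memorized its inputs.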

Applied IoT use case focus areas

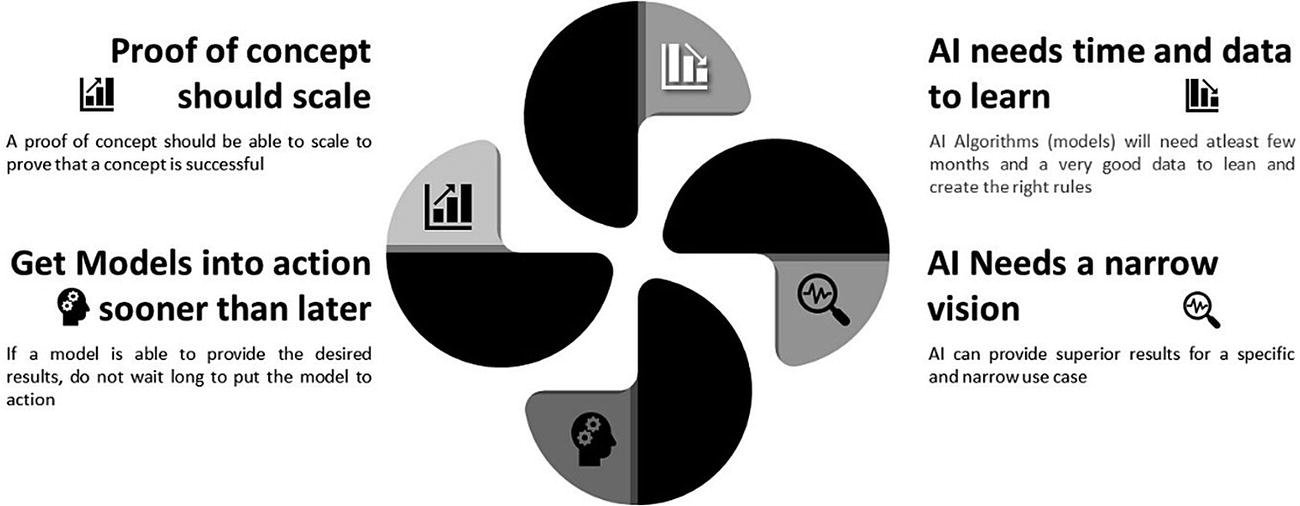

POC Is Not Successful Until It Can Scale

The first rule is that a concept cannot be considered successful merely because a proof of concept (POC) works. A concept is successful only if the proof of concept can scale.

Let me explain this with a case study. One of the top luxury retail brands, with more than 2,000 retail stores in the United States, asked us to create an Artificial Intelligence utility that used machine learning to alert a store manager within five seconds whenever a celebrity entered the store. The store manager could then greet the celebrity and give them a warm welcome. We developed this Applied AI use case and deployed it at five stores in Alaska using 400 cameras. It gave encouraging results, with a success rate of more than 95%. We implemented the POC using facial recognition and machine learning patterns for around 5,000 celebrities, trained on millions of data sets. It worked well in Alaska.

We then used the same technique in Michigan and other locations with 10,000 cameras, and the results were not encouraging. We missed 32% of the celebrities who entered the stores in Michigan, including Detroit, because the data used was not sufficient to recognize all the celebrities in the region. This case study taught us that a proof of concept that works within five seconds with 400 cameras and 5,000 celebrities will not necessarily work with 40,000 cameras and 50,000 celebrities. So the first lesson learned is that a POC is not successful until it has been proven to scale.
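A rough back-of-the-envelope calculation, using only the numbers above, shows why scale changes the problem. It rests on a simplifying assumption: one face per camera per frame, and every face compared against every enrolled celebrity. Real systems use indexing to avoid exhaustive comparison, but the workload still grows far faster than either factor alone.

```python
def comparisons(cameras: int, gallery_size: int) -> int:
    """Face comparisons per frame, assuming one face per camera and exhaustive 1:N matching."""
    return cameras * gallery_size

poc = comparisons(cameras=400, gallery_size=5_000)
target = comparisons(cameras=40_000, gallery_size=50_000)
print(f"POC: {poc:,}  target: {target:,}  growth: {target // poc:,}x")
# POC: 2,000,000  target: 2,000,000,000  growth: 1,000x
```

A 100-fold increase in cameras and a 10-fold increase in celebrities multiply into a 1,000-fold increase in work, all of which must still fit inside the same five-second alert budget.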

AI Needs Enough Data and Time to Learn

To predict and provide accurate results, AI models require a lot of data and time. In our experience, a medium to complex Applied AI use case needs at least six to nine months of learning time to reach 95% accuracy. A manufacturing customer, Seagate, asked us to deploy devices across its entire production line so that it could receive real-time insights into product line failures. Traditionally, if one unit failed, the whole production line stopped, which could cause losses of several hundred dollars. The customer’s key requirement was that we must not report these failures too early or too late. They needed to be informed just before an incident was about to occur. To achieve such near-real-time failure alerts, we needed an enormous amount of data, and the model needed several months of learning to predict failures. After millions of IoT data sets had been fed to the machine learning model over more than nine months, it was able to predict failures about 85% of the time.
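The customer’s requirement of an alert that is neither too early nor too late can be made measurable by scoring each alert against the time the failure actually occurred. In the sketch below, the 10-to-60-minute lead-time window is a hypothetical service-level choice, not the customer’s actual specification.

```python
from datetime import datetime, timedelta

MIN_LEAD = timedelta(minutes=10)   # assumed: less warning than this is too late to act
MAX_LEAD = timedelta(minutes=60)   # assumed: more warning than this is too early to be useful

def score_alert(alert_time: datetime, failure_time: datetime) -> str:
    """Classify an alert by how far ahead of the actual failure it fired."""
    lead = failure_time - alert_time
    if lead < MIN_LEAD:
        return "too late"   # includes alerts that fired after the failure
    if lead > MAX_LEAD:
        return "too early"
    return "on time"

failure = datetime(2024, 1, 15, 14, 0)
for minutes_before in (120, 30, 2):
    print(minutes_before, score_alert(failure - timedelta(minutes=minutes_before), failure))
```

Scoring alerts this way turns a vague goal into a number that can be tracked while the model learns. The model’s success rate is the share of failures that received an “on time” alert.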

Be Specific on What to Achieve

AI is most effective when it is given one specific, narrow task to perform.

We were called to a hospital in a city with a high incidence of tuberculosis (TB). The government had decided to test every person in the city over 40 years of age for TB. Although chest scans were being performed on a mass scale across the city, hospitals faced a challenge: after a chest X-ray was taken, it took two weeks for doctors to confirm whether a person was TB positive or negative. For positive cases, the risk was that the infected person would transmit the disease to others during those two weeks. AI was applied to solve this problem. We asked doctors to share all patient X-rays and categorized them into two buckets: TB positive (patients infected with TB) and TB negative (everyone else). Machine learning models were run on thousands of X-rays pulled from labs across the city and were trained over and over on the positive and negative reports. After learning from millions of X-rays, the AI model was finally able to predict from an X-ray, in just one second, whether a person was TB positive or negative. The algorithm achieved a 95% success rate.
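A single “success rate” can hide very different behavior on positive and negative cases. This matters here, because a missed TB case means continued transmission. The sketch below computes accuracy, sensitivity (the share of TB-positive cases caught), and specificity (the share of TB-negative cases correctly cleared) from labels and predictions. The ten example labels are made up for illustration and are not the hospital’s data.

```python
def evaluate(actual: list[bool], predicted: list[bool]) -> dict[str, float]:
    """Confusion-matrix metrics for a binary classifier (True = TB positive)."""
    tp = sum(a and p for a, p in zip(actual, predicted))
    tn = sum(not a and not p for a, p in zip(actual, predicted))
    fp = sum(not a and p for a, p in zip(actual, predicted))
    fn = sum(a and not p for a, p in zip(actual, predicted))
    return {
        "accuracy": (tp + tn) / len(actual),
        "sensitivity": tp / (tp + fn),   # share of infected patients the model catches
        "specificity": tn / (tn + fp),   # share of healthy patients correctly cleared
    }

actual    = [True, True, False, False, False, False, False, False, False, False]
predicted = [True, False, False, False, False, False, False, False, False, False]
print(evaluate(actual, predicted))
```

In this toy example, accuracy is 90%, yet the model misses half of the infected patients (sensitivity 0.5). Reporting both numbers makes the narrow goal, catching TB from an X-ray, directly measurable. The sketch assumes that both classes appear in `actual`; otherwise one of the ratios divides by zero.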

So the key learning from this case study is that enterprises need to be very specific about what they want AI to achieve (predict). In the hospital case study, we used only X-rays to predict tuberculosis in patients. We did not use MRI or CT scans.

Get a Model into Action If It Works Fine, Instead of Aspiring to Do More AI

If a model works well, it is always recommended to put it into action for the use case.

In Copenhagen, I was shown a mobile app that lists how many food stores within 100 kilometers of your location have food items that will expire in the next two days. Using this data, stores offer discounts of 50% to 70% on the expiring items. I found around 2,000 food items within a 7-kilometer radius of my hotel. When I clicked a specific store, the app listed all the expiring items in that store, and when I clicked a specific item, it listed all nearby stores where that item was available. This simple app did not require AI, because a few simple rules were sufficient. The company that developed it initially aspired to build the app with AI algorithms. It soon realized that a few simple rules were enough to extract expiry date information from the food items and share it with users. In other words, once you have spent enough time collecting data and are seeing results, it is best to implement the rule instead of forcing yourself to do Applied AI.
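The app’s core logic needs no learning at all. It is a distance filter plus a date comparison. The sketch below assumes each item record carries its store’s latitude and longitude and an expiry date, and it uses the haversine great-circle formula to compute distances. The store names, coordinates, and dates are illustrative. The 7 km radius and two-day window come from the example above.

```python
import math
from datetime import date, timedelta

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def expiring_nearby(items: list[dict], here: tuple[float, float], today: date,
                    radius_km: float = 7.0, days: int = 2) -> list[dict]:
    """Rule: the item is within range AND expires within `days` days (not already expired)."""
    cutoff = today + timedelta(days=days)
    return [it for it in items
            if haversine_km(*here, it["lat"], it["lon"]) <= radius_km
            and today <= it["expires"] <= cutoff]

today = date(2024, 6, 1)
here = (55.6761, 12.5683)  # central Copenhagen
items = [
    {"store": "Store A", "item": "yogurt", "lat": 55.6800, "lon": 12.5700, "expires": date(2024, 6, 2)},
    {"store": "Store B", "item": "bread",  "lat": 55.6800, "lon": 12.5700, "expires": date(2024, 6, 10)},
    {"store": "Store C", "item": "cheese", "lat": 56.1629, "lon": 10.2039, "expires": date(2024, 6, 1)},  # far outside the radius
]
for it in expiring_nearby(items, here, today):
    print(it["store"], it["item"], it["expires"])  # Store A yogurt 2024-06-02
```

Two comparisons cover the whole use case. Training a model to reproduce this behavior would add cost and uncertainty without adding value.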

The lesson learned from this example is that enterprises do not need to force AI onto the data they collect, even when they have been funded to do AI. If you can already achieve good results with the data you have by using predefined rules, use those rules to achieve the business outcome.

Summary

In this chapter, we discussed the key differences between robotic process automation and Artificial Intelligence. We also discussed the subfields of Artificial Intelligence, such as machine learning, deep learning, computer vision, natural language/speech processing, and cognitive computing, which aim to build machines that can perform tasks that once required human intelligence.

This chapter also explained why big data matters to Artificial Intelligence. Data science helps AI systems solve problems by linking similar data for future use. Fundamentally, it allows them to find appropriate and meaningful information in huge pools of data faster and more efficiently. It goes without saying that enterprises can succeed in applying Artificial Intelligence to IoT use cases only if they have quality data and use it in the right way.

We also discussed four mantras for applying AI in IoT use cases:

1. An IoT initiative cannot be treated as successful just because a POC has succeeded; the POC must be able to scale.

2. Give AI models enough data and time to learn.

3. Define a specific goal for AI models.

4. Start using models as soon as they deliver results, instead of aspiring to do more AI.

It is essential for enterprises to select Smart IoT Gateways and IoT Cloud Platforms that support Artificial Intelligence. Second, they need to choose IoT use cases carefully, focusing on those where AI can play a role.

In the next chapter, we discuss the importance of data and analytics in an IoT ecosystem, followed by the big data reference model.