I’m going to now share with you the shocking truth about computers—computers are really, really stupid. Many people get enamored with these devices and start to believe things about computers that just aren’t true. They may see some amazing graphics, some fantastic data manipulation, and some outstanding artificial intelligence and assume that there is something amazing happening inside the computer. In truth, there is something amazing, but it isn’t the intelligence of the computer.

2.1 What Computers Can Do

Computers can actually do very few things. Now, the modern computer instruction set is fairly rich, but even as the number of instructions that a computer knows keeps growing, the additions are primarily either (a) faster versions of something you could already do, (b) computer security related, or (c) hardware interface related. Ultimately, as far as computational power goes, all computers boil down to the same basic instructions.

In fact, one computer architecture, invented by Farhad Mavaddat and Behrooz Parhami, only has one instruction, yet can still do any computation that any other computer can do.1

Computationally, a computer can do only the following:

- Do basic integer arithmetic
- Do memory access
- Compare values
- Change the order of instruction execution based on a previous comparison

If computers are this limited, then how are they able to do the amazing things that they do? The reason that computers can accomplish such spectacular feats is that these limitations allow hardware makers to make the operations very fast. Most modern desktop computers can process over a billion instructions every second. Therefore, what programmers do is leverage this massive pipeline of computation to combine simple computations into a masterpiece.

However, at the end of the day, all that a computer is really doing is really fast arithmetic. In the movie Short Circuit, two of the main characters have this to say about computers: “It’s a machine… It doesn’t get happy. It doesn’t get sad. It doesn’t laugh at your jokes. It just runs programs.” This is true of even the most advanced artificial intelligence. In fact, the failure to understand this concept lies at the core of current misunderstandings about the present and future of artificial intelligence.2

2.2 Instructing a Computer

The key to programming is to learn to rethink problems in such simple terms that they can be expressed with simple arithmetic. It is like teaching someone to do a task, but they only understand the most literal, exact instructions and can only do arithmetic.

There is an old joke about an engineer whose wife told him to go to the store. She said, “Buy a gallon of milk. If they have eggs, get a dozen.” The engineer returned with 12 gallons of milk. His wife asked, “Why 12 gallons?” The engineer responded, “They had eggs.” The punchline of the joke is that the engineer had over-literalized his wife’s statements. Obviously, she meant that he should get a dozen eggs, but that requires context to understand.

The same thing happens in computer programming. The computer will hyper-literalize every single thing you type. You must expect this. Most bugs in computer programs come from programmers not paying enough attention to the literal meaning of what they are asking the computer to do. The computer can’t do anything except the literal meaning.

Learning to program in assembly is helpful because it makes the hyper-literalness with which the computer interprets a program more obvious to the programmer. In any language, though, when tracking down bugs, the most important thing to do is to track what the code is actually saying, not what we meant by it.

Consider the following instructions, given to a robot that is sent out to buy corn:

1. Go to the store.
2. If the store has corn, buy the corn and return home.
3. If the store doesn’t have corn, choose a store that you haven’t visited yet and repeat the process.

That sounds pretty specific. The problem is, what happens if no one has corn? We haven’t specified to the robot any other way to finish the process. Therefore, if there was a corn famine or a corn recall, the robot will continue searching for a new store forever (or until it runs out of electricity).
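The gap in the robot's instructions can be sketched in code. The following is a minimal Python illustration, not something from the original instructions: the function name `buy_corn` and the `stores` dictionary (store name mapped to whether it has corn) are hypothetical. The only real change from the three steps is that the search has an explicit way to finish when every store has been tried.

```python
def buy_corn(stores):
    """Return the name of the first store that has corn, or None if none does.

    `stores` is a hypothetical dict of store name -> True/False (has corn).
    """
    for name, has_corn in stores.items():   # go to a store not yet visited
        if has_corn:
            return name                      # buy the corn and return home
    # Every store has been visited and nobody had corn. The original three
    # steps had no rule for this case, so the robot would never stop.
    return None


print(buy_corn({"first store": False, "second store": True}))
print(buy_corn({"first store": False, "second store": False}))
```

The first call returns `second store`; the second returns `None` rather than searching forever.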

When doing low-level programming, the consequences that you have to prepare for multiply. If you want to open a file, what happens if the file isn’t there? What happens if the file is there, but you don’t have access to it? What if you can read it but can’t write to it? What if the file is across a network, and there is a network failure while trying to read it?
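As a rough sketch of what preparing for these cases looks like, here is a small Python function that tries to read a file and reports which kind of failure occurred. The function name `read_file` and the status strings are made up for this example; the exception types are Python's standard ones. In assembly the same cases exist, but you have to check for each of them yourself.

```python
import os
import tempfile


def read_file(path):
    """Try to read a file, reporting each way the attempt can fail."""
    try:
        with open(path, "rb") as f:
            return ("ok", f.read())
    except FileNotFoundError:
        return ("missing", None)        # the file isn't there
    except PermissionError:
        return ("no access", None)      # the file is there, but we may not read it
    except OSError:
        return ("other failure", None)  # e.g., a disk or network error while reading


# Usage: one path that does not exist and one that does.
with tempfile.TemporaryDirectory() as directory:
    print(read_file(os.path.join(directory, "nope.txt")))
    good_path = os.path.join(directory, "data.txt")
    with open(good_path, "wb") as f:
        f.write(b"hello")
    print(read_file(good_path))
```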

The computer will only do exactly what you tell it to. Nothing more, nothing less. That proposition is equally freeing and terrifying. The computer doesn’t know or care if you programmed it correctly, but will simply do what you actually told it to do.

2.3 Basic Computer Organization

Conceptually, a computer is made up of the following parts:

- The CPU (also referred to as the processor or microprocessor)
- Working memory
- Permanent storage
- Peripherals
- System bus

Let’s look at each of these in turn.

The CPU (central processing unit) is the computational workhorse of your computer. The CPU itself is divided into components, but we will deal with that in Section 2.7. The CPU handles all computation and essentially coordinates all of the tasks that occur in a computer. Many computers have more than one CPU, or they have one CPU that has multiple “cores,” each of which is more or less acting like a distinct CPU. Additionally, each core may be hyperthreaded, which means the core itself to some extent acts as more than one core.

The permanent storage is your hard drive(s), whether internal or external, plus USB sticks, or whatever else you store files on. This is distinct from the working memory, which is usually referred to as RAM, which stands for “random access memory.”3 The working memory is usually wiped out when the computer gets turned off.

Everything else connected to your computer gets classified as a peripheral. Technically, permanent storage devices are peripherals, too, but they are sufficiently foundational to how computers work that I have treated them as their own category. Peripherals are how the computer communicates with the world. This includes the graphics card, which transmits data to the screen; the network card, which transmits data across the network; the sound card, which translates data into sound waves; the keyboard and mouse, which allow you to send input to the computer; etc.

Everything that is connected to the CPU connects through a bus, or system bus. Buses handle communication between the various components of the computer, usually between the CPU and other peripherals and between the CPU and main memory. The speed and engineering of the various computer buses are actually critical to the computer’s performance, but their operation is sufficiently technical and behind the scenes that most people don’t think about it. The main memory often gets its own bus (known as the front-side bus) to make sure that communication is fast and unhindered.

Physically, most of these components are present on a computer’s motherboard, which is the big board inside your desktop or laptop. The motherboard often has other functions as well, such as controlling fans, interfacing with the power button, etc.

2.4 How Computers See Data

As mentioned in the introduction, computers translate everything into numbers. To understand why, remember that computers are just electronic devices. That is, everything that happens in a computer is ultimately reducible to the flow of electricity. In order to make that happen, engineers had to come up with a way to represent things with flows of electricity.

What they came up with is to have different voltages represent different symbols. Now, you could do this in a lot of ways. You could have 1 volt represent the number 1, 2 volts represent the number 2, etc. However, devices have a fixed voltage, so we would have to decide ahead of time how many digits we want to allow on the signal and be sure sufficient voltage is available.

To simplify things, engineers ultimately decided to only make two symbols. These can be thought of as “on” (voltage present) and “off” (no voltage present), “true” and “false,” or “1” and “0.” Limiting to just two symbols greatly simplifies the task of engineering computers.

You may be wondering how these limited symbols add up to all the things we store in computers. First, let’s start with ordinary numbers. You may be thinking, if you only have “0” and “1,” how will we represent numbers with other digits, like 23? The interesting thing is that you can build a number system out of any number of distinct digits. We use ten digits (0–9), but we didn’t have to. The Ndom language uses six digits. Some languages use as many as 27.

Since the computer uses two digits, the system is known as binary. Each digit in the binary system is called a bit, which simply means “binary digit.” To understand how to count in binary, let’s think a little about how we count in our own system, decimal. We start with 0, and then we progress through each symbol until we hit the end of our list of symbols (i.e., 9). Then what happens? The next digit to the left increments by one, and the ones place goes back to zero. As we continue counting, we increment the rightmost digit over and over, and, when it goes past the last symbol, we keep flipping it back to zero and incrementing the next one to the left. If that one flips, we again increment the one to the left of that digit, and so forth.

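Binary works the same way, only with two symbols (0 and 1) instead of ten. Here is how to count from zero to twelve in binary, with the decimal value on the left and the binary form on the right:

0. 0
1. 1
2. 10 (we overflowed the ones position, so we increment the next digit to the left and the ones position starts over at zero)
3. 11
4. 100 (we overflowed the ones position, so we increment the next digit to the left, but that flips that one to zero, so we increment the next one over)
5. 101
6. 110
7. 111
8. 1000
9. 1001
10. 1010
11. 1011
12. 1100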

As you can see, the procedure is the same. We are just working with fewer symbols.
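The counting procedure described above (increment the rightmost digit, and when a digit overflows, flip it to zero and increment the one to its left) can be written out directly. This is only an illustrative Python sketch; the function name `increment` and the choice to hold the digits in a list are assumptions made for the example.

```python
def increment(bits):
    """Add one to a number held as a list of binary digits (leftmost digit first)."""
    bits = list(bits)
    i = len(bits) - 1                 # start at the rightmost (ones) position
    while i >= 0 and bits[i] == 1:    # this position overflows...
        bits[i] = 0                   # ...so it flips back to zero
        i -= 1                        # and we move one digit to the left
    if i >= 0:
        bits[i] = 1                   # increment the first digit that did not overflow
    else:
        bits.insert(0, 1)             # every digit overflowed, so a new digit is needed
    return bits


value = [0]
for n in range(13):
    print(n, "".join(str(b) for b in value))
    value = increment(value)
```

Running it prints the decimal values 0 through 12 next to the same binary forms listed above.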

Now, in computing, these values have to be stored somewhere. In our imagination, we can picture any number of zeroes to the left (and therefore our system can accommodate an infinite number of values), but in physical computers, all of these numbers have to be stored in circuits somewhere. Therefore, computer engineers group bits together into fixed sizes.

A byte is a grouping of 8 bits together. A byte can store a number between 0 and 255. Why 255? Because that is the value of 8 bits all set to “1”: 11111111.

Single bytes are pretty limiting. However, for historic reasons, this is the way that computers are organized, at least conceptually. When we talk about how many gigabytes of RAM a computer has, we are asking how many billions (giga-) of bytes (groups of 8 bits together) the computer has in its working memory (which is what RAM is).

Most computers, however, fundamentally use larger groupings. When we talk about a 32-bit or a 64-bit computer, we are talking about the number of bits that the computer naturally groups together when dealing with numbers. A 64-bit computer, then, can naturally handle numbers as large as 64 bits. This is a number between 0 and 18,446,744,073,709,551,615.
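The upper limits quoted for a byte and for a 64-bit number both come from the same rule: the largest value is the one with every bit set to 1. A short Python sketch makes the arithmetic concrete; the helper name `max_unsigned` is made up for this example.

```python
def max_unsigned(bits):
    """Largest value that fits in the given number of bits (all bits set to 1)."""
    return (1 << bits) - 1


for width in (8, 16, 32, 64):
    print(f"{width:2d} bits: 0 to {max_unsigned(width):,}")
```

For 8 bits this gives 255, and for 64 bits it gives 18,446,744,073,709,551,615.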

Now, ultimately, you can choose any size of number you want. You can have bigger numbers, but, generally, the processor is not predisposed to working with the numbers in that way. What it means to have a 64-bit computer is that the computer can, in a single instruction, add together two 64-bit numbers. You can still add 64-bit numbers with a 32-bit or even an 8-bit computer; it just takes more instructions. For instance, on a 32-bit computer, you could split the 64-bit number up into two pieces. You then add the rightmost 32 bits and then add the leftmost 32 bits (and account for any carrying between them).
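The split-and-carry approach described above can be sketched as follows. Python integers are not limited to 32 bits, so this example imitates a 32-bit machine by masking every intermediate result; the function name and the `MASK32` constant are assumptions made for illustration.

```python
MASK32 = 0xFFFFFFFF  # keeps only the rightmost 32 bits of a value


def add64_with_32bit_pieces(a, b):
    """Add two 64-bit numbers using only 32-bit-sized additions and a carry."""
    a_low, a_high = a & MASK32, (a >> 32) & MASK32
    b_low, b_high = b & MASK32, (b >> 32) & MASK32

    low_sum = a_low + b_low
    carry = low_sum >> 32                       # 1 if the low half overflowed, else 0
    low = low_sum & MASK32

    high = (a_high + b_high + carry) & MASK32   # a carry out of the top bit is dropped

    return (high << 32) | low


print(hex(add64_with_32bit_pieces(0x00000000FFFFFFFF, 1)))  # 0x100000000
```

In the usage line, the rightmost 32 bits overflow, and the carry is what makes the leftmost half come out as 1.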

Note that even though computers store numbers as bits, we rarely refer to the numbers in binary form unless we have a specific reason. However, knowing that they are bits arranged into bytes (or larger groupings) helps us understand certain limitations of computers. Oftentimes, you will find values in computing that are restricted to the values 0–255. If you see this happen, you can think, “Oh, that probably means they are storing the value in a single byte.”

2.5 It’s Not What You Have, It’s How You Use It

So, hopefully by now you see how computers store numbers. But don’t computers store all sorts of other types of data, too? Aren’t computers storing and processing words, images, sounds, and, for that matter, negative or even non-integer numbers?

This is true, but the computer is storing all of these things as numbers. For instance, to store letters, the letters are actually converted into numbers using ASCII (American Standard Code for Information Interchange) or Unicode codes (which we will discuss more later). Each character gets a value, and words are stored as consecutive values.

Images are also values. Each pixel on your screen is represented by a number indicating the color to display. Sound waves are stored as a series of numbers.

So how does the computer know which numbers are which? Fundamentally, the computer doesn’t. All of these values look exactly the same when stored in your computer—they are just numbers.

What makes them letters or numbers or images or sounds is how they are used. If I send a number to the graphics card, then it is a color. If I add two numbers, then they are numbers. If I store what you type, then those numbers are letters. If I send a number to the speaker, then it is a sound. It is the burden of the programmer to keep track of which numbers mean which things and to treat them accordingly.
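To make this concrete, here is a small Python sketch that takes the same four bytes and uses them in four different ways. The byte values are arbitrary, and the byte order used when combining them into one number (least significant byte first, as x86-64 does) is an assumption of the example.

```python
data = bytes([72, 105, 33, 0])   # four arbitrary byte values

as_numbers = list(data)                           # four small integers
as_text = data[:3].decode("ascii")                # the first three, used as letters
as_one_integer = int.from_bytes(data, "little")   # all four, used as one 32-bit number
as_pixel = tuple(data[:3])                        # the first three, used as (red, green, blue)

print(as_numbers)
print(as_text)
print(as_one_integer)
print(as_pixel)
```

Nothing in `data` says which of these readings is the right one. That depends entirely on what the program does with the values.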

This is why files have extensions like .docx, .png, .mov, or .xlsx. These extensions tell the computer how to interpret what is in the file. These files are themselves just long strings of numbers. Programs simply read the filename, look at the extension, and use that to know how to use the numbers stored inside.

There’s nothing preventing someone from writing a program that takes a word processing file, treats the numbers as pixel colors, and sends them to the screen (it usually looks like static) or sends them to the speakers (it usually sounds like static or buzzing). But, ultimately, what makes computer programs useful is that they recognize how the numbers are organized and treat them in an appropriate manner.

If this sounds complicated, don’t worry about it. We will start off with very simple examples in the next chapter.

What’s even more amazing, though, is that the computer’s instructions are themselves just numbers as well. This is why your computer’s memory can be used to store both your files and your programs. Both are just special sequences of numbers, so we can store them all using the same type of hardware. Just like the numbers in the file are written in a way that our software can interpret them, the numbers in our programs are written in a special way so that the computer hardware can interpret them properly.

2.6 Referring to Memory

Since a computer has billions of bytes of memory (or more), how do we figure out which specific piece of memory we are referring to? This is a harder question than it sounds like. For the moment, I will give you a simplified understanding which we will build upon later on.

Have you ever been to a post office and seen an array of post-office boxes? Or been to a bank and seen a whole wall of safety deposit boxes? What do they look like?

Usually, each box is the same size, and each one has a number on it. These numbers are arranged sequentially. Therefore, box 2345 is right next to box 2344. I can easily find any box by knowing the number on the outside of the box.

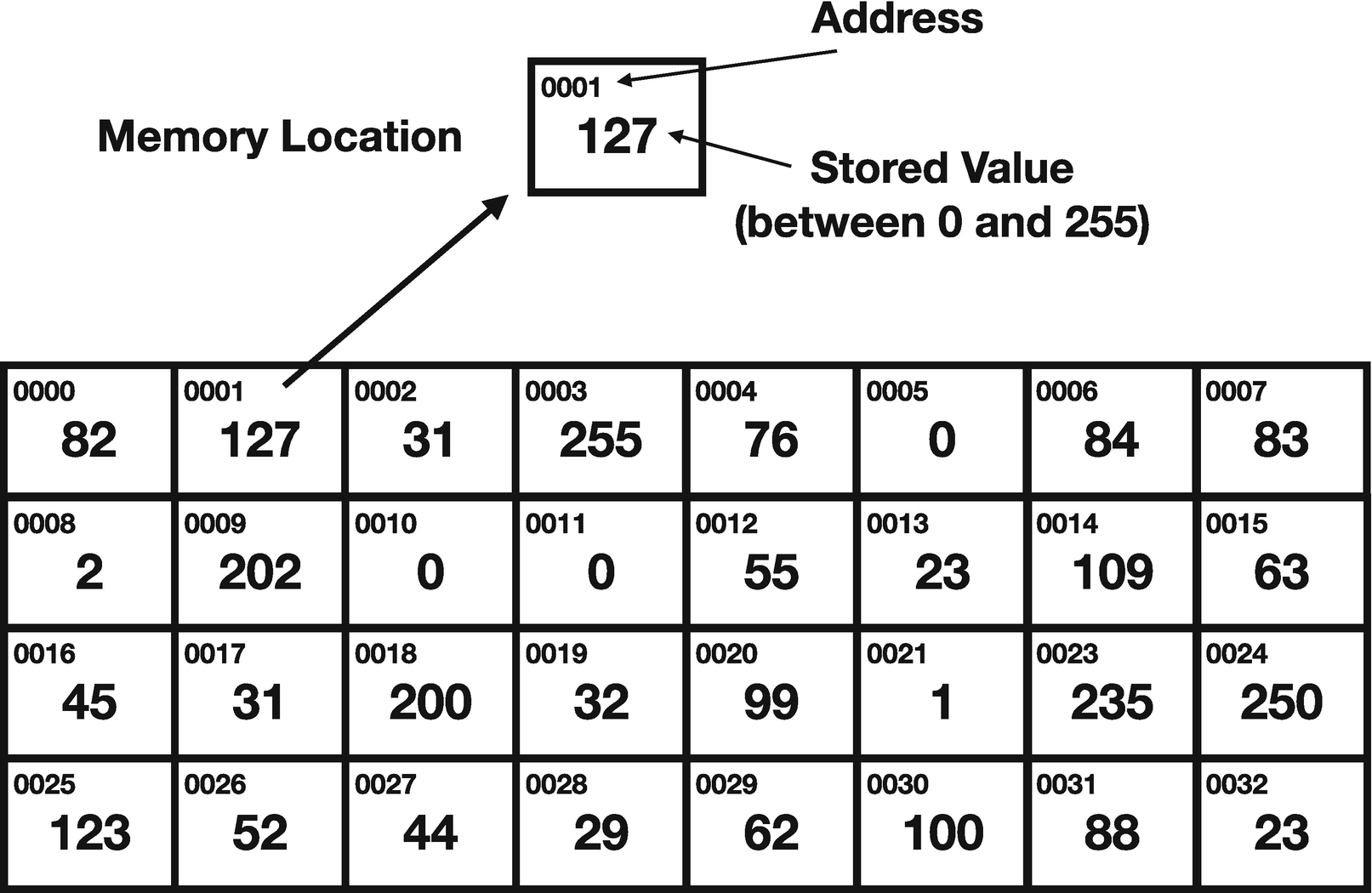

This is how memory is usually organized. You can think of memory as boxes, where each box is 1 byte big. Each memory box has an address, which tells the computer how to find it. I can ask for the byte that is at address 279,935 or at address 2,341,338. If I know the address, I can go find the value in that location. Because they are bytes, each value will be between 0 and 255. Figure 2-1 gives a visual for what this looks like.

Now, since we are on 64-bit computers, we can actually load bigger values. We will typically be loading 8 bytes at a time. So, instead of asking for a single byte, we will be asking for 8 bytes, starting with the one at the given address. So if we load from address 279,935, we will get all of the bytes from address 279,935 to 279,942.

Groupings of different sizes go by the following names:

- 1 byte (8 bits): Typically just referred to as a byte
- 2 bytes (16 bits): Known as a “word” or a “short”
- 4 bytes (32 bits): Known as a “double-word” or an “int”
- 8 bytes (64 bits): Known as a “quadword”4

Figure 2-1. Conceptual View of Memory

This figure shows the conceptual layout of 32 bytes of memory. Each location contains a value between 0 and 255 (which is 1 byte) and is labeled by an address, which is how the computer knows where to find it. The actual values here do not have any particular meaning; they are shown only to give examples of byte values.
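The box-and-address picture of memory can be imitated with a plain array of bytes, where the index plays the role of the address. This is only a sketch: the 32-byte `memory`, its contents, and the `load` helper are invented for the example, and the byte order (least significant byte first, which is what x86-64 uses) is something the text above has not yet discussed.

```python
# A hypothetical 32-byte memory: each index is an address, each entry one byte.
memory = bytearray(range(100, 132))


def load(address, size):
    """Read `size` consecutive bytes starting at `address` as one number.

    Assumes little-endian byte order (least significant byte first).
    """
    if address < 0 or address + size > len(memory):
        raise IndexError("address out of range")
    return int.from_bytes(memory[address:address + size], "little")


print(load(5, 1))            # a single byte: the value stored at address 5
print(list(memory[5:13]))    # the 8 bytes that a quadword load at address 5 covers
print(load(5, 8))            # those same 8 bytes combined into one 64-bit number
```

A load of 8 bytes from address 5 covers addresses 5 through 12, just as a load from address 279,935 covers 279,935 through 279,942.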

2.7 The Structure of the CPU

The CPU itself has an organization worth considering. Modern CPUs are actually extremely complex, but their general conceptual architecture has remained stable over time.

Conceptually, a CPU is made up of the following components:

- Registers
- Control unit
- Arithmetic and logic unit
- Memory management unit
- Caches

Registers are tiny blocks of memory inside the processor itself. These are bits of data that the processor can access directly without waiting. Most registers can be used for any purpose the programmer wishes. Essentially what happens is that programs load data from memory into the registers, then process the data in the registers using various instructions, and then write the contents of those registers back out to memory.

Some registers also have special purposes, such as pointing to the next instruction to be carried out, holding some sort of processor status, or being able to be used for some special processor function. Registers are standardized; that is, the set of available registers is defined by the CPU architecture, so you won’t get a different set of registers if you use an AMD chip or an Intel chip, as long as they are both implementing the x86-64 instruction set architecture.

Programming in assembly language involves a lot of register access.

The control unit sets the pacing for the chip. It handles the coordination of all the different parts of the chip. It handles the clock, which doesn’t tell time, but is more like a drum beat or a pacemaker—it makes sure that everything operates at the same speed.

The arithmetic and logic unit (ALU) is where the actual processing takes place. It does the additions, subtractions, comparisons, etc. The ALU is normally wired so that basic operations can be done with registers extremely quickly (typically in a single clock cycle).

The memory management unit is a little more complex, and we will deal with it further in Chapter 14. However, in a simple fashion, it manages the way that the processor sees and understands memory addresses.

Finally, CPUs usually have a lot of different caches. A cache is a piece of memory that holds other memory closer to the CPU. For instance, instructions are usually carried out in the same order that they are stored in memory. Therefore, rather than wait for the control unit to request the next instruction and then wait for the instruction to arrive from main memory, the CPU can preload a segment of memory that it thinks will be useful into a cache. That way, when the CPU asks for the next instruction, it doesn’t have to wait on the system bus to deliver the instruction from memory; it can just read it directly from the cache. CPUs implement all sorts of caches, each of which caches different things for different reasons, and they may even have different access speeds.

The key to understanding CPU architecture is to realize that the goal is to make maximal use of the CPU within the limits of computer chip engineering.

2.8 The Fetch-Execute Cycle

The way that the processor runs programs is through the fetch-execute cycle. The computer operates by reading your program one instruction at a time. It knows which instruction to read through a special register known as the instruction pointer (or IP), which is also known as the program counter (or PC).

On each pass through the cycle, the processor does the following:

1. Read the instruction from the memory address specified by the instruction pointer.
2. Decode the instruction (i.e., figure out what the instruction means).
3. Advance the instruction pointer to the next instruction.5
4. Perform the operation indicated by the instruction.

The operations themselves are simple. A typical instruction might do one of the following:

- Load a value from memory into a register.
- Store a value from a register into memory.
- Do a single arithmetic operation.
- Compare two values.
- Go to a different location in the code (i.e., modify the instruction pointer) based on the result of a previous comparison.

You might be surprised, but those are pretty much all the instructions you really need in a computer.
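To see how far those few kinds of instructions go, here is a toy machine written in Python that follows the four steps of the fetch-execute cycle. Everything about it is invented for illustration: the instruction names (`load`, `store`, `add`, `sub`, `cmp`, `jne`), the register names, and the use of tuples rather than numbers to hold instructions. None of this is real x86-64. The sample program adds up the numbers from the value at address 0 down to 1.

```python
def run(program, memory):
    """Run a toy program with a fetch-execute loop and return the memory."""
    registers = {"r0": 0, "r1": 0, "r2": 0, "r3": 0}
    equal = False        # result of the most recent comparison
    ip = 0               # instruction pointer

    while ip < len(program):
        instruction = program[ip]      # 1. fetch the instruction that ip points to
        op, *args = instruction        # 2. decode it
        ip += 1                        # 3. advance the instruction pointer
        # 4. perform the operation
        if op == "load":               # memory -> register
            registers[args[0]] = memory[args[1]]
        elif op == "store":            # register -> memory
            memory[args[1]] = registers[args[0]]
        elif op == "add":              # a single arithmetic operation
            registers[args[0]] += registers[args[1]]
        elif op == "sub":
            registers[args[0]] -= registers[args[1]]
        elif op == "cmp":              # compare two values
            equal = registers[args[0]] == registers[args[1]]
        elif op == "jne":              # jump if the last comparison was "not equal"
            if not equal:
                ip = args[0]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


SUM_PROGRAM = [
    ("load", "r0", 0),    # 0: r0 = counter (from address 0)
    ("load", "r1", 1),    # 1: r1 = running total (from address 1)
    ("load", "r2", 2),    # 2: r2 = the constant 1
    ("load", "r3", 3),    # 3: r3 = the constant 0
    ("add", "r1", "r0"),  # 4: total += counter
    ("sub", "r0", "r2"),  # 5: counter -= 1
    ("cmp", "r0", "r3"),  # 6: is the counter zero yet?
    ("jne", 4),           # 7: if not, go back to instruction 4
    ("store", "r1", 1),   # 8: write the total to address 1
]

# Memory layout: [counter, total, constant 1, constant 0]
print(run(SUM_PROGRAM, [5, 0, 1, 0])[1])  # 15
```

In keeping with the theme of this chapter, the toy machine does exactly what it is told. If address 0 starts out holding 0, the counter steps past zero to -1 and the loop never ends.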

You may be wondering how you get from instructions like that to doing things like displaying graphics in a computer. Well, graphics are composed of individual dots called pixels. Each pixel has a certain amount of red, green, and blue in it. You can represent these amounts with numbers. The graphics card has memory locations available for each pixel on your screen. Therefore, to display a graphic onscreen, you need only to move the color values to the correct places in memory.
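As a simplified sketch of that idea, the following Python code treats a block of bytes as if it were the graphics card's memory. The screen size, the three-bytes-per-pixel layout, and the names `framebuffer` and `set_pixel` are all assumptions made for the example; real graphics hardware uses a variety of layouts.

```python
WIDTH, HEIGHT = 4, 3          # a tiny hypothetical screen
BYTES_PER_PIXEL = 3           # one byte each for red, green, and blue

# Stand-in for the graphics card's memory: every pixel starts out black.
framebuffer = bytearray(WIDTH * HEIGHT * BYTES_PER_PIXEL)


def set_pixel(x, y, red, green, blue):
    """Move the three color values into the memory locations for pixel (x, y)."""
    offset = (y * WIDTH + x) * BYTES_PER_PIXEL
    framebuffer[offset:offset + 3] = bytes([red, green, blue])


set_pixel(1, 2, 255, 128, 0)   # an orange pixel in column 1, row 2
print(list(framebuffer))
```

Drawing is nothing more than working out the right address and storing numbers there.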

Likewise, let’s think about input. When someone moves their mouse, this modifies a value in memory. This memory location can be loaded into a register, compared to other values, and then the appropriate code can be executed based on those movements.

Now, these are somewhat simplified explanations (in real computers, these operations are all mediated by the operating system), but they serve to give you a feel for how simply moving, storing, comparing, and manipulating numbers can bring you all of the things that computers offer.

2.9 Adding CPU Cores

Most modern computers have more than one CPU core. A CPU core is like a CPU, but more than one of them may exist on a single chip, and while each core is largely independent of the other cores on the same chip, the cores may share a certain amount of circuitry, such as caches.

Additional hardware has been developed to keep the different CPU cores synchronized with each other. For example, imagine if one core had a piece of memory stored in one of its caches and another core modified that same data. Getting that change communicated to the other cores can be a challenging prospect for hardware engineers. This is known as the cache coherence problem. It is usually solved by having the CPUs and caches implement what is known as the MESI protocol, which basically allows caches to tell other caches they need to update their values.

Thankfully, caching issues are handled almost entirely in hardware, so programmers rarely have to worry about them. There are a few instructions that we can use to do a minor amount of cache manipulation, such as flushing the cache, requesting that the cache load certain areas of memory, etc. However, for the most part, the complexity of modern CPUs (and the wide variety of implementations of that CPU architecture) usually means that the CPU will be much better at handling its cache than you could possibly be.

2.10 A Note About Memory Visualizations

One thing to note is that visualizing computer memory is made difficult by the fact that there are two natural ways to draw it. Sometimes we think of memory in terms of its addresses, in which case it seems obvious to put the higher addresses on the top and the lower addresses on the bottom, because we naturally arrange numbers that way. However, sometimes it is more natural to visualize something as “starting” at the top and “finishing” at the bottom, and, in those cases, we oftentimes put the lower memory addresses on the top and the higher ones on the bottom. For that reason, each drawing of memory in this book indicates whether it is drawn with the lower addresses at the top or at the bottom. When looking at a memory visualization in this book, please be sure to note which way it is oriented.