6.3 The Adjoint of a Linear Operator

In Section 6.1, we defined the conjugate transpose A* of a matrix A. For a linear operator T on an inner product space V, we now define a related linear operator on V called the adjoint of T, whose matrix representation with respect to any orthonormal basis β for V is ([T]β)*.

Let V be an inner product space, and let y∈V

Theorem 6.8.

Let V be a finite-dimensional inner product space over F, and let g: V→F

Proof.

Let β={v1, v2, …, vn}

Define h: V→F

Since g and h agree on β

To show that y is unique, suppose that g(x)=〈x, y′〉

Example 1

Define g: R2→R
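The construction behind Theorem 6.8 can be tried numerically. The sketch below assumes the standard inner product on C^n and the standard basis, and uses the formula y = Σ conj(g(e_i)) e_i; the functional `g` and the helper names `inner` and `representer` are hypothetical and are not taken from the text.

```python
def inner(x, y):
    """Standard inner product on C^n: sum of x_i * conj(y_i)."""
    return sum(a * complex(b).conjugate() for a, b in zip(x, y))

def representer(g, n):
    """Return y with g(x) = <x, y>, built as y = sum conj(g(e_i)) e_i."""
    y = []
    for i in range(n):
        e_i = [1 if j == i else 0 for j in range(n)]  # i-th standard basis vector
        y.append(complex(g(e_i)).conjugate())
    return y

# Hypothetical linear functional on C^2 (an assumption for illustration).
def g(x):
    return (1 + 2j) * x[0] - 3 * x[1]

y = representer(g, 2)          # y = (1 - 2i, -3)
x = [2 - 1j, 4j]               # an arbitrary test vector
assert abs(g(x) - inner(x, y)) < 1e-12
```

The conjugate on g(e_i) is needed because the inner product is conjugate linear in its second component.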

Theorem 6.9.

Let V be a finite-dimensional inner product space, and let T be a linear operator on V. Then there exists a unique function T*: V→V

Proof.

Let y∈V

Hence g is linear.

We now apply Theorem 6.8 to obtain a unique vector y′∈V

To show that T* is linear, let y1, y2∈V

Since x is arbitrary, T*(cy1+y2)=cT*(y1)+T*(y2)

Finally, we need to show that T* is unique. Suppose that U: V→V

The linear operator T* described in Theorem 6.9 is called the adjoint of the operator T. The symbol T* is read “T star.”

Thus T* is the unique operator on V satisfying 〈T(x), y〉=〈x, T*(y)〉

so 〈x, T(y)〉=〈T*(x), y〉

For an infinite-dimensional inner product space, the adjoint of a linear operator T may be defined to be the function T* such that 〈T(x), y〉=〈x, T*(y)〉

Theorem 6.10 is a useful result for computing adjoints.

Theorem 6.10.

Let V be a finite-dimensional inner product space, and let β

Proof.

Let A=[T]β, B=[T*]β,

Hence B=A*

Corollary.

Let A be an n×n

Proof.

If β

As an illustration of Theorem 6.10, we compute the adjoint of a specific linear operator.

Example 2

Let T be the linear operator on C2

So

Hence
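Theorem 6.10 can be checked numerically along the following lines. This is a minimal sketch, assuming the standard inner product on C^2 and a hypothetical matrix `A` (not the operator of Example 2): it forms the conjugate transpose and tests the defining identity 〈T(x), y〉=〈x, T*(y)〉 on one pair of vectors.

```python
def inner(x, y):
    """Standard inner product on C^n: sum of x_i * conj(y_i)."""
    return sum(a * complex(b).conjugate() for a, b in zip(x, y))

def conj_transpose(A):
    """Conjugate transpose: entry (j, i) is the conjugate of A[i][j]."""
    rows, cols = len(A), len(A[0])
    return [[complex(A[i][j]).conjugate() for i in range(rows)]
            for j in range(cols)]

def apply(A, x):
    """Matrix-vector product, i.e. left multiplication by A."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

# Hypothetical matrix of T in the standard (orthonormal) basis of C^2.
A = [[1 + 1j, 2], [0, 3 - 1j]]
A_star = conj_transpose(A)

x = [1 - 2j, 3j]
y = [2 + 1j, -1 + 1j]
lhs = inner(apply(A, x), y)       # <T(x), y>
rhs = inner(x, apply(A_star, y))  # <x, T*(y)>
assert abs(lhs - rhs) < 1e-12
```

A single pair (x, y) does not prove the identity, of course; the check only illustrates what the theorem guarantees for every pair.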

The following theorem suggests an analogy between the conjugates of complex numbers and the adjoints of linear operators.

Theorem 6.11.

Let V be an inner product space, and let T and U be linear operators on V whose adjoints exist. Then

-

(a) T+U has an adjoint, and (T+U)*=T*+U*.

-

(b) cT has an adjoint, and (cT)*=c̄T* for any c∈F.

-

(c) TU has an adjoint, and (TU)*=U*T*.

-

(d) T* has an adjoint, and T**=T.

-

(e) I has an adjoint, and I*=I.

Proof.

We prove (a) and (d); the rest are proved similarly. Let x, y∈V

(a) Because

it follows that (T+U)*

(d) Similarly, since

(d) follows.

Unless stated otherwise, for the remainder of this chapter we adopt the convention that a reference to the adjoint of a linear operator on an infinite-dimensional inner product space assumes its existence.

Corollary.

Let A and B be n×n

-

(a) (A+B)*=A*+B*.

-

(b) (cA)*=c̄A* for all c∈F.

-

(c) (AB)*=B*A*.

-

(d) A**=A.

-

(e) I*=I.

Proof.

We prove only (c); the remaining parts can be proved similarly.

Since L_{(AB)*}=(L_{AB})*=(L_A L_B)*=(L_B)*(L_A)*=L_{B*}L_{A*}=L_{B*A*}, we have (AB)*=B*A*.

In the preceding proof, we relied on the corollary to Theorem 6.10. An alternative proof, which holds even for nonsquare matrices, can be given by appealing directly to the definition of the conjugate transpose of a matrix (see Exercise 5).
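The identity (AB)*=B*A* for nonsquare matrices is easy to spot-check. The sketch below uses two hypothetical complex matrices (a 2×3 and a 3×2, chosen only for illustration) and compares both sides entry by entry; it is a sanity check, not a substitute for the proof requested in Exercise 5.

```python
def conj_transpose(A):
    """Conjugate transpose: entry (j, i) is the conjugate of A[i][j]."""
    rows, cols = len(A), len(A[0])
    return [[complex(A[i][j]).conjugate() for i in range(rows)]
            for j in range(cols)]

def matmul(A, B):
    """Product of an m x n matrix A and an n x p matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

# Hypothetical matrices with Gaussian-integer entries, so arithmetic is exact.
A = [[1, 2j, 0], [1 - 1j, 3, 1j]]      # 2 x 3
B = [[2, 1 + 1j], [0, -1j], [1j, 4]]   # 3 x 2

lhs = conj_transpose(matmul(A, B))                  # (AB)*
rhs = matmul(conj_transpose(B), conj_transpose(A))  # B*A*
assert lhs == rhs
```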

Least Squares Approximation

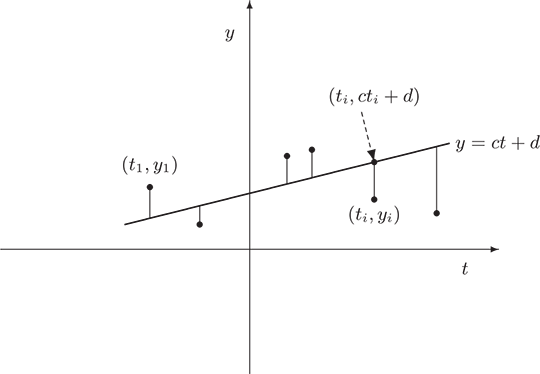

Consider the following problem: An experimenter collects data by taking measurements y1, y2, …, ym

Figure 6.3

Thus the problem is reduced to finding the constants c and d that minimize E. (For this reason the line y=ct+d

then it follows that E=||y−Ax||².

We develop a general method for finding an explicit vector x0∈Fn

First, we need some notation and two simple lemmas. For x, y∈Fn

Lemma 1.

Let A∈Mm×n(F), x∈Fn

Proof.

By a generalization of the corollary to Theorem 6.11 (see Exercise 5(b)), we have

Lemma 2.

Let A∈Mm×n(F)

Proof.

By the dimension theorem, we need only show that, for x∈Fn

so that Ax=0

Corollary.

If A is an m×n

Now let A be an m×n

To develop a practical method for finding such an x0, we note from Theorem 6.6 and its corollary that Ax0−y∈W⊥;

Theorem 6.12.

Let A∈Mm×n(F)

To return to our experimenter, let us suppose that the data collected are (1, 2), (2, 3), (3, 5), and (4, 7). Then

hence

Thus

Therefore

It follows that the line y=1.7t
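The computation for the experimenter's data can be reproduced with exact arithmetic. The sketch below assumes the line y=ct+d and solves the 2×2 system (A*A)x0=A*y by Cramer's rule; the variable names are illustrative, and the 2×2 solve is specific to fitting a line.

```python
from fractions import Fraction

data = [(1, 2), (2, 3), (3, 5), (4, 7)]
m = len(data)

# Entries of A*A and A*y, where row i of A is (t_i, 1).
s_tt = sum(Fraction(t) * t for t, _ in data)
s_t = sum(Fraction(t) for t, _ in data)
s_ty = sum(Fraction(t) * y for t, y in data)
s_y = sum(Fraction(y) for _, y in data)

# Solve [[s_tt, s_t], [s_t, m]] (c, d)^T = (s_ty, s_y)^T by Cramer's rule.
det = s_tt * m - s_t * s_t
c = (s_ty * m - s_t * s_y) / det
d = (s_tt * s_y - s_t * s_ty) / det

# Squared error E = ||y - A x0||^2.
E = sum((y - (c * t + d)) ** 2 for t, y in data)

print(c, d, E)  # 17/10 0 3/10
```

The result agrees with the slope 1.7 found above, with intercept 0 and error E=0.3.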

Suppose that the experimenter chose the times ti (1≤i≤m) to satisfy t1+t2+⋯+tm=0.

Then the two columns of A would be orthogonal, so A*A would be a diagonal matrix (see Exercise 19). In this case, the computations are greatly simplified.
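As a small illustration, assume the measurement times sum to zero. The hypothetical times below are chosen only for this purpose; the sketch forms A*A for the line-fitting matrix, whose rows are (t_i, 1), and shows that the off-diagonal entries vanish.

```python
# Hypothetical measurement times whose sum is zero.
times = [-3, -1, 1, 3]

# Row i of A is (t_i, 1); A is real, so A* is just the transpose.
A = [[t, 1] for t in times]

# (A*A)[i][j] is the dot product of columns i and j of A.
gram = [[sum(row[i] * row[j] for row in A) for j in range(2)]
        for i in range(2)]

print(gram)  # [[20, 0], [0, 4]]
```

With A*A diagonal, each unknown is found by a single division: c is the first entry of A*y divided by the sum of the squared times, and d is the second entry divided by m.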

In practice, the m×2

Finally, the method above may also be applied if, for some k, the experimenter wants to fit a polynomial of degree at most k to the data. For instance, if a polynomial y=ct2+dt+e

Minimal Solutions to Systems of Linear Equations

Even when a system of linear equations Ax=b

Theorem 6.13.

Let A∈Mm×n(F)

-

(a) There exists exactly one minimal solution s of Ax=b, and s∈R(L_{A*}).

-

(b) The vector s is the only solution to Ax=b that lies in R(L_{A*}); in fact, if u satisfies (AA*)u=b, then s=A*u.

Proof.

(a) For simplicity of notation, we let W=R(L_{A*})

by Exercise 10 of Section 6.1. Thus s is a minimal solution. We can also see from the preceding calculation that if ||v||=||s||

(b) Assume that v is also a solution to Ax=b

so v=s

Finally, suppose that (AA*)u=b

Example 3

Consider the system

Let

To find the minimal solution to this system, we must first find some solution u to AA*x=b. Now

so we consider the system

for which one solution is

(Any solution will suffice.) Hence

is the minimal solution to the given system.
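The recipe of Theorem 6.13(b) can be sketched in a few lines. The system below is hypothetical (it is not the system of Example 3), and the 2×2 solve by Cramer's rule is an assumption that suits this small case only: solve (AA*)u=b, then set s=A*u.

```python
from fractions import Fraction

# Hypothetical consistent system: x + z = 2, y + z = 3.
A = [[1, 0, 1], [0, 1, 1]]
b = [Fraction(2), Fraction(3)]

# G = AA* (A is real, so A* is the transpose of A).
G = [[sum(Fraction(p) * q for p, q in zip(r1, r2)) for r2 in A] for r1 in A]

# Solve G u = b by Cramer's rule (valid here because G is 2 x 2 and invertible).
det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
u = [(b[0] * G[1][1] - G[0][1] * b[1]) / det,
     (G[0][0] * b[1] - b[0] * G[1][0]) / det]

# Minimal solution s = A* u.
s = [sum(A[i][j] * u[i] for i in range(2)) for j in range(3)]

def norm_sq(v):
    """Squared Euclidean norm."""
    return sum(x * x for x in v)

other = [2, 3, 0]  # another solution of the same system, for comparison
print(s, norm_sq(s), norm_sq(other))
```

Here s=(1/3, 4/3, 5/3) has squared norm 14/3, smaller than the squared norm 13 of the solution (2, 3, 0).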

Exercises

-

Label the following statements as true or false. Assume that the underlying inner product spaces are finite-dimensional.

-

(a) Every linear operator has an adjoint.

-

(b) Every linear operator on V has the form x→〈x, y〉 for some y∈V.

-

(c) For every linear operator T on V and every ordered basis β for V, we have [T*]β=([T]β)*.

-

(d) The adjoint of a linear operator is unique.

-

(e) For any linear operators T and U and scalars a and b, (aT+bU)*=aT*+bU*.

-

(f) For any n×n matrix A, we have (L_A)*=L_{A*}.

-

(g) For any linear operator T, we have (T*)*=T.

-

-

For each of the following inner product spaces V (over F) and linear transformations g: V→F, find a vector y such that g(x)=〈x, y〉 for all x∈V.

-

(a) V=R3, g(a1, a2, a3)=a1−2a2+4a3

-

(b) V=C2, g(z1, z2)=z1−2z2

-

(c) V=P2(R) with 〈f(x), h(x)〉=∫₀¹ f(t)h(t) dt, g(f)=f(0)+f′(1)

-

-

For each of the following inner product spaces V and linear operators T on V, evaluate T* at the given vector in V.

-

(a) V=R2, T(a, b)=(2a+b, a−3b), x=(3, 5).

-

(b) V=C2, T(z1, z2)=(2z1+iz2, (1−i)z1), x=(3−i, 1+2i).

-

(c) V=P1(R) with 〈f(x), g(x)〉=∫₋₁¹ f(t)g(t) dt, T(f)=f′+3f, f(t)=4−2t.

-

-

Complete the proof of Theorem 6.11.

-

-

(a) Complete the proof of the corollary to Theorem 6.11 by using Theorem 6.11, as in the proof of (c).

-

(b) State a result for nonsquare matrices that is analogous to the corollary to Theorem 6.11, and prove it using a matrix argument.

-

-

Let T be a linear operator on an inner product space V. Let U1=T+T* and U2=TT*. Prove that U1=U1* and U2=U2*.

-

Give an example of a linear operator T on an inner product space V such that N(T)≠N(T*).

-

Let V be a finite-dimensional inner product space, and let T be a linear operator on V. Prove that if T is invertible, then T* is invertible and (T*)−1=(T−1)*.

-

Prove that if V=W⊕W⊥ and T is the projection on W along W⊥, then T=T*. Hint: Recall that N(T)=W⊥. (For definitions, see the exercises of Sections 1.3 and 2.1.)

-

Let T be a linear operator on an inner product space V. Prove that ||T(x)||=||x|| for all x∈V if and only if 〈T(x), T(y)〉=〈x, y〉 for all x, y∈V. Hint: Use Exercise 20 of Section 6.1.

-

For a linear operator T on an inner product space V, prove that T*T=T0 implies T=T0. Is the same result true if we assume that TT*=T0?

-

Let V be an inner product space, and let T be a linear operator on V. Prove the following results.

-

(a) R(T*)⊥=N(T).

-

(b) If V is finite-dimensional, then R(T*)=N(T)⊥. Hint: Use Exercise 13(c) of Section 6.2.

-

-

Let T be a linear operator on a finite-dimensional inner product space V. Prove the following results.

-

(a) N(T*T)=N(T). Deduce that rank(T*T)=rank(T).

-

(b) rank(T)=rank(T*). Deduce from (a) that rank(TT*)=rank(T).

-

(c) For any n×n matrix A, rank(A*A)=rank(AA*)=rank(A).

-

-

Let V be an inner product space, and let y, z∈V. Define T: V→V by T(x)=〈x, y〉z for all x∈V. First prove that T is linear. Then show that T* exists, and find an explicit expression for it.

The following definition is used in Exercises 15-17 and is an extension of the definition of the adjoint of a linear operator.

Definition.

Let T: V→W be a linear transformation, where V and W are finite-dimensional inner product spaces with inner products 〈⋅, ⋅〉1 and 〈⋅, ⋅〉2, respectively. A function T*:W→V is called an adjoint of T if 〈T(x), y〉2=〈x, T*(y)〉1 for all x∈V and y∈W.

-

Let T: V→W be a linear transformation, where V and W are finite-dimensional inner product spaces with inner products 〈⋅, ⋅〉1 and 〈⋅, ⋅〉2, respectively. Prove the following results.

-

(a) There is a unique adjoint T* of T, and T* is linear.

-

(b) If β and γ are orthonormal bases for V and W, respectively, then $[T^*]_\gamma^\beta = ([T]_\beta^\gamma)^*$.

-

(c) rank(T*)=rank(T).

-

(d) 〈T*(x), y〉1=〈x, T(y)〉2 for all x∈W and y∈V.

-

(e) For all x∈V, T*T(x)=0 if and only if T(x)=0.

-

-

State and prove a result that extends the first four parts of Theorem 6.11 using the preceding definition.

-

Let T: V→W be a linear transformation, where V and W are finite-dimensional inner product spaces. Prove that (R(T*))⊥=N(T), using the preceding definition.

-

† Let A be an n×n matrix. Prove that $\det(A^*) = \overline{\det(A)}$. Visit goo.gl/csqoFY for a solution.

-

Suppose that A is an m×n matrix in which no two columns are identical. Prove that A*A is a diagonal matrix if and only if every pair of columns of A is orthogonal.

-

For each of the sets of data that follows, use the least squares approximation to find the best fits with both (i) a linear function and (ii) a quadratic function. Compute the error E in both cases.

-

(a) {(−3, 9), (−2, 6), (0, 2), (1, 1)}

-

(b) {(1, 2), (3, 4), (5, 7), (7, 9), (9, 12)}

-

(c) {(−2, 4), (−1, 3), (0, 1), (1, −1), (2, −3)}

-

-

In physics, Hooke’s law states that (within certain limits) there is a linear relationship between the length x of a spring and the force y applied to (or exerted by) the spring. That is, y=cx+d, where c is called the spring constant. Use the following data to estimate the spring constant (the length is given in inches and the force is given in pounds).

| Length x (inches) | Force y (pounds) |
|---|---|
| 3.5 | 1.0 |
| 4.0 | 2.2 |
| 4.5 | 2.8 |
| 5.0 | 4.3 |

-

Find the minimal solution to each of the following systems of linear equations.

-

(a) x+2y−z=12

-

(b) x+2y−z=1, 2x+3y+z=2, 4x+7y−z=4

-

(c) x+y−z=0, 2x−y+z=3, x−y+z=2

-

(d) x+y+z−w=1, 2x−y+w=1

-

-

Consider the problem of finding the least squares line y=ct+d corresponding to the m observations (t1, y1), (t2, y2), …, (tm, ym).

-

(a) Show that the equation (A*A)x0=A*y of Theorem 6.12 takes the form of the normal equations:

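$$\left(\sum_{i=1}^{m} t_i^2\right)c + \left(\sum_{i=1}^{m} t_i\right)d = \sum_{i=1}^{m} t_i y_i$$

and

$$\left(\sum_{i=1}^{m} t_i\right)c + md = \sum_{i=1}^{m} y_i.$$

These equations may also be obtained from the error E by setting the partial derivatives of E with respect to both c and d equal to zero.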

-

(b) Use the second normal equation of (a) to show that the least squares line must pass through the center of mass, (t̄, ȳ), where

$$\bar{t} = \frac{1}{m}\sum_{i=1}^{m} t_i \quad\text{and}\quad \bar{y} = \frac{1}{m}\sum_{i=1}^{m} y_i.$$

-

-

Let V and {e1, e2, …} be defined as in Exercise 23 of Section 6.2. Define T: V→V by

$$T(\sigma)(k) = \sum_{i=k}^{\infty} \sigma(i) \quad\text{for every positive integer } k.$$

Notice that the infinite series in the definition of T converges because σ(i)≠0 for only finitely many i.

-

(a) Prove that T is a linear operator on V.

-

(b) Prove that for any positive integer n, $T(e_n) = \sum_{i=1}^{n} e_i$.

-

(c) Prove that T has no adjoint. Hint: By way of contradiction, suppose that T* exists. Prove that for any positive integer n, T*(en)(k)≠0 for infinitely many k.

-