Appendix A

An Azure Security Tools Overview

This appendix does not focus on the individual Azure chapter objectives. Instead, it serves as an overview of the most important Azure tools you need to know for the exam. The exam focuses heavily on how to use these tools to accomplish different security objectives, so it's critical that you understand what these tools are, how they differ, and what services they provide from a security perspective.

Let's begin our overview with Chapter 2.

Chapter 2, “Managing Identity and Access on Microsoft Azure”

Chapter 2 focuses on identity and access management on the Azure platform. The tools discussed in Chapter 2 focus on how to create and manage the identities used to control access to Azure resources. By the end of this chapter overview, you should understand how these tools contribute to access control within the Azure environment.

Azure Active Directory (AD)

Azure AD is a cloud-based identity and access management service. While it's similar to traditional Windows AD, Azure AD is not a cloud version of that service; it is an independent service. Azure AD allows employees (or anyone on the on-premises network) to access external resources, including Microsoft 365, the Azure portal, and software-as-a-service (SaaS) applications. It also helps them access internal resources, such as applications on your corporate network and intranet. You can also connect your on-premises Windows AD to Azure AD, extending your on-premises directories to Azure. Doing so allows users to use the same login credentials to access both local and cloud-based resources.

Who Uses Azure AD?

According to Microsoft, there are three main groups that Azure AD is intended for:

- IT admins: IT admins can use Azure AD to control access to their applications and application resources based on their business requirements. A common use case is requiring multifactor authentication (MFA) when users access important organizational resources. Overall, it's a tool for securing the authentication of your users within the Azure platform.

- Application developers: App developers can use Azure AD to add single sign-on (SSO) to their applications, so users can log in with their existing credentials. SSO is both secure and convenient. Azure AD also provides application programming interfaces (APIs) that allow you to build a personalized app experience using existing organizational data about that user.

- Subscribers to Microsoft 365, Office 365, Azure, or Dynamics CRM Online: Azure AD allows these subscribers to manage access to any of their integrated cloud applications.

Most Important Features of Azure AD

The following are the most important features of Azure AD:

- Application management: The ability to manage your cloud and on-premises applications, including configuring Application Proxies and user authentication, and implementing SSO.

- User authentication: The facilitation of user authentication by allowing self-service password resets, enforcing MFA, using custom banned password lists, and using smart lockout features.

- Conditional access: This feature gives users access to resources only when certain conditions are met. These conditions are enforced by conditional access policies, which in simple terms are if-then statements that control whether a user can access a resource or complete an action. This feature gives administrators very granular control over access to Azure resources.

- Identity protection: This feature automatically detects potential vulnerabilities that might affect your organization's identities, lets you configure policies that respond to suspicious actions, and can automatically take action to remediate them.

- Managed identities for Azure resources: A managed identity provides your Azure services with an identity that they can use to authenticate to any service that supports Azure AD authentication. Developers can use managed identities in their applications to access resources without having to manage secrets and credentials themselves.

- Privileged Identity Management (PIM): Azure AD contains special features for managing, controlling, and monitoring access to your organization's most privileged accounts, thus adding an extra layer of security for these accounts.

- Reports and monitoring: Azure AD collects insights into the security and usage patterns of your environment. It then allows you to visualize these insights easily and export them for integration with third-party solutions, such as a security information and event management (SIEM) system.

- Windows Hello for Business: This tool replaces traditional passwords with strong two-factor authentication on devices. This authentication uses a type of user credential that is tied to a device and verified with a biometric or PIN, and it is meant to address the following common issues related to password-based authentication:

- Difficulty associated with remembering strong passwords: This issue results in users having to constantly reset their passwords or choosing to reuse passwords on multiple sites, which reduces the security of the password.

- Server breaches: A server breach can expose passwords, making them useless for ensuring authentication.

- Password exploitation: Passwords can be exploited using replay attacks, such as the reuse of a password hash to compromise an account.

- Password exposure: Users can accidentally expose their passwords when targeted by a phishing attack.
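Conditional access policies were described above as if-then statements. The following Python sketch models that idea under stated assumptions: the `SignInContext` fields, the policy names, and the simple grant/block outcome are hypothetical illustrations, not the Azure AD policy schema or API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SignInContext:
    """Hypothetical sign-in signals; real policies can evaluate many more."""
    user_group: str
    app: str
    location: str            # e.g., "corporate" or "external"
    device_compliant: bool
    mfa_completed: bool


@dataclass
class Policy:
    name: str
    condition: Callable[[SignInContext], bool]    # the "if" part
    requirement: Callable[[SignInContext], bool]  # the "then" part (grant control)


def evaluate(ctx: SignInContext, policies: List[Policy]) -> str:
    """Grant access only if every policy whose condition matches is satisfied."""
    for policy in policies:
        if policy.condition(ctx) and not policy.requirement(ctx):
            return f"block ({policy.name})"
    return "grant"


POLICIES = [
    Policy(
        name="Require MFA for admins",
        condition=lambda c: c.user_group == "admins",
        requirement=lambda c: c.mfa_completed,
    ),
    Policy(
        name="Require compliant device for payroll from outside",
        condition=lambda c: c.app == "payroll" and c.location == "external",
        requirement=lambda c: c.device_compliant,
    ),
]

if __name__ == "__main__":
    admin = SignInContext("admins", "portal", "corporate", True, mfa_completed=False)
    print(evaluate(admin, POLICIES))  # block (Require MFA for admins)
```

In this simplified model, a sign-in that matches no policy is granted. Real conditional access can also respond with a requirement to satisfy, such as an MFA prompt, rather than an outright block.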

How Does Windows Hello for Business Work?

Windows Hello is set up on a user's device. During setup, Windows asks the user to set a gesture, which is typically a biometric, such as a fingerprint, or a PIN. The user then provides this gesture to verify their identity, and Windows uses Windows Hello to authenticate the user.

The biggest reason that Windows Hello is such a reliable form of authentication is that it allows fully integrated biometric authentication based on facial recognition or fingerprint matching. Windows Hello uses a combination of infrared cameras and software, which results in highly accurate biometric authentication while guarding against spoofing. Most major hardware vendors build devices with Windows Hello–compatible cameras, so compatibility is rarely an issue. Most devices already have fingerprint reader hardware, and it can be added fairly easily to devices that don't.

Difference between Windows Hello and Windows Hello for Business

Windows Hello and Windows Hello for Business differ as follows:

- Windows Hello: Windows Hello allows individuals to create a PIN or biometric gesture on their personal device for convenient sign-ins. The standard Windows Hello is unique to the device that it is set up on. It is not backed by asymmetric or certificate-based authentication.

- Windows Hello for Business: Windows Hello for Business is configured by Group Policy or a mobile device management (MDM) policy. It applies to multiple devices in an environment and always uses key-based or certificate-based authentication, making it much more secure than standard Windows Hello, whose PIN or biometric gesture isn't backed by either.

Microsoft Authenticator App

This application helps you sign in to your accounts when you use two-factor verification. Because passwords can be forgotten or stolen, adding a second verification step on your phone makes it much harder for your account to be compromised.

Authenticator is simple to use. In the standard verification method, one factor is your password. After you sign in with your username and password, you either approve a notification or enter the verification code that Authenticator provides, usually on your smartphone.
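Verification codes of this kind are typically time-based one-time passwords (TOTP, defined in RFC 6238), which Authenticator can generate as an OATH software token. The following standard-library sketch shows how such a code can be derived from a shared secret and the current time. It assumes the common defaults of HMAC-SHA1, a 30-second time step, and six digits, and it is an illustration of the standard, not Microsoft's implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)                     # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                           # dynamic truncation offset
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or a neighboring one (clock drift)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + d * step, step), submitted)
        for d in range(-window, window + 1)
    )


if __name__ == "__main__":
    shared = b"12345678901234567890"   # RFC 4226/6238 test secret, not a real key
    print(totp(shared, at=59))          # 287082
```

Because the app and the server derive the code independently from the same secret and clock, the secret itself never travels during sign-in; the small acceptance window tolerates minor clock drift between the phone and the server.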

Azure API Management

Azure API Management is a management platform for APIs across all your environments. APIs are important for simplifying application integrations and making data and services reusable and universally accessible to users. Azure API Management is designed to make API usage easy for applications on the Azure platform.

Azure API Management consists of three elements: an API gateway, a management plane, and a developer portal. All these components are hosted in Azure and are fully managed by default.

API Gateway

Whenever a client application makes a request, it first reaches the API gateway, which then forwards the request to the proper backend services. The gateway acts as a proxy for the backend services and provides consistent configuration for routing, security, throttling, caching, and observability.

Using a self-hosted gateway, an Azure customer can deploy the API gateway to the same environments that host their APIs. Doing so allows them to optimize API traffic and helps ensure compliance with local regulations and guidelines. The API gateway can perform the following actions:

- It can accept API calls and route them to preconfigured backends.

- It verifies API keys, JWT tokens, certificates, and other access credentials.

- It can enforce usage quotas and rate limits for your applications and resources.

- It can perform transformations to optimize requests and responses.

- It can cache responses to improve response latency and minimize the load on your backend services.

- It creates logs, metrics, and traces for monitoring, reporting, and troubleshooting.
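Several of the gateway actions listed above (verifying credentials, enforcing rate limits, and routing to a backend) can be pictured as a pipeline that every request passes through. The following Python sketch is a toy model under stated assumptions: the subscription keys, backend URLs, and the fixed-window counter (loosely modeled on the idea of allowing N calls per renewal period) are all hypothetical. In API Management, this behavior is configured through policies rather than written as code.

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical configuration: valid subscription keys and path-prefix routes.
VALID_KEYS = {"key-alpha", "key-beta"}
ROUTES = {
    "/orders": "https://orders.internal.example",
    "/inventory": "https://inventory.internal.example",
}


class FixedWindowLimiter:
    """Allow at most `calls` requests per key in each `period`-second window."""

    def __init__(self, calls: int, period: float) -> None:
        self.calls = calls
        self.period = period
        self.windows: Dict[str, Tuple[float, int]] = {}  # key -> (window start, count)

    def allow(self, key: str, now: float) -> bool:
        start, count = self.windows.get(key, (now, 0))
        if now - start >= self.period:   # window expired: start a new one
            start, count = now, 0
        if count >= self.calls:
            return False
        self.windows[key] = (start, count + 1)
        return True


def handle(path: str, api_key: Optional[str], limiter: FixedWindowLimiter,
           now: Optional[float] = None) -> Tuple[int, str]:
    """Return (HTTP status, detail); 200 here stands for 'forwarded to backend'."""
    now = time.monotonic() if now is None else now
    if api_key not in VALID_KEYS:                        # 1. verify credentials
        return 401, "missing or invalid subscription key"
    if not limiter.allow(api_key, now):                  # 2. enforce rate limit
        return 429, "rate limit exceeded"
    for prefix, backend in ROUTES.items():               # 3. route to a backend
        if path == prefix or path.startswith(prefix + "/"):
            return 200, backend + path
    return 404, "no backend configured for this path"


if __name__ == "__main__":
    limiter = FixedWindowLimiter(calls=2, period=60)
    for t in (0, 1, 2):
        print(handle("/orders/42", "key-alpha", limiter, now=t))
```

Checking credentials before counting against the rate limit means that requests with invalid keys never consume another client's quota.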

Management Plane

The management plane is how you interact with the service; it provides you with full access to the API Management service. You can interact with it via the Azure portal, Azure PowerShell, the Azure command-line interface (CLI), a Visual Studio Code extension, or client software development kits (SDKs) for several popular programming languages. You can use the management plane to perform the following actions:

- Manage users, such as the developers who consume your APIs.

- Provision and configure API Management settings.

- Get insights on your applications and APIs from analytics.

- Set up policies like quotas or transformations for your APIs.

Developer Portal

The developer portal is an automatically generated and fully customizable website that holds the documentation for your APIs. An API provider can customize the look and feel of their developer portal. Some common examples include adding custom content to the site, changing its styles, or adding your branding.

The developer portal allows developers to discover APIs, onboard them for use, and learn how to consume them in their applications. Here are some actions you can perform in the developer portal:

- Read API documentation

- Call an API using the console

- Create an account and subscribe to get API keys

- View analytics on your API usage

- Manage API keys

Chapter 3, “Implementing Platform Protections”

Chapter 3 focuses on how to implement platform protection in Azure. In this overview, we will review the security tools that you can use to secure your environment from outside attacks and ensure proper network segmentation.

Azure Firewall

Azure Firewall is a cloud-native and intelligent network firewall service designed to protect against threats to your cloud workloads. It's a fully stateful firewall as a service with built-in high availability and unrestricted cloud scalability. It is available in Standard and Premium editions.

Azure Firewall Standard

The standard edition of Azure Firewall provides traffic filtering and threat intelligence feeds directly from Microsoft's cybersecurity team. The firewall's threat intelligence-based filtering can alert on and deny traffic to and from known malicious Internet Protocol (IP) addresses and domains. This database of malicious IP addresses and domains is continuously updated in real time to protect against new threats.
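Threat intelligence-based filtering amounts to checking each connection against a list of known-bad IP addresses and domains and then alerting, or alerting and denying. Azure Firewall exposes this choice as a mode (Off, Alert only, or Alert and deny). The following Python sketch models that decision; the feed entries are hypothetical documentation-range addresses and example domains, not real Microsoft threat intelligence, and the subdomain matching is a simplifying assumption.

```python
from enum import Enum
from typing import List


class ThreatIntelMode(Enum):
    OFF = "Off"
    ALERT = "Alert only"
    ALERT_AND_DENY = "Alert and deny"


# Hypothetical feed entries (documentation IP range and example domains).
MALICIOUS_IPS = {"203.0.113.66"}
MALICIOUS_DOMAINS = {"malware.example", "phish.example"}


def is_malicious(dest_ip: str, dest_fqdn: str) -> bool:
    fqdn = dest_fqdn.lower().rstrip(".")
    # Match a listed domain itself or any of its subdomains.
    domain_hit = any(fqdn == d or fqdn.endswith("." + d) for d in MALICIOUS_DOMAINS)
    return dest_ip in MALICIOUS_IPS or domain_hit


def filter_connection(dest_ip: str, dest_fqdn: str, mode: ThreatIntelMode,
                      alerts: List[str]) -> str:
    """Return "allow" or "deny"; append an alert message when the feed matches."""
    if mode is ThreatIntelMode.OFF or not is_malicious(dest_ip, dest_fqdn):
        return "allow"   # no threat intel match; regular firewall rules still apply
    alerts.append(f"threat intel match: {dest_fqdn or dest_ip}")
    return "deny" if mode is ThreatIntelMode.ALERT_AND_DENY else "allow"


if __name__ == "__main__":
    log: List[str] = []
    print(filter_connection("198.51.100.7", "cdn.malware.example",
                            ThreatIntelMode.ALERT_AND_DENY, log))  # deny
    print(log)  # ['threat intel match: cdn.malware.example']
```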

Azure Firewall Premium

The premium version of this firewall has several improvements over the standard edition. First, it offers a signature-based intrusion detection and prevention system (IDPS) that enables rapid detection of attacks by looking for specific patterns, such as byte sequences in network traffic or known malicious instruction sequences used by malware. The premium version has more than 58,000 signatures in over 50 categories, which are continuously updated in real time to protect against new and emerging exploits.
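Signature-based detection can be illustrated with a simple byte-pattern search over a packet payload. The following Python sketch is a deliberately simplified model: the signature IDs, categories, and byte patterns are hypothetical, and real IDPS engines also track protocol state, reassemble streams, and use far more efficient multi-pattern matching.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Signature:
    sig_id: int
    category: str
    pattern: bytes   # byte sequence that indicates the attack


# Hypothetical signatures for illustration only.
SIGNATURES = [
    Signature(1001, "web-attack", b"' OR '1'='1"),
    Signature(1002, "web-attack", b"../../etc/passwd"),
    Signature(2001, "malware-c2", b"\xde\xad\xbe\xef"),
]


def inspect(payload: bytes, signatures: List[Signature] = SIGNATURES) -> List[int]:
    """Return the IDs of all signatures whose pattern appears in the payload."""
    return [s.sig_id for s in signatures if s.pattern in payload]


def decide(payload: bytes, prevention: bool) -> str:
    """Detection-only mode alerts on a match; prevention mode also blocks it."""
    if not inspect(payload):
        return "pass"
    return "block" if prevention else "alert"


if __name__ == "__main__":
    print(decide(b"GET /?id=' OR '1'='1 HTTP/1.1", prevention=True))  # block
```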

Azure Firewall Manager

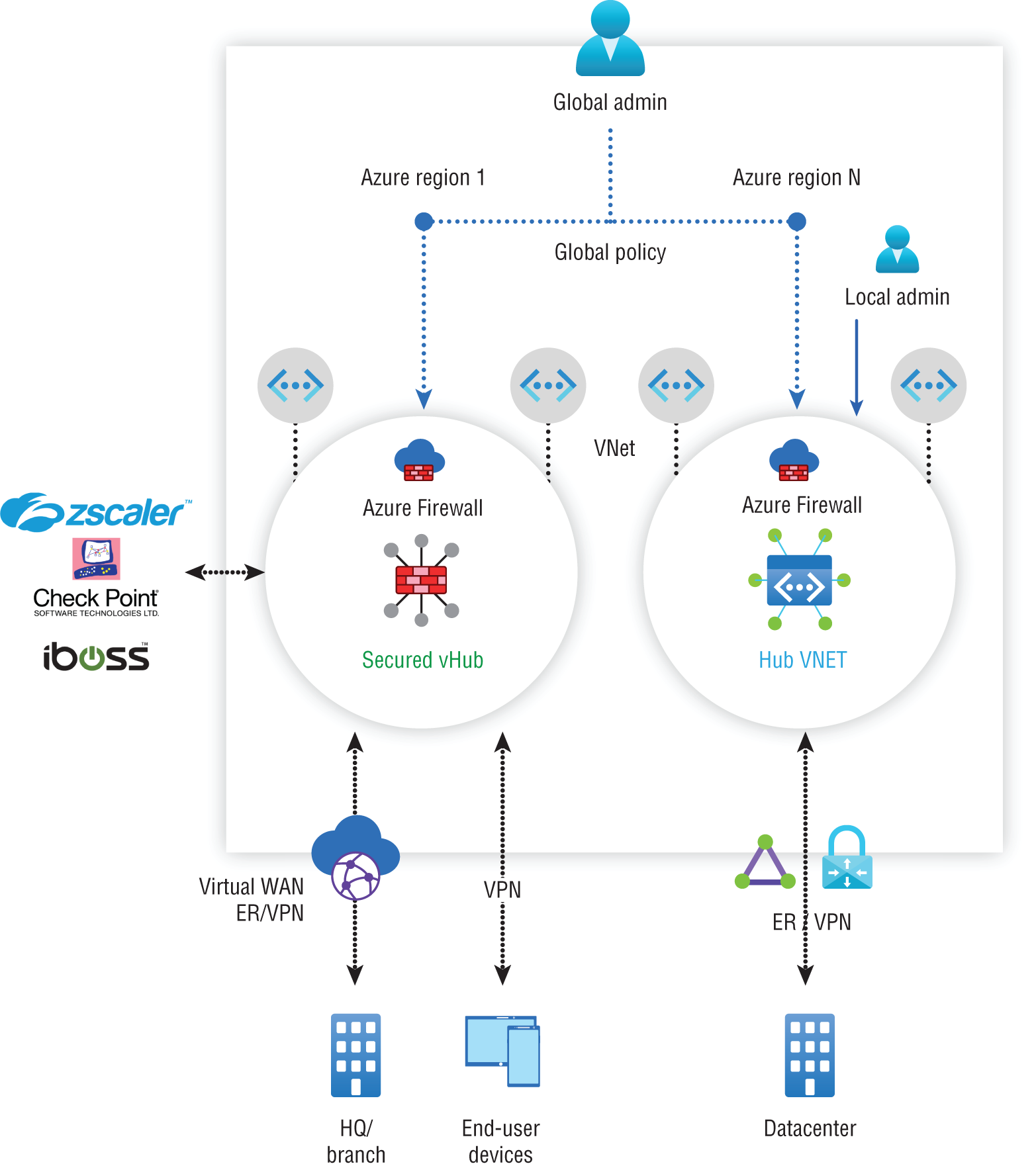

Azure Firewall Manager is a security management service that provides central security policy and route management for cloud-based security perimeters. You can use it to provide security management for the following two types of network architectures:

- Secured Virtual Hub: An Azure Virtual WAN hub is a managed resource that allows you to create hub-and-spoke architectures. When security and routing policies are associated with such a hub, it is referred to as a secured virtual hub.

- Hub Virtual Network: This is a standard Azure virtual network (VNet) that you create and manage yourself for your cloud environment.

Now that you understand what Azure Firewall Manager is, let's look at some of its key features:

- Azure Firewall deployment and configuration: The first feature allows you to deploy and configure instances of Azure Firewall across different regions and subscriptions, which is important for enforcing proper network segmentation. These firewall instances allow you to control traffic between subnets and between your cloud environment and the Internet. Azure Firewall Manager gives you one central hub from which you can deploy and manage these firewall instances.

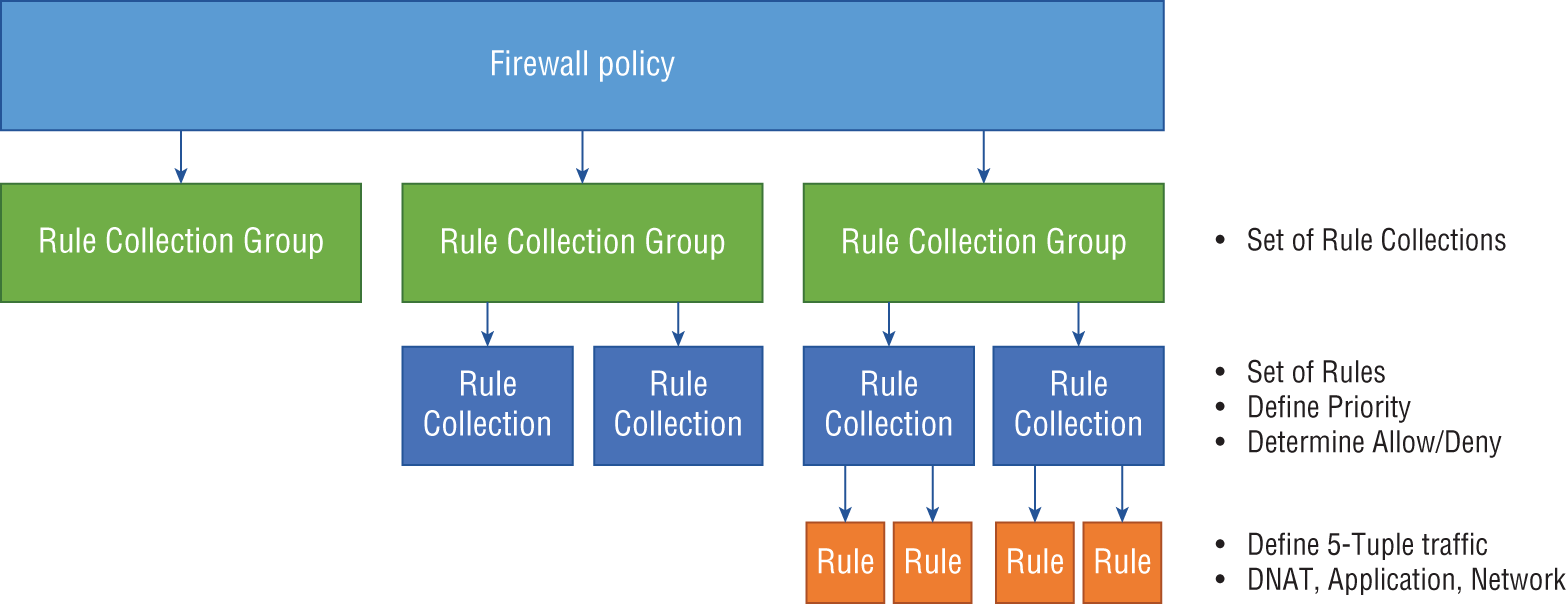

- Ability to create global and local firewall policies: You can use Azure Firewall Manager to create global and local firewall policies. It's important to understand the difference between a firewall and a firewall policy. While a firewall is a logical/physical device that filters traffic, a firewall policy is a top-level resource that contains the security and operational settings for Azure Firewall. You can think of a policy as the rules that govern how a firewall functions in Azure. The firewall policy organizes, prioritizes, and processes rule sets based on a hierarchy of rule collection groups, rule collections, and rules. See Figure A.1.

FIGURE A.1 Components of a firewall policy

- Rule collection groups: Rule collection groups sit at the top of the hierarchy and are used to group rule collections. They are the first unit processed by Azure Firewall, and they are processed in order of their priority values, from the lowest value to the highest. By default, there are three rule collection groups, each with a preset priority value (see Table A.1).

TABLE A.1 Rule collection groups

| Rule collection group name | Priority |
|---|---|
| Default DNAT (Destination Network Address Translation) rule collection group | 100 |
| Default Network rule collection group | 200 |
| Default Application rule collection group | 300 |

You can't delete a default group or change its priority value, but you can add new groups and give them the priority value of your choice.

- Rule collections: A rule collection belongs to a rule collection group and contains one or more rules. Rule collections are processed second and, like rule collection groups, are processed in order of their priority values. Every rule collection must have a priority value and a defined action that either allows or denies traffic; that action applies to all rules within the collection. There are three types of rule collections:

- DNAT

- Network

- Application

Each rule collection type must match the category of its parent rule collection group. For example, a DNAT rule collection must be part of a DNAT rule collection group.

- Rules: Last in the hierarchy are rules, which belong to a rule collection and specify which traffic is allowed or denied in your network. Unlike rule collection groups and rule collections, rules don't follow a priority order based on values. Instead, they follow a top-down approach in which all traffic that passes through the firewall is evaluated against the defined rules for an allow or deny match. If no rule allows the traffic, it is denied by default.

- Integration with third-party security as a service (SECaaS): Azure Firewall Manager supports the integration of third-party security providers, allowing you to bring many of your preferred security solutions into your Azure environment. Currently, the supported security partners are Zscaler, Check Point, and iboss.

Integration is done through automated route management, which doesn't require you to set up or manage user-defined routes (UDRs). You can deploy secured hubs configured with the security partner of your choice in multiple Azure regions to get connectivity and security for your users. See Figure A.2.

- Route management: Route management is the ability to route traffic through your secured hubs for filtering or logging without needing to set up UDRs.
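The firewall policy hierarchy described earlier in this list (rule collection groups, then rule collections, then rules, with deny as the default) can be modeled as a nested, priority-ordered search. In the following Python sketch, lower priority numbers are processed first, as with the default groups in Table A.1. The group, collection, and rule contents are hypothetical, and matching on only a source address and destination port is a simplification of real DNAT, network, and application rules.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Rule:
    name: str
    source: str       # source address, or "*" for any
    dest_port: int

    def matches(self, source: str, dest_port: int) -> bool:
        return self.source in ("*", source) and self.dest_port == dest_port


@dataclass
class RuleCollection:
    name: str
    priority: int
    action: str                      # "Allow" or "Deny"; applies to every rule inside
    rules: List[Rule] = field(default_factory=list)


@dataclass
class RuleCollectionGroup:
    name: str
    priority: int
    collections: List[RuleCollection] = field(default_factory=list)


def process_traffic(groups: List[RuleCollectionGroup], source: str,
                    dest_port: int) -> Tuple[str, Optional[str]]:
    """Return (action, matching rule name), or ("Deny", None) if nothing matches."""
    for group in sorted(groups, key=lambda g: g.priority):             # lowest number first
        for coll in sorted(group.collections, key=lambda c: c.priority):
            for rule in coll.rules:                                    # top-down in a collection
                if rule.matches(source, dest_port):
                    return coll.action, rule.name
    return "Deny", None                                                # default deny


if __name__ == "__main__":
    policy = [
        RuleCollectionGroup("DefaultNetworkRuleCollectionGroup", 200, [
            RuleCollection("deny-rdp", 100, "Deny", [Rule("rdp-any", "*", 3389)]),
            RuleCollection("allow-web", 200, "Allow", [Rule("https-any", "*", 443)]),
        ]),
        RuleCollectionGroup("AdminAccessGroup", 150, [
            RuleCollection("allow-jumpbox-rdp", 100, "Allow",
                           [Rule("jumpbox-rdp", "10.0.0.4", 3389)]),
        ]),
    ]
    print(process_traffic(policy, "10.0.0.4", 3389))  # ('Allow', 'jumpbox-rdp')
    print(process_traffic(policy, "10.0.0.9", 22))    # ('Deny', None)
```

Because the custom group has priority 150, its allow rule for the jump box is evaluated before the broader deny rule in the default network group at priority 200.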

Azure Application Gateway

Azure Application Gateway is Azure's web traffic load balancer; it enables you to manage the traffic going to your web applications, preventing them from becoming overloaded. Application Gateway is more advanced than a traditional load balancer. Traditional load balancers operate at the Transport layer of the OSI model (Layer 4, TCP and UDP) and can only route traffic based on the source IP address and port to a destination IP address and port. Application Gateway, by contrast, operates at Layer 7 of the OSI model and can make routing decisions based on additional attributes of an HTTP request, such as the URL path. From a security viewpoint, spreading traffic across backends helps keep your resources available during traffic spikes and ensures high uptime for your network resources, which complements dedicated DDoS protection. In addition to load balancing, this tool comes with other useful features for security and scalability. Here are some of the most important features to remember:

FIGURE A.2 Secure hubs configuration

- Secure Sockets Layer/Transport Layer Security (SSL/TLS) termination: To ensure that information is transported securely over the Internet, it's common to use encryption protocols like SSL/TLS. However, encryption and decryption add overhead for the web server. Azure Application Gateway can handle the encryption and decryption itself and pass the data unencrypted to the backend servers, reducing the work those servers must do.

- Autoscaling: Application Gateway supports autoscaling, which means it can add or remove instances based on your environment's traffic. This saves your company money because you don't keep more resources provisioned than you actually need. You must specify a minimum instance count and can optionally specify a maximum; the application gateway never scales below the minimum, even when there is no traffic.

- Zone redundancy: Application Gateway can span multiple availability zones, so there's no need to provision separate application gateways in each zone. This gives you zone redundancy, because gateway instances in one zone can handle traffic for other zones as needed.

- Static VIP: The application gateway's virtual IP address (VIP) stays the same over its lifetime, reducing the chance of misconfigurations or misdirected traffic caused by a changing IP address.

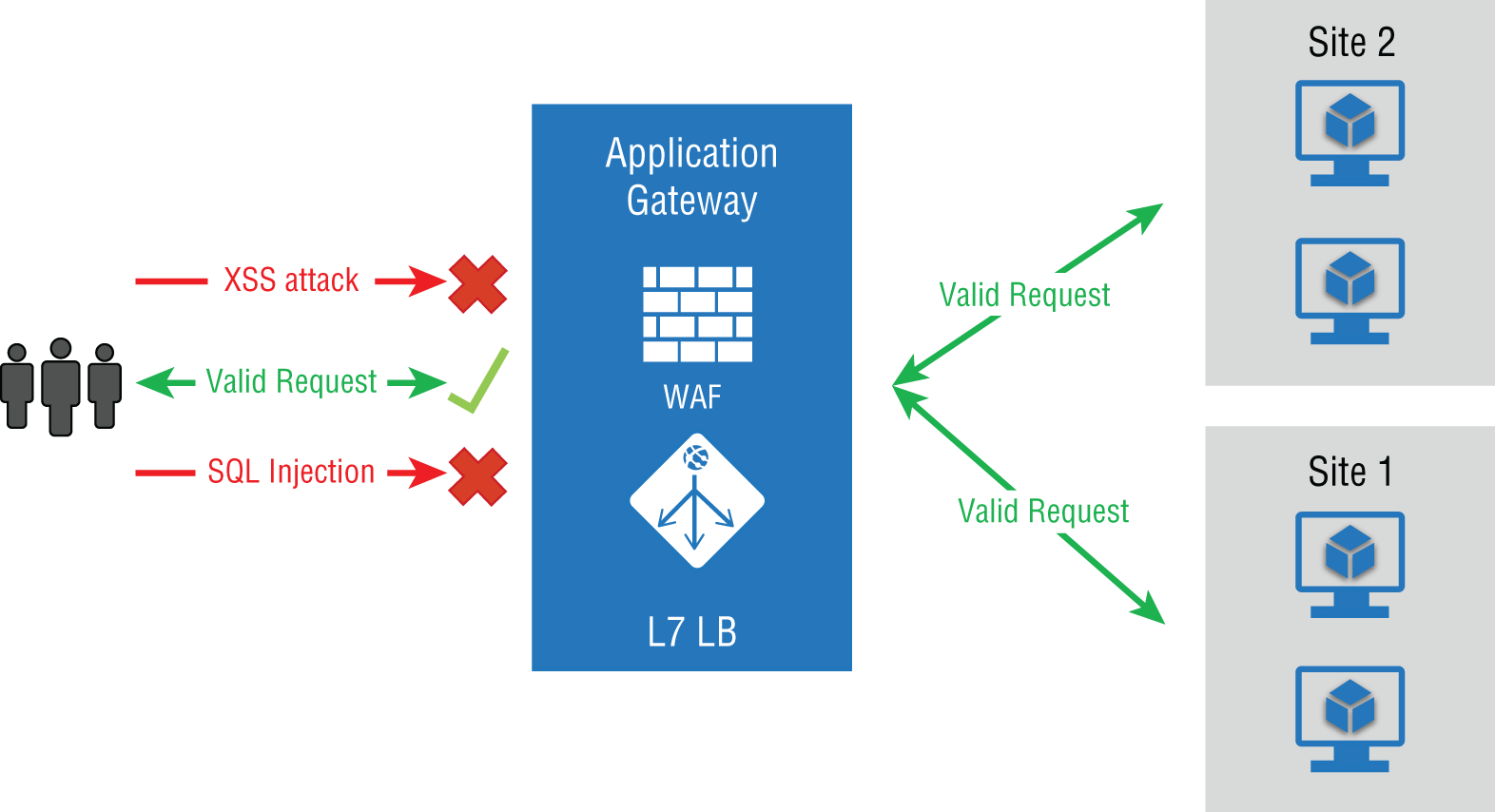

- Web Application Firewall (WAF): This feature gives you centralized protection of your web applications from common exploits and vulnerabilities. For example, it filters out SQL injection, cross-site scripting, directory traversal, and other attacks that target the web application itself. Because protection is centralized, a known vulnerability can be patched once at the WAF, which is faster than securing each web application individually. A single instance of Application Gateway can host up to 40 websites that are protected by a WAF.

The WAF also offers monitoring to detect any potentially malicious activity. It uses real-time WAF logs, which are integrated with Azure Monitor to track WAF alerts and monitor trends. It also integrates with Defender for Cloud, which gives you a central view of the security state of all your Azure, hybrid, and multicloud resources. See Figure A.3.

- Ingress controller for AKS: This feature allows you to use the application gateway as the ingress for an Azure Kubernetes Service (AKS) cluster. AKS is a service that lets you deploy a managed Kubernetes cluster in Azure, offloading much of the operational overhead to Azure.

- URL-based routing: This feature allows you to route traffic to backend server pools based on the URL paths in the request.

- Multiple-site hosting: Using this feature, you can configure routing based on a host or domain name for multiple web applications on the same application gateway.

- Redirection: This feature supports automatic HTTP-to-HTTPS redirection, which helps ensure that all communication between an application and its users is encrypted.

FIGURE A.3 Web Application Firewall

- Session affinity: This feature uses gateway-managed cookies that allow the application gateway to direct all traffic from a user session to the same server, maintaining the session on that server.

- WebSocket and HTTP/2 traffic: Azure's Application Gateway provides support for WebSocket and HTTP/2 protocols over ports 80 and 443.

- Connection draining: If you need to change the backend servers that your application uses (the backend pool), this feature ensures that deregistering instances don't receive any new requests, while existing requests are allowed to complete within the configured time limit. This applies both to instances that have been explicitly removed and to those reported as unhealthy by Azure's health probes.

- Custom error pages: Azure's Application Gateway allows you to display custom error pages instead of the defaults, which prevents you from displaying information that should be kept confidential. Also, it gives you the chance to use your own branding and layout in the custom error page.

- Ability to rewrite HTTP headers and URLs: HTTP headers allow the client and server to pass additional information with a request or response. By rewriting HTTP headers, you can add security-related header fields, such as HTTP Strict Transport Security (HSTS), remove header fields that may reveal sensitive information, and strip port information from X-Forwarded-For headers. You can also rewrite URLs, and when you combine this with URL path-based routing, you can route requests to your desired backend pool of servers.
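Several of the Layer 7 features above (multiple-site hosting, URL path-based routing, and header rewrites) come down to decisions made on fields of the HTTP request and response. The following Python sketch models them with plain dictionaries. The host names, path rules, pool names, and header values are hypothetical; in Application Gateway these behaviors are configured as listeners, routing rules, and rewrite rule sets rather than written as code.

```python
from typing import Dict

# Hypothetical configuration: one entry per host (listener), each with path rules.
SITES: Dict[str, Dict] = {
    "shop.contoso.example": {
        "path_rules": [("/images/*", "image-pool"), ("/video/*", "video-pool")],
        "default_pool": "web-pool",
    },
    "blog.contoso.example": {"path_rules": [], "default_pool": "blog-pool"},
}


def route(host: str, path: str) -> str:
    """Pick a backend pool by host name first (multi-site), then by URL path."""
    site = SITES.get(host.lower())
    if site is None:
        raise LookupError(f"no listener for host {host!r}")
    for pattern, pool in site["path_rules"]:
        prefix = pattern.rstrip("*")          # "/images/*" -> "/images/"
        if path.startswith(prefix):
            return pool
    return site["default_pool"]


def rewrite_response_headers(headers: Dict[str, str]) -> Dict[str, str]:
    """Remove headers that reveal server details and add an HSTS header."""
    hidden = {"server", "x-powered-by"}
    out = {k: v for k, v in headers.items() if k.lower() not in hidden}
    out["Strict-Transport-Security"] = "max-age=31536000"
    return out


def strip_port_from_xff(value: str) -> str:
    """Drop ":port" suffixes from IPv4 entries in an X-Forwarded-For value."""
    parts = []
    for entry in value.split(","):
        entry = entry.strip()
        host, sep, port = entry.rpartition(":")
        # Only strip when it looks like IPv4:port; IPv6 entries are left untouched.
        parts.append(host if sep and port.isdigit() and "." in host else entry)
    return ", ".join(parts)


if __name__ == "__main__":
    print(route("shop.contoso.example", "/images/logo.png"))   # image-pool
    print(strip_port_from_xff("203.0.113.5:53211, 10.0.0.1"))  # 203.0.113.5, 10.0.0.1
```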

Azure Front Door

Azure Front Door is great for building, operating, and scaling out your web applications. It is a global, scalable entry point that uses Microsoft's global network to create fast, secure, and widely scalable web applications. It's not limited to new applications; you can also use Azure Front Door to make your existing enterprise applications widely available on the web. Azure Front Door provides many options for traffic routing, and it comes with backend health monitoring so that you can identify any backend instances that may not be working correctly. Here are some of the key features of Azure Front Door:

- Better application performance using split TCP-based anycast protocol

- Intelligent health monitoring for all backend resources

- URL path-based routing for application requests

- Hosting of numerous websites via an efficient application infrastructure

- Cookie-based session affinity

- SSL/TLS offloading and certificate management

- Ability to define a custom domain

- Application security via an integrated web application firewall (WAF)

- Ability to redirect HTTP traffic to HTTPS seamlessly with a URL redirect

- A custom forwarding path with URL rewrite

- Native support for end-to-end IPv6 connectivity and support for HTTP/2 protocol

Web Application Firewall

A web application firewall (WAF) is a specific type of application firewall that monitors, filters, and, if necessary, blocks HTTP traffic to and from a web application. Azure's WAF uses Open Web Application Security Project (OWASP) rules to protect applications against common web-based attacks, such as SQL injection, cross-site scripting, and hijacking attacks. In Azure, a WAF can be deployed as part of Application Gateway, which we discussed earlier (and, as noted in the previous section, with Azure Front Door). With Application Gateway, all of the WAF's customizations are contained in a WAF policy that must be associated with your application gateway.

WAF policies can be associated at three scopes: global, per-site, and per-URI. A global WAF policy applies the same managed rules, custom rules, exclusions, and other settings to every site behind your application gateway. A per-site policy allows you to protect multiple sites that have different security needs. Lastly, a path-based (per-URI) policy allows you to set rules for specific website pages. It's also important to know that a more specific policy always overrides a more general one. That means you can have a global policy that applies to all sites and per-site policies for specific sites, and each per-site policy will override the global policy for its site.
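The precedence rule described above (the most specific WAF policy wins) can be sketched as a lookup that checks the per-URI level first, then the per-site level, and finally falls back to the global policy. In the following Python sketch, the policy names, hosts, and paths are hypothetical, the choice of the longest matching path prefix among per-URI policies is an assumption made for illustration, and the contents of the policies themselves are ignored.

```python
from typing import Dict, Tuple


def effective_waf_policy(
    host: str,
    path: str,
    global_policy: str,
    site_policies: Dict[str, str],
    uri_policies: Dict[Tuple[str, str], str],
) -> str:
    """Return the most specific matching policy: per-URI, then per-site, then global."""
    # Per-URI: among path prefixes registered for this host, the longest match wins.
    uri_matches = [
        (len(prefix), policy)
        for (uri_host, prefix), policy in uri_policies.items()
        if uri_host == host and path.startswith(prefix)
    ]
    if uri_matches:
        return max(uri_matches, key=lambda m: m[0])[1]
    # Per-site: a policy associated with the listener for this host.
    if host in site_policies:
        return site_policies[host]
    # Global: the policy associated with the application gateway itself.
    return global_policy


if __name__ == "__main__":
    sites = {"shop.contoso.example": "shop-waf-policy"}
    uris = {("shop.contoso.example", "/checkout"): "checkout-waf-policy"}
    print(effective_waf_policy("shop.contoso.example", "/checkout/pay",
                               "global-waf-policy", sites, uris))  # checkout-waf-policy
    print(effective_waf_policy("blog.contoso.example", "/",
                               "global-waf-policy", sites, uris))  # global-waf-policy
```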

Azure Service Endpoints

VNet service endpoints provide secure and direct connectivity to Azure services over an optimized route via the Azure backbone network. This network connects hundreds of datacenters in 38 regions around the world and is designed to provide near-perfect availability, high capacity, and the flexibility to respond to unpredictable demand spikes, giving you a more secure and efficient route for sending and receiving traffic. Service endpoints allow private IP addresses in a VNet to reach the endpoint of an Azure service without needing a public IP address on the VNet.

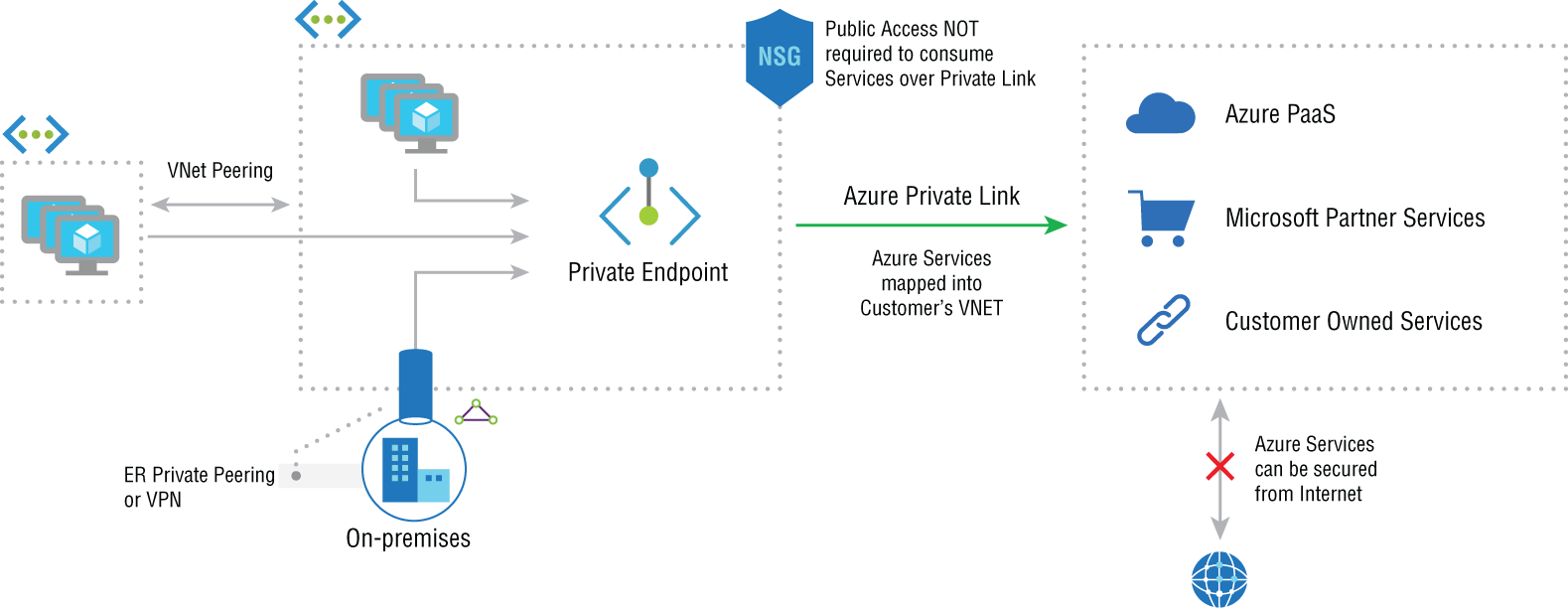

Azure Private Link

Azure Private Link allows you to access Azure PaaS services and Azure-hosted customer-owned or partner services over a private endpoint. A private endpoint is a network interface that uses a private IP address from your virtual network to connect you privately to a service powered by Private Link. Private Link simplifies network architecture and secures the connection between endpoints by keeping the traffic on the Microsoft global network, eliminating the potential for data exposure over the Internet. Here are the key benefits of using Azure Private Link:

- Ability to privately access services on Azure: Using a private link ensures that traffic between your virtual network and the service travels along Microsoft's backbone network rather than exposing the service to the public Internet. Service providers can host their services in their own virtual network, and consumers can access those services from their local virtual network, which helps keep their communications private and protected against eavesdropping by attackers on the Internet.

- On-premises and peered networks: You can also access services running in Azure from your on-premises network over ExpressRoute private peering, VPN tunnels, and peered virtual networks. This structure gives you a secure way to migrate workloads from on-premises to Azure without needing to traverse the Internet to reach that service, helping to prevent any potential attacks or data leakages by keeping all communications on networks that you trust.

- Prevent data leakage: A private endpoint is mapped to a specific instance of a PaaS resource rather than to the entire service. Consumers can connect only to that resource, and access to any other resource in the service is blocked. This helps prevent data leakage because you provide only the access the consumer needs and nothing extra. See Figure A.4.

- Global reach: Using private links, you can connect to the services running in other regions, which allows you to provide secure connectivity to resources/services anywhere in the world.

FIGURE A.4 Preventing data leakage

Azure DDoS Protection

Azure DDoS Protection is Azure's built-in DDoS protection service. Unlike other tools in this appendix, its basic tier doesn't need to be configured by an administrator; every endpoint in Azure is protected by DDoS Protection Basic, free of cost. The important thing to understand is the difference in features between the free Basic tier and the paid Standard tier. DDoS Protection Basic comes with two main features:

- Always-on traffic monitoring: This tool monitors your application traffic patterns 24 hours a day, 7 days a week, looking for indicators of DDoS attacks. Once an attack is detected, DDoS Protection Basic instantly and automatically mitigates the attack, with no actions required by the end user.

- Extensive mitigation scale: The basic version of this tool can detect over 60 different attack types and mitigate them with global capacity, meaning that it can defend against attacks that originate from, or target resources in, any part of the globe. This helps protect your applications against even the largest known DDoS attacks.

Now, let's look at the features of DDoS Protection Standard, which is a paid resource:

- Native platform integration: Azure DDoS protection is natively integrated into Azure, which makes it extremely easy to use. You apply configurations through the Azure portal. DDoS Protection Standard also has the ability to understand your resources and resource configurations without the need for user input.

- Turnkey protection: As soon as you enable DDoS Protection Standard, it automatically begins to protect all resources on your virtual network without any need for user intervention.

- Adaptive tuning: Azure DDoS Protection learns your application's traffic profile over time and selects the profile that best suits your service. A traffic profile is a set of measurements over a specific period that allows Azure DDoS Protection to understand which traffic is normal for your application and which isn't. It continues to observe your traffic and adjusts the profile over time, reducing both false positives and false negatives.

- Multilayered protection: When deployed alongside a WAF, DDoS Protection Standard protects at the Network and Transport layers (Layers 3 and 4 of the OSI model), while the WAF protects at the Application layer (Layer 7). This combination gives your application multiple layers of security and a defense-in-depth approach.

- Attack analytics: Once the DDoS protection tool determines that an attack has started, it provides you with detailed reports in 5-minute increments during the attack and a complete summary once the attack has ended. This way, you have documentation of what occurred without having to do any manual work. You can also stream the mitigation flow logs to Azure Sentinel or an offline SIEM for near-real-time monitoring of attacks against your systems.

- Attack metrics: This feature allows you to view summarized metrics for every attack against your resources via the Azure Monitor dashboard.

- Attack alerting: With this feature, you can configure alerts at the start and stop of an attack, and over its duration, using built-in attack metrics. These alerts can be integrated into the operational software of your choice, such as Azure Monitor logs or Splunk, for further analysis and monitoring.

- DDoS Rapid Response: You have the option of engaging the DDoS Protection Rapid Response (DRR) team to aid you with an attack investigation if the standard features aren't sufficient. This dedicated team of security experts within Microsoft can provide you with personalized support in an emergency situation.

- Cost guarantee: You can receive data transfer and application scale-out service credits for resource costs incurred as a result of documented DDoS attacks. In other words, if an attack forces your resources to scale out, Microsoft can reimburse those costs, giving you a financial line of defense in addition to the protection the software itself provides.
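Microsoft doesn't publish the algorithm behind adaptive tuning, so the following Python sketch is only a generic illustration of the underlying idea: learn a traffic baseline, flag traffic far above it, and raise alerts when an attack starts and stops. It uses an exponentially weighted moving average (EWMA) as the baseline, which is a swapped-in technique, and the smoothing factor, the threshold multiplier, and the sample traffic values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AdaptiveBaseline:
    """Illustrative traffic profile learned with an EWMA (not Azure's actual algorithm)."""

    alpha: float = 0.2        # hypothetical smoothing factor: how fast the profile adapts
    multiplier: float = 3.0   # hypothetical: traffic above multiplier * baseline looks like an attack
    baseline: Optional[float] = None
    under_attack: bool = False
    events: list[tuple[int, str]] = field(default_factory=list)

    def observe(self, minute: int, packets_per_sec: float) -> None:
        if self.baseline is None:
            self.baseline = packets_per_sec  # the first sample seeds the profile
            return

        anomalous = packets_per_sec > self.multiplier * self.baseline
        if anomalous and not self.under_attack:
            self.under_attack = True
            self.events.append((minute, "attack started"))
        elif not anomalous and self.under_attack:
            self.under_attack = False
            self.events.append((minute, "attack stopped"))

        # Learn only from normal traffic so a sustained attack isn't absorbed into the profile.
        if not anomalous:
            self.baseline = self.alpha * packets_per_sec + (1 - self.alpha) * self.baseline


profile = AdaptiveBaseline()
for minute, pps in enumerate([1000, 1100, 950, 1050, 9000, 12000, 11000, 1000, 980]):
    profile.observe(minute, pps)
print(profile.events)  # [(4, 'attack started'), (7, 'attack stopped')]
```

Refusing to update the baseline while traffic is anomalous is one simple way to keep attack traffic from being learned as "normal."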

Microsoft Defender for Cloud

Azure's endpoint protection feature has been integrated into a tool called Microsoft Defender for Cloud, which provides antimalware protection to Azure VMs in three primary ways:

- Real-time protection: In this mode, endpoint protection acts like any other antimalware solution, alerting you when you attempt to download software that might be malware or when you attempt to change important Windows security settings.

- Automatic and manual scanning: Endpoint protection comes with an automatic scanning feature that alerts you to any malware detected on your VMs. This feature can be turned on or off at your discretion.

- Detection/remediation: For severe threats, some actions will automatically be taken in an attempt to remove the malware and to protect your VMs from a potential or further infection. It can also reset some Windows settings to more secure settings.

Defender for Cloud also generates a secure score for all of your subscriptions, based on its assessment of your connected resources against the Azure Security Benchmark. The secure score shows at a glance how strong your security posture is, and Defender for Cloud also provides a regulatory compliance dashboard that lets you review your compliance against the built-in benchmark. Using the enhanced features, you can customize the standards used to assess compliance and add other regulations that your organization is subject to, such as NIST, the Center for Internet Security (CIS) benchmarks, or other organization-specific security requirements.
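As a rough illustration of how a secure score can be computed, the Python sketch below follows the model Microsoft has documented for Defender for Cloud: each security control has a maximum score, a control earns that maximum in proportion to its share of healthy resources, and the overall score is the sum of current scores divided by the sum of maximum scores. The control names, point values, and resource counts are illustrative, and skipping controls with no assessed resources is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class SecurityControl:
    name: str
    max_score: float
    healthy: int
    unhealthy: int

    @property
    def current_score(self) -> float:
        total = self.healthy + self.unhealthy
        if total == 0:
            return 0.0
        # Each assessed resource is worth an equal share of the control's maximum score.
        return self.max_score * self.healthy / total


def secure_score_percent(controls: list[SecurityControl]) -> float:
    # Assumption: controls with no assessed resources are left out of the calculation.
    assessed = [c for c in controls if c.healthy + c.unhealthy > 0]
    max_total = sum(c.max_score for c in assessed)
    if max_total == 0:
        return 0.0
    return 100 * sum(c.current_score for c in assessed) / max_total


controls = [
    SecurityControl("Enable MFA", max_score=10, healthy=3, unhealthy=1),              # 7.5 points
    SecurityControl("Encrypt data in transit", max_score=4, healthy=2, unhealthy=2),  # 2.0 points
    SecurityControl("Apply system updates", max_score=6, healthy=6, unhealthy=0),     # 6.0 points
]
print(f"{secure_score_percent(controls):.1f}%")  # 77.5%
```

Because every resource counts toward its control, fixing the one unhealthy resource under a high-value control raises the score more than fixing several under a low-value one.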

Defender for Cloud also gives you hardening recommendations based on the security misconfigurations and weaknesses that it has found. You can use these recommendations to improve your organization's overall security.

Azure Container Registry

A container is a form of operating system virtualization. Think of a container as a package of software components: it houses all of the executables, code, libraries, and configuration files needed to run an application. However, a container doesn't include a full operating system; it shares the host's operating system kernel, which makes it lightweight and portable, with less overhead than a VM. To support larger application deployments, you typically combine multiple containers and deploy them as one or more container clusters.

Azure Container Registry is a service for building, storing, and managing container images and their related artifacts, and it makes creating containers quick and easy. When it comes to security, Azure Container Registry supports a set of built-in Azure roles. These roles let you use RBAC to grant specific permission levels to users, service principals, or other identities that need to interact with the images in a particular registry or with the service itself. You can also create custom roles with a unique set of permissions. Here are some specific features of Azure Container Registry:

- Registry service tiers: This feature allows you to create one or more container registries in your Azure subscription and comes in three tiers: Basic, Standard, and Premium. It provides local, network-close storage of your container images by creating registries in the same Azure location as your deployments.

- Security and access: You can log into a registry via the Azure CLI or the standard Docker login command. To ensure good security, Azure Container Registry transfers container images over HTTPS and supports TLS to provide secure client connections. When it comes to access control for container registries, you can use an Azure identity, an Azure Active Directory–backed service principal, or a provided admin account. Use Azure role-based access control (RBAC) to assign users or systems fine-grained permissions to a given registry.

- Content trust feature: In the Premium tier, you can use the content trust feature, which lets image publishers sign the images they push so that consumers can verify the source and integrity of those images and confirm that they haven't been improperly modified. You can also integrate Defender for Cloud to scan images whenever they are pushed to a registry, helping to ensure that they are safe before use.

- Supported images and artifacts: Azure container registries can hold both Windows and Linux images. You can use Docker commands to push images to, or pull images from, a repository.

- Automated image builds: Azure allows you to automate many of the tasks related to building containers. You can use Azure Container Registry Tasks to automate building, testing, pushing, and deploying images in Azure. A task is made up of task steps, which define the individual container image builds and other operations to perform.
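The fine-grained permissions described above can be pictured as a lookup from role to allowed registry actions. This Python sketch models only three built-in roles, using the permissions Microsoft documents for them (AcrPull can pull images, AcrPush can push and pull, AcrDelete can delete image data). It is a simplified illustration of an RBAC check, not how Azure actually evaluates role assignments, and the identity names are hypothetical.

```python
from enum import Enum, auto


class RegistryAction(Enum):
    PULL = auto()
    PUSH = auto()
    DELETE = auto()


# Simplified permission sets for three built-in Azure Container Registry roles.
ROLE_PERMISSIONS: dict[str, set[RegistryAction]] = {
    "AcrPull": {RegistryAction.PULL},
    "AcrPush": {RegistryAction.PULL, RegistryAction.PUSH},
    "AcrDelete": {RegistryAction.DELETE},
}


def is_allowed(assigned_roles: set[str], action: RegistryAction) -> bool:
    """Return True if any role assigned to the identity grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in assigned_roles)


# Hypothetical role assignments on a single registry.
assignments = {
    "build-pipeline": {"AcrPush"},
    "web-app-identity": {"AcrPull"},
}

print(is_allowed(assignments["build-pipeline"], RegistryAction.PUSH))    # True
print(is_allowed(assignments["web-app-identity"], RegistryAction.PUSH))  # False
print(is_allowed(assignments["web-app-identity"], RegistryAction.PULL))  # True
```

Giving the application identity only AcrPull follows the principle of least privilege: it can run images from the registry but can't overwrite them.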

Azure App Service

Azure App Service lets you quickly and easily build enterprise-grade web and mobile applications for any platform or device and then deploy them on reliable cloud infrastructure. An Azure App Service Environment (ASE) gives you an isolated, dedicated hosting environment in which to run your functions and web applications. There are two ways to deploy an ASE: with an external IP address (External ASE) or with an internal IP address behind an internal load balancer (ILB ASE). Together, these options allow you to host both public and private applications in the cloud. It's important that you understand the following security features that Azure App Service offers for securing your cloud-hosted applications:

- App Service Diagnostics: This interactive tool helps you troubleshoot your application and requires no configuration. When you run into application issues, you can use App Service Diagnostics to identify what went wrong and to troubleshoot and resolve the issue.

- Integration with Azure Monitor and Application Insights: This feature allows you to view the application's performance and health to make quick decisions about your application.

- Azure Autoscale and Azure Front Door: These features allow your application to scale automatically in response to traffic load (Autoscale) and to route and load-balance incoming traffic (Front Door).

- Azure Content Delivery Network: This feature reduces latency to your applications by moving your content assets closer to your customers.

- Azure Web Application Firewall: Azure App Service integrates with Azure Web Application Firewall to protect your applications against common web-based attacks.
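Autoscale is configured through Azure settings rather than code, but the logic of a typical metric-based rule is easy to sketch. The Python function below assumes a hypothetical rule set: add one instance when average CPU over the evaluation window exceeds 70 percent, remove one when it drops below 30 percent, and always stay between 2 and 10 instances. These thresholds and limits are illustrative, not Azure defaults.

```python
from statistics import mean


def desired_instance_count(
    current: int,
    cpu_samples: list[float],
    minimum: int = 2,
    maximum: int = 10,
    scale_out_above: float = 70.0,
    scale_in_below: float = 30.0,
) -> int:
    """Hypothetical scale-out/scale-in rule evaluated on average CPU over a time window."""
    average_cpu = mean(cpu_samples)
    if average_cpu > scale_out_above:
        target = current + 1  # scale out by one instance
    elif average_cpu < scale_in_below:
        target = current - 1  # scale in by one instance
    else:
        target = current
    # Never leave the configured instance limits.
    return max(minimum, min(maximum, target))


print(desired_instance_count(3, [80, 85, 90]))  # 4
print(desired_instance_count(10, [95, 99]))     # 10 (already at the maximum)
print(desired_instance_count(2, [10, 5]))       # 2 (already at the minimum)
```

The minimum keeps the app available during quiet periods, and the maximum caps cost if traffic spikes.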

Chapter 4, “Managing Security Operations”

This section focuses on the tools that automate and manage security operations in Azure. These tools help you to monitor and enforce your security standards in your organization.

Azure Policy

Azure Policy is a tool that helps enforce your organization's standards and ensures the compliance of your Azure resources. A policy lets you define a set of properties that your cloud resources should have; Azure Policy then compares those properties to your resources' actual properties to identify any that are noncompliant. You write these rules in JavaScript Object Notation (JSON) format, in what is known as a policy definition. You can assign policy definitions to any set of resources that Azure supports. The rules can use functions, parameters, logical operators, conditions, and property aliases to match the exact standards you want for your organization.

You can also use policy definitions to control the response when a resource is evaluated as noncompliant. For example, if a user wants to make a change to a resource that would make it noncompliant, you have multiple options: you can deny the requested change, you can log the attempted or successful change to that resource, or you can alter the resource before or after the change occurs, among other options. You choose the response by specifying what's called an effect in the policies you create.
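To show how an if/then policy rule and its effect fit together, here is a Python sketch of a tiny evaluator. The rule mirrors the shape of the JSON a policy definition uses, and the allOf, anyOf, not, field, equals, notEquals, in, and notIn keywords come from the Azure Policy definition language. However, this evaluator supports only that small subset, compares values exactly, and ignores aliases, functions, and parameters; the allowed locations are hypothetical.

```python
from typing import Any

# A rule in the same if/then shape Azure Policy uses, written as a Python dict.
# Hypothetical allowed locations; real definitions usually take them as parameters.
ALLOWED_LOCATIONS_RULE = {
    "if": {"not": {"field": "location", "in": ["eastus", "westeurope"]}},
    "then": {"effect": "deny"},
}


def condition_matches(condition: dict[str, Any], resource: dict[str, Any]) -> bool:
    """Evaluate a small subset of policy conditions against a resource's properties."""
    if "allOf" in condition:
        return all(condition_matches(c, resource) for c in condition["allOf"])
    if "anyOf" in condition:
        return any(condition_matches(c, resource) for c in condition["anyOf"])
    if "not" in condition:
        return not condition_matches(condition["not"], resource)

    value = resource.get(condition["field"])
    if "equals" in condition:
        return value == condition["equals"]
    if "notEquals" in condition:
        return value != condition["notEquals"]
    if "in" in condition:
        return value in condition["in"]
    if "notIn" in condition:
        return value not in condition["notIn"]
    raise ValueError(f"Unsupported condition: {condition}")


def evaluate(rule: dict[str, Any], resource: dict[str, Any]) -> str:
    """Return the rule's effect if the resource matches the 'if' block, else 'compliant'."""
    if condition_matches(rule["if"], resource):
        return rule["then"]["effect"]
    return "compliant"


print(evaluate(ALLOWED_LOCATIONS_RULE, {"name": "vm1", "location": "eastus"}))       # compliant
print(evaluate(ALLOWED_LOCATIONS_RULE, {"name": "vm2", "location": "brazilsouth"}))  # deny
```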

You can create policies from scratch, or you can use Azure's built-in policies, which are available by default:

- Allowed Storage Account SKUs (Deny): This policy determines whether a storage account being deployed is within a predetermined set of stock keeping unit (SKU) sizes that's defined by your organization. It will deny all storage accounts that do not meet the set of defined SKU sizes.

- Allowed Resource Type (Deny): This policy defines the resource types that you are allowed to deploy. Any resources that aren't on this list will be denied.

- Allowed Locations (Deny): This policy allows you to restrict the location that your organization can select when deploying new resources and allows you to enforce geo-compliance for all new resources.

- Allowed Virtual Machine (VM) SKUs (Deny): This policy allows you to specify a set of VM SKUs that you are able to deploy in your organization. All others will be blocked.

- Add a Tag to Resources (Modify): This policy adds a required tag and its value to any resource that's created.

- Not Allowed Resource Types (Deny): This policy prevents a specified list of resource types from being deployed.

Creating a Custom Security Policy

Azure Policy lets you create, assign, and manage the policies that control or audit your cloud resources. You can use it to create individual policies, or you can create initiatives, which are groups of individual policies. There are three steps to implementing a policy in Azure Policy:

- Create a policy definition. A policy definition defines what you want your policy to evaluate and what action it should take on each resource to which it is assigned. For example, if you want to prevent VMs from being deployed in certain Azure regions, you create a policy that denies VM deployments in those regions.

- Assign the definition to your target resources. Next, you assign the policy definition to the resources that you want it to affect. This step, referred to as a policy assignment, applies a policy definition to a specified scope, such as a management group, subscription, or resource group. For example, you may want the policy to affect all VMs in a particular subscription. By default, policy assignments are inherited by all child resources within the scope, so any new resources created within that scope are automatically subject to the same assignment.

- Review the evaluation results. When a condition is evaluated against a resource, the resource is marked as either compliant or noncompliant. You can then review the results and decide what action needs to be taken. Resources are evaluated when they are created or updated, and existing assignments are reevaluated on a standard compliance cycle of roughly once every 24 hours.
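Assignment inheritance follows the hierarchy visible in an Azure resource ID, which has the form /subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/{namespace}/{type}/{name}. The Python sketch below treats a resource as in scope when its ID equals the assignment scope or sits beneath it, and it also models excluded scopes (Azure Policy assignments support exclusions). It ignores management groups, whose membership can't be read from a resource ID, and the IDs used are hypothetical.

```python
def _normalize(scope: str) -> str:
    # Compare IDs case-insensitively and ignore any trailing slash.
    return scope.rstrip("/").lower()


def is_in_scope(resource_id: str, scope: str) -> bool:
    """True if resource_id is the scope itself or anything beneath it."""
    rid, sc = _normalize(resource_id), _normalize(scope)
    return rid == sc or rid.startswith(sc + "/")


def assignment_applies(resource_id: str, scope: str, excluded_scopes: tuple[str, ...] = ()) -> bool:
    """Child resources inherit the assignment unless they sit under an excluded scope."""
    if not is_in_scope(resource_id, scope):
        return False
    return not any(is_in_scope(resource_id, excluded) for excluded in excluded_scopes)


vm_id = "/subscriptions/1111/resourceGroups/rg-prod/providers/Microsoft.Compute/virtualMachines/web01"
print(assignment_applies(vm_id, "/subscriptions/1111"))                         # True: inherited
print(assignment_applies(vm_id, "/subscriptions/1111/resourceGroups/rg-test"))  # False: other group
print(assignment_applies(vm_id, "/subscriptions/1111",
                         ("/subscriptions/1111/resourceGroups/rg-prod",)))      # False: excluded
```

Appending "/" before the prefix check prevents a scope such as rg-prod from accidentally matching a sibling group named rg-prod2.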

Effects in Azure Policy

Every policy definition in Azure Policy has an effect, which determines what happens when the policy rule is evaluated and matches a resource. The effect applies whether the resource is being created, is being updated, or already exists. Here are the effect types you can use in Azure Policy:

- Append: This effect is used to add extra fields to a resource during its creation or update. For example, let's say that you have a policy for all the storage resources in your environment. You can add an append effect that will specify what IP addresses are allowed to communicate with your storage resources, and it will be applied during the creation of any storage resource.

- Audit: This effect is used to create a warning event, which is stored in the activity logs when your policy evaluates a noncompliant resource. Note, however, that an audit will not stop the request. For example, if you have a policy that states all VMs must have the latest software patch applied and a VM exists that doesn't have that patch applied when the policy is evaluated, then it will create an event in the activity log that details this information.

- AuditIfNotExists: This effect audits resources that are related to the resource matching the policy's if condition. If the related resource doesn't have the properties specified in the then condition, or doesn't exist at all, an audit event is logged. For example, you can use this effect to check whether each VM has an antimalware extension installed and log an audit event for every VM on which the extension is missing.

- Deny: As the name suggests, this effect allows you to prevent a resource create/update request from being fulfilled if it doesn't match the standards outlined in the policy definition that you created.

- DeployIfNotExists: This effect executes a template deployment when a certain condition is met; typically, that condition is the absence of a property or related resource. For example, you may have a policy that evaluates SQL Server databases to see whether Transparent Data Encryption (transparentDataEncryption) is enabled. If it isn't, a deployment based on a predetermined template is executed to enable it.

- Disabled: This effect turns off evaluation of the policy rule. It's useful for testing, or when the policy definition parameterizes its effect, because it lets you disable a single assignment rather than every assignment of that policy definition.

- Modify: This effect is used to add, update, or remove properties or tags on a subscription or resource during its creation or update. Existing resources can be remediated with a remediation task. For example, you might want to add a tag with the value “test” to all VMs that are created for testing purposes so that they don't get confused with production VMs.
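The difference between AuditIfNotExists and DeployIfNotExists comes down to what happens when a related resource is missing. The Python sketch below uses the antimalware example from the AuditIfNotExists description: one function only reports VMs that lack the extension, while the other simulates a remediation deployment by adding it. The Vm class, the extension name, and the in-memory remediation are hypothetical stand-ins for real resources and template deployments.

```python
from dataclasses import dataclass, field


@dataclass
class Vm:
    name: str
    extensions: list[str] = field(default_factory=list)  # names of installed VM extensions


REQUIRED_EXTENSION = "antimalware"  # hypothetical extension name


def audit_if_not_exists(vms: list[Vm]) -> list[str]:
    """Report each VM whose related resource (the extension) is missing; change nothing."""
    return [f"audit: {vm.name} is missing {REQUIRED_EXTENSION}"
            for vm in vms if REQUIRED_EXTENSION not in vm.extensions]


def deploy_if_not_exists(vms: list[Vm]) -> list[str]:
    """Remediate each VM that is missing the extension and return the names fixed.
    In Azure this step would be a template deployment; here it is simulated in memory."""
    remediated = []
    for vm in vms:
        if REQUIRED_EXTENSION not in vm.extensions:
            vm.extensions.append(REQUIRED_EXTENSION)
            remediated.append(vm.name)
    return remediated


fleet = [Vm("web01", ["antimalware"]), Vm("db01")]
print(audit_if_not_exists(fleet))   # ['audit: db01 is missing antimalware']
print(deploy_if_not_exists(fleet))  # ['db01']
print(audit_if_not_exists(fleet))   # [] once remediation has run
```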

Order of Evaluation

When a request to create or update a resource is evaluated by multiple policy definitions, Azure Policy evaluates their effects in a set order, as follows:

- Disabled: This effect is checked first to determine if a policy rule should be evaluated.

- Append and Modify: These effects are evaluated next. Because either effect can alter the request, a change made here may prevent a Deny or Audit effect from triggering.

- Deny: Deny is evaluated next. Evaluating Deny before Audit prevents an undesired resource from being logged twice.

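- Audit: Audit is evaluated last, before the request is passed to the resource provider. The AuditIfNotExists and DeployIfNotExists effects are evaluated only after the resource provider reports that the request succeeded.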
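The ordering matters because earlier effects can change what later ones see. This Python sketch processes the pre-request effects (Disabled, Append/Modify, Deny, Audit) in the order described above, re-checking each rule against the possibly modified request. The policy names, conditions, and request fields are hypothetical, and real policies are JSON definitions evaluated by Azure, not Python callables.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Lower rank = evaluated earlier, matching the order of evaluation above.
EFFECT_RANK = {"disabled": 0, "append": 1, "modify": 1, "deny": 2, "audit": 3}


@dataclass
class Policy:
    name: str
    effect: str
    matches: Callable[[dict], bool]                  # stands in for the policy's "if" condition
    change: Optional[Callable[[dict], None]] = None  # used only by append/modify


def process_request(request: dict, policies: list[Policy]) -> tuple[bool, list[str]]:
    """Return (allowed, activity_log) for a create or update request."""
    log: list[str] = []
    for policy in sorted(policies, key=lambda p: EFFECT_RANK[p.effect]):
        if policy.effect == "disabled" or not policy.matches(request):
            continue  # disabled rules are skipped without being evaluated
        if policy.effect in ("append", "modify") and policy.change:
            policy.change(request)  # may make later Deny/Audit rules stop matching
        elif policy.effect == "deny":
            log.append(f"denied by {policy.name}")
            return False, log  # rejected before any Audit entry is written
        elif policy.effect == "audit":
            log.append(f"audited by {policy.name}")
    return True, log


def add_environment_tag(request: dict) -> None:
    request.setdefault("tags", {})["environment"] = "test"


def missing_tag(request: dict) -> bool:
    return "environment" not in request.get("tags", {})


policies = [
    Policy("require-environment-tag", "deny", missing_tag),
    Policy("audit-public-ip", "audit", lambda r: r.get("publicIp", False)),
    Policy("add-environment-tag", "modify", missing_tag, add_environment_tag),
]
print(process_request({"name": "test-vm", "publicIp": True}, policies))
# (True, ['audited by audit-public-ip']): Modify added the tag, so Deny never triggered
```

Without the Modify policy, the same request would be rejected by Deny and never reach the Audit rule, which is the double logging that this order avoids.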

Microsoft Threat Modeling Tool

Threat modeling is the process of identifying risks and threats that are likely to affect an organization. Microsoft has created its own threat modeling tool to allow for the easy creation of threat-modeling diagrams. This tool helps you plan your countermeasures and security controls to mitigate threats. When you are threat modeling, you need to consider multiple elements in order to obtain a good overview of your company's complete threat landscape, which consists of all threats pertaining to your organization.

Elements of Threat Modeling

There are three primary elements of threat modeling: threat actors, threat vectors, and the countermeasures you plan to use.

Threat Actors

The first element you must identify as part of your threat modeling process is the threat actors who will be targeting your organization. A threat actor is a state, group, or individual who has malicious intent. In the cybersecurity field, malicious intent usually means a threat actor is seeking to target private corporations or governments with cyberattacks for financial, military, or political gain. Threat actors are most commonly categorized by their motivations, and to some extent, their level of sophistication. Here are some of the most common types of threat actors:

- Nation-State: Nation-state threat actors are groups with government backing. They are typically the most advanced threat actors, with large amounts of resources provided by their governments; they have relationships with private sector companies and may leverage organized crime groups to accomplish their goals. They are likely to target companies that provide services to the government or that provide critical services, such as financial institutions and critical infrastructure businesses. Their goal might be to obtain information on behalf of the government or crime syndicate backing them, to disable their target's infrastructure, or, in some cases, to seek financial gain.

- Organized Crime Groups/Cybercriminals: These threat actors are organized crime groups or hackers, working together or individually, who commit cybercrimes for financial gain. One of the most common attack types this group performs is ransomware, where they hope to obtain a big payout from the company they are attacking. Generally, they will target any company with data that can be stolen or resold, as well as companies with enough money to pay a high ransom to retrieve their data.

- Hacktivist: A hacktivist is someone who breaks into a computer system for a political or social purpose. Hacktivists disrupt services to bring attention to their cause and typically don't target individual civilians or businesses for financial gain. Their main goal is to instigate some type of social change, and they target companies whose actions go against what they believe is the correct conduct.

- Thrill Seekers: This type of threat actor simply hacks for the thrill of it. While they don't intend to do any damage, they will hack into any business that piques their interest with the goal of testing their skills or gaining notoriety.

- Script Kiddies: These individuals are the lowest level of hackers. They don't have much technical expertise and rely primarily on prewritten hacking tools to perform attacks. Because they cannot target companies with customized exploits, they typically target only companies with vulnerabilities that can be easily detected by outside scans. Since they can't customize or create hacking tools of their own, script kiddies can usually be defended against simply by keeping systems patched and following standard information security best practices.

- Insider Threats: These individuals work for the targeted company and are usually disgruntled employees seeking revenge or profit. They can also be associated with any of the groups previously mentioned, acting as an insider by providing company information and giving the group access to the company's network from within.

Threat Vectors

A threat vector is the path or means by which a threat actor gains access to a computer by exploiting a certain vulnerability. The total number of attack vectors that an attacker can use to compromise a network or computer system or to extract data is called your company's attack surface. When threat modeling, your goal is to identify as many of your threat vectors as possible, and then to implement security controls to prevent these attackers from being able to exploit those threat vectors. Here are some common examples of threat vectors:

- Compromised Credentials: These include stolen or lost usernames, passwords, access keys, and so forth. Once attackers obtain these credentials, they can use them to gain access to company accounts and, therefore, the company network.

- Weak Credentials: These are easily guessable or weak passwords that attackers can discover by brute force or crack using software.

- Malicious Insiders: These are disgruntled or malicious employees who may expose information about a company's specific vulnerabilities.

- Missing or Poor Encryption: Missing or poor encryption can allow attackers to eavesdrop on a company's electronic communications and gain unauthorized access to sensitive information.

- Misconfiguration: Incorrect configurations give users access that they should not have, or they create security vulnerabilities that shouldn't exist. For example, if insecure services are running on your Internet-facing machines, a threat actor may discover them by scanning the machines and then exploit them.

- Phishing Emails: Most malware is spread via email attachments in phishing emails. Such emails continue to be one of the most popular threat vectors hackers use to get malware onto corporate machines.

- Unpatched Systems: Unpatched systems are one of the biggest entry points for attackers into an organization. Most cyberattacks do not exploit zero-day vulnerabilities; most of the vulnerabilities exploited are old and well known. Most often, hackers exploit machines with vulnerabilities that haven't yet been patched.

- Poor Input Validation: Poor input validation allows attackers to perform many injection-based attacks, such as cross-site scripting (XSS) and SQL injection. This type of vulnerability is commonly exploited in web applications and websites.

- Third- and Fourth-Party Vendors: Third- and fourth-party vendors play a big part in your company's security posture. Vendors typically have trusted relationships with your company: you open up aspects of your network to them, share information and client data with them, and use their products in your business. Attackers can take advantage of this trust relationship to gain access to your company's internal environment. The SolarWinds attack disclosed in December 2020 is a good example. Hackers broke into SolarWinds and placed malware into a software update that the company pushed out to its clients. Because the clients already trusted SolarWinds, they installed the update without performing any additional security checks, which resulted in hundreds of clients being infected with malware.
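To make the poor input validation vector concrete, the Python sketch below uses the standard library's sqlite3 module and a hypothetical users table. The first function builds its query by pasting user input into the SQL text, so the classic ' OR '1'='1 payload turns the WHERE clause into a condition that is always true; the second passes the input as a bound parameter, so the database treats it purely as data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "reader")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # VULNERABLE: the input becomes part of the SQL statement itself.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized query: the ? placeholder keeps the input out of the SQL text.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


conn = make_db()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row in the table
print(find_user_safe(conn, payload))    # []
```

Parameterized queries don't replace input validation (for example, rejecting names that don't match an expected pattern), but they remove the most common path to SQL injection.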

Threat Surface

Your cyberthreat surface consists of all the endpoints that could be exploited to give an attacker access to your company's network. Any device that is connected to the Internet, such as smartphones, laptops, workstations, and even printers, is a potential entry point to your network and is part of your company's overall threat surface. It's important to map out your threat surface so that you understand what needs to be protected to prevent your business from being hacked. To map out this threat surface, it's extremely important that you have a complete inventory of all of your company's digital assets.

Countermeasures

Now that you have identified your threat surface, the most relevant threat actors for your business, and the threat vectors they will likely use, you can start planning your appropriate attack countermeasures. Countermeasures consist of a wide range of redundant security controls you can use to ensure that you have defense-in-depth coverage. Defense-in-depth simply means that every important network resource is protected by multiple controls so that no single control failure leaves the resource exposed. The key here is not only to have multiple layers of controls, but to also ensure that you use all the appropriate categories and multiple types of security controls to defend your company against attacks.
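A little arithmetic shows why layering helps. If each layer fails independently (a strong assumption, since one misconfiguration can disable several controls at once), the chance that a resource is exposed is the product of the individual failure probabilities. The probabilities in this Python sketch are made up for illustration.

```python
from math import prod


def exposure_probability(layer_failure_probs: list[float]) -> float:
    """Chance that every layer fails at once, assuming independent failures."""
    return prod(layer_failure_probs)


print(exposure_probability([0.1]))            # 0.1: a single control
print(exposure_probability([0.1, 0.1, 0.1]))  # about 0.001: three independent layers
```

When failures are correlated, the real exposure sits somewhere between the single-layer figure and this product, which is why the text stresses mixing different categories and types of controls.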

Control Categories

You must ensure that you have coverage for all of the following control categories so that your company is properly protected:

- Physical Controls: These controls include all tangible/physical devices used to prevent or detect unauthorized access to company assets. They include fences, surveillance cameras, guard dogs, and physical locks and entrances.

- Technical Controls: These controls include hardware and software mechanisms used to protect assets from nontangible threats. They include the use of encryption, firewalls, antivirus software, and intrusion detection systems (IDSs).

- Administrative Controls: These controls are the policies, procedures, and guidelines that define company practices in accordance with the company's security objectives. Common examples of administrative controls are employee hiring and termination procedures, equipment and Internet usage policies, physical access to facilities, and separation of duties.

Control Types

In addition to having coverage for all the control categories to protect your company, you must ensure that you have coverage for all the following control types:

- Preventive Controls: A preventive security control is what you use to prevent a malicious action from happening. It is typically the first type of control you want, and when working correctly, it provides the most effective overall protection. Preventive controls exist in all of the control categories. Here are some examples, along with their control category:

  - Computer Firewalls (Technical): A firewall is a hardware or software device that filters network traffic and prevents unauthorized access to your computer systems.

  - Antivirus (Technical): Antivirus programs prevent, detect, and remove malware from your organization's computer systems.

  - Security Guards (Physical): Security guards (i.e., people) are typically assigned to specific areas and are responsible for ensuring that people do not enter restricted areas unless they can prove they have a right to be there.

  - Locks (Physical): Locks refer to any physical lock on a door that prevents individuals from entering without the proper key.

  - Hiring and Termination Policies (Administrative): During the hiring process, hiring policies such as background checks help prevent people with a history of bad behavior (e.g., sexual violence) from being hired by a company. Termination policies define how managers can remove people who are causing problems for the company.

  - Separation of Duties (Administrative): Separation of duties is a company policy that requires more than one person to complete any given critical task. It helps prevent fraud because every such process requires multiple people to complete, so anyone trying to commit fraud is likely to be noticed by the others involved in carrying out the process.

- Detective Controls: Detective controls are meant to find any malicious activities in your environment that have slipped past your preventive measures. Realistically, you're not going to stop every attack against your company before it occurs, so you must have a way to discover when something has failed in order to be able to correct it. Here are some examples, along with their control category:

  - Intrusion Detection Systems (Technical): Intrusion detection systems monitor a company's network for signs of malicious activity and send out alerts whenever abnormal activity is found.

  - Logs and Audit Trails (Technical): Logs and audit trails are records of activity on a network or computer system. By reviewing these logs or trails, you can discover whether malicious activity occurred on the network or computer system.

  - Video Surveillance (Physical): Video surveillance involves setting up cameras to record important areas of a company and having security staff monitor those video feeds to see whether anyone who isn't supposed to be there has gained access.

  - Enforced Staff Vacations (Administrative): Enforced staff vacations help detect fraud by requiring individuals to step away from their work while someone else picks it up in their absence. If someone has been taking part in fraudulent activity, it is likely to become apparent to the person covering their work.

  - Review Access Rights Policies (Administrative): By periodically reviewing individuals' access rights, you can see who has access to resources they shouldn't, and you can review who has been accessing those resources.

- Deterrent Controls: Deterrent controls attempt to discourage people from performing activities that are harmful to your company. By incorporating deterrent controls, your company will have fewer threats to deal with, because it becomes harder to perform the fraudulent action and the consequences of getting caught are well known. Here are some examples, along with their control category:

  - Guard Dogs (Physical): Having guard dogs patrol the company property can intimidate potential trespassers and help deter crime.

  - Warning Signs (Physical): Advertising that your property is under video surveillance and has security alarms deters people from trying to break in.

  - Pop-up Messages (Technical): By displaying messages on users' computers or corporate home pages, your company can warn people against certain bad behaviors (e.g., no watching porn on a company laptop).

  - Firewalls (Technical): You may have experienced this if you have tried to browse to certain sites on a corporate laptop: the firewall blocks the request, and a warning message pops up stating that those sites are not permitted on the laptop. These messages help deter people from trying to browse those sites on company laptops.

  - Advertise Monitoring (Administrative): Many companies make it known that administrative account activities are logged and reviewed, which helps deter people from using those accounts with malicious intent.

  - Employee Onboarding (Administrative): During employee onboarding, the company's representative can highlight the penalties for misconduct in the workplace, which helps deter employees from engaging in malicious behavior.

- Recovery Controls: Recovery controls try to return your company's systems to a normal state following a security incident. Here are some examples of recovery controls, along with their control categories:

  - Reissue Access Cards (Physical): In the event of a lost or stolen access card, the card needs to be deactivated and a new access card reissued.

  - Repair Physical Damage (Physical): In the event of a damaged door, fence, or lock, you need a process in place for repairing it quickly.

  - Perform System and Data Backups (Technical): Your company should perform regular backups of important data and have a process in place for quickly restoring the last known good backup in the event of a security incident.

  - Implement Patching (Technical): When a vulnerability is discovered that places your company at risk, you should have a process in place for quickly patching your company's systems to return your company's network to a “secure state.”

  - Develop a Disaster Recovery Plan (Administrative): A disaster recovery plan outlines how to return your company to a normal state of operations following a natural or human-made disaster (e.g., a fire or earthquake). It includes instructions on repairing both the physical buildings and the company's computing network.

  - Develop an Incident Response Plan (Administrative): An incident response plan outlines the steps you can take to return to normal business operations following a cybersecurity breach.
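Because the goal is coverage across every category and type, a simple inventory check can reveal gaps. The Python sketch below crosses the three control categories with the four control types and lists any combination that has no control; the inventory itself is hypothetical.

```python
from itertools import product

CATEGORIES = ("Physical", "Technical", "Administrative")
TYPES = ("Preventive", "Detective", "Deterrent", "Recovery")

# A hypothetical control inventory: (control, category, type).
inventory = [
    ("Firewall", "Technical", "Preventive"),
    ("Door locks", "Physical", "Preventive"),
    ("Separation of duties", "Administrative", "Preventive"),
    ("Intrusion detection system", "Technical", "Detective"),
    ("Video surveillance", "Physical", "Detective"),
    ("Warning signs", "Physical", "Deterrent"),
    ("Data backups", "Technical", "Recovery"),
    ("Incident response plan", "Administrative", "Recovery"),
]


def coverage_gaps(controls: list[tuple[str, str, str]]) -> list[tuple[str, str]]:
    """Return every (category, type) pair that has no control in the inventory."""
    covered = {(category, ctype) for _, category, ctype in controls}
    return [pair for pair in product(CATEGORIES, TYPES) if pair not in covered]


print(coverage_gaps(inventory))
# [('Physical', 'Recovery'), ('Technical', 'Deterrent'),
#  ('Administrative', 'Detective'), ('Administrative', 'Deterrent')]
```

Each gap maps to an example from the lists above: reissuing access cards (physical recovery), pop-up messages (technical deterrent), enforced staff vacations (administrative detective), and advertised monitoring (administrative deterrent).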

Microsoft Sentinel

Microsoft Sentinel is a cloud-native security information and event management (SIEM) and security orchestration, automation, and response (SOAR) solution that uses AI to provide advanced threat detection and response based on the information collected across your company's environment. First, let's look at the SIEM aspect of it.

A SIEM is responsible for collecting and analyzing security data from the different systems within a network to discover abnormal behavior and potential cyberattacks. Some common technologies that feed data into a SIEM for analysis are firewalls, endpoint data, antivirus software, applications, and network infrastructure devices. The second aspect of Microsoft Sentinel is that it acts as a SOAR, which is designed to coordinate, execute, and automate tasks between different people and tools within a single platform. For example, using SOAR, you can define an automated playbook that tells the system what actions to take when a certain condition is met: if it suspects a file is malicious, it may automatically quarantine or delete that file. In the following sections, we will look at the features of Microsoft Sentinel, broken down into its SIEM and SOAR features.
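In Microsoft Sentinel, playbooks are built as Azure Logic Apps workflows rather than written as code, so the Python sketch below only models the condition-then-action idea behind SOAR automation. The alert fields, rule conditions, and actions are hypothetical; a real playbook would call your endpoint protection tool to quarantine a file.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    kind: str
    severity: str
    entity: str  # e.g., a file path or a user name


@dataclass
class PlaybookRule:
    condition: Callable[[Alert], bool]
    action: Callable[[Alert], str]


def quarantine_file(alert: Alert) -> str:
    # Hypothetical action; a real playbook would call an endpoint protection API.
    return f"quarantined {alert.entity}"


def open_ticket(alert: Alert) -> str:
    return f"ticket opened for {alert.kind} on {alert.entity}"


PLAYBOOK = (
    PlaybookRule(lambda a: a.kind == "malicious_file" and a.severity == "high", quarantine_file),
    PlaybookRule(lambda a: True, open_ticket),  # fallback: hand everything else to an analyst
)


def run_playbook(alert: Alert, playbook: tuple[PlaybookRule, ...] = PLAYBOOK) -> str:
    """Run the action of the first rule whose condition matches the alert."""
    for rule in playbook:
        if rule.condition(alert):
            return rule.action(alert)
    return "no action"


print(run_playbook(Alert("malicious_file", "high", "/tmp/invoice.exe")))  # quarantined /tmp/invoice.exe
print(run_playbook(Alert("impossible_travel", "medium", "alice")))        # ticket opened for impossible_travel on alice
```

Putting the most specific, highest-confidence rule first lets automation handle clear-cut cases while everything else still reaches a human.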

How Does a SIEM Work?

A SIEM works in the following ways:

- It collects data from different sources: First, you must configure your SIEM to obtain data from all the data sources of interest to you. These sources include network devices, endpoints, domain controllers, and any other device or service that you want to monitor and perform an analysis on.

- It aggregates the data: Once all the devices and services you care about are connected to the SIEM, the SIEM must aggregate and normalize the data coming from all those sources so the data can be analyzed. Aggregation is the process of moving data from different sources into a common repository; think of it as collecting data from all the devices and putting it in one centralized location. Normalization means mapping events from different sources into common categories and formats so that analysis can begin. For example, if you have devices in various time zones, the SIEM can convert all of their timestamps to a single time zone so that a consistent timeline can be created. Finally, the SIEM can perform data enrichment by adding supplemental information, such as geolocations, transaction numbers, or application data, which allows for better analysis and reporting.

- It creates policies and rules: SIEMs allow you to define profiles that specify how a system should behave under normal conditions. Some SIEMs use machine learning to automatically detect anomalies based on this normal behavior. However, you can also manually create rules and thresholds that determine which anomalies are considered a security incident. Then, when the SIEM analyzes incoming data, it can compare that data to your normal profile and to the rules you created to determine whether something is wrong with your systems that requires investigation.

- It analyzes the data: The SIEM looks at the data gathered to determine what has happened among the various data sources, and then it identifies trends and discovers any threats based on the data. In addition, if you create a rule with a threshold, such as five failed login attempts in a row, the SIEM raises an alert when that threshold is reached.

- It assists in the investigation: Once an investigation begins, you can query the data stored in the SIEM to pinpoint certain events of interest. This allows you to trace back through the events to find an incident's root cause and to provide evidence to support your conclusions.
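The collection, normalization, and threshold-rule steps above can be sketched in a few lines. This is a minimal illustration, not how Microsoft Sentinel is implemented: the `Event` shape, the source names `vpn` and `domain_controller`, the `CATEGORY_MAP` vocabulary, and the `failed_login_alerts` helper are all hypothetical. The only real-world detail is that 4624 and 4625 are the Windows Security log event IDs for a successful and a failed logon.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass
class Event:
    source: str
    timestamp: datetime  # normalized to UTC
    user: str
    category: str        # common category shared by all sources

# Hypothetical per-source vocabularies mapped to common categories.
# 4624/4625 are the Windows Security log IDs for successful/failed logons.
CATEGORY_MAP = {
    "vpn": {"AUTH_OK": "login_success", "AUTH_FAIL": "login_failure"},
    "domain_controller": {"4624": "login_success", "4625": "login_failure"},
}

def normalize(source: str, raw: dict) -> Event:
    """Convert one raw record (ISO 8601 time with a UTC offset) into the common Event shape."""
    return Event(
        source=source,
        timestamp=datetime.fromisoformat(raw["time"]).astimezone(timezone.utc),
        user=raw["user"].lower(),  # "Alice" and "alice" become the same identity
        category=CATEGORY_MAP[source][raw["event"]],
    )

def failed_login_alerts(events: list, threshold: int = 5) -> list:
    """Alert when a user's run of failed logins (no success in between) reaches `threshold`."""
    alerts, streak = [], {}
    for event in sorted(events, key=lambda e: e.timestamp):  # one consistent timeline
        if event.category == "login_failure":
            streak[event.user] = streak.get(event.user, 0) + 1
            if streak[event.user] == threshold:
                alerts.append(event.user)
        elif event.category == "login_success":
            streak[event.user] = 0
    return alerts

# Aggregate: three VPN failures logged in UTC+2, two DC failures logged in UTC.
raw_vpn = [{"time": f"2024-05-01T09:00:0{i}+02:00", "user": "Alice", "event": "AUTH_FAIL"} for i in range(3)]
raw_dc = [{"time": f"2024-05-01T07:00:1{i}+00:00", "user": "alice", "event": "4625"} for i in range(2)]
events = [normalize("vpn", r) for r in raw_vpn] + [normalize("domain_controller", r) for r in raw_dc]
alerts = failed_login_alerts(events)
print(alerts)  # ['alice']
```

Neither source alone shows five failures in a row; only after both feeds are converted to UTC, mapped to the same category, and attributed to the same user does the threshold rule fire.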

The next aspect of Microsoft Sentinel is security orchestration, automation, and response (SOAR). SOAR is a combination of software tools that enable your organization to collect data about security threats and to respond to those security events with little or no human intervention.

Security Orchestration

Security orchestration focuses on connecting and integrating different security tools and systems with one another to form one cohesive security operation. Commonly integrated systems include vulnerability scanners, endpoint security solutions, user behavior analytics, firewalls, and intrusion detection and prevention systems (IDS/IPS). Orchestration can also connect external sources, such as a threat intelligence feed. By collecting and analyzing all this information together, you can gain insights that you might have missed by analyzing each source separately. However, as the datasets grow larger, more alerts will be issued, and ultimately more false positives and noise will be created that must be sorted through to get to the useful information.
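To make the "insights you might have missed" point concrete, here is a minimal sketch of correlating three sources by host. The tool outputs, host names, and the rule "escalate only when a vulnerable host also contacted a known-bad IP" are hypothetical; the IP addresses come from the ranges reserved for documentation. Requiring two independent signals is also one simple way to cut down the noise mentioned above.

```python
from collections import defaultdict

# Hypothetical, simplified outputs from three separate tools.
vuln_scanner = [
    {"host": "web-01", "finding": "unpatched web server"},
    {"host": "db-01", "finding": "weak TLS configuration"},
]
firewall_log = [
    {"host": "web-01", "dest_ip": "203.0.113.7"},
    {"host": "app-02", "dest_ip": "198.51.100.20"},
]
threat_intel_feed = {"203.0.113.7"}  # external feed of known-bad IPs (documentation ranges)

def correlate(vulns, connections, bad_ips):
    """Group signals by host and return hosts where independent tools agree."""
    signals = defaultdict(set)
    for v in vulns:
        signals[v["host"]].add("vulnerable")
    for c in connections:
        if c["dest_ip"] in bad_ips:
            signals[c["host"]].add("contacted_known_bad_ip")
    required = {"vulnerable", "contacted_known_bad_ip"}
    return sorted(host for host, s in signals.items() if required <= s)

print(correlate(vuln_scanner, firewall_log, threat_intel_feed))  # ['web-01']
```

The scanner alone flags two hosts and the firewall alone shows an ordinary outbound connection; only the combined view singles out `web-01`.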

Security Automation

The data and alerts collected through security orchestration are then used to create automated processes that replace manual work. Tasks that traditionally had to be performed by analysts, such as vulnerability scanning, log analysis, and ticket checking, can be standardized and performed entirely by a SOAR system. These automated processes are defined in playbooks, which specify the steps each process follows. The SOAR system can also be configured to escalate a security event to humans when needed. As you can imagine, this automation can save your company a significant amount of time and labor costs. Automated processes also tend to be more consistent than manual ones, which leads to fewer mistakes in your security processes.
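A playbook that handles the routine cases automatically and escalates the uncertain ones can be sketched as a simple decision function. This is a hypothetical model, not a Sentinel playbook (those are built differently): the `Alert` fields, the `malware_score` confidence value, and the 0.9 and 0.5 cut-offs are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    file_name: str
    malware_score: float  # hypothetical 0.0-1.0 confidence from a detection engine

def malicious_file_playbook(alert: Alert, quarantine_at: float = 0.9, review_at: float = 0.5) -> list:
    """Return the actions a hypothetical playbook would take for one alert."""
    if alert.malware_score >= quarantine_at:
        # High confidence: act without waiting for a human.
        return [f"quarantine {alert.file_name}", "open ticket"]
    if alert.malware_score >= review_at:
        # Uncertain: escalate to a human analyst instead of acting.
        return [f"escalate {alert.file_name} to analyst"]
    return ["close as benign"]

print(malicious_file_playbook(Alert("invoice.exe", 0.95)))
print(malicious_file_playbook(Alert("report.docm", 0.6)))
```

The escalation branch is what keeps a human in the loop for borderline cases while the clear-cut cases are handled automatically.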

Security Response

As the name suggests, security response is about giving analysts an efficient way to respond to a security event. A SOAR provides a single view in which analysts can plan, manage, monitor, and report on the actions taken once a threat is detected. In addition to providing this single view, a SOAR can respond to potential incidents on an analyst's behalf through automation.

Threat Hunting

Microsoft Sentinel also offers threat-hunting capabilities. Sentinel's threat-hunting search and query tools are based on the MITRE ATT&CK framework, and they enable you to proactively hunt for threats across your Azure environment. You can use Microsoft Sentinel's built-in hunting queries, or you can create your own custom detection rules during threat hunting.
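In Sentinel itself, hunting queries are written in Kusto Query Language (KQL); the Python below only mirrors the logic of one plausible hunt so the idea is easy to follow. The query name, the sign-in record format, and the "more than two countries" threshold are hypothetical. T1078 is the MITRE ATT&CK technique ID for Valid Accounts, which is the kind of technique such a hunt would be tagged with.

```python
from collections import defaultdict

# Hypothetical hunting query metadata, tagged with a MITRE ATT&CK technique.
HUNT = {
    "name": "Successful sign-ins from many countries",
    "mitre_technique": "T1078",  # Valid Accounts
    "max_countries": 2,
}

def hunt_multi_country_signins(signins, max_countries=HUNT["max_countries"]):
    """Return users whose successful sign-ins came from more than `max_countries` countries."""
    countries = defaultdict(set)
    for s in signins:
        if s["result"] == "success":
            countries[s["user"]].add(s["country"])
    return {user: sorted(c) for user, c in countries.items() if len(c) > max_countries}

signins = [
    {"user": "alice", "country": "US", "result": "success"},
    {"user": "alice", "country": "US", "result": "success"},
    {"user": "bob", "country": "US", "result": "success"},
    {"user": "bob", "country": "DE", "result": "success"},
    {"user": "bob", "country": "BR", "result": "success"},
    {"user": "carol", "country": "FR", "result": "failure"},
]
print(hunt_multi_country_signins(signins))  # {'bob': ['BR', 'DE', 'US']}
```

A hunt like this does not prove compromise; it produces a short list of accounts worth investigating, which is the point of proactive hunting.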

How Does Microsoft Sentinel Work?

Microsoft Sentinel ingests data from services and applications by connecting to each service and forwarding the events and logs of interest to itself. To obtain data from physical and virtual machines, you can install the Log Analytics agent to collect the logs and forward them to Microsoft Sentinel. For firewalls and proxies, you need to install the Log Analytics agent on a Linux syslog server; from there, the agent collects the log files and forwards them to Microsoft Sentinel.

Once you have connected all of the data sources you want to Microsoft Sentinel, you can begin using it to detect suspicious behavior. You can do this in two ways: you can either use Microsoft's prebuilt detection rules, or you can create custom detection rules to suit your needs. Microsoft recommends using its prebuilt rules because they are designed to make malicious behavior easy to detect and are regularly updated by Microsoft's security teams. These templates were designed by Microsoft's in-house security experts and analysts and are based on known threats, patterns of suspicious activity, and common attack vectors. You also have the option of customizing them to your liking, which is usually easier than creating a rule from scratch.
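The advice to customize a template rather than start from scratch amounts to "copy the template and override only what differs." The sketch below models that idea with a frozen dataclass; `DetectionRule` and `BRUTE_FORCE_TEMPLATE` are hypothetical stand-ins, not the format Sentinel uses for its rule templates.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class DetectionRule:
    name: str
    query: str       # detection logic; a placeholder description here
    threshold: int
    severity: str
    enabled: bool = True

# Hypothetical template standing in for a Microsoft-provided rule template.
BRUTE_FORCE_TEMPLATE = DetectionRule(
    name="Brute force attempt",
    query="count failed sign-ins per account",
    threshold=10,
    severity="Medium",
)

# Customize: keep the vetted detection logic, tune only what differs for your environment.
custom_rule = replace(
    BRUTE_FORCE_TEMPLATE,
    name="Brute force attempt (tuned)",
    threshold=5,
    severity="High",
)
print(custom_rule)
```

Because the template is frozen, `replace` produces a new rule and the original stays untouched, so you can always compare your tuned version against the baseline.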

Automation

Automated responses in Microsoft Sentinel are facilitated by automation rules. An automation rule is a set of instructions that allows you to perform actions on incidents without the need for human intervention. For example, you can use these rules to automate processes like assigning incidents to certain people, closing noisy incidents and false positives, and changing an incident's severity or adding tags to incidents based on predetermined characteristics. Automation rules also allow you to run playbooks in response to incidents.

A playbook is a set of procedures that can be executed by Sentinel as an automated response to an alert or an incident. Playbooks are used to automate and orchestrate your response and can be configured to run automatically in response to specific alerts or incidents. This automated run is configured by attaching the playbook to an analytics rule or an automation rule. Playbooks can also be triggered manually if need be.
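The automation-rule behaviors described above (assigning an owner, closing noise, raising severity, adding tags, and running a playbook) can be modeled as ordered condition/action pairs. This is a simplified, hypothetical model rather than Sentinel's API: the `Incident` fields, rule conditions, team name, and playbook name are assumptions, and so is the choice to skip later rules once an incident has been closed.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class Incident:
    title: str
    severity: str
    owner: Optional[str] = None
    status: str = "New"
    tags: List[str] = field(default_factory=list)
    playbooks_run: List[str] = field(default_factory=list)

@dataclass
class AutomationRule:
    order: int                             # lower numbers run first
    condition: Callable[[Incident], bool]
    action: Callable[[Incident], None]

def close_as_noise(incident: Incident) -> None:
    incident.status = "Closed"
    incident.tags.append("false-positive")

def escalate_ransomware(incident: Incident) -> None:
    incident.severity = "High"
    incident.owner = "ir-team"                     # hypothetical team name
    incident.playbooks_run.append("isolate-host")  # stands in for triggering a playbook

RULES = [
    AutomationRule(2, lambda i: "ransomware" in i.title.lower(), escalate_ransomware),
    AutomationRule(1, lambda i: i.title.startswith("Test alert"), close_as_noise),
]

def apply_rules(incident: Incident, rules: List[AutomationRule]) -> Incident:
    for rule in sorted(rules, key=lambda r: r.order):
        # Assumption for this sketch: once an incident is closed, later rules skip it.
        if incident.status != "Closed" and rule.condition(incident):
            rule.action(incident)
    return incident

real = apply_rules(Incident("Possible ransomware on host-7", "Medium"), RULES)
noise = apply_rules(Incident("Test alert from new connector", "Low"), RULES)
print(real.severity, real.owner, real.playbooks_run)  # High ir-team ['isolate-host']
print(noise.status, noise.tags)                        # Closed ['false-positive']
```

Keeping conditions and actions separate mirrors the idea that a rule fires on predetermined incident characteristics and then either changes the incident directly or hands off to a playbook.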

Chapter 5, “Securing Data and Applications”

This section focuses on how you can secure your data and applications within the Azure platform. Primarily this refers to database security, using secure data storage, creating data backups, and ensuring proper encryption throughout your environment. Your goal should be to understand all of the different Azure tools that you can use to achieve each of these goals.

Azure Storage Platform

Azure Storage is Microsoft's cloud storage platform. It is designed to offer highly available, scalable, secure, and reliable storage of data objects in the cloud. In Azure, data storage is provided through an Azure Storage account. You can find the complete list of Azure Storage account types at https://docs.microsoft.com/en-us/azure/storage/common/storage-account-overview, but it's important to note that each account type supports one or more Azure Storage data services. These services are as follows:

- Azure Blobs: This is a massively scalable object store for text and binary data.

- Azure Files: These are managed file shares for cloud or on-premises deployments.

- Azure Queues: This is a service for storing large numbers of messages, particularly messages passed between application components.

- Azure Tables: This is a NoSQL store for the storage of structured data.

- Azure Disks: These provide block-level storage volumes for Azure VMs.

Table A.2 contains a breakdown of the different storage accounts that Azure supports.

TABLE A.2 Various Azure-supported storage accounts and their breakdown

| Type of storage account | Supported storage services | Redundancy options | Usage |
|---|---|---|---|
| Standard general-purpose v2 | Blob (including Data Lake Storage), Queue, Table storage, Azure Files | LRS/GRS/RA-GRS/ZRS/GZRS/RA-GZRS | This is the standard storage account type for blobs, file shares, queues, and tables. Use it for the majority of scenarios in Azure Storage. |
| Premium block blobs | Blob storage (including Data Lake Storage) | LRS/ZRS | This is the premium storage account type for block blobs and append blobs. Use it in scenarios with high transaction rates, with smaller objects, or that require consistently low storage latency. |
| Premium file shares | Azure Files | LRS/ZRS | This is the premium storage account type for file shares. Use it for enterprise or high-performance applications. It supports both SMB and NFS file shares. |
| Premium page blobs | Page blobs only | LRS | This is the premium storage account type for page blobs only. |
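One practical use of Table A.2 is working backward from a redundancy requirement to the account types that can meet it. The lookup below is a small sketch whose data is transcribed from the table; the dictionary and the `account_types_for` helper are illustrative names, not part of any Azure SDK.

```python
# Redundancy options per storage account type, transcribed from Table A.2.
SUPPORTED_REDUNDANCY = {
    "Standard general-purpose v2": {"LRS", "GRS", "RA-GRS", "ZRS", "GZRS", "RA-GZRS"},
    "Premium block blobs": {"LRS", "ZRS"},
    "Premium file shares": {"LRS", "ZRS"},
    "Premium page blobs": {"LRS"},
}

def account_types_for(redundancy: str) -> list:
    """Return the account types (alphabetically) that support a given redundancy option."""
    return sorted(t for t, options in SUPPORTED_REDUNDANCY.items() if redundancy in options)

print(account_types_for("GZRS"))  # ['Standard general-purpose v2']
print(account_types_for("ZRS"))
```

For example, a requirement for geo-zone-redundant storage rules out every premium account type in the table and leaves only standard general-purpose v2.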

Benefits of Azure Storage

The benefits of Azure Storage are as follows:

- Secure: All data written to an Azure Storage account is automatically encrypted to protect it from unauthorized access. Azure Storage also gives you fine-grained control over who can access your data through Azure role-based access control (RBAC).

- Scalable: Azure Storage is designed to scale to accommodate the storage and performance needs of today's applications.

- Automatically managed: Azure handles hardware maintenance, updates, and critical issues on behalf of the user.

- Worldwide accessibility: Azure Storage data is accessible from any location in the world over HTTP or HTTPS.

- Highly resilient and available: Azure creates redundancy to ensure that your data is safe and available in the event of hardware failures. You can also choose to replicate data across data centers for additional protection from a potential catastrophe.

Azure SQL Database

Azure SQL Database is a platform as a service (PaaS) database engine that handles most database management functions for you. Most of these functions, including upgrading, patching, backups, and monitoring, are performed without user involvement.

Azure SQL Database allows you to create data storage for applications and solutions in Azure while providing high availability and good performance. It allows applications to process both relational data and nonrelational structures, such as graphs, JSON, and XML.

Deploying SQL Databases

When deploying an Azure SQL database, you have two options:

- Single Database: This is a fully managed, isolated database with its own set of resources and its own configuration.

- Elastic Pools: An elastic pool is a collection of individual databases that share a set of resources, such as compute and memory. You can move an individual database into or out of an elastic pool after it has been created.

Resource Scaling in Azure SQL Databases

In Azure, you can define the amount of resources allocated to your databases as follows:

- Single Databases: Each single database has its own compute, memory, and storage resources. You can dynamically scale these resources up or down, depending on the needs of that database.

- Elastic Pools: In this case, you assign resources to the pool itself, and those resources are shared by all databases in the pool. To save money and make better use of your resources, you can move existing single databases into the pool.
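Why pooling saves money is easiest to see with a little arithmetic: single databases must each be sized for their own peak, while a pool only needs to cover the peak of the combined load. The usage numbers below are hypothetical "compute units" per hour for three databases whose busy periods do not overlap; real Azure SQL sizing uses DTUs or vCores and has additional limits this sketch ignores.

```python
# Hypothetical hourly compute usage for three databases with non-overlapping peaks.
usage = {
    "sales_db":   [4, 1, 1, 1],
    "hr_db":      [1, 4, 1, 1],
    "reports_db": [1, 1, 1, 4],
}

# Single databases: each one is provisioned for its own peak.
single_total = sum(max(hours) for hours in usage.values())

# Elastic pool: provision for the highest combined load in any hour.
pool_total = max(sum(hour) for hour in zip(*usage.values()))

print(single_total, pool_total)  # 12 6
```

Here the pool needs half the capacity because no two databases peak in the same hour; if all three peaked together, the two totals would be equal and pooling would save nothing.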

Databases can scale the resources being used in two ways: via dynamic scalability and autoscaling. Autoscaling is when a service automatically scales based on certain criteria, whereas dynamic scalability allows for the manual scaling of a resource with no downtime.
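The difference between the two scaling styles comes down to who makes the decision. In the sketch below, `autoscale` changes size on its own according to CPU criteria, while `manual_scale` simply applies whatever size an operator requests. The size list and the 80%/20% thresholds are hypothetical, and the sketch does not model the "no downtime" aspect of dynamic scalability.

```python
SIZES = [2, 4, 8, 16]  # hypothetical compute sizes, smallest to largest

def autoscale(current: int, cpu_percent: float, high: float = 80.0, low: float = 20.0) -> int:
    """Autoscaling: the service picks the next size up or down based on CPU criteria."""
    i = SIZES.index(current)
    if cpu_percent > high and i < len(SIZES) - 1:
        return SIZES[i + 1]
    if cpu_percent < low and i > 0:
        return SIZES[i - 1]
    return current

def manual_scale(current: int, requested: int) -> int:
    """Dynamic scalability: an operator chooses the new size; no criteria are evaluated."""
    if requested not in SIZES:
        raise ValueError(f"unsupported size: {requested}")
    return requested

print(autoscale(4, 90.0))     # 8
print(manual_scale(2, 16))    # 16
```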

Monitoring and Alerting

Azure SQL Database comes with built-in monitoring and troubleshooting features that help you determine how your databases are performing and diagnose problems with database instances. Query Store is a built-in SQL Server monitoring feature that captures a history of your queries, their execution plans, and their runtime statistics. It helps you find potential performance issues and identify the top resource consumers. Azure SQL Database also offers automatic tuning, an intelligent performance service that continuously monitors the queries executed on a database and uses the information it gathers to improve their performance. Automatic tuning gives you two options: you can manually apply the scripts required to fix an issue, or you can let SQL Database apply the fix automatically. In addition, SQL Database can test and verify that the fix provides a benefit; based on its evaluation, it then either retains or reverts the change.
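The "verify, then retain or revert" behavior can be illustrated with a simple before-and-after comparison. This is only a sketch of the general idea: the service's actual evaluation criteria are not described here, so the use of mean query duration and the 10% minimum improvement are assumptions made for illustration.

```python
from statistics import mean

def evaluate_tuning_change(before_ms, after_ms, min_improvement=0.10):
    """Keep a tuning change only if mean query duration improved by at least `min_improvement`."""
    baseline = mean(before_ms)
    tuned = mean(after_ms)
    improvement = (baseline - tuned) / baseline
    return "retain" if improvement >= min_improvement else "revert"

print(evaluate_tuning_change([120, 130, 125], [80, 85, 90]))     # retain (about 32% faster)
print(evaluate_tuning_change([120, 130, 125], [140, 150, 145]))  # revert (slower)
```

Comparing measurements taken before and after the change is what lets an automatic fix be rolled back safely when it turns out to make things worse.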