10

Smart Assistants

In this chapter, we’ll look at a common household use of ML: smart assistants like Siri, Alexa, or Google Home that can do simple jobs for you when you ask, like set an alarm, start a timer, or play some music.

Smart assistants are ML systems trained to recognize the meaning of text. You’ve seen that you can train a computer so that when you give it some writing, the computer can recognize what you’re trying to say. And if a computer can understand what you mean, it can understand what you’re asking it to do.

To create a program that categorizes text based on recognizing the text’s intention (intent classification), we collect a large number of examples of each type of command that we want it to recognize and then use ML to train a model.

From the projects you’ve done so far, you’re already familiar with the classification part of intent classification. For example, messages can be classified as compliments or insults, and newspaper headlines can be classified as tabloids or broadsheets. The computer knows about some categories of writing, and when you give it some text, it tries to classify that text, or work out which category that text should go into. The intent part of the name is there because we’re using that ability to classify text to recognize what the person writing it intends.

Intent classification is useful for building computer systems that we can interact with in a natural way. For example, a computer could recognize that when you say, “Turn on the light,” the intention is for a light to be switched on. This is described as a natural language interface. In other words, instead of needing to press a switch to turn the light on, you’re using natural language—a language that has evolved naturally in humans, not one designed for computers—to communicate that intent.

The computer learns from the patterns in the examples we give it—patterns in the words we choose, the way we phrase commands, how we combine words for certain types of commands, and when we use commands that are short versus longer, just to name a few.
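As a rough illustration of learning from patterns in examples, here is a minimal Python sketch of a nearest-neighbour text classifier. It is not the model that Machine Learning for Kids trains for you; it simply compares a new command with every stored example and picks the most similar one, scoring similarity with the Jaccard index (words in common divided by all the distinct words in both). The function names are hypothetical, the `fan_on` examples are the six suggested later in this chapter, and the examples in the other three buckets are made up for this sketch.

```python
import re
from typing import Dict, List, Optional, Set, Tuple

# Made-up training buckets. The fan_on examples are the six suggested later
# in this chapter; the other three buckets are invented for this sketch.
BUCKETS: Dict[str, List[str]] = {
    "fan_on": [
        "fan on please",
        "Could you turn the fan on for me now, please?",
        "Would you please switch on the fan?",
        "Turn the fan on now",
        "Can you turn on the fan?",
        "It's too hot in here. Can we get some air in here, please?",
    ],
    "fan_off": [
        "fan off please",
        "turn the fan off",
        "switch off the fan",
        "Can you stop the fan?",
        "It's cold in here, I don't need the fan",
    ],
    "lamp_on": [
        "lamp on please",
        "turn the lamp on",
        "switch on the lamp",
        "It's too dark in here",
        "Can I have some light?",
    ],
    "lamp_off": [
        "lamp off please",
        "turn the lamp off",
        "switch off the lamp",
        "It's too bright in here",
        "Can you turn the light off?",
    ],
}


def words(text: str) -> Set[str]:
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z']+", text.lower()))


def train(buckets: Dict[str, List[str]]) -> List[Tuple[str, Set[str]]]:
    """'Training' a nearest-neighbour model just means remembering each example's words."""
    return [(label, words(example))
            for label, examples in buckets.items()
            for example in examples]


def classify(model: List[Tuple[str, Set[str]]], text: str) -> Tuple[Optional[str], int]:
    """Return (label, confidence 0-100) for the most similar training example.

    Similarity is the Jaccard index: words in common / all distinct words.
    """
    query = words(text)
    best_label, best_score = None, 0.0
    for label, example in model:
        union = query | example
        score = len(query & example) / len(union) if union else 0.0
        if score > best_score:  # keep the first example with the top score
            best_label, best_score = label, score
    return best_label, round(100 * best_score)


if __name__ == "__main__":
    model = train(BUCKETS)
    print(classify(model, "turn the lamp off now"))  # ('lamp_off', 80)
    print(classify(model, "What is the capital city of France?"))  # low confidence
```

The first command shares four of its five distinct words with the `lamp_off` example “turn the lamp off,” so it scores 80. The question about France shares only the word “the” with any example, so its confidence stays low, which is the same kind of signal the confidence score gives you later in this chapter.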

In this chapter, you’ll make a virtual smart assistant that can recognize your commands and carry out your instructions (see Figure 10-1).

Figure 10-1: Making a smart assistant in Scratch

Let’s get started!

Build Your Project

To start with, you’ll train the ML model to recognize commands to turn two devices—a fan and a lamp—on or off.

Code Your Project Without ML

As we saw in Chapter 7, it’s useful to see the difference that ML makes by trying to code an AI project without it first. You can skip this step if you feel you have a good grasp of the difference between a rule-based approach and ML and would rather go straight to using ML.

- Go to Scratch at https://machinelearningforkids.co.uk/scratch3/.

- Click Project templates at the top of the screen, as shown in Figure 10-2.

Figure 10-2: Project templates include starter projects to save you time.

- Click the Smart Classroom template.

- Copy the script shown in Figure 10-3.

Figure 10-3: Coding a smart assistant using rules

This script asks you to enter a command. If you type Turn on (or off) the fan (or lamp), Scratch will play the corresponding animation. Let’s try it out.

- Test your project by clicking the Green Flag. Type the command Turn on the fan and check that the fan really does start spinning.

What happens if you spell something wrong? What happens if you change the wording (for example, “Turn on the fan please”)? What happens if you don’t mention the word fan (for example, “I’m very hot, we need some air in here!”)?

Why don’t these work?

Do you think it’s possible to write a script that would work with any phrasing of these four commands?

Think back to the definition in Chapter 1, where I said ML is not the only way to create AI systems. Here you’ve created an AI project using a rules-based approach instead of ML. By trying other techniques like this one and seeing where they fall short, you can better understand why ML is preferred for so many projects.
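The rule-based version can be sketched in a few lines of Python to show why it breaks so easily. This is a minimal sketch assuming the Scratch script in Figure 10-3 compares what you type against one fixed phrase per command; `RULES` and `rule_based_command` are hypothetical names, and the exact phrases in the template may differ.

```python
from typing import Optional

# Hypothetical rules: each exact phrase maps to one of the four commands.
# The Scratch template's real script may check slightly different wording.
RULES = {
    "turn on the fan": "fan_on",
    "turn off the fan": "fan_off",
    "turn on the lamp": "lamp_on",
    "turn off the lamp": "lamp_off",
}


def rule_based_command(text: str) -> Optional[str]:
    """Return the command for an exact (case-insensitive) match, else None."""
    return RULES.get(text.strip().lower())


if __name__ == "__main__":
    attempts = [
        "Turn on the fan",                          # exact match: works
        "Turn on the fna",                          # spelling mistake
        "Turn on the fan please",                   # different wording
        "I'm very hot, we need some air in here!",  # never mentions the fan
    ]
    for attempt in attempts:
        print(f"{attempt!r} -> {rule_based_command(attempt)}")
```

Only the first attempt is recognized. The other three return None, because a rule can only match wording that someone thought to write down in advance.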

Train Your Model

- Create a new ML project, name it Smart Classroom, and set it to learn to recognize text in your preferred language.

- Click Train, as shown in Figure 10-4.

Figure 10-4: The first phase is to collect training examples.

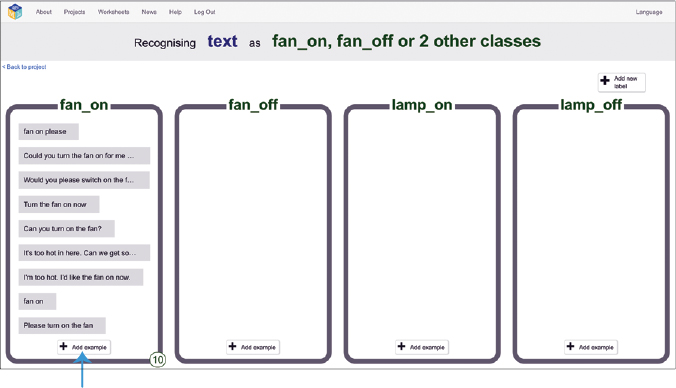

- Click Add new label, as shown in Figure 10-5, and create a training bucket called fan on. Repeat this step to create three more training buckets named fan off, lamp on, and lamp off. (The underscores will be added automatically.)

Figure 10-5: Create training buckets for the commands to recognize.

- Click Add example in the fan_on bucket and type an example of how you would ask someone to turn on the fan, as shown in Figure 10-6.

Figure 10-6: Collecting examples of how to ask for the fan to be turned on

It can be short (for example, “fan on please”) or long (“Could you turn the fan on for me now, please?”).

It can be polite (“Would you please switch on the fan?”) or less polite (“Turn the fan on now”).

It can include the words fan and on (“Can you turn on the fan?”) or neither (“It’s too hot in here. Can we get some air in here, please?”).

Type as many as you can think of, as shown in Figure 10-6. You need at least five examples, but I’ve given you six already, so that should be easy!

- Click Add example in the fan_off bucket, as shown in Figure 10-7.

This time, type as many examples as you can think of for asking someone to turn off the fan. You need at least five examples. These are the examples your ML model will use to learn what a “fan off” command looks like.

Try to include some examples that don’t include the words fan or off.

Figure 10-7: Collecting examples of how to ask for the fan to be turned off

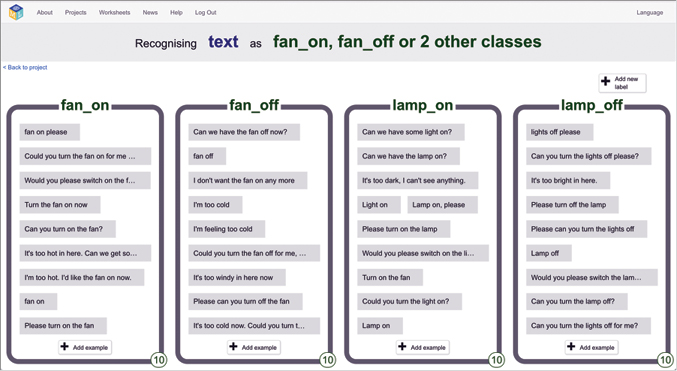

- Repeat this process for the last two buckets, until you have at least five examples for all four commands, as shown in Figure 10-8.

Figure 10-8: Training data for the smart assistant project

- Click Back to project in the top-left corner of the screen.

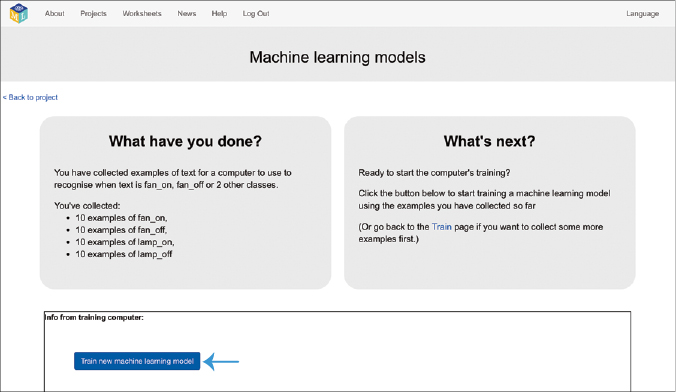

- Click Learn & Test.

- Click Train new machine learning model, as shown in Figure 10-9.

The computer will use the examples you’ve written to learn how to recognize your four commands. This might take a minute.

Figure 10-9: Train an ML model for your smart assistant.

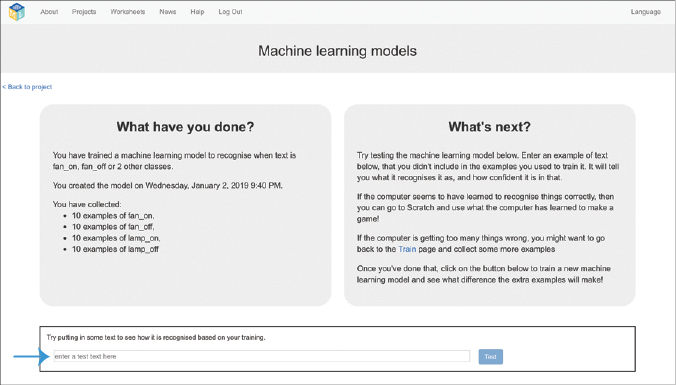

- After training an ML model, we test it to see how good it is at recognizing new commands. Type a command into the Test box, as shown in Figure 10-10.

Figure 10-10: Testing your ML model

If the model makes mistakes, you can go back to the Train phase and add more examples of the commands that it keeps getting wrong. This is like a teacher using a student’s poor exam result to figure out which subjects they need to review with the student to help improve the student’s understanding.

Once you’ve added more examples, go back to the Learn & Test phase and train a new ML model. Then test it again to see if the computer is any better at recognizing commands.
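This test-and-improve loop can be sketched in Python. The hypothetical function `find_mistakes` runs commands the model has never seen through any classifier that returns a `(label, confidence)` pair, then reports the accuracy and the commands it got wrong; those are the ones worth adding to your training buckets. `toy_classify` is a deliberately weak, made-up stand-in for a trained model, included only so the example runs on its own.

```python
from typing import Callable, List, Tuple

Prediction = Tuple[str, int]  # (label, confidence 0-100)


def find_mistakes(
    classify: Callable[[str], Prediction],
    test_cases: List[Tuple[str, str]],
) -> Tuple[float, List[Tuple[str, str, str, int]]]:
    """Run unseen test commands through a model and collect the ones it gets wrong.

    Returns (accuracy as a percentage, list of (text, expected, predicted, confidence)).
    """
    mistakes = []
    for text, expected in test_cases:
        predicted, confidence = classify(text)
        if predicted != expected:
            mistakes.append((text, expected, predicted, confidence))
    accuracy = 100 * (len(test_cases) - len(mistakes)) / len(test_cases)
    return accuracy, mistakes


def toy_classify(text: str) -> Prediction:
    """Hypothetical stand-in for a trained model: it only spots a few keywords."""
    words = text.lower().split()
    device = "fan" if "fan" in words else "lamp"
    state = "off" if "off" in words else "on"
    return f"{device}_{state}", 100


if __name__ == "__main__":
    tests = [
        ("Turn the fan off", "fan_off"),
        ("It's freezing, stop the fan", "fan_off"),
        ("Switch the light on", "lamp_on"),
        ("Kill the lights", "lamp_off"),
    ]
    accuracy, mistakes = find_mistakes(toy_classify, tests)
    print(f"Accuracy: {accuracy:.0f}%")  # Accuracy: 50%
    for text, expected, predicted, _ in mistakes:
        print(f"{text!r}: expected {expected}, got {predicted}")
```

With the toy stand-in, half the test commands fail because it has no idea that “stop” or “kill” can mean off. Adding mistakes like these as new training examples is the same loop as going back to the Train phase.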

Code Your Project with ML

Now that you have an ML model that is able to recognize your commands, you can re-create the earlier project to use ML instead of the rules you used before.

- Click Back to project in the top-left corner of the screen.

- Click Make.

- Click Scratch 3, and then click Open in Scratch 3 to open a new window in Scratch.

You should see a new set of blocks for your ML project in the Toolbox, as shown in Figure 10-11.

Figure 10-11: Your ML project will be added to the Scratch Toolbox.

- Click Project templates in the top menu bar and choose the Smart Classroom template.

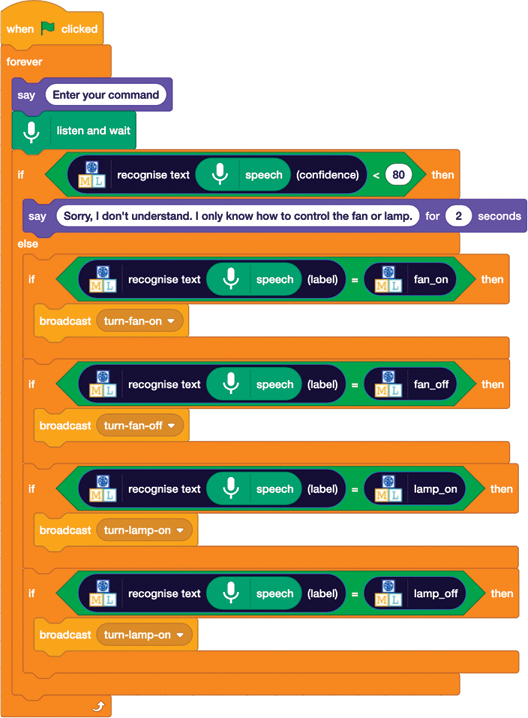

- Copy the script shown in Figure 10-12.

When you give this script commands, it will use your ML model to recognize the command and carry out the instruction.

Figure 10-12: ML approach for a smart assistant

Test Your Project

Test your project by clicking the Green Flag and entering a variety of commands, phrased in lots of different ways. See how your smart assistant performs now compared to the version that didn’t use ML.

Review and Improve Your Project

You’ve created your own smart assistant: a virtual version of Amazon’s Alexa or Apple’s Siri that can understand and carry out your commands! What could you do to improve the way that it behaves?

Using Your Model’s Confidence Score

Back in the Learn & Test phase, you should have noticed the confidence score displayed when you tested your model. That tells you how confident the computer is that it has recognized a command.

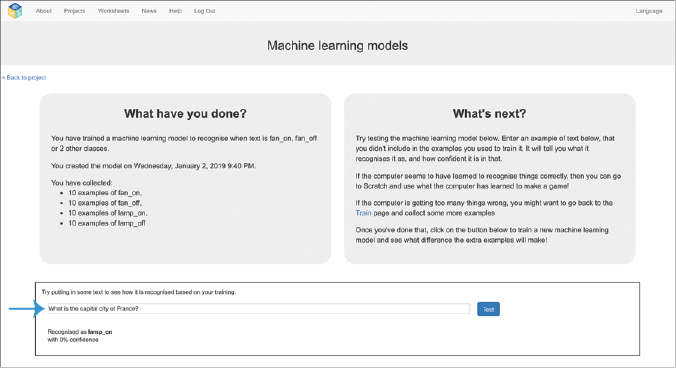

Go back to the Learn & Test phase now and try typing something that doesn’t fit into one of the four commands that the computer has learned to recognize.

For example, you could try “What is the capital city of France?” as shown in Figure 10-13.

Figure 10-13: Testing your smart assistant

My ML model recognized it as “lamp on,” but it had 0 percent confidence in that classification. That was the ML model’s way of telling me that it hadn’t recognized the command.

“What is the capital city of France?” doesn’t look like any of the examples I’ve given the ML model. The question doesn’t match the patterns it has identified in the examples I used to train it. This means it can’t confidently recognize the question as one of the four commands it’s been trained to recognize.

Your ML model might have a higher confidence than 0, but it should still be a relatively low number. (If not, try adding more examples to train your ML model with.)

Experiment with other questions and commands that don’t have anything to do with a fan or lamp. Compare the confidence scores your ML model gives with those it displays when it recognizes actual fan on, fan off, lamp on, and lamp off commands. What kinds of confidence scores does your ML model give when it’s correctly recognized something?

Once you have a feel for how the confidence scores work for your ML model, you can use that in your Scratch project. Update your script to look like Figure 10-14.

Now, if the model isn’t at least 80 percent confident that it has understood the command correctly, it will display a “sorry” response for 2 seconds and not carry out the action.

You’ll need to change the 80 value in this script to a percentage that matches the behavior of your own ML model.
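The decision in Figure 10-14 can be written as a small Python sketch, assuming the model hands back a label and a confidence between 0 and 100. The function `respond`, the `ACTIONS` table, and the reply messages are hypothetical; the Scratch version plays animations rather than returning text.

```python
CONFIDENCE_THRESHOLD = 80  # adjust to match how your own model behaves

# Hypothetical replies standing in for the Scratch animations.
ACTIONS = {
    "fan_on": "Turning the fan on",
    "fan_off": "Turning the fan off",
    "lamp_on": "Turning the lamp on",
    "lamp_off": "Turning the lamp off",
}


def respond(label: str, confidence: int, threshold: int = CONFIDENCE_THRESHOLD) -> str:
    """Carry out the command only if the model is confident enough."""
    if confidence < threshold:
        # Not at least `threshold` percent sure: apologize instead of guessing.
        return "Sorry, I don't understand"
    return ACTIONS[label]


if __name__ == "__main__":
    print(respond("fan_on", 93))  # Turning the fan on
    print(respond("lamp_on", 0))  # Sorry, I don't understand
```

Checking the confidence before looking up an action means a low-confidence guess never switches anything on or off, even if its label happens to be right. Raising `threshold` makes the assistant more cautious; lowering it makes it act on shakier guesses.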

What else could you do to improve your project?

Figure 10-14: Using confidence scores in your ML project

Using Speech Input Instead of Typing

You could modify your project to be more like real-world smart assistants by using voice input instead of typing.

In the Toolbox, click the Extensions Library icon (it looks like two blocks and a plus sign), add the Speech to Text extension, and update your script as shown in Figure 10-15.

Figure 10-15: Adding speech recognition to your smart assistant

What else could you do to improve your project?

Collecting Training Data

ML is often used for recognizing text because it’s quicker than having to write rules. But training a model properly requires lots and lots of examples. To build these systems in the real world, we’d need more efficient ways of collecting examples than simply typing them all yourself like you’ve done so far. For example, instead of asking one person to write 100 examples, it might be better to ask 100 people to write one example each. Or 1,000 people. Or 10,000 people.

If you can figure out when your ML model gets something wrong, you can collect more examples to add to your training buckets. For example, what if the ML model has a very low confidence score? Or what if someone keeps giving a similar command in slightly different ways? That probably means that the ML model isn’t recognizing the commands correctly or doing what the person wants, and that’s helpful feedback for your training. What if the person clicks a thumbs-down “I’m not happy” button? What if they end up pressing a button to do something? What if they sound more and more annoyed?

There are lots of ways to guess that something hasn’t worked well. And every time that happens, that’s an example you could collect and add to one of your training buckets so a newer ML model can work a little better next time.
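One way to capture those examples is sketched below in Python. The hypothetical `FeedbackCollector` class keeps any command that had a low confidence score or got a thumbs-down click, so that a person can later sort it into the right training bucket. The 50 percent cut-off is an assumed value to tune for your own model, and detecting repeated rephrasing or an annoyed tone of voice is left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FeedbackCollector:
    """Keeps commands that probably went wrong so a person can label them later."""

    low_confidence: int = 50  # assumed cut-off; tune it for your own model
    to_review: List[str] = field(default_factory=list)

    def record(self, text: str, confidence: int, thumbs_down: bool = False) -> None:
        # A low confidence score or a thumbs-down click both suggest the model
        # didn't do what the person wanted, so keep the command for review.
        if confidence < self.low_confidence or thumbs_down:
            self.to_review.append(text)


if __name__ == "__main__":
    collector = FeedbackCollector()
    collector.record("Turn the fan on", confidence=92)
    collector.record("Make it less stuffy in here", confidence=31)
    collector.record("Lights off", confidence=88, thumbs_down=True)
    print(collector.to_review)  # ['Make it less stuffy in here', 'Lights off']
```

Each command in `to_review` still needs a person to decide which bucket it belongs in before it can help train a newer model.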

We use all these sorts of techniques (collecting training examples from large numbers of people, getting feedback from users, and many more) to help us build computers and devices that can understand what you mean.

What You Learned

In this chapter, we’ve looked at how ML is used to recognize the meaning of text, and how it can be used to build computer systems that can understand what we mean and do what we ask.

In your project, you used the same type of ML technology that enables smart assistants like Amazon’s Alexa, Google Home, Microsoft’s Cortana, and Apple’s Siri. Natural language interfaces let us tell our devices what we want them to do by using languages like English, instead of only by pressing screens or buttons.

When you ask a smartphone what the time is, or to set an alarm or a timer, or to play your favorite song, the computer needs to classify that command. It needs to take that series of words that you chose and recognize their intent.

The makers of smartphones and smart assistants trained an ML model to recognize the meaning of user commands by working out a list of categories—all of the possible commands they thought users might want to give. And then for each one, they collected lots and lots of examples of how someone might give that command.

In both this project and the real world, the process works like this:

- Predict commands that you might give.

- Collect examples of each of those commands.

- Use those examples to train an ML model.

- Script or code what you want the computer to do when it recognizes each command.

To create a real smart assistant, you’d have to repeat these steps for thousands of commands, not just four. And you would need thousands, or tens of thousands, of examples for each command.

In the next chapter, you’ll use this capability to build programs that can answer questions.