Chapter 11: Security and Vulnerabilities in Python Modules

Python lets us scale from start-up projects to complex data-processing applications and dynamic web pages in a simple way. However, as your applications grow in complexity, so does the risk of introducing problems and vulnerabilities that can be critical from a security point of view.

This chapter covers security and vulnerabilities in Python modules. We will review the main security problems found in Python functions and how to prevent them, along with the tools and services that help you recognize security bugs in source code. We will look at tools such as Bandit, a static code analyzer for detecting vulnerabilities, and at security in Python web applications built with the Flask framework. Finally, we will learn about Python security best practices.

The following topics will be covered in this chapter:

- Exploring security in Python modules

- Static code analysis for detecting vulnerabilities

- Detecting Python modules with backdoors and malicious code

- Security in Python web applications with the Flask framework

- Python security best practices

Technical requirements

The examples and source code for this chapter are available in the GitHub repository at https://github.com/PacktPublishing/Mastering-Python-for-Networking-and-Security-Second-Edition.

You will need to install the Python distribution on your local machine and have some basic knowledge about secure coding practices.

Check out the following video to see the Code in Action: https://bit.ly/2IewxC4

Exploring security in Python modules

In this section, we will cover security in Python modules, reviewing Python functions and modules that developers can use and that could result in security issues.

Python functions with security issues

We will begin by reviewing the security of Python modules and components, highlighting the eval() function and the pickle, subprocess, os, and yaml modules.

The idea is to explore some Python functions and modules that can create security issues. For each one, we will study the security and explore alternatives to these modules.

For example, modules such as pickle and subprocess should only be used with security in mind and with an awareness of the problems their use can cause.

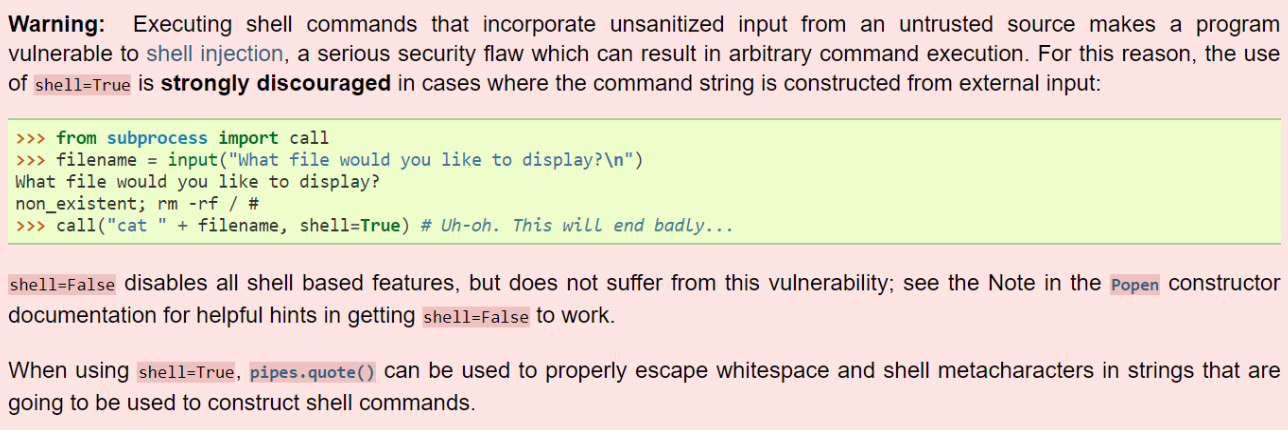

Usually, Python's documentation includes a warning regarding the risks of a module from the security point of view, which looks something like this:

Figure 11.1 – Python module warning related to a security issue

The following are typical security issues to watch for:

- Python functions with security issues, such as eval()

- Serializing and deserializing objects with pickle

- Insecure use of the subprocess module

- Insecure use of temporary files with mktemp

Now, we are going to review some of these functions and modules and analyze why they are dangerous from a security point of view.

Input/output validation

Input and output validation and sanitization are among the most critical and most frequent problems we find today, and they are behind more than 75% of security vulnerabilities. In the following example, the arg argument is passed to a function considered insecure without performing any validation:

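```python
import os
import sys

# Insecure: every command-line argument is executed by the shell, unvalidated
for arg in sys.argv[1:]:
    os.system(arg)
```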

In the preceding code, we are using the user arguments within the system() method from the os module without any validation.

An application aimed at mitigating this form of attack must have filters that verify and remove malicious content, allowing only data that is legitimate and safe for the application. The following example passes the user-controlled filename variable to os.path.isfile() and print() without validating it:

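```python
import os
import sys

# The filename comes straight from the command line and is not validated
filename = sys.argv[1]
if os.path.isfile(filename):
    print(filename, 'exists')
else:
    print(filename, 'not found')
```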

In the preceding code, we are using the user arguments within the isfile() method from the os.path module without any validation.

From a security point of view, unvalidated input may cause major vulnerabilities: attackers may trick a program into accepting malicious input, such as code or operating system commands, which can compromise a computer system or application when executed.
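As a minimal sketch of such a filter, the following code validates a user-supplied filename against an allowlist before it is used. The helper name validate_filename(), the allowed character set, and the rule that files must live directly inside a base directory are assumptions chosen for illustration, not requirements of any library:

```python
import re
from pathlib import Path

# Hypothetical allowlist: 1-64 letters, digits, dots, underscores or hyphens
SAFE_NAME = re.compile(r"[A-Za-z0-9._-]{1,64}")


def validate_filename(name, base_dir="."):
    """Return the resolved path of name inside base_dir or raise ValueError."""
    if name in (".", "..") or not SAFE_NAME.fullmatch(name):
        raise ValueError(f"invalid filename: {name!r}")
    base = Path(base_dir).resolve()
    candidate = (base / name).resolve()
    # Reject names that resolve outside base_dir, for example through a symlink
    if candidate.parent != base:
        raise ValueError(f"path escapes base directory: {name!r}")
    return candidate
```

A script could call validate_filename(sys.argv[1]) and only pass the returned path to os.path.isfile(). Checking input against what is allowed is generally more robust than trying to enumerate every dangerous character.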

Eval function security

Python provides an eval() function that evaluates a string of Python code. If you allow strings from untrusted input, this feature is very dangerous. Malicious code can be executed without limits in the context of the user who loaded the interpreter. For example, we could import a specific module to access the operating system.

You can find the following code in the load_os_module.py file:

```python
import os

try:
    eval("__import__('os').system('clear')", {})
    print("Module OS loaded by eval")
except Exception as exception:
    print(repr(exception))
```

In the preceding code, we are using the built-in __import__ function to access the functions in the operating system with the os module.

Consider a scenario where you are using a Unix system and have the os module imported. The os module offers access to operating system functionality, such as reading or writing files. If you allow users to enter a value using eval(input()), a user could remove all files by entering os.system('rm -rf *').

If you are using eval(input()) in your code, it is important to check which variables and methods the user can access. The dir() function lists the names available in the current scope. The following output shows the names that are available by default:

```python
>>> print(eval('dir()'))
['__annotations__', '__builtins__', '__doc__', '__loader__', '__name__', '__package__', '__spec__']
```

Fortunately, eval() has optional arguments called globals and locals to restrict what eval() is allowed to execute:

eval(expression[, globals[, locals]])

The eval() function takes a second argument, a dictionary with the global variables to use during the evaluation. If you don't pass a globals dictionary, eval() uses the current globals. If you pass an empty dictionary, none of your program's globals are visible, although Python still inserts a reference to the built-in functions under the __builtins__ key.
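Note that an empty globals dictionary does not remove the builtins. The following short check, based on the documented behavior of eval(), shows that a built-in function such as len() still works and that eval() adds a __builtins__ entry to the dictionary it receives:

```python
namespace = {}

# Only an empty dictionary is passed as globals...
result = eval("len('abc')", namespace)

print(result)                       # 3: built-in functions are still available
print('__builtins__' in namespace)  # True: eval() inserted the builtins reference
```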

Passing an empty dictionary as the globals parameter therefore hides the names defined in your program from the evaluated expression. For example, the following command raises a NameError exception indicating that "name 'os' is not defined", since no globals are available:

```python
>>> eval("os.system('clear')", {})
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "<string>", line 1, in <module>
NameError: name 'os' is not defined
```

However, this is not enough to make eval() safe: the built-in __import__ function is still available, so the expression can import the os module by itself, and the following command works:

```python
>>> eval("__import__('os').system('clear')", {})
```

The next attempt to make things more secure is to disable the default builtins. We can do this explicitly by mapping the __builtins__ key to an empty dictionary in our globals.

As we can see in the following example, if we disable builtins, we are unable to use the import and the instruction will raise a NameError exception:

```python
>>> eval("__import__('os').system('clear')", {'__builtins__': {}})
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "<string>", line 1, in <module>
NameError: name '__import__' is not defined
```

In the following example, we pass an empty dictionary as the globals parameter, so the expression only has access to the builtins. Even though we have imported the os module in the interactive session, the expression cannot access any of its functions, since the import happened outside the context of the eval() call:

```python
>>> print(eval('dir()', {}))
['__builtins__']
>>> import os
>>> eval("os.system('clear')", {})
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "<string>", line 1, in <module>
NameError: name 'os' is not defined
```

In this way, we are improving the use of the eval() function by restricting its use to what we define in the global and local dictionaries.

The final conclusion regarding eval() is that it is not recommended for code evaluation. If you have to use it, use it only with validated input sources and with return values from functions that you control.
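Even disabling __builtins__ does not turn eval() into a sandbox. The following sketch shows that attribute access on a plain tuple literal still reaches object.__subclasses__(), which lists classes loaded in the interpreter; an attacker can search that list for a class that exposes dangerous functionality. The variable names are illustrative:

```python
# __builtins__ is mapped to an empty dictionary, so no built-in names exist
restricted_globals = {'__builtins__': {}}

# ...but attribute access on a tuple literal still climbs up to object
expression = "().__class__.__base__.__subclasses__()"
classes = eval(expression, restricted_globals)

print(len(classes) > 0)  # classes loaded in the interpreter are reachable
print(type in classes)   # including type itself
```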

In the next section, we introduce a number of techniques for controlling user input.

Controlling user input in dynamic code evaluation

In Python applications, the main way to evaluate code dynamically is with the eval() and exec() functions. Using these functions can lead to a loss of data integrity and often results in the execution of arbitrary code. To control this, we can use regular expressions from the re module to validate user input.

You can find the following code in the eval_user_input.py file:

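```python
import re

python_code = input()
# "valid_input_regular_expressions" is a placeholder for a pattern that
# matches only the expressions your application allows
pattern = re.compile("valid_input_regular_expressions")
if pattern.fullmatch(python_code):
    eval(python_code)
```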

From the security standpoint, if user input is handed over to eval() without any validation, the script is vulnerable to a user executing arbitrary code. Imagine running the preceding script on a server that holds confidential information: depending on factors such as access privileges, an attacker could gain access to this sensitive information.

As an alternative to eval(), the ast module provides the literal_eval() function, which evaluates an expression or a string containing a Python literal in a safe way. The supplied string can only contain the following Python literal structures: strings, bytes, numbers, tuples, lists, dicts, sets, Booleans, and None.
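The following sketch wraps ast.literal_eval() in a small helper; the parse_literal() name and the decision to convert every failure into a ValueError are assumptions for illustration. Literals are parsed normally, while a function call such as __import__('os') is rejected instead of executed:

```python
import ast


def parse_literal(text):
    """Parse a Python literal from untrusted text; reject everything else."""
    try:
        return ast.literal_eval(text)
    except (ValueError, TypeError, SyntaxError) as error:
        raise ValueError(f"rejected input: {text!r}") from error


print(parse_literal("['mastering', 'python', 'security']"))
print(parse_literal("{'port': 22, 'open': True}"))

try:
    parse_literal("__import__('os').system('ls')")
except ValueError as error:
    print(error)  # the call is rejected, never executed
```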

Pickle module security

The pickle module implements binary protocols for serializing and deserializing a Python object structure. Pickle lets you store Python objects in a file so that you can recover them later. This module can be useful for storing data that does not require a database, or temporary data.

This module implements an algorithm to convert an arbitrary Python object into a series of bytes, a process known as object serialization. The byte stream representing the object can be transmitted or stored, and then rebuilt to create a new object with the same characteristics. In simple terms, serializing an object means transforming it into a sequence of bytes that can be saved to a file, which we can later unpack to work with its content.

For example, if we want to serialize a list object and save it in a file with a .pickle extension, this task can be executed very easily with a couple of lines of code and with the help of this module's dump method:

```python
import pickle

object_list = ['mastering', 'python', 'security']
with open('data.pickle', 'wb') as file:
    pickle.dump(object_list, file)
```

After executing the preceding code, we will get a file called data.pickle with the previously stored data. Our goal now is to unpack our information, which is very easy to do with the load method:

```python
with open('data.pickle', 'rb') as file:
    data = pickle.load(file)
```

Alternatively, we can use the Unpickler class to load the data into the program:

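```python
with open('data.pickle', 'rb') as file:
    unpickler = pickle.Unpickler(file)
    # Avoid naming the result "list", which would shadow the built-in type
    data_list = unpickler.load()
```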

From a security perspective, Pickle has the same limitations as the eval() function since it allows users to build inputs that execute arbitrary code.

The official documentation (https://docs.python.org/3.7/library/pickle.html) gives us the following warning:

"The pickle module is not secure against erroneous or maliciously constructed data. Never unpickle data received from an untrusted or unauthenticated source."

The documentation for pickle makes it clear that it does not guarantee security; in fact, deserialization can execute arbitrary code. Among the main problems that make the pickle module vulnerable to code injection and data corruption, we can highlight the following:

- No controls over data/object integrity

- No controls over data size or system limitations

- Code is evaluated without security controls

- Strings are encoded/decoded without verification

Once an application deserializes untrusted data, an attacker can use that data to modify the application's logic or execute arbitrary code. The weakness exists when user input is not properly sanitized and validated before being passed to methods such as pickle.load() or pickle.loads().
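One way to address the lack of integrity controls is to authenticate pickled data with an HMAC, using the standard hmac and hashlib modules. This is a minimal sketch under the assumption that only trusted parties hold the key; the sign_pickle() and load_signed_pickle() names are hypothetical. It detects tampering, but it does not make pickle safe for data produced by an untrusted party:

```python
import hashlib
import hmac
import pickle
import secrets

# Hypothetical key for this sketch; a real application would load it from a
# secrets store instead of generating a new one at start-up
SECRET_KEY = secrets.token_bytes(32)
TAG_SIZE = hashlib.sha256().digest_size  # 32 bytes


def sign_pickle(obj, key=SECRET_KEY):
    """Serialize obj and prepend an HMAC-SHA256 tag computed over the bytes."""
    payload = pickle.dumps(obj)
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return tag + payload


def load_signed_pickle(data, key=SECRET_KEY):
    """Verify the tag first and only unpickle the payload if it matches."""
    tag, payload = data[:TAG_SIZE], data[TAG_SIZE:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest() performs a constant-time comparison
    if not hmac.compare_digest(tag, expected):
        raise ValueError("signature mismatch: refusing to unpickle")
    return pickle.loads(payload)


signed = sign_pickle(['mastering', 'python', 'security'])
print(load_signed_pickle(signed))

tampered = signed[:-1] + b'X'  # alter the last byte of the payload
try:
    load_signed_pickle(tampered)
except ValueError as error:
    print(error)
```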

In this example, the use of pickle.load() and yaml.load() is insecure because the user input is not being validated.

You can find the following code in the pickle_yaml_insecure.py file:

```python
import pickle
import yaml

user_input = input()

with open(user_input, 'rb') as file:
    contents = pickle.load(file)  # insecure

with open(user_input) as exploit_file:
    # yaml.Loader can construct arbitrary Python objects
    contents = yaml.load(exploit_file, Loader=yaml.Loader)  # insecure
```

From the security point of view, the best practice at this point is to never load data from an untrusted input source. You can use alternative formats for serializing data, such as JSON, or more secure methods, such as yaml.safe_load().
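As a sketch of the JSON alternative, the following code stores the same list used in the earlier pickle example. json.load() only ever builds basic types such as dicts, lists, strings, numbers, Booleans, and None, so loading a file cannot execute code; the trade-off is that custom objects must be converted to and from these types explicitly:

```python
import json

object_list = ['mastering', 'python', 'security']

# Serialize to a text file in JSON format instead of pickle
with open('data.json', 'w', encoding='utf-8') as file:
    json.dump(object_list, file)

# json.load() only builds dicts, lists, strings, numbers, Booleans and None
with open('data.json', 'r', encoding='utf-8') as file:
    data = json.load(file)

print(data == object_list)
```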

The main difference between the two functions is that yaml.load() with a full loader can convert a YAML document into arbitrary Python objects, while yaml.safe_load() limits the conversion to simple Python objects, such as integers or lists, and raises an exception if the YAML document contains tags that would construct other objects or execute code.

In this example, we use the safe_load() method to parse a file securely. You can find the following code in the yaml_secure.py file:

```python
import yaml

user_input = input()
with open(user_input) as secure_file:
    contents = yaml.safe_load(secure_file)  # secure
```

One of the main security problems of the pickle module is that it allows us to alter the deserialization flow and run code when an object is deserialized. To do this, we can override the __reduce__ method.

The __reduce__ method is called when the object is pickled and returns a callable together with its arguments; when the data is later unpickled, pickle invokes that callable. In this example, we see how we can execute a command on the machine running the script by returning os.system and a shell command from the __reduce__ method.

You can find the following code in the pickle_vulnerable_reduce.py file:

```python
import os
import pickle

class Vulnerable(object):
    def __reduce__(self):
        # pickle will call os.system('ls') when this object is unpickled
        return (os.system, ('ls',))

def serialize_exploit():
    shellcode = pickle.dumps(Vulnerable())
    return shellcode

def insecure_deserialize(exploit_code):
    pickle.loads(exploit_code)

if __name__ == '__main__':
    shellcode = serialize_exploit()
    print('Obtaining files...')
    insecure_deserialize(shellcode)
```

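To mitigate malicious code execution, we could run the deserialization inside a restricted environment, such as a chroot jail or a sandbox. The following script illustrates the idea with os.chroot(), which changes the root directory of the current process. Note that os.chroot() is only available on Unix, requires root privileges, and only restricts anything when it points at a dedicated directory; changing the root to '/', as in this example, leaves the whole filesystem visible.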

You can find the following code in the pickle_safe_chroot.py file:

```python
import os
import pickle
from contextlib import contextmanager

class ShellSystemChroot(object):
    def __reduce__(self):
        return (os.system, ('ls /',))

@contextmanager
def system_chroot():
    # Requires root privileges; in practice, pass a dedicated, empty directory
    os.chroot('/')
    yield

def serialize():
    with system_chroot():
        shellcode = pickle.dumps(ShellSystemChroot())
    return shellcode

def deserialize(exploit_code):
    with system_chroot():
        pickle.loads(exploit_code)

if __name__ == '__main__':
    shellcode = serialize()
    deserialize(shellcode)
```

In the preceding code, we use the contextmanager decorator on the system_chroot() function, which calls os.chroot() to set a new root directory while the pickle object is deserialized.
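A complementary approach, described in the "Restricting Globals" section of the pickle documentation, is to subclass pickle.Unpickler and override find_class() so that only an allowlist of globals can be loaded. The following sketch assumes the application only needs a few built-in types; the SAFE_BUILTINS set and the RestrictedUnpickler name are illustrative. The Vulnerable class from the earlier example is rejected because its pickle references os.system:

```python
import builtins
import io
import os
import pickle

# Hypothetical allowlist: the only globals this application expects to load
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called for every global referenced by the pickle stream
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data):
    """Drop-in replacement for pickle.loads() that uses RestrictedUnpickler."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Vulnerable(object):
    def __reduce__(self):
        return (os.system, ('ls',))


# Plain lists and strings are encoded without globals, so they load normally
print(restricted_loads(pickle.dumps(['mastering', 'python', 'security'])))

try:
    restricted_loads(pickle.dumps(Vulnerable()))
except pickle.UnpicklingError as error:
    print(error)  # os.system is rejected before it can be called
```

The pickle documentation itself stresses that you must be careful about what you allow, so an allowlist like this reduces the risk rather than removing it.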

Security in a subprocess module

The subprocess module allows us to work directly with operating system commands, so it is important to be careful with the actions we carry out using it. For example, if we execute a process that interacts with the operating system, we need to analyze the parameters we are using to avoid security issues. You can get more information about the subprocess module in the official documentation:

- https://docs.python.org/3/library/subprocess.html

- https://docs.python.org/3.5/library/subprocess.html#security-consideration

Among the most common subprocess methods, we can find subprocess.call(). This method is usually useful for executing simple commands, such as listing files:

```python
>>> from subprocess import call
>>> command = ['ls', '-la']
>>> call(command)
```

This is the format of the call() method:

subprocess.call(command[, shell=False, stdin=None, stdout=None, stderr=None])

Let's look at these parameters in detail:

- The command parameter represents the command to execute.

- shell determines whether the command is run through the system shell. With shell=False (the default), the command is normally given as a list of arguments and executed directly; with shell=True, the command is given as a character string that is interpreted by the shell.

- stdin is a file object that represents standard input. It can also be a file object open in read mode from which the input parameters required by the script will be read.

- stdout and stderr are the standard output and the standard error output, respectively.

From a security point of view, the shell parameter is one of the most critical: with shell=True, it is the application's responsibility to validate the command in order to avoid shell injection vulnerabilities.

In the following example, we are calling the subprocess.call(command, shell = True) method in an insecure way since the user input is being passed directly to the shell call without applying any validation.

You can find the following code in the subprocess_insecure.py file:

```python
import subprocess

data = input()
command = ' echo ' + data + ' >> ' + ' file.txt '
subprocess.call(command, shell=True)  # insecure
with open('file.txt', 'r') as file:
    data = file.read()
```

The problem with the subprocess.call() method in this script is that the command is not validated. Because the shell interprets the whole string, a malicious user can execute arbitrary commands on the server through the data variable.

Often you need to execute an application on the command line, and it is easy to do so with Python's subprocess module by calling subprocess.call() with shell=True. However, setting shell=True allows a bad actor to send commands that interact with the underlying host operating system. For example, an attacker can set the value of the data variable to "; cat /etc/passwd" to read the file that contains the list of the system's accounts, or run something even more dangerous.
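For contrast, here is a sketch of the safe pattern, in which the untrusted value is passed as one element of an argument list with shell=False (the default for subprocess.run()). To keep the example portable, it uses the running Python interpreter (sys.executable) to print its first argument instead of the echo command; that substitution is an assumption of this sketch. The attacker's string is printed literally rather than executed:

```python
import subprocess
import sys

# Untrusted value containing shell metacharacters
data = "; cat /etc/passwd"

# The argument list is handed to the operating system without a shell, so
# the ";" has no special meaning. sys.executable stands in for "echo" here.
result = subprocess.run(
    [sys.executable, "-c", "import sys; print(sys.argv[1])", data],
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())
```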

The following script uses the subprocess module to execute the ping command on a server whose IP address is passed as a parameter.

You can find the following code in the subprocess_ping_server_insecure.py file:

```python
import subprocess

def ping_insecure(myserver):
    return subprocess.Popen('ping -c 1 %s' % myserver, shell=True)

print(ping_insecure('8.8.8.8 & touch file'))
```

Tip

The best practice at this point is to use the subprocess.call() method with shell=False since it protects you against most of the risks associated with piping commands to the shell.

The main problem with the ping_insecure() function is that the myserver parameter is controlled by the user and could be used to execute arbitrary commands, such as deleting files:

```python
>>> ping_insecure('8.8.8.8; rm -rf /')
64 bytes from 8.8.8.8: icmp_seq=1 ttl=58 time=6.32 ms
rm: cannot remove `/bin/dbus-daemon': Permission denied
rm: cannot remove `/bin/dbus-uuidgen': Permission denied
rm: cannot remove `/bin/dbus-cleanup-sockets': Permission denied
rm: cannot remove `/bin/cgroups-mount': Permission denied
rm: cannot remove `/bin/cgroups-umount': Permission denied
```

This function can be rewritten in a secure way. Instead of passing a string to the ping process, our function passes a list of strings. The ping program receives each argument separately (even if the argument contains a space), and no shell is involved, so any extra commands the user appends are never interpreted:

You can find the following code in the subprocess_ping_server_secure.py file:

```python
import subprocess

def ping_secure(myserver):
    command_arguments = ['ping', '-c', '1', myserver]
    return subprocess.Popen(command_arguments, shell=False)

print(ping_secure('8.8.8.8'))
```

If we test this with the same input as before, the ping command interprets the value of the myserver parameter as a single argument and returns an unknown host error message, since the appended command (; rm -rf /) makes the host name invalid:

```python
>>> ping_secure('8.8.8.8; rm -rf /')
ping: unknown host 8.8.8.8; rm -rf /
```
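Passing a list removes the shell, but it is still good practice to validate the value itself. The following sketch, which assumes the application only needs to ping literal IP addresses, uses the standard ipaddress module to reject anything else before building the argument list. build_ping_command() is a hypothetical helper name; its result can be passed to subprocess.Popen() with shell=False exactly as in ping_secure():

```python
import ipaddress


def build_ping_command(server):
    """Return the ping argument list, accepting only a literal IP address."""
    # ip_address() raises ValueError for anything that is not an IPv4/IPv6 address
    address = ipaddress.ip_address(server)
    return ['ping', '-c', '1', str(address)]


print(build_ping_command('8.8.8.8'))
try:
    build_ping_command('8.8.8.8; rm -rf /')
except ValueError as error:
    print('Rejected:', error)
```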

In the next section, we are going to review a module for sanitizing user input and avoiding security issues related to commands introduced by the user.

Using the shlex module

The best practice at this point is to sanitize or escape the input. It's also worth noting that secure code defenses are layered, and developers should understand how their chosen modules work in addition to sanitizing and escaping input. In Python, if you need to escape input, you can use the shlex module from the standard library, which provides a utility function for escaping shell commands:

shlex.quote() returns a sanitized string that can be used in a shell command line in a secure way without problems associated with interpreting the commands:

```python
>>> from shlex import quote
>>> filename = 'somefile; rm -rf ~'
>>> command = 'ls -l {}'.format(quote(filename))  # secure
>>> print(command)
ls -l 'somefile; rm -rf ~'
```

In the preceding code, we are using the quote() method to sanitize the user input to avoid security issues associated with commands embedded in the string user input. In the following section, we are going to review the use of insecure temporary files.
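To see what quote() buys us, the following sketch splits the resulting command with shlex.split(), which follows POSIX shell tokenizing rules: the whole filename, semicolon included, remains a single argument. Keep in mind that the shlex module is designed for Unix shells, and quote() is not guaranteed to be correct for other shells, such as those on Windows:

```python
import shlex

filename = 'somefile; rm -rf ~'
command = 'ls -l {}'.format(shlex.quote(filename))

# Tokenize the command as a POSIX shell would: the quoted filename,
# semicolon included, stays a single argument
arguments = shlex.split(command)
print(arguments)
```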

Insecure temporary files

There are several ways of introducing temporary file vulnerabilities into your Python code. The most basic one is to use deprecated or non-recommended functions for handling temporary files. Among the main functions that create temporary files in an insecure way, we can highlight the following:

- os.tempnam(): This function (available only in Python 2) is vulnerable to symlink attacks and should be replaced with tempfile module functions.

- os.tmpnam(): This function (available only in Python 2) is vulnerable to symlink attacks and should be replaced with tempfile module functions.

- tempfile.mktemp(): This function has been deprecated and the recommendation is to use the tempfile.mkstemp() method.

From a security point of view, the preceding functions generate temporary filenames that are inherently insecure because they do not guarantee exclusive access to a file with the name they return. The returned filename is unique when it is generated, but the file must be created and opened in a separate operation. There is no guarantee that these operations happen atomically, which gives an attacker the opportunity to interfere with the file before it is opened, for example, by creating a symbolic link with that name.

For example, in the mktemp documentation, we can see that using this method is not recommended. If the file is created using mktemp, another process may access this file before it is opened.

As we can see in the documentation, the recommendation is to replace the use of mktemp by mkstemp, or use some of the secure functions in the tempfile module, such as NamedTemporaryFile.

The following script opens a temporary file and writes a set of results to it in a secure way.

You can find the following code in the writing_file_temp_secure.py file:

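```python
from tempfile import NamedTemporaryFile

def write_results(results):
    # NamedTemporaryFile creates and opens the file in a single step;
    # delete=False keeps it on disk after it is closed
    with NamedTemporaryFile(delete=False) as temp_file:
        temp_file.write(bytes(results, "utf-8"))
        print("Results written to", temp_file.name)

write_results("writing in a temp file")
```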

In the preceding script, we are using NamedTemporaryFile to create a file in a secure way.
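The lower-level tempfile.mkstemp() function, mentioned earlier as the replacement for mktemp(), can be used as follows. This is a sketch; the prefix and suffix values are arbitrary. mkstemp() creates the file atomically, makes it readable and writable only by the creating user, and returns an open file descriptor, but it never deletes the file, so the caller must remove it:

```python
import os
import tempfile

# mkstemp() atomically creates and opens a new file that only the current
# user can read and write, returning an OS-level descriptor and the path
fd, path = tempfile.mkstemp(prefix="results_", suffix=".txt")
try:
    with os.fdopen(fd, "w", encoding="utf-8") as temp_file:
        temp_file.write("writing in a temp file")
    with open(path, encoding="utf-8") as temp_file:
        content = temp_file.read()
finally:
    os.remove(path)  # mkstemp() never deletes the file automatically

print(content)
```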

Now that we have reviewed the security of some Python modules, let's move on to learning how to get more information about our Python code by using a static code analysis tool for detecting vulnerabilities.

Static code analysis for detecting vulnerabilities

In this section, we will cover Bandit as a static code analyzer for detecting vulnerabilities. We'll do this by reviewing the tools available in the Python ecosystem for static code analysis and then taking a closer look at Bandit.

Introducing static code analysis

The objective of static analysis is to search the code and identify potential problems. This is an effective way to find code problems cheaply, compared to dynamic analysis, which involves code execution. However, running an effective static analysis requires overcoming a number of challenges.

For example, if we want to detect inputs that are not being validated when we are using the eval() function or the subprocess module, we could create our own parser that would detect specific rules to make sure that the different modules are used in a secure way.

The simplest form of static analysis would be to search through the code line by line for specific strings. However, we can take this one step further and parse the Abstract Syntax Tree, or AST, of the code to perform more concrete and complex queries.
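To make the idea of AST-based analysis concrete, the following toy checker, built only on the standard ast module, flags calls to eval() or exec() and any call with a literal shell=True argument. It is a simplified sketch for illustration, not how Bandit or any other tool is implemented, and the DANGEROUS_CALLS set is an assumption:

```python
import ast

# Hypothetical set of function names treated as dangerous in this sketch
DANGEROUS_CALLS = {"eval", "exec"}


def find_issues(source):
    """Return sorted (line, message) tuples for risky calls in source code."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...)
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            issues.append((node.lineno, f"call to {node.func.id}()"))
        # Any call that passes shell=True as a literal keyword argument
        for keyword in node.keywords:
            if (keyword.arg == "shell"
                    and isinstance(keyword.value, ast.Constant)
                    and keyword.value.value is True):
                issues.append((node.lineno, "call with shell=True"))
    return sorted(issues)


sample = '''
import subprocess
user = input()
eval(user)
subprocess.call("echo " + user, shell=True)
subprocess.call(["echo", user])
'''
for line, message in find_issues(sample):
    print(f"line {line}: {message}")
```

Because it works on the syntax tree rather than on raw text, the checker ignores the word "eval" inside strings or comments, which a line-by-line string search would report.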

Once we have the ability to perform analyses, we must decide when to run the checks. Ideally, the tools should run both locally, giving developers rapid feedback while they write code, and in the continuous integration pipeline, before the code is merged into the main code base.

Considering the complexity of these problems and the size of the code bases in a typical software project, it is a clear benefit to have tools that automatically help to identify security vulnerabilities.

Introducing Pylint and Dlint

Pylint is one of the classic static code analyzers. The tool checks code for compliance with the PEP 8 style guide for Python code. Pylint also helps with refactoring by detecting duplicated code, among other things. An optional extension can even check whether function parameters are consistently and properly documented for subsequent users.

Tip

The Python user community has adopted a style guide called PEP 8 that makes code reading and consistency between programs for different users easier: https://www.python.org/dev/peps/pep-0008.

Developers can use plugins to extend the functionality of the tool. On request (with the -j option), Pylint uses multiple CPU cores at the same time, speeding up the process, especially for large code bases. You can also integrate Pylint with many IDEs and text editors, such as Emacs, Vim, and PyCharm, and use it with continuous integration tools such as Jenkins or Travis.

A similar tool with a focus on security is Dlint. This tool provides a set of rules, implemented as linters, that define what we want to search for, together with a mechanism that evaluates those rules against our code base. These rules verify best practices for writing secure Python code.

To evaluate these rules on our code base, Dlint uses the Flake8 module, http://flake8.pycqa.org/en/latest. This approach lets Flake8 do the work of parsing Python's abstract syntax tree (AST), while Dlint focuses on a set of rules aimed at detecting insecure code. The rules available for detecting insecure code are listed in the Dlint documentation at https://github.com/duo-labs/dlint/tree/master/docs.

Next, we will review Bandit, a security-focused static analysis tool that examines Python code for typical vulnerabilities, which makes it a recommended complement to Pylint and Dlint. The tool examines, in particular, XML processing, problematic SQL queries, and encryption. Users can enable and disable individual tests or add their own.

The Bandit static code analyzer

Bandit is a tool designed to find common security issues in Python code. Internally, it processes every source code file of a Python project, creating an Abstract Syntax Tree (AST) from it, and runs suitable plugins against the AST nodes. Using the ast module, source code is translated into a tree of Python syntax nodes.

The ast module can only parse Python code that is valid for the interpreter version from which it is imported. For example, if you use the ast module from a Python 3.7 interpreter, the code being analyzed must be valid Python 3.7 code. To analyze the code, you only need to specify the path to your Python code.

Since Bandit is distributed on the PyPI repository, the best way to install it is by using the pip install command:

```bash
$ pip install bandit
```

With the -h option, we can see all the arguments of this tool:

Figure 11.2 – Bandit command options

The use of Bandit can be customized: its tests are implemented as plugins, and we can choose which ones to run, for example, by grouping them into profiles. If you want to execute only the tests in the ShellInjection profile, you can try the following command:

```bash
$ bandit samples/*.py -p ShellInjection
```

You can find some examples to analyze in the GitHub repository: https://github.com/PyCQA/bandit/tree/master/examples

For example, if we analyze the subprocess_shell.py script located in https://github.com/PyCQA/bandit/blob/master/examples/subprocess_shell.py, we can get information about the use of the subprocess module.

By default, Bandit parses each selected Python file into an abstract syntax tree and runs its tests against it. Once the testing is complete, it produces a report listing the security issues found in the source code of the target. For example, the following command writes the report in HTML format (-f html) to the subprocess_shell.html file (-o):

```bash
$ bandit subprocess_shell.py -f html -o subprocess_shell.html
```

In the following screenshot, we can see the output of executing an analysis over the subprocess_shell.py script:

Figure 11.3 – Bandit output report

In summary, Bandit scans your code for vulnerabilities associated with Python modules, such as common security issues involving the subprocess module. It rates the security risk from low to high and informs you which lines of code trigger the security problem in question.

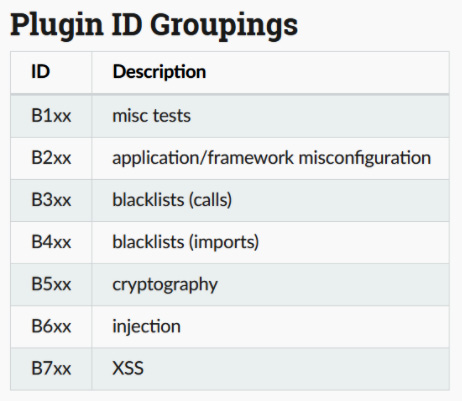

Bandit test plugins

Bandit supports a number of different tests in Python code to identify several security problems. These tests are developed as plugins, and new ones can be developed to expand the functionality Bandit provides by default.

In the following screenshot, we can see the available plugins installed by default. Each plugin provides a different analysis and focuses on analyzing specific functions:

Figure 11.4 – Plugins available for analyzing specific Python functions

For example, the B602 plugin, subprocess_popen_with_shell_equals_true, looks for calls to subprocess functions, such as subprocess.Popen, that pass the shell=True argument. This type of call is not recommended, as it is vulnerable to shell injection attacks.

At the following URL, we can view documentation pertaining to the B602 plugin:

https://bandit.readthedocs.io/en/latest/plugins/b602_subprocess_popen_with_shell_equals_true.html

According to this documentation, the plugin looks specifically for subprocesses spawned through a command shell, a form of invocation that is dangerous because it is exposed to various shell injection attacks.
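To make the risk concrete, here is a minimal sketch, using only the standard library, of the pattern B602 flags and two safer alternatives. The function names are hypothetical, and the child process is the current interpreter (sys.executable) so that the example runs on any platform. run_with_shell() is included only for contrast and is never called with untrusted input.

```python
import shlex
import subprocess
import sys

def run_with_shell(user_value: str) -> str:
    # Pattern flagged by B602: the string is parsed by the system shell,
    # so input such as "x; rm -rf ~" would run a second command.
    return subprocess.run("echo " + user_value, shell=True,
                          capture_output=True, text=True).stdout

def run_without_shell(user_value: str) -> str:
    # Safer: arguments are passed as a list and no shell is started,
    # so ';', '|' or '$(...)' in user_value are just ordinary characters.
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_value],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()

def build_shell_command(user_value: str) -> str:
    # If a shell really is unavoidable, quote every untrusted argument.
    return "echo " + shlex.quote(user_value)
```

With the input "hello; echo pwned", run_without_shell() returns the string unchanged, because the semicolon never reaches a shell, and build_shell_command("a; b") returns "echo 'a; b'", which a shell treats as a single argument.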

As the shell_injection section of the official documentation shows, the plugin is configured with the subprocess methods and calls that it checks for the shell=True argument:

```yaml
shell_injection:
    # Start a process using the subprocess module, or one of its wrappers.
    subprocess:
        - subprocess.Popen
        - subprocess.call
```

In the following screenshot, we can see an output execution of this plugin:

Figure 11.5 – Executing plugins for detecting security issues with subprocess modules

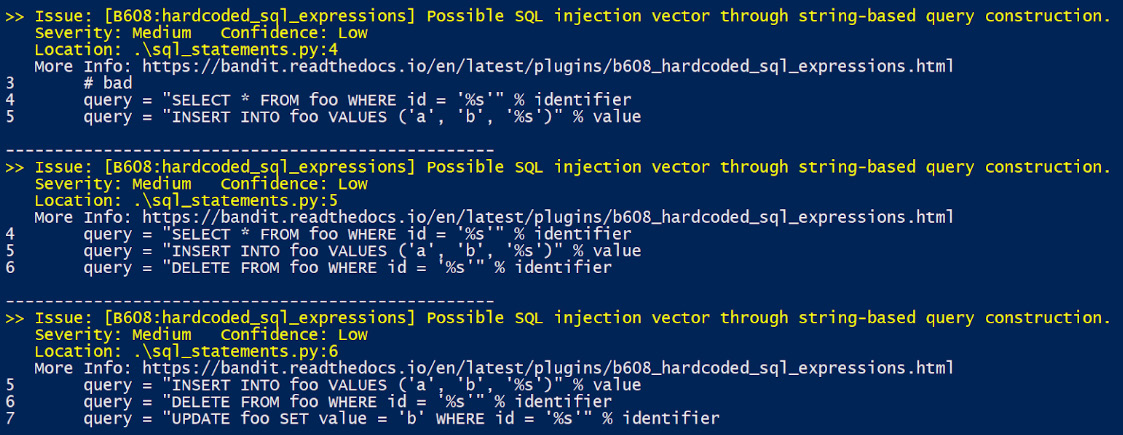

A SQL injection attack consists of inserting, or injecting, a SQL query through the input data provided to an application. The B608 plugin (Test for SQL Injection) looks for strings that resemble SQL statements and are built with some kind of string construction operation. For example, it can detect the following SQL-related strings in Python code:

SELECT %s FROM derp;" % var

"SELECT thing FROM " + tab

"SELECT " + val + " FROM " + tab + …

"SELECT {} FROM derp;".format(var)

In the following screenshot, we can see an output execution of this plugin:

Figure 11.6 – Executing plugins for detecting security issues associated with SQL injection
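The usual fix for what B608 reports is a parameterized query. The following minimal sketch uses the standard library's sqlite3 module with an in-memory database; the users table and the function names are hypothetical. The first function builds the query with % formatting, exactly the kind of string B608 flags, while the second passes the value separately through a ? placeholder.

```python
import sqlite3

def create_demo_db() -> sqlite3.Connection:
    # Hypothetical table used only for this example
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn

def find_user_unsafe(conn, name):
    # Flagged by B608: the user input becomes part of the SQL text
    query = "SELECT id, name FROM users WHERE name = '%s'" % name
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized query: the driver sends name as data, never as SQL
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()
```

With the input x' OR '1'='1, find_user_unsafe() returns every row in the table, because the WHERE clause becomes always true, while find_user_safe() returns an empty list, since no user has that literal name.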

In addition, Bandit checks calls and imports against a blacklist of functions from Python modules that are considered to have possible security implications, so that it can detect functions that may not be used in a secure way. You can find more information in the Bandit documentation.

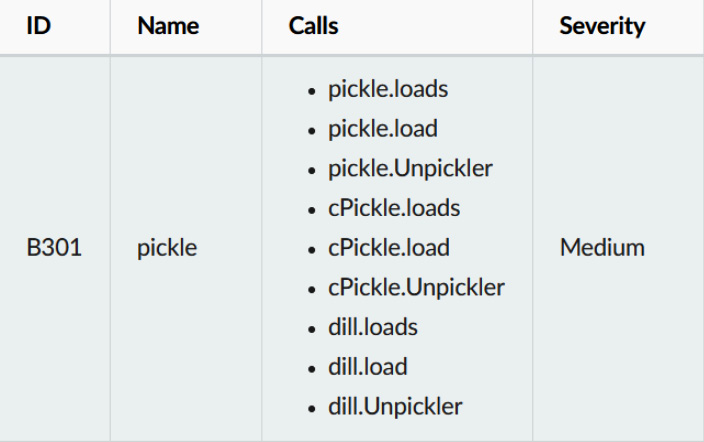

In the following screenshot, we can see the pickle-related calls and functions that Bandit can detect:

Figure 11.7 – Pickle module calls and functions

If the pickle module is used in your Python code, Bandit reports it (test B301), because pickle is unsafe when it is used to deserialize untrusted data.
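The reason pickle is on that list is that unpickling can call arbitrary functions chosen by whoever produced the data. The following harmless sketch illustrates this: the hypothetical Payload class uses __reduce__ to make pickle call eval() on load, so deserializing the bytes evaluates an expression instead of recreating an object. For untrusted data, a format such as JSON, which only produces plain data types, is a safer choice.

```python
import json
import pickle

class Payload:
    def __reduce__(self):
        # Tells pickle: "to rebuild this object, call eval('2 + 3')".
        # A real attacker would return something like (os.system, ("...",)).
        return (eval, ("2 + 3",))

malicious_bytes = pickle.dumps(Payload())

def load_untrusted_pickle(data: bytes):
    # Unsafe: runs whatever callable the serialized data specifies
    return pickle.loads(data)

def load_untrusted_json(text: str):
    # Safer: json only builds dicts, lists, strings, numbers, booleans and None
    return json.loads(text)
```

Calling load_untrusted_pickle(malicious_bytes) returns 5 rather than a Payload instance, which shows that code chosen by the data's author ran during deserialization.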

Now that we have reviewed Bandit as a static code analysis tool for detecting security issues associated with Python modules, let's move on to learning how to detect Python modules with backdoors and malicious code in the PyPI repository.

Detecting Python modules with backdoors and malicious code

In this section, we will look at how to detect Python modules with backdoors and malicious code. We'll do this by reviewing insecure packages in PyPI, covering how to detect backdoors in Python modules, and examining an example of a denial-of-service vulnerability in a Python module.

Insecure packages in PyPI

When you import a module into your Python program, the interpreter runs the module's code. This means that you need to be careful with the modules you import. PyPI is a fantastic resource, but the code submitted is often not verified, so you may encounter malicious packages whose names differ only slightly from those of popular packages.

You can find an article analyzing malicious packages found to be typo-squatting in the Python Package Index at the following URL: https://snyk.io/blog/malicious-packages-found-to-be-typo-squatting-in-pypi.

For example, security researchers have found malicious packages published to PyPI with names similar to those of popular packages, but that execute arbitrary code instead. The main problem is that PyPI doesn't have a mechanism for software developers to report software that is malicious or may break other software. Also, developers usually install packages on their system without checking their content or origin.
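As a first line of defense against typo-squatting, you can compare the names in a requirements list against packages you already trust before installing anything. The following sketch is a simple heuristic, not a real detection tool: it uses difflib.get_close_matches() from the standard library to flag names that are suspiciously similar, but not identical, to names in a hypothetical allow list (POPULAR_PACKAGES).

```python
import difflib

# Hypothetical allow list of packages the team already uses and trusts
POPULAR_PACKAGES = ["requests", "urllib3", "flask", "django", "numpy", "paramiko"]

def flag_possible_typosquats(requirements, known=POPULAR_PACKAGES, cutoff=0.8):
    """Return {requested_name: similar_trusted_name} for suspicious names."""
    findings = {}
    for name in requirements:
        normalized = name.strip().lower()
        if normalized in known:
            continue  # exact match with a trusted name
        close = difflib.get_close_matches(normalized, known, n=1, cutoff=cutoff)
        if close:
            findings[name] = close[0]
    return findings
```

For example, flag_possible_typosquats(["requests", "reqeusts"]) returns {"reqeusts": "requests"}. A flagged name deserves a manual check; a name that is not flagged is not thereby proven safe.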

Backdoor detection in Python modules

In recent years, security researchers have detected "backdoors" in certain modules. One example is the SSH Decorate module, a Paramiko decorator for Python that offered SSH client functionality. Although it was not very popular, it illustrates how this type of incident can occur, and it was an easy target for spreading this backdoor.

Unfortunately, malicious packages have been found that behave differently from the original package. Some of them covertly download a file and run a background process that opens an interactive shell without requiring a login.

This compromised module, together with other recently published incidents involving modules and repositories, puts the focus on the security of repositories such as PyPI, where, at the time of writing, there is no quick or clear way to report malicious modules, nor a method to verify packages by signature.

The main problem is that anyone can upload a project with malicious code hidden in it, and naive developers could install this package, believing it's "official" because it's on PyPI. Because pip ships with Python, there is an assumption that packages installed through pip are more reliable and conform to certain standards than packages installed from GitHub projects.

The PyPI security team has removed the malicious packages that were detected from the repository, but we will likely encounter similar cases in the future.
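Until packages can be verified by signature, one practical safeguard is to pin the expected hash of every file you install. pip supports this through its hash-checking mode, where each requirement carries a --hash=sha256:... entry and installation is run with pip install --require-hashes -r requirements.txt. The following sketch shows the same idea in plain Python for a file you have downloaded yourself; the function names are hypothetical, and the expected hash must come from a source you trust.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=65536):
    # Read in chunks so that large archives do not have to fit in memory
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_download(path, expected_sha256):
    # compare_digest() compares the two hex strings in constant time
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.strip().lower())
```

If verify_download() returns False, the file is not the one you pinned and should not be installed.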

Denial-of-service vulnerability in urllib3

urllib3 is an HTTP client module that is widely used in many Python projects. Because of its widespread use, a vulnerability in this module could expose many applications to a security flaw. The vulnerability discussed here is a denial-of-service issue.

You can find a documented DoS with urllib3 at the following URL: https://snyk.io/vuln/SNYK-PYTHON-URLLIB3-559452.

This vulnerability has been detected in version 1.25.2, as we can see in the GitHub repository: https://github.com/urllib3/urllib3/commit/a74c9cfbaed9f811e7563cfc3dce894928e0221a.

The problem was detected in the _encode_invalid_chars method: for certain inputs, this method is so inefficient that it consumes a large amount of CPU time, which can lead to a denial of service:

Figure 11.8 – urllib3 code vulnerability in _encode_invalid_chars()

The key problem with this method is the percent_encodings list, which contains every percent-encoding match found in the input and is not deduplicated. For a URL of length N, this list can grow linearly with N. The next step, which normalizes the existing percent-encoded bytes, also takes linear time for each entry in the list, so the total running time grows quadratically with the length of the URL, and a long, specially crafted URL can keep the CPU busy long enough to cause a denial of service.
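The following sketch is a simplified illustration written for this chapter, not urllib3's actual code: it mimics the normalization step with a regular expression and str.replace(). In the first function, every match triggers a pass over the whole string, so an input made of N repeated encodings costs on the order of N² operations. The second function processes each distinct encoding only once; since an encoding is % followed by two characters from [a-fA-F0-9], there are at most 22 × 22 = 484 distinct values, so the work grows linearly with the input.

```python
import re

# "%" followed by two hexadecimal digits, in either case
PERCENT_RE = re.compile(r"%[a-fA-F0-9]{2}")

def normalize_quadratic(component: str) -> str:
    # One full-string replace() per match: O(N) matches x O(N) per replace
    for encoding in PERCENT_RE.findall(component):
        component = component.replace(encoding, encoding.upper())
    return component

def normalize_deduplicated(component: str) -> str:
    # set() removes duplicates, so each of the at most 484 distinct
    # encodings triggers a single pass over the string
    for encoding in set(PERCENT_RE.findall(component)):
        component = component.replace(encoding, encoding.upper())
    return component
```

Both functions turn "a%2fb%2Fc%3a" into "a%2Fb%2Fc%3A", but for an input such as "%aa" * 50_000, the first one performs 50,000 full-string replacements while the second performs only one.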

To fix the problem, check which version of urllib3 your projects depend on and update it to the latest version, in which the problem has been solved.

Now that we have examined the Python modules with code that could be the origin of a security issue, let's move on to learning about security in Python web applications with the Flask framework.

Security in Python web applications with the Flask framework

Flask is a microframework written in Python that facilitates the development of web applications following the Model View Controller (MVC) pattern, a software architecture pattern that separates the data and business logic of an application from its representation.

In this section, we will cover security in Python web applications with the Flask framework. Because Flask is widely used in many projects, it is important, from a security point of view, to analyze the aspects that may be the source of a vulnerability in your code.

Rendering an HTML page with Flask

Developers use Jinja2 templates to generate dynamic content. The result of rendering a template is an HTML document in which the dynamic content generation blocks have been processed.

Flask uses the Jinja2 template engine to help you create the dynamic pages of your web application. To render a Jinja2 template, the recommended approach is to call the render_template() method, passing it the name of the template and, as keyword arguments, the variables needed to render it.

Flask looks for templates in the templates directory of the project, which must be located at the same level as the module in which the application is defined. The following example shows how to use this method:

```python
from flask import Flask, request, render_template

app = Flask(__name__)

@app.route("/")
def index():
    parameter = request.args.get('parameter', '')
    return render_template("template.html", data=parameter)
```

In the preceding code, we initialize a Flask application and define a view function that handles requests to the root URL. The index() function gets the parameter value from the URL query string, and the render_template() method passes it to the HTML template as the data variable.

This could be the content of our template file:

```html
<!DOCTYPE html>
<html lang="es">
<head>
    <meta charset="UTF-8">
    <title>Flask Template</title>
</head>
<body>
    {{ data }}
</body>
</html>
```

As we can see, this page looks like a static HTML page, except for the {{ data }} expression. In Jinja2, {{ and }} delimit expressions, while {% and %} delimit statements such as loops and conditionals. Inside the double braces, we use the variables that were passed to the render_template() method; during rendering, each expression is replaced by the value of the corresponding variable. In this way, we can generate dynamic content on our pages.

Cross-site scripting (XSS) in Flask

Cross-Site Scripting (XSS) vulnerabilities allow attackers to execute arbitrary JavaScript code in the browsers of a website's users. They occur when a website takes untrusted data and sends it to other users without validating or sanitizing it.

One example is a website's comment section, where a user submits a message containing malicious JavaScript. When other users view this message, their browsers execute the JavaScript, which may, for example, access the cookies stored in the browser or redirect them to a malicious site.

In the following example, we use the Flask framework to get the parameter from the URL and inject it into the HTML template. The script is insecure because it neither escapes nor sanitizes the input parameter, which makes the application vulnerable to XSS attacks.

You can find the following code in the flask_template_insecure.py file:

```python
from flask import Flask, request, make_response

app = Flask(__name__)

@app.route('/info', methods=['GET'])
def getInfo():
    parameter = request.args.get('parameter', '')  # insecure
    with open('templates/template.html') as template_file:
        html = template_file.read()
    # The raw user input is pasted into the HTML without any escaping
    response = make_response(html.replace('{{ data }}', parameter))
    return response

if __name__ == '__main__':
    app.run(debug=True)
```

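The line parameter = request.args.get('parameter', '') is insecure because the user input is inserted into the page without being validated or escaped. If we are working with Flask, an easy way to avoid this vulnerability is to use Flask's template engine through render_template() instead of replacing text in the HTML by hand, because Flask enables Jinja2's autoescaping for templates with an .html extension.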

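Alternatively, you can escape the input explicitly with the escape function, which converts characters such as <, >, &, and quotes into HTML-safe sequences, so that the browser displays them instead of interpreting them as markup. In Flask 1.x and 2.x, you can import escape from the flask package; it was deprecated in Flask 2.3 and removed in Flask 3.0, where you can import it from the markupsafe package instead:

```python
from flask import escape  # Flask 3.x: from markupsafe import escape

parameter = escape(request.args.get('parameter', ''))  # secure
```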

Another alternative is the escape() function from the standard library's html module.
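As a sketch of that last option, the following function applies html.escape() to the parameter before performing the same {{ data }} replacement as flask_template_insecure.py. The function name is hypothetical, and Flask is left out so that the example runs with the standard library alone; in the Flask view, you would pass request.args.get('parameter', '') as user_value.

```python
import html

def render_info(template: str, user_value: str) -> str:
    # html.escape() converts &, <, > and (with quote=True, the default) " and '
    # into character references, so injected markup is displayed as plain text
    return template.replace("{{ data }}", html.escape(user_value))
```

For example, render_info("<body>{{ data }}</body>", "<script>alert(1)</script>") returns "<body>&lt;script&gt;alert(1)&lt;/script&gt;</body>", which the browser shows as text instead of executing.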

Disabling debug mode in the Flask app

Running a Flask app in debug mode may allow an attacker to run arbitrary code through the debugger. From a security standpoint, it is important to ensure that Flask applications that are run in a production environment have debugging disabled.

You can find the following code in the flask_debug.py file:

```python
from flask import Flask

app = Flask(__name__)

class MyException(Exception):
    status_code = 400

    def __init__(self, message, status_code=None):
        super().__init__(message)
        self.message = message
        if status_code is not None:
            self.status_code = status_code

@app.route('/showException')
def main():
    raise MyException('MyException', status_code=500)

if __name__ == '__main__':
    app.run(debug=True)  # insecure
```

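In the preceding script, if debug mode is enabled and we request the /showException URL, Flask displays the exception traceback in the browser through its interactive debugger. If you start the application with the flask run command instead of running the script directly, you can enable development mode by setting the FLASK_ENV environment variable with the following command (in Flask 2.2 and later, FLASK_ENV is deprecated in favor of FLASK_DEBUG=1 or the --debug option):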

$ export FLASK_ENV=development

To avoid exposing this output, disable debug mode with debug=False (the default) in production. You can find more information in the Flask documentation.
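One way to make sure debug mode never reaches production by accident is to make it opt-in and read it from the environment. The following sketch assumes a hypothetical APP_DEBUG variable; anything other than the exact value "1", including a missing variable, leaves debugging disabled.

```python
import os

def debug_enabled(environ=None) -> bool:
    # Debug mode is opt-in: a missing variable or any value other than "1" disables it
    if environ is None:
        environ = os.environ
    return environ.get("APP_DEBUG", "0") == "1"

# In flask_debug.py, the last line would become: app.run(debug=debug_enabled())
```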

Security redirections with Flask

Another security problem that we may experience while working with Flask is unvalidated input that influences the URL used in a redirect, which may enable phishing attacks. Through open redirects, attackers can trick users into following a link that points to a trustworthy site but then redirects them to a malicious site. Because the link starts with the trusted domain, and the malicious destination can be URL-encoded to hide it, users find the attack hard to spot.

You can find the following code in the flask_redirect_insecure.py file:

```python
from flask import Flask, redirect, Response

app = Flask(__name__)

@app.route('/redirect')
def redirect_url():
    return redirect("http://www.domain.com/", code=302)  # insecure

@app.route('/url/<url>')
def change_location(url):
    response = Response(status=302)
    response.headers["Location"] = url  # insecure: the target comes straight from user input
    return response

if __name__ == '__main__':
    app.run(debug=True)
```

To mitigate this security issue, you can perform strict validation of the external input to ensure that the final URL is valid and appropriate for the application.

You can find the following code in the flask_redirect_secure.py file:

```python
from flask import Flask, redirect, abort

app = Flask(__name__)

valid_locations = ['www.packtpub.com', 'valid_url']

def getSanitizedLocation(location):
    # Only locations included in the whitelist are accepted
    if location in valid_locations:
        return location
    return None

@app.route('/redirect/<url>')
def redirect_url(url):
    sanitizedLocation = getSanitizedLocation(url)  # secure
    if sanitizedLocation is None:
        abort(400)  # reject any location that is not whitelisted
    return redirect("http://" + sanitizedLocation, code=302)

if __name__ == '__main__':
    app.run(debug=True)
```

In the preceding script, we are using a whitelist called valid_locations with a fixed list of permitted redirect URLs, generating an error if the input URL does not match an entry in that list.
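The whitelist in flask_redirect_secure.py compares bare host names. When the redirect target is a full URL, a safer check parses it and compares the host name exactly rather than looking for a substring, since a test such as "www.packtpub.com" in url also accepts https://www.packtpub.com.evil.example/. The following standard-library sketch assumes a hypothetical ALLOWED_HOSTS set and, for simplicity, also rejects relative and scheme-relative URLs.

```python
from urllib.parse import urlparse

# Hypothetical set of hosts the application is allowed to redirect to
ALLOWED_HOSTS = {"www.packtpub.com"}

def is_safe_redirect(target: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    parsed = urlparse(target)
    # Only absolute http(s) URLs are accepted; javascript:, data:, "//host"
    # and relative paths are rejected in this simplified sketch
    if parsed.scheme not in ("http", "https"):
        return False
    # hostname is lower-cased and excludes any "user@" prefix and the port
    return parsed.hostname in allowed_hosts
```

With this check, https://www.packtpub.com/books is accepted, while https://www.packtpub.com.evil.example/ and https://www.packtpub.com@evil.example/ are rejected because their real host is not in the set.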

Now that we have reviewed some tips related to security in the Flask framework, let's move on to learning about security best practices in Python projects.

Python security best practices

In this section, we'll look at Python security best practices. We'll do this by learning about recommendations for installing modules and packages in a Python project and reviewing services for checking security in Python projects.

Using packages with the __init__.py interface

Organizing code into packages with an __init__.py file provides better segregation of privileges and functionality and a better overall architecture. Designing applications with packages in mind is a good strategy, especially for more complex projects. The package's __init__.py file gives you better control over imports and over which interfaces, such as variables, functions, and classes, are exposed.

For example, we can use this file to initialize a package of our application and control, from a single place, which of its modules and names are available to the rest of the code.
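The following sketch shows this idea with a hypothetical package, netsec, that it writes to a temporary directory: __init__.py re-exports only scan_host() and lists it in __all__, while the helper in the private _scanner module stays out of the public interface. The package, module, and function names are illustrative assumptions.

```python
import importlib
import sys
import tempfile
from pathlib import Path

INIT_PY = '''"""Public interface of the hypothetical netsec package."""
from ._scanner import scan_host

# Only the names listed here are exported by "from netsec import *"
__all__ = ["scan_host"]
'''

SCANNER_PY = '''def _build_probe(host):
    # Internal helper, not part of the package interface
    return f"probe:{host}"


def scan_host(host):
    return _build_probe(host)
'''

def create_package(root: Path) -> None:
    pkg = root / "netsec"
    pkg.mkdir()
    (pkg / "__init__.py").write_text(INIT_PY)
    (pkg / "_scanner.py").write_text(SCANNER_PY)

def exported_names() -> list:
    """Build the package in a temporary directory and return its public names."""
    with tempfile.TemporaryDirectory() as tmp:
        create_package(Path(tmp))
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            package = importlib.import_module("netsec")
        finally:
            sys.path.remove(tmp)
    # "from netsec import *" would import exactly these names
    return sorted(package.__all__)
```

exported_names() returns ["scan_host"]. Note that _build_probe is still reachable as netsec._scanner._build_probe: the leading underscore and __all__ document the intended interface rather than enforce access control.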

Updating your Python version

Python 3 was released in December 2008, but some developers still use older versions of Python in their projects. One problem here is that Python 2.7 and older versions no longer receive security updates. Python 3 also provides improvements for developers; for example, input handling and exception handling were improved (in Python 2, input() evaluated the text typed by the user as a Python expression). Python 2.7 reached its end of life on January 1, 2020, so if you are still using it, you should plan to migrate to Python 3 as soon as possible.

Installing virtualenv

Rather than installing modules and packages globally on your local computer, the recommendation is to use a virtual environment for every project. This means that if you add a dependency with security problems to one project, it won't affect the others, because each module you install in the project is isolated from the modules installed globally on the system.

Virtualenv creates an independent Python environment by building a separate folder for each project's packages. Alternatively, you can look at Pipenv, which adds more features for building stable applications, such as a lock file that pins the exact versions of your dependencies.
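From the command line, an isolated environment is usually created with python -m venv .venv. The same can be done from Python with the standard library's venv module, as in the following sketch; the .venv directory name is only a convention, and with_pip=False is used so that the example runs quickly and offline (in practice, you would normally keep pip).

```python
import venv
from pathlib import Path

def create_project_env(project_dir: Path) -> Path:
    """Create an isolated environment in <project_dir>/.venv and return its path."""
    env_dir = project_dir / ".venv"
    # Packages installed in this environment stay out of the global site-packages
    venv.create(env_dir, with_pip=False)
    return env_dir
```

After calling create_project_env(Path(".")), you would activate the environment with source .venv/bin/activate on Linux and macOS, or .venv\Scripts\activate on Windows, before installing the project's dependencies.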

Installing dependencies

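You can use pip to install Python modules and their dependencies in a project. pip also provides a flag called --trusted-host, which marks a repository host as trusted, as in the following command:

pip install --trusted-host pypi.python.org <package_name>

Use this flag with care: according to the pip documentation, --trusted-host tells pip to trust the host even though it does not have valid (or any) HTTPS, which means that certificate verification is skipped for that host. From a security standpoint, it is safer to keep pip's default HTTPS verification when installing from PyPI and to reserve --trusted-host for internal mirrors that you control.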

In the following screenshot, we can see the options of the pip install command, including the --trusted-host option:

Figure 11.9 – The trusted-host option of the pip install command

In the following section, we are going to review some online services for checking security in Python projects.

Using services to check security in Python projects

In the Python ecosystem, we can find some tools for analyzing Python dependencies. These services can scan your local virtual environment and requirements files for security issues, detect the versions of the packages installed in your environment, and flag modules that are outdated or have known vulnerabilities:

- LGTM (https://lgtm.com) is a free service for open source projects that checks our code for vulnerabilities related to SQL injection, CSRF, and XSS. (LGTM was retired at the end of 2022; its CodeQL analysis is now available through GitHub code scanning.)

- Safety (https://pyup.io/safety) is a command-line tool you can use to check your local virtual environment and dependencies available in the requirements.txt file. This tool generates a report that indicates whether you are using a module with security issues.

- Requires.io (https://requires.io/) is a service that monitors Python dependencies and notifies you when outdated or vulnerable dependencies are discovered. It lets you detect libraries and dependencies in your projects that are not up to date and that, from a security point of view, may pose a risk for your application. For each package, you can see the version you are currently using next to the latest available version, so that you can review the latest changes made to each module and decide whether upgrading is advisable for your project.

- Snyk (https://app.snyk.io) makes checking your Python dependencies easy. It provides a free tier that includes unlimited scans for open source projects and 200 scans every month for private repositories. Snyk recently released improved support for Python in Snyk Open Source, allowing developers to remediate vulnerabilities in dependencies with the help of automated fix pull requests.
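Conceptually, these services match the exact versions of your dependencies against a database of known vulnerabilities. The following sketch shows that idea in miniature: it reads requirements.txt-style lines, reports entries that are not pinned with ==, and compares pinned versions with a hypothetical table of minimum safe versions. The package name examplepkg and its version numbers are invented for illustration, and the parser only understands simple name==X.Y.Z lines; real tools rely on a maintained vulnerability database and a full version parser such as packaging.version.

```python
def parse_version(text: str) -> tuple:
    # Simplified: only purely numeric versions such as "1.25.2" are supported
    return tuple(int(part) for part in text.split("."))

def audit_requirements(lines, minimum_safe):
    """Return (name, problem) pairs for requirements.txt-style lines."""
    findings = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        if "==" not in line:
            findings.append((line, "unpinned: exact version unknown"))
            continue
        name, version = (part.strip() for part in line.split("==", 1))
        floor = minimum_safe.get(name.lower())
        if floor is not None and parse_version(version) < parse_version(floor):
            findings.append((name, f"{version} is older than {floor}"))
    return findings
```

Unpinned entries are reported because, without an exact version, no tool can tell which release, and therefore which vulnerabilities, will actually be installed.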

LGTM follows the same business model and way of working as services such as Travis: it lets us connect our public GitHub repositories so that our code is analyzed automatically. The service provides a list of rules related to Python code security.

Next, we are going to analyze some of the Python-related security rules that LGTM has defined in its database:

Figure 11.10 – LGTM Python security rules

Among the list of rules that it is capable of detecting, we can highlight the following:

- Incomplete URL substring sanitization: Sanitizing URLs that may be unreliable is an important technique to prevent attacks such as request spoofing and malicious redirects. This is usually done by checking that the domain of a URL is in a set of allowed domains. We can find an example of this case at https://lgtm.com/rules/1507386916281.

- Use of a broken or weak cryptographic algorithm: Some algorithms provided by cryptography libraries, such as DES, are known to be weak. This problem can be solved by using a strong, modern algorithm, such as AES-128 or RSA-2048 for encryption, and SHA-2 or SHA-3 for secure hashing. We can find an example of a weak cryptographic algorithm at the URL https://lgtm.com/rules/1506299418482.

- Request without certificate validation: Making a request without certificate validation can allow man-in-the-middle attacks. This issue can be resolved by keeping certificate validation enabled, for example, by using verify=True (the default in the requests library) instead of verify=False when making a request. We can find an example of this case at the URL https://lgtm.com/rules/1506755127042.

- Deserializing untrusted input: Deserializing user-controlled data can allow attackers to execute arbitrary code. This problem can be solved by using safer formats, such as JSON, instead of serialized objects. We can find an example of this case at the URL https://lgtm.com/rules/1506218107765.

- Reflected server-side cross-site scripting: This problem can be overcome by escaping user input before writing it to the page. Most frameworks also provide their own escape functions, such as flask.escape(). We can find an example of this case at the URL https://lgtm.com/rules/1506064236628.

- URL redirection from a remote source: URL redirection based on unvalidated user input can send users to malicious websites. This problem can be solved by keeping a list of allowed redirects on the server and then selecting from that list based on the user's input. We can find an example of this case at the URL https://lgtm.com/rules/1506021017581.

- Information exposure through an exception: Leaking information about an exception, such as messages and stack traces, to an external user can disclose details about implementation that are useful for an attacker in terms of building an exploit. This problem can be solved by sending a more generic error message to the user, which reveals less detail. We can find an example of this case at the URL https://lgtm.com/rules/1506701555634.

- SQL query built from user-controlled sources: Building a SQL query from user-controlled sources makes the application vulnerable to the insertion of malicious SQL code. Using query parameters or prepared statements will solve this issue. We can find an example of this case at the URL https://lgtm.com/rules/1505998656266/.
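For the "Request without certificate validation" rule, the same principle applies outside the requests library. The following standard-library sketch uses urllib.request with a context from ssl.create_default_context(), which requires a valid certificate and checks that it matches the host name. The fetch() helper is hypothetical and is not called here, so the example needs no network access.

```python
import ssl
import urllib.request

def make_verified_context() -> ssl.SSLContext:
    # Defaults: verify_mode == CERT_REQUIRED and check_hostname == True,
    # using the system's trusted CA certificates
    return ssl.create_default_context()

def fetch(url: str, timeout: float = 10.0) -> bytes:
    # Hypothetical helper: the request fails with an error if the server's
    # certificate is invalid or does not match the host name
    with urllib.request.urlopen(url, context=make_verified_context(),
                                timeout=timeout) as resp:
        return resp.read()
```

The pattern these rules flag is the opposite: setting check_hostname to False, using ssl.CERT_NONE, or passing verify=False to requests.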

These tools can also analyze the changes automatically every time a pull request is made on a repository and tell us whether they raise any type of security alert.

Understanding all of your dependencies

If you are using the Flask web framework, it is important to understand the open source libraries that Flask imports. Indirect dependencies are as likely to introduce risk as direct dependencies, but these risks are less likely to be noticed. Tools such as those mentioned earlier can help you understand your entire dependency tree and fix problems in these dependencies.

Summary

Python is a powerful and easy-to-learn language, but from a security point of view, it is necessary to validate all inputs. The language imposes few limits or controls, so it is the developer's responsibility to know what can be done and what to avoid.

In this chapter, the objective has been to provide a set of guidelines for reviewing Python source code. Also, we reviewed Bandit as a static code analyzer to identify security issues that developers can easily overlook. However, the tools are only as smart as their rules, and they usually only cover a small part of all possible security issues.

In the next chapter, we will introduce forensics and review the primary Python tools for extracting information from memory and SQLite databases, performing network forensics with PcapXray, getting information from the Windows registry, and using the logging module to register errors and debug Python scripts.

Questions

As we conclude, here is a list of questions for you to test your knowledge regarding this chapter's material. You will find the answers in the Assessments section of the Appendix:

- Which function does Python provide to evaluate a string of Python code?

- Which is the recommended function from the yaml module for converting a YAML document to a Python object in a secure way?

- Which Python module and method returns a sanitized string that can be used in a shell command line in a secure way without any issues to interpret the commands?

- Which Bandit plugin has the capacity to search methods and calls related to subprocess modules that are using the shell = True argument?

- What is the function provided by Flask to escape and validate the input data?

Further reading

- ast module documentation: https://docs.python.org/3/library/ast.html#ast.literal_eval

- Pickle module documentation: https://docs.python.org/3.7/library/pickle.html

- shlex module documentation: https://docs.python.org/3/library/shlex.html#shlex.quote

- mkstemp documentation: https://docs.python.org/3/library/tempfile.html#tempfile.mkstemp

- NamedTemporaryFile documentation: https://docs.python.org/3/library/tempfile.html#tempfile.NamedTemporaryFile

- Pylint official page: https://www.pylint.org

- Jenkins: https://jenkins.io, and Travis: https://travis-ci.org are continuous integration/continuous deployment tools

- GitHub repository Dlint project: https://github.com/duo-labs/dlint

- GitHub repository Bandit project: https://github.com/PyCQA/bandit

- Bandit documentation related to blacklist calls: https://bandit.readthedocs.io/en/latest/blacklists/blacklist_calls.html

- Jinja2 templates documentation: https://palletsprojects.com/p/jinja/

- html module documentation: https://docs.python.org/3/library/html.html#html.escape

- Flask documentation: https://flask.palletsprojects.com/en/1.1.x/quickstart/

- LGTM Python security rules: https://lgtm.com/search?q=python%20security&t=rules