CHAPTER 4: MEASUREMENT – COMMON GROUND FOR CLIENT AND PROVIDER?

Things would run a lot more smoothly if we didn’t have the passengers to contend with.

Anonymous rail manager

Providing an IT service is a bit like running a train service. People soon know when things go wrong and they tend not to be very patient about it. They just expect things to work like a Swiss watch. And everybody thinks it’s simple. Yet, behind the scenes, it needs reliable hardware and software and constant management attention, with well-defined processes, both to minimise problems and to deal with them efficiently when they do arise.

Measurement can be the glue holding things together. So long as there is a willingness on both sides, it can provide a common vocabulary that helps customer and provider to understand what the customer is getting. However, if the trade-offs and constraints involved in deciding acceptable service levels have not been made plain to enable user buy-in, measurement will probably just highlight unresolved differences that need to be reconciled before it can be used as a beneficial tool.

What are the numbers telling you?

A train passenger comments:

‘All I want is for them to run on time. As it is you have to get up an hour earlier than you should, just to make sure you get to your meetings on time. And when you get delays, they never tell you till you’re a captive audience and you can’t go another way. You’re lucky if they tell you what’s gone wrong and they always say it’ll be fixed faster than it really is. I’m surprised they don’t lose the franchise because they’re awful.’

A train company manager responds:

‘We’re actually achieving good punctuality and reliability figures, well above the contractual requirement. We’re committed to delivering the best possible service because that way we improve our revenues, we grow our business and we’re more likely, with a good reputation, to win the franchise again. We’ve put a lot of effort into fleet reliability, which is now very good, and we’re working more closely than ever with the rail infrastructure company to address the remaining track and signalling issues. Our operational practices are proven to be effective. Our incident and problem management is well developed, so when things do go wrong we deal with both the immediate impact and the underlying cause. The figures bear it out.’

An independent observer comments:

‘What we aren’t being told is that the communication between provider and customers isn’t as good as it should be. The company does an annual customer satisfaction survey and the results show this every single time. The hard measures show things are fine (though there’s always room for improvement) but the soft customer measures have a different story to tell. Both sides actually have access to a common set of figures that show that service is perfectly respectable, but customer care and communications are in need of improvement. All they need to do is understand the numbers and act on what the numbers are telling them.’

Align your goals

A key theme of this book is the alignment of client and provider measures around what both sides expect of IT provision.

It’s surprising how often you hear of misalignment, even with an internal provider, for example:

- IT and business goals are not aligned;

- the business is dissatisfied with IT facilities;

- IT quality and change-handling are putting the business at risk.

While measurement will help to identify and diagnose delivery problems, it can’t resolve a failure to line up IT and business goals in the first place. Indeed, if you don’t line these up, there is an increased risk of using measurement to prop up the wrong decisions!

Inputs, outputs and outcomes

Let us further consider measurement of inputs, outputs and outcomes, which we introduced earlier. IT, like any business activity, has inputs and outputs, which are pivotal to provider/client relationships. IT inputs and outputs contribute to client outcomes; just as importantly, but of less interest in a chapter on common ground, IT inputs and outputs also contribute to provider outcomes like profitability and repeat business.

What constitutes an input and what constitutes an output depends on your perspective. Inputs are the resources used to do the work. For an IT service providing support to one or more businesses, the inputs will include the IT provider staff, the hardware and software, and the funding to pay for them.

The outputs will be the service, which will be expected to comply with the contract or SLA. The handling of change requests, problems and complaints will be included as service outputs, probably measured as volumes handled and statistics on efficiency of handling (e.g. on-time closure). The outputs are in effect the IT provided to the business.
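As a rough illustration of ‘volumes handled and on-time closure’, the sketch below counts closed requests of one kind and works out the percentage closed by their due date. The record layout (`Request`), the function name `closure_stats` and the sample dates are assumptions made for the example; a real service desk tool will hold this data in its own form, and the contract or SLA will define what counts as ‘on time’.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Request:
    """One change request, problem or complaint (hypothetical record layout)."""
    kind: str                # e.g. "change", "problem", "complaint"
    due: date                # closure date agreed with the customer
    closed: Optional[date]   # None while the request is still open


def closure_stats(requests, kind):
    """Return (volume closed, percentage closed on or before the due date).

    The percentage is None when nothing of that kind has been closed yet.
    """
    closed = [r for r in requests if r.kind == kind and r.closed is not None]
    if not closed:
        return 0, None
    on_time = sum(1 for r in closed if r.closed <= r.due)
    return len(closed), 100.0 * on_time / len(closed)


# Illustrative data only, not taken from any real service.
sample = [
    Request("change", date(2024, 3, 8), date(2024, 3, 7)),
    Request("change", date(2024, 3, 15), date(2024, 3, 15)),
    Request("change", date(2024, 3, 20), date(2024, 3, 26)),
    Request("change", date(2024, 3, 29), date(2024, 3, 28)),
    Request("change", date(2024, 4, 5), None),  # still open, so not counted
]
volume, pct = closure_stats(sample, "change")
print(volume, pct)  # 4 75.0
```

Open requests are left out of the percentage here; whether overdue open requests should count against the provider is a choice for client and provider to agree, not something the arithmetic can settle.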

Inputs tend to be common across most IT service provision, with quantitative variations (e.g. in annual costs) reflecting things like scale of operation, efficiency and customer requirements. Many IT service outputs are qualitatively common across customer organisations, but there are variations in the subset of generic services provided (e.g. application development).

Businesses are also interested in outcomes – the effects of the IT on the business it is supporting and, typically, on the business’s customers, for example, the number of new accounts opened or of mortgages let. The outcomes reflect what the service is being provided for, so are much more customer-specific than inputs and outputs. But it is important for the IT provider to understand what the customer outcomes are expected to be, even if they are not contractual; this is because the business is quite likely to judge the IT provider on whether the IT supports the business’s fundamental drivers.

Summing up, the business and the provider will be interested in common measures of outputs (compliance with SLA/contract), some input measures, especially costs, and in fulfilment of outcomes. Achievement of client outcomes will probably be measurable, but the IT provider’s part in contributing to client outcomes may not be very amenable to measurement.

The provider is highly likely to need additional measures to show how effectively it is operating, including measures concerned with its own processes.

Overlapping perspectives

Beauty is in the eye of the beholder.

Origin obscure.

Funder’s perspective

The IT client organisation, whose business will probably depend on IT, will want to define what it is to get for its money and check that it gets it, typically in terms of:

- services

- availability and reliability

- capacity

- customer service

- responsiveness to change and problems.

Some form of customer satisfaction check may be included in the client organisation’s assessment of whether it is getting what it is paying for.

User’s perspective

The users want a service that performs as advertised. Actually they probably want better than that, but their absolute expectation – or hope – will be ‘reliably available and usable’. Then there’s the question of whether it supports what they need to do. How does the provider treat users when things go wrong? How does it handle the situation generally when things go wrong? Does it fix faults, or do the users have to complain about the same things over and over again? How does the provider handle change? Does the bill mean the users are getting value for money?

Provider’s perspective

The provider’s main purpose is to serve. It will probably have financial constraints, such as overall or unit cost ceilings, and it may have revenue targets related to the service being provided. It will generally want to provide a service that meets or exceeds the commitments in the contract or SLA and, at the same time, satisfy or delight customers and stakeholders.

Thus, the provider will want to run the services as advertised or slightly better than that. It’ll want people to be satisfied with customer care, especially when there are changes and problems. But it lives in the real world. The funding available may not stretch to providing everybody with what they ideally would like. Things do go wrong and people get frustrated. How these realities are addressed, and how good customer communications are, will then be of pivotal importance.

The stakeholders and customers have no real interest in the details underpinning an effective performance. The provider, on the other hand, must track those aspects that underpin performance and affect both the quality of service provided and the cost of provision. Things like quality and availability of staff and reliability of infrastructure (mean time between failures and time to fix) will contribute to a reliable performance. The time taken to restore normality after service faults will directly affect customers, but the time taken to deal with underlying problems, which is essential information for the provider, may only be of passing interest to the customer.

The IT provider will need to track performance against the measures of interest to customers and other stakeholders, and also be on top of things like change, problem and incident management performance, infrastructure availability and reliability, people performance and productivity.

Providers may need to delve deeper into their processes to check if they are effective, efficient and repeatable. Process repeatability is important for quality and efficiency; the alternative is for staff to keep reinventing the wheel, which costs money and is unreliable. Compliance with process standards, and process effectiveness and efficiency, will be of particular concern if they are suspected of being below par.

What are the measures in common?

The business using IT, its funders and users all share an interest with the provider in measures of service quality and cost. We’ll look at these in more detail later, but they will typically include (but not necessarily be limited to) adherence to contractual (or SLA) obligations, which themselves could include some or all of the following:

- percentage availability of the service;

- percentage availability of key applications;

- incidence and duration of outages and other significant incidents, categorised by incident severity (incident duration statistics are sometimes expressed as mean time to fix). Note that the related statistic on the mean time to fix underlying problems, which has an impact on incident frequency because an unresolved problem can cause an incident to recur, is often of no interest to the client organisation, although it ought to be of interest if the service is unstable;

- delivery performance of projects and changes: on time, to specification (or customer satisfaction) and to budget? Depending on the nature and make-up of the project, this indicator can be a reflection of client-side behaviour as well as provider performance. For example, if the client organisation keeps changing its requirements or is unavailable to make key decisions when they are scheduled, then it should not be surprised if the project goes over time or over budget;

- value for money, assessed through statistics like cost per user and project/change efficiency;

- customer satisfaction, assessed through a standardised, repeatable measure (questionnaire).
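Several of the measures above are simple arithmetic once the underlying records exist. The following is a minimal sketch, under stated assumptions, of how percentage availability, mean time to fix and cost per user might be calculated. The `Incident` layout, the function names and all figures are hypothetical, and the sketch assumes that incidents do not overlap and fall wholly within the reporting period; the contract or SLA would normally define the service hours and what counts as an outage.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """One service outage (hypothetical record layout)."""
    started: datetime
    restored: datetime

    @property
    def duration(self) -> timedelta:
        return self.restored - self.started


def total_downtime(incidents) -> timedelta:
    """Sum of incident durations; assumes incidents do not overlap."""
    return sum((i.duration for i in incidents), timedelta())


def availability_pct(period: timedelta, incidents) -> float:
    """Percentage of the reporting period with no outage in progress."""
    return 100.0 * (1 - total_downtime(incidents) / period)


def mean_time_to_fix(incidents) -> timedelta:
    """Average incident duration; zero if there were no incidents."""
    if not incidents:
        return timedelta()
    return total_downtime(incidents) / len(incidents)


def cost_per_user(total_cost: float, users: int) -> float:
    """Service cost for the period divided by the number of users."""
    return total_cost / users


# Illustrative figures only: a 30-day period with two outages.
period = timedelta(days=30)
incidents = [
    Incident(datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 11, 0)),    # 2 hours
    Incident(datetime(2024, 3, 18, 14, 0), datetime(2024, 3, 18, 18, 0)), # 4 hours
]
print(round(availability_pct(period, incidents), 2))  # 99.17
print(mean_time_to_fix(incidents))                    # 3:00:00
print(cost_per_user(120_000.0, 400))                  # 300.0
```

Note that the same six hours of downtime gives a lower availability figure if the agreed service period is business hours only, which is one reason client and provider need to agree the basis of calculation before comparing numbers.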

As we saw earlier, there will quite likely be a desire for the client’s and provider’s IT-related interests to be strategically aligned. That is an aspect of the relationship, however, that isn’t readily assessed by measurement.

The people on the provider side responsible for driving and improving performance, and for achieving the best possible value for money, will need more measures than those above to guide their work.

In the rest of this book, we’ll look in more detail at measurements for client and provider, illustrated with some examples.

The focus of measurement depends on the context

Whereas both client and provider will have a common interest in the generic measures just outlined, the focus of attention for both will depend on the context.

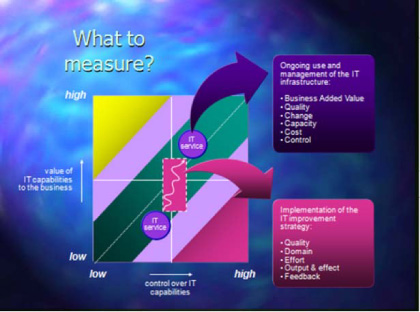

Figure 3: Measurement focus

Figure 3 neatly illustrates the importance of context. If the business’s control of the IT on which it depends is low, then it needs to get a grip.

Maybe the provider needs to get a grip too – or maybe the client needs to get a grip on the provider. Regardless, as shown in Figure 3, attention needs to be paid to implementing IT improvement, which means measuring and acting on:

- IT quality

- IT domains (areas of focus)

- effort/resources deployed

- outputs and outcomes

- customer and staff feedback.

If the client needs to secure better business value from its IT, then both client and provider need to be able to assess and act on the following aspects of the client’s IT:

- business added value (is it doing as much for our business as it could?)

- quality

- effective handling of business- and IT-engendered change

- capacity management

- cost management

- overall control.

These focuses aren’t either/or. You need to control your IT and improve its business value. You can’t easily do the latter if you haven’t brought your IT under reasonable control. But you should pay particular attention to your most significant challenge.