A simple program that runs from top to bottom

This is called procedural programming, because you write simple statements, and they are executed from top to bottom. Even if you add statements that alter the execution flow, the program is still easy to follow. If you call a function when working with procedural programming, your program will “jump” to a different place in memory and execute its contents, but those lines are also executed procedurally, in the order they were written, until control returns to the caller.
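As a minimal illustration, the following sketch runs strictly from top to bottom, with one jump into a function and back. The function and variable names are made up for this example.

```swift
// Hypothetical helper: control jumps here when it is called,
// runs these lines in order, and then returns to the caller.
func square(_ value: Int) -> Int {
    let result = value * value
    return result
}

let base = 4                                    // 1. runs first
let squared = square(base)                      // 2. jumps into square(_:) and comes back
let message = "\(base) squared is \(squared)"   // 3. runs after the call returns
print(message)                                  // 4. prints "4 squared is 16"
```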

Even people who are not programmers can follow a set of instructions if the steps are written in a specific order and done one at a time. Someone following a cooking recipe, or someone building a doghouse from an online tutorial, may not be a programmer, but people are naturally good at doing something if they have the steps clearly laid out.

But computer programs grow and become more complex. While it is true that a lot of complex software can be written that follows such a linear execution flow, programs will often need to start doing more than one thing at once. Rather than having a clear code execution path that you can follow with the naked eye, your program may need to execute in such a way that it’s not obvious what’s going on at a simple glance at the source code. Such programs are multithreaded, and they can run multiple (and often, but not always, unrelated) code paths at the same time.

In this book, we will learn how to use Apple’s async/await implementation for asynchronous and multithreaded programming. In this chapter, we will also talk about older technologies Apple provides for this purpose, and how the new async/await system is better and helps you to not concern yourself with traditional concurrency pitfalls.

Important Concepts to Know

Concurrency and asynchronous programming are very wide topics. While I’d love to cover everything, doing so would go beyond the scope of this book. Instead, we will define four important concepts that will be relevant while we explore Apple’s async/await system, introduced in 2021. We will define them with as few words as possible, because it’s important that you keep them in mind while you work through the chapters of this book. The concepts of the new system itself will be covered in the upcoming chapters.

Apple is not the original creator of the async/await system. The technology has been used in other platforms in the past. Microsoft announced C# would get async/await support in 2011, and C# with these features was officially released to the public in 2012.

Threads

The concept of a thread can vary even when talked about in the same context (in this case, concurrency and asynchronous programming). In this book, we will treat a thread as a unit of work that can run independently. In iOS, the Main Thread runs the UI of your app, so every UI-related task (updating the view hierarchy, removing and adding views) must take place on the main thread. Attempting to update the UI outside of the main thread can result in unwanted behavior or, even worse, crashes.

In low-level multithreading frameworks, developers create threads manually, and they need to synchronize them, stop them, and perform other thread management operations on their own. Manually handling threads is one of the hardest parts of dealing with multithreading in software.

Concurrency and Asynchronous Programming

Concurrency is the ability of a thread (or your program) to deal with multiple things at once. It may be responding to different events, such as network handlers, UI event handlers, OS interruptions, and more. There may be multiple threads, and all of them can be concurrent.

Biometric unlock is an asynchronous task

(1) calls context.evaluatePolicy, which is an asynchronous call. This will ask the system to suspend your app so it can take over. The system will request permission to use biometrics while your app is suspended. The thread your app was running on may be doing something entirely different, and not even related to your app, while the system is running context.evaluatePolicy. When the user responds to the prompt, either accepting or rejecting the biometric request, the system will deliver the result to your app. It will wait for an appropriate time to notify your app of the user’s selection. The selection is delivered in the completion handler (also called a callback) on (2), at which point your app is in control of the thread again.

The selection may be delivered on a different thread than the one that launched the context.evaluatePolicy call. This is important to know, because if the response updates the UI, you need to do that work on the main thread. This is also called a blocking mechanism or interruption, because the work that depends on evaluatePolicy cannot continue until the result arrives. If you have done iOS development for at least a few months, you are familiar with this way of dealing with various events. URLSession, image pickers, and many other APIs make use of this mechanism.
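The callback flow described above can be sketched without any biometric APIs. In the sketch below, `requestApproval` is a hypothetical stand-in for a system call such as context.evaluatePolicy: it returns immediately and reports its result later, possibly on a different thread. The semaphore exists only so that a command-line run does not exit before the callback fires.

```swift
import Foundation

// Hypothetical stand-in for a system API such as context.evaluatePolicy.
// It returns immediately and reports its result later through a callback.
func requestApproval(completion: @escaping @Sendable (Bool) -> Void) {
    DispatchQueue.global().async {
        Thread.sleep(forTimeInterval: 0.1)  // pretend the user is answering a prompt
        completion(true)                    // (2) result delivered on a background thread
    }
}

let answered = DispatchSemaphore(value: 0)

print("Before the call")
requestApproval { success in                // (1) start the asynchronous work
    print("Approved: \(success), on main thread: \(Thread.isMainThread)")
    answered.signal()
}
print("After the call, the caller keeps running")

// Only needed in a command-line sketch, so the process does not exit early.
answered.wait()
```

Note that "After the call" is printed before the callback runs: the caller is not held up while the result is pending.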

People often think that asynchronous programming is the act of running multiple tasks at once. That is a different concept called multithreading, and we will talk about it in the next point.

If you are thinking of implementing biometric unlock for resources within your app, please don’t use the code above. It has been simplified to explain how concurrency works, and it doesn't have the right safety measures to protect your user's data.

Multithreading is the act of running multiple tasks at once. Multiple threads (hence its name) are usually involved. Many tasks can be running at the same time in the context of your app. Downloading multiple images from the internet at the same time, or downloading a file from your web browser while you open some tabs, are examples of multithreading. This allows us to run tasks in parallel and is sometimes called parallelism.
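As a rough sketch of several tasks running at once, the example below hands three simulated downloads to a concurrent dispatch queue and waits for all of them. `simulateDownload`, `DownloadResults`, and the file names are assumptions made for this illustration; no real networking is involved.

```swift
import Foundation

// Thread-safe store for results produced on several threads at once.
final class DownloadResults: @unchecked Sendable {
    private let lock = NSLock()
    private var sizes: [String: Int] = [:]

    func store(_ size: Int, for name: String) {
        lock.lock()
        defer { lock.unlock() }
        sizes[name] = size
    }

    var all: [String: Int] {
        lock.lock()
        defer { lock.unlock() }
        return sizes
    }
}

// Hypothetical "download": sleeps, then reports a made-up byte count.
func simulateDownload(_ name: String) -> Int {
    Thread.sleep(forTimeInterval: 0.05)
    return name.count * 100
}

let imageNames = ["cat.png", "dog.png", "bird.png"]
let downloads = DownloadResults()
let group = DispatchGroup()

for name in imageNames {
    // Each task goes to a concurrent queue, so several can run at the same time.
    DispatchQueue.global().async(group: group) {
        downloads.store(simulateDownload(name), for: name)
    }
}

group.wait()   // block until every task has finished
print(downloads.all)
```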

Multithreading Pitfalls

Concurrency and multithreading are traditionally hard problems to solve. Ever since their introduction in the computer world, developers have had to develop paradigms and tools to make dealing with concurrency easier. Because programmers are used to thinking procedurally, writing code that executes at unexpected times is hard to get right.

In this section we will talk about some of the problems developers who write low-level multithreading code often face, and the models they have created to work with them. It is important you understand this section because these traditional problems are real, but their solutions are already implemented in the async/await system. It will also help you decide which technology you should use next time you need to implement concurrency or multithreading in your programs.

Deadlocks

In the context of multithreading, a deadlock occurs when two different processes are each waiting for the other to finish, making it impossible for either of them to finish at all.

A schematic diagram depicts the execution flow of Threads A and B: Thread A holds Resource C (check mark) and is denied Resource D (cross mark), while Thread B holds Resource D and is denied Resource C.

Deadlocking in action

Figure 1-1 illustrates how Thread A may try to access Resource C and Resource D, one after the other, and how Thread B may try to do the same but in a different order. In this example, the deadlock will happen rather soon, because Thread A will get hold of Resource C while Thread B gets hold of Resource D. As soon as each thread requests the resource the other one holds, neither can proceed, and the deadlock occurs.

Despite how simple this problem looks, it is the culprit of a lot of bugs in a lot of multithreaded software. The simple solution would be to prevent each process from attempting to access a busy resource. But how can this be achieved?

Solving the Deadlocking Problem

The deadlock problem has many established solutions, with mutexes and semaphores being the most widely used. There is also inter-process communication through pipes, but we will not talk about it because it goes beyond a single program.

Mutex

A schematic diagram depicts the execution flow of Threads A and B: one thread locks Resources C and D and continues execution with the locked resources, while the other waits for the resources to be unlocked.

Using a mutex

Keep in mind that in this specific situation, it means that Thread A and Thread B, while multithreaded, will not run strictly at the same time, because Thread B needs the same resources as Thread A and it will wait for them to be free. For this reason, it is important to identify tasks that can be run in parallel before designing a multithreaded system.
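A minimal sketch of this situation, using Foundation's `NSLock` as the mutex, might look as follows. The `Resource` class and the `work(as:)` function are hypothetical. Both workers take the locks in the same order (C, then D), which is one common way to keep them from deadlocking; as a consequence, the second worker simply waits until the first one is done.

```swift
import Foundation

// A shared resource guarded by its own mutex (NSLock).
final class Resource: @unchecked Sendable {
    let name: String
    let lock = NSLock()
    private(set) var users: [String] = []

    init(name: String) { self.name = name }

    // Call only while holding `lock`.
    func use(by worker: String) { users.append(worker) }
}

let resourceC = Resource(name: "C")
let resourceD = Resource(name: "D")

// Every worker locks C first and D second, so no worker can hold one
// resource while waiting for a worker that holds the other.
func work(as workerName: String) {
    resourceC.lock.lock()
    resourceD.lock.lock()
    resourceC.use(by: workerName)
    resourceD.use(by: workerName)
    resourceD.lock.unlock()
    resourceC.lock.unlock()
}

let group = DispatchGroup()
for workerName in ["A", "B"] {
    DispatchQueue.global().async(group: group) { work(as: workerName) }
}
group.wait()

// Both resources were used by A and B, in the same order.
print(resourceC.users, resourceD.users)
```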

Semaphores

A Semaphore is a type of lock, very similar to a mutex. With this solution, a task will acquire a lock to a resource. Any other tasks that arrive and need that resource will see that the resource is busy. When the original thread frees the resource, it will signal interested parties that the resource is free, and they will follow the same process of locking and signaling as they interact with the resource.

Mutexes and semaphores sound similar, but the key difference lies in what kind of resource they are locking. If you ever find yourself in a situation in which you need to decide what to protect (you won’t find such a case when using async/await), it is important to think about what makes sense in your use case. In general, a mutex can be used to protect anything that doesn’t have any sort of execution on its own, such as files, sockets, and other filesystem elements. Semaphores can be used to protect execution in your program itself, such as shared code execution paths. These can be mutating functions or classes with shared state. There may be cases in which executing a function on more than one thread will have unintended consequences, and semaphores are a good tool for that. For example, a function that writes a log to disk may be protected with a semaphore, because if multiple processes write to the log at the same time, the log will become corrupted for the end user. Whoever holds the semaphore for the logging function needs to signal the other interested parties when it is free so they can continue their execution.

A rule of thumb is to add a timeout whenever a semaphore is acquired and let processes time out if they take too long. Do this only when it is acceptable in the context of your program, that is, when canceling a task cannot corrupt data or cause other unintended consequences. With a timeout in place, the semaphore becomes free again after a certain period.
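One way to apply a timeout with GCD's `DispatchSemaphore` is sketched below. `writeLog` and `logPermit` are hypothetical names. In this variant the waiting party gives up when the permit does not become free in time, which is only one possible policy.

```swift
import Foundation

// A semaphore with a single permit guards the hypothetical logging function.
let logPermit = DispatchSemaphore(value: 1)

// Tries to write a log line, giving up if the permit is not free in time.
func writeLog(_ line: String, timeout: DispatchTimeInterval) -> Bool {
    guard logPermit.wait(timeout: .now() + timeout) == .success else {
        return false             // timed out: the caller decides what to do next
    }
    defer { logPermit.signal() } // tell waiting parties that the log is free again
    print(line)
    return true
}

let firstWrite = writeLog("App started", timeout: .milliseconds(100))

// Simulate another party holding the permit for too long.
logPermit.wait()
let blockedWrite = writeLog("This line is skipped", timeout: .milliseconds(100))
logPermit.signal()

print(firstWrite, blockedWrite)   // true false
```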

The use of semaphores will allow threads to synchronize, as they will be able to coordinate the resources amongst each other so they both have their fair usage of them.

Starvation

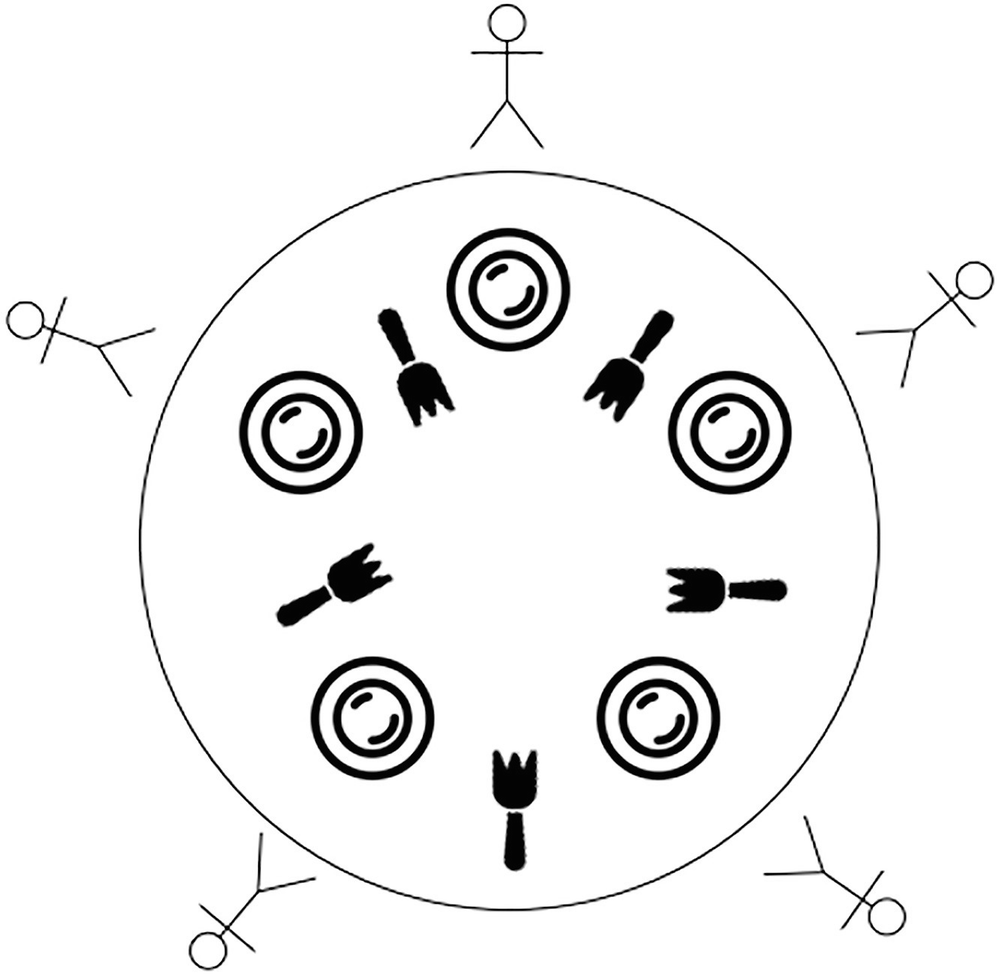

A circular diagram shows five people seated around a dining table with five forks and five plates.

The dining philosophers problem

Figure 1-3 describes what is known as the dining philosophers problem, and it is a classical representation of the starvation problem.

The problem illustrates five philosophers who want to eat at a shared table. All of them have their own dishes, and there are five forks. However, each philosopher needs two forks to eat. This means that at most two philosophers can eat at the same time, and only if they are not sitting directly next to each other. When a philosopher is not eating, he puts both forks down. But there can be a situation in which a philosopher decides to overeat, leaving another to starve.

Luckily for low-level multithreading developers, it’s possible to use the semaphores we talked about above to solve this problem. The idea is to have a semaphore that keeps track of the used forks, so it can signal another philosopher when a fork is free to be used.
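The sketch below shows one common semaphore-based variant of this idea, not necessarily the exact scheme the text has in mind. Each fork is a binary `DispatchSemaphore`, and an extra semaphore (an assumption of this sketch) lets at most four philosophers reach for forks at once, so at least one of them can always pick up both. `MealTally` and `dine` are hypothetical names.

```swift
import Foundation

let philosopherCount = 5
let mealsPerPhilosopher = 3

// One binary semaphore per fork: 1 means free, 0 means in use.
let forks = (0..<philosopherCount).map { _ in DispatchSemaphore(value: 1) }

// Assumption of this sketch: at most four philosophers may reach for
// forks at once, so at least one of them can always pick up both.
let seats = DispatchSemaphore(value: philosopherCount - 1)

// Thread-safe tally of how many times each philosopher has eaten.
final class MealTally: @unchecked Sendable {
    private let lock = NSLock()
    private var meals: [Int]

    init(count: Int) { meals = [Int](repeating: 0, count: count) }

    func recordMeal(for philosopher: Int) {
        lock.lock()
        defer { lock.unlock() }
        meals[philosopher] += 1
    }

    var snapshot: [Int] {
        lock.lock()
        defer { lock.unlock() }
        return meals
    }
}

let tally = MealTally(count: philosopherCount)

func dine(_ philosopher: Int) {
    let left = forks[philosopher]
    let right = forks[(philosopher + 1) % philosopherCount]
    for _ in 0..<mealsPerPhilosopher {
        seats.wait()
        left.wait()      // pick up the left fork
        right.wait()     // pick up the right fork
        tally.recordMeal(for: philosopher)
        right.signal()   // put the forks down and signal the neighbours
        left.signal()
        seats.signal()
    }
}

// Run the five philosophers concurrently and wait until all have finished.
DispatchQueue.concurrentPerform(iterations: philosopherCount) { dine($0) }
print(tally.snapshot)    // [3, 3, 3, 3, 3]
```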

Race Conditions

A schematic diagram depicts the history log in the center, with Thread A and Thread B pointing towards it.

Multiple threads writing to the same file at the same time

If a history log is being written to by multiple threads with no control, events may be registered in a random order, and one thread may overwrite the line another thread just wrote. For this reason, the History Log should be locked during the write operation. A thread writing to it will acquire a mutex on the log and release it when it is done. Any other thread that needs to write to the log will wait until the lock is released.
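The locking scheme described above can be sketched with an in-memory log guarded by an `NSLock`. `HistoryLog` is a hypothetical class made up for this illustration; a real log would write to disk.

```swift
import Foundation

// Hypothetical in-memory history log. The NSLock makes each write exclusive.
final class HistoryLog: @unchecked Sendable {
    private let lock = NSLock()
    private var lines: [String] = []

    func write(_ line: String) {
        lock.lock()               // wait here while another thread is writing
        defer { lock.unlock() }   // free the log once this write is done
        lines.append(line)
    }

    var contents: [String] {
        lock.lock()
        defer { lock.unlock() }
        return lines
    }
}

let historyLog = HistoryLog()

// Two workers (A and B) write 1,000 lines each, potentially at the same time.
DispatchQueue.concurrentPerform(iterations: 2) { index in
    let workerName = index == 0 ? "A" : "B"
    for event in 1...1_000 {
        historyLog.write("Thread \(workerName): event \(event)")
    }
}

print(historyLog.contents.count)   // 2000
```

The order in which lines from A and B appear still varies between runs, but no line is lost or overwritten.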

Deadlocks and race conditions are closely related. The main difference is that in a deadlock we have multiple processes waiting for each other to finish, whereas in a race condition we have multiple processes writing data and corrupting it because none of them knows that the resource in question is being used by someone else. This means that the solutions for deadlocking also apply to race conditions, so lock your resource with a mutex or semaphore to guarantee exclusive access to the resource by one process only. This is called an Atomic Operation.

Livelocks

There is a saying in life that goes “you can have too much of a good thing.” It is important to understand that throwing locks around willy-nilly is not a solution to all multithreading problems. It may be tempting to identify a multithreading issue, throw a mutex at it, and call it a day. Unfortunately, it’s not so easy. You need to understand your problem and identify where the lock should go.

Similarly, this problem can occur when processes are too lenient with the conditions under which they can release and attain resources. If Thread A can ask Thread B for a resource and Thread B complies without any thought, and Thread B can do the same to Thread A, it may happen that eventually they will not be able to ask each other for the resource back because they can’t even get to that point of the execution.

This is a classic example in real life. Suppose you are walking towards a closed door and someone else arrives at it at almost the same time as you. You may want to let the other person go in first, but they may tell you that you should go first instead. If you are familiar with this awkward feeling, you know what a livelock feels like for our multithreaded programs.

Existing Multithreading and Concurrency Tools

Apple offers some lower-level tools to write multithreaded and concurrent code. We will show examples for some of them. They are listed from lower level to higher level. The lower the level, the harder it is to use that system correctly, and the less likely it is that you will encounter it in the real world anyway. You should be aware of these tools so you can be prepared to work with them if you ever need to.

pthreads

pthreads (POSIX Threads) are the implementation of a standard defined by the IEEE. The POSIX part of their name (Portable Operating System Interface) tells us that they are available on many platforms, and not only on Apple’s operating systems. Traditionally, hardware vendors sold their products with their own proprietary multithreading APIs. Thanks to this standard, you can expect a familiar interface across POSIX systems. The great advantage of pthreads is that they are available on a wide array of POSIX-compliant systems, including some Linux distributions.

The disadvantage is that pthreads expose a pure C API. C is a very low-level programming language, and it is a language that is slowly fading from the knowledge base of many developers. The younger they are, the less likely they are to know C. While I do not expect C to disappear any time soon, the truth is that it’s very hard to find iOS developers who know the C programming language, let alone use it correctly. pthreads are the lowest-level multithreading APIs we have available, and as such they have a very steep learning curve. If you opt to use pthreads, it’s because you have highly specific needs to control the entire multithreading and concurrency flow, though most developers will not need to drop down to this level often, if at all. You will be launching threads and managing resources such as mutexes manually. If you are familiar with pthreads because you worked with them on another platform, you can use your knowledge here too, but be aware that future programmers who maintain your code may not be familiar with either pthreads or the C language itself.

NSThreads

NSThread is a Foundation object provided by Apple. NSThread objects are low level, but not as low level as pthreads. Since they are Foundation objects, they offer an Objective-C interface. Offering this interface exposes the tool to many more developers, but as time goes on, fewer iOS developers are likely to know Objective-C. In fact, it’s entirely possible to find iOS developers with some years of experience who have never had to use this language before, although NSThread can be used from Swift as well.

If you want to work with multithreading, you will end up creating multiple NSThread objects, and you are responsible for managing them all. Each NSThread object has properties you can use to check the status of the thread, such as executing, cancelled, and finished. You can set the priority of each thread which is a very real use case in multithreading. You can even get the main thread back and ask if the current thread is the main thread to avoid doing expensive work on it.
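A small sketch of these properties, using `Thread` (the Swift name for NSThread), is shown below. The thread name is a made-up example, and the semaphore is only there because `Thread` offers no built-in way to wait for a thread to finish.

```swift
import Foundation

let done = DispatchSemaphore(value: 0)

let worker = Thread {
    // This block runs on the new thread, never on the main thread.
    print("Worker on main thread? \(Thread.isMainThread)")   // false
    done.signal()
}
worker.name = "com.example.worker"    // hypothetical name, handy when debugging
worker.qualityOfService = .utility    // priority hint for the scheduler

print("Executing before start? \(worker.isExecuting)")       // false
worker.start()

// Thread has no "join", so a semaphore tells us when the block has run.
done.wait()

print("Caller on main thread? \(Thread.isMainThread)")
print("Cancelled? \(worker.isCancelled)")                    // false
```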

Just like pthreads, most developers will not have to drop down to this level often, if at all.

The Grand Central Dispatch (GCD)

Calling code on the main thread

Looks familiar? This is one of the most famous calls in the GCD because it lets you defer some work quickly and painlessly to the main thread. This call is likely to be found after a subclass of URLSessionTask finishes some work and you want to update your UI, for example.
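A sketch of this pattern follows. `loadGreeting` is a hypothetical function: it does its work on a background queue and then hands the result to a delivery queue, which defaults to `DispatchQueue.main`. Because a command-line program has no running main queue, the demonstration delivers to a private serial queue instead; in an app you would keep the default and update your UI inside the completion handler.

```swift
import Foundation

// Hypothetical loader: does slow work on a background queue, then hands the
// result to `queue`. In an app you would keep the default, the main queue.
func loadGreeting(deliverOn queue: DispatchQueue = .main,
                  completion: @escaping @Sendable (String) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async {
        let greeting = "Hello, " + "GCD"   // stand-in for a network call
        queue.async {
            completion(greeting)           // UI updates would go here
        }
    }
}

// A command-line sketch has no running main queue, so deliver the result
// to a private serial queue here instead.
let callbackQueue = DispatchQueue(label: "com.example.callbacks")
let delivered = DispatchSemaphore(value: 0)

loadGreeting(deliverOn: callbackQueue) { greeting in
    print(greeting)                        // Hello, GCD
    delivered.signal()
}
delivered.wait()
```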

The pyramid of doom

Listing 1-4 shows hypothetical code that would retrieve a user’s data and their favorite movies from somewhere. This usage is very typical with the GCD. When your work requires more than one call, and each call depends on the result of another, your code starts looking like a pyramid of doom. There are ways to solve this (like creating different functions for fetching the user data and their movies), but it’s not entirely elegant.
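To make the shape of the problem concrete, here is a self-contained sketch of such nesting. It is not the book's original listing: `User`, `Movie`, `fetchUser`, `fetchFavoriteMovies`, and `loadProfile` are all hypothetical, and the results are delivered to a private queue so the sketch can run as a command-line program (an app would use `DispatchQueue.main`).

```swift
import Foundation

struct User: Sendable { let id: Int; let name: String }
struct Movie: Sendable { let title: String }

// Hypothetical callback-based APIs; real ones would talk to a server.
func fetchUser(id: Int, completion: @escaping @Sendable (User) -> Void) {
    DispatchQueue.global().async {
        completion(User(id: id, name: "Alice"))
    }
}

func fetchFavoriteMovies(for user: User,
                         completion: @escaping @Sendable ([Movie]) -> Void) {
    DispatchQueue.global().async {
        completion([Movie(title: "Movie A"), Movie(title: "Movie B")])
    }
}

// Every dependent step adds another level of nesting.
func loadProfile(id: Int, resultQueue: DispatchQueue,
                 completion: @escaping @Sendable (String) -> Void) {
    fetchUser(id: id) { user in
        fetchFavoriteMovies(for: user) { movies in
            resultQueue.async {
                let titles = movies.map(\.title).joined(separator: ", ")
                completion("\(user.name) likes \(titles)")
            }
        }
    }
}

let resultQueue = DispatchQueue(label: "com.example.results")
let finished = DispatchSemaphore(value: 0)

loadProfile(id: 1, resultQueue: resultQueue) { summary in
    print(summary)   // Alice likes Movie A, Movie B
    finished.signal()
}
finished.wait()
```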

Despite its drawbacks, the GCD is a very popular way to create concurrent and multithreaded code. It has a lot of protections in place already for you, so you don’t have to be an expert in the theory of multithreading to avoid making mistakes. The samples we saw here only show a very tiny bit of the functionality it offers. While the tool is high level enough to save you from many headaches, it exposes a lot of lower-level functionality, and it gives you the ability to directly interact with some primitives such as semaphores.

Finally, this technology is open source, so it is also available on platforms beyond Apple’s. The GCD is big, and talking about its features would go outside the scope of this book, but be aware that it has been in use for a very long time and it’s possible you are going to see some advanced techniques with it in your career.

The NSOperation APIs

Sitting at a higher level than the GCD, the NSOperation APIs are a high-level tool to do multithreading. Despite the fact they are not as widely known as the GCD, they found their place in some parts of the SDK. For example, the CloudKit APIs make use of this technology.

Multithreaded usage of the NSOperation APIs

Using these APIs is very simple. You begin by creating an OperationQueue. This queue’s responsibility is to execute any task you add to it. You can also give it a name to refer to it later, or if you need to search for it.

In Listing 1-5, we create two tasks, from1To10 and from11To20 (in this case, instances of BlockOperation; the original API is in Objective-C, so in Swift the block is a closure). They begin executing as soon as they are submitted to the queue via the OperationQueue.addOperation call. In this example, you will see that the numbers get printed in an almost interleaved manner. The results you get are going to be different each time you run the program.
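A minimal reconstruction of what such code might look like is sketched below. The operation names come from the text; the queue name is an assumption, and the final wait is only there so a command-line run does not exit before the operations finish.

```swift
import Foundation

let queue = OperationQueue()
queue.name = "com.example.counting"   // hypothetical name

let from1To10 = BlockOperation {
    for number in 1...10 { print(number) }
}

let from11To20 = BlockOperation {
    for number in 11...20 { print(number) }
}

// Both operations may start as soon as they are added,
// so their output can interleave and vary between runs.
queue.addOperation(from1To10)
queue.addOperation(from11To20)

// Only needed in a command-line sketch: wait before the process exits.
queue.waitUntilAllOperationsAreFinished()
```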

It’s very easy to run multiple tasks at once, but what if you want to run one task after the other because one of them depends on the data of another, or simply because it makes sense to do so?

Adding operations as dependencies of other operations

It’s worth noting that while you don’t have thread management abilities with this framework, you can check the status of each operation (isCancelled, isFinished, etc.), and you can cancel an operation at any time (by calling cancel on it). Keep in mind that a cancelled operation still counts as finished, so operations that depend on it are not cancelled automatically; it is up to you to decide whether they should proceed.
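Running one operation after another can be sketched with `addDependency`. The `Steps` recorder and the operation names below are made up for this illustration; the recorder only exists so the execution order can be inspected afterwards.

```swift
import Foundation

// Thread-safe recorder so the order of execution can be inspected afterwards.
final class Steps: @unchecked Sendable {
    private let lock = NSLock()
    private var names: [String] = []

    func add(_ name: String) {
        lock.lock()
        defer { lock.unlock() }
        names.append(name)
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return names
    }
}

let steps = Steps()
let queue = OperationQueue()

let downloadData = BlockOperation { steps.add("download") }
let parseData = BlockOperation { steps.add("parse") }

// parseData will not start until downloadData has finished,
// regardless of the order in which they are added to the queue.
parseData.addDependency(downloadData)

queue.addOperations([parseData, downloadData], waitUntilFinished: true)
print(steps.all)   // ["download", "parse"]
```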

Using the NSOperation APIs is simple, as you have seen. They can still be a great tool when you have simple multithreading needs.

Introducing async/await

async/await is a high-level system to write concurrent and multithreaded code. By using it, you don’t have to think about manual thread management or deadlocks. The system will take care of all those details and more for you under the hood. By using very simple constructs, you will be able to write safe and powerful concurrent and multithreaded code. It is worth noting that this system is very high level. It’s hard to use it incorrectly. While the system won’t give you fine-grained control over the concurrency and multithreading primitives, it will give you a whole set of abstractions to think about multithreaded code very differently. Semantically, it’s hard to compare async/await with any of the other systems we have explored before, because async/await is deeply integrated into Swift, the language, itself. It’s not an add-on in the form of a framework or a library. The primitives this system exposes are easier to understand thanks to Swift’s focus on readability.

async/await in action

Listing 1-7 has gotten rid of a lot of the code in Listing 1-4. You can see that the code that defers the results to the main thread is gone. You can also see that the code can be read from top to bottom, just like normal procedural code! This version of the user data fetching API is simpler to read and simpler to write. @MainActor is what’s known as a global actor. We will explore actors and global actors later in the book. For now, know that @MainActor will ensure that member updates within a function or class marked with it will run on the main thread.
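For comparison with the callback style, a sketch of how such code might look with async/await is shown below. It is not the book's original listing: the types and functions are hypothetical, and the @MainActor annotation mentioned above is left out so that the sketch can run as a command-line program, where a detached task and a semaphore stand in for an app's event loop.

```swift
import Foundation

struct User: Sendable { let id: Int; let name: String }
struct Movie: Sendable { let title: String }

// Hypothetical async APIs; real ones would await a network request.
func fetchUser(id: Int) async -> User {
    User(id: id, name: "Alice")
}

func fetchFavoriteMovies(for user: User) async -> [Movie] {
    [Movie(title: "Movie A"), Movie(title: "Movie B")]
}

// Reads from top to bottom: each `await` suspends until its result is ready.
func loadProfile(id: Int) async -> String {
    let user = await fetchUser(id: id)
    let movies = await fetchFavoriteMovies(for: user)
    let titles = movies.map(\.title).joined(separator: ", ")
    return "\(user.name) likes \(titles)"
}

// Command-line scaffolding only: run the async code and wait for it.
let finished = DispatchSemaphore(value: 0)
Task.detached {
    print(await loadProfile(id: 1))   // Alice likes Movie A, Movie B
    finished.signal()
}
finished.wait()
```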

By understanding when to use the system’s keywords directly (Listing 1-7 shows that async and await are keywords themselves) and the rest of the system’s primitives, you will be able to write concurrent and multithreaded code that any programmer will be able to understand. And to make things even better, if a developer is new to Apple platform development but has experience with another technology that makes use of async/await, they will be able to quickly become familiar with the system. Your codebase will be cleaner and more welcoming for new developers on your team.

Using async/await will prevent you from having to write low-level concurrent code. You will never manage threads directly. Locks are also not your responsibility at all. As you saw, this new system allows us to write expressive code, and pyramids of doom are a thing of the past.

It is important to remember that, as high level as this system is, you may run into the rare case in which you need a lower-level tool. That said, most developers will be able to go through their careers without finding a single use case that requires putting async/await aside.

Requirements

To follow along, you will need to download at least Xcode 13 from the App Store. If you want to use async/await in iOS 14 and iOS 13, you will need Xcode 13.2 or later. You should be comfortable reading and writing Swift code. Some examples will be written in SwiftUI so that the UI code does not distract you from the actual contents of each chapter.

Summary

pthreads

NSThreads

The Grand Central Dispatch (GCD)

NSOperation and related APIs

async/await

Most developers will never need to go down to the pthread or NSThread level. The GCD and NSOperation APIs provide higher-level abstractions, whereas async/await not only provides abstractions for the multithreading primitives, but also a whole system that most developers can use without even knowing what the components underneath are. This new system allows us to write shorter code that is easier to read and easier to write. It’s worth studying this new system in detail, and that’s what this book is about.

Exercises

1. When using traditional concurrency tools, what are some pitfalls you can fall into if you don’t use them correctly?

2. Prior to async/await, what were some concurrency tools available on Apple’s platforms?

3. What advantages does async/await have over other, lower-level concurrency tools?