10

Loading JSON Data

Although many JSON datasets are available, sometimes they are problematic to feed into a database. Often, at the start of a new programming project, a developer will be provided with some sample data that needs to be shoehorned onto the server, usually with little guidance other than “just get it on the server.” This chapter offers some examples of how to do just that.

From Download to Database

Our first example involves a list of US postal, or ZIP, codes. It can be downloaded from http://jsonstudio.com/resources/ and it is free. (There are other similar datasets available that could use the same steps.) Taking the data from a download and converting it into a useful database requires several steps that will be detailed in the pages to follow.

Step 1: Examine the Data

The data for this example is supplied in a file named zips.zip. To unpack it, use unzip zips.zip. This will produce a file named zips.json. Example 10-1 shows the first rows of the file.

Example 10-1 Examining the first rows of the zips.json datafile

The file contains almost 30,000 lines, with one record per line. Before you load all those lines into the server, it helps to determine some of the uses of that information. For illustration purposes, suppose we need to create a datafile in which a user entering a five-digit ZIP code would have city and state values returned. Or a user could enter a city and state set of values and receive the ZIP code.

But what does the data provide to us? It includes fields for city, loc, pop, state, and _id (which is the ZIP code itself). It makes sense to use _id as a primary key.

Step 2: Create the Table

ZIP codes require five numbers in length and some have a leading zero. MySQL’s INT fields will drop any leading zeros, but CHAR fields will not. In this case, the leading zeros need to be retained because they are important, so a CHAR field will be used.

Example 10-2 Creating a table for the JSON data

Step 3: Load the Data Using a Wrapper

Data often needs some tinkering to enable it to be imported into a database—even a schemaless database. We need a way to wrap each line of data into a SQL INSERT statement. This can be done with a very simple BASH script, as shown in Example 10-3.

Example 10-3 BASH script file to wrap the individual lines of zips.json with a SQL statement

This script reads the data from the zips.json file, line by line, and then echoes the content wrapped in a SQL statement. This script can have its output piped to a MySQL session or sent to a file, as shown in Example 10-4.

Example 10-4 Using the script from Example 10-3 to create a file with the generated SQL statements

![]()

You can see in Example 10-5 that the data is now in a proper format for inserting into the database.

Example 10-5 The first several lines of the output of the shell script, after transformation

Finally, we can load the data with a simple mysql -u root -p test < foo command.

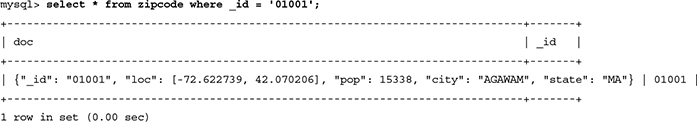

Step 4: Double-Check the Data

At this point, the data needs to be checked for quality. A good place to start is with the first record in the datafile to determine whether the data is complete. For ZIP codes, leading zeros are important and must be retained, so checking for an example with a leading zero assures that this was completed correctly.

Example 10-6 Examining a known good example from the database

Compare the first line in both the raw data and the foo file to ensure that they contain the same data. Then check the data in the database table. This also provides a way for you to check that the leading zero for the _id field has not been stripped (which would have occurred if an INT been used instead of a CHAR data type).

But what about looking up a ZIP code of a given a city and a state?

Example 10-7 Finding the ZIP code given the city and state fields

Many other validations can be performed on the data to spot-check the validity of the information, but the first tests are a good idea. From this point, you could take other steps to ease access of the data, such as using generated columns, views, stored procedures, or indexes on the data for future queries that are known to be desired at this point in development.

jq: JSON CLI Parser

Another option is the jq, a lightweight and flexible command-line JSON processor. This processor acts much like sed in that it enables you to slice, filter, map, and transform data from one format to another. For instance, it can be used to convert JSON data into CSV (comma-separated values) for loading into a non-JSON–columned MySQL database. You can download it from https://stedolan.github.io/jq/, and there is an online version at https://jqplay.org/ for experimentation. Plus, jq uses the Perl Compatible Regular Expressions (PCRE) parser, like many other languages. (This wonderful tool deserves to have much more written about it than the simple examples here, and reading the manual is a quick way to become acquainted with the many features of this tool.)

With No Arguments

With no arguments to jq, it will “pretty print” the JSON document. This is very handy for extremely complex documents with many layers of embedded objects and arrays that are hard to view on a single flat line.

Example 10-8 Using jq without arguments will “pretty print” the JSON document.

Select Certain Fields

On some occasions, not all the data in a JSON document will be of interest, and you can use jq to reform the data and provide only selected parts.

Example 10-9 Using jq to output only some of the data from the source JSON document

In Example 10-10, the value of the city key is converted into a value. Then that new key is given the value of the state key/value pair. The flexibility of jq can be a great asset.

Example 10-10 Modifying the data to convert a key into a value

The Restaurant Collection

MongoDB proved to be a popular NoSQL document store, and one of its example datasets is known as the restaurant collection. It is 25,359 lines of restaurant data that provides a good example for showing how to load data into MySQL. The entire collection can be downloaded from the URL listed in the appendix of this book.

First, notice that there is no _id key/value pair, unlike the ZIP code example. There is, however, a restaurant_id key/value pair that is unique for all the records. With working with InnoDB tables, it helps for you to have a primary key index of your choice. So the restaurant_id is an easy choice for use as a primary key.

Each line of this collection is a JSON object, bounded by curly brackets. Using a script similar to Example 10-3 to load the data seems like a good idea; however, this creates a problem in the data. Many of the records include apostrophes in the restaurant name, which will cause those records to fail with a SQL error—anything after the second apostrophe fails the syntax checker. To fix this, we need to change the single quote (') to a double single quote (''), which the server interprets as a properly escaped single quote in the middle of a literal. There are many ways of doing this, including using a favorite text editor. But large source files may be beyond the size that a text editor can handle. Linux users can use a stream editor such as sed. Once the single quotes are turned into double single quotes, the data can be fed into the server.

In Example 10-11, the data highlighted in bold italics shows what the MySQL syntax checker will actually check. The apostrophe in Kenny's will cause the checker to stop checking the SQL query. To fix this, we’d need to change Kenny's to Kenny''s so that the MySQL server would correctly escape the apostrophe in the name.

Example 10-11 The syntax checker will check data up to the apostrophe in the word “Kenny’s,” but the apostrophe will stop the checker in its tracks.

One of the many commands that we could use is sed. The sed utility is a stream editor from the early days of the UNIX operating system. It is easy to tell it to search for single, single quotes and turn them into two single quotes.

Example 10-12 Here, sed is used to convert single, single quotes into double single quotes.

![]()

Then we can use updated data with the loader script to create a table to feed to the database. This type of data cleaning is typical of what is needed to load third-party data into a database server.

Example 10-13 The example record after the data has been cleaned up and then fed into the server