Clustering Node.js Processes

In multithreaded systems, doing more work in parallel means spinning up more threads. But Node.js uses a single-threaded event loop, so to take advantage of multiple cores or multiple processors on the same computer, you have to spin up more Node processes.

This is called clustering and it’s what Node’s built-in cluster module does. Clustering is a useful technique for scaling up your Node application when there’s unused CPU capacity available. Scaling a Node application is a big topic with lots of choices based on your particular scenario, but no matter how you end up doing it, you’ll probably start with clustering.

To explore how the cluster module works, we’ll build up a program that manages a pool of worker processes to respond to ØMQ requests. This will be a drop-in replacement for our previous responder program. It will use ROUTER, DEALER, and REP sockets to distribute requests to workers.

In all, we’ll end up with a short but powerful program that combines cluster-based, multiprocess work distribution with load-balanced message passing.

Forking Worker Processes in a Cluster

Back in Spawning a Child Process, we used the child_process module’s spawn() function to fire up a process. This works great for executing non-Node processes from your Node program. But for spinning up copies of the same Node program, forking is a better option.

Each time you call the cluster module’s fork() method, it creates a worker process running the same script as the original. To see what I mean, take a look at the following code snippet. It shows the basic framework for a clustered Node application.

```javascript
const cluster = require('cluster');

if (cluster.isMaster) {
  // fork some worker processes
  for (let i = 0; i < 10; i++) {
    cluster.fork();
  }

} else {
  // this is a worker process, do some work
}
```

First, we check whether the current process is the master process. If so, we use cluster.fork() to create additional processes. The fork method launches a new Node.js process running the same script, but one in which cluster.isMaster is false.
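The snippet above hard-codes ten workers. Since the point of clustering is to use otherwise idle cores, one common approach is to cap the pool at the number of CPU cores the machine reports. The following is only a sketch of that idea: poolSize is a hypothetical helper, not part of the cluster module, and the right number of workers for a real application depends on its workload.

```javascript
'use strict';
const os = require('os');

// Hypothetical helper (not part of the cluster module): cap the requested
// number of workers at the number of CPU cores, and never go below one.
// cpuCount is optional and defaults to what the os module reports.
function poolSize(requested, cpuCount) {
  const cores = cpuCount || os.cpus().length;
  return Math.max(1, Math.min(requested, cores));
}

console.log('Would fork ' + poolSize(10) + ' workers on this machine.');
```

In the master branch, the loop bound could then be poolSize(10) instead of the literal 10.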

The forked processes are called workers. They communicate with the master process through events.

For example, the master can listen for workers coming online with code like this:

```javascript
cluster.on('online', function(worker) {
  console.log('Worker ' + worker.process.pid + ' is online.');
});
```

When the cluster module emits an online event, a worker parameter is passed along. One of the properties on this object is process—the same sort of process that you’d find in any Node.js program.

Similarly, the master can listen for processes exiting:

```javascript
cluster.on('exit', function(worker, code, signal) {
  console.log('Worker ' + worker.process.pid + ' exited with code ' + code);
});
```

Like online, the exit event includes a worker object. The event also includes the exit code of the process and what operating-system signal (like SIGINT or SIGTERM) was used to halt the process.
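Because a worker can end either by exiting on its own or by being killed with a signal, a log line that prints only the exit code can be misleading: when a signal killed the worker, the code argument is null. The sketch below shows one way to report both cases. The describeExit helper is hypothetical and exists only for illustration.

```javascript
'use strict';
const cluster = require('cluster');

// Hypothetical helper: build a log line from the arguments of an 'exit' event.
// If a signal killed the worker, report the signal; otherwise report the code.
function describeExit(pid, code, signal) {
  if (signal) {
    return 'Worker ' + pid + ' was killed by signal ' + signal;
  }
  return 'Worker ' + pid + ' exited with code ' + code;
}

if (cluster.isMaster) {
  // Registering the listener does not fork anything by itself.
  cluster.on('exit', function(worker, code, signal) {
    console.log(describeExit(worker.process.pid, code, signal));
  });
}
```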

Building a Cluster

Now it’s time to put everything together, harnessing Node clustering and the ØMQ messaging patterns we’ve been talking about. We’ll build a program that distributes requests to a pool of worker processes.

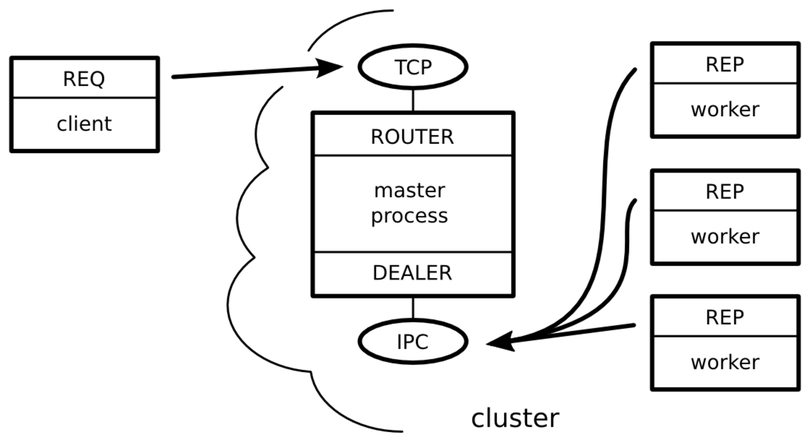

Our master Node process will create ROUTER and DEALER sockets and spin up the workers. Each worker will create a REP socket that connects back to the DEALER.

The following figure illustrates how all these pieces fit together. As in previous figures, the rectangles represent Node.js processes. The ovals are the resources bound by ØMQ sockets, and the arrows show which sockets connect to which endpoints.

Figure 8. A Node.js cluster that routes requests to a pool of workers

The master process is the most stable part of the architecture (it manages the workers), so it’s responsible for doing the binding. The cluster’s worker processes and clients of the service all connect to endpoints bound by the master process. Remember that the flow of messages is decided by the socket types, not which socket happens to bind or connect.

Now let’s get to the code. Open your favorite editor and enter the following.

messaging/zmq-filer-rep-cluster.js

```javascript
'use strict';
const
  cluster = require('cluster'),
  fs = require('fs'),
  zmq = require('zmq');

if (cluster.isMaster) {

  // master process - create ROUTER and DEALER sockets, bind endpoints
  let
    router = zmq.socket('router').bind('tcp://127.0.0.1:5433'),
    dealer = zmq.socket('dealer').bind('ipc://filer-dealer.ipc');

  // forward messages between router and dealer
  router.on('message', function() {
    let frames = Array.prototype.slice.call(arguments);
    dealer.send(frames);
  });

  dealer.on('message', function() {
    let frames = Array.prototype.slice.call(arguments);
    router.send(frames);
  });

  // listen for workers to come online
  cluster.on('online', function(worker) {
    console.log('Worker ' + worker.process.pid + ' is online.');
  });

  // fork three worker processes
  for (let i = 0; i < 3; i++) {
    cluster.fork();
  }

} else {

  // worker process - create REP socket, connect to DEALER
  let responder = zmq.socket('rep').connect('ipc://filer-dealer.ipc');

  responder.on('message', function(data) {

    // parse incoming message
    let request = JSON.parse(data);
    console.log(process.pid + ' received request for: ' + request.path);

    // read file and reply with content
    fs.readFile(request.path, function(err, data) {
      console.log(process.pid + ' sending response');
      responder.send(JSON.stringify({
        pid: process.pid,
        data: data.toString(),
        timestamp: Date.now()
      }));
    });

  });

}
```

Save this file as zmq-filer-rep-cluster.js. This program is a little longer than our previous Node programs, but it should look familiar to you since it’s based entirely on snippets we’ve already discussed.
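The router handler on line 16 relies on Array.prototype.slice.call(arguments). The zmq module passes each frame of a multipart message to the handler as a separate argument, and this idiom copies the array-like arguments object into a real array that can be handed to send(). The sketch below demonstrates the idiom in isolation. It uses a plain EventEmitter as a stand-in for a socket, and the three frames are invented for illustration.

```javascript
'use strict';
const EventEmitter = require('events');

// Stand-in for a ØMQ socket: a plain EventEmitter, used only for illustration.
const fakeSocket = new EventEmitter();
const received = [];

fakeSocket.on('message', function() {
  // arguments is array-like; slice copies it into a real array of frames
  const frames = Array.prototype.slice.call(arguments);
  received.push(frames);
});

// Simulate a message that arrives as three separate frames (made-up values).
fakeSocket.emit('message',
  Buffer.from('client-id'),
  Buffer.from(''),
  Buffer.from('{"path":"target.txt"}'));

console.log(received[0].length + ' frames collected');
```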

Notice that on line 11 the ROUTER socket binds to tcp://127.0.0.1:5433, so it listens for incoming TCP connections on port 5433. This allows the cluster to act as a drop-in replacement for the zmq-filer-rep.js program we developed earlier.

On line 12, the DEALER socket binds an interprocess communication (IPC) endpoint. This is backed by a Unix socket like the one we used in Listening on Unix Sockets.

By convention, ØMQ IPC files should end in the file extension .ipc. In this case, the filer-dealer.ipc file will be created in the current working directory that the cluster was launched from (if it doesn’t exist already).
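To make the working-directory point concrete, the sketch below resolves an ipc:// endpoint to the filesystem path it refers to. The ipcEndpointPath helper is hypothetical and is not something the zmq module provides. It only mirrors how a relative path is resolved against the directory the program was started from.

```javascript
'use strict';
const path = require('path');

// Hypothetical helper: map an ipc:// endpoint to the filesystem path it names.
// Relative paths resolve against cwd; absolute paths are returned unchanged.
function ipcEndpointPath(endpoint, cwd) {
  const prefix = 'ipc://';
  if (endpoint.indexOf(prefix) !== 0) {
    throw new Error('Not an ipc:// endpoint: ' + endpoint);
  }
  return path.posix.resolve(cwd, endpoint.slice(prefix.length));
}

console.log(ipcEndpointPath('ipc://filer-dealer.ipc', process.cwd()));
```

Launching the cluster from a different directory would therefore create a different socket file, which is worth keeping in mind if anything outside the cluster ever needs to connect to that endpoint.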

Let’s run the cluster program to see how it works:

```
$ node --harmony zmq-filer-rep-cluster.js
Worker 37174 is online.
Worker 37172 is online.
Worker 37173 is online.
```

So far so good—the master process spun up the workers, and they’ve all reported in. In a second terminal, fire up our REQ loop program (zmq-filer-req-loop.js):

```
$ node --harmony zmq-filer-req-loop.js
Sending request 1 for target.txt
Sending request 2 for target.txt
Sending request 3 for target.txt
Received response: { pid: 37174, data: '', timestamp: 1371652913066 }
Received response: { pid: 37172, data: '', timestamp: 1371652913075 }
Received response: { pid: 37173, data: '', timestamp: 1371652913083 }
```

Just like our earlier reply program, this clustered approach answers each request in turn.

But notice that the reported process ID (pid) is different for each response received. This shows that the master process is indeed load-balancing the requests to different workers.
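The rotation through worker pids comes from the DEALER socket, which hands outgoing messages to its connected peers in round-robin order. The toy model below imitates that behavior in plain JavaScript. It does not use the zmq API, createRoundRobin is a made-up name, and the pids are simply the ones from the sample output above.

```javascript
'use strict';

// Toy model of a DEALER's outgoing side (not the zmq API): each message goes
// to the next connected peer in turn, wrapping around at the end of the list.
function createRoundRobin(peers) {
  let next = 0;
  return function dispatch(message) {
    const peer = peers[next];
    next = (next + 1) % peers.length;
    return { peer: peer, message: message };
  };
}

// Pids taken from the sample output; any identifiers would do.
const dispatch = createRoundRobin([37174, 37172, 37173]);
for (let i = 1; i <= 4; i++) {
  const result = dispatch('request ' + i);
  console.log(result.message + ' -> worker ' + result.peer);
}
```

With three peers, the fourth request wraps around to the first worker again, which matches what you would expect to see if the REQ loop sent more than three requests.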

Now you have the tools to build a Node.js cluster. There are a few more benefits to using the cluster module for managing worker processes. We’ll come to those in Chapter 6, Scalable Web Services.

Up next, we’ll examine one more messaging pattern offered by ØMQ before closing out the chapter.