3. Oracle Solaris Zones

Operating system virtualization (OSV) was virtually unknown in 2005, when Solaris 10 was released. That release introduced Solaris Zones (also called Solaris Containers, and now called Oracle Solaris Zones), the first fully integrated, production-ready implementation of OSV. In response, several other implementations have been developed or are planned for other operating systems. Zones use a basic model similar to that of an earlier technology, FreeBSD jails. Chapter 1, "Introduction to Virtualization," provided a complete description of OSV. To summarize the key points here, OSV creates virtual OS instances, which are software environments in which applications run; these instances are isolated from each other but share one OS kernel and some or all of one copy of the OS.

Oracle Solaris Zones provide a software environment that, from within the zone, appears to be a complete OS instance. To a process running in a zone, the primary differences stem from the robust yet configurable security boundary around each zone. Zones offer a rich set of features, most of which began as general Solaris features and were later applied to zones. The tight integration between zones and the rest of Solaris minimizes software incompatibility and improves the overall "feel" of zones. Zones provide the following capabilities:

Configurable isolation and security boundaries

Multiple namespaces—one per zone

Flexible software packaging, deployment, and flexible file system assignments

Resource management controls

Network access

Optional direct access to devices

Centralized or localized patch management

Management of zones (e.g., configure, boot, halt, migrate)

This chapter describes the most useful features that can be used with zones, and offers reasons to use them. Although a complete description of each of these features is beyond the scope of this book, more details can be found at docs.oracle.com. The next few sections describe these features and provide simple command-line examples of their usage.

This chapter assumes that you have experience with a UNIX/Linux operating system and are familiar with other Solaris features, including the Solaris 11 packaging system (IPS) and ZFS.

The command examples in this chapter use the prompt GZ# to indicate that a privileged user has assumed the root role so as to execute commands in the computer’s global zone. The prompt zone1# indicates that a command is entered as a privileged user who has assumed the root role in the zone named zone1.

3.1 Introduction

Since their release at the beginning of 2005 as a new feature set of Solaris 10, Oracle Solaris Zones have become a standard environment in many data centers. Further, Solaris Zones are a key architectural element in many Oracle Optimized Solutions. This widespread adoption has been driven by many factors, including zones’ isolation, resource efficiency, observability, configurable security, flexibility, ease of management, and no-cost inclusion in Solaris.

As a form of operating system virtualization, Solaris Zones provide software isolation between workloads. A workload in one zone cannot directly interact with a workload in another zone; the two can communicate only over the network, as separate systems would. This isolation provides a high level of security between workloads.

Zones are also among the most resource-efficient server virtualization technologies. Unlike most hypervisors, zones do not insert additional instructions into the code path followed by an application. As a result, applications running in zones rarely suffer the performance overhead common to many other types of virtualization.

Chapter 1 discussed another advantage of OSV over virtual machines: observability. From the management area, called the global zone, a system administrator with appropriate privileges can observe the actions occurring inside zones. This insight improves the system’s manageability, making it easier to develop a holistic, yet detailed view of the consolidated environment.

Combining multiple workloads on one larger system can let one workload temporarily run faster, because more resources are available to it. However, if one workload overutilizes a resource for a prolonged period, another workload may fail to meet its desired response time. Solaris includes a comprehensive set of resource management features that can limit resource consumption in such situations.

Solaris offers several models of zones, each with its own structure, characteristics, and features. You can choose the model that best fits your environment, and then tailor the zones you create from it.

The original model, often called “native zones,” reduces the labor involved in managing many virtual environments (VEs). Most software packaging operations are executed in the global zone and applied to all of these zones automatically. Management is easiest from the global zone, but all of these zones are tied to the global zone, and are updated together as a unit.

Immutable Zones are a variant of the native model and offer the most robust security. The Solaris file systems in an Immutable Zone cannot be changed from within the zone, even by a privileged user. This model is ideal for web-facing workloads, which are typically the most exposed to malicious users.

New to Solaris 11.2, Kernel Zones offer more independence between a zone and the system’s global zone. This model is appropriate when a zone will be managed as a separate entity. One feature that sets kernel zones apart from other types of zones is the ability to move them to a different computer while they are running—that is, live migration.

Solaris 10 Zones are a Solaris 10 software environment in a Solaris 11 system. Software that will not run in a Solaris 11 environment may run in a Solaris 10 Zone instead. These zones are not affected by the package management operations that modify native zones.

Other types also exist, such as cluster zones and labeled zones. This book does not describe them in detail.

The rest of this chapter describes the features that can be modified to tailor the characteristics of Oracle Solaris Zones.

3.2 What’s New in Oracle Solaris 11 Zones

Solaris 10 users may benefit from a concise list of the new features and significant changes in Solaris 11 Zones:

Kernel Zones offer increased isolation and flexibility, and enable live migration.

Immutable Zones enhance security and manageability by preventing changes to the operating environment.

IPS simplifies Solaris software packaging and ensures consistent and supportable configurations.

Integration with the Solaris 11 Automated Installer enables automated provisioning of systems with Solaris Zones.

Solaris Unified Archives dramatically increase the flexibility of deployment and recovery, including any-to-any transformation.

Multiple per-zone boot environments improve the system’s availability and flexibility.

Solaris 10 Zones deliver an operating environment that is compatible with Solaris 10.

Zones may be NFS servers.

Integration with network virtualization features improves the network isolation of high-scale environments, and enables the creation of arbitrary network architectures within one Solaris instance.

Live zone reconfiguration improves the availability of Solaris Zones.

Zone monitoring is greatly simplified with a new command, zonestat(1M).

These new features and changes are described in this chapter.

3.3 Feature Overview

The Solaris Zones feature set was introduced in the initial release of Solaris 10 in 2005, and later extended in Solaris 11. The Solaris Zones implementation of OSV includes a rich set of capabilities. This section describes the features of zones and provides brief command-line examples illustrating the use of those features.

Solaris Zones are characterized by a high degree of isolation, with the separation between them being enforced by a robust security boundary. They serve as the underlying framework for the Solaris Trusted Extensions feature set. Trusted Extensions has achieved the highest commonly recognized global security certification, which is a tribute to the robustness of the security boundary around each zone.

The Oracle Solaris 10 documentation used two different terms to refer to combinations of its OSV feature set: containers and zones. Solaris 11 no longer distinguishes between those terms, and this book uses the term zones exclusively. However, Solaris Zones are similar in many ways to other OSV implementations that have been added to other operating systems since the release of Solaris Zones. Many of those implementations are also called “containers.”

This chapter describes the features of a Solaris 11.3 system, providing brief examples of their use. Some of the features described in this section did not exist in early updates to Solaris 11. To determine the availability of a particular feature, see the “What’s New” documents for Solaris 11 at docs.oracle.com.

3.3.1 Basic Model

When you install Oracle Solaris 11 on a system, the original operating environment, a traditional UNIX-like system, is also called the global zone. A sufficiently privileged user running in the global zone can create "non-global zones," which are usually called simply "zones." Several types of zones exist. We call zones of the default type "native zones," or simply "zones" when there is no ambiguity.

Most types of zones cannot contain other zones, as shown in Figure 3.1. The exception is kernel zones, which may contain their own non-global zones. If a kernel zone does have its own zones, we will call its global zone the kernel global zone; its non-global zones are called “hosted zones.” However, we discourage nesting zones in this manner, due to the additional complexity and lack of compelling use cases for this practice.

In a system with Solaris Zones, the global zone is the platform management area with the sole purpose of managing zones and the system’s hardware and software resources. It is similar to the control domain (or “dom0”) of a hypervisor-based system in two ways:

The global zone requires resources to operate, such as CPU cycles and RAM. If an application running in the global zone consumes too much of a resource needed by the global zone’s management tools, it may become difficult or even impossible to manage the system.

Users and processes in the global zone are not subject to all of the rules of zones. In terms of process observability, for example, a process in one zone cannot detect processes in other zones. As with a one-way mirror, processes in the global zone—even non-privileged ones—can detect processes in the zones, but users in the zones cannot see processes in the global zone. There is one exception to this observability rule: Processes in a kernel zone are invisible to processes in the global zone.

For these reasons, we recommend limiting the set of users who can access the global zone to system administrators, and limiting their activities to tasks related to the management of hardware and system software.
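The one-way-mirror visibility rule described above can be stated as a small predicate. The following Python sketch is a conceptual model of that rule, not Solaris code: the `Zone` and `Process` types and the zone names are hypothetical, and the model ignores privileges entirely, since the text notes that even non-privileged global zone processes can see into native zones.

```python
from dataclasses import dataclass

GLOBAL = "global"


@dataclass(frozen=True)
class Zone:
    name: str
    is_kernel_zone: bool = False  # kernel zones run their own kernel


@dataclass(frozen=True)
class Process:
    pid: int
    zone: Zone


def can_observe(observer: Process, target: Process) -> bool:
    """Return True if `observer` can detect `target` under the rules in the text."""
    if observer.zone == target.zone:
        return True  # a process can always see processes in its own zone
    if observer.zone.name == GLOBAL:
        # The global zone sees into native zones, but processes inside a
        # kernel zone are invisible to it.
        return not target.zone.is_kernel_zone
    # A non-global zone sees neither other zones nor the global zone.
    return False
```

For example, a process in the global zone can observe a process in `zone1`, but the reverse check returns `False`.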

The system administrator uses Solaris commands in the global zone to perform tasks directly related to hardware, including installation of device driver software. The Solaris Fault Management Architecture (FMA) is also managed from the global zone, because its tools require direct access to hardware. Most zones do not have direct access to hardware. Zone management tools such as zonecfg(1M) and zoneadm(1M) can be used only in the global zone.

A kernel zone is a special case. It can manage the resources that are available to it, but cannot manage those resources that have not been assigned to it. As an example, suppose you assign two CPU cores to a kernel zone. The kernel zone can then manage those two CPU cores, but it cannot use other cores at all. In general, we will use the phrase “global zone” to mean the base instance of Solaris running on the hardware, with direct access to all hardware, rather than to mean the operating environment of a kernel zone.

Zones have their own life cycle, which remains somewhat separate from the life cycle of the global zone. After Solaris has been installed and booted, zones can be configured, installed, booted, and used as if they were discrete systems. They can easily and quickly be booted, halted, and rebooted. In fact, booting or halting a zone takes less than 5 seconds, plus any time needed to start or halt applications running in the zone. When you no longer need a zone, you can easily destroy it.
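The life cycle described above can be pictured as a small state machine. This Python sketch is a conceptual model only, not a Solaris interface. The state names `configured`, `installed`, and `running` are those reported in the STATUS column of `zoneadm list -cv`, and the comments name the real `zonecfg` and `zoneadm` subcommands for each step; the `ZoneLifecycle` class, the `undefined` starting state, and the transition table are hypothetical simplifications that omit intermediate states such as `ready` and `shutting_down`.

```python
# Conceptual model of a zone's life cycle (not a Solaris API).
# Each (state, action) pair maps to the state the zone moves into.
TRANSITIONS = {
    ("undefined", "configure"): "configured",  # zonecfg -z NAME create
    ("configured", "install"): "installed",    # zoneadm -z NAME install
    ("installed", "boot"): "running",          # zoneadm -z NAME boot
    ("running", "reboot"): "running",          # zoneadm -z NAME reboot
    ("running", "halt"): "installed",          # zoneadm -z NAME halt
    ("installed", "uninstall"): "configured",  # zoneadm -z NAME uninstall
    ("configured", "delete"): "undefined",     # zonecfg -z NAME delete
}


class ZoneLifecycle:
    """Tracks the state of one hypothetical zone."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = "undefined"

    def apply(self, action: str) -> str:
        """Perform `action` if it is legal in the current state; return the new state."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} zone {self.name!r} in state {self.state!r}")
        self.state = TRANSITIONS[key]
        return self.state
```

In this model, destroying a zone means halting it, uninstalling it, and then deleting its configuration; attempting `delete` while the zone is still installed raises `ValueError`.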

One difference between Solaris Zones and virtual machines is the relationship between the VE and the underlying infrastructure. Zones do not use processes in the global zone to perform any tasks, except the occasional use of management features. The processes associated with a zone access the Solaris kernel directly, using exactly the same methods—system calls—as they use when they are not running in a zone. When a zone’s process accesses an I/O device, or a kernel resource, it uses the same methods and the same code. This consistency explains the lack of performance overhead incurred by an application running in a native zone.

Kernel zones use a different method, because they run their own copies of the Solaris kernel. A kernel zone’s kernel makes efficient, customized calls into the global zone’s kernel so as to access devices. This method is similar in some ways to the paravirtualization used by some virtual machines.

Zones are very lightweight entities, because they are not an entire operating system instance. They do not need to test the hardware or load a kernel or device drivers. Instead, the base OS instance does all that work once—and each zone then benefits from this work when it boots and then accesses the hardware, if permitted by the list of privileges configured for the zone (see Section 3.3.2.1 for a discussion of privileges). Given these characteristics, zones, by default, use very few resources.

A default native zone uses approximately 3 GB of disk space and, when running, approximately 90 MB of RAM; other types of zones may use more. A zone that is not running uses no RAM at all. Further, because no virtualization layer runs on the CPU, zones have negligible performance overhead. (All OSV technologies should have this trait.) In other words, a process in a zone follows the same code path that it would outside of a zone, unless it attempts an operation that is not allowed in a zone. Because it follows the same code path, it has the same performance.
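To make these figures concrete, the following sketch multiplies them out for a given number of zones. The per-zone figures (about 3 GB of disk, and about 90 MB of RAM for a running default zone) come from the text. Treating them as fixed per-zone costs, and the function name `estimate_footprint`, are simplifying assumptions: real usage depends on the installed packages, and the estimate excludes the memory used by the applications themselves.

```python
DISK_GB_PER_ZONE = 3          # approximate disk space of a default native zone
RAM_MB_PER_RUNNING_ZONE = 90  # approximate RAM used by a running default zone


def estimate_footprint(installed: int, running: int) -> tuple[int, int]:
    """Return (disk_gb, ram_mb) for `installed` zones, `running` of which are booted."""
    if not 0 <= running <= installed:
        raise ValueError("running zones must be between 0 and the number installed")
    disk_gb = installed * DISK_GB_PER_ZONE
    ram_mb = running * RAM_MB_PER_RUNNING_ZONE  # halted zones use no RAM
    return disk_gb, ram_mb
```

For example, `estimate_footprint(20, 15)` returns `(60, 1350)`: 20 installed zones need about 60 GB of disk, and the 15 that are running use about 1,350 MB of RAM before any application is started.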

Solaris Zones were originally designed to support VEs that use the same version of Solaris as the global zone. To enable the use of other environments, such as other Solaris versions, a framework of zone types called "brands" was created to translate low-level computer operations.

One of these brands is Solaris 10. A Solaris 10 Zone behaves just as a Solaris 10 system does, with a few exceptions. Another brand implements low-level high-availability (HA) functionality for Solaris Cluster. The newest brand is kernel zones, which are described later in this chapter.

Although a zone is a slightly different environment from a normal Oracle Solaris system, a basic design principle underlying all zones is software compatibility. A good rule of thumb is this: “If an application runs properly as a non-privileged user in a Solaris 11 system that does not have zones, it will run properly in a native zone.” Nevertheless, some applications must run with extra privileges—traditionally, applications that must run as a privileged user. These applications may not be able to gain the privileges they need and, therefore, may not run correctly in a zone. In some cases, it is possible to enable such applications to run in a zone by adding more privileges to that zone, a topic discussed later in this chapter. Kernel zones do not have this limitation, as we shall see later.

3.3.2 Isolation

The primary purpose of zones is to isolate workloads that are running on one Oracle Solaris instance. Although this functionality is typically used when consolidating workloads onto one system, placing a single workload in a single zone on a system has a number of benefits. By design, the isolation provided by zones includes the following factors:

Each zone has its own objects: processes, file system mounts, network interfaces, and System V IPC objects.

Processes in one zone are prevented from accessing objects in another zone.

Processes in different zones are prevented from directly communicating with each other, except for typical intersystem network communication.

A process in one zone cannot obtain any information about a process running in a different zone—even confirmation of the existence of such a process.

Each zone has its own namespace and can choose its own naming services, which are mostly configured in /etc. For example, each zone has its own set of users (via LDAP, /etc/passwd, and other means).

Architecturally, the model of one application per OS instance maps directly to the model of one application per zone while reducing the number of OS instances to manage.

In addition to the functional and security isolation constraints listed here, zones provide resource isolation, as discussed later in this chapter.

3.3.2.1 Oracle Solaris Privileges

The basis for a zone’s security boundary is Solaris privileges. Thus, understanding the robust security boundary around zones starts with an understanding of Solaris privileges.

Oracle Solaris implements two sorts of rights management. User rights management determines which privileged commands a non-privileged user may execute; the popular sudo program is an example of this kind of rights management. Process rights management determines which low-level, fine-grained, system-call-level actions a process can carry out.

Oracle Solaris privileges implement process rights management. Privileges are associated with specific actions, usually actions that non-privileged users are not permitted to perform. For example, one Solaris privilege is associated with modifying the system's clock, which normally only privileged users may do. Solaris privileges reduce security risks: instead of giving a person the root password just so that person can modify the system clock, the administrator gives the person's user account the appropriate privilege. The user still cannot perform any other actions typically reserved for the root role. In this way, Solaris privileges allow the system administrator to grant a process just enough privilege to carry out its function but no more, reducing the system's exposure to security breaches and accidents.

Unlike earlier versions of Solaris and many other UNIX-like operating systems, Oracle Solaris lets the root role perform any operation not because its UID is zero, but because it holds the required privileges. A privileged user can also grant privileges to other users, enabling specific users to perform specific tasks or sets of tasks. When a process attempts to perform a privileged operation, the kernel determines whether the process, and therefore the user who owns it, has the required privilege(s). If it does, the kernel permits the operation.
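The kernel check described in this paragraph can be sketched as follows. This is a conceptual Python model, not kernel code. The privilege names `sys_time`, `proc_owner`, and `file_dac_read` appear in privileges(5), but the operation names and the one-privilege-per-operation table are illustrative assumptions. The point the model makes is that the decision depends on the process's privilege set, never on its UID.

```python
from dataclasses import dataclass, field

# Illustrative table (an assumption, not taken from Solaris): each operation
# and the single privilege this model requires for it.
REQUIRED_PRIVILEGE = {
    "set_clock": "sys_time",
    "signal_other_users_process": "proc_owner",
    "read_protected_file": "file_dac_read",
}


@dataclass
class Proc:
    """A process in this model: a UID plus an effective privilege set."""
    uid: int
    privileges: set[str] = field(default_factory=set)


def kernel_permits(proc: Proc, operation: str) -> bool:
    """Permit `operation` only if `proc` holds the privilege it requires.

    The UID is deliberately never consulted: in this model a process with
    UID 0 but no privileges is refused, while a process with an ordinary
    UID that holds the right privilege is allowed.
    """
    return REQUIRED_PRIVILEGE[operation] in proc.privileges
```

For example, `kernel_permits(Proc(uid=1001, privileges={"sys_time"}), "set_clock")` returns `True`, while the same process is still refused `read_protected_file`.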

3.3.2.2 Zones Security Boundary

A zone has a specific, configurable subset of all privileges. The default subset permits normal operations within the zone, and prevents the zone's processes from learning about or interacting with other zones' users, processes, and devices. The root role in the zone inherits all of the privileges that the zone has. By default, non-privileged users in a zone have the same set of privileges that non-privileged users in the global zone have.

The platform administrator can configure zones as necessary, including increasing or decreasing the maximum set of privileges that a zone has. No user in that zone can exceed that maximum set—not even privileged users. Those privileged users can modify the set of privileges assigned to the various users in that zone, but cannot modify the set of privileges that the overall zone can have. In other words, the maximum set of privileges that a zone has cannot be escalated from within the zone. At the same time, processes with sufficient privileges, running within the global zone, can interact with processes and other types of objects in zones. This type of interaction is necessary for the global zone to manage zones. For example, the privileged users in the global zone must be able to diagnose a performance issue caused by a process in one zone. They can use DTrace to accomplish this task because privileged processes in the global zone can interact with processes in zones in certain ways.

Also, unprivileged users in the global zone can perform some operations that are commonplace on UNIX systems, but that are unavailable to non-privileged users in a zone. A simple example is the ability to list all processes running on the system, whether they are running in zones or not. For some systems, this capability is another reason to prevent user access to the global zone.
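The rule described above, that a zone's maximum privilege set cannot be escalated from within the zone, amounts to a set intersection. The following Python sketch models it. The `ZoneModel` class is hypothetical, and the privilege names in the example are illustrative; they are not the actual default set of a Solaris zone.

```python
class ZoneModel:
    """Conceptual model of a zone's privilege ceiling (not a Solaris API).

    Only the constructor, standing in for the global zone administrator's
    configuration, sets the limit; nothing inside the zone can raise it.
    """

    def __init__(self, name: str, limit: set[str]) -> None:
        self.name = name
        self._limit = frozenset(limit)

    def root_privileges(self) -> frozenset[str]:
        # The zone's root role inherits every privilege the zone has, and no more.
        return self._limit

    def grant(self, requested: set[str]) -> frozenset[str]:
        # A privileged user in the zone can assign privileges to other users,
        # but in this model anything outside the zone's limit is dropped.
        return frozenset(requested) & self._limit
```

For example, in a zone whose limit is `{"proc_fork", "proc_exec", "file_read"}`, granting `{"proc_fork", "sys_time"}` yields only `proc_fork`, because `sys_time` lies outside the ceiling that only the global zone can change.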

The isolation of zones is very thorough in Oracle Solaris. The Solaris Zones feature set is the basis for the Solaris Trusted Extensions feature set, whose capabilities are appropriate for systems that must compartmentalize data. Solaris 11 with Solaris Trusted Extensions achieved Common Criteria Certification at Evaluation Assurance Level (EAL) 4+, the highest commonly recognized global security certification. This certification allows Solaris 11 to be deployed where multilevel security (MLS) protection and independent validation of an OS security model are required. Solaris 11 achieved this certification for both SPARC and x86-based systems, for both desktop and server functionality.

The isolation of zones is implemented in the Oracle Solaris kernel. As described earlier, this isolation is somewhat configurable, enabling the global zone administrator to customize the security of a zone. By default, the security boundary around a zone is very robust. This boundary can be further hardened by removing privileges from the zone, which effectively prevents the zone from using specific features of Solaris. The boundary can be selectively enlarged by enabling the zone to perform specific operations such as setting the system clock.

The entire list of privileges can be found on the privileges(5) man page. Table 3.1 lists the privileges that are most commonly used to customize a zone’s security boundary. The third column in Table 3.1 indicates whether the privilege is part of the default privilege set for zones. Note that some nondefault settings described elsewhere, such as ip-type=shared, change the list of privileges automatically.

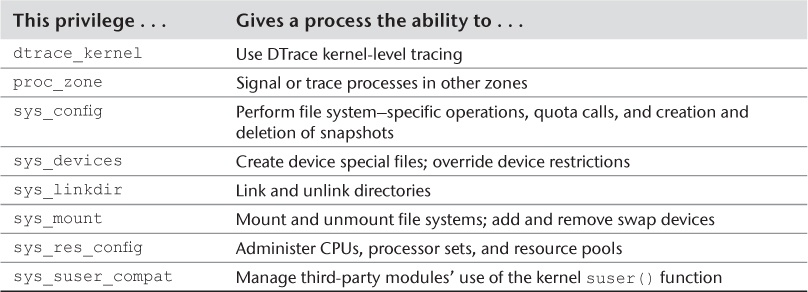

Some privileges can never be added to a zone. These privileges control hardware components directly (e.g., turning a CPU off) or control access to kernel data. The prohibition against accessing the kernel data is intended to prevent one zone from examining or modifying data about another zone. Table 3.2 lists these privileges.
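The global zone administrator expresses a zone's privilege set with the zonecfg `limitpriv` property, for example `set limitpriv="default,sys_time"`. The Python sketch below models how such a comma-separated specification could be resolved into a set and checked against privileges that can never be added. It rests on stated assumptions: `DEFAULT_ZONE_PRIVILEGES` is a tiny hypothetical stand-in for the real default set in Table 3.1, `NEVER_ALLOWED` holds examples rather than the authoritative contents of Table 3.2, and the `!` or `-` removal prefix follows the privilege-specification syntax of priv_str_to_set(3C).

```python
# Hypothetical stand-in for the real default zone privilege set (see Table 3.1).
DEFAULT_ZONE_PRIVILEGES = frozenset(
    {"proc_fork", "proc_exec", "proc_info", "file_read", "file_write", "net_access"}
)
# Examples of privileges that can never be added to a zone (see Table 3.2).
NEVER_ALLOWED = frozenset({"dtrace_kernel", "proc_zone"})


def resolve_limitpriv(spec: str) -> frozenset[str]:
    """Resolve a limitpriv-style string such as "default,sys_time,!net_access".

    Tokens are applied left to right: "default" adds the default set, a name
    prefixed with "!" or "-" removes that privilege (hardening the boundary),
    and any other name adds it (enlarging the boundary).
    """
    result: set[str] = set()
    for token in (t.strip() for t in spec.split(",")):
        if not token:
            continue
        if token == "default":
            result |= DEFAULT_ZONE_PRIVILEGES
        elif token[0] in "!-":
            result.discard(token[1:])
        elif token in NEVER_ALLOWED:
            raise ValueError(f"privilege {token!r} cannot be added to a zone")
        else:
            result.add(token)
    return frozenset(result)
```

With these assumptions, `resolve_limitpriv("default,sys_time")` contains every default privilege plus `sys_time`, while `resolve_limitpriv("default,dtrace_kernel")` raises `ValueError`.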

The configurable security of zones is very powerful and flexible. In addition to the privileges listed in Table 3.1, each zone has additional properties that you can use to tailor the security boundary and capabilities of Solaris Zones. Table 3.3 lists these resources and properties and indicates the section in this book that describes them.

3.3.3 Namespaces

Each zone has its own namespace. In UNIX systems, a namespace is the complete set of recognized names for entities such as users, hosts, and printers. In other words, a namespace is a mapping of human-readable names to names or numbers that are more appropriate to computers. The user namespace maps user names to user identification numbers (UIDs). The host name namespace maps host names to IP addresses. As in any Oracle Solaris system, the name services a zone uses are selected by the name service switch, which Solaris 11 configures through SMF and reflects in the /etc/nsswitch.conf file.

One simple outcome of having an individual namespace per zone is separate mappings of user names to UIDs. When managing zones, remember that a user in one zone with UID 238 is different from a user in another zone with UID 238. This concept should be familiar to people who have managed NFS clients. Also, each zone has its own Service Management Facility (SMF). SMF starts, monitors, and maintains network services such as SSH. As a consequence, each zone appears on the network just like any other Solaris system, using the same well-known port numbers for common network services.
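The following Python sketch models the point about separate name-to-UID mappings. It is conceptual: each zone's mapping is a plain dictionary standing in for that zone's name service (for example, its own /etc/passwd), and the zone and user names are hypothetical. Because a UID is meaningful only within its zone, a user is effectively identified by the pair (zone, UID).

```python
# Each zone has its own user namespace: a mapping from user name to UID.
ZONE_PASSWD = {
    "zone1": {"alice": 238, "webadm": 501},
    "zone2": {"bob": 238},
}


def uid_of(zone: str, user: str) -> int:
    """Look up a user's UID in one zone's namespace."""
    return ZONE_PASSWD[zone][user]


def user_of(zone: str, uid: int) -> str:
    """Reverse lookup: the same UID can name different users in different zones."""
    for name, value in ZONE_PASSWD[zone].items():
        if value == uid:
            return name
    raise KeyError(f"UID {uid} is not defined in zone {zone!r}")
```

Here UID 238 resolves to `alice` in `zone1` but to `bob` in `zone2`: the numbers match, but the users are different.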

3.3.4 Brands

Each zone includes a property called its brand. A zone’s brand determines how it interacts with the Oracle Solaris kernel. Most of this interaction occurs via Solaris system calls. A native zone uses the global zone’s system calls directly, whereas other types add a layer of software that translates the zone’s system call definitions into the system calls provided by the kernel for that Solaris distribution. A kernel zone has its own kernel, which uses a special mechanism to communicate with the system’s global zone.
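The brand mechanism can be pictured as a dispatch step between a zone's system calls and the kernel. This Python sketch is only a conceptual model. The brand names `solaris`, `solaris10`, and `solaris-kz` are real Solaris 11 brands, but the system call names and the translation table are hypothetical placeholders, not the actual differences between Solaris 10 and Solaris 11.

```python
# Hypothetical translation table for the solaris10 brand: placeholder names,
# not the real differences between Solaris 10 and Solaris 11 system calls.
SOLARIS10_TRANSLATIONS = {"legacy_call": "current_call"}


def dispatch(brand: str, syscall: str) -> tuple[str, str]:
    """Return (where the call is handled, the call name that handler receives)."""
    if brand == "solaris":
        # Native zone: the call goes straight to the global zone's kernel.
        return ("global zone kernel", syscall)
    if brand == "solaris10":
        # Branded zone: calls that differ are translated; others pass through.
        return ("global zone kernel", SOLARIS10_TRANSLATIONS.get(syscall, syscall))
    if brand == "solaris-kz":
        # Kernel zone: its own kernel handles the call. Device access reaches
        # the global zone through a separate mechanism that is not modeled here.
        return ("kernel zone's own kernel", syscall)
    raise ValueError(f"unknown brand {brand!r}")
```

The native path adds no work at all, which is the model's way of expressing why native zones have no system call overhead.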

Each Solaris distribution—for example, Solaris 11—has a default brand for its zones. The default brand for Solaris 11 is called solaris, but other brands exist for Solaris 11. Table 3.4 lists the current set of brands.

3.3.5 Packaging and File Systems

Solaris engineers tightly integrated the different feature sets of Solaris so that each benefits from the capabilities of the others. This section describes the integration of zones, IPS, and ZFS.

3.3.5.1 File System Location

A zone’s Solaris content exists in ZFS file systems. By default, the file systems are part of the global zone’s root pool. You can choose to implement a zone in its own pool, which enables zone mobility. Non-Solaris content, such as application data, may be stored in ZFS or non-ZFS file systems.

A set of ZFS file systems is created for each native zone. This step is necessary so that the Solaris 11 packaging system and boot environments will work correctly. In turn, the zone benefits from all of the advantages of ZFS.

Most zones use ZFS storage that is managed in the global zone. That storage can use ZFS mirroring or RAIDZ to protect against data loss due to disk drive failure, and it automatically benefits from ZFS checksums, which protect against myriad forms of data corruption.

When you create a zone, Oracle Solaris creates the necessary file systems, in which all of the zone’s directories and files reside. The highest level of those file systems is mounted on the zone’s zonepath, one of the many properties of a zone. The default zonepath is /system/zones/<zonename>, but you can also change it to a valid file system name in a ZFS pool.

3.3.5.2 Packaging

Solaris 11 uses IPS for operating system and application software package management. IPS and related features enable you to manage the entire life cycle of software in a Solaris environment, including package creation, manual and automated deployment of Solaris and applications, and updating of those software packages. This section assumes that you are already familiar with IPS, so it does not describe this system’s features except in terms of their application to Solaris Zones. For more information on IPS and related features such as the Automated Installer and Distribution Constructor, see the Oracle Solaris Information Library at www.oracle.com.

With IPS and its related features, you can perform the following functions:

Understand the set of packages and their contents that are installed in a native zone or that are available to a native zone

Add optional software to a zone, and update or remove that software

Create and destroy boot environments in a native zone

Create repositories in a zone, including Solaris repositories and repositories of other software

In the context of IPS, an “image” is an installed set of packages. An image that can be booted is called a boot environment (BE). An instance of Solaris can have one or more BEs. Each BE consists of several ZFS file systems, but in general you do not manage the file systems individually. You use the command beadm(1M) to manage BEs, including creating and destroying them, activating the BE to be booted next, and mounting a BE temporarily to view or modify it, among other tasks.

A native zone in a Solaris 11 instance is called a “linked image.” Each linked image has its own copy of most, but not all, of the content of the Solaris packages. That content has hard-coded dependencies on some global zone packages; that is, the user cannot modify these dependencies. In the documentation, this type of linking is occasionally called a “parent–child” relationship, where the global zone is the parent and the native zone’s linked image is the child.

Every native zone has a BE that matches a BE in the global zone. However, global zone BEs that predate the installation of a native zone will not have a matching BE in the native zone.

3.3.5.3 Updating Packages

Solaris IPS greatly simplifies the process of updating Solaris on a computer. When used in a system without zones, the following command will update all of the packages maintained by IPS to the newest versions available:

GZ# pkg update

If certain core packages will be updated, Solaris automatically creates a new BE, using ZFS snapshots, and prepares the system to use that BE when Solaris is next rebooted. If a new BE is not needed, the software updates may be available immediately.1

1. We advise the frequent use of ZFS snapshots as an “undo” feature.

When you update Solaris in the global zone by using that method, IPS updates all of the packages installed in the global zone and automatically updates all of the native zones as well. Any additional packages that you have installed in native zones are updated too.

By default, native zones are updated serially. If desired, you can use the -C option to indicate that a certain number of zones should be updated simultaneously.

In addition, you can choose to update any packages that you installed separately into a zone. However, as with all updates, IPS will update these packages according to the dependency rules that are configured into each package. For example, you might find that you must update the global zone first, before updating a specific package in a zone.

3.3.6 Boot Environments

Solaris 11 automatically uses multiple boot environments, which are related bootable Solaris environments of one Solaris instance. The name and the purpose of this feature are similar to those of the corresponding features in Solaris 10 boot environments, but the implementation in Solaris 11 is new, and much more tightly integrated with other Solaris 11 features such as ZFS and zones. This level of integration leverages the combined strengths of the BEs and the other Solaris 11 features.

When you update Solaris 11, it automatically creates a new boot environment that will be used the next time that Solaris boots. When it creates that boot environment, it also creates a new boot environment for each native zone that is currently installed. Moreover, rebooting the global zone into a different boot environment also selects the zone BEs associated with that global zone BE: when any native zone boots, it boots into the BE associated with the global zone’s currently running BE.

You should be aware of some subtle side effects of this implementation. If you install Solaris, and then update it, create a zone, and reboot into the original BE, it will seem as if the zone has disappeared. That is an illusion, however: The zone is still available in the updated BE. However, because it did not exist when the original BE was running, booting back into that BE returns the system to its state at the time when the original BE was running—a state that did not include the zone.

A zone can only boot into a BE associated with the BE currently running in the global zone. The output from the command beadm list marks unbootable BEs with the flag “!”. However, a privileged user in a native zone can create a new BE that will be associated with the currently running BE in the global zone. At that point, the zone will have two BEs from which it can choose. Either BE may be modified, and a privileged user in that zone may activate either BE and reboot the zone.
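If you want to find unbootable BEs from a script instead of reading beadm list by eye, you could filter its parsable output. The following is a minimal sketch, not a documented interface: it assumes that beadm list -H prints one semicolon-separated line per BE, with the BE name in the first field and the flags in the third, so verify that layout against beadm(1M) on your release. The function name unbootable_bes is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the names of BEs whose flags include "!".
# Assumes `beadm list -H` emits semicolon-separated lines of the form
#   name;uuid;flags;mountpoint;space;policy;created
# It reads that output on stdin, so it can be tested without beadm.
unbootable_bes() {
    awk -F';' '$3 ~ /!/ { print $1 }'
}
```

Inside a native zone, `beadm list -H | unbootable_bes` would then print only the BEs that the zone cannot currently boot into.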

3.3.7 Deployment

You can use a few different methods to deploy native zones. The most basic method requires the use of two Solaris commands, zonecfg(1M) and zoneadm(1M). The Solaris Automated Installer can install zones into a system while it is also installing Solaris. Solaris Unified Archives can store a zone or a Solaris instance that contains one or more zones. The rest of this section will describe the two zone administrative commands, as well as zone-specific information for Unified Archives.

Using the command zonecfg, you can specify a zone’s configuration or modify the configuration, potentially while the zone is running. The zoneadm command controls the state of a zone. For example, you would use zoneadm to install Solaris into a zone, or to boot a zone.

Solaris Unified Archives enable you to manage archives of Solaris environments, both physical and virtual. The Unified Archives tools also perform transformations during deployment, which means converting an archive of a physical system into a virtual environment, or vice versa. Further, an archive of a physical system can include its Solaris Zones, and they can be deployed individually or together.

The Unified Archives command, archiveadm(1M), can create two types of archives: system recovery archives and clone archives. System recovery archives are useful for restoring a system during a disaster recovery event. In contrast, clone archives allow you to easily provision a large number of almost identical systems or zones. Unified Archives are described in detail later in this chapter.

3.3.8 Management

As the global zone administrator, you can configure one or more other global zone users as administrator(s) of a zone. The following abilities can be delegated to a zone administrator, as described later in this chapter:

Use zlogin(1) from the global zone to access the zone as a privileged user

Perform management activities such as installing, booting, and migrating the zone

Clone the zone to create a new one

Modify the zone’s configuration for the next boot or reboot

Temporarily modify the zone’s configuration while it runs

3.4 Feature Details

Features that can be applied to zones include all of the configuration and control functionality you would expect from an operating system and from a virtualization solution. Fortunately, almost all of the features specific to zones are optional. Thus, you can investigate and use each set of features separately from the others.

The zone-related features can be classified into the following categories:

Creation and basic management, such as booting

Packaging

File systems

Security

Resource controls

Networking

Device access

Advanced management features, such as live migration

Many of the configuration features may be modified while the zone runs. For example, you can add a new network interface to a running zone. This ability is described in a later section, “3.4.7.2 Live Zone Reconfiguration.”

The following sections describe and demonstrate the use of these features.

3.4.1 Basic Operations

The Solaris Zones feature set includes both configuration tools and management tools. Typically, you begin by configuring a zone with the zonecfg(1M) command, and Solaris stores the resulting configuration information. Management operations, such as installing and booting the zone, are performed with the zoneadm(1M) command. This section describes both commands.

3.4.1.1 Configuring an Oracle Solaris Zone

The first step in creating a zone is to configure it with at least the minimum information.

All initial zone configuration is performed with the zonecfg(1M) command, as illustrated in the following example. The first command merely shows that there are no non-global zones on the system yet.

GZ# zoneadm list -cv

ID NAME STATUS PATH BRAND IP

0 global running / solaris shared

GZ# zonecfg -z myzone

myzone: No such zone configured

Use 'create' to begin configuring a new zone.

zonecfg:myzone> create

create: Using system default template 'SYSdefault'

zonecfg:myzone> exit

GZ# zoneadm list -cv

ID NAME STATUS PATH BRAND IP

0 global running / solaris shared

- myzone configured /system/zones/myzone solaris excl

GZ# zonecfg -z myzone info

zonename: myzone

zonepath: /system/zones/myzone

brand: solaris

autoboot: false

autoshutdown: shutdown

bootargs:

file-mac-profile:

pool:

limitpriv:

scheduling-class:

ip-type: exclusive

hostid:

tenant:

fs-allowed:

anet:

linkname: net0

lower-link: auto

...

The output from the info subcommand of zonecfg shows all of the global properties of the zone as well as some default settings. Table 3.5 lists most of the global properties and their meanings. Other properties are discussed in the subsections that follow.

A zone can be reconfigured after it has been configured, and even after it has been booted. However, a configuration change does not take effect until the next time that the zone boots unless you use the -r option with zonecfg, as described in the section “3.4.7.2 Live Zone Reconfiguration.” The next example changes the zone so that it boots automatically the next time the system boots.

GZ# zonecfg -z myzone

zonecfg:myzone> set autoboot=true

zonecfg:myzone> exit

3.4.1.2 Installing and Booting the Zone

After you have configured the zone, you can install it, making it ready to run.

GZ# zoneadm -z myzone install

Preparing to install zone <myzone>.

Creating list of files to copy from the global zone.

Copying <7503> files to the zone.

Initializing zone product registry.

Determining zone package initialization order.

Preparing to initialize <1098> packages on the zone.

Initialized <1098> packages on zone.

Zone <myzone> is initialized.

The file </zones/roots/myzone/root/var/sadm/system/logs/install_log> contains a log of the zone installation.

GZ# zoneadm list -cv

ID NAME STATUS PATH BRAND IP

0 global running / solaris shared

- myzone installed /system/zones/myzone solaris excl

During the installation process, zoneadm creates the file systems and directories required by the zone. Directories that contain system configuration information, such as /etc, are created in the zone’s file structure and populated with the default configuration files.

Zones boot much faster than virtual machines, mostly because there is so little to do. The global zone creates a zinit process that starts the zone’s Services Management Facility (SMF) and creates a few other processes. Those processes then spawn other processes that initialize the system’s services within the zone. At that point, the zone is ready for use.

GZ# zoneadm -z myzone boot

GZ# zoneadm list -cv

ID NAME STATUS PATH BRAND IP

0 global running / solaris shared

1 myzone running /system/zones/myzone solaris excl

When first booting after installation, the zone needs system configuration information to complete this step; this information is usually collected by Solaris systems when you are installing Solaris. You can provide this information via the zlogin(1) command, which enables privileged global zone users to access a zone. With just one argument—the zone’s name—zlogin enters a shell in the zone as the root role. Arguments after the zone’s name are passed as a command line to a shell in the zone, and the output is displayed to the user of zlogin. One important option is -C, which provides access to the zone’s virtual console. A privileged user in the global zone can use that console whenever it is not already in use. The privilege to use zlogin can be granted either through Solaris RBAC, with the solaris.zone.login authorization, or with the delegated administration feature. For more information on the latter, see the section “3.4.7.1 Delegated Administration” later in this chapter.

The first time that the zone boots, it immediately issues its first prompt to the zone’s virtual console. After connecting to the virtual console, you might need to press Enter once to get a new terminal type prompt. After you respond to a short series of questions, the zone will reboot and is then ready for use.

Alternatively, instead of providing the system configuration information manually as just described, you could automate the configuration process. The first step in this process is to create a system configuration file; this file should then immediately be renamed so that you do not overwrite it the next time this automated process runs.

goldGZ# sysconfig create-profile -o /opt/zones

<questions and responses omitted>

goldGZ# mv /opt/zones/sc_profile.xml /opt/zones/web_profile.xml

The other commands are similar to the previous examples, but use the configuration profile that you just created. These two commands configure and install a new zone:

voyGZ# zonecfg -z web20 create

voyGZ# zoneadm -z web20 install -c /opt/zones/web_profile.xml
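When several zones are provisioned the same way, the two commands above can be wrapped in a loop. This sketch assumes a hypothetical naming convention in which each zone's profile is stored as /opt/zones/<zonename>_profile.xml. The function names run and provision_zones are also hypothetical. Setting DRY_RUN=1 prints the commands instead of executing them, which is a cheap way to review the plan first.

```shell
#!/bin/sh
# Sketch: configure and install several zones from per-zone profiles.
# Hypothetical convention: $PROFILE_DIR/<zone>_profile.xml per zone.
PROFILE_DIR=${PROFILE_DIR:-/opt/zones}

# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

provision_zones() {
    for zone in "$@"; do
        profile="$PROFILE_DIR/${zone}_profile.xml"
        run zonecfg -z "$zone" create || return 1
        run zoneadm -z "$zone" install -c "$profile" || return 1
    done
}
```

For example, `DRY_RUN=1 provision_zones web20 web21` lists the four commands that would be run, and the same call without DRY_RUN performs them.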

3.4.1.3 Halting a Zone

Not only does the zoneadm command install and boot zones, but it also stops them. Two methods exist to terminate these zones; one is graceful, and the other is not.

The shutdown subcommand gracefully stops the zone. Using it is the same as executing the following command:

GZ# zlogin myzone /usr/sbin/init 0

The halt subcommand to zoneadm simply kills the processes in the zone. It does not run the scripts that gracefully shut down the zone but, as you might suspect, it is faster than the shutdown subcommand.
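To stop every running zone gracefully, for example before maintenance of the global zone, you can combine zoneadm list with the shutdown subcommand. This sketch assumes that zoneadm list -cp prints colon-separated lines that begin with the zone ID, zone name, and state. The helper names running_zones and shutdown_running_zones are hypothetical.

```shell
#!/bin/sh
# Sketch: gracefully shut down every running non-global zone.
# Assumes `zoneadm list -cp` prints lines that begin
#   zoneid:zonename:state:zonepath:...

# Read `zoneadm list -cp` output on stdin; print running non-global zones.
running_zones() {
    awk -F':' '$2 != "global" && $3 == "running" { print $2 }'
}

shutdown_running_zones() {
    for zone in $(zoneadm list -cp | running_zones); do
        # shutdown runs the zone's shutdown scripts; halt would not.
        zoneadm -z "$zone" shutdown
    done
}
```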

Figure 3.2 shows the entire life cycle of a zone, along with the commands you can use to manage one.

3.4.1.4 Modifying a Zone

Before installation of a zone, all of its parameters may be modified—both resources and properties. A zone may subsequently be modified after its installation is complete, and even later, after it has been running. Nevertheless, a few parameters, such as the brand, may not be modified after the zone has been installed. The rest of the parameters may be modified so that the changes to them will take effect the next time that the zone boots. To cause changes to parameters to take effect immediately, you must specifically indicate this preference by including the appropriate option to the zonecfg command. The section “3.4.7.2 Live Zone Reconfiguration” describes this type of modification.

Although the zonepath and rootzpool parameters may not be modified after the zone’s installation, a zone may be moved by using the zoneadm command. Moving a zone that uses a rootzpool will not move the contents of the zone, but rather will merely modify the zonepath.

The list of resources and properties that can be changed, for each type of zone, is listed on the brand-specific man page: solaris(5), solaris10(5), and solaris-kz(5).

3.4.1.5 Modifying Zone Privileges

Earlier in this chapter, we discussed Oracle Solaris privileges, including the fact that you can modify the set of privileges that a zone can have. If you change the privileges that a zone can have, you must reboot the zone before the changes will take effect. The following example depicts the steps to add the sys_time privilege to an existing zone:

GZ# zonecfg -z web

zonecfg:web> set limitpriv="default,sys_time"

zonecfg:web> exit

GZ# zoneadm -z web reboot
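Because limitpriv is a single comma-separated string, scripted changes can easily introduce duplicates or overwrite earlier additions. The hypothetical helper below appends one privilege only if it is not already present. It also assumes that an empty limitpriv value is equivalent to "default".

```shell
#!/bin/sh
# Hypothetical helper: append a privilege to a limitpriv value unless it
# is already listed, so that repeated runs do not create duplicates.
add_priv() {
    current=$1   # e.g. "default,sys_time"
    priv=$2      # e.g. "proc_priocntl"
    # Assumption: an empty limitpriv means the default privilege set.
    if [ -z "$current" ]; then
        current=default
    fi
    case ",$current," in
        *",$priv,"*) printf '%s\n' "$current" ;;
        *) printf '%s,%s\n' "$current" "$priv" ;;
    esac
}

# The result could then be applied with zonecfg, for example:
#   zonecfg -z web "set limitpriv=\"$(add_priv default,sys_time proc_priocntl)\""
```

As with the manual change shown above, the new value takes effect only after the zone reboots.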

3.4.2 Packaging

Solaris systems retrieve packages from a package repository. Native zones use the global zone’s “system repository” to access repositories. In essence, the global zone acts as a proxy, retrieving information and package content on behalf of the native zone. In addition, a zone can configure other publishers and obtain content from them. The system repository can proxy HTTP and HTTPS repositories, file-based repositories available to the global zone, and repository archives (.p5p files). The last option is the most convenient method to move and install a single custom package.

You can configure a global zone with a publisher that the global zone does not use, but that is used by its zones. For this scheme to work correctly, the global zone must be able to access the publisher. Such an approach may be very useful when multiple zones will install packages that the global zone should not install. If only one zone needs access to a publisher, it may be simpler to configure that access within the zone itself.

A native zone can see the global zone’s publisher configuration and, therefore, the configurations of the publishers it automatically accesses via the global zone. For example, in the global zone, the following output lists a publisher that is not proxied:

jvictor@tesla:~$ pkg publisher -F tsv

PUBLISHER STICKY SYSPUB ENABLED TYPE STATUS URI PROXY

solaris true false true origin online http://pkg.oracle.com/solaris/release/ -

However, in a native zone, the same command distinguishes between publishers that use the global zone as a proxy (“solaris”) and publishers configured in the zone itself (“localrepo”). The latter might be an NFS mount, as shown in the first command here:

testzone:~# mount -F nfs repo1:/export/myrepo /mnt/localrepo

testzone:~# pkg set-publisher -g file:///mnt/localrepo localrepo

testzone:~# pkg publisher -F tsv

PUBLISHER STICKY SYSPUB ENABLED TYPE STATUS URI PROXY

localrepo true false true origin online file:///mnt/localrepo/ -

solaris true true true origin online http://pkg.oracle.com/solaris/release/ http://localhost:1008

A native zone accesses a system repository via two required services. One service, the zone’s proxy client, runs in the zone; its complete name is application/pkg/zones-proxy-client:default. The other service runs in the global zone: application/pkg/zones-proxyd:default. Both services are enabled automatically, but examination of their states may simplify the process of troubleshooting packaging problems in zones. A native zone cannot change the configuration of publishers that it accesses via the global zone.

Changing a global zone’s publishers also changes the repositories from which its zones get packages. For that reason, before you remove a publisher from the global zone’s list, you should confirm that its zones have not installed any packages from the soon-to-be-removed publisher. If the zone can no longer access that publisher, it will not be able to update the software, and it may not be able to uninstall that package.
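Before removing a publisher, you can check each zone for packages installed from it. This sketch assumes that every line of pkg list -Hv begins with a full FMRI of the form pkg://<publisher>/<package>@<version>. The function name pkgs_from_publisher is hypothetical.

```shell
#!/bin/sh
# Sketch: print installed packages whose FMRI names a given publisher.
# Reads `pkg list -Hv` output on stdin; assumes field 1 is the full FMRI,
# e.g. pkg://solaris/system/core-os@0.5.11,...
pkgs_from_publisher() {
    pub=$1
    awk -v p="pkg://$pub/" 'index($1, p) == 1 { print $1 }'
}

# Example, run from the global zone for one zone:
#   zlogin web pkg list -Hv | pkgs_from_publisher localrepo
```

An empty result for every zone suggests that the publisher can be removed without stranding installed packages.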

3.4.3 Storage Options

Several options exist that specify the location of storage for the zone itself and the locations of additional storage beyond the root pool. Also, some options related to storage enable you to further restrict the abilities of a zone, thereby enhancing its security. These options are discussed in this section.

3.4.3.1 Alternative Root Storage

Instead of storing a zone in the global zone’s ZFS root pool, you can store it in its own zpool. Although that pool can reside on local storage, an even better option is to use remote storage that can be accessed by another Solaris computer. Ultimately, remote shared storage simplifies migration of zones.

The configuration of a zone onto shared storage requires only one additional configuration step: specifying the location of the storage. To configure the root pool storage for a zone, use zonecfg(1M) and its rootzpool resource. The storage can be any of these types, and different storage types can be mixed within one pool. Note, however, that mixing storage types can lead to adverse performance and has security implications. Currently, the following types of storage are supported:

Direct device (e.g., dev:dsk/c0t0d0s0)

Logical unit (FC or SAS) (e.g., lu:luname.naa.5000c5000288fa25)

iSCSI (e.g., iscsi:///luname.naa.600144f03d70c80000004ea57da10001 or iscsi:///target.iqn.com.sun:02:d0f2d311-f703,lun.6)

For more details on those storage types and their specification, see the suri(5) man page.

Solaris will automatically create a ZFS pool in the storage area.
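The rootzpool resource can also be scripted. The sketch below emits the zonecfg subcommands for a root pool built on one or more storage URIs in suri(5) syntax. You can write that output to a file and apply it with zonecfg -z <zonename> -f <file>. The function name rootzpool_cmds is hypothetical, and the test input is the iSCSI URI listed above.

```shell
#!/bin/sh
# Sketch: emit zonecfg subcommands that place a zone's root pool on the
# given storage URIs (see suri(5)).
rootzpool_cmds() {
    if [ $# -eq 0 ]; then
        echo "usage: rootzpool_cmds suri..." >&2
        return 1
    fi
    echo "add rootzpool"
    for uri in "$@"; do
        echo "add storage $uri"
    done
    echo "end"
}
```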

3.4.3.2 Adding File Systems

Access to several types of file systems may be added to a zone. Some of these types allow multiple zones to share a file system. This ability can be useful, but is vulnerable to two pitfalls: synchronization of file modifications and security concerns.

Storage managed by ZFS can be added in two different ways. The first approach is to mount a ZFS file system that exists in the global zone into a non-global zone. In the zone, this file system is simply a directory. The second method is to make a global zone file system appear to be a pool in a non-global zone.

The first method assumes that the ZFS file system rpool/myzone1 exists in the global zone. When the zone boots, its users will be able to access that file system at the directory /mnt/myzfs1. Processes running in the zone can access the storage available to the ZFS file system rpool/myzone1.

GZ# zonecfg -z myzfszone

zonecfg:myzfszone> add fs

zonecfg:myzfszone:fs> set dir=/mnt/myzfs1

zonecfg:myzfszone:fs> set special=rpool/myzone1

zonecfg:myzfszone:fs> set type=zfs

zonecfg:myzfszone:fs> end

zonecfg:myzfszone> exit

This method cannot be used to share files between zones. When this approach is used, if two zones are configured with the same ZFS file system, the first to boot will run correctly. An attempt to boot the second zone, however, will fail. Further, the file system will not be available to users in the global zone.

We recommend setting a quota on all file systems assigned to zones so that one zone does not fill up the pool and prevent other workloads from using the pool.
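The quota recommendation and the fs resource can be applied together. In this sketch, assign_zfs_fs and run are hypothetical helpers. The sketch assumes that zonecfg accepts several subcommands separated by semicolons in a single argument. DRY_RUN=1 prints the commands instead of running them, and the 10G quota used in the test is only an illustrative value.

```shell
#!/bin/sh
# Sketch: cap a global-zone ZFS file system with a quota, then add it to
# a zone's configuration as an fs resource.

# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

assign_zfs_fs() {
    zone=$1 dir=$2 dataset=$3 quota=$4
    # Limit the file system so one zone cannot fill the shared pool.
    run zfs set "quota=$quota" "$dataset" || return 1
    # Assumption: zonecfg accepts ';'-separated subcommands in one argument.
    run zonecfg -z "$zone" "add fs; set dir=$dir; set special=$dataset; set type=zfs; end"
}
```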

The second method assigns a ZFS data set to the zone: you add a ZFS file system or volume to the zone as a ZFS pool. To the global zone, this resource appears to be a file system. Inside the zone, it appears to be a ZFS pool. Administration of the data set is delegated to the zone, so that a privileged user in the zone can control attributes of the data set and create new ZFS file systems in it. That user also controls attributes of those file systems, such as quotas and access control lists (ACLs).

Although a privileged user in the zone can manage the data set from within, a global zone administrative user can manage the top-level data set, including setting a quota on it.

When using the zonecfg command, you supply the name of the data set as it is known in the global zone, along with an “alias”—the name of the zpool as seen in the zone. If you omit the alias, Solaris uses the final component of the file system name as the name of the pool.

GZ# zonecfg -z myzone

zonecfg:myzone> add dataset

zonecfg:myzone:dataset> set name=mypool/myfs

zonecfg:myzone:dataset> set alias=zonepool

zonecfg:myzone:dataset> end

zonecfg:myzone> exit
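The naming rule for delegated data sets is simple enough to express directly. This hypothetical helper, zone_pool_name, predicts the pool name that a zone will see for a given data set and an optional alias.

```shell
#!/bin/sh
# Sketch of the naming rule described above: the pool name seen inside
# the zone is the alias if one is set; otherwise it is the last
# component of the global zone data set name.
zone_pool_name() {
    dataset=$1
    zalias=${2:-}
    if [ -n "$zalias" ]; then
        printf '%s\n' "$zalias"
    else
        printf '%s\n' "${dataset##*/}"
    fi
}
```

For the example above, `zone_pool_name mypool/myfs zonepool` prints zonepool; without the alias, the zone would see a pool named myfs.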

Regardless of the type of file system being used, any arbitrary directory that exists in the global zone can be mounted into a zone, with either read-only or read-write status. This step requires the use of a loopback mount, as shown in the next example for a different zone:

GZ# zonecfg -z myufszone

zonecfg:myufszone> add fs

zonecfg:myufszone:fs> set dir=/shared

zonecfg:myufszone:fs> set special=/zones/shared/myzone

zonecfg:myufszone:fs> set type=lofs

zonecfg:myufszone:fs> end

zonecfg:myufszone> exit

In this example, the dir parameter specifies the directory name in the zone on which to mount the global zone’s directory. The special parameter specifies the global zone’s name for that directory.

A brief digression is warranted here: When managing zones, keep in mind the two different perspectives that are possible for all objects such as files, processes, and users. In the last example, a process in the global zone would normally use the path name /zones/shared/myzone to refer to that directory. A process in the zone, however, must use the path /shared.
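The lofs example can be generalized to produce either a read-write or a read-only mount. This sketch emits zonecfg subcommands and uses the fs resource's options property with the value ro for read-only mounts. The function name lofs_cmds is hypothetical. You can apply its output with zonecfg -z <zonename> -f <file>.

```shell
#!/bin/sh
# Sketch: emit zonecfg subcommands for a loopback (lofs) mount.
#   $1 = mount point inside the zone (dir)
#   $2 = directory in the global zone (special)
#   $3 = "ro" for a read-only mount; anything else means read-write
lofs_cmds() {
    zone_dir=$1 gz_dir=$2 mode=${3:-rw}
    echo "add fs"
    echo "set dir=$zone_dir"
    echo "set special=$gz_dir"
    echo "set type=lofs"
    if [ "$mode" = "ro" ]; then
        echo "add options ro"
    fi
    echo "end"
}
```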

Alternatively, a non-ZFS file system can be mounted into a zone so that only the zone can access it. Processes in other zones then cannot use that file system, and only privileged users in the global zone can access the file system. In the next example, dir has the same meaning as in the previous example, but special indicates the name of the block device that contains the file system. The raw property specifies the name of the raw device.

GZ# zonecfg -z myufszone

zonecfg:myufszone> add fs

zonecfg:myufszone:fs> set dir=/mnt/myfs

zonecfg:myufszone:fs> set special=/dev/dsk/c1t0d0s5

zonecfg:myufszone:fs> set raw=/dev/rdsk/c1t0d0s5

zonecfg:myufszone:fs> set type=ufs

zonecfg:myufszone:fs> end

zonecfg:myufszone> exit

All of these mounts—ZFS, UFS, lofs, and others—are created when the zone is booted. For more information on the various types of file systems that can be used within a zone, see the Oracle Solaris 11 documentation.

3.4.3.3 Read-Only Zones

It seems that every month brings a news story describing the latest theft of personal or corporate data from supposedly secure computers. Solaris offers a diverse set of security features intended to prevent various types of attacks. One of these features is read-only, or “immutable,” zones.

Some attacks rely on the perpetrator’s ability to change the configuration of a system, or modify some of its system software. To thwart this type of attack, Solaris 11 Zones can be configured in such a way that the configuration, or the programs that make up Solaris, cannot be modified from within the zone, even by someone who was able to gain some or all of the privileges available within that zone.

Three choices are available for this kind of configuration, each of which specifies the Solaris files that are writable:

1. Only configuration files can be modified, including everything in /etc/*, /var/*, and root’s home directory /root/*. With this method, packages cannot be installed, and the configuration of syslog and the audit subsystem can be changed. This choice of configuration, which is intended to prevent an attacker from modifying commands and leaving Trojan horses, is named flexible-configuration.

2. Only content in /var/* may be modified, except for some directories that contain configuration information. This method prevents attacks that require changing passwords, adding users, changing network information, and more. It is named fixed-configuration.

3. No content in the root pool can be changed. This method prevents attacks that require modifying any parts of Solaris. Unfortunately, it may also prevent some software from functioning correctly, such as software that temporarily stores files in /var/tmp. This choice is named strict.

You can achieve this level of protection by adding just one line to the configuration:

GZ# zonecfg -z troy

zonecfg:troy> create

zonecfg:troy> set file-mac-profile=strict

zonecfg:troy> exit
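A misspelled profile name is easy to miss in a script, so a small validation step can help. This hypothetical helper, mac_profile_cmd, accepts only the three profiles described above plus none, which we assume leaves the zone writable. Check zonecfg(1M) on your release for the complete list of values.

```shell
#!/bin/sh
# Sketch: validate a file-mac-profile value, then emit the zonecfg line.
mac_profile_cmd() {
    case $1 in
        none|strict|fixed-configuration|flexible-configuration)
            printf 'set file-mac-profile=%s\n' "$1" ;;
        *)
            echo "unknown file-mac-profile: $1" >&2
            return 1 ;;
    esac
}
```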

These strategies raise a key question: If even a privileged user in a zone cannot change its configuration files, how can those files be changed when necessary? The Trusted Path feature enables a privileged user in the global zone to use the -T option to the zlogin command. With this feature, the user can modify files that cannot be modified from within the zone. To use this feature, a user either will need the Solaris RBAC authorization solaris.zone.manage/<zonename> or must be delegated the authorization named manage.

For more details on the latter strategy, see the section “3.4.7.1 Delegated Administration” later in this chapter.

3.4.4 Resource Management

Resource management includes monitoring resource usage for the purpose of setting initial control levels, and modifying controls in the future to accommodate normal growth. The Solaris Zones feature set includes comprehensive resource management features, also called resource controls. These controls allow the platform administrator to manage those resources that are typically controlled in VEs. Use of these controls is optional, and most are disabled by default. We strongly recommend that you take advantage of these features, as they can prevent many problems caused by over-consumption of a resource by a workload. The following resources were mentioned in previous chapters:

CPU capacity—that is, the portion of a CPU’s clock cycles that a VE can use

Amount of RAM used

Amount of virtual memory or swap space used

Network bandwidth consumed

Use of kernel data structures—both structures of finite size and ones that use another finite resource such as RAM

This section describes the resource controls available for zones. It also demonstrates the use of Solaris tools to monitor resource consumption by zones. You should apply these monitoring tools before attempting to configure the resource controls, so that you will clearly understand the baseline resource needs of each workload.

The zonecfg command is the most useful tool for setting resource controls on zones. By default, its settings do not take effect until the next time the zone boots, but a command-line option may be used to specify immediate reconfiguration of a running zone.

The zonestat(1) command is a very powerful monitoring tool. It reports on both resource caps and resource usage. Examples are provided in the sections that follow.

Other useful commands that apply resource controls include prctl(1) and rcapadm(1M), both of which have an immediate effect on a zone. Resource control settings can also be viewed with the commands prctl, rcapstat(1), and poolstat(1M), in addition to zonestat.

Oracle Solaris 11 includes a wide variety of tools that report on consumption of specific resources, including prstat(1M), poolstat(1M), mpstat(1M), rcapstat(1), and kstat(1M). Many of these features can be applied to zones using command-line options or other methods. In addition, Oracle Enterprise Manager Ops Center was designed as a means to perform complete life-cycle management of both virtual and physical systems, including monitoring of resource consumption by the global zone and by individual zones. This section discusses only those tools that are included with Oracle Solaris 11. Chapter 7, “Automating Virtualization,” discusses OEM Ops Center and OpenStack, another virtualization life-cycle system.

One of the greatest strengths of Solaris Zones is common to most OSV implementations but absent from other forms of virtualization—namely, the ability of tools running in the management area to provide a holistic view of system activity, by aggregating all VEs, as well as a detailed view of the activity in each VE. The monitoring tools described in this section are widely used Solaris tools that provide either a holistic view, a detailed view, or options to obtain both views.

Oracle Solaris includes a text-based “dashboard” for Solaris Zones: zonestat(1). Similar to other “stat” monitoring commands, zonestat takes an argument that sets the length of the interval(s) during which it collects data, reporting averages at the end of each interval. Without any other arguments, the output shows basic information.

GZ$ zonestat 3

Collecting data for first interval...

Interval: 1, Duration: 0:00:03

SUMMARY Cpus/Online: 4/4 PhysMem: 31.8G VirtMem: 33.8G

----------CPU---------- --PhysMem-- --VirtMem-- --PhysNet--

ZONE USED %PART STLN %STLN USED %USED USED %USED PBYTE %PUSE

[total] 0.06 1.53% 0.00 0.00% 9200M 28.2% 6652M 19.2% 459 0.00%

[system] 0.00 0.00% - - 7710M 23.6% 4606M 13.3% - -

global 0.06 1.52% - - 1398M 4.29% 1972M 5.69% 459 0.00%

zone1 0.00 0.00% - - 91.0M 0.27% 73.6M 0.21% 0 0.00%

The output shown here has several components:

An announcement that data is being collected

An announcement of the first interval report

The interval report itself, which includes:

A summary line showing the number of CPUs and the total amounts of physical and virtual memory (SUMMARY)

Data aggregated across the global zone and all zones ([total])

Data for resources used by the system itself rather than by any particular zone ([system])

Data limited to processes running in the global zone (global)

Data for each individual zone during the interval (zone1)

The section labeled “CPU” shows the amount of CPU time used for each entry, normalized to one CPU thread, as well as the portion of CPU time used compared to that available to the zone during that interval. The “PhysMem” and “VirtMem” sections provide data about RAM and virtual memory. The latter considers both RAM and swap space used, and compares them to the total amount configured. The final section, “PhysNet,” displays network data.
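As a rough check on how these columns relate, the percentages in the sample output can be recomputed from the other figures. The following Python sketch is an approximate reconstruction, not zonestat’s implementation; it assumes that %PART divides CPU usage by the 4 online CPUs, that the memory percentages divide by the PhysMem and VirtMem totals on the SUMMARY line, and that 1G equals 1024M. The results differ slightly from the printed values because the displayed inputs are themselves rounded.

```python
# Approximate reconstruction of zonestat's percentage columns from the
# sample output (assumptions: 1G = 1024M, %PART = USED / online CPUs).
ONLINE_CPUS = 4
PHYS_MEM_M = 31.8 * 1024   # PhysMem: 31.8G, expressed in megabytes
VIRT_MEM_M = 33.8 * 1024   # VirtMem: 33.8G, expressed in megabytes


def percent(used, total):
    """Return used as a percentage of total, rounded to two decimals."""
    return round(100.0 * used / total, 2)


# CPU USED is normalized to one CPU thread: 0.06 means 6% of one thread.
cpu_part = percent(0.06, ONLINE_CPUS)     # compare with 1.53% for [total]
phys_used = percent(9200, PHYS_MEM_M)     # compare with 28.2% for [total]
virt_used = percent(6652, VIRT_MEM_M)     # compare with 19.2% for [total]

print(f"%PART={cpu_part}  PhysMem %USED={phys_used}  VirtMem %USED={virt_used}")
```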

The zonestat monitoring command can be used with a plethora of options to produce final aggregated reports, details of individual groups of CPUs and network ports, both physical and virtual, and much more. Some of these options will be illustrated later in this chapter.

3.4.4.1 CPU Controls

Oracle Solaris offers multiple methods for controlling a zone’s use of CPU time. The simplest is Dynamic Resource Pools, which enable you to assign CPUs exclusively to a zone; the resulting set of CPUs is called a pool. Perhaps the most flexible means of CPU control is the Fair Share Scheduler (FSS), which allows multiple zones to share the system’s CPUs, or a subset of them, while ensuring that each zone receives sufficient CPU time to meet its service level agreement (SLA). Lastly, CPU caps limit the maximum amount of CPU time that a zone can use. Although you can use a zone without any CPU controls, we recommend applying these controls to achieve consistent performance.

Dynamic Resource Pools

By default, all processes in all zones share the system’s processors. An alternative—Solaris Dynamic Resource Pools—ensures that a workload has exclusive access to a set of CPUs. When this feature is used, a zone is configured to have its own pool of CPUs for its exclusive use. Processes in the global zone and in other zones never run on that set of CPUs. This type of resource pool is called a temporary pool, because it exists only when the zone is running. Alternatively, it may be called a private pool, reflecting that those CPUs are dedicated (private) to just one zone and its processes.

A resource pool can be of fixed size, or it can be configured to vary in size within a range that you choose. In the latter situation, the OS will shift CPUs between pools as their needs change, as shown in Figure 3.3. Each CPU is assigned to either a (non-default) resource pool or the default pool. The default pool, which always exists, holds all CPUs that have not been assigned to other pools. CPUs in the default pool are used by global zone processes and processes in zones that are not configured to use a pool.

A private pool is not created until its zone boots. If sufficient CPUs are not available to fulfill the needs of the configuration, the zone will not boot, and a diagnostic message will be displayed instead. In that case, you must take one of the following steps to enable the zone to boot:

Reconfigure the zone with fewer CPUs.

Reconfigure the zone to share CPUs with other zones.

Shift CPUs out of the private pools of other zones and into the default pool.

Move the zone to a system with sufficient CPUs.

The simplest approach is to configure pools to have fixed size (e.g., 4 CPUs). A zone that uses a private, fixed-size pool will always be able to use all of the compute capacity of those CPUs. If you choose to use fixed-size pools, you can ignore the ability to dynamically adjust the sizes of the resource pools.

Other situations call for flexibility, specifically the ability to react automatically to a change in processing needs. For example, consider Figure 3.3, which shows a 32-CPU system with a default pool of 4–32 CPUs and with zones named Web, App, and DB, which are configured with pools of 2–6 CPUs, 8–16 CPUs, and 8–12 CPUs, respectively. Solaris will attempt to assign the maximum quantity of CPUs to each pool, while leaving the default pool with its minimum quantity of CPUs. Because those maximums total 34 CPUs, but only 28 CPUs remain after the default pool’s minimum of 4, not every pool can reach its maximum. By default, the quantity of CPUs assigned to each pool is balanced within the configuration constraints.

In the example shown in Figure 3.3, the Web zone will get 6 CPUs, and the App and DB zones will each get 11 CPUs, leaving 4 CPUs for the default pool. After the zones have been running for a while, if the CPU utilization of a pool exceeds a configurable threshold, Solaris will automatically shift 1 CPU into it from an under-utilized pool.
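The balancing described above can be modeled with a short Python sketch. This is a hypothetical simplification, not the algorithm that Solaris actually uses, and the function name balance_pools and its arguments are illustrative. It starts every pool at its minimum, holds the default pool at its minimum, and hands out the remaining CPUs one at a time to the smallest pool that has not yet reached its maximum.

```python
def balance_pools(total_cpus, default_min, pools):
    """Hypothetical model of balanced pool sizing.

    pools maps a pool name to a (min, max) tuple. Each pool starts at its
    minimum; spare CPUs go one at a time to the smallest pool that is still
    below its maximum. Whatever is left over stays in the default pool.
    """
    sizes = {name: lo for name, (lo, _hi) in pools.items()}
    spare = total_cpus - default_min - sum(sizes.values())
    if spare < 0:
        raise ValueError("not enough CPUs to satisfy every pool minimum")
    while spare > 0:
        growable = [name for name, (_lo, hi) in pools.items() if sizes[name] < hi]
        if not growable:
            break  # every pool is at its maximum; extra CPUs stay in default
        smallest = min(growable, key=lambda name: sizes[name])
        sizes[smallest] += 1
        spare -= 1
    # The default pool keeps its minimum plus anything no pool could absorb.
    sizes["default"] = total_cpus - sum(sizes.values())
    return sizes


# The configuration from Figure 3.3: 32 CPUs, default pool minimum of 4.
allocation = balance_pools(32, 4, {"Web": (2, 6), "App": (8, 16), "DB": (8, 12)})
print(allocation)  # {'Web': 6, 'App': 11, 'DB': 11, 'default': 4}
```

With these inputs the model reproduces the allocation given in the text: Web fills to its maximum of 6, and the remaining 22 CPUs are split evenly between App and DB.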

Figure 3.4 shows graphs of CPU allocation and utilization for three different zones and the system’s default pool. As the workloads become more active, they need more processing capacity, and Solaris dynamically provides this resource as necessary.

For example, “Web CPUs” in Figure 3.4 is the number of CPUs configured in the Web zone’s pool as time passes. As the utilization of one pool grows so that it exceeds a configurable threshold, Solaris shifts a CPU into the pool. As the workload increases, Solaris shifts more CPUs until it has reached the maximum quantity configured for that pool, or until there are no more CPUs that can be shifted to the pool. After the utilization of a pool has decreased below the threshold for a suitably long period, Solaris shifts a CPU out of the zone’s pool and back into the default pool.

Note that the App zone’s pool and the DB zone’s pool have a minimum of 8 CPUs each. During at least some periods of time, these pools have excess capacity that other zones cannot use. Maintaining that excess capacity offers a notable benefit: Those zones can use that capacity instantly when they need it, without waiting for Solaris to shift a CPU to their own pools. In other words, they can react more quickly to changing needs for processor time.

Configuring Resource Pools

Configuring a zone to use a private pool is very easy, and several options make the process more flexible. To dedicate two CPUs to a zone named web, run the following commands:

GZ# zonecfg -z web

zonecfg:web> add dedicated-cpu

zonecfg:web:dedicated-cpu> set ncpus=2

zonecfg:web:dedicated-cpu> end

zonecfg:web> exit

GZ# zoneadm -z web boot

The set of CPUs assigned to a zone using this method can be changed while the zone runs, using the live zone reconfiguration feature described later in this chapter.

Global zone administrators can view the current configuration of pools, and CPU usage relative to those pools, with the zonestat command.

GZ# zonestat -r psets 3

Collecting data for first interval...

Interval: 1, Duration: 0:00:03

PROCESSOR_SET TYPE ONLINE/CPUS MIN/MAX

pset_default default-pset 2/2 1/-

ZONE USED %USED STLN %STLN CAP %CAP SHRS %SHR %SHRU

[total] 0.48 16.2% 0.00 0.00% - - - - -

[system] 0.03 1.26% - - - - - - -

global 0.44 14.9% - - - - - - -

PROCESSOR_SET TYPE ONLINE/CPUS MIN/MAX

web dedicated-cpu 2/2 2/2

ZONE USED %USED STLN %STLN CAP %CAP SHRS %SHR %SHRU

[total] 0.00 0.09% 0.00 0.00% - - - - -

[system] 0.00 0.05% - - - - - - -

web 0.00 0.04% - - - - - - -

Note that the zone is associated with a processor set that contains the CPUs. It is possible to manually create a resource pool and assign one or more zones to that pool. The zonestat output would then show multiple zones in the listing for one processor set. This technique will not be explored in detail, as it is beyond the scope of this book.

To make the pool size a dynamic quantity the next time the zone boots, you can run the following commands, which allocate between two and four processors to the zone. The first command enables the pools/dynamic service, which runs the poold daemon; this service dynamically adjusts pool sizes and is not needed for static pools. It monitors resource pools that have been configured with a variable quantity of CPUs, tracks the CPU utilization of those pools, and, if one is over-utilized, shifts a CPU to it from an under-utilized pool. If multiple pools are over-utilized, the importance parameter informs the OS of the relative importance of this pool compared to the other pools.

GZ# svcadm enable pools/dynamic

GZ# zonecfg -z web

zonecfg:web> select dedicated-cpu

zonecfg:web:dedicated-cpu> set ncpus=2-4

zonecfg:web:dedicated-cpu> set importance=5

zonecfg:web:dedicated-cpu> end

zonecfg:web> exit

GZ# zoneadm -z web reboot
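The adjustment that poold performs can be illustrated with a single-step model. The Python sketch below is hypothetical: the Pool class, the shift_one_cpu function, and the 80% utilization threshold are illustrative assumptions rather than poold’s actual interface or algorithm, and the sketch ignores the requirement that utilization stay low for a suitably long period before a CPU is given back. It moves one CPU into the most important over-utilized pool that is still below its maximum, taking that CPU from the least-utilized pool that is above its minimum.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    size: int            # CPUs currently in the pool
    min_cpus: int
    max_cpus: int
    utilization: float   # fraction of the pool's CPUs in use (0.0 to 1.0)
    importance: int = 1


def shift_one_cpu(pools, threshold=0.8):
    """Hypothetical single adjustment step: move one CPU into the most
    important over-utilized pool from the least-utilized eligible donor.
    Returns (donor_name, receiver_name), or None if no move is possible."""
    needy = [p for p in pools if p.utilization > threshold and p.size < p.max_cpus]
    donors = [p for p in pools if p.utilization < threshold and p.size > p.min_cpus]
    if not needy or not donors:
        return None
    receiver = max(needy, key=lambda p: p.importance)  # importance breaks ties
    donor = min(donors, key=lambda p: p.utilization)
    donor.size -= 1
    receiver.size += 1
    return donor.name, receiver.name


pools = [
    Pool("default", size=6, min_cpus=4, max_cpus=32, utilization=0.20),
    Pool("web", size=2, min_cpus=2, max_cpus=4, utilization=0.95, importance=5),
    Pool("app", size=8, min_cpus=8, max_cpus=16, utilization=0.90, importance=2),
]
move = shift_one_cpu(pools)
print(move)  # ('default', 'web')
```

Because the web pool has the higher importance, it receives the CPU even though the app pool is also above the threshold.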

The size of a pool can be changed while the zone runs by manually shifting CPUs from one pool to another. This step is performed by using the live zone reconfiguration feature described later in this chapter.

Until now we have used the term “CPU” without examining what it means, even though its meaning, and the Solaris kernel’s interpretation of it, shape the features described in this section. For decades, “CPU” meant the chip that ran machine instructions, one at a time. The operating system created a process, branched execution to it, and cleaned up when it was done. A multiprogramming OS ran one process for a short period of time, then switched the CPU to another process if one was ready to run.

Today, however, both multicore processors and multithreaded cores are available. Multicore processors are simply multiple CPUs implemented on a single piece of silicon, although some models provide some shared resources for those cores, such as shared cache. Multithreaded cores improve throughput performance by duplicating some—but not all—of a core’s circuitry within the core. To make the most of those hardware threads, the operating system must be able to efficiently schedule processes onto the threads, preferably with some knowledge of the CPU’s internal workings and memory architecture.

Oracle Solaris has a long history of efficiently scheduling hundreds of processes on dozens of processors, going back to the E10000 with its 64 single-core CPUs. Building on that scalability, Solaris was modified to treat each hardware thread in the system as a CPU on which it can schedule processes, maximizing the total throughput of the platform.

Unfortunately, this scheme can be a bit confusing when you are configuring resource pools, because each type of CPU has its own type and amount of hardware threading, as well as its own per-thread performance characteristics. Table 3.6 lists various CPUs that run Oracle Solaris 11, along with their core and thread counts. It also shows some sample systems.

Because Solaris identifies each hardware thread as a CPU, you can create very flexible resource pools. For example, on an Oracle T7-2, a SPARC-based computer with 2 CPU chips, you can create twelve 32-CPU pools and still have 128 CPUs left for the global zone! Most multithreaded CPUs have some shared cache, so if you want to optimize performance you should configure CPU quantities in multiples of 4 or 8, depending on the architecture of that CPU.
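The arithmetic behind the T7-2 example can be checked in a few lines. The Python sketch below assumes that each of the T7-2’s two SPARC M7 chips has 32 cores with 8 hardware threads per core, and that Solaris presents each hardware thread as one CPU. It also checks whether a 32-CPU pool is a multiple of the threads per core, which is what allows such a pool to consist of whole cores.

```python
# Assumed topology of an Oracle SPARC T7-2: 2 SPARC M7 chips,
# 32 cores per chip, 8 hardware threads per core.
CHIPS = 2
CORES_PER_CHIP = 32
THREADS_PER_CORE = 8

# Solaris presents every hardware thread as a CPU.
total_cpus = CHIPS * CORES_PER_CHIP * THREADS_PER_CORE   # 512

pool_size = 32
pool_count = 12
left_for_global = total_cpus - pool_count * pool_size    # 512 - 384 = 128

# A pool whose size is a multiple of the threads per core can be made up
# of whole cores, so its threads need not share a core with another pool.
whole_cores = pool_size % THREADS_PER_CORE == 0

print(total_cpus, left_for_global, pool_size // THREADS_PER_CORE, whole_cores)
# 512 128 4 True
```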

Certainly, all of that flexibility is very useful, but in many situations other factors are even more important. Some workloads will run best if you configure the zone with one or more entire cores, and a similar feature enables you to choose specific cores by their ID numbers. The psrinfo -t command displays the ID numbers of CPU threads, CPU cores, and CPU sockets. The following example uses the cores property instead of ncpus, and configures the four cores with IDs 4 through 7 for use by a zone:

GZ# zonecfg -z dbzone

zonecfg:dbzone> add dedicated-cpu

zonecfg:dbzone:dedicated-cpu> set cores=4-7

zonecfg:dbzone:dedicated-cpu> end

zonecfg:dbzone> exit

Variations of that feature allow you to choose specific hardware threads or entire CPU chips, which are called “sockets.”

Finally, the kernel does not enforce a limit on the total number of CPUs configured in different pools or zones until those zones are actually running. Thus you could configure and install ten 120-core zones on a T5-8, which has 128 cores, as long as you run only one of those zones at a time. In other words, you can over-subscribe CPUs. Alternatively, you could configure and simultaneously run 10 zones, each with 100 CPUs (threads), because the 1,000 threads they need fit within the T5-8’s 1,024 hardware threads.
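Because a private pool is created only when its zone boots, CPU over-subscription matters only for zones that are running. The Python sketch below models that boot-time check under stated assumptions: a T5-8 with 8 SPARC T5 chips, 16 cores per chip, and 8 threads per core, and a default pool that must keep at least one CPU, matching the MIN value shown for pset_default in the earlier zonestat output. The can_boot function is hypothetical, not a Solaris interface.

```python
# Assumed topology of an Oracle SPARC T5-8: 8 SPARC T5 chips,
# 16 cores per chip, 8 hardware threads per core.
THREADS_PER_CORE = 8
TOTAL_THREADS = 8 * 16 * THREADS_PER_CORE   # 1,024 CPUs as Solaris sees them
DEFAULT_POOL_MIN = 1                        # assumed minimum for the default pool


def can_boot(running_zone_cpus, new_zone_cpus):
    """Hypothetical check: only running zones hold private pools, so only
    they compete with a booting zone for CPUs."""
    in_use = sum(running_zone_cpus)
    return in_use + new_zone_cpus + DEFAULT_POOL_MIN <= TOTAL_THREADS


zone_120_cores = 120 * THREADS_PER_CORE     # 960 threads per zone

# Ten such zones can be configured, but only one can run at a time.
print(can_boot([], zone_120_cores))                 # True
print(can_boot([zone_120_cores], zone_120_cores))   # False

# Ten 100-CPU zones (1,000 threads in total) can all run at once.
print(can_boot([100] * 9, 100))                     # True
```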

Fair Share Scheduler

The Fair Share Scheduler was discussed in Chapter 2, “Use Cases and Requirements.” FSS can control the allocation of available CPU resources among workloads based on the level of importance that you assign to them. This importance is expressed by the number of CPU shares that you assign to each workload. FSS compares the number of shares assigned to a particular workload to the aggregate number of shares assigned to all workloads. For example, if one workload has 100 shares and the total number of shares assigned to all workloads is 500, the scheduler will ensure that the workload receives at least one-fifth of the compute capacity of the available CPUs.
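The share arithmetic in this example can be expressed directly. In the Python sketch below, the workload names and the split of the 500 shares among them are hypothetical; only the ratio of 100 shares out of 500 comes from the example. The function returns the minimum fraction of the available CPU capacity that a workload receives when every workload is busy.

```python
from fractions import Fraction


def guaranteed_fraction(shares, workload):
    """Minimum fraction of the available CPUs that FSS gives a workload when
    all workloads want CPU time: its shares divided by the total shares."""
    return Fraction(shares[workload], sum(shares.values()))


# Hypothetical workloads whose shares total 500, as in the example above.
shares = {"db": 100, "app": 250, "web": 150}
print(guaranteed_fraction(shares, "db"))   # 1/5
```

Because FSS counts only the shares of workloads that are actively using CPU time, a workload can receive more than this minimum when other workloads are idle.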