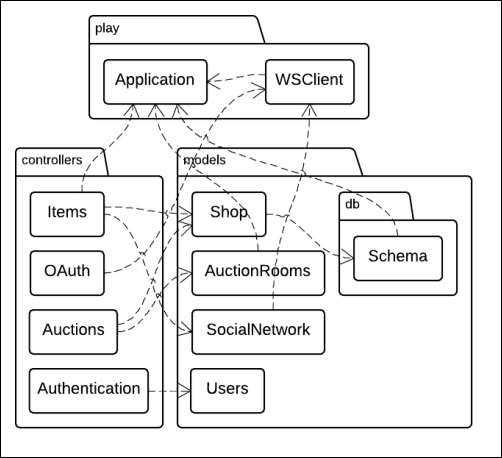

As our shop application grew in size, we added code but we never went back to see how the components are interrelated. The following figure shows the classes involved in our application and the dependency links between them:

A diagram of the classes involved in the shop application and the dependency links between them. Note that the models.db.Schema component has no equivalent in the Java version.

We observe that there are many interdependencies between the parts of the application. Of course, controllers depend on services, but we also see that both services and controllers might depend on a web service client (for example, the OAuth controller and the SocialNetwork service in our case). More importantly, the same applies to the Play Application class. Both controllers and services might depend on the underlying application (the Application class has methods to get the application's configuration, plugins, or classpath).

Until now, I didn't give any particular recommendation regarding the way you can resolve these dependencies. In my code examples, everything was implemented as object singletons (or static methods in Java) that were directly referred to by the code depending on them. Though this design has the merit of being simple, it makes the code less modular and, therefore, harder to test. For instance, there is no way to use a mocked service to test a controller because the controller is tightly coupled to the service singleton.

My favorite solution to make the code more modular is to apply the inversion of control principle. Instead of letting the components instantiate or have hardcoded references to their dependencies, you (or a dependency injection process) supply these dependencies to the components. This way, you can use a mocked service layer to test a controller. In practice, defining each component as a class that takes its dependencies as constructor parameters works quite well. We used objects everywhere (or Java classes with only static methods), but now we have to trade them for regular classes.
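As a minimal sketch of this idea in plain Java (no Play involved), the following example shows a controller-like class that receives its service through its constructor, so that a test can pass a stub instead. The names ShopService, ItemsController, and ConstructorInjectionDemo are hypothetical and do not come from the shop application.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical service abstraction; the names here are illustrative only.
interface ShopService {
    List<String> itemNames();
}

// The component receives its dependency instead of referring to a singleton.
final class ItemsController {
    private final ShopService shop;

    ItemsController(ShopService shop) {
        this.shop = shop;
    }

    // Renders the item names as a comma-separated line.
    String list() {
        return String.join(", ", shop.itemNames());
    }
}

final class ConstructorInjectionDemo {
    public static void main(String[] args) {
        // In a test, a hand-written stub stands in for the real service.
        ShopService stub = () -> Arrays.asList("Play Framework Essentials");
        System.out.println(new ItemsController(stub).list());
    }
}
```

Because ItemsController never names a concrete service, the same class works unchanged with the real implementation in production and with a stub or mock in tests.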

Unfortunately, this solution does not work so well in the case of Play applications. Indeed, as we see in the previous figure, there is a component that several other components depend on, and that is not controlled by you: Application. You don't control this component because it is managed by Play when it handles your project's life cycle. When Play runs your code, it creates an Application instance from your project and registers it as the current application. The significant advantage of this design is that Play is able to hot reload your project when it detects changes in the development mode, without requiring anything from you. On the other hand, if your code depends on a feature provided by the Application instance (for example, getting the application's configuration), there is no other way to access it than reading the Play global state, which is play.api.Play.current (or its Java equivalent, play.Play.application()). This function returns the current application if there is already such an application started; otherwise it throws an exception. As you don't control the application creation and because the current application is not a stable reference, you cannot use play.api.Play.current (or play.Play.application() in Java) to supply an Application object to the constructor of a component that has such a dependency.

Although this design makes it impossible for you to statically instantiate all the components of your application, it is still possible to use a runtime dependency injection system. Such a system instantiates your components at runtime, based on an injection configuration. If you can tell your injection mechanism how to resolve the current Application instance, it can instantiate your application's components.

Note

I usually do not recommend using runtime dependency injection systems because they might have hard-to-debug behaviors or produce exceptions at runtime. However, it is worth noting that Play 2.4 aims to make it possible to fully achieve a compile-time dependency injection (though at the time of writing this, the implementation is still subject to discussion). Therefore, my advice remains: write your components as classes taking their dependencies as constructor parameters.

Finally, to modularize your code, you have to complete two steps: turning each component into a class taking its dependencies as constructor parameters and setting up a runtime dependency injection system.

Applying the first step in your Scala code leads to the following changes in the business layer. The models.db.Schema object becomes a class that takes an Application object as the constructor parameter. Consequently, the models.Shop object becomes a class that takes models.db.Schema as a constructor parameter. Similarly, the models.AuctionRooms object becomes a class that depends on an Application (this one is used to retrieve the Akka actor system). Finally, the models.SocialNetwork object becomes a class that depends on a WSClient object.

Tip

Now that the Schema class constructor takes an Application as a parameter, we clearly see that our service layer depends on the Play framework. Indeed, our application relies on Play evolutions to set up the database. However, in a real-world project, your service layer might be completely decoupled from the Play framework. In such a case, the Schema class will take Database as a constructor parameter instead of a Play application.

In Java, the models.Shop implementation uses the JPA plugin, which itself uses a hardcoded reference to the currently running Play application (it uses play.Play.application()), so you cannot achieve inversion of control for this component. Similarly, the Play Akka integration API directly uses the currently running Play application, so you cannot achieve inversion of control for the models.AuctionRooms component either. You should nevertheless turn them into regular classes (with methods instead of static methods) so that they can be injected into your controllers. Finally, the models.SocialNetwork component can be turned into a class that takes WSClient as a constructor parameter.

The controller layer is, in turn, affected by the application of the inversion of control principle. However, if you turn a controller object into a class (or define actions as methods instead of static methods in Java), you will encounter a problem—your routes expect actions to be statically referenced. Thankfully, Play allows you to have a dynamic controller by prepending the action call of a route with the character @. So, you have to prepend all the action calls of your routes that use a dynamic controller with @:

    GET    /items          @controllers.Items.list
    POST   /items          @controllers.Items.create
    # etc. for other routes
    # the following route remains unchanged, though
    GET    /assets/*file   controllers.Assets.versioned(path="/public", file: Asset)

How does Play handle such route definitions? When a request matches the URL pattern, Play retrieves the corresponding controller instance by calling the getControllerInstance method of your project's Global object and then calls the corresponding action. You are responsible for implementing this method, which has the following type signature:

def getControllerInstance[A](controllerClass: Class[A]): A

In Java it has the following type signature:

public <A> A getControllerInstance(Class<A> controllerClass)

This method provides Play with a means of using your runtime dependency injection system. A common pattern is to define an injector (as per your dependency injection system) in your Global object and then use it to implement the getControllerInstance method.

Several dependency injection systems exist, especially in the Java ecosystem. In Scala, runtime dependency injection systems are not very popular. That's why in this book I provide only an example using Guice, both in Scala and Java.

Here is a Scala implementation of a drop-in GlobalSettings mixin that achieves dependency injection using Guice:

    import com.google.inject.{Provider, Guice, AbstractModule}
    import play.api.{Application, GlobalSettings}

    trait GuiceInjector extends GlobalSettings {

      val injector = Guice.createInjector(new AbstractModule {
        def configure() = {
          val applicationClass = classOf[Application]
          bind(applicationClass) toProvider new Provider[Application] {
            def get() = play.api.Play.current
          }
        }
      })

      override def getControllerInstance[A](ctrlClass: Class[A]) =
        injector.getInstance(ctrlClass)
    }

This trait creates an injector whose configuration tells it how to resolve a dependency on an Application object (by reading the play.api.Play.current variable). Then, the getControllerInstance method just delegates to this injector. When Guice is asked to get a class instance, it figures out the class dependencies by looking at its constructor parameters (using reflection). It then tries to inject them by resolving them according to the injector configuration (if the configuration says nothing about a given class, Guice tries to create an instance by calling its constructor, after having resolved its dependencies, and so on).
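To illustrate the resolution strategy just described, here is a toy injector written in plain Java. It is only a sketch of the general idea (explicit providers first, constructor reflection otherwise) and not how Guice is actually implemented. The App, Schema, and Shop classes are hypothetical stand-ins, and MiniInjector is an invented name. The sketch assumes that each class has exactly one constructor.

```java
import java.lang.reflect.Constructor;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical stand-in for the framework-managed application object.
final class App {
    final String name;
    App(String name) { this.name = name; }
}

// Components declare their dependencies as constructor parameters.
final class Schema {
    final App app;
    Schema(App app) { this.app = app; }
}

final class Shop {
    final Schema schema;
    Shop(Schema schema) { this.schema = schema; }
}

// Toy injector: explicit providers first, constructor reflection otherwise.
final class MiniInjector {
    private final Map<Class<?>, Supplier<?>> providers = new HashMap<>();

    // Registers a provider that is called each time the type is requested.
    <T> MiniInjector bind(Class<T> type, Supplier<? extends T> provider) {
        providers.put(type, provider);
        return this;
    }

    <T> T getInstance(Class<T> type) {
        Supplier<?> provider = providers.get(type);
        if (provider != null) {
            return type.cast(provider.get());
        }
        try {
            // Assumption: the class declares exactly one constructor.
            Constructor<?> ctor = type.getDeclaredConstructors()[0];
            Class<?>[] paramTypes = ctor.getParameterTypes();
            Object[] args = new Object[paramTypes.length];
            for (int i = 0; i < paramTypes.length; i++) {
                // Resolve each constructor parameter recursively.
                args[i] = getInstance(paramTypes[i]);
            }
            ctor.setAccessible(true);
            return type.cast(ctor.newInstance(args));
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + type, e);
        }
    }
}
```

A possible usage is `new MiniInjector().bind(App.class, () -> currentApp).getInstance(Shop.class)`, where currentApp is whatever object plays the role of the running application. Binding a provider rather than a fixed value mirrors the reason for using a Provider in the Guice configuration: the lookup is deferred until an instance is actually requested.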

To use this trait in your project, just mix it in your Global object:

    object Global extends WithFilters(CSRFFilter())
      with GuiceInjector { … }

In Java, an equivalent implementation is as follows:

    import com.google.inject.AbstractModule;
    import com.google.inject.Guice;
    import com.google.inject.Injector;
    import com.google.inject.Provider;
    import play.Application;
    import play.GlobalSettings;

    public class Global extends GlobalSettings {

        final Injector injector =
            Guice.createInjector(new AbstractModule() {
                @Override
                protected void configure() {
                    bind(Application.class)
                        .toProvider(new Provider<Application>() {
                            @Override
                            public Application get() {
                                return play.Play.application();
                            }
                        });
                }
            });

        @Override
        public <A> A getControllerInstance(Class<A> controllerClass) {
            return injector.getInstance(controllerClass);
        }
    }

As is the case with most dependency injection systems, your code has to be adapted to be injectable. That is to say, Guice needs to know which constructor it should use to instantiate a class. In Java (and in Guice), the JSR-330 specification defines standard annotations for this purpose. This means that the constructor you want Guice to use must be annotated with the @javax.inject.Inject annotation.

Note

Note that this adaptation step is not required by some dependency injection systems, for instance, PicoContainer in Java and Subcut (using the injected macro) and MacWire in Scala. Though they do not suffer from this inconvenience, their usage seems to be less prevalent than Guice. This is why I stuck with Guice in this book. Nevertheless, feel free to give them a try.

Furthermore, in the case of controllers, we don't want to create a new instance each time getControllerInstance is called (that is, each time a request is routed to a dynamic controller call), but we want to reuse the same controller instance during the whole application's lifetime. Fortunately, Guice can do this for us if we annotate our controllers with the @javax.inject.Singleton annotation.
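The effect of singleton scoping can be sketched in plain Java as a cache that creates an instance the first time a class is requested and returns the same instance afterwards. This is only an illustration of the concept, not Guice's implementation. SingletonScope is a hypothetical name, and the factory function stands for whatever mechanism actually builds the instance.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Caches one instance per class, delegating creation to a factory function.
final class SingletonScope {
    private final Map<Class<?>, Object> instances = new ConcurrentHashMap<>();
    private final Function<Class<?>, Object> factory;

    SingletonScope(Function<Class<?>, Object> factory) {
        this.factory = factory;
    }

    <T> T get(Class<T> type) {
        // The factory runs only on the first request for a given class.
        // Note: the factory must not call get() on this same scope, because
        // ConcurrentHashMap does not support recursive computeIfAbsent calls.
        return type.cast(instances.computeIfAbsent(type, factory));
    }
}
```

With such a scope, every request routed to a given controller class would reuse one instance for the scope's lifetime, which is the behavior the @Singleton annotation asks Guice to provide.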

In order to avoid having your entire code base polluted with such annotations, you can restrict the perimeter of the classes that are managed by Guice to the controllers. Thus, you can define a controllers.Service class that wires up your services, and then you can make your controllers depend on this class instead of depending on the services (Guice won't instantiate them so that you don't have to annotate them):

    package controllers

    import javax.inject.{Singleton, Inject}
    import models.{SocialNetwork, Users, AuctionRooms, Shop}
    import db.Schema
    import play.api.libs.ws.WS

    @Singleton
    class Service @Inject() (val app: play.api.Application) {
      val ws = WS.client(app)
      val shop = new Shop(new Schema(app))
      val auctionRooms = new AuctionRooms(app)
      val users = new Users
      val socialNetwork = new SocialNetwork(ws)
    }

The equivalent Java code is as follows:

    package controllers;

    import com.google.inject.Singleton;
    import models.AuctionRooms;
    import models.Shop;
    import models.SocialNetwork;
    import models.Users;
    import play.libs.ws.WSClient;
    import play.libs.ws.WS;

    @Singleton
    public class Service {
        public final WSClient ws = WS.client();
        public final Shop shop = new Shop();
        public final AuctionRooms auctionRooms = new AuctionRooms();
        public final Users users = new Users();
        public final SocialNetwork socialNetwork =
            new SocialNetwork(ws);
    }

In Java, we don't take an Application object as a constructor parameter because, as mentioned before, the JPA and Akka APIs have a hardcoded dependency on the currently started Play application.

Finally, you can define your controllers as injectable classes depending on this service. Here is the relevant code for the Items controller:

    @Singleton class Items @Inject() (service: Service)
      extends Controller { … }

The equivalent Java code is the following:

    @Singleton
    public class Items extends Controller {

        protected final Service service;

        // The constructor must carry the name of the class it belongs to.
        @Inject
        public Items(Service service) {
            this.service = service;
        }

        …
    }

Note that the code of your controllers might also depend on an Application object (for example, the cache API uses an implicit Application parameter). So, you might also need to add the following implicit definition to your Scala controllers:

implicit val app = service.app

Finally, you can avoid repeating this boilerplate code for each controller by factoring it out in a controllers.Controller base class:

    package controllers

    class Controller(val service: Service)
      extends play.api.mvc.Controller {
      implicit val app = service.app
    }

The equivalent Java code is as follows:

    package controllers;

    public class Controller extends play.mvc.Controller {

        protected final Service service;

        public Controller(Service service) {
            this.service = service;
        }
    }

Then, all you need is to make your controllers extend controllers.Controller:

    @Singleton class Items @Inject() (service: Service)
      extends Controller(service) { … }

The equivalent Java code is as follows:

    @Singleton
    public class Items extends Controller {

        @Inject
        public Items(Service service) {
            super(service);
        }

        …
    }

Your application should now compile and run properly.

Now that your architecture is more modular, you can leverage it to independently test each component. In particular, you can now test the controller layer without going through the service layer even if controllers depend on services. You can do so by mocking a service while testing a controller that depends on it. For this purpose, Play includes Mockito, a library for mocking objects.

Let's demonstrate how to test, for instance, the Auctions.room action with a mocked Shop service in a test/controllers/AuctionsSpec.scala file:

    package controllers

    import org.specs2.mock.Mockito

    class AuctionsSpec extends PlaySpecification with Mockito {

      class WithMockedService extends {
        val service = mock[Service]
      } with WithApplication(
        FakeApplication(withGlobal = Some(new GlobalSettings {
          val injector = Guice.createInjector(new AbstractModule {
            def configure(): Unit = {
              bind(classOf[Application])
                .toProvider(new Provider[Application] {
                  def get() = play.api.Play.current
                })
              bind(classOf[Service]).toInstance(service)
            }
          })
          override def getControllerInstance[A](clazz: Class[A]) =
            injector.getInstance(clazz)
        }))
      )

      "Auctions controller" should {

        val request = FakeRequest(routes.Auctions.room(1))

        "redirect unauthenticated users to a login page" in new WithMockedService {
          route(request) must beSome.which { response =>
            status(response) must equalTo (SEE_OTHER)
          }
        }

        "show auction rooms for authenticated users" in new WithMockedService {
          val shop = mock[Shop]
          service.shop returns shop
          shop.get(1) returns Some(
            Item(1, "Play Framework Essentials", 42)
          )
          route(request.withSession(
            Authentication.UserKey -> "Alice")
          ) must beSome.which(status(_) must equalTo (OK))
        }
      }
    }

The preceding code defines a WithMockedService scope, which extends WithApplication and uses a global object that defines custom dependency injection logic. This dependency injection logic binds the Service class to a mocked instance, defined as a service member in the early initialization block. The first test specification does not actually rely on this mock; it checks that an unauthenticated user is indeed redirected to the login page when they attempt to access an auction room. The second test specification creates a mock for the Shop class, configures the service mock to use the shop mock, configures the shop mock to return an Item object, and finally checks that an authenticated user actually gets the auction room page. The AuctionsSpec specification class extends the Mockito trait, which provides the integration with the Mockito API.

Something similar can be achieved in Java, in a test/controllers/AuctionsTest.java file:

    package controllers;

    import static org.mockito.Mockito.*;

    public class AuctionsTest extends WithApplication {

        Service service;

        @Override
        protected FakeApplication provideFakeApplication() {
            return fakeApplication(new GlobalSettings() {
                {
                    service = mock(Service.class);
                }
                Injector injector = Guice.createInjector(new AbstractModule() {
                    @Override
                    protected void configure() {
                        bind(Application.class)
                            .toProvider(new Provider<Application>() {
                                @Override
                                public Application get() {
                                    return play.Play.application();
                                }
                            });
                        bind(Service.class).toInstance(service);
                    }
                });
                @Override
                public <A> A getControllerInstance(
                        Class<A> controllerClass) throws Exception {
                    return injector.getInstance(controllerClass);
                }
            });
        }

        @Test
        public void redirectUnauthenticatedUsers() {
            Result response = route(fakeRequest(routes.Auctions.room(1)));
            assertThat(status(response)).isEqualTo(SEE_OTHER);
        }

        @Test
        public void acceptAuthenticatedUsers() {
            Shop shop = mock(Shop.class);
            when(service.shop()).thenReturn(shop);
            when(shop.get(1L)).thenReturn(
                new Item(1L, "Play Framework Essentials", 42.0)
            );
            Result response = route(fakeRequest(routes.Auctions.room(1))
                .withSession(Authentication.USER_KEY, "Alice"));
            assertThat(status(response)).isEqualTo(OK);
        }
    }

Here, the AuctionsTest class extends WithApplication and overrides the provideFakeApplication method to return a fake application with global settings that define the custom dependency injection logic. This logic binds the Service class to a mock created in the GlobalSettings instance initializer. As in the Scala version, the first test specification does not actually rely on the mock. It checks whether an unauthenticated user is redirected to the login page when they attempt to access an auction room. The second test specification configures the service mock to use a shop mock, then configures the shop mock to return an Item, and finally checks that an authenticated user actually gets the auction room page. The Mockito API is brought in by a static import of org.mockito.Mockito.*.

You can go one step further and split your code into independent artifacts so that the service layer is not even aware of the existence of the controller layer.

You can achieve this by defining several sbt projects, for instance, one for the controller layer and another one for the service layer, and by making the controller project depend on the service project. Completely separate sbt projects can be cumbersome to work with, as you need to republish them to your local repository each time they change so that the projects depending on them can see the changes. Alternatively, you can define subprojects within the same sbt project. In this case, a change in a subproject automatically causes the subprojects that depend on it to be recompiled.

The sbt documentation explains in detail how to set up a multi-project build. The remainder of this section shows how to define separate projects for the controller and service layers.

A way to achieve this consists of keeping the Play application (that is, the controller layer) in the root directory and placing the service layer in the service/ subdirectory. In our case, the service layer contains the code under the models package, so move everything from shop/app/models/ to shop/service/src/main/scala/models/ (or shop/service/src/main/java/models/ in Java) and everything from shop/test/models/ to shop/service/src/test/scala/models/ (or shop/service/src/test/java/models/ in Java). Note that the subproject is a standard sbt project, not a Play application, so it follows the standard sbt directory layout (sources are in the src/main/scala/ and src/main/java/ directories and tests are in the src/test/scala/ and src/test/java/ directories).

Declare the service project in your build.sbt file:

    lazy val service = project.settings(
      libraryDependencies ++= Seq(
        "com.typesafe.slick" %% "slick" % "2.0.1",
        jdbc,
        ws,
        "org.specs2" %% "specs2-core" % "2.3.12" % "test",
        component("play-test") % "test"
      )
    )

The service layer depends on Slick for database communication, on the Play JDBC plugin to manage database evolutions, on the Play WS library for the social network integration, and on specs2 and the play-test library for tests. We need the play-test library in order to create a fake Play application that manages our database evolutions. Note that for the evolutions plugin to detect evolution files, you have to move them to the shop/service/src/main/resources/evolutions/default/ directory.

In Java, the service subproject definition looks like the following:

    lazy val service = project.settings(
      libraryDependencies ++= Seq(
        javaWs,
        javaJpa,
        // + your JPA implementation
        component("play-test") % "test"
      )
    )

The service layer depends on the Play WS library, the Play JPA plugin, the JPA implementation of your choice, and the play-test library. As for the Scala version, you have to move your evolution scripts to the shop/service/src/main/resources/evolutions/default/ directory. You also have to move your persistence.xml file to the shop/service/src/main/resources/META-INF/ directory.

Finally, make the root project depend on the service project:

    lazy val shop = project.in(file("."))
      .enablePlugins(PlayScala)
      .dependsOn(service)

The equivalent code for Java projects is as follows:

    lazy val shop = project.in(file("."))
      .enablePlugins(PlayJava)
      .dependsOn(service)

It could be interesting to pull the OAuth controller out of your application so that you can reuse it in another project. However, while you can easily split your service layer into different artifacts with no particular constraint, just as you would do with any other sbt project, splitting the controller layer is not as simple if you also want to split the route definitions.

Indeed, splitting the route definitions has two consequences. First, all projects that define some routes should be Play projects so that they benefit from the routes compiler. Second, you need a means to include the routes defined by another project in your final application.

You can define a Play subproject in your build just as you would define a regular sbt subproject (just as you did with the service layer), with this key difference: enable PlayScala (or PlayJava in Java) for the project. Consequently, the directory layout is the same as with Play applications (that is, sources live in the app/ subdirectory, and so on). In the case of the OAuth component, create an oauth/ directory and put the controller code in an app/ subdirectory. Define the corresponding subproject in your build.sbt file and make the shop project depend on it:

    lazy val oauth = project.enablePlugins(PlayScala).settings(
      libraryDependencies ++= Seq(
        ws,
        "com.google.inject" % "guice" % "3.0"
      )
    )

    lazy val shop = project.in(file("."))
      .enablePlugins(PlayScala)
      .dependsOn(service, oauth)

Our OAuth controller uses the Play WS library and Guice to be injectable. In Java, the relevant parts of the build.sbt file are as follows:

    lazy val oauth = project.enablePlugins(PlayJava).settings(
      libraryDependencies ++= Seq(
        javaWs,
        "com.google.inject" % "guice" % "3.0"
      )
    )

    lazy val shop = project.in(file("."))
      .enablePlugins(PlayJava)
      .dependsOn(service, oauth)

The design of the Play router has important consequences when you define several projects with routes.

Indeed, each routes file is compiled into both a router and a reverse router. The router is a regular Scala object whose qualified name is, by default, Routes. As each routes file produces a dedicated router, multiple routes files must produce routers with different names (otherwise, the names clash). You can achieve this by giving each routes file a different name: a conf/xxx.routes file produces a router in the xxx package. For our OAuth module, I suggest that you name the routes file conf/oauth.routes so that the generated router is named oauth.Routes.

Reverse routers are defined per routes file and per controller. They are objects named xxx.routes.Yyy, where xxx.Yyy is the fully qualified name of the controller (for example, a route that refers to a controllers.Application controller produces a reverse router named controllers.routes.Application). For the same routes file, if the routes refer to controllers of the same package, their reverse routers are merged. However, if different routes files define routes using controllers of the same package, the generated reverse routers of the different projects have name clashes. That's why you should always use specific package names for your subprojects that define routes. For our OAuth module, I suggest that you place the OAuth controller in a controllers.oauth package so that the reverse router is generated in the controllers.oauth.routes package. So, the routes file of the OAuth module is named shop/oauth/conf/oauth.routes and contains the following definition:

GET /callback @controllers.oauth.OAuth.callback
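The naming rules described above can be summarized by the following plain Java sketch. RouterNames is a hypothetical helper written only for illustration; it is not part of Play and simply restates the conventions given in the text as string manipulations.

```java
// Hypothetical helper that restates the naming conventions as code.
final class RouterNames {

    // "conf/oauth.routes" -> "oauth.Routes"; the default "conf/routes" -> "Routes".
    static String routerName(String routesFile) {
        String fileName = routesFile.substring(routesFile.lastIndexOf('/') + 1);
        if (fileName.equals("routes")) {
            return "Routes";
        }
        String suffix = ".routes";
        if (!fileName.endsWith(suffix)) {
            throw new IllegalArgumentException("Not a routes file: " + routesFile);
        }
        String prefix = fileName.substring(0, fileName.length() - suffix.length());
        return prefix + ".Routes";
    }

    // "controllers.oauth.OAuth" -> "controllers.oauth.routes.OAuth".
    static String reverseRouterName(String controllerClass) {
        int lastDot = controllerClass.lastIndexOf('.');
        if (lastDot < 0) {
            return "routes." + controllerClass;
        }
        String pkg = controllerClass.substring(0, lastDot);
        String simpleName = controllerClass.substring(lastDot + 1);
        return pkg + ".routes." + simpleName;
    }
}
```

Applying the helper to two routes files that reference controllers of the same package makes the clash visible: both would yield reverse routers in the same routes package, which is why a dedicated package per subproject is advisable.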

Finally, the last step consists of importing the routes of the OAuth module into the shop application. Write the following in the shop routes file:

-> /oauth oauth.Routes

This route definition tells Play to use the routes defined by the oauth.Routes router using the path prefix /oauth. All the routes of the imported router are prefixed with the given path prefix. In our case, the oauth.Routes router defines only one route, so its inclusion in the shop project is equivalent to the following single route:

GET /oauth/callback @controllers.oauth.OAuth.callback
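The effect of including a router under a path prefix can be modeled with a small plain Java sketch. RouteTable is a hypothetical, much simplified stand-in for a router (it matches paths literally and ignores parameters); it is meant to illustrate the prefixing behavior only, not Play's actual routing implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy routing table that maps "METHOD /path" to an action name.
final class RouteTable {
    private final Map<String, String> routes = new LinkedHashMap<>();

    RouteTable add(String method, String path, String action) {
        routes.put(method + " " + path, action);
        return this;
    }

    // Includes every route of another table under the given path prefix.
    RouteTable include(String prefix, RouteTable other) {
        for (Map.Entry<String, String> entry : other.routes.entrySet()) {
            String[] parts = entry.getKey().split(" ", 2);
            routes.put(parts[0] + " " + prefix + parts[1], entry.getValue());
        }
        return this;
    }

    // Returns the action name for the request, or null if no route matches.
    String resolve(String method, String path) {
        return routes.get(method + " " + path);
    }
}
```

In this model, including a table that contains GET /callback under the /oauth prefix makes the action reachable at GET /oauth/callback only, which matches the equivalent single route shown above.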

Now, you have a reusable OAuth controller that is imported in your shop application!