CHAPTER 3


Late Binding and Caching

Late binding is binding that happens at run time, and it is the essence of what makes a language dynamic. When binding happens at run time as opposed to compile time, it's usually several orders of magnitude slower. And that's why the DLR has a mechanism to cache the late-binding results. The caching mechanism is as vital as air to a framework like the DLR, and it's based on an optimization technique called polymorphic inline caching. Although caching is not a feature you would normally use directly, it is always there working for you behind the scenes. The DLR uses what are called binders to do late binding. These binders have two main responsibilities—caching and language interoperability. In this chapter, we are going to look at the caching aspect of binders. The next chapter will explore their role in language interoperability.

Binders mostly concern only language implementers. As you'll see in Chapter 5, if you are implementing just some library based on the DLR's dynamic object mechanism, not a programming language, you will have little exposure to binders. When you read through this chapter and the next, it will help your understanding of binders if you put yourself in the shoes of a language implementer.

We saw binders and late binding in Chapter 2. In that chapter, when we looked at DLR expressions, the DynamicExpression class stood out among the other expression classes because of its late-binding capability. The late-binding capability of DynamicExpression actually comes from binders. That's why, when we created an instance of DynamicExpression using the Expression.Dynamic method back in Listing 2-25, we needed to pass in a binder object. How DynamicExpression uses a binder object internally will become clear after you read through this chapter.

This chapter will discuss the general concept of binding and the two flavors of it—static binding and dynamic binding. Related to the concept of binding are things like call sites, binders, rules, and call site caching, and I'll explain these terms and provide code examples. Along the way, I'll show you how to write DLR-based code that works on both .NET 2.0 and .NET 4.0. Once we are able to run DLR-based code on .NET 2.0, I'll show you how to debug into the DLR source code. Being able to do this helped me a lot in learning and understanding how the DLR works and I'm sure it will help you too.

This chapter follows nicely from the previous chapter. It will use the concepts and knowledge you learned from your study of DLR expressions. Call sites and binders are important components of the DLR, and you'll see how the infrastructure of these components builds on DLR expressions and leverages the “code as data” concept. But first, let's begin with a look at the fundamental concept of binding.

Binding

To introduce binding, I'll start with the static method invocation we are all familiar with in C#. The idea is to use the familiarity we have with C# to provide a context for easing our way into the concepts of call sites and bindings.

In a very broad sense, binding means associating or linking one thing with another. In programming, binding is the association between names (i.e., identifiers) and the targets they refer to. For example, in the code snippet below, String, bob, lowercaseBob, and ToLower are all names. The word String is the name of a class; the words bob and lowercaseBob are variable names. The word ToLower is the name of a method.

```csharp
String bob = "Bob";
String lowercaseBob = bob.ToLower();
```

Because binding is the association between names and the targets they refer to, it is also often called name binding. There are many approaches for determining how binding is done. We can categorize those different approaches using two aspects of binding—scope and time, as shown in Table 3-1. In terms of scope, binding can be lexical or dynamic. Lexical scoping is also called static scoping. We saw these in Chapter 2 when we looked at the DLR Expression scoping rules. To recap, scopes provide contexts for binding names. A name might refer to different objects, variables, classes, or other things when it occurs in different scopes.

If you think of scope as the spatial dimension of bindings, then the time aspect is the temporal dimension. In terms of time, binding can be early or late. As noted earlier, early binding is also called compile-time binding, because the resolution of a name to its target happens at compile time. That's when the compiler determines what a name refers to. In contrast, late binding is often called run-time binding. In this case, binding of a name to its target happens at run time. As we discussed in Chapter 1, late binding is a key characteristic of dynamic languages.

One thing I want to emphasize in Table 3-1 is that the two aspects are largely orthogonal, yet they aren't completely uncorrelated. They are orthogonal in the sense that lexical scoping rules can be applied to bind names at either compile time or run time. It doesn't matter when (compile time or run time) or by whom (compiler or language runtime) those rules are applied. The rules can be the same, and the results of name binding can be the same, regardless of the when and the who.

However, though the scope and time aspects are largely orthogonal, there is a small correlation between them, and that is the cell marked with X in Table 3-1. If a language uses dynamic scoping rules for name binding, those rules, due to their dynamic nature, require some run-time information when they are applied to bind names. Because of that, it's not possible to design a compiler that applies those rules at compile time. That's why the cell is marked with X: to indicate that no language falls into that category.

Table 3-1. Categorization of Binding Approaches by Scope and Time

| Scope \ Time | Compile time (early) | Run time (late) |
|---|---|---|
| Lexical (static) | C# | IronPython |
| Dynamic | X | Lisp |
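The X cell is easier to appreciate with a toy model. C# itself is lexically scoped, so the sketch below only simulates dynamic scoping with an explicit stack of variable frames; the DynamicScope class and its members are hypothetical names invented for this illustration. The free name x in ShowX is resolved by searching the frames of whichever callers are active at run time, so the same occurrence of x binds to different variables depending on who called ShowX. A compiler looking at ShowX alone has no way of knowing which binding applies.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical toy model: simulates dynamic scoping with an explicit frame stack.
public static class DynamicScope
{
    // Each frame maps names to values; the most recent call's frame is on top.
    private static readonly Stack<Dictionary<string, int>> Frames =
        new Stack<Dictionary<string, int>>();

    // Resolve a name by searching from the innermost (most recent) frame outward.
    public static int Lookup(string name)
    {
        foreach (var frame in Frames)   // Stack<T> enumerates from top to bottom.
        {
            if (frame.TryGetValue(name, out int value))
                return value;
        }
        throw new InvalidOperationException("Unbound name: " + name);
    }

    // Run 'body' inside a new frame that defines the given variables.
    public static int WithFrame(Dictionary<string, int> frame, Func<int> body)
    {
        Frames.Push(frame);
        try { return body(); }
        finally { Frames.Pop(); }
    }

    // ShowX refers to a free name x; which x it sees depends on its caller.
    public static int ShowX() => Lookup("x");

    public static int CallerA() =>
        WithFrame(new Dictionary<string, int> { ["x"] = 1 }, ShowX);

    public static int CallerB() =>
        WithFrame(new Dictionary<string, int> { ["x"] = 2 }, ShowX);

    public static void Main()
    {
        Console.WriteLine(CallerA());
        Console.WriteLine(CallerB());
    }
}
```

Running Main prints 1 and then 2. Under lexical scoping, by contrast, x in ShowX would be resolved from the program text surrounding ShowX's definition, which a compiler can do ahead of time.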

Call Sites and Early Binding

One concept related to binding is that of the call site, which refers to the location in your code that invokes a method. How the binding and the call site are related is best explained with an example. The following C# code snippet should be familiar. I used it to explain binding and I use it again to explain call sites.

```csharp
String bob = "Bob";
String lowercaseBob = bob.ToLower();
```

What we are interested in here is the method invocation in the second line of the code. This is the place (the site) that calls the method. So we say it's a call site.

The second line of code has a call site that calls the ToLower method of the String class. The word ToLower here is just a name. Something needs to link that name to the real ToLower method of the String class. In this case, that something is the C# compiler. This is the name binding we talked about in the previous section. In the case of a static language like C#, the compiler does the name binding. The linkage between the call site and the ToLower method is resolved at compile time and burned into the generated IL. Listing 3-1 shows what that IL code looks like. Don't be scared away by the code listing. Most people, including me, don't write code at the IL level and therefore are not familiar with IL code. The IL code shown here is not much and should be straightforward to understand. You don't need any knowledge or experience with IL to follow along.

Listing 3-1. IL Code That Shows Compile Time Name Binding

```
.locals init (
    [0] string bob,            // local variable 0 is bob.
    [1] string lowercaseBob)   // local variable 1 is lowercaseBob.
ldstr "Bob"
stloc.0                        // sets local variable 0.
ldloc.0                        // loads local variable 0.
callvirt instance string [mscorlib]System.String::ToLower()
stloc.1                        // sets local variable 1.
```

The line most important to our current discussion is the callvirt instruction, which shows that the name ToLower in the C# code is bound to the ToLower method of the System.String class in the mscorlib.dll assembly. The binding is done at compile time and is burned into IL.

Call Sites and Late Binding

The last section showed a simple C# code snippet and its early binding behavior when compiled into IL. Now let's look at the other half of the subject—call sites and late binding. Here's a C# code snippet that has a call site and does late binding:

```csharp
dynamic bob = "Bob";
String lowercaseBob = bob.ToLower();
```

There is only one word that's different between this code snippet and the previous one, and that's the dynamic keyword on the first line. Instead of declaring the type of variable bob to be String, the code here declares the type to be dynamic. Because of that, the compiled code is very different. The second line of the code still has a call site that calls the ToLower method. But it also has another call site that converts the result of ToLower to a String object. The conversion is necessary because the type of variable bob is dynamic and there is nothing to tell the C# compiler what the return type of the ToLower method is. The C# compiler can't even be sure that the ToLower method is defined for the variable bob.

Without knowing the type of variable bob, what does the C# compiler do in compiling the code snippet above? Since it does not know the type of variable bob, it can't simply bind the name ToLower to the ToLower method of the String class; it can only do that when it knows that the type of bob is String. So it compiles the code into something that has objects that know how to do the binding at run time. Those objects are called binders. Methods of those binders have the logic for carrying out the necessary late binding given the run-time type of the variable bob. Of course, for this simple code snippet, we know by looking at the code that the run-time type of variable bob is System.String. Unlike us, the binder in this case only knows at run time that the type of variable bob is System.String. When the binder gets that type information, it starts the binding by finding out whether System.String has a ToLower method that does not take any arguments. If the binder finds such a method, the binding is successful and program execution continues. Otherwise, the binding fails and an exception is thrown.
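To make the binder's job more concrete, here is a rough approximation of the check just described, written with ordinary reflection. It is only an analogy under simplifying assumptions: the real C# runtime binder also handles overload resolution, conversions, and caching, and the LateBoundToLower class is a hypothetical name, not part of the DLR or the C# runtime.

```csharp
using System;
using System.Reflection;

public static class LateBoundToLower
{
    // Hypothetical helper: binds a method name against the run-time type of
    // 'target' and invokes it, mimicking the decision a binder makes at run time.
    public static object Invoke(object target, string methodName)
    {
        Type runtimeType = target.GetType();   // only known at run time

        // Look for a public instance method with this name and no parameters.
        MethodInfo method = runtimeType.GetMethod(
            methodName,
            BindingFlags.Public | BindingFlags.Instance,
            null,             // default reflection binder
            Type.EmptyTypes,  // no parameters
            null);

        if (method == null)
        {
            // Binding failed: no such method on the run-time type.
            throw new MissingMethodException(runtimeType.FullName, methodName);
        }

        // Binding succeeded: execution continues by calling the bound method.
        return method.Invoke(target, null);
    }

    public static void Main()
    {
        object bob = "Bob";
        Console.WriteLine(Invoke(bob, "ToLower"));

        try { Invoke(42, "ToLower"); }
        catch (MissingMethodException e) { Console.WriteLine(e.Message); }
    }
}
```

The first call binds because System.String has a public, parameterless ToLower method; the second fails because System.Int32 has none, which corresponds to the exception case described above.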

It is too abstract to just mention the binders and describe what they do at a conceptual level. So the next few sections will look at the DLR's implementations of the binders and show some code examples of how those binders perform late binding.

DLR Binders

The DLR defines several classes for representing different kinds of binders. Figure 3-1 shows the class hierarchy of the DLR binder classes. The base DLR class that represents binders is CallSiteBinder; all other binder classes derive directly or indirectly from it. The main responsibility of the CallSiteBinder class, as we will soon examine in detail, is caching late binding results. Caching is what boosts the performance of DLR-based languages and libraries. It is one of the most important features that make the DLR a practical platform for running dynamic language code.

Figure 3-1. Class hierarchy of DLR binder classes

All the subclasses of CallSiteBinder shown in Figure 3-1 are designed for the purpose of language interoperability. As I mentioned earlier, DLR binders have two main responsibilities: caching and language interoperability. The class hierarchy in Figure 3-1 shows good software design on the DLR team's part, which separated those two binder responsibilities into separate classes. Because of that good design, we can use only the caching capability of DLR binders if we like, and that's what we'll do in this chapter. I will focus this discussion on the caching mechanism of DLR binders and hence on the CallSiteBinder class only. None of the code examples in this chapter will involve anything related to the subclasses of CallSiteBinder. That's what we'll cover in the next chapter, where we'll take a deep dive into those subclasses and see how they enable different languages to interoperate with one another.

Set Up Code Examples

The setup of this chapter's code examples is the same as that of the other chapters, except that I want to use this chapter's examples to show you how to (a) develop DLR-based code that targets both .NET 2.0 and .NET 4.0, and (b) debug the DLR source code. I didn't call out .NET 3.5 explicitly here because if your code runs on .NET 2.0, it should be straightforward to make it run on .NET 3.5. In principle, you can apply the steps I will be showing here to the code examples of other chapters and make those code examples run on .NET 2.0.

Making a Debug Build of the DLR for .NET 2.0

Normally we write DLR-based code that targets .NET 2.0 because there's a business need for doing so. Maybe we are developing a library and we want the library to be accessible to developers who remain on the .NET 2.0 platform. Another reason for running DLR-based code on .NET 2.0 is that it allows us to debug into the DLR source code. This matters because in .NET 4.0, a great portion of the DLR source code is packaged into the System.Core.dll assembly. I searched the Web and there does not seem to be a debug build of that assembly. So the solution I came up with is to develop the code examples in such a way that they run on both .NET 2.0 and .NET 4.0. That way, when I need to debug into the DLR source code, I'll run the examples that target .NET 2.0 in debug mode. Here are the steps to follow if you want to set up the environment so you can debug into the DLR source code.

- Download the DLR source code from the DLR CodePlex website. Unzip the downloaded file to a folder of your choice. I'll assume the folder you choose is C:\Codeplex-DLR-1.0.

- Open the solution file C:\Codeplex-DLR-1.0\src\Codeplex-DLR-VSExpress.sln in Visual Studio C# 2010 Express. The solution file is for Visual Studio 2008. When you open it in Visual Studio C# 2010 Express, a wizard dialog will pop up and take you through the process of converting the solution file to the new Visual Studio 2010 format.

- After the conversion is done, make sure all the projects' configuration is set to Debug. That way, when you build the solution, Visual Studio will generate debug builds of those projects. When you build the whole solution, you will get some compilation errors for the Sympl35 and sympl35cponly projects. Those projects are for a sample DLR-based language called Sympl. You can ignore those errors.

- Copy the files in the C:\Codeplex-DLR-1.0\Bin\Debug folder to C:\ProDLR\lib\DLR\20\debug. The files are the binaries generated by the previous step.

Developing for Both .NET 2.0 and .NET 4.0

If you follow the steps outlined in the previous section, you should be able to open this chapter's solution file C:\ProDLR\src\Examples\Chapter3\Chapter3.sln in Visual Studio C# 2010 Express and build the code examples. The solution contains two projects: CallSiteBinderExamples and CallSiteBinderExamples20. The CallSiteBinderExamples project requires .NET 4.0. The CallSiteBinderExamples20 project requires .NET 2.0. Here are the important things to note about how the projects are configured to target different .NET versions.

First, notice that the CallSiteBinderExamples project does not reference any additional .NET assemblies. This is because the System.Core.dll assembly is implicitly referenced by default. And because the part of the DLR used in the CallSiteBinderExamples project is already packaged into System.Core.dll, the project needs no other references. On the other hand, the CallSiteBinderExamples20 project references some of the assemblies you built in the previous section. Those are the debug versions of the DLR assemblies for .NET 2.0.

Next, notice that all the C# source files in CallSiteBinderExamples20 are links to corresponding files in CallSiteBinderExamples. This way, whenever we change a file in one project, the other project will automatically pick up the changes.

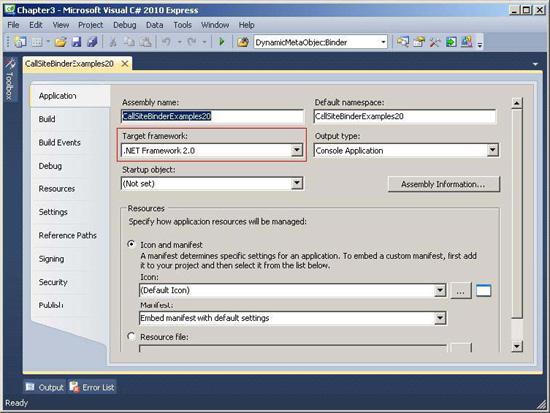

Now look at the properties of the CallSiteBinderExamples20 project. If you right-click on the CallSiteBinderExamples20 project and select Properties in the context menu, you'll see a screen that looks like Figure 3-2. To make a project target .NET 2.0 as the runtime platform, you need to set the “Target framework” dropdown option to “.NET Framework 2.0,” as highlighted with a red box in Figure 3-2.

Figure 3-2. Setting the target .NET version of a project

The last thing to take note of is a conditional compilation flag. Because CallSiteBinderExamples and CallSiteBinderExamples20 share the C# source files, we need a flag to compile different parts of the code depending on the target .NET version. If you select the Build tab on the left of the screen shown in Figure 3-2, you'll see a screen that says “Conditional compilation symbols” near the top. For the CallSiteBinderExamples20 project, the conditional compilation symbol “CLR2” is defined. For the CallSiteBinderExamples project, “CLR2” is not defined.

So those are the things I did in order to make the chapter's code examples run on both .NET 2.0 and .NET 4.0. With the environment setup out of the way, let's now look at the CallSiteBinder class, the focus of this chapter.

The CallSiteBinder Class

The C# language runtime has classes that derive from CallSiteBinder. Those classes implement the run-time late binding logic C# needs. Instances of those classes are binders that know how to do late binding for operations such as method invocation. To show you the essence of binders, let's imagine that, for some reason, you want to redefine C#'s late-binding behavior so that all dynamic code like the bob.ToLower() we saw earlier will return integer 3.

Listing 3-2 shows the binder class with the needed binding logic. The class derives from CallSiteBinder and overrides CallSiteBinder's Bind method. You'll see later that, more often than not, you would derive from the class DynamicMetaObjectBinder or one of its derivatives rather than CallSiteBinder when implementing your own late-binding logic. For now, let's stay the course and focus on the code example.

The Bind method is where the binding logic resides. In the DLR, the result of all late binding is an instance of the Expression class. That's why the return type of the Bind method is Expression. It's also a sign indicating that DLR Expression is the backbone of the DLR. As the requirement demands, the Bind method in Listing 3-2 returns a constant expression whose value is integer 3. It doesn't matter what is in the args array or the parameters collection. It doesn't matter whether the dynamic code is a method invocation or a property setter/getter invocation. Regardless of all of these, ConstantBinder will always return integer 3 as the binding result.

Listing 3-2. ConstantBinder.cs

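```csharp
using System.Collections.ObjectModel;
using System.Linq.Expressions;          // Microsoft.Scripting.Ast when targeting .NET 2.0
using System.Runtime.CompilerServices;  // CallSiteBinder

public class ConstantBinder : CallSiteBinder
{
    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        // Ignore the arguments and jump to returnLabel carrying the constant 3.
        return Expression.Return(
            returnLabel,
            Expression.Constant(3)
        );
    }
}
```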

Although to meet the stated requirement the Bind method in ConstantBinder disregards the args and parameters arguments, it can't disregard the returnLabel argument. If you recall our discussion about GotoExpression in Chapter 2, you know returnLabel, as an instance of LabelTarget, marks a location in code that we can jump to. In this case, the returnLabel argument marks the location at which the program should continue its execution after the late binding finishes. Figure 3-3 shows a pictorial view of this.

Figure 3-3. Return label and program flow

Figure 3-3 shows that somewhere during program execution, a call to constantBinder.Bind is made. Here constantBinder is an instance of the ConstantBinder class. The figure shows that after the Bind method finishes, the program is supposed to continue its execution at the location marked by returnLabel. The trick here is that program execution will not jump to the location marked by returnLabel unless we tell it to do so. That's why in Listing 3-2, the code calls the Expression.Return factory method and passes it returnLabel. That creates an instance of GotoExpression that jumps to the location marked by returnLabel. If the code does not do this jump, the Bind method, once called, will be called again and again endlessly.
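The jump itself can be tried with an ordinary expression tree, outside any call site. In the sketch below, which is an analogy rather than the DLR's internal code, a label of type int sits at the end of a block, and Expression.Return jumps to it carrying the value 3. The statement between the jump and the label is skipped, and the value carried by the jump becomes the lambda's result. The ReturnLabelDemo name is hypothetical.

```csharp
using System;
using System.Linq.Expressions;

public static class ReturnLabelDemo
{
    public static int Run()
    {
        // A label of type int marks where execution continues.
        LabelTarget returnLabel = Expression.Label(typeof(int), "returnLabel");

        Expression body = Expression.Block(
            // Jump to returnLabel, carrying the value 3 (like ConstantBinder's result).
            Expression.Return(returnLabel, Expression.Constant(3)),
            // Never reached: the jump above skips this call.
            Expression.Call(
                typeof(Console).GetMethod("WriteLine", new[] { typeof(string) }),
                Expression.Constant("unreachable")),
            // The label itself; 0 is the value used only if control falls through to it.
            Expression.Label(returnLabel, Expression.Constant(0)));

        Func<int> f = Expression.Lambda<Func<int>>(body).Compile();
        return f();
    }

    public static void Main() => Console.WriteLine(Run());
}
```

Run returns 3 and "unreachable" is never printed. If the Return were removed, control would fall through to the label and the result would be the label's default value, 0.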

DLR Call Sites

The last section showed the code for a binder that returns a constant value for all late binding operations. Earlier I explained the relation between binding and call site. Basically, a call site is a place in code that invokes an operation that needs to be bound. So, in order to use the ConstantBinder class developed in the previous section, we need a call site that invokes some late-bound operation. Because the operation is late bound, the DLR will need us to pass it a binder that knows how to do the late binding. And the binder we will use in this case is an instance of the ConstantBinder class. With this high-level understanding of all the pieces involved, let's see how they are put together in code.

Listing 3-3 shows the code that uses ConstantBinder to perform late binding. The code first creates an instance of ConstantBinder called binder. Then it creates an instance of CallSite<T> by calling CallSite<T>.Create. When calling CallSite<T>.Create, the code passes binder as the input parameter. This is how the code tells the DLR which binder to use for performing late binding. At this point, the example code has the variable site that represents the call site and the variable binder that contains the late-binding logic. The variable site knows to delegate to binder when it comes time to do late binding. And that time comes when the example code calls the Target delegate on the variable site. After the late binding is done, the Target delegate returns and the result of late binding is assigned to the variable result. If you run the code in Listing 3-3, you'll see the text “Result is 3” printed on the screen.

```csharp
private static void RunConstantBinderExample()
{
    CallSiteBinder binder = new ConstantBinder();
    CallSite<Func<CallSite, object, object, int>> site =
        CallSite<Func<CallSite, object, object, int>>.Create(binder);

    //This will invoke the binder to do the binding.
    int result = site.Target(site, 5, 6);
    Console.WriteLine("Result is {0}", result);
}
```

The generic type T in CallSite<T> deserves some explanation. Recall that the main purpose of a DLR call site is to invoke some late-bound operation. The invocation of the late-bound operation is triggered by calling the Target delegate on a call site. And the type of the Target delegate is the generic type parameter T. Because Target represents some callable operation, T has to be a delegate type. Besides that, the DLR further requires the Target delegate's first parameter to be of type CallSite. In summary, the generic type parameter T can't be just any type. It has to meet the following two requirements:

- It must be a delegate type.
- The type of the delegate's first parameter must be CallSite.

In our example code, T is Func<CallSite, object, object, int>. That means the late-bound operation takes three input parameters and returns a value of type int. The types of the three parameters are CallSite, object, and object, respectively. Because T is the type of the Target delegate, when the code in Listing 3-3 calls Target on the variable site, the arguments it passes must match the signature of the Target delegate.
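One consequence of this signature requirement is easy to check: the leading CallSite argument is consumed by the DLR, so Bind receives only the remaining arguments, and its parameters collection describes those same arguments. The sketch below uses a hypothetical ArgCountBinder that records what Bind is handed and, like ConstantBinder, returns an unconditional result (here, the number of arguments it received).

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Hypothetical binder: records what Bind receives and returns args.Length.
public class ArgCountBinder : CallSiteBinder
{
    public int LastArgCount;
    public int LastParameterCount;

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        LastArgCount = args.Length;
        LastParameterCount = parameters.Count;

        // Unconditional result, like ConstantBinder: the number of arguments.
        return Expression.Return(returnLabel, Expression.Constant(args.Length));
    }
}

public static class ArgCountDemo
{
    public static void Main()
    {
        var binder = new ArgCountBinder();
        var site = CallSite<Func<CallSite, object, object, int>>.Create(binder);

        int result = site.Target(site, 5, 6);
        Console.WriteLine("{0} {1} {2}",
            result, binder.LastArgCount, binder.LastParameterCount);
    }
}
```

With the delegate type from Listing 3-3, all three numbers should be 2 rather than 3, because the CallSite parameter is not passed on to Bind.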

Binding Restrictions and Rules

We saw in Listing 3-2 that a binding result is expressed in the form of an expression that represents the number 3. However, the example we saw there is a special case of a more general way to represent binding results. Recall that the goal of the code in Listing 3-2 is to have a binder whose binding logic will make all dynamic (i.e., late-bound) code return integer 3. In that requirement statement, one not-so-obvious condition is the word “all.” We can imagine a similar requirement for a binder that makes dynamic code return 3 only when, say, the type of some xyz variable is System.Int32.

Having a binding result that is valid without any condition is a special case of having a binding result that is valid only under certain conditions. It is a special case because we can regard it as having a condition that always evaluates to true. This section extends the example in Listing 3-2 and handles the generic case. Listing 3-4 shows the new code example, the ConstantWithRuleBinder class. The binder class implements the binding logic that returns integer 10 only when the value of the first input parameter is greater than or equal to 5; otherwise, it returns integer 1.

The example code gets the value of the first parameter from the args array (line 8). For each input parameter, in addition to receiving its value, the Bind method also receives a representation of that parameter in the form of a ParameterExpression object. The example code gets the ParameterExpression object of the first input parameter from the parameters collection (line 9). The first input parameter's value, firstParameterValue, and its ParameterExpression object, firstParameterExpression, are used to construct the expression that represents the binding result according to the requirement we'd like to meet.

In this example, the binding result is not just expressed in the form of any expression. It is expressed in the form of a conditional expression. The conditional expression that represents the late binding result returned by a binder is called a rule. A rule consists of two parts—restrictions and the binding result. The restrictions are the conditions under which the binding result is valid. The need for restrictions has to do with something called call site caching, which I'll explain in the next section.

In Listing 3-4, for the case where the value of the first parameter is greater than or equal to 5, the example code calls Expression.GreaterThanOrEqual to create the restrictions (line 14). It creates the binding result (line 17) the same way as the example code in Listing 3-2. It calls Expression.IfThen to combine the restrictions and binding result into a rule (line 13). The rule is the final expression that the Bind method returns.

Similarly, for the case where the value of the first parameter is less than 5, the example code calls Expression.LessThan to create the restrictions (line 25). It also calls Expression.IfThen to combine the restrictions and binding result (line 24).

Listing 3-4. ConstantWithRuleBinder.cs

```csharp
 1) public class ConstantWithRuleBinder : CallSiteBinder
 2) {
 3)     public override Expression Bind(object[] args,
 4)         ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
 5)     {
 6)         Console.WriteLine("cache miss"); //This will be explained later in the chapter.
 7)
 8)         int firstParameterValue = (int) args[0];
 9)         ParameterExpression firstParameterExpression = parameters.First();
10)
11)         if (firstParameterValue >= 5)
12)         {
13)             return Expression.IfThen( //rule
14)                 Expression.GreaterThanOrEqual( //restrictions
15)                     firstParameterExpression,
16)                     Expression.Constant(5)),
17)                 Expression.Return( //binding result
18)                     returnLabel,
19)                     Expression.Constant(10))
20)             );
21)         }
22)         else
23)         {
24)             return Expression.IfThen( //rule
25)                 Expression.LessThan( //restrictions
26)                     firstParameterExpression,
27)                     Expression.Constant(5)),
28)                 Expression.Return( //binding result
29)                     returnLabel,
30)                     Expression.Constant(1))
31)             );
32)         }
33)     }
34) }
```
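To see what a restriction does in isolation, the sketch below wraps a rule shaped like the first one in ConstantWithRuleBinder inside an ordinary lambda. The fallback value -1 at the end of the block is an assumption made only for this illustration; it marks the point where the DLR would instead fall through to rebinding. When the restriction holds, the Return jumps past the fallback; when it fails, IfThen does nothing and execution falls through. RuleDemo is a hypothetical name.

```csharp
using System;
using System.Linq.Expressions;

public static class RuleDemo
{
    public static Func<int, int> BuildRule()
    {
        ParameterExpression arg0 = Expression.Parameter(typeof(int), "arg0");
        LabelTarget returnLabel = Expression.Label(typeof(int), "return");

        // Same shape as ConstantWithRuleBinder's rule for values >= 5.
        Expression rule = Expression.IfThen(
            Expression.GreaterThanOrEqual(arg0, Expression.Constant(5)),  // restrictions
            Expression.Return(returnLabel, Expression.Constant(10)));      // binding result

        Expression body = Expression.Block(
            rule,
            // Hypothetical stand-in for "rule did not apply, rebind needed": -1.
            Expression.Label(returnLabel, Expression.Constant(-1)));

        return Expression.Lambda<Func<int, int>>(body, arg0).Compile();
    }

    public static void Main()
    {
        Func<int, int> f = BuildRule();
        Console.WriteLine(f(8)); // restriction holds: the binding result is valid
        Console.WriteLine(f(3)); // restriction fails: falls through to the fallback
    }
}
```

Here f(8) yields 10 and f(3) yields -1, which is the stand-in for the situation in which a call site would have to ask its binder for a new rule.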

Now let's see what happens when we use ConstantWithRuleBinder to do late binding. Listing 3-5 shows the client code that uses ConstantWithRuleBinder. The code creates an instance of ConstantWithRuleBinder called binder. It creates a call site object and assigns the call site object to the variable site, then it calls the Target delegate on the variable site. All of this is similar to what we saw in Listing 3-3. The main difference is that in this example the client code calls the Target delegate on the object site twice. The first call to the Target delegate has integer 8 as the value of the second input parameter. That value becomes the value of the first input parameter when it gets to the Bind method of the binder object. Because the value is greater than 5, the result of the late binding is 10. The second call to the Target delegate has integer 3 as the value of the second input parameter. Because of that, the result of the late binding is 1.

Listing 3-5. Program.cs

```csharp
private static void RunConstantWithRuleBinderExample()
{
    CallSiteBinder binder = new ConstantWithRuleBinder();
    CallSite<Func<CallSite, int, int>> site =
        CallSite<Func<CallSite, int, int>>.Create(binder);

    int result = site.Target(site, 8);
    Console.WriteLine("Late binding result is {0}", result);

    result = site.Target(site, 3);
    Console.WriteLine("Late binding result is {0}", result);
}
```

If you run the code in Listing 3-5, you should see output like the following:

```
cache miss
Late binding result is 10
cache miss
Late binding result is 1
```
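Each "cache miss" line corresponds to one call to Bind. A variation on Listing 3-5 makes this visible by counting Bind calls over a longer sequence of inputs. CountingRuleBinder below is a hypothetical copy of ConstantWithRuleBinder with a counter in place of the Console.WriteLine; how the DLR organizes its caches internally is an implementation detail, so the sketch only observes how often Bind runs.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Hypothetical variant of ConstantWithRuleBinder that counts how often Bind runs.
public class CountingRuleBinder : CallSiteBinder
{
    public int BindCount;

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        BindCount++;   // every call to Bind is a cache miss
        int value = (int)args[0];
        ParameterExpression arg0 = parameters.First();

        // Restrictions and result chosen exactly as in ConstantWithRuleBinder.
        Expression restrictions = value >= 5
            ? Expression.GreaterThanOrEqual(arg0, Expression.Constant(5))
            : Expression.LessThan(arg0, Expression.Constant(5));
        int constant = value >= 5 ? 10 : 1;

        return Expression.IfThen(restrictions,
            Expression.Return(returnLabel, Expression.Constant(constant)));
    }
}

public static class CachingDemo
{
    public static void Main()
    {
        var binder = new CountingRuleBinder();
        var site = CallSite<Func<CallSite, int, int>>.Create(binder);

        foreach (int input in new[] { 8, 8, 9, 3, 3, 8 })
        {
            int result = site.Target(site, input);
            Console.WriteLine("input {0} -> {1}, Bind calls so far: {2}",
                input, result, binder.BindCount);
        }
    }
}
```

The count should go to 1 on the first 8, stay there for the second 8 and for 9 (both satisfy the cached rule for values of 5 or more), go to 2 on the first 3, and stay at 2 afterward, since every later argument satisfies one of the two rules already cached for the site.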

Checking Binding Rules in Debug Mode

In the previous examples, we saw how binders and call sites work together to perform late binding. Next I want to show you how the rules that represent binding results are cached. But before I get into that, I'd like to show you a useful debugging technique that helped me a lot in learning the DLR. The Visual Studio debugger comes with a tool called Text Visualizer that you can use to visualize expression trees. In this section, we'll debug into the DLR source code and use the Text Visualizer to view the binding rules returned by the code example in Listing 3-5.

If you open the ConstantWithRuleBinder.cs file in this chapter's code download, you'll see the following code snippet:

```csharp
#if CLR2
using Microsoft.Scripting.Ast;
#else
using System.Linq.Expressions;
#endif
```

This code snippet uses the conditional compilation symbol CLR2 that we saw in the “Developing for Both .NET 2.0 and .NET 4.0” section. The symbol is needed because when we compile the example code to run on .NET 2.0, some DLR classes used in the example code are in the Microsoft.Scripting.Ast namespace. However, those DLR classes are in the System.Linq.Expressions namespace when we compile the example code to run on .NET 4.0. The namespaces differ because in .NET 4.0, some DLR code is put into namespaces like System.Linq.Expressions and packaged into the System.Core.dll assembly.

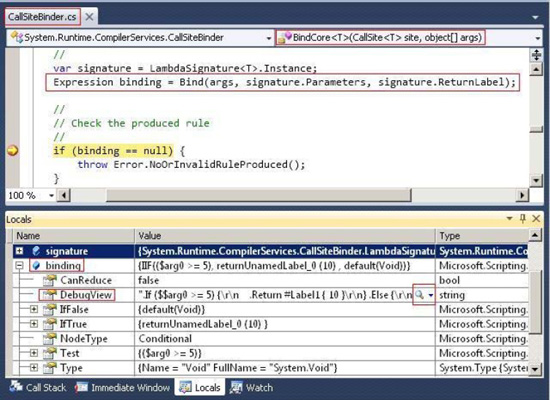

If you run the code in Listing 3-5 from the CallSiteBinderExamples20 project in debug mode, you should be able to debug into the DLR source code. Figure 3-4 shows the screen capture of a debug session I ran. In this session, I set a breakpoint at the line right after the call to the Bind method. The breakpoint is in the BindCore<T> method of the CallSiteBinder class, which means we have debugged into the DLR source code. The value returned by the call to the Bind method is assigned to the binding variable (the line of code is marked with a red box in Figure 3-4), and it is essentially the rule that represents the late-binding result.

Figure 3-4. Debug view of running the code in Listing 3-5 in debug mode.

If we expand the binding variable in the bottom half of Figure 3-4 and look at its contents, we can see that under the binding variable, there is a DebugView entry. To the right of the DebugView entry, you'll see a magnifier icon with a down arrow. If you right-click on the arrow and select Text Visualizer, a dialog window pops up that will display the textual visualization of the binding rule that ConstantWithRuleBinder returns. Listing 3-6 shows the textual visualization of the binding rule when the first argument is greater than or equal to 5.

Listing 3-6. Textual Visualization When the First Argument Is Greater Than or Equal to 5

```
.If ($$arg0 >= 5) {
    .Return #Label1 { 10 }
} .Else {
    .Default(System.Void)
}
```

The rule shown in Listing 3-6 basically says “if the first argument (arg0) is greater than or equal to 5, return 10; otherwise, do nothing (a void default value), which signals that a rebinding is required.” The rule matches the code logic in the ConstantWithRuleBinder class. Now, if we let the debug session continue from the breakpoint, the program execution will stop at the breakpoint again because, in Listing 3-5, the code calls the Target delegate to do late binding again with a different input parameter. This time, the Text Visualizer displays the textual visualization of the binding rule as Listing 3-7 shows. And you can see that the rule shown in Listing 3-7 matches the code logic we have in the ConstantWithRuleBinder class for the case where the first input argument is less than 5.

Listing 3-7. Textual Visualization When the First Argument Is Less Than 5

```
.If ($$arg0 < 5) {
    .Return #Label1 { 1 }
} .Else {
    .Default(System.Void)
}
```

In summary, the code in Listing 3-5 calls the Target delegate on the call site twice, and we saw the binding rules for those two late-binding operations in Text Visualizer. When the code in Listing 3-5 calls the Target delegate for the first time, a late-binding process takes place and the rule in Listing 3-6 is produced and cached. When the code calls the Target delegate for the second time, another late-binding process takes place, because the cached rule returns a value that indicates the need for a rebinding. So the Bind method of ConstantWithRuleBinder is called again, and the rule in Listing 3-7 is produced and cached. At this point, you may notice that the two rules in Listing 3-6 and Listing 3-7 can be combined into a single rule like this:

```
.If ($$arg0 >= 5) {
    .Return #Label1 { 10 }
} .Else {
    .Return #Label1 { 1 }
}
```

The new rule is more economical and efficient because it requires only one late-binding operation for the code in Listing 3-5. Here's why. When the code in Listing 3-5 calls the Target delegate for the first time, a late-binding process takes place. If the new rule is produced and cached as the result of that late binding, then the second time the Target delegate is called, the new rule returns integer 1 instead of a value that indicates the need for a rebinding. To make the binder produce the new binding rule, change the implementation of ConstantWithRuleBinder in Listing 3-4 to the code in Listing 3-8, which calls the Expression.IfThenElse factory method to create a ConditionalExpression instance whose if-branch returns integer 10 and whose else-branch returns integer 1.

Listing 3-8. A More Efficient Implementation of the Binding Logic

```csharp
public override Expression Bind(object[] args,
    ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
{
    Console.WriteLine("cache miss");
    ParameterExpression firstParameterExpression = parameters[0];
    int firstParameterValue = (int)args[0];
    return Expression.IfThenElse( //rule
        Expression.GreaterThanOrEqual( //restrictions
            firstParameterExpression,
            Expression.Constant(5)),
        Expression.Return( //binding result
            returnLabel,
            Expression.Constant(10)),
        Expression.Return( //binding result
            returnLabel,
            Expression.Constant(1))
    );
}
```
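Listing 3-8 shows only the Bind method. To try it end to end, the method has to live in a CallSiteBinder subclass. The following is a minimal self-contained sketch, assuming .NET 4.0 or later and the class name ConstantWithRuleBinder from Listing 3-4. The BindCount field and the Listing38Demo class are hypothetical additions, not part of the original code; they let you check the number of cache misses without reading the console. The demo calls the site with 8 and then 3, the sequence described for Listing 3-5.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Sketch: the Bind method of Listing 3-8 wrapped in a complete binder class.
public class ConstantWithRuleBinder : CallSiteBinder
{
    // Hypothetical counter (not in the original listing): one increment per cache miss.
    public int BindCount;

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        BindCount++;
        Console.WriteLine("cache miss");
        ParameterExpression firstParameterExpression = parameters[0];
        // A single rule covers both cases, so it never falls through to a rebinding.
        return Expression.IfThenElse(
            Expression.GreaterThanOrEqual(firstParameterExpression, Expression.Constant(5)),
            Expression.Return(returnLabel, Expression.Constant(10)),
            Expression.Return(returnLabel, Expression.Constant(1)));
    }
}

// Hypothetical driver that mirrors the two calls made in Listing 3-5.
public static class Listing38Demo
{
    // Returns { result for 8, result for 3, number of Bind calls }.
    public static int[] Run()
    {
        var binder = new ConstantWithRuleBinder();
        var site = CallSite<Func<CallSite, int, int>>.Create(binder);
        int first = site.Target(site, 8);   // nothing cached yet: Bind runs
        int second = site.Target(site, 3);  // the cached rule's else-branch answers
        return new[] { first, second, binder.BindCount };
    }
}
```

With the combined rule, the second call is answered by the rule already assigned to the Target delegate, so Bind should run only once.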

Before we make the code change in Listing 3-8, we see two lines of “cache miss” printed on the screen when we run the example. After the code change in Listing 3-8, when we run the example we see only one line of “cache miss.” So what is “cache miss” about? That's the topic of the next section.

Caching

Late binding is expensive because it needs to make several method calls to get the Expression instance that represents the rule, i.e., restrictions plus binding result. Then the rule needs to be interpreted or compiled into executable IL code by the DLR. The whole late-binding process usually makes the code run much slower than early-bound code. Because of that, caching the result of late binding is crucial to the success of a framework like the DLR. The previous section previewed the DLR's caching functionality but didn't explain the details. Now we'll look at this important feature of the DLR and use examples to show how the caching works.

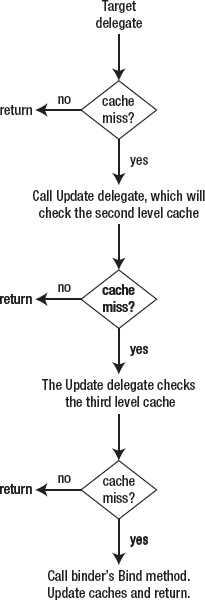

Three Cache Levels

There is not just one cache for storing late-binding results, but three. The Target delegate we saw earlier is a cache. There's also a cache maintained in a call site, and another cache in a binder. Figure 3-5 illustrates how they work together in the late-binding process.

Figure 3-5. The three cache levels and the late-binding process

When client code invokes the Target delegate on a call site for the first time to perform late binding, the DLR first checks whether any rule in the three caches can serve as the result of the late binding. Because this is the first invocation of the Target delegate, the Target delegate, as the first-level (L0) cache, doesn't have anything cached yet. So the Target delegate proceeds to the second-level (L1) cache, which is the call site's cache, by calling the call site's Update delegate. The second-level cache doesn't have any rules in it either, because no client code has invoked the site to do late binding yet. The Update delegate therefore proceeds to the third and last (L2) cache, which is the binder's cache. The binder might or might not have a suitable rule in its cache for the late binding. If it does, that rule is returned to the call site and the expensive late-binding operation is avoided. The returned rule is put into the second-level cache as well as the first-level cache. On the other hand, if the binder does not have a suitable rule in its cache, the binder's Bind method is called to do the expensive late binding. When a binder's Bind method returns a rule expression to a call site, the rule is put into the L2 and L1 caches and is also assigned to the call site's Target delegate.
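The lookup order just described can be modeled in a few lines of plain C#. This is a toy sketch of the order only, not the DLR's implementation: in the real DLR a rule is a compiled delegate whose restrictions run inside the delegate, and the L1 and L2 caches are bounded in size. The classes ToyRule, ToyBinder, and ToyCallSite are hypothetical names introduced for illustration; ToyBinder.Bind mimics the rules shown in Listings 3-6 and 3-7.

```csharp
using System;
using System.Collections.Generic;

// Toy rule: restrictions plus binding result.
public sealed class ToyRule
{
    public readonly Func<int, bool> Restriction;  // when the rule applies
    public readonly int Result;                   // the binding result
    public ToyRule(Func<int, bool> restriction, int result)
    {
        Restriction = restriction;
        Result = result;
    }
}

// Toy binder: owns the L2 cache, shared by every site that uses this binder.
public sealed class ToyBinder
{
    public readonly List<ToyRule> L2 = new List<ToyRule>();
    public int BindCount;  // number of expensive bindings performed

    // Stand-in for Bind, mirroring the rules of Listings 3-6 and 3-7.
    public ToyRule Bind(int arg)
    {
        BindCount++;
        return arg >= 5 ? new ToyRule(x => x >= 5, 10) : new ToyRule(x => x < 5, 1);
    }
}

// Toy call site: l0 plays the role of the Target delegate, l1 is the per-site cache.
public sealed class ToyCallSite
{
    private readonly ToyBinder binder;
    private readonly List<ToyRule> l1 = new List<ToyRule>();
    private ToyRule l0;

    public ToyCallSite(ToyBinder binder) { this.binder = binder; }

    public int Invoke(int arg)
    {
        if (l0 != null && l0.Restriction(arg)) return l0.Result;               // L0 hit
        foreach (ToyRule rule in l1)
            if (rule.Restriction(arg)) { l0 = rule; return rule.Result; }      // L1 hit
        foreach (ToyRule rule in binder.L2)
            if (rule.Restriction(arg)) { Promote(rule); return rule.Result; }  // L2 hit
        ToyRule bound = binder.Bind(arg);                                      // miss everywhere
        binder.L2.Add(bound);
        Promote(bound);
        return bound.Result;
    }

    // Puts a rule into this site's L1 cache and makes it the L0 rule.
    private void Promote(ToyRule rule)
    {
        if (!l1.Contains(rule)) l1.Add(rule);
        l0 = rule;
    }
}
```

Calling one ToyCallSite with 8, 9, 3, and 9 should bind twice (L0 hit on the first 9, L1 hit on the second), and a second ToyCallSite built on the same ToyBinder should find the 9 rule in L2 without binding again.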

If client code invokes the Target delegate again on the same call site, the rule cached in the Target delegate by the earlier invocation might happen to be the right rule for the new late binding. A rule is the right rule if its restrictions evaluate to true in the late-binding context. For example, assuming the suboptimal implementation of the ConstantWithRuleBinder class shown in Listing 3-4 is used, the following line in Listing 3-5 does not pass the restrictions of the Target delegate's cached rule:

```csharp
result = site.Target(site, 3);
```

Prior to this line of code, Listing 3-5 calls the Target delegate and passes it integer 8. Because of that, the Target delegate's cached rule has the restrictions built by the Expression.GreaterThanOrEqual call in the following snippet. The restrictions test whether the first parameter of the late-binding operation is greater than or equal to 5. This is the same code you see in the Bind method of the suboptimal version of the ConstantWithRuleBinder class.

```csharp
Expression.IfThen(
    Expression.GreaterThanOrEqual(
        firstParameterExpression,
        Expression.Constant(5)),
    ... //binding result omitted
);
```

When we call the Target delegate on the same call site a second time, with integer 3 as the input argument, the integer 3 is part of the late-binding context. The rule cached in the Target delegate has restrictions that test whether the first input parameter is greater than or equal to 5. So the cached rule is not the right rule, because its restrictions evaluate to false in this late-binding context. In that situation, the Target delegate invokes the call site's Update delegate and proceeds to the second-level cache.
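Restrictions are ordinary Boolean expressions over the rule's parameters. The sketch below builds the same arg0 >= 5 test and, purely for illustration, compiles it on its own with Expression.Lambda so you can evaluate it for 8 and 3. In the DLR the restriction is not compiled separately like this; it is the test of the IfThen inside the rule delegate. The class and method names are hypothetical.

```csharp
using System;
using System.Linq.Expressions;

public static class RestrictionDemo
{
    // Builds the restriction "arg0 >= 5" used by the suboptimal rule and compiles it alone.
    public static Func<int, bool> CompileRestriction()
    {
        ParameterExpression arg0 = Expression.Parameter(typeof(int), "arg0");
        Expression restriction = Expression.GreaterThanOrEqual(arg0, Expression.Constant(5));
        return Expression.Lambda<Func<int, bool>>(restriction, arg0).Compile();
    }
}
```

Evaluating the compiled test with 8 yields true (the cached rule applies), and with 3 yields false, which is exactly the case where the Target delegate has to fall back to the Update delegate.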

It should be clear now how important rules and their restrictions are to the DLR's cache mechanism. Without them, the cache mechanism would be useless because there would be no information for determining whether a cached result is suitable in a late-binding context or whether an expensive call to the binder's Bind method is needed.

Late-Binding Context

The previous paragraphs mentioned the term late-binding context. What is it? And what's in it? A late-binding context represents the environment (i.e., the context) in which a late binding takes place. In the previous example, we saw that integer 3, the first parameter of the late-binding operation, is part of the context in which the late binding takes place. In fact, all the input parameters of a late-binding operation are in the context. Besides the input parameters, each parameter's name and type are part of the context. The order of the input parameters is also included in the context. What's more, the return type of the late-binding operation is part of the context as well. Every late-binding operation has a return type because DLR binders use DLR expressions to represent binding results and, as Chapter 2 mentioned, DLR expressions, unlike statements, always have a return type.

The information in a late-binding context can be categorized into two kinds: compile-time and run-time. For a late-binding operation, all the input parameters' types and the return type are run-time information, because they are generally not known at compile time; otherwise, it would be an early-bound operation, not a late-bound one. For compile-time information in a context, the DLR doesn't limit what you can put there. A good example of compile-time information in a late-binding context is the name of the invoked method. The following C# code snippet invokes the ToLower method on the dynamic object hello. The method invocation is late bound, and the C# compiler compiles it into a call site and a binder. The binder has the method name ToLower stored in it so that when the binder needs to perform late binding, it knows the name of the method to bind. The method name ToLower is available at compile time and is a piece of information in the late-binding context.

```csharp
dynamic hello = "Hello";
String helloInLowerCase = hello.ToLower();
```
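To see where the compile-time information ends up, here is a hand-written sketch of roughly what the C# compiler generates for hello.ToLower(). It uses the real Microsoft.CSharp.RuntimeBinder.Binder.InvokeMember factory. Actual compiler output differs in details, such as caching the call site in a static field, so treat this as an approximation. The class name ToLowerCallSiteSketch is hypothetical.

```csharp
using System;
using System.Runtime.CompilerServices;
using Microsoft.CSharp.RuntimeBinder;

public static class ToLowerCallSiteSketch
{
    // The binder carries the compile-time information: the method name "ToLower".
    public static readonly CallSiteBinder ToLowerBinder = Binder.InvokeMember(
        CSharpBinderFlags.None,
        "ToLower",                                 // method name known at compile time
        null,                                      // no generic type arguments
        typeof(ToLowerCallSiteSketch),             // context type for accessibility checks
        new[] { CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null) }); // receiver

    // The call site supplies the run-time information: the receiver value.
    public static object Invoke(object hello)
    {
        var site = CallSite<Func<CallSite, object, object>>.Create(ToLowerBinder);
        return site.Target(site, hello);
    }
}
```

Invoking it with "Hello" should return "hello". The binder returned by InvokeMember derives from the DLR's InvokeMemberBinder, whose Name property exposes the stored method name.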

It might seem redundant to include a parameter's type in the context when the parameter value (the integer 3 in our example) is already in the context; you could simply use reflection to get the type of a parameter value. As it turns out, a parameter's type might not be the same as the type of the parameter's value. For example, a late-binding operation might declare a parameter of some base class while the value passed in is an instance of a derived class.
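A quick way to see the difference: the C# compiler typically declares a dynamic receiver as object in the call site's delegate type (for example, Func<CallSite, object, object>), while the value actually passed may be a String. This small sketch, with hypothetical names, compares a ParameterExpression declared as object with the run-time type of a value.

```csharp
using System;
using System.Linq.Expressions;

public static class ParameterTypeDemo
{
    // A parameter declared as object, as a late-bound operation on a dynamic receiver might declare it.
    public static readonly ParameterExpression Arg0 =
        Expression.Parameter(typeof(object), "arg0");

    // Returns { declared parameter type, run-time type of the value }.
    public static Type[] Compare(object value)
    {
        return new[] { Arg0.Type, value.GetType() };
    }
}
```

For the value "Hello", the declared type is System.Object while reflection on the value reports System.String, which is why the context carries both.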

All of the information in a late-binding context is accessible to a binder for performing late binding. When no suitable rule is in the L0, L1, or L2 caches for a late-binding operation, the call site prepares all the information that makes up the late-binding context and passes it to the binder's Bind method, which has the following signature:

```csharp
public override Expression Bind(
    object[] args,                                       //parameter values
    ReadOnlyCollection<ParameterExpression> parameters,  //parameter names, types and order
    LabelTarget returnLabel)                             //return type and name
```

As you can see from the Bind method's signature, each piece of run-time information in a late-binding context corresponds to one of the Bind method's input parameters. The second input parameter of the Bind method is of type ReadOnlyCollection<ParameterExpression>. The class ReadOnlyCollection<T> implements IList<T>, and the order of the elements in ReadOnlyCollection<ParameterExpression> is the order of a late-binding operation's input parameters. The Name and Type properties of the ParameterExpression class represent an input parameter's name and type in the late-binding context. The Name and Type properties of the LabelTarget class represent the return value's name and type in the late-binding context.
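One way to observe these pieces directly is a binder that simply records what Bind receives. The sketch below is a hypothetical ContextRecordingBinder (not from the chapter's projects). Its rule has no restrictions and always returns 0, which is only acceptable for illustration. The call with 3 mirrors the line result = site.Target(site, 3).

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Hypothetical binder that records the run-time part of the late-binding context.
public sealed class ContextRecordingBinder : CallSiteBinder
{
    public object[] SeenArgs;                                 // parameter values
    public ReadOnlyCollection<ParameterExpression> SeenParameters; // names, types, order
    public LabelTarget SeenReturnLabel;                       // return type (and label name)

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        SeenArgs = args;
        SeenParameters = parameters;
        SeenReturnLabel = returnLabel;
        // No restrictions: this rule matches every call. Illustration only.
        return Expression.Return(returnLabel, Expression.Constant(0));
    }
}

public static class ContextDemo
{
    public static ContextRecordingBinder Run()
    {
        var binder = new ContextRecordingBinder();
        var site = CallSite<Func<CallSite, int, int>>.Create(binder);
        site.Target(site, 3);  // mirrors result = site.Target(site, 3)
        return binder;
    }
}
```

After the call, SeenArgs should hold the single value 3, SeenParameters a single int parameter (the CallSite parameter itself is not included), and SeenReturnLabel a label of type int.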

Table 3-2 summarizes each piece of the run-time information in a late-binding context and its corresponding input parameter in the Bind method's signature. The last column of the table uses the line result = site.Target(site, 3) in Listing 3-5 as an example and shows the value of each piece of the run-time information in the late-binding context.

Table 3-2. Late-Binding Context

This section explained the three cache levels and how they play a part in the late-binding process. Let's see some examples of these cache levels in action in the next few sections. For the purpose of demonstration, all the examples in the rest of this chapter use the suboptimal implementation of the ConstantWithRuleBinder class shown in Listing 3-4.

L0 Cache Example

This section demonstrates how the L0 cache works. Listing 3-9 shows the client code that uses the ConstantWithRuleBinder class we saw earlier for the late-binding logic. The code is similar to the code in Listing 3-5. In this example, the code calls site.Target twice. When the code first calls site.Target, the Target delegate does not have any rule in the L0 cache. There isn't any rule in the L1 and L2 caches either. So the binder's Bind method is invoked to perform the late binding. Every time the binder's Bind method is invoked, the text “cache miss” will be printed to the screen so that we can clearly see when a cache miss happens and when a cache match is found.

After the first call to site.Target, all three caches contain the rule returned by the binder's Bind method. Then the client code calls site.Target a second time and passes it integer 9. Because 9 is greater than 5, the restrictions of the rule in the L0 cache evaluate to true and no expensive late binding is needed.

Listing 3-9. L0 Cache Example

```csharp
private static void L0CacheExample()
{
    CallSiteBinder binder = new ConstantWithRuleBinder();
    CallSite<Func<CallSite, int, int>> site =
        CallSite<Func<CallSite, int, int>>.Create(binder);

    //This will invoke the binder to do the binding.
    int result = site.Target(site, 8);
    Console.WriteLine("Late binding result is {0}", result);

    //This will not invoke the binder to do the binding because of L0 cache match.
    result = site.Target(site, 9);
    Console.WriteLine("Late binding result is {0}", result);
}
```

If you run the code in Listing 3-9, you'll see the following output on the screen. Notice that there is only one cache miss.

```
cache miss
Late binding result is 10
Late binding result is 10
```

L1 Cache Example

This section demonstrates how the L1 cache works. Like Listing 3-9, the client code in Listing 3-10 uses the ConstantWithRuleBinder class we saw earlier in Listing 3-4 for the late-binding logic. In this example, the code calls site.Target three times. When the code first calls site.Target, the Target delegate doesn't have any rule in the L0 cache. There isn't any rule in the L1 and L2 caches either. So the binder's Bind method is invoked to perform the late binding. So far everything is the same as in the preceding example.

After the first call to site.Target, all three caches contain the rule returned by the binder's Bind method. To ease our discussion, let's call this rule 1. The client code calls site.Target a second time and passes it integer 3. Because 3 is less than 5, the restrictions of the rule in the L0 cache evaluate to false. The restrictions of the same rule in the L1 and L2 caches also evaluate to false. So the binder's Bind method is invoked to perform late binding. Let's call the rule returned this time rule 2. Rule 2 is cached together with rule 1 in the L1 and L2 caches. The L1 cache can hold up to 10 rules, while the L2 cache can hold up to 128 rules per delegate type. Don't worry about the L2 cache and its cache size for now; I'll explain that in detail in the next section. The important thing to note here is that rule 1 and rule 2 are both in the L1 and L2 caches, but only rule 2 is in the L0 cache.

Because of this, when the client code calls site.Target a third time and passes it integer 9, the restrictions of rule 2 in the L0 cache evaluate to false, since 9 is not less than 5. The Target delegate therefore calls the Update delegate to proceed to the L1 cache, which contains both rule 1 and rule 2. Rule 1 is suitable for this late binding, so no call to the binder's Bind method is necessary.

Listing 3-10. L1 Cache Example

```csharp
private static void L1CacheExample()
{
    CallSiteBinder binder = new ConstantWithRuleBinder();
    CallSite<Func<CallSite, int, int>> site =
        CallSite<Func<CallSite, int, int>>.Create(binder);

    //This will invoke the binder to do the binding.
    int result = site.Target(site, 8);
    Console.WriteLine("Late binding result is {0}", result);

    //This will invoke the binder to do the binding.
    result = site.Target(site, 3);
    Console.WriteLine("Late binding result is {0}", result);

    //This will not invoke the binder to do the binding because of L1 cache match.
    result = site.Target(site, 9);
    Console.WriteLine("Late binding result is {0}", result);
}
```

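If you run the code in Listing 3-10, you'll see the following output on the screen. Notice that there are two cache misses.

```
cache miss
Late binding result is 10
cache miss
Late binding result is 1
Late binding result is 10
```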
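To check the L0 and L1 behavior without reading console output, you can count Bind calls instead. The sketch below assumes a binder that produces the same rules as Listings 3-6 and 3-7. CountingConstantBinder and CacheLevelDemo are hypothetical names, and the real Listing 3-4 may be written differently. RunL0Sequence follows Listing 3-9 and RunL1Sequence follows Listing 3-10.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Sketch of a suboptimal binder with the rules of Listings 3-6 and 3-7.
// BindCount replaces the "cache miss" console output.
public sealed class CountingConstantBinder : CallSiteBinder
{
    public int BindCount;

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        BindCount++;
        ParameterExpression arg0 = parameters[0];
        bool atLeastFive = (int)args[0] >= 5;
        Expression restriction = atLeastFive
            ? Expression.GreaterThanOrEqual(arg0, Expression.Constant(5))
            : Expression.LessThan(arg0, Expression.Constant(5));
        // If the restriction fails, IfThen falls through and the DLR looks further.
        return Expression.IfThen(restriction,
            Expression.Return(returnLabel, Expression.Constant(atLeastFive ? 10 : 1)));
    }
}

public static class CacheLevelDemo
{
    // Listing 3-9: 8 (miss), then 9 (L0 hit).
    public static int[] RunL0Sequence(CountingConstantBinder binder)
    {
        var site = CallSite<Func<CallSite, int, int>>.Create(binder);
        return new[] { site.Target(site, 8), site.Target(site, 9) };
    }

    // Listing 3-10: 8 (miss), 3 (miss), then 9 (L1 hit).
    public static int[] RunL1Sequence(CountingConstantBinder binder)
    {
        var site = CallSite<Func<CallSite, int, int>>.Create(binder);
        int a = site.Target(site, 8);
        int b = site.Target(site, 3);
        int c = site.Target(site, 9);
        return new[] { a, b, c };
    }
}
```

Each sequence gets its own binder so that its BindCount reflects only that sequence: one for the L0 sequence and two for the L1 sequence.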

L2 Cache Example

The L2 cache example in this section is a bit more complex than those in the previous sections. The reason for the complexity is that the same binder may be shared by multiple call sites. This will be easier to understand with some examples.

In the previous sections, we called the Target delegate on the same call site instance. This usually happens when you have C# code that looks like the following:

```csharp
void Foo(dynamic name) {
    name.ToLower(); //call site is here.
}

Foo("Bob");
Foo("Rob");
```

There's only one call site instance in the Foo method. In the code snippet, the Foo method is called twice. Each time Foo is called, the same L0 and L1 caches of the one and only call site in the Foo method are searched for a suitable rule.

Now let's see a slightly different example. If the Foo method looks like the one in the following code snippet, there will be two separate call sites, i.e., two instances of CallSite<T>. The two call sites are totally independent of each other. Each of the two call sites has its own L0 and L1 caches, and those caches are not shared across call sites.

```csharp
void Foo(dynamic name) {
    name.ToLower(); //call site is here.
    name.ToLower(); //another call site is here.
}
```

To share cached rules across call sites, you share binders. By sharing a binder across call sites, rules in the binder's cache are shared. The code in Listing 3-11 demonstrates the sharing of an L2 cache across two call sites. The example code creates one instance of ConstantWithRuleBinder and two call sites, site1 and site2. The binder is shared between the two call sites. When the example code calls site1.Target, the binder's Bind method is invoked to do the late binding. The result of the late binding is cached in site1's L0 and L1 caches. It is also cached in the binder's L2 cache. So when site2.Target is invoked, even though site2's L0 and L1 caches don't have a suitable rule for the late binding, the binder's L2 cache has one. Therefore, the binder's Bind method is not invoked a second time.

Listing 3-11. L2 Cache Example

```csharp
private static void L2CacheExample()
{
    CallSiteBinder binder = new ConstantWithRuleBinder();
    CallSite<Func<CallSite, int, int>> site1 =
        CallSite<Func<CallSite, int, int>>.Create(binder);
    CallSite<Func<CallSite, int, int>> site2 =
        CallSite<Func<CallSite, int, int>>.Create(binder);

    //This will invoke the binder to do the binding.
    int result = site1.Target(site1, 8);
    Console.WriteLine("Late binding result is {0}", result);

    //This will not invoke the binder to do the binding because of L2 cache match.
    result = site2.Target(site2, 9);
    Console.WriteLine("Late binding result is {0}", result);
}
```

If you run the code in Listing 3-11, you'll see the following output on the screen. Notice that there is only one cache miss.

```
cache miss
Late binding result is 10
Late binding result is 10
```

Now, for comparison, Listing 3-12 shows the same example, except this time each site uses its own binder. The object site1 uses binder1 and site2 uses binder2.

Listing 3-12. Not Sharing the L2 Cache

```csharp
private static void L2CacheNoSharingExample()
{
    CallSiteBinder binder1 = new ConstantWithRuleBinder();
    CallSiteBinder binder2 = new ConstantWithRuleBinder();
    CallSite<Func<CallSite, int, int>> site1 =
        CallSite<Func<CallSite, int, int>>.Create(binder1);
    CallSite<Func<CallSite, int, int>> site2 =
        CallSite<Func<CallSite, int, int>>.Create(binder2);

    //This will invoke the binder to do the binding.
    int result = site1.Target(site1, 8);
    Console.WriteLine("Late binding result is {0}", result);

    //This will invoke the binder to do the binding because there is no L2 cache match.
    result = site2.Target(site2, 9);
    Console.WriteLine("Late binding result is {0}", result);
}
```

If you run the code in Listing 3-12, you'll see the following output on the screen. Notice that there are two cache misses in the output.

```
cache miss
Late binding result is 10
cache miss
Late binding result is 10
```
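The contrast between Listings 3-11 and 3-12 can also be checked by counting Bind calls. The sketch below assumes a binder with the same rules as Listings 3-6 and 3-7; CountingBinder and BinderSharingDemo are hypothetical names. The DLR keeps the L2 cache per binder instance (per delegate type), which is why sharing the instance is what shares the rules.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Sketch of the suboptimal binder; BindCount stands in for "cache miss" output.
public sealed class CountingBinder : CallSiteBinder
{
    public int BindCount;

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        BindCount++;
        ParameterExpression arg0 = parameters[0];
        bool atLeastFive = (int)args[0] >= 5;
        Expression restriction = atLeastFive
            ? Expression.GreaterThanOrEqual(arg0, Expression.Constant(5))
            : Expression.LessThan(arg0, Expression.Constant(5));
        return Expression.IfThen(restriction,
            Expression.Return(returnLabel, Expression.Constant(atLeastFive ? 10 : 1)));
    }
}

public static class BinderSharingDemo
{
    // Listing 3-11: two call sites share one binder, and therefore one L2 cache.
    public static int SharedBinderMisses()
    {
        var binder = new CountingBinder();
        var site1 = CallSite<Func<CallSite, int, int>>.Create(binder);
        var site2 = CallSite<Func<CallSite, int, int>>.Create(binder);
        site1.Target(site1, 8);  // miss: Bind runs, rule goes into the binder's L2 cache
        site2.Target(site2, 9);  // L2 hit: the rule bound for site1 is reused
        return binder.BindCount;
    }

    // Listing 3-12: each call site has its own binder, so nothing is shared.
    public static int SeparateBinderMisses()
    {
        var binder1 = new CountingBinder();
        var binder2 = new CountingBinder();
        var site1 = CallSite<Func<CallSite, int, int>>.Create(binder1);
        var site2 = CallSite<Func<CallSite, int, int>>.Create(binder2);
        site1.Target(site1, 8);
        site2.Target(site2, 9);
        return binder1.BindCount + binder2.BindCount;
    }
}
```

The shared version should report one Bind call and the separate version two, matching the cache-miss counts in the two outputs.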

Creating Canonical Binders

It should be clear that sharing binders across call sites is important to the performance of a DLR-based language. However, that doesn't mean a language implementer should use one binder for all call sites. The proper criterion for sharing a binder across multiple call sites is whether those call sites have the same compile-time information in their late-binding context. A binder shared across multiple call sites that have the same compile-time information in their late-binding context is called a canonical binder.

Listing 3-13 shows an example of when to use a canonical binder. The listing shows a C# code snippet that involves some late-binding operations. The code assigns a string literal to the variable x and another string literal to the variable y. Then it invokes the ToLower method on x in line 3 and on y in line 4. The two method invocations are late bound. In lines 5 and 6, the example code accesses the Length property of x and y, respectively. The two property-access operations are also late bound. The question is, for the two method invocations and the two property-access operations, how many binders should we use if we are implementing something like the C# compiler? Because the two method invocations are the same kind of late-binding operation (i.e., the method invocation operation) and because they invoke the same method name ToLower (i.e., the compile-time information in their late-binding context is the same), the two method invocations should share one canonical binder for their late binding. Similarly, because the two property-access operations are the same kind of late-binding operation (i.e., the property get-access operation) and because they access the same property name Length (i.e., the compile-time information in their late-binding context is the same), the two property-access operations should share one canonical binder for their late binding.

Listing 3-13. An Example of When to Use a Canonical Binder

```csharp
dynamic x = "foo";        // line 1
dynamic y = "bar";        // line 2
dynamic z = x.ToLower();  // line 3: call site is here.
z = y.ToLower();          // line 4: another call site is here.
z = x.Length;             // line 5
z = y.Length;             // line 6
```
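A common way for a language implementation to hand out canonical binders is to cache binder instances keyed by the compile-time information. The following is a minimal sketch under that assumption, not how the C# compiler or any particular DLR language does it. PlaceholderBinder and CanonicalBinders are hypothetical; a real language would key on its own binder classes (for example, subclasses of the DLR's InvokeMemberBinder or GetMemberBinder) and would implement Bind.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Hypothetical placeholder binder that only remembers its compile-time information.
public sealed class PlaceholderBinder : CallSiteBinder
{
    public readonly string Operation;
    public readonly string MemberName;

    public PlaceholderBinder(string operation, string memberName)
    {
        Operation = operation;
        MemberName = memberName;
    }

    public override Expression Bind(object[] args,
        ReadOnlyCollection<ParameterExpression> parameters, LabelTarget returnLabel)
    {
        throw new NotSupportedException("Placeholder: binding logic omitted.");
    }
}

// Hands out one canonical binder per distinct (operation kind, member name) pair.
public static class CanonicalBinders
{
    private static readonly ConcurrentDictionary<Tuple<string, string>, CallSiteBinder> binders =
        new ConcurrentDictionary<Tuple<string, string>, CallSiteBinder>();

    public static CallSiteBinder Get(string operation, string memberName)
    {
        return binders.GetOrAdd(Tuple.Create(operation, memberName),
            key => new PlaceholderBinder(key.Item1, key.Item2));
    }
}
```

For Listing 3-13, lines 3 and 4 would both ask for ("InvokeMember", "ToLower") and get the same instance, while lines 5 and 6 would share the ("GetMember", "Length") instance. ConcurrentDictionary.GetOrAdd may run the factory more than once under contention, but it always returns the single stored instance for a key.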

Summary

In this chapter, we looked at the caching mechanism of DLR binders. We saw code examples of the three cache levels in action, showing when a cache miss occurs and when a cached rule is reused. I also introduced canonical binders, and described when to share binders across multiple call sites. Along the way, I showed how to build debug versions of the DLR assemblies for .NET 2.0, how to write DLR-based code that targets both .NET 2.0 and .NET 4.0, and how to debug into the DLR source code to see the rules that represent binding results in Text Visualizer. We have covered a lot in this chapter about DLR binders and caching. The next chapter will examine how DLR binders enable language interoperability.