With great power comes great responsibility, and with deeper models come deeper problems. A fundamental challenge in deep learning is striking the right balance between generalization and optimization. During training, we tune hyperparameters and repeatedly configure and tweak the model to produce the best results on the data we have for training. This is optimization. The key question is: how well does our model generalize when making predictions on unseen data?

As professional deep learning engineers, our goal is to build models with good real-world generalization. However, generalization depends on the model architecture and the training dataset. We guide the model toward maximum utility by reducing the likelihood that it learns irrelevant patterns, or simple patterns that merely happen to occur in the training data. If this is not done, generalization suffers. A good solution is to get more training data, which is likely to carry a better (more complete and often more complex) signal of what you're actually trying to model, and to optimize the model architecture. Here are a few quick tricks that can improve your model by preventing overfitting:

- Get more data for training.

- Reduce network capacity by altering the number of layers or nodes.

- Employ L2 (and try L1) weight regularization techniques.

- Add dropout layers or pooling layers in the model.
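To make the dropout trick from the list above concrete, here is a minimal sketch in plain Python. It assumes the common "inverted dropout" variant, where the surviving activations are scaled up during training so that nothing needs to change at prediction time. The function name `dropout` and its parameters are hypothetical and chosen only for illustration; in practice you would use the dropout layer that your deep learning framework provides.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Apply inverted dropout to a list of activations (illustrative helper).

    rate is the fraction of units to drop, in [0, 1).
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    # At prediction time (or with rate 0) the activations pass through unchanged.
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    # Keep each unit with probability keep_prob and rescale it by 1 / keep_prob,
    # so the expected value of every activation stays the same.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


if __name__ == "__main__":
    layer_output = [0.2, 1.5, -0.7, 3.1, 0.9, -1.2]
    print(dropout(layer_output, rate=0.5, rng=random.Random(42)))  # training
    print(dropout(layer_output, rate=0.5, training=False))         # prediction
```

Because a different random subset of units is zeroed on every training step, the network cannot rely on any single unit, which is what discourages it from memorizing the training data.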

L2 regularization adds a cost to the loss that is proportional to the square of the weight coefficients (the squared L2 norm of the weights). In the context of neural networks, it is also known as weight decay.
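The penalty described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than any particular framework's implementation: the helper names (`l2_penalty`, `l1_penalty`, `sgd_step_with_l2`) and the coefficient name `lam` are hypothetical, and the weights are treated as a flat list of floats.

```python
def l2_penalty(weights, lam):
    """L2 cost term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def l1_penalty(weights, lam):
    """L1 cost term, for comparison: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def sgd_step_with_l2(weights, grads, lr, lam):
    """One gradient descent step on (data loss + L2 penalty).

    grads holds the gradient of the data loss only. The penalty adds
    2 * lam * w to each weight's gradient, which pulls the weight toward zero.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]


if __name__ == "__main__":
    weights = [1.0, -2.0, 3.0]
    print("L2 penalty:", l2_penalty(weights, lam=0.5))
    print("L1 penalty:", l1_penalty(weights, lam=0.5))
    # With a zero data gradient, only the penalty acts on the weights.
    print(sgd_step_with_l2(weights, grads=[0.0, 0.0, 0.0], lr=0.1, lam=0.5))
```

When the data gradient is zero, each step multiplies every weight by (1 - 2 * lr * lam), so the weights shrink steadily toward zero. That shrinking is why L2 regularization is also called weight decay.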

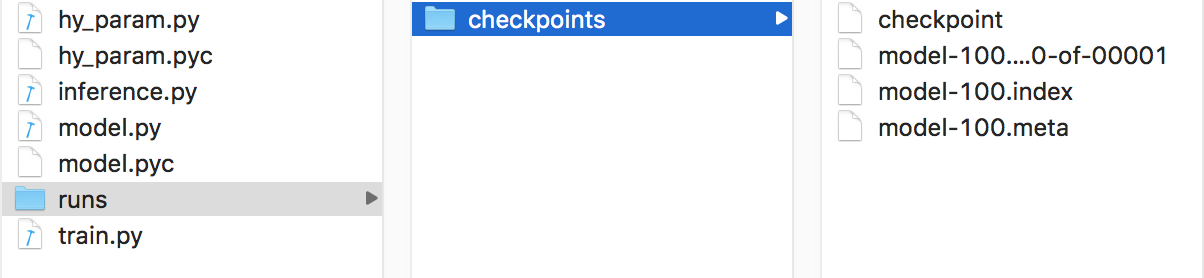

Once the model has finished training, its checkpoints are written to the /runs folder as binary files, as shown in the following screenshot: