Chapter 7. Transaction Log

Every database in SQL Server has one or more transaction log files in addition to data files. The transaction log stores information about the changes made in the database and allows SQL Server to recover databases to transactionally consistent states in case of an unexpected shutdown or crash. Every data modification in the database is stored there, and high log file throughput is essential for good system performance.

In this chapter, I’ll explain how SQL Server logs transactions and how the transaction log works internally. Next, I’ll cover several best practices for transaction log configuration and talk about how to address “Transaction log full” situation. Finally, I’ll discuss how to troubleshoot insufficient transaction log throughput.

Transaction Log Internals

SQL Server uses a transaction log to keep each database in a transactionally consistent state, meaning that data modifications done from within transactions must either be committed or rolled back in full. SQL Server never allows data to be transactionally inconsistent by applying just a subset of the changes from uncommitted transactions.

The transaction log guarantees consistency. It stores the stream of log records generated by data modifications and some internal operations. Every log record has a unique, auto-incrementing Log Sequence Number (LSN) and describes the data change. It includes information about the affected row, the old and new versions of the data, the transaction that performed the modification, and so forth.

Every data page keeps the LSN of the last log record that modified it. During recovery, SQL Server can compare the LSNs of the log records and data pages and find out if the most recent changes were saved to the data files. There is enough information stored in a log record to undo or redo the operation if needed.

SQL Server uses Write-Ahead Logging (WAL), which guarantees that log records are always written to the log file before dirty data pages are saved to the database. Sharp-eyed readers may notice that in Chapter 3, I mentioned that log records are saved synchronously with data modifications, while data pages are saved asynchronously during the checkpoint process. While that is conceptually correct, I will be more precise here: SQL Server caches the log records in small memory caches called log buffers, writing them in batches to reduce the number of of log-write I/O operations.

Each database has its own log buffer, consisting of 60KB structures called log blocks. Each log buffer (and database) can have up to 128 log blocks. SQL Server writes log blocks to the log file in a single I/O operation. However, it does not always wait until the log block is full. The typical size of a log-writing I/O operation varies, from 512 bytes to 60KB.

Unfortunately, the. SQL Server documentation is inconsistent in its terminology, and often references log blocks as log buffers. Just remember that SQL Server caches the log records in memory before writing them on disk.

Data Modifications and Transaction Logging

Let’s look at how SQL Server modifies data in more detail. Figure 11-1 shows a database with an empty log buffer and transaction log. The last transaction in the log has an LSN of 7314.

Let’s assume that there are two active transactions: T1 and T2. The BEGIN TRAN log records for both of those transactions have already been saved in the log and are not shown in the diagram.

Figure 7-1. Data modifications and transaction logging: Initial state

Let’s assume that transaction T1 updates one of the rows on page (1:24413). This operation generates a new log record, which will be placed into the log buffer. It will also update the data page, marking it as dirty and changing the LSN in the page header. Figure 11-2 illustrates that.

At this point, the log record has not been saved to the log file (this is often called hardened). It does not introduce any issues as long as the data page has not been saved in the data file. In event of an SQL Server crash, both the log record and the modifications on the data page will be gone—which is fine, because the transaction has not been committed.

Figure 7-2. Data modifications and transaction logging: State after the first update

Next, let’s assume that transaction T2 inserts a new row into page (1:27013), while transaction T1 deletes another row on the same page. Those operations generate two log records. These are placed into the log buffer, as shown in Figure 11-3. Right now, all log records are still in the log buffer.

Figure 7-3. Data modifications and transaction logging: State after two data modifications

Now let’s assume that an application commits transaction T2. This action generates a COMMIT log record and forces SQL Server to write (harden) the content of the log block to disk. It writes all log records from the buffer to disk, regardless of what transaction generated them (Figure 11-4).

Figure 7-4. Data modifications and transaction logging: Commit operation

The applications receive confirmation that the transaction has been committed only after all log records are hardened. Even though the data page (1:27013) is still dirty and has not been saved into the data file, the hardened log records on the disk have enough information to re-apply the changes made by the committed T2 transaction if needed.

The dirty pages from the buffer pool will be saved to data files on the checkpoint. This operation also generates a CHECKPOINT log record and immediately hardens it into the log. Figure 11-5 shows that state.

Figure 7-5. Data modifications and transaction logging: Checkpoint

After the checkpoint occurs, the pages in the data file may store data from uncommitted transactions (T1, in our example). However, log records in the transaction log include enough information to undo the changes if needed. When this is the case, SQL Server performs compensation operations, executing actions opposite to those that made the original data modifications and generating compensation log records.

Figure 11-6 shows such an example, rolling transaction T1 back. Here, SQL Server has performed a compensation update, generating a compensation log record (LSN: 7320) to reverse the changes of the original update operation (LSN: 7315). It has also generated a compensation insert (LSN: 7321) to compensate for the delete operation (LSN: 7317).

Figure 7-6. Data modifications and transaction logging: Rollback

There are two transaction logging-related waits to monitor.

- WRITELOG

-

WRITELOG waits occur when SQL Server is waiting for the completion of an I/O operation that writes a log block to disk. With the exception of delayed durability (covered later in this chapter), this type of wait is synchronous, since it prevents transactions from committing while the write I/O is in progress. Your goal should be to minimize that wait and improve transaction-log throughput as much as possible.

- LOGBUFFER

-

LOGBUFFER waits occur when SQL Server is waiting for an available log block to save the log records. In most cases, this happens due to insufficient I/O throughput, when SQL Server cannot write log blocks to disk fast enough. Usually, when LOGBUFFER waits are present, you’ll also see WRITELOG waits. Improving transaction log throughput would help to address that.

I will talk how to troubleshoot and improve log-file throughput later in this chapter. However, you can also improve transaction-log performance by reducing the amount of logging. You can do this by removing unnecessary and unused indexes (more on those in Chapter 14), tuning your index-maintenance strategy to reduce page splits, and reducing the row size in frequently modified indexes.

You can also improve your transaction-management strategy by avoiding autocommitted transactions. This greatly reduces the amount of logging and the number of write log I/O requests in the system. Let’s look at that in more detail.

Explicit and Autocommitted Transactions and Log Overhead

As you learned in Chapter 8, SQL Server always executes statements in the context of a transaction. If you don’t have any explicit or implicit transactions started, SQL Server runs the statement in an autocommitted transaction, as if that statement was wrapped into a BEGIN TRAN ... COMMIT block.

Logging autocommitted transactions means including BEGIN XACT and COMMIT XACT transaction log records, which can significantly increase the amount of logging in the system. More importantly, it also decreases log performance, since SQL Server has to flush the log blocks after each statement on every COMMIT operation.

Figure 11-7 illustrates this. INSERT_1, UPDATE_1 and DELETE_1 operations run in autocommitted transactions, generating additional log records and forcing the log buffer to flush on each COMMIT. Alternatively, running those operations in explicit transactions leads to more efficient logging.

Figure 7-7. Explicit and autocommitted transactions

The code in Listing 11-1 shows the overhead involved in autocommitted transactions as compared to explicit transactions. It performs an INSERT/UPDATE/DELETE sequence 10,000 times in the loop, in autocommitted and explicit transactions, respectively. It then compares their execution time and transaction log throughput using the sys.dm_io_virtual_file_stats view.

Example 7-1. Explicit and autocommitted transactions

CREATE TABLE dbo.TranOverhead

(

Id INT NOT NULL,

Col CHAR(50) NULL,

CONSTRAINT PK_TranOverhead

PRIMARY KEY CLUSTERED(Id)

);

-- Autocommitted transactions

DECLARE

@Id INT = 1

,@StartTime DATETIME = GETDATE()

,@num_of_writes BIGINT

,@num_of_bytes_written BIGINT

SELECT @num_of_writes = num_of_writes, @num_of_bytes_written = num_of_bytes_written

FROM sys.dm_io_virtual_file_stats(db_id(),2);

WHILE @Id <= 10000

BEGIN

INSERT INTO dbo.TranOverhead(Id, Col) VALUES(@Id, 'A');

UPDATE dbo.TranOverhead SET Col = 'B' WHERE Id = @Id;

DELETE FROM dbo.TranOverhead WHERE Id = @Id;

SET @Id += 1;

END;

SELECT

DATEDIFF(MILLISECOND,@StartTime,GETDATE())

AS [Time(ms): Autocommitted Tran]

,s.num_of_writes - @num_of_writes

AS [Number of writes]

,(s.num_of_bytes_written - @num_of_bytes_written) / 1024

AS [Bytes written (KB)]

FROM

sys.dm_io_virtual_file_stats(db_id(),2) s;

GO

-- Explicit Tran

DECLARE

@Id INT = 1

,@StartTime DATETIME = GETDATE()

,@num_of_writes BIGINT

,@num_of_bytes_written BIGINT

SELECT @num_of_writes = num_of_writes, @num_of_bytes_written = num_of_bytes_written

FROM sys.dm_io_virtual_file_stats(db_id(),2);

WHILE @Id <= 10000

BEGIN

BEGIN TRAN

INSERT INTO dbo.TranOverhead(Id, Col) VALUES(@Id, 'A');

UPDATE dbo.TranOverhead SET Col = 'B' WHERE Id = @Id;

DELETE FROM dbo.TranOverhead WHERE Id = @Id;

COMMIT

SET @Id += 1;

END;

SELECT

DATEDIFF(MILLISECOND,@StartTime,GETDATE())

AS [Time(ms): Explicit Tran]

,s.num_of_writes - @num_of_writes

AS [Number of writes]

,(s.num_of_bytes_written - @num_of_bytes_written) / 1024

AS [Bytes written (KB)]

FROM

sys.dm_io_virtual_file_stats(db_id(),2) s;

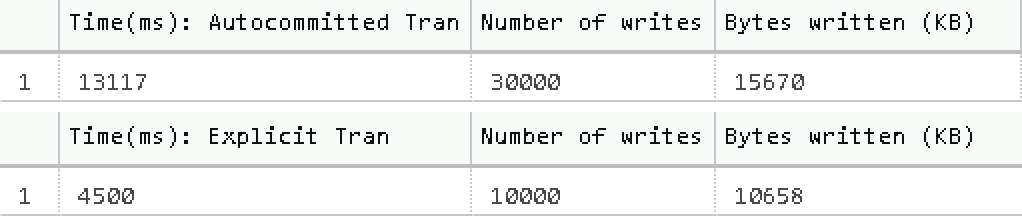

You can see the output from the code in my environment in Figure 11-8. Explicit transactions were about three times faster and generated three times less log activity than autocommitted ones.

Figure 7-8. EPerformance of explicit and autocommitted transactions

As I have stated, proper transaction management with explicit transactions can significantly improve your transaction-log throughput. Remember, however, the impact of long-running transactions on blocking. Exclusive (X) locks are held until the end of the transaction. Keep this locking behavior in mind as you design your transaction strategy and write your code, as well as considering the factors discussed in Chapter 8.

Unfortunately, changing transaction strategy in existing systems is not always possible. If your system suffers from a large number of autocommitted transactions and can tolerate a small amount of data loss, consider using another feature: Delayed Durability. This feature is available in SQL Server 2014 and above.

Delayed Durability

As you already know, SQL Server flushes the contents of the log block into a log file at the time of commit. It sends a confirmation to the client only after a commit record has been hardened to disk. This may lead to a large number of small log-write I/O requests with autocommitted transactions.

Delayed durability changes this behavior, making commit operations asynchronous. The client receives confirmation that the transaction has been committed immediately, without having to wait for the commit record to be hardened to disk. The commit record stays in the log buffer until one or more of the following conditions occur:

-

The log block is full

-

A fully durable transaction in the same database is committed, and its commit record flushes the contents of the log buffer to disk

-

A CHECKPOINT operation occurs

-

A sp_flush_log stored procedure is called

-

A log buffer flush operation is triggered based on the log generation rate and/or timeout thresholds

There is obviously some risk here. If SQL Server crashes before the commit record is hardened, the data modifications from that transaction will be rolled back at recovery, as if the transaction had never been committed at all. However, other transactions would be able to see the data modifications made by the delayed durability transaction between the commit and the crash.

You can control delayed durability on both the database and transaction levels. The database option DELAYED_DURABILITY supports three different values:

- DISABLED

-

This is the default option. It disables delayed durability in the database regardless of the transaction durability mode. All transactions in the database are always fully durable.

- FORCED

-

This option forces delayed durability for all database transactions regardless of the transaction durability mode.

- ALLOWED

With this option, delayed durability is controlled at the transaction level. Transactions are fully durable unless delayed durability is specified. Listing 11-2 shows how to specify it at the transaction level.

Example 7-2. Controlling delayed durability on transaction level

BEGIN TRAN /* Do the work */ COMMIT WITH (DELAYED_DURABILITY=ON);

Delayed durability may be used in chatty systems with large numbers of autocommitted transactions and insufficient log throughput. In most cases, however, I prefer to avoid it. I use it as a last resort, only when all other log throughput improvement techniques have been unsuccessful and only when data loss is acceptable. Use with care!

In-Memory OLTP Transaction Logging

Although covering In-Memory OLTP in detail would be outside the scope of this book, I need to mention In-Memory OLTP transaction logging. As opposed to row-based and column-based technologies, In-Memory OLTP generates transaction log records at the time of the COMMIT operation and only when the transaction is successfully committed. Logging is also optimized. Transactions usually generate just one transaction log record, or a few large ones, even when they modify large amounts of data. Those records are stored in the regular log file and backed up with all the other log records.

This behavior may change I/O patterns for log operations. In-Memory OLTP log writes may lead to larger write requests, especially with large In-Memory OLTP transactions. Moreover, the log files are continuously read by In-Memory OLTP’s continuous checkpoint process, which parses log records and updates In-Memory OLTP data persisted on disk.

You don’t need to worry about those details in most cases; however, remember about I/O patterns when you design an I/O subsystem for databases that use In-Memory OLTP.

VIRTUAL LOG FILES

Internally, SQL Server divides physical log files into smaller parts called Virtual Log Files (VLF). SQL Server uses them as a unit of management, and they can be active or inactive.

Active VLFs store the active portion of the transaction log, which contains log records required to keep the database transactionally consistent, provide point-in-time recovery, and support active SQL Server processes such as transactional replication and AlwaysOn Availability Groups. An inactive VLF contains the truncated (inactive) and unused parts of the transaction log.

Figure 11-9 shows an example transaction log file and VLFs. The active portion of the log starts with VLF3, the oldest active transaction in the system. In case of a rollback, SQL Server would need to access log records generated by that transaction.

Figure 7-9. Transaction log and VLFss

In Figure 11-9, the only process that keeps VLF3 active is the active transaction. When this transaction commits, SQL Server truncates the log, marking VLF3 as inactive (Figure 11-10). Truncating the transaction log does not reduce the size of the log file on disk; it just means that parts of the transaction log (one or more VLFs) are marked as inactive and ready for reuse.

Figure 7-10. Transaction log and VLFs – After commit

SQL Server uses VLFs as the unit of truncation. A VLF cannot be marked as inactive if it contains the single log record from the active portion of the log. This is one reason why having very large VLFs is not a good idea.

Transaction-log truncation behavior is controlled by the Database Recovery Model setting. There are three recovery models. Each guarantees that the active portion of the log has enough data to keep the database consistent; however, the models may provide different levels of recoverability in case of disaster, and SIMPLE and BULK-LOGGED models may prevent you from using some SQL Server technologies.

- SIMPLE

In the SIMPLE recovery model, the log is truncated at checkpoint. All data pages with LSNs prior to checkpoint LSN are saved on-disk. SQL Server does not need to access log records prior to the checkpoint to re-apply them to data pages in the event of an unexpected shutdown or crash. Old active transactions and transaction replication may defer truncation, keeping VLFs active.

In this recovery model, SQL Server does not use transaction log backups. It prevents you from performing a point-in-time recovery and may lead to data loss if either of the database files (data or log) becomes corrupted. The recovery point (RPO) for the database, in this model, becomes the time of the last full backup.

In some cases, such as when data is static or can be recreated from other sources, the SIMPLE recovery model may be completely acceptable. However, when you encounter this during a system health check, you need to confirm this with the system stakeholders and discuss the possibility of data loss.

- FULL

In the FULL recovery model, SQL Server fully logs all operations in the database and requires you to perform transaction log backups to truncate the transaction log. Because the transaction log backups store all log records in the database, this mode supports point-in-time recovery, as long as the sequence of backup files (backup chain) is available. With a few exceptions, you will probably want to use the FULL recovery model in production databases.

To support various SQL Server features and technologies that rely on transaction log records, the FULL recovery model is required. Those technologies may also defer truncation of the log, even when log backups are taken. For example, if an AlwaysOn Availability Group node goes offline, SQL Server will be unable to truncate the log until the node is back and catches up with the replication.

- BULK-LOGGED

The BULK-LOGGED recovery model works similarly to the FULL model, except that some operations are minimally logged: for example, index creation or BULK INSERT statements. With minimally logged operations, SQL Server logs only the page allocation information in the log file. While this reduces the log file usage, you cannot perform a point-in-time recovery when minimally logged operations are present. As with the SIMPLE recovery model, analyze the frequency of bulk-logged operations and the risks of potential data loss when you see databases with this recovery model in production.

You can analyze VLFs by using the sys.dm_db_log_info data management view in SQL Server 2016 and above or with the DBCC LOGINFO command in older versions of SQL Server. Listing 11-3 shows the code that uses this view against one of the databases.

Example 7-3. Analyzing VLFs in the database

SELECT *

FROM sys.dm_db_log_info(DB_ID());

SELECT

COUNT(*) as [VLF Count]

,MIN(vlf_size_mb) as [Min VLF Size (MB)]

,MAX(vlf_size_mb) as [Max VLF Size (MB)]

,AVG(vlf_size_mb) as [Avg VLF Size (MB)]

FROM sys.dm_db_log_info(DB_ID());

Figure 11-11 shows the output from the view for a database with an incorrect log file configuration that uses a 10% auto-growth setting. As you can see, the database has a large number of unevenly sized VLFs.

Figure 7-11. Inefficient VLF configuration

Transaction Log Configuration

SQL Server works with transaction logs sequentially while writing and reading a stream of log records. Even though the log may have multiple physical files, SQL Server does not usually benefit from them; in most cases, a single log file is easier to maintain and manage.

There are a couple of edge cases when multiple log files can be beneficial:

SQL Server may zero-initialize log file in parallel. This could speed up database creation or restoration if it uses large (multi-terabyte) log files.

If you want to place the transaction log on a fast but small drive, you can create a file pre-allocating the size to fill the fast drive and add another small log file on a larger and slower drive. SQL Server will use the file on the fast drive most of the time; however, the small file will protect you if the transaction log is full and not truncating.

It is better to manage transaction log size manually, avoiding the overhead of zero-initializing at the time of auto-growth. You can analyze a transaction log’s size and recreate the log, pre-allocating the size as needed. Be sure to take log-intensive operations such as index maintenance into account when you do the analysis.

As you learned stated in Chapter 1, you can rebuild the log by shrinking it to the minimal size and then pre-allocate the space using chunks of 1,024 to 4,096 MB. I usually use 1,024MB chunks, which will create 128MB VLFs. If I need very large log files – hundreds of gigabytes or even terabytes—I might use larger chunks.

Do not restrict the log’s maximum size and auto-growth: you need to be able to grow the log in case of emergencies.

Log Truncation Issues

Excessive transaction log growth is a common problem that junior or accidental DBAs should handle. It happens when SQL can’t truncate the transaction log and reuse the space in the log file. In such cases, the log file continues to grow until it fills the entire disk, switching the database to read-only mode and raising a "Transaction log full" error (error code 9002).

The best way to handle this condition is to avoid it in the first place. As I discussed in the Monitoring Disk Space Usage section in Chapter 9, it is essential to monitor for low disk space condition and set up alerts. If you pre-allocate the log file to fit the entire drive, monitor the amount of free space in the log file and have it send an alert when it is low.

If you end up in a Transaction Log Full situation, my first and most important advice is: Don’t panic. First, you need to analyze the root cause of the issue and see if you can mitigate it quickly. You can do this by looking at the log_reuse_wait_desc column in the sys.databases view, either querying it directly or using the more sophisticated version shown in Listing 11-4. This column shows you why the log is not truncated.

Example 7-4. Analyzing the log_reuse_wait_desc column in the sys.databases view

CREATE TABLE #SpaceUsed

(

database_id SMALLINT NOT NULL,

file_id SMALLINT NOT NULL,

space_used DECIMAL(15,3) NOT NULL,

PRIMARY KEY(database_id, file_id)

);

EXEC master..sp_MSforeachdb

N'USE[?];

INSERT INTO #SpaceUsed(database_id, file_id, space_used)

SELECT DB_ID(''?''), file_id,

(size - CONVERT(INT,FILEPROPERTY(name, ''SpaceUsed''))) / 128.

FROM sys.database_files

WHERE type = 1;';

SELECT

d.database_id, d.name, d.recovery_model_desc

,d.state_desc, d.log_reuse_wait_desc, m.physical_name

,m.is_percent_growth

,IIF(m.is_percent_growth = 1

,m.growth

,CONVERT(DECIMAL(15,3),m.growth / 128.0)

) AS [Growth (MB or %)]

,CONVERT(DECIMAL(15,3),m.size / 128.0) AS [Size (MB)]

,IIF(m.max_size = -1

,-1

,CONVERT(DECIMAL(15,3),m.max_size / 128.0)

) AS [Max Size(MB)]

,s.space_used as [Space Used(MB)]

FROM

sys.databases d WITH (NOLOCK)

JOIN sys.master_files m WITH (NOLOCK) ON

d.database_id = m.database_id

LEFT OUTER JOIN #SpaceUsed s ON

s.database_id = m.database_id AND

s.file_id = m.file_id

ORDER BY

d.database_id;

Figure 11-12 shows example output from Listing 11-4.

Figure 7-12. Analysing log_reuse_wait_desc data

Let’s look at the most common reasons for deferred log truncation and log_reuse_wait_desc values.

LOG_BACKUP Log Reuse Wait

The LOG_BACKUP log reuse wait is one of the most common waits for databases in the FULL and BULK-LOGGED recovery models. It indicates that the log cannot be truncated due to a lack of recent transaction log backup.

When you see this log reuse wait, check the status of the transaction log backup job. Make sure it is not failing due to a lack of space in the backup destination, or for any other reasons. It is also possible that log backup frequency is not fast enough during log-intensive operations. For example, index maintenance can generate enormous amounts of transaction log records in a very short time.

You can mitigate the issue by performing a transaction log backup. Remember to keep the backup file if you run this operation manually using a non-standard backup destination. The file becomes part of your backup chain and will be required for database recovery.

If you don’t use any technologies that rely on the FULL recovery model, you can temporarily switch the database to SIMPLE mode, which will truncate the transaction log. Remember that this will leave you exposed to data loss. Switch back to the FULL recovery model and reinitialize the backup chain by performing FULL and LOG backups as quickly as possible.

Finally, in many cases, this situation may be avoided by properly monitoring the health of the backup jobs. Set up alerts for continuous log backup failures.

ACTIVE_TRANSACTION Log Reuse Wait

The ACTIVE_TRANSACTION log reuse wait indicates that the log cannot be truncated due to the presence of the old active transaction. The most common case for that is incorrect transaction management in the application, which leads to runaway uncommitted transactions. For example, the application may issue multiple BEGIN TRAN statements without corresponding COMMIT for each of them.

You can see the list of active transactions using the code from Listing 11-5. The code may provide you multiple rows for each transaction, because it gets log usage information on a per-database basis.

Example 7-5. Getting active transactions

SELECT

dt.database_id

,DB_NAME(dt.database_id) as [DB]

,st.session_id

,CASE at.transaction_state

WHEN 0 THEN 'Not Initialized'

WHEN 1 THEN 'Not Started'

WHEN 2 THEN 'Active'

WHEN 3 THEN 'Ended (R/O)'

WHEN 4 THEN 'Commit Initialize'

WHEN 5 THEN 'Prepared'

WHEN 6 THEN 'Committed'

WHEN 7 THEN 'Rolling Back'

WHEN 8 THEN 'Rolled Back'

END AS [State]

,at.transaction_begin_time

,es.login_name

,ec.client_net_address

,ec.connect_time

,dt.database_transaction_log_bytes_used

,dt.database_transaction_log_bytes_reserved

,er.status

,er.wait_type

,er.last_wait_type

,sql.text AS [SQL]

FROM

sys.dm_tran_database_transactions dt WITH (NOLOCK)

JOIN sys.dm_tran_session_transactions st WITH (NOLOCK) ON

dt.transaction_id = st.transaction_id

JOIN sys.dm_tran_active_transactions at WITH (NOLOCK) ON

dt.transaction_id = at.transaction_id

JOIN sys.dm_exec_sessions es WITH (NOLOCK) ON

st.session_id = es.session_id

JOIN sys.dm_exec_connections ec WITH (NOLOCK) ON

st.session_id = ec.session_id

LEFT OUTER JOIN sys.dm_exec_requests er WITH (NOLOCK) ON

st.session_id = er.session_id

CROSS APPLY

sys.dm_exec_sql_text(ec.most_recent_sql_handle) sql

ORDER BY

dt.database_transaction_begin_timel

You can kill the session that holds active transaction using the KILL command. Later, you can analyze why the transaction was not properly managed.

AVAILABILITY_REPLICA Log Reuse Wait

As you can guess by the name, the AVAILABILITY_REPLICA log reuse wait may occur in systems that use AlwaysOn Availability Groups. In that technology, the primary node communicates with the secondary nodes by sending them the stream of transaction log records. The log cannot be truncated until those records have been sent and replayed on the secondaries.

The AVAILABILITY_REPLICA log reuse waits usually occur during certain issues in Availability Groups: most commonly the secondary node being unavailable, replication between the nodes falling behind, or secondaries being unable to replay changes fast enough to keep up with the load.

When you see this wait, check the health of the Availability Group. In most cases, the only quick option to address the issue, besides adding more space to the log, is removing a problematic secondary node from the Availability Group. I’ll discuss Availability Groups in more detail in the next chapter.

DATABASE_MIRRORING Log Reuse Wait

The DATABASE_MIRRORING log reuse wait occurs in systems that use database mirroring technology. This technology was a predecessor of AlwaysOn Availability Groups and behaves similarly to it, communicating through the stream of log records.

I’m not going to discuss how to troubleshoot database mirroring in this book, since that technology has long been obsolete. Conceptually, it is similar to troubleshooting Availability Groups issues.

As with AVAILABILITY_REPLICA reuse waits, analyze the health of database mirroring when you see a DATABASE_MIRRORING reuse wait.

REPLICATION Log Reuse Wait

The REPLICATION log reuse wait occurs when the Log Reader agent falls behind while harvesting log records for transactional replication or Change Data Capture (CDC) processes. When you see this log reuse wait, check the status of the Log Reader agent and address the issues you discover.

One particular issue you may experience is the Log Agent query timing out. By default, it is 30 minutes, which is sufficient in most cases. However, it may not be enough when system processes very large data modifications (millions of rows) in the replicated tables. You can increase the QueryTimeout in the Log Agent profile if this is the case.

There are two “nuclear” options: you can remove the replication or mark log records as harvested by using the sp_repldone command. Both approaches may require you to reinitialize the replication later.

ACTIVE_BACKUP_OR_RESTORE Log Reuse Wait

The ACTIVE_BACKUP_OR_RESTORE reuse wait indicates that the log cannot be truncated due to active database backup or restore processes, regardless of what type of backup or restore is running.

One of the common cases for this wait is degraded performance of network or backup storage during the large FULL backup. Check the status of the active backup and restore jobs when you see this reuse wait.

Other Mitigation Strategies

It isn’t always possible to mitigate the root cause of an issue quickly. Sometimes it isn’t even feasible. For example, removing unavailable replica from Availability Group or disabling replication may lead to significant work rebuilding them later.

You can add another log file or expand the size of the log drive as a temporary solution. This allows the database to operate and gives you some time to mitigate the root cause of the issue.

Remember that you could have multiple issues preventing transaction log truncation simultaneously. For example, a network outage could prevent the server from communicating with Availability Group replicas and from accessing the backup destination. Check the log_reuse_wait_desc value and the amount of free space in the log file after you address each issue.

Finally, learn from the experience. Transaction Log Full issues are serious, and you should avoid it at all costs. Do the root-cause analysis, evaluate your monitoring strategy, and perform capacity planning to reduce the possibility of this happening again.

Transaction Log Throughout

The impact of bad transaction log throughput is not always visible. While any operation that changes something in the database writes to transaction log, those writes are considered part of the operation. Engineers tend to look at the “big picture” and performance of an entire operation as a whole, overlooking the impact of individual components.

Think about index maintenance, for example. This is an extremely log-intensive operation and bad log throughput greatly affects its performance. Nevertheless, database administrators usually try to reduce index maintenance impact and duration by adjusting its schedule or excluding indexes from the maintenance, overlooking slow log writes. (There are some exceptions; however, they are few and far between.)

It is also easy to overlook the impact of bad transaction log throughput on the regular workload in OLTP systems. High log write latency increases queries execution time, though people rarely look at it during query tuning. In either case, improving transaction log performance always improves system performance.

A word of caution, though: While improving transaction log performance is always beneficial, it is not a magic solution to every problem. Nor will impact always be visible to users. You may get better ROI from addressing other bottlenecks first.

From a wait statistics standpoint, you can look at WRITELOG and LOGBUFFER waits: as I’ve mentioned, WRITELOG occurs when SQL Server waits for the log write to complete. LOGBUFFER happens when SQL Server does not have the available log buffer to cache the log records or can’t flush log blocks to disk fast enough.

I wish I could tell you an exact percentage threshold when those waits start to represent a problem, but that would be impossible. You’ll always see them in the system, but you need to look at general I/O health and throughput to estimate their impact. You’ll also often see WRITELOG wait together with other I/O waits (PAGEIOLATCH, etc), especially when log and data files are sharing the same physical storage and network I/O path. In most cases, this is the sign that the I/O subsystem is either overloaded or configured incorrectly.

As Chapter 3 discussed, the sys.dm_io_virtual_file_stats view provides information about database files latency and throughput. I generally like to see an average write latency in the log files of within 1 to 3 milliseconds when network-based storage is used.

In high-end OLTP systems, you can place log files to DAS NMVe drives, which should bring the latency into the sub-millisecond range. In some edge cases, you can also utilize persistent memory technologies to reduce latency even further.

Pay attention to the average size of log writes. Small writes are less efficient and may impact log throughput. In most cases, those writes are a sign of autocommitted transactions. You can reduce them with proper transaction management or by enabling delayed durability (in cases when you can tolerate a small amount of data loss).

There are several performance counters in the Databases object that you can use to monitor transaction log activity in real time. These include:

- Log Bytes Flushed/sec

-

The Log Bytes Flushed/sec counter shows how much data has been written to the log file.

- Log Flushes/sec

-

The Log Flushes/sec counter indicates how many log write operations have been performed every second. You can use it with the Log Bytes Flushed/sec counter to estimate the average log write size.

- Log Flush Write Time(ms)

The Log Flush Write Time(ms) counter shows the average time of a log write operation. You can use it to see log write I/O latency in real time.

- Log Flush Waits/sec

The Log Flush Waits/sec counter provides the number of commit operations per second while waiting for the log records to be flushed. Ideally, this number should be very low. High values indicate a log-throughput bottleneck.

Keep in mind that log-intensive operations may dramatically change the numbers. For example, an unthrottled index rebuild can generate an enormous number of log records very quickly, saturating your I/O subsystem and log throughput.

I like to run a SQL Agent job and collect data from the sys.dm_io_virtual_file_stats view every 5 to 15 minutes, then persist it in the DBA utility database. This provides detailed information about I/O workload overtime. This information is extremely useful when you’re analyzing transaction-log throughput.

In either case, you may need to look at a few different areas to improve transaction log performance and throughput. First, analyze the hardware – it is essential to use a fast disk subsystem with the log files. Next, look at the log configuration, and try to reduce the overhead of large number of VLFs and growth management. Finally, look for opportunities to reduce log generation through proper transaction management and efficient database maintenance jobs.

Summary

SQL Server uses Write-Ahead Logging to support database consistency requirements. Log records fare hardened to the log file before transactions are committed. Insufficient transaction log throughput impacts system performance. Put transaction logs to the fast low-latency storage when possible.

Transaction log throughput issues present themselves with WRITELOG and LOGBUFFER waits. Troubleshoot log performance when these waits become noticeable. You can use sys.dm_io_virtual_file_stats view and performance counters for troubleshooting.

You can improve transaction log performance by reducing the databases’ log generation rate. It can be done by tuning index maintenance strategy, removing unnecessary indexes, refactoring database schema, and improving transaction management. You can enable delayed durability for databases that handle large numbers of autocommitted transactions and can sustain small data losses.

Make sure that the log file is properly configured and the number of VLFs in the file is manageable. Consider rebuilding the log if you detect suboptimal configuration.

In the next chapter, we’ll talk about AlwaysOn Availability Groups and the issues you may encounter when using them.

Troubleshooting Checklist

Review and adjust transaction log configuration for the databases

Analyze number of VLFs and rebuild logs if needed

Check databases’ recovery model and discuss disaster recovery strategy with stakeholders

Reduce transaction log generation rate when possible

Analyze and improve transaction log throughput