Chapter 2. The Ecosystem

In this chapter we will explore the current serverless computing offerings and the wider ecosystem. We’ll also try to determine whether serverless computing only makes sense in the context of a public cloud setting or if operating and/or rolling out a serverless offering on-premises also makes sense.

Overview

At the time of writing this report (mid-2016), many of the serverless offerings are rather new, and the space is growing quickly.

Table 2-1 gives a brief comparison of the main players. More detailed breakdowns are provided in the following sections.

| Offering | Cloud offering | On-premises | Launched | Environments |
|---|---|---|---|---|
| AWS Lambda | Yes | No | 2014 | Node.js, Python, Java |
| Azure Functions | Yes | Yes | 2016 | C#, Node.js, Python, F#, PHP, Java |
| Google Cloud Functions | Yes | No | 2016 | JavaScript |
| Iron.io | No | Yes | 2012 | Ruby, PHP, Python, Java, Node.js, Go, .NET |
| Galactic Fog’s Gestalt | No | Yes | 2016 | Java, Scala, JavaScript, .NET |
| IBM OpenWhisk | Yes | Yes | 2014 | Node.js, Swift |

Note that by “cloud offering,” I mean that a managed offering is available in one of the public clouds, typically with a pay-as-you-go model attached.

AWS Lambda

Introduced in 2014 in an AWS re:Invent keynote, AWS Lambda is the incumbent in the serverless space and makes up an ecosystem in its own right, including frameworks and tooling built on top of it by folks outside of Amazon. Interestingly, the motivation to introduce Lambda originated in observations of EC2 usage: the AWS team noticed that more and more event-driven workloads were being deployed, such as infrastructure tasks (log analytics) or batch processing jobs (image manipulation and the like). AWS Lambda started out with support for the Node.js runtime and currently supports Node.js 4.3, Python 2.7, and Java 8.

The main building blocks of AWS Lambda are:

-

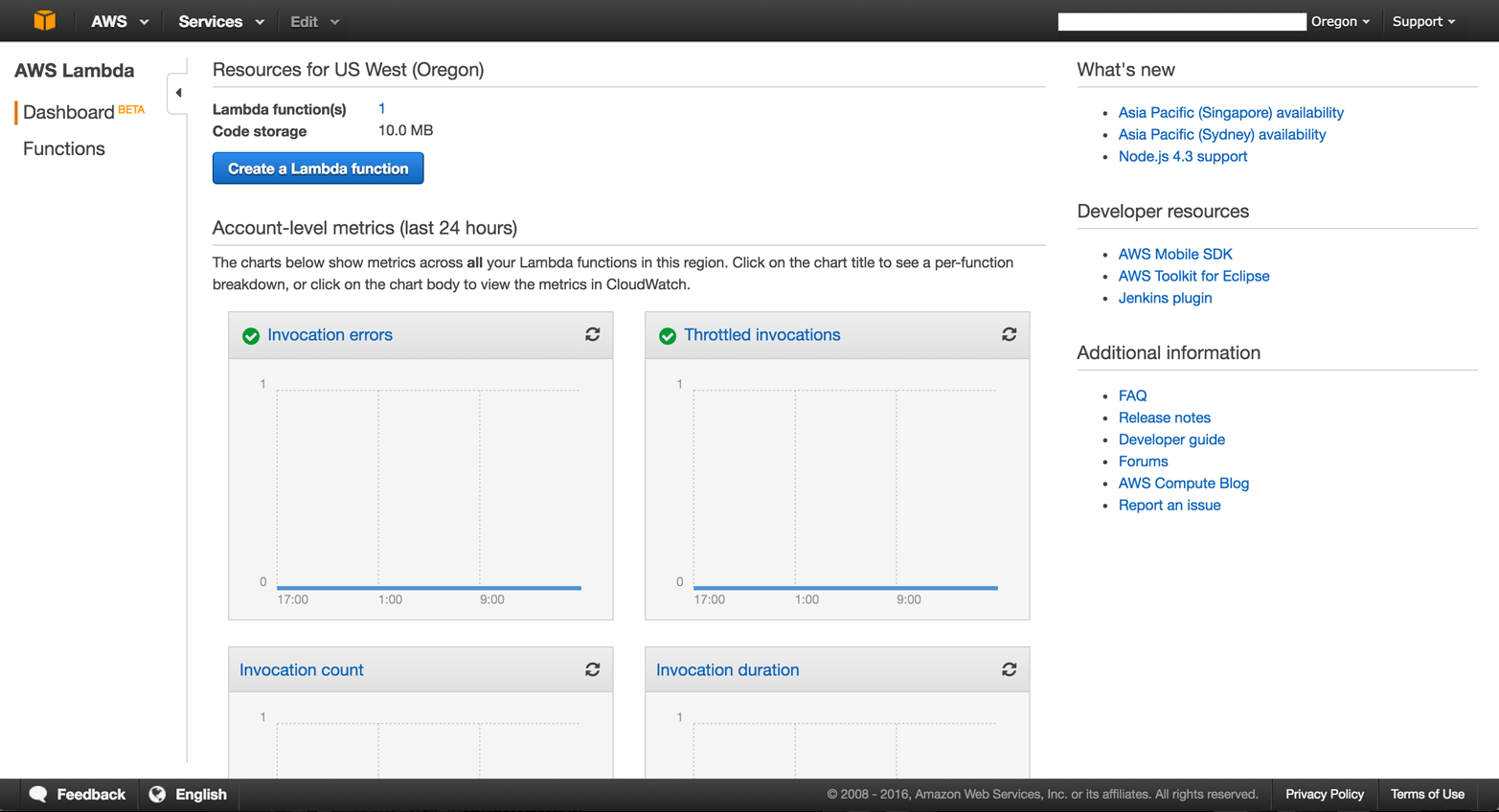

The AWS Lambda Web UI (see Figure 2-1) and CLI itself to register, execute, and manage functions

-

Event triggers, including, but not limited to, events from S3, SNS, and CloudFormation to trigger the execution of a function

-

CloudWatch for logging and monitoring

Figure 2-1. AWS Lambda dashboard
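To make these building blocks more concrete, here is a minimal sketch of a Python handler wired to an S3 event trigger, with its log output going to CloudWatch. It assumes Python 3 (the 2016 runtime listed above is Python 2.7) and a handler configured under the name `handler`; the bucket and key in the local smoke test are hypothetical. The fields it reads (`Records`, `s3.bucket.name`, `s3.object.key`) follow the S3 event notification format.

```python
import json
import logging
from urllib.parse import unquote_plus

# In the Lambda Python runtime, records emitted through the standard
# logging module end up in CloudWatch Logs.
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def handler(event, context):
    """Entry point Lambda calls with the trigger's event and a context object."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (for example, spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        logger.info("New object s3://%s/%s", bucket, key)
        processed.append(f"{bucket}/{key}")
    return {"processed": processed}


if __name__ == "__main__":
    # Local smoke test with a trimmed-down, hypothetical S3 event; context is unused.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "my-uploads"},
                    "object": {"key": "photos/cat+picture.jpg"}}}
        ]
    }
    print(json.dumps(handler(sample_event, None)))
```

The handler only depends on the event dictionary, which makes it easy to exercise locally before registering it through the web UI or CLI.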

Pricing

Pricing of AWS Lambda is based on the total number of requests as well as execution time. The first 1 million requests per month are free; after that, it’s $0.20 per 1 million requests. In addition, the free tier includes 400,000 GB-seconds of computation time per month. The minimal duration you’ll be billed for is 100 ms, and the actual costs are determined by the amount of RAM you allocate to your function (with a minimum of 128 MB).
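The pricing rules above can be turned into a small estimator. This is a sketch, not an official calculator: the per-GB-second compute rate is not stated in the text, so the value used below ($0.00001667) is an assumption to check against the current AWS price list, as is rounding each invocation's duration up to the next 100 ms.

```python
import math

# Figures from the text: 1M free requests per month, $0.20 per additional 1M,
# 400,000 free GB-seconds per month, 100 ms minimum billing, 128 MB minimum RAM.
FREE_REQUESTS = 1_000_000
PRICE_PER_MILLION_REQUESTS = 0.20
FREE_GB_SECONDS = 400_000
MIN_MEMORY_MB = 128
BILLING_INCREMENT_MS = 100  # assumption: durations rounded up to 100 ms steps
PRICE_PER_GB_SECOND = 0.00001667  # assumption: not given in the text


def lambda_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate one month's AWS Lambda bill in US dollars."""
    memory_mb = max(memory_mb, MIN_MEMORY_MB)
    steps = max(math.ceil(avg_duration_ms / BILLING_INCREMENT_MS), 1)
    billed_ms = steps * BILLING_INCREMENT_MS
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)

    request_cost = max(requests - FREE_REQUESTS, 0) / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = max(gb_seconds - FREE_GB_SECONDS, 0) * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


print(f"${lambda_monthly_cost(5_000_000, 200, 256):.2f}")  # $0.80
```

In this example, 5 million invocations of a 256 MB function running 200 ms each use 250,000 GB-seconds, which stays within the free compute tier, so only the 4 million billable requests cost anything.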

Availability

Lambda has been available since 2014 and is a public cloud–only offering.

We will have a closer look at the AWS Lambda offering in Chapter 4, where we will walk through an example from end to end.

Azure Functions

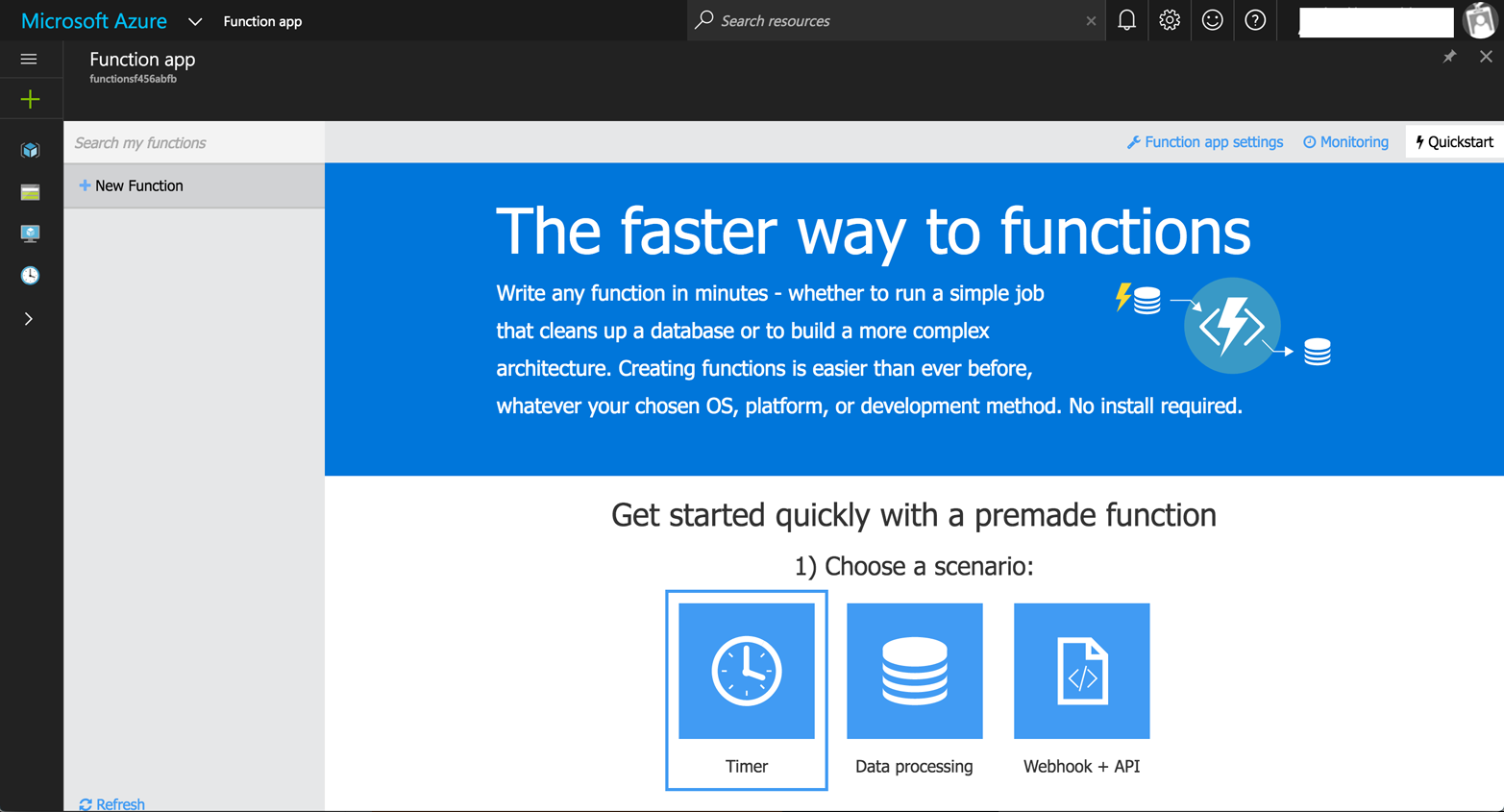

During the Build 2016 conference Microsoft released Azure Functions, supporting functions written with C#, Node.js, Python, F#, PHP, batch, bash, Java, or any executable. The Functions runtime is open source and integrates with Azure-internal and -external services such as Azure Event Hubs, Azure Service Bus, Azure Storage, and GitHub webhooks. The Azure Functions portal, depicted in Figure 2-2, comes with templates and monitoring capabilities.

Figure 2-2. Azure Functions portal

As an aside, Microsoft also offers other serverless solutions, such as Azure WebJobs and Microsoft Flow (a business-oriented competitor to IFTTT, “if this, then that”).

Pricing

Pricing of Azure Functions is similar to that of AWS Lambda; you pay based on code execution time and number of executions, at a rate of $0.000008 per GB-second and $0.20 per 1 million executions. As with Lambda, the free tier includes 400,000 GB-seconds and 1 million executions.
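Plugging the Azure rates quoted above into a small estimate gives a quick worked example. The sketch ignores any rounding of duration or memory the service may apply, and the workload figures in the usage line are hypothetical.

```python
# Rates and free tier as quoted in the text.
FREE_EXECUTIONS = 1_000_000
FREE_GB_SECONDS = 400_000
PRICE_PER_MILLION_EXECUTIONS = 0.20
PRICE_PER_GB_SECOND = 0.000008


def azure_functions_monthly_cost(executions: int, avg_seconds: float, memory_gb: float) -> float:
    """Estimate a month's Azure Functions bill in US dollars (no rounding applied)."""
    gb_seconds = executions * avg_seconds * memory_gb
    execution_cost = (max(executions - FREE_EXECUTIONS, 0) / 1_000_000
                      * PRICE_PER_MILLION_EXECUTIONS)
    compute_cost = max(gb_seconds - FREE_GB_SECONDS, 0) * PRICE_PER_GB_SECOND
    return execution_cost + compute_cost


# 3 million one-second executions at 0.5 GB: 1.5M GB-seconds, 1.1M of them billable.
print(f"${azure_functions_monthly_cost(3_000_000, 1.0, 0.5):.2f}")  # $9.20
```

Of the $9.20, $8.80 is compute time and $0.40 covers the 2 million executions beyond the free tier.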

Availability

Since early 2016, the Azure Functions service has been available both as a public cloud offering and on-premises as part of the Azure Stack.

Google Cloud Functions

Google Cloud Functions can be triggered by messages on a Cloud Pub/Sub topic or through mutation events on a Cloud Storage bucket (such as a new object being written to the bucket). For now, the service only supports Node.js as the runtime environment. Using Cloud Source Repositories, you can deploy Cloud Functions directly from your GitHub or Bitbucket repository without needing to upload code or manage versions yourself. Logs emitted are automatically written to Stackdriver Logging, and performance telemetry is recorded in Stackdriver Monitoring.

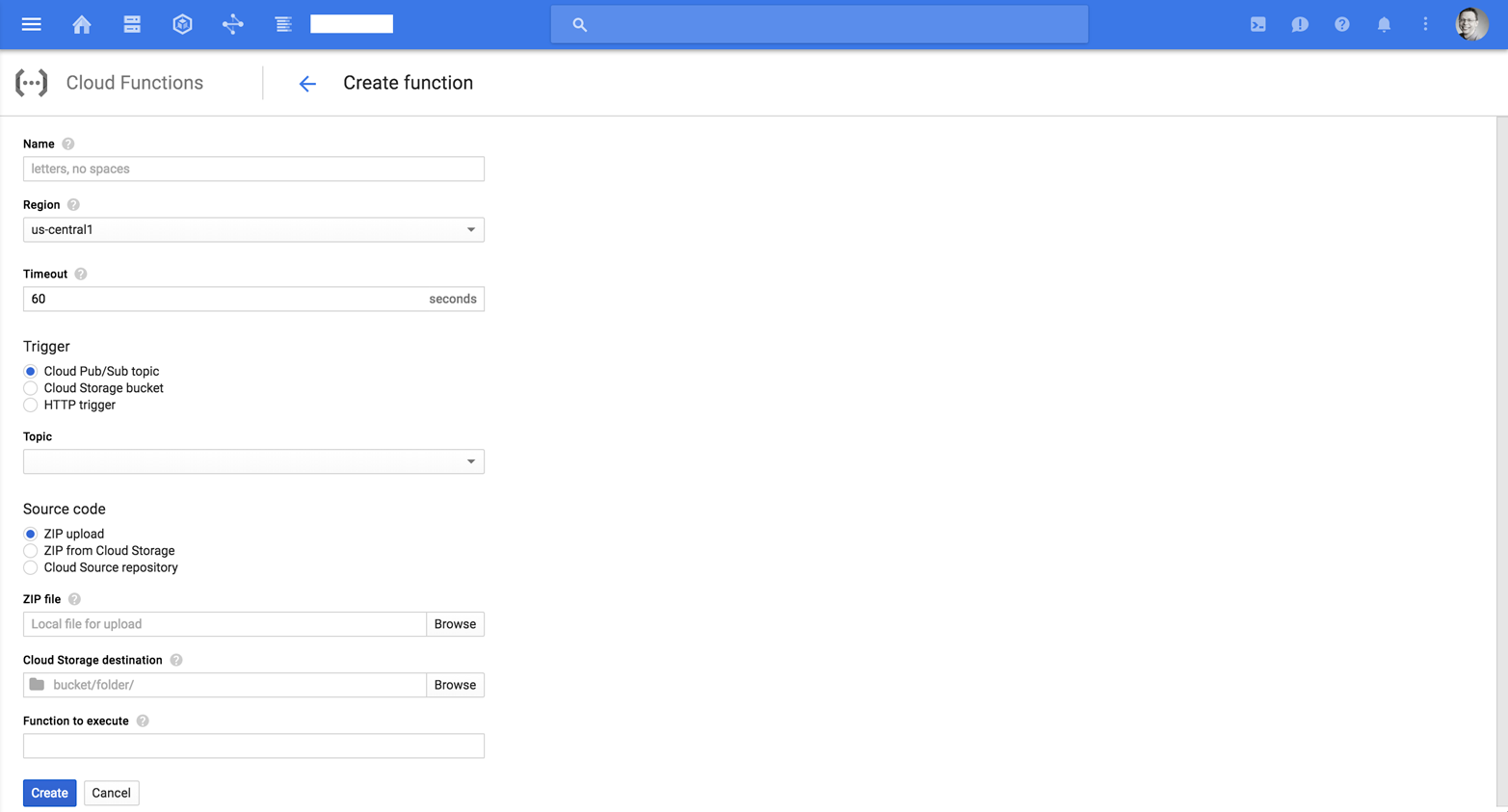

Figure 2-3 shows the Google Cloud Functions view in the Google Cloud console. Here you can create a function, including defining a trigger and source code handling.

Figure 2-3. Google Cloud Functions

Pricing

Since the Google Cloud Functions service is in Alpha, no pricing has been disclosed yet. However, we can assume that it will be priced competitively with the incumbent, AWS Lambda.

Availability

Google introduced Cloud Functions in February 2016. At the time of writing, it’s in Alpha status with access on a per-request basis and is a public cloud–only offering.

Iron.io

Iron.io has supported serverless concepts and frameworks since 2012. Some of its early offerings, such as IronQueue, IronWorker, and IronCache, encouraged developers to bring their code and run it on the Iron.io-managed platform hosted in the public cloud. Iron.io, whose platform is written in Go, recently embraced Docker and integrated the existing services to offer a cohesive microservices platform. Codenamed Project Kratos, the serverless computing framework from Iron.io aims to bring the AWS Lambda model to enterprises without the vendor lock-in.

Figure 2-4 depicts the overall Iron.io architecture; notice the use of containers and container images.

Figure 2-4. Iron.io architecture

Pricing

No public plans are available, but you can use the offering via a number of deployment options, including Microsoft Azure and DC/OS.

Availability

Iron.io has offered its services since 2012, with a recent update around containers and supported environments.

Galactic Fog’s Gestalt

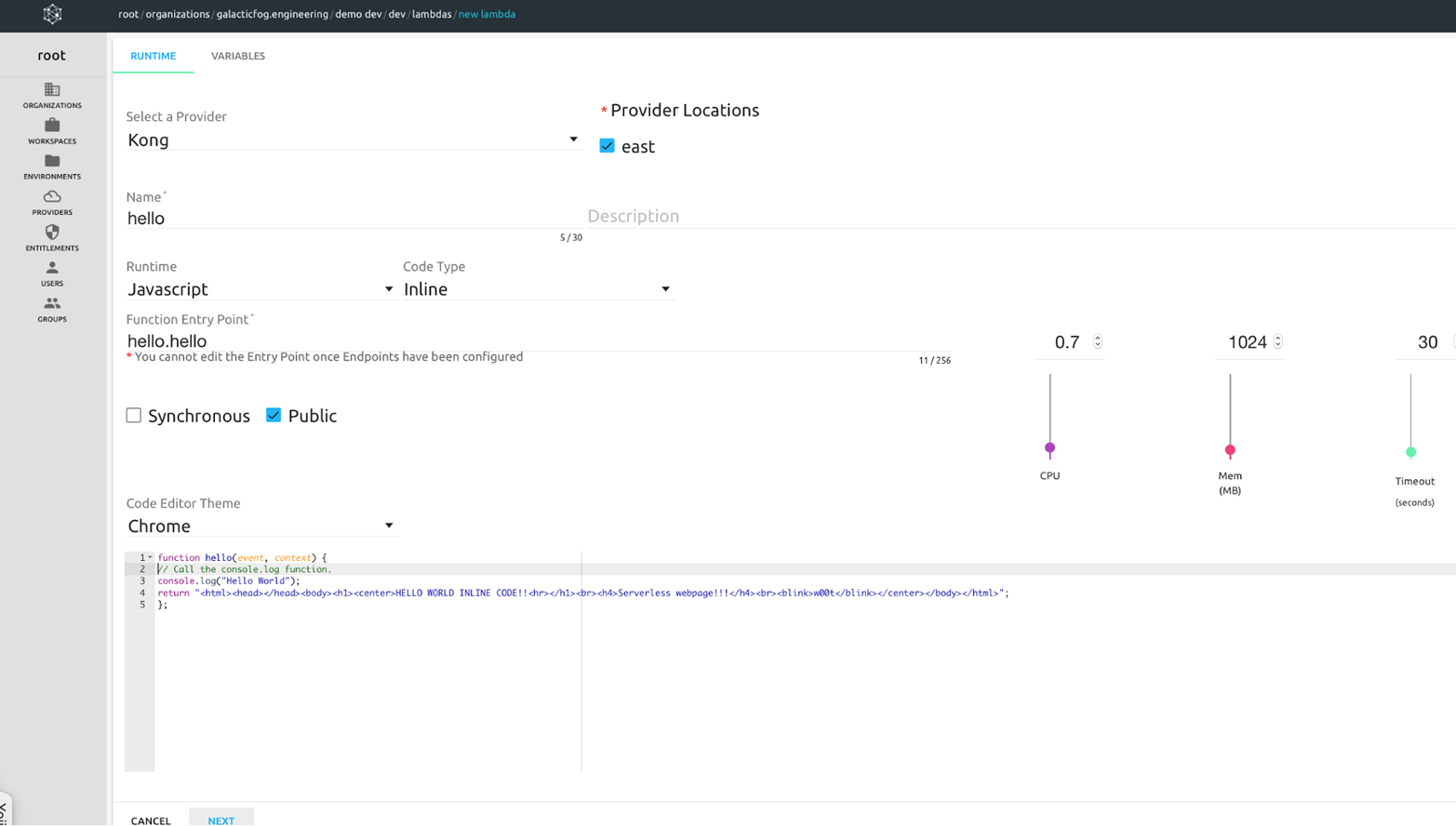

Gestalt (see Figure 2-5) is a serverless offering that bundles containers with security and data features, allowing developers to write and deploy microservices on-premises or in the cloud.

Figure 2-5. Gestalt Lambda

Pricing

No public plans are available.

Availability

Launched in mid-2016, the Gestalt Framework is deployed using DC/OS and is suitable for cloud and on-premises deployments; no hosted service is available yet.

See the MesosCon 2016 talk “Lambda Application Servers on Mesos” by Brad Futch for details on the current state as well as the upcoming rewrite of Gestalt Lambda, called LASER.

IBM OpenWhisk

IBM OpenWhisk is an open source alternative to AWS Lambda. As well as supporting Node.js, OpenWhisk can run snippets written in Swift. You can install it on your local machine running Ubuntu. The service is integrated with IBM Bluemix, the PaaS environment powered by Cloud Foundry. Apart from invoking Bluemix services, the framework can be integrated with any third-party service that supports webhooks. Developers can use a CLI to target the OpenWhisk framework.

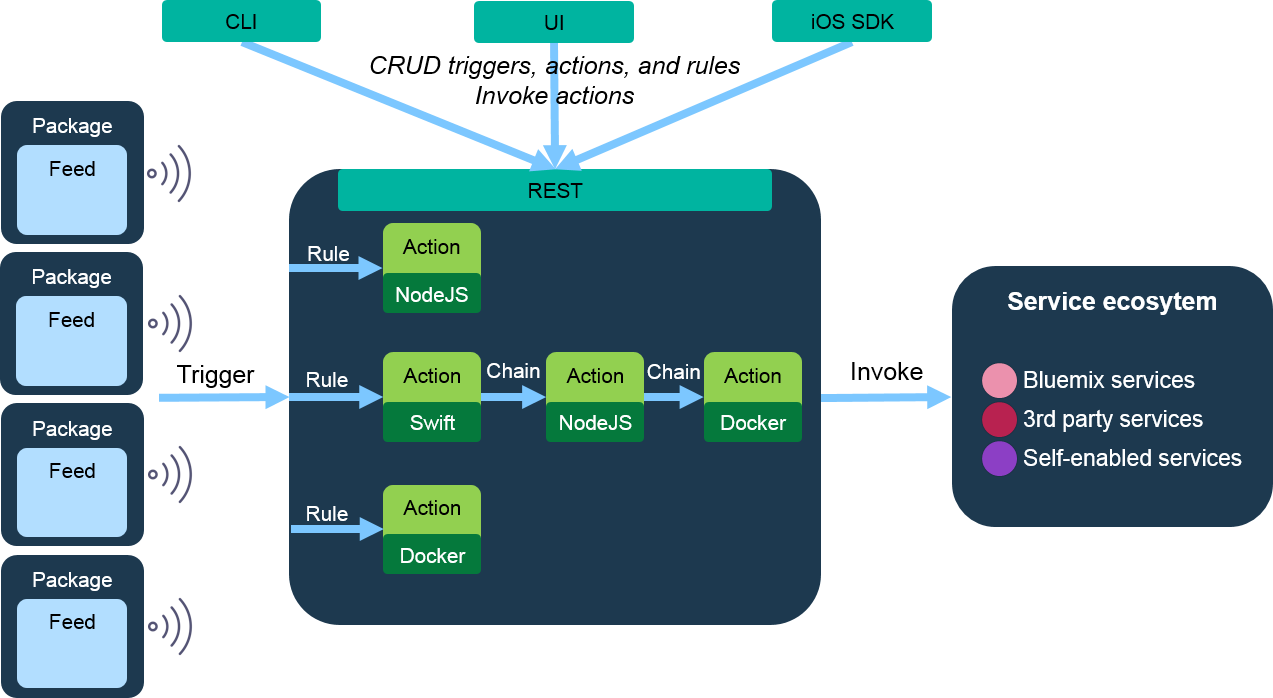

Figure 2-6shows the high-level architecture of OpenWhisk, including the trigger, management, and integration point options.

Figure 2-6. OpenWhisk architecture

Pricing

Costs are determined by Bluemix pricing, at a rate of $0.0288 per GB-hour of RAM and $2.06 per public IP address. The free tier includes 365 GB-hours of RAM, 2 public IP addresses, and 20 GB of external storage.
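Because Bluemix bills RAM by the GB-hour, cost depends on how much memory is held and for how long. The sketch below applies the rates and free tier quoted above; treating the free allowance as covering a single billing period and the per-IP charge as a flat fee are assumptions, since the text does not state the billing periods.

```python
# Rates and free tier as quoted in the text.
PRICE_PER_GB_HOUR = 0.0288
FREE_GB_HOURS = 365
PRICE_PER_PUBLIC_IP = 2.06  # assumption: flat charge per IP for the period
FREE_PUBLIC_IPS = 2


def bluemix_cost(memory_gb: float, hours: float, public_ips: int = 0) -> float:
    """Estimate the RAM and public IP charges for one billing period in US dollars."""
    gb_hours = memory_gb * hours
    ram_cost = max(gb_hours - FREE_GB_HOURS, 0) * PRICE_PER_GB_HOUR
    ip_cost = max(public_ips - FREE_PUBLIC_IPS, 0) * PRICE_PER_PUBLIC_IP
    return ram_cost + ip_cost


print(f"${bluemix_cost(1.0, 720):.2f}")  # $10.22
print(f"${bluemix_cost(0.5, 720):.2f}")  # $0.00
```

Holding 1 GB for a 720-hour (30-day) month uses 720 GB-hours, 355 of them billable; halving the memory to 0.5 GB (360 GB-hours) stays inside the free tier.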

Availability

Since 2014, OpenWhisk has been available as a hosted service via Bluemix and for on-premises deployments with Bluemix as a dependency.

See “OpenWhisk: a world first in open serverless architecture?” for more details on the offering.

Other Players

In the past few years, the serverless space has seen considerable uptake, not only in terms of end users but also in terms of providers. Some of the new offerings are open source, some leverage or extend existing offerings, and some are specialized offerings from existing providers. They include:

- OpenLambda, an open source serverless computing platform
- Nano Lambda, an automated computing service that runs and scales your microservices
- Webtask by Auth0, a serverless environment supporting Node.js with a focus on security
- Serverless Framework, an application framework for building web, mobile, and Internet of Things (IoT) applications powered by AWS Lambda and AWS API Gateway, with plans to support other providers, such as Azure and Google Cloud
- IOpipe, an analytics and distributed tracing service that allows you to see inside AWS Lambda functions for better insights into the daily operations

Cloud or On-Premises?

A question that often arises is whether serverless computing only makes sense in the context of a public cloud setting, or if rolling out a serverless offering on-premises also makes sense. To answer this question, we will discuss elasticity features, as well as dependencies introduced when using a serverless offering.

So, which one is the better option? A public cloud offering such as AWS Lambda, or one of the existing open source projects, or your home-grown solution on-premises? As with any IT question, the answer depends on many things, but let’s have a look at a number of considerations that have been brought up in the community and may be deciding factors for you and your organization.

One big factor that speaks for using one of the (commercial) public cloud offerings is the ecosystem. Look at the supported events (triggers) as well as the integrations with other services, such as S3, Azure SQL Database, and monitoring and security features. Given that the serverless offering is just one tool in your toolbelt, and you might already be using one or more offerings from a certain cloud provider, the ecosystem is an important point to consider.

Oftentimes the argument is put forward that true autoscaling of the functions only applies to public cloud offerings. While this is not black and white, there is some truth to this claim: the elasticity of the underlying IaaS offerings of public cloud providers will likely outperform whatever you can achieve in your datacenter. This is, however, mainly relevant for very spiky or unpredictable workloads, since you can certainly add virtual machines (VMs) in an on-premises setup in a reasonable amount of time, especially when you know in advance that you’ll need them.
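To make the elasticity argument concrete, Little’s law (average executions in flight = arrival rate × average duration) gives the concurrency a workload needs, and from that the number of VMs an on-premises setup must provide. The helper names and all figures below, including 20 concurrent executions per VM, are hypothetical.

```python
import math


def concurrent_executions(requests_per_second: float, avg_duration_s: float) -> float:
    """Little's law: average number in flight = arrival rate x time in system."""
    return requests_per_second * avg_duration_s


def vms_needed(requests_per_second: float, avg_duration_s: float, slots_per_vm: int) -> int:
    """VMs required if each VM can run `slots_per_vm` functions concurrently."""
    in_flight = concurrent_executions(requests_per_second, avg_duration_s)
    return max(1, math.ceil(in_flight / slots_per_vm))


# Hypothetical spiky workload: 50 req/s most of the day, bursts of 2,000 req/s.
baseline = vms_needed(50, 0.25, slots_per_vm=20)
peak = vms_needed(2000, 0.25, slots_per_vm=20)
print(baseline, peak)  # 1 25
```

A burst from 50 to 2,000 requests per second takes this cluster from 1 to 25 VMs. On-premises you either keep those 24 extra VMs around or add them quickly enough when the burst arrives, which is manageable when the burst is scheduled and hard when it is not.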

Avoiding lock-in is probably the strongest argument against public cloud serverless deployments, not so much in terms of the actual code (migrating this from one provider to another is a rather straightforward process) but more in terms of the triggers and integration points. At the time of writing, there is no good abstraction layer that hides the differences between storage or database services, or that works around triggers that are available in one offering but not another.
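To illustrate what such an abstraction would have to do, the sketch below normalizes two storage notifications into one provider-neutral type so that the business logic is written once. All names (`StorageEvent`, `from_s3_record`, `from_gcs_object`, `make_thumbnail_path`) are hypothetical. The S3 fields follow the S3 event notification format, while the Cloud Storage payload shape (an object resource with `bucket` and `name` fields) is an assumption.

```python
from dataclasses import dataclass
from urllib.parse import unquote_plus


@dataclass(frozen=True)
class StorageEvent:
    """Provider-neutral view of 'an object landed in a bucket' (hypothetical)."""
    provider: str
    bucket: str
    key: str


def from_s3_record(record: dict) -> StorageEvent:
    # S3 notification records nest bucket and key under "s3"; keys are URL-encoded.
    return StorageEvent("aws", record["s3"]["bucket"]["name"],
                        unquote_plus(record["s3"]["object"]["key"]))


def from_gcs_object(obj: dict) -> StorageEvent:
    # Assumed shape: Cloud Storage object metadata with "bucket" and "name" fields.
    return StorageEvent("gcp", obj["bucket"], obj["name"])


def make_thumbnail_path(event: StorageEvent) -> str:
    """Business logic written once against the neutral type (hypothetical)."""
    filename = event.key.rsplit("/", 1)[-1]
    return f"{event.bucket}/thumbnails/{filename}"


s3_event = from_s3_record({"s3": {"bucket": {"name": "pics"},
                                  "object": {"key": "2016/cat.jpg"}}})
gcs_event = from_gcs_object({"bucket": "pics", "name": "2016/cat.jpg"})
print(make_thumbnail_path(s3_event), make_thumbnail_path(gcs_event))
```

Note that this only covers the easy part, the shape of the payload. Which triggers exist, and how they are wired up, remains specific to each provider, and that is where the lock-in lies.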

Another consideration is that when you deploy the serverless infrastructure in your datacenter, you have full control over, for example, how long a function can execute. At the time of writing, the public cloud offerings do not disclose details about the underlying implementation, resulting in a lot of guesswork and trial and error when it comes to optimizing the operation. With an on-premises deployment you can go as far as developing your own solution, as discussed in Appendix A; however, you should be aware of the investment (both in terms of development and operations) that is required with this option.
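As an illustration of that control, an on-premises runner can enforce whatever execution limit you choose. The sketch below runs each invocation as a child process via Python’s `subprocess.run` and its `timeout` argument; the `run_function` helper, its result dictionary, and the limits used are hypothetical.

```python
import subprocess
import sys
from typing import List


def run_function(cmd: List[str], timeout_s: float) -> dict:
    """Run one function invocation as a child process with a hard time limit."""
    try:
        completed = subprocess.run(
            cmd, capture_output=True, text=True, timeout=timeout_s
        )
        return {"status": "ok", "exit_code": completed.returncode,
                "stdout": completed.stdout}
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before re-raising TimeoutExpired.
        return {"status": "timeout", "exit_code": None, "stdout": ""}


if __name__ == "__main__":
    fast = run_function([sys.executable, "-c", "print('hello')"], timeout_s=5)
    slow = run_function([sys.executable, "-c", "import time; time.sleep(10)"],
                        timeout_s=0.5)
    print(fast["status"], fast["stdout"].strip(), slow["status"])  # ok hello timeout
```

Here the limit is an ordinary parameter you can tune per function, rather than a provider-imposed constant you have to discover by trial and error.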

Table 2-2 summarizes the criteria discussed in the previous paragraphs.

| Criterion | Cloud | On-premises |
|---|---|---|
| Ecosystem | Yes | No |
| True autoscaling | Yes | No |
| Avoiding lock-in | No | Yes |
| End-to-end control | No | Yes |

Note that depending on what is important to your use case, you’ll rank different aspects higher or lower; my intention here is not to categorize these features as positive or negative but simply to point out potential criteria you might want to consider when making a decision.
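One way to make such a ranking explicit is a simple weighted score over the criteria in the comparison table above. The `score` helper and the weights below are hypothetical and stand for one possible set of priorities; with different weights the outcome flips.

```python
# Criteria and Yes/No values from the comparison table (True = option provides it).
CRITERIA = {
    "ecosystem":          {"cloud": True,  "on_premises": False},
    "true_autoscaling":   {"cloud": True,  "on_premises": False},
    "avoiding_lock_in":   {"cloud": False, "on_premises": True},
    "end_to_end_control": {"cloud": False, "on_premises": True},
}


def score(weights: dict) -> dict:
    """Sum the weights of the criteria each option satisfies."""
    totals = {"cloud": 0, "on_premises": 0}
    for criterion, weight in weights.items():
        for option, provided in CRITERIA[criterion].items():
            if provided:
                totals[option] += weight
    return totals


# Hypothetical weights for a team that already relies heavily on one cloud provider.
print(score({"ecosystem": 5, "true_autoscaling": 3,
             "avoiding_lock_in": 2, "end_to_end_control": 1}))
# {'cloud': 8, 'on_premises': 3}
```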

Conclusion

In this chapter, we looked at the current state of the serverless ecosystem, from the incumbent AWS Lambda to emerging open source projects such as OpenLambda. Further, we discussed the topic of using a serverless offering in the public cloud versus operating (and potentially developing) one on-premises based on decision criteria such as elasticity and integrations with other services such as databases. Next we will discuss serverless computing from an operations perspective and explore how the traditional roles and responsibilities change when applying the serverless paradigm.