In The Age of AI: And Our Human Future, Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher define AI as machines that can perform tasks that require human-level intelligence. AI, powered by new algorithms and increasingly plentiful and inexpensive computing power, is becoming ubiquitous.

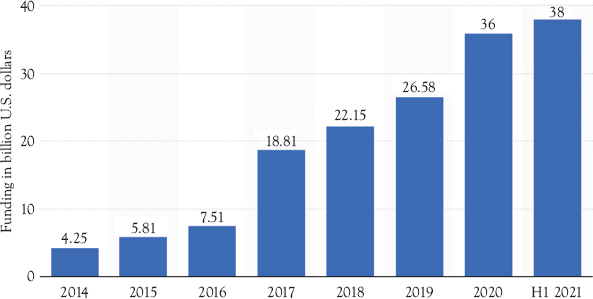

According to Statista, the amount of money invested annually in AI start-up companies worldwide increased continuously from 2014 to 2021. In 2020, AI start-ups attracted around 36 billion U.S. dollars in investment. In the first six months of 2021 alone, investment surpassed that figure, reaching 38 billion U.S. dollars.

Source: Statista, March 17, 2022. Funding of AI start-up companies worldwide from 2014 to 2021 (in billions U.S. dollars).

Generative AI—One of the Top Strategic Technologies in 2022

Generative AI was listed by Gartner as one of the top strategic technologies in 2022 in a report released on October 18, 2021.

Explaining Gartner 2022 top strategic technology: Generative AI

What Is Generative AI?

Interest in generative AI has recently exploded online in the United States, the UK, and India. So what exactly is it?

Generative AI is simply a technique in which a computer uses existing text, audio files, or images to create new and similar content.

Source: Gartner, top strategic technology trends for 2022.

Can Content Generated by Artificial Intelligence Look Like Real Content?

Let’s first take a look at a video released in February 2021 (see the video at the end of the section). Is the Tom Cruise in the video a real person? This video has more than five million views and is considered by many super fans to be a trailer for a new movie that Tom Cruise is about to shoot.

Source: Theverge.com, TikTok Tom Cruise deepfake creator; left: Miles Fisher, right: Tom Cruise

This kind of video, called a deepfake, replaces a person’s face in the video with the face of a celebrity. Achieving this effect requires a deep learning model. The model is trained on as many photos of the celebrity as possible, covering various expressions from all angles, until it can reproduce all of the celebrity’s expressions.

The resemblance is so close because the videos were made by Miles Fisher (known as the world’s best Tom Cruise lookalike) in late 2020. He has gained millions of fans and hundreds of millions of views as a result.

How Is Generative AI Achieved?

Before answering this question, let’s take a look at another example of generative AI. Here are three photos. Guess which ones were generated by AI.

Photo 1

Photo 2

Photo 3

Source: ThisPersonDoesNotExist.com

All three photos were generated by AI. Did you guess it? There is a website called ThisPersonDoesNotExist.com where every time the page is refreshed, a photo of a person who does not exist in reality appears.

Here I introduce a concept—generative adversarial networks (GANs). In 2014, researchers used computers for the first time to create realistic human faces using GANs. How was this achieved?

The researchers set up an adversarial game in which two AIs play against each other to create the most realistic synthetic content. As the two AIs compete, one (the generator) tries to produce the best image and the other (the discriminator) tries to tell generated images from real ones, exposing where the generated image could be made more realistic. As a result, the quality of the generated images improves continuously.
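The two-player game can be sketched in a few lines of Python. This is a toy under stated assumptions, not the model the 2014 researchers built: the "images" are single numbers clustered around 4.0, the generator is a two-parameter linear function, the discriminator is a single logistic unit, and every name and setting here (`train_toy_gan`, `REAL_MEAN`, the learning rate, the step count) is a hypothetical choice made for illustration.

```python
import math
import random

REAL_MEAN = 4.0  # assumed "real data": numbers clustered around 4.0


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.1, 0.0  # discriminator: p(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(REAL_MEAN, 0.5)
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b

        # Discriminator step: raise p(real) on real data, lower it on fakes.
        p_real = sigmoid(w * real + c)
        p_fake = sigmoid(w * fake + c)
        w += lr * ((1 - p_real) * real - p_fake * fake)
        c += lr * ((1 - p_real) - p_fake)

        # Generator step: adjust a and b so the discriminator rates the fake higher.
        p_fake = sigmoid(w * fake + c)
        grad_fake = (1 - p_fake) * w  # derivative of log p(real) w.r.t. the fake sample
        a += lr * grad_fake * noise
        b += lr * grad_fake
    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print(f"generator offset b = {b:.2f} (real data is centered on {REAL_MEAN})")
```

In this toy, the generator starts out producing numbers near 0. The discriminator's feedback pushes its offset `b` upward, toward where the real data lives. Real GANs play the same game with deep neural networks and images instead of single numbers.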

What Is the Use of Generative AI?

Generative AI can be used for a range of activities, such as creating software code, facilitating drug development, and targeted marketing, but it can also be used for fraud, scams, political disinformation, falsifying identities, and so on.

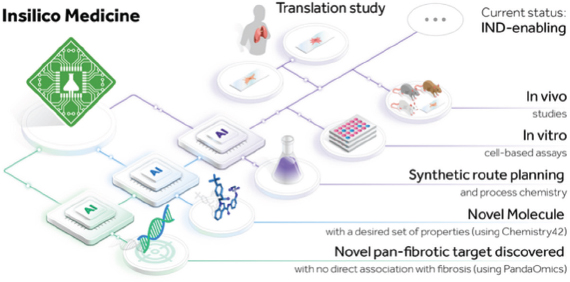

Here I introduce one of the generative AI companies the industry has hailed as most promising: Insilico Medicine, founded in 2014 and headquartered in Hong Kong, China. The company is dedicated to extending human lifespan through research in biomarker discovery, drug development, digital medicine, and aging.

Source: Nature.com, Insilico Medicine drug discovery breakthrough.

Insilico Medicine has pioneered the application of GANs and reinforcement learning to generate new molecular structures for diseases with known and unknown targets. In 2017, Nvidia selected Insilico Medicine as one of the top five AI companies with potential for social impact. In 2018, the company was named one of the top 100 global AI companies by CB Insights.1

In July 2021, Insilico Medicine and GenFleet Therapeutics, a Shanghai-based pharmaceutical company, entered into a strategic partnership. GenFleet Therapeutics, a clinical-stage biotech company focused on cutting-edge therapies in oncology and immunology, will combine its own R&D systems with Insilico Medicine’s end-to-end AI drug discovery platform to jointly address novel and more difficult targets in cancer treatment.

In August, Insilico Medicine and Westlake Pharma announced a strategic partnership to jointly develop innovative small molecule drugs against the new coronavirus (COVID-19).

According to Gartner, by 2025, 10 percent of the world’s data will be generated by generative AI, compared to less than 1 percent today.2 As the old saying goes, seeing is believing, but as technologies like generative AI continue to evolve, are you ready to live in a world where seeing is not necessarily believing?

Video version (www.youtube.com/watch?v=cv-iw65kaic).

Do You Think Intuition Can Be Simulated by Artificial Intelligence?

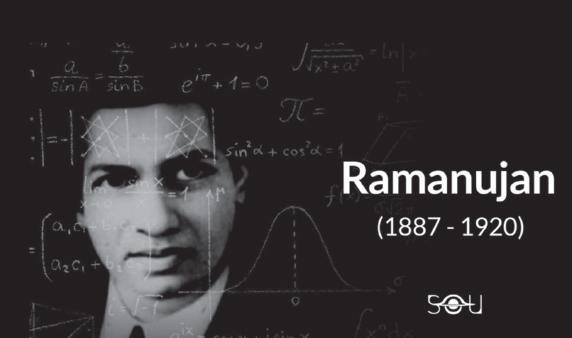

If you study mathematics in India, there is a high probability that your mathematics teacher will ask you to watch a 2015 movie called The Man Who Knew Infinity. This British film, based on a true story, depicts the legendary but short life of Ramanujan, a gifted Indian mathematician. Why mention him? Because a project called the Ramanujan Machine, supported by the mysterious Google X Lab, was named after him. The Ramanujan Machine uses AI to automatically generate mathematical conjectures about constants.

Can AI simulate mathematical intuition? With this question in mind, let me briefly introduce Ramanujan.

Can intuition be simulated by AI? The Ramanujan machine

Who Is Ramanujan?

According to Wikipedia, Srinivasa Ramanujan was an Indian mathematician who lived during the British Rule in India.3 Though he had almost no formal training in pure mathematics, he made substantial contributions to mathematical analysis, number theory, infinite series, and continued fractions, including solutions to mathematical problems then considered unsolvable.

Ramanujan was a vegetarian. He died at the age of 32 due to chronic malnutrition and liver parasites. He wrote 4,000 formulas in his life, mostly about mathematical constants.

Source: Secretsofuniverse.in, Srinivasa Ramanujan.

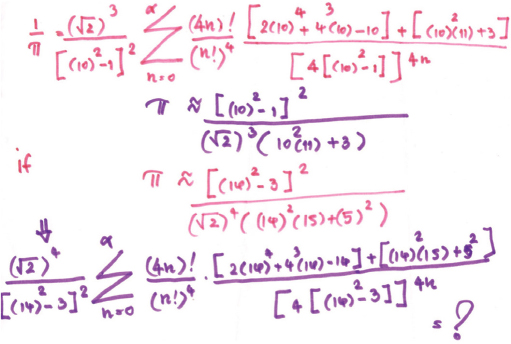

What Do These Formulas Look Like?

Source: Sawat Layuheem, Ramanujan pi equations.

Do You Have a Headache When You Look at These Formulas?

What is a mathematical constant? For example, pi (π) is a constant: the ratio of the circumference of any circle to its diameter, about 3.1415926. This seems simple, but our ability to calculate π rests on the accumulated work of mathematicians over hundreds of years.
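To give a feel for how powerful such formulas can be, here is a short Python sketch of one of Ramanujan's best-known series, published in 1914: 1/π = (2√2/9801) · Σ (4k)!(1103 + 26390k) / ((k!)^4 · 396^(4k)), summed over k = 0, 1, 2, and so on. The function name `ramanujan_pi` is our own, and the code is only an illustration of the formula.

```python
import math


def ramanujan_pi(terms=1):
    """Approximate pi with the first `terms` terms of Ramanujan's 1914 series."""
    total = 0.0
    for k in range(terms):
        numerator = math.factorial(4 * k) * (1103 + 26390 * k)
        denominator = math.factorial(k) ** 4 * 396 ** (4 * k)
        total += numerator / denominator
    # The series sums to 9801 / (2 * sqrt(2) * pi), so invert it to get pi.
    return 9801 / (2 * math.sqrt(2) * total)


if __name__ == "__main__":
    for n in (1, 2):
        print(n, ramanujan_pi(n))
```

A single term already agrees with π to six decimal places, and each further term adds roughly eight more correct digits, so two terms exhaust the precision of ordinary floating-point numbers.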

Ramanujan was born into a poor Brahmin family. Because of his family’s circumstances and his very poor grades in every subject except math, he did not enter university after finishing junior high school. He worked as a clerk in an accounting firm. In his spare time, he concentrated on studying a single book, A Synopsis of Elementary Results in Pure and Applied Mathematics. He could derive every formula in the book, along with its generalizations and variations, and he went on to publish papers in Indian academic journals. Later, he was invited by G.H. Hardy, a professor of mathematics at the University of Cambridge, to study at Trinity College in Cambridge. In 1918, he became a Fellow of the Royal Society and later a Fellow of Trinity College.

But Ramanujan’s life and studies in Cambridge did not go smoothly, because his mathematical research relied entirely on intuition and conjecture. Many professors in the mathematics department could not read his notes. When they asked him how he had derived those complicated formulas, he replied that it was God’s guidance. As a result, his work was often met with skepticism during his stay in Cambridge.

Throughout history, only a handful of scientists have reached the top of the pyramid of science, because great science relies heavily on talent.

The Ramanujan Machine project was launched in 2019. On February 3, 2021, the article “Generating Conjectures on Fundamental Constants with the Ramanujan Machine” was published in the journal Nature.

Now I will answer the question of whether AI can simulate mathematical intuition. The answer is yes. In fact, the mathematician Gauss often arrived at formulas for π by intuition, and this work spanned his entire life. How long did the AI simulation take? In just a few hours, the Ramanujan Machine had rediscovered all the π-related formulas that Gauss found in his lifetime, as well as a large number of formulas that other mathematicians had not guessed.
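What "generating a conjecture about a constant" can mean in practice is easier to see in a small Python sketch. It is a toy illustration, not the Ramanujan Machine's actual algorithm. It assumes candidates are continued fractions of the form a(0) + b(1)/(a(1) + b(2)/(a(2) + ...)), with a(n) = slope·n + offset and b(n) = n², and it keeps any candidate whose numerical value matches a target constant. The names `eval_cf` and `search`, the search range, and the tolerance are all hypothetical.

```python
import math


def eval_cf(a, b, depth=40):
    """Evaluate a(0) + b(1)/(a(1) + b(2)/(a(2) + ...)), truncated at `depth`."""
    value = a(depth)
    for n in range(depth, 0, -1):
        value = a(n - 1) + b(n) / value
    return value


def search(target, max_coeff=3, tol=1e-9):
    """Try a(n) = slope*n + offset with b(n) = n*n and keep numerical matches."""
    matches = []
    for slope in range(1, max_coeff + 1):
        for offset in range(1, max_coeff + 1):
            value = eval_cf(lambda n: slope * n + offset, lambda n: n * n)
            if abs(value - target) < tol:
                matches.append((slope, offset))
    return matches


if __name__ == "__main__":
    print(search(4 / math.pi))
```

Searching for 4/π singles out a(n) = 2n + 1, which is the classical continued fraction 4/π = 1 + 1²/(3 + 2²/(5 + 3²/(7 + ...))). A numerical match like this is only a conjecture. It still has to be proved by a mathematician.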

What Is the Use of the Ramanujan Machine?

Throughout the development of mankind, practical applications have emerged one after another, mostly using scientific and technological means to improve the efficiency of solving practical problems. But the breakthroughs in basic science are very limited. Most of the breakthroughs stem from the intuition and speculation of a handful of geniuses, such as Newton and Einstein. If AI can simulate the intuition of these geniuses, it is equivalent to helping us create countless geniuses. It also helps human scientists overcome the shortcomings of human nature. For example, some scholars focus on publishing papers to gain reputation without paying attention to the actual value of their research. If the level of basic research is greatly improved, there will be more applications based on these conjectures in the future.

Do You Want to Participate in the Ramanujan Machine Project?

There are three ways you can participate in this project:

1. First, if you don’t have time to participate, you can contribute your computer’s computing power. When you are not using it, let your computer discover new conjectures. If a conjecture is discovered on your computer, it will be named after you!

2. Second, if you are engaged in basic mathematical research, you can prove any conjecture discovered by the Ramanujan Machine, and the formula will be named after you.

3. Third, if you have time to code, you can propose or develop an algorithm to explore new mathematical structures and name the algorithm after yourself.

If you are interested in the Ramanujan Machine Project (www.ramanujanmachine.com/), you can contact them directly at [email protected].

Video version (www.youtube.com/watch?v=OGS2YDXuxP8).

The Application of Artificial Intelligence in the Medical and Health Field—AI Depression Detection

What Is the Leading Cause of Human Disability in the World?

According to the World Health Organization, people with severe mental illness die prematurely, by as much as 20 years, due to preventable medical conditions.4

AI depression detection

A year into the COVID-19 pandemic, the Centers for Disease Control and Prevention (CDC) estimates that one in two Americans is likely to develop depression in the aftermath of the pandemic. Medical estimates suggest that about two-thirds of depression cases go undiagnosed.

News reports point to rising rates of depression and suicide around the world. Every year, one in 10 people in the world suffers from some degree of depression. Depression is the main cause of disability for people in the 15 to 44 age group. Every 13 minutes, someone somewhere in the world dies by suicide because of depression.

What Are the Difficulties in Treating Depression?

The causes of depression are very complicated, and many patients behave normally, or pretend to, most of the time. One form of depression, called smiling depression, refers to patients who, although depressed or anxious, still put on a smile, as if wearing a mask.

The quality of the diagnostic process and of care for depression currently varies. Diagnosis of mental health problems is based on screening tools such as the Patient Health Questionnaire (PHQ). How objective the answers are depends heavily on what the patient can remember from the past few weeks and on how accurate those memories are. The doctor’s diagnosis therefore depends on the accuracy of the picture reconstructed from the patient’s memory.

Another difficulty is that, although there are now biomarkers (quantitative indicators of health changes) that can be used to measure the severity of depression, each patient’s symptoms are different. The selection of medication and the effectiveness of treatment for individual symptoms still need to be improved.

What Percentage of Mental Health Cases Are Currently Being Accurately Detected?

Not surprisingly, only 47.3 percent of mental health cases are accurately detected by professionals. Imagine sending one in two patients home, assuring them that they have no problems, only to find out that their problems have worsened or become life-threatening.

How Artificial Intelligence Predicts Depression

AI currently helps predict depression in two ways:

1. Judging the risk of illness by analyzing language style and language coherence

2. Tracking and analyzing people’s micro-expressions, micro-behaviors, and subtle changes in tone
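To make the first approach more concrete, here is a deliberately crude Python sketch of what "measuring language coherence" could look like. It is a toy for illustration only, not a clinical tool and not the method of any research team. It assumes coherence can be approximated by how many words consecutive sentences share (Jaccard overlap), and the function names are hypothetical. Real systems rely on far richer language models.

```python
import re


def sentence_words(text):
    """Split text into sentences and return each sentence's set of lowercase words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [set(re.findall(r"[a-z']+", s.lower())) for s in sentences]


def coherence_score(text):
    """Average word overlap between consecutive sentences, from 0.0 to 1.0."""
    words = sentence_words(text)
    if len(words) < 2:
        return 0.0
    overlaps = []
    for prev, curr in zip(words, words[1:]):
        union = prev | curr
        overlaps.append(len(prev & curr) / len(union) if union else 0.0)
    return sum(overlaps) / len(overlaps)


if __name__ == "__main__":
    print(coherence_score("I walked the dog. The dog was happy."))
    print(coherence_score("I walked home. Purple clocks sing."))
```

The first example scores higher because its sentences share words ("the", "dog"), while the second jumps between unrelated topics and scores zero.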

At present, the most prominent AI solution comes from a team made up of members of IBM Research’s computational psychiatry and neuroimaging team and universities around the world. The team uses natural language processing (NLP) methods to detect problems in patients’ language and to check whether that language matches the thoughts the patients are trying to express. In this way, a patient’s psychosis can be assessed and predicted relatively accurately, with an accuracy rate of 83 percent.

In October 2020, IBM Research improved the previous system. Seven researchers, including Pengwei Hu, PhD and Hui Su of the Hong Kong University of Science and Technology, proposed a new system called BlueMemo for social media screening of patients with depression. Working from real-time posts collected from Twitter, the research team treated learned text features, image features, and visual attributes as three modalities and fed them into a multimodal fusion and classification model to improve the accuracy of the system.

Depression is difficult to cure. Even if the patient receives psychotherapy and returns to a normal mental state, the recurrence rate is still high.

Video version (www.youtube.com/watch?v=yEpc0UdyAIc).

Use Case—Conversational AI Solution

Chatbots have been used in banking for a long time, but there is still a long way to go to achieve widespread utility, as most chatbots lack the ability to handle complex conversations.

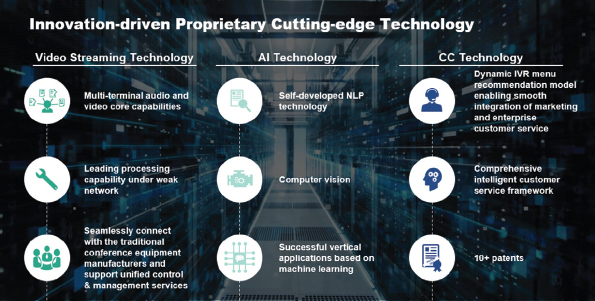

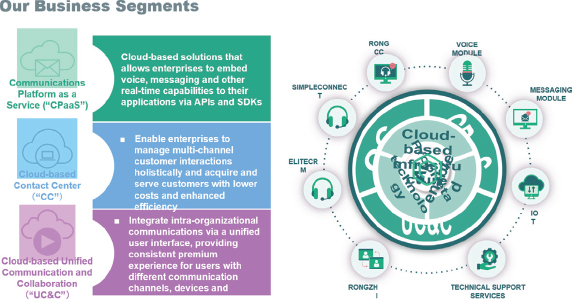

Here I would like to introduce a company that won the 2021 China Technology Star Innovative Solution Award: Cloopen.

Cloopen has accumulated more than 10 years of experience in cloud communication. As the largest cloud communication provider in China, Cloopen is now rapidly expanding its global business footprint to markets including Japan, the Philippines, Singapore, Malaysia, North America, Korea, and the Middle East.

Using cognitive intelligence technology with NLP at its core, Cloopen Cloud helps corporate clients take customer service as their entry point. Its innovative, diversified, industry-specific intelligent customer service applications help organizations upgrade their internal business and management processes, reduce costs, and increase efficiency.

Source: Cloopen. Cloopen company introduction.

Featured Product—SimpleConnect

Cloopen offers a full suite of cloud-based communications solutions. Its most popular SaaS product is SimpleConnect, which is used by 250,000 agents globally. The solution lets call center agents work from anywhere with a laptop and an Internet connection, serving customer requests via website chat, phone calls, and social media.

Source: Cloopen. Featured products SimpleConnect.

Cloopen is customer-centric, investing in production and research for projects with strong localization needs in industries such as banking, insurance, and automobiles. It actively adapts its products (which have been certified by China E-Cloud, Kirin Software NeoCertify, Dongfang TongWeb, and other Xinchuang and Unisys software) to meet customers’ diversified localization needs, and it continues to collaborate with partners to lead the development of the intelligent customer service industry.

Business segments

Source: Cloopen.

SimpleConnect product features include:

1. Web client: SimpleConnect is used via a web browser. Contact center agents handle inbound and outbound calls directly from their PC, and no installation is required. Agents can effortlessly place calls to end users, and a customer relationship management (CRM) system is provided to record service activities.

2. Web phone: Outbound calls can be tagged and batched. Inbound calls can be automatically directed to assigned agents, or routed in whatever way best fits your business operation. Agents have many options as well, such as setting their status, answering, transferring, or joining a call, or asking colleagues to join a call.

3. Website live chat to a live call: With SimpleConnect webchat, website visitors can chat with employees or agents in real time and can be switched to a live call at any time. Everything happens in one web system, so the days of minimizing and maximizing windows are over!

4. Omnichannel: With e-mail, Line, Facebook, and live chat integration, agents can handle all text-based inquiries in one place. Like web calls, these inquiries can be answered, transferred, and resolved.

5. Add-ons and compatibility: SimpleConnect is not just a communication platform that brings every channel together. It provides other functions such as real-time quality control (QC), which makes sure your customers are satisfied with your service. Reports give a clear picture of how well employees and agents perform within a given time. Interactive voice response (IVR) raises operational efficiency by taking repetitive processes away from agents. These features all work alongside the core communication functions.

6. Customization: SimpleConnect offers tailor-made products as well. Cloopen understands that operations differ from industry to industry. Therefore, it can provide SimpleConnect in the form that best fits your business.

7. Minimum management: SimpleConnect requires minimum management. Cloopen can provide professional service for system configuration. Agents can simply log in and build connections with customers.

Moreover, SimpleConnect is much more affordable compared to other contact center as a service (CCaaS) solutions.

Future Plan

Cloopen is building more advanced AI capabilities to enrich its product offerings and improve operational efficiency, and plans to offer more AI-based SaaS modules to its 12,000 clients.

Innovation-driven proprietary cutting-edge tech

Source: Cloopen.

“Cloopen aspires to drive the digital transformation of the enterprise communications industry by providing innovative marketing and operational strategies and SaaS-based tools. For the international market, Cloopen aims to build an open ecosystem and grow together with local telecom partners,” said Mr. Lu Xing, Vice President of Cloopen’s international business.

For more information or international partnership opportunities, you can contact Mr. Fred Yang, General Manager of Cloopen Singapore at [email protected], WhatsApp +65 96145257.

Autonomous Driving

The World Health Organization’s Global Status Report on Road Safety 2018 highlights that road traffic crashes cause 1.35 million deaths each year. One of the saddest facts about car accidents is that most of them are preventable. A 2016 study by the National Highway Traffic Safety Administration (NHTSA) found that human error accounts for 94 to 96 percent of all auto accidents.

Our roads will be safer if we remove the human element. This is one of the reasons why countries around the world are actively investing in the R&D of autonomous driving technology. However, self-driving cars have their own challenges, and it may take years before they become mainstream.

Why Can Aurora Be Ranked Second on the List of the 100 Most Promising Artificial Intelligence Companies in the World?

Aurora Innovation (Nasdaq: AUR) is the second-ranked start-up company among the 100 most promising AI companies selected globally. It went public in November 2021 and had a market cap of US$6.67 billion as of March 30, 2022.

Aurora: The second-ranked 100 most promising AI companies

Aurora was established in 2017. It has raised a total of $2.1 billion in seven funding rounds, with investment institutions including Sequoia Capital and Amazon.

The three cofounders are all heavyweight experts in the field of autonomous driving. They include:

Its cofounder and CEO Chris Urmson is known as the Henry Ford of Self-Driving Cars. When he was at Google, he pioneered Google’s self-driving car plan, now called Waymo.

Chris Urmson, cofounder and CEO of Aurora

Source: Aurora.

Another cofounder is Sterling Anderson, who led the Tesla Model X project.

Sterling Anderson, cofounder of Aurora

Source: Aurora.

The third cofounder is Drew Bagnell, who ran a research laboratory at Carnegie Mellon and later joined Uber, where he was responsible for research on autonomous vehicles.

Drew Bagnell, cofounder of Aurora

Source: Aurora.

Strategic Positioning of Aurora

Aurora has positioned itself as an independent provider of software, hardware, and data services for autonomous driving from the beginning. Its core product is called Aurora Driver, which is a combination of software, hardware, and sensor kits that can be installed on different types of cars to make them drive autonomously. The system has been integrated into six different types of vehicles, including cars, sport utility vehicles (SUVs), minivans, commercial trucks, and Class 8 freight trucks, all of which are currently in the development and testing stage.

Whereas many self-driving car companies conduct tests on actual roads, Aurora focuses on testing vehicles in a simulated environment. Aurora hopes to test the car’s response to special situations that rarely occur in the real environment, such as a fallen tree blocking the road, or a serious crash blocking traffic. When the car learns how to deal with these special situations in a simulated environment, it will respond better on the real road.

Strategic Partnership of Aurora

Aurora acquired Advanced Technologies Group (ATG), Uber’s autonomous driving division, at the end of 2020, mainly to strengthen and accelerate the development of its first Aurora Driver for heavy-duty trucks. With this acquisition, Aurora added nearly 1,000 employees, more than doubling its headcount to 1,600. The transaction also brought Aurora a major benefit: the right to provide ride services on Uber’s network. Uber also invested $400 million in Aurora.

Volkswagen announced in 2019 that it would become a software-driven car company, and it has also signed a long-term cooperation agreement with Aurora. Other partners include Fiat Chrysler, Hyundai, and Byton (a new energy vehicle brand under Nanjing Zhixing New Energy Technology Development Co., Ltd. (FMC)). In February 2021, Aurora also reached an agreement with Toyota under which Toyota’s Sienna minivan would become the first model equipped with the Aurora Driver, with testing of the first fleet to begin that year.

As for when the Aurora Driver can be purchased commercially, the company has not yet set a timetable. Its goal is for autonomous driving to be safer, more convenient, and cheaper than human driving.

Toyota’s Sienna Minivan

Source: Toyota.

Which Chinese Driverless Start-Up Has Toyota Invested in?

Also of interest is that Toyota invested US$462 million in Pony.ai in 2020, a Chinese driverless start-up company founded in 2016.

Pony.ai’s self-driving car

Source: Pony.ai.

Pony.ai’s cofounder and CEO Peng Jun was formerly the chief designer of Baidu’s self-driving car, and once introduced the self-driving car business at the Baidu World Congress. The other cofounder, Lou Tiancheng (also known as Lou Jiaozhu), is one of China’s top programming experts and a two-time winner of the Google Global Programming Challenge.

Pony.ai closed $102 million in Series A1 funding in 2018, making it China’s first unicorn for unmanned vehicles. It raised $367 million in Series C funding in 2021.

Peng Jun, cofounder and CEO (left) and Lou Tiancheng, cofounder (right)

Source: Pony.ai.

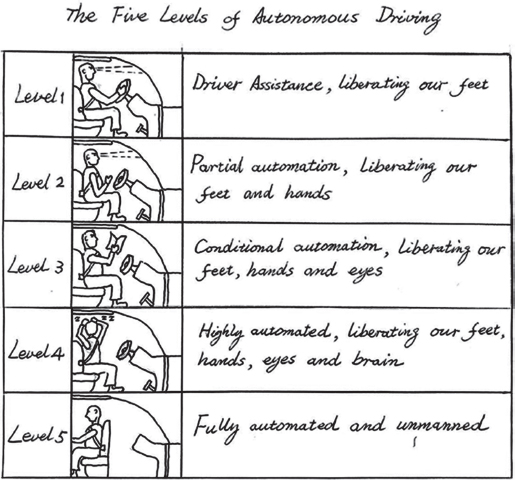

Some colleagues have said that they don’t know the different levels of autonomous driving. So, in closing, I put together a set of definitions to help you.

The five levels of autonomous driving

Hand-drawn by Sam Luo.

Level 1 is driver assistance, liberating our feet.

Level 2 is partial automation, liberating our feet and hands.

Level 3 is conditional automation, liberating our feet, hands, and eyes.

Level 4 is high automation, liberating our feet, hands, eyes, and brain.

Level 5 is full automation, with no driver needed.
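For readers who think in code, the five levels above can be captured in a small Python data structure. This is only a sketch of the simplified list in this section. The names `DrivingLevel`, `liberated`, and `driver_must_watch_road` are our own, and the formal industry definitions contain far more detail.

```python
from enum import IntEnum

# Order in which the driver is "liberated", following the list above.
LIBERATED_IN_ORDER = ["feet", "hands", "eyes", "brain"]


class DrivingLevel(IntEnum):
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5  # nothing left to liberate: no driver is needed


def liberated(level):
    """Return what the given level frees the driver from."""
    return LIBERATED_IN_ORDER[:min(level, len(LIBERATED_IN_ORDER))]


def driver_must_watch_road(level):
    """True while the driver's eyes are still needed (levels 1 and 2)."""
    return "eyes" not in liberated(level)


if __name__ == "__main__":
    for level in DrivingLevel:
        print(level.value, level.name, liberated(level))
```

The structure makes the key dividing line easy to see: up to Level 2 the driver must still watch the road, and from Level 3 onward the system takes over that task under the conditions it supports.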

What Is a Core Component of the Brain of a Self-Driving Car?

2021 can be said to be a watershed year for the development of semiautonomous driving and autonomous vehicles. According to data from the analysis company VSI Labs, at least 15 of the top automakers have already started or planned to produce cars with a certain degree of autonomy, ranging from hands-off to eyes-off driving.

What is a core component of the brain of a self-driving car?

What Is One of the Biggest Challenges in Achieving Fully Autonomous Driving?

One of the biggest challenges in achieving fully autonomous driving in passenger cars is to achieve precise positioning and the latest map information that reflects current road conditions.

You may think that this is not unusual, as Google Maps and Baidu Maps have already done a good job. But let’s not forget that we are talking about fully autonomous driving. While maps are traditionally designed by humans and used by humans, fully autonomous driving focuses on designing maps for machines.

What Is a Core Component of the Brain of a Self-Driving Car? The answer is the map. It is the sum of road knowledge gathered by all the cars and sensors that have traversed the roads. It is crowdsourced. By aggregating sensor perception data from on-board cameras, radars, and LiDARs, the high-definition (HD) map contains a three-dimensional description of the road and surrounding environment, as well as rich semantic information, such as road signs, lane markings and connections, road attributes, and so on. Therefore, the map has become a core component of the self-driving car brain.

Combined with navigation information, these rich maps serve as the computer’s collective understanding of the drivable road environment, and essentially serve as the memory of the car.

What Are the Difficulties in Designing Maps for Machines? A map accurate to within a few meters is good enough to provide turning instructions for humans. However, autonomous vehicles (AVs) require higher accuracy. Their maps must be accurate to the centimeter so that the vehicle can position itself precisely on the road.

In addition, correct positioning requires constantly updated maps. These maps must also reflect current road conditions, such as work zones or lane closures, and scale effectively across the entire AV fleet, processing information quickly while keeping data storage to a minimum. Finally, they must be able to function on a global scale.
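A quick back-of-the-envelope calculation shows why meter-level accuracy is not enough for a machine. The Python sketch below uses assumed, typical figures that are not from any specific vehicle or standard: a 3.7-meter lane and a 1.9-meter-wide car that aims for the center of its lane.

```python
LANE_WIDTH_M = 3.7     # assumed typical highway lane width
VEHICLE_WIDTH_M = 1.9  # assumed typical passenger car width


def side_margin_m():
    """Free space on each side of a car that is perfectly centered in its lane."""
    return (LANE_WIDTH_M - VEHICLE_WIDTH_M) / 2


def stays_in_lane(position_error_m):
    """True if a car with this sideways positioning error still fits in its lane."""
    return abs(position_error_m) < side_margin_m()


if __name__ == "__main__":
    print(f"margin per side: {side_margin_m():.2f} m")
    for error in (0.05, 0.5, 3.0):
        print(f"error {error} m -> stays in lane: {stays_in_lane(error)}")
```

Under these assumptions the car has about 0.9 meters of room on each side. A 5-centimeter error is harmless, while an error of a few meters, which is fine for a human reading turn-by-turn directions, would place the car in the neighboring lane.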

Which Company Focuses on Creating Maps for AV? Here I introduce a company that focuses on creating maps and positioning services for autonomous vehicles: DeepMap. It was founded in 2016 and is headquartered in Palo Alto, California.

Source: DeepMap.

The race to commercialize self-driving cars has reached a fever pitch. DeepMap has grasped the pain points and key points of the industry, which is that “machine-readable maps are a key enabling technology for safe self-driving.” DeepMap fills the vacuum in the market.

The company was formed to help companies avoid the unnecessary effort of developing their own maps, and to save costs for customers by creating a map engine service.

The company addressed three important factors:

1. Accurate HD maps

2. Ultra-accurate real-time positioning

3. A service infrastructure that supports large-scale global expansion

In terms of business strategy, DeepMap has chosen to partner with leading companies in the global automotive sector.

DeepMap has cooperated with car manufacturers such as Ford, Honda, Mercedes-Benz, and SAIC, as well as Chinese high-precision map service providers like Baidu, creating good financing channels for the company.

At the same time, cooperation with map data service providers and with upstream and downstream companies in each country has made it easier for DeepMap to collect and analyze map data in various countries.

The founding team is a world-class team from Google Earth, Google Maps, Apple Maps, Baidu Maps, Nvidia, and so on. What a luxurious lineup!

James Wu—Cofounder and CEO. James Wu is the cofounder and CEO of DeepMap. He was previously the chief architect at Baidu and the chief engineer at Upthere. James graduated from Tianjin University with a BS in Computer Science and received his PhD in Computer Science from the University of Alabama at Birmingham.

James Wu, cofounder and CEO of DeepMap Inc.

Source: DeepMap.

Mark Wheeler—Cofounder and CTO. Mark Wheeler is the cofounder and CTO of DeepMap. He has a PhD in Computer Science from Carnegie Mellon University and has worked as a senior scientist at Apple and as a software engineer at Google.

Mark Wheeler, cofounder and CTO of DeepMap Inc.

Source: DeepMap.

What Are the Latest Developments of DeepMap?

Nvidia Corporation completed the acquisition of DeepMap on August 26, 2021.

The acquisition is a win–win for both companies, as DeepMap’s technology will enhance the mapping and localization capabilities on NVIDIA DRIVE, ensuring that self-driving vehicles always know exactly where they are and where they are going.

NVIDIA DRIVE is a software-defined, end-to-end platform that enables continuous improvement and deployment with over-the-air updates, from deep neural network training and validation in the data center to high-performance computing in the vehicle.

DeepMap can also use Nvidia’s software-defined platform to quickly scale its maps across self-driving fleets and update over-the-air without using much data storage.

DeepMap RoadMemory. In June 2021, DeepMap announced the launch of RoadMemory, a smarter map made by machines for machines.

RoadMemory aims to meet the needs of automakers seeking large-scale, high-performance, and cost-effective mapping capabilities to support increased autonomy for vehicles coming into production. Increased autonomy includes driver support features such as highway assist, smart braking, and traffic jam pilot.

Video version (www.youtube.com/watch?v=C6ic4Y1MJ0g).

Explaining the Self-Driving Technology Difficulties—Brain in a Jar

What Are the Problems With Current AI Solutions?

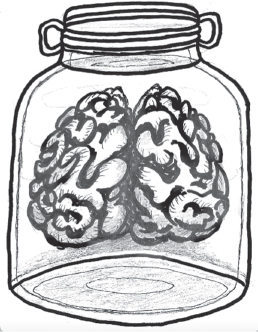

Many of the commonly adopted AI solutions on the market today effectively ingest large datasets, run algorithms, and generate reports, recommendations, or visualizations for employees to act on. In other words, they operate like a brain in a jar: each AI service is a separate brain in its own jar, into which we feed data and from which we get answers back. One brain recognizes images and another interprets language. We can train a third brain to look for abnormal patterns in business data. Each of them is very good at what it does, but none knows anything about the world outside its own jar.

Brain in a jar

Source: Hand-drawn by Sam Luo.

How Can Artificial Intelligence Make Autonomous Decisions?

I want to ask you first, how does the brain receive and transmit information?

As you might have guessed, it does so through a neural network. In the brain, a typical neuron collects signals from other neurons through a series of fine structures called dendrites. The brain in a jar is equivalent to a brain without a nervous system.

The self-driving tech difficulty brain in a jar

How can we add a nervous system to AI? We can think of application programming interfaces (APIs) and application networks as that nervous system.

What Is an API?

An API is a connection between computers or between computer programs. It is a software interface that provides services for other software. Documents or standards describing how to establish or use such connections or interfaces are called API specifications. The role of an API can be roughly compared to that of a waiter in a restaurant. When you order a meal in a restaurant, the waiter takes your order to the back kitchen, and when the chef is done, the waiter brings the dishes to you. The waiter acts as a connector between you and the back kitchen.
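The waiter analogy can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the class names `Kitchen`, `Waiter`, and `Dish`, and the two menu items, are hypothetical and do not come from any real system.

```python
from dataclasses import dataclass


@dataclass
class Dish:
    name: str
    ready: bool


class Kitchen:
    """The service behind the interface; customers never call it directly."""

    MENU = {"noodles", "dumplings"}  # hypothetical menu for illustration

    def cook(self, item: str) -> Dish:
        if item not in self.MENU:
            raise ValueError(f"{item!r} is not on the menu")
        return Dish(name=item, ready=True)


class Waiter:
    """The API: the only set of requests a customer is allowed to make."""

    def __init__(self, kitchen: Kitchen) -> None:
        self._kitchen = kitchen

    def order(self, item: str) -> Dish:
        # Carry the request to the kitchen and bring the result back.
        return self._kitchen.cook(item)


waiter = Waiter(Kitchen())
dish = waiter.order("noodles")
print(dish)
```

The customer only needs to know what can be ordered and what comes back. How the kitchen prepares the dish can change without the customer noticing, which is the point of an interface.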

When the number of APIs expands from 10 to 100 or more, it becomes very complicated to connect effectively. Current AI can analyze large amounts of data to advise humans in making final decisions. However, current AI is not yet aware of the large number of permutations, exceptions and variables. It is challenging to make fully autonomous decisions based on the real-time data collected.

Is There a Company Focused on Designing Autonomous Driving Systems?

Here I want to introduce Drive.ai, a company that designs autonomous driving brains. Drive.ai is headquartered in Mountain View, California. It was founded in 2015 by former graduate students working in the Stanford University AI Laboratory, which is managed by the well-known AI expert Andrew Ng.

Founder of Drive.ai: Carol Reiley. Carol Reiley is a pioneer in remote control and autonomous robotic systems for self-driving cars. She is the first female engineer to be featured on the cover of MAKE magazine and has been recognized as a leading entrepreneur and influential scientist by Forbes and Quartz.

Carol Reiley, founder of Drive.ai

Source: World Government Summit.

Carol is pursuing her PhD at Stanford University’s AI Lab. She is married to Andrew Ng. The two tech gurus also have a different approach to showing their love. The wedding ring was 3D printed according to their own preferences, and their engagement news was announced in IEEE Spectrum, a journal published by the Institute of Electrical and Electronics Engineers.

Drive.ai’s funding was led by Singapore-based CGV Capital; other investors include New Enterprise Associates, one of the world’s largest venture capital companies, and Nvidia GPU Ventures. Apple acquired Drive.ai in June 2019 and then chose to close the company. The purpose of the acquisition was to gain Drive.ai’s technical talent and the company’s 12 patents in the self-driving control category.

Apple Self-Driving Cars. In May 2016, Apple invested US$1 billion in Didi Chuxing.5 In July 2016, Uber announced the merger of its business in China with Didi Chuxing, further expanding Didi Chuxing’s presence in China. Didi Chuxing provides a large amount of data for Apple’s self-driving car project in order to improve its self-driving algorithm.

Apple has added 23 new drivers for its self-driving car project recently, bringing the total to 137 drivers. But the number of actual autonomous vehicles in the fleet remains at 69. What’s more, Apple hasn’t applied for a permit that would allow the cars to drive themselves.

According to Bloomberg News, Apple’s self-driving car project is at an early stage and will not be ready for launch until 2026–2028. Apple is refocusing its automotive program, under the leadership of Kevin Lynch, on autonomous vehicles that require no driver interaction, a goal that other automakers such as Tesla have yet to achieve.

Video version (www.youtube.com/watch?v=SCZeCFKgASg).

What Are Other Difficulties of Autonomous Driving?

According to a study by the RAND Corporation, autonomous driving requires at least 17.7 billion km of accumulated driving data to perfect the algorithm to the level of human drivers.6 A simple calculation shows that a self-driving fleet of 100 test vehicles, running 24/7 at an average speed of 40 km/h, would need about 500 years to complete the target mileage.
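The 500-year figure can be checked with a short calculation. The sketch below uses only the numbers quoted above; the one assumption I add is that a year has 365 days and the fleet never stops for maintenance.

```python
TARGET_KM = 17.7e9          # accumulated distance cited in the text
FLEET_SIZE = 100            # test vehicles
AVG_SPEED_KMH = 40          # average speed in km/h
HOURS_PER_YEAR = 24 * 365   # assumption: 24/7 operation, no downtime


def years_to_reach(target_km: float, fleet_size: int, speed_kmh: float) -> float:
    """Years a fleet needs to accumulate the target distance."""
    km_per_year = fleet_size * speed_kmh * HOURS_PER_YEAR
    return target_km / km_per_year


years = years_to_reach(TARGET_KM, FLEET_SIZE, AVG_SPEED_KMH)
print(f"{years:.0f} years")  # prints "505 years"
```

The fleet covers about 35 million km per year, so the target takes a little over five centuries, which rounds to the 500 years quoted.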

As of September 2021, Baidu’s Apollo L4 autonomous driving road test mileage has exceeded 16 million km, and the autonomous driving simulation test mileage has exceeded one billion km.7 Their self-driving cars already have a good ability to deal with various road conditions, but the data of real special scenarios is still scarce, and the self-driving system’s ability to respond to extreme scenarios is still very weak. In addition, as most of the tests are limited to specific areas, it is difficult to quickly extend the iterated algorithms to more complex scenarios.

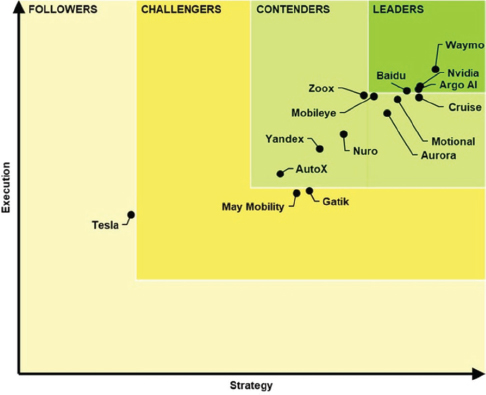

Autonomous Driving Leaderboard 2021

Source: Guidehouse Insights.

In April 2021, Guidehouse, the world’s leading public and business consulting company, released the latest autonomous driving leaderboard. Baidu once again entered the international autonomous driving leader category, the only Chinese company on the leader list. Baidu and Waymo are currently the only two companies in the world that have completed tens of millions of kilometers of autonomous driving test mileage.

As of the first half of 2021, Baidu’s Apollo self-driving travel service has accumulated more than 400,000 passengers, the test mileage has exceeded 14 million km, and the number of autonomous driving patents has exceeded 2,900.

Baidu has opened its passenger service in Beijing, Guangzhou, Changsha, and Cangzhou, and it has released a new, upgraded unmanned vehicle travel service platform called Radish Run. In the future, Baidu will develop more travel scenarios to solve the pain points of travel.

Baidu self-driving car

Source: Baidu.

What Are the New Ideas for Autonomous Driving?

Autonomous driving is now so hot that major auto companies and technology companies all want to jump into the game, and they invest tens of billions of dollars at every turn. But do you know how expensive autonomous driving research is?

Take Google’s self-driving car project Waymo as an example. According to a report on the Forbes website, Waymo has raised about US$3 billion for road testing. You may think that this is not much. But only 600 cars have been used for road testing. This is equivalent to a cost of approximately US$5 million per vehicle, not including other costs. You can imagine how expensive autonomous driving research is.

Then you might say that the relatively mature self-driving trucks should be less expensive, right?

Let’s take the UK market as an example and calculate the operating cost of using self-driving trucks.

At present, the price of a traditional truck is about 70,000 to 80,000 pounds. If the driver works 16 hours a day, the annual compensation may be closer to 150,000 pounds. If we don’t need a truck driver, the cost will be greatly reduced. Correct?

What are the new ideas for autonomous driving? Introducing Vay

Up to now, fully automatic vehicles require the presence of engineers to pay close attention to the hardware. The point is that their salary will be higher because they are qualified engineers, not just drivers. In order to be able to achieve autonomous driving, the truck needs to be equipped with other equipment, such as computers, LiDARs, and cameras.

It will take a few years to realize fully autonomous driving. Is there any compromise solution that can not only ensure automatic driving, but also save costs?

Vay—An Alternative Method of Automatic Driving

The answer is yes. Here I want to introduce a start-up company called Vay, established in Berlin, Germany, in 2018. What makes Vay unique is its approach to automated driving, which relies on remote driving. Today, Vay’s fleet operates throughout Berlin with safety drivers on board. Starting in 2022, it expects to launch the first safety-certified driverless commercial fleet on public streets in Europe.

Source: Vay.

How Does Vay Work?

Let me describe a scene for you:

In the morning, when you are still enjoying a nutritious breakfast, you can book Vay’s self-driving car service with your mobile phone. The certified tele-driver will remotely drive the car downstairs to your home, and you can drive the car to work to save costs. After arriving at the company, you don’t need to consider issues such as finding a parking space. The remote tele-driver will drive the vehicle to the next customer’s designated location.

This maximizes the efficiency of the use of the same car, saves passengers’ time, and ensures road safety. It also creates a new profession. Perhaps in the near future, taxi drivers can consider the new profession of tele-driver. It is safe, and it can be done indoors easily without having to sit in the car and drive for a long time.

Vay’s Team and Angel Investors

Vay combines the best of both worlds: Silicon Valley’s software and product experience and European automotive hardware and safety engineering. Currently, the company has more than 70 employees, and its team members have previously worked at Tesla, Google, Waymo, Zoox, Byton, Argo, Amazon, Uber, Audi, BMW, Daimler, and other companies.

Cofounders of Vay

Source: Vay.

Vay is backed by leading European venture capital companies such as Atomico, Creandum, LaFamiglia, System.One, Visionaries Club, and Signals. Its business angel investors include:

• Patrick Pichette—Partner of Inovia Capital, chairman of the board of Twitter, former Google global chief financial officer

• Cristina Stenbeck—Spotify board member, chairman of the Board of Zalando

• Qasar Younis—Cofounder and CEO of Applied Intuition, former COO and partner of Y Combinator

• 2016 Formula One World Championship champion Nico Rosberg

What do you think of the autonomous driving route chosen by Vay? Video version (www.youtube.com/watch?v=owIcoeAM1kc).

What Is One of the Core Breakthroughs in Autonomous Driving?

The R&D cost of self-driving vehicles is high. At present, the hardware cost of a self-driving car is about 160,000 U.S. dollars. In addition, from the perspective of safety, current autonomous vehicles have limited perception and prediction capabilities in extreme situations, such as extreme weather, unfavorable lighting, and object occlusion.

If part of the autonomous driving function is handed over to smart roads, that is, to intelligent road infrastructure, this can make up for the shortcomings of single-vehicle intelligence, substantially reduce costs, and make autonomous driving safer.

What Is Vehicle-Road Collaboration?

Vehicle–road collaboration uses advanced wireless communication and new-generation Internet technologies to exchange information dynamically and in real time among vehicles, between vehicles and roads, and between vehicles and people. By collecting and fusing dynamic traffic information across space and time, it enables active safety control and collaborative road management. Vehicle–road collaboration coordinates people, vehicles, and roads effectively, ensuring traffic safety and improving traffic efficiency, and thus forms a safe, efficient, and environmentally friendly road traffic system. It is one of the core breakthroughs in autonomous driving.

Vehicle–road collaboration includes four core components: communication platform, terminal layer (vehicle side/road side), edge computing, and cloud control platform.

1. Communication platform—Vehicle–road collaborative communication technology includes vehicle–vehicle and vehicle–road communication. As the connection pipeline in vehicle–road coordination, the communication platform is mainly responsible for providing the information pipelines for real-time transmission between vehicle and vehicle and vehicle and road, and ensuring real-time information interaction between vehicle side and road side through a network environment with low latency, high reliability, and fast access.

2. Terminal layer—The terminal layer is divided into vehicle terminal and roadside terminal. On the basis of the original equipment, the two are equipped with sensors such as LiDAR and cameras through intelligent transformation to realize interconnected monitoring between vehicles, and environmental monitoring at the roadside to conduct information data transmission and generate interactive behaviors.

3. Edge computing—Edge computing refers to a computing model that allocates tasks such as computing, storage, and communication to the edge of the network close to the application scenario, and provides edge intelligence services nearby.

4. Cloud control platform—The cloud control platform is the core link of the Internet of Vehicles, and it is the key basic technology to realize the collaborative perception, decision making, and control of the Internet. The cloud control platform includes a cloud control basic platform and a cloud control application platform.

Why Deploy Edge Computing Power on the Roadside? In real road traffic scenarios, the demands on computing are varied. Some scenarios require rapid analysis and calculation based on real-time local information, with the results fed back to surrounding vehicles, such as dangerous road condition avoidance and traffic accident warning. Other scenarios require global information to be aggregated for a unified, big-picture analysis, such as traffic situation analysis and road restriction control. Handling the time-critical local cases close to the road is exactly the application scenario of edge computing.
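One way to picture this split is as a simple placement rule: time-critical tasks that only need local data run at the roadside, and tasks that need a global view run in the cloud. The sketch below is my own illustration, not a description of any vendor’s system. The `Task` fields, the latency figures, and the 100 ms cutoff are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    EDGE = "roadside edge node"
    CLOUD = "cloud control platform"


@dataclass(frozen=True)
class Task:
    name: str
    max_latency_ms: int      # how quickly vehicles need the answer (assumed values)
    needs_global_view: bool  # whether data from many road sections is required


# Assumed cutoff for illustration only; not a figure from the text.
EDGE_LATENCY_BUDGET_MS = 100


def place(task: Task) -> Tier:
    """Decide where a task runs, following the two cases described above."""
    if task.needs_global_view:
        return Tier.CLOUD
    if task.max_latency_ms <= EDGE_LATENCY_BUDGET_MS:
        return Tier.EDGE
    return Tier.CLOUD


tasks = [
    Task("traffic accident warning", max_latency_ms=50, needs_global_view=False),
    Task("dangerous road condition avoidance", max_latency_ms=50, needs_global_view=False),
    Task("traffic situation analysis", max_latency_ms=60_000, needs_global_view=True),
    Task("road restriction control", max_latency_ms=60_000, needs_global_view=True),
]

placement = {task.name: place(task) for task in tasks}
for name, tier in placement.items():
    print(f"{name} -> {tier.value}")
```

With these assumed values, the two warning tasks land on the roadside edge node and the two analysis tasks land on the cloud control platform.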

What Is the Role of the Cloud Control Platform? The cloud control platform can provide device management and control, data fusion, cloud data exchange, and domainwide event information dissemination for intelligent networked and autonomous vehicles at different levels. It provides dynamic basic data such as vehicle operation, infrastructure, traffic environment, and traffic management to management and service agencies, and it serves as a cloud platform that supports the practical application needs of smart connected vehicles.

Which Company Is Developing Autonomous Driving Vehicle-Road Collaboration?

In 2016, Baidu began full-stack research and development of vehicle–road collaboration, and it has made staged progress in accumulating scene data and test mileage. At the end of 2018, Baidu officially open-sourced the Apollo vehicle–road collaboration solution, bringing autonomous driving into a new stage of synergy between smart cars and smart roads and building human–vehicle–road–cloud perception intelligence over global data. The road network can support the wide-area viewing angle, redundant perception, and over-the-horizon perception requirements of autonomous vehicles.

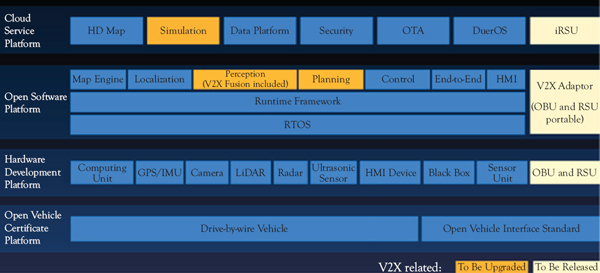

Apollo’s open-source vehicle–road collaboration solution will upgrade the vehicle–road collaboration modules at the software, hardware, and cloud service levels, based on the existing four-layer open technology framework of the Apollo open platform.

• At the reference hardware layer, Apollo will add reference hardware on the vehicle and roadside to complete the information transmission and analysis between the autonomous vehicle and the roadside.

• At the open-source software layer, Apollo has upgraded the perception and decision planning modules, which can complete the fusion processing of V2X information related to vehicle–road collaboration on the vehicle side of the Apollo system; at the same time, it provides software packages that can run on vehicle-end and roadside reference hardware, which are responsible for the related preprocessing of V2X information.

Apollo vehicle–road collaboration open-source solution technical framework

Source: Baidu.

• In the cloud service layer, Apollo opens up intelligent roadside services to provide roadside perception and prediction information required for autonomous driving, and open-source roadside perception and prediction algorithms.

• Upgrade the simulation service capability to expand the simulation scenarios in the vehicle–road collaboration environment.

All of the aforementioned will help developers and enterprises to collaborate and innovate at a higher level without having to reinvent the wheel.

Use Case—How to Achieve Vehicle–Road Collaboration?

In July 2018, at the crossroads of Houchang Village near Baidu Science and Technology Park, a distinctive traffic light appeared—a 64-line LiDAR at the top, and a computing unit, surround-view camera, GPS antenna, differential positioning, V2X roadside communication units, and other equipment.

The first smart traffic light near Baidu Science Park

Source: Baidu.

This is a complete set of roadside sensing and communication equipment. Due to the high price of LiDAR, Baidu’s engineers joked that this may be “the most expensive traffic light in China.”

This equipment detects all traffic participants at the intersection in real time at the object level and sends the results to nearby unmanned vehicles through V2X wireless direct communication for closed-loop testing of collaborative sensing, prediction, and planning. It also analyzes the traffic flow at the intersection and the delays in each direction, and adjusts the traffic light release time in real time on that basis.
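To make the last idea concrete, here is a minimal sketch of one possible rule: share out the signal cycle in proportion to the delay measured in each direction. This is my own simplification and not Baidu’s algorithm. The function name `allocate_green`, the 120-second cycle, the 10-second minimum green time, and the delay values are all hypothetical.

```python
def allocate_green(delays_s: dict[str, float],
                   cycle_s: float = 120,
                   min_green_s: float = 10) -> dict[str, float]:
    """Split one signal cycle across directions in proportion to measured delay."""
    n = len(delays_s)
    if cycle_s < min_green_s * n:
        raise ValueError("cycle too short for the minimum green times")

    # Every direction gets the minimum; the rest is shared out by delay.
    spare = cycle_s - min_green_s * n
    total_delay = sum(delays_s.values())

    green = {}
    for direction, delay in delays_s.items():
        share = delay / total_delay if total_delay > 0 else 1 / n
        green[direction] = min_green_s + spare * share
    return green


# Hypothetical average delays per direction, in seconds.
delays = {"north": 45.0, "south": 15.0, "east": 30.0, "west": 30.0}
plan = allocate_green(delays)
print(plan)
```

With these made-up delays, the direction with the longest queue (north) receives the longest release time, and the four green times still add up to one full cycle. A real controller works with signal phases and safety constraints, which this sketch leaves out.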

In May 2021, Baidu and the Institute for AI Research (AIR) of Tsinghua University jointly released Apollo Air, a set of vehicle–road collaboration technology.8 It uses pure roadside perception capabilities to realize L4-level autonomous driving on open roads. This system puts roadside perception in a very important position. A simple understanding is to cover all the sensors on a self-driving car, making it blind, and relying solely on roadside sensors for perception while the vehicle can still perform autonomous driving.

Baidu is not trying to replace car-side perception with Apollo Air, but to further strengthen the road-side perception capability so that the vehicle–road collaboration system can reach the same high standard as the single-vehicle intelligent system, so as to provide strong support for future self-driving cars and future travel services.

How Big Is the Market for Vehicle–Road Coordination in China? China attaches great importance to the development of supporting infrastructure and emphasizes developing intelligence and network connectivity together. It is building an overall people–vehicle–road–cloud solution with network connectivity, which reduces the difficulty of developing single-vehicle intelligence and supports autonomous driving. The required communication infrastructure is constantly improving.

As of July 2021, China has built 916,000 5G base stations, accounting for 70 percent of the global total; the number of 5G-connected devices has exceeded 365 million, accounting for 80 percent of the global total. The vehicle wireless communication network (LTE-V2X, etc.) achieves regional coverage, and the new generation of vehicle wireless communication network (5G-V2X) is gradually applied in some cities and highways.

Baidu’s calculations assume that two roadside units are required for each kilometer of highway and one roadside computing unit for every 50 km, that the application penetration rate of roadside units in China will reach 30 percent by 2030, and that the penetration rate of high-precision maps is 5 percent. On these assumptions, by 2025 the cumulative investment in China’s main IT equipment for vehicle–road collaboration (roadside units, in-vehicle units, high-precision maps, and roadside computing units) will be 91.2 billion yuan, around $14.4 billion. By 2030, the cumulative investment in major IT equipment for vehicle–road collaboration will reach 283.4 billion yuan, approximately $44.6 billion.
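The per-kilometer assumptions can be turned into a quick equipment count. In the sketch below, the densities (two roadside units per km, one computing unit per 50 km) and the 30 percent penetration rate come from the text, while the 1,000 km highway network is a hypothetical figure chosen only to make the arithmetic easy to follow. The last lines check that the two yuan-to-dollar conversions quoted above imply roughly the same exchange rate.

```python
import math

RSU_PER_KM = 2             # roadside units per kilometer (from the text)
KM_PER_COMPUTE_UNIT = 50   # one roadside computing unit per 50 km (from the text)


def roadside_equipment(highway_km: float, penetration_pct: float) -> tuple[int, int]:
    """Return (roadside units, roadside computing units) for the equipped share."""
    covered_km = highway_km * penetration_pct / 100
    rsus = math.ceil(covered_km * RSU_PER_KM)
    compute_units = math.ceil(covered_km / KM_PER_COMPUTE_UNIT)
    return rsus, compute_units


# Hypothetical 1,000 km network at the 30 percent penetration rate.
rsus, compute_units = roadside_equipment(highway_km=1_000, penetration_pct=30)
print(rsus, compute_units)  # prints "600 6"

# Exchange rate implied by the investment figures quoted in the text.
rate_2025 = 91.2 / 14.4
rate_2030 = 283.4 / 44.6
print(f"{rate_2025:.2f} {rate_2030:.2f}")  # prints "6.33 6.35"
```

Both conversions work out to a little over 6.3 yuan per U.S. dollar, so the dollar figures are consistent with each other.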

Which Is the Largest Market in the Current Autonomous Driving Scenario? Self-driving taxis are the largest market in the current autonomous driving scenario. McKinsey predicts that the global market for self-driving car sales and travel services will exceed $500 billion in 2030; UBS predicts that the global self-driving taxi market will exceed $2 trillion in 2030; KPMG predicts that self-driving cars and services will become an industry worth more than $1 trillion in 2030. Among them, leading autonomous driving technology companies such as Waymo and Baidu will become the main players in the future self-driving taxi market.

In December 2018, Waymo One, Waymo’s self-driving passenger-carrying service, was launched in Phoenix, which is regarded as the beginning of the commercial self-driving taxis worldwide. In 2020, Waymo’s fully driverless self-driving taxis hit the roads in suburban Phoenix.

In June 2021, Cruise received a permit from the California Public Utilities Commission (CPUC) to become California’s first fully driverless self-driving passenger service company on public roads.

In August 2021, Baidu upgraded Apollo Go and launched the self-driving travel service platform—Radish Run. Today, Baidu has successively conducted commercial trial operations of Radish Run in five cities, namely Changsha, Cangzhou, Beijing, Guangzhou, and Shanghai, to explore the industry positioning and pricing model of autonomous driving.

As of November 2021, Baidu has obtained 411 autonomous driving test licenses in China. Baidu is the first company to conduct driverless testing in both China and the United States. It has autonomous driving test licenses in Beijing, Changsha, Cangzhou in China, and California in the United States.

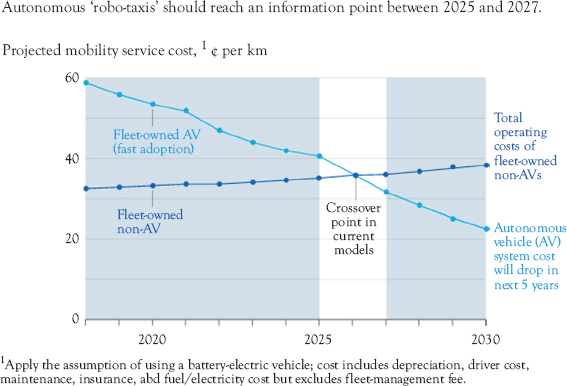

Autonomous robo-taxis should reach an inflection point between 2025 and 2027

Source: McKinsey & Company.

What Is the Role of the Government in Facilitating the Mass Use of Self-Driving Cars? In addition to the technological advancement and cost reduction of self-driving cars, the government plays a key role in the mass adoption of self-driving cars, including the following aspects:

• Formulate relevant industrial policies: laws and regulations on intelligent transportation and on autonomous vehicle R&D, sales, and so on, together with standards and regulatory systems for areas such as intelligent network connection, vehicle–road coordination, road infrastructure, and cloud control infrastructure platforms.

• Integrated planning and leading operations: intelligent transportation is a huge project, spanning network construction, urban digital governance, and a large amount of security and privacy-related data in fields such as public security, traffic management, and urban management.

• The government is the commander-in-chief of intelligent transportation network construction, leading investment and encouraging innovation so that enterprises dare to invest in innovation and expand the scale of applications.

The in-depth cooperation between Baidu Apollo and Guangzhou, China, in the field of intelligent transportation began in August 2020, when Guangzhou Huangpu District, Guangzhou Development Zone, and Baidu Apollo launched the Guangzhou Huangpu District Guangzhou Development Zone for Autonomous Driving and Vehicle-Road Collaboration Smart Transportation New Infrastructure Project. The project plans to deploy urban C-V2X standard digital pedestals, intelligent transportation AI engines, and six city-level intelligent transportation ecological application platforms on a large scale in 133 km of urban open roads and 102 intersections in Huangpu District, and to realize docking applications with existing transportation information systems.

Yizhuang District in Beijing is the world’s first city-level “high-level autonomous driving demonstration zone.” Baidu Apollo is currently working with Yizhuang District to build the zone, which will include five systems (smart cars, smart roads, real-time clouds, reliable networks, and accurate maps) across the 60 sq. km development zone. In the first phase, the two parties will carry out the intelligent transformation for vehicle–road collaboration along 12.1 km of road and at 28 intersections to support high-level autonomous driving demonstration operations.

Taking Yizhuang District in Beijing as an example, under the intelligent transportation operator model, the roles of government departments, platform companies, and various participants are:

• Yizhuang district government: the investor and leading party

• Car network technology companies: intelligent transportation operator

• Digital infrastructure construction companies: infrastructure construction and maintenance; such as planning and implementing where to build smart light poles and what equipment to install

• Enterprises: technology providers (e.g. Baidu) and ecological application developers

What Is the Future Trend of AI Hailed by Forbes Magazine?

No-code AI has been hailed by the Forbes magazine as the future trend of AI.

No-code AI: The future trend of AI hailed by the Forbes magazine

Why Is It so Difficult to Implement an AI Solution?

Over the years, many tech companies have tried to implement AI solutions but have run into problems. One is cost: the annual salaries of data scientists have risen. Analytics Insights has calculated that in the United States, Israel, Germany, Canada, the Netherlands, and other countries, the average annual salary of data scientists is between 60,000 and 88,000 U.S. dollars, as shown in Table 3.1.9

Some companies outsource to third-party companies, but algorithms and data scientists alone are not enough to make AI solve real-world problems. When we built a fraud detection solution for a city bank in 2017, the project had some of the world’s top deep learning experts and a data science team, yet tasks such as data cleaning and parameter selection still required subject matter experts with years of banking experience. According to a survey by Deloitte, 37 percent of managers simply do not have enough expertise to successfully implement an AI solution.10

Table 3.1 The average annual salary of data scientists

| Country | Average annual salary (U.S. dollars) |
| --- | --- |
| United States | $88,000 |
| Israel | $88,000 |
| Germany | $81,300 |
| Canada | $81,000 |
| Netherlands | $75,000 |
| Japan | $70,000 |
| UK | $66,000 |
| Italy | $60,000 |

Source: Analytics Insights.

Are There Ways to Overcome These Bottlenecks and Make Better Use of Artificial Intelligence?

Yes, there are. One of them is no-code AI.

What Is No-Code Artificial Intelligence?

In fact, we are all familiar with no-code tools, which visualize abstract and complex workflows so that users without a technical background can use them. For example, you can use the Shopify website to run an e-commerce business: create your own product pages and manage marketing campaigns and logistics, with no need to build your own system.

No-code AI, on the other hand, is usually achieved through a custom-developed platform or model that user companies can integrate into their current technology stack to immediately use AI for a variety of activities, such as data classification and analysis, or to build their own proprietary AI models for specific business purposes.

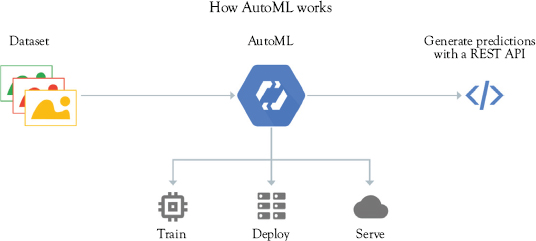

Is There a Difference Between No-Code AI and AutoML Provided by Google?

AutoML is Google’s cloud-based modeling package, which currently includes vision (visual and video intelligence, the latter in beta), language (NLP and translation), and structured data (tabular) capabilities.

How AutoML works

Source: Google.

AutoML in general already covers a lot of ground without code, but it is aimed at professional developers; no-code AI is for nontechnical people.

Are There Any Start-Ups Focused on Developing No-Code AI Solutions?

Yes, there are. I will introduce a start-up called Levity.

Levity offers custom, deep learning-driven automation systems that can be built without code. The deep learning models are trained on data for each user’s specific use case and can be developed without any prior domain knowledge or expertise. Founded in 2020, Levity is headquartered in Berlin, Germany.

Using Levity’s intuitive interface, nontechnical staff at a business of any size can create their own AI-driven automated workflows in minutes, without code, simply by feeding images, documents, PDFs, or ASCII text into the system.

Levity focuses on providing end-to-end solutions and is able to integrate with all the tools users use on a daily basis. Users can define any category or label they want on the platform and train the system as needed. Users can start building their own datasets at any time, even if they don’t have any data. Levity has an integrated labeling system and several pretrained models that can be used out of the box.

Unlike many other software tools, Levity doesn’t charge per seat and user companies can use the system for free across the organization. This allows clients to provide the same abilities to anyone in their team at no additional cost.

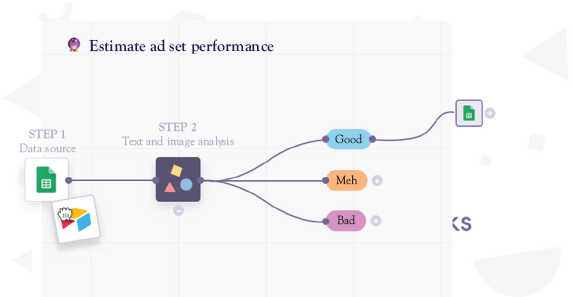

Use Case—Testing the Ad Performance

Here is an example of Levity’s ability to test the effectiveness of ads.

Every company wants to test how effective the ads they publish are. One of the commonly used methods is A/B testing. Some companies test hundreds of ad groups every month. Not only is A/B testing costly, but it can also cause ad fatigue among potential customers, and in the worst case scenario, loss of trust in the brand.

Levity’s system solves this problem by helping companies predict the effectiveness of their ads before they are tested. Levity trains a custom AI model based on the historical performance of the ad set.

Estimate ad set performance

Source: Levity.

The company’s nontechnical staff simply feeds the ad images or copies into the system and can predict which ad groups will perform better before publishing the ads. The whole process is fully automated.
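To give a feel for what “predicting from historical performance” means, here is a deliberately simple sketch. Levity’s product uses deep learning models that I cannot reproduce here, so this example swaps in a much cruder technique: it scores a new ad by the average historical click-through rate of the words it contains. The function names, the ad copies, and the click-through rates are all invented for illustration.

```python
from collections import defaultdict


def train(history: list[tuple[str, float]]):
    """Learn the average click-through rate of past ads containing each word."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for copy, ctr in history:
        for word in set(copy.lower().split()):
            totals[word] += ctr
            counts[word] += 1
    word_ctr = {word: totals[word] / counts[word] for word in totals}
    baseline = sum(ctr for _, ctr in history) / len(history)
    return word_ctr, baseline


def predict(model, copy: str) -> float:
    """Estimate the click-through rate of a new ad; unseen words use the baseline."""
    word_ctr, baseline = model
    words = set(copy.lower().split())
    if not words:
        return baseline
    return sum(word_ctr.get(word, baseline) for word in words) / len(words)


# Invented historical ad copies with their click-through rates.
history = [
    ("free shipping on all orders", 0.040),
    ("free trial today", 0.050),
    ("our company history", 0.010),
    ("read our annual report", 0.012),
]
model = train(history)

candidates = ["our annual history", "free shipping today"]
ranked = sorted(candidates, key=lambda copy: predict(model, copy), reverse=True)
print(ranked)
```

Here the candidate built from words that performed well in the past is ranked first, before any budget is spent on publishing it. A production system would learn from far more data and from the images as well as the text.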

How to Identify No-Code AI?

Whether a system provides no-code AI functions can be judged mainly on the following points:

• Tools that enable users to build solutions from scratch that previously required one or more (ML) engineers to build. That is, it can still be used without any historical data.

• No-code AI systems create value for users and companies of all sizes—not just an enterprise-grade developer tool.

• Available to nontechnical people—this is essentially the core of the no-code movement.

As a reminder, don’t get hung up on using AI solutions. If other methods can achieve the same results faster and more economically, then go with other means. After all, AI is just one of the tools that can help you achieve your company’s goals.

Video version (www.youtube.com/watch?v=MgU9xRSHAIc).