Chapter 6. Performance Testing and the Mobile Client

The mobile phone…is a tool for those whose professions require a fast response, such as doctors or plumbers.

Umberto Eco

The rise of the mobile device has been rapid and remarkable. This year (2014) will see the mobile device become the greatest contributor to Internet traffic, a position it is unlikely to relinquish easily. As a highly disruptive technology, the mobile device has affected most areas of IT, and performance testing is no exception. In my opinion this impact is significant enough to warrant a new chapter, which provides help and guidance to those approaching mobile performance testing for the first time. I have also referred to mobile technology where relevant in other chapters. I will first discuss the unique aspects of mobile device technology and how they affect your approach to performance testing, including design considerations, and then examine mobile performance testing challenges and suggest an approach.

What’s Different About a Mobile Client?

Depending on your point of view, you could say, “not much” or “a whole lot.” As you might be aware, there are four broad types of mobile client:

- The mobile website

This client type differs least from traditional clients in that, as far as the application is concerned, it is really just another browser user. The technology used to build mobile sites can be a little specialized (e.g., HTML5), as can the on-device rendering, but it still uses HTTP or HTTPS as its primary protocol, so it can usually be recorded and scripted just like any other browser client. This is true for both functional and performance testing. You might have to play around with the proxy settings in your chosen toolset, but this is about the only consideration over and above scripting a use case for a PC browser user. Early implementations of mobile sites were often hosted by SaaS vendors such as UsableNet; however, with the rapid growth of mobile skills in IT, companies that choose to retain a mobile, or m., site are generally moving the hosting in-house. There is a definite shift away from mobile sites toward mobile applications, although both are likely to endure, at least in the medium term.

- The mobile application

The mobile application is a different animal, although only in certain ways. It is first and foremost a fat client that communicates with the outside world via one or more APIs. Ironically, it has much in common with legacy application design, at least as far as the on-device application is concerned. Mobile applications have the advantage of eliminating the need to ensure cross-browser compliance, although they are, of course, still subject to cross-device and cross-OS constraints.

- The hybrid mobile application

As the name suggests, this type of application incorporates elements of both mobile site and mobile application design. A typical use case would be to provide on-device browsing and shopping as an application and then seamlessly switch to a common browser-based checkout window, making it appear to the user that he is still within the mobile application. While less common, hybrids present a more complex testing model than an m. site or mobile application alone.

- The m. site designed to look like a mobile application

The least common commercial option, this client was born of a desire to avoid the Apple App Store. In reality it is a mobile site, but one designed and structured to look like a mobile application. This client type is really relevant only to iOS devices, and while there are some very good noncommercial examples, they are increasingly rare in the commercial space. If you wish to explore this topic further, I suggest reading Building iPhone Apps with HTML, CSS, and JavaScript by Jonathan Stark (O’Reilly).

Mobile Testing Automation

Tools to automate the testing of mobile devices lagged somewhat behind the appearance of the devices themselves. Initial test automation was limited to bespoke test harnesses and various forms of device emulation, which allowed generic application functionality to be tested but offered nothing out-of-the-box for on-device testing. The good news is that a number of vendors can now provide on-device automation and monitoring solutions for all the principal OS platforms. These include on-premises tooling and SaaS offerings. I have included a list of current tool vendors in Appendix C.

Mobile Design Considerations

As you may know, there are a number of development platforms and IDEs available for mobile:

- BlackBerry
- iOS for Apple devices
- Android
- Windows Phone for Windows Phone devices

Regardless of the tech stack, good mobile application (and mobile site) design is key to delivering a good end-user experience. This is no different from any other kind of software development, although there are some unique considerations:

- Power consumption

Mobile devices are battery-powered. (I haven’t yet seen a solar-powered device, although one may exist somewhere.) This imposes an additional requirement on mobile application designers to ensure that their applications do not make excessive power demands on the device. The logical way to test this would be to automate functionality on-device and run it for an extended period using a fully charged battery with no other applications running. I haven’t yet had to do this myself; however, it seems straightforward enough to achieve. Another option to explore would be to create a leaderboard of mobile applications and their relative power consumption on a range of devices. While this is probably more interesting than useful, it could be the next big startup success!

- The cellular or WiFi connection

Mobile devices share the cellular network with mobile phones, although phone-only devices are becoming increasingly rare. Interestingly, with the proliferation of free metropolitan WiFi, a lot of urban mobile comms doesn’t actually use the cellular network anymore. Cellular-only traffic is pretty much limited to calls and text messages unless you are using Skype or similar VoIP phone technology. Cellular and WiFi impose certain characteristics on device communication, but in performance testing terms this really comes down to taking into account the effect of moderate to high latency, which is always present, and the reliability of connection, which leads nicely into the next point.

- Asynchronous design

Mobile devices are the epitome of convenience: you can browse or shop at any time in any location where you can get a cellular or wireless connection. This fact alone dictates that mobile applications should be architected to deal as seamlessly as possible with intermittent or poor connections to external services. A big part of achieving this is baking in as much async functionality as you can so that the user is minimally impacted by a sudden loss of connection. This has less to do with achieving a quick response time and more to do with ensuring as much as possible that the end user retains the perception of good performance even if the device is momentarily offline.
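To make the asynchronous design point concrete, here is a minimal sketch, in Python for readability rather than a mobile platform language, of one common pattern: user actions are queued immediately so the UI never waits on the network, and delivery is retried with exponential backoff when a connection is available. The `OfflineQueue` class and the `send` transport it wraps are hypothetical illustrations, not any platform's API; `send` is assumed to raise `ConnectionError` when the device is offline.

```python
import time
from collections import deque
from typing import Callable, Deque


class OfflineQueue:
    """Accept user actions immediately and deliver them when a connection exists.

    `send` is a hypothetical transport: it returns normally on success and
    raises ConnectionError when the device has no usable connection.
    """

    def __init__(self, send: Callable[[dict], None], max_retries: int = 3,
                 base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self._send = send
        self._pending: Deque[dict] = deque()
        self._max_retries = max_retries
        self._base_delay = base_delay
        self._sleep = sleep  # injectable so tests need not actually wait

    def submit(self, request: dict) -> None:
        # Never block the caller (the UI) on the network: just queue the request.
        self._pending.append(request)

    def pending(self) -> int:
        return len(self._pending)

    def flush(self) -> int:
        """Deliver queued requests in order; return how many were sent."""
        sent = 0
        while self._pending:
            if not self._send_with_backoff(self._pending[0]):
                break  # still offline: keep this and later requests for next time
            self._pending.popleft()
            sent += 1
        return sent

    def _send_with_backoff(self, request: dict) -> bool:
        for attempt in range(self._max_retries):
            try:
                self._send(request)
                return True
            except ConnectionError:
                if attempt < self._max_retries - 1:
                    # Exponential backoff between attempts: base, 2x base, 4x base, ...
                    self._sleep(self._base_delay * 2 ** attempt)
        return False
```

In a real application `flush()` would typically be triggered from a background task or a connectivity-change notification rather than called inline, so the user keeps the perception of good performance while the device is momentarily offline.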

Mobile Testing Considerations

Moving on to the actual testing of mobile devices, we face another set of challenges:

- Proliferation of devices

Mainly as a result of mobile’s popularity, the number of different devices has now reached many hundreds. If you add in different browsers and OS versions, you have thousands of different client combinations: basically a cross-browser, cross-device, cross-OS nightmare. This makes ensuring appropriate test coverage, and by extension mobile application and m. site device compatibility, a very challenging task indeed. You have to focus on the top device (and, for mobile sites, browser) combinations, using these as the basis for functional and performance testing. You can deal with undesirable on-device functional and performance defects for other combinations by exception and by using data provided by an appropriate end-user experience (EUE) tool deployment (as we’ll discuss in the next chapter).

- API testing

For mobile applications that communicate using external APIs (which is the vast majority), the volume performance testing model is straightforward. In order to generate volume load, you simply have to understand the API calls that are part of each selected use case and then craft performance test scripts accordingly. Like any API-based testing, this process relies on the relevant API calls being appropriately documented and the API vendor (if there is one) being prepared to provide additional technical details should they be required. If this is not the case, then you will have to reverse-engineer the API calls, which has no guarantee of success—particularly where the protocol used is proprietary and/or encrypted.
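Focusing on the top device combinations, as suggested under proliferation of devices, can be made systematic: rank the combinations by observed sessions and keep adding them until an agreed share of traffic is covered. The sketch below assumes you have exported session counts per device/OS/browser combination from Google Analytics or a similar service into a simple dictionary; the 80% default target and the combination names in the usage example are hypothetical, not recommendations.

```python
from typing import Dict, List, Tuple


def select_test_matrix(sessions: Dict[str, int],
                       target: float = 0.8) -> List[Tuple[str, float]]:
    """Return the most-used client combinations, largest first, until their
    combined share of sessions reaches `target` (a fraction in (0, 1])."""
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    total = sum(sessions.values())
    ranked = sorted(sessions.items(), key=lambda item: item[1], reverse=True)
    selected: List[Tuple[str, float]] = []
    covered = 0.0
    for combo, count in ranked:
        if covered >= target:
            break  # agreed coverage reached; the rest are handled by exception
        share = count / total
        selected.append((combo, share))
        covered += share
    return selected


# Hypothetical session counts, for illustration only.
sessions = {
    "phone-a/ios/safari": 4200,
    "phone-b/android/chrome": 2600,
    "tablet-a/ios/safari": 1700,
    "phone-c/android/chrome": 900,
    "other": 600,
}
for combo, share in select_test_matrix(sessions):
    print(f"{combo}: {share:.0%}")
```

Combinations that fall below the cutoff are exactly the ones you would then cover by exception, using EUE data from production.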

Mobile Test Design

Incorporating mobile devices into a performance testing scenario design depends on whether you are talking about an m. site, a mobile application, or indeed both types of client. It also depends on whether you are concerned with measuring on-device performance or just with having a representative percentage of mobile users as part of your volume load requirement. Let’s look at each case in turn.

On-Device Performance Not in Scope

In my view, on-device performance really should be in scope! However, that is commonly not the case, particularly when you are testing a mobile site rather than a mobile application.

- Mobile site

-

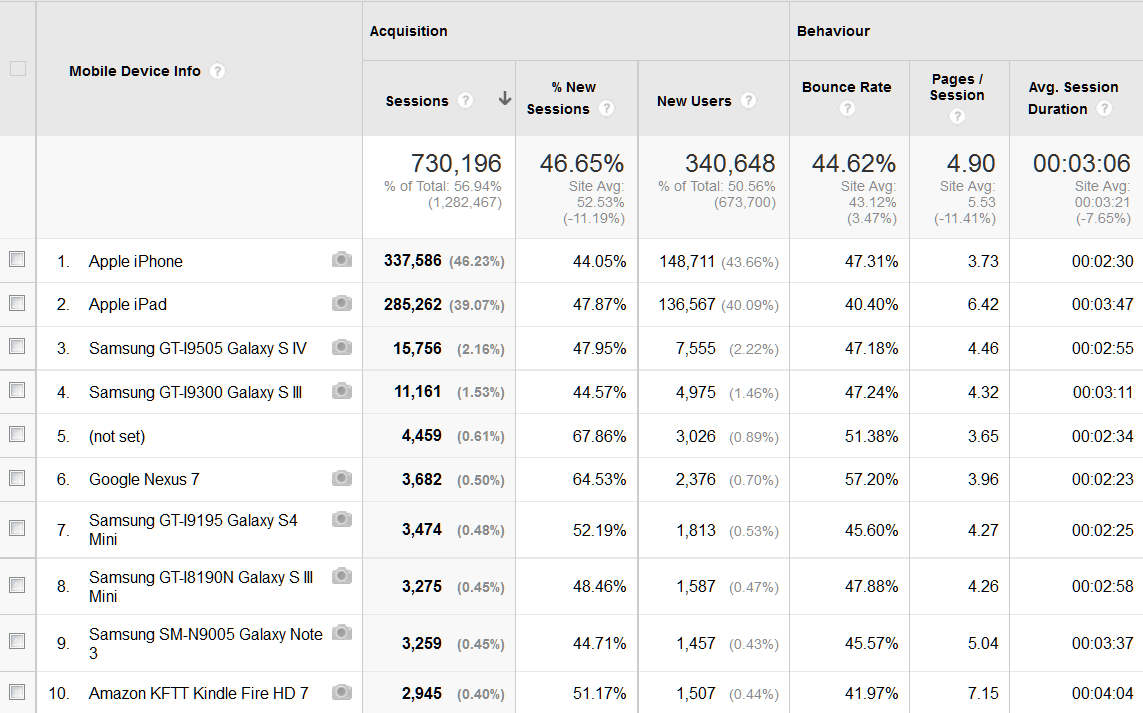

Test design should incorporate a realistic percentage of mobile browser users representing the most commonly used devices and browsers. You can usually determine these using data provided by Google Analytics and similar business services. The device and browser type can be set in your scripts using HTTP header content such as

User agent, or this capability may be provided by your performance tooling. This means that you can configure virtual users to represent whatever combination of devices or browser types you require. - Mobile application

-

As already mentioned, volume testing of mobile application users can really only be carried out using scripts incorporating API calls made by the on-device application. Therefore, they are more abstract in terms of device type unless this information is passed as part of the API calls. As with mobile site users, you should include a representative percentage to reflect likely mobile application usage during volume performance test scenarios. Figure 6-1 demonstrates the sort of mobile data available from Google Analytics.

Figure 6-1. Google Analytics mobile device analysis
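If your tooling does not handle device emulation for you, the two ideas above (a representative mobile percentage and a User-Agent per device type) can be combined in a few lines. This is a minimal sketch under stated assumptions: the profile names, their relative shares, and the placeholder User-Agent strings are all hypothetical and should be replaced with values taken from your own analytics data. The largest-remainder method is used so that the per-profile virtual-user counts always add up exactly to the mobile share of the load.

```python
import urllib.request
from typing import Dict

# Hypothetical placeholder User-Agent strings: substitute the real strings for
# the device/browser combinations your analytics data says you need.
USER_AGENTS: Dict[str, str] = {
    "phone-a": "ExampleUA/1.0 (hypothetical phone-a profile)",
    "phone-b": "ExampleUA/1.0 (hypothetical phone-b profile)",
    "tablet-a": "ExampleUA/1.0 (hypothetical tablet-a profile)",
}


def allocate_users(total_users: int, mobile_fraction: float,
                   shares: Dict[str, int]) -> Dict[str, int]:
    """Split the mobile portion of the virtual-user population across device
    profiles in proportion to `shares`, using the largest-remainder method so
    the per-profile counts always add up to the mobile total."""
    mobile_users = round(total_users * mobile_fraction)
    weight_sum = sum(shares.values())
    exact = {name: mobile_users * weight / weight_sum
             for name, weight in shares.items()}
    counts = {name: int(value) for name, value in exact.items()}
    leftover = mobile_users - sum(counts.values())
    # Give the users lost to truncation to the profiles with the largest remainders.
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


def build_request(url: str, profile: str) -> urllib.request.Request:
    """Build (but do not send) a request identifying itself as `profile`."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENTS[profile]})
```

For example, with 300 virtual users, a hypothetical 25% mobile share, and relative profile weights of 47, 33, and 20, `allocate_users(300, 0.25, {"phone-a": 47, "phone-b": 33, "tablet-a": 20})` assigns 35, 25, and 15 users respectively, and each of those users would send its requests with the matching User-Agent header.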

On-Device Performance Testing Is in Scope

Again, I certainly recommend that on-device performance testing be in scope, but it is often challenging to implement, depending on the approach taken. It is clearly impractical to incorporate the automation of hundreds of physical devices into a performance test scenario. In my experience, a workable alternative is to combine a small number of appropriately automated physical devices with scripts working at the API or HTTP(S) level that represent the volume load. The steps to achieve this are the same for mobile sites and mobile applications:

- Identify the use cases and test data requirements.
- Create API-level and on-device scripts. This may be possible using the same toolset, but assume that you will require different scripting tools.
- Baseline the response-time performance of the on-device scripts for a single user with no background load in the following sequence:
  - Device only. Stub external API calls as appropriate.
  - Device with API active.
- Create a volume performance test scenario incorporating, where possible, the on-device automation and API-level scripts.
- Execute only the on-device scripts.
- Finally, execute the API-level scripts concurrently with the on-device scripts.

As API-level volume increases, you can observe the effect on on-device performance relative to the baseline response times.

Measuring on-device performance for applications was initially a matter of making use of whatever performance-related metrics the IDE and OS could provide. Mobile sites were, and still are, easier to measure, as you can use many of the techniques and tools common to capturing browser performance. I remain a fan of instrumenting application code to expose a performance-related API that can integrate with other performance monitoring tooling such as APM. This is especially relevant to the mobile application, where discrete subsets of functionality have historically been challenging to time accurately. As long as it is carefully implemented, there is no reason why building in code hooks should create an unacceptable resource overhead. This approach also provides the most flexibility in the choice of performance metrics to expose. As an alternative, the major APM tool vendors and others now, as you might expect, offer monitoring solutions tailored for the mobile device. I have included appropriate details in Appendix C.
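The sketch below illustrates both ideas from the preceding paragraphs: lightweight code hooks that time named operations inside the application, and a simple comparison of on-device timings under load against the single-user baseline. It is written in Python for illustration only; `PerfHooks` is a hypothetical class, not any vendor's SDK, and a real mobile implementation would be written in the platform language and forward its data to your chosen monitoring or APM tooling. The 90th percentile (nearest-rank method) is an assumed choice of metric.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import DefaultDict, Dict, Iterator, List


class PerfHooks:
    """Minimal in-app timing hooks (hypothetical, not a vendor SDK).

    Named operations are timed with a context manager; snapshot() exposes the
    collected samples so they could be forwarded to monitoring tooling.
    """

    def __init__(self) -> None:
        self._samples: DefaultDict[str, List[float]] = defaultdict(list)

    @contextmanager
    def timed(self, operation: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the elapsed time even if the timed operation raised.
            self._samples[operation].append(time.perf_counter() - start)

    def snapshot(self) -> Dict[str, List[float]]:
        return {op: list(times) for op, times in self._samples.items()}


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def degradation(baseline: List[float], under_load: List[float],
                pct: float = 90) -> float:
    """Ratio of the under-load percentile to the baseline percentile
    (1.0 means no change; 2.0 means twice as slow)."""
    return percentile(under_load, pct) / percentile(baseline, pct)
```

Keeping the hook to a timestamp pair and an in-memory list is one way to honor the "carefully implemented" caveat: the overhead per timed operation stays small, and the decision about which metrics to expose stays entirely in your hands.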