2

Describing Graphical Information

Before we represent images and interfaces in a graphical form on a computer screen, the underlying digital information is subject to different processes. Although the surface level is always based on screen pixels, the description of images follows different forms and formats: from bitmap images to 2D and 3D vector graphics, etc. Accordingly, the kind of processes that can be performed varies for each type of image.

When we use a software application to process graphical information, we have at our disposition a series of predefined options, parameters and operations for working with information. This is a practical entry point to start discovering the functionalities harnessed by the software environment. However, we are often also confronted with the necessity of understanding what is happening “under the hood” and sometimes we even want to go further than the given possibilities at hand.

This chapter is devoted to the foundations of graphical information. In this part, we consider images as data and digital information. We look at the technical aspects that define a digital image: from data types and data structures required to render an image on the computer screen to seminal algorithms and image processing techniques. Our aim is to offer a technical overview of how images are handled by the computer and to identify how they are implemented at higher levels of description, that is, in graphical visualizations of data, user interfaces, and image interfaces for exploring information.

2.1. Organizing levels of description

Digital images can be seized from different angles: as a series of pixels on screen, as a computer code, as mathematical descriptions of vertices and edges, or as sequences of bits and bytes. Different forms depend on the level of description at which we study them. Generally speaking, a level of description uses its own language, notation system, and model rules in order to understand and explain the components of a lower level.

Scientist Douglas Hofstadter observes that any aspect of human thinking can be considered a “high level description of a system [the brain] which, at a lower level, is managed by simple and formal rules” [HOF 85, p. 626]. Lower levels can be so complex that for practical reasons, we take for granted their internal mechanisms and we produce a semantic abstraction of them. Thus, “the meaning of an object is not located in its interior” [HOF 85, p. 653]; it rather comes from multidimensional cognitive structures: previous experiences, intuitions, mental representations, etc. Following this argument, we will understand software from its material components and material relationships: programming languages and graphical interfaces that allow using algorithms that have been designed according to determinate data structures and electronic circuitry, that rely on more basic data types that give shape to bytes and bits.

So how and where should we start analyzing digital images and interfaces? If we choose the software level as entry point, then we would have to take for granted lower layers that might be useful in our account. Moreover, which aspect of software should we consider: the compiled application, the source code or the graphical user interface? And what about the relationship between software and hardware, including display, storing and processing components?

With these questions in mind, the model called “generative trajectory of expression”, proposed by semiotician Jacques Fontanille [FON 08] (briefly introduced in section 1.4.2), will help us distinguish among different layers of meaning and description. The term “generative” is borrowed from semiotician Algirdas Greimas, who uses it to accentuate the act and mode of production–creation. The term “trajectory” makes reference to the fact that there are several components that intervene and cooperate in the production mode. This trajectory goes, similar to Hofstadter, from simple to complex, from abstract to concrete levels. Although Fontanille has continued to develop his model in the analysis of practices and forms of life, we have discussed it and adapted it to more fundamental levels regarding digital images.

Table 2.1 summarizes the different levels through which digital images are used and produced. Each level claims a double face (or interface as Fontanille calls them [FON 08, p. 34]), where there is always a formal part that points to a lower level structure, and a material–substantial part directed towards the manifestation in a higher level. In other words, what is formal at one level derives from what is substantial in a lower level; and what is substantial is delimited by its formal support. To study the intricacies at any given level, it is necessary to revise how it is produced from its different components.

In the following parts of this chapter, we will follow this generative trajectory to offer a technical overview of how images are handled by the computer and to identify how they are implemented at higher levels of description. We will develop formally the first three levels: signs, texts and objects. The remaining levels can be implied in the following chapters.

In Table 2.1, the emphasis is made on the “expression plane” rather than on the “content plane”. In semiotic studies, the expression is part of the meaning process that is perceptible, while the content is the abstract, internal and interpretative part that is evoked or suggested in front of an expression.

For a different look at how semiotics has been approached to study computing processes, or if the reader desires more insights from the “content” perspective, we might point to seminal works by Peter Andersen [AND 97], Clarisse de Souza [DES 05] and Kumiko Tanaka-Ishii [TAN 10]. Historically, Heinz Zemanek [ZEM 66] was among the first to study relationships between language and compilers, asking for instance, what do different translating principles and what do different compilers do to the language? The articulation of Zemanek’s semiotic thinking is grounded on the Vienna Circle, which was influenced by Bertrand Russell and Charles Morris, the latter being a follower of Charles Peirce and who distinguished formalization in terms of syntactic, semantic and pragmatic dimensions. More recently, the linguistic turn is also considered by Frederica Frabbeti [FRA 15], who connects saussurean signifiers to voltages and micro-circuitry and to their signified meaning according to the rules of programming languages in which the code is written. She is interested in how the formalization of language makes them an instrument [FRA 15, p. 134]; reading Hayles, Kittler, Derrida and Stiegler, among others, to investigate the larger question of code and metaphysics.

Table 2.1. Levels of description. Semiotic trajectory of expression with an adaptation to digital images

| Level of pertinence | Interface | Expression in digital image (Formal/Material) | Experience |

| 1. Signs | Source of formants | Electron diffusion, binary code | Figuration |

| Recursive formants | Data types, data structures, algorithms (logical and mathematical rules) | ||

| 2. Texts | Figurative isotopies of expression | Syntax and semantics of programming languages, programming styles, elements of the graphical user interface (GUI) | Interpretation |

| Enunciation/inscription device | Programming code, graphical user interfaces, file formats, application software | ||

| 3. Objects | Formal support of inscription | Raster grid | Corporeity |

| Morphological praxis | Display technologies (screens CRT, LCD, LED, DLP); capturing devices (CCD, CMOS); printing devices (2D and 3D) | ||

| 4. Scenes of practice | Predicative scenes | Manipulating, retouching, drawing, designing, experimenting, discovering, etc. | Practice |

| Negotiation processes | Different practices, e.g. artistic, aesthetic, commercial, educational, professional | ||

| 5. Strategies | Strategic management of practices | Fields and domains such as image processing, computer vision, computer graphics, digital humanities, UX/UI | Conjuncture |

| Iconisation of strategic behaviors | Working with images as a scientist, or as an artist, or as a designer, or as a social researcher | ||

| 6. Life forms | Strategic styles | Digital culture, digital society | Ethos and behavior |

2.2. Fundamental signs of visual information

At the beginning of the trajectory, meaning starts to take form in basic units of expression. In the case of digital information, such units are essentially abstract. Digital computers are considered multipurpose precisely because they can be programmed to perform a wide variety of operations. That means the same hardware components can be configured to support many diverse uses and applications. This is possible because the fundamental type of information that digital systems handle is in abstract binary form.

In this section, we explain how fundamental units of expression are configured from abstract to more concrete idealizations that help achieve envisioned operations with digital computers. In other words, our goal is to take a glance at the basic pieces underlying software environments. To do that, we go from data types to data structures in order to identify how they allow the implementation of solutions to recurrent problems. Conversely, it also occurs that the nature of problems dictates how information should be organized to obtain more efficient results.

2.2.1. From binary code to data types

The binary form is represented with binary digits, also called bits. Each bit has only two possible values: 0 or 1. A series of bits is called binary code, and it can express notations in different systems. For example, human users use different notation systems to refer to numbers: instead of using a 4-digit binary code such as 0101, we will likely say 5 in our more familiar decimal system, or we could have written it using another notation: “V” in Roman.

Hence, all sign systems that can be simulated by computers are ultimately transformed into binary code. As we can imagine, sequences of binary code become complex very rapidly because they increase in length while using the same elementary units. On top of binary code, other number systems exist with the intention to overcome this difficulty. While octal and hexadecimal number systems have been used, the latter remains more popular; it compacts four binary digits into one hex digit. Table 2.2 summarizes the equivalencies for numerical values, from 0 to 15, in hexadecimal code and in 4-digit binary code.

Table 2.2. Numerical notations: decimal, hexadecimal and binary

| Decimal number | Hexadecimal | 4-digit binary code |

| 0 | 0 | 0000 |

| 1 | 1 | 0001 |

| 2 | 2 | 0010 |

| 3 | 3 | 0011 |

| 4 | 4 | 0100 |

| 5 | 5 | 0101 |

| 6 | 6 | 0110 |

| 7 | 7 | 0111 |

| 8 | 8 | 1000 |

| 9 | 9 | 1001 |

| 10 | A | 1010 |

| 11 | B | 1011 |

| 12 | C | 1100 |

| 13 | D | 1101 |

| 14 | E | 1110 |

| 15 | F | 1111 |

In terms of memory storage of bits, the industry standard since early 1960s are 8-bit series also called bytes or octets. Generally speaking, a byte can accommodate a keyboard character, an ASCII character, or a pixel in 8-bit gray or color scale. Bytes also constitute the measure of messages we send by email (often in kilobytes), the size of files and software (often in megabytes), or the capacity of computing devices (often in giga or terabytes). As we will see, the formal description in terms of 8-bit series is manifested at higher levels of graphical interface: from the specification to the parameterization of media quality and visual properties.

In practice, binary code is also called machine language. Bits represent the presence or absence of voltage and current electrical signals communicating among the physical components of digital computers such as the microprocessor, the memory unit, and input/output devices. Currently, machine language is of course hardly used. What we use to write instructions to computers are higher-level languages. One step above machine language we find assembly language and, at this level, it is interesting to note that the literature in electrical, electronic and computer engineering distinguishes between “system software” and “applications software” [DAN 02, p. 9].

In general terms, assembly language is used for developing system software such as operating systems (OS), and it is specific to the type of hardware in the machine (for example, a certain kind of processor). On the other hand, applications software run on the OS and can be written in languages on top of assembler, such as C. The passage from one level to another requires what we would call “meta-programs”. An assembler converts assembly into machine language, while a compiler does the same but from higher-level language.

Before moving from the formal level of binary signs into a more material level of programming languages, we should clarify that this level (as it may occur in any other level of the generative trajectory) could be further elaborated if we operate a change of “scale”. We could be more interested to know how, for instance, signals behave among digital components, or how they are processed by more basic units such as the algorithmic and logic unit (ALU), or how circuits are designed logically, or how they are interconnected. In order to do that, we should turn to the area of study called “digital and computer design”.

2.2.2. Data types

As we have seen, bits are assembled to represent different systems of signs. Our question now is: which are those different systems and how do computers describe them? We already saw that binary code is used to describe numbers and letters, and from there we can create words and perform mathematical operations with numbers.

Data types represent the different kinds of values that a computer can handle. Any word and any number are examples of values: “5”, “V”, or “Paris”. From the first specifications of programming languages, we recognize a handful of basic data types, or as computer scientist Niklaus Wirth called them: “standard primitive types” [WIR 04, pp. 13–17]:

- – INTEGER: for whole numbers

- – REAL: numbers with decimal fraction

- – BOOLEAN: logical values, either TRUE or FALSE

- – CHAR: a set of printable characters

- – SET: small sets of integers, commonly no greater than 31 elements

These particular data types were first used in languages in which Wirth was involved, from Algol 68 and Pascal in 1969 to Oberon in 1985. Currently, many programming languages use the same data types although with different names and abbreviations. As a matter of fact, the situation of identifying which data types are supported by any language is experienced most of the time when we learn a new language. Considerable time is spent in distinguishing those syntax differences.

Data types allow performing, programming and iterating actions with them. For example, basic operations with numbers include addition, subtraction, division and multiplication, while operations with words are conjunction and disjunction of characters. However, data types can be combined and organized in order to support more complicated operations, as we will see in the following section.

In the case of graphical information, there are two fundamental approaches to data types. From the standpoint of image processing and computer vision, the accent is placed on bitmap and raster graphics because many significant processes deal with capturing and analyzing images. A different view of data types is that of computer graphics whose focus is on vector graphics as a model to describe and synthetize 2D figures and 3D meshes that can be later rasterized or rendered as a bitmap image. We will now take a brief look at both perspectives.

2.2.2.1. Data types and bitmap graphics

The bitmap model describes an image as a series of finite numerical values, called picture elements or pixels, organized into a 2D matrix. In its most basic type, each value allocates one bit, thus it only has one possible brightness value, either white or black. The described image in this model is also known as monochrome image or 1-bit image.

In order to produce gray scale images, the amount of different values per pixel needs to be increased. We refer to 8-bit images when each pixel has up to 255 different integer values. If we wonder why there are only 255 values, the explanation can be made by recalling Table 2.2: the 4-bit column shows all the different values between 0000 and 1111 and their corresponding decimal notations. An 8-bit notation adds 4 bits to the left and counts from 00000000 to 11111111, where the highest value in decimal notation is 255.

Nowadays, the most common data type used for describing color images is 24-bit color. Taking as primary colors the red, green and blue, every pixel contains one 8-bit layer for each of these colors, thus resulting in a 24-bit or “true color” image. As such, the color for a given pixel can be written in a list of three values. In programming languages such as Processing, the data type COLOR1 exists together with other types, like BOOLEAN, CHAR, DOUBLE, FLOAT, INT and LONG.

The red, green and blue color combination has been adopted as the standard model for describing colors in electronic display devices. From the generic RGB color model, there are more specific color spaces in use, for example:

- – RGBA: adds an extra layer for the alpha channel that permits modification of transparency. In this case, images are 32-bit.

- – sRGB: a standardized version by the International Electrotechnical Commission (IEC) and widely used in displaying and capturing devices.

- – HSB (Hue, Saturation, Brightness) or HSL (Hue, Saturation, Lightness): a color representation that rearranges the RGB theoretical cube representation into a conical and cylindrical form (we will revise those models in section 2.4).

In the form of data types, colors are handled as binary codes of 24 or 32 bits. Table 2.3 shows some RGB combinations, their equivalent in HSB values, in hexadecimal notation, in binary code, and their given name by the World Wide Web Consortium (W3C).

Table 2.3. Color notations: W3C name, RGB, HSB, hexadecimal, binary code

| Name | RGB | HSL | Hex | 24-digit binary code |

| Black | 0, 0, 0 | 0, 0%, 0% | #000000 | 00000000 00000000 00000000 |

| Red | 255, 0, 0 | 0, 100%, 50% | #FF0000 | 11111111 00000000 00000000 |

| Lime (Green) | 0, 255, 0 | 120, 100%, 50% | #00FF00 | 00000000 11111111 00000000 |

| Blue | 0, 0, 255 | 240, 100%, 50% | #0000FF | 00000000 00000000 11111111 |

| Cyan or Aqua | 0, 255, 255 | 180, 100%, 50% | #00FFFF | 00000000 11111111 11111111 |

| Magenta or Fuchsia | 255, 0, 255 | 300, 100%, 50% | #FF00FF | 11111111 00000000 11111111 |

| Yellow | 255, 255, 0 | 60, 100%, 50% | #FFFF00 | 11111111 11111111 00000000 |

| Gray | 128, 128, 128 | 0, 0%, 50% | #808080 | 10000000 10000000 10000000 |

| White | 255, 255, 255 | 0, 0%, 100% | #FFFFFF | 11111111 11111111 11111111 |

| Tomato | 255, 99, 71 | 9, 100%, 64% | #FF6347 | 11111111 01100011 01000111 |

In domains like astronomy, medical imagery, and high dynamic range imagery (HDRI), 48- and 64-bit images are used. For these types, each image component has 16 bits. The reason for adding extra layers is to allocate space for different light intensities in the same image, or to describe pixel values in trillions of colors (what is also called “deep color”). However, although software applications that allow us to manipulate such amounts of data have existed for some time, the hardware for capturing and displaying those images is still limited to specialized domains.

2.2.2.2. Data types and 2D vector graphics

Vector graphics describe images in terms of the geometric properties of the objects to be displayed. As we will see, the description of elements varies depending on the type of images that we are creating – currently 2D or 3D. In any case, such description of images occurs before its restitution on screen; this means that graphics exist as formulae yet to be mapped to a position on the raster grid (such positions are called screen pixels; see section 2.4.1.).

The equivalent to data types in vector graphics are the graphics primitives. In 2D graphics, the elementary units are commonly:

- – Points: represent a position along the X and Y axes. The value and unit of measure for points in space are commonly real (or float) numbers expressed in pixels, for example, 50.3 pixels. Furthermore, the size and color of points can be modified. Size is expressed in pixels, while color can be specified with values according to RGB, HSL or HEX models (see Table 2.3).

- – Lines: represent segments by two points in the coordinate system. Besides the position of points and the color of points, the line or both, line width and line style can also be modified. The latter with character strings (solid, dot or dash pattern), and the former with pixel values.

- – Polylines: connected sequences of lines.

- – Polygons: closed sequences of polylines.

- – Fill areas: polygons filled with color or texture.

- – Curves: lines with one or more control points. The idea of control points is to parameterize the aspect of the curve according to the polygonal boundary created by the set of control points, called the convex hull. Thus, we can position points in space but also the position of control points, both expressed in real number values. There are several kinds of curves, each of them representing different geometrical properties, conditions and specifications:

- – Quadratic curves: curves with one control point.

- – Cubic curves: curves with two control points.

- – Spline curves (or splines): curves with several cubic sections.

- – Bézier curves: curves based on Bernstein polynomials (mathematical expressions of several variables).

- – B-splines (contraction of basis splines): curves composed of several Bézier curves that introduce knots, a kind of summarization including different control points from single Bézier curves.

- – Circles, ellipses and arcs: a variety of curves with fill areas properties. That means that while a circle can be easily imagined to have a color or texture fill, it is also the case for only a fragment of its circumference. In that instance, the segment is an arc that closes the shape at its two points. The ellipse is a scaled circle in a non-proportional manner.

Today, many programming languages provide combinations of already made prototypical shapes. The most common examples are ellipses and rectangles, which means that triangles and other polygons (pentagons, hexagons, irregular shapes with curved edges, etc.) have to be specified through vertices points. Another case is text, which typically needs to load an external font file (file extensions OTF (OpenType Font) or TTF (TrueType Font)) containing the description of characters in geometrical terms.

2.2.2.3. Data types and 3D vector graphics

In 3D graphics, the notion of graphics primitives depends on the modeling technique used to construct a digital object. In general, we can identify two major approaches: surface methods and volumetric methods. The first method implies the description and representation of the exterior surface of solid objects while the second method considers its interior aspects. Allow us to explore briefly both the approaches.

- – Surface methods: polygons can be extended to model 3D objects, in an analogous fashion to 2D graphics. Today, many software applications include what are called standard graphics objects, which are predefined functions that describe basic solid geometry such as cubes, spheres, cones, cylinders and regular polyhedra (tetrahedron, hexahedron, octahedron, dodecahedron and icosahedron, containing 4, 6, 8, 12 and 20 faces, respectively).

For different kinds of geometries, another technique of modeling objects consists of considering basic graphics units in terms of vertices, edges and surfaces. In this respect, it is necessary to construct the geometry of an object by placing points in space. The points can then be connected to form edges, and the edges form planar faces that constitute the external surface of the object.

Besides polygons, it is also possible to model surfaces from parametric curves. We talk about spline surfaces, Bézier surfaces, B-spline surfaces, beta-splines, rational splines, and NURBS (non-uniform rational B-splines). The main advantage of using curves over polygons is the ability to model smooth curved objects (through interpolation processes). Overall, a spline surface can be described from a set of at least two orthogonal splines. The several types of curves that we mentioned stand for specific properties of control points and boundary behaviors of the curve2.

- – Volumetric approaches: we can mention volume elements (or voxels) as basic units for a type of modeling that is often used for representing data obtained from measuring instruments such as 3D scanners. A voxel delimits a cubic region of the virtual world, in a similar manner to pixels, but of course a voxel comprises the volume of the box. If an object exists in a particular region, then it could be further described by subdividing the interior of the region into octants, forming smaller voxels. The amount of detail depends on the necessary resolution to be shown adequately on the screen.

A couple of other different techniques for space-partitioning representation can be derived from combining basic elements. For instance, constructive solid geometry methods unveil superposed regions of two geometrical objects. Basically, there are three main operations on such sets: union (both objects are joined), intersection (only the intersected area is obtained) and difference (a subtraction of one object from the other). The second case is called extrusion or sweep representations. It consists of modeling the volume of an object from a curve that serves as a trajectory and a base model that serves as the shape to be extruded.

The other example of the volumetric approach considers scalar, vectors, tensors and multivariate data fields as the elementary type for producing visual representations (in the next section, we will cover vectors such as data structures in more detail). These cases are more used in scientific visualization, and they consist of information describing physical properties such as energy, temperature, pressure, velocity, acceleration, stress and strain in materials, etc. The kind of visual representations that are produced from these kinds of data types usually take the form of surface plots, isolines, isosurfaces, and volume renderings [HEA 04, pp. 514–520].

As can be inferred from the last example, an object should be further described in order to parameterize its color, texture, opacity, as well as how it reacts to light (reflecting and refracting it, for instance). Without this information, it could be impossible to explore or see inside a volumetric object. Together with its geometrical description, a digital object also includes data called surface normal vectors, which is information that defines the simulated angle of light in relation to the surface.

Finally, for all cases in 3D graphics, we can also think about triangles as primitive elements. Any surface of an object is tessellated into triangles or quadrilaterals (the latter being a generalization of the former because any rectangle can be divided into two triangles) in order to be rendered as an image on screen. Even in the case of freeform surfaces, they often consist of Bézier curves of degree 3 as they can be bounded into triangles more easily.

2.2.3. Data structures

Generally speaking, we can approach data structures or information structures as a special form of organizing data types. We create data structures for practical reasons; for example, a data structure can hold information that describes a particular geometrical form or, more simply, it can store a bunch of data as a list. In fact, the way in which data is structured depends on what we intend to do with that data. Therefore, data structures are intimately related to algorithms – which are the specific topic of section 2.2.4.

Computer scientist Niklaus Wirth considered data structures as “conglomerates” of already existing data types. One of the main issues of grouping and nesting different types of information has always been arranging them efficiently, both for retrieval and access, but also for storing. Data representation deals with “mapping the abstract structure onto a computer store… storage cells called bytes” [WIR 04, p. 23]. Wirth distinguished between static and dynamic structures that he implemented in the programming languages where he was involved as a developer. Static structures had a predefined size, and thus the allocated memory cells were found in a linear order. Examples of this type are arrays, records, sets and sequences (or files). On the contrary, dynamic structures derivate from the latter but vary in size, values, and can be generated during the execution of the program. In this case, the information is distributed along different non-sequential cells and accessed by using links. Examples of this type are pointers, linked lists, trees and graphs.

With similar goals but different perspective, computer scientist Donald Knuth categorized information structures into linear and nonlinear. Porting his theoretical concepts to his programming language MIX, Knuth counts as linear structures: arrays, stacks, queues and simple lists (circular and doubly linked) [KNU 68, p. 232]. Nonlinear structures are mainly linked lists and trees and graphs of different sorts: binary trees, free trees, oriented trees, forests (lists of trees) [KNU 68, p. 315].

Data structures are used extensively at different levels of the computing environment: from the design and management of OS to the middleware to the web applications layer. Moreover, data structures can be nested or interconnected, becoming larger and complex. In some cases, it is necessary to transform them, from one structure to another. Although it is out of our scope to study data structures in detail, we review those that seem to be fundamental for visual information.

2.2.3.1. Data structures for image processing and analysis

In the last section, we mentioned that common techniques for image processing are performed on bitmap images, which are composed of picture values named pixels. Such values are orderly arranged into a bi-dimensional array of data called a matrix. A bitmap is thus a kind of array data structure.

- – Arrays: the array is one of the most popular data structure of all time. It defines a collection of elements of the same type, organized in a linear manner and that can be accessed by an index value that is, in its turn, an integer data type.

- – Matrices: elements of the array can also be themselves structured. This kind of array is called a matrix. A matrix whose components describe two element values is a 2D matrix. In the case of bitmap images, those values constitute the rows and columns of the pixel values that describe an image (X and Y coordinates). Of course, different kinds of matrices exist: multidimensional matrices (as in 3D images) or jagged arrays (when the amount of elements in arrays is not regular), to mention a couple.

- – Quadtrees: these are known as nonlinear data structures. They are based on the concept of trees or graphs. A quadtree structure is created on top of a traditional rectangular bitmap in order to subdivide it in four regions (called quadrants). Then the structure decomposes a subregion into smaller quadrants to go into further detail. The recursive procedure extends until the image detail arrives at the size of a pixel. In the following section, we will illustrate some of the uses and applications of quadtrees.

- – Relational tables: Niklaus Wirth thought of them as matrices of heterogeneous elements or records. Tables associate rows and columns by means of keys. By combining a tree structure to detect boundaries and contours, the table can be used to associate semantic descriptions with the detected regions.

2.2.3.2. Data structures for image synthesis

Image synthesis is a broad field interested in image generation. In visual computing, it is the area of computer graphics that deals more specifically with image synthesis. From this perspective, we observe three main models for image representation: 1) meshes; 2) NURBS and subdivisions (curves and surfaces); and 3) voxels. The first model has been widely adopted for image rendering techniques (for rasterizing vector graphics), the second in object modeling (shaping surfaces and objects), and the third in scientific visualization (creating volumetric models out of data).

Each one of these models takes advantage of the fundamental data structures (arrays, lists, trees) in order to build more complex structures adapted to special algorithmic operations. These structures are known as graphical data structures, including geometrical, spatial and topological categories. By abstraction and generalization of their properties, they have been used to describe and represent 1D, 2D and 3D graphics.

At the most basic level, graphics consist of vertices and edges. One way to describe them is according to two separate lists: one for the coordinates of vertices and the other for the edges, describing pairs of vertices. The data structure for this polyline is called 1D mesh [HUG 14, p. 189].

Of course, points coordinates are not the only way to describe a line. Vector structures are a different approach that exploits two properties: magnitude and direction. In this sense, the line is no longer fixed in the space, but becomes a difference of points. It maintains its properties even if the positions of points are transformed. The magnitude property defines the distance between two points while the direction defines the angle with respect to an axis. As we mentioned earlier, vectors are used in scientific domains because it is straightforward to simulate velocity and force with them as both the phenomena show magnitude and direction properties. The latter quantity is the amount of push/pull and the former quantity refers to the speed of moving an object.

Here we should evoke briefly tensors, which are a generalization of vectors. Tensors are defined by coefficient quantities called ranks, for example, the number of multiple directions at one time. They are useful, for instance, to simulate transformation properties like stress, strain and conductivity. For terminology ends, tensors with rank 0 are called scalars (magnitude but no direction); with rank 1 are called vectors (magnitude and direction); with rank 2 are called matrices; with rank 3 are often called triad; and with rank 4 tetrads, and so on. As we will explore in further chapters, vectors and tensors have gained popularity as they are used for modeling particle systems, cellular automata, and machine learning programs.

In 2D and 3D, vertices and edges are grouped in triangles. A series of triangles connected by their edges produce a surface called triangle mesh. In this case, the data structure consists mainly of three tables: one for vertices, one for triangles and one for the neighbor list. The latter table helps identify the direction of the face and the normal vectors (this is important when surfaces are illuminated, and it is necessary to describe how light bounces off or passes through them).

Besides triangle meshes, it is also possible to model objects with quadrangles (also named quads) and other polygons of more sides. A simple case of using quads would be to insert a simple bitmap image (a photograph, an icon, etc.) inside a container plane (sometimes called a pixmap). There is today some debate around the reasons to choose quads over triangles (most notably in the digital modeling and sculpting communities); however, current software applications often process in the background the necessary calculations to convert quads into triangles. Triangles anyhow are preferred because of their geometric simplicity: they are planar, do not self-intersect, they are irreducible and therefore are easier to join and to rasterize.

With the intention to keep track, find intersections, and store values and properties of complex objects and scenes, the field of computer graphics has developed spatial data structures. Such structures are built on the concept of polymorphism, which states that structures of some primitive type be allowed to implement their operations on a different type. We can cite two main kinds of spatial data structures: lists and trees.

- – Lists: they can be considered as a sequence of values whose order is irrelevant for the operations that they support. Lists are among the most basic and earliest forms of data structure. Donald Knuth, for example, identified and implemented two types of lists still popular today: stacks and queues. Stacks allow deleting or inserting elements from one side of the list (the top, for instance, representing the latest element added and the first element deleted). Queues allow deleting data from one side (for example, the first element) while adding new data to the other end. In the case of visual information, lists can be generalized to higher dimensions: each element of the list can be a different structure, for instance, an array containing two or three position values.

- – Trees: as we mentioned earlier, trees are nonlinear structures that store data hierarchically. The elements of trees are called nodes, and the references to other nodes are edges or links. There are several variations of trees (some lists have tree-like attributes): linked lists (where nodes point to another in a sequential fashion); circular linked lists (where the last node points back to the first); doubly linked lists (where each node points to two locations, the next and the precedent node); binary trees and binary search trees (BST) (where each node points simultaneously to two nodes located at a lower level).

Applied to visual information, there are three important variations of trees:

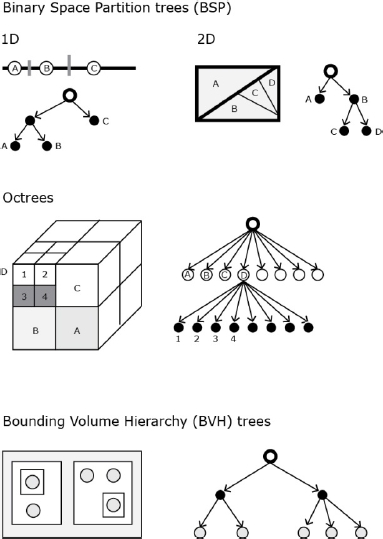

- – Binary space partition (BSP) tree: while a 2D spatial tree will divide the space by means of 2D splitting lines, a BSP will partition the inner space of volumes into subspaces. Depending on the algorithmic operations, subspaces can be 2D polygons, 3D polyhedra or higher-dimensional polytopes [HUG 14, p. 1084].

- – Octrees: octrees extend the idea of quadtrees from two dimensions to three dimensions. The tree structure consists of nodes pointing to eight children that describe the volume space. The principle is to start from the cube volume that surrounds a model and to recursively subdivide it into smaller cubes. As in the case of quadtrees, each division is performed at the center of its parent.

- – Bounding volume hierarchy (BVH) tree: this structure separates space in nesting volumes by means of axis-aligned boxes (bounding tight clusters of primitives). Its implementation requires first a BSP tree and then boxes form bottom-top (from the leaves to the root). Even though boxes at the same level often overlap in this passage, it has demonstrated better efficiency over octrees and has gained recent popularity for ray tracing and collision detection operations.

To close this section, we should point to the fact that image synthesis considers the generated or modeled graphical object together with the virtual world, or environment, where it exists. For example, in 3D graphics, the data structure scene graph arranges the whole universe of the image, from the scene to the object to its parts.

2.2.4. Algorithms

If we imagine data structures as objects, algorithms then would be the actions allowed on those objects. The close relationship between both can be seized from what we expect to do with our data. Just as in dialectic situations, a data structure is conceived to support certain operations, but algorithms are also designed based on the possibilities and limits of data structures.

Computer scientist Donald E. Knuth defined algorithms simply as a “finite set of rules that gives a sequence of operations for solving a specific type of problem” [KNU 68, p. 4]. In this approach, the notion can be related to others like recipe, process, method, technique, procedure or routine. However, he explains, an algorithm should meet five features [KNU 68, pp. 4–6]:

- – Finiteness: an algorithm terminates after a finite number of steps. A counter example of an algorithm that lacks finiteness is better called a computational method or procedure, for example, a system that constantly communicates with its environment.

- – Definiteness: each step must be precisely, rigorously and unambiguously defined. A counter example would be a kitchen recipe: the measures of ingredients are often described culturally: a dash of salt, a small saucepan, etc.

- – Input: an algorithm has zero or more inputs that can be declared initially or that can be added dynamically during the process.

- – Output: it has one or more quantities that have a relation with the inputs.

- – Effectiveness: the operations must be basically enough so that they could be tested or simulated using pencil and paper. Effectiveness can be evaluated in terms of the number of times each step is executed.

Algorithms have proliferated in computer science and make evident its relationship to mathematics. Because data types are handled as discrete numerical values, an algorithm takes advantage of calculations based on mathematical concepts: powers, logarithms, sums, products, sets, permutations, factorials, Fibonacci numbers, asymptotic representations. Besides those, in visual computing, we also found: algebra, trigonometry, Cartesian coordinates, vectors, matrix transformations, interpolations, curves and patches, analytic geometry, discrete geometry, geometric algebra. The way in which an algorithm implements such operations varies enormously, in the same sense that various people might solve the same problem very differently. Thus, there are algorithms that are used more often than others, not only because they can be used in several distinct data structures, but also because they solve a problem in the most efficient yet simple fashion.

To describe an algorithm, its steps can be listed and written in a natural language, but it can also be represented as mathematical formulae, as flow charts, or as diagrams depicting states and sequences3 (Figure 2.4). The passage from these forms to its actual execution goes through its enunciation as a programming code (the topic of section 2.3.1).

Figure 2.4. Euclid’s algorithm and flowchart representation [KNU 68, pp. 2–3]

Before diving into algorithms for visual information, we briefly review the two broadest types of algorithms used in data in general: sorting and searching.

2.2.4.1. Sorting

Sorting, in the words of Niklaus Wirth, “is generally understood to be the process of rearranging a given set of objects in a specific order. The purpose of sorting is to facilitate the later search for members of the sorted set” [WIR 04, p. 50]. D. E. Knuth exemplifies the use of sorting algorithms on items and collections by means of keys. Each key represents the record and establishes a sort relationship, either a < b, or b < a, or a = b. By the same token, if a < b and b < c, then a < c [KNU 73, p. 5]. There exist many different sorting algorithms and methods; among the most used, we have:

- – Sorting by insertion: items are evaluated one by one. Each item is positioned in its right place after each iteration process of the steps.

- – Sorting by exchange: pairs of items are permutated to their right locations.

- – Sorting by selection: items are separated, or floated, starting with the smallest and going up to the largest.

The first and the most basic algorithms evolved as the amounts and type of data to be sorted increased and differed. Examples of advanced algorithms invented around the 1960s but still in use today are: Donald Shell’s diminishing increment, polyphase merge, tree insertion, oscillating sort; Tony Hoare’s quicksort; J. W. J. William’s heapsort.

2.2.4.2. Searching

Searching is related to processes of finding and recovering information stored in the computer’s memory. In the same form of sorting, data keys are also used in searching. D. E. Knuth puts the problem as: “algorithms for searching are presented with a so-called argument, K, and the problem is to find which record has K as its key” [KNU 73, p. 389]. Generally speaking, there have been two main kinds of searching methods: sequential and binary.

- – Sequential searching starts at the beginning of the set or table of records and potentially visits all of them until finding the key.

- – Binary searching relies in sorted data that could be stored in tree structures. There are different binary searching methods. Knuth already identifies binary tree searching (BTS), balanced trees, multiway trees, and we observe more recent derivations such as red-black trees or left-leaning red-black trees (LLRB) (which were evoked at the end of the last section as an example of esoteric data structure). Anyhow, the overall principle of these searching methods is to crawl the tree in a symmetric order, “traversing the left subtree of each node just before that node, then traversing the right subtree” [KNU 73, p. 422].

We will now discuss some algorithms introduced specifically within the context of visual data. For practical reasons, we continue distinguishing techniques from different fields in visual computing4, and we also relate how these techniques apply on specific data structures.

2.2.4.3. Geometric Transformations

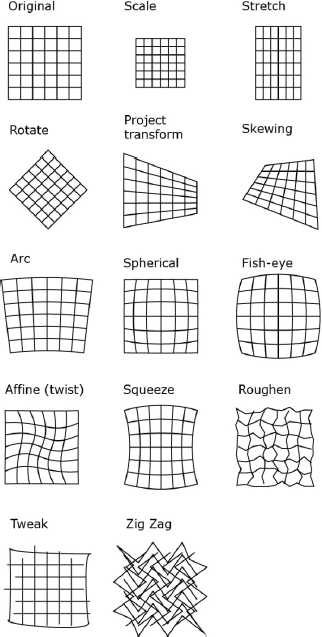

Geometric transformations imply modification of the coordinates of pixels in a given image. They are based consistently on arrays and matrices data structures. They define operations for translating (i.e. moving linearly along the axes), rotating (along two or three axes) and scaling (i.e. dimensioning an image). These techniques can be applied globally – that is, to the entire bitmap image – or only onto smaller layered images contained in the space, such as sprites in 2D graphics or meshes in 3D graphics.

Common variations of these methods include: stretching or contracting, shearing or skewing, reflecting, projecting (rotating and scaling to simulate perspective). More complex methods exist such as nonlinear distortions. In this case, the points are mapped onto quadratic curves resulting in affine, projective, bilinear, twirl, ripple and spherical transformations, for example.

2.2.4.4. Image transformation (image filtering)

The second important family of transformations that are also based on arrays and matrices of pixels are filters. The general idea of filtering is to define a region of pixels inside the image (also called a neighborhood or spatial mask, kernel, template, window), then to perform an operation on that region before applying it to the entire image space [GON 08, p. 167]. There are two main types of filtering in visual computing literature. First, those performed on the image space:

- – Intensity transformations: such as negative transformations, contrast manipulation, and histogram processing.

- – Smoothing filters: used for blurring and noise reduction, either by averaging or ranking (ordering) the pixels in the mask.

- – Sharpening filters: enhance edges by spatial differentiation [GON 08, p. 179].

Figure 2.5. Geometrical transformations (created with Adobe Illustrator filters)

Second, there are filters that perform better when the image is viewed as a signal. As with sound frequency and amplitude, images also show variations of visual information that can be associated with frequencies. The standard mechanism to transform image coordinates into frequencies is the Fourier transform.

- – Fourier transform: this helps to determine the amount of different frequencies in a signal. To do this, the surface of the image is first converted to sine and cosine curves. “The values of the pixels in the frequency domain image are two component vectors” [PAR 11, p. 254]. Variations of this transform are discrete Fourier transform or DFT (applied to sampled signals) and the fast Fourier transform or FFT (an optimization of the latter).

- – High-pass filters: these are based on small frequencies; they apply transformations to edges and small regions.

- – Low-pass filter: refer to slow variation in visual information such as big objects and backgrounds.

Figure 2.6. Image filtering. FFT images were produced with ImageJ, all other with Adobe Photoshop

As it might be guessed, some filter operations can be achieved faster in the frequency space than in the image space, and vice versa: particular methods like correlation and convolution5 address those issues. Nowadays, there are new techniques and ongoing research that extend the use of image filtering into specialized fields within the domains of image processing and computer vision dedicated to image enhancement, image correction, image restoration, image reconstruction, image segmentation, image measurement, image and object detection, and image and object recognition. Among many other standalone or combined filters introduced for special uses, we may cite: noise, noise reduction, thresholding, and motion blur.

2.2.4.5. Color quantization (color segmentation)

Color quantization refers to those procedures by which the amount of colors available in a bitmap image is reduced or mapped or indexed to a different scale. Today, this is mainly used for image acquisition (for example, when an image is captured through the camera lens, the colors of the natural world have to be sampled for digital representation) and for image content-based search [PAR 11, p. 399].

Two main categories of methods for color quantization can be identified: 1) the scalar method, which converts the pixels from one scale to another in a linear manner; and 2) the vector method, which considers a pixel as a vector. The latter technique is used more for image quality and counts more efficient algorithms, including: the popularity algorithm (takes the most frequent colors in the image space to replace those which are less frequent); the octree algorithm, which as we saw in the last section is a hierarchical data structure. It partitions the RGB cube consecutively by eight nodes, where each node represents a sub-range of the color space); and the median cut algorithm (similar to the partitions in the octree, but it starts with a color histogram and the representative color pixel corresponds to the median vector of the colors analyzed) [BUR 09, p. 89].

Figure 2.7. Color segmentation. Images were produced with Color Inspector 3D for ImageJ. For a color version of the figure, see www.iste.co.uk/reyes/image.zip

As with the frequency space view, vector-based color segmentations work better with the 3D view of the RGB color space in order to facilitate the evaluation of color distributions and proximities.

2.2.4.6. Image compression

Another family of algorithms that builds on pixel values is dedicated to image storing and transmission purposes. Broadly speaking, the goal of image compression algorithms is to reduce the amount of data necessary to restitute an image. Such algorithms can be classified as lossless or lossy: the former is used to preserve in its entirety the visual information that describes an image, while the latter reduces the file size mainly through three methods [GON 08, p. 547]: by eliminating coding redundancy, by eliminating spatial redundancy, and by eliminating information invisible for human visual perception.

Lossy algorithms have been successfully implemented in image file formats like JPEG and PNG (section 2.3.3 deals with image formats in more detail). The way in which pixel values are handled implies transforming them into code symbols that will be interpreted at the moment of image restitution (the process is called encode/decode; codecs are the programs in charge of performing these operations). PNG files, for example, are based thoroughly on spatial redundancies. The format uses the LZW algorithm (developed by Lempel, Ziv and Welch in 1984) for assigning predefined code symbols to visual information.

On the other hand, formats like JPEG use the Huffman coding algorithm (introduced in 1952 and also used for text compression) in order to determine “the smallest possible number of code symbols” [GON 08, p. 564] based on ordering probabilities. Moreover, just like the Fourier transform, JPEG formats also use a frequency field representation called discrete cosine transform. The difference image describes visual variations “only with cosine functions of various wave numbers” [BUR 09, p. 183] which are ordered by their importance to represent those visual variations (spatial regions, chromatic values, etc.)

2.2.4.7. Image analysis and features

Image analysis also constructs on pixel values. It extends image-processing techniques by taking a different direction towards feature detection and object recognition techniques. The algorithms designed for such tasks analyze a digital space according to two categories: image features and shape features.

- – Image features are those properties that can be measured in an image such as points, lines, surfaces and volumes. Points delimit specific locations in the image (the surrounding area is called keypoint features or interest points, for example, mountain peaks or building corners [SZI 10, p. 207]), while lines are used to describe broader regions based on object edges and boundaries. Formal descriptors for these features are SIFT (scale invariant feature transform) and SURF (speeded up robust features).

- – Shape features constitute the metrics or numerical properties of descriptors that characterize regions inside an image. There are several types, classifications and terminologies of shape descriptors:

- – Geometrical features: they are algorithms to determine the perimeter, area and derivations based on shape size (eccentricity, elongatedness, compactness, aspect ratio, rectangularity, circularity, solidity, convexity [RUS 11, p. 599]).

- – Fractal analysis: mostly used to summarize the roughness of edges into one value. A simple calculation implies starting at any point on the boundary and follow the perimeter around; “the number of steps multiplied by the stride length produces a perimeter measurement” [RUS 11, p. 605].

- – Spectral analysis (Fourier descriptors or shape unrolling): this describes shapes mathematically. It starts by plotting the X and Y coordinates of the region boundary and then converting the resulting values into the frequency field.

- – Topological analysis: these descriptors quantify shape in a structural manner, which means they describe, for example, how many regions or holes between borders are in an image. Algorithms for optical character recognition (OCR) are an example where topological analysis is applied: they describe the topology of characters as a skeleton.

Later in this book, we will show some applications using shape descriptors and we will note that they can be combined. They can also be generalized to 3D shapes and, more recently, they are available as programmatic categories in order to implement machine learning algorithms.

2.2.4.8. Image generation (computational geometry)

These types of algorithm make reference to methods and techniques that generate or synthesize geometric shapes. From a mathematical perspective, a distinction is made between a geometrical and topological description of shapes. Geometry simply defines positions and vertices coordinates, while topology sees the internal properties and relationships between vertices, edges and faces. When pure geometrical description is needed, a set of vertices or an array of positions is enough, but it is common to use a vertex list data structure that organizes the relations between vertices and faces and the polygon. We will now explore prominent techniques for image generation.

- – Voronoi diagrams and Delaunay triangulation: these geometric structures are closely interrelated although they can be studied and implemented separately. Voronoi diagrams are commonly attributed to the systematic study of G. L. Drichlet in 1850 and later adapted by G. M. Voronoi in 1907 [DEB 08, p. 148]. Basically, they divide planar spaces into regions according to a “mark” or “seed” (which can be a given value, or a cluster or values, or a given shape inside an image); the following step consists of tracing the boundaries of regions by calculating equal distances from those “marks”. On its own, the resulting geometry of the diagram can help identify emptier and larger spaces, and also to calculate distances from regions and points (through the nearest neighbor algorithm, for example).

Voronoi diagrams can be extended into Delaunay triangulations by relaying the marker points and tracing perpendicular edges to the boundaries. While there are several triangulation methods, the popularity of Delaunay’s algorithm (named after its inventor, mathematician Boris Delone) resides in its efficiency for reducing triangles with small angles (a situation that is desired for their subsequent uses such as creating polygonal and polyhedral surfaces)6.

- – Particle systems: these are typically collections of points where each dot is independent from the others, but follows the same behavior. More clearly, single points have physics simulation attributes such as forces of attraction, repulsion, gravity, friction and constraints such as collisions and flocking clusters. It is documented that the term “particle system” was coined in 1983 by William Reeves after the production of visual effects for the film Star Trek II: the wrath of Khan at Lucasfilm [SHI 12, ch. 4]. As in that case, particle systems are used to generate and simulate complex objects such as fire, fireworks, rain, snow, smog, fog, grass, planets, galaxies or any other object composed of thousands of particles. Because particle systems are often used in computer animation, algorithms for velocity and acceleration are also used. Common methods involve considering particles as vectors and adapting array list data structures to keep track and ensure collision detection procedures.

- – Fractals: these are geometric shapes that, following the definition by mathematician Benoît Mandelbrot who coined the term in 1975, can be divided into smaller parts, but each part will always represent a copy of the whole. In terms of image generation, fractals use iteration and recursion. That means a set of rules is applied over an initial shape and, as soon as it finishes, it starts again on the resulting image. Famous examples of fractal algorithms are the Mandelbrot set, the Hilbert curve, the Koch curve and snowflake.

Fractals have been adapted into design programs by using grammars. The idea of grammar-based systems comes from botanist Aristid Lindenmayer, who in 1968 was interested in modeling the growth pattern of plants. His L-system included three components: an alphabet, an axiom, and rules. The alphabet is composed of characters (e.g. A, B, C), the axiom determines the initial state of the system, and the rules are instructions applied recursively to the axiom and the new generated sentences (e.g. (A→AB)) [SHI 12, ch. 8].

- – Cellular automata: these are complex systems based on cells that exist inside a grid and behave according to different states of a neighborhood of cells. The states are evaluated and the system evolves in time. The particularity of these systems is their behavior: it is supposed to be autonomous, free, self-reproducing, adapting, and hierarchical [TER 09, p. 168]. Uses and applications of cellular automata can be seen in video games (such as the famous Game of Life by John Conway in 1970), modeling of real-life situations, urban design, and computer simulation.

- – Mesh generation: procedures of this kind are related to 3D reconstruction techniques, tightly bounded to surface and volumetric representation as well as mesh simplification methods. Mesh generation is commonly associated with creating 3D models, useful in medical imaging, architecture, industrial design, and recent approaches to 3D printing. The design of these algorithms first considers the kind of input data because clean mesh surfaces are not always the starting points (i.e. planar, triangle-based, with explicit topology that facilitate interpolation or simplification).

- – Points: these algorithms consider scattered points around the scene. They can be seen as generating a particle system as a surface. A simple method tends “to have triangle vertices behave as oriented points, or particles, or surface elements (surfels)” [RUS 11, p. 595].

- – Shape from X: this is a generalization of producing shapes from different sources, for example, shades, textures, focuses, silhouettes, edges, etc. The following section will give an overview of shading and texturing, but the principle in here is to reconstruct shapes by implying the illumination model and extracting patterns of texture elements (called textels).

- – Image sequences: let’s suppose we have a series of images positioned one after another along the Z-axis, like a depth stack. Algorithms have been designed to register and align the iterated closest matches between the surfaces (the ICP algorithm). Then, another algorithm such as the marching cubes can help generate the intersection points between the surface and the edges of cubes through vertices values. In the end, a triangulation method can generate the mesh tessellation. Of course the differences between a clean mesh surface and one generated as an isosurface reside in the complexity and quantity of vertices and edges. It is precisely for these cases that mesh decimation or mesh triangulation are used to reduce and simplify the size of models.

2.2.4.9. Image rendering

Once an object has been generated, either within a 2D or a 3D world, there is a necessary passage from the geometrical information to the visual projection on the screen. In other words, we go from image pixel values to “screen pixel values”, a process generally called image rendering. There are actually two main rendering methods, rasterization and ray casting. Both take into account more visual information than geometry.

- – Viewing and clipping: image viewing is related to the virtual camera properties. This is the same as choosing the right angle, position, tilt and type of lens before we take a photograph. In the case of visual information, image viewing consists of four main transformations: position, orientation, projection (lens, near and far plans, or view frustrum), and viewport (shape of the screen). In parallel, image clipping relates to eliminating geometrical information outside the viewing volume of the scene. Algorithms such as Sutherland and Hodgman are adapted to clip polygons [HUG 14, p. 1045].

- – Visible surface determination: this family of algorithms is also called hidden surface removal depending on the literature and specificities ([HEA 04] argues that the case of wire-frame visibility algorithms might invigorate the term visible surface determination as it is more encompassing). Anyhow, their main goal is to determine which parts of the geometrical information will be indeed shown on the screen. Although there are abundant algorithms depending on the hardware, software or type of geometry, we can exemplify two broad approaches. On the one hand, the family of image space methods, such as the depth-buffer or z-buffer algorithms, builds on hardware graphics power to trace, screen pixel by screen pixel, all polygons existing in the virtual world, and showing only those closest to the view point. On the other hand, the family of object space methods, such as the depth sorting or the painter’s algorithm, first sort objects according to the view point. Then, they render from the farthest object to the closest, like layers on a canvas or celluloid sheets. It is interesting to note that the latter method is currently implemented in graphical user interfaces (see section 2.3.2).

- – Lighting and shading: objects and meshes do not have only solid colors to be visible. Mainly for photorealistic objectives, the visual aspect of surfaces can be considered a combination of colors, textures and light that bounces around the scene. This is essentially what we call shading. The model of light underlying shading algorithms consists of the light source itself (its location, intensity, and color spectrum) as well as its simulated behavior on surfaces:

- - Diffuse reflection (Lambertian or matte reflection): distributes light uniformly in all directions.

- - Specular reflection (shine, gloss or highlight): mostly depends on the direction of the bouncing light.

- - Phong shading: a model that combines diffuse and specular with ambient illumination. It follows the idea that “objects are generally illuminated not only by point light sources but also by a general diffuse illumination corresponding to inter-reflection” [SZI 10, p. 65].

The BDRF (bidirectional reflectance distribution function) is a model that also takes into account diffuse and specular components; nevertheless, more recent programming environments embrace vertex and fragment shaders functions that allow for integrating more complex models in real-time rendering.

- – Texturing: another visual feature of objects is their simulated texture. That means that instead of geometrically modeling the tiniest corrugation, we use 2D images as textures that simulate such depth. Lastly, the 2D image is handled as an array whose elements are called textels. In terms of [ANG 12, p. 366]: “The mapping algorithms can be thought of as either modifying the shading algorithm based on a 2D array (the map), or as modifying the shading by using the map to alter surface parameters, such as material properties and normal.” There are three main techniques:

- – Texture mapping: this is the texture in its common sense meaning. One way to do this is to associate a texture image with each triangle of the mesh. Other methods unwrap the surface onto one or more maps (called UV mapping).

- – Bump mapping: the goal of this technique is to alter the normal vectors of models in order to simulate shape imperfections (recall that normal vectors designate the direction of faces according to the convex hull).

- – Environment mapping: this refers to techniques where an image is used to recreate how objects in the scene reflect light, but without tracing the actual rays of light. Most of the time, these maps are built on polar coordinates or parametric coordinates [HUG 14, p. 549].

- – Ray tracing: in contrast to the last paragraph, these algorithms actually trace the path of light rays in a scene. The method consists of starting from a screen pixel and then searching for intersections with objects. Of course although in the physical world there are endless light bounces, in here it is necessary to delimit the depth of level of intersections (often three levels). In the end, it is possible to “combine the color of all the rays which strike our image plane to arrive at the final color for each pixel” [GOV 04, p. 179]. An alternative contemporary method to ray tracing is called radiosity, which is inspired by thermal heat transfer to describe light as energy emitters and receivers.

2.3. Visual information as texts

The next level in our generative trajectory refers to texts, which we understand in a broader sense. Texts have to do with configurations that are made out of the entities encountered at the fundamental level (data types, data structures, and algorithms). Texts are enunciations: manifest interpretations of how and which information is present (to the emitter and the receiver). Thus, for text to be comprehensible and transferable, it should follow some syntactic and semantic rules shared by the sender and the receiver. Moreover, as occurs with texts in general (for example, a narrative prose and a poem are structurally different from scientific texts), programming codes have writing styles that reflect on computation models underlying the representation they make of data.

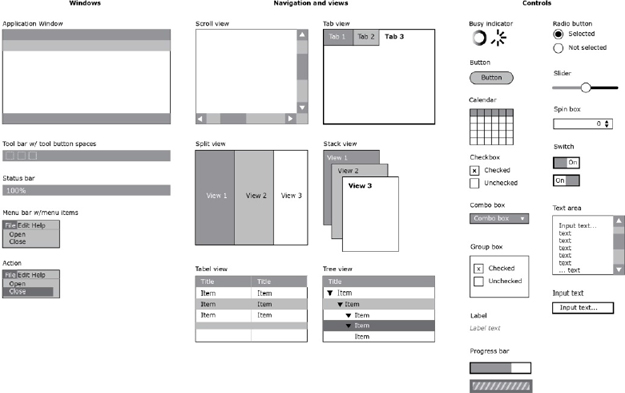

In this section, we describe how the units of the fundamental level are manifested in the form of text. We have chosen three main entry points. The first is in terms of programming code, and we will discuss programming languages and their categorizations. The second concerns graphical user interfaces. At the end of the section, we take a look at file formats as they can also be seen as texts.

2.3.1. Programming languages

In section 2.2.1, we evoked different levels of programming languages. At the closest part to the binary code (the lowest level), there are machine languages. Then, on top of them, we identify assembly languages, which facilitate programming by conventionalizing instructions, that is, using mnemonic conventions instead of writing binary code. A third layer consists of high-level programming languages, which assist in focusing on the problem to be tackled rather than on the lower-level computing details (such as memory allocation). These languages are essentially machine-independent (we can write and execute them in different OS) and generally can be regarded as compiled or interpreted. Compiled languages are commonly used for developing standalone applications because the code is converted into its machine language equivalent. On the other hand, interpreted languages use a translator program to communicate with a hosting software application (that means the code executes directly on the application). Examples of the first category include languages like Java and C++, while the second is associated with languages such as JavaScript and Python, among many others.

Programming languages provide the means to manipulate data types and data structures as well as to implement algorithms. Computer scientist Niklaus Wirth has observed analogies between methods for structuring data and those for structuring algorithms [WIR 04, p. 129]. For instance, a very basic and recurrent operation in programming is to “assign an expression’s value to a variable”. This is done simply on scalar and unstructured types. Another pattern is “repetition over several instructions”, written with loop sentences on an array structure. Similarly, the pattern “choosing between options” would build on conditional sentences using records. As we will see, in programming terms, sentences are called statements (also known as commands or instructions): they contain expressions (the equivalent of text phrases), and they can be combined to form program blocks (the equivalent of text paragraphs).

In this section, we will observe the overall characteristics of programming languages, from the syntactic, semantic and pragmatic point of view. Then we will present some programming styles (also called paradigms or models) and conclude by revisiting major programming languages for visual information.

2.3.1.1. Syntactics, semantics and pragmatics

In contrast to natural languages such as English, French, Spanish, etc., programming languages are formal languages. That means they are created artificially to describe symbolic relationships, like in mathematics, logics, and music notation. One way of studying programming languages has been to distinguish between the syntactic, semantic and pragmatic dimensions, just as they were originally envisioned by semiotician Charles Morris (see section 2.1) and early adopted in computer science literature [ZEM 66].

Syntactics refers broadly to the form of languages. We can say it encompasses two main parts: the symbols to be used in a language, and their correct grammatical combinations.

The first part of syntactics is known as concrete syntax (or context-free grammars); it includes the character set (alphanumerical, visual or other alphabet), the reserved special words (or tokens, which are predefined words by the language), the operators (specific symbols used to perform calculations and operations), and grouping rules (such as statement delimiters – lines or semicolons – or delimiter pairs – parentheses, brackets, or curly brackets).

The second part is named syntactic structure or abstract grammar. It defines the logical structure or how the elements are “wired together” [TUR 08, p. 20]. This part gives place to language constructs where we can list, ranging from simple to complex: primitive components, expressions, statements, sequences and programs. Expressions like “assigning value to a variable” demand using the dedicated token for the data type, the identifier of the variable, an assignment operator and the assigned value (for example, int num = 5). Regarding statements, there are several types (conditional, iteration and case), each identified with their own tokens (if-else, for or while, switch) and delimited by grouping rules. A statement is considered by many languages as the basic unit of execution.

Semantics refers to the meaning of programs at the moment of their execution. In other words, it is about describing the behavior of what language constructs do when they are run. Because programs are compiled or interpreted on machine and layers of software, it is said that semantics depends on the context of execution or the computational environment. Although most of the time we express the semantics in natural language prose as informal description (as it occurs in language references, documentation, user’s guides, the classroom, workshops, etc.), there are a several methods to formalize the semantics of programming language, which are mainly used for analysis and design.

Even though it is not our intention to deal with semantic issues, we only evoke two common methods for specifying programming languages. First, denotational semantics, which explains meaning in terms of subparts of language constructs. And, complementary, operational semantics, which observes language constructs as a step-by-step process. Examples of programming language subparts are: 1) the essential core elements (the kernel); 2) the convenient constructs (syntactic sugar); and 3) the methods provided by the language (the standard library) [TUR 08, p. 207]. Examples of operations at execution are naming, states, linking, binding, data conversions, etc. Together, these methods are useful for identifying and demonstrating language properties (for instance, termination7 or determinism8).

Before tackling pragmatics, we illustrate two practical cases of syntactics and semantics at the heart of most programming languages. Table 2.4 summarizes different operators: the symbol and its meaning at execution. Table 2.5 extends operators by showing their implementation, from a mathematical notation into programming language syntax. We have chosen JavaScript language syntax for both the tables.

Table 2.4. Common operator symbols and their description in the JavaScript language

| Operator symbol | Description |

| + | Addition |

| - | Subtraction |

| * | Multiplication |

| / | Division |

| % | Modulus (division remainder) |

| ++ | Increment |

| -- | Decrement |

| == | Equal to |

| === | Equal value and equal type |

| != | Not equal |

| !== | Not equal value or not equal type |

| > | Greater than |

| < | Less than |

| >= | Greater than or equal to |

| <= | Less than or equal to |

| && | And |

| || | Or |

| ! | Not |

Pragmatics refers to the use and implementation of programming languages. It has to do with the different forms of solving a problem, like managing the resources available in the most efficient way (memory, access to peripherals). In computer science literature, pragmatics is associated with programming models (also referred to as programming styles and programming paradigms).

Table 2.5. From mathematical notation to programming syntax, adapted from Math as code by Matt DesLauriers9. A reference of mathematical symbols for UTF-8 formatting is also available online10

| Mathematical notation | Description | Programming code |

| √(x)2 = x i = √-1 |

Square root. Complex numbers. JavaScript requires an external library such as MathJS. |

Math.sqrt(x); var math = require(‘mathjs’); var a = math.complex(3, −1); var b = math.sqrt(−1); math.multiply(a,b); |

| 3k o j | Vector multiplication. Other variations are: Dot or scalar product of a vector (K o j) and cross product k × j. |

var s = 3; var k = [ 1, 2 ]; var j = [ 2, 3 ]; var tmp = multiply(k, j); var result = multiplyScalar(tmp, s); function multiply(a, b) { return [ a[0] * b[0], a[1] * b[1] ] } function multiplyScalar(a, scalar) { return [a[0] * scalar, a[1] * scalar ] } |

| Sigma or summation. Some derivations are: |

var sum = 0; for (var i = 1; i <= 100; i++) { sum += i } |

|

| Capital Pi or big Pi. | var value = 1; for (var i = 1; i <= 6; i++) { value *= i; } |

|

| |x| | Absolute value. | Math.abs(x); |

| ||v|| | Euclidean norm. “Magnitude” or “length” of a vector. |

var v = [ 0, 4, −3 ]; length(v); function length (vec) { var x = vec[0]; var y = vec[1]; var z = vec[2]; return Math.sqrt(x * x + y * y + z * z); } |

| |A| | Determinant of matrix A. | var determinant = require(‘glmat2/determinant’); var matrix = [ 1, 0, 0, 1 ]; var det = determinant(matrix); |

| â | Unit vector. | var a = [ 0, 4, -3 ]; normalize(a); function normalize(vec) { var x = vec[0]; var y = vec[1]; var z = vec[2]; var squaredLength = x * x + y * y + z * z; if (squaredLength > 0) { var length = Math.sqrt(squaredLength); vec[0] = vec[0] / length; vec[1] = vec[1] / length; vec[2] = vec[2] / length; } return vec; } |

| A ={3, 9, 14}, 3 ∈ A | An element of a set. | var A = [ 3, 9, 14 ]; A.indexOf(3) >= 0; |

| Set of real numbers. | function isReal (k) { return typeof k === ‘number’ && isFinite(k); } |

|

| Functions can also have multiple parameters. | function length (x, y) { return Math.sqrt(x * x + y * y); } |

|

| Functions that choose between two “sub-functions” depending on the input value. | function f (x) { if (x >= 1) { return (Math.pow(x, 2) - x) / x; } else { return 0; } } |

|

| The signum or sign function. | function sgn (x) { if (x < 0) return −1; if (x > 0) return 1; return 0; } |

|

| cos θ sin θ |