Chapter 2. Examples and Building Blocks

To guide the rest of the discussion, I’ll start by presenting a basic framework for the IoT: a population of (physically) small computing devices, tied to the physical world with sensors and actuators, possibly communicating with one another and with backend systems.

Computing Devices

To make the book self-contained for the reader without a detailed computer science background, this section discusses the basics of computer systems (central processing unit, memory, software structure) and how “embedded systems” differ from regular ones.

Basic Elements

Generally speaking, computing devices consist of a central processing unit (CPU), memory, and some kind of input/output (I/O). The memory typically includes some kind of nonvolatile storage (so there’s a program to run when the machine first powers up) and some kind of slower but more efficient backing store for longer-term storage. In a simpler universe, the CPU would fetch an instruction from memory, figure out what the instruction means, carry it out, and then go on to the next one. “Carrying out” the instruction would involve reading or writing to memory or an I/O device or an internal CPU register, and/or doing some mathematical operation. However, the universe is not that simple anymore. The field traded simplicity for speed (as I have to cover in the “dirty tricks” section of my architecture course): pieces of many instructions may be carried out at the same time and may be executed out of order or speculatively; parts of memory may be tucked away in smaller but faster caches.
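To make the "simpler universe" model concrete, here is a minimal sketch of a fetch-decode-execute loop. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`) and the single-register design are invented for illustration and do not correspond to any real CPU. Real processors overlap, reorder, and cache these steps, as described above.

```python
# A toy machine: the CPU has one register (acc) and a program counter (pc).
# The instruction set below is hypothetical, chosen only to illustrate the cycle.

def run(program, data):
    """Fetch, decode, and execute instructions one at a time until HALT."""
    pc = 0    # program counter: index of the next instruction
    acc = 0   # a single internal CPU register (accumulator)
    while True:
        op, arg = program[pc]   # fetch the next instruction
        pc += 1
        if op == "LOAD":        # decode it, then carry it out
            acc = data[arg]     # read memory into the register
        elif op == "ADD":
            acc += data[arg]    # a mathematical operation
        elif op == "STORE":
            data[arg] = acc     # write the register back to memory
        elif op == "HALT":
            return data
        else:
            raise ValueError(f"unknown instruction: {op}")

# Add the values in memory cells 0 and 1, and store the sum in cell 2.
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
memory = run(program, [2, 3, 0])
print(memory)  # [2, 3, 5]
```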

I/O typically includes some way of interacting with a human (e.g., keyboard and display) and some kind of networking to enable interaction with other computing devices.

The hardware also requires software: the BIOS to run at boot time, application programs, and an operating system to provide a clean and hopefully protected environment for the applications.

Moore’s Law

An important part of understanding the long-term progression of computing is Moore’s Law. Despite its official-sounding name, it’s not actually a “law” (like those of Newton), and it’s not as well defined as one might like: ask two computing professionals, and you will likely hear two different versions.

Moore’s Law refers to a pattern observed by Intel cofounder Gordon Moore decades ago, at the dawn of the modern computing era. Every N years, the complexity of integrated circuits—measured in various ways, such as transistor density—doubles (see Figure 2-1). The pattern of growth has continued to the present day, despite regularly occurring reports that some fundamental physical or engineering limit has been reached and it cannot possibly be sustained. It’s also generally acknowledged that the continued existence of this pattern is in some sense a self-fulfilling prophecy: it can take several years to design the next-generation microprocessor, and the industry may start the design assuming a fabrication ability that does not yet exist but is forecast to exist by the time the design is done. (Using a simile from American football, some¹ have quipped it’s “like throwing a Hail Mary pass, then running down the field and catching it.”)

Figure 2-1. Computation has followed the prediction of Moore’s “Law”: doubling every two years, power increases exponentially. (Adapted from Wikimedia Commons, public domain.)
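A short calculation shows how quickly repeated doubling compounds. This sketch assumes a doubling period of two years, as in the figure caption. The function name and the sample time spans are chosen only for illustration.

```python
def growth_factor(years, doubling_period=2.0):
    """Factor by which complexity grows after `years`, if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)

for years in (2, 10, 20, 30):
    print(f"{years:2d} years: x{growth_factor(years):,.0f}")
```

Under this assumption, two decades bring roughly a thousandfold increase and three decades a factor of more than thirty thousand.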

When we step back and look at the progress of computing, the exponential growth characterized by Moore’s Law has several implications. The “current”-generation CPU keeps getting exponentially more complex. As one consequence, computing applications that might previously have seemed silly and impractical (because they would take far too much computing time and memory) become practical and commonplace. (The things done today by ubiquitous smartphones would have been dismissed as too crazy to consider had they been proposed as research projects in 1987—I was there, and I was one of the ones doing the dismissing.) We also get the flip side of increased complexity: large systems (especially software systems) are harder to get right and can have surprising behaviors and error cases.

Another consequence is that things get cheaper—particularly as previous-generation processors become repurposed in embedded devices. As an XKCD cartoon observed, even if you stay two steps behind the technology curve, you still see the same exponential growth—only it’s much cheaper! In the classroom, I’ve taken to borrowing a colleague’s trick: comparing the surprising prevalence of previous-generation processors to the surprising prevalence of “lesser” species, such as insects.

How IoT Systems Differ

By definition, most IoT devices will be embedded systems: computers embedded in things that don’t look like computers.

If one were to ask how embedded systems differ from standard computers, the initial answer would be, “they’re much smaller and don’t do as much.” To save money and because the systems might not have to do very much, the physical size and computational power might be limited. Systems that must run on batteries will also be designed to reduce power consumption. Systems that do not work directly with humans may skip the human I/O devices; systems without the need to interact with other systems may skip the networking. Systems that do not do very much may also skip the nonvolatile backing store (if there’s not much to store) or use semiconductor flash memory instead of disks; such systems may additionally have an OS that does not support much multitasking (if there’s not much to be done), or even skip the OS altogether.

However, thanks to Moore’s Law, this initial answer is starting to be less true, because “small, cheap” computational engines are also becoming more powerful. When it comes to prognosticating the future IoT, Moore’s Law has two somewhat different implications:

- Thanks to the exponential curve, many IoT devices deployed in the future will be powerful computational engines by today’s standards. Indeed, we’re already seeing this creeping functionality and power in IoT devices: for instance, full Linux installations, network support, and built-in web servers. (I recently discovered my Kindle reader has a web browser!)

- IoT devices embedded in long-lived “things” will then fall behind the curve; by the standards current N years from now, the computing devices embedded in older refrigerators, cars, and bridges will likely seem quaint and archaic.

However, note that I opened this section with the qualifier “most.” A computer is a digital circuit that does things (certain inputs trigger certain outputs and cause the internal state to change), but structured in a particular way: one piece executing instructions coded as binary numbers stored in another piece. One can build digital circuits to provide such behaviors without the full Turing generality of a computing engine: indeed, this is why Dartmouth’s Engineering School offers both a “Digital Design” course and a “Microprocessors” course. My soapbox conjecture is that, due to the incredible flexibility and reusability of standard components—as well as the computational nature of many of the tasks we want them to do—the IoT will mainly consist of computers, rather than noncomputational engines.

Another way embedded systems can differ from traditional computers is in their lifetime: a computer embedded in some other kind of thing needs to live as long as that thing. Greeting cards that play “Happy Birthday” don’t live very long, but automobiles and appliances may last decades, and bridges and electrical grid equipment even longer. Chapter 4 will discuss some of the relevant security issues arising from computational power, exposed interfaces, software complexity, and long lifetimes.

Architectures for an IoT

The preceding discussion frames how we might think of future IoT systems. To start with, they will be embedded computing systems that are likely to be fairly rich, by standards current not too long before the systems get created.

Just to make things tangible, Figure 2-2 shows an already-old IoT module with a 500 MHz processor, half a gigabyte of RAM, flash and an SD card for nonvolatile storage, and Ethernet. Not that many years ago, these would have been the specs of a nice desktop device—and when they were, did anyone imagine that engineering would so quickly advance that it would soon be economically feasible to put physically tiny versions in household appliances?

Figure 2-2. An already-old “system on a module” for IoT applications has the specs of what would have been a nice desktop platform not too long ago. (From Wikimedia Commons, public domain.)

Other parameters in an informal taxonomy of IoT architecture include connection to other computers, connection to the physical world, and connection to big backend servers. Let’s consider each issue in turn.

Connection to Other Computers

Will IoT devices be networked? Yes, it seems a bit ironic that something we’re calling the Internet of Things may in fact not involve networking, but the working definition we’ve been using—adding computational devices to everything around us—allows for that possibility. (Indeed, I recall a very elegant and effective “smart home appliance” pilot where computation added to the appliance could sense when the electric grid was stressed and adjust its internal operation appropriately without ever needing to talk to anything else.)

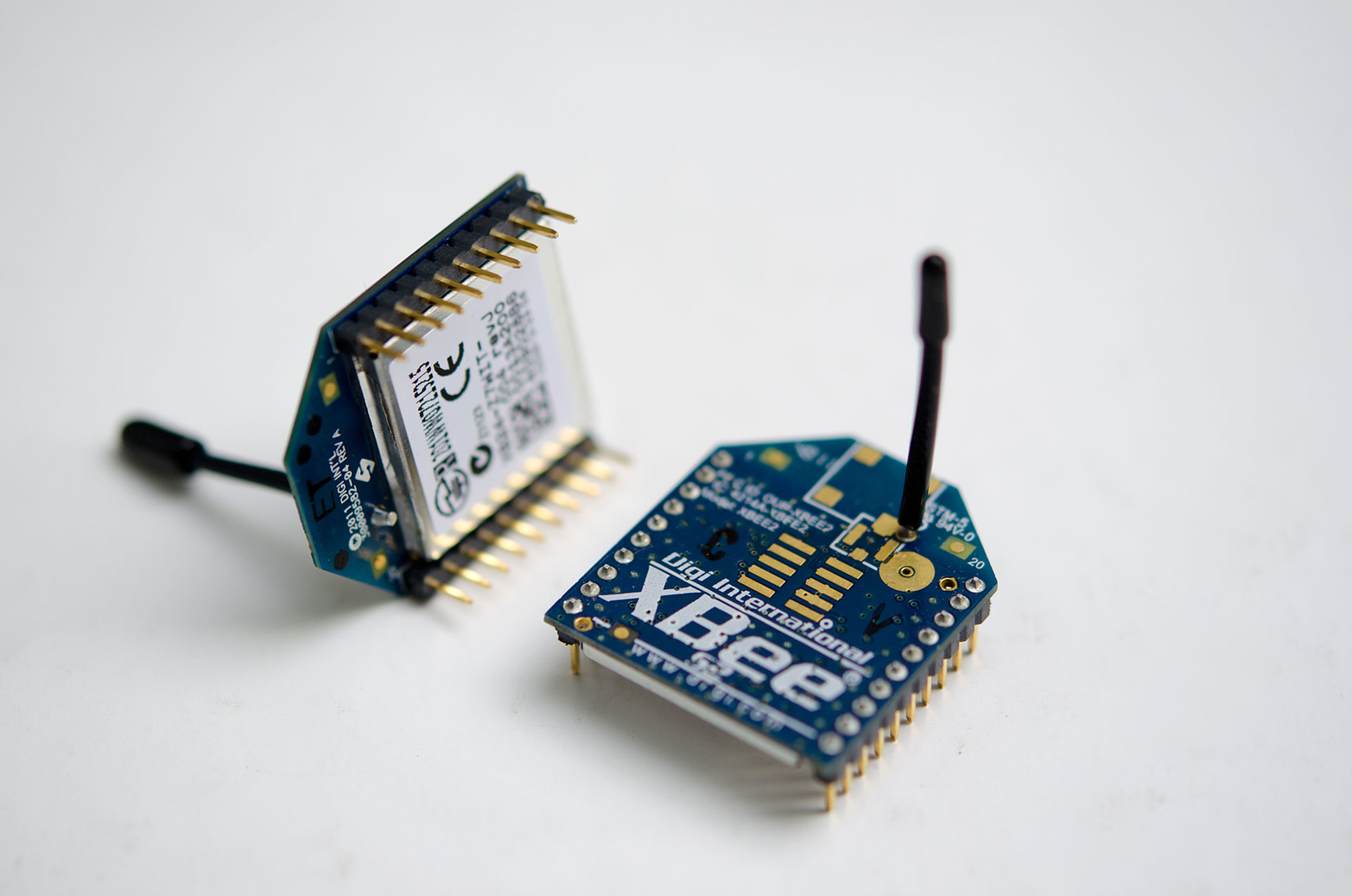

For devices that are networked, what is the physical medium of communication? The natural choices here are wire (e.g., the Ethernet connection in Figure 2-2) or radio. (To make things tangible, Figure 2-3 shows some inch-sized radio modules for IoT applications.) In the research world, a colleague of mine is even exploring the potential of using light as a medium, even in a dark room.

Figure 2-3. Example of small, inexpensive radio modules for IoT applications. (From Wikimedia Commons, Mark Fickett.)

When it comes to radio, it’s important to remember that electromagnetic radiation is not magic. Depending on the choice of power and wavelength (inversely proportional to frequency), textbook-level analysis predicts signals from wireless networking will travel anywhere from a few centimeters to many kilometers. However, textbooks are not the same as the real world; an old-time engineer I worked with often ranted that young computer scientists would believe that if the spec said signals would travel 10 meters, then any device within 10 meters would receive them perfectly but any device beyond 10 meters would hear nothing. As anyone who has tried to make a cellphone call in Vermont or northern New Hampshire knows, it’s not that simple.
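One common example of such textbook-level analysis is the idealized free-space path loss model, in which loss grows with both distance and frequency. The sketch below is an illustration under that assumption, not a method taken from this chapter. It ignores walls, terrain, antennas, and interference, which is exactly why real-world behavior differs. The 2.4 GHz frequency is used only as a familiar example band.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def free_space_path_loss_db(distance_m, frequency_hz):
    """Idealized loss in dB between two antennas in empty space."""
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)

for d in (1, 10, 100, 1000):
    print(f"{d:5d} m: {free_space_path_loss_db(d, 2.4e9):6.1f} dB")
```

Even in this idealized model there is no sharp cutoff at any particular distance: each tenfold increase in distance adds 20 dB of loss, so the signal fades gradually rather than vanishing at the spec's stated range.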

When discussing radio-based networking, it’s tempting to use the term “WiFi.” However, this is somewhat akin to using the term “Kleenex” for a facial tissue: the WiFi Alliance established the term for wireless networking conforming to certain (evolving) IEEE standards, but there are other approaches as well, such as Bluetooth (an example of closer-range communication) and ZigBee (which has been seeing deployment in industrial control settings). Many in the industry eagerly await the coming of 5G wireless networking, heralded to provide vastly greater speeds and increased reliability.

One interesting consequence of new wireless stacks and the shrinking packaging from Moore’s Law is that, initially, it can be hard to probe the wireless interface for security holes due to unplanned input (see “Instance: Failure of Input Validation”) because the interface is buried inside a larger module; the article “Api-do: Tools for exploring the wireless attack surface in smart meters” discusses some of my lab’s work in this space [5].

Beyond the physical medium itself, there’s the question of the protocols used to communicate. Again, “internet” in the term “Internet of Things” suggests to some that the IoT must, of necessity, use some flavor of the standard Internet Protocol (IP) stack. As with any engineering decision, there are advantages and disadvantages to this design choice—on the one hand, it’s nice to be interoperable with the known universe (i.e., the Internet of Computers). But on the other hand, incorporating the IP stack may incur performance and complexity costs that don’t fit within the constraints of the real-world application. daCosta [2] makes an excellent case for this latter point. (And, as Chapter 5 will explore, many IoT application scenarios may not require the fully general communication pattern of the internet.)

In the Internet Protocol, each device receives a unique address. The emerging IPv6 (which has been in the process of succeeding the current IPv4 for a decade or so) is often touted as a special enabler of the IoT because IPv4 only allowed for $2^{32}$ addresses (approximately 4 billion), whereas IPv6 allows for $2^{128}$, a factor of $2^{96}$ (roughly 79 billion billion billion) more.
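The sizes of the two address spaces can be checked directly: IPv4 addresses are 32 bits long and IPv6 addresses are 128 bits long. The following sketch uses Python's standard `ipaddress` module. The variable names are chosen only for illustration.

```python
import ipaddress

# The "/0" networks cover every possible address in each protocol version.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses  # 2**32
ipv6_total = ipaddress.ip_network("::/0").num_addresses       # 2**128

ratio = ipv6_total // ipv4_total                               # 2**96

print(f"IPv4 addresses: {ipv4_total:,}")
print(f"IPv6 addresses: {ipv6_total:.3e}")
print(f"IPv6 / IPv4:    {ratio:.3e} (2**{ratio.bit_length() - 1})")
```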

Connection to the Physical World

IoT devices will interact with the physical world around them.

Because most humans have experience with the IoC, we are used to the idea of keys and buttons being input channels to computing devices, and screens and displays and blinking lights being output channels. Because computers are electronic devices, it’s also not a stretch to think of electrical actions as I/O, for example, a computer embedded in an air conditioner turning on a fan. However, IoT devices can also employ components—sensors and actuators—enabling more direct kinetic input and output.

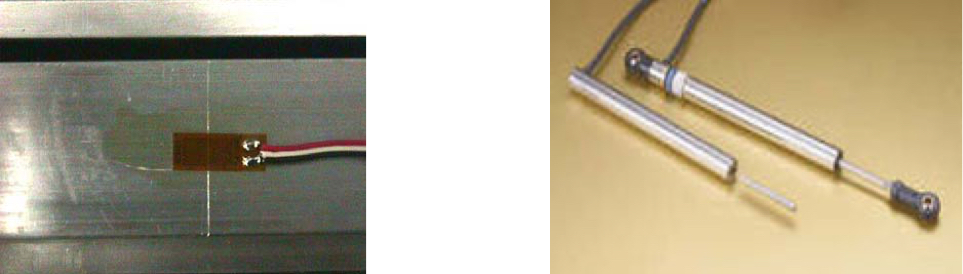

Fu and Jaradat’s (free) report [4], prepared for the Maryland State Highway Administration, shows many nice examples of such sensors—strain gauges and displacement transducers (Figure 2-4), inclinometers, GPS sensors, accelerometers—and their use in letting smart devices monitor the state of physical infrastructure such as bridges and dikes. Stepper motors (Figure 2-5) are just one example of an actuator enabling a computer to trigger precisely controlled physical movement.

Figure 2-4. Sensors such as strain gauges (left) and displacement transducers (right) may be used for the smartening of physical infrastructure, such as bridges. (From [4], used with permission.)

Figure 2-5. An IoT device may use actuators such as this stepper motor to change the state of physical infrastructure in which it’s embedded. (From Wikimedia Commons, Dolly1010 - Vlastní fotografie.)

When I taught my “Risks of the IoT to Society (RIOTS)” class at Dartmouth, I launched the students with Raspberry Pis and stacks of Sunfounder sensor/actuator modules. Figure 2-6 shows one example of what happened, described by the students as follows [3]:

The PiPot greatly simplifies the process of growing indoor plants by automating watering and light exposure. The Pi is attached to a moisture sensor, a plant grow lamp, and a watering system. The watering system uses a storage bag for water, connected to a tube that goes through a solenoid valve….

The grow lamp is programmed to turn on between 5pm and 11pm. However, weather is taken into consideration—this model is for plants that are indoors, but are near windows and receive some natural sunlight. Between 7am and 5pm, the Pi will check to see if the weather outside is “fair” or “clear”….

Every hour, the attached moisture sensor will check moisture levels in the soil. If moisture is below the desired threshold, in our case under 150, the solenoid valve will open to water the plant for three seconds. An hour later, the sensor checks again. This cycle of lighting and watering continues every day until the user ends the program.

Figure 2-6. One student project in my “Sophomore Summer” IoT class used moisture sensors and daylight and weather information to control watering and lights for indoor plants. (Ke Deng and Dylan Scandinaro, used with permission.)
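The control logic the students describe can be sketched in a few lines. This is an illustration, not the students' code: `read_moisture` and `open_valve_for` are hypothetical stand-ins for the real sensor and solenoid-valve drivers, and treating 5pm as included and 11pm as excluded is an assumption. The daytime weather check is left out because its details are elided in the description above.

```python
from datetime import time

MOISTURE_THRESHOLD = 150  # from the students' description; units are sensor-specific
WATERING_SECONDS = 3      # how long the solenoid valve stays open per watering

def lamp_should_be_on(now):
    """Grow-lamp schedule from the description: on between 5pm and 11pm."""
    return time(17, 0) <= now < time(23, 0)

def hourly_watering_check(read_moisture, open_valve_for):
    """One pass of the hourly check: water only if the soil is too dry.

    Both arguments are hypothetical callables standing in for hardware drivers.
    Returns True if the plant was watered on this pass.
    """
    if read_moisture() < MOISTURE_THRESHOLD:
        open_valve_for(WATERING_SECONDS)
        return True
    return False

# Example with stand-in hardware: a dry reading triggers one three-second watering.
watered = []
hourly_watering_check(lambda: 120, watered.append)
print(watered)                          # [3]
print(lamp_should_be_on(time(18, 30)))  # True
```

Passing the sensor and valve in as functions keeps the decision logic testable without a Raspberry Pi attached.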

The Backend

As discussed earlier, Moore’s “Law” is bringing exponential increases in semiconductor density, leading to increases in the power and storage ability of computing, in the complexity of what becomes “feasible” to realize in computer software, and in affordability. However, these trends affect not just small devices deployed in the IoT but also the big devices in the IoC. The computing industry has seen consequences in many areas:

- Cloud computing: Giant (but virtual and remote) servers available for inexpensive rental.

- Big data: Massive amounts of available data on which to compute.

- Big data analytics: Increases in the sophistication of what can be feasibly calculated from these large data sets.

- Deep learning: Increases in the sophistication of one particular family of approaches.

(A deeper explication of analytics and deep learning is beyond the scope of this book, but for an introduction, see Chapter 17 in my earlier security textbook [8].)

Other industries have been quick to exploit these consequences. To quote a McKinsey report [6]:

The data that companies and individuals are producing and storing [in one year] is equivalent to filling more than 60,000 US Libraries of Congress….

Big data levers offer significant potential for improving productivity at the level of individual companies…. For instance, Tesco’s loyalty program generates a tremendous amount of customer data that the company mines to inform decisions from promotions to strategic segmentation of customers. Amazon uses customer data to power its recommendation engine “you may also like …” based on a type of predictive modeling technique called collaborative filtering…. Progressive Insurance and Capital One are both known for conducting experiments to segment their customers systematically and effectively and to tailor product offers accordingly.

These transformations of “big” computing are relevant to the tiny devices in the IoT because they can be tied together. Connecting the IoT to the IoC lets the IoT take advantage of this big data backend, both by providing new sources of “big data” and by taking actions driven by the big data analytics. Indeed, the tie is so tight that a set of guidelines to bring security to IoT product development was recently issued—by the Cloud Security Alliance [1]. In their Harvard Business Review article [7] (discussed further in Chapter 7), Porter and Heppelmann see the IoT/IoC connection as a critical part of how the IoT will transform business (and, hence, the world). On the flip side, some lament the problem of digital exhaust: the fact that much of the data being generated by an instrumented world is not actually used.

Of course, the tie-in also lets us use all the fashionable buzzwords—IoT, cloud, big data, analytics—in a single sentence while keeping a straight face.

The Bigger Picture

This chapter sketched some basic building blocks for the IoT as examples. However, this sketch is just an illustrative slice of a very large and active field. For example, venture capitalist Matt Turck and colleagues have been putting together an annual “Internet of Things Landscape” showing various companies making moves in this space. The 2016 landscape lists almost 200 companies active in just the “Building Blocks” domain, and another 1,000 spread over “Applications” and “Platforms and Enablement.” The rather complicated Figure 2-7 from Beecham Research provides an incomplete but representative sketch of IoT applications.

Figure 2-7. The technology market analysis firm Beecham Research draws this map of business development activity in IoT—it’s from 2009 and already incomplete. (Used with permission.)

What’s Next

With this basic sketch of the IoT and its IoC backend in hand, we now dive into the rest of the story.

Works Cited

[1] CSA, Future-Proofing the Connected World: 13 Steps to Developing Secure IoT Products. Cloud Security Alliance IoT Working Group, 2016.

[2] F. daCosta, Rethinking the Internet of Things: A Scalable Approach to Connecting Everything. Apress Media, 2013.

[3] K. Deng and D. Scandinaro, Final Project: PiPot. Dartmouth College COSC69 Fancy Application Report, Summer 2015.

[4] C. C. Fu and Y. Jaradat, Survey and Investigation of the State-of-the-Art Remote Wireless Bridge Monitoring System. Maryland Department of Transportation State Highway Administration, 2008.

[5] T. Goodspeed and others, “Api-do: Tools for exploring the wireless attack surface in smart meters,” in Proceedings of the 45th Hawaii International Conference on System Sciences, 2012.

[6] J. Manyika and others, Big Data: The Next Frontier for Innovation, Competition, and Productivity. McKinsey Global Institute, May 2011.

[7] M. E. Porter and J. E. Heppelmann, “How smart, connected products are transforming competition,” Harvard Business Review, November 2014.

[8] S. Smith and J. Marchesini, The Craft of System Security. Addison-Wesley, 2008.

¹ Sometimes, this quote is attributed to Dave Patterson, professor of computer science at UC Berkeley.