Hearing


The study of the nervous system’s cognitive response to sound stimuli is known as psychoacoustics: it is part acoustics and part psychology. The visual system is often considered the more important sensory modality, but the auditory system is far faster in its analysis of and response to incoming sensory information. It is when we first begin to work with sound recording that we become aware of many of the subtleties present in our auditory system. For example, phenomena such as masking, in which only the louder of two sounds close together in frequency is perceived, are attributable to the behavior of our auditory physiology. All one needs to do to appreciate our native sound-processing capabilities is listen to how different the world sounds through microphones and headphones rather than through our ears alone. A complete study of the function of the auditory system is exceedingly complex and beyond the scope of this discussion; however, we can still appreciate some of the features of the system that directly affect how we perceive sounds, especially when they are critical to the processes employed in the recording of sound.

Our auditory system is incredibly sensitive, allowing perception over many orders of magnitude in both amplitude and frequency. We can discriminate tiny changes in sound timbres and accurately determine where in space a sound originates. We experience sound through our ears and nervous system, so our perception cannot be divorced from these mechanisms, and their characteristics influence what we hear. The pattern of air pressure vibrations striking our ears provides the input, but just as the structure of a room alters sound waves as they pass through it, so the apparatus of hearing changes the information we ultimately receive in our brains’ auditory processing areas. We have the advantage of an adaptive brain, which learns how to process the sensory inputs we receive from the cochlea, the organ that converts sound wave energy into neuronal signals, and adapts to the inherent imperfections of our own auditory system. Nevertheless, we must process sound information through our hearing organs, and our perception includes distortions – alterations in the way sounds are transmitted and converted to neuronal signals and in the way our brain interprets these inputs and renders for us what we refer to as hearing.

THE AUDITORY SYSTEM

Musical sounds contain a complex combination of individual sinusoidal components, each with a particular amplitude, frequency, and phase relationship with the other components. The particular combination of these components gives different instruments and voices their characteristic sounds, a quality known as timbre, which distinguishes the sound of different instruments even when they play the same note at the same loudness. This explains in part why a horn sounds different from a stringed instrument: each produces a specific combination of mathematically related frequencies, known as harmonic overtones, that results in its characteristic sound. (Some sounds, such as bells, contain overtones that are not harmonically related.) The timbre of a sound must be preserved in the recording process for the sound to be perceived as natural. Timbre alone is not sufficient to fully discriminate between instrument sounds, however, as the time course of the onset and decay of notes is also characteristic of each instrument. Instruments with similar timbres will sound different from each other if their attack, sustain, and release characteristics are different.
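To make the harmonic-overtone idea concrete, here is a minimal Python sketch (standard library only) that builds two tones with the same 220 Hz fundamental but different, hypothetical harmonic recipes, then applies two different onset and decay envelopes to the same spectrum. The amplitude lists, envelope times, and function names are illustrative assumptions, not measurements of real instruments.

```python
import cmath
import math


def harmonic_tone(f0, harmonic_amps, duration=1.0, fs=8000):
    """Sum of sine waves at integer multiples of f0 (harmonic overtones).

    harmonic_amps[k] is the amplitude of harmonic k + 1; index 0 is the fundamental.
    """
    n_samples = int(duration * fs)
    return [
        sum(a * math.sin(2 * math.pi * k * f0 * n / fs)
            for k, a in enumerate(harmonic_amps, start=1))
        for n in range(n_samples)
    ]


def attack_decay_envelope(n_samples, fs, attack_s, decay_s):
    """Linear rise over attack_s seconds, then exponential decay with time constant decay_s."""
    env = []
    for n in range(n_samples):
        t = n / fs
        rise = min(t / attack_s, 1.0)
        fall = math.exp(-max(t - attack_s, 0.0) / decay_s)
        env.append(rise * fall)
    return env


def component_amplitude(signal, freq, fs):
    """Amplitude of one sinusoidal component, measured with a single-bin DFT."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)


fs = 8000
# Two hypothetical spectra with the same 220 Hz fundamental, so the same pitch.
bright = harmonic_tone(220.0, [1 / k for k in range(1, 11)], fs=fs)   # every harmonic, 1/k rolloff
hollow = harmonic_tone(220.0, [1.0, 0.0, 1 / 9, 0.0, 1 / 25], fs=fs)  # odd harmonics only

# Same spectrum, different time course: a fast "plucked" onset vs. a slow "bowed" one.
plucked = [x * e for x, e in zip(bright, attack_decay_envelope(fs, fs, 0.005, 0.3))]
bowed = [x * e for x, e in zip(bright, attack_decay_envelope(fs, fs, 0.15, 2.0))]

print(round(component_amplitude(bright, 440.0, fs), 3))  # 2nd harmonic present: 0.5
print(round(component_amplitude(hollow, 440.0, fs), 3))  # 2nd harmonic absent: 0.0
```

Here `bright` and `hollow` share a pitch but differ in harmonic content, while `plucked` and `bowed` share a spectrum but differ in attack and decay.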

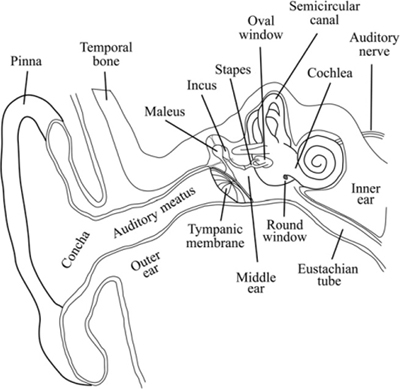

We often assume that what we perceive as pitch is exactly equivalent to the actual vibratory frequency of the sound wave and that what we perceive as loudness is directly proportional to the amplitude of the sound wave’s pressure variations. In fact, the operation of our auditory system deviates somewhat from these ideals, and we must factor these deviations into our understanding of hearing. The first stage of hearing, handled by the outer ear (pinna) and ear canal (Figure 4-1), distorts the incoming pressure wave in ways that carry useful information. The ridges of the ear reflect particular frequencies from certain directions, creating an interference pattern from which information about the elevation of a sound source can be extracted. Sounds originating from above are reflected with increased high-frequency content relative to the same sound originating at the level of the ear. Front-to-rear discrimination also depends in part on the shadowing of rear-originating sounds by the pinna. The external auditory meatus, or auditory canal – the passage conducting the sound wave to the eardrum – is a resonant tube that further alters the frequency balance of the sound wave. Its resonant frequency, around 3 kHz, falls in the same range as the peak in our sensitivity, and the resonance provides a maximum boost of about 10 dB. This is the frequency range that conveys much of the information contained in speech; in fact, the wired telephone system transmits frequencies from only 300 to 3400 Hz. The vibrations that finally excite the eardrum therefore differ from the original sound pressure variations: they contain an altered balance of frequency components, which affects the timbre of the sound.
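A rough check on the 3 kHz figure treats the ear canal as a uniform tube open at the pinna and closed at the eardrum, which resonates where the canal is a quarter wavelength long, f = c / (4L). Both the idealization and the assumed canal lengths (around 2.5 cm for an adult) are simplifications; with c = 343 m/s and L = 2.5 cm the estimate is about 3.4 kHz.

```python
def quarter_wave_resonance(length_m, speed_of_sound=343.0):
    """Lowest resonance (Hz) of a tube open at one end and closed at the other: f = c / (4 L)."""
    return speed_of_sound / (4.0 * length_m)


# Assumed canal lengths around a typical adult value of about 2.5 cm; real canals
# are curved, vary between people, and end in a compliant eardrum, not a rigid wall.
for length_cm in (2.3, 2.5, 2.8):
    f = quarter_wave_resonance(length_cm / 100.0)
    print(f"{length_cm:.1f} cm canal -> about {f:.0f} Hz")
```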

Figure 4-1 The anatomy of the human ear.

The tympanic membrane, or eardrum, is a flattened conical membrane that stretches across the inner end of the ear canal. It is open to the auditory canal on the outside and in contact with a set of three tiny middle ear bones, the ossicles, on the inside. The pressure on the outside of the tympanic membrane is determined by the sound wave and the static atmospheric pressure. On the inside of the tympanic membrane, the static air pressure in the middle ear is equilibrated through the Eustachian tube to the throat, while the sound vibrations are conducted through the bones to the cochlea. Equilibrating middle ear pressure with the outer ear pressure prevents the damping of the tympanic membrane that would result from unequal static forces on its two sides. The mechanical characteristics of the middle ear also allow active control of the transmission efficiency between the eardrum and the cochlea. The tiny muscles connecting and suspending the bones and ligaments can contract, stiffening the connection and drawing the ossicles away from their attachments to the tympanic membrane and cochlear oval window. This contraction, known as the acoustic reflex, adjusts the sensitivity of hearing; it may be triggered by loud sounds (to protect the inner ear from possible damage) as well as by the intention to begin vocalizing. The acoustic reflex mechanically reduces the dynamic range of the input to the cochlea, much like a compressor or limiter. The time course of the reflex’s activation and release can serve as a guide for setting the attack and release times of electronic compressors so that they sound natural.
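The attack and release idea can be sketched as a simple feed-forward compressor: a one-pole envelope follower rises toward louder input with an attack time constant and falls back with a release time constant, and a static gain curve reduces level above a threshold. This is a generic design, not a model of the acoustic reflex; the threshold, ratio, and 10 ms / 150 ms time constants are illustrative assumptions.

```python
import math


def one_pole_coeff(time_s, fs):
    """Smoothing coefficient for a one-pole filter with the given time constant."""
    return math.exp(-1.0 / (time_s * fs))


def compress(signal, fs, threshold_db=-20.0, ratio=4.0, attack_s=0.010, release_s=0.150):
    """Feed-forward compressor with separate attack and release time constants.

    All parameter defaults are illustrative assumptions, not measured values
    for the acoustic reflex.
    """
    a_att = one_pole_coeff(attack_s, fs)
    a_rel = one_pole_coeff(release_s, fs)
    env = 0.0
    out = []
    for x in signal:
        rectified = abs(x)
        # Track rising levels with the attack time and falling levels with the release time.
        coeff = a_att if rectified > env else a_rel
        env = coeff * env + (1.0 - coeff) * rectified
        env_db = 20.0 * math.log10(max(env, 1e-9))
        over_db = max(env_db - threshold_db, 0.0)
        gain_db = -over_db * (1.0 - 1.0 / ratio)  # shrink the overshoot by the ratio
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out


fs = 8000
# Half a second of a quiet 1 kHz tone (-40 dB peak), then half a second at full scale.
sig = [(0.01 if n < fs // 2 else 1.0) * math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]
out = compress(sig, fs)
print(max(abs(v) for v in out[fs // 2: fs // 2 + 16]))  # onset slips through before the attack acts
print(max(abs(v) for v in out[-800:]))                  # settled, gain-reduced level
```

The brief unreduced onset followed by steady gain reduction is the behavior that the attack time controls; a longer release makes the gain recover more slowly once the loud passage ends.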

The primary function of the middle ear bones is to mechanically amplify the airborne vibrations in preparation for their transfer to a liquid medium. Because liquid is much denser and less compressible than air, transferring energy from one medium to the other poses a potential problem: the two media require different amounts of force to set them in motion. The bones focus the vibrations of the relatively large eardrum and deliver them efficiently to the small oval window of the cochlea, and they also help protect the cochlea from excessive input. They act as an impedance converter, efficiently coupling the low-impedance air pressure variations to the higher-impedance fluid inside the cochlea.
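The size of the mismatch the ossicles must overcome can be estimated with the standard plane-wave transmission formula, T = 4 Z1 Z2 / (Z1 + Z2)^2. The sketch below assumes cochlear fluid behaves like water and uses commonly quoted approximations for the middle ear (an effective eardrum-to-footplate area ratio of about 17 and a lever ratio of about 1.3); none of these numbers come from this chapter. Under those assumptions a direct air-to-water boundary would pass only about 0.1 % of the incident energy (a loss near 30 dB), while the assumed middle-ear pressure gain of roughly 22 times (about 27 dB) recovers most of it.

```python
import math


def intensity_transmission(z1, z2):
    """Fraction of incident sound intensity transmitted across a plane boundary (normal incidence)."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


# Characteristic impedances rho * c in Pa*s/m. Treating cochlear fluid as water is an assumption.
Z_AIR = 1.204 * 343.0        # about 413
Z_WATER = 1000.0 * 1480.0    # about 1.48e6

t = intensity_transmission(Z_AIR, Z_WATER)
loss_db = -10.0 * math.log10(t)
print(f"air to water, no middle ear: {100 * t:.2f} % transmitted, {loss_db:.1f} dB loss")

# Assumed textbook approximations for the middle-ear transformer (not from this chapter).
AREA_RATIO = 17.0    # effective eardrum area / stapes footplate area
LEVER_RATIO = 1.3    # ossicular lever advantage
pressure_gain = AREA_RATIO * LEVER_RATIO
gain_db = 20.0 * math.log10(pressure_gain)
print(f"middle-ear pressure gain: about {pressure_gain:.0f}x ({gain_db:.1f} dB)")
```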

Because the cochlea is stimulated by mechanical vibrations, it can also be activated by vibrations of the surrounding temporal bone that do not come through the ear canal, an effect known as bone conduction. Although bone-conducted sound is much weaker than sound conducted through the middle ear bones, it is still audible. Bone conduction partly explains why our voices sound different in recordings than they do to us as we speak: recordings do not contain the internally conducted sound we hear through bone conduction.

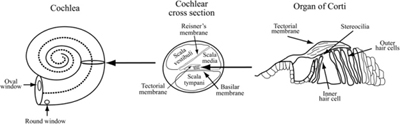

The cochlea (Figure 4-2) is a dual-purpose structure: it converts mechanical vibrations into neuronal electrical signals, and it separates the frequency content of the incoming sound into discrete frequency bands. It functions much like a spectrum analyzer, a device that breaks sounds or other signals into their component frequencies. It is, however, an imperfect analyzer: the bands overlap, so strong signals in one band also slightly stimulate adjacent ones, smearing the frequency analysis. It is up to the brain to sort out the raw data from the cochlea and produce the sensation we call hearing.
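The spectrum-analyzer comparison can be illustrated with a discrete Fourier transform (DFT). This is an engineering analogy only; the cochlea does not compute a DFT. The sketch below (sample rate, block length, and tone frequencies are arbitrary choices) analyzes a strong tone that falls exactly on a DFT bin and a weak tone that falls between bins: the first stays in one bin, while the second spreads into neighboring bins, loosely analogous to the overlap between cochlear bands.

```python
import cmath
import math


def dft_magnitudes(x):
    """Amplitude in each DFT bin from 0 Hz up to Nyquist (direct O(N^2) DFT, fine for short blocks)."""
    n = len(x)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        scale = 1.0 / n if k in (0, n // 2) else 2.0 / n  # one-sided amplitude scaling
        mags.append(scale * abs(acc))
    return mags


fs = 1024    # samples per second; with n = 256 each bin is fs / n = 4 Hz wide
n = 256
# Strong 100 Hz tone (exactly bin 25) plus a weak 203 Hz tone that falls between bins 50 and 51.
x = [1.0 * math.sin(2 * math.pi * 100 * i / fs) + 0.1 * math.sin(2 * math.pi * 203 * i / fs)
     for i in range(n)]
mags = dft_magnitudes(x)
for k in (24, 25, 26, 49, 50, 51, 52, 53):
    print(f"bin {k:3d} ({k * fs / n:4.0f} Hz): {mags[k]:.4f}")
```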

Figure 4-2 The structure of the cochlea. The oval window is in contact with the scala vestibuli and the round window is at the far end of the scala tympani. The scala vestibuli and scala tympani are connected at the apex of the cochlear coil.

COCHLEAR PHYSIOLOGY

The cochlea separates different frequencies by means of an array of sensing cells, the inner hair cells, that are mechanically stimulated by the movement of the two membranes between which they are connected. The membranes are set in motion by the fluid filling the cochlear chambers, which is in turn driven by the bones of the middle ear. As the traveling wave moves from the oval window toward the apex, different regions of the cochlear membranes reach their maximum displacement at different frequencies, so each frequency maximally stimulates a different place along the cochlea’s length. High frequencies stimulate the area nearest the oval window, and low frequencies drive the far end. Because of its placement, each inner hair cell responds to vibrations within a specific range of frequencies, and each is connected to nerve fibers that send signals to the brain. The bases of the hair cells are attached to the basilar membrane, and projections from their apical ends called stereocilia attach to a second overlying membrane, the tectorial membrane. As the cochlear fluid vibrates, it produces relative movement of the membranes that bends the stereocilia in a shearing motion, opening ion channels in the hair cells and depolarizing the affected cells.
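The place-to-frequency mapping along the cochlea is often approximated with Greenwood’s function, F = A(10^(a·x) - k), where x is the relative distance from the apex. It is not part of this chapter; the sketch below uses the commonly cited human parameters A = 165.4, a = 2.1, k = 0.88, which give roughly 20 Hz at the apex and roughly 20 kHz at the base near the oval window, consistent with high frequencies being detected nearest the oval window.

```python
import math


def greenwood_frequency(x, A=165.4, a=2.1, k=0.88):
    """Characteristic frequency (Hz) at relative position x along the basilar membrane.

    x = 0 at the apex (far end), x = 1 at the base (next to the oval window).
    A, a, and k are commonly cited fits for the human cochlea (assumed here).
    """
    return A * (10 ** (a * x) - k)


def greenwood_position(f, A=165.4, a=2.1, k=0.88):
    """Inverse map: relative position (0 = apex, 1 = base) whose characteristic frequency is f."""
    return math.log10(f / A + k) / a


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f}: {greenwood_frequency(x):8.0f} Hz")
```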

The voltage across a cell membrane is determined by the distribution of positive and negative ions on each side of it, usually with more sodium and calcium outside the cell and more potassium inside. In the resting state, cellular metabolic activity maintains these concentration differences, so that the cell interior is negatively charged relative to the extracellular fluid. In free solution, ions diffuse until their concentration is uniform. When a selectively permeable membrane separates different inside and outside concentrations, the concentration gradients create a voltage across the membrane, with a separate contribution from each ionic species. Ion channels embedded in the membrane let specific ions pass through it; they may be opened by chemicals, by the voltage across the membrane, or, in the case of inner hair cells, by mechanical means. When ions flow through open channels down their concentration gradients, the magnitude of the membrane voltage decreases and the cell is said to depolarize. When the membrane potential depolarizes enough to reach a threshold voltage, the hair cell releases excitatory neurotransmitter chemicals that trigger an action potential in the adjacent nerve fiber, which is then conducted to the brain through the auditory nerve.
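The contribution of each ionic gradient can be quantified with the Nernst equation, E = (RT / zF) ln(C_out / C_in), which gives the equilibrium voltage for one ion species. The concentrations below are assumed, typical textbook values for a generic mammalian cell, not hair-cell measurements; hair cells are an unusual case because their stereocilia are bathed in potassium-rich endolymph.

```python
import math

R = 8.314      # gas constant, J/(mol*K)
F = 96485.0    # Faraday constant, C/mol


def nernst_mv(z, conc_out_mM, conc_in_mM, temp_c=37.0):
    """Equilibrium (Nernst) potential of one ion species, inside relative to outside, in mV."""
    t_kelvin = temp_c + 273.15
    return 1000.0 * (R * t_kelvin) / (z * F) * math.log(conc_out_mM / conc_in_mM)


# Assumed concentrations (mM) for a generic cell: (valence, outside, inside).
ions = {
    "K+": (1, 5.0, 140.0),
    "Na+": (1, 145.0, 12.0),
    "Ca2+": (2, 2.0, 0.0001),
}
for name, (z, out_mM, in_mM) in ions.items():
    print(f"{name:5s} {nernst_mv(z, out_mM, in_mM):7.1f} mV")
```

The negative potassium potential is consistent with a negatively charged interior at rest; opening channels for ions whose equilibrium potential is less negative pulls the membrane toward depolarization.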

The cochlea is known to be extremely sharply tuned, a feature thought to be accentuated by an active feedback mechanism. A second variety of hair cell, the outer hair cell, is also attached between the basilar and tectorial membranes, but these cells receive neuronal inputs from the brain. They are thought to affect the tension of the tectorial membrane in localized areas, effectively sharpening the tuning of the cochlea. In fact, the cochlea can itself generate sounds that can be detected in the ear canal, a phenomenon known as otoacoustic emission. This system may be partly responsible for a form of distortion inherent in the auditory system: the generation of sum and difference frequencies when two separate sinusoidal sounds are presented to the ear.
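Sum and difference frequencies are what any nonlinear system produces when driven by two tones at once. The toy sketch below, which is not a model of outer-hair-cell mechanics, adds a small square-law term to a linear response (the 0.1 coefficient and the 1000 Hz / 1200 Hz tones are arbitrary assumptions) and measures the output at f2 - f1 = 200 Hz and f1 + f2 = 2200 Hz, where the input has no energy.

```python
import cmath
import math


def component_amplitude(signal, freq, fs):
    """Amplitude of the sinusoidal component at freq, measured with a single-bin DFT."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)


fs = 8000                 # one second of samples, so integer frequencies fall exactly on bins
f1, f2 = 1000.0, 1200.0
two_tones = [0.5 * math.sin(2 * math.pi * f1 * i / fs) + 0.5 * math.sin(2 * math.pi * f2 * i / fs)
             for i in range(fs)]

# Toy nonlinearity: a linear response plus a small square-law term (coefficient is arbitrary).
response = [x + 0.1 * x * x for x in two_tones]

for label, f in [("f2 - f1", f2 - f1), ("f1", f1), ("f2", f2), ("f1 + f2", f1 + f2)]:
    before = component_amplitude(two_tones, f, fs)
    after = component_amplitude(response, f, fs)
    print(f"{label:8s} {f:6.0f} Hz   input {before:.3f}   output {after:.3f}")
```

With these values the sum and difference components each come out at 0.025: the 0.1 coefficient times the product of the two 0.5 input amplitudes.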

Prolonged stimulation of the cochlea can deplete the metabolic energy available to the hair cells, raising the threshold of hearing by an amount that depends on the loudness of the exposure. This elevation, called threshold shift, can take a long time to recover: after exposure to loud sounds, the sensitivity of the auditory system can remain reduced for hours, as is often observed after a long session of playing or mixing loud music. The delicate hair cells are also susceptible to physical damage from excessive excitation. Overexposure to loud sound can destroy these cells, permanently diminishing hearing sensitivity at the frequencies whose detectors are damaged. Although early research indicates that some regeneration might be possible, the condition should be considered irreversible and avoided at all costs. Reducing exposure to loud sound should be a primary consideration for everyone working in audio.
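One widely used guideline for limiting exposure, which is not from this chapter, is the NIOSH recommended exposure limit: 85 dBA for 8 hours, with the allowed time halved for every 3 dB increase (other regulations, such as OSHA’s, use different criteria). The sketch below applies that rule; the session levels and durations are hypothetical.

```python
def permissible_hours(level_dba, criterion_dba=85.0, exchange_db=3.0, criterion_hours=8.0):
    """Allowed daily exposure (hours) at a given A-weighted level.

    Defaults follow the NIOSH recommended exposure limit: 85 dBA for 8 hours,
    halving the allowed time for every 3 dB increase.
    """
    return criterion_hours / 2 ** ((level_dba - criterion_dba) / exchange_db)


def daily_dose_percent(exposures):
    """Noise dose as a percentage of the daily allowance, for (level_dba, hours) pairs."""
    return 100.0 * sum(hours / permissible_hours(level) for level, hours in exposures)


for level in (85, 88, 91, 94, 100):
    print(f"{level} dBA: {60 * permissible_hours(level):.0f} minutes allowed")

# Hypothetical day: 3 hours of mixing at 88 dBA plus 1 hour of tracking at 94 dBA.
print(f"dose: {daily_dose_percent([(88, 3.0), (94, 1.0)]):.0f} %")
```

Under this rule, that hypothetical day already amounts to 175 % of the daily allowance, even though neither level seems extreme on its own.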

PERCEPTION OF SOUND

Exactly how our brains process auditory inputs and create the conscious awareness of hearing remains largely a mystery. Although research has elucidated much of the structure of the neuronal pathways and processing centers in the brain, the explanation of exactly how we perceive sound is still incomplete. Fortunately, to become adept at sound recording we need only appreciate the operational characteristics of the system as they apply to how we perceive sound.

Several critical features of our auditory system determine which characteristics of sounds we must preserve in our recordings. To preserve the cues we use to localize sound sources, we need to understand how we tell where in space sounds originate. To make accurate sound recordings, we need to be aware of how we perceive the amplitude and frequency information in sound waves. To manipulate sounds convincingly in the studio, we need to know how sounds behave in our environment.

Any sound originating in space reaches us through our two ears. Because the ears are several inches apart, sounds not originating directly ahead or behind reach them at slightly different times. Such sounds also strike the closer ear with slightly more energy, making that side sound louder. Using these relative time-of-arrival and loudness cues, we determine where in space a sound originated. We can also use these cues to fool the ear, as we do when placing sounds in different apparent positions in a studio mix. Preserving these cues is critical to making stereo recordings that capture the realism we desire. Sound striking one ear first is a very strong cue to the position of a sound source: if an element panned to one side of a mix is delayed by a few milliseconds, it can take many decibels of extra gain to make it seem as loud as the same element panned, undelayed, to the opposite side. The pan controls built into most mixers use only the loudness cue to position signals between left and right, ignoring the time-of-arrival difference.
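To put the time-of-arrival cue in perspective, the sketch below estimates interaural time difference (ITD) with Woodworth’s spherical-head approximation, ITD = (r / c)(θ + sin θ), and contrasts it with a level-only pan control. The head radius (8.75 cm) is an assumed average, and the sine/cosine constant-power pan law is one common choice rather than what any particular mixer implements. Under these assumptions the largest natural ITD, for a source directly to one side, is only about 0.66 ms, so a delay of a few milliseconds exceeds anything a real head produces.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C
HEAD_RADIUS = 0.0875    # m; an assumed average head radius


def woodworth_itd(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference (s) for a distant source, Woodworth's spherical-head formula.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side (valid for 0..90 degrees).
    """
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))


def constant_power_pan(position):
    """Left/right gains for a pan position in [-1, 1] (-1 = hard left, +1 = hard right).

    The sine/cosine law keeps L^2 + R^2 = 1, so total power is constant across the sweep.
    It changes only the level of each channel; it introduces no time difference.
    """
    angle = (position + 1.0) * math.pi / 4.0   # maps [-1, 1] to [0, pi/2]
    return math.cos(angle), math.sin(angle)


for az in (0, 15, 30, 60, 90):
    print(f"{az:2d} deg: ITD = {woodworth_itd(az) * 1e6:5.0f} us")

left, right = constant_power_pan(0.0)
print(f"center: L = {left:.3f}, R = {right:.3f} ({20 * math.log10(left):.1f} dB each)")
```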

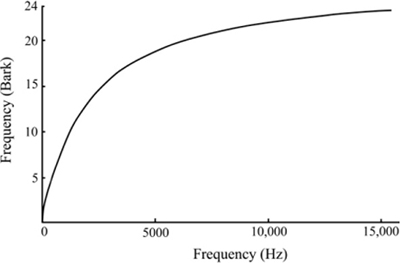

The phenomenon of masking is also important in sound recording. Cochlear regions that respond to the same frequency range are called critical bands. These bands are not fixed filters but rather localized areas of the cochlea that vibrate maximally at a characteristic range of frequencies. The width of the critical bands increases with frequency, so frequency resolution is sharper at low frequencies. Each band contains over a thousand individual hair cells that respond when that area of the tectorial and basilar membranes is deformed by vibration. Each critical band responds to the sum of the energy in its frequency range, so when two sounds of similar frequency differ sufficiently in level, we perceive only the louder one. This principle allows some types of noise reduction processing, and audio data compression methods such as MP3, to work. It also complicates mixing two sounds with similar frequency content. To describe masking in terms of these bands, a frequency scale called the Bark scale was devised; its 24 bands correspond to the cochlear critical bands. Figure 4-3 shows the correspondence between frequency and the Bark scale; the curve reflects the widening of critical bands as frequency increases. The Bark scale is used in perceptual audio coding research.
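For computation, the Bark scale is often approximated analytically. The sketch below uses the Zwicker and Terhardt (1980) formulas for critical-band rate and critical bandwidth; these are published approximations that are not taken from this chapter and may not match Figure 4-3 exactly. They place 1 kHz near 8.5 Bark and show the bandwidth growing from about 100 Hz at low frequencies to more than 2 kHz at 10 kHz.

```python
import math


def hz_to_bark(f):
    """Zwicker & Terhardt (1980) analytic approximation of critical-band rate (Bark)."""
    return 13.0 * math.atan(0.00076 * f) + 3.5 * math.atan((f / 7500.0) ** 2)


def critical_bandwidth_hz(f):
    """Zwicker & Terhardt (1980) approximation of critical bandwidth (Hz) at centre frequency f."""
    return 25.0 + 75.0 * (1.0 + 1.4 * (f / 1000.0) ** 2) ** 0.69


for f in (100, 500, 1000, 4000, 10000):
    print(f"{f:6d} Hz: {hz_to_bark(f):5.2f} Bark, band about {critical_bandwidth_hz(f):5.0f} Hz wide")
```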

Our perception of timbre depends on the frequency response of our auditory system. Because we do not perceive all frequencies as equally loud at the same intensity, any discussion of loudness requires a measure that takes frequency sensitivity into account. The unit used in loudness comparisons is the phon: the loudness level of a sound in phons equals the sound pressure level, in decibels, of a 1 kHz sine-wave tone judged to be as loud as that sound. Analogously, the mel is a unit of perceived pitch that relates physical frequency to pitch as listeners judge it. Both perceived pitch and loudness deviate from linearity with respect to frequency and amplitude.
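The nonlinear frequency-to-pitch relationship can be illustrated with a commonly used analytic mel formula, m = 2595 log10(1 + f / 700). This is one of several published fits, chosen here as an assumption; it is not taken from this chapter. It maps 1000 Hz to about 1000 mel, and the sketch shows equal 1 kHz steps in frequency producing progressively smaller steps in mel.

```python
import math


def hz_to_mel(f):
    """A widely used analytic mel approximation: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


# Equal 1 kHz steps in frequency give ever-smaller steps in perceived pitch.
freqs = [1000 * k for k in range(1, 9)]
for lo, hi in zip(freqs, freqs[1:]):
    print(f"{lo:5d} -> {hi:5d} Hz: +{hz_to_mel(hi) - hz_to_mel(lo):4.0f} mel")
```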

Figure 4-3 The Bark scale translates frequency into cochlear critical bands.

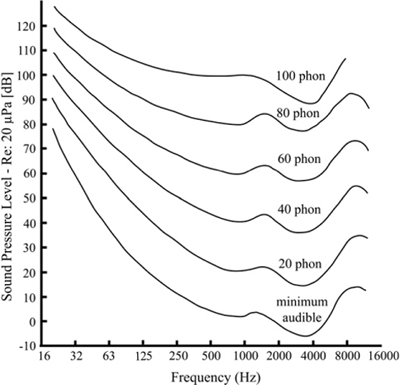

Due to the physical characteristics of the auditory system, we do not perceive all frequencies as equally loud at the same sound pressure level. Further, the way perceived loudness varies with frequency itself changes with the loudness level. The curves of equal loudness, often called Fletcher-Munson curves after the original researchers, show the effect of sound level on the spectral sensitivity of our auditory system. The curves are averages; individual measurements vary significantly. Fletcher and Munson used headphones and pure tones in their work; Robinson and Dadson later used loudspeakers to reproduce the pure tones in an anechoic room. The two sets of curves differ, but both show a peak of sensitivity at around 4 kHz, near the resonant frequency of the auditory canal, and a significant decrease in sensitivity at extreme frequencies, especially low frequencies. Figure 4-4 shows the latest data obtained by the ISO (International Organization for Standardization), which differ somewhat from the earlier research, especially at lower frequencies. Using pure tones to determine sensitivity produces different curves than using noise bands that stimulate the cochlea more broadly: each hair cell responds to a range of frequencies (its critical band), and narrow-band noise stimulates more of that range than a pure tone does. Also, because critical bands widen as frequency increases, a broadband noise stimulus places more energy in each higher-frequency band, somewhat increasing the apparent sensitivity at high frequencies relative to pure-tone measurements.
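A familiar engineering approximation of this frequency-dependent sensitivity is A-weighting, which was derived roughly from an inverted 40-phon equal-loudness contour. The sketch below evaluates the analog A-weighting formula given in IEC 61672-1; it is a fixed curve, so unlike the equal loudness curves it does not change with level, and it is offered here only as an illustration. It gives about 0 dB at 1 kHz and roughly -19 dB at 100 Hz.

```python
import math


def a_weighting_db(f):
    """A-weighting gain (dB) at frequency f (Hz), from the analog filter formula in IEC 61672-1.

    A fixed curve loosely based on the 40-phon contour; real loudness perception
    changes with level, which this single curve cannot capture.
    """
    f2 = f * f
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00


for f in (31.5, 63, 125, 250, 500, 1000, 2000, 4000, 8000, 16000):
    print(f"{f:7.1f} Hz: {a_weighting_db(f):6.1f} dB")
```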

Figure 4-4 Revised equal loudness curves (data from ISO 226:2003). The curves show how much sound pressure is required to sound equally loud at each frequency.

The flattening of the sensitivity curves has important implications for sound mixing: the level at which we listen affects our perception of the balance of frequencies present in the signal. As Figure 4-4 shows, the equal loudness curves flatten out at around 80 dB SPL, and, not coincidentally, that is close to the level often designated as the standard listening level, 85 dB SPL. Mixing at that average loudness helps ensure that the spectral balance will be perceived as correct at usual listening levels. At low listening levels, low frequencies sound weak, so there is a tendency to boost them; when that mix is played back at higher levels, the bass will sound exaggerated. It is therefore important to listen and mix at levels close to the intended listening level.

REFERENCES

International Organization for Standardization. (2003). Acoustics: Normal equal-loudness-level contours (ISO 226:2003).

Kessel, R. G., & Kardon, R. H. (1979). Tissues and Organs: A Text-Atlas of Scanning Electron Microscopy. W. H. Freeman and Company. ISBN 0-7167-0091-3.

Robinson, D. W., & Dadson, R. S. (1956). A re-determination of the equal loudness relations for pure tones. British Journal of Applied Physics, 7, 166–181.

SUGGESTED READING

Gulick, W. L., Gescheider, G. A., & Frisina, R. D. (1989). Hearing: Physiological Acoustics, Neural Coding, and Psychoacoustics. Oxford University Press. ISBN 0-19-504307-3.

Pickles, J. O. (1988). An Introduction to the Physiology of Hearing. Academic Press Limited. ISBN 0-12-554754-4. Chapters 1–5 detail the physiology; later chapters address the central nervous system and psychoacoustic principles based in the physiology of the auditory system.