Now, here, you see, it takes all the running you can do, to keep in the same place.

—Lewis Carroll, Through the Looking-Glass and What Alice Found There

Alice never could quite make out, in thinking it over afterwards, how it was that they began: all she remembers is, that they were running hand in hand, and the Queen went so fast that it was all she could do to keep up with her: and still the Queen kept crying “Faster! Faster!” but Alice felt she COULD NOT go faster, though she had not breath left to say so.

The most curious part of the thing was, that the trees and the other things round them never changed their places at all: however fast they went, they never seemed to pass anything. “I wonder if all the things move along with us?” thought poor puzzled Alice. And the Queen seemed to guess her thoughts, for she cried, “Faster! Don't try to talk!”

Not that Alice had any idea of doing THAT. She felt as if she would never be able to talk again, she was getting so much out of breath: and still the Queen cried “Faster! Faster!” and dragged her along. “Are we nearly there?” Alice managed to pant out at last.

“Nearly there!” the Queen repeated. “Why, we passed it ten minutes ago! Faster!” And they ran on for a time in silence, with the wind whistling in Alice's ears, and almost blowing her hair off her head, she fancied.

“Now! Now!” cried the Queen. “Faster! Faster!” And they went so fast that at last they seemed to skim through the air, hardly touching the ground with their feet, till suddenly, just as Alice was getting quite exhausted, they stopped, and she found herself sitting on the ground, breathless and giddy.

The Queen propped her up against a tree, and said kindly, “You may rest a little now.”

Alice looked round her in great surprise. “Why, I do believe we’ve been under this tree the whole time! Everything's just as it was!”

“Of course it is,” said the Queen, “what would you have it?”

“Well, in OUR country,” said Alice, still panting a little, “you'd generally get to somewhere else—if you ran very fast for a long time, as we've been doing.”

“A slow sort of country!” said the Queen. “Now, HERE, you see, it takes all the running YOU can do, to keep in the same place. If you want to get somewhere else, you must run at least twice as fast as that!” 1

When Lewis Carroll penned the above excerpt from his 1871 novel, Through the Looking-Glass and What Alice Found There, he hardly could have imagined his words would find staying power in today’s lexicon. What is now referred to as the Red Queen Race (also known as the Red Queen Hypothesis or Red Queen Effect) is a useful metaphor for an evolutionary interspecies arms race of sorts, whereby predators and prey can and must selectively mutate in response to the other’s capabilities to more successfully kill or survive. The notion of running as fast as one can to simply stay in the same place speaks to the accelerating challenges of survival (either as predator or prey) with each successive evolutionary mutation in its counterpart.

Take the most primitive of these species as an example: microbes. Staphylococcus aureus is one such pernicious variety, responsible for approximately 20,000 deaths in the United States annually. 2 Though it resides harmlessly in the nose or on the skin of some 30 percent of the population, Staphylococcus aureus is also known for insidiously penetrating its host via such pathways and causing infections ranging from treatable boils or abscesses to life-threatening pneumonia. In 1928, medicine fortuitously took a quantum leap forward when Alexander Fleming accidentally discovered a colony of mold growing alongside the staph (as it is more commonly known) bacteria in a petri dish. Where the mold was forming, the staph microbes were noticeably absent. Fleming named the antibacterial compound penicillin, marking one of medicine’s most notable discoveries in the process.

Penicillin’s rise to fame started on a sluggish climb before reaching meteoric momentum. It took ten years following Fleming’s discovery before Oxford researchers conducted the drug’s first clinical trial. Though the patient in question, a policeman suffering from a severe staph infection, ultimately died, his improvement within five days of receiving the drug (before supplies ran dry) was nothing short of remarkable. The medical community had its poster child for vanquishing infectious bacterial diseases. Penicillin was heralded as a miracle drug and supplies increased exponentially to treat Allied soldiers fighting in World War II.

As the general public gained access to the antidote in 1944, giddy with the prospect of eradicating menacing diseases, Fleming himself foretold a more sobering future. When accepting the Nobel Prize for his work in 1945, Fleming cautioned,

It is not difficult to make microbes resistant to penicillin in the laboratory by exposing them to concentrations not sufficient to kill them, and the same thing has occasionally happened in the body. 3

Indeed, less than five years following Fleming’s warning, antibiotic resistance promised a future where diseases were determined to survive. In one English hospital alone, the proportion of resistant staph infections quadrupled from 14 percent in 1946 to 59 percent just two years later. 4 What was happening to cause the once proclaimed miracle drug to fall so quickly from the utopian hype of disease eradication? Fleming had anticipated correctly that by stroke of evolutionary luck, some bacteria would be naturally resistant to even a wonder drug like penicillin due to one mutation in their genetic code. With enough bacteria to battle, the law of numbers would dictate that penicillin would ultimately encounter strains that were genetically blessed for resistance, as it were. But even Fleming had no idea just how adept bacteria are at surviving a new threat in their environment.

As it turns out, bacteria have an added advantage against medical warfare. Bacteria can actually transfer their antibiotic resistance to one another. These microbes are able to exchange small pieces of DNA called plasmids, the carriers of resistance to antibiotics, not only between parent and offspring but also from neighbor to neighbor. It’s this sharing behavior that is the single largest contributor to bacteria becoming drug resistant.

With the benefit of hindsight and an acute understanding of how microbes mutate, it should come as no surprise that penicillin’s effectiveness as a cure-all was limited from the start. Nature had already preprogrammed certain bacterial species, such as E. coli, to be impervious to penicillin’s effects from the very beginning. It was only a matter of time before these strains of bacteria passed their genetic advantage on to their neighbors, such as those malignant staph infections that fell early to penicillin’s might, giving their disadvantaged counterparts new genetic weaponry in the fight. And, the more antibiotics grew in popularity—from doses in livestock feed (given that antibiotics have also been found to enhance body weight) to prescriptions that may be unnecessary (such as using antibiotics to treat infections for which the cause is unknown) to patients failing to complete their entire dosage (which actually serves to make surviving microbes resilient)—the more resistant previously “curable” diseases became.

The Red Queen Race was on. Researchers scrambled to find new chemical compounds capable of fighting ever-evolving microbes. In a ten-year period, new antibiotics entered the scene—streptomycin, chloramphenicol, tetracycline, and erythromycin—each designed to take out a specific bacterial strain previously immune to defeat. 5 As scientists tinkered in their labs, they began adding synthetic improvements to nature’s raw materials, delivering a blow to infectious diseases that had acquired genetic mutations via the natural course of evolution. Methicillin, immune to enzymes that could destroy penicillin, debuted in 1960. Since enzymes were powerless against it, Staphylococcus aureus simply found another way—it acquired a mutant protein from a neighboring staph species, which rendered methicillin ineffective. Within two years following methicillin’s introduction, methicillin-resistant S. aureus (more commonly known as MRSA) became the latest dreaded bacterial infection invulnerable to antibiotic effect—manmade or otherwise.

On and on the race went—and still goes—with microbes mutating faster than scientists are able to respond. As additional varieties of antibiotics have entered the scene in more recent decades, innovation has slowed. Chemical adaptations could extend the life of former drugs, but not by more than a few years. At the same time, antibiotics became prolific and cheap, creating more difficult incentives for drug companies to invest in curing the seemingly incurable. And, finding new compounds capable of wiping out a bacterial strain, without doing the same to the host, is a crapshoot at best. Determining where a compound may fail is an unsolvable problem. Trial-and-error experimentation is costly, time-consuming, and uncertain. The money pit that results in building an arsenal for an escalating biological arms race is all too real—it takes approximately ten years and a billion dollars to bring one new drug to market. 6 And that figure doesn’t include the expense associated with fighting existing antibiotic-resistant infections, such as MRSA, which costs the US health care system up to $10 billion per year on its own. 7

All the while, deadly contagions have reached pandemic proportions, with MRSA-related staph infections infiltrating 60 percent of American hospitals in 2002 and killing more Americans than HIV (human immunodeficiency virus) and tuberculosis combined by 2005. 8 Indeed, the human race is running faster just to stay in the same place. And, the adversary continues to change its approach, literally down to its DNA, to overcome ever-sophisticated countermeasures—a familiar allegory to the cybersecurity race accelerating at this very moment.

Losing the Race

March 6 is an otherwise ordinary date without much fanfare. Don’t get us wrong—there are definitely historic events worth celebrating on that date, but their popularity appeals to niche audiences at best. Baseball fans, particularly fans of the New York Yankees, may drink a celebratory toast to late hero Babe Ruth for extending his contract with the franchise on that date in 1922. Londoners can thank the date for marking the introduction of minicabs in 1961. On that day in 1964, one Cassius Clay shed his name to become the great Muhammad Ali. Sure, these are momentous occasions in their own right, but otherwise hardly worthy of a major global phenomenon. Yet, in the weeks and days leading up to March 6, 1992, the world would learn the date meant something special to an anonymous hacker determined to have it live on in cybersecurity infamy.

As it turns out, March 6 is also the date of Michelangelo’s birthday. To commemorate the occasion, a hacker created a virus bearing the Renaissance man’s name, transferred via floppy disk and programmed to lie dormant until March 6, at which point unsuspecting victims would boot up their computers to unleash its curse and have it erase their hard drives. Thanks to some organizations realizing the virus was resident on their computers, the world had an early tip that Michelangelo was scheduled to detonate on that fateful day. Consumers and companies scrambled to disinfect potentially compromised computers and protect themselves from further infestation via the latest antivirus software, courtesy of burgeoning cybersecurity software vendors. As a major newspaper advised just three days before doomsday:

In other words, run, don’t walk, to your software store if you have recently “booted” your computer from a 5.25-inch floppy in the A drive. Buy a full-purpose antiviral program that does more than just snag the Michelangelo virus. . . . Such programs also scan new disks and programs to prevent future infections. 9

Hundreds of thousands of people dutifully obeyed, bracing themselves for an epic meltdown expected to crash up to a million computers. March 6, 1992, arrived with much anticipation and anxiety. The date so many feared would come with a bang left barely a whimper. In the end, no more than a few thousand computers were infested. Journalists turned their ire to those greedy cybersecurity software companies for hyperbolizing the potential effects and duping so many consumers into buying their wares.

As is customary after a major hype cycle, critics admonished the hysteria and attempted to bring the conversation back to reality: viruses like Michelangelo were automatically contained by locality. Unlike viruses of the biological variety that can spread freely, computer viruses in a pre-Internet age were confined by passage from one computer to the next, usually via floppy disk. As long as one didn’t receive an infected disk from a neighbor, the chances of being victimized by said virus’ plague were virtually nil. While some extremists estimated that up to 50 percent of an organization’s computers could be at risk for eventual infection, empirical data suggested the actual probability was far below 1 percent. 10 The widespread panic for Michelangelo and its ilk was quickly extinguished, convincing Joe Q. Public that a nasty superbug of the technical kind was still the imagination of science fiction zealots.

Even as the risk of infestation increased with the dawning of an Internet age, antivirus software packages seemed to largely address the threat. Once a form of malware was released and detected, the equivalent of its fingerprint was also captured. These antivirus software solutions were programmed to recognize the fingerprint—referred to as a signature in industry parlance—of known malware to prevent further infestation. It seemed that, for all intents and purposes, computer viruses were no more harmful than the common cold in many respects—affordably treated and somewhat inoculated.
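The fingerprint-matching idea described above can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that a signature is the SHA-256 digest of an entire file; commercial antivirus products use richer patterns than this. The class name, method names, and sample byte strings are hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Return a SHA-256 hex digest, used here as a stand-in for a malware signature."""
    return hashlib.sha256(data).hexdigest()


class SignatureScanner:
    """Toy scanner that only recognizes samples whose signature was captured earlier."""

    def __init__(self) -> None:
        self.known_signatures = set()

    def learn(self, captured_sample: bytes) -> None:
        # A signature can only be recorded after a sample has been seen in the wild.
        self.known_signatures.add(signature(captured_sample))

    def is_known_malware(self, data: bytes) -> bool:
        return signature(data) in self.known_signatures


scanner = SignatureScanner()
scanner.learn(b"toy-payload-v1")  # hypothetical captured sample

print(scanner.is_known_malware(b"toy-payload-v1"))  # True: fingerprint on file
print(scanner.is_known_malware(b"toy-payload-v2"))  # False: never seen before
```

Under these assumptions, the scanner is only as good as its list of previously captured fingerprints: anything it has not already seen passes unflagged.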

The days of ignorant bliss were short-lived. Much like their biological counterparts, these viruses, courtesy of their creators, simply mutated to avoid demise. Polymorphic viruses, capable of changing their signature on a regular basis to conceal their identity and intention, entered the scene, rendering existing antivirus software powerless against their evasion tactics. Within 20 years of the Michelangelo fizzle, those same antivirus software companies were again taking heat from critics—this time for developing a mousetrap that had purportedly far outlived its usefulness. With the number of new strains of malware increasing from one million in 2000 to nearly 50 million just ten years later, traditional antivirus capabilities struggled to keep pace, even as consumers and businesses spent billions per year attempting to protect an increasingly digital asset heap. 11 Critics pointed to studies showing that these software packages initially failed to identify up to 95 percent of malware, and to the days, weeks, months, or even years required to spot a new threat. 12 For such identification to occur, the malware in question would first need to strike at least one victim in order for its signature to be obtained. And, with more customized and targeted attacks on the rise, preventing one’s organization from being that dreaded Patient Zero required white hats to run faster still. In short, the black hats were winning the Red Queen Race.

The More Things Change . . .

Early scientific research on microbes was a precarious trade. In determining the qualities of a particular culture, researchers were confounded in how to study the microbe in a controlled environment, where it could behave normally, without simultaneously contaminating themselves in the process. In 1887, German microbiologist Julius Richard Petri invented a way for scientists to observe the behavioral properties of unknown microbes, safely and effectively. The petri dish, as it would fittingly be called, revolutionized microbiology and allowed researchers to examine not only the characteristics of potentially unsafe organisms but also the possible antidotes that could render them harmless.

Fast forward more than a century following Petri’s invention. Cybersecurity professionals, perplexed by zero-day attacks that seeped through porous antivirus software packages, were hard-pressed to identify these unknown threats before they had an opportunity to do widespread harm. Taking a page from Petri, what promised to be a more advanced approach to cybersecurity was launched as the latest weapon in the white hats’ arsenal—sandbox technology. Sandboxing was nothing new for the traditional information technology (IT) guard, who knew of the benefits of containing a new software release in a test environment, where all bugs could be identified and removed before deployment. Sandboxing in cybersecurity took a similar approach, though this time the software in question would be comparable to those unknown microbes in Petri’s day—potential malware attempting to circumvent traditional antivirus defenses to find its Patient Zero. At a very basic level, sandboxes were virtual petri dishes, allowing organizations to send any suspicious file or program into a contained environment, where it would operate normally and could be observed to determine if it was friend or foe. If the former, it was cleared for passage. If the latter, the sandbox would also act as the virtual bouncer, denying it entry.

Much like antivirus software before it, the early growth of sandbox technology was explosive. White hats rushed to purveyors of the latest silver bullet, designed to finally thwart the feared zero-day exploit. The sexiness of the new technology could hardly be ignored as industry analysts goaded overwhelmed cybersecurity professionals to adopt in droves, lest they face ridicule. In the words of one expert dispensing advice to these white hats:

Very soon, if you are not running these technologies and you’re a security professional, your colleagues and counterparts will start to look at you funny. 13

Again, similar to antivirus software, the world felt a little safer thanks to the latest cybersecurity remedy. And, at least in the beginning, adversaries were foiled in their attempts. However, it wouldn’t take long for threat actors to find new ways to dupe their intended targets, developing ever more sophisticated evasion techniques to consign sandboxing to the latest innovation with diminishing returns. Just two of the more common countermeasure techniques show how creative and intelligent adversaries can be in mutating their behavior.

The first can be loosely explained as playing dead. Borrowing from this instinctive animal behavior, malware authors program their creation to remain asleep while the anti-malware analysis of the sandbox runs its computation. Understanding that sandboxes are limited in the amount of time that can be spent observing a program for malevolent behavior (usually less than a few minutes), adversaries simply wait out these defensive appliances with an extended sleep function in their creation. Of course, any reasonable sandbox would quickly identify a program that did nothing, so black hats outsmart their counterparts by concealing their malware behind useless computation that gives the illusion of activity—all while the malevolent aspect of their code lies in wait. As long as the malware remains asleep while in the sandbox, it will likely be incorrectly assessed as benign, free to pass through the perimeter where it ultimately will awaken to inflict damage.
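The limited observation window can be modeled with a small Python sketch. This is a toy model rather than a description of any real sandbox product: the 120-second window, the sample names, and the `harmful_after` field are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToySample:
    name: str
    # Seconds after launch at which harmful behavior would begin; None means never.
    harmful_after: Optional[float]


def sandbox_verdict(sample: ToySample, observe_seconds: float = 120.0) -> str:
    """Flag a sample only if harmful behavior starts inside the observation window."""
    if sample.harmful_after is not None and sample.harmful_after <= observe_seconds:
        return "malicious"
    return "benign"


samples = [
    ToySample("clean-installer", None),
    ToySample("noisy-dropper", 5.0),
    ToySample("sleeper", 600.0),  # stays quiet far longer than the window
]

verdicts = {s.name: sandbox_verdict(s) for s in samples}
for name, verdict in verdicts.items():
    print(name, verdict)
```

In this model the "sleeper" is scored as benign simply because nothing harmful happens before the window closes. Lengthening `observe_seconds` would catch it, but at the cost of delaying every file that passes through.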

The second involves a technical judo of sorts that allows malware to readily identify a sandbox, based on its operating conditions, before it can do the same to the specimen in question. In particular, a sandbox is a machine, easily recognized as such by malware programmed to detect non-human cues. For instance, early sandboxes didn’t use mouse clicks. After all, there is no need for a machine to click a mouse when running its computation. Malware authors seized this vulnerability, programming their affliction to recognize a mouse click as a virtual cue that it was no longer operating in a potential sandbox and was now safe to wreak its havoc in the wild. Sandbox companies responded in kind by mimicking mouse clicks in later generations of their invention, but adversaries persist in mutating once more (such as waiting for a certain number of mouse clicks before detonating or measuring the average speed of page scrolls as an indicator of human behavior). In the end, it’s increasingly difficult to program human tendencies into a sandbox that is anything but.

Once again disillusioned by how quickly their lead in the Red Queen Race had vanished, white hats realized that sandbox technology was just the latest in a string of point solutions, effective for a brief period of time before adversaries adapted their countermeasures. The sandbox was just the latest cybersecurity defense mechanism to enter the scene. It had followed various firewall technologies, network detection innovations, and, of course, antivirus software capabilities before it. Each eventually was relegated to diminishing returns once black hats mutated their response.

Realizing that any defense mechanism would ultimately be rendered fallible, cybersecurity professionals struggled to find a new way forward. While the technologies in question relied on identifying malware to prevent it from doing harm (a form of virtual blacklisting), another approach involved first identifying sanctioned programs and files and only allowing their use within the organization (virtual whitelisting). While whitelisting can certainly prove useful in some instances, even it is not sustainable as the cure-all to the problem. With cloud and mobility all but obliterating the perimeter of the organization, whitelisting becomes unwieldy at best, leaving strained cybersecurity professionals as the last line of defense in authorizing applications and web sites from a seemingly infinite market and for an increasingly intolerant workforce. Whitelisting as the only means of cybersecurity is the equivalent of discovering a promising compound that not only eradicates disease but does the same to the host itself, though it certainly has its place as one additional weapon in an ever-growing arsenal. Once again, cybersecurity professionals are forced to run twice as fast simply to remain in the same spot—but it can at least be helpful to understand how the race favors the Red Queen from the onset.
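The difference between the two approaches comes down to the default decision for anything unrecognized, which a short Python sketch can make concrete. The function names and program names below are hypothetical and chosen only for illustration.

```python
def blacklist_allows(program: str, blocked: set) -> bool:
    """Blacklisting: everything runs unless it is known to be bad."""
    return program not in blocked


def whitelist_allows(program: str, approved: set) -> bool:
    """Whitelisting: nothing runs unless it has been explicitly sanctioned."""
    return program in approved


blocked = {"known-worm.exe"}
approved = {"payroll.exe", "mail-client.exe"}

for program in ["known-worm.exe", "payroll.exe", "brand-new-tool.exe"]:
    print(
        program,
        "blacklist:", blacklist_allows(program, blocked),
        "whitelist:", whitelist_allows(program, approved),
    )
```

The unrecognized `brand-new-tool.exe` is allowed by the blacklist and denied by the whitelist. If it is malicious, the blacklist has failed; if it is legitimate, someone must review and approve it before the whitelist lets it run, which is the operational burden described above.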

The Red Queen’s Head Start

One key question is, Why do we see this pattern where a new cybersecurity threat defense technology, like antivirus or sandboxing solutions, works well at first, but later falls apart or re-baselines to a new equilibrium of effectiveness? The answer, like so much else in this book, is driven by incentives. In this case, the confluence of the incentives for black hat attackers and white hat defenders, along with the forces of time and delay, create a cyclical pattern of efficacy.

Before the starting pistol is fired: Part 1 of this book focused on the black hat perspective and the asymmetric ability for adversaries to continuously innovate on how they will construct new attacks and expand the scope of their targets. In essence, this innovation phase commences before white hats even enter the race. Threat actors establish the problem statement. They dictate the rules of the race initially. The security industry must then respond with tools and technology that equip cybersecurity operations teams for the run. Typically, it is not until new techniques are used in the wild or published at security research venues that a refined problem statement is exposed and white hats are aware a new race has begun.

Getting out of the starting blocks: part of the challenge in understanding the problem statement from the security industry's perspective is to identify which threat vectors will mature into issues of enough impact and volume to enable a profitable defensive market. If a cybersecurity technology company predicts that a threat will be a major issue in the future and develops defensive technology and the threat does not materialize, the misstep will result in lost profits and an opportunity cost, since those resources could have been invested in more viable areas. As we noted earlier, ransomware was seen in its embryonic forms in the late 1980s; however, it did not reach pandemic proportions until the 2010s. If a zealous cybersecurity company had invested in defensive ransomware technology back in the 1980s, it would have faced a wait of roughly 30 years before the market realized a need. There are many potential areas that could represent the next wave of threat vectors, from attacking firmware to causing permanent destruction in future ransomware environments to moving into adjacent areas such as smart and connected devices and vehicles. The challenge in predicting “what’s next” causes many cybersecurity technology companies to take a more responsive approach and invest in defeating the latest high-impact threat techniques that have recently become real and damaging to potential customers who are willing to pay for a defense.

When a cybersecurity vendor initially builds a new defensive technology, incentive structures are the metaphorical wind at its back in the race. Specifically, this is where cybersecurity software purveyors cause black hats to pick up their pace. The earlier a cybersecurity technology is in its market maturity, the fewer customers will have deployed it as a countermeasure to the latest threat vector. If you are a black hat creating said threat vector, you must now accelerate the speed of propagating your scourge before white hats begin deploying the latest technology designed with countermeasures against it. As the market begins adopting the latest defense mechanism, black hats run into complexity—their pace is affected in the race. Therefore, there are incentives on both sides to move fast—black hats must ship their wares quickly and white hats must step up their defenses. For example, early viruses could be mass-produced and distributed as identical copies, as there was no concept of antivirus signature detection to find and remove them. Similarly, early malware could initially assume it had reached its target victim. There was no need for it to identify if it was, in fact, running in a forensic analysis sandbox. The implication of this phenomenon is that the first generation of a new defensive technology is typically very effective against the “in the wild” threat that it is targeting. Ironically, it takes time associated with market maturity of a particular defensive technology until the black hats are sufficiently incentivized to create countermeasures of their own.

The early pace of the race: there is an inherent lag between the development of a new defensive technology and its pervasive existence as a component of the cybersecurity defense landscape. In the early phase of the technology’s existence, it will have strong technical appeal due to its marked improvement in efficacy as compared to existing technologies. This benefit often leads to rapid acquisition and deployment; however, there are counterforces that slow adoption. Procurement processes, quality assurance cycles, and certification can add significant lag to operationalizing a new defensive technology. For example, IT needs to ensure that new capabilities don’t create incremental false positives or network or functional bottlenecks—both of which impede the productivity of the organization and slow the adoption curve.

The deployment ramp of a new defense technology will directly impact when and how aggressively threat actors must develop countermeasures, which directly affects their return on investment from their “products.” Rather than using their time and resources to distribute and operationalize their malware, adversaries are instead forced to divert their investment into researching and developing new countermeasures. A good way to think of this is with the following equation:

Where ICM (incentive to develop countermeasures and evasion tactics) is directly driven by the combination of how effective the new defensive technology is (E) and how pervasively deployed it is within the environments that are being targeted (DV). ICM starts very low when a technology is released, even if the efficacy is remarkably high, because deployed volume is still nascent. ICM then typically grows exponentially: almost all organizations are initially prevented from an immediate deployment by the internal processes associated with procurement and installation lead times, as stated above, but timelines quickly shorten once adoption starts. For example, once the quality and negative impact hurdles have been overcome by one Fortune 50 company, this tacit endorsement clears the way for the technology to be adopted as “proven” by others. This effect creates a snowball of customer references that exponentially builds upon itself in spurring adoption of the latest technology by white hats.
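The relationship among ICM, E, and DV can be explored numerically. The equation itself is not reproduced in this text, so the sketch below assumes the simplest form consistent with the description, a product of the two factors (ICM = E × DV). The doubling adoption curve, the starting share of 1 percent, and the efficacy of 0.95 are likewise hypothetical values chosen only to show the shape of the curve.

```python
def incentive_to_counter(efficacy: float, deployed_volume: float) -> float:
    """Assumed form: ICM is the product of efficacy (E) and deployed volume (DV)."""
    return efficacy * deployed_volume


def adoption_curve(start: float = 0.01, growth: float = 2.0, periods: int = 8) -> list:
    """Hypothetical snowballing adoption: the deployed share doubles each period, capped at 1.0."""
    share, curve = start, []
    for _ in range(periods):
        curve.append(min(share, 1.0))
        share *= growth
    return curve


efficacy = 0.95  # a highly effective first-generation defense (illustrative)
curve = adoption_curve()
icm = [incentive_to_counter(efficacy, dv) for dv in curve]

for period, (dv, value) in enumerate(zip(curve, icm)):
    print(f"period {period}: DV={dv:.2f}  ICM={value:.3f}")
```

Under these assumptions, ICM stays near zero for the first few periods despite the high efficacy, then climbs steeply as deployment snowballs, which matches the qualitative behavior described above.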

Changing the lead runner: when ICM hits a threshold that indicates that the new defensive technology is preventing maximized value to the adversary without offensive countermeasures, a tipping point is reached where the threat actor must develop an “upgrade” focused on circumventing and evading the new defense. Much like the first cycle of defense development favors early white hat deployment, this initial cycle of offensive countermeasure innovation is very much in favor of the black hat. Similar to a first generation of malicious technology being relatively simple to defeat with a new approach, adversaries developing their initial countermeasures are offered the maximum number of options to evade newly deployed defenses. Additionally, given that the defensive technology is typically sold as a commercial offering, it is possible for threat actors to procure (or steal) the technology to analyze exactly what they need to evade. Here again, the race shifts in favor of the black hat, who can painstakingly tweak every version of a malicious exploit. In contrast, cybersecurity professionals require stability of commercial offerings to be acceptable to IT operating environments. Whether polymorphism to defeat antivirus, sandbox fingerprinting (the technique for a piece of malware to determine if it is running in a sandbox) or delay tactics, the first set of countermeasures can redefine a defense technology from being a silver bullet to yet another element in the complex arsenal of defense.

The Red Queen advantage: the first cycles in development, deployment, and countermeasure creation are amplified by time incentives. At the end of the initial countermeasure development, most technologies will move into an equilibrium cycle where there will be both improvements in the core technology and evasion tactics. Yet, even during this equilibrium portion of the race, the advantage goes to the black hat, who enjoys virtually no restraints in one-upping his opponent. As sandbox fingerprinting alone illustrates, the difficulty in programming a sandbox to realistically mimic human behavior is a defensive complexity that can be readily exploited by adversarial countermeasures.

It’s grossly unfair. White hats are forced to run a race they neither started nor can win, as their opponent consistently gains an advantage in playing by a different set of rules. There will be some who read this chapter and inherently understand they are running a race they cannot complete, let alone win. Yet, at the same time, they won’t be able to resist fantasizing about the next great technology promising to turn the race finally in their favor.

For example, at the time of this writing, the industry is abuzz with the potential of machine learning, artificial intelligence, and big data analytics. Before being seduced by the next “it” technology, it is critical to first contemplate the full life cycle of its defensive capabilities and project how countermeasures and evasion tactics will impact both its near-term efficacy and its long-term effectiveness once it reaches an equilibrium point. With regard to our forward-looking example, we need to understand all the tools that black hats will use to confuse and poison our models. We need to imagine how excessive noise and false-positive bombardment will be used by the adversary to muddy the waters, because they will. Cybersecurity professionals, already inundated with too much noise in the system, will adjust their latest machine-learning savior to compensate for the false-positive deluge. In the process, they will unwittingly open the door for their adversary to strike with a highly targeted and devastating threat that circumvents even the smartest analytics. This is not to say that these capabilities and technologies are unimportant in our forward-looking architectures, but rather that we should not base their long-term value solely on the results we measure at initial launch and inception.

As hard as it is to accept, very often a deployed technology will have little to no incremental effectiveness within a corporate infrastructure. So why would anyone have deployed it? Technologies are implemented when they have either initial or equilibrium value that justifies the operational costs. In cases in which the equilibrium efficacy value is less than the ongoing costs, those defensive technologies should be removed from the environment to make way for others with higher impact.

Running the race in the same way—deploying one point product after another in a disintegrated fashion, convoluting one’s cybersecurity operations environment in the process—yields the advantage to the adversary every time. To flip the script on the Red Queen requires white hats to think differently about their approach to cybersecurity—one that places speed on their side.

A Second Thought

There is no such thing as a super antibiotic capable of killing all potential bacterial microbes, any more than there is an equivalent in cybersecurity. As previously discussed, there are multiple ways through which predators can encroach upon a virtual castle’s “keep.” In an effort to protect their organization’s expanding digital footprint, cybersecurity professionals have rushed headlong into purchasing the latest cybersecurity technology, creating several problems:

The latest cybersecurity technology’s effectiveness reaches diminishing returns the more widely it is deployed in the market. Here is another interesting observation where cybersecurity departs from traditional IT notions: In cybersecurity, it usually doesn’t pay to be a late adopter. While traditional IT favors followers who adopt technologies once others have had an opportunity to work out the bugs and bring down the price point (after all, who wants to be the market guinea pig?), cybersecurity follows the exact opposite pattern. Similar to microbes that build up a resistance as antibiotics are more widely distributed, cybercriminals quickly learn how to mutate their creations to circumvent cybersecurity technology that stands in their way. First movers of cybersecurity technology, such as early adopters of antivirus or sandbox solutions, saw immediate benefit from their investments. But the more the market adopts the latest cyber wonder product, the more incentive adversaries have to develop countermeasures. Being first often pays in cybersecurity.

Deploying the latest cybersecurity technologies creates other challenges for white hats. As discussed in Chapter 7, there simply aren’t enough white hats in the race. Oftentimes, the best laid intentions of implementing the latest innovation literally collect dust on the shelf as cybersecurity professionals struggle to install the new wares in their environment. This dichotomy of needing to move quickly despite not having sufficient resources to operationalize and maintain the market’s latest widget creates enormous pressure for white hats to run faster still.

Maximizing cybersecurity return on investment (ROI) becomes a race against time itself. If there is no such thing as a particular product capable of eradicating all threats permanently, then the existing race is not a fair match. Black hats will always win if white hats run the race set in their adversaries’ favor. Instead of pursuing the elusive better product, cybersecurity professionals are better served striving for a superior platform, one that allows for swift onboarding of new technologies over an architecture backed by common tools and workflows, along with automation and orchestration capabilities to lessen the burden on an already strained workforce. As cybersecurity software companies continue to evolve any given brainchild deployed in this environment, the technology in question moves toward a sustaining steady state of innovation. This level of innovation does not exceed that of the initial technology breakthrough but rather progressively responds to each successive mutation by threat actors, thereby extending the life of the product in question (much the same way pharmaceutical adaptations can prolong the effectiveness of drugs, if only by a few years).

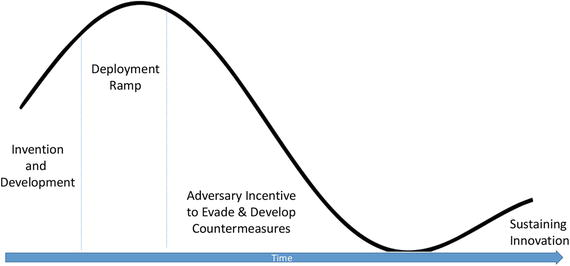

In short, new cybersecurity technologies generally follow the threat effectiveness curve shown in Figure 8-1.

Figure 8-1. Grobman’s Curve of Threat Defense Effectiveness

The goal for cybersecurity professionals becomes deploying new technologies as quickly as possible with the lowest level of effort to capitalize on the threat effectiveness associated with early adoption. In turn, the goal for cybersecurity software vendors is to minimize the slope of descending threat effectiveness by anticipating future offensive countermeasures and building resiliency against these evasion tactics into their creations to prolong product utility. The result is an approach that maximizes the speed and ROI of cybersecurity investments: cybersecurity operations shift the bull’s eye as far left on the curve as possible with the lowest level of effort, while the software vendor community focuses on decreasing the slope of descent by delivering greater product resiliency (Figure 8-2).

Figure 8-2. Grobman’s Curve of Maximizing Defense Effectiveness

Grobman’s Curve, named after the book’s lead author, provides a different perspective through which to examine an organization’s cybersecurity posture. New cybersecurity technologies will come and go. It’s the nature of an industry where human beings on the good and bad side of the fight are incentivized to continue mutating their approach. In this escalating race, cybersecurity professionals are outmatched given the reality of Grobman’s Curve, which suggests that, just as many are deploying the latest cybersecurity defense mechanism, its effectiveness is due to deteriorate significantly because adversaries have had sufficient time to develop successful evasion techniques. White hats are always disadvantaged in this race if they do not reexamine their approach. An integrated platform that enables swift adoption of the latest widget allows these professionals to maximize ROI, elongate the effectiveness of their deployed products, and establish a framework through which running faster results in going farther.

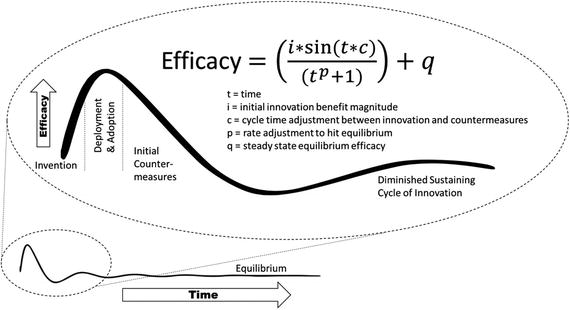

It’s important not to think of the curve as a single oscillation but, rather, a sequence of volleyed innovation by the black and white hats working to counter each other’s tactics. At each cycle, the amount of effort increases as low-hanging fruit dries up. The result is that defensive technologies will find an equilibrium for their general effectiveness driven by the continuous innovation and evasion investments made by the technologists on both sides of the equation. Although the general pattern is common for all technologies, the exact shaping of the curve and speed to equilibrium will vary for every technology. One way to represent this mathematically would be with the approach shown in Figure 8-3.

Figure 8-3. Finding an equilibrium

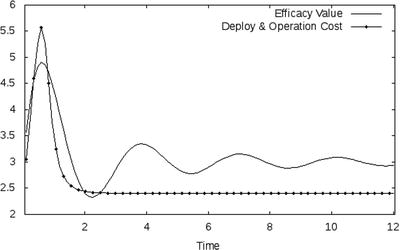
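The figure itself is not reproduced in this text, so the following is only one plausible way to express the idea in code: a damped oscillation whose swings shrink with each volley until efficacy settles at an equilibrium. The functional form and every parameter name and value (`equilibrium`, `initial_boost`, `decay`, `period`) are assumptions chosen for illustration, not values taken from Figure 8-3.

```python
import math


def efficacy(t, equilibrium=0.4, initial_boost=0.5, decay=0.5, period=2.0):
    """Illustrative efficacy of a defensive technology at time t (t >= 0).

    Assumed model: efficacy starts at equilibrium + initial_boost, swings as
    attackers and defenders trade countermeasures, and the exponential
    envelope shrinks each swing until the value settles at `equilibrium`.
    All units are arbitrary.
    """
    envelope = initial_boost * math.exp(-decay * t)
    return equilibrium + envelope * math.cos(2 * math.pi * t / period)


# Sample the curve every half time unit to see the swings die out.
for step in range(0, 21):
    t = step * 0.5
    print(f"t={t:4.1f}  efficacy={efficacy(t):.3f}")
```

Under these assumptions, a larger `decay` means the technology reaches equilibrium sooner, and a higher `equilibrium` corresponds to a technology that retains more value after adversaries have adapted.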

By grasping this phenomenon, key questions can help guide the selection and adoption of technologies by understanding the efficacy pattern and examining it along with the cost and complexity of deploying a solution.

When a technology has an equilibrium efficacy value that is greater than its operational costs in the long run, it will generally make sense to adopt, regardless of the point in time on the curve. A good example of this is deterministic signature-based anti-malware. This mature technology is key to reducing the volume of threats that need to be analyzed with more sophisticated approaches, and it delivers a reasonable return on the cost structure required to operate the capability. One could think of this scenario as looking like the curve in Figure 8-4.

Figure 8-4. Long-term positive ROI

There are clearly benefits to deploying early, but given that the equilibrium efficacy value is greater than the operational costs at equilibrium, the technology makes sense in the long run regardless of when it is deployed. Contrast this with an example where benefits are strong at first but the long-term efficacy collapses below the operational management costs (Figure 8-5).

Figure 8-5. Short-term benefit, version A

In this case, the long-term value cannot be realized, and the resources spent maintaining the capability in the long run would likely be better used elsewhere. The caveat is that if it were possible to do a rapid deployment right when the technology is launched and then remove it from the environment once its value fades, the same curve would look like Figure 8-6.

Figure 8-6. Short-term benefit, version B

Although this is theoretically possible, many of the point products and technologies that exhibit this type of curve will have significant deployment challenges in their initial offering, decreasing the viability of a comprehensive deployment in the initial amplified oscillation of efficacy.
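The three scenarios in Figures 8-4 through 8-6 can be compared with a little arithmetic. The sketch below is a simplification under stated assumptions: efficacy is modeled as a smooth exponential decline toward an equilibrium (ignoring the oscillation), value and cost share the same arbitrary units, and all numbers and function names are hypothetical rather than drawn from the figures.

```python
import math


def efficacy_value(t, equilibrium, initial_boost, decay=0.5):
    """Assumed efficacy value in period t: an early boost decaying to equilibrium."""
    return equilibrium + initial_boost * math.exp(-decay * t)


def net_value(equilibrium, initial_boost, operating_cost,
              deploy_at=0, remove_at=None, horizon=20):
    """Sum of (efficacy value - operating cost) over the periods deployed.

    The technology runs from period `deploy_at` up to, but not including,
    `remove_at` (or `horizon` if it is never removed).
    """
    end = horizon if remove_at is None else min(remove_at, horizon)
    return sum(
        efficacy_value(t, equilibrium, initial_boost) - operating_cost
        for t in range(deploy_at, end)
    )


# Long-term positive ROI: equilibrium value (0.5) exceeds cost (0.3).
early = net_value(0.5, 0.5, 0.3)
late = net_value(0.5, 0.5, 0.3, deploy_at=10)

# Short-term benefit: equilibrium value (0.2) falls below cost (0.3).
kept_forever = net_value(0.2, 0.6, 0.3)               # version A
removed_early = net_value(0.2, 0.6, 0.3, remove_at=4)  # version B

print(f"long-term, early deploy:   {early:.2f}")
print(f"long-term, late deploy:    {late:.2f}")
print(f"short-term, kept forever:  {kept_forever:.2f}")
print(f"short-term, removed early: {removed_early:.2f}")
```

With these made-up numbers, the long-term technology pays off whenever it is deployed (though more when deployed early), while the short-term technology only pays off if it is removed before its efficacy drops below its operating cost.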

The question then becomes: is there a way to use the curve to the white hat’s advantage? The solution is a platform approach (Figure 8-7), in which a pipeline of technologies flows through a framework that manages deployment and messaging, combined with supplier business models that allow access to technologies without requiring a full procurement cycle. This allows a pipeline of defensive measures with leading-edge efficacy to be practically deployed into an environment. Each technology in the pipeline can then be efficiently managed to support either a long-term sustaining role in the configuration or a short-term benefit, with removal once countermeasures are identified that defeat its ROI.

Figure 8-7. Platform approach
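As a rough illustration of the review step such a platform would make cheap, the sketch below sorts deployed technologies into those worth sustaining and those to retire. It is a minimal sketch, not a description of any real product: the `Technology` record, the `review_pipeline` function, the technology names, and the numbers are all hypothetical, and it assumes efficacy and cost can be measured in comparable units.

```python
from dataclasses import dataclass


@dataclass
class Technology:
    name: str
    current_efficacy: float  # measured value delivered per period (assumed units)
    operating_cost: float    # cost to run per period (same assumed units)


def review_pipeline(deployed):
    """Split deployed technologies into (sustain, retire) lists.

    A technology is sustained while its current efficacy covers its
    operating cost and retired once countermeasures push it below that.
    """
    sustain = [t for t in deployed if t.current_efficacy >= t.operating_cost]
    retire = [t for t in deployed if t.current_efficacy < t.operating_cost]
    return sustain, retire


# Hypothetical snapshot of a deployed pipeline.
pipeline = [
    Technology("signature_antimalware", current_efficacy=0.5, operating_cost=0.3),
    Technology("first_gen_sandbox", current_efficacy=0.2, operating_cost=0.3),
    Technology("new_detection_widget", current_efficacy=0.9, operating_cost=0.4),
]

sustain, retire = review_pipeline(pipeline)
print("sustain:", [t.name for t in sustain])
print("retire: ", [t.name for t in retire])
```

The point of the sketch is the workflow rather than the rule itself: when onboarding and removal are inexpensive, this review can run continuously instead of once per procurement cycle.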

Thankfully, a different approach may also be the answer in the biological race that started this chapter. Scientists have discovered a new antibiotic, teixobactin, which attacks microbes in a very distinct way. Rather than hitting one defined target, such as an important protein in a particular cell, teixobactin hits two targets, both of which are polymers that build the bacterial cell wall. 14 Unlike a cell’s proteins, which are encoded by DNA that can change through mutation and through gene sharing between microbial neighbors, these polymers are assembled by enzymes rather than encoded directly by genes. In short, there is nothing to mutate. In shifting the antibiotic approach, researchers may just have discovered how to avert an impending superbug crisis. That would be a good thing, since the first superbug resistant to all antibiotics of last resort entered the United States in 2016. While the Red Queen Race accelerates, choosing to run it a different way actually results in getting somewhere in The Second Economy.

Notes

Lewis Carroll, “2: The Garden of Live Flowers,” in Martin Gardner (ed.), Through the Looking-Glass and What Alice Found There (The Annotated Alice ed.) (New York: The New American Library, 1998 [1871]), p. 46. https://ebooks.adelaide.edu.au/c/carroll/lewis/looking/chapter2.html . Accessed July 24, 2016.

E. Klein, D. L. Smith, and R. Laxminarayan, “Hospitalizations and deaths caused by methicillin-resistant Staphylococcus aureus, United States, 1999–2005,” Emerging Infectious Diseases, December 2007, wwwnc.cdc.gov/eid/article/13/12/07-0629 , accessed July 24, 2016.

Alexander Fleming, Penicillin, Nobel Lecture, December 11, 1945, www.nobelprize.org/nobel_prizes/medicine/laureates/1945/fleming-lecture.pdf , accessed July 25, 2016.

Katherine Xue, “Superbug: An Epidemic Begins,” Harvard Magazine, May-June 2014, http://harvardmagazine.com/2014/05/superbug , accessed July 26, 2016.

Ibid.

Ibid.

Klein et al., note 2 supra.

Xue, note 4 supra.

Peter H. Lewis, “Personal Computers: Safety in Virus Season,” The New York Times, March 3, 1992, www.nytimes.com/1992/03/03/science/personal-computers-safety-in-virus-season.html , accessed July 27, 2016.

John Markoff, “Technology; Computer Viruses: Just Uncommon Colds After All?,” The New York Times, November 1, 1992, www.nytimes.com/1992/11/01/business/technology-computer-viruses-just-uncommon-colds-after-all.html , accessed July 27, 2016.

Nicole Perlroth, “Outmaneuvered at Their Own Game, Antivirus Makers Struggle to Adapt,” The New York Times, December 31, 2012, www.nytimes.com/2013/01/01/technology/antivirus-makers-work-on-software-to-catch-malware-more-effectively.html?_r=0 , accessed July 28, 2016.

Ibid.

Ibid.

Cari Romm, “A New Drug in the Age of Antibiotic Resistance,” The Atlantic, January 7, 2015, www.theatlantic.com/health/archive/2015/01/a-new-drug-in-the-age-of-antibiotic-resistance/384291/ , accessed July 29, 2016.

Matthew L. Holding, James E. Biardi, and H. Lisle Gibbs, “Coevolution of venom function and venom resistance in a rattlesnake predator and its squirrel prey,” Proceedings of the Royal Society B, April 27, 2016, http://rspb.royalsocietypublishing.org/content/283/1829/20152841.full , accessed July 29, 2016.