1. The Security Process

You've just hung up the phone after speaking with a user who reported odd behavior on her desktop. She received a pop-up message that said “Hello!” and she doesn't know what to do. While you listened to her story, you read a trouble ticket opened by your network operations staff noting an unusual amount of traffic passing through your border router. You also noticed the delivery of an e-mail to your abuse account, complaining that one of your hosts is “attacking” a small e-commerce vendor in Massachusetts. Your security dashboard joins the fray by offering its blinking red light, enticing you to investigate a possible intrusion by external parties.

Now what?

This question is familiar to anyone who has suspected one or more of their computers have been compromised. Once you think one of your organization's assets has been exploited, what do you do next? Do you access the suspect system and review process tables and directory listings for improper entries? Do you check firewall logs for odd entries, only to remember that you (like most organizations) record only traffic rejected by the firewall?1 (By definition, rejected traffic can't hurt you. Only packets allowed through the firewall have any effect, unless the dropped packets are the result of a denial-of-service attack.) Do you hire consultants who charge $200+ per hour, hoping they can work a one-week miracle to solve problems your organization created during a five-year period?

There must be a better way. My answer is network security monitoring (NSM), defined as the collection, analysis, and escalation of indications and warnings to detect and respond to intrusions. This book is dedicated to NSM and will teach you the tools and techniques to help you implement NSM as a model for security operations. Before describing the principles behind NSM, it's helpful to share an understanding of security terminology. Security professionals have a habit of using multiple terms to refer to the same idea. The definitions here will allow us to understand where NSM fits within an organization's security posture. Readers already familiar with security principles may wish to skim this chapter for highlighted definitions and then move directly to Chapter 2 for a more detailed discussion of NSM.

What Is Security?

Security is the process of maintaining an acceptable level of perceived risk. A former director of education for the International Computer Security Association, Dr. Mitch Kabay, wrote in 1998 that “security is a process, not an end state.”2 No organization can be considered “secure” for any time beyond the last verification of adherence to its security policy. If your manager asks, “Are we secure?” you should answer, “Let me check.” If he or she asks, “Will we be secure tomorrow?” you should answer, “I don't know.” Such honesty will not be popular, but this mind-set will produce greater success for the organization in the long run.

During my consulting career I have met only a few high-level executives who truly appreciated this concept. Those who believed security could be “achieved” were more likely to purchase products and services marketed as “silver bullets.”3 Executives who grasped the concept that security is a process of maintaining an acceptable level of perceived risk were more likely to commit the time and resources needed to fulfill their responsibilities as managers.

The security process revolves around four steps: assessment, protection, detection, and response (see Figure 1.1).4

- Assessment is preparation for the other three components. It's stated as a separate action because it deals with policies, procedures, laws, regulations, budgeting, and other managerial duties, plus technical evaluation of one’s security posture. Failure to account for any of these elements harms all of the operations that follow.

- Protection is the application of countermeasures to reduce the likelihood of compromise. Prevention is an equivalent term, although one of the tenets of this book is that prevention eventually fails.

- Detection is the process of identifying intrusions. Intrusions are policy violations or computer security incidents. Kevin Mandia and Chris Prosise define an incident as any “unlawful, unauthorized, or unacceptable action that involves a computer system or a computer network.”5

As amazing as it may sound, external control of an organization's systems is not always seen as a policy violation. When confronting a determined or skilled adversary, some organizations choose to let intruders have their way—as long as the intruders don't interrupt business operations.6 Toleration of the intrusion may be preferred to losing money or data.

- Response is the process of validating the fruits of detection and taking steps to remediate intrusions. Response activities include “patch and proceed” as well as “pursue and prosecute.” The former approach focuses on restoring functionality to damaged assets and moving on; the latter seeks legal remedies by collecting evidence to support action against the offender.

Figure 1.1. The security process

With this background, let's discuss some concepts related to risk.

What Is Risk?

The definition of security mentioned risk, which is the possibility of suffering harm or loss. Risk is a measure of danger to an asset. An asset is anything of value, which in the security context could refer to information, hardware, intellectual property, prestige, and reputation. The risk should be defined explicitly, such as “risk of compromise of the integrity of our customer database” or “risk of denial of service to our online banking portal.” Risk is frequently expressed in terms of a risk equation, where

risk = threat × vulnerability × asset value

Let's explore the risk equation by defining its terms in the following subsections.

Threat

A threat is a party with the capabilities and intentions to exploit a vulnerability in an asset. This definition of threat is several decades old and is consistent with the terms used to describe terrorists. Threats are either structured or unstructured.

Structured threats are adversaries with a formal methodology, a financial sponsor, and a defined objective. They include economic spies, organized criminals, terrorists, foreign intelligence agencies, and so-called information warriors.7

Unstructured threats lack the methodology, money, and objective of structured threats. They are more likely to compromise victims out of intellectual curiosity or as an instantiation of mindless automated code. Unstructured threats include “recreational” crackers, malware without a defined objective beyond widespread infection, and malicious insiders who abuse their status.

Some threats are difficult to classify, but structured threats tend to be more insidious. They pursue long-term systematic compromise and seek to keep their unauthorized access unnoticed. Unstructured threats are less concerned with preventing observation of their activities and in many cases seek the notoriety caused by defacing a Web site or embarrassing a victim.
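The structured/unstructured split can be sketched as a simple classification over the three traits named above: methodology, money, and objective. This is a deliberately crude sketch; the `Adversary` type and `classify` function are hypothetical, and treating any missing trait as "unstructured" is an assumption, since the text notes some threats are hard to classify at all.

```python
from dataclasses import dataclass


@dataclass
class Adversary:
    """Hypothetical record of the traits that separate structured threats."""
    name: str
    has_methodology: bool  # follows a formal methodology
    has_sponsor: bool      # backed by a financial sponsor
    has_objective: bool    # pursues a defined objective


def classify(adversary: Adversary) -> str:
    """Return 'structured' only when all three traits are present.

    Assumption: any missing trait is treated as 'unstructured'; real
    adversaries often fall somewhere in between.
    """
    if adversary.has_methodology and adversary.has_sponsor and adversary.has_objective:
        return "structured"
    return "unstructured"


spy = Adversary("economic spy", True, True, True)
cracker = Adversary("recreational cracker", False, False, False)
print(classify(spy))      # structured
print(classify(cracker))  # unstructured
```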

A few examples will explain the sorts of threats we may encounter. First, consider a threat to the national security of the United States. An evil group might hate the United States, but the group poses a minor threat if it doesn't have the weapons or access to inflict damage on a target. The United States won't create task forces to investigate every little group that hates the superpower.

Moving beyond small groups, consider the case of countries like Great Britain or the former Soviet Union. Great Britain fields a potent nuclear arsenal with submarines capable of striking the United States, yet the friendship between the two countries means Great Britain is no threat to American interests (at least as far as nuclear confrontation goes).8 In the 1980s the Soviet Union, with its stockpile of nuclear forces and desire to expand global communism, posed a threat. That nation possessed both capabilities and intentions to exploit vulnerabilities in the American defensive posture.

Let's move beyond national security into the cyber realm. A hacking group motivated by political hatred of oil companies and capable of coding attack tools designed for a specific target could be a threat to the Shell oil company. An automated worm unleashed by a malicious party is a threat to every target of the worm's attack vector. A frustrated teenager who wants to “hack” her boyfriend's e-mail account but doesn't understand computers is not a threat. She possesses the intentions but not the capabilities to inflict harm.
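The examples above reduce to one rule: a party is a threat only when it has both capabilities and intentions. A minimal sketch of that rule, assuming each quality can be reduced to a yes/no judgment (real threat analysis grades both), might look like this; the `Party` type and `is_threat` function are illustrative names, not part of any tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Party:
    name: str
    capabilities: bool  # can the party actually inflict harm on the asset?
    intentions: bool    # does the party want to?


def is_threat(party: Party) -> bool:
    # Per the definition in the text, a threat needs BOTH qualities.
    return party.capabilities and party.intentions


examples = [
    Party("Soviet Union (1980s)", capabilities=True, intentions=True),
    Party("Great Britain", capabilities=True, intentions=False),
    Party("teenager targeting boyfriend's e-mail", capabilities=False, intentions=True),
]

for p in examples:
    print(f"{p.name}: {'threat' if is_threat(p) else 'not a threat'}")
```

Only the first party is reported as a threat: Great Britain lacks the intentions and the teenager lacks the capabilities.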

Threats are expressed within threat models, which are descriptions of the environment into which an asset is introduced. The threat model for the early Internet did not include malicious hackers. The threat model for early Microsoft Windows products did not encompass globally interconnected wide area networks (WANs). The deployment of an asset outside the threat model for which it was designed leads to exploitation. The method by which a threat can harm an asset is an exploit. An exploit can be wielded in real time by a human or can be codified into an automated tool.

The process by which the intentions and capabilities of threats are assessed is called threat analysis. The Department of Homeland Security (DHS) uses the color-coded chart of its Homeland Security Advisory System to express the results of its threat analysis process.9 This chart is a one-word or one-color summarization of the government's assessment of the risk of loss of American interests, such as lives and property. The system has been criticized for its apparent lack of applicability to ordinary Americans. Even when the DHS announces an orange alert (a high risk of terrorist attacks), government officials advise the public to travel and go to work as normal. Clearly, these warnings are more suited to public safety officials, who alter their levels of protection and observation in response to DHS advisories.

DHS threat conditions (ThreatCons) are based on intelligence regarding the intentions and capabilities of terrorist groups to attack the United States. When the DHS ThreatCon was raised to orange in February 2003, the decision was based on timing (the conclusion of the Muslim pilgrimage, or hajj), activity patterns showing intent (the bombings of a nightclub in Bali in October 2002 and a hotel in Mombasa, Kenya, in November 2002), and capabilities in the form of terrorist communications on weapons of mass destruction.10 Security professionals can perform the same sorts of assessments for the “computer underground,” albeit with lesser tools for collecting information than those possessed by national agencies.

Vulnerability

A vulnerability is a weakness in an asset that could lead to exploitation. Vulnerabilities are introduced into assets via poor design, implementation, or containment.

Poor design is the fault of the creator of the asset. A vendor writing buggy code creates fragile products; clever attackers will exploit the software's architectural weaknesses. Implementation is the responsibility of the customer who deploys a product. Although vendors should provide thorough documentation on safe use of their wares, customers are ultimately responsible for how they deploy and configure the product. Containment refers to the product's ability to prevent use beyond its intended purpose. A well-designed software product should perform its intended function and do no more. A Web server intended to publish pages in the inetpub/wwwroot directory should not allow users to escape that folder and access the command shell. Decisions made by vendors and customers affect containment.
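The inetpub/wwwroot example describes a containment check. One common way to implement it is to canonicalize the requested path first and then confirm the result still lies under the web root. The sketch below assumes a POSIX filesystem and Python 3.9+ (for `Path.is_relative_to`). The root directory and the `resolve_request` helper are hypothetical, not how IIS or any particular server does it.

```python
from pathlib import Path

# Hypothetical web root, modeled on the inetpub/wwwroot example in the text.
WEB_ROOT = Path("/srv/inetpub/wwwroot")


def resolve_request(web_root: Path, requested: str) -> Path:
    """Map a requested URL path onto the filesystem, refusing any path that
    escapes web_root (for example '../../etc/passwd')."""
    root = web_root.resolve()
    # Strip leading slashes: joining an absolute path would discard the root.
    candidate = (root / requested.lstrip("/")).resolve()  # collapses '..' and follows symlinks
    if not candidate.is_relative_to(root):
        raise PermissionError(f"request escapes web root: {requested!r}")
    return candidate
```

Because the check runs on the resolved path, both `..` sequences and symbolic links pointing outside the root are caught. A request for `/index.html` maps inside the root, while `/../../etc/passwd` raises `PermissionError`.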

Asset Value

The asset value is a measurement of the time and resources needed to replace an asset or restore it to its former state. Cost of replacement is an equivalent term. A database server hosting client credit card information is assumed to have a higher value or cost of replacement than a workstation in a testing laboratory. Cost can also refer to the value of an organization's reputation, brand, or trust held by the public.

A Case Study on Risk

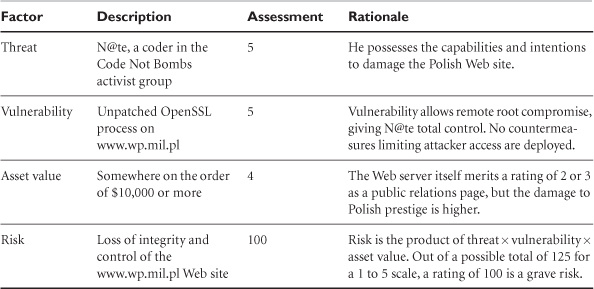

Putting these terms to work in an example, let's consider the risk to a public Web server operated by the Polish Ministry of Defense (www.wp.mil.pl). On September 3, 2003, Polish army forces assumed control of the Multinational Division Central South in Iraq. A hypothetical anti–Iraq war hacker group, Code Not Bombs, reads the press release at www.nato.int and is angry about Poland's involvement in the war.11 One of its young coders, N@te, wants to embarrass the Polish military by placing false news stories on the Ministry of Defense's Web site. He discovers that although www.wp.mil.pl is running Apache, its version of OpenSSL is old and subject to a buffer-overflow attack. If N@te so desired, he could accomplish his goal. The Polish military spends $10,000 (or the Polish equivalent) per year maintaining its Web server. Damage to national prestige from an attack would be several times greater.

When translating this story into a risk equation, it's fine to use an arbitrary numerical scheme to assign ratings to each factor. In this case, imagine that a 5 is a severe value, while a 1 is a minor value. Parsing this scenario using our terminology, we find the results shown in Table 1.1.

Table 1.1. Sample risk assessment for the Polish army Web server

What is the security of the Polish Web server? Remember our definition: Security is the process of maintaining an acceptable level of perceived risk. Assume first that the Polish military is unaware that anyone would think to harm its Web server. If the security administrators believe the threat to www.wp.mil.pl is zero, then their perceived risk of loss is zero. The Polish military organization assesses its Web server to be perfectly secure.
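The arithmetic behind this reasoning follows directly from the multiplicative risk equation. Under the 1-to-5 scale described above, the worst possible score is 5 × 5 × 5 = 125, and any factor perceived as zero drives the whole product to zero. In the sketch below, allowing a 0 rating for "factor judged absent" is an assumption, since the text only names 1 (minor) and 5 (severe). The sample ratings are placeholders, not the values in Table 1.1.

```python
# Assumption: 0 means "factor judged absent"; the text names only 1 (minor) and 5 (severe).
VALID_RATINGS = range(0, 6)


def risk(threat: int, vulnerability: int, asset_value: int) -> int:
    """risk = threat x vulnerability x asset value, each rated on a 0..5 scale."""
    for name, rating in (("threat", threat),
                         ("vulnerability", vulnerability),
                         ("asset value", asset_value)):
        if rating not in VALID_RATINGS:
            raise ValueError(f"{name} rating {rating!r} outside 0..5")
    return threat * vulnerability * asset_value


# Placeholder ratings for illustration only (NOT the Table 1.1 values).
print(risk(3, 4, 2))  # 24
print(risk(0, 4, 2))  # 0: no perceived threat yields no perceived risk
```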

Perception is a key to understanding security. Some people are quick to laugh when told, “The world changed on September 11th, 2001.” If the observer perceives little threat, then the risk is perceived to be low and the feeling of security is high. September 11th changed most people's assessment of threats to the American way of life, thereby changing their risk equations. For anyone inside the intelligence community, the world did not change on 9/11. The intelligence community already knew of thousands of potential evildoers and had spent years fighting to prevent harm to the United States.

Now assume the Polish military is aware that the computer underground detests the Polish army's participation in reconstructing Iraq. Once threats are identified, the presence of vulnerabilities takes on new importance. Threats are the key to security, yet most people concentrate on vulnerabilities. Researchers announce thousands of software vulnerabilities every year, yet perhaps only a few dozen are actually used for purposes of exploitation. Recognizing the parties that possess the capabilities and intentions to harm a target is more important than trying to patch every vulnerability published on the BugTraq mailing list.

By knowing who can hurt an organization and how they can do it, security staff can concentrate on addressing critical vulnerabilities first and leaving less severe holes for later. The Simple Network Management Protocol (SNMP) vulnerabilities published in February 2002 received a great deal of attention because most network devices offer management via SNMP.12 However, widespread exploitation of SNMP did not follow.13 Either malicious parties chose not to write code exploiting SNMP, or they did not possess evil intentions for targets operating vulnerable SNMP-enabled devices. (It's also quite possible that hundreds or thousands of SNMP-enabled devices, like routers, were quietly compromised. Routers tend to lie outside the view of many network-based intrusion detection products.)

Contrast that vulnerability with many of the discoveries made concerning Windows Remote Procedure Call (RPC) services in 2003.14 Intruders wrote dozens of exploits for Windows RPC services throughout 2003. Automated code like the Blaster worm exploited Windows RPC services and caused upward of a billion U.S. dollars in lost productivity and cleanup.15

Consider again the OpenSSL vulnerability in the Polish Web site. If the Poles are unaware of the existence of the vulnerability, they might assess the security of their Web site as high. Once they read BugTraq, however, they immediately change their perception and recognize the great risk to their server. Countermeasures are needed. Countermeasures are steps to limit the possibility of an incident or the effects of compromise, should N@te attack the Polish Web site. Countermeasures are not explicitly listed in the risk equation, but they do play a role in risk assessment. Applying countermeasures decreases the vulnerability rating, while the absence of countermeasures has the opposite effect. For example, restricting access to www.wp.mil.pl to parties possessing a digital certificate reduces the vulnerability profile of the Web server. Allowing only authorized Internet Protocol (IP) addresses to visit www.wp.mil.pl has a similar effect.
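The last countermeasure, admitting only authorized IP addresses, amounts to a membership test against a list of permitted networks. Here is a minimal sketch using Python's standard `ipaddress` module. The allowlist is hypothetical and uses RFC 5737 documentation prefixes; in practice the same logic usually lives in a firewall or Web server access rule rather than application code.

```python
import ipaddress

# Hypothetical allowlist built from RFC 5737 documentation prefixes.
AUTHORIZED = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_authorized(source: str) -> bool:
    """Return True if the client address falls inside an authorized network."""
    addr = ipaddress.ip_address(source)
    # Membership across IP versions (IPv6 address vs IPv4 network) is simply False.
    return any(addr in net for net in AUTHORIZED)


print(is_authorized("192.0.2.55"))   # True
print(is_authorized("203.0.113.9"))  # False
```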

Countermeasures can also be applied against the threat. They can act against the offending party's capabilities or intentions. If the Polish government makes a large financial contribution to Code Not Bombs, N@te might change his mind concerning Poland's role in Iraq. If Poland arrests N@te for his earlier compromise of another Web site, then Code Not Bombs has lost its primary cyber-weapon.

How do you assess risk when an attacker is not present? The Polish Web site could be hosted on an old 486-class system with a ten-year-old hard drive. Age is not a threat because old hardware is not an active entity with capabilities and intentions. It's better to think in terms of deficiencies, which are flaws or characteristics of an asset that result in failure without an attacker's involvement. Failures due to deficiencies can be considered risks, although reliability is the term more often associated with these sorts of problems.

Security Principles: Characteristics of the Intruder

With a common understanding of security terms, we must analyze certain assumptions held by those who practice NSM operations. If you accept these principles, the manner in which NSM is implemented will make sense. Some of these principles are accepted throughout the security community, and others could provoke heated debate. The first set of security principles, presented in this section, addresses the nature of the attacker.

Some Intruders Are Smarter Than You

Let's begin with the principle most likely to cause heartache. As Master Kan said in the pilot of the 1970s Kung Fu television series, “A wise man walks with his head bowed, humble like the dust.” However smart you are, however many years you've studied, however many defenses you've deployed, one day you will face a challenger who possesses more skill, more guile, and more cunning. Don't despair, for Master Kan also said, “[Your spirit] can defeat the power of another, no matter how great.” It is this spirit we shall return to when implementing NSM—plus a few helpful open source tools!

This principle doesn't mean all intruders are smarter. For every truly skilled attacker, there are thousands of wanna-be “script kiddies” whose knowledge extends no further than running precompiled exploits. NSM is designed to deal with the absolute worst-case scenario, where an evil mastermind decides to test your network's defenses. Once that situation is covered, everything else is easier to handle.

Many Intruders Are Unpredictable

Not only are some intruders smarter than you, but their activities cannot be predicted. Again, this discussion pertains more accurately to the highest-end attacker. Planning for the worst-case scenario will leave you much better prepared to watch the low-skilled teeming intruder masses bounce off your security walls. Defenders are always playing catch-up. No one talks about “zero-day defenses”; zero-day exploits are privately held programs coded to take advantage of vulnerabilities not known by the public. Vendors have not published patches for the vulnerabilities targeted by zero-day exploits.16

The best intruders save their exploits for the targets that truly matter. The fully patched remote access server offering nothing but the latest OpenSSH service could fall tomorrow. (That's why you can't tell your manager you'll “be secure” tomorrow.) The U.S. military follows the same principles. During the first Gulf War, munitions containing flexible metal strips disabled Iraqi power stations. This simple technique was supposedly kept a secret after chaff was mistakenly dropped on a power station in southern California, disrupting Orange County's electricity supply. Only during the aftermath of the first Gulf War did the technique become publicly acknowledged.17

Prevention Eventually Fails

If at least some intruders are smarter than you and their ways are unpredictable, they will find a way to penetrate your defenses. This means that at some point your preventative measures will fail. When you first accept the principle that prevention eventually fails, your worldview changes. Where once you saw happy, functional servers, all you see now are potential victims. You begin to think of all the information you need to scope and recover from the future intrusion.

Believing you will be a victim at some point in the future is like a nonswimmer planning for a white-water rafting trip. It's possible your boat won't flip, but shouldn't you learn to swim in case it (inevitably) does? If you don't believe the rafting analogy, think about jumping from an airplane. Preventing the failure of a skydiver's main chute is impossible; at some point, for some skydiver, it won't open. Skydivers mitigate this risk by jumping with a reserve chute.

This principle doesn't mean you should abandon your prevention efforts. Prevention remains an essential part of the security process, and it's always better to prevent an intrusion than to recover from one. Unfortunately, no security professional maintains a 1.000 batting average against intruders. Prevention is a necessary but not sufficient component of security.

Security Principles: Phases of Compromise

If we want to detect intrusions, we should understand the actions needed to compromise a target. The five phases described in this section—reconnaissance, exploitation, reinforcement, consolidation, and pillage—are not the only way for an intruder to take advantage of a victim.18 Figure 1.2 illustrates the locations in time and space where intruders may be detected as they compromise victims.

Figure 1.2. The five phases of compromise

The scenario outlined here and in Chapter 4 concentrates on attacks by outsiders, which are far more common than attacks by insiders. Attacks better suited to insiders, such as theft of proprietary information, are more devastating, but they are not as frequent as the incessant barrage perpetrated by outsiders, as we will see in the discussion in Chapter 2 of the CSI/FBI computer security survey.

Recognizing that intruders from the outside are a big problem for networked organizations, we should understand the actions that must be accomplished to gain unauthorized access. Some of the five phases that follow can be ignored or combined. Some intruders may augment these activities or dispense with them, according to their modus operandi and skill levels. Regardless, knowledge of these five phases of compromise provides a framework for understanding how and when to detect intrusions.
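Because phases can be skipped but, against a single victim, generally proceed in order, one way to reason about observed activity is to treat the phases as an ordered enumeration. The sketch below checks that phases seen against one victim never move backward and lists earlier phases that never appeared (for example, a worm that exploits without reconnaissance). The `Phase`, `in_order`, and `skipped` names are hypothetical. The sketch assumes events have already been grouped per victim, because pillage against a new target restarts the cycle for that target.

```python
from enum import IntEnum
from typing import List, Sequence


class Phase(IntEnum):
    RECONNAISSANCE = 1
    EXPLOITATION = 2
    REINFORCEMENT = 3
    CONSOLIDATION = 4
    PILLAGE = 5


def in_order(observed: Sequence[Phase]) -> bool:
    """True if phases observed against ONE victim never move backward."""
    return all(a <= b for a, b in zip(observed, observed[1:]))


def skipped(observed: Sequence[Phase]) -> List[Phase]:
    """Phases up to the latest observed one that never appeared."""
    if not observed:
        return []
    latest = max(observed)
    return [p for p in Phase if p <= latest and p not in observed]


worm = [Phase.EXPLOITATION, Phase.REINFORCEMENT, Phase.CONSOLIDATION]
print(in_order(worm))                   # True
print([p.name for p in skipped(worm)])  # ['RECONNAISSANCE']
```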

Reconnaissance

Reconnaissance is the process of validating connectivity, enumerating services, and checking for vulnerable applications. Intruders who verify the vulnerability of a service prior to exploitation have a greater likelihood of successfully exploiting a target. Structured threats typically select a specific victim and then perform reconnaissance to devise means of compromising their target. Reconnaissance helps structured threats plan their attacks in the most efficient and unobtrusive manner possible. Reconnaissance can be technical as well as nontechnical, such as gathering information from dumpsters or insiders willing to sell or share information.

Unstructured threats often dispense with reconnaissance. They scan blocks of IP addresses for systems offering the port for which they have an exploit. Offensive code in the 1990s tended to check victim systems to determine, at least on a basic level, whether the victim service was vulnerable to the chosen attack. For example, a black hat19 might code a worm for Microsoft Internet Information Server (IIS) that refused to waste its payload against Apache Web servers. In recent years, offensive code, especially code aimed at Windows systems, has largely dispensed with reconnaissance and simply launched exploits against services without checking for applicability. For example, SQL Slammer was fired at random targets, regardless of whether or not they were running the SQL resolution service on port 1434 User Datagram Protocol (UDP).

Sometimes the speed gained is worth forgoing reconnaissance. For a worm like W32/Blaster, a successful connection to port 135 Transmission Control Protocol (TCP) is itself a good indication the target is a Windows system because UNIX machines do not offer services on that port.20 An exception to this “fire and forget” trend was the Apache/mod_ssl worm, which performed a rudimentary check for vulnerable OpenSSL versions before launching its attack.21

Assume an intruder uses IP Address 1 as the source of the traffic used to profile the target. At this point the attacker probably has complete control (hereafter called root access) of his workstation but no control over the target. This limits his freedom of movement. To validate connectivity, he may send one or more “odd” or “stealth” packets and receive some sort of reply. When enumerating services, he may again rely on slightly out-of-specification packets and still receive satisfactory results.

However, to determine the version of an application, such as a Web server, he must speak the target's language and follow the target's rules. In most cases an intruder cannot identify the version of Microsoft's IIS or the Internet Software Consortium's Berkeley Internet Name Daemon (BIND) without exchanging valid TCP segments or UDP datagrams. The very nature of his reconnaissance activities will be visible to the monitor. Exceptions to this principle include performing reconnaissance over an encrypted channel, such as footprinting a Web server using Secure Sockets Layer (SSL) encryption on port 443 TCP.
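Version identification of a Web server shows why the intruder must play by the target's rules. To learn what the server reports, he must complete a TCP handshake and send a well-formed HTTP request, and that entire exchange is visible to a monitor. The sketch below is illustrative only. The helper names are hypothetical, it assumes a plain-text HTTP server that returns a `Server` header (many administrators suppress or falsify this header), and it should only be run against systems you are authorized to test.

```python
import socket
from typing import Optional


def parse_server_header(response: bytes) -> Optional[str]:
    """Extract the Server header value from a raw HTTP response, if present."""
    head = response.split(b"\r\n\r\n", 1)[0].decode("iso-8859-1")
    for line in head.split("\r\n")[1:]:  # skip the status line
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "server":
            return value.strip()
    return None


def grab_http_banner(host: str, port: int = 80, timeout: float = 5.0) -> Optional[str]:
    """Speak valid TCP and HTTP to learn what the server claims to be."""
    request = f"HEAD / HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(request)
        chunks = []
        while True:  # HTTP/1.0: the server closes the connection when done
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return parse_server_header(b"".join(chunks))


# Made-up sample response, parsed offline without touching the network.
sample = b"HTTP/1.1 200 OK\r\nServer: Apache/2.0.47 (Unix)\r\nContent-Length: 0\r\n\r\n"
print(parse_server_header(sample))  # Apache/2.0.47 (Unix)
```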

Many of the so-called stealthy reconnaissance techniques aren't so stealthy at all. Traditional stealth techniques manipulate TCP segment headers, especially the TCP flags (SYN, FIN, ACK, and so on), to evade unsophisticated detection methods. Modern intrusion detection systems (IDSs) easily detect out-of-specification segments. The best way for an intruder to conduct truly stealthy reconnaissance is to appear as normal traffic.
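Classic "stealth" scans stand out because no legitimate TCP stack produces their flag combinations. The sketch below flags a few well-known cases using the flag bit values from the TCP header definition: NULL (no flags), Xmas (FIN+PSH+URG), SYN together with FIN or RST, and a lone FIN without ACK. These rules are a simplified assumption for illustration. Real IDSs track connection state and apply many more checks.

```python
# TCP flag bit values from the TCP header (RFC 793 / RFC 9293).
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20


def classify_flags(flags: int) -> str:
    """Label a few well-known out-of-specification flag combinations."""
    flags &= 0x3F  # consider only the six classic flag bits
    if flags == 0:
        return "NULL scan (no flags set)"
    if flags == FIN | PSH | URG:
        return "Xmas scan (FIN+PSH+URG)"
    if flags & SYN and flags & FIN:
        return "SYN+FIN (contradictory)"
    if flags & SYN and flags & RST:
        return "SYN+RST (contradictory)"
    if flags == FIN:
        # After the handshake, legitimate segments carry ACK; a bare FIN does not.
        return "FIN without ACK (FIN scan)"
    return "no anomaly by these rules"


print(classify_flags(FIN | PSH | URG))  # Xmas scan (FIN+PSH+URG)
print(classify_flags(SYN))              # no anomaly by these rules
```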

Exploitation

Exploitation is the process of abusing, subverting, or breaching services on a target. Abuse of a service involves making illegitimate use of a legitimate mode of access. For example, an intruder might log in to a server over Telnet, Secure Shell, or Microsoft Terminal Services using a username and password stolen from another system. Subversion involves making a service perform in a manner not anticipated by its programmers. The designers of Microsoft's IIS Web server did not predict intruders would exploit the method by which Unicode characters were checked against security policies. This oversight led to the Web Server Folder Directory Traversal vulnerability described by CERT in 2000.22 To breach a service is to “break” it—to stop it from running and potentially to assume the level of privilege the process possessed prior to the breach. This differs from subversion, which does not interrupt service. Modern exploit code often restarts the exploited service, while the attacker makes use of the privileges assumed during the original breach.
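The IIS case is an instance of a general subversion pattern: a security check applied to input before that input is decoded into the form the program actually uses. The sketch below is a simplified analogue using ordinary percent-encoding. It is not a reproduction of the IIS flaw, which involved overlong Unicode (UTF-8) encodings, and both checker functions are hypothetical. The naive check inspects the raw string and misses `..%2f`, while the safer version decodes first and then checks.

```python
from urllib.parse import unquote


def naive_is_safe(raw_path: str) -> bool:
    # Flawed: inspects the path BEFORE decoding it.
    return "../" not in raw_path


def safer_is_safe(raw_path: str) -> bool:
    # Decode first, normalize backslashes, then check the canonical form.
    decoded = unquote(raw_path).replace("\\", "/")
    return "../" not in decoded


attack = "/scripts/..%2f..%2fwinnt/system32/cmd.exe"
print(naive_is_safe(attack))  # True  (the check is fooled)
print(safer_is_safe(attack))  # False (traversal detected after decoding)
```

Substring checks remain fragile even after decoding. Resolving the final filesystem path and confirming it stays under the intended directory is generally more robust.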

Like the enumeration of services phase of reconnaissance, delivery of an exploit normally takes place via everyday protocols. Because intruders still are in full control of only their workstations, with no influence over their targets prior to exploitation, intruders must speak proper protocols with their victims. Since the attackers must still follow the rules, you have a chance to detect their activities. In some cases the intruders need not follow any rules because vulnerable services die when confronted by unexpected data.

Limitations caused by encryption remain. Furthermore, the capability for IDSs to perform real-time detection (i.e., to generate an alert during exploitation) can be degraded by the use of novel or zero-day attacks. In this respect, the backward-looking network traffic audit approach used by NSM is helpful. Knowledgeable intruders will launch exploits from a new IP address (e.g., IP Address 2, in the case of our hypothetical intruder mentioned earlier).

Reinforcement

Reinforcement is the stage when intruders really flex their muscles. Reinforcement takes advantage of the initial mode of unauthorized access to gain additional capabilities on the target. While some exploits yield immediate remote root-level privileges, some provide only user-level access. The attackers must find a way to elevate their privileges and put those ill-gotten gains to work. At this point the intruders may have root control over both their own workstations and their victims' systems.

The intruders leverage their access on the victims to retrieve tools, perhaps using File Transfer Protocol (FTP) or Trivial FTP (TFTP). More advanced intruders use Secure Copy (SCP) or another encrypted derivative, subject to the limitations imposed by their current privilege levels. The most advanced intruders transfer their tools through the same socket used to exploit the victims.

In the case of our hypothetical intruder, when he retrieves his tools, they will be stored on a new system at IP Address 3. This is another machine under the intruder's control. The attacker's tools will contain applications to elevate privileges if necessary, remove host-based log entries, add unauthorized accounts, and disguise processes, files, and other evidence of his illegitimate presence.

Most significantly, skilled intruders will install a means to communicate with the outside world. Such covert channels range from simple encrypted tunnels to extremely complicated, patient, low-bandwidth signaling methods. Security professionals call these means of access back doors.

Consolidation

Consolidation occurs when the intruder communicates with the victim server via the back door. The back door could take the form of a listening service to which the intruder connects. It could also be a stateless system relying on sequences of specific fields in the IP, TCP, UDP, or other protocol headers. In our hypothetical case, IP Address 4 is the address of the intruder, or his agent, as he speaks with the victim. A second option involves the intruder's back door connecting outbound to the intruder's IP address. A third option causes the victim to call outbound to an Internet Relay Chat (IRC) channel, where the intruder issues instructions via IRC commands. Often the intruder verifies the reliability of his back door and then “runs silent,” not connecting to his victim for a short period of time. He'll return once he's satisfied no one has discovered his presence.
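The three back door options differ mainly in which side opens the connection and where it goes. This suggests a rough triage of connection records involving a known victim. The sketch below assumes each `Flow` records the connection initiator as `src`, assumes the victim is a Web server expected to listen only on ports 80 and 443, and treats port 6667 (a common IRC default) as IRC. All of these are illustrative assumptions. IRC can run on any port, and legitimate outbound traffic such as DNS would also be flagged, so these labels are leads for an analyst, not verdicts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src: str       # assumption: the side that initiated the connection
    dst: str
    dst_port: int


EXPECTED_LISTENING_PORTS = {80, 443}  # hypothetical: victim is a Web server
IRC_PORTS = {6667}                    # assumption: common IRC default port


def describe(flow: Flow, victim: str) -> str:
    """Roughly map a flow onto the back door options described in the text."""
    if flow.dst == victim:
        if flow.dst_port in EXPECTED_LISTENING_PORTS:
            return "inbound to an expected service"
        return "inbound to an unexpected listener (possible back door)"
    if flow.src == victim:
        if flow.dst_port in IRC_PORTS:
            return "outbound to IRC (possible command channel)"
        return "outbound connection initiated by the server (possible call-back)"
    return "not involving the victim"


victim = "192.0.2.10"  # documentation address
print(describe(Flow("203.0.113.4", victim, 31337), victim))
print(describe(Flow(victim, "203.0.113.4", 6667), victim))
```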

When covert channels are deployed, the ability of the analyst to detect such traffic can be sorely tested. Truly well-written covert channels appear to be normal traffic and may sometimes be detected only via laborious manual analysis of full content traffic. At this stage the intruder has complete control over his workstation and the target. The only limitations are those imposed by network devices filtering traffic between the two parties. This key insight will be discussed more fully in Chapter 3 when we discuss packet scrubbing.

You may wonder why an intruder needs to install a back door. If he can access a victim using an exploitation method, why alter the victim and give clues to his presence? Intruders deploy back doors because they cannot rely on their initial exploitation vector remaining available. First, the exploit may crash the service, requiring a reboot or process reinitialization. Second, the system administrator may eventually patch the vulnerable service. Third, another attacking party may exploit the victim and patch the vulnerable service; intruders often “secure” unpatched services to preserve their hold on victim servers. Finally, traffic to an established back door is less likely to attract attention from IDSs than repeated exploitation attempts.

Pillage

Pillage is the execution of the intruder's ultimate plan. This could involve stealing sensitive information, building a base for attacks deeper within the organization, or anything else the intruder desires. In many cases the intruder will be more visible to the network security analyst at this point, as attacking other systems may again begin with reconnaissance and exploitation steps. Unfortunately, intruders with a beachhead into an organization can frequently dispense with these actions. From the intruders' vantage point they may observe the behavior and traffic of legitimate users. The intruders can assume the users' identities by obtaining credentials and abusing privileges. Because most organizations focus their prevention and detection operations toward external intruders, an attacker already inside the castle walls may go largely unnoticed.

We can assess the chances of detection at each of the five phases of compromise. Table 1.2 highlights when and where detection can occur.

Table 1.2. Detecting intruders during the five phases of compromise

Throughout this book, we will examine intruder actions and the network traffic associated with those activities. Familiarity with these patterns enables defenders to apply their understanding across multiple protection and detection products. Like design patterns in software development, an understanding of intruder activities will bear more fruit than intimate knowledge of one or two exploits sure to become dated in the years to come.

Security Principles: Defensible Networks

I use the term defensible networks to describe enterprises that encourage, rather than frustrate, digital self-defense. Too many organizations lay cables and connect servers without giving a second thought to security consequences. They build infrastructures that no army of defenders could ever protect from an enemy. It's as if these organizations used chain-link fences for the roofs of their buildings and then wondered why their cleaning staff couldn't keep the floors dry.

This section describes traits possessed by defensible networks. As you might expect, defensible networks are the easiest to monitor using NSM principles. Many readers will sympathize with my suggestions but complain that their management disagrees. If I'm preaching to the choir, at least you have another hymn in your songbook to show to your management. After the fifth compromise in as many weeks, perhaps your boss will listen to your recommendations!

Defensible Networks Can Be Watched

This first principle implies that defensible networks give analysts the opportunity to observe traffic traversing the enterprise's networks. The network was designed with monitoring in mind, whether for security or, more likely, performance and health purposes. These organizations ensure every critical piece of network infrastructure is accessible and offers a way to see some aspects of the traffic passing through it. For example, engineers equip Cisco routers with the appropriate amount of random access memory (RAM) and the necessary version of Internetwork Operating System (IOS) to collect statistics and NetFlow data reflecting the sort of traffic carried by the device. Technicians deploy switches with Switched Port ANalyzer (SPAN) access in mind. If asymmetric routing is deployed at the network edge, engineers use devices capable of making sense of the mismatched traffic patterns. (This is a feature of the new Proventia series of IDS appliances announced by Internet Security Systems, Inc., in late 2003.) If the content of encrypted Web sessions must be analyzed, technicians attach IDSs to SSL accelerators that decrypt and reencrypt traffic on the fly.

A corollary of this principle is that defensible networks can be audited. “Accountants” can make records of the “transactions” occurring across and through the enterprise. Analysts can scrutinize these records for signs of misuse and intrusion. Network administrators can watch for signs of misconfiguration, saturation, or any other problems impeding performance. Networks that can be watched can also be baselined to determine what is normal and what is not. Technicians investigate deviations from normalcy to identify problems.
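Baselining can be as simple as recording how much traffic normally flows for some key and flagging large departures from that norm. The sketch below assumes hypothetical daily byte counts keyed by destination port and uses a mean and standard deviation model with an arbitrary three-sigma threshold; it is one illustration of the idea, not a recommended detection method.

```python
from statistics import mean, stdev

def build_baseline(history):
    """history maps a key (here, a destination port) to a list of daily byte counts."""
    # stdev() needs at least two samples, so keys with less history are skipped.
    return {key: (mean(v), stdev(v)) for key, v in history.items() if len(v) >= 2}

def deviations(baseline, today, threshold=3.0):
    """Return (key, reason) for counts more than `threshold` std devs from normal."""
    flagged = []
    for key, count in today.items():
        if key not in baseline:
            flagged.append((key, "never seen before"))
            continue
        mu, sigma = baseline[key]
        if sigma == 0:
            if count != mu:
                flagged.append((key, "changed from a constant value"))
        elif abs(count - mu) / sigma > threshold:
            flagged.append((key, f"{(count - mu) / sigma:+.1f} sigma"))
    return flagged

history = {80: [1000, 1100, 900, 1050, 950], 25: [200, 210, 190, 205, 195]}
today = {80: 1020, 25: 5000, 6667: 10}
print(deviations(build_baseline(history), today))
```

Port 80 stays within its normal range, while the jump in port 25 traffic and the first appearance of port 6667 are both handed to a technician for investigation.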

A second corollary is that defensible networks are inventoried. If you can watch everything, you should keep a list of what you see. The network inventory should account for all hosts, operating systems, services, application versions, and other relevant aspects of maintaining an enterprise network. You can't defend what you don't realize you possess.
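Watching the network and keeping an inventory reinforce each other: anything seen on the wire that the inventory does not account for is worth explaining. The sketch below reconciles observed hosts and services against a hypothetical `INVENTORY` dictionary; how the observations are collected (passive monitoring, scans, NetFlow) is left as an assumption.

```python
# Hypothetical inventory: host -> set of (protocol, port) services it should offer.
INVENTORY = {
    "10.1.1.5": {("tcp", 80), ("tcp", 443)},
    "10.1.1.6": {("tcp", 25)},
}

def reconcile(observed):
    """observed maps host -> set of (protocol, port) services seen on the network."""
    # Hosts that appear on the wire but are missing from the inventory entirely.
    unknown_hosts = sorted(set(observed) - set(INVENTORY))
    # Known hosts offering services the inventory does not list for them.
    unlisted_services = {
        host: sorted(services - INVENTORY[host])
        for host, services in observed.items()
        if host in INVENTORY and services - INVENTORY[host]
    }
    return unknown_hosts, unlisted_services

observed = {
    "10.1.1.5": {("tcp", 80), ("tcp", 443), ("tcp", 22)},
    "10.1.1.9": {("tcp", 3389)},
}
print(reconcile(observed))
```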

Defensible Networks Limit an Intruder's Freedom to Maneuver

This second principle means attackers are not given undue opportunity to roam across an enterprise and access any system they wish. This freedom to maneuver takes many forms. I've encountered far too many organizations whose entire enterprise consists of publicly routable IP addresses. The alternative, network address translation (NAT), maps a range of private addresses onto one or more public IP addresses. Internet purists feel this is an abomination because it has “broken” end-to-end connectivity. When multiple private addresses are hidden behind one or more public IPs, it's more difficult to directly reach internal hosts from the outside. The security benefits of NAT outweigh the purists' concerns about reachability. NAT makes the intruder's job far more difficult, at least until he or she compromises a host behind the router or firewall implementing NAT.
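The reachability point can be illustrated with a toy model of port address translation, the many-to-one form of NAT. Outbound connections create mappings; an unsolicited inbound packet finds no mapping and has nowhere to go. The `PatTable` class and its port numbering are hypothetical, and real NAT devices also track the remote endpoint, protocol, and timeouts, which this sketch omits.

```python
import itertools

class PatTable:
    """Toy port address translation: many private hosts share one public IP."""

    def __init__(self, public_ip, first_port=40000):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self._out = {}  # (private_ip, private_port) -> public_port
        self._in = {}   # public_port -> (private_ip, private_port)

    def outbound(self, private_ip, private_port):
        """Translate an outgoing connection, creating a mapping on first use."""
        key = (private_ip, private_port)
        if key not in self._out:
            public_port = next(self._next_port)
            self._out[key] = public_port
            self._in[public_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port):
        """Return the internal endpoint for a reply, or None if no mapping exists."""
        return self._in.get(public_port)

nat = PatTable("198.51.100.1")
print(nat.outbound("192.168.1.10", 51000))  # first internal host gets public port 40000
print(nat.outbound("192.168.1.11", 51000))  # second host, same source port, gets 40001
print(nat.inbound(40005))                   # unsolicited inbound traffic: no mapping
```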

Beyond directly limiting reachability of internal IP addresses, reducing an intruder's freedom to maneuver applies to the sorts of traffic he or she is allowed to pass across the enterprise's Internet gateways. Network administrators constantly battle with users and management to limit the number of protocols passed through firewalls. While inbound traffic filtering (sometimes called ingress filtering) is generally accepted as a sound security strategy, outbound filtering (or egress filtering) is still not the norm. Networks that deny all but the absolutely necessary inbound protocols reduce the opportunities for reconnaissance and exploitation. Networks that deny all but mission-critical outbound protocols reduce the chances of successful reinforcement and consolidation. These same sorts of restrictions should be applied to the IP addresses allowed to transit Internet gateways. An organization should not allow traffic spoofing Microsoft's address space to leave its enterprise, for example.
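A default-deny egress policy combines both restrictions described above: packets must carry a source address from the organization's own space (so spoofed traffic never leaves), and they must use an approved service. The sketch below expresses that decision in Python; the address block and the allowed-service list are hypothetical placeholders, and a real policy would live in the firewall's own rule language.

```python
import ipaddress

# Hypothetical values: substitute your own address space and approved services.
INTERNAL_NETS = [ipaddress.ip_network("192.0.2.0/24")]
ALLOWED_OUTBOUND = {("tcp", 80), ("tcp", 443), ("udp", 53)}

def egress_permitted(src_ip, proto, dst_port):
    """Default-deny egress: only our own source addresses, only approved services."""
    src = ipaddress.ip_address(src_ip)
    if not any(src in net for net in INTERNAL_NETS):
        return False  # source is outside our address space, so it must be spoofed
    return (proto, dst_port) in ALLOWED_OUTBOUND  # everything not listed is denied

print(egress_permitted("192.0.2.15", "tcp", 443))   # approved Web traffic
print(egress_permitted("192.0.2.15", "tcp", 6667))  # unapproved service
print(egress_permitted("203.0.113.5", "tcp", 443))  # spoofed source address
```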

An additional element of limiting an intruder's traffic involves scrubbing traffic that doesn't meet predefined norms. Scrubbing is also called normalization, which is the process of removing ambiguities in a traffic stream. Ambiguities take the form of fragmented packets, unusual combinations of TCP flags, low Time to Live (TTL) values, and other aspects of traffic. The concept was formally pioneered by Mark Handley and Vern Paxson in 2001.24 The OpenBSD firewall, Pf, offers an open source implementation of scrubbing. Chapter 3 describes how to set up an OpenBSD firewall running Pf and performing packet scrubbing. Traffic normalization reduces an intruder's ability to deploy certain types of covert channels that rely on manipulating packet headers.
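To make the idea of removing ambiguities concrete, the sketch below normalizes a simplified TCP packet record. It drops contradictory flag combinations and flagless packets, and it raises low TTL values to a floor so that a packet cannot be crafted to reach a monitoring sensor but expire before reaching its target. The `TcpPacket` record and `MIN_TTL` value are hypothetical; Pf's scrub rules provide comparable behavior in a real firewall, and real normalizers reassemble fragments rather than simply dropping them as this toy version does.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class TcpPacket:
    ttl: int
    flags: frozenset      # e.g. frozenset({"SYN"}) or frozenset({"ACK", "PSH"})
    more_fragments: bool  # IP "more fragments" bit
    frag_offset: int      # IP fragment offset

MIN_TTL = 64  # hypothetical floor chosen for illustration

def normalize(pkt: TcpPacket) -> Optional[TcpPacket]:
    """Return a cleaned packet, or None if the packet should be dropped."""
    if pkt.more_fragments or pkt.frag_offset:
        return None  # simplification: drop fragments instead of reassembling them
    if {"SYN", "FIN"} <= pkt.flags or {"SYN", "RST"} <= pkt.flags:
        return None  # contradictory flag combinations
    if not pkt.flags:
        return None  # "null" packet with no TCP flags set
    if pkt.ttl < MIN_TTL:
        pkt = replace(pkt, ttl=MIN_TTL)  # remove TTL-based ambiguity
    return pkt

print(normalize(TcpPacket(3, frozenset({"ACK"}), False, 0)))
print(normalize(TcpPacket(128, frozenset({"SYN", "FIN"}), False, 0)))
```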

Defensible Networks Offer a Minimum Number of Services

There's nothing mysterious about penetrating computers. Aside from certain vulnerabilities in applications that listen promiscuously (like Tcpdump and Snort), every remote server-side exploit must target an active service.25 It follows that disabling all unnecessary services improves the survivability of a network. An attacker with few services to exploit will lack the freedom to maneuver.
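Because a remote server-side exploit needs an active service, a first step toward minimizing services is simply finding out what is listening. The sketch below attempts TCP connections to a list of ports on a host you administer and reports any listener not on a business-justified `required` list. The function names and the `required` parameter are hypothetical, and the check covers TCP only; UDP services need other techniques.

```python
import socket

def open_tcp_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            if s.connect_ex((host, port)) == 0:  # 0 means the connection succeeded
                found.append(port)
    return found

def unnecessary_services(host, ports, required):
    """Ports that are listening but not on the business-justified `required` list."""
    return [p for p in open_tcp_ports(host, ports) if p not in required]

# Example: a throwaway listener stands in for an unneeded service on this machine.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()
port = listener.getsockname()[1]
print(unnecessary_services("127.0.0.1", [port], required=set()))
listener.close()
```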

Where possible, deploy operating systems that allow minimal installations, such as the various BSD distributions. An intruder who gains local access should find a system running with the bare necessities required to accomplish the business's objectives. A system without a compiler can frustrate an intruder who needs to transform source code into an exploit. Consider using operating systems that provide services within a “jail,” a restricted environment designed to operate exposed network services.26

Defensible Networks Can Be Kept Current

This principle refers to the fact that well-administered networks can be patched against newly discovered vulnerabilities. Although I'm not a big fan of Microsoft's products, I must advocate upgrading to its latest and greatest software offerings. What's the latest patch for Windows NT 4.0? It's called Windows Server 2003. This is no joke. Microsoft and other vendors retire old code for a reason. Flaws in the design or common implementations of older products eventually render them unusable, where “unusable” means “not capable of being defended.” Some might argue that certain code, like Plan 9, doesn't need to be abandoned for newer versions. Using sufficiently old code also reduces the number of people familiar with it: you'd be hard pressed to find someone active in the modern computer underground who could exploit software from ten or twenty years ago.

Most intrusions I've encountered on incident response engagements were the result of exploitation of known vulnerabilities. They were not caused by zero-day exploits, and the vulnerabilities were typically attacked months after the vendor released a patch. Old systems or vulnerable services should have an upgrade or retirement plan. The modern Internet is no place for a system that can't defend itself.
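Since most compromises exploit flaws for which a patch already exists, it helps to know how long each host has been exposed. The sketch below compares an inventory of installed versions with advisory data and reports unpatched hosts along with the number of days since the fix was released. The `ADVISORIES` entry, the product name `exampled`, and the dotted version format are hypothetical.

```python
from datetime import date

# Hypothetical advisory data: product -> (first fixed version, patch release date).
ADVISORIES = {
    "exampled": ((2, 4, 1), date(2003, 6, 1)),
}

def parse_version(text):
    """Turn a dotted version string such as "2.4.0" into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))

def unpatched(inventory, today):
    """Yield (host, product, days since patch) for hosts below the fixed version."""
    for host, product, version in inventory:
        if product not in ADVISORIES:
            continue  # no known advisory for this product
        fixed, released = ADVISORIES[product]
        if parse_version(version) < fixed:
            yield host, product, (today - released).days

inventory = [
    ("10.1.1.5", "exampled", "2.4.0"),  # vulnerable
    ("10.1.1.6", "exampled", "2.4.1"),  # patched
    ("10.1.1.7", "otherd", "1.0"),      # no advisory
]
print(list(unpatched(inventory, date(2003, 9, 1))))
```

Sorting such a report by days of exposure is one way to decide which systems need an upgrade or retirement plan first.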

Conclusion

This chapter introduced the security principles on which NSM is based. NSM is the collection, analysis, and escalation of indications and warnings to detect and respond to intrusions, a topic more fully explained in Chapter 2. Security is the process of maintaining an acceptable level of perceived risk; it is a process, not an end state. Risk is the possibility of suffering harm or loss, a measure of danger to an asset. To minimize risk, defenders must remain ever vigilant by implementing assessment, protection, detection, and response procedures.

Intruders bring their own characteristics to the risk equation. Some of them are smarter than the defenders they oppose. Intruders are often unpredictable, which contributes to the recognition that prevention inevitably fails. Thankfully, defenders have a chance of detecting intruders who communicate with systems they compromise. During reconnaissance, exploitation, reinforcement, consolidation, or pillage, an intruder will most likely provide some form of network-based evidence worthy of investigation by a defender.

Successfully implementing a security process requires maintaining a network capable of being defended. So-called defensible networks can be watched and kept up to date. Defensible networks limit an intruder's freedom to maneuver and present the fewest potential targets by minimizing unnecessary services.

With this understanding of fundamental security principles, we can now more fully explore the meaning and implications of NSM.