Chapter 13. Wrapping up: putting the project away

This chapter covers

- Cleaning up, documenting, and storing project materials

- Making sure you or someone else can find and restart the project later

- Project postmortem reflection and lessons

- A project as data science experience and its products as tools

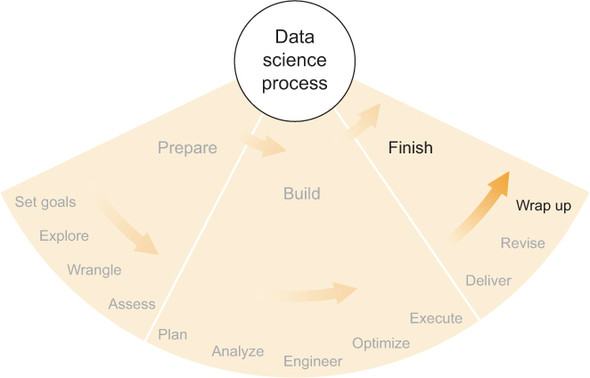

Figure 13.1 shows where we are in the data science process: wrapping up the project. As a project in data science comes to an end, it can seem like all the work has been done, and all that remains is to fix any remaining bugs or other problems before you can stop thinking about the project entirely and move on to the next one (continued product support and improvement notwithstanding). But before calling the project done, there are some things you can do to increase your chances of success in the future, whether with an extension of this same project or with a completely different project.

Figure 13.1. The final step of the finishing phase and the final step overall of the data science process: wrapping up the project neatly

There are two ways in which doing something now could increase your chances of success in the future. One is to make sure that you can easily pick up this project again at any point and redo it, extend it, or modify it. That improves your chances of success in a follow-on project, compared with digging up your project materials and code months or years from now and finding that you don’t remember exactly what you did or how you did it. The other is to learn as much as possible from this project and carry that knowledge with you into every future project. This chapter focuses on those two things: packing away a project neatly and reusably, and learning as much as possible from the project as a whole.

13.1. Putting the project away neatly

It can be tempting to put a project out of your mind after your to-do list is done and there are no more tasks on the horizon. You usually have other projects pending, and you’d rather use your time to make progress with those instead of dwelling on a project that has been completed. But there are three future scenarios, among others, in which you’ll be happier if you take the time now to make sure project materials are organized and in a safe place:

- Someone has a question about the project, its data, its methods, or its results.

- You begin an extension or a new project based on this project.

- A colleague begins an extension or new project based on this project.

None of these cases appears daunting if it occurs immediately after the project in question. But if some time has passed—months or years—it becomes increasingly challenging to remember details. If you can’t remember them, you’ll need to consult the project materials to find answers. How much time and effort it takes you to do this depends entirely on how organized you were when wrapping up the project.

In this section, I’ll discuss thinking ahead to these future scenarios, but first I’ll cover the two practical concepts most relevant to putting a project away neatly: documentation and storage. To save yourself time and effort in the future scenarios I mentioned, you should make sure your project materials are easy to find (storage) and easy to understand (documentation).

13.1.1. Documentation

Project documentation can be thought of in three levels, depending on who might use it and how much technical expertise is required to understand it. Customers or users see the top-level documentation, whereas only software engineers building the product generally see the bottom-most level of documentation, and in the middle is technical documentation for folks who need to know how the software works but won’t need to modify it. Much like in designing and building a product, knowing your audience is helpful in creating documentation.

User documentation

The top level, user documentation, is what a customer would see in the product or in materials accompanying the product. This includes any reports, results summaries, and text and descriptions in any applications you built and delivered. User documentation should contain whatever information is necessary for someone to use your product in the way you intended. It’s the conceptual equivalent of the information needed to drive a car, without any reference to car maintenance or how to build a car.

User documentation might include the following:

- How to use the product

- The product’s main capabilities and limitations

- Any non-obvious information that might help the user

- Warnings against using the product in ways known to cause problems

Typically, user documentation appears either in the product itself or in materials that accompany the product. Some common forms of user documentation include the following:

- A help page within a web application

- A written document that’s given to all users explaining how to use the product

- A wiki or other resource provided for users

In general, the scope of user documentation doesn’t extend past the user interface or anything else that wasn’t explicitly intended for the customers and users to see. Information about how the product works behind the scenes should be reserved for lower-level documentation.

Developer documentation

The middle level, developer documentation, consists of information that a software developer would want to know if they were going to integrate with or programmatically use the product, or if they were going to build something similar themselves. Developer documentation should describe architecture and interfaces without delving too much into specific software implementations. It’s a complete surface-level description plus a conceptual internal description, of the same nature as the information needed to perform an oil change or replace the brakes on a car, without being too concerned with how the car was built. When changing the oil, it might be helpful to know that there is an engine inside the car and that the engine is connected to the gas tank, but the specific construction of these—including timing belts, pistons, valves, and more—is largely irrelevant to the task.

Developer documentation might include the following:

- Thorough descriptions of APIs and other points of integration

- Specific statistical methods that were implemented

- High-level descriptions of software architecture or object structure

- Data inputs and outputs, with content and format descriptions

Developer documentation doesn’t usually appear within the product itself, except when the product’s users are software developers. Here are some common forms of developer documentation:

- A wiki, a Javadoc, a pydoc-generated document, or other document describing an API

- A README file in the code repository

- A diagram or other description of the software architecture or object model

- A technical report describing the statistical methods used

- A written or graphical description of data sources and formats

- Examples of code that integrates or programmatically uses the software product

In general, developer documentation, as I’m using the term here, describes the inner workings of the software product or methods without necessarily getting into the product code and detailed structure.

Code documentation

The lowest level of documentation, code documentation, tells a software developer on the product development or maintenance team how the code works at the lowest level, so that they can fix bugs, make improvements, or extend the capabilities of the product. In the context of my earlier automotive comparisons, the equivalent of code documentation explains how to fix, disassemble, reassemble, and tune any part of the car, inside and out. A funny but apt aspect of this comparison is that this low-level documentation says nothing about how to drive the car. Driving a car and fixing a car require nearly mutually exclusive sets of knowledge, though admittedly they aid one another. The same is true for code documentation and user documentation. There may be a little overlap, but generally speaking they’ll be different.

Code documentation may include the following:

- Descriptions of objects, methods, functions, inheritance, usage, and so on

- Highly detailed descriptions of object structure and architecture

- Explanations about why certain implementation choices were made

Code documentation usually accompanies the code itself or is somewhere close by. Some common forms of code documentation are these:

- Comments in the code alongside the thing they’re describing

- READMEs or other documents in the code repo

- Software architectural diagrams

- Documentation generated automatically using Javadoc, pydoc, or other similar application

In general, code documentation should provide enough information for any developer on the product team to navigate, understand, and work with the code base in a reasonably efficient manner. Without good code documentation, such a developer would have to figure out how the code works the hard way: by reading the code itself. For sufficiently complex software products or code that isn’t inherently readable, this is a rabbit hole no one wants to go down.
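As a rough illustration of code documentation, here is a minimal sketch in Python. The function `predict_score`, its additive baseline, and the 0-to-5 rating scale are all hypothetical and chosen only for the example. The docstring describes what the function does and how to call it, while the inline comment explains why an implementation choice was made.

```python
import pydoc


def predict_score(user_mean: float, beer_mean: float, global_mean: float) -> float:
    """Predict the score a user would give a beer.

    Hypothetical baseline: start from the global mean rating and add the
    user's and the beer's offsets from that mean.

    Args:
        user_mean: Mean of all ratings given by this user.
        beer_mean: Mean of all ratings received by this beer.
        global_mean: Mean of all ratings in the data set.

    Returns:
        The predicted score, clamped to the assumed 0.0-5.0 rating scale.
    """
    raw = global_mean + (user_mean - global_mean) + (beer_mean - global_mean)
    # Why clamp: the additive baseline can overshoot the rating scale when a
    # generous user meets a highly rated beer, and callers are assumed to
    # expect scores that stay within the scale.
    return max(0.0, min(5.0, raw))


if __name__ == "__main__":
    # pydoc turns the docstring into plain-text reference documentation.
    print(pydoc.render_doc(predict_score, renderer=pydoc.plaintext))
```

Running the file prints the reference text that pydoc builds from the docstring. The “why” comment stays in the code, next to the line it explains.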

13.1.2. Storage

By storage, I mean the ways in which you might store all material—code, documentation, data, results, and the like. More convenient and navigable locations make it easier to find answers to questions that pop up later or to revive the project entirely if need be. In addition, safer, more reliable locations help ensure the continued existence of the materials well into the future.

In this section, I consider both the location in which the materials are stored and the format in which they’re stored. Because I’m mixing these two concepts, the alternatives listed might not be direct replacements for one another, but it’s often difficult to separate the location from the format—for example, with remote Git repos—so I outline the capabilities of each so that it’s clear which alternatives can be used for what purpose.

Local drive

Usually the easiest place to store your code, data, and other files, your local computer is a reasonable choice for projects that aren’t that important. But there are serious risks and limitations when you’re using only one machine and not backing up your files anywhere else. See table 13.1 for a summary of when and why to use a local drive.

Table 13.1. Benefits and risks of using a local drive for storage

| Advantages | Disadvantages | Best for |
|---|---|---|
| Easy; Convenient; Can store any file formats; You have complete control. | Limited disk space; Single point of failure or loss in case you break or lose the computer; No one else can access it without you. | Personal projects that are easy to redo; Whenever you have a backup somewhere else |

Network drive

A shared network drive at your place of work is often bigger than your local computer’s drive, and it may also have regular backups to another location by the IT department. Usually you can connect to such a drive either by logging into the system or by using a computer that has been explicitly connected to the network and the drive. See table 13.2 for a summary of when and why to use a network drive.

Table 13.2. Benefits and risks of using a network drive for storage

| Advantages | Disadvantages | Best for |
|---|---|---|
| Can store any file formats; Managed internally, for example, by an IT department; There is often plenty of space; Other people can access your files if you choose. | Limited access; There may be a single point of failure if not backed up elsewhere; Can get disorganized if many people are using it | Projects shared between people; Saving all of your files in one place without extra work |

Code repository

I’ve mentioned source code repositories previously in this book, in conjunction with version control tools like Git. For anything but the most trivial coding projects, committing your code to a repo and pushing it to a remote location is strongly recommended. You or your organization can manage your own repo server, or you can use one of the popular web-based remote repo providers such as Bitbucket or GitHub. Not only does this provide a duplicate, managed location for your code to reside in, but it also provides a central location for viewing and sharing the code. See table 13.3 for a summary of when and why to use a code repository.

Table 13.3. Benefits and risks of using a code repository for storage

| Advantages | Disadvantages | Best for |
|---|---|---|
| Great for sharing code and working on it together; Remote servers function as both version control and as a backup copy. | It’s not great for anything but code and other plain-text-based file formats; Repo software like Git can take some time to learn. | All code; Plain-text-based files; Whenever the history of changes may be important |

READMEs

Though not technically a location or a format, a README is a text-based file that accompanies an application or code, typically residing in the same folder. Its format is generally some kind of markup language that can be read directly in any text editor or by an interpreter of the markup language. There can be multiple READMEs, one in each folder in the code or project structure, each describing what’s in that folder. They can be committed to code repos as well, and both Bitbucket and GitHub provide nice facilities for viewing READMEs in certain formats (for example, the Markdown markup language, among others) directly in a web browser. I highly recommend providing READMEs with your code, including important developer- and code-level documentation. See table 13.4 for a summary of when and why to use READMEs.

Table 13.4. Benefits and risks of using READMEs

| Advantages | Disadvantages | Best for |
|---|---|---|
| Lightweight format; Resides in the same place as code; Saved in all the same places as the code; Markup languages enable reasonably good formatting. | The text-based format isn’t as flexible as wikis and other more sophisticated document types; It’s part of the code base and not that shareable without including code. | Documentation that isn’t within the code itself but should always accompany the code |

Wiki system

Wikis are web-based systems designed for multiple, shared, interrelated documents that may be updated and expanded repeatedly. Wikipedia is the canonical example of a large wiki. Documents can be related to each other through links, and good wiki systems update all relevant links whenever a document’s location changes, something you’d have to do by hand if you tried to use a less-sophisticated documentation system. See table 13.5 for a summary of when and why to use a wiki system.

Table 13.5. Benefits and risks of using a wiki system for documentation and storage

| Advantages | Disadvantages | Best for |
|---|---|---|
| Handles links and references between documents better than other media; Wiki markup languages allow fairly sophisticated formatting and page structure. | They can be tedious to set up and manage; Few good wiki products are free to use; Without active maintenance, wikis can get disorganized or cluttered. | Extensive non-code documentation with many links and references between documents; Documents requiring more formatting or structure than plain text generally provides |

Web-based document hosting

Google (Drive/Docs) and Microsoft (Office 365), among others, offer online document and file hosting. These services can be helpful for organizing files and documentation, and they offer a browser-based document editor that’s accessible from any computer. The documents hosted are generally in a few familiar formats: word-processing-style documents, spreadsheets, diagrams, graphics, and so on. You may also be able to upload other file types but not work with them through a browser. In that way, these services can act as a plain remote backup server that’s managed by the hosting company. See table 13.6 for a summary of when and why to use web-based document hosting.

Table 13.6. Benefits and risks of using web-based document hosting for storage

| Advantages | Disadvantages | Best for |
|---|---|---|
| Sophisticated word processing and spreadsheets; Handles many file types; Collaborative editing; The major companies offer near-flawless reliability. | Not good for storing code; Can get messy without some effort toward organization; These systems have some quirks, for example, in the conversion from file to web version and vice versa. | Documents of the sort you might want to print or turn into a PDF; Spreadsheets, diagrams, graphics, and some other specialized file types; Printable user documentation |

13.1.3. Thinking ahead to future scenarios

Now that I’ve talked about some available types of documentation and storage, I’ll return to the three future scenarios I listed at the beginning of this section and discuss the implications of each on the choices you might make at the end of a project.

Someone has a question about the project

If you finished a project a while ago and someone comes to you with a question about the data, methods, or results and you don’t know the answer offhand, you’ll have to go fishing through the old materials. The questions to ask yourself, in order, are these:

- Did you save the relevant materials somewhere?

- If yes, can you find those materials now?

- If you find the materials, can you find the answer to the question within them?

For the first question, let’s hope the answer is yes. If you didn’t save the materials, then you’ll have little hope of answering the question. The lesson here is that you should always save your project materials in a safe place whenever there’s any chance you’ll need them in the future.

Question 2 deals with your ability to find everything you need to answer the question. Beyond the fact that you did save the materials, hopefully you saved them in a place that you remember (or can locate) and that’s relatively convenient to access. If not, that’s something to think about the next time you’re finishing a project. Consider choosing one of the storage options I mentioned previously or another that has the reliability and convenience for keeping your project materials safe for years to come.

The organization and documentation of your materials is the crux of the third question. If all you have is a pile of files in your project folders and no document that describes them, you might have a tough time finding what you’re looking for. Next time, consider using a storage location and format that are appropriate for the types of materials you have. In addition, good user and developer documentation is crucial to being able to navigate project materials and to find definitive answers about details of the project. Without such documentation, some details of the project are bound to be forgotten. Some of them can be inferred from code and tangential details, but some can’t. To avoid loss of significant knowledge, it’s best to capture whatever knowledge you can in documentation at the end of a project. Not only might it save you time and effort, but it may also ensure that customers and others don’t lose faith in your work if they come back to you with questions later.

You begin an extension or a new project based on this project

As in the previous scenario, in which someone asks you a question about the completed project, this scenario requires that you can find and sort through all of your old materials. But here you’ll need to be able to understand the materials on a deeper level. You’ll need to understand the code or other aspects of the statistical software well enough to modify it or integrate something with it. This is a much bigger challenge than figuring out what was done and what the results were.

Specifically, knowing what code does and knowing how it does it are different things. If you’re going to work with existing code, you’ll need to know how the code works—or at least how part of the code works. User and developer documentation won’t help much, but code documentation will. Without good code documentation or a reliable memory of how the code works, you’ll have to read the code and decipher it yourself.

When finishing up a project, put yourself in the shoes of your future self and ask, “What parts of the code and software functionality might I find confusing in a year or two?” Be generous in your answer. Some things that seem obvious now might not seem obvious after you’ve been away from the project for a while.

At the least, you should go through each of the major parts of the code and document the first things that jump out at you as possibly becoming confusing later. A detailed set of instructions on how to use the product as well as a description of the overall software architecture can also be immensely useful.

A colleague begins an extension or new project based on this project

If you can imagine what it would be like for you to revive your project at some point in the not-so-near future, think what it would be like for someone else to do the same—without you. What would happen then? In my experience, they would falter in the same points that you would, plus a few more because they weren’t working on the project the first time around.

Put yourself in their shoes. What would they need to know in order to follow in your footsteps? You could comb through your code, find any examples of code that might be confusing, and write a comment about why you did it that way. That’s the brute-force method, and it’s not necessarily recommended unless your code is exceptionally hard to read or you have a ton of time. More efficient and probably more helpful would be to think about which parts of the software are novel and would seem confusing to a completely unfamiliar eye. Did you do anything unconventional, such as an unusual coding style, complex statistical methods, or algorithmic tricks that improve performance in a non-obvious way? If so, you should document it.

Documenting your work for someone else is a far more thorough affair than documenting it for yourself. You have to think beyond your own conventions and your own perspectives. It might be a new colleague who is tasked with taking over the project. Maybe they know almost nothing about the history of the project, and they’re suddenly supposed to understand it all and make some use of it.

Like working on the user experience of a piece of software, creating documentation is an exercise in empathy. If you can imagine what someone might not understand about the project and the code, you can write much better documentation on all levels by helping them understand. Imagine any software developers at your current employer—senior and junior—the data scientists who might come after you, the person at the next desk over; these people have any number of reasons not to understand your project, your documentation, or your code. But if you can put yourself in their shoes, you can begin to see which parts of your work they might not understand, and you can create the documentation that addresses that. Write the documentation—user, developer, and code—as if you were never going to return to the project, but someone else will. Create the documentation that they would need to continue with it without your input. If someone can take over the project without you helping, based on your documentation, you’ve succeeded.

13.1.4. Best practices

I take the following steps when documenting and storing project materials:

- Skim the code, editing for organization and readability and adding comments for my future self and for others who might have to read it.

- Write high-level READMEs for each major component of the code, explaining basic functionality and usage; include these with the code.

- Commit the code to a Git repo and push it to a remote host such as Bitbucket.

- Collect all project results, reports, and other non-code materials, and place them in a shared, reliable storage location; also include raw data if it’s not too big.

- Leave myself breadcrumbs to help find all the pieces again; for example, the main README could contain links to the locations of all the materials. Or I sometimes email myself all the information so that all links are in one place.

- If I’m working on a team, I document the location of all the materials on the team’s main documentation system, such as a shared wiki, where everyone can find it easily.
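The breadcrumbs step can be partly automated. The following is a minimal sketch, not a prescribed tool: the function `build_manifest` and the example locations are hypothetical, and the output is Markdown text that you could paste into the main README. It lists the top-level contents of the project folder and then the places where non-code materials are stored.

```python
from pathlib import Path


def build_manifest(project_root: Path, locations: dict[str, str]) -> str:
    """Return Markdown listing top-level project entries and external locations."""
    lines = ["## Where everything lives", "", "### In this repository", ""]
    for entry in sorted(project_root.iterdir()):
        if entry.name.startswith("."):
            continue  # skip hidden entries such as .git
        kind = "folder" if entry.is_dir() else "file"
        lines.append(f"- `{entry.name}` ({kind})")
    lines += ["", "### Stored elsewhere", ""]
    for label, where in locations.items():
        lines.append(f"- {label}: {where}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical locations; replace them with the real ones for your project.
    external = {
        "Final report": "team shared drive, project reports folder",
        "Raw data": "network drive, project data folder",
    }
    print(build_manifest(Path("."), external))
```

Because the manifest is generated rather than typed by hand, it’s cheap to regenerate whenever materials move, which helps keep the breadcrumbs from going stale.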

Much of this chapter was written as if documentation and storage were something you handle at the end of a project. It’s better and easier (in the long run) to concern yourself with both starting from day one of the project. Documentation in particular will most likely be far better if you’ve been documenting things from the beginning. Here are a few things that I do throughout every project to improve the state of documentation and storage at the end:

- I commit all code and push it to a remote host as soon as the code is nontrivial.

- I don’t write user or developer documentation until I’m fairly certain it won’t need to be completely rewritten in the near future (documentation has a nasty habit of becoming obsolete).

- When I’m fairly certain that the user and developer experiences are stable, I write quick-and-dirty documentation for them.

- Every time I’m reading my code and I find a section that confuses me, even a little, I write a comment explaining the confusing part to myself.

- I put user and developer documentation either into READMEs or on a wiki, depending on their complexity and the other particulars of the situation.

Following these general guidelines has saved me much time and effort whenever I’ve had to return to an old project.

13.2. Learning from the project

I’ve covered some ways in which you can improve your chances of success if at some point in the future you have to revisit that project. Obviously, knowledge you gain from a project will help if you revisit that same project. But that same knowledge can also help you with other data science projects. To that end, it can be helpful to formally consider all the things you’ve learned from the project and then extract from these things some new knowledge that’s applicable not only to this project.

Such knowledge might concern the technology you used. Was a piece of software better or worse than you expected? Did you use infrastructure that ended up causing you problems? The new knowledge could also relate to surprises you may have found in the data. Were your preliminary analyses and descriptive statistics thorough enough? Did your software crash because of unexpected values in data? In any case, there are things that you’ve learned and probably things that you’d do differently if you started the project again. Some of these are project specific, and some of them can be treated as lessons to be applied in future projects. By conducting a project postmortem, you can hope to tease out the useful lessons from the rest.

13.2.1. Project postmortem

A project postmortem is a formal consideration, after the project is over, of everything that happened during the course of the project, with the intent of learning some things that will help in future projects.

A funny thing about memory is that if you know a particular fact now, it can be hard to remember what it was like before you knew that fact. Figuring out what you’ve learned since the beginning of a project is not always easy. If you’ve been making notes throughout, though, the task can be much easier. In particular, the goals you set and the plan you made way back in chapter 6 can now provide a lot of insight into what you knew and what you thought you knew back then. Chapter 2 framed some questions whose answers would fulfill some fundamental goals of the project; these can be helpful as well.

Review the old goals

The goals that you stated in chapters 2 or 6 were based on the knowledge you had back then; you had no choice. If you can’t remember what you didn’t know then, maybe your goals from back then will betray your ignorance.

Let’s revisit the beer-recommendation algorithm project from chapters 2 and 6. The main goal was to be able to recommend beers a user of the application would like, and to do that, you’d have to predict the score that a user of the application would give a particular beer they tried. Assume that you stated specific goals of achieving 90% accuracy on recommendations and a 10% standard error in score predictions and that you achieved neither of these.

What are some reasons why you failed to achieve those goals? One possibility is that the data set wasn’t large enough or was too sparse to support such high accuracy. Perhaps there were hundreds of beers and hundreds of users, but the typical user had rated only 10–20 beers. It would be hard to predict the rating of a beer that few people had rated. Another possibility is that human taste and preference have a large variance and are inherently not predictable to the degree stated. Research supports this theory to an extent: unrecorded factors such as the context in which the person tried the beer and what they ate or drank beforehand can influence ratings beyond the 10% error rate you were striving for.
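To make the sparsity concern concrete, here is a back-of-the-envelope sketch. The specific numbers are assumptions: the scenario says only “hundreds” of beers and users, so I use 300 of each, with 15 ratings per user as the midpoint of the 10–20 range. The function name `rating_sparsity` is likewise hypothetical.

```python
def rating_sparsity(n_users: int, n_beers: int, ratings_per_user: int) -> dict:
    """Summarize how thinly a set of ratings covers all user-beer pairs."""
    total_ratings = n_users * ratings_per_user
    possible_pairs = n_users * n_beers
    return {
        "total_ratings": total_ratings,
        # Fraction of all user-beer pairs that actually have a rating.
        "density": total_ratings / possible_pairs,
        # Mean number of ratings each beer receives.
        "avg_ratings_per_beer": total_ratings / n_beers,
    }


if __name__ == "__main__":
    # Assumed sizes: 300 users, 300 beers, 15 ratings per user.
    summary = rating_sparsity(n_users=300, n_beers=300, ratings_per_user=15)
    print(summary)
```

Under these assumed numbers, only 5% of all user-beer pairs have a rating, and each beer has 15 ratings on average. Ratings are rarely spread evenly, so many beers would likely have fewer than that, which is where score predictions become least reliable.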

In any case, every goal that wasn’t achieved has some main suspects for its demise. Pinning down a few of these can show you what you didn’t know before and hence what you’ve learned since you stated the goal. Can what you’ve learned be applied to future data science projects? In the case where the data was too sparse, you might be more wary in the future of the promises of data sets and be less optimistic about the accuracy of results until you’ve proven them possible. In the case where you realized that human preference is fickle, you learned that some factors can’t—or won’t—be measured such that you can make use of them, and these factors can limit your accuracy and success. In future projects, you might be less ambitious or at least acknowledge the possibility that inherent and insurmountable variances might prevent you from achieving the goals.

Review the old plan

Like old goals, an old plan can tell you a lot about what you knew and what you were thinking at the time you made it. It can be helpful to look at it again to filter out what you’ve learned since then and again to consider whether what you’ve learned constitutes a lesson that you can carry into future projects.

Consider the plan for the beer-recommendation algorithm project that appears in figure 6.2 of chapter 6. This plan has two outcomes: either the accuracy goals were met or they weren’t, and the user interface would depend on that outcome. One aspect of that plan that now seems a bit naïve is that it seems to imply that accuracy of the algorithm is by far the most important thing in the application. The entire product design depends on the accuracy achieved by the algorithm. There’s nothing inherently wrong with this perspective, but it relies heavily on the data and the math. Good algorithms don’t guarantee that users will show up in the application, and maybe there’s another option that can make the application a success without requiring high accuracy predictions.

If the main goal of the project is to make an app that engages users and keeps them coming back, then it may not be entirely necessary for the algorithm to have near-flawless accuracy. Perhaps there’s another way to engage users, and it might become obvious after releasing the app, but it wasn’t obvious before. A more flexible plan that included redesigning the app as an option might have been a better choice. Market research wasn’t part of the plan, but maybe it should have been; you can carry that lesson into future projects.

Review your technology choices

Beyond deviations from the goals and plans that you set earlier in the project, you may have learned something new about the technologies that you chose to use. Did the programming language you used cause problems at any point? Did you elect to use a database that caused more problems than it was worth? Were the statistical methods and the requisite software tools the right choice, or is it now clear that you should have chosen something else?

Sometimes software tools fulfill the promise of what you wanted them to do, but sometimes they fall short. Maybe you tried to use the Perl language to do some text parsing but then found it inordinately difficult to integrate the results with the rest of your application, which is written in Java. You ditched Perl and tried Python instead, finding that Python isn’t quite as easy to use for text parsing, but it integrates more easily with Java applications. Your experience with each of these languages depends entirely on your knowledge and expertise with them, but in this scenario, you’ve learned about advantages and disadvantages of both languages.

Likewise, you may have included big data software like Hadoop or Spark in your original implementation, but did you need it? For experts, it’s no problem to include these technologies in an application, but for others it’s an additional workload to use these specialized tools in their applications. Was the extra effort in development and maintenance worth it?

Whenever you include new or somewhat unfamiliar technologies in a project, you stand to learn something about those technologies. In future projects, whatever you learn can be used to make better decisions about which tools to use for which task and why. It can be helpful to ask yourself now, “Did I make good technology choices, or do I now realize I could have done better?”

Do it differently next time

Unless you’re perfect, there probably was a time during the project when you realized you should have done something differently. It can be a learning experience to realize that another choice would have been better, but in many cases this realization is based on knowledge that you didn’t have at the time you made the decision. If you can generalize that realization and carry it into future projects, it might be helpful.

It’s tough to formalize the process of generalizing lessons from a specific project to the set of all future projects. If the lesson deals with software or other tools that you used, you can certainly apply that knowledge to future projects; for example, if a software tool turned out not to be as good as you thought, you probably shouldn’t use it next time you’re in a similar situation. But if the lesson concerns something more specific to the project, such as the data or the goals, it may not be easy to apply it in the future.

For example, it may have been impossible to know that people’s preferences were too fickle to support 90% accuracy in beer recommendations, but for future projects it’s possible to generalize that lesson and recognize that some accuracy benchmarks are unattainable. Assuming that better statistical analysis will give better results, to an arbitrarily accurate degree, is a fallacy. It’s best to learn from lessons like that early and to proceed cautiously (but ambitiously) into future projects, when merely the awareness of the possibility of the same type of outcome can help you in your planning and execution.
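The idea of an unattainable benchmark can be made concrete with a small simulation. The sketch below is only an illustration under stated assumptions: it supposes that each user has an underlying like/dislike preference for a beer but reports the opposite with some probability on any given occasion. The function name and the `flip_rate` parameter are hypothetical and don't come from the beer-recommendation project itself. Under those assumptions, even a recommender that knows every underlying preference perfectly can't score much above `1 - flip_rate` against the reported ratings.

```python
import random


def best_possible_accuracy(flip_rate, n_ratings=100_000, seed=0):
    """Score a hypothetical 'perfect' recommender against fickle ratings.

    flip_rate is an assumed parameter: the probability that a reported
    rating contradicts the user's underlying preference.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_ratings):
        true_pref = rng.random() < 0.5       # underlying like/dislike
        flipped = rng.random() < flip_rate   # user is fickle this time
        reported = (not true_pref) if flipped else true_pref
        prediction = true_pref               # the model knows the true preference
        correct += prediction == reported
    return correct / n_ratings


for rate in (0.05, 0.15, 0.25):
    accuracy = best_possible_accuracy(rate)
    print(f"flip rate {rate:.2f}: best achievable accuracy is about {accuracy:.3f}")
```

If users contradict themselves 15% of the time, the best achievable accuracy is roughly 85%, so a 90% goal would be out of reach no matter how good the statistical analysis is.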

Whether there’s a specific lesson you can apply to future projects or a general lesson that contributes to your awareness of possible, unexpected outcomes, thinking through the project during a postmortem review can help uncover useful knowledge that will enable you to do things differently—and hopefully better—next time.

13.3. Looking toward the future

The project is over, all the materials are documented and in safe places, and a postmortem review unveiled a few lessons you can take with you into projects yet to come. Regardless of how successful the project was—let’s hope it was a resounding success—one thing is certain: you have more experience in the field of data science.

It’s clearly a truism: the more data science you do, the more experience you have. That alone doesn’t guarantee that you’ve done good work or that you will in the future. But more experience does directly imply that you have more projects to learn from. Doing good data science depends heavily on the experiences you’ve had and what you’ve learned from them. This is true for all scientific fields. You can know everything there is to know about statistics and software development, but with little experience it’s hard to anticipate any of the multitude of uncertainties that affect every project in data science. If you can use all your past experience to foster an awareness of the things that might go wrong in a given project, your chances of success in that project increase dramatically. You can plan a full set of alternatives and hedge your bets.

On the other hand, no matter how much you learn, you can’t foresee everything. Data is unpredictable, or perhaps it’s better to say that data always offers the possibility of something unexpected. Even if you can make accurate predictions 9 times out of 10—or 99 out of 100—things might change. The underlying system might start behaving differently, or maybe the data source could become less reliable. In either case, your experience might be able to help you figure out what the problem is, particularly if you can connect the current situation with any of your past projects and set a strategy accordingly.
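One way to notice such a change early is to keep track of accuracy over a recent window of predictions and compare it with the accuracy you saw historically. The following is a minimal sketch of that idea; the class name, the window size, and the tolerance are hypothetical choices made for illustration, and a real project would pick them based on its own data and goals.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy and flag a drop below a historical baseline."""

    def __init__(self, baseline, window=100, tolerance=0.05):
        self.baseline = baseline             # historical accuracy, e.g. 0.90
        self.tolerance = tolerance           # allowed drop before flagging
        self.results = deque(maxlen=window)  # most recent hit/miss outcomes

    def record(self, predicted, actual):
        """Store whether the latest prediction matched what happened."""
        self.results.append(predicted == actual)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def has_degraded(self):
        """Report a drop only once the window is full, to limit early noise."""
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Example: a model that used to be right 9 times out of 10 starts missing.
monitor = AccuracyMonitor(baseline=0.90, window=10)
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)
print(monitor.rolling_accuracy(), monitor.has_degraded())
```

A flag from a monitor like this doesn't say whether the underlying system changed or the data source became less reliable; it only tells you that it's time to investigate.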

Along with all the uncertainties in data science, one certainty is that software and other tools will continue to change. Every year, and practically every month, new and improved software tools appear on the market. Some of them are great; some are not. I’m a fan of staying with tools that have been proven to work for the task at hand; there are fewer uncertainties and generally more people who can help out when problems arise. New tools might be able to do something that nothing else can, but only in exceptional circumstances are exceptional tools necessary. If you stay up to date on new developments, though, and you utilize all your experience, you might recognize the moment when you need something entirely new and specialized. That isn’t a common situation, but it does happen, and when it does it can lead to significant breakthroughs in analytical technologies. But that’s the exception and not the rule.

Given the excitement surrounding many new software tools, the more difficult challenge is often to resist using them until they have an established track record. Using the newest, most exciting software is usually the cool thing to do, but unless you can foresee the state of the industry in a few years, you might want to wait until you’re sure that a particular tool is reliable and durable before you use it in your project. Unlike statistical methods, which can be proven correct or not, software is shown to be good or bad only through use. It’s best to choose your software based on what you know and not on what you hope is true. With both statistics and software, you have to take care in what you choose, because both have a large effect on a project’s results. New developments can affect your choices, but it’s important to remember a fundamental difference between the two main aspects of data science: software changes all the time, but statistics are forever.

If you take away only one lesson from each project, it should probably relate to the biggest surprise that happened along the way. Uncertainty can creep into just about every aspect of your work, and remembering the uncertainties that caused problems for you in the past can hopefully prevent similar ones from happening again. From the data to the analysis to the project’s goals, almost anything might change on short notice. Staying aware of all of the possibilities is not merely difficult; it is nearly impossible. The difference between a good data scientist and a great data scientist is the ability to foresee what might go wrong and prepare for it.

Exercises

Reflect on your past and present projects, and try these exercises:

Think of a project (data science or otherwise) you’ve worked on in the past, preferably more than a year ago. Where are the materials and resources related to that project? If someone asked you to repeat, restart, or continue that project today, would you be able to? Could you have done anything back then to make it easier today?

Consider your current job and place of employment. Where are shared resources kept? Can you find what you’re looking for easily? If it were your job to come up with a detailed plan and policy for archiving completed projects, what would you include?

Summary

- Organizing project materials and storing them in a reliable place can spare you from headaches later if you have to revive the project for any reason.

- It’s important to document the software and the methods so that you and your colleagues can understand every aspect of the project and work with it in the future.

- Documentation is an exercise in empathy; you have to imagine what you and others might not understand in the future and write explanations accordingly.

- Conducting a formal project postmortem can reveal many lessons that may not have been obvious otherwise.

- Every project offers many lessons to be learned, and many of them can be generalized to apply to almost any future data science project.

- Data science is mostly about recognizing when something unexpected might occur, and awareness of such uncertainties can make the difference between success and failure in future projects.