Chapter 2. Defining UAT—What It Is…and What It Is Not

Introduction

If I know you as well as I think I do, you probably skipped the Introduction to this book. I am not going to waste your time by spending the first chapters defining terminology and quality assurance concepts like most books on testing. Heck, I’m not even going to explain the difference between black-box and white-box testing. But you do need to have a good understanding of the goals, expectations, and scope of UAT before learning how to be successful at it. In fact, I insist on going further than other testing books in the depth with which I explore topics indirectly related to UAT. My goal is this and only this: to provide you with everything you need to know to be successful and effective at representing the user throughout the software development process.

In this chapter, you will learn what UAT is. Then, you will learn how uncovering software issues early in the development process saves significant time and cost for the entire project. Next, you will learn the warning signs that your organization needs to improve its UAT capability, as well as the menu of approaches available for user testing to help you determine which makes the most sense for your projects. We will look at how to include users in developing requirements and how embracing the design process, even if not invited, allows you to represent users’ interest far more effectively than through other downstream activities. I then describe the cyclical tendency in the way that testing organizations evolve over time, followed by a contrast between UAT and usability testing. This chapter ends with some special considerations for data validation and a review of the end goals for a UAT project.

How Can UAT Be a Silver Bullet?

The term silver bullet describes any number of overhyped tools or processes that promise to cure the many ills of software development as easily as a silver bullet can take down a pesky werewolf. These approaches are great when they deliver on their promises. The silver bullet of an efficient and effective UAT program, for a change, can be shot from the Business side of the house rather than the Information Technology (IT) side.

The goal of UAT is to validate that a system of products is of sufficient quality to be accepted by the users, and ultimately, the sponsors. It is helpful to think of quality as the degree to which the deliverables meet the stated and implied requirements in such a way as to produce a usable system. This is a variation on the ISO and PMI definitions of quality, but it is sufficient for our purposes. Two key points in these definitions are that UAT does not include searching for every coding defect, and that quality does not require every requirement to be documented to justify addressing an issue during UAT.

A common misconception regarding UAT is that it is a discrete segment of the software development lifecycle that falls somewhere between system testing and deployment. When UAT is a discrete activity, there are very few options for improving it. That would be like saying that your revenue will be $100 and your costs will be $90; now figure out how to increase profits. Not a lot to work with.

Through the remainder of this book, we will broaden the definition of UAT. Think of UAT as the idea that the user should be represented in every step of the software delivery lifecycle, including requirements, designs, testing, and maintenance, so that the user community is prepared, and even eager, to accept the software after it is completed.

The traditional distinction between levels of testing can be thought of as the following:

• Unit testing. Validates that code works as designed.

• System testing. Validates that a system meets the system requirements and design specifications.

• End-to-End testing (sometimes called integration testing or flow-through testing). Validates that all systems interacting to fulfill a business process work effectively in conjunction.

• UAT. Validates that users can perform their jobs using the new or modified system, and that requirements were given, documented, interpreted, designed, and developed correctly.

The traditional approach to enterprise software development is that users are represented moderately in the definition phase and extensively in the requirements phase, but then they are mostly left alone until after all of the testing by the IT department is complete and the software is “ready for UAT” (see Figure 2-1).

Figure 2-1. Traditional user involvement by phase.

The flaw in the traditional approach is that it consistently asks the users to settle for what they are given because so many resources have already gone into making sure a system works as it does. By the time the users first try the new system, so little time is left before deployment that any modification is seen as a great burden that the user community needs to justify. As a UAT coordinator, I have even been required to create a business case to justify “modifying” the system to do what the users originally asked for it to do! The result is suboptimal software, negative perceptions of the IT organization, forgone benefit to the business, and animosity all around. In short, nobody wins when the user participation is minimized or postponed.

One simple rule to remember is that anything you plan to test during UAT should be tested during end-to-end, integration, or system testing first. Further, anything that will be tested in UAT should first be signed off on by user representatives in the design processes to catch issues early, or prevent them altogether.

Figure 2-2 shows an alternative approach. It shows that by involving the users more heavily in the design and system testing stages of the process, you can actually reduce the total time the users spend across all tasks. This approach works because it is faster, cheaper, easier, and less stressful to uncover incorrect assumptions, inaccurate requirements, or unworkable approaches the first time they occur in the process.

Figure 2-2. Ideal user involvement by phase.

However, the benefits to company resources from a user-based approach are even more dramatic. Even an extremely usable system can be a complete failure in terms of cost and budget if you don’t have optimal user participation and external review processes throughout the software delivery process. To demonstrate this point, a TRW study found the relative time taken to identify and resolve defects in each phase of the software delivery lifecycle. Note that fixing a problem during operations was found to cost as much as 1000 times more than addressing it during the requirements phase (see Figure 2-3).1

Figure 2-3. Relative time to identify and resolve defects in each phase of TRW’s software delivery lifecycle.

Another study of the NASA space shuttle’s onboard software found the relative cost to fix a problem during later phases to be as much as 92 times greater than one detected during the design and implementation phases (see Figure 2-4).2

Figure 2-4. Relative cost of fixing NASA software problems discovered in various stages.

Although these studies have some inconsistencies in their results, the point is clear: Any investments or process changes that make software “correct” earlier in the process have a significant return on investment.

Signs That Your UAT Needs Help

The following are common problems that result from a traditional approach to UAT. Think about which of these you see in your own organization:

• The time period assigned to UAT is not sufficient for the resulting code re-work that the users demand.

• Users refuse to accept the system because the advantages don’t outweigh the pain of the required workarounds.

• The users only accept the system if there is a “point release” (e.g., the fix to the problem in version 1.0 will be in version 1.1) promised within a month of the major deployment to finish fixing the issues they uncovered during UAT.

• Users find functional “defects” immediately after system testers validated that all test cases passed.

• Users are faced with strong political pressure to accept a new system rather than buying into the benefits on their own.

• Systems fail because not all decision makers and influencers have bought into the organizational changes associated with the new system.

• Organizations become preoccupied with finding ways to delay releases in a way that blames a different organization for the schedule slippage instead of working together to make the release succeed.

• Trainers are unable to show users a working version of the finalized system because developers scramble to modify code up to the last minute to fix critical issues found in UAT.

A holistic approach to UAT mitigates the preceding problems by ensuring that users’ needs are satisfied up front, that issues are identified as early as possible, and that users buy into the design decisions and are not surprised by any issues near the launch date.

Choosing Your Approach to UAT

Users of systems often provide their requirements in the form of use cases, narratives, user stories, or simply through meetings and emails. Often, it is an IT representative who translates the users’ wishes into discrete requirements that can be easily coded and eventually tested. System testers usually validate system requirements, and even designs, rather than directly testing business requirements. This is because the format, language, and structure of functional requirements make it much more difficult to base test cases on them than on system requirements.

Most companies recognize the need for users to validate a system before it is put into production use. Decades of software development have left it undeniably clear that development groups cannot on their own satisfy users, even if they start with very detailed requirements. However, the activities that make up UAT vary based on the market, type, and complexity of the software being produced, the impact of defects, the availability of end users to participate, the experience of designers, company resources, and so on. Some organizations find it cost-effective to conduct pilot markets, friendly user tests, customer experience tests, production validation tests, parallel usage periods, mock runs, and even pre-production simulations.

One driver of which approach to user testing is available to you is the type of system you will be testing. This book focuses on enterprise systems, which are robust information systems that serve the data or computing needs of a company’s business processes in a centralized, cross-functional, or integrated way. Such systems primarily have internal users, but often some external users as well. Consumer software, by contrast, is typically designed for and sold to individual people or other companies.

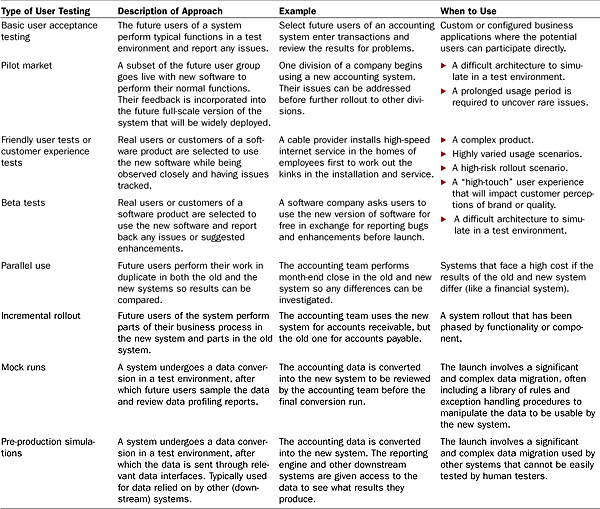

Internal users are typically represented by subject matter experts (SMEs), who are workers, supervisors, or managers well-versed in one or more of the company’s key business processes or functions and in the procedures and systems that support them. (For more on SMEs, see Chapter 4.) External users, on the other hand, are represented by soliciting volunteers, offering incentives for people to join focus groups or fill out surveys, or reaching out to user groups of enthusiastic users. Often, however, the internal marketing or sales staff is used as a proxy for external customers based on their research or their experience in making sales and resolving issues. Be sure to understand who your users are before proceeding to plan a UAT project. Table 2-1 shows different approaches to user acceptance testing that may be mixed and matched for any project.

Table 2-1. Types of User Testing, Definitions and Typical Usage Scenarios

Although the nuances of each of the preceding could be the topic of its own book, they share enough features to benefit from the lessons covered here. Each type of UAT in the table shares the following:

• Each user testing phase is reliant on prior successful testing phases before it begins.

• Tests are not performed by members of the IT department, even if they are involved with the test case development and monitoring.

• The criteria for passage are not strictly based on meeting requirements, but rather on what makes sense in a real-world environment.

• If the testing does not “pass,” the system may or may not be rolled out to a production usage situation as planned.

User Representation in Requirements and Design

The following scenario is both common and disastrous. The users give their requirements to IT representatives through meetings, phone calls, or email. IT analysts document functional requirements and convert them into a system requirements document. Then, IT staff design and code a solution and have the system testing team make sure the code matches those system requirements. The first time the users see the outcome is during UAT a couple of weeks before deployment, when it is too late to make major design changes.

Did you ever play the telephone game as a child? You whisper a secret in the ear of the person next to you. That person whispers it again in the next person’s ear, and so on down the line. By the time the last person announces his interpretation of what he heard, it is comically different from the original secret. It is not quite as funny when a software solution is not what the users had in mind and there is great pressure to roll out the system into production. Maybe there are even several other systems that are dependent on the functionality that is packaged together with the problematic code.

Requirements are what the business representatives want the IT department to build for them. At this phase, one well-worded sentence can save thousands or millions of dollars by setting the designers and developers on the optimal path. Despite the apparent logic, you should never have intended users write up a detailed document describing what the system should do and send it to your IT department to implement. Instead, formulate requirements in cooperation with an IT designer. This may appear to contradict the importance of user participation, but some of the biggest problems from having the business users write requirements include the following:

• Confusing goals with requirements. At the early stage of a requirement, users should only describe what they want to do and why they want to do it. The decisions about how to provide that functionality can only be made in the context of the technical limitations of the systems and the other functionality that will need to be put into the system at the same time. Also, a consistent look and feel should be achieved, which is especially important if the requirements for each functional component come from different sources.

• The bias of anchoring. People have a natural tendency to anchor onto an idea and only deviate when there is a reason to. This has been studied numerous times. It is as blatant as this simple experiment demonstrates:

Ask person #1 whether the population of Indonesia is above or below 200 million. Then ask them to guess the actual number.

Ask person #2 whether the population of Indonesia is above or below 400 million. Then ask them to guess the actual number.

Person #2 is much more likely to give a higher guess since they mentally anchored on the larger number. The same tendency is at work when you tell an IT designer to create a drop-down list that automatically gives an error message if a user selects any invalid choice. The designer is much less likely to propose a more effective way to reach the same end goal, such as filtering out invalid choices before the user ever sees the list.
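The drop-down example can be made concrete with a minimal sketch. The plan names, the age-based eligibility rule, and both function names are assumptions invented for illustration; the point is only the contrast between rejecting a bad choice after the fact and never offering it.

```python
# Hypothetical data: plan names and minimum ages are assumptions for illustration.
PLANS = {"Basic": 0, "Plus": 18, "Premium": 21}  # plan -> minimum customer age


def select_with_error(choice, age):
    """The design as literally requested: list every plan, reject invalid picks."""
    if age < PLANS[choice]:
        raise ValueError(f"{choice} is not available for this customer")
    return choice


def valid_choices(age):
    """The alternative: build the list from only the choices that are valid."""
    return [plan for plan, min_age in PLANS.items() if age >= min_age]


# A 19-year-old customer is never shown "Premium", so no error message is needed.
print(valid_choices(19))
```

Both functions satisfy the same goal (no invalid plan is ever saved), but only the first one was described in the requirement. Stating the goal rather than the mechanism leaves the designer free to propose the second.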

During business requirements gathering sessions, strive for these important points:

• Represent all business user communities in the same meeting. This avoids incongruent hand-offs between adjacent processes as well as simple misunderstandings about the internal processes of other groups. Remember that even dissenters will feel more buy-in to the decisions made in meetings that they attend.

• Document requirements in real time. Have an analyst typing and projecting the requirements document simultaneously on a screen in the conference room or through a virtual meeting tool.

• Have an IT designer present. The designer needs to be the voice of the user during many discussions with the development staff during implementation. It is crucial this individual understands the context that surrounded each decision if alternatives need to be proposed later.

Don’t let IT work on assumptions. The business experts must be involved with every requirement, even if only to review it for business impacts. The most commonly overlooked categories of requirements include the following:

• Nonfunctional requirements. These represent qualities of a system beyond what the user should be able to “do” with the system. They include the number of users that should be supported simultaneously, the required uptime, and the response times that a user should experience for each major task or transaction. My projects that neglected performance requirements up front had to scramble to re-architect parts of the physical infrastructure and the code, even delaying the launch to do so.

• Other considerations include security requirements (never assume it’s not your job, especially in today’s environment of data theft, hacker attacks, and Sarbanes-Oxley and HIPAA regulations) and Section 508 compliance. (For checklists of the characteristics of software so it can be read by tools used by those with disabilities, go to http://zing.ncsl.nist.gov/iusr/.)
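One way to keep nonfunctional requirements from being overlooked is to write them down as measurable targets that can later be checked against test results. The following is a minimal sketch under stated assumptions: the class name, the requirement names, and every threshold are hypothetical, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NonfunctionalRequirement:
    name: str
    limit: float
    unit: str
    higher_is_better: bool  # True for capacity/uptime, False for response time

    def is_met(self, measured):
        if self.higher_is_better:
            return measured >= self.limit
        return measured <= self.limit


# All thresholds below are illustrative assumptions.
REQUIREMENTS = [
    NonfunctionalRequirement("concurrent users supported", 500, "users", True),
    NonfunctionalRequirement("monthly uptime", 99.5, "percent", True),
    NonfunctionalRequirement("account search response time", 2.0, "seconds", False),
]


def unmet(measurements):
    """Return the names of requirements whose measured value misses the target."""
    return [r.name for r in REQUIREMENTS if not r.is_met(measurements[r.name])]


# Hypothetical results from a performance test run.
results = {
    "concurrent users supported": 650,
    "monthly uptime": 99.7,
    "account search response time": 3.4,
}
print(unmet(results))
```

Writing each target with a number and a unit forces the conversation about performance to happen during requirements, when changing the architecture is still cheap.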

Design is the process of deciding how to meet the goals of the business and users in a way that makes the development process efficient, satisfies the nonfunctional requirements, and complies with usability principles and with corporate standards and policies. If opportunities for user inclusion in the design process are not offered in your organization, demand them. That is your right as one of the managers who must “sign off” before a system is deployed.

Think about this throughout your next project: If this system makes the lives of your users significantly worse than planned, who are they going to look to for relief? If they revolt and will not use the system, who will IT look to blame? If users make mistakes, who will have to deal with their frustration? And if other organizations are depending on the information that your users produce, who will need to clean up the mess?

The better the designer knows the users you represent, the more likely she is to represent their interests. The single best recommendation I can make is to give the designer twice as much time as she requests. Your goal is to make that person feel your pains and understand your goals and constraints.

There are a couple of design concepts that anyone representing the users should understand in order to help the designer do a better job satisfying their needs.

Make sure your designer is using the persona approach. Personas can be defined as user archetypes. In other words, they represent groups of typical users who share goals, abilities, and usage contexts. If you are a revenue assurance manager, perhaps you are representing credit analysts, billing analysts, auditors, and a manager for each. A system should be designed to meet the needs of each one of these personas, but not to meet the needs of all of them at the same time or in the same way. Personas should be the result of research, typically questionnaires and interviews. There should be enough personas to represent the user groups.

After the IT organization has aligned the requirements with technical limitations and the enterprise architecture standards, it is crucial to regroup with the users to walk through the proposed processes. Be sure to include business subject matter experts, trainers, system testers, and UAT coordinators. You should actively look for signs that highly technical designers think users are overly similar to themselves, a bias called self-referential design. This often leads to software too complex for beginners to understand quickly. See Box 2-1 below for further discussion of the benefits of a confrontational design debate.

Beware the Testing Organization Cycle of Life

There is a natural evolution many testing organizations go through over several years. I am not saying there is anything inherently good or bad about the evolution, but rather that if you recognize the forces at work, you can better anticipate them and manage their effects. Can you identify the stage where your own organization is currently? Have you witnessed your organization transition from one stage to another (see Figure 2-5)?

Figure 2-5. The cyclical evolution of testing organizations.

- The Almighty System Test: In the beginning, it seems logical that a comprehensive system testing effort should be able to validate that all requirements are met and that all previous functionality remains intact in a new software release. That stage does not last very long before some faulty code or incorrectly interpreted requirement makes its way into production.

- UAT Investment: The response to poor-quality code reaching production tends to be to throw more resources at building a UAT capability, such as staffing a professional coordinator, paying for travel to face-to-face meetings, building a UAT environment/architecture, and ensuring enough over-staffing so that subject matter experts can dedicate time to periodically execute UAT. If you are lucky, this stage lasts a fairly long time, perhaps as long as the lifespan of the systems you are testing.

- Cost Cutting: Perhaps the impetus is a financial downturn, or perhaps it is due to the lack of high-profile testing blunders that lure executives into a false sense of security. Either way, the next step is to look for lower-cost options to get the same user involvement. First, this requires each subject matter expert to take on more and more UAT responsibility. After all, the only critical piece of UAT is to have your SME look at the software, right? So, the SMEs take on the UAT management responsibilities and perhaps even dedicate themselves to UAT full time.

- One of three paths occurs:

• SME Burnout: The SMEs get overworked and one or two key players leave the organization, leaving behind a very large hole that is likely filled by a less-skilled substitute, eroding the UAT capability.

• SMEs Go Pro: The next potential path is that the SMEs who are dedicated to UAT eventually become system testers rather than true user representatives. Their detachment leads to a disconnect between the latest user process and the resulting software, creating dissatisfied users.

• SMEs Go Home: The third potential path occurs when UAT starts being seen as a noncritical responsibility (or burden) of the SMEs. As a result, it is transitioned to the system test team or outsourced to a consulting company. Then, the SMEs simply review and approve the test results…whenever they have time. When UAT is seen as a low priority, it gets little attention to find the types of issues that only someone fully engaged in meetings, design reviews, and issue status calls would understand.

- Quality Falls: Regardless of which of the previous three paths you follow, the next step is likely an increase in software issues and misinterpreted requirements reaching the production environment; Senior Management starts to take notice.

- When In Doubt, Add a Testing Layer: The reaction to low quality is natural—add another layer of testing. If UAT already exists, the new layer may be customer experience testing, beta testing, pilot testing, or some other partial rollout added after the UAT—let’s call it beta testing for the sake of this discussion.

- Testing Resources Flow Downstream: The new beta testing layer catches many of the problems that slipped through UAT. Therefore, the best SMEs help with the beta testing rather than wasting their time with the “less mature” code available in the UAT phase. It seems more logical to have the best SMEs see code closer to what the users will see so they can sign off on the final version. That means issues are being identified later rather than earlier in the testing process, which is the exact opposite of what you want to occur for maximum efficiency.

- Collapse the Redundancies: Because the best SMEs have started participating in the beta testing stage, the system test team and UAT test team are virtually identical (both are testing complete systems with minimal user input). So, it makes logical sense to cut costs by combining them into one. By using the best testers and test managers from each, a superior system test team evolves, which feeds into a superior UAT/beta testing effort. Most likely, this round of cost-cutting reduces the resources of the customer-focused beta testing as well, converting it into more of an SME-focused phase rather than a customer-focused phase.

- The Cycle Continues: Recognize this final state? It looks identical to phase 2 with a mature system testing capability and a mature UAT capability. This is not a recommendation; rather, it describes a common, although sometimes unfortunate, cycle of life.

Were you able to identify where your organization lies on this path? See Box 2-2 for a real-world example of movement along the cyclical evolution of testing organizations.

UAT Is Not Usability Testing

Brian Shackel provided my preferred definition of usability as “a system’s capability in human terms to be used easily and effectively by the specified range of users, given specified training and support, to fulfill a given set of tasks....”3 How is usability different from user acceptance? Although the two are closely aligned, the first difference is that there is an entire body of knowledge dedicated to studying usability. There are methods and techniques in which you can earn a doctorate. Although it is invaluable to have a usability expert as part of the IT design team (and you should give that expert as much time as she requests), that is beyond the scope of UAT.

In 1990, Indian Airlines Flight 605 crashed, killing 98 passengers. According to a press report about an Airbus official’s comment, “[Airbus officials] maintain the problem is the pilots failing to adapt to the automation, rather than acknowledging the need for the software to work smoothly with the humans.” Do not put yourself in a situation where you are forced to blame users for their poor adoption of a system. This system may have met every user requirement, but it was still misused at the critical moment. If a UAT practitioner had to know that a blue user interface would be more calming, and a thousand other similar rules, that practitioner probably would not have time to be a functional manager during his day job. It is a tradeoff that most companies agree with: It is better to have more effective managers who know something about software development than to employ an army of usability experts who know something about their business.

Confused? In my experience, a user does not reject a system, or even open a trouble ticket, for anything but the most egregious issue of poor usability because the user often cannot put her finger on what problem she encountered. Instead, users tend to blame themselves when they cannot figure out an interface because that is what decades of working with computers have taught them to do. If you test a Web page that includes a search box with a drop-down to choose the category of topic you want—for example, Titles, Authors, Categories—a user would sigh and then diligently try searching multiple ways for his desired topic. However, a usability expert would report that a designer should not force a user to guess which way to find his book most easily. That is a mental burden that can be avoided to improve the user’s experience.

Special Considerations for Data Validation

A system deployment typically includes moving data from one or more legacy systems into the new system. Sometimes, as much work is required for the data migration as building the entire system, so this step should not be underestimated. I’ve been involved in many such endeavors, and they have rarely gone as planned.

At some point, senior management needs to decide whether the business users or IT is in the best position to determine if the data in the new system is correct and usable. The most obvious choice is that because the users have to work with the data after the system goes live, IT should make its best effort at determining the proper conversion rules, after which the users should validate the data during the UAT phase of the project. This approach often leads to serious problems for the following reasons:

• IT cannot figure out the conversion rules without close user cooperation. I’ve seen uses of data that left me dumbfounded. My favorite example is a billing system that had logic built in to change how it billed a customer based on certain words typed into the comment field. Workarounds such as this become legitimized and then built upon until their use is apparent only to someone who has worked with the system throughout its full history.

• Data UAT can take longer than functional UAT. The execution of test cases, the analysis of issues, and the rerunning of mock data conversions can each take many days when millions of records are involved. This makes trial and error very costly if a proposed fix does not result in the expected resolutions. If data UAT is started at the same time as functional UAT, often it’s the data that holds up the launch. A further problem is that it’s much more difficult and costly to implement manual workarounds for poor data quality because operational use may worsen the data with each transaction.

• Users don’t have access to data profiling tools. I cannot stress enough that data profiling tools should be considered to help determine if converted data behaves as expected. Data profiling is an automated way to run queries against a set of data and report on critical characteristics such as the number of values, the distribution of values, and the linkages between interdependent tables. Without such reports, a user randomly searching for accounts to validate would never notice that a certain category of accounts was never converted at all. IT should work with the users to review the data profiling reports and discuss any anomalous results.

• Manual cleanup is sometimes the only solution. During the analysis of data conversion results, often the root cause is bad data in the source system. There are two types of bad data: data that is incorrect in a systematic way where rules can be written to clean it up, or data that is bad in a way that only a human can economically review and correct. In the latter situations, the only way to keep the project on schedule is to identify the need for manual corrections early enough that the work can be completed before the final conversion run and launch date (depending on whether it is fixed in the legacy or the new system). Sometimes, this requires bringing in an army of temporary workers or authorizing overtime for the current line workers. I have seen conversion routines that put the unreadable data into special data fields so workers could make updates the first time they accessed each incomplete account. Ideally, you can write rules into the data conversion routines to flag the records that need to be manually cleaned, or even partially perform the cleanup.
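The profiling checks and the cleanup flag described in the last two points can be sketched in a few lines. This is not a substitute for a real data profiling tool; the record layouts, field names, sample values, and the phone-number rule are all assumptions made up for illustration.

```python
from collections import Counter

# Hypothetical records: field names and values are assumptions for illustration.
legacy_accounts = [
    {"id": 1, "category": "residential", "phone": "555-0101"},
    {"id": 2, "category": "business", "phone": "555-0102"},
    {"id": 3, "category": "government", "phone": "555-0103"},
    {"id": 4, "category": "residential", "phone": "CALL AFTER 5"},
]
converted_accounts = [
    {"id": 1, "category": "residential", "phone": "555-0101"},
    {"id": 2, "category": "business", "phone": "555-0102"},
    {"id": 4, "category": "residential", "phone": "CALL AFTER 5"},
]
converted_invoices = [
    {"invoice": 10, "account_id": 1},
    {"invoice": 11, "account_id": 3},  # refers to an account that was not converted
]


def distribution(records, field):
    """Count how many records carry each value of a field."""
    return Counter(r[field] for r in records)


def missing_values(source, target, field):
    """Values of a field that exist in the source but never appear in the target."""
    return sorted(set(distribution(source, field)) - set(distribution(target, field)))


def orphans(children, parents, foreign_key, parent_key):
    """Child records whose foreign key matches no parent record (a broken linkage)."""
    parent_ids = {p[parent_key] for p in parents}
    return [c for c in children if c[foreign_key] not in parent_ids]


def needs_manual_cleanup(record):
    """Assumed rule: a phone value with anything but digits and hyphens needs a human."""
    return not all(ch.isdigit() or ch == "-" for ch in record["phone"])


print(distribution(converted_accounts, "category"))
print(missing_values(legacy_accounts, converted_accounts, "category"))
print(orphans(converted_invoices, converted_accounts, "account_id", "id"))
print([r["id"] for r in converted_accounts if needs_manual_cleanup(r)])
```

The second check is the one a user spot-checking individual accounts would miss: every converted account looks fine on its own, and only the comparison of distributions shows that an entire category is absent.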

The Go/No-Go Goal

Say that five times fast. I am a huge proponent of goal-driven behavior, so I will take this opportunity to refocus you on the goals of UAT. There are two: to provide a measure of the risk associated with a software release, and to help the organization decide whether that risk is low enough to proceed with a release. If you are wondering why the main goals do not include finding software defects, think of that as an incidental benefit of UAT; finding defects should be the goal of the systematic testing that takes place before UAT begins. There is not enough time or money to have your UAT testers looking for every defect.

The culmination of the UAT process is the go/no-go call. Typically, this is a conference call, a meeting, or some sort of virtual meeting where decision makers and stakeholders review the test results, outstanding issues, known problems, and proposed workarounds compared to the list of expected benefits. There are a number of intangible benefits and costs that are factored in, usually making quantification impractical. But when it comes down to it, the UAT results are the most recent, most trusted, and clearest indicators of the impacts that software has on the business processes. Therefore, even if an executive makes the final decision, it is the UAT coordinators who have the largest influence. The UAT coordinator should be a one-stop shop, able to explain to the executives, the developers, and the program management office (PMO) what each of them needs to know to make decisions, understand the status, or intervene in a project.

Conclusion

After reading this chapter, you should understand what UAT is, and how important it is to uncover software issues earlier in the development process. You may be wondering how user involvement, which typically occurs late in the development cycle, contributes to early detection of software problems. Fortunately, that’s the topic of Chapter 3.

You should now be able to answer the following questions about your organization:

• Does your organization show signs that it needs to improve its UAT capability?

• What are the common approaches to user testing? Which are the most appropriate for your project?

• What cyclical stage best describes your testing organization? Are there signs your organization is evolving into the next stage?

• Do your software requirements originate from users? Or do users only get involved when the software is near completion? How can you get them to see the value of earlier involvement?

• Are your requirements gathered in such a way that they focus on what functionality is needed rather than how the resulting system should be developed? Do you overlook nonfunctional requirements?

• What methods does your organization use to solicit user feedback during the design stage? What other options should be considered?

• Does the user involvement in the requirements and design stages of the software development project set the stage for a successful UAT? The next chapter will help you recognize and address the key success factors for the UAT planning phase.

• Can you describe the difference between user acceptance testing and usability testing?

• Does your project call for data validation? If so, do you have the tools and process in place to avoid the pitfalls that commonly delay projects?

• Are you on track to give a clear and confident go or no-go recommendation at the conclusion of each UAT project? If not, read on to identify and address the barriers you might be facing.