Data is both an asset and a potential liability. As you move data to the cloud, it becomes important to understand the stages that the data passes through so you can assess the security risks and plan mitigations in case of issues. In this chapter, you will investigate the security mechanisms that Azure Data Factory provides to secure your data.

Overview

Azure Data Factory management resources are built on the Azure security infrastructure and use the security measures offered by Azure. Azure Data Factory does not store any data except metadata, such as pipeline and activity definitions, and in some cases linked service credentials (connections to data stores). Credentials for linked services that use the Azure integration runtime are encrypted and stored in ADF managed storage.

Azure Data Factory has been certified for the following: HIPAA/HITECH, ISO/IEC 27001, ISO/IEC 27018, CSA STAR.

If you’re interested in Azure compliance and how Azure secures its own infrastructure, visit the Microsoft Trust Center ( http://aka.ms/azuretrust ).

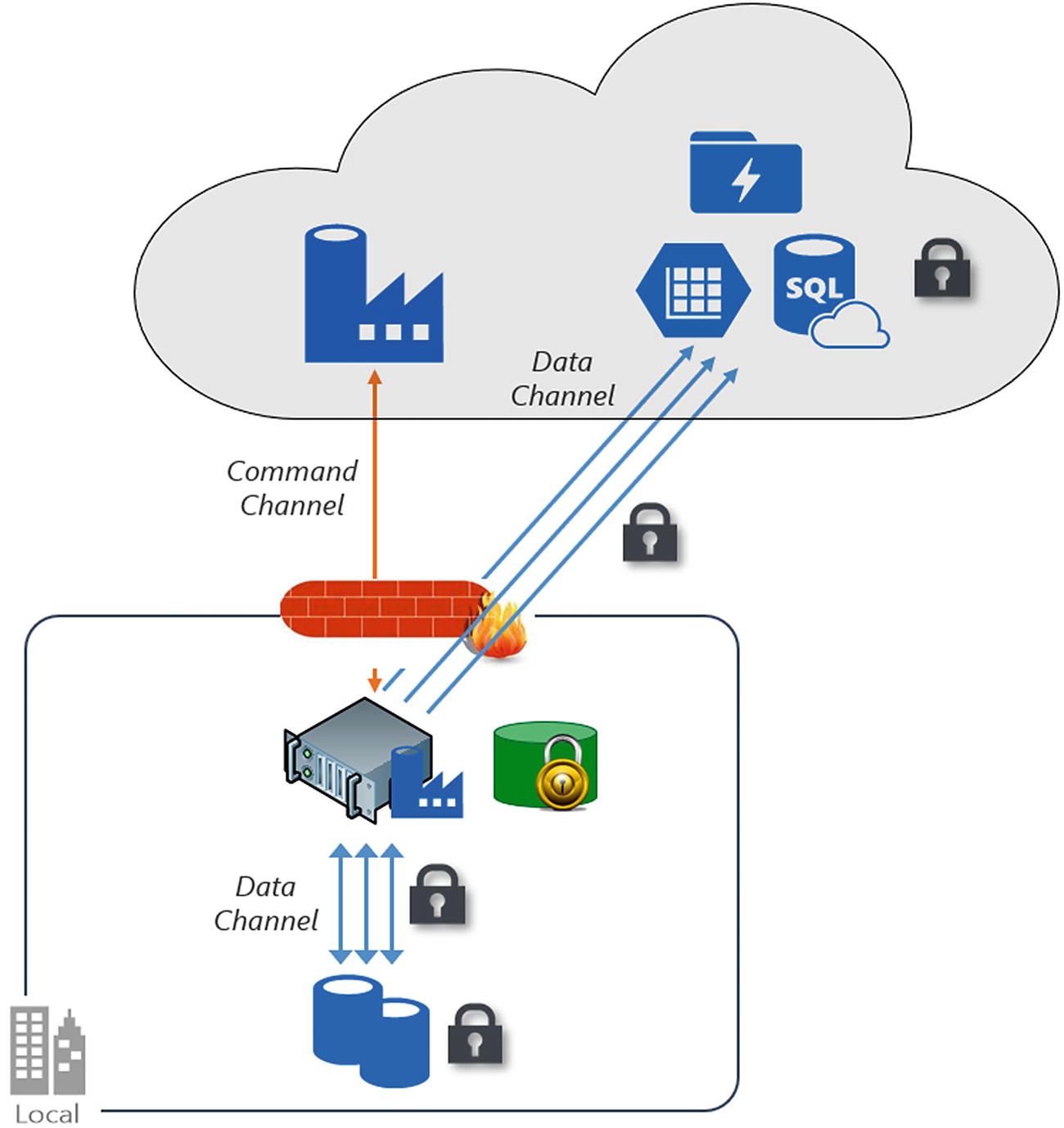

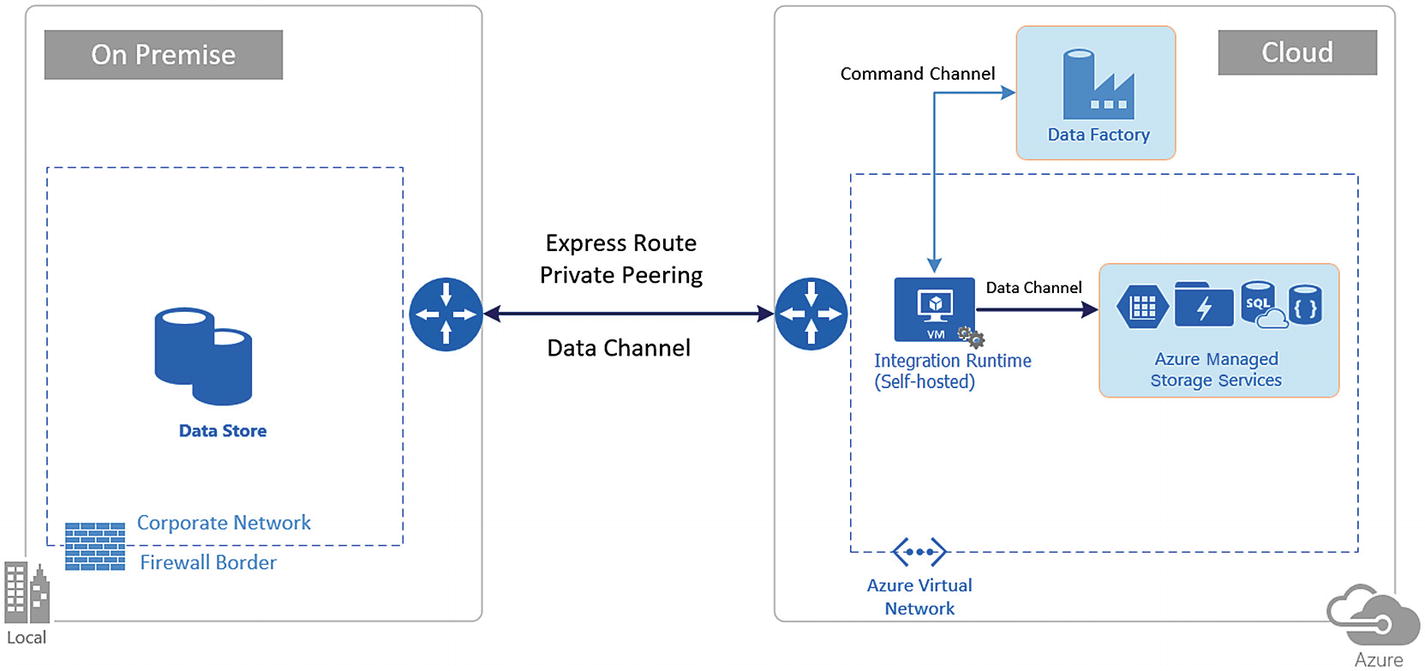

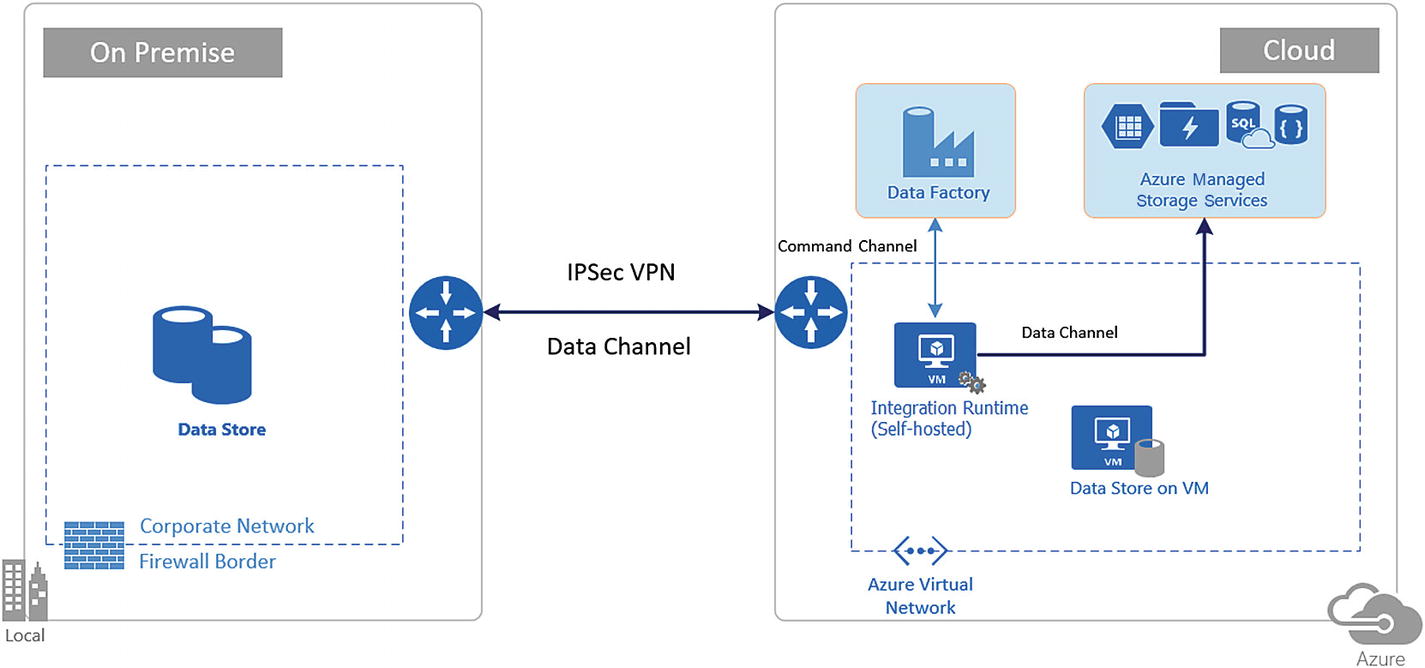

Data channel and command channel in ADF. The data channel is used for the actual data movement, while the command channel is required only for communication within the ADF service.

Cloud scenario: In this scenario, both your source and your destination are publicly accessible through the Internet. These include managed cloud storage services such as Azure Storage, Azure SQL Data Warehouse, Azure SQL Database, Azure Data Lake Store, Amazon S3, and Amazon Redshift; SaaS services such as Salesforce; and web protocols such as FTP and OData. Find a complete list of supported data sources at https://docs.microsoft.com/en-us/azure/data-factory/copy-activity-overview#supported-data-stores-and-formats .

Hybrid scenario: In this scenario, either your source or your destination is behind a firewall or inside an on-premises corporate network. Alternatively, the data store (most often the source) is in a private network or virtual network and is not publicly accessible. Database servers hosted on virtual machines also fall under this scenario.

Cloud Scenario

This section explains the cloud scenario.

Securing the Data Credentials

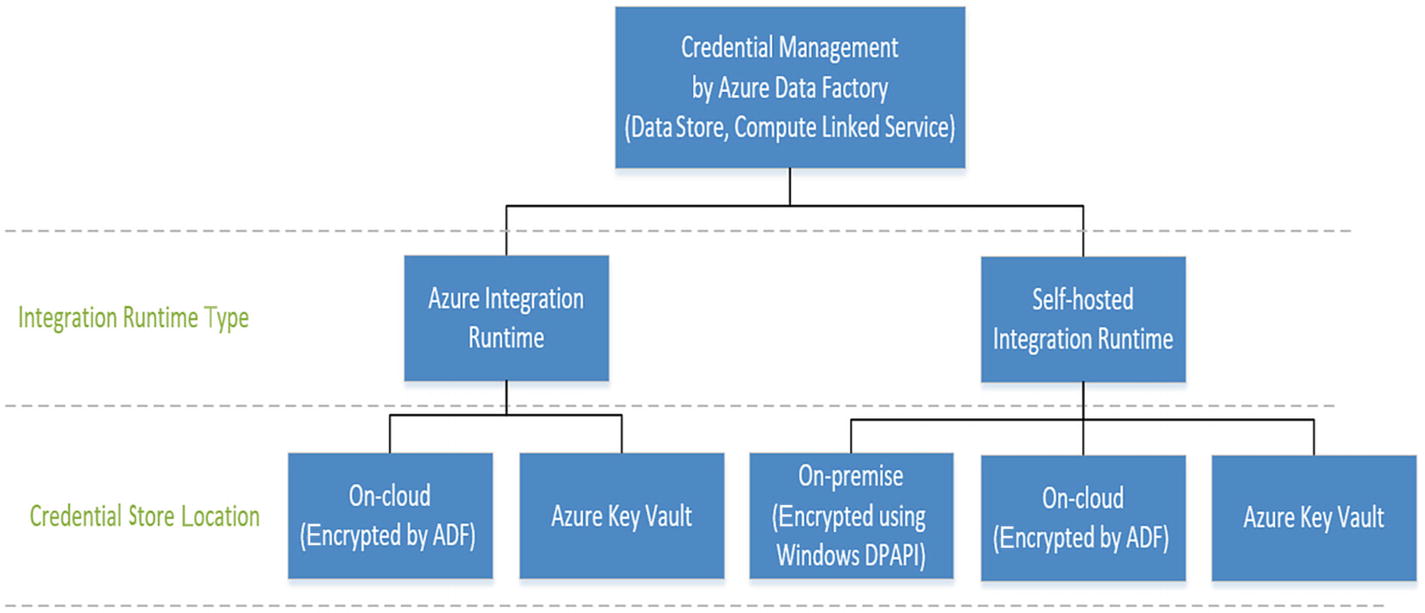

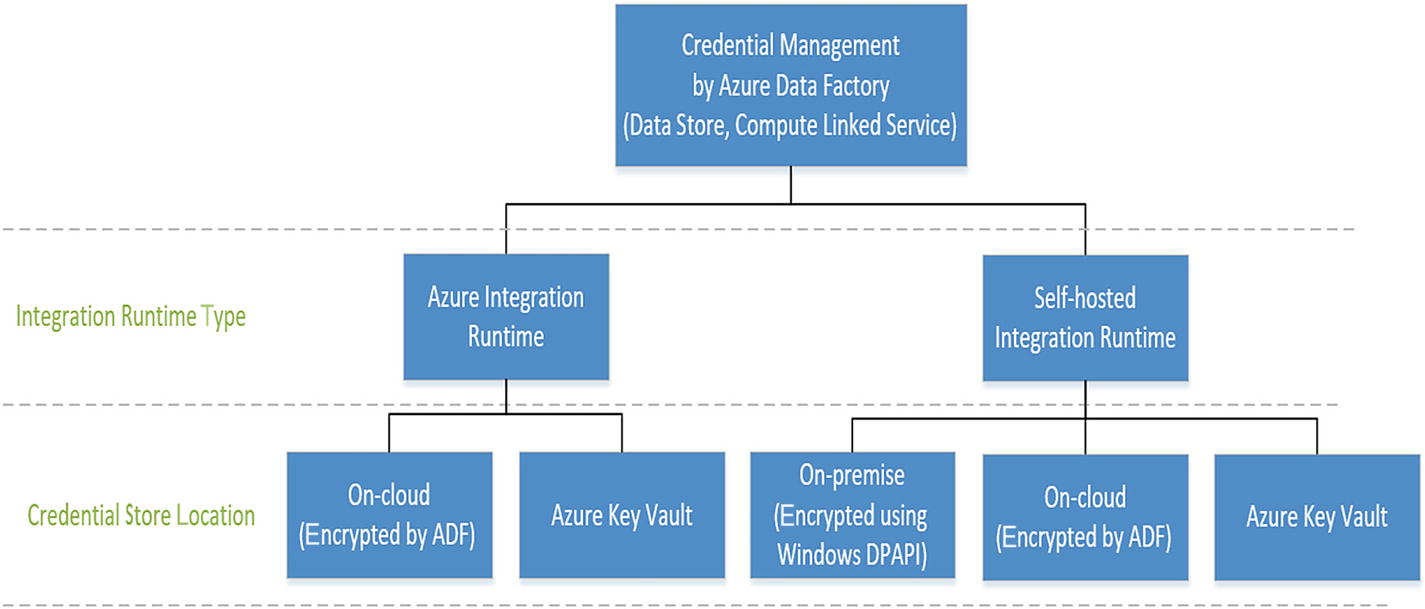

Data store credential storage options in ADF

Store the encrypted credentials in an Azure Data Factory managed store. Azure Data Factory helps protect your data store credentials by encrypting them with certificates managed by Microsoft. These certificates are rotated every two years (which includes certificate renewal and the migration of credentials). The encrypted credentials are securely stored in an Azure storage account managed by Azure Data Factory management services. For more information about Azure Storage security, see the Azure Storage security overview at https://docs.microsoft.com/en-us/azure/security/security-storage-overview .

You can also store the data store’s credentials in Azure Key Vault. Azure Data Factory retrieves the credentials during the execution of an activity. For more information, see https://docs.microsoft.com/en-us/azure/data-factory/store-credentials-in-key-vault .

Data Encryption in Transit

Data is encrypted in transit whenever the data store supports it. The protocol used for connectivity depends on the data store. If the cloud data store supports HTTPS or TLS, all data transfers between Azure Data Factory and the cloud data store go through a secure HTTPS or TLS channel. Azure Data Factory uses TLS 1.2.
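To make the TLS 1.2 point concrete, here is a minimal sketch of a client that refuses to negotiate anything older than TLS 1.2. It uses only Python's standard `ssl` module and illustrates the protocol floor from the client side; it is not a description of how ADF is implemented internally. The function name `make_tls12_context` is made up for this example.

```python
import ssl


def make_tls12_context() -> ssl.SSLContext:
    """Return a client context that verifies certificates and rejects TLS < 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse to negotiate any protocol version below TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls12_context()
print(context.minimum_version.name)
```

A context like this can be passed to `http.client.HTTPSConnection` or `urllib.request.urlopen` so that a connection to a data store endpoint fails rather than silently falling back to an older protocol.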

Data Encryption at Rest

The following data stores support encryption of data at rest.

Azure SQL Data Warehouse: Supports Transparent Data Encryption (TDE), which helps protect against the threat of malicious activity by performing real-time encryption and decryption of your data. This behavior is transparent to the client.

Azure SQL Database: Also supports TDE, which helps protect against the threat of malicious activity by performing real-time encryption and decryption of the data, without requiring changes to the application. This behavior is transparent to the client.

Azure Storage: Azure Blob Storage and Azure Table Storage support Storage Service Encryption (SSE), which automatically encrypts your data before persisting it to storage and decrypts it before retrieval.

Azure Data Lake Store (Gen1/Gen2): Provides encryption for data stored in the account. When encryption is enabled, Azure Data Lake Store automatically encrypts the data before persisting it and decrypts it before retrieval, making it transparent to the client that accesses the data.

Amazon S3: Supports both client-side and server-side encryption of data at rest.

Amazon Redshift: Supports cluster encryption for data at rest.

Azure Cosmos DB: Encrypts data at rest automatically for both new and existing customers in all Azure regions. There is no need to configure anything.

Salesforce: Supports Shield Platform Encryption, which allows encryption of all files, attachments, and custom fields.

Hybrid Scenario

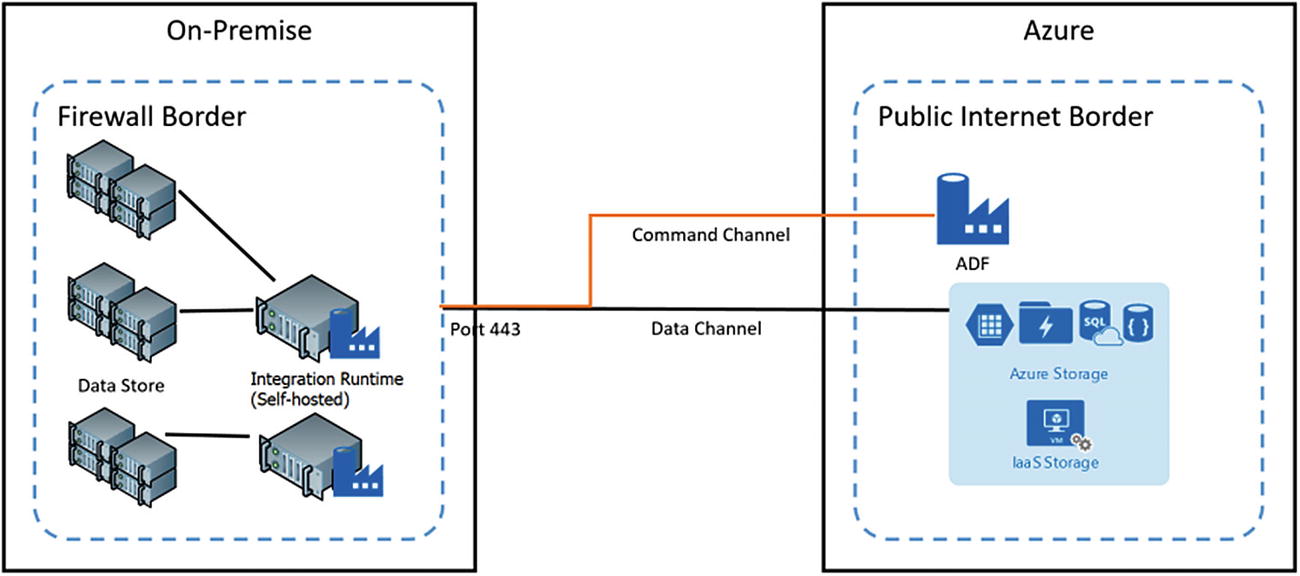

Hybrid setup using the self-hosted integration runtime to connect on-premise data stores

The command channel allows for communication between data movement services in Azure Data Factory and the self-hosted integration runtime. The communication contains information related to the activity. The data channel is used for transferring data between on-premise data stores and cloud data stores.

On-Premise Data Store Credentials

The credentials for your on-premises data stores are always stored encrypted. You can store them in one of three places: locally on the self-hosted integration runtime machine, in Azure Data Factory managed storage, or in Azure Key Vault.

Store credentials locally. The self-hosted integration runtime uses Windows DPAPI to encrypt the sensitive data and credential information.

Store credentials in Azure Data Factory managed storage. If you directly use the Set-AzureRmDataFactoryV2LinkedService cmdlet with the connection strings and credentials inline in the JSON, the linked service is encrypted and stored in Azure Data Factory managed storage. The sensitive information is still encrypted by certificates, and Microsoft manages these certificates.

Store credentials in Azure Key Vault (AKV). Credentials stored in AKV are fetched by ADF during runtime.


Encryption in Transit

All data transfers are via the secure channel of HTTPS and TLS over TCP to prevent man-in-the-middle attacks during communication with Azure services.

You can also use an IPSec VPN or Azure ExpressRoute to further secure the communication channel between your on-premise network and Azure.

Azure Virtual Network is a logical representation of your network in the cloud. You can connect an on-premise network to your virtual network by setting up an IPSec VPN (site-to-site) or ExpressRoute (private peering).

Network and Self-Hosted Integration Runtime Configuration

| Source | Destination | Network Configuration | Integration Runtime Setup |
|---|---|---|---|
| On-premises | Virtual machines and cloud services deployed in virtual networks | IPSec VPN (point-to-site or site-to-site) | The self-hosted integration runtime can be installed either on-premises or on an Azure virtual machine in a virtual network. |
| On-premises | Virtual machines and cloud services deployed in virtual networks | ExpressRoute (private peering) | The self-hosted integration runtime can be installed either on-premises or on an Azure virtual machine in a virtual network. |
| On-premises | Azure-based services that have a public endpoint | ExpressRoute (public peering) | The self-hosted integration runtime must be installed on-premises. |
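The table above can be read as a simple lookup: given a network configuration, where may the self-hosted integration runtime be installed? The sketch below restates it in Python. The key names (`ipsec_vpn` and so on) and the function `can_install_ir` are invented for this illustration and do not correspond to any ADF API.

```python
# Allowed install locations for the self-hosted IR, keyed by network setup.
# The entries restate the table; the key names are made up for this sketch.
IR_PLACEMENT = {
    "ipsec_vpn": {"on-premises", "azure-vm"},
    "expressroute_private_peering": {"on-premises", "azure-vm"},
    "expressroute_public_peering": {"on-premises"},
}


def can_install_ir(network_config: str, location: str) -> bool:
    """Return True if the table permits the IR at `location` for this setup."""
    if network_config not in IR_PLACEMENT:
        raise ValueError(f"unknown network configuration: {network_config!r}")
    return location in IR_PLACEMENT[network_config]


print(can_install_ir("ipsec_vpn", "azure-vm"))                    # True
print(can_install_ir("expressroute_public_peering", "azure-vm"))  # False
```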

ExpressRoute network setup for accessing on-premises data stores

IPSec VPN setup for accessing the on-premises data stores

Considerations for Selecting ExpressRoute or VPN

ExpressRoute is generally the better choice because its traffic does not traverse the public Internet and it gives you dedicated bandwidth, though at an additional cost.

The self-hosted IR can be set up either on-premises or on an Azure VM to access your data stores. I personally prefer setting it up on an Azure VM for the ease of manageability and network setup.

If you set up the self-hosted IR on-premises, then you need to grant access from your on-premises networks to your storage account/data sources with an IP network rule. In addition, you must identify the Internet-facing IP addresses used by your network. If your network is connected to the Azure network using ExpressRoute, each circuit is configured with two public IP addresses at the Microsoft edge that are used to connect to Microsoft services like Azure Storage using Azure public peering. To allow communication from your circuit to Azure Storage, you must create IP network rules for the public IP addresses of your circuits. To find your ExpressRoute circuit’s public IP addresses, open a support ticket with ExpressRoute via the Azure portal.

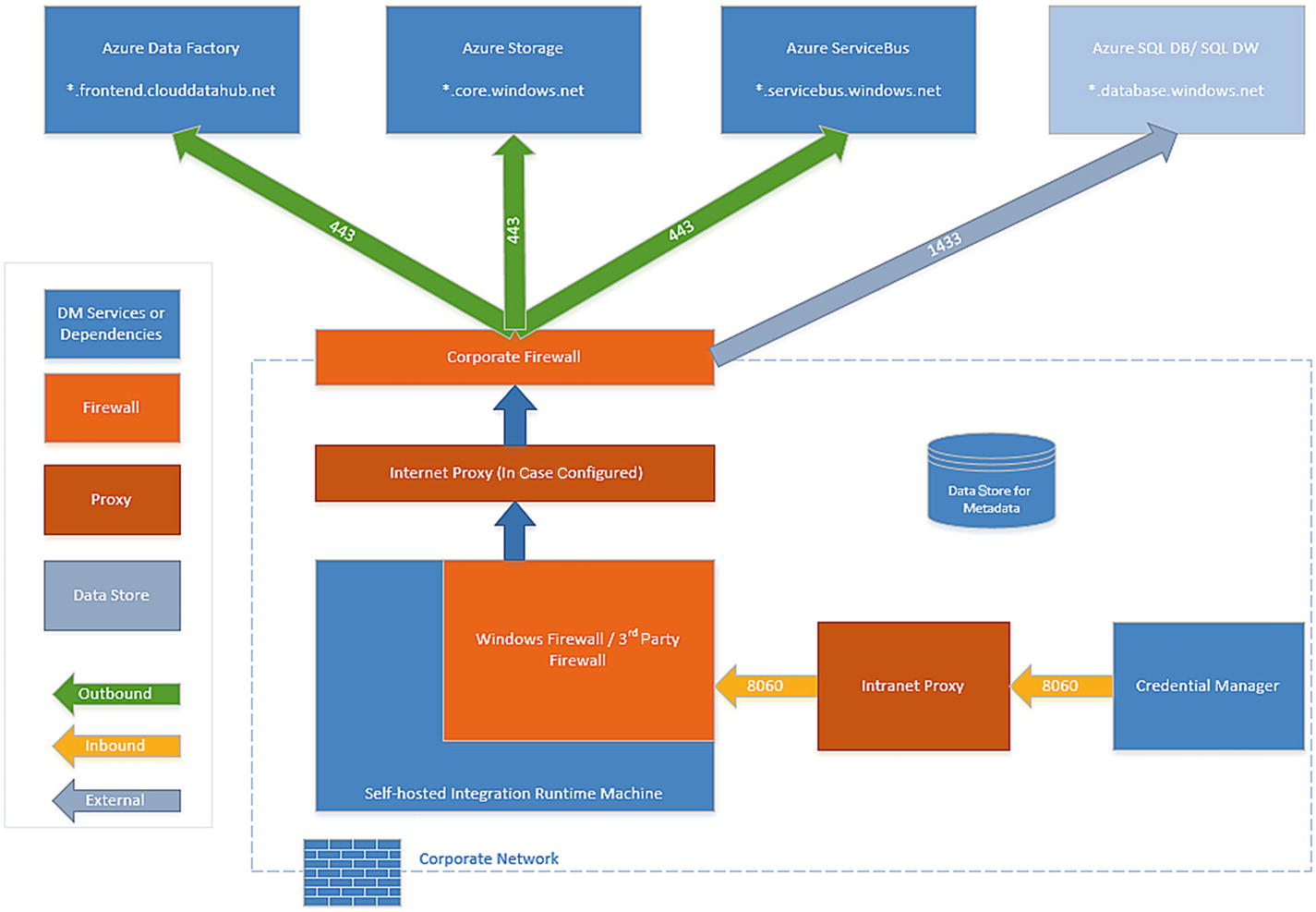

Firewall Configurations and IP Whitelisting for Self-Hosted Integration Runtime Functionality

Firewall configurations and IP whitelisting requirements

Outbound Port and Domain Requirements

| Domain Names | Outbound Ports | Description |
|---|---|---|
| *.servicebus.windows.net | 443 | Required by the self-hosted integration runtime to connect to data movement services in Azure Data Factory. |
| *.frontend.clouddatahub.net | 443 | Required by the self-hosted integration runtime to connect to the Azure Data Factory service. |
| download.microsoft.com | 443 | Required by the self-hosted integration runtime for downloading the updates. If you have disabled auto-updates, then you may skip this. |
| *.core.windows.net | 443 | Used by the self-hosted integration runtime to connect to the Azure storage account when you use the copy feature. |
| *.database.windows.net | 1433 | (Optional) Required when you copy from or to Azure SQL Database or Azure SQL Data Warehouse. Use the staged copy feature to copy data to Azure SQL Database or Azure SQL Data Warehouse without opening port 1433. |
| *.azuredatalakestore.net, login.microsoftonline.com/<tenant>/oauth2/token | 443 | (Optional) Required when you copy from or to Azure Data Lake Store. |

At the Windows firewall level (in other words, the machine level), these outbound ports are normally enabled. If not, you can configure the domains and ports accordingly on the self-hosted integration runtime machine. Port 8060 is required for node-to-node communication in the self-hosted IR when you have set up high availability (two or more nodes).
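When preparing a firewall change request, it can help to derive the list of outbound rules from the features you actually use. The following sketch restates the domain and port table as data and filters it. The feature labels (`auto_update`, `storage`, `sql`, `data_lake`) and both function names are assumptions made for this example. The `can_connect` helper is a plain TCP reachability check; because most entries are wildcards, you would have to pass it a concrete host name from your own environment.

```python
import socket

# (domain pattern, port, condition) restating the outbound requirements table.
# "always" marks entries the self-hosted IR needs regardless of features used.
OUTBOUND_RULES = [
    ("*.servicebus.windows.net", 443, "always"),
    ("*.frontend.clouddatahub.net", 443, "always"),
    ("download.microsoft.com", 443, "auto_update"),
    ("*.core.windows.net", 443, "storage"),
    ("*.database.windows.net", 1433, "sql"),
    ("*.azuredatalakestore.net", 443, "data_lake"),
    ("login.microsoftonline.com", 443, "data_lake"),
]


def required_endpoints(features):
    """Return the (domain, port) pairs needed for the given set of features."""
    return [
        (domain, port)
        for domain, port, condition in OUTBOUND_RULES
        if condition == "always" or condition in features
    ]


def can_connect(host, port, timeout=5.0):
    """Return True if an outbound TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# List what a factory copying to Azure SQL Database would need (no network I/O).
for domain, port in required_endpoints({"sql"}):
    print(f"{domain}:{port}")
```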

IP Configurations and Whitelisting in Data Stores

Some data stores in the cloud also require that you whitelist the IP address of the machine accessing the store. Ensure that the IP address of the self-hosted integration runtime machine is whitelisted or configured in the firewall appropriately.
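Before a pipeline run fails with a firewall error, you can check whether the integration runtime machine's public IP address is covered by the rules configured on the data store. The sketch below uses Python's standard `ipaddress` module. The function name `is_ip_allowed` is hypothetical, and the addresses come from the ranges reserved for documentation, so they are placeholders rather than real firewall rules.

```python
import ipaddress


def is_ip_allowed(ip, allowed_ranges):
    """Return True if `ip` is inside any CIDR range or single address listed."""
    address = ipaddress.ip_address(ip)
    return any(
        address in ipaddress.ip_network(rule, strict=False)
        for rule in allowed_ranges
    )


# Documentation-only example addresses (RFC 5737), not real firewall rules.
firewall_rules = ["203.0.113.0/24", "198.51.100.7"]

print(is_ip_allowed("203.0.113.42", firewall_rules))  # True
print(is_ip_allowed("192.0.2.10", firewall_rules))    # False
```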

Proxy Server Considerations

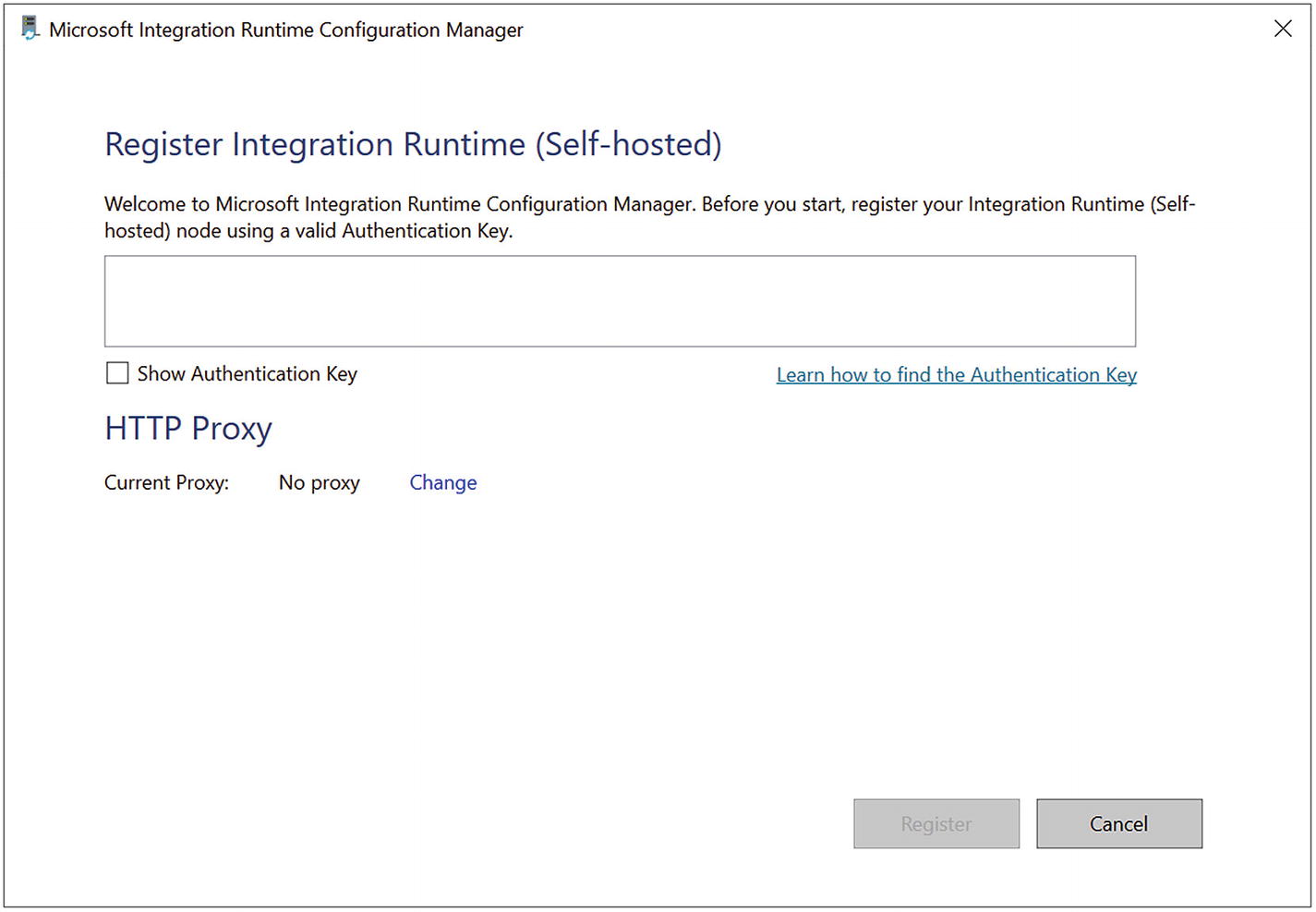

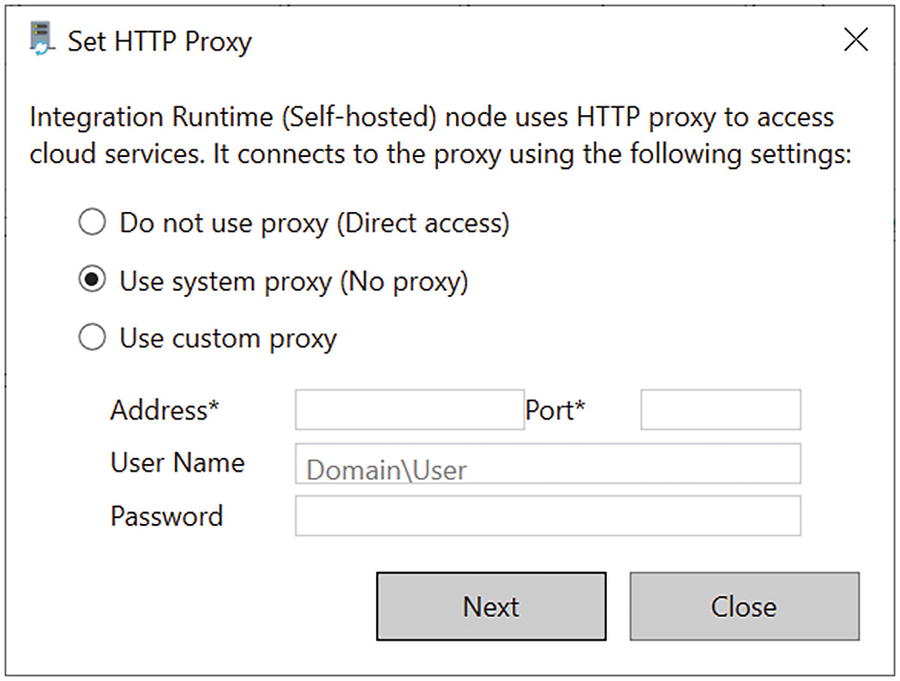

Self-hosted IR configuration manager

Proxy settings in the self-hosted IR

Do not use proxy: The self-hosted integration runtime does not explicitly use any proxy to connect to cloud services.

Use system proxy: The self-hosted integration runtime uses the proxy setting that is configured in diahost.exe.config and diawp.exe.config. If no proxy is configured in diahost.exe.config and diawp.exe.config, the self-hosted integration runtime connects to the cloud service directly without going through a proxy.

Use custom proxy: Configure the HTTP proxy setting to use for the self-hosted integration runtime, instead of using the configurations in diahost.exe.config and diawp.exe.config. The Address and Port fields are required. The User Name and Password fields are optional, depending on your proxy’s authentication setting. All settings are encrypted with Windows DPAPI on the self-hosted integration runtime and stored locally on the machine.

The integration runtime host service restarts automatically after you save the updated proxy settings. Because this is an HTTP proxy, only connections that use HTTP or HTTPS go through it; database connections do not.

Storing Credentials in Azure Key Vault

You can store credentials for data stores and computes in Azure Key Vault. Azure Data Factory retrieves the credentials when executing an activity that uses the data store/compute.

Prerequisites

This feature relies on the Azure Data Factory service identity.

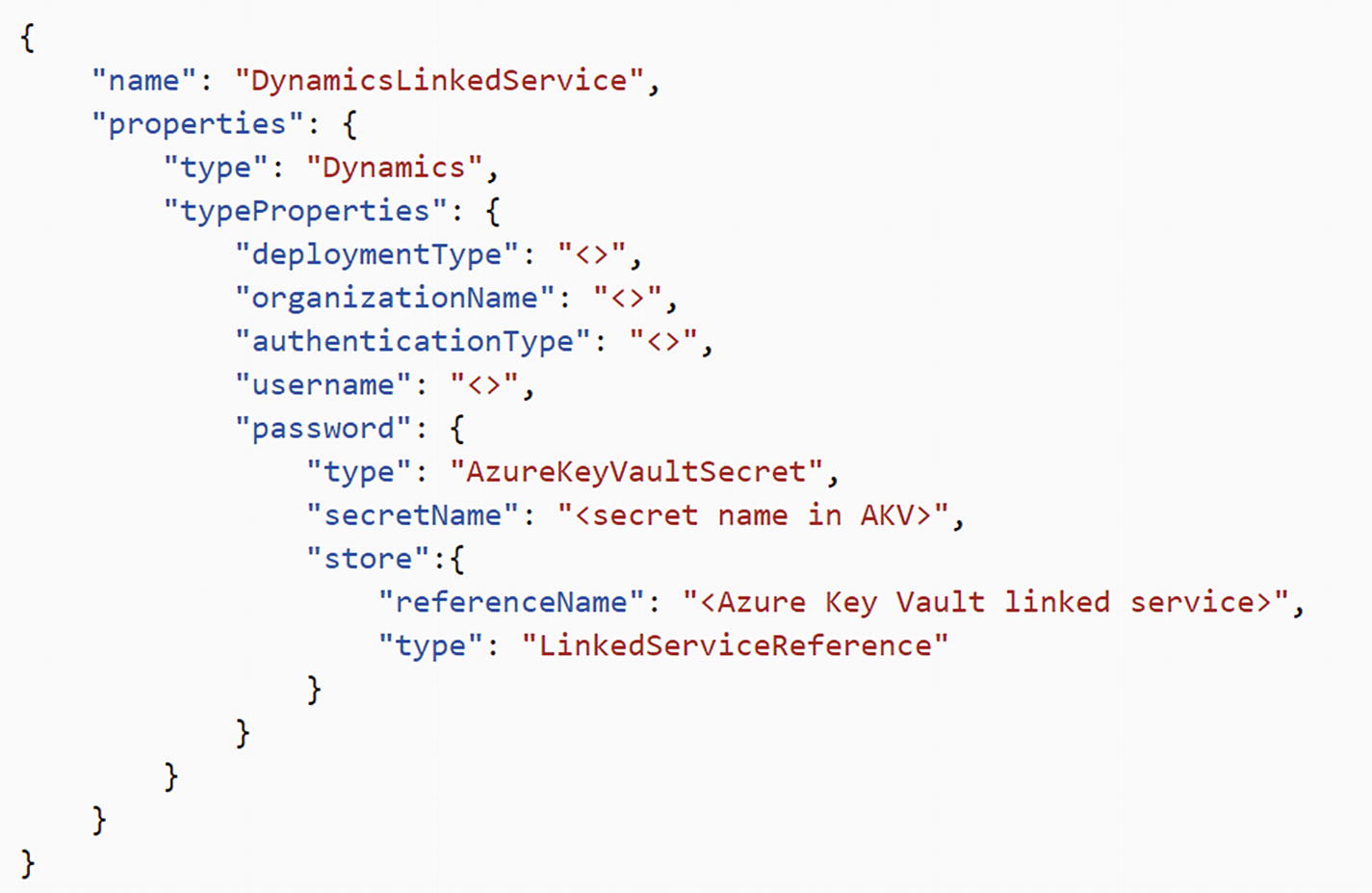

In Azure Key Vault, when you create a secret, use the entire value of the secret property that the ADF linked service asks for (for example, a connection string, password, or service principal key). For the Azure Storage linked service, enter DefaultEndpointsProtocol=http;AccountName=myAccount;AccountKey=myKey; as the AKV secret and then reference it in the connectionString field in ADF. For the Dynamics linked service, enter myPassword as the AKV secret and then reference it in the Password field in ADF. All ADF connectors support AKV.

Steps

- 1.

Retrieve the data factory service identity by copying the value of Service Identity Application ID that is generated with your factory. If you use the ADF authoring UI, the service identity ID will be shown in the Azure Key Vault linked service creation window. You can also retrieve it from the Azure portal; refer to https://docs.microsoft.com/en-us/azure/data-factory/data-factory-service-identity#retrieve-service-identity .

- 2.

Grant the service identity access to your Azure Key Vault. In Key Vault, go to “Access policies,” click “Add new,” and search for this service identity application ID to grant Get permission to in the “Secret permissions” drop-down. This allows this designated factory to access the secret in Key Vault.

- 3.

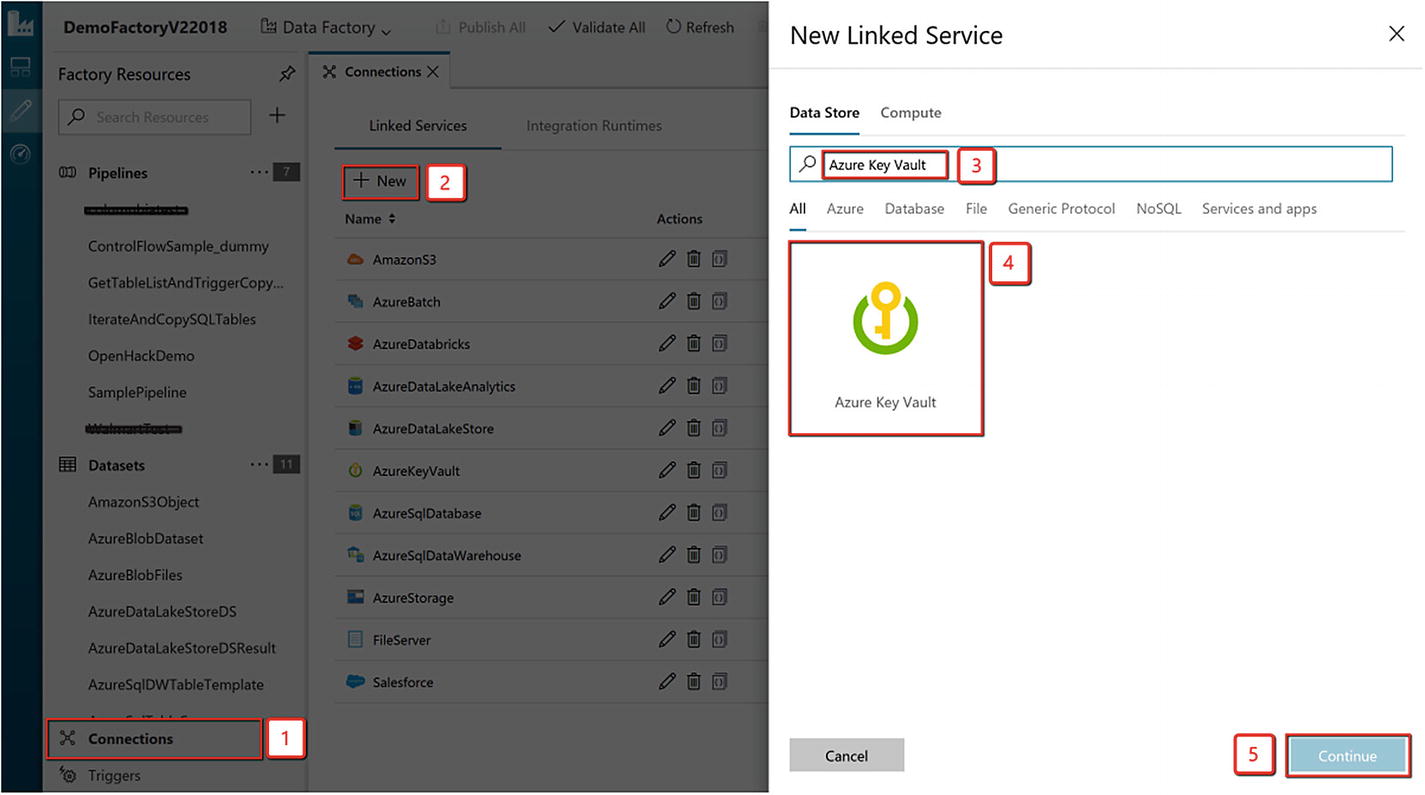

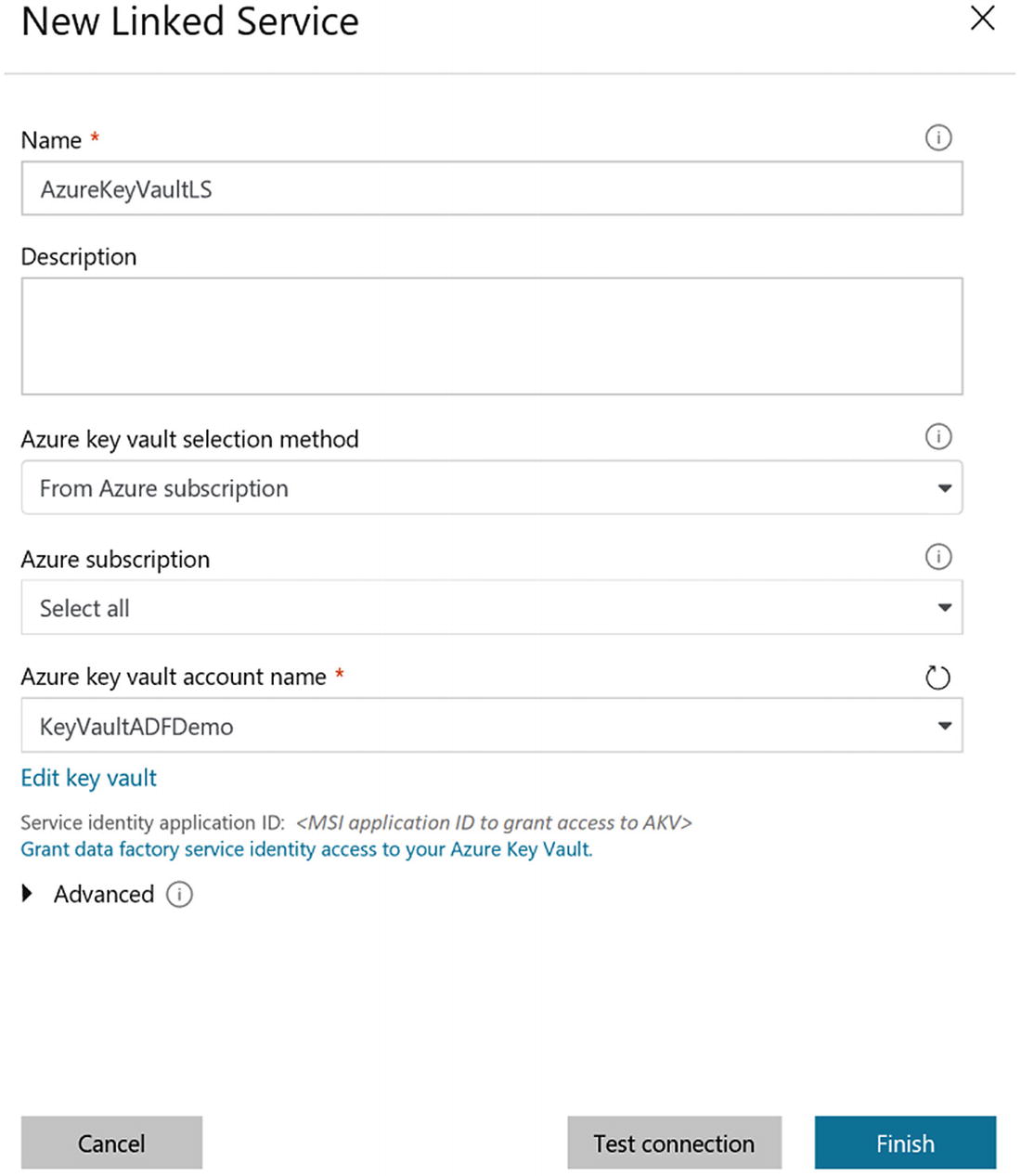

Create a linked service pointing to your Azure Key Vault. Refer to https://docs.microsoft.com/en-us/azure/data-factory/store-credentials-in-key-vault#azure-key-vault-linked-service .

- 4.

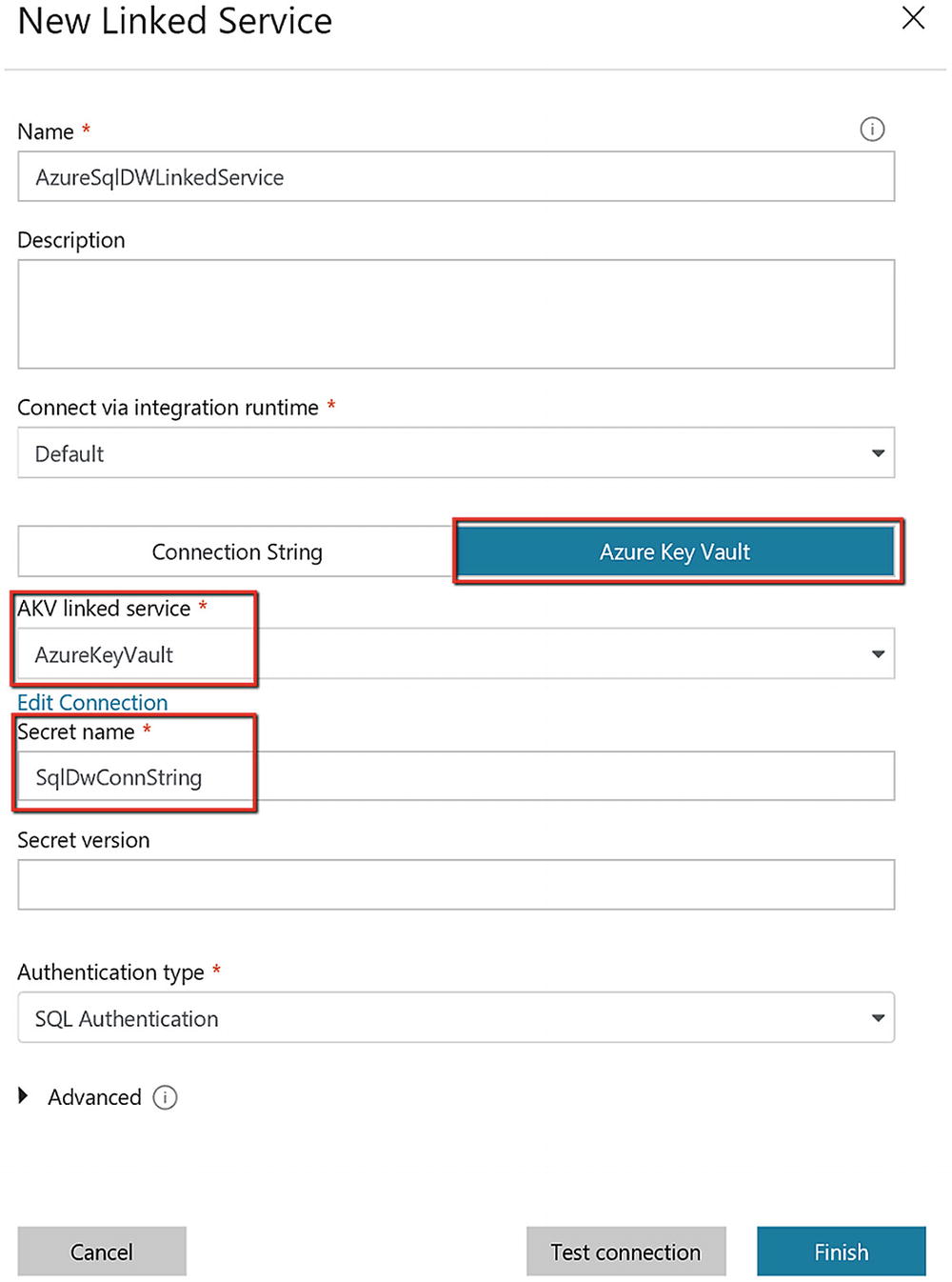

Create a data store linked service, inside which you can reference the corresponding secret stored in Key Vault.

Using the Authoring UI

Creating an Azure Key Vault linked service for connecting to a Key Vault account for pulling the credentials in during execution time

Key Vault linked service properties

Here’s the JSON representation of the AKV linked service:
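A minimal sketch of that definition, built as a Python dictionary and serialized to JSON. The property names (`type` set to `AzureKeyVault`, and `baseUrl` under `typeProperties`) follow the linked service shape described in the Key Vault documentation referenced in step 3; treat them as an assumption and confirm them against the current documentation. The linked service name and the vault name `myKeyVault` are placeholders.

```python
import json


def key_vault_linked_service(name, vault_name):
    """Build an Azure Key Vault linked service definition as a dictionary."""
    return {
        "name": name,
        "properties": {
            "type": "AzureKeyVault",
            "typeProperties": {
                # Placeholder vault URL; substitute your own vault's DNS name.
                "baseUrl": f"https://{vault_name}.vault.azure.net",
            },
        },
    }


definition = key_vault_linked_service("AzureKeyVaultLinkedService", "myKeyVault")
print(json.dumps(definition, indent=4))
```

Note that the definition carries no credential of its own: the factory authenticates to Key Vault with its service identity, which is why step 2 grants that identity the Get permission.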

Reference Secret Stored in Key Vault

Properties

| Property | Description | Required |
|---|---|---|
| type | The type property of the field must be set to AzureKeyVaultSecret. | Yes |
| secretName | The name of the secret in Azure Key Vault. | Yes |
| secretVersion | The version of the secret in Azure Key Vault. If not specified, it always uses the latest version of the secret. If specified, then it sticks to the given version. | No |
| store | Refers to an Azure Key Vault linked service that you use to store the credential. | Yes |

Using the Authoring UI

SQL DW linked service referencing the secret from Azure Key Vault

JSON representation of a linked service that references secrets/passwords from Key Vault using the Azure Key Vault linked service
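As a rough sketch of such a definition, the snippet below builds a SQL Data Warehouse linked service whose connection string is a reference to a Key Vault secret, using the four properties from the table (`type`, `secretName`, `secretVersion`, `store`). The helper name `key_vault_secret`, the linked service names, and the secret name are placeholders. The inner shape of `store` (`referenceName` plus `type` set to `LinkedServiceReference`) and the `AzureSqlDW` type name are assumptions based on the public documentation, so verify them before use.

```python
import json


def key_vault_secret(store_name, secret_name, secret_version=None):
    """Build a reference to a Key Vault secret from the documented properties."""
    reference = {
        "type": "AzureKeyVaultSecret",
        "secretName": secret_name,
        # Points at the Azure Key Vault linked service that holds the secret.
        "store": {"referenceName": store_name, "type": "LinkedServiceReference"},
    }
    # secretVersion is optional; omitting it means "use the latest version".
    if secret_version is not None:
        reference["secretVersion"] = secret_version
    return reference


sql_dw_linked_service = {
    "name": "AzureSqlDWLinkedService",
    "properties": {
        "type": "AzureSqlDW",
        "typeProperties": {
            "connectionString": key_vault_secret(
                "AzureKeyVaultLinkedService", "SqlDwConnectionString"
            ),
        },
    },
}

print(json.dumps(sql_dw_linked_service, indent=4))
```

Leaving out `secretVersion` lets a rotated secret take effect on the next activity run without editing the linked service, whereas pinning a version trades that convenience for predictability.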

Advanced Security with Managed Service Identity

When creating an Azure Data Factory instance, a service identity can be created along with factory creation. The service identity is a managed application registered to Azure Active Directory and represents this specific Azure Data Factory.

You can store the credentials in Azure Key Vault, in which case the Azure Data Factory service identity is used for Azure Key Vault authentication.

Many connectors support authentication with the service identity, including Azure Blob Storage, Azure Data Lake Storage Gen1, Azure SQL Database, and Azure SQL Data Warehouse.

A common problem is how to manage the final keys. For example, even if you store the keys/secrets in Azure Key Vault, you need to create another secret to access Key Vault (let’s say using a service principal in Azure Active Directory).

Managed Service Identity (MSI) helps you build a secret-free ETL pipeline on Azure. The less you expose the secrets/credentials to data engineers/users, the safer they are. This really eases the tough job of credential management for the data engineers. This is one of the coolest features of Azure Data Factory.
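To show what "secret-free" can look like, the sketch below defines an Azure Blob Storage linked service that names only the storage endpoint and relies on the factory's service identity for authentication. The `serviceEndpoint` property and `AzureBlobStorage` type name are assumptions based on the public connector documentation, and `myaccount` is a placeholder. The `find_secret_fields` helper and its list of field names are invented for this example; it simply scans a definition for credential-bearing fields.

```python
import json

# Linked service that relies on the factory's service identity: endpoint only.
blob_linked_service = {
    "name": "AzureBlobStorageLinkedService",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "serviceEndpoint": "https://myaccount.blob.core.windows.net/",
        },
    },
}

# Field names that would carry a credential (illustrative, not exhaustive).
SECRET_FIELDS = {
    "connectionString", "accountKey", "password", "servicePrincipalKey", "sasUri",
}


def find_secret_fields(node, path=""):
    """Return the paths of any credential-bearing fields in a nested definition."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}" if path else key
            if key in SECRET_FIELDS:
                found.append(child)
            found.extend(find_secret_fields(value, child))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            found.extend(find_secret_fields(item, f"{path}[{index}]"))
    return found


print(json.dumps(blob_linked_service, indent=4))
print(find_secret_fields(blob_linked_service))  # []
```

For this to work, the data store itself must grant access to the factory's service identity, for example through a role assignment on the storage account.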

Summary

In any cloud solution, security plays an important role. Often the security teams will have questions about the architecture before they approve the product/service in question to be used. The objective of this chapter was to expose you to all the security requirements when using ADF.

Leaked data store credentials are a common cause of data breaches. With ADF, you can build an end-to-end data pipeline that is password free using technologies like Managed Service Identity. You can establish trust with the ADF MSI in your data stores, and ADF can authenticate itself to access the data, completely removing the need to type passwords into ADF!