SOAP, WSDL, and UDDI form the core foundation for the Web services architecture, but these technologies provide only the most basic functionality. As mentioned in Chapter 3, SOAP gives you a powerful extension mechanism via SOAP headers. You can use SOAP extensions to add advanced middleware functionality, such as security, management, and transactions, to the environment.

When extending SOAP, people can devise their own SOAP header structures and their own intermediaries to process them, but if you'd like to make your extra middleware services interoperable it's helpful to have standards for these SOAP extensions. Standard SOAP extensions ensure cross-vendor and cross-business interoperability.

Security has been a big concern for SOAP users from the beginning, and the industry has made excellent progress in defining security standards for XML and Web services. As mentioned in Chapter 3, the simplest way to make SOAP secure is to encrypt your network traffic using HTTPS and SSL/TLS. But network-based security is rather crude. It doesn't offer very much control at the application level. For fine security control, you must implement security at the application level, something that requires a different set of security standards.

Security is a broad subject, and many groups are working on various aspects of XML and Web services security standards. (Remarkably, there's almost no overlap across the various efforts.) The purpose of these new standards is not to replace existing security standards but instead to provide an XML-based abstraction layer that enables Web services to transparently use a variety of security technologies for confidentiality, integrity, nonrepudiation, authentication, and authorization.[1]

The National Institute of Standards and Technology (NIST) and the IETF manage most of the low-level security standards associated with data encryption and digital signatures. The W3C and OASIS are building XML and Web services security standards based on the core NIST and IETF work. The W3C manages the XML standards associated with encryption, digital signatures, and key management. OASIS manages most of the efforts focused on security information exchange, access control, and SOAP message protection. Figure 5-1 shows the responsibilities of these groups.

Figure 5-1. IETF, W3C, and OASIS share responsibility for developing Web services security standards.

Let's start by talking about data confidentiality and integrity. Confidentiality protects the contents of a message from unauthorized access. Integrity ensures that the contents of the message have not been changed in transit. Encryption is the most common mechanism used to ensure the confidentiality and integrity of data. You can use SSL/TLS to encrypt your messages during transport, but network-level encryption is an all-or-nothing approach. You might need to route a message through a number of intermediaries on its way to its final destination. If you're using network-level encryption, the message will get decrypted at each stop along the way. If you want to make sure that a particular piece of information is kept confidential, you must encrypt it at the application level so that only the intended recipient can see it. XML encryption standards let you encrypt all or part of an XML message.

Encryption uses a string of characters called a key to mathematically encode and decode data. The two most common types of encryption are symmetric encryption (single key) and asymmetric encryption (public key). In symmetric key encryption, a message is encrypted and decrypted using the same key, which must be confidentially exchanged in a separate transmission. In asymmetric key encryption, a message is encrypted and decrypted using two keys (one public, one private). If the message is encrypted with the public key, it can be decrypted only with the private key. Similarly, if the message is encrypted with the private key, it can be decrypted only with the public key.
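The difference between the two key models can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the XOR cipher and the tiny textbook RSA numbers (primes 61 and 53) are chosen only to keep the arithmetic visible, and the function names are hypothetical. Nothing here is suitable for protecting real data.

```python
# Toy illustration only: real systems use vetted cryptographic libraries
# and keys that are thousands of bits long.

def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Symmetric toy cipher: the same key both encrypts and decrypts."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))

# Textbook RSA key pair built from two tiny primes (assumed values).
p, q = 61, 53
n = p * q                    # modulus shared by both keys
phi = (p - 1) * (q - 1)
e = 17                       # public exponent
d = 2753                     # private exponent, chosen so (e * d) % phi == 1

def rsa_apply(value: int, exponent: int) -> int:
    """Apply one half of the key pair to an integer smaller than n."""
    return pow(value, exponent, n)

# Symmetric: one shared key is used in both directions.
plaintext = b"purchase order"
secret = xor_cipher(plaintext, b"shared-key")
recovered = xor_cipher(secret, b"shared-key")

# Asymmetric: what one key of the pair encodes, only the other key decodes.
message = 65
public_then_private = rsa_apply(rsa_apply(message, e), d)
private_then_public = rsa_apply(rsa_apply(message, d), e)

print(recovered == plaintext, public_then_private, private_then_public)
```

The last two values show the property described above: the key pair works in either direction, which is what makes both confidential delivery and digital signatures possible.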

NIST manages most low-level encryption standards. IETF controls the standards for SSL/TLS and HTTPS, which are the technologies used to encrypt messages at the network level. To implement encryption at the SOAP level, we need additional standards designed specifically to work with XML data.

The W3C formed the XML Encryption Activity in January 2001 to develop a standard to support XML encryption. The resulting XML Encryption W3C Recommendation was approved in December 2002. This standard defines a process for encrypting and decrypting all or part of an XML document. It also defines an XML syntax for representing encrypted content in an XML document and an XML syntax for representing the information needed to decrypt the content.
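To make the idea of encrypting part of a document concrete, the following Python sketch replaces one element of a small order document with an EncryptedData element. The namespace URIs and element names come from the XML Encryption and XML Signature Recommendations; the order document, the key name, the helper function, and the placeholder ciphertext are assumptions for illustration. No real encryption is performed here: the bytes simply stand in for the output of a cipher.

```python
import base64
import xml.etree.ElementTree as ET

XENC = "http://www.w3.org/2001/04/xmlenc#"      # XML Encryption namespace
DSIG = "http://www.w3.org/2000/09/xmldsig#"     # XML Signature namespace
ET.register_namespace("xenc", XENC)
ET.register_namespace("ds", DSIG)

def wrap_as_encrypted_data(ciphertext: bytes, key_name: str) -> ET.Element:
    """Build an EncryptedData element that carries already-encrypted bytes."""
    enc = ET.Element(f"{{{XENC}}}EncryptedData", {"Type": XENC + "Element"})
    ET.SubElement(enc, f"{{{XENC}}}EncryptionMethod",
                  {"Algorithm": XENC + "aes128-cbc"})
    # KeyInfo tells the recipient which key is needed to decrypt.
    key_info = ET.SubElement(enc, f"{{{DSIG}}}KeyInfo")
    ET.SubElement(key_info, f"{{{DSIG}}}KeyName").text = key_name
    cipher_data = ET.SubElement(enc, f"{{{XENC}}}CipherData")
    value = ET.SubElement(cipher_data, f"{{{XENC}}}CipherValue")
    value.text = base64.b64encode(ciphertext).decode("ascii")
    return enc

order = ET.fromstring(
    "<Order><Item>widget</Item><CreditCard>4111-0000</CreditCard></Order>"
)
card = order.find("CreditCard")
position = list(order).index(card)

# Placeholder bytes stand in for the output of a real cipher.
placeholder_ciphertext = b"\x00not-really-encrypted\x00"
order.remove(card)
order.insert(position,
             wrap_as_encrypted_data(placeholder_ciphertext, "recipient-key"))

print(ET.tostring(order, encoding="unicode"))
```

The Item element stays readable to every intermediary, while the card details travel as opaque cipher data that only the holder of the named key can recover.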

A digital signature uses encryption technology to support data integrity and nonrepudiation. A digital signature provides proof that a particular person (the signatory) sent a piece of information (the signed data). Digital signatures rely on public key cryptography. You create a digital signature by using your private key to apply a signing encryption algorithm to the data being signed. The signing algorithm does not modify the data, but it does produce a unique value, which is the digital signature. The receiver verifies the signature by applying a verification encryption algorithm to the same data, but this time using the signatory's public key. The generated value should match the digital signature. If the signed data have been tampered with in any way during transport, the signatures won't match. Because only the signatory has access to the private key, the receiver is assured that the signed data did in fact come from that person and that the data have not been altered in any way.
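The sign-and-verify cycle can be sketched with toy RSA arithmetic in Python. The key pair below is built from two Mersenne primes purely to keep the example short, and the function names are hypothetical; production signatures rely on far larger keys, padding schemes, and a vetted cryptographic library.

```python
import hashlib

# Toy key pair (assumed values): two Mersenne primes keep the arithmetic short.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q
E = 65537                                   # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))           # private exponent (Python 3.8+)

def digest(data: bytes) -> int:
    """Reduce the data to a fixed-size number that stands in for it."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N

def sign(data: bytes, private_exponent: int) -> int:
    """The signatory applies the private key; the data itself is not modified."""
    return pow(digest(data), private_exponent, N)

def verify(data: bytes, signature: int, public_exponent: int) -> bool:
    """The receiver applies the public key and compares the two values."""
    return pow(signature, public_exponent, N) == digest(data)

order = b"<Order><Total>5000</Total></Order>"
tampered = b"<Order><Total>9000</Total></Order>"
signature = sign(order, D)

print(verify(order, signature, E))      # data arrived unchanged
print(verify(tampered, signature, E))   # data altered in transit
```

Only the holder of the private exponent can produce a value that verifies under the public exponent, which is the basis for the nonrepudiation claim.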

As with encryption, NIST and IETF are responsible for the low-level digital signature standards. The issue at hand is how to make digital signatures work with XML data and how to represent a digital signature in XML. An XML document often contains a lot of white space (blanks and line feeds) that has no semantic meaning. Because a signature becomes invalid if even one character of the signed data changes, it's necessary to transform an XML document into canonical form (which normalizes such insignificant differences) before signing the data.[2] Hence XML signatures require a little extra processing. The W3C XML Digital Signatures Activity has been working with the IETF xmldsig working group to define the technologies needed to sign XML data. These two groups jointly produced the W3C XML Signature Recommendations in February 2002. These standards define an XML syntax to represent signed data in XML and a set of processing instructions to canonicalize XML, sign data, and interpret signatures.
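A short Python sketch shows why canonicalization matters before signing. It assumes Python 3.8 or later, whose standard library function `xml.etree.ElementTree.canonicalize` implements the later Canonical XML 2.0 rules rather than the version referenced by the 2002 Recommendations; the two sample documents are made up for illustration.

```python
import hashlib
import xml.etree.ElementTree as ET

# Two serializations of the same logical element: they differ only in
# attribute order, quoting style, and white space inside the tags.
doc_a = '<Order  id="42"   currency="USD"><Total>5000</Total></Order>'
doc_b = "<Order currency='USD' id='42'><Total>5000</Total></Order >"

def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

# The raw characters differ, so digests of the raw text differ too.
raw_match = sha256_hex(doc_a) == sha256_hex(doc_b)

# After canonicalization both serializations are character-for-character equal.
canon_a = ET.canonicalize(doc_a)
canon_b = ET.canonicalize(doc_b)
canon_match = sha256_hex(canon_a) == sha256_hex(canon_b)

print(raw_match, canon_match)
```

A signature computed over the canonical form therefore survives harmless reformatting by intermediaries, while any change to the actual content still breaks it.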

Now that you have an inkling of the way digital signatures work, you're probably wondering how an application might go about acquiring encryption keys and creating and verifying a digital signature. Every public key infrastructure (PKI) system provides APIs that you can use to manage keys and process signatures. Unfortunately, these APIs are fairly difficult to use, and they require you to deploy special PKI client code on each system that wants to process signatures.

You probably don't want to write key management and signature processing code in every one of your applications. It is much more convenient to encapsulate this code into a centralized, reusable trust service that can perform this complex processing on behalf of your applications. A trust service is a Web service that provides security functions for your applications. Any client or application can access these services using simple SOAP requests. If you use trust services, you no longer need to deploy PKI client software on all your systems.

The W3C XML Key Management Activity, which was formed in December 2001, is developing specifications for a set of trust services that can manage the registration and distribution of public keys. These Web services are described using WSDL and are accessed using SOAP. They completely hide the complexity of using PKI APIs from your applications. The group published the first Working Draft of the XML Key Management Specification (XKMS) in March 2002. This draft is based on the XKMS specification developed by Microsoft, VeriSign, and webMethods. The authors and seven other companies submitted the specification in March 2001.

The OASIS Digital Signature Services (DSS) Technical Committee, which was formed in December 2002, is developing specifications for a set of trust services that can create and verify XML signatures. These Web services will also be described using WSDL and accessed using SOAP, and they hide the complexities of the PKI signature processing APIs.

Another important part of security is authentication and authorization. Authentication verifies the identity of a user or application. Authorization determines whether or not a user has permission to perform a particular action. These two security functions protect your systems from unauthorized access.

There are many ways to accomplish authentication. As with encryption, you can rely on the network to manage authentication. HTTP supports two mechanisms for passing a user ID and password with the message; one mechanism uses clear text, and the other uses encryption. But again, as with network-based encryption, HTTP-based authentication is rather crude. If the request passes through multiple intermediaries or if the request accesses multiple services, you may lose track of the identity of the original caller.

A more flexible and reliable way to manage authentication is to set up some type of authentication authority. A user goes to the authentication authority, provides some proof of identity, and in return receives a security token that represents an assertion by the authority of the user's identity. Examples of an authentication authority include a directory service, a certificate authority, or a single sign-on service. Users prove their identity by passing an authentication challenge (user ID and password, or biometric) or via their private key. Security tokens include X.509 certificates, Kerberos tickets, and XML tokens. You can use these security tokens to pass authentication information in subsequent SOAP requests.

A number of groups at OASIS are working on XML-based authentication and authorization standards. These groups are defining XML vocabularies that allow you to express and exchange security information using XML.

The OASIS XML Common Biometric Format (XCBF) Technical Committee has defined an XML vocabulary for representing and exchanging biometric information in XML. You can use this biometric information to create an XCBF security token, which you can use for authentication and identification purposes. XCBF was approved as an OASIS Committee Specification in January 2003.

The OASIS Security Assertions Markup Language (SAML) standard defines an XML syntax for representing security assertions. OASIS formed the XML-Based Security Services Technical Committee (SSTC) in December 2000, and the specification was approved as a formal OASIS Standard in November 2002. SAML supports three types of security assertions:

Authentication. An authentication assertion states that a particular authentication authority has authenticated the subject of the assertion (either a human or a digital entity such as a computer or application) at a particular time, and this assertion is valid for a specified period of time.

Authorization. An authorization assertion states that a particular authorization authority has granted or denied permission for the subject of the assertion to access a particular resource within a specified period of time.

Attributes. An attribute assertion provides qualifying information about either an authentication or an authorization assertion. For example, an authorization assertion might say that Joe Cool is authorized to submit purchase orders to Acme Parts, and an attribute assertion indicates that his spending limit is $5000.
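The three assertion types can be pictured as a small data model. The Python sketch below is not the SAML schema: the class and field names are hypothetical and only mirror the properties described above (an issuing authority, a subject, and a validity period), using the Joe Cool example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Assertion:
    issuer: str                 # the authority making the statement
    subject: str                # the human or digital entity described
    not_before: datetime        # start of the validity period
    not_on_or_after: datetime   # end of the validity period

    def is_valid_at(self, moment: datetime) -> bool:
        return self.not_before <= moment < self.not_on_or_after

@dataclass
class AuthenticationAssertion(Assertion):
    authenticated_at: datetime  # when the authority authenticated the subject

@dataclass
class AuthorizationAssertion(Assertion):
    resource: str
    action: str
    permitted: bool             # permission granted or denied

@dataclass
class AttributeAssertion(Assertion):
    name: str
    value: str

now = datetime(2003, 3, 1, 9, 0)
window = dict(not_before=now, not_on_or_after=now + timedelta(hours=8))

may_submit = AuthorizationAssertion(
    issuer="acme-authz", subject="Joe Cool",
    resource="Acme Parts purchase orders", action="submit",
    permitted=True, **window)
limit = AttributeAssertion(
    issuer="acme-authz", subject="Joe Cool",
    name="spending-limit-usd", value="5000", **window)

print(may_submit.is_valid_at(now + timedelta(hours=1)))   # inside the window
print(may_submit.is_valid_at(now + timedelta(hours=9)))   # after it expires
```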

SAML defines a set of Web APIs that you use to obtain these assertions from a trust service that makes authentication or authorization decisions. SAML doesn't specify which authorities can be used to obtain these assertions. In fact, SAML is designed to support any type of security authority. After the authority has made its assertion, SAML gives you the means to exchange that security information with other systems.

SAML also provides a mechanism to support an XML-based single sign-on service. A single sign-on service allows a user to log in once with a recognized security authority and then use the returned login credentials to access multiple resources for some predefined period of time. You can sign on to a SAML authentication authority and use the returned SAML assertion as an authentication token on subsequent SOAP requests. SAML allows you to authenticate yourself once and access many different Web services within your security domain within the allotted timeframe.

The Liberty Alliance Project is developing a set of standards that allow you to use a SAML authentication assertion across multiple security domains. The Liberty Alliance Project is a consortium of more than 150 technology and consumer organizations working to develop open specifications for federated identity. The founding members include American Express, AOL, Bell Canada, France Telecom, GM, HP, MasterCard, Nokia, NTT DoCoMo, Openwave, RSA Security, Sony, Sun, and Vodafone.

The Liberty federated identity infrastructure allows you to create a circle of trust with your business partners. Although each member of the circle maintains and protects unique user account information, you can use a single federated identity credential as proof of authentication with all members of the circle. Each member maps the credential to the private user identity known by that system.

For example, let's say that you would like to make some travel reservations, and you'd like to pay for your airline tickets using frequent flyer miles. If you're like me, you have a decent stash of frequent flyer miles with most of the airlines, so you have a choice of carriers. Wouldn't it be nice if you could use an online travel agent to see what your options are and then book the flight directly? Unfortunately, as of this writing you need to book these tickets directly through the airline. Your frequent flyer information is owned and managed by each airline. The airline requires that you perform a login before you can access your frequent flyer account to purchase tickets. And no doubt you have a different user ID and password with each airline. Currently, there is no way for the online travel agent to log in with the airline on your behalf. The identity you have with the travel agent is different from the identities you have with the airlines, and the online travel agent doesn't know all the user IDs and passwords you use to authenticate yourself with the airlines (and you really don't want the agent to know that information).

With Liberty, all these travel-related companies can create a circle of trust to support federated identification. A Liberty federated identity is a single identity that can map to multiple account identities within the circle of trust. When you log in to a Liberty single sign-on service, you obtain a SAML authentication assertion that represents your federated identity credential. As you interact with each of the circle members, this credential maps to the specific account ID that you maintain with each company.

This means that you will be able to use an online travel agent to book a flight using your frequent flyer miles. You'll be able to log in to your travel agent site using your federated identity. The travel agent site can then use that identity to conduct transactions on your behalf (with your permission) with the various airlines. All the while, Liberty doesn't permit the partners to share your personal information, so it protects your privacy.

The Liberty Alliance Project is a private consortium that operates like a formal standards body. The Liberty V1.1 specifications were published in January 2003. I expect the next version of the specifications to be released in the first half of 2003. Liberty-based products are or soon will be available from a number of directory and identity service companies, including Cavio, Communicator, Entrust, NeuStar, Novell, Oblix, OneName, Phaos, RSA Security, Sun, and WaveSet.

After you prove your identity to a system, it can determine whether you're authorized to access or use a particular resource. OASIS has two technical committees working on specifications for authorization. The Extensible Access Control Markup Language (XACML) takes a policy-centric approach to authorization, which is typically used to control access to application functions and enterprise data. XACML defines a language for defining access policies and rules. These policies may apply to multiple resources or services. A user must obtain permission from an authorization authority to gain access to these resources and services. At run time the authorization authority evaluates the policies to determine whether access is permitted. The results of the authorization decision are formatted into a SAML authorization assertion. XACML was approved as a formal OASIS Standard in February 2003.
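The policy-centric approach can be illustrated with a minimal rule evaluator in Python. This is a sketch, not XACML syntax: the Rule class, the request fields, and the sample policy are hypothetical, and the evaluator uses a simple first-applicable strategy in which the first matching rule decides the outcome.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Request = Dict[str, str]   # e.g. {"role": ..., "resource": ..., "action": ...}

@dataclass
class Rule:
    effect: str                          # "Permit" or "Deny"
    applies: Callable[[Request], bool]   # does this rule match the request?

def evaluate(policy: List[Rule], request: Request) -> str:
    """Return the effect of the first matching rule (first-applicable)."""
    for rule in policy:
        if rule.applies(request):
            return rule.effect
    return "NotApplicable"

# One policy covers a whole class of requests rather than a single resource.
purchasing_policy = [
    Rule("Deny", lambda r: r["resource"] == "purchase-orders"
         and r["role"] == "contractor"),
    Rule("Permit", lambda r: r["resource"] == "purchase-orders"
         and r["action"] == "submit"),
]

buyer = {"role": "buyer", "resource": "purchase-orders", "action": "submit"}
contractor = {"role": "contractor", "resource": "purchase-orders", "action": "submit"}
payroll = {"role": "buyer", "resource": "payroll", "action": "read"}

print(evaluate(purchasing_policy, buyer))
print(evaluate(purchasing_policy, contractor))
print(evaluate(purchasing_policy, payroll))
```

The decision string is what an authorization authority would then package into an authorization assertion for the requesting system.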

The Extensible Rights Markup Language (XrML) takes a content-centric approach to access control, which is typically used to control access to content and multimedia. It uses a digital rights methodology. It defines an XML language for specifying access rights and permissions, permitting you to say who can view a particular resource and under what conditions. Access rights must be defined for each protected resource. The Rights Language Technical Committee was formed in May 2002. XrML is based on a specification submitted by ContentGuard, which maintains rights to the intellectual property.

We've talked a lot about basic security technologies and discussed how to represent security information in XML, but we have yet to mention how to use these technologies with Web services. This is where the OASIS Web Services Security (WSS) effort comes into play. IBM, Microsoft, and VeriSign developed the WS-Security specification and submitted it to OASIS in July 2002. The OASIS WSS technical committee was formed in September 2002 to standardize this specification and to explore future requirements for securing Web services.

WS-Security, a SOAP extension specification, defines a standard way to represent security information in a SOAP message. WS-Security allows you to pass security tokens for authentication and authorization in a SOAP header. It provides a mechanism that allows you to digitally sign all or part of a SOAP message and to pass the signature in a SOAP header. It also provides a mechanism that allows you to encrypt all or part of a SOAP message. And it provides a way to pass information in a SOAP header about the encryption keys needed to decrypt the message or verify a digital signature.
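As a rough picture of where this information lives, the Python sketch below adds a Security header block containing a simple username token to a SOAP 1.1 envelope. The WS-Security namespace URI shown is the one from the later OASIS 1.0 standard, which is an assumption relative to the working drafts discussed here; the helper function, the user name, and the SubmitOrder body are made up for illustration.

```python
import xml.etree.ElementTree as ET

SOAP = "http://schemas.xmlsoap.org/soap/envelope/"   # SOAP 1.1 envelope
# Namespace from the later OASIS WS-Security 1.0 standard (assumption);
# earlier working drafts used different namespace URIs.
WSSE = ("http://docs.oasis-open.org/wss/2004/01/"
        "oasis-200401-wss-wssecurity-secext-1.0.xsd")
ET.register_namespace("soap", SOAP)
ET.register_namespace("wsse", WSSE)

def add_username_token(envelope: ET.Element, username: str) -> ET.Element:
    """Place a Security block with a UsernameToken in the SOAP header."""
    header = envelope.find(f"{{{SOAP}}}Header")
    if header is None:
        header = ET.Element(f"{{{SOAP}}}Header")
        envelope.insert(0, header)       # the Header must precede the Body
    security = ET.SubElement(header, f"{{{WSSE}}}Security")
    token = ET.SubElement(security, f"{{{WSSE}}}UsernameToken")
    ET.SubElement(token, f"{{{WSSE}}}Username").text = username
    return security

envelope = ET.Element(f"{{{SOAP}}}Envelope")
body = ET.SubElement(envelope, f"{{{SOAP}}}Body")
ET.SubElement(body, "SubmitOrder").text = "widget"

add_username_token(envelope, "joe.cool")
print(ET.tostring(envelope, encoding="unicode"))
```

Signatures, encrypted keys, and other token types would sit in the same Security block, which is what lets a header processor handle them without touching the application payload.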

WSS has published a series of working draft specifications. As of this writing, the most recent draft was published in March 2003. In addition the team has defined bindings for a variety of security tokens, including a simple username, SAML, XCBF, XrML, X.509 certificates, and Kerberos tickets.

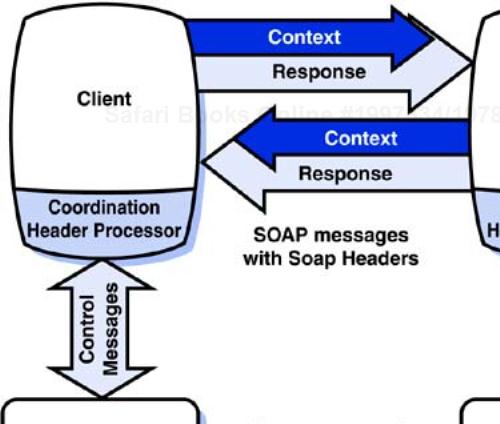

Pulling all these standards together, you have very fine-grained security control over your SOAP messages. Figure 5-2 shows how you can use SOAP header processors and a variety of trust services to simplify SOAP security. SOAP header processors automatically manage the security process on behalf of both the client and service applications. You should have no problem with interoperability because the format of the WS-Security security header is standard.

Figure 5-2. SOAP header processors automatically manage security on behalf of the client and service applications. The SOAP header processors use trust services to manage digital signatures, encryption keys, and authentication and authorization decisions.

In this example, the client first calls a SAML-compliant single sign-on service to log in and obtain a token containing a SAML authentication assertion. The SOAP header processor creates a WS-Security SOAP header and places the SAML token into the header. It then calls a DSS service to sign the message payload. It places the resulting digital signature and information about the user's public key in the SOAP header and sends the message on its way.

At the receiving end, the SOAP header processor uses the key information to obtain the client's public key from an XKMS service. The SOAP header processor then calls the DSS service, which uses the public key to verify the signature. The SOAP header processor also calls an entitlement service to verify the client's access rights to the service based on the SAML token.
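The sending and receiving steps can be sketched end to end in Python with in-process stand-ins for the trust services. Everything here is hypothetical: the class and method names, the key name, the toy RSA parameters, and the choice to let the signing service hold the private key are assumptions made only to show the order of the calls. Real services would be reached through SOAP requests.

```python
import hashlib

# Toy RSA parameters (assumed values, not secure).
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))            # Python 3.8+

def _digest(payload: str) -> int:
    return int.from_bytes(hashlib.sha256(payload.encode()).digest(), "big") % N

class SignOnService:                          # stand-in for SAML single sign-on
    def login(self, user: str, password: str) -> dict:
        return {"subject": user, "issuer": "sso.example"}  # password check omitted

class KeyService:                             # stand-in for an XKMS service
    public_keys = {"joe-key": E}
    def locate(self, key_name: str) -> int:
        return self.public_keys[key_name]

class SignatureService:                       # stand-in for a DSS service
    private_keys = {"joe-key": D}
    def sign(self, payload: str, key_name: str) -> int:
        return pow(_digest(payload), self.private_keys[key_name], N)
    def verify(self, payload: str, signature: int, public_key: int) -> bool:
        return pow(signature, public_key, N) == _digest(payload)

class EntitlementService:                     # stand-in for an authorization service
    allowed = {("joe", "SubmitOrder")}
    def permits(self, token: dict, operation: str) -> bool:
        return (token["subject"], operation) in self.allowed

def send(payload: str, operation: str) -> dict:
    """Client-side header processor: attach the token and the signature."""
    token = SignOnService().login("joe", "secret")
    signature = SignatureService().sign(payload, "joe-key")
    header = {"token": token, "signature": signature, "key_name": "joe-key"}
    return {"header": header, "operation": operation, "body": payload}

def receive(message: dict) -> bool:
    """Service-side header processor: check the signature, then access rights."""
    header = message["header"]
    public_key = KeyService().locate(header["key_name"])
    signed_ok = SignatureService().verify(
        message["body"], header["signature"], public_key)
    return signed_ok and EntitlementService().permits(
        header["token"], message["operation"])

message = send("<Order>widget</Order>", "SubmitOrder")
print(receive(message))                       # accepted
message["body"] = "<Order>1000 widgets</Order>"
print(receive(message))                       # rejected: body changed after signing
```

Neither the client application nor the service implementation appears in this flow, which is the point: the header processors and trust services carry the security work.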

Distributed application systems impose serious management challenges. If one application component goes down, it may impact a number of application systems. If an application system isn't working properly, how do you identify the one component that's actually causing the problem? After you've identified the problem, what can you do to fix it and to avoid it in the future?

There are two distinct management activities. One activity focuses on reporting, and the other focuses on control. The purpose of the reporting activity is to help you monitor systems to understand what is going on. The purpose of controlling activities is to configure systems and solve any problems that might arise. By and large it's easy to develop standards for management reporting. It's much more difficult to convince vendors to agree on standards for control.

Most people use a systems management framework, such as BMC Patrol, CA Unicenter, HP OpenView, or IBM Tivoli, to monitor their production systems. These management frameworks consist of a set of monitoring agents and a central management coordinator. The agents collect information about the various nodes, devices, networks, and applications throughout your system, and they send the information to the central manager, which aggregates the information into a management console.

This management console gives system administrators a global view of the situation. When systems don't perform within specified parameters, the central manager issues an alert, highlighting the problem on the console. The system administrators can then issue commands to the various agents to adjust the system. Each agent interacts with the component that it's managing through some type of instrumentation API. This instrumentation API lets the agent observe what's going on and potentially adjust the object's runtime configuration. A separate agent is required for each type of managed component.

When these system management frameworks first appeared, each system used proprietary communication protocols. These proprietary protocols made it impossible for different frameworks to communicate, and therefore you could use only the agents supplied by the framework provider. If the framework provider didn't supply an agent for the device or application you needed, you couldn't manage that component through the framework. Over the past decade, a number of organizations have come together to develop management standards that would enable interoperability across multivendor agents and frameworks.

To enable an open management framework, a number of standards are required. You need the following:

A standard protocol that the agents use to report information to the central manager

A standard format for the management information

A standard command API that the central console can use to give instructions to the agents to manage a particular resource

The first and most critical standard is IETF Simple Network Management Protocol (SNMP). SNMP is the standard protocol used by agents to report information to the central manager. SNMP was first developed in 1988 and standardized in 1990. Almost everyone has adopted SNMP for all reporting activities. But SNMP addresses only the first requirement.

The Distributed Management Task Force (DMTF) is trying to address the other two requirements. DMTF develops standards that build on SNMP to provide a vendor-neutral, Internet-based management infrastructure for both reporting and control. The DMTF Common Information Model (CIM) defines a common management data model that is independent of any particular management framework. This data model defines the structure and format of management information.

CIM also defines a standard set of control commands called CIM Operations, which are used to give instructions to the management agents. The CIM specifications enable interoperability among multiple management frameworks so that you are no longer forced to buy your entire management solution from a single vendor. When using CIM, you can obtain specialized management agents from third parties to monitor components of your environment that aren't supported by the core framework.

The CIM specifications have been available since 1998. The data model is specified in Unified Modeling Language (UML), a standard, object-oriented modeling language defined by OMG. The DMTF Web-Based Enterprise Management (WBEM) initiative extends CIM one step further by bringing it more in line with the Web. The xmlCIM specification defines CIM in terms of XML. The CIM Operations over HTTP specification defines a transport mechanism that allows you to send CIM commands to the agents over HTTP.

Although the interoperability characteristics of CIM seem quite appealing, vendors of system management frameworks have been slow to fully adopt CIM and WBEM. Of course if they did, they would relinquish the lock-in features of their frameworks.

Although WBEM is based on XML, it does not define instrumentation APIs for Web services. More to the point, the CIM data model doesn't really define the right type of management elements needed to represent distributed application components and the relationships that might exist among multiple components that collaborate to complete a transaction.

Most management systems focus on monitoring and managing individual network elements, such as nodes, routers, and switches. More recently these systems have also been extended to let you manage software servers such as database systems, Web servers, and application servers. But very few management systems understand how to manage individual application components running within these software servers. Nor do they understand the relationships and dependencies that exist among software components running in different environments. A management framework can manage all the individual systems that host the various components of a distributed application, but it really doesn't understand how to view a distributed application system as a single entity.

For the moment you must rely on separate management tools supplied by the application server vendors. These tools allow you to manage the individual application components running within the server. But in most circumstances, even these tools don't give you a holistic view of the composite application system. To facilitate runtime management of Web services, a more comprehensive model is required.

One promising approach is described in a specification developed by HP and webMethods. The Open Management Interface (OMI) takes a different track from that of WBEM—one that attempts to enable application management rather than element management. OMI does not build on CIM. Instead it defines a new management data model and a new set of management interfaces. The OMI data model represents individual application elements and the relationships among them. The OMI data model recognizes dependencies among multiple resources, and hence it gives you a holistic view of a composite application system.
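The value of modeling relationships can be shown with a small dependency graph in Python. This sketch does not use the OMI data model or its interfaces; the resource names and the `affected_by` helper are hypothetical and only illustrate how recorded dependencies let a manager trace one failed component to the composite applications it affects.

```python
from typing import Dict, List, Set

# Hypothetical dependency model: each managed resource lists what it relies on.
depends_on: Dict[str, List[str]] = {
    "order-entry-app": ["pricing-service", "inventory-service"],
    "pricing-service": ["catalog-db"],
    "inventory-service": ["warehouse-db"],
    "reporting-app": ["catalog-db"],
}

def affected_by(failed: str) -> Set[str]:
    """Return every resource that directly or indirectly relies on `failed`."""
    affected: Set[str] = set()
    frontier = [failed]
    while frontier:
        current = frontier.pop()
        for resource, needs in depends_on.items():
            if current in needs and resource not in affected:
                affected.add(resource)
                frontier.append(resource)
    return affected

print(sorted(affected_by("catalog-db")))
print(sorted(affected_by("warehouse-db")))
```

An element-oriented manager would report only that a database is down; with the dependency information it can also report which applications are degraded as a result.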

OMI defines a set of Web APIs, implemented using SOAP and WSDL, for managing resources such as Web services. In this model, every managed resource supports a management API. An OMI-compliant management framework uses this API to manage the resource. In other words, OMI uses Web services to manage Web services. Although OMI looks promising, as of this writing none of the big vendors of system management frameworks has released products that use it. My guess is that they are waiting for some kind of formal endorsement from a standards group.

At the time of this writing, the most interesting standardization effort is the OASIS Web Services Distributed Management (WSDM) Technical Committee. This group was formed in February 2003. Its charter is to define a management framework that uses Web services architecture and technology to monitor and control distributed resources, such as Web services. This group will leverage a variety of standards and specifications, including SNMP, CIM, WBEM, and OMI. The goal is to produce a specification by January 2004.

One area that has been the focus of a lot of Web services hype is Web service assembly and business process automation. Quite a few people have painted rosy pictures of users dynamically assembling Web services into complex business transactions. I think dynamic assembly of services is still far off in the future, but at least we're working in the right direction.

Business process automation has always been a popular effort. That's because multistep processes are notorious as a sinkhole of inefficiency. If you can find a way to automate these processes, you have the opportunity to reduce costs, improve quality, and increase efficiency. Application integration is at the heart of any business process automation project. Given that Web services make it easier to integrate applications, it's only natural that people would try to use this technology to implement business process automation.

Many view it as a critical priority to develop standards to support Web service assembly. Unfortunately there is very little consensus in the industry as to what approach to take, how much to standardize, or even what to call it. Is it orchestration or choreography? Are the two terms interchangeable, or do they have different meanings? Does Web services assembly require a new loosely coupled distributed transaction protocol?

One of the most challenging aspects of business process automation is the management of long-running, loosely coupled, asynchronous transactions. Traditional transaction middleware assumes that communications are synchronous and tightly coupled. Given that multistep business processes may span minutes, days, or even weeks, traditional transaction middleware may not be applicable to the job.

The OASIS Business Transactions Technical Committee has developed the Business Transaction Protocol (BTP) specification. BTP defines an XML-based transaction coordination system that supports asynchronous, loosely coupled, long-term transactions. BTP supports the concept of atomic transactions (in which all tasks within a transaction must complete successfully or else the entire transaction must be reset) and cohesive transactions (in which a certain set of tasks within a transaction must complete successfully or else the entire transaction must be reset).
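The difference between the two transaction styles comes down to which task results decide the outcome. The Python sketch below is not the BTP protocol: the function names, the "confirm" and "cancel" labels, and the trip-booking tasks are assumptions used only to contrast the two rules.

```python
from typing import Dict, Set

def atomic_outcome(results: Dict[str, bool]) -> str:
    """Atomic: every task must succeed, otherwise everything is reset."""
    return "confirm" if all(results.values()) else "cancel"

def cohesive_outcome(results: Dict[str, bool], required: Set[str]) -> str:
    """Cohesive: only the chosen subset of tasks must succeed."""
    return "confirm" if all(results[task] for task in required) else "cancel"

# A trip booking in which the rental car could not be reserved.
results = {"flight": True, "hotel": True, "car": False}

print(atomic_outcome(results))
print(cohesive_outcome(results, required={"flight", "hotel"}))
print(cohesive_outcome(results, required={"flight", "car"}))
```

With the atomic rule the failed car reservation cancels the whole trip; with the cohesive rule the trip goes ahead as long as the tasks that the application considers essential have succeeded.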

BTP can coordinate transactions for Web services based on SOAP, ebXML, or any other XML protocol. The BTP specification was approved as an OASIS Committee Specification in May 2002 but thus far has not won the endorsement of strategic vendors such as IBM and Microsoft. As a result it has received little attention.

Many people in the industry think that a new loosely coupled distributed transaction coordination system is not really required—at least not for the majority of applications. Most applications can get by using existing transaction services, such as those supplied with database systems, application servers, and workflow engines. Rather than build a new transaction coordination system, the goal is to provide an XML-based abstraction layer that enables Web services to transparently use existing transaction systems, and to enable interoperability across disparate environments. To this end BEA Systems, IBM, and Microsoft published two specifications called WS-Coordination and WS-Transaction in August 2002.

WS-Coordination defines a coordination framework that allows multiple participants to reach agreement on the outcome of a distributed activity. It defines a set of Web APIs and protocols that allow a Web service to create a coordinated activity and to propagate information about that activity, known as context, to other Web services.

The coordination framework assumes that there is some type of transaction management system that will coordinate the activity. For example, you could use a J2EE-based transaction manager or a BTP-compliant transaction coordinator. WS-Coordination defines a set of standard control APIs for conversing with the transaction management system. Normally you would use a set of SOAP header processors to manage the distributed activity on behalf of the application programs.

Figure 5-3 shows an overview of the framework. In this diagram, the client initiates the activity. The client-side coordination header processor uses the WS-Coordination APIs to create a coordinated activity and to obtain context information. The header processor takes the context and puts it into a SOAP header in the request message. The receiving header processor on the server side uses the context information to enroll the service as a participant in the coordinated activity. The transaction management system uses its own transaction protocol to coordinate the activity.

Figure 5-3. The coordination framework works by propagating context between participants in the distributed activity.
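The flow in Figure 5-3 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the WS-Coordination API: the `Coordinator` class, the `Context` and `Identifier` element names, and the `urn:example:coordination` namespace are all hypothetical stand-ins. Only the SOAP 1.1 envelope namespace is real.

```python
import uuid
import xml.etree.ElementTree as ET

# SOAP 1.1 envelope namespace (real) and a placeholder coordination
# namespace (hypothetical, chosen only for this illustration).
NS = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "coord": "urn:example:coordination",
}


def qname(prefix: str, local: str) -> str:
    """Build an ElementTree qualified name such as '{namespace}local'."""
    return "{%s}%s" % (NS[prefix], local)


class Coordinator:
    """Stand-in for the transaction management system."""

    def __init__(self) -> None:
        self.participants = {}  # activity id -> list of enrolled services

    def create_activity(self) -> str:
        activity_id = "urn:uuid:" + str(uuid.uuid4())
        self.participants[activity_id] = []
        return activity_id

    def register(self, activity_id: str, service: str) -> None:
        self.participants[activity_id].append(service)


def build_request(activity_id: str, payload: str) -> bytes:
    """Client-side header processor: place the context in a SOAP header."""
    envelope = ET.Element(qname("soap", "Envelope"))
    header = ET.SubElement(envelope, qname("soap", "Header"))
    context = ET.SubElement(header, qname("coord", "Context"))
    ET.SubElement(context, qname("coord", "Identifier")).text = activity_id
    body = ET.SubElement(envelope, qname("soap", "Body"))
    ET.SubElement(body, "Order").text = payload
    return ET.tostring(envelope)


def handle_request(message: bytes, coordinator: Coordinator, service: str) -> str:
    """Server-side header processor: read the context and enroll the service."""
    envelope = ET.fromstring(message)
    identifier = envelope.find("soap:Header/coord:Context/coord:Identifier", NS)
    coordinator.register(identifier.text, service)
    return identifier.text


coordinator = Coordinator()
activity = coordinator.create_activity()
message = build_request(activity, "100 widgets")
handle_request(message, coordinator, "InventoryService")
print(coordinator.participants[activity])  # prints "['InventoryService']"
```

Note that the application payload in the SOAP body never touches the context. The header processors on each side do all the coordination work, which is the point of the design.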

To act as coordinator for the activity, a transaction service must provide support for the WS-Coordination Web APIs. WS-Coordination can support a variety of types of transaction coordination, such as atomic transactions, cohesive transactions, and loosely coupled business activities. The specific protocols used to implement the various types of transaction coordination are defined separately. WS-Transaction defines the specific protocols used to implement atomic transactions and loosely coupled business activities.

WS-Coordination and WS-Transaction are not direct competitors to BTP. WS-Coordination defines a common Web API that allows Web services to register a transaction with a transaction coordinator, but it does not define the actual transaction coordinator. It can be used with any transaction coordinator. BTP defines a new standard for a transaction coordinator. A BTP-compliant transaction coordinator could support the WS-Coordination Web APIs and act as the Web service transaction coordinator.

Currently, the WS-Coordination and WS-Transaction specifications are not industry standards. According to the document status section of the specifications, they are intended for review and evaluation only. As of this writing, they have not been submitted to a standards organization, and there are no other specifications on the horizon that appear to compete with these two. Given the backing of BEA, IBM, and Microsoft, I expect these specifications to form the basis for future Web services transaction coordination standards.

The next area of contention, orchestration and choreography, doesn't have quite as clear a resolution. Five proposals are competing in this area: solutions from ebXML, a joint effort led by Sun, a new effort at the W3C, the Business Process Management Initiative (BPMI), and the BEA/IBM/Microsoft triumvirate.

The first point of contention is in the definition of the terms. Some people use the terms “choreography” and “orchestration” interchangeably. Others view choreography as a description of service interactions (without workflow), and orchestration as the controlled execution of service interactions (with workflow).

The ebXML solution focuses on the lighter-weight choreography approach. The ebXML Business Process Specification Schema (BPSS) defines a framework for defining dual-party or multiparty business collaborations. Business collaboration is defined as a choreographed exchange of business documents among two or more business parties that results in an electronic commerce transaction.

BPSS does not describe the actions that must be executed by the various business parties. It simply defines the messages that must be exchanged according to a defined pattern of collaboration. BPSS relies on the Web services that take part in the exchange to coordinate the transaction. It does not assume that there is a central coordinator, nor does it require a transaction protocol. One limitation of BPSS is that it relies on other ebXML infrastructure components, including ebMS and CPPA, and it does not work with SOAP or WSDL. The ebXML BPSS specification was published in July 2002.

The second contender in this space is the Web Services Choreography Interface (WSCI) specification. WSCI was jointly developed by BEA, Intalio, SAP, and Sun. It was published in June 2002 and submitted to W3C in August 2002.

WSCI is based on the SOAP and WSDL infrastructure. As with BPSS, WSCI describes Web service composition in terms of message exchange patterns. It makes no assumptions about the internals of a particular application that processes a message, and it does not define a business process execution language.

WSCI describes a message exchange in terms of sequencing rules, message correlation, exception handling, and transactions. Unlike BPSS, which describes collaboration participants in terms of business roles, WSCI describes the exchange as an interaction of Web services. Each message exchange is described as a prescribed sequence of invocations of WSDL operations. In fact, WSCI is an extension to WSDL. A WSCI process is defined within a WSDL document. WSCI doesn't attempt to prescribe who or what is responsible for ensuring that the process gets executed as prescribed. For example, a business process engine or another Web service could coordinate the process.
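To make the idea of a prescribed sequence concrete, here is a small Python sketch that checks whether the operations observed so far respect a prescribed order. It covers only the simplest case, a strict linear sequence, and it is not WSCI syntax. The operation names are hypothetical WSDL operations invented for the example.

```python
from typing import Sequence


def follows_sequence(prescribed: Sequence[str], observed: Sequence[str]) -> bool:
    """Return True if `observed` matches the start of the prescribed order."""
    if len(observed) > len(prescribed):
        return False
    return list(observed) == list(prescribed[: len(observed)])


# Hypothetical WSDL operation names for a purchasing exchange.
PRESCRIBED = ["submitOrder", "confirmOrder", "sendInvoice"]

print(follows_sequence(PRESCRIBED, ["submitOrder", "confirmOrder"]))  # prints "True"
print(follows_sequence(PRESCRIBED, ["confirmOrder"]))                 # prints "False"
```

Because the description says nothing about who enforces the sequence, a check like this could live in a business process engine, in an intermediary, or in one of the participating services.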

In January 2003, the W3C formed the WS-Chor working group to develop a formal standard for choreography. Its charter is to develop a language for creating composite services and for defining choreographed interchanges among multiple Web services. The charter requires that the language build on WSDL. It is beyond the scope of this group to define a business process modeling language. Although the group is free to develop a completely new language, it will use the WSCI specification as formal input for consideration.

You'll notice that these first three proposals don't address the workflow aspect of business process coordination. The last two efforts focus on standards for business process engines: BPMI and the BEA/IBM/Microsoft triumvirate.

BPMI is a nonprofit consortium dedicated to the development of business process management (BPM) standards. The founding members include Aventail, Black Pearl, Blaze Software, Bowstreet, Cap Gemini Ernst & Young, Computer Sciences Corporation, Cyclone Commerce, DataChannel, Entricom, Intalio, Ontology.Org, S1 Corporation, Versata, VerticalNet, Verve, and XMLFund. Membership has grown to more than 80 software and service companies, including key players such as BEA, BMC, Commerce One, EDS, IBM, SAP, and Sun. In November 2002 BPMI published a Web services-based standard for business process engines.

The BPMI Business Process Modeling Language (BPML) is an XML vocabulary for modeling business processes. BPML relies on WSDL and WSCI to describe Web service interactions. It defines an execution language that specifies the runtime semantics of interactions, and it defines an abstract execution model to manage the orchestration of those interactions. BPML assumes the use of a transactional business process engine. A number of vendors, including Intalio, SeeBeyond, and Versata, plan to release products based on BPML.

The last proposal is from the BEA/IBM/Microsoft triumvirate. In conjunction with the WS-Coordination and WS-Transaction specifications, these three vendors published a BPM language called the Business Process Execution Language for Web Services (BPEL4WS). BPEL4WS is an execution language designed to support workflow-oriented orchestration based on a SOAP and WSDL infrastructure. It represents a convergence of the ideas in Microsoft's XLANG and IBM's Web Services Flow Language (WSFL) specifications, and it provides a language that describes business processes and business interaction protocols. In a similar vein to WSDL, BPEL4WS lets you describe both abstract and executable business processes. An abstract process can be thought of as a process type, which can be implemented by multiple services. An executable process is an implementation of an abstract process that can be executed by a business process engine. BPEL4WS defines each interaction between Web services in terms of a WSDL operation.

At the time of this writing, it appears that there is division among the vendors. In a controversial move in March 2003, the BPEL4WS authors spurned the W3C WS-Chor effort. Microsoft representatives attended the first WS-Chor meeting and left partway through, saying that the effort didn't match their plans. A month later the BPEL4WS authors launched a competing effort at OASIS. The OASIS WS-BPEL Technical Committee will develop a BPM standard based on BPEL4WS.

Perhaps I'm being too harsh by saying the efforts are competing. After all, WS-Chor focuses on choreography, and WS-BPEL addresses orchestration, but I think it's unlikely that the two efforts will end up being complementary. WS-BPEL will be a superset of WS-Chor, and you can be sure that the WS-BPEL team won't wait for the WS-Chor team to define choreography standards for them. The OASIS team already has a working specification. My prediction is that WS-BPEL will eclipse all other efforts. BPSS will maintain the ebXML niche, but BPMI will kowtow and adopt WS-BPEL. And WS-Chor will fade into obscurity as it haggles over requirements. WS-BPEL has already garnered product support. IBM provides a preliminary implementation of a BPEL4WS process engine, called BPWS4J, available through alphaWorks. Collaxa and Momentum provide commercial products that support BPEL4WS. And since the launch of WS-BPEL, many BPM companies have expressed support for the effort.

Reliability is another area of contention. “Reliability,” also known as reliable message delivery, refers to the ability to guarantee the proper delivery of messages, in the right sequence, within an acceptable time frame. Reliability is often associated with transactions and choreography because it provides a foundation for loosely coupled, asynchronous transaction coordination.

A number of SOAP implementations support reliable messaging at the transport layer by using a reliable transport protocol, such as a message queuing service. Although this approach is effective, it limits your options. It forces you to use a specific transport, which may not be available on all platforms. An application-layer reliability specification allows you to implement reliable message delivery using any transport protocol, including HTTP. At the time of this writing, two reliability proposals are competing for dominance. One proposal is in development at OASIS. The other proposal comes from BEA, IBM, Microsoft, and TIBCO.

In January 2003 Fujitsu, Hitachi, NEC, Oracle, Sonic Software, and Sun published a specification for message reliability called WS-Reliability. This specification defines a SOAP extension to support guaranteed delivery of messages with guaranteed message ordering and no duplicates. The authors submitted the specification to OASIS in February 2003, coinciding with the launch of the Web Services Reliable Messaging (WS-RM) Technical Committee. The group intends to produce a specification by September 2003.
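The ordering and no-duplicates guarantees can be illustrated with a short Python sketch of the receiving side. This is a generic sequence-number technique under my own assumptions, not the WS-Reliability wire format. The class and method names are hypothetical, and the sender-side retransmission that makes delivery guaranteed is left out.

```python
from typing import Dict, List


class ReliableReceiver:
    """Hands messages to the application in order, each exactly once."""

    def __init__(self) -> None:
        self.next_seq = 1                  # next sequence number to deliver
        self.pending: Dict[int, str] = {}  # out-of-order messages held back
        self.delivered: List[str] = []     # what the application has seen

    def receive(self, seq: int, body: str) -> int:
        """Accept a message and return the highest sequence number delivered
        in order so far, which a sender could treat as an acknowledgment."""
        # Anything already delivered or already held is a duplicate; ignore it.
        if seq >= self.next_seq and seq not in self.pending:
            self.pending[seq] = body
        # Release every message that is now next in line.
        while self.next_seq in self.pending:
            self.delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return self.next_seq - 1


receiver = ReliableReceiver()
# Message 2 arrives early, and again later as a duplicate.
for seq, body in [(2, "b"), (1, "a"), (2, "b"), (3, "c")]:
    receiver.receive(seq, body)
print(receiver.delivered)  # prints "['a', 'b', 'c']"
```

Nothing in this logic depends on the transport, which is why an application-layer approach can run over plain HTTP as easily as over a message queue.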

In March 2003 BEA, IBM, Microsoft, and TIBCO published a competing set of reliability specifications called WS-ReliableMessaging and WS-Addressing. These SOAP extensions address essentially the same requirements as WS-Reliability, although they do so in a different way.

For discussion purposes, I refer to the OASIS proposal as WS-RM(1) and the other proposal as WS-RM(2). One important distinction between the two proposals relates to asynchronicity. WS-RM(1) ties asynchronous messaging to reliability. WS-RM(2) defines asynchronous messaging in a separate specification, WS-Addressing. By separating asynchronicity from reliability, WS-RM(2) is more flexible and extensible.

Although I think WS-RM(2) is a better specification, I don't think the industry needs two competing reliability specifications. What's most aggravating about this situation is that the rift appears to be entirely political. The OASIS team members have established a timeline that precludes any extensive discussions about requirements or redesign. It appears as if their goal is to standardize the WS-Reliability specification as quickly as possible. For their part, the authors of the second proposal don't seem particularly interested in working with the OASIS team. As of this writing, no one from BEA, IBM, Microsoft, or TIBCO has joined the OASIS effort, nor have the authors submitted the specifications to a standards group. It would be better for the industry if the two teams joined forces to develop a single set of specifications that draws the best features from both proposals.

One other area of high interest is that of portlets and interactive applications. One of the most obvious places to use Web services is as a content provider for a portal. Portals provide a convenient, personalized interface to numerous applications. But to make an application available to a portal, you must implement an interface between the portal and the application. This interface, known as a portlet, consists of presentation logic that tells the portal how to access and display the content. Normally, a separate portlet is required for each portal application. Web services technology provides an opportunity to devise a standard mechanism to simplify the integration of remote applications and content into portals.

Two OASIS technical committees are working together to do just that. The Web Services Interactive Applications (WSIA) Technical Committee and the Web Services for Remote Portals (WSRP) Technical Committee are jointly developing the WSRP specification for “pluggable portlets.” WSRP defines a generic adapter that enables any WSRP-compliant portal to consume and display any WSRP-compliant Web service, without the need to develop a specific portlet for each service. This specification allows portal administrators to add new content to a portal with only a few clicks of a mouse.

The generic portal adapter talks to a WSRP service through a set of standard Web APIs, which are defined using standard WSDL. All WSRP services must implement these WSRP Web APIs.

A WSRP service must also supply its own interactive presentation logic as part of its Web API. Because it supplies its own presentation logic, no application-specific portlet is required. A WSRP-compliant portal accesses the WSRP service using the generic WSRP portlet adapter. The WSRP service returns a response containing a presentation markup fragment that can be displayed in a browser.
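The following Python sketch shows why a single generic adapter is enough when each service supplies its own markup. It is an illustration of the pattern only: the classes, the `get_markup` method name as used here, and the two sample services are assumptions made for the example, and the calls are local rather than SOAP requests described by WSDL.

```python
import html
from typing import Any, Sequence


class WeatherService:
    """Hypothetical remote service that returns its own presentation markup."""

    def get_markup(self, user: str) -> str:
        return "<div class='weather'>Forecast for %s: sunny</div>" % html.escape(user)


class StockService:
    """A second hypothetical service with the same interface."""

    def get_markup(self, user: str) -> str:
        return "<div class='stocks'>No alerts</div>"


class GenericPortletAdapter:
    """One adapter serves every service that implements get_markup()."""

    def __init__(self, services: Sequence[Any]) -> None:
        self.services = list(services)

    def render_page(self, user: str) -> str:
        # The portal only aggregates fragments; it knows nothing about
        # what any individual service does.
        fragments = [service.get_markup(user) for service in self.services]
        return "<html><body>" + "".join(fragments) + "</body></html>"


portal = GenericPortletAdapter([WeatherService(), StockService()])
print(portal.render_page("Pat"))
```

Adding a third service to the portal means adding one more entry to the list. No service-specific presentation code has to be written on the portal side.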

The WSRP service can generate the appropriate markup fragment for many types of display or listening devices, such as desktops, mobile handsets, PDAs, or telephones. The WSIA technical committee is designing the presentation aspect of the joint WSRP specification.

WSRP makes portals much more dynamic than ever before. It allows users to gain access to new content without waiting for an administrator to configure that content into the portal. Instead, users can discover new content through a service registry, such as UDDI. Using a registry browser, a user can query the registry and select a service, and the portal can configure the service at runtime using WSRP.

The WSIA and WSRP committees published the WSRP 1.0 Committee Specification in April 2003 and initiated the review process to promote the specification to full OASIS standard status. I expect WSRP to be approved as a formal standard by June 2003.

As you can see, quite a bit of activity is going on to develop advanced Web services standards. Even so, a number of areas are yet to be addressed by formal standards groups. The W3C WS-Arch working group is doing a nice job of identifying the various areas that need to be addressed to flesh out a complete Web services infrastructure. Examples of these areas include asynchronous messaging, message correlation, message routing, alternative message exchange patterns, session management, policy, and caching. As of this writing, there are no formal efforts to define standards for these advanced features. There are quite a few vendor-published specifications to consider, though.

Microsoft has published a number of specifications as part of its Global XML Web Services Architecture (GXA). Microsoft positions GXA as a set of technologies that aims to make Web services appropriate for cross-platform application integration. The GXA specifications include WS-Attachments, WS-Inspection, WS-Routing, WS-Referral, WS-Security, WS-Policy, WS-ReliableMessaging, WS-Addressing, WS-Coordination, and WS-Transaction. Microsoft says that these specifications or derivatives thereof may be submitted to a standards group at some point in the future.

Since launching the GXA initiative, Microsoft has joined forces with BEA, IBM, RSA Security, SAP, and VeriSign to develop a number of advanced security specifications. In December 2002 these six companies released six security-based specifications that focus on trust and policy. The group intends to submit these specifications to a standards group in the future.

In March 2003 BEA published a set of SOAP extension specifications to support asynchronous and conversational SOAP interactions. These specifications—WS-Acknowledgement, WS-Callback, and WS-MessageData—are designed to work with and augment the WS-Addressing and WS-ReliableMessaging specifications. BEA has published these specifications on a royalty-free basis.

One thing is clear. The vendors are committed to Web services. They are investing time and effort in the development of advanced Web services technologies. And they are committed to making sure that their systems are interoperable. We've made excellent progress in security. Management and portals are coming along nicely. There are competing efforts regarding transactions, choreography, and reliability, but progress is definitely being made. For the most part, the degree of cooperation is unprecedented.

[2] Canonicalization involves a number of other functions in addition to removing white space. If you have a burning desire to learn all about XML encryption and XML signature, I recommend Secure XML, by Donald Eastlake and Kitty Niles, ISBN 0201756056.