Chapter 4

HiTL Technologies and Applications

In an effort to gain an understanding of the existing types of solutions and methods, we will begin by analyzing the scope of HiTLCPSs from a practical perspective, first delving into the processes of data acquisition, then considering different solutions for inferring state, and finally, different techniques for actuation. In the second part of the chapter we will look at several experimental projects and research initiatives in this field.

4.1 Technologies for Supporting HiTLCPS

4.1.1 Data Acquisition

The acquisition of data through which a human's state may be inferred is a complex process, with a multitude of possible sources of information. HiTLCPSs have previously used physical properties, such as localization (e.g. GPS positioning), vital signals (heart rate, ECG, EEG, body temperature), movement (accelerometers), and sound (voice processing) among many other types of information that can be acquired directly from the physical reality. There are also many non-physical properties that may be derived, such as communication behaviors (e.g. phone calls, SMSs) or socialization habits (e.g. social networking data, friend lists). We will discuss some examples of the use of socialization habits and social networking in Sections 4.2.3 and 11.2. Here, we focus on physical properties, since they have greater technological requirements.

Most raw physical data comes from distributed sensor architectures, which are critically important for HiTLCPSs, since they allow the measurement of physiological changes, which may be processed to infer current environmental conditions and human activities, psychological states, and intent. In this regard, several types of architectures and technologies have been proposed.

One of the most important technologies for the process of data acquisition in HiTLCPSs is the WSN. These are networks of small, battery-powered devices with limited capabilities, wireless communication, and various sensors that have been applied in countless application scenarios worldwide. One highly debated challenge of WSNs applied to HiTLCPSs is the integration of these tiny devices into the worldwide IoT. The ease of integration of these small devices is of particular importance for HiTLCPSs, since it would make these systems more distributed, open, interactive, discoverable, and heterogeneous, as envisioned in [33]. In WSNs, the use of the Internet protocol (IP) has always raised some concerns, owing to the fact that it was not designed to minimize memory usage or processing. Moreover, the use of the full transmission control protocol (TCP)/IP stack is not possible because it requires more resources than most of these devices can offer. However, the integration of IP has the advantage of offering transparent communication between nodes while using a well-known protocol, providing interoperability and even Internet connectivity. While working towards employing IP in WSNs, the IETF created the 6LoWPAN group, which has been working on standards for the transmission of IPv6 packets over low-capability devices in wireless personal area networks, using IEEE 802.15.4 radios [80]. New drafts were also proposed for adapting 6LoWPAN to other technologies like Bluetooth. Unfortunately, 6LoWPAN cannot be applied to devices devoid of processing or memory capabilities, such as RFID tags. Gateway-based approaches are a possible solution to support IP functionality in these scenarios. The main advantage of these approaches is that terminal devices do not require any processing or communication capabilities. Moreover, they make the sensor and device networks transparent to external environments, and developers can use any protocols as long as they are suitable for their needs. However, one inherent problem of gateway-based approaches is that gateways are single points of failure. This problem is exacerbated in environments where devices present some type of mobility, i.e. moving from place to place while maintaining connectivity. In these mobile environments, all of the communication processes are more fragile, and failure of fixed gateways can compromise the integrity of the HiTLCPS. Another problem of gateway-based approaches is that sensor nodes are often required to format the data according to a specification defined by the drivers provided with the gateway, forcing the developer to create a software driver or analyzer for each specific data frame format, further reducing their interoperability for HiTLCPSs.

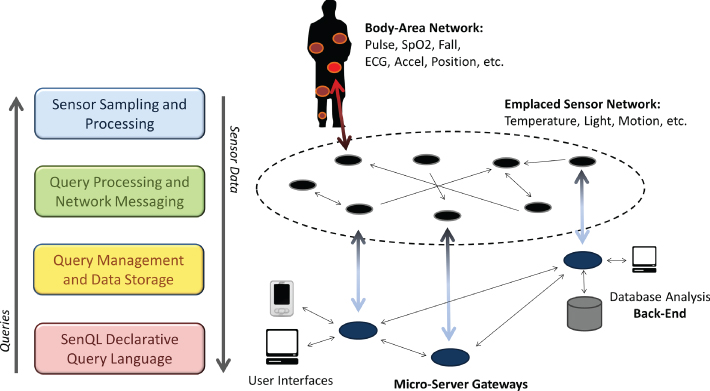

Some research work focused on some of the architectural issues of including WSNs into HiTLCPS. SenQ [9] is a data streaming service for WSNs designed to support user-driven applications through peer-to-peer (P2P) in-network queries between wearable interfaces and other resource-constrained devices. It introduced the concept of “virtual sensors”, user-defined streams that could be dynamically discovered and shared. For example, in assisted-living scenarios for elderly people, a doctor could combine information from mobility speed, movement, and location (e.g. nearby stairs) to create a virtual sensor that alerted nearby healthcare staff of an elevated risk of falls. SenQ took into consideration several requirements that were not satisfied by existing query system designs at the time, such as heterogeneity of sensor devices, dynamics of data flow patterns, localized aggregation of sensor data, and in-network monitoring. The system supported hierarchical architectures but predominantly favored ad hoc decentralized ones, as the authors argued that ad hoc architectures with neighborhood devices and service discovery are better suited to supporting large-scale and open systems with many users and sensors [33]. Data and control logic was also kept close to the concerned devices, in order to save energy and preserve scalability by providing a stack with loosely coupled layers that were placed on devices according to their capabilities and by enabling in-network P2P query issue for streaming data. Figure 4.1 shows SenQ's query system stack and the topology of one of its prototype implementations.

Figure 4.1 SenQ's query system stack shown side-by-side with the topology and components of AlarmNet, a prototypical implementation for assisted-living [9]. Source: Adapted from Wood 2008.
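The idea of a "virtual sensor" described above can be illustrated with a minimal sketch. It is not SenQ's actual API, which is not detailed here: the `Reading` fields, the `VirtualSensor` class, and the weights and the 0.6 m/s threshold in `fall_risk` are all hypothetical, chosen only to show how a user-defined stream might be derived from mobility speed, movement, and location.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Reading:
    """Hypothetical snapshot of the underlying physical sensor streams."""
    walking_speed_mps: float  # mobility speed
    is_moving: bool           # movement reported by a wearable
    near_stairs: bool         # location context


class VirtualSensor:
    """A user-defined stream computed from other streams (illustrative only)."""

    def __init__(self, name: str, combine: Callable[[Reading], float]):
        self.name = name
        self.combine = combine
        self.subscribers: List[Callable[[str, float], None]] = []

    def subscribe(self, callback: Callable[[str, float], None]) -> None:
        # Other devices (e.g. a nurse's handheld) register interest here.
        self.subscribers.append(callback)

    def push(self, reading: Reading) -> float:
        # Derive the virtual value and forward it to every subscriber.
        value = self.combine(reading)
        for callback in self.subscribers:
            callback(self.name, value)
        return value


def fall_risk(reading: Reading) -> float:
    """Made-up risk score in [0, 1]; the weights are assumptions."""
    risk = 0.0
    if reading.is_moving and reading.walking_speed_mps < 0.6:
        risk += 0.5  # slow, unsteady gait
    if reading.is_moving and reading.near_stairs:
        risk += 0.5  # moving close to stairs
    return risk
```

A subscriber could then raise an alert whenever the derived value crosses a threshold of its choosing, without knowing which physical sensors feed the stream.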

Another type of communication paradigm that may benefit HiTLCPSs is body-coupled communication (BCC) [45], which supports low energy usage, heterogeneity, and reduced interference. BCC leverages the human body as the communication channel, i.e. signals are transmitted between sensors as electrical impulses directly through human tissue to a point of data collection. The body channel can be described by a circuit-equivalent representation in which different types of body tissue (skin, fat, muscles, and bone) are modeled with variable levels of impedance [81]. In particular, “galvanic coupling” differentially applies the signal over two coupler electrodes, and the signal is then received by two detector electrodes. The coupler establishes a modulated electrical field, which is sensed by the detector. Therefore, a signal transfer is established between the coupler and detector units by galvanically coupling signal currents into the human body [82]. There are several motivations for using this paradigm. First, the energy consumed in BCC has been shown to be approximately three orders of magnitude lower than that of a classic low-power RF-based network built from IEEE 802.15.4-based nodes. This technology is also bolstered by high bandwidth availability, of approximately 10 Mbps, which accommodates the needs of multiple sensor measurements. Finally, it offers a considerable mitigation of fading phenomena and overcomes typical interference problems of the industrial, scientific, and medical (ISM) band, which is usually affected by nearby devices (e.g. Bluetooth, WiFi, or microwave ovens) [45].

Conversely, we argue that much of the computational power and sensing capabilities for future HiTLCPSs will come from devices already existing in the environment. In particular, we believe that near-future HiTL systems will be heavily based on smartphone technology, owing to its rapidly expanding dissemination and powerful computation and sensing capabilities. A smartphone's sensors can be used by simple inference mechanisms to evaluate a human's psychological and physiological states and integrate this information into HiTLCPSs. These sensors may include accelerometers, GPS positioning, microphones, or even the smartphone's camera.

In this line, some research attempted to combine smartphone data acquisition with social networking. The term “people-centric sensing” was used by the MetroSense project [83] to describe a vision where the majority of network traffic and applications are related to sensor and actuator data, applied to the general citizen. The MetroSense project envisioned collaborative data gathering of sensed data by individuals, facilitated by sensing systems that comprise cheap and easily accessible mobile phones and their interaction with software applications. The project proposed an architecture that supported heterogeneity by joining a variety of sensing platforms into a single architecture, while considering the communication limitations of low-power wireless devices [84].

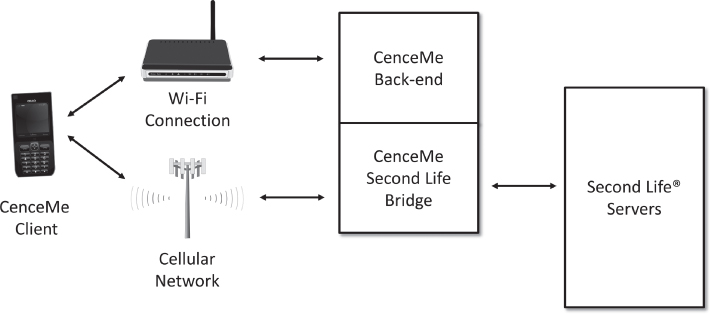

MetroSense's architecture design followed a number of guiding principles: “network symbiosis” meant that traditional networking infrastructures would be integrated into the new sensing infrastructure in order to utilize the already existing power, communication, routing, reliability, and security resources; “asymmetric design” was a principle where differences in computational power and resources between different members of the network were exploited by pushing computational complexity and energy burden to devices with greater capacity; “localized interaction” implied that network elements should only interact with devices within a constrained “sphere of interaction”, sacrificing network flexibility with the aim of simplifying service implementation and improving communications performance. The architecture of one of its project implementations is shown in Figure 4.2.

Figure 4.2 The architecture of CenceMe [10], one of MetroSense's implementations.

Many of these guiding principles also apply to more general HiTLCPSs. We provide further insight into this matter in Chapter 10.

Several mechanisms have been proposed to support continuous sensing using smartphones. Jigsaw [85] is a continuous sensing engine that supports resilient accelerometer, microphone, and GPS data processing. It comprises a set of plug-and-play sensing pipelines that adapt their depth and sophistication to the quality of data as well as the mobility and behavioral patterns of the user, in order to drive down energy costs. This reusable and application agnostic sensing engine proposed solutions to problems that usually arise in mobile phone sensors, such as performing calibration of the accelerometer independently of body position, reducing computational costs of microphone processing, and reducing the GPS duty cycle by taking into account the activity of the user. Focusing more specifically on the microphone, as one of the most ubiquitous but least exploited of the smartphone's sensors, SoundSense [86] is a scalable sound sensing platform for people-centric sensing applications which classifies sound events. It is a general purpose sound sensing system for phones with limited resources that uses several supervised and unsupervised sensing techniques to classify general types of sound (music, voice) and discover novel sound events that are specific to individual users. These sorts of sensing architectures could be exploited to enable future continuous and ubiquitous smartphone sensing in HiTLCPSs.
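To make the idea of reducing the GPS duty cycle according to user activity more concrete, the following is a minimal sketch. It does not reproduce Jigsaw's actual scheduling policy: the activity labels, the intervals in `GPS_INTERVAL_S`, and the low-battery rule are assumptions made purely for illustration.

```python
# Hypothetical sampling intervals (seconds) per inferred activity.
GPS_INTERVAL_S = {"stationary": 600, "walking": 60, "vehicle": 10}


def next_gps_interval(activity: str, battery_fraction: float = 1.0) -> int:
    """Pick the time until the next GPS fix from the user's current activity."""
    interval = GPS_INTERVAL_S.get(activity, 60)  # unknown activity: moderate rate
    if battery_fraction < 0.2:
        interval *= 2  # assumed rule: sample half as often on low battery
    return interval


def count_fixes(segments, battery_fraction: float = 1.0) -> int:
    """Count GPS fixes over a day described as (activity, duration_s) segments."""
    fixes = 0
    for activity, duration_s in segments:
        fixes += duration_s // next_gps_interval(activity, battery_fraction)
    return fixes


# One hour at a desk, ten minutes walking, five minutes in a car.
day = [("stationary", 3600), ("walking", 600), ("vehicle", 300)]
adaptive = count_fixes(day)
fixed_rate = sum(duration for _, duration in day) // 10  # always sampling every 10 s
```

Under these assumed intervals the adaptive schedule requests far fewer fixes than a fixed 10-second schedule over the same period, which is the kind of saving an activity-aware duty cycle aims for.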

4.1.2 State Inference

A recurring premise behind powerful HiTL systems is transparent interfaces that can infer human intent, physical and psychological states, emotions, and actions. While traditional interface schemes such as the mouse and keyboard have long been used to transmit human desires, they are not practical, involving series of key combinations or sequences of mouse clicks that are unintuitive and require practice and repetition in order to be learned and mastered. On the other hand, HiTLCPS applications are meant to react to natural human behavior and do not necessarily require direct human interaction. However, deriving advanced mathematical models or machine learning techniques that can reliably classify and possibly predict human behavior is a colossal challenge.

Many different methods for human activity classification can be found in the literature. One of the most successful and popular techniques is the use of Hidden Markov Models [87–89], but some approaches also use naive Bayes classifiers [90–93], Support Vector Machines [94], C4.5 [92, 94], and Fuzzy classification [95]. Research also uses different kinds of sensory data for activity detection: wearable sensor boards with many different types of sensors [87, 88], wearable accelerometers [89, 92, 94, 96], gyroscopes [96], ECG [94], heart rate [92], smartphone accelerometer data and sound [97], and even RSSI signals [98]. The application of activity recognition is present in many areas, from sport solutions to social networking and health monitoring. Research in these topics is very active and presents very good results, in some cases achieving accuracy levels in the order of 90–95%.
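As a rough illustration of how a Hidden Markov Model can turn a stream of sensor observations into activity labels, the sketch below decodes the most likely activity sequence with the Viterbi algorithm. The two states, the discretized "low"/"high" accelerometer energy observations, and all probabilities are invented for the example; they are not taken from any of the cited works.

```python
import math

# Toy model: every number here is an assumption for illustration.
STATES = ("sitting", "walking")
START = {"sitting": 0.6, "walking": 0.4}
TRANS = {
    "sitting": {"sitting": 0.8, "walking": 0.2},
    "walking": {"sitting": 0.3, "walking": 0.7},
}
EMIT = {
    "sitting": {"low": 0.9, "high": 0.1},
    "walking": {"low": 0.2, "high": 0.8},
}


def viterbi(observations):
    """Return the most probable state sequence for the observations."""
    # Log-probability of the best path ending in each state at time 0.
    best = {
        s: math.log(START[s]) + math.log(EMIT[s][observations[0]])
        for s in STATES
    }
    back = []  # back[t][s] = best predecessor of state s at time t + 1
    for obs in observations[1:]:
        prev, best, pointers = best, {}, {}
        for s in STATES:
            # Choose the predecessor that maximizes the path probability.
            p = max(STATES, key=lambda r: prev[r] + math.log(TRANS[r][s]))
            pointers[s] = p
            best[s] = prev[p] + math.log(TRANS[p][s]) + math.log(EMIT[s][obs])
        back.append(pointers)
    # Backtrack from the most likely final state.
    state = max(STATES, key=lambda s: best[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path
```

In a real system the transition and emission probabilities would be learned from labeled sensor data, and the observations would typically be continuous feature vectors rather than two discrete symbols.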

The detection of a user's psychological state has been previously attempted. A communication framework for human–machine interaction that is sensitive to human affective states is presented in [99], through the detection and recognition of human affective states based on physiological signals. Since anxiety plays an important role in various human–machine interaction tasks and can be related to task performance, this framework was applied in [100] to specifically detect anxiety through the user's physiological signals. The presented anxiety-recognition methods can be potentially applied in advanced HiTLCPSs.

One example of such an application is the use of smartphones in experience sampling method (ESM) studies. ESM is a research methodology that requires periodical notes of the participant's experience. The notes can encompass the participant's feelings at a given moment and are best employed when the subjects do not know in advance when they will be asked to note their experience [101].

Different means can be used to signal a request for the participant's notes. Traditional methods include the use of preprogrammed stopwatches controlled by the researcher. However, through a prototype smartphone-based ESM system, named EmotionSense, it was possible to study the influence of different sampling strategies on the inferred conclusions about the participants' behavior [102]. This prototype system was based on an Android application that used both “physical sensors” (including accelerometer, microphone, proximity, GPS location, and the phone's screen status) and “software sensors” (capturing phone calls and messaging activity). These sensors were used to evaluate the context of users and to trigger survey questions about their feelings, namely how positive and negative they felt, their location, and their social setting. These short questionnaires gave insight into the participants' moods and behavior. The application could be remotely reconfigured to vary the questions, sampling parameters, and triggering mechanisms that notified users to answer a questionnaire. The results were used to empirically quantify the extent to which sensor-triggered ESM designs influence the breadth of behavioral data captured in this kind of study.

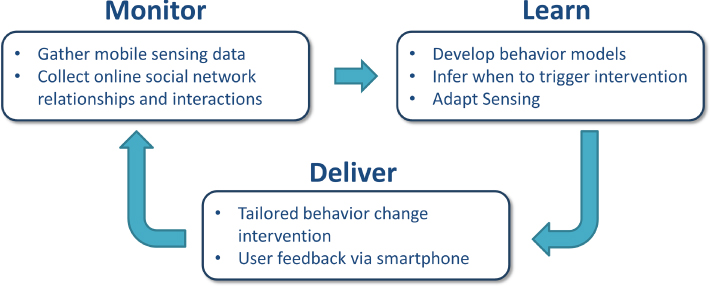

EmotionSense was also used to enhance behavior change interventions (BCI) by using the device's capabilities to positively influence human behavior [11]. Traditional BCIs involve advice, support, and relevant information for the patient's daily activities, which are given during sessions by therapists and coaches. Smartphones, with their powerful sensing and machine learning capabilities, ubiquity, and presence, allow behavior scientists to use directed, unobtrusive, and real-time BCIs to induce lifestyle changes that may help people cope with chronic diseases, smoking addiction, diets, or even depression. Information can be delivered and measured in the moments when the users need it the most. For example, people addicted to smoking usually suffer from detectable stress when feeling the need to smoke, creating an opportunity for the system to send a notification urging them not to do so. Thus, detecting the user's context and emotions allows for interventions to be delivered at the right time and place. The authors identified three key components of BCI using smartphones (shown in Figure 4.3). Interestingly, they closely match the basic processes of HiTL control (refer to Figure 3.1), where Monitor corresponds to Data Acquisition, Learn is a part of State Inference, and Deliver is a form of Actuation.

Figure 4.3 The three key components of BCI using smartphones [11]. Source: Adapted from Lathia et al. 2013.
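The correspondence between Monitor, Learn, and Deliver and the HiTL control loop can be sketched in a few lines. This is only an illustrative skeleton, not the design from [11]: the stress proxy, the per-user baseline rule, the `margin` and `min_history` parameters, and the notification text are all assumptions.

```python
from statistics import mean


class InterventionLoop:
    """Toy Monitor -> Learn -> Deliver loop for a smoking-cessation scenario."""

    def __init__(self, margin: float = 0.2, min_history: int = 3):
        self.history = []            # past stress values for this user
        self.margin = margin         # assumed distance above baseline that counts as elevated
        self.min_history = min_history

    def monitor(self, raw_samples):
        """Monitor (Data Acquisition): reduce raw samples to one stress proxy in [0, 1]."""
        return mean(raw_samples)

    def learn(self, stress: float) -> bool:
        """Learn (State Inference): compare against the user's own baseline, then update it."""
        elevated = (
            len(self.history) >= self.min_history
            and stress > mean(self.history) + self.margin
        )
        self.history.append(stress)
        return elevated

    def deliver(self, elevated: bool):
        """Deliver (Actuation): send a notification only when it is likely to be needed."""
        if elevated:
            return "Feeling tense? Try a short walk instead of a cigarette."
        return None

    def step(self, raw_samples):
        return self.deliver(self.learn(self.monitor(raw_samples)))
```

Keeping the three stages as separate methods mirrors the separation between data acquisition, state inference, and actuation, so that any one stage could be replaced (for instance, with a trained classifier in `learn`) without touching the others.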

The EmotionSense application uses the Gaussian mixture model machine learning technique to detect ongoing conversations and their respective participants. An emotion inference component was also developed using a similar approach, training a background Gaussian mixture model representative of all emotions through the Emotional Prosody Speech and Transcripts library [103]. This component allowed the application to infer five broad emotional states from the smartphone's microphone: anger, fear, happiness, neutrality, and sadness. The authors reported an accuracy of over 90% for speaker identification and over 70% for emotion recognition.

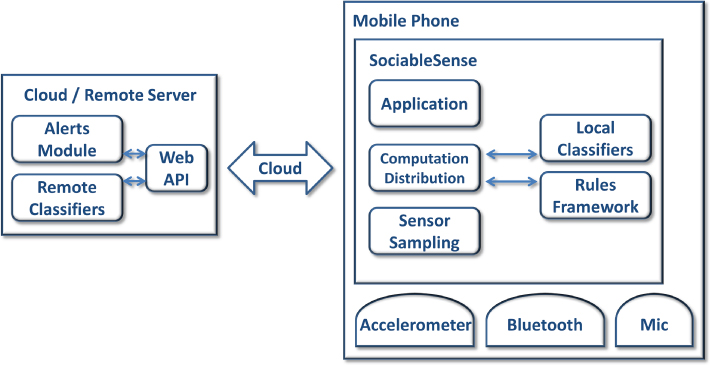

Also with the objective of providing positive behavioral change, SociableSense [12] was a platform that monitored the user's social interactions and provided real-time feedback to improve their relations with their peers. In this work, the authors attempted to measure the “sociability” of users, which is an important factor in many behavioral disorders, ranging from autism to depression. The system then closed the loop by providing real-time feedback and alerts that aimed to make people more sociable. The sociability measurement was divided into two factors: collocation and interaction. Collocation was defined by the proximity between users, and it was inferred by a coarse-grained Bluetooth-based indoor localization mechanism. Interaction was derived from the speaking between users, and it was inferred via the microphone sensor and a speaker identification classifier, in a fashion similar to EmotionSense. Active socialization was promoted through a gaming system which classified the most sociable persons as “mayors” of the social groups. Results showed that such feedback mechanisms influenced users and increased their sociability. SociableSense's architecture is shown in Figure 4.4.

Figure 4.4 SociableSense architecture [12]. Source: Adapted from Rachuri 2011.

Several ongoing challenges for mobile sensing were also identified in [11], including:

- Energy constraints associated with continuous sensing, which require intelligent mechanisms that dynamically adapt sampling rates depending on the user's context.

- Data processing and inference mechanisms that can accurately extract information on human behavior from raw sensor data, and the importance of balancing the distribution of this computation among smartphone sensors and cloud-based back-ends.

- Generality of classification mechanisms that need to make uniform inferences regarding widely different populations of users.

- Privacy concerns about the acquisition of sensitive data (locations, activities) and the recording of data without people's informed consent (e.g. inadvertently capturing the voice of an external person through the microphone of a smartphone user).

The detection of emotion is not restricted to voice pattern recognition, however. Other interesting ways of inferring the user's psychological state have been proposed. The touch interface and movement of a smartphone were used in [104] as a way of inferring emotional states. The proposed framework consisted of an emotion recognition process and an emotion preference learning algorithm which were used to recommend smartphone applications, media contents, and mobile services that fit the user's current emotional state. The system collected data from three sensors: the touch interface, accelerometer, and gyroscope, classifying it into types (e.g. touch actions could be divided into tapping, dragging, flicking, and multi-touching). The processed data was used to quantify higher level emotions, such as “neutral”, “disgust”, “happiness”, or “sadness”, through decision tree classification methods. By analyzing communication history and application usage patterns, MoodScope [105] also inferred the mood of the user based on how the smartphone was used. The system passively ran in the background, monitoring application usage, phone calls, email messages, SMSs, web browsing histories, and location changes as user behavior features. With daily mood averages as labels and usage records as a feature table, the authors applied a regression algorithm to discern a mood inference model.

More recently, image-based processing of facial expressions was used to detect irritation in drivers [44] and to improve driving safety. The developed system was non-intrusive and ran in real time. Through an NIR camera mounted inside the dashboard, a near-frontal view of the driver's face was captured. A face tracker was applied to track a set of facial landmarks used to classify the facial expressions. Experimental results demonstrated that the system had a detection rate of 90.5% for indoor tests and 85% for in-car tests.

4.1.3 Actuation

Actuation has a very broad definition in the field of HiTLCPS. For example, applications that passively monitor a human being's sleep environment to give information about potential causes of sleep disruption [106] or that record a human's cardiac sounds to detect pathologies [75] do not directly influence the associated environment, nor do they attempt to achieve a certain goal and yet they still “actuate” by providing information.

More direct actuation on the physical world can be achieved through specialized devices, such as robots [16]. Historically, robots were designed and programmed for relatively static and structured environments. Once programmed, the robot's environment and interactions were usually expected to remain within a very constrained range of actions. Anything unaccounted for in the robot's configuration is essentially invisible and only minimal feedback is traditionally available, such as joint position measurements. These primitive sensory capabilities require robots to operate in isolated “work cells”, free from people and other interferences. Thus, current robots, including mobile ones, far from being integrated into HiTLCPSs, continue, on the whole, to use collision sensors that halt operation whenever something unaccounted for happens or whenever somebody enters their workspace, to prevent accidents. This continues to enforce the need for having areas for workers and areas for robots, which are mutually exclusive and preclude any type of human–robot cooperation typically found in HiTLCPSs [107]. Apart from safety reasons, there is also the lack of trust of workers in robots. People prefer working alongside teleoperated robots rather than autonomous ones [108]. One reason for this mistrust is that people cannot predict the robot's intentions or behavior, owing to the lack of the body language signs that are common in humans. A second reason for mistrusting robots is that people do not know if the robot “sees them” (lack of presence awareness). Without HiTL behavior modeling, robots in many automated factories remain isolated in both physical and sensorial senses [109].

Yet, this is about to change. While robots were initially used in repetitive tasks where all human commands were given a priori, the next generations of advanced robots are envisioned to be mobile and to operate in unstructured or uncertain contexts.

Achieving “human-awareness” requires robots to have sensing capabilities greater than mere joint position measurements. Interestingly, in recent years there has been a combination of two important technologies—robots and WSNs—that can complement each other in this respect. WSNs can assist in the process of discovering the environment where robots actuate; the detailed level of information provided by sensors may be essential for the tasks to be undertaken by a robot. On the other hand, robots can be used as mules that collect and forward information from several sensor nodes spread in the environment. Thus, the energy needed for long-distance or multi-hop transmissions can be reduced. Robots can also perform the calibration of sensors and support their recharging process when energy levels are low. Robots and wireless sensor technologies can be exploited to support remote monitoring in dangerous environments under maintenance, using a set of sensors to measure, for example, gas levels. They can also be applied in the monitoring of environmental parameters, such as in wastewater treatment facilities or for measuring air pollutants, allowing a proactive implementation of a social responsibility culture. Using wireless technology and robotic mobile inspection for the monitoring and surveillance of wide areas, where diagnosis and intrusion detection are critical, is also more reliable and cost-efficient than traditional methods.

While WSNs offer the sensorial capabilities necessary for robots to perform the desired tasks, humans provide the necessary management of their operation. Thus, robots are capable of performing missions in hazardous environments in cooperation with humans, taking into consideration the psychological state of humans, while using data from WSNs to scan both humans and the environment. In fact, the human–WSN–robot combination has huge potential in the perspective of actuation in HiTLCPSs, since advanced industrial automation can strongly benefit from distributed sensing capabilities. Robots, humans, and WSNs can be deployed to support personnel safety, by complementing human work in hazardous contexts, with wireless sensor networks collecting and processing information. Mobile workers and robots can be equipped with multiple sensory systems that send information to a control center, accessible and monitored by safety and health-control personnel. This allows workers to safely and remotely control operations, and to make faster decisions. The combination of these technologies allows us to envision highly advanced HiTLCPSs applied to many different scenarios. As an example, flying inspection robots could be used to navigate interactively and inspect power plant structures (including various components within and around boilers, environmental filters, or cooling towers), as well as oil and gas industrial structures (inside and outside large-scale chimneys, inside and outside flare systems, inside the bottom part of refining columns, as well as pipelines and pipe webs). On the other hand, workers in the field may collaborate with these robots in their inspection tasks, in the management of the whole operation, and in the deployment and collection of sensor networks. HiTL controls allow for this collaboration to be safe for humans, since their presence, actions, and intentions are made known to individual robots as well as to the entire system.

There are several projects that specifically study and evaluate the integration of WSNs with robots. For example, the Robotic UBIquitous COgnitive Network (RUBICON; FP7-ICT-269914) [110] was a project that aimed to create autonomous and auto-configured systems by combining WSNs, multi-agents, and mobile robots. The proposed mechanisms reduced the complexity and the time needed in deployment and reconfiguration tasks. However, the main objective of this project was to remove, as much as possible, the human from the configuration and maintenance processes. According to the authors, this meant that the quality of service that was offered by robot WSNs was significantly improved, without the need for extensive human involvement. Considering that these technologies coexist with human beings, why were humans excluded from control loop decisions? Why not take advantage of the human potential to create immersive HiTLCPSs?

Other research initiatives focus more on this human–robot cooperation. For instance, the NIFTi European FP7 project (natural human–robot cooperation in dynamic environments, FP7-ICT-247870) [111], which ran from January 2010 until December 2013, proposed new models for cooperation between robots and humans when they work towards a shared goal, cooperatively performing a series of tasks. However, this project required a lot of direct instructions from humans to robots, and WSNs were not used to dynamically contribute and adapt to these systems. PHRIENDS: Physical Human–Robot Interaction: Dependability and Safety (FP6-IST-045359) [112] was a project that aimed to propose the coexistence of robots and humans. One of its main objectives was to find the strictest safety standards for this coexistence. Later, this project resulted in a new FP7 project, SAPHARI [113], which maintained its main objective but now used soft robotics, combining cognitive reaction and safe physical human–robot interaction. In contrast with its precursor project, SAPHARI intended to provide reliable, efficient, and easy-to-use functionality. There are also other projects on the topic of safety in interaction between humans and robots. CHRIS (Cooperative Human Robot Interaction Systems; FP7-ICT-215805) [114] evaluated a mapping mechanism between robots and humans. This project also aimed at studying the safety of cooperative tasks between humans and robots. However, once again, these environments did not assume the existence and participation of WSNs. Moreover, humans were seen only as end users and were not integrated into the system. SWARMANOID: Towards Humanoid Robotic Systems (FP6-IST-022888) [115] and SYMBRION (Symbiotic Robotic Organisms; FP7-ICT-2007.8.2) [116] were two similar projects that aimed to find strategies to achieve collaborative work between robots. SWARMANOID proposed joint mechanisms both by air and land to achieve search tasks. SYMBRION intended to optimize energy by sharing policies. Robot-Era [117] was a project that started in 2012 and finished in 2015. It intended to implement and integrate advanced robotic systems and intelligent environments in real scenarios for the aging population. Some of these intelligent environments were based on WSNs, and their role was to support the quality of life and independent living for elderly people.

In Table 4.1, we summarize the main technologies/solutions that are discussed in this section.

Table 4.1 Summary of some of the technologies/solutions that support HiTLCPS

| Technology | Description | Examples of applied research |
| --- | --- | --- |
| Data Acquisition | | |
| Wireless Sensor Networks | Networks of small, battery-powered devices | 6LoWPAN [80] and SenQ [9] |
| Body-Coupled Communication | Sensors that leverage the human body as their communication channel | [81] and [82] |
| Smartphones | Devices with powerful computation and sensing capabilities that accompany users throughout their days | MetroSense [84], Jigsaw [85], and SoundSense [86] |
| State Inference | | |
| Physical Activity Classification | Detection of human activities (e.g. walking, brushing teeth) | Hidden Markov Models [87–89], naive Bayes classifiers [90–93], Support Vector Machines [94], C4.5 [92, 94], and Fuzzy classification [95] |
| Psychological State Classification | Detection of human affective states (e.g. happiness, anger) | Frameworks such as [99], EmotionSense [11], SociableSense [12], smartphones' touch interface [104], MoodScope [105], and facial recognition [44] |
| Actuation | | |
| Human–WSNs–Robots | WSNs offer the sensorial capabilities necessary for robots to perform the desired tasks, while humans provide the necessary management of their operation | RUBICON [110], NIFTi [111], PHRIENDS [112], SAPHARI [113], CHRIS [114], SWARMANOID [115], SYMBRION [116], and Robot-Era [117] |
| Notifications and Suggestions | Smartphone-based systems often show suggestive notifications | EmotionSense [11], SociableSense [12, 118], and HappyHour [119] |

Despite all of these efforts, much work remains to be done, in particular on robotic actuation that considers the human state. Thus, the role of robotics in future HiTLCPSs cannot yet be fully understood. In addition to the unmet technical challenges, there are also questions of an ethical nature that will need to be considered. We will identify some of these matters in Section 11.2.

4.2 Experimental Projects

As previously mentioned, the area of HiTLCPSs is vast. Not only can they be applied to many different areas, but the variety of their possible configurations and technologies also makes it very difficult to establish well-defined borders for classification. Nevertheless, in this section we present several examples of work in the area of HiTLCPSs that apply the various technologies discussed in Chapter 2. By no means do we intend to provide an extensive review of the state of the art; the purpose of this section is to give the reader a better idea of the applicability of HiTLCPS concepts.

4.2.1 HiTL in Industry and at Home

To contribute towards a better understanding of the spectrum of HiTL applications, the work in [13] provided its own implementation of an HiTL system that attempted to reduce the energy waste in computer workstations by modeling human behavior and detecting distractions. While current practices for reducing energy consumption are usually based on fixed timers that initiate sleep mode after several minutes of inactivity, the proposed system used adaptive timeout intervals, multi-level sensing, and background processing to detect distractions (e.g. phone calls, restroom breaks) with 97.28% accuracy, cutting energy waste by 80.19% [13]. The control architecture of the system is shown in Figure 4.5. The proposed “distraction model” comprised two main sources of information: user activity and system activity. At the user-activity level, the authors used a “gaze tracker”, which evaluated the user's gazing at the computer's screen through a webcam. At the system-activity level, the system evaluated keyboard and mouse events, CPU usage, and network activities to infer the machine's level of use. The control loop combined both types of information to determine the distraction status of the user, with some self-correcting measures. For example, if the user resumed the system shortly after it was put to sleep, the control loop took this as a negative feedback event and subsequently adjusted the timeout interval.

Figure 4.5 Control architecture for energy saving with HiTL [13]. Source: Adapted from Liang 2013.
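The negative-feedback behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the controller from [13]: the class name `AdaptiveTimeout`, the multiplicative update rule, and every numeric default are hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveTimeout:
    """Sleep-timeout controller with negative feedback (illustrative only)."""

    timeout_s: float = 60.0       # current idle time required before sleeping
    min_s: float = 10.0           # lower bound for the timeout (assumed)
    max_s: float = 600.0          # upper bound for the timeout (assumed)
    quick_resume_s: float = 15.0  # a resume this soon counts as a wrong decision
    grow: float = 1.5             # back-off factor after a wrong decision
    shrink: float = 0.9           # gentle tightening after a correct decision

    def is_distracted(self, gaze_on_screen: bool, system_busy: bool,
                      idle_s: float) -> bool:
        """Combine user activity (gaze) and system activity with the timeout."""
        if gaze_on_screen or system_busy:
            return False
        return idle_s >= self.timeout_s

    def on_resume(self, seconds_asleep: float) -> None:
        """Adjust the timeout according to how quickly the user woke the machine."""
        if seconds_asleep < self.quick_resume_s:
            # Negative feedback: the user was not really away, so wait longer.
            self.timeout_s = min(self.max_s, self.timeout_s * self.grow)
        else:
            # The sleep decision was right, so the timeout can shrink a little.
            self.timeout_s = max(self.min_s, self.timeout_s * self.shrink)
```

A quick resume lengthens the timeout, making the system more conservative, while undisturbed sleep periods gradually shorten it again.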

At the same time, corporations are increasingly concerned with the well-being and happiness of their employees, since a happy employee is a more productive one. An article published in the December 2012 edition of IEEE Spectrum [120] discusses how the same technological advances in computers and telecommunications that have brought about tremendous gains in productivity may also be applied to increase happiness, instead of stress. The work of engineers and psychologists over the last few decades has allowed us to infer a person's level of happiness by monitoring and analyzing their sleep patterns, exercise and dietary habits, as well as vital statistics like body temperature, blood pressure, and heart rate. This technology might be used to improve the overall environment of the workplace, resulting in better communication, teamwork, and job satisfaction. The Hitachi Business Microscope is a small wearable badge containing six infrared transceivers, an accelerometer, a flash memory chip, a microphone, a wireless transceiver, and a rechargeable lithium-ion battery that allows the badge to operate for up to two days at a time. It measures the wearer's body movements, voice level, and location, as well as the ambient air temperature and illumination. When the infrared transceivers detect another badge within two meters, the two badges exchange IDs and each badge then records the time, duration, and location of the interaction. This allows the collection of data on the type of social exchanges that take place in the workplace. The Hitachi Business Microscope is being used by hundreds of organizations (banks, design firms, research institutes, hospitals, etc.) to collect behavioral data. This data is then used in conjunction with studies from the field of positive psychology, which focuses on desired mental states (including happiness), to improve people's personal and professional lives. 
Happy people tend to be more creative, more productive, and feel more fulfilled by their work. Happy people also tend to more easily achieve a state of full engagement and concentration, often called “flow”. Interestingly, the research presented in [120] suggests that it is possible to infer when a person has reached this state by analyzing the consistency of their movements: for some people, that consistent movement is slow, while for others it is fast. The time of day during which people tend to experience “flow” is also highly variable and individual; some people favor mornings while others favor afternoons or evenings. Regardless of when participants experience “flow”, their motions become more regular as they immerse themselves in the activity at hand. One advantage of measuring workers' activity is that once people become aware of their daily patterns they can better schedule their work to take advantage of the times when they are most likely to be in this focused mental state. Documenting social interactions can also help to identify the areas in an office which tend to host the most frequent and active discussions, helping in the restructuring of office layouts to foster more fruitful collaboration.
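To make the idea of "consistency of movement" concrete, the following sketch scores a window of accelerometer magnitudes by its coefficient of variation. This metric is an assumption chosen for illustration; [120] does not state which measure was used. The function name `movement_regularity` is hypothetical. Because the spread is divided by the mean, a consistently slow mover and a consistently fast mover obtain the same score.

```python
from statistics import mean, pstdev


def movement_regularity(magnitudes):
    """Return a score in (0, 1]; higher means more regular movement.

    `magnitudes` is a non-empty window of non-negative accelerometer
    magnitudes. The score is 1 / (1 + coefficient of variation), an
    illustrative choice rather than the measure used in the cited study.
    """
    mu = mean(magnitudes)
    if mu == 0:
        return 1.0  # no movement at all is treated as perfectly regular
    cv = pstdev(magnitudes) / mu
    return 1.0 / (1.0 + cv)


slow_and_steady = [1.0, 1.1, 0.9, 1.0]
fast_and_steady = [5.0, 5.5, 4.5, 5.0]
erratic = [0.2, 3.0, 0.5, 2.5]
# The two steady windows score alike; the erratic one scores lower.
print(movement_regularity(slow_and_steady),
      movement_regularity(fast_and_steady),
      movement_regularity(erratic))
```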

The area of human-computer interaction has long studied the concept of HiTL. Humans prefer to attend to their surrounding environment and engage in dialog and interaction with other humans rather than to control the operations of machines that serve them. Thus, in [121] it is suggested that we must put computers in the human interaction loop (CHIL), rather than the other way around. In this line, a consortium of 15 laboratories in nine countries has teamed up to explore what is needed to build usable CHIL services. The consortium developed an infrastructure used in several prototype services, including a proactive phone/communication device; the Memory Jog system for supportive information and reminders in meetings, collaborative supportive workspaces, and meeting monitoring; and a simultaneous speech translator for the lecture domain. These projects led to several advances in the areas of audiovisual perceptual technologies, including speech recognition and natural language; person tracking and identification; identification of interaction cues, such as gestures, body and head poses, and attention; as well as human activity classification.

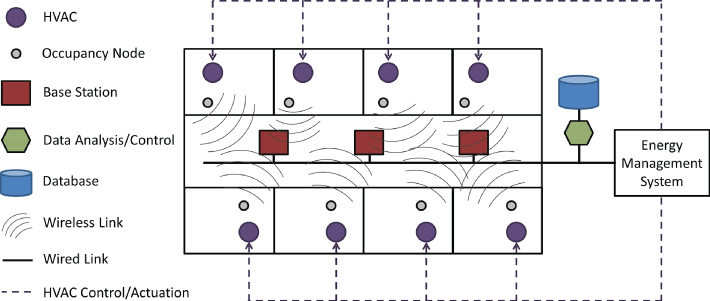

HVAC systems have also been endowed with HiTL control. For example, in [122] the authors implemented a system that used cheap and simple wireless motion sensors and door sensors to automatically infer when occupants were away, active, or sleeping. The system used these patterns to save energy by automatically turning off the home's HVAC system as much as possible without sacrificing occupant comfort, effectively creating a HiTLCPS. Another example can be found in [14], where an occupancy sensor network was deployed across an entire floor of a university building together with a control architecture (see Figure 4.6) that guided the operation of the building's HVAC system, turning it on or off to save energy, while meeting building performance requirements.

Figure 4.6 Architecture of an HiTL HVAC system [14]. Source: Adapted from Agarwal 2011.
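As a rough illustration of how motion and door sensors might be turned into the three occupancy states mentioned for [122], consider the rule-based sketch below. The rules, thresholds, and HVAC modes are hypothetical placeholders; the cited systems may well rely on different, learned models.

```python
from enum import Enum


class Occupancy(Enum):
    AWAY = "away"
    ACTIVE = "active"
    SLEEPING = "sleeping"


def infer_occupancy(minutes_since_motion, door_opened_after_last_motion, hour,
                    quiet_after_min=30.0, night_start=22, night_end=7):
    """Classify the home's state from simple sensor summaries (assumed rules)."""
    quiet = minutes_since_motion >= quiet_after_min
    if quiet and door_opened_after_last_motion:
        return Occupancy.AWAY       # someone left and nothing has moved since
    night = hour >= night_start or hour < night_end
    if quiet and night:
        return Occupancy.SLEEPING   # quiet house at night, nobody left
    return Occupancy.ACTIVE


def hvac_mode(state):
    """Map the inferred state to an HVAC mode (mode names are illustrative)."""
    return {
        Occupancy.AWAY: "off",
        Occupancy.SLEEPING: "setback",
        Occupancy.ACTIVE: "comfort",
    }[state]
```

Keeping the inference and the actuation policy in separate functions mirrors the HiTLCPS loop: sensing feeds state inference, and the inferred state drives actuation.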

4.2.2 HiTL in Healthcare

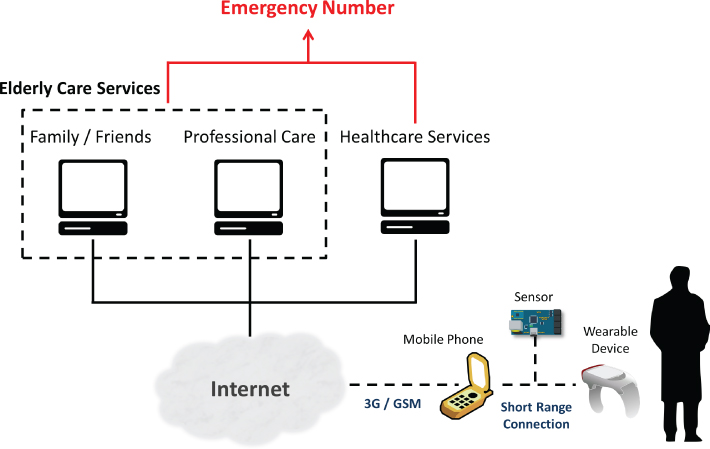

CAALYX [15] was a research project that intended to develop a lightweight wearable device for monitoring elderly people, which could measure specific vital signs, detect falls, and automatically communicate in real time with assistance services in case of emergency (Figure 4.7). A number of wireless sensors that detected several vital signs (blood pressure, heart rate, temperature, respiratory rate, etc.) were used. These wireless sensors communicated within a body-area network and with a mobile phone with GPRS access. The mobile phone was able to analyze the data and detect emergency situations, during which it would contact an emergency service, regardless of the elderly person's location. The CAALYX project also developed an initial simulation of its workings in the Second Life® virtual world, as a means of disseminating and showcasing the project's concepts to wider audiences [123]. There are two interesting aspects of this work: its use of mobile phones as gateways for ubiquitous communication and its early attempts at integrating health monitoring with virtual environments.

Figure 4.7 Diagram showing the main components of CAALYX's roaming monitoring system [15]. Source: Adapted from Boulos et al. 2007.

Schirner et al. [16] stress the existing multidisciplinary challenges associated with the acquisition of human states in HiTLCPS. For example, since embedded systems are key components of these systems, they propose a holistic methodology for system automation in which designers develop their algorithms in high-level languages and fit them into an electronic system level tool suite which acts as a system compiler, producing code for both the CPU and the field-programmable gate array. This automation allows researchers to more easily test their algorithms in real-life scenarios and to focus on the important task of algorithm and model development. Schirner et al. also used an EEG-based brain–computer interface for context-aware sensing of a human's status, which influenced the control of an electrical wheelchair. To improve the intent inference accuracy, the authors suggest that inference algorithms should adapt to the current application as well as to the user's preferences and historical behavior; that is, they should use application-specific history and contextual information. The field of robotics is also addressed, as robots are the primary means for actuation and interaction with the physical world in CPSs. Their semi-autonomous wheelchair interpreted brain signals as high-level tasks such as “navigate to kitchen”, and then executed path planning and obstacle avoidance [16]. However, important research questions are still unresolved in this area, namely the problem of dividing control between human and machine, as well as the modularity and configurability of such systems. Distributed sensor architectures are also very important for HiTL since they allow the measurement of physiological changes, which may be processed to infer current human activities, psychological states, and intent. In this regard, BCC was presented as a means of supporting low-energy usage, high bandwidth, heterogeneity, and reduced interference.

At the Worcester Polytechnic Institute [124], a HiTLCPS prototype platform and open design framework for a semi-autonomous wheelchair was developed. The authors considered disabled individuals, namely those suffering from “locked-in syndrome”, a condition in which an individual is fully aware and awake but all voluntary muscles of the body are paralyzed. To improve the life condition of these individuals, they created a HiLCPS wheelchair system which used infrared and ultrasonic sensors to navigate through indoor environments, enabling the user to share control with the wheelchair in an HiTL fashion. This allowed handicapped individuals to live more independently and have mobility. The resulting prototype used modular components to provide the wheelchair with a degree of semi-autonomy that would assist users of powered wheelchairs to navigate through the environment. This work was extended in [16], where the user could interface with the wheelchair through a brain–computer interface based on steady-state visual-evoked potentials induced by flickering light patterns in the operator's visual field (see Figure 4.8). A monitor showed flickering checkerboards with different frequencies. Each checkerboard and frequency corresponded to one of four desired locations. When the operator focused on a desired checkerboard on the monitor, his/her visual cortex predominantly synchronized with the checkerboard's flickering harmonic frequencies. These frequencies were detected through an electrode on the scalp near the occipital lobe, where the visual cortex is located.

Figure 4.8 A semi-autonomous wheelchair receives brain signals from the user and executes the associated tasks of path planning, obstacle avoidance, and localization [16]. Source: Adapted from Schirner 2013.
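The frequency-detection step of such a steady-state visual-evoked potential interface can be sketched as follows: the signal power at each checkerboard's flicker frequency and its harmonics is compared, and the strongest response selects the destination. This is a minimal sketch, not the signal-processing chain of [16]; the flicker frequencies, destination names, sampling rate, and helper names (`band_power`, `detect_target`) are all assumptions made for the example.

```python
import math


def band_power(samples, fs, freq):
    """Power of `samples` at `freq` Hz, computed with a single-bin DFT."""
    n = len(samples)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs)
             for i, x in enumerate(samples))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs)
             for i, x in enumerate(samples))
    return (re * re + im * im) / n


def detect_target(samples, fs, targets, harmonics=2):
    """Return the destination whose flicker frequency (plus harmonics) dominates."""
    scores = {
        place: sum(band_power(samples, fs, f * h)
                   for h in range(1, harmonics + 1))
        for place, f in targets.items()
    }
    return max(scores, key=scores.get)


# Hypothetical mapping of flicker frequencies (Hz) to destinations.
TARGETS = {"kitchen": 8.0, "bedroom": 10.0, "bathroom": 12.0, "living room": 15.0}

# Synthetic one-second recording: a 10 Hz response with a weaker 20 Hz harmonic.
FS = 256
signal = [math.sin(2 * math.pi * 10 * i / FS)
          + 0.3 * math.sin(2 * math.pi * 20 * i / FS)
          for i in range(FS)]
print(detect_target(signal, FS, TARGETS))
```

A real EEG recording would be far noisier than this synthetic signal, so practical systems typically add filtering and confidence thresholds before acting on a detection.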

On the other hand, there are other projects that focus on the development of intelligent wheelchairs with HiTL to assist disabled people. The work “I Want That” [74] proposes a system that controls a commercially available wheelchair-mounted robotic arm. Since people with cognitive impairments may not be able to navigate the manufacturer-provided menu-based interface, the authors improved it with a vision-based system which allows users to directly control the robotic arm to autonomously retrieve a desired object from a shelf. To do so, they use a touchscreen which displays a shoulder camera view, an approximation of the viewpoint of the user in the wheelchair. An object selection module streams the live image feed from the camera and computes the position of the objects. The user can indicate “I want that” by pointing to an object on the screen. Afterwards, a visual tracking module recognizes the object from a template database while the robotic arm grabs the object and gives it to the user.

A vision-based robot-assisted device to facilitate daily living activities of spinal cord injured users with motor disabilities is also proposed in [125], through an HiTL control of a robotic arm. The research objective was to reduce time for task completion and the cognitive burden for users interacting with unstructured environments via a wheelchair-mounted robotic arm. Initially, the user needed to indicate the approximate location of a desired object in the camera's field of view using one of a number of diverse user interfaces, including a touchscreen, a trackball, a jelly switch, and a microphone. Afterwards, the user could order the robotic arm to center the object of interest in the visual field of the camera, and then grab the desired object.

A model-driven design and validation of closed-loop medical device systems is presented in [126]. The safety of a closed-loop control system of interconnected medical devices and mechanisms was studied in a clinical scenario, with the objective of reducing the possibility of human error and improving patient safety. A patient-controlled analgesia pump delivered a drug to the patient at a programmed rate while a pulse oximeter received physiological signals and processed them to produce heart rate and peripheral capillary oxygen saturation outputs. A supervisor component got these outputs and used a patient's model to calculate the level of drug in the patient's body. This, in turn, influenced the physiological output signals through a drug absorption function. Based on this information, the supervisor decided whether to send a stop signal to the pump. The main contribution of the project was the methodology for the analysis of safety properties of closed-loop medical device systems.
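The supervisor logic described above can be illustrated with a toy example. The sketch assumes a one-compartment drug model advanced with Euler steps, and all thresholds are arbitrary placeholders with no clinical meaning; neither the model nor the names (`Vitals`, `estimate_drug_level`, `should_stop_pump`) come from [126].

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    heart_rate_bpm: float
    spo2_percent: float


def estimate_drug_level(level, infusion_rate, dt, elimination_rate):
    """Advance an assumed one-compartment patient model by one Euler step."""
    return level + dt * (infusion_rate - elimination_rate * level)


def should_stop_pump(vitals, drug_level,
                     spo2_min=90.0, hr_min=50.0, level_max=10.0):
    """Decide whether to send a stop signal (placeholder thresholds only)."""
    return (vitals.spo2_percent < spo2_min
            or vitals.heart_rate_bpm < hr_min
            or drug_level > level_max)


# One supervisor iteration: update the model, then check the oximeter outputs.
level = estimate_drug_level(level=0.0, infusion_rate=2.0, dt=1.0,
                            elimination_rate=0.1)
stop = should_stop_pump(Vitals(heart_rate_bpm=70.0, spo2_percent=97.0), level)
```

The value of a model-driven approach is that safety properties, such as "the pump always stops when oxygen saturation falls below the limit", can be checked against the model before the devices are connected to a patient.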

4.2.3 HiTL in Smartphones and Social Networking

Exploiting the line of people-centric sensing, the authors of [10] propose the use of sensors embedded in commercial mobile phones to extrapolate the user's real-world activities, which in turn can be reproduced in virtual settings. The authors' goal was to go further than simply representing locations or objects; they intended to provide virtual representations of humans, their surroundings, and their social interactions. The proposed system prototype implementation was named CenceMe, and allowed members of social networks, namely Second Life®, to process the information sensed by their mobile phones and use this information to extrapolate the user's surroundings and actions (refer to Figure 4.2). The authors proposed to use mobile phone sensors, such as microphones or accelerometers, to infer the user's current activity. In fact, today's mobile phones are powerful enough to run activity recognition algorithms, and the results can be sent to virtual worlds thanks to the phone's mobile Internet capabilities. Activity recognition algorithms extracted patterns from the obtained data, such as the current user status in terms of activity (e.g., sitting, walking, standing, dancing, or meeting friends), and logical location (e.g., at the gym, coffee shop, work, or other). This information was reflected in the virtual world, where the user's friends could see what activities he/she was performing at a given moment and his/her current geographical position. Real-world activities could be mapped to different activities in the virtual world; for example, the user could choose to have real-world running represented by flying in the virtual world. The avatar's clothes and accessories could also be changed according to a user's location; the avatar's shirt could display a logo of the user's current location (cafe, home, school, etc.). 
The authors also used external sensors to complement the ones provided by the mobile phone; namely, they suggested the use of galvanic skin response sensors to infer emotional states and stress levels. The activity recognition algorithms ran on the mobile device in order to reduce communication costs and the computational burden on the server. However, in cases where the algorithms were too intensive for handheld devices, the computation was partially or even completely performed on the server side. The use of mobile phones as a means of sensing and communicating with virtual realities is an important aspect of this work, as relying on common and easily accessible technologies fosters the adoption of these new systems by more users.

A few more recent mobile applications attempted to combine context awareness with user social connections. Highlight [127] is a social application that allows users to learn more about the people around them by displaying profiles of nearby users. The application presents several data items, including names, photos, mutual friends, and other information users have chosen to share, as well as a tiny map that shows their recent location (in a fashion similar to the mockup shown in Figure 4.9). The closer a person is to the user (the more interests, friends, or history they have in common), the more likely the user will be notified of their presence. According to Paul Davison, Highlight's chief executive officer, the application “started with the idea that if you can just take two people and connect them, you can make the world a better place” [128]. Thus, Highlight hopes to increase synchronicity and reduce the friction in meeting new people, allowing users to know a few things about each other in advance.

Figure 4.9 A mockup of a map interface similar to the Highlight application.

The SceneTap [129] application is an even more flexible and complex example of detection of people for social networking purposes. The application uses anonymous facial detection software to approximate the age and gender of people entering a nightclub environment. By counting the number of people entering and leaving a venue, the application can estimate and report crowd size, gender ratios, and the average age of people in a given location. This information is shared among users, allowing them to better plan their nights out and decide which nightlife establishments are a better fit for their desires.
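The aggregation step described above is simple enough to sketch. The class below is a hypothetical illustration, not SceneTap's implementation: it assumes an upstream detector that already supplies an estimated age and gender for each person entering, and, since anonymous detection cannot match a departure to an earlier arrival, it computes demographics over everyone who has entered.

```python
class VenueCounter:
    """Aggregate anonymous door detections into venue statistics (sketch)."""

    def __init__(self):
        self.entries = []  # (estimated_age, estimated_gender) per entry
        self.exits = 0

    def record_entry(self, estimated_age, estimated_gender):
        self.entries.append((estimated_age, estimated_gender))

    def record_exit(self):
        self.exits += 1

    def report(self):
        n = len(self.entries)
        if n == 0:
            return {"crowd_size": 0, "female_ratio": None, "average_age": None}
        females = sum(1 for _, gender in self.entries if gender == "F")
        return {
            "crowd_size": max(0, n - self.exits),
            "female_ratio": females / n,
            "average_age": sum(age for age, _ in self.entries) / n,
        }
```

Only aggregate figures leave the `report` method, which reflects the anonymous nature of the detection: no individual record needs to be shared with users.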

HiTL concepts have also been applied to smartphone data usage. In fact, HiTL has previously been proposed as a solution for addressing the increasing demand for wireless data access [118]. Since the wireless spectrum is limited and shared, and transmission rates can hardly be improved solely with physical layer innovations, a “user-in-the-loop” mechanism was proposed that promoted spatial control, in which the user is encouraged to move to a less congested location, and temporal control, in which incentives, such as dynamic pricing, ensure that the user reduces or postpones his/her current data demand in case the network is congested. This closed loop controlled user activity itself through suggestions and incentives, influenced by the current location's signal-to-interference-plus-noise ratio and traffic situation. The authors propose that users receive control information in the form of a graphical user interface, showing a map and directions towards a better location and a better time to start the user traffic session (e.g. outside busy hours).
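A minimal sketch of the two control levers, temporal (dynamic pricing) and spatial (suggesting a better location), might look as follows. The linear pricing rule, the thresholds, and the dictionary fields are assumptions made for illustration and do not come from [118].

```python
def dynamic_price(base_price, load, threshold=0.7, slope=4.0):
    """Temporal control: price rises linearly once cell load (0..1) passes a threshold."""
    return base_price * (1.0 + slope * max(0.0, load - threshold))


def better_location(current, nearby, max_walk_m=200.0, min_gain_db=3.0):
    """Spatial control: suggest the reachable spot with the best SINR, if worthwhile."""
    reachable = [spot for spot in nearby if spot["distance_m"] <= max_walk_m]
    if not reachable:
        return None
    best = max(reachable, key=lambda spot: spot["sinr_db"])
    if best["sinr_db"] - current["sinr_db"] >= min_gain_db:
        return best
    return None  # moving would not improve the signal enough to be worth it


here = {"sinr_db": 5.0}
spots = [
    {"name": "lobby", "sinr_db": 12.0, "distance_m": 80.0},
    {"name": "roof", "sinr_db": 20.0, "distance_m": 500.0},
]
suggestion = better_location(here, spots)  # the roof is too far, so the lobby wins
```

In both cases the system only suggests or incentivizes; the user remains free to ignore the advice, which is what makes the loop human-centered.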

Another area that has been closely linked with smartphones and HiTLCPS is that of recommendation systems. In this information age, the ability to quickly and accurately understand consumers' desires allows companies to control supply and demand in a timely manner and to cope with quick changes in consumer trends. There has been a considerable amount of research in the area of data mining to derive intelligence from large amounts of transaction records, so that individual consumer marketing strategies can be developed [130]. Since consumers are also influenced by relevant information provided by retailers, context-aware recommendation systems can have a considerable effect on consumerism dynamics. In particular, smartphones are strong candidates for sensing and understanding consumer context (Data Acquisition and Inference) and powerful dissemination vectors for recommendations (Actuation). In a sense, these systems can be considered open-loop HiTLCPSs as they usually do not directly affect the environment or the consumer, but merely suggest products and services. A very large body of research work has studied how smartphones can be used in this context. However, for the sake of brevity, we will only present a few examples of such work.

In [130], context-aware recommendation systems for smartphones were divided into two modules to provide product recommendations. First, a simple RSSI indoor localization module located the user's position and determined his/her context information. Shopping centers would be equipped with RFID readers as part of a consumer location mechanism. Consumers would place their RFID tags close to the readers and let them recognize their identity and location (based on which reader was accessed). Second, a recommendation module provided directed product information to users through association rule mining. The system performed recommendation calculations pertaining to merchandise in the region of the user, and passed on this information through the smartphone.
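The second module can be illustrated with a small association-rule example restricted to item pairs. This is a simplified sketch rather than the algorithm of [130]: the support and confidence thresholds, the function names, and the toy transactions are assumptions, and production systems would typically use a full frequent-itemset miner such as Apriori.

```python
from collections import Counter
from itertools import combinations


def mine_rules(transactions, min_support=0.3, min_confidence=0.6):
    """Return {antecedent: [(consequent, confidence), ...]} for item pairs."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in set(t))
    pair_counts = Counter(pair for t in transactions
                          for pair in combinations(sorted(set(t)), 2))
    rules = {}
    for (a, b), count in pair_counts.items():
        if count / n < min_support:
            continue  # the pair is too rare to be trusted
        for x, y in ((a, b), (b, a)):
            confidence = count / item_counts[x]
            if confidence >= min_confidence:
                rules.setdefault(x, []).append((y, confidence))
    return rules


def recommend(rules, basket, zone_items):
    """Suggest items sold in the user's current zone that the basket implies."""
    picks = {y: conf for x in basket for y, conf in rules.get(x, [])
             if y in zone_items and y not in basket}
    return sorted(picks, key=picks.get, reverse=True)


history = [
    ["bread", "butter"],
    ["bread", "butter", "jam"],
    ["bread", "milk"],
    ["milk", "cereal"],
    ["bread", "butter"],
]
rules = mine_rules(history)
# The zone would come from the RFID reader the consumer last used.
print(recommend(rules, basket={"bread"}, zone_items={"butter", "jam"}))
```

Filtering by `zone_items` is where the localization module meets the recommendation module: only merchandise in the user's region is suggested.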

Another example can be found in [17], where the authors created a recommendation system to suggest smartphone applications. The motivation behind this work is the huge number of available apps; Google's own Android Play Store currently has over 1,600,000 applications [131]. Bayesian networks processed data from several of the smartphone's sensors, including accelerometer, light, GPS, time of day, and date, to perform context inference (see Figure 4.10). From this information, the recommendation system was able to associate the user's context with application categories of interest, based on the authors' domain knowledge. The categories included communications, health and fitness, medical, media and video, news and magazines, weather, business, social, games, and traffic information.

Figure 4.10 Overview of the system proposed in [17]. Source: Adapted from W.-H. Rho and S.-B. Cho 2014.
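To give a feel for probabilistic context inference, the sketch below uses a naive Bayes model, which is a much simpler stand-in for the modular Bayesian networks of [17]. The contexts, binary features, probabilities, and context-to-category mapping are all invented for the example; only the category names are taken from the list above.

```python
# Hypothetical hand-specified model: P(context) and P(feature is true | context).
PRIOR = {"commuting": 0.3, "exercising": 0.2, "at_work": 0.5}
LIKELIHOOD = {
    "commuting":  {"moving": 0.8, "bright": 0.6, "morning": 0.7},
    "exercising": {"moving": 0.9, "bright": 0.7, "morning": 0.4},
    "at_work":    {"moving": 0.1, "bright": 0.5, "morning": 0.5},
}
CATEGORY = {
    "commuting": "traffic information",
    "exercising": "health and fitness",
    "at_work": "business",
}


def posterior(evidence):
    """Return P(context | evidence) assuming features are independent given context."""
    scores = {}
    for context, prior in PRIOR.items():
        p = prior
        for feature, present in evidence.items():
            p_true = LIKELIHOOD[context][feature]
            p *= p_true if present else 1.0 - p_true
        scores[context] = p
    total = sum(scores.values())
    return {context: s / total for context, s in scores.items()}


def recommend_category(evidence):
    """Map the most probable context to an application category."""
    post = posterior(evidence)
    return CATEGORY[max(post, key=post.get)]


print(recommend_category({"moving": True, "bright": True, "morning": True}))
```

In this toy model the probabilities play the role that the authors' domain knowledge plays in [17]: they encode how strongly each sensor reading is associated with each context.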

In a similar vein, the authors in [132] proposed AppJoy, a system that also made personalized application recommendations; however, instead of using the smartphone's sensors to understand context, the system actually analyzed how the user interacted with his/her installed applications. AppJoy measured usage scores for each app, which were then used by a collaborative filter algorithm to make personalized recommendations. What the user did directly affected his/her application profiling. AppJoy followed a ubiquitous usability approach, being completely automatic, without requiring manual input, and adapted to changes of the user's application taste.
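The step from usage scores to recommendations can be sketched with weighted Slope One, a generic item-based collaborative filter chosen here for brevity; the text above does not say which filter AppJoy used, and the usage scores, user names, and app names below are made up.

```python
def slope_one_predict(scores, user, target):
    """Predict `user`'s usage score for app `target` with weighted Slope One."""
    numerator = 0.0
    weight = 0
    for other_app, user_score in scores[user].items():
        # Score differences between the two apps among users who have both.
        diffs = [s[target] - s[other_app] for s in scores.values()
                 if target in s and other_app in s]
        if diffs:
            numerator += (sum(diffs) / len(diffs) + user_score) * len(diffs)
            weight += len(diffs)
    return numerator / weight if weight else None


def recommend_apps(scores, user):
    """Rank apps the user has not installed by predicted usage score."""
    all_apps = {app for s in scores.values() for app in s}
    predictions = {}
    for app in all_apps - set(scores[user]):
        predicted = slope_one_predict(scores, user, app)
        if predicted is not None:
            predictions[app] = predicted
    return sorted(predictions, key=predictions.get, reverse=True)


# Hypothetical usage scores, e.g. derived from how often each app is used.
usage = {
    "ann": {"maps": 5.0, "chat": 3.0, "news": 2.0},
    "bob": {"maps": 3.0, "chat": 4.0},
    "cat": {"chat": 2.0, "news": 5.0},
}
print(recommend_apps(usage, "bob"))
```

Because the scores are measured rather than entered by hand, the recommendations follow changes in the user's habits without any manual rating.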

Let us summarize the projects presented in this section by looking at Table 4.2.

Table 4.2 Summary of experimental HiTLCPS projects

| Project | Main objectives and features |
|---|---|
| Industry and home | |
| Reducing Energy Waste for Computers by Human-in-the-loop Control [13] | Attempted to reduce the energy waste in computer workstations by detecting distractions |
| Can Technology Make You Happy? [120] | Used the Hitachi Business Microscope to acquire workspace behavioral data. This data was then used in conjunction with positive psychology to improve people's mood, creativity, and productivity |
| Handbook of Ambient Intelligence and Smart Environments [121] | Focused on human–computer interaction within smart environments and described several prototype services (proactive phone/communication, information reminders, collaborative supportive workspaces, speech translators, etc.) |
| The Smart Thermostat [122]; Duty-cycling buildings aggressively [14] | Smart HVAC systems that used HiTL control to improve performance and save energy |
| Healthcare | |
| CAALYX [15] | Used wearable devices to measure specific vital signs and detect falls of elderly people. Mobile phones with GPRS access were also used to analyze data and automatically communicate with assistance services |
| Modular Designs for Semi-autonomous Wheelchair Navigation [124]; The Future of Human-in-the-loop Cyber-physical Systems [16] | Presented a semi-autonomous wheelchair that navigated through indoor environments and was controlled through a brain–computer interface based on flickering light patterns |
| I Want That [74]; HiTL control of an assistive robotic arm in unstructured environments for spinal cord injured users [125] | HiTL control of wheelchair-mounted robotic arms through touchscreens, visual tracking of objects, trackballs, jelly switches, and microphones |
| Toward patient safety in closed-loop medical device systems [126] | Design and validation of a closed-loop medical device system that consisted of an HiTL-controlled analgesia pump |
| Smartphones and social networking | |
| CenceMe [10] | Second Life® users could use their smartphone's sensors to automatically detect and share their location and actions in the virtual world |
| Highlight [127] | Mobile social application that allows users to learn more about the people around them by displaying profiles of nearby users |
| SceneTap [129] | Application that used anonymous facial detection software to approximate the age and gender of people entering a nightclub |
| User-in-the-loop: spatial and temporal demand shaping for sustainable wireless networks [118] | HiTL control of the wireless spectrum through suggestions and incentives that encouraged less congested locations and the reduction of data demand |
| Recommendation-aware Smartphone Sensing System [130]; Context-aware smartphone application category recommender system with modularized Bayesian networks [17]; AppJoy [132] | HiTL recommendation systems for smartphones that used location, sensors, and interaction to understand user context |

4.3 In Summary

The objective of this chapter was two-fold. In Section 4.1, we presented some of the technologies (summarized in Table 4.1) that can support current and future HiTLCPSs in terms of Data Acquisition, State Inference, and Actuation. This section was meant to give the reader an overview of the tools currently available.

On the other hand, Section 4.2 presented several projects and scientific prototypes (summarized in Table 4.2) to give the reader a better idea of how the previously presented HiTL concepts and technologies can be applied in real-world scenarios.

As such, we hope that, by now, the reader has enough awareness of the theoretical concept behind HiTLCPSs to begin a practical exercise. In fact, in the next part of this book we will attempt to strengthen this theoretical understanding through a hands-on approach.