Base and Parallel Sysplex

A sysplex has been available since 1990 when it was announced as a platform for an evolving large system computing environment. Sysplex provides a commercial platform that supports the nondisruptive addition of scalable processing capacity, in increments matching the growth of workload requirements for customers, without requiring re-engineering of customer applications or re-partitioning of databases. Sysplex also allows the computing environment to reach almost unimaginable levels of continuous availability or 24 by 7. Sysplex technology is built on existing data processing skills and runs existing applications with additional cost savings.

A sysplex (SYStems comPLEX) is not a single product that you install in your data center. A sysplex is a collection of z/OS systems that cooperate, using certain hardware, software, and microcode, to process workloads, provide higher continuous availability, easier systems management, and improved growth potential over a conventional computer system of comparable processing power. This chapter is an overview of a sysplex, including the following topics:

•Sysplex benefits, evolution, and philosophy

•Required software and hardware for sysplex

•Coupling facility

•The z/OS sysplex services components: XCF and XES

•Several types of couple data set (CDS)

•The sysplex configurations: base sysplex and Parallel Sysplex

•Sysplex exploiters

•Coupling facility structure rebuild and duplexing

•Coupling facility configuration and availability

•An overview of settings for sysplex

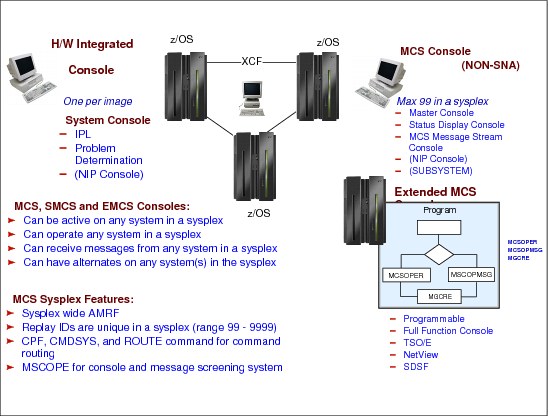

•Consoles and sysplex management

1.1 Evolution to a Parallel Sysplex

Figure 1-1 Evolution to a Parallel Sysplex

Evolution to a Parallel Sysplex

A Parallel Sysplex is the most recent development in the evolution of IBM large systems. Large system configurations have evolved from a single system to a Parallel Sysplex in the following progression:

•Single system uniprocessor

A single copy of the z/OS (or its ancestor) operating system manages the processing of a central processor complex (CPC) that has a single Central Processor (CP), also called a CPU.

•Tightly coupled multiprocessors

A single copy (also called an image) of the z/OS operating system manages more than one CP sharing the same central storage, thus allowing several transactions to have their programs executed in parallel.

•Loosely coupled configuration

In this configuration, more than one CPC (each possibly a tightly coupled multiprocessor) shares DASD but not central storage. The CPCs are connected by channel-to-channel communications and are managed by more than one z/OS image.

•Base sysplex

A base sysplex is similar to a loosely coupled configuration, but the z/OS systems and application programs use a standard communication mechanism, called XCF, for exchanging messages. This configuration makes management easier because it provides a greater degree of communication and cooperation among systems and a more unified system image, with a single z/OS console group to manage all components.

•Parallel Sysplex

A Parallel Sysplex has up to 32 z/OS systems, as in a base sysplex, but it contains a coupling facility (CF). The coupling facility is a global and intelligent memory that provides multisystem data sharing (with total integrity), workload balancing, high performance communication, and many other advantages.

Table 1 on page 3 summarizes the characteristics in terms of availability, capacity, and system management according to the system configuration.

Table 1 Evolution to a Parallel Sysplex

| Configuration | Capacity | Continuous availability | Systems management |
|---|---|---|---|
| Single system uniprocessor | Limited by the size of the largest single CP. | Single points of failure and disruptive changes. | Easy. |
| Tightly coupled multiprocessors | Limited by the maximum number of CPs in the CPC. | Single points of failure and disruptive changes. | Easy. |
| Loosely coupled configuration | Increased over tightly coupled. | Increased over tightly coupled. | Each system must be managed separately. Complexity grows with the number of systems. |
| Base sysplex | Same as loosely coupled. | Better than loosely coupled because of the cooperation. | Single z/OS console group to manage all components. |
| Parallel Sysplex | Ability to add incremental capacity to match workload growth. | Total (24 by 7), if there are not multiple concurrent failures. | Multisystem data-sharing capability, multisystem workload balancing, enhanced single-system image. |

1.2 SYStems comPLEX or sysplex

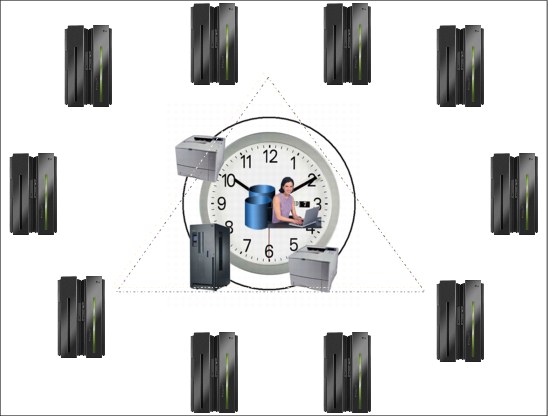

Figure 1-2 Systems complex or sysplex

SYStems comPLEX or sysplex

Parallel and clustered systems initially found in numerically intensive markets (engineering and scientific) have gained increasing acceptance in commercial segments as well. The architectural elements of these systems span a broad spectrum that includes massively parallel processors that focus on high performance for numerically intensive workloads, and cluster operating systems that deliver high system availability.

Parallel Sysplex clustering

Parallel Sysplex clustering contains innovative multisystem data-sharing technology, allowing direct concurrent read/write access to shared data from all processing images in a parallel configuration, without sacrificing performance or data integrity. Each image is able to concurrently cache shared data in a global electronic memory through hardware-assisted cluster-wide serialization and coherency controls. This in turn enables work requests associated with a single workload, such as business transactions or database queries, to be dynamically distributed for parallel execution on nodes in a sysplex cluster, based on available processor capacity. Through this cluster technology, the power of multiple zSeries processors can be harnessed to work in concert on common workloads, taking the commercial strengths of the z/OS platform to improved levels of competitive price performance, scalable growth, and continuous availability. Prior to the Parallel Sysplex, S/390 (now called System z®) customers had been forced to contain the capacity requirements of a workload within the technology limits imposed by the largest single symmetric multiprocessor available (symmetric meaning that all CPUs are the same).

1.3 The sysplex symmetry

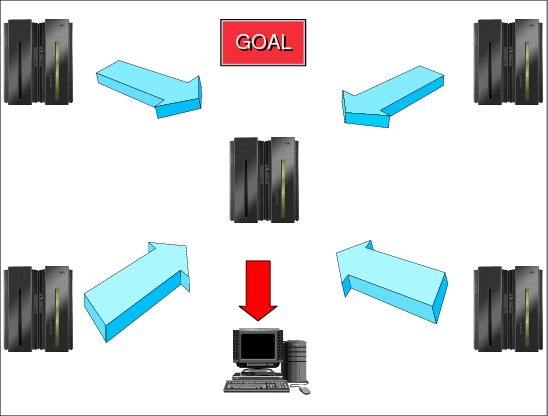

Figure 1-3 The sysplex symmetry

The sysplex symmetry

You can think of a sysplex as a symphony orchestra. The orchestra consists of violins, flutes, oboes, and so on. Think of each instrument as representing a different product (or component) in the sysplex. The fact that you have several of each instrument corresponds to having several images of the same product in the sysplex.

Think of symmetry in the orchestra in the following ways:

•All the violins (or whatever instrument) sound basically the same, and play the same musical part.

•All the instruments in the orchestra share the same musical score. Each instrument plays the appropriate part for that instrument.

Similarly in the sysplex, you can make all the systems, or a subset of them, look alike (clone systems) and do the same work. All the systems can access the same database, and the same library of programs, each one using the information it needs at any point in time. The concept of symmetry allows new systems to be easily introduced, and permits automatic workload distribution all the time, even in the event of failure or when an individual system is scheduled for maintenance. Symmetry also significantly reduces the amount of work required by the systems programmer in setting up the environment.

In an asymmetric sysplex, each system has its own software and hardware configurations, so some of the system management benefits of being in a sysplex are lost.

1.4 Sysplex philosophy

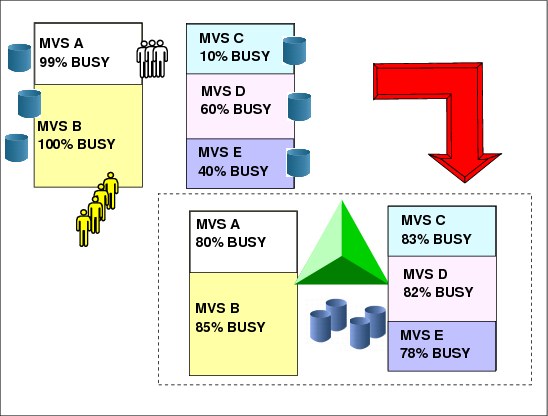

Figure 1-4 Sysplex philosophy

Sysplex philosophy

A new violinist who joins the symphony orchestra receives a copy of the score, and begins playing with the other violinists. The new violinist has received a share of the workload. Similarly in a sysplex, if you add a system, the Customer Information Control System (CICS®) OLTP transaction workload can be automatically rebalanced so that the new system gets its share, provided you have set up the correct definitions in your sysplex.

Dynamic workload balancing

Theoretically, in the CICS OLTP environment, transactions coming into the sysplex for processing can be routed to any system. CICS uses the z/OS component called Workload Manager (WLM), along with CICSPlex® System Manager (CPSM), to dynamically route CICS transactions. For this to happen in the sysplex, you need symmetry; the systems across which you want to automatically balance the workload must have access to the same data, and have the same applications that are necessary to run the workload. In other words, no system affinity should be allowed.
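The link between symmetry and dynamic routing can be illustrated with a minimal sketch. It is not how WLM or CPSM is implemented; the `SystemImage` class, the `route` function, the system names, and the utilization figures are all hypothetical and exist only for illustration. The sketch shows one point: a transaction can go to the least busy system only if that system has the application it needs.

```python
from dataclasses import dataclass, field


@dataclass
class SystemImage:
    """Hypothetical model of one z/OS image in the sysplex."""
    name: str
    cpu_utilization: float                      # fraction of capacity in use, 0.0 to 1.0
    applications: set = field(default_factory=set)


def route(transaction_app, systems):
    """Pick the least busy system among those able to run the application."""
    eligible = [s for s in systems if transaction_app in s.applications]
    if not eligible:
        raise LookupError(f"no system can run {transaction_app!r}")
    return min(eligible, key=lambda s: s.cpu_utilization)


# Symmetric sysplex: every image can run every application.
symmetric = [
    SystemImage("SYSA", 0.90, {"PAYROLL", "ORDERS"}),
    SystemImage("SYSB", 0.40, {"PAYROLL", "ORDERS"}),
    SystemImage("SYSC", 0.65, {"PAYROLL", "ORDERS"}),
]

# Asymmetric sysplex: ORDERS has an affinity to SYSA.
asymmetric = [
    SystemImage("SYSA", 0.90, {"PAYROLL", "ORDERS"}),
    SystemImage("SYSB", 0.40, {"PAYROLL"}),
]

print(route("ORDERS", symmetric).name)   # the least busy image
print(route("ORDERS", asymmetric).name)  # the only eligible image, however busy
```

In the asymmetric case the router has no choice, which is the practical meaning of "no system affinity should be allowed".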

Data sharing

We noted earlier that in the symphony orchestra, all the instruments share the same musical score, each playing the appropriate part. You can think of the musical score as a kind of database. Part of the definition of symmetry, as used in this book, is systems sharing the same resources. Important resources for systems to share are data and programs, either in the form of a database or of data sets. Symmetry through systems sharing the same database and program libraries facilitates dynamic workload balancing and availability. The coupling facility technology, together with the support in the database managers, provides the data sharing capability.

You improve application availability by using products that provide data sharing with the coupling facility technology, such as Information Management System Database Manager (IMS™ DB), DB2® Universal Data Base for z/OS (DB2), VSAM RLS, or Transactional VSAM Services (DFSMStvs).

Incremental growth

The conductor can add violins, or other instruments, to the orchestra one by one until the desired effect is achieved. The conductor would not want to hire five more violinists if only two are needed at the moment. A sysplex exhibits the same incremental growth ability. Rather than adding capacity in large chunks, most of which might remain idle, you can add small chunks closer to the size you need at the moment.

Also, the introduction of a new violinist is nondisruptive. It is possible (although you might see this only in the most novel of musical pieces) that the violinist could walk onto the stage in the middle of the concert, take a seat, and begin playing with the others. There is no need to stop the concert. Similarly, with a sysplex, because of symmetry and dynamic workload balancing, you can add a system to your sysplex without having to bring down the entire sysplex, and without having to manually rebalance your CICS OLTP workload to include the new system.

Continuous availability

If a violinist gets sick and cannot be present for a given performance, there are enough other violinists so that the absence of one will probably not be noticeable. If a violinist decides to quit the orchestra for good, that violinist can be replaced with another. A sysplex exhibits similar availability characteristics. One of the primary goals of a sysplex is continuous availability. You can think of availability from these perspectives: the availability of your applications programs and the availability of your data.

With symmetry and dynamic workload balancing, your applications can remain continuously available across changes, and your sysplex remains resilient across failures. Adding a system, changing a system, or losing a system should have little or no impact on overall availability. With symmetry and data sharing, using the coupling facility, you also have enhanced database availability.

Automation plays a key role in availability. Typically, automation routines are responsible for bringing up applications, and if something goes wrong, automation handles the application’s restart. While automation does not play much of a role in our symphony orchestra, the need for automation is quite important in the sysplex, for availability as well as other reasons.

A facility of z/OS called Automatic Restart Manager (ARM) provides a fast, automatic restart capability for failed subsystems, components, and applications. ARM plays an important part in the availability of key z/OS components and subsystems by decreasing the mean-time-to-repair (MTTR), which in turn affects the availability of data.

For example, when a subsystem such as CICS, IMS DB, or DB2 fails, it might be holding resources, such as locks, that prevent other applications from accessing the data they need. ARM quickly restarts the failed subsystem; the subsystem can then resume processing and release the resources, making data available once again to other applications. Note that System Automation for z/OS (SA z/OS), an IBM product that provides automation of operator functions such as start-up, shutdown, and restart of subsystems, has awareness of z/OS Automatic Restart Manager, so that restart actions are properly coordinated. A sysplex is also the framework that provides a single system image.
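To see why a shorter mean-time-to-repair matters, the following sketch applies the usual steady-state approximation, availability = MTBF / (MTBF + MTTR), where MTBF is the mean time between failures. The formula is a standard reliability approximation rather than something defined in this chapter, and the failure interval and restart times are invented figures chosen only to show the trend.

```python
HOURS_PER_YEAR = 24 * 365


def availability(mtbf_hours, mttr_hours):
    """Steady-state availability: fraction of time the component is usable."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def downtime_hours_per_year(mtbf_hours, mttr_hours):
    """Expected unavailable hours in one year for the given MTBF and MTTR."""
    return (1.0 - availability(mtbf_hours, mttr_hours)) * HOURS_PER_YEAR


# Assumed figures: one failure per 1000 hours, a 30-minute manual restart,
# and a 2-minute automated restart.
MTBF_HOURS = 1000.0
manual_restart = availability(MTBF_HOURS, 30 / 60)
automated_restart = availability(MTBF_HOURS, 2 / 60)

print(f"manual restart:    {manual_restart:.6f}")
print(f"automated restart: {automated_restart:.6f}")
```

The failure rate is the same in both cases; only the repair time changes, and that alone raises availability.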

1.5 Single system image

Figure 1-5 Single system image

Single system image

Think of all the violins in the symphony orchestra playing the same part. To the audience, they might sound like one giant violin. The entire orchestra is cooperating to produce the music that the audience hears. In this way, the audience perceives the orchestra as a single entity. This is a good way to picture single system image in the sysplex. You have multiple images of the same product, but they appear, and you interact with them, as one image. The entire sysplex is cooperating to process the workload. In this way, you can think of the collection of systems in the sysplex as a single entity.

Single system image is not a new concept. Many products already provide single system image capability to some degree, or have plans to implement it in the context of commercial enterprise-wide systems management. The important point is, single system image is a key theme in a sysplex. Implementing symmetry in your sysplex facilitates single system image; symmetry facilitates your ability to manage multiple systems in the sysplex as though they were one system. Now, you have the best of two worlds, a logical centralized topology implemented in a physically distributed one.

While single system image is the goal, different IBM and non-IBM products, and even different components within products, are at different stages of development on this issue. Attaining the goal depends on the installation choosing the right options on such products and components.

Different perspectives on single system image

The single system image goal provides different advantages depending on your perspective. The advantage for the end user is the ability to log onto an application in the sysplex, and to be able to access that application without being concerned about which system the application resides on.

For example, CICS uses the VTAM generic resources function, which allows an end user to log on to one of a set of CICS terminal-owning regions (TORs), such as TOR1, TOR2, and TOR3, through a generic name, such as TOR, thus providing single system image for VTAM access to CICS TORs. With dynamic workload management, provided by CICSPlex SM (a CICS component), the logons are then balanced across the CICS TORs; later, when the transactions start to arrive, they will be dynamically distributed through transaction application-owning regions (AORs) that are logically connected to the TOR at logon time. All of this provides a single system image from an application perspective.
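The generic name idea can be sketched as follows. This is an illustrative model only: the `GenericResource` class and its least-sessions rule are assumptions made for the example, and the way VTAM actually selects a member is not described here. Only the names TOR, TOR1, TOR2, and TOR3 come from the text.

```python
class GenericResource:
    """Hypothetical model of one generic name that fronts several real applications."""

    def __init__(self, generic_name, members):
        self.generic_name = generic_name
        # Number of active sessions per real application name.
        self.sessions = {member: 0 for member in members}

    def logon(self):
        """Resolve the generic name to the member with the fewest sessions."""
        target = min(self.sessions, key=self.sessions.get)
        self.sessions[target] += 1
        return target


tor = GenericResource("TOR", ["TOR1", "TOR2", "TOR3"])

# Six users log on to "TOR"; none of them names a specific region.
chosen = [tor.logon() for _ in range(6)]
print(chosen)
print(tor.sessions)
```

The end user only ever sees the generic name, which is what gives the single system image from the user's perspective.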

The advantage to an operator is the ability to control the sysplex as though it is a single entity. For example, through commands with sysplex-wide scope, operators can control all the z/OS images in the sysplex as though only one z/OS image existed.

When TSO/E is part of a sysplex and exists on multiple sysplex members, you can assign a VTAM generic name to all TSO/E and VTAM application programs. A TSO/E and VTAM application on one z/OS system can be known by the same generic resource as a TSO/E and VTAM application on any other z/OS system. All application programs that share a particular generic name can be concurrently active. This means that a user can log on to a TSO/E generic name in the sysplex rather than to a particular system. The generic name can apply to all systems in the sysplex, or to a subset.

Eventually, when all the necessary products provide single system image capability, the result is greatly improved and simplified enterprise-wide systems management. Both IBM and non-IBM products work towards this goal.

Single point of control

The conductor of the symphony controls the entire orchestra from the podium. The conductor does not stand by the violins and conduct them for a while, and then run over and stand by the flutes to conduct them. In a sysplex, an operator or a systems programmer should be able to control a set of tasks for the sysplex from a given workstation.

The sysplex is a little different from the symphony orchestra in that single point of control in the sysplex does not imply a single universal workstation such as a console. The object is not to control every task for every person from one place. A given individual should be able to accomplish the set of tasks pertinent to that individual’s job from one place.

Ideally, you can have multiple consoles, each tailored to a particular set of tasks; for each such console, you can have either a duplicate of that console, or some other mechanism to ensure that for every task, there is an alternative way to accomplish the task in the event the console or its connection to the sysplex fails.

IBM and non-IBM products are furthering the ability to implement single point of control through integrating operations on a workstation. IBM provides an implementation of an integrated operations console through the Tivoli® Management Environment (TME) 10.

1.6 Parallel Sysplex workload balancing

Figure 1-6 Parallel Sysplex workload balancing

Parallel Sysplex workload balancing

You might be wondering what a sysplex could do for you. If your data center is responsible for even one of the following types of work, you could benefit from a sysplex:

•Large business workloads that involve hundreds of end users, or deal with volumes of work that can be counted in millions of transactions per day.

•Work that consists of small work units, such as online transactions, or large work units that can be subdivided into smaller work units, such as queries.

•Concurrent applications on different systems that need to directly access and update a single database without jeopardizing data integrity and security.

Sharing the workload

A sysplex shares the processing of work across z/OS systems and, as a result, offers benefits such as reduced cost, both through more cost-effective processor technology and through the IBM software licensing charges available in a Parallel Sysplex.

Workload balancing

When you are in an environment with multiple systems, the set of performance issues changes. Existing mechanisms for managing system performance are complex and single-system oriented.

To reduce the complexity of managing a sysplex, MVS workload management provides dynamic sysplex-wide management of system resources. MVS workload management is the combined cooperation of various subsystems (such as CICS, IMS, and VTAM) with the MVS workload manager (WLM) component. An installation defines performance goals and a business importance to workloads through WLM. Workload management focuses on attaining these goals through dynamic resource distribution.
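Goal-oriented management can be pictured with a small sketch. It is a deliberate simplification and not the WLM algorithm: the `ServiceClass` type, the ratio used as a performance index, the importance scale (1 as most important), and the selection rule are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ServiceClass:
    """Hypothetical workload with a response-time goal and a business importance."""
    name: str
    importance: int          # assumed scale: 1 is the most important
    goal_seconds: float
    actual_seconds: float

    @property
    def performance_index(self):
        """Above 1.0 means the goal is being missed (assumed definition)."""
        return self.actual_seconds / self.goal_seconds


def next_to_help(classes):
    """Choose the most important workload that is missing its goal, if any."""
    missing = [c for c in classes if c.performance_index > 1.0]
    if not missing:
        return None
    # Most important first; among equals, the one missing its goal by the most.
    return min(missing, key=lambda c: (c.importance, -c.performance_index))


workloads = [
    ServiceClass("ONLINE", importance=1, goal_seconds=0.5, actual_seconds=0.6),
    ServiceClass("QUERY", importance=2, goal_seconds=5.0, actual_seconds=4.0),
    ServiceClass("BATCH", importance=3, goal_seconds=60.0, actual_seconds=120.0),
]

print(next_to_help(workloads).name)
```

BATCH misses its goal by a wider margin, but ONLINE is chosen because business importance comes first in this sketch.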

Workload management

A sysplex provides a different way of managing workloads than was previously used. The emphasis is on defining performance goals for work, and having MVS and the subsystems adapt to meet the goals. This provides for the following:

•A platform for continuous availability so that applications can be available 24 hours a day, 7 days a week, 365 days a year (or close to it).

•The ability to do more work to provide greater capacity and an improved ability to manage response time through the use of goals.

•Greater flexibility and the ability to mix levels of hardware and software.

•The ability to dynamically add systems, which allows an easy path for incremental growth.

•Resource sharing (of tape units, for example), so that resources are used by the system that needs them instead of being dedicated to one system. This enables better environment management, performance, and cost savings.

•Data sharing and resource sharing, which are the fundamental mechanisms that allow installations to have their workload dynamically redistributed between images in the Parallel Sysplex. Workload can be routed to systems where spare capacity exists, avoiding a CEC upgrade while still meeting service level objectives, as shown in Figure 1-6.

For example, CICS usually uses function shipping to a file-owning region (FOR) in order to share VSAM files across multiple CICS application regions. Most of the time this FOR becomes a bottleneck and a single point of failure. In a Parallel Sysplex environment, with data sharing, transactions can be routed to a CICS address space with workload balancing and better response time. This requires a transaction management tool, such as CICSPlex SM, but the basic framework is the Parallel Sysplex.

Sysplex configurations

A sysplex configuration can be either a base sysplex or a Parallel Sysplex. Both configurations are described later in this chapter, after the hardware and software required in a sysplex have been introduced; see “Base sysplex” on page 51 and “Parallel Sysplex” on page 52.

1.7 Sysplex software

Figure 1-7 Sysplex software

Sysplex software

The following types of software exploit sysplex capabilities:

•System

System software is the base software that is enhanced to support a sysplex. It includes the z/OS operating system, JES2 and JES3, and DFSMS.

•Networking

Networking software includes Virtual Telecommunications Access Method (VTAM) and Transmission Control Protocol/Internet Protocol (TCP/IP), which support attachment of a sysplex to a network.

•Data management

Data management software includes data managers that support data sharing in a sysplex, such as Information Management System Database Manager (IMS DB), DATABASE 2 (DB2), and Virtual Storage Access Method (VSAM). Adabas and Oracle can also be included here.

•Transaction management

Transaction management software includes transaction managers that support a sysplex, such as Customer Information Control System (CICS Transaction Server), Information Management System Transaction Manager (IMS TM), and WebSphere Application Server.

•Systems management

Systems management software includes a number of software products that are enhanced to run in a sysplex and exploit its capabilities. The products manage accounting, workload, operations (such as DFSMShsm), performance (such as RMF), security (such as RACF), and configuration (such as HCD).

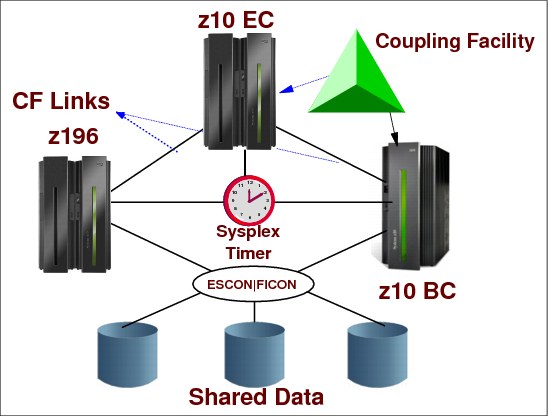

1.8 Sysplex hardware

Figure 1-8 Sysplex hardware

Sysplex hardware

A sysplex is a collection of MVS systems that cooperate, using certain hardware and software products, to process work. A conventional large computer system also uses hardware and software products that cooperate to process work. A major difference between a sysplex and a conventional large computer system is the improved growth potential and level of availability in a sysplex. The sysplex increases the number of processing units and MVS operating systems that can cooperate, which in turn increases the amount of work that can be processed. To facilitate this cooperation, new products were created and old products were enhanced. The following types of hardware participate in a sysplex.

System z processors

Selected models of System z processors can take advantage of a sysplex. These include large water-cooled processors, air-cooled processors, and processors that take advantage of complementary metal-oxide semiconductor (CMOS) technology.

Coupling facility

Coupling facilities enable high performance multisystem data sharing. Coupling facility links, called channels, provide high speed connectivity between the coupling facility and the central processor complexes that use it. The z/OS systems that use the coupling facility can be located in the same CPC as the coupling facility or in different CPCs.

Sysplex Timer

The Sysplex Timer® is an external time reference (ETR) device that synchronizes the time-of-day (TOD) clocks across multiple CPCs in a sysplex. The time stamp from the Sysplex Timer is a way to monitor and sequence events within the sysplex. Server Time Protocol (STP) is a server-wide facility that enables multiple z/OS systems to maintain TOD time synchronization with each other and form a Coordinated Timing Network (CTN). It is a cost-saving replacement for the Sysplex Timer.

FICON and ESCON

The term Fibre Connection (FICON®) represents the architecture as defined by the InterNational Committee for Information Technology Standards (INCITS), and published as ANSI standards. FICON also represents the names of the various System z server I/O features. ESCON® and FICON control units and I/O devices provide the increased connectivity necessary among a greater number of systems.

Enterprise Systems Connection (ESCON) and Fibre Connection (FICON) channels enhance data access and communication in the sysplex. The ESCON directors and FICON directors (switches) add dynamic switching capability for those channels.

FICON is widely used in the System z environment, and provides additional strengths and capabilities compared to the ESCON technology. Many additional capabilities have been included in support of FICON since it was originally introduced. Some control units and control unit functions might require FICON use exclusively. For example, Hyper Parallel Access Volume requires the use of FICON and will not work with ESCON.

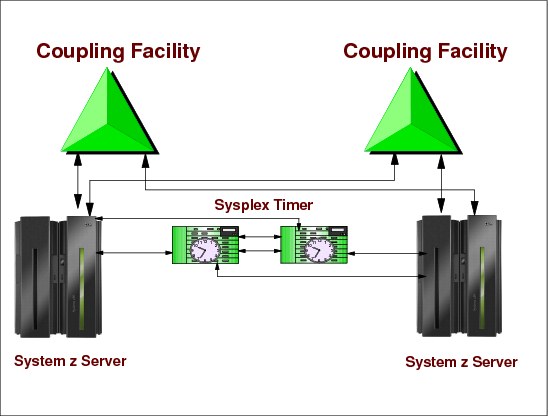

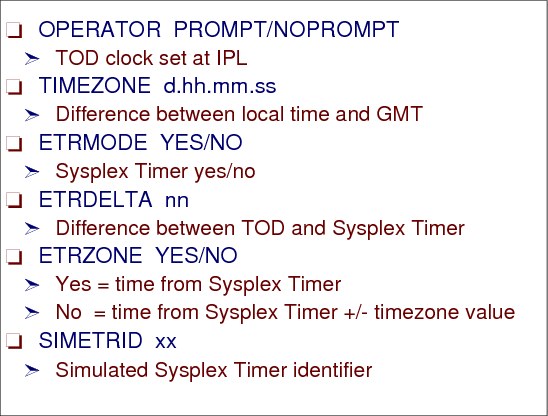

1.9 Sysplex Timer

Figure 1-9 Sysplex Timer

Sysplex Timer

Time is a key variable for commercial programs. In physics there are three time scales:

•Universal Time (UT), based on the rotation of the Earth. It is not used directly for modern timekeeping because the rotation rate varies.

•International Atomic Time (TAI), based on the hyperfine transition frequency of the cesium-133 atom.

•Coordinated Universal Time (UTC), derived from TAI but kept close to UT by inserting or deleting leap seconds.

Several System z processors can execute several task programs in a data processing complex. Each of these processors has a time-of-day (TOD) clock, which is an internal 104-bit register incremented by adding a one in bit position 51 every microsecond. TOD clocks use a UTC time scale.
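The bit-position rule above lends itself to a short worked example. The sketch below treats the high-order 64 bits of the TOD clock as an integer, so that bit position 51 (counting from bit 0 on the left) carries one microsecond. The epoch of 1 January 1900 is an assumption stated here because the text does not give one, leap seconds are ignored, and the function names are hypothetical.

```python
from datetime import datetime, timedelta

# Assumed epoch for a TOD value of zero (not stated in the text above).
TOD_EPOCH = datetime(1900, 1, 1)

# In a 64-bit value, bit position 51 sits 12 bits above the rightmost bit (63 - 51).
MICROSECOND_SHIFT = 63 - 51


def tod_to_datetime(tod):
    """Convert the high-order 64 bits of a TOD clock value to a date and time."""
    microseconds = tod >> MICROSECOND_SHIFT      # drop the sub-microsecond bits
    return TOD_EPOCH + timedelta(microseconds=microseconds)


def datetime_to_tod(moment):
    """Convert a date and time back to a 64-bit TOD value (sub-microsecond bits zero)."""
    delta = moment - TOD_EPOCH
    microseconds = (delta.days * 86_400 + delta.seconds) * 1_000_000 + delta.microseconds
    return microseconds << MICROSECOND_SHIFT


# A value with only the leftmost bit (bit 0) set.
print(tod_to_datetime(1 << 63))
```

Under these assumptions, a value with only bit 0 set corresponds to 11 May 1971, which gives a feel for the range of the 64-bit portion of the clock.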

Multiple TOD clocks

When tasks are shared among different CPUs, multiple TOD clocks can be involved. These clocks might not be in sync with one another. All CPU TODs in the same CPC are internally synchronized. A single time source, an External Time Reference (ETR), is therefore needed to synchronize the TOD clocks of CPUs located in distinct CPCs running z/OS in the same sysplex. The Sysplex Timer is the hardware that is used when the sysplex consists of z/OS systems running in more than one CPC.

|

Note: Currently, you can replace a Sysplex Timer with Server Time Protocol (STP), as shown in “Server Time Protocol (STP)” on page 17. The Sysplex Timer provides synchronization for the TOD clocks of multiple CPUs in distinct CPCs, and thereby allows events started by different CPUs to be properly sequenced in time. For example, when multiple CPUs update the same database, all updates must be time stamped in proper sequence in the database log.

|
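The database log example can be sketched in a few lines. The record layout, the system names, and the timestamps (in microseconds) are invented for illustration; the point is only that merging per-system logs by time stamp gives the true order of updates when the clocks agree, and a misleading order when one clock is off.

```python
import heapq


def merge_logs(*logs):
    """Merge per-system logs, each already sorted by time stamp, into one sequence."""
    return list(heapq.merge(*logs))


def apply_skew(log, offset_us):
    """Return the log as it would look if that system's clock were off by offset_us."""
    return [(ts + offset_us, system, update) for ts, system, update in log]


# Hypothetical records: (time stamp in microseconds, system, update).
sysa_log = [(100, "SYSA", "debit account 1"), (250, "SYSA", "credit account 2")]
sysb_log = [(180, "SYSB", "credit account 1"), (300, "SYSB", "debit account 2")]

synchronized = merge_logs(sysa_log, sysb_log)
skewed = merge_logs(sysa_log, apply_skew(sysb_log, -100))   # SYSB clock runs 100 us behind

for record in synchronized:
    print(record)
```

With the skewed clock, the credit recorded by SYSB appears to precede the debit recorded by SYSA even though it happened later, which is the kind of sequencing error that TOD synchronization prevents.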

Timer functions

There is a long-standing requirement for accurate time and date information in data processing. As single operating systems have been replaced by multiple, coupled operating systems on multiple servers, this need has evolved into a requirement for both accurate and consistent clocks among these systems. Clocks are said to be consistent when the difference or offset between them is sufficiently small. An accurate clock is consistent with a standard time source.
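The two definitions above can be written as simple checks. This is a minimal sketch: the function names, the clock readings, and the tolerance are illustrative assumptions, since the text does not say how small "sufficiently small" is.

```python
def is_consistent(clock_values, tolerance):
    """Clocks are consistent when the largest offset between any two is within tolerance."""
    return max(clock_values) - min(clock_values) <= tolerance


def is_accurate(clock_value, standard_time, tolerance):
    """A clock is accurate when it is consistent with a standard time source."""
    return abs(clock_value - standard_time) <= tolerance


# Hypothetical readings in microseconds, taken at the same instant on three servers.
server_clocks = [1_000_000_002, 1_000_000_001, 1_000_000_003]
standard_time = 1_000_500_000      # reading of the standard time source
TOLERANCE_US = 10                  # assumed tolerance

print(is_consistent(server_clocks, TOLERANCE_US))
print(all(is_accurate(c, standard_time, TOLERANCE_US) for c in server_clocks))
```

In this example the three clocks agree closely with each other but all differ from the standard source by about half a second, so they are consistent without being accurate.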

The IBM z/Architecture, Server Time Protocol (STP), and External Time Reference (ETR) architecture facilitate the synchronization of server time-of-day clocks to ensure consistent time stamp data across multiple servers and operating systems. The STP or ETR architecture provides a means of synchronizing TOD clocks in different servers with a centralized time reference, which in turn might be set accurately on the basis of an international time standard (External Time Source). The architecture defines a time-signal protocol and a distribution network, which permits accurate setting, maintenance, and consistency of TOD clocks.

External time reference (ETR)

External time reference (ETR) hardware facility is the generic name for the IBM Sysplex Timer. The ETR architecture provides a means of synchronizing TOD clocks in different CPCs with a centralized time reference, which in turn can be set accurately on the basis of the UTC time standard (External Time Source). The architecture defines a time-signal protocol and a distribution network (called the ETR network) that permits accurate setting, maintenance, and consistency of TOD clocks.

Timing services

Timing services are implemented in z/OS by a time supervisor component. An application program can use them to obtain the present date and time, and to convert date and time information to various formats. Interval timing lets your program set a time interval to be used in the program logic, determine how much time is left in the interval, or cancel the interval. For programs that depend on synchronized TOD clocks in a multi-CPC environment, such as a database manager, it is important that the clocks are in ETR synchronization. These programs can use the STCKSYNC macro to obtain the TOD clock contents and determine whether the clock is synchronized with an ETR. STCKSYNC also provides an optional parameter, ETRID, that returns the ID of the ETR source with which the TOD clock is currently synchronized.

ETR attachments

The ETR feature in System z9® and System z10® servers provides the interface to a Network Time Protocol (NTP) server with pulse per second (PPS) support.

|

Note: The zEnterprise 196 does not support the IBM Sysplex Timer and ETR attachment.

|

1.10 Server Time Protocol (STP)

Figure 1-10 Server Time Protocol (STP)

Server Time Protocol (STP)

Server Time Protocol (STP) is designed to help multiple System z servers maintain time synchronization with each other, without the use of a Sysplex Timer. STP uses a message-based protocol in which timekeeping information is passed over externally defined coupling links, such as InterSystem Channel-3 (ISC-3) links configured in peer mode, Integrated Cluster Bus-3 (ICB-3) links, and Integrated Cluster Bus-4 (ICB-4) links. These can be the same links that already are being used in a Parallel Sysplex for coupling facility message communication.

STP is implemented in the Licensed Internal Code (LIC) of System z servers and CFs for presenting a single view of time to PR/SM™.

|

Note: A time synchronization mechanism, either IBM Sysplex Timer or Server Time Protocol (STP), is a mandatory hardware requirement for a Parallel Sysplex environment consisting of more than one server.

|

STP link

An STP link is a coupling facility connection that serves as a timing-only link. With STP links, you can allow multiple servers to form a Coordinated Timing Network (CTN), which is a collection of servers and coupling facilities that are synchronized to a time value called Coordinated Server Time. Establishing an STP link between two processors does not require a CF partition; an STP link can be established between two OS partitions. For an STP link, HCD generates a control unit of type “STP” on both sides of the connection. No devices are defined.

This server-wide facility enables multiple z/OS systems to maintain TOD synchronization with each other and form a Coordinated Timing Network (CTN), that is, a collection of z/OS systems that are synchronized to a common time value. In Figure 1-10, the time server roles are as follows:

•P1 is the preferred time server (stratum 1), which synchronizes the TOD clocks of the z/OS systems (stratum 2).

•P2 is the backup time server, ready to replace P1.

•P3 is the arbiter time server that decides when the replacement should be done.

STP replaces the External Time Reference connections by using coupling links to pass timing messages to the servers. During the transition to STP, STP can coexist with the Sysplex Timer in a Mixed Coordinated Timing Network (Mixed CTN) configuration, in which the Sysplex Timer provides the timekeeping information. Once an STP-only configuration is established, the ETR connections are no longer needed. The Sysplex Timer console is replaced by an HMC screen for each possible time zone.

Note: A z196 cannot be connected to a Sysplex Timer; consider migrating to an STP-only Coordinated Timing Network (CTN) for existing environments. It is possible to have a z196 as a Stratum 2 or Stratum 3 server in a Mixed CTN, as long as there are at least two System z10 or System z9 servers attached to the Sysplex Timer operating as Stratum 1 servers.

Defining STP links in a sysplex

You can establish an STP link between two System z servers (z890, z990, z9 EC, or later). In the Connect to CF Channel Path dialog, select two CHPIDs defined for coupling facilities, and then specify the Timing-only link option to create an STP link.

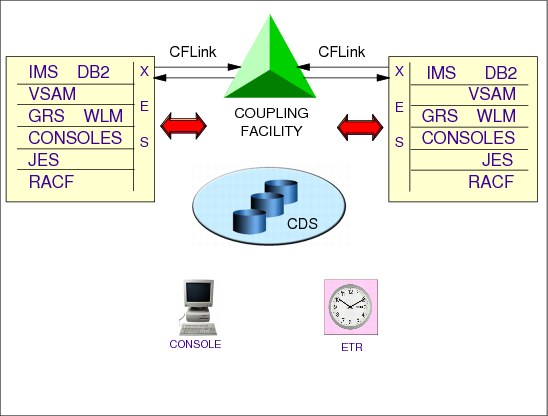

1.11 Coupling facility

Figure 1-11 Coupling facility

Coupling facility

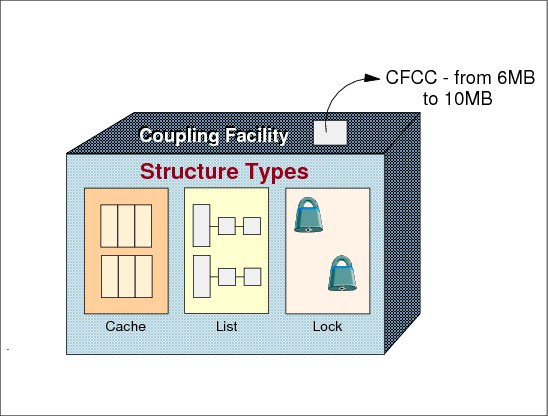

A coupling facility is a special logical partition that runs the coupling facility control code (CFCC) and provides high-speed caching, list processing, and locking functions in a sysplex. HCD enables you to specify whether a logical partition can be a coupling facility, operating system, or either on certain processors. You connect the coupling facility logical partition to a processor through the coupling facility channels.

With z/OS services, a component called XES allows authorized applications, such as subsystems and z/OS components, to use the coupling facility to cache data, exchange status, and access sysplex lock structures in order to implement high performance data sharing and rapid recovery from failures.

Coupling facility control code (CFCC)

IBM CFCC is licensed internal code (LIC) and always runs under an LPAR, regardless of whether the CF is in a standalone CPC or in a general purpose CPC (where CFCC LPs are together with z/OS LPs). A standalone CPC is a CPC that allows only LPs running CFCC.

CFCC is a simple but efficient operating system where, for example, no virtual storage support is implemented. It has multiprocessing capabilities and can run on multiple processors. When there is no work to do, it loops, polling the CF links for work requests (no interrupt mechanism is implemented).

A coupling facility (CF) runs the coupling facility control code (CFCC) that is loaded into main storage at power-on reset (POR) time. CFCC can run on a stand-alone CF server or in a logical partition.

Coupling facility logical partition (LP)

The coupling facility LP is defined through HCD and processor resource/systems manager (PR/SM) panels on the Hardware Management Console (HMC). Once you have defined an LP to be a coupling facility LP, only the CFCC can run in that LP. When you activate the coupling facility LP, the system automatically loads the CFCC from the hard disk of the CPC’s laptop support element (SE). The major functions of the CFCC are:

•Storage management

•Support for CF links

•Console services (HMC)

•Trace, logout, and recovery functions

•Support for the list, cache, and lock structures

1.12 Message time ordering

Figure 1-12 Message time ordering

Parallel Sysplex configurations

As server and coupling link technologies have improved over the years, the synchronization tolerance between operating system images in a Parallel Sysplex has become more rigorous. In order to ensure that any exchanges of time stamped information between operating system images in a Parallel Sysplex involving the CF observe the correct time ordering, time stamps are included in the message transfer protocol between the server operating system images and the CF. This is known as message time ordering.

Message time ordering

Figure 1-12 illustrates the components of a Parallel Sysplex as implemented within the zSeries architecture. Shown is an ICF connection between two z10 EC servers running in a sysplex, and there is a second integrated coupling facility defined within one of the z10s containing sysplex LPARs. Shown also is the connection required between the coupling facility defined on a z10 and the sysplex timer to support message time ordering. Message time ordering requires a CF connection to the Sysplex Timer.

Before listing the message time ordering facility rules, a short description of the message time ordering facility is included here. When the CF receives the message, it verifies that the message’s time stamp is less than the CF’s TOD clock. If the time stamp in the message is ahead of the CF’s TOD clock, the message is not processed until the CF TOD clock catches up to the message time stamp value.
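The check described above can be sketched in a few lines of Python. This is only an illustration of the rule, not the CFCC implementation: the function name is hypothetical, time stamps are treated as plain integers in the same clock units, and the handling of an exactly equal time stamp (processed without delay) is an assumption.

```python
def cf_delay_for_message(message_timestamp: int, cf_tod: int) -> int:
    """Return how long the CF waits (in clock units) before processing.

    A result of 0 means the message can be processed immediately.
    Assumption: a time stamp equal to the CF TOD clock needs no delay,
    because the CF clock has already caught up to it.
    """
    if message_timestamp <= cf_tod:
        return 0
    # The message is ahead of the CF clock: hold it until the CF TOD
    # clock catches up to the message time stamp value.
    return message_timestamp - cf_tod
```

For example, a message stamped 150 arriving when the CF clock reads 100 is held for 50 units, while a message stamped 100 arriving when the CF clock reads 150 is processed at once.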

Mixed or STP-only Parallel Sysplex

In a mixed or STP-only CTN in a Parallel Sysplex configuration, the requirement is that all servers support the message time ordering facility.

The following message time ordering facility rules are enforced when there is a mixed CTN in a Parallel Sysplex configuration:

•z/OS images running on STP-configured servers can connect to CFs that are on servers that are not STP capable only if the coupling facility supports message time ordering facility and is attached to the Sysplex Timer.

•CFs on an STP-configured server can connect to z/OS images running on servers that are not STP capable only if the non-STP-capable server supports message time ordering facility and is attached to the Sysplex Timer.
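The two rules above can be expressed as a simple connectivity check. The following Python sketch is illustrative only; the `Server` class, its field names, and the function name are hypothetical, and cases that the two rules do not mention are treated as unrestricted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    """Hypothetical description of a server for this rule check."""
    name: str
    stp_configured: bool
    stp_capable: bool
    supports_message_time_ordering: bool
    attached_to_sysplex_timer: bool


def _non_stp_side_is_acceptable(server: Server) -> bool:
    # A server that is not STP capable must support the message time
    # ordering facility and be attached to the Sysplex Timer.
    return server.supports_message_time_ordering and server.attached_to_sysplex_timer


def connection_allowed(zos_server: Server, cf_server: Server) -> bool:
    """Apply the two mixed-CTN rules to a z/OS-image-to-CF connection."""
    if zos_server.stp_configured and not cf_server.stp_capable:
        return _non_stp_side_is_acceptable(cf_server)  # first rule
    if cf_server.stp_configured and not zos_server.stp_capable:
        return _non_stp_side_is_acceptable(zos_server)  # second rule
    # Assumption: combinations not covered by the two rules are allowed.
    return True
```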

For more details about this topic, see the document Server Time Protocol Planning Guide, SG24-7280.

1.13 Coupling facility LPARs and CFCC code

Figure 1-13 Coupling facility LPARs and CFCC code

Standalone coupling facility (CF)

A standalone CF is a CPC where all the processor units (PUs), links, and memory are for CFCC use. This means that all LPs running in such a CPC run only CFCC code. A standalone CF is also called an external coupling facility.

Internal coupling facility (ICF)

ICFs are PUs in a CPC configured to run only CFCC code. PUs are not shipped with a fixed function assignment (personality); a personality is assigned during power-on reset (POR), or later nondisruptively through on-demand offerings such as CBU, CUoD, CIU, and On/Off CoD. These offerings allow the customer to convert, in seconds, an uncharacterized PU into any PU personality type, such as CPU, ICF, IFL, zAAP, zIIP, or SAP.

An ICF can reduce the cost of exploiting coupling facility technology because:

•ICFs are less expensive than CPs.

•An ICF has a special software license charge. Special PR/SM microcode prevents the defined ICF PUs from executing non-CFCC code such as z/OS.

Coupling facility configuration options

A coupling facility always runs CFCC licensed internal code (LIC) within a PR/SM LPAR. As we already saw, a CF LPAR can be configured in one of two ways:

•In a standalone CPC, where only coupling facilities are present.

•In a general purpose CPC, the CF LP can co-exist with LPs running z/OS code, or even Linux® (under z/VM® or not). The z/OS LPs can be either in the same Parallel Sysplex as the coupling facility or not.

Decisions regarding where to configure CF LPs are based mostly on price/performance, configuration characteristics, CF link options, and recovery characteristics (availability).

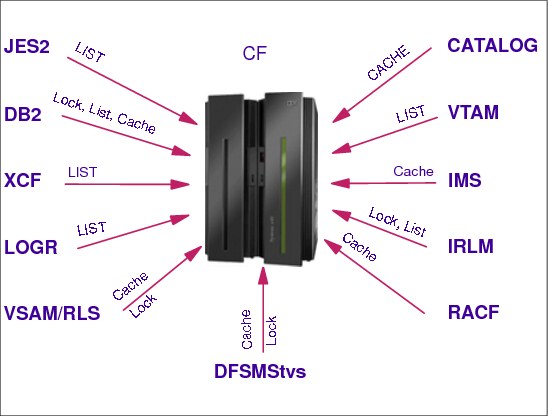

CF storage

CFCC formats central storage in contiguous pieces called structures. Software exploiters (authorized programs) such as z/OS components, subsystems, and products can use structures to keep data. The exploiters may have several instances running in different z/OS systems in the sysplex. The major reason for having structures is to implement data sharing (with total integrity), although the structures may also be used as high-speed caching memory. Structure types are:

•Lock structure

•List structure

•Cache structure

Each structure type provides a specific function to the exploiter. Some storage in the coupling facility can also be allocated as a dedicated dump space for capturing structure information for diagnostic purposes. In order to access the coupling facility structures, z/OS systems (running the exploiters) must have connectivity to the coupling facility through coupling facility links. Refer to 1.14, “Coupling facility links” for more information.

Coupling facility control code level (CFLEVEL)

The level (CFLEVEL) of the coupling facility control code (CFCC) that is loaded into the coupling facility LPAR determines what functions are available for exploiting applications. Various levels provide new functions and enhancements that an application might require for its operation. As more functions are added to the CFCC, it might be necessary to allocate additional storage to a coupling facility structure. Similarly, as new functions are added, the coupling facility itself may require additional storage. In any configuration, the amount of fixed storage required for the coupling facility is based on configuration-dependent factors.

To implement a coupling facility in your sysplex requires both hardware and software, as follows:

•CPC that supports the CFCC.

•CPCs on which one or more z/OS images run and which are capable of connecting to the coupling facility with CF links.

•Appropriate level of z/OS that allows an exploiter to access a desired function when managing the coupling facility resources.

•CFCC must implement the functions the exploiter needs.

To support migration from one CFCC level to the next, you can run several levels of CFCC concurrently as long as the coupling facility logical partitions are running on different servers (CF logical partitions running on the same server share the same CFCC level).

1.14 Coupling facility links

Figure 1-14 Coupling facility links

Coupling facility links

To enable the communication between a coupling facility (CF) logical partition (LP) and the z/OS LPs, special types of high-speed CF links are required. These links are important because of the impact of link performance on CF request response times. For configurations covering large distances, time spent on the link can be the largest part of CF response time.

The coupling link types for the coupling facility are PSIFB, IC, ICB, and ISC-3.

A CF link adapter can be shared between LPs, meaning the same adapter can transfer data from/to different z/OS systems to one CF, thus reducing the number of links needed. This is called multiple image facility (MIF), the same name used for FICON and ESCON channels.

CF links in the System z servers work in a mode called peer mode. In this mode we have even more flexibility with connections. For example, a single link adapter can be connected (multiple image facility) to both z/OS and a CF.

Both the coupling facility LPs and the CF links must be defined to the I/O configuration data set (IOCDS). Hardware configuration definition (HCD) provides the interface to accomplish these definitions and also automatically supplies the required channel control unit and I/O device definitions for the coupling facility channels.

Internal coupling (IC) link

The IC is a zSeries or z9 connectivity option that enables high-speed connection (more than 3 GB/sec) between a CF LP and one or more z/OS LPs running on the same zSeries or z9 CPC. The IC is a linkless connection (implemented in Licensed Internal Code) and so does not require any hardware or cabling.

InterSystem Channel (ISC) link

InterSystem Channel provides connectivity through optical cables. ISC links are point-to-point connections that require a unique channel definition at each end of the link. There are two types of ISC features supported, ISC-3 and ISC-2. In modern CPCs such as System z servers (z800, z900, z890, z990 and z9), there is support for ISC-3 only. They are used for distances beyond 7 meters and allow a rate of up to 200 MBps.

Integrated Cluster Bus (ICB)

Integrated Cluster Bus provides connectivity through copper cables. They are faster than ISC links, attaching directly to a Self-Timed Interconnect (STI) bus of the CEC cage. They are the preferred method for coupling connectivity when connecting System z servers over short distances (up to 7 meters). For longer distances, ISC-3 links must be used. There are two types of ICB links available:

•ICB-4 links provide 2 GB/sec coupling communication between z990, z890, and z9 CPCs.

•ICB-3 links provide 1 GB/sec coupling communication between z800, z900, z990, z890, and z9 CPCs.

InfiniBand coupling links (PSIFB)

InfiniBand coupling links (PSIFB) are high-speed links on z196, z10, and z9 servers. PSIFB coupling links of any type are defined as CHPID type CIB in HCD/IOCP. They originate from three types of fanout, as follows:

•HCA2-O (FC 0163)

The HCA2-O fanouts support InfiniBand Double Data Rate (IB-DDR) and InfiniBand Single Data Rate (IB-SDR) optical links. The HCA2-O fanout supports PSIFB coupling links at distances of up to 150 meters. PSIFB coupling links operate at 6 GBps (12x IB-DDR) when connecting a z196 or z10 to a z196 or z10 server, and at 3 GBps (12x IB-SDR) when connecting a z196 or z10 to a z9. The link speed is auto-negotiated to the highest common rate.

•HCA2-O LR (FC 0168)

The HCA2-O LR fanout supports PSIFB Long Reach (PSIFB LR) coupling links for distances of up to 10 km and up to 100 km when repeated through a DWDM. This fanout is supported on z196 and z10. PSIFB LR coupling links operate at up to 5.0 Gbps (1x IB-DDR) between z196 and z10 servers, or automatically scale down to 2.5 Gbps (1x IB-SDR) depending on the capability of the attached equipment.

•HCA1-O (FC 0167)

The HCA1-O fanout supports InfiniBand Single Data Rate (IB-SDR) optical links. The HCA1-O fanout supports PSIFB coupling links at distances of up to 150 meters. PSIFB coupling links operate at 3 GBps (12x IB-SDR) when connecting the z9 server to a z196 or z10 server.
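The auto-negotiation of 12x PSIFB links to the highest common rate can be sketched as taking the lower of the two end-point rates. The following Python fragment is illustrative only; the table and function names are hypothetical, the rates are the ones quoted above, and a z9-to-z9 PSIFB link is rejected because the text describes z9 links only to a z196 or z10.

```python
# Maximum 12x PSIFB data rate per server family, in GBps, as quoted above.
MAX_12X_RATE_GBPS = {
    "z196": 6,  # 12x IB-DDR
    "z10": 6,   # 12x IB-DDR
    "z9": 3,    # 12x IB-SDR
}


def negotiated_12x_rate(server_a: str, server_b: str) -> int:
    """Return the highest common 12x PSIFB rate (GBps) for two servers."""
    if server_a == "z9" and server_b == "z9":
        # Assumption: not described in the text, so not modeled here.
        raise ValueError("z9-to-z9 PSIFB links are not described in the text")
    return min(MAX_12X_RATE_GBPS[server_a], MAX_12X_RATE_GBPS[server_b])
```

With this table, a z196-to-z10 link runs at 6 GBps, while a link between a z10 and a z9 drops to 3 GBps.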

Publications of interest

zSeries Connectivity Handbook, SG24-5444, Processor Resource/Systems Manager Planning Guide, SB10-7036, z/OS MVS Setting Up a Sysplex, SA22-7625, and HCD Planning, GA22-7525.

1.15 Sysplex overview

Figure 1-15 Sysplex overview

Sysplex overview

Now that the pieces that make up a sysplex have been introduced, the remainder of this chapter presents an overview of the sysplex. Figure 1-15 is an overview of the remaining topics to be discussed in this chapter.

It is difficult to explain the sysplex without first explaining the cross-system coupling facility (XCF) and its services, so we start by describing XCF, its services, and its exploiters. Note that despite having “coupling facility” in its name, XCF cannot access a coupling facility directly.

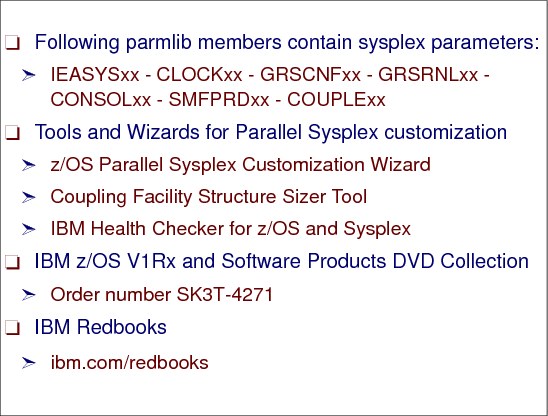

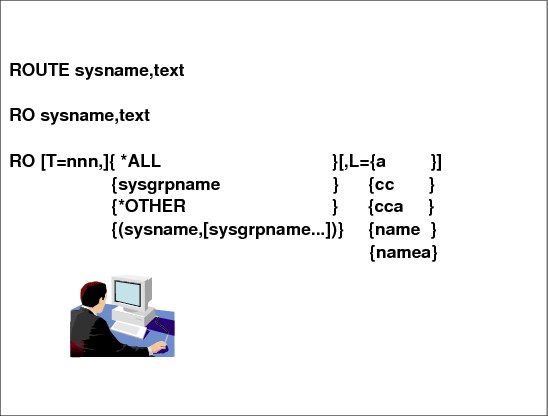

Next, the sysplex configurations and how system consoles are used to enter sysplex-related commands are described, as follows:

•Fundamentals of a base sysplex (a function of XCF), what is required and how to define it.

•An overview of Parallel Sysplex and how to migrate from a base to a Parallel Sysplex.

•Which PARMLIB members you have to change in order to define a sysplex and which changes are necessary.

•How to use consoles in a sysplex environment; how many consoles you need and which ones are mandatory.

•The sysplex-related commands, how to direct a command to a specific console, and how to reply to messages.

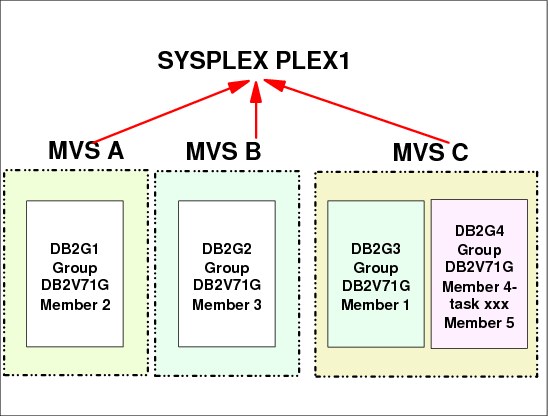

1.16 Cross-system coupling facility (XCF)

Figure 1-16 Cross-system coupling facility (XCF)

Cross-system coupling facility (XCF)

The cross-system coupling facility (XCF) component of z/OS provides simplified multisystem management. XCF services allow authorized programs on one system to communicate with programs on the same system or on other systems. If a system fails, XCF services also provide the capability for batch jobs and started tasks to be restarted on another eligible system in the sysplex.

XCF groups

An XCF group is a set of related members that a multisystem application defines to XCF. A member is a specific function, or instance, of the application. A member resides on one system and can communicate with other members of the same group across the sysplex.

Communication between group members on different systems occurs over the signaling paths that connect the systems; on the same system, communication between group members occurs through local signaling services. To prevent multisystem applications from interfering with one another, each XCF group name in the sysplex must be unique.

CICS address spaces

Each CICS address space in Figure 1-16 is an XCF instance. The terms instance, exploiting instance, or exploiter are used to denote the active subsystem or address space of a component, product, or function that directly exploits sysplex services. XCF is a z/OS component that provides services to allow multiple instances (named members) of an application or subsystem, running on different systems in a sysplex, to share status information and communicate through messages with each other. A set of related members is called a group. A member of an application can use XCF services to:

•Inform other members of their status (active, failed, and so forth).

•Obtain information about the status of other members, such as whether another member failed.

•Send messages to and receive messages from each other member, in the same or in another z/OS. The most frequent case is other z/OSs (inter-system communication).

Automatic Restart Manager (ARM)

If z/OS fails, XCF can call Automatic Restart Manager (ARM) services (a z/OS component) to provide the capability for batch jobs and started tasks to be restarted on another eligible z/OS in the sysplex. Then an application is allowed to:

•Request automatic restart in the event of application or system failure

•Wait for another job to restart before restarting

•Indicate its readiness to accept work

•Request that automatic restart no longer be performed

•Indicate that automatic restart should be performed only if the backup copy of the application data no longer exists

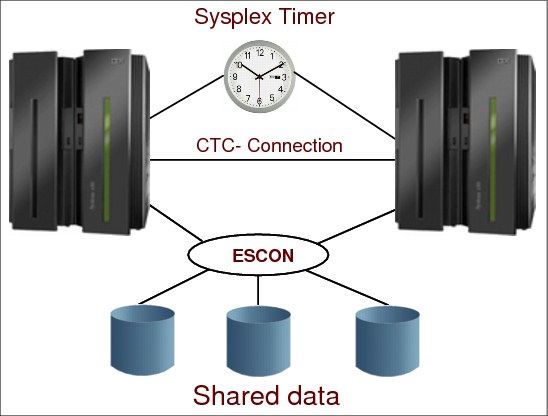

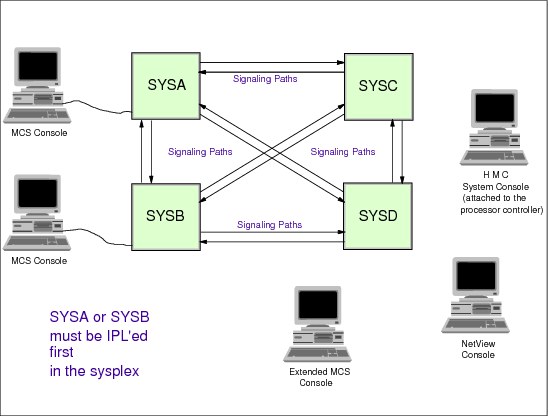

1.17 Base sysplex

Figure 1-17 Base sysplex

Base sysplex

A base sysplex configuration is a sysplex with no coupling facilities. The base sysplex can be composed of one or more z/OS systems that have an XCF sysplex name and in which the authorized programs (members) use XCF services. XCF services are available in both single and multisystem environments. A multisystem environment is defined as two or more z/OS systems residing on one or more CPCs’ logical partitions connected through CTCs.

A base sysplex is the first step to implementing a Parallel Sysplex. A Parallel Sysplex is a base sysplex plus the use of the coupling facility. So, when you introduce the coupling facility, XCF exploits the coupling facility, using it as a link between z/OS systems.

Figure 1-17 shows how XCF works in a multisystem sysplex. Each z/OS has an XCF component that handles groups for the participating members. Here you can see as an example the group named SYSGRS, which is very important for global resource serialization (in the group the name of each member is the name of the z/OS system). This group has one member (GRS component of z/OS) in each z/OS. Other groups can be: consoles (SYSMCS), JES2, JES3, WLM, and others. These groups are described in detail in 1.23, “XCF exploiters”.

The communication link between XCFs could be through channel-to-channel adapters (CTCs) that allow data movement between XCF buffers in the systems through an I/O operation. Another option for linking is a CF list structure, which is discussed later in this chapter.

1.18 XCF application, member, and group

Figure 1-18 XCF application, member, and group

XCF application, member, and group

An application in an XCF context is a program that has various functions distributed across z/OS systems in a multisystem environment. An application or subsystem might consist of multiple instances, each running on a different system in the same sysplex. Typically, each instance performs certain functions for the application as a whole. Alternatively, each instance could perform all the application’s functions on a given system. XCF services are available to authorized applications, such as subsystems and z/OS components, to use sysplex services.

Member

A member is a specific function (one or more routines) of a multisystem application that is joined to XCF and assigned to a group by the multisystem application. A member concept applies to all authorized routines running in the address space that issued the IXCJOIN macro service. Only for termination purposes (resource clean-up), the member can be associated with an address space, job step, or task. XCF terminates the member when its association ends. The same address space can have more than one group.

Group

A group is the set of related members defined to XCF by a multisystem application in which members of the group can communicate with other members of the same group. A group can span one or more of the systems in a sysplex and represents a complete logical entity to XCF.

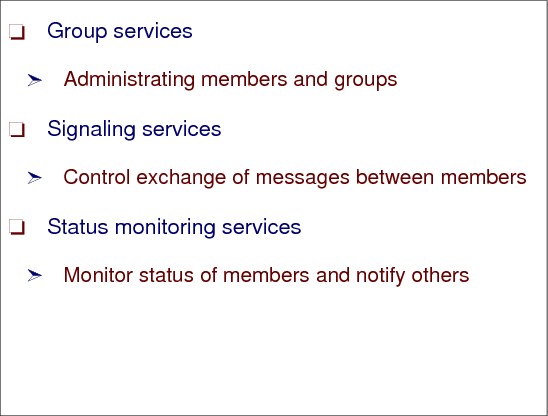

1.19 XCF services

Figure 1-19 XCF services

XCF services

z/OS XCF allows up to 32 z/OS systems to communicate in a sysplex. XCF provides the services that allow multisystem application functions (programs) on one z/OS system to communicate (send and receive data) with functions on the same or other z/OS systems. The communication services are provided through authorized assembler macros and are as follows:

•Group services

•Signaling services

•Status monitoring services

Group services

XCF group services provide ways for defining members to XCF, establishing them as part of a group, and allowing them to find out about the other members in the group. A member introduces itself to XCF through the IXCJOIN macro. If a member identifies a group exit routine, XCF uses this routine to notify this member about status changes that occur to other members of the group, or systems in the sysplex; thus, members can have the most current information about the other members in their group without having to query each other.
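The way group services keep members informed can be illustrated with a toy model. The following Python sketch is not the IXCJOIN interface; the class, method names, and status strings are hypothetical. It shows only the idea that a member which identified a group exit routine is called when another member of the same group changes status, so it never has to query the other members.

```python
from collections import defaultdict
from typing import Callable, Dict, Optional, Tuple

# A group exit routine receives (name of the changed member, new status).
GroupExit = Callable[[str, str], None]


class ToyXcf:
    """Hypothetical in-memory model of XCF group membership."""

    def __init__(self) -> None:
        # group name -> {member name -> status}
        self.groups: Dict[str, Dict[str, str]] = defaultdict(dict)
        # (group name, member name) -> group exit routine
        self.exits: Dict[Tuple[str, str], GroupExit] = {}

    def join(self, group: str, member: str,
             group_exit: Optional[GroupExit] = None) -> None:
        """Define a member to XCF and make it part of a group."""
        self.groups[group][member] = "active"
        if group_exit is not None:
            self.exits[(group, member)] = group_exit
        self._notify(group, member, "active")

    def set_status(self, group: str, member: str, status: str) -> None:
        """Record a status change and notify the other group members."""
        self.groups[group][member] = status
        self._notify(group, member, status)

    def _notify(self, group: str, changed: str, status: str) -> None:
        # Drive the group exit of every other member of the same group.
        for (exit_group, exit_member), routine in self.exits.items():
            if exit_group == group and exit_member != changed:
                routine(changed, status)
```

In this model, if SYS1 joins a group with a group exit and SYS2 later joins and then fails, the exit of SYS1 is driven twice, once for each status change of SYS2.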

Signaling services

The signaling services control the exchange of messages between members of an XCF group. The sender of a message requests services from XCF signaling services. XCF uses buffer pools to communicate between members in the same system, and it uses buffer pools plus signaling paths (CTCs or a CF list structure) to send messages between systems in the sysplex.

Status monitoring services

Status monitoring services provide a way for members to determine their own operational status and to notify the other members of the group when that operational status changes. An installation-written status exit routine identified to XCF determines whether a member is operating normally. An installation-written group exit routine identified to XCF allows a member to maintain current information about other members in the group, and systems in the sysplex.

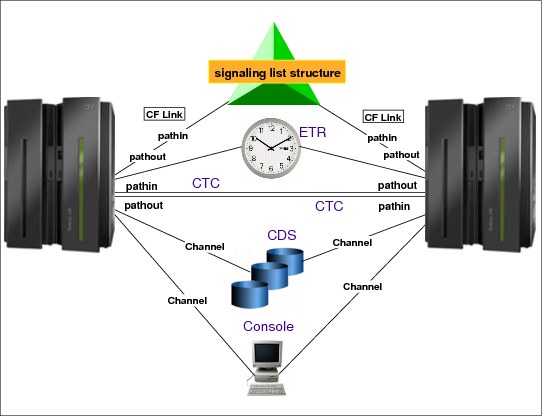

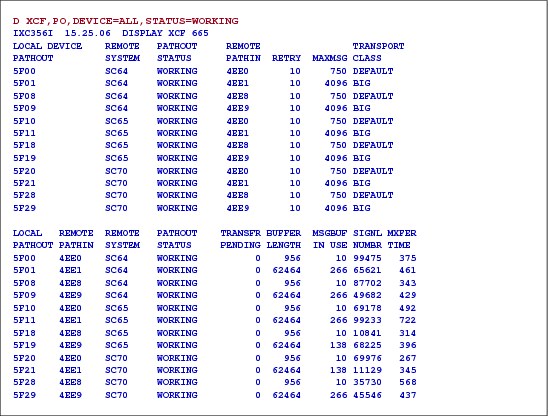

1.20 XCF signaling paths

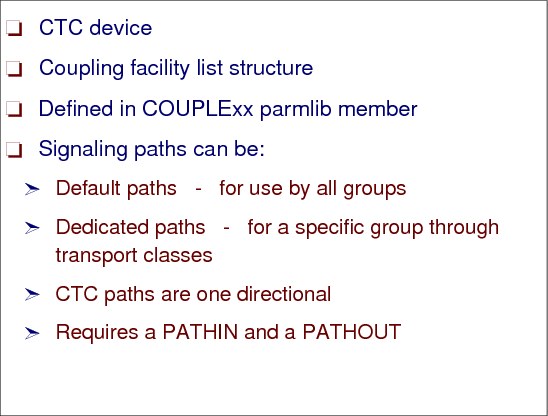

Figure 1-20 XCF signaling paths

XCF signaling paths

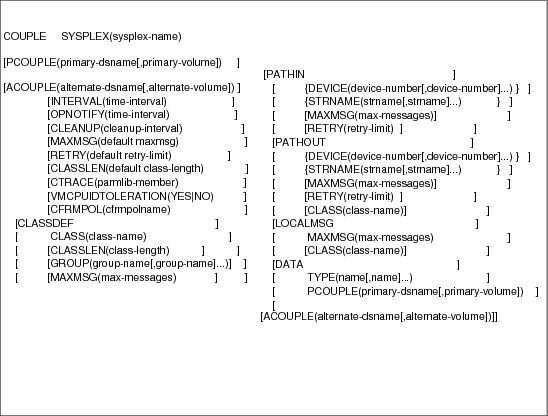

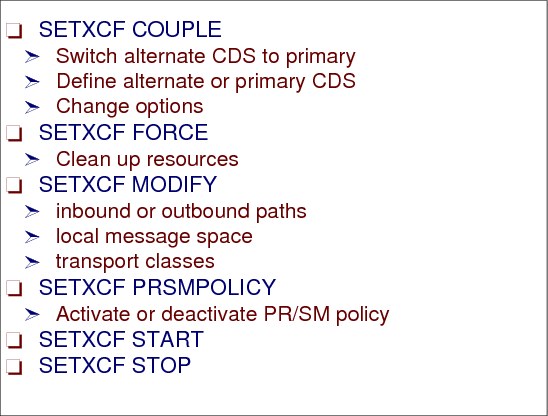

Whether you implement signaling through CTC connections or coupling facility list structures, you need to specify information in the COUPLExx parmlib member. After an IPL, you can issue the SETXCF START,PATHOUT or SETXCF START,PATHIN commands to specify outbound or inbound paths. To establish signaling through a coupling facility, you also need to define a coupling facility resource management (CFRM) policy.

Then, XCF group members use the signaling mechanism to communicate with each other. These communication paths can be:

•Channel-to-channel adapter (CTC) communication connections.

•Coupling facility through list structures. XCF calls XES services when the path of communication is a coupling facility.

•A combination of both, CTC and list structures. In this case XCF selects the faster path.

A message generated in a member has the following path:

•From the sending member to an XCF buffer in the output buffer pool.

•From the XCF output buffer through the signaling path:

– If the path is a CTC, the message goes directly to a buffer in the XCF input buffer pool.

– If the path is a CF list structure, the message is stored for a while in the structure memory and is then moved to an XCF buffer in the input buffer pool. The structure acts like a mailbox.

•From the XCF input buffer to a receiving member.
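The message path listed above can be traced with a toy model. The following Python sketch is illustrative only: the class and function names are hypothetical, buffer pools are modeled as simple queues, and the CF list structure is modeled as a queue that holds the message briefly, like a mailbox.

```python
from collections import deque
from typing import Deque, Optional


class ToyXcfSystem:
    """Hypothetical stand-in for the XCF buffer pools of one z/OS system."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.output_buffers: Deque[str] = deque()
        self.input_buffers: Deque[str] = deque()


def deliver(sender: ToyXcfSystem, receiver: ToyXcfSystem, message: str,
            path: str, cf_list: Optional[Deque[str]] = None) -> str:
    """Move one message from a sending member to a receiving member."""
    # 1. Sending member -> XCF buffer in the output buffer pool.
    sender.output_buffers.append(message)
    outbound = sender.output_buffers.popleft()

    # 2. Output buffer -> signaling path -> buffer in the input buffer pool.
    if path == "CTC":
        # A CTC path goes directly to the receiver's input buffer pool.
        receiver.input_buffers.append(outbound)
    elif path == "CF":
        if cf_list is None:
            raise ValueError("a CF list structure is required for path 'CF'")
        # The list structure holds the message for a while (the mailbox),
        # and the message is then moved to the receiver's input buffer.
        cf_list.append(outbound)
        receiver.input_buffers.append(cf_list.popleft())
    else:
        raise ValueError("path must be 'CTC' or 'CF'")

    # 3. Input buffer -> receiving member.
    return receiver.input_buffers.popleft()
```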

The communication path is determined at IPL by the PATHIN and PATHOUT definitions in the COUPLExx PARMLIB member and can be modified by the SETXCF operator command. This is explained later in this chapter.

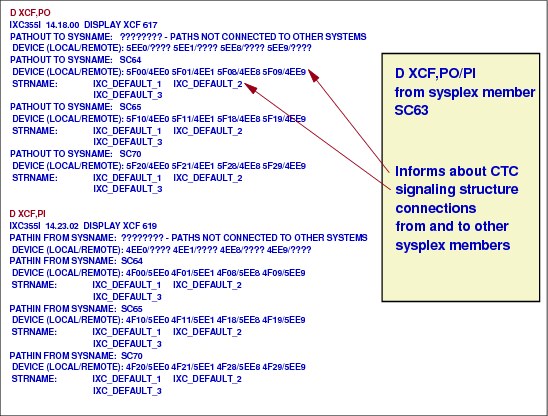

CTC paths and devices

To establish signaling paths through CTC devices, you specify their device numbers in HCD. Each CTCA has a logical controller with a certain number of logical devices. XCF uses CTC devices in a uni-directional mode. That is, on each system, messages sent to other systems require an outbound path, and messages received from other systems require a separate inbound path. Then, for each z/OS in the sysplex, you must specify all devices for outbound paths and inbound paths on the DEVICE keyword of the PATHOUT and PATHIN statements in COUPLExx.

Coupling facility list structures

When you define signaling paths through coupling facility list structures, you define to z/OS which list structures to use and how to use them (as PATHIN or PATHOUT), and XCF (through z/OS) creates the logical connections between z/OSs that are using the structures for signaling.

Signaling paths and transport classes

A signaling path, in this context, is the set of outbound paths plus outbound message buffers in one XCF. A transport class is a set of signaling paths that can be associated with XCF groups. Transport classes are defined in the COUPLExx member in PARMLIB. The reasons for associating an XCF group with a transport class include:

•To isolate the messages of these groups from the others by giving them their own signaling paths

An example from COUPLExx is:

CLASSDEF CLASS(CICS) GROUP(DFHIR000)

PATHOUT STRNAME(IXC_CICS) CLASS(CICS)

In this example, the group DFHIR000 is associated with a transport class named CICS and all the outbound messages from the group use the CF list structure named IXC_CICS.

•To optimize the size of the message with the size of the output buffer
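The association between XCF groups, transport classes, and outbound paths described above can be sketched as a lookup. The following Python fragment is illustrative only and is not how XCF is implemented. The function name is hypothetical, and the fallback to a class named DEFAULT for groups that are not assigned to any class is an assumption of this sketch.

```python
from typing import Dict, List, Set


def outbound_paths_for_group(group: str,
                             class_groups: Dict[str, Set[str]],
                             class_paths: Dict[str, List[str]],
                             default_class: str = "DEFAULT") -> List[str]:
    """Return the outbound paths that messages from a group may use.

    class_groups maps a transport class to the groups assigned to it
    (the CLASSDEF information); class_paths maps a transport class to
    its outbound paths (the PATHOUT information).
    """
    for class_name, groups in class_groups.items():
        if group in groups:
            return class_paths.get(class_name, [])
    # Assumption: unassigned groups use the paths of a default class.
    return class_paths.get(default_class, [])
```

For example, with a class CICS that owns a hypothetical group GROUP1 and the structure IXC_CICS, messages from GROUP1 use only IXC_CICS, while messages from any other group use the paths of the default class (a hypothetical IXC_DEFAULT structure in the test data).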

1.21 XCF channel-to-channel connection (CTC)

Figure 1-21 XCF channel-to-channel connection (CTC)

XCF channel-to-channel adapter connection (CTCA)

Full signaling connectivity is required between all z/OSs in a sysplex; that is, there must be an outbound and an inbound path between each pair of systems in the sysplex.

To avoid a single point of signaling connectivity failure, use redundant connections between each pair of systems, through either CTC connections or coupling facility list structures.

CTC signaling paths

CTC signaling paths are uni-directional and, for redundancy, require at least four signaling paths between each pair of z/OS systems (two inbound and two outbound paths). CTCA connections and their device numbers become more complex to manage as the number of z/OSs in the configuration increases. For example, it is almost impossible to have the same COUPLExx member for all the z/OSs in the sysplex. The formula producing the number of connections is: N x (N-1), where N is the number of z/OSs.
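To see how quickly the number of CTC connections grows, the formula can be evaluated for a few sysplex sizes. The following Python sketch is illustrative; the function name and the `redundancy` parameter (the number of paths in each direction between a pair of systems) are assumptions added for the example.

```python
def ctc_signaling_paths(systems: int, redundancy: int = 1) -> int:
    """Return the number of uni-directional CTC paths for full connectivity.

    With redundancy=1 this is the N x (N-1) formula; redundancy=2 models
    two outbound and two inbound paths between each pair of systems.
    """
    if systems < 1 or redundancy < 1:
        raise ValueError("systems and redundancy must be at least 1")
    return redundancy * systems * (systems - 1)
```

Two systems with redundant paths need 4 paths, 8 systems need 56 paths without redundancy, and a 32-system sysplex with redundant paths needs 1984.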

Coupling facility signaling paths are bi-directional; this offers better performance in general and is much less complex to maintain. We discuss coupling facility structures next.

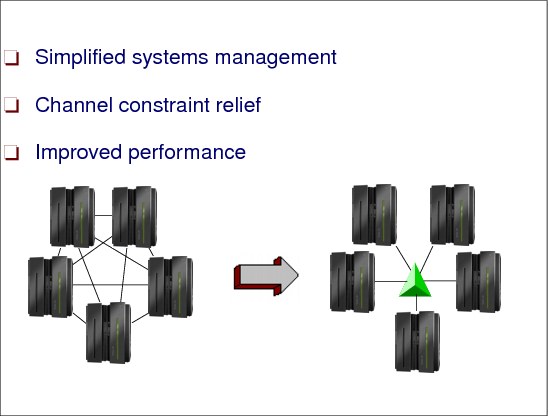

1.22 XCF using coupling facility list structures

Figure 1-22 XCF using coupling facility list structures

List structures

A list structure consists of a set of lists and an optional lock table of exclusive locks, which you can use to serialize the use of lists, list entries, or other resources in the list structure. Each list is pointed to by a header and can contain a number of list entries. A list entry consists of list entry controls and can also include a data entry, an adjunct area, or both. Both data entries and adjunct areas are optional. However, data entries are optional for each list entry while a list structure either has or doesn’t have adjunct areas.

Simplified systems management

When XCF uses coupling facility structures for signaling, each XCF in the sysplex can automatically discover its connectivity to the other systems through the CF, without having to define point-to-point connections on every XCF in the configuration. Furthermore, in the event that a signaling path or signaling structure is lost, the recovery of CF signaling structures is automated and greatly simplified if more than one structure is allocated.

Channel constraint relief

XCF allows customers to consolidate CTC links used by VTAM, JES, and GRS into XCF communication, freeing up channel paths for other uses in constrained configurations. These advancements in CF coupling technologies, combined with simplified systems management, ease of recovery, and better cost efficiencies, make CF list structures the clear choice for configuring XCF signaling paths in a Parallel Sysplex cluster.

List services (IXLLIST)

IXLLIST provides high-performance list transition monitoring that allows you to detect when a list changes from the empty state to the non-empty state (in which it has one or more entries) without having to access the coupling facility to check the list. For instance, if you are using the list structure as a distribution mechanism for work requests, list transition monitoring allows users to detect easily the presence or absence of incoming work requests on their queues.
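The idea behind list transition monitoring can be shown with a toy model. The following Python sketch is not the IXLLIST interface; the class and attribute names are hypothetical. A local indicator is updated whenever the list moves between the empty and non-empty states, so a consumer can test the indicator instead of accessing the list itself.

```python
from typing import Any, List


class MonitoredList:
    """Hypothetical list that maintains a local empty/non-empty indicator."""

    def __init__(self) -> None:
        self._entries: List[Any] = []
        # Local indicator that a consumer can test cheaply. It stands in
        # for whatever local notification the real service provides.
        self.non_empty = False

    def add(self, entry: Any) -> None:
        was_empty = not self._entries
        self._entries.append(entry)
        if was_empty:
            self.non_empty = True  # transition: empty -> non-empty

    def take(self) -> Any:
        entry = self._entries.pop(0)
        if not self._entries:
            self.non_empty = False  # transition: non-empty -> empty
        return entry
```

A worker that uses the list as a queue of work requests would test `non_empty` and call `take()` only when the indicator shows that work is present.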

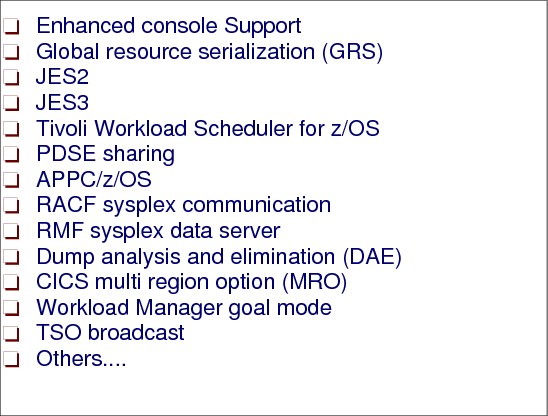

1.23 XCF exploiters

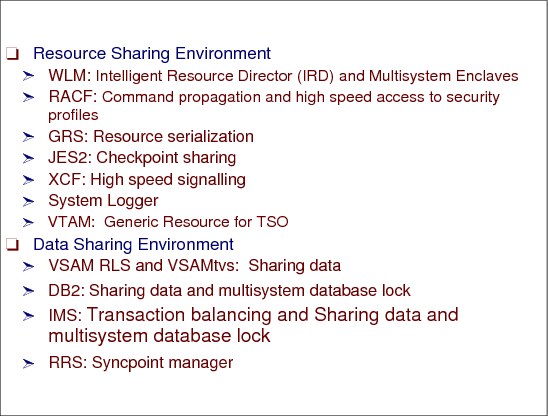

Figure 1-23 XCF exploiters

XCF exploiters

Figure 1-23 shows some sample z/OS components and products that exploit XCF services.

Enhanced console support

Multisystem console support allows consolidation of consoles across multiple z/OS images. A console address space of one z/OS image can communicate with console address spaces of all other images in the sysplex by means of XCF signaling. With this support, any console in the sysplex has the capability to view messages from any other system in the sysplex and to route commands in the same way.

Global resource serialization (GRS)

GRS Star introduced a new method of communicating GRS allocation requests. It uses a lock structure in the coupling facility as the hub of the GRS complex and eliminates the delays and processing overhead inherent in the traditional ring configuration. GRS still depends on XCF, however: all quiescing and purging of images from GRS is done automatically by XCF, and the GQSCAN function is also executed through XCF communication. Performance monitors such as RMF request this function to discover contention situations to report. To detect global contention, the GRS instances on the different systems communicate with each other through XCF links.

JES2

When in a multi-access spool (MAS) configuration (several JES2 members sharing the same spool data set), JES2 uses XCF to communicate with the other members. Previously, all communication between members was through the JES2 checkpoint data set and, when one member of a JES2 MAS failed, the operator had to manually issue a reset command to requeue the jobs that were executing on the failing image and make them available for execution on the remaining images in the MAS complex. In a sysplex, this can be done automatically because of the XCF communication capability.

JES3

JES3 uses XCF services to communicate between JES3 systems in a complex. Previously, JES3 had to manage its own CTCs for this communication. Using XCF reduces the overall number of CTCs that need to be managed in your installation.

Tivoli Workload Scheduler for z/OS

IBM Tivoli Workload Scheduler for z/OS (TWS) is a subsystem that can automate, plan, and control the processing of a batch production workload. In a sysplex environment, TWS subsystems in separate z/OS images can take advantage of XCF services for communication with each other.

PDSE sharing

For access to partitioned data sets extended (PDSEs), XCF services are used to exchange locking information between the sharing systems in a sysplex (extended sharing only). Multiple images in a sysplex can concurrently access PDSE members for input and output, but not for update-in-place; this protection is implemented through XCF communication. That is, a member of a PDSE (note that a PDSE member is not an XCF member) can be accessed by only a single user while it is being updated in place. A sharer of a PDSE can read members and create new members, or new copies of existing members, concurrently with other sharers on the same or other images.

APPC/MVS

APPC/MVS uses XCF to communicate with transaction-scheduler address spaces on the same image on which APPC/MVS is running.

RACF sysplex communication

RACF can be enabled for sysplex communication between RACF members in the same sysplex. When it is enabled, subsequent commands are propagated from the member that issued them to the other RACF members in the sysplex, which simplifies multisystem security management.

RMF sysplex data server

The RMF data server is an in-storage area that RMF uses to store SMF data, which can then be moved around the sysplex to provide a sysplex-wide view of performance, via RMF Monitor III, without having to sign on to each of the systems individually.

Dump analysis and elimination (DAE)

Sharing the SYS1.DAE data set between images in a sysplex can avoid taking multiple dumps for the same problem if it is encountered on different systems in the sysplex.

CICS multiregion operation (MRO)

With CICS running in a sysplex, MRO has been extended to include cross-system MRO. This is achieved by using XCF services for the cross-system communication, rather than VTAM ISC links, as was previously required. This can give considerable performance benefits over ISC implementations. MRO allows the CICS functions that were previously all contained in a single address space to be split into several specialized address spaces, such as a terminal-owning region or TOR (which interfaces with VTAM to receive the incoming transactions), an application-owning region or AOR (where the programs containing the transaction logic are executed), and a file-owning region or FOR (where VSAM data sets are accessed).

Workload manager in goal mode

WLM uses XCF for communication between WLM address spaces in the same sysplex. This communication is needed because the goals apply across the whole sysplex rather than to a single z/OS image.

TSO broadcast

If all z/OS systems in the sysplex share the same SYS1.BRODCAST data set (used to send welcome messages to logged on users), and SYSPLEXSHR(ON) is declared in the IKJTSOxx PARMLIB member, then TSO NOTICEs are communicated through XCF signaling. Consequently, the I/O to the SYS1.BRODCAST data set is eliminated.

There are more XCF exploiters, such as DB2, IMS, DFSMS, VSAM, VTAM, and others.

1.24 Sympathy sickness

Figure 1-24 XCF Sympathy sickness

XCF sympathy sickness

There is one structural problem when messages from different XCF groups flow through common resources (message buffer pools and paths). This problem is not only with XCF, but is common with all message traffic.

The problem is as follows: if a receiving member fails to take its messages out of the input buffer pool, or is slow in doing so, it can hurt “innocent bystanders” on the same system and on other z/OS systems in the sysplex. This effect is known as “sympathy sickness.”

In the very busy Figure 1-24, if the application receiving messages (top right corner) is not taking its messages from the input buffer pool, the pool fills up and congests the normal flow. After a while, the application sending a message from another z/OS (bottom left corner) receives “MSG Rejected” because the output buffer pool is full. Depending on its logic, this application may abend.

A function introduced in base z/OS 1.8, and available in z/OS 1.6 with APAR OA09194, improves availability for innocent bystanders by automatically removing the stalled task that owns the messages filling the most PATHIN buffers. This function must be enabled through an SFM policy that contains the new MEMSTALLTIME parameter.
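The mechanism can be sketched with a toy model of a shared input buffer pool. This is a simplified illustration in Python, not how XCF is implemented: the class `InputBufferPool`, its methods, and the member names are assumptions made for the example. It shows how one stalled member can cause rejections for a healthy member, and how removing the member that holds the most buffers relieves the congestion.

```python
from collections import Counter
from typing import List, Optional


class InputBufferPool:
    """Toy model of a buffer pool shared by several receiving members."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.buffers: List[str] = []  # each entry names the member the message is for

    def deliver(self, member: str) -> bool:
        """Queue a message for `member`; return False if the pool is full."""
        if len(self.buffers) >= self.capacity:
            return False  # the sender would see "MSG Rejected"
        self.buffers.append(member)
        return True

    def receive(self, member: str) -> bool:
        """A healthy member takes one of its messages out of the pool."""
        if member in self.buffers:
            self.buffers.remove(member)
            return True
        return False

    def remove_worst_offender(self) -> Optional[str]:
        """Drop the member holding the most buffers and free those buffers."""
        if not self.buffers:
            return None
        member, _ = Counter(self.buffers).most_common(1)[0]
        self.buffers = [m for m in self.buffers if m != member]
        return member


pool = InputBufferPool(capacity=4)

# STALLED never calls receive(), so its messages pile up in the shared pool.
for _ in range(4):
    pool.deliver("STALLED")

# An innocent bystander is now rejected even though it is healthy.
rejected = not pool.deliver("HEALTHY")

# Removing the member that holds the most buffers relieves the congestion.
removed = pool.remove_worst_offender()
accepted = pool.deliver("HEALTHY")
```

The sketch removes the offender immediately; in the real function the decision depends on the stall time configured in the SFM policy, which the model does not attempt to reproduce.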

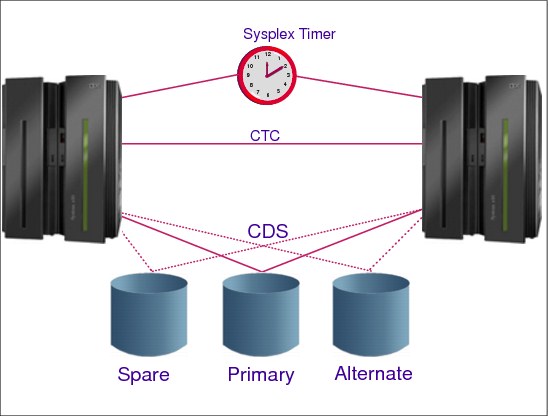

1.25 Sysplex couple data sets

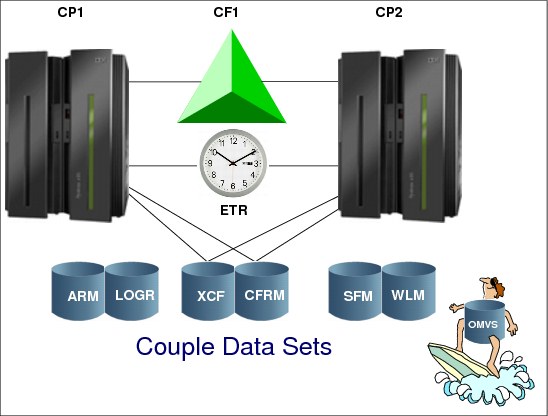

Figure 1-25 Sysplex couple data sets

Couple data sets

Figure 1-25 shows a sysplex of two z/OS systems, where both systems are connected to a Sysplex Timer and can access the sysplex couple data sets. The sysplex might also be configured so the systems can access one or more of the following couple data sets: ARM (automatic restart management), LOGR, SFM, WLM, z/OS UNIX, and a sysplex couple data set.

Sysplex couple data set

XCF requires a sysplex couple data set to be shared by all XCFs in the sysplex. The sysplex couple data set resides on a DASD device and is used to:

•Hold general status information about the z/OS images, XCF groups, and the XCF group members running in the sysplex

•Hold a description of pathouts and pathins together with buffer pools and transport classes

•Point to other couple data sets used in the sysplex

•Contain the heart beat data that is used to verify that all systems are alive and working

The couple data sets in a sense are used as a PARMLIB, that is, they have parameters set by the installation to customize the system. However, couple data sets are designed to be shared among z/OS systems in a sysplex (and are not actually a true PARMLIB). When a system programmer is creating their contents, care should be taken with the syntax of the statements.

All couple data sets need to be created, formatted, and finally filled with installation options.

Primary and alternate data sets

To avoid a single point of failure, it is recommended to format a primary and an alternate couple data set on different devices, physical control units, and channels. XCF itself immediately copies every update made to the primary to the alternate. If the primary data set fails, XCF switches automatically to the alternate data set, which then becomes the primary couple data set. You can also perform a manual switch with the SETXCF COUPLE,PSWITCH command. Note that this command makes the alternate the primary, but the old primary does not become the alternate; it is simply deallocated. This allows you, for example, to:

•Increase the size of the primary couple data set

•Move the couple data set to a different device

•Change sysplex couple data set definitions

•Reformat the couple data set

Spare couple data set

It is recommended to pre-format a spare couple data set. With the SETXCF COUPLE,ACOUPLE command it is possible to define the spare data set as a new alternate couple data set, avoiding potentially having a single point of failure. The sysplex couple data sets (one type of couple data set) are defined to the XCFs in the sysplex with the PCOUPLE and ACOUPLE statements in the COUPLExx PARMLIB member.
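The roles of the primary, alternate, and spare couple data sets can be sketched as a small state model. This is a Python illustration only: the class `CoupleDataSetPair` and the data set names are hypothetical, and the methods merely mimic the effect of the SETXCF COUPLE,PSWITCH and SETXCF COUPLE,ACOUPLE commands described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CoupleDataSetPair:
    """Toy model of one couple data set type with a primary and an alternate."""

    primary: str
    alternate: Optional[str] = None

    def pswitch(self) -> str:
        """Mimic SETXCF COUPLE,PSWITCH: the alternate becomes the primary.

        The old primary is deallocated; it does NOT become the alternate.
        Returns the name of the deallocated data set.
        """
        if self.alternate is None:
            raise RuntimeError("no alternate couple data set is defined")
        old_primary = self.primary
        self.primary, self.alternate = self.alternate, None
        return old_primary

    def acouple(self, data_set: str) -> None:
        """Mimic SETXCF COUPLE,ACOUPLE: define a new alternate."""
        self.alternate = data_set

    @property
    def single_point_of_failure(self) -> bool:
        return self.alternate is None


# Data set names are made up for the example.
pair = CoupleDataSetPair(primary="SYS1.XCF.CDS01", alternate="SYS1.XCF.CDS02")

freed = pair.pswitch()                  # CDS02 is now the primary, CDS01 is deallocated
exposed = pair.single_point_of_failure  # True: there is no alternate at this point
pair.acouple("SYS1.XCF.CDS03")          # the pre-formatted spare becomes the alternate
```

The window in which `exposed` is true is the reason for keeping a pre-formatted spare: the shorter the time between the switch and the definition of a new alternate, the shorter the exposure.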

Figure 1-30 on page 50 shows an example of formatting the sysplex couple data sets.

Tip: It is recommended that you place the primary sysplex couple data set on a different volume than the primary CFRM couple data set, because both are heavily accessed. You might allocate the primary sysplex couple data set together with the alternate CFRM couple data set on one volume, and vice versa.

1.26 Other couple data sets

Figure 1-26 Other couple data sets

Couple data sets policy

A policy is a set of rules and actions that z/OS systems in a sysplex follow when using certain z/OS services. A policy allows z/OS to manage specific resources in compliance with system and resource requirements but with little operator intervention. Depending on the policy type, you can set up a policy to govern all z/OS systems in the sysplex or only a set of selected z/OS systems. You might need to define more than one policy to allow for varying workloads, configurations, or other installation requirements at different times. For example, you might need to define one policy for your prime shift operations and another policy for other times. Although you can define more than one policy of each type (except for System Logger), only one policy of each type can be active at a time.
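The rule that several policies can be defined but only one of each type is active can be sketched as follows. This is a minimal Python model under stated assumptions: the class `PolicyStore`, the policy names PRIME and OFFSHIFT, and the rule contents are all hypothetical, and the model says nothing about how policies are actually stored in a couple data set.

```python
from typing import Dict, Optional


class PolicyStore:
    """Toy model of one couple data set holding several policies of one type."""

    def __init__(self, policy_type: str) -> None:
        self.policy_type = policy_type
        self.defined: Dict[str, dict] = {}
        self.active: Optional[str] = None

    def define(self, name: str, rules: dict) -> None:
        """Add a policy; defining it does not make it active."""
        self.defined[name] = rules

    def activate(self, name: str) -> None:
        """Make one defined policy the active one, replacing the previous one."""
        if name not in self.defined:
            raise KeyError(f"policy {name!r} is not defined")
        self.active = name


# Hypothetical policy names and contents, for illustration only.
sfm = PolicyStore("SFM")
sfm.define("PRIME", {"note": "rules for prime shift operations"})
sfm.define("OFFSHIFT", {"note": "rules for other times"})

sfm.activate("PRIME")     # prime shift starts
sfm.activate("OFFSHIFT")  # shift change: OFFSHIFT replaces PRIME as the active policy
```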

The same primary and alternate CDS rules apply as described for the sysplex CDS. There are different CDS types (pointed to by the sysplex CDS) that hold the different policies.

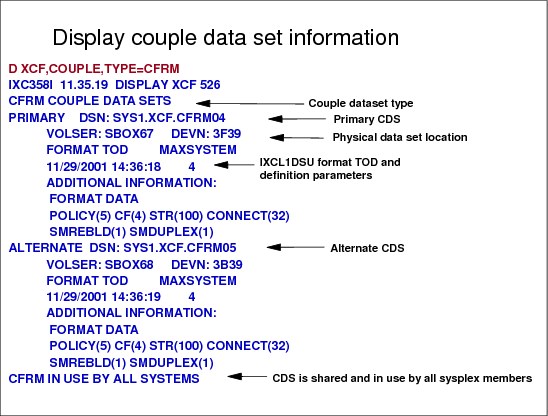

Coupling facility resource management (CFRM) CDS

This CDS contains several CFRM policies. A CF structure is created by CFCC when the first exploiter connects to it (IXLCONN macro). Each structure has several attributes. The Parallel Sysplex design requires that some of them be specified by the exploiter software and others by the installation. A CFRM policy describes the structure attributes that are decided by the installation. In the CFRM policy, you define:

•The coupling facility HW accessed by the sysplex. Major parameters are:

– CF name, sequence number, partition, cpcid, dumpspace

•All structures and their attributes, for example:

– Structure name, size, initsize

– The preferred CF name for the structure location

– CF duplexing, allowance of structure size altering and structure full monitoring function

Any structure used in the Parallel Sysplex must be defined in the CFRM policy.

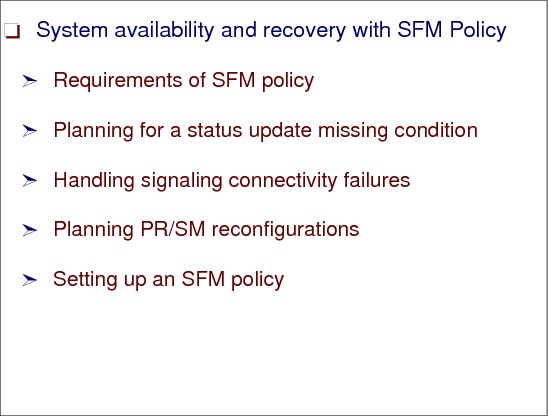

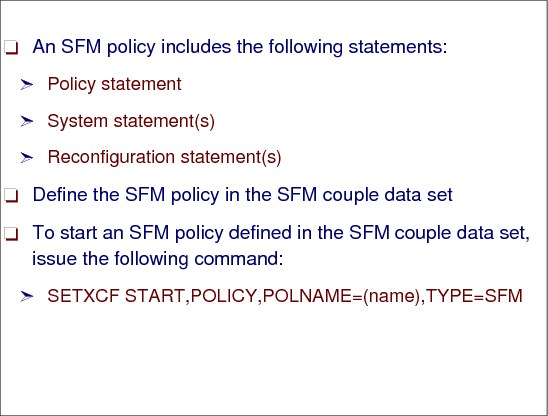

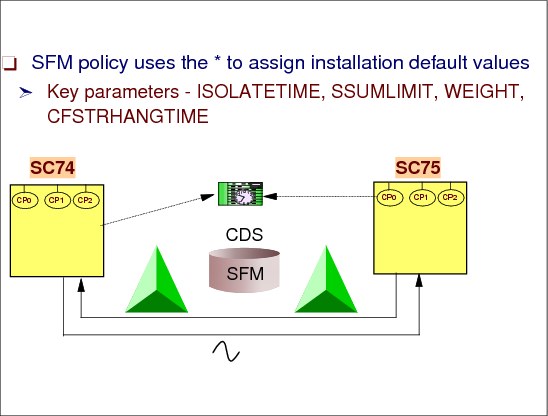

Sysplex failure management (SFM) CDS

This CDS contains several SFM policies. SFM policy allows the installation to define responses to:

•Signaling connectivity failures among XCFs

•Signaling connectivity failures in CF links

•System failures, indicated by a status update (heart beat) missing condition

•Reconfiguring systems in a PR/SM environment

•Sympathy sickness

Workload Manager (WLM) CDS

This CDS contains several WLM policies. In the WLM policy you define workloads, service classes describing goals for workloads, application and scheduling environments, goals for non-z/OS partitions, and other items related to system performance management.

Automatic Restart Manager (ARM) CDS

This CDS contains several ARM policies. In the ARM policy you define how to process restarts and other events for started tasks and batch jobs that have failed after previously registering with Automatic Restart Manager through macros.

One example is CICS and DB2 running dependent on each other. ARM handles this so-called restart group as defined in the ARM policy, through the policy options:

•Start the restart group only on the same system

•Which system in the sysplex should do the restart

•Number of restart attempts

•Restart order

Important: Automatic Restart Manager is not to be used as a replacement for system automation, but as an addition to it.

System Logger (LOGR) CDS

This CDS contains the LOGR policy; unlike the other policy types, only one LOGR policy can be defined. You use the LOGR policy to define, update, delete, and manage log streams. A log stream is a sequential data set describing events, where the records are sorted by time stamp. In a sysplex environment it is almost mandatory to have just one CICS log (for example) instead of one log for each z/OS on which CICS address spaces are running. The LOGR policy is used by System Logger services to manage log data across the z/OS systems in the Parallel Sysplex. System Logger guarantees a single image of a log by merging all the log records in chronological order. Exploiters of System Logger services are CICS, VSAM TVS, RRS, LOGREC, OPERLOG, and others.
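The idea of a single log image merged in chronological order can be illustrated with a short Python sketch. It is not the System Logger interface: the record layout, the function `merge_log_streams`, and the sample records are assumptions made for the example. The sketch assumes that each system's records are already in time stamp order and merges them into one ordered stream.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record layout: (time stamp, system name, text)
LogRecord = Tuple[float, str, str]


def merge_log_streams(*per_system_logs: Iterable[LogRecord]) -> Iterator[LogRecord]:
    """Merge logs that are each already in time order into one ordered stream."""
    return heapq.merge(*per_system_logs, key=lambda record: record[0])


# Sample records from two systems, made up for the example.
sys_a: List[LogRecord] = [(1.0, "SYSA", "task started"), (4.0, "SYSA", "task ended")]
sys_b: List[LogRecord] = [(2.0, "SYSB", "file opened"), (3.0, "SYSB", "file closed")]

merged = list(merge_log_streams(sys_a, sys_b))
```

A reader of the merged stream sees the events of both systems interleaved by time stamp, without needing to know on which z/OS image each record was written.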

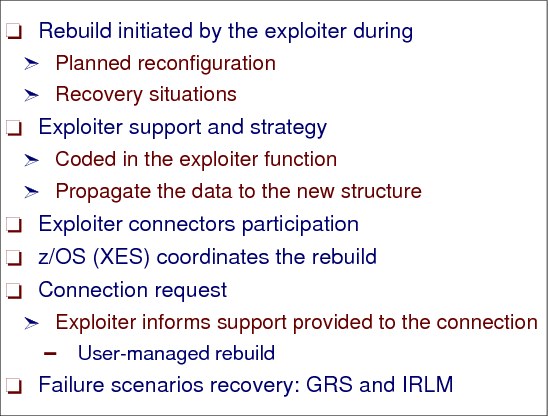

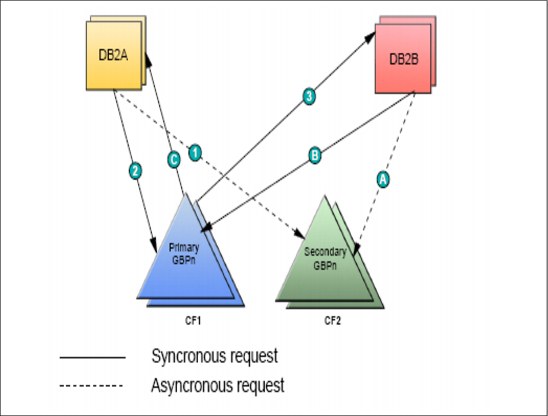

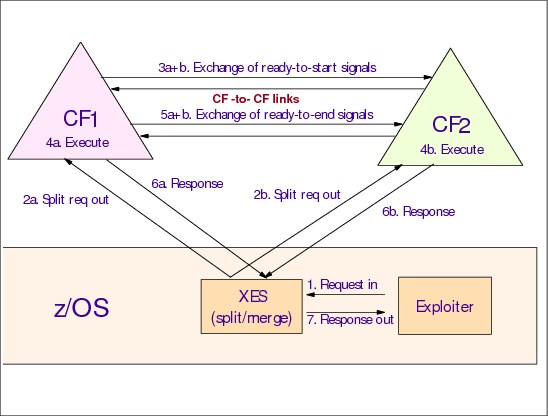

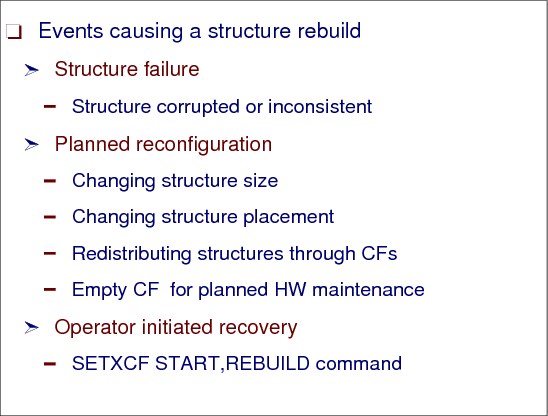

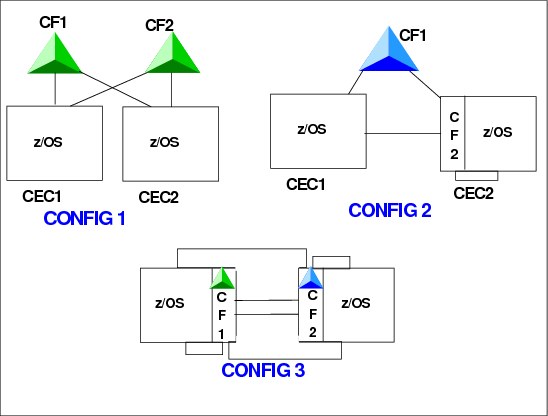

UNIX System Services (OMVS) CDS