In this chapter, we’re going to take you through some key milestones of both AI and UX, pointing out lessons we take from the formation of the two fields. While the histories of AI and UX can fill entire volumes of their own, we will focus on specific portions of each.

Stepping back to look at how AI and UX started as separate disciplines, and following those journeys, offers useful perspective and lessons. We believe that AI will have much more success at the confluence of these two disciplines.

UX is a relatively modern discipline with its roots in the field of psychology; as technology emerged, it became known as the field of human-computer interaction (HCI). HCI is about optimizing the experience people have with technology. It is about design, and it recognizes that a designer’s initial attempt at the design of a product might require modification to make the experience a positive one. (We’ll discuss this in more detail later in this chapter.) As such, HCI emphasizes an interactive process. At every step of the interaction, there can and should be opportunities for the computer and the human to step back and provide each other feedback, to make sure that each party contributes positively and works comfortably with the other. AI opens up many possibilities in this type of interaction, as AI-enabled computers are becoming capable of learning about a human user in the same way that a real flesh-and-blood personal assistant might. This would make for a better-calibrated AI assistant, one far more valuable than simply a tool to be operated: a partner rather than a servant.

The Turing Test and its impact on AI

The exact start of AI is a subject of discussion, but for practical purposes, we choose to start with the work of computer scientist Alan Turing. In 1950, Turing proposed a test to determine whether a computer can be said to be acting intelligently. He felt that an intelligent computer was one that could be mistaken for a human being by another human being. He described several forms of the experiment. The clearest form involved a user sending a question to an unknown respondent, either a human or a computer, which would provide an answer anonymously. The user would then be tasked with determining whether this answer came from a human or from a computer. If the user could not identify the category of the respondent with at least 50% accuracy, the computer would be said to have achieved intelligence, thereby passing the “Turing Test.”

The Turing Test is a procedure intended to assess the intelligence of a computer: a human asks a series of questions and tries to tell whether a computer or a human is giving the responses.1
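To make the pass criterion concrete, here is a minimal sketch of how such a test could be scored. It assumes the 50% threshold described above; the function names and the sample guesses are hypothetical and are not drawn from Turing's paper.

```python
def judge_accuracy(guesses, truths):
    """Fraction of trials in which the judge named the respondent correctly."""
    if len(guesses) != len(truths) or not truths:
        raise ValueError("need two equal-length, non-empty lists")
    correct = sum(g == t for g, t in zip(guesses, truths))
    return correct / len(truths)


def passes_imitation_test(guesses, truths, threshold=0.5):
    """True when the judge fails to reach the accuracy threshold.

    Assumption: the 0.5 default mirrors the "at least 50% accuracy"
    criterion in the text; it is an illustration, not a formal standard.
    """
    return judge_accuracy(guesses, truths) < threshold


# Hypothetical session: who actually answered versus what the judge guessed.
truths = ["human", "computer", "computer", "human", "computer", "human"]
guesses = ["human", "human", "human", "computer", "human", "human"]

print(judge_accuracy(guesses, truths))         # judge is right in 2 of 6 trials
print(passes_imitation_test(guesses, truths))  # accuracy is below the threshold
```

Note that this scoring says nothing about how the answers were produced, which is exactly the limitation the critics discussed below point to.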

The Turing Test has become a defining measure of AI, particularly for AI opponents, who believe that today’s AI cannot be said to truly be “intelligent.” However, some AI opponents, such as philosopher John Searle, have proposed that Turing’s classification of machines that seem human as intelligent may have even gone too far, since Turing’s definition of intelligent computers would be limited to machines that imitate humans.2 Searle argues that intention is missing from the Turing Test and the definition of AI goes beyond syntax.3 Elon Musk takes a similar view of intelligence4 to Searle, proposing that AI simply delegates tasks to constituent algorithms which consider individual factors and does not have the ability to consider complex variables on its own, so this would argue AI is not really intelligent at all.

As far as we know, no computer has ever passed the Turing Test, though a recent demonstration by Google Duplex (making a haircut appointment) came eerily close.5 The Google Duplex demonstrations are fascinating as examples of natural language dialog. The recordings start with Google’s Voice AI placing a telephone call to a human receptionist to schedule a hair appointment and a call to make a reservation with a human hostess at a restaurant.6 What is fascinating is that the verbal and nonverbal cues designed into the computer voice, such as Duplex’s pauses and inflection, were interpreted successfully by the human. On its face, Duplex engaged in a conversation in which the human does not appear to realize that a machine is operating at the other end of the call. It’s unclear how many iterations Google actually had to go through to get this example. But in this demonstration, the machine, through both verbal and nonverbal cues, seemed to successfully navigate a human conversation without the human showing any knowledge of or negative reaction to the fact that it was a machine that was calling.

BOB: Regardless of whether or not the Turing Test is a sufficient litmus test for the presence of AI, it’s had a profound influence on how we define AI.

GAVIN: The Turing Test has captured the attention of the masses interested in the future of AI. It has an inherent simplicity as a game of imitation, and it asks the question: can a computer fool a human?

BOB: Popular science fiction elevated the Turing Test in movies, such as Ex Machina, which was explicit in its use of the Turing Test, and Blade Runner, where the protagonist played by Harrison Ford tests whether a woman is a human or “replicant.”

GAVIN: Turing’s influence on computing and our perception of intelligence is undeniable, that’s for sure.

The point Throughout the short history of AI, there has been a desire to make the AI have human qualities.

As AI continues to evolve, one trap to avoid is advancing a definition of AI that juxtaposes AI against a human. Such a definition might be so limiting that AI that complements human decision making would be undervalued. Put simply, there is more to AI than conversation. Moreover, defining intelligence solely by Turing’s test could lead to the belief that a program is more intelligent than it actually is.

Attribution of intelligence to AI

One of the most notorious programs in computer science was written in 1966 by Joseph Weizenbaum, a professor at MIT. The program was called ELIZA. The name comes from George Bernard Shaw’s play, Pygmalion, in which Eliza Doolittle studies elocution and etiquette. In this program, Weizenbaum modeled the interaction on how a Rogerian psychotherapist might respond to a patient.

The person simply types responses to the questions ELIZA asks.

ELIZA: Tell me your problem.

PERSON: I am depressed.

ELIZA: How long have you been feeling depressed?

PERSON: Since my daughter left home.

ELIZA: Please go on.

PERSON: I don’t want her to get into trouble.

ELIZA: What would it mean to you if she got into trouble?

And so on…

The point This conversation between the user and the program left an impression that ELIZA embodied human-like intelligence. Because the dialog felt natural, the computer must be intelligent. But did this constitute intelligence? Is fooling a human all that is necessary for intelligence?

ELIZA was successful, and people may have poured their lives into it. But in all of these conversations, there was no learning algorithm to carefully analyze the data. In fact, because this was 1966, not much of what was typed was even saved. Still, some proclaimed that Weizenbaum had solved natural language through his program.

Weizenbaum ended up on a crusade against his own program.7 ELIZA was more of a Carl Rogers parody. The program did not know psychology; it used simple pattern-matching rules to reflect the user’s statements back as questions. But because the program felt human, intelligence was bestowed on it. This is an example of AI that can capture the imagination of the masses. And this makes AI susceptible to being overhyped.
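To show how little machinery this kind of reflection needs, here is a minimal sketch in the spirit of ELIZA. It is not Weizenbaum's original script; the rules, the pronoun table, and the function names are hypothetical and chosen only to echo the sample dialog above.

```python
import re

# Hypothetical pronoun swaps used when echoing the user's words back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

# Hypothetical pattern -> response templates; {0} is the captured fragment.
RULES = [
    (re.compile(r"i am (.+)"), "How long have you been feeling {0}?"),
    (re.compile(r"i feel (.+)"), "Why do you feel {0}?"),
    (re.compile(r"i (?:do not|don't) want (.+)"), "Why don't you want {0}?"),
]

DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.split())


def respond(text):
    """Return a canned reply built from the first rule that matches."""
    cleaned = text.replace("\u2019", "'").lower().strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I am depressed."))
print(respond("Since my daughter left home."))
print(respond("I am a teapot."))
```

The last call is the telling one: the sketch asks how long the user has been "feeling a teapot" because it matches text and has no model of what the words mean.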

The influence of hype

With ELIZA, the hype came from the users. In other cases, hype can come from an AI product’s creators, investors, government, media, or market forces.

Historically, AI overpromised and under-delivered

In the past, artificial intelligence has had something of an image problem. In 2006, The New York Times’ John Markoff called AI “a technology field that for decades has overpromised and under-delivered”8 in the lead paragraph of a story about an AI success.

One of the earliest attempts to develop AI was machine translation, which has its genesis in the post-World War II information theories of Claude Shannon and Warren Weaver; there was substantial progress in code breaking as well as theories about universal principles underlying language.9

Machine translation is the translation of one language into another via a computer program.

Machine translation’s paramount example is the now (in)famous Georgetown-IBM experiment,10 in which, at a public demonstration in 1954, a program developed by Georgetown University and IBM researchers successfully translated many Russian-language sentences into English. This demonstration earned the experiment major media coverage. In the heat of the Cold War, a machine that could translate Russian documents into English would have been very compelling to American national defense interests. A number of headlines (“The bilingual machine,” for example) greatly exaggerated the machine’s capabilities.11 This coverage was accompanied by massive investment and wild predictions about the future capabilities of machine translation. One professor who worked on the experiment was quoted in the Christian Science Monitor saying that machine translation in “important functional areas of several languages” might be ready in 3–5 years.12 Hype was running extremely high. The reality was far different: the machine could translate only 250 words and 49 sentences.

Indeed, the program’s focus was on translating a set of narrow scientific sentences in the domain of chemistry, but the press coverage focused more on a select group of “less specific” examples which were included with the experiment. According to linguist W. John Hutchins,13 even these few less specific examples shared features in common with the scientific sentences that made them easier for the system to analyze. Perhaps because of these few contrary examples, the people covering the Georgetown-IBM experiment did not grasp the leap in difficulty between translating a defined set of static sentences and translating something as complex and dynamic as policy documents or newspapers.

The Georgetown-IBM translator may have seemed intelligent in its initial testing, but further analysis proved its limitations. For one thing, it was based on a rigid rules-based system. Just six rules were used to encode the entire conversion from Russian to English.14 This, obviously, fails to capture the complexity of the task of translation. Plus, language only loosely follows rules; for proof, look no further than the plethora of irregular verbs in any language.15 Not to belabor the point, but the program was trained on a narrow corpus and its main function was to translate scientific sentences, which is only an initial step toward translating Russian documents and communications.

This very early public test of machine translation featured AI that seemed to pass the Turing Test—but that accomplishment was deceptive.

GAVIN: The Georgetown-IBM experiment was a machine translation initiative that started as a demonstration to translate certain chemistry documents. And this resulted in investment that spurred a decade of research in machine translation.

BOB: Looking back now, you could question the logic of taking something that marginally worked in the domain of chemistry and generalizing it to the entire Russian language. It seems overly simplistic, but, at the time, this chemistry corpus of terms might have been the best available dataset. Over the past 70 years, the field of linguistics has evolved significantly, and the nuances of language are now recognized to be far more complex.

GAVIN: Nevertheless, the fascination with the idea that the power of computing would find patterns and solve translation between English and Russian is an all too common theme. I suspect that the researchers were clear about the limitations of the work, but as with Greenspan’s now famous “irrational exuberance” quote that described the hype associated with the stock market, expectations can often take on a life of their own.

The point We must not believe that the power of computing can overcome all. What shows promise in one domain (chemistry) might not be widely generalizable to others.

The Georgetown and IBM researchers who presented the program in public may have chosen to hide the flaws of their machine translator. They did so by limiting the translation to the scientific sentences that the machine could handle. The few selected sentences that the machine translated during the demonstration were likely chosen to fit into the tightly constrained rules and vocabulary of the system.16

The weakness of the Turing Test as a measure of intelligence can be seen in journalists’ and funders’ initial, hype-building reactions to the Georgetown-IBM experiment’s deceptively human-like results.17 Upon witnessing a machine that could seemingly translate Russian sentences with the near accuracy of a human translator, journalists18 must have thought they had seen something whose capabilities significantly outstripped the reality of the program.

Yet these journalists were unaware of the limited nature of the Georgetown-IBM technology (and the organizers of the experiment may have nudged them in that direction with their choices for public display). If the machine had been tested on sentences outside the few that were preselected by the researchers, it wouldn’t have appeared to be so impressive. Journalists wrote articles that hyped the technology’s capability. But the technology wasn’t ready to match the hype. More than 60 years later, machine translation is still considered imperfect at best.19

Hype can have a large influence on whether a product is judged a success or a failure.

AI failures resulted in AI winters

A most devastating consequence of this irrational hype was the suspension of funding for AI research. As described, the hype and perceived success from the Georgetown-IBM experiment resulted in massive interest and substantially increased investment in machine translation research; however, that research soon stagnated as the difficulty of the real challenge associated with machine translation began to sink in.20 By the late 1960s, the bloom had come off the rose. Hutchins specifically tied funding cuts to the Automatic Language Processing Advisory Committee (ALPAC) report, released in 1966.21

The ALPAC report, sponsored by several US government agencies in science and national security, was highly critical of machine translation, implying that it was less efficient and more costly than human-based translation for the task of translating Russian documents.22 At best, the report said that computing could be a tool for use in human translation and linguistics studies, but not as a translator itself.23 The report went on to say that machine-translated text needed further editing from human translators, which seemed to defeat the purpose of using it in place of human translators.24 The conclusions of the report led to a drastic reduction in machine translation funding for many years afterward.

In a key portion, the report used the Georgetown-IBM experiment as evidence that machine translation had not improved in a decade’s worth of effort. The report compared the Georgetown-IBM results directly with results from subsequent Georgetown machine translators, finding that the original Georgetown-IBM results had been more accurate than those of the advanced versions. That said, Hutchins described the original Georgetown-IBM experiment not as an authentic test of the latest machine translation technology but as a spectacle “intended to generate attention and funds.”25 Despite this, ALPAC judged later results against Georgetown-IBM as if it had been a true showing of AI’s capabilities. Even though machine translation may have actually improved in the late 1950s and early 1960s, it was judged against its hype, not against its capabilities.

As machine translation was one of the most important early manifestations of AI, this report had an impact on the field of AI in general. The ALPAC report and the corresponding domain-specific machine translation winter were part of a chain reaction that eventually led to what is considered the first AI winter.26

An AI winter is a period when research and investment into AI stagnates significantly. During these periods, AI development gains a negative reputation as an intractable problem. This leads to decreased investment in AI research, which further exacerbates the problem. We identify two types of AI winters: some are domain specific, where only a certain subfield of AI is affected, and some are general, in which the entire field of AI research is affected.

Today, there are lots of different terms for technology that encapsulate AI—expert systems, machine learning, neural networks, deep learning, chatbots, and many more. Much of that renaming started in the 1970s, when AI became a bad word. After early developments in AI in the 1950s, the field was hot—not too different from right now, though on a smaller scale. But in the decade or two that followed, funding agencies (specifically US and UK governments) labeled the work a failure and halted funding—the first-ever general AI winter.27

AI suffered greatly from this long-term lapse in funding. In order to get around AI’s newfound negative reputation, AI researchers had to come up with new terms that specifically did not mention AI to get funding. So, following the AI winter, new labels like expert systems emerged.

Given the seeming promise of AI today, it may be difficult to contemplate that another AI winter may be just over the horizon. While there is much effort and investment directed toward AI, progress in AI has been prone to stagnation and pessimism in the past.

If AI does enter another winter, we believe a significant contributing factor will be that AI designers and developers neglected the role UX plays in successful design. Another contributing factor is the velocity of technology infusion into everyday life. In the 1950s, many homes had no television or telephone. Now the demands on applications are higher; users will reject applications with poor UX. As AI gets embedded into more consumer applications where user expectations are higher, it is inevitable that AI will need better UX.

The first AI winter followed the ALPAC report and was associated with a governmental stop to funding related to machine translation efforts. This investment freeze lasted into the 1970s in the United States. Negative funding attention and news continued with the 1973 Lighthill Report, where Sir James Lighthill reported to British Parliament results similar to those of ALPAC. AI was directly criticized as being overhyped and not delivering on its promise.28

BOB: So, was it that the underlying theory and technology in the Georgetown-IBM experiment were flawed or was it just hype that created the failure?

GAVIN: I think it was both. The ALPAC report pulled no punches and led to a collapse in any research in machine translation—a domain-specific AI winter. Huge hype for machine translation turned out to be misplaced and the result was a significant cut in funding.

BOB: Yes, funding requests with the terms “machine translation” or “artificial intelligence” disappeared. Not unlike the old adage of “throwing the baby out with the bath water,” a major failure in one domain makes the whole field look suspect. That’s the danger of hype. If it doesn’t match the actual capabilities of the product, it can be hard to regain the trust.

GAVIN: The first general AI winter formed a pattern: initial signs of promise, then hype, then failure, and subsequently a freeze in future funding. This cycle led to significant consequences for the field. But scientists are smart; out of the ashes, AI bloomed again, this time using new terminology such as expert systems, which led to advancements in robotics.

BOB: So, under its new names, AI garnered over $1 billion in new investment in the 1980s, ushered in by private sector companies in the United States, Britain, and Japan.

GAVIN: Actually, Japan’s advances in AI spurred US and British efforts to keep up with the Japanese. Notable examples are the European Strategic Program on Research and Information Technology in Europe, and the Strategic Computing Initiative and the Microelectronics and Computer Technology Corporation in the United States. Unfortunately, hype emerged again, and when these efforts failed to deliver on their lofty promises, the second AI winter29 was said to occur in 1993.

The point AI has seen boom and bust cycles multiple times in its history.

Can another AI winter happen? It already did

Often lessons from the past are ignored with the hope that this time will be different. Whether another AI winter will happen in our lifetime is not the question, because one has already happened before our very eyes.

Consider Apple’s voice assistant, Siri. Siri was not launched fully functional right out of the gate. The “beta” version was introduced with a lot of fanfare. Apple soon pulled it out of “beta” and released more fully fledged versions in subsequent updates to iOS, versions that were far more functional and usable than the original. However, Siri users had already formed their impressions, and considering the AI-UX principle of trust, those impressions were long-lasting. As a result, the potential for many users to adopt it was greatly reduced. Not to be too cheeky, but one bad Apple (released too early) spoiled the barrel.

BOB: Look, to the Siri fans out there, Apple did an amazing job relative to previous voice assistants. When working for Baby Bell companies many years back, we often tested voice assistants. Siri was a generation ahead of anything we had in our labs.

GAVIN: And Siri was the first-ever virtual assistant to achieve major market penetration. In 2016, industry researcher Carolina Milanesi found that 98% of iPhone users had given Siri at least one chance.30 This is a phenomenal achievement in mass use of a product.

BOB: The problem, though, was continued use. When that 98% were asked how much they used it, most (70%) replied “rarely” or “sometimes.” In short, almost all tried it, but most stopped using it.

GAVIN: Apple hyped Siri for its ability to understand the spoken word, and Siri captured the attention of the masses. But after time, most users were sorely disappointed with hearing the response, “I’m sorry. I don’t understand that,” and abandoned it after a few initial failures.

BOB: To have so many try a product that is designed to be used daily (e.g., “Siri, what is the weather like today?”) and practically abandon its use is not simply a shame; it is a commercial loss. When you make the effort to get a customer to try something and then lose them, well, you poison the well.

GAVIN: Even now, if you play the Siri prompt (“bee boom”), a chill goes up my spine because I think I must have accidentally pressed it. And that chill carried over to other voice assistants. Ask yourself: Have you ever tried Cortana (Microsoft’s voice feature on Windows OS)? Even once? And if not, why not?

BOB: No. Never gave it a try. Because to me, Cortana was just another Siri. In fact, I moved to Android partly because Siri was so lame.

GAVIN: When we spoke to Microsoft’s Cortana design and development teams, they would vociferously argue how different from (or better than) Siri their voice assistant was. But because of the failure of trust, people who had used Siri tended to lump Cortana in with it.

BOB: Ask if anyone has tried Bixby, Samsung’s mobile phone voice assistant, and you get blank stares.

The point Violating an AI-UX principle like trust can be powerful enough to prevent users from trying similar but competing products. This is arguably a domain-specific AI winter.

These negative feelings toward Siri extended to other virtual assistants that were perceived to be similar to Siri. As other virtual assistants came along, some users had already generalized their experiences with virtual assistants as a category and reached their own conclusions. The immediate impact of this was to reduce the likelihood of adoption. For instance, only 22% of Windows PC users ended up using Cortana.31

Ultimately, Cortana was likely hit even harder than Siri itself by this AI winter, because Siri was able to overcome it and still exists. Cortana was eventually repurposed as a lesser service. In 2019, Microsoft announced that, going forward, it intended to make Cortana a “skill” or “app” for users of various virtual assistants and operating systems, one that would allow subscribers to the Microsoft 365 productivity suite to access information.32 This meant that Cortana would no longer be equivalent to Siri.

Unlike Siri, Cortana was a vastly capable virtual assistant at its launch, especially for productivity functions. Its “notebook” feature, modeled after the notebooks that human personal assistants keep on their clients’ idiosyncrasies, offered an unmatched level of personalization.33 Cortana’s notebook also offered users the ability to delete some of the data that it had collected on them. This privacy feature exceeded any offered by other assistants.34

Despite these very different capabilities, users simply did not engage. Many could not get past what they thought Siri represented.

Moreover, interaction also became a problem for Siri. Speaking to your phone carried a social stigma. In 2016, industry research by Creative Strategies indicated that “shame” about talking to a smartphone in public was a prominent reason why many users did not use Siri regularly.35 The most stigmatized places for voice assistant use, public spaces, also happen to be where smartphones are commonly used. Ditto for many of the common settings for laptop computers: workplace, library, and classroom. That said, in our very unscientific observations, an increasing number of people are using the voice recognition services on their phones these days.

BOB: Perhaps the reason we do not readily think of the impact that stemmed from the poor initial MVP experience with Siri is that this AI winter lasted only a couple of years, not decades. The rebirth of the virtual assistant came with Amazon’s Alexa.

GAVIN: But look what it took for the masses to try another voice assistant. Alexa embodied an entirely new form factor, something that sat like a black obelisk on the kitchen counter. This changed the environment of use. Where the device was placed afforded a visual cue to engage Alexa.

BOB: It also allowed Amazon to bring forth Alexa with more features than Siri. Amazon was determined to learn from the failed experience of its Amazon Fire Phone. The Fire had a voice assistant feature, and Amazon’s Jeff Bezos did not want to make Alexa’s voice assistant an MVP version. He wanted to think big.

GAVIN: Almost overnight, Jeff Bezos dropped $50 million and authorized headcount of 200 to “build a cloud-based computer that would respond to voice commands, ‘like the one in Star Trek’.”36

The point Alexa emerged as a voice assistant and broke out of an AI winter that similar products could not, but it needed an entirely different form factor to get users to try it. And when users did try it, Jeff Bezos was determined that they would experience not an MVP version but something much bigger.

“Lick” and the origins of UX

In the early days of computing, computers were seen as a means of extending human capability by making computation faster. In fact, through the 1930s, “computer” was a name used for humans whose job it was to make calculations.37 But there were a few who saw computers and computing quite differently. The one person who foresaw what computing was to become was J. C. R. Licklider, also known as “Lick.” Lick did not start out as a computer scientist; he was an experimental psychologist or, to be more precise, a highly regarded psychoacoustician, a psychologist who studies the perception of sound. Lick worked at MIT’s Lincoln Labs and started a program in the 1950s to introduce engineering students to psychology, a precursor to future human-computer interaction (HCI) university programs.

Human-computer interaction is an area of research dedicated to understanding how people interact with computers and to applying psychological principles to the design of computer systems.38

Lick became head of MIT’s human factors group where he transitioned from work in psychoacoustics to computer science because of his strong belief that digital computers would be best used in tandem with human beings to augment and extend each other’s capabilities.39 In his most well-known paper, Man-Computer Symbiosis40, Lick described a computer assistant that would answer questions when asked, do simulations, display results in graphical form, and extrapolate solutions for new situations from past experience.41 (Sounds a little like AI, doesn’t it?) He also conceived the “Intergalactic Computer Network” in 1963—an idea that heralded the modern-day Internet.42

Eventually, Lick was recognized for his expertise and became the head of the Information Processing Techniques Office (IPTO) of the US Department of Defense Advanced Research Projects Agency (ARPA). Once there, Lick fully embraced his new career in computer engineering. He was given a budget of over $10 million to launch the vision he had laid out in Man-Computer Symbiosis. In the intertwining of HCI and AI, Lick was the one who initially funded the work of the AI and Internet pioneers Marvin Minsky, Douglas Engelbart, Allen Newell, Herb Simon, and John McCarthy.43 Through this funding, he spawned many of the computing “things” we know today (e.g., the mouse, hypertext, time-shared computing, windows, tablet, etc.). Who could have predicted that a humble experimental psychologist, turned computer scientist, would be known as the “Johnny Appleseed of the Internet”?44

GAVIN: Bob, you’re a huge fan of Lick.

BOB: With good reason. Lick was the first person to merge principles of psychology into computer science. His work was foundational for computer science, AI and UX. Lick pioneered an idea essential to UX that computers can and should be leveraged for efficient collaboration among people.

GAVIN: You can certainly see that in technology. Computers have become the primary place where communication and collaboration happen. I can have a digital meeting with someone who’s halfway across the world and collaborate with them on a project. It seems obvious to us, but it’s really a monumental difference from the way the world was even just 20 years ago, let alone in Lick’s day.

BOB: We now exist in a world where computers are not just calculators but the primary medium of communication among humans. That vision came from Lick and others like him who saw the potential of digital technology to facilitate communication.

The point Lick formed the basis of where AI is headed today—where AI and humans are complementary.

In a few years, men will be able to communicate more effectively through a machine than face to face. That is a rather startling thing to say, but it is our conclusion.

Lick and Taylor describe, in 1968, a future world that must have seemed very odd at the time. Fast forward to today where our lives are filled with video calls, email, text messaging, and social media. This goes to show how differently people thought of computing back in the 1960s and how forward-looking Lick and Taylor were at the time. This paper was a clear-eyed vision of the Internet and how we communicate today.

Taylor eventually succeeded Lick as the director of IPTO. While there, he started development on a networking service that allowed users to access the information stored on remote computers.47 One of the problems he saw, though, was that the groups he funded were isolated communities, unable to communicate with one another. His vision to interconnect these communities gave rise to the ARPANET and eventually the Internet.

After finishing his time at IPTO, Taylor eventually found his way to Xerox PARC (Palo Alto Research Center) and managed its Computer Science Lab, a pioneering laboratory for new and developing computing technologies that would go on to change the world as we know it. We’ll discuss Xerox PARC later in this chapter. But, first, let’s return to the world of AI and see what was going on during this time period.

Expert systems and the second AI winter

Following the first AI winter, which was set off by the ALPAC report's unfavorable findings on progress in machine translation, scientists eventually adapted and proposed research into new AI concepts. This led to the rise of expert systems in the late 1970s and into the 1980s. Instead of focusing on translation, an expert system was a type of AI that applied a set of rules to solve problems systematically.48

Expert systems operate based on a set of if-then rules and draw upon a “knowledge base” that mimics, in some way, how experts might perform a task.

According to Edward Feigenbaum, one of the early AI pioneers following the first AI winter, expert systems carried the benefits that computer science had brought to mathematics and statistics into other, more qualitative fields.49, 50 In the 1980s, expert systems spiked in popularity as they entered widespread use in corporate settings. Though expert systems are still used for business applications and resurface in concepts like clinical decision making for electronic health record (EHR) systems,51 their popularity fell dramatically in the late 1980s and early 1990s, as the second AI winter hit.52

Feigenbaum outlined two components of an expert system: the “knowledge base,” a set of if-then rules that includes expert-level formal and informal knowledge in a particular field, and the “inference engine,” a system for weighting the information from the knowledge base in order to apply it to particular situations.53 While some expert systems benefit from machine learning, meaning they can adjust their rules without programmer input, even these adaptable expert systems are generally reliant on the knowledge entered into them, at least as a starting point.
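To make the two components concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a reconstruction of any historical system: the rules are invented for the example, and the inference engine uses simple forward chaining with no weighting of evidence, which real expert systems often added.

```python
# Hypothetical toy knowledge base, invented for illustration only.
# Each rule pairs a set of required facts (the "if") with a conclusion (the "then").
KNOWLEDGE_BASE = [
    ({"fever", "cough"}, "possible flu"),
    ({"possible flu", "short of breath"}, "refer to physician"),
    ({"sneezing", "itchy eyes"}, "possible allergy"),
]


def infer(facts, rules):
    """Forward-chaining inference engine: keep firing rules until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    # Return only the conclusions the engine added, not the starting facts.
    return known - set(facts)


derived = infer({"fever", "cough", "short of breath"}, KNOWLEDGE_BASE)
print(sorted(derived))  # ['possible flu', 'refer to physician']
```

Notice that the `infer` function knows nothing about any domain. Everything the system "knows" lives in `KNOWLEDGE_BASE`, which someone had to write by hand.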

This dependence on programmed rules poses problems when expert systems are applied to highly specific fields of inquiry. Feigenbaum identified such a problem54 in 1980, citing a “bottleneck” in “knowledge acquisition” that resulted from the difficulty of programming expert knowledge into a computer. Since machine learning could not directly translate expert knowledge texts into a knowledge base, and since experts in many fields did not have the computer science knowledge necessary to program the expert system themselves, programmers acted as intermediaries between experts and AI. If programmers misinterpreted or misrepresented expert knowledge, the resulting misinformation would become part of the expert system. This was particularly problematic in cases where the experts’ knowledge was of the unstated sort that comes with extensive experience within a field. If the expert could not properly express this unstated knowledge, it would be difficult to program it into the expert system. In fact, psychologists tried to get at this problem of “knowledge elicitation” from experts in order to support the development of expert systems.55 Getting people (particularly experts) to talk about what they know and express that knowledge in a rule-based format suitable for machines turns out to be a gnarly problem.

These limitations of the expert system architecture were part of what eventually sent expert systems into decline. The failure of expert systems led to a years-long period in which the development of AI in general was stagnant. We cannot say exactly why expert systems stalled in the 1980s, although irrationally high expectations for a limited form of AI certainly played a role. It is also likely that the perceived failures of expert systems negatively impacted other areas of AI.

GAVIN: Just think about what it took to build “rule-based” systems. You needed computer scientists who programmed the “brain,” but you also needed to enter “information” to essentially embed domain knowledge into the system as data.

BOB: When the objective was machine translation, the elements were words and sentences. But when you apply rule-based systems to something like autonomous robotics, say a robot working on an automated assembly line, the effort takes on a physical dimension.

GAVIN: The sheer amount of knowledge at play makes for a complicated world. Some programmers wrote code. Others worked to turn knowledge into training datasets. Others worked on computer vision. Still others worked on robotic functions to enable the mechanical degrees of freedom to complete physical actions. There was simply too much work to be done, and the need to have machines learn on their own has shaped our current definitions of artificial intelligence.

BOB: AI winters have come and gone—but they were hardly the “Dark Ages.” The science advanced. As technology advanced, challenges only became greater. Whether changing its name or its focus, many pushed through AI failures to get us to where we are today.

AI winters stifled funding, but the challenge of AI captured the attention of great minds who wanted to advance technology and science.

Of course, failure provides the lessons that we carry forward when we pick ourselves up, dust ourselves off, and move on. Failure helps us be better prepared for the next time we are at a crossroads. We think that AI is at such a crossroads now and that lessons learned from the failure that led to the expert system AI winter can help us get through it. AI scholar Roger Schank,56 a contemporary of Feigenbaum and other expert systems proponents, outlined his opinion on the shortcomings of expert systems in 1991. Schank believes that expert systems, especially after encouragement from venture capitalists, were done in by an overemphasis on their inference engines.57

Schank describes venture capitalists seeing dollar signs and encouraging the development of an inference machine “shell”—a sort of build-your-own-expert-system machine. They could sell this general engine to various types of companies who would program it with their specific expertise. The problem with this approach, for Schank, is that the inference engine is not really doing much of the work in an expert system.58 All it does, he says, is choose an output based on values already represented in the knowledge base. Just like machine translation, the inference engine developed hype that was incommensurate with its actual capabilities.

These inference machine “shells” lost the intelligence found in the programmers’ learning process. Programmers were constantly learning about the expert knowledge in a particular field and then adding that knowledge into the knowledge base.59 Since there is no such thing as expertise without a specific domain on which to work, Schank argues that the shells that venture capitalists attempted to create were not AI at all—that is, the AI is in the knowledge base, not the rules engine.

Failure can be devastating, but it can also teach us valuable lessons.

Xerox PARC and trusting human-centered insights

The history of Xerox’s Palo Alto Research Center (PARC) is remarkable in that a company known for its copiers gave us some of the greatest innovations of all time. In the last decades of the 20th century, Xerox PARC was the premier tech research facility in the world. Here are just some of the important innovations in which Xerox PARC played a major role: the personal computer, the graphical user interface (GUI), the laser printer, the computer mouse, object-oriented programming (Smalltalk), and Ethernet.60 The GUI and the mouse made computing much easier for most people to understand, allowing them to use the computer’s capabilities without having to learn complex commands. The design of the early systems was made easier by applying psychological principles to how computers, both software and hardware, were designed.

BOB: Bob Taylor, who was head of the Computer Science Lab at Xerox PARC, recruited the brightest minds from his ARPA network and other Bay Area institutions, such as Douglas Engelbart’s Augmentation Research Center. These scientists introduced the concepts of the computer mouse, windows-based interfaces, and networking.

GAVIN: Xerox PARC was one of those places that had a center of excellence (COE) that attracted the world’s brightest. This wasn’t like the COEs we see today that are used as a business strategy. Instead, PARC was recognized like Niels Bohr’s institute at Copenhagen when it was the world center for quantum physics in the 1920s or the way postwar Greenwich Village drew artists inspired by Abstract Expressionism or how Motown Records attracted the most creative writers and musicians in soul music.61 It was vibrant!

BOB: PARC was indeed an institute of knowledge. Yet even an institution with such a remarkable combination of talent and innovative new ideas can be transitory. By the 1980s, Xerox PARC’s scientists had begun to disperse. But technology stands where it does today largely because of Xerox PARC’s gathering and the eventual dispersion that allowed advancement to move from invention and research to commercialization.

The point: Xerox PARC is where human-computer interaction, with its roots in psychology, drove progress in technology.

Eric Schmidt, former chairman of Google and later Alphabet, said—perhaps with a bit of exaggeration—that “Bob Taylor invented almost everything in one form or another that we use today in the office and at home.” Taylor led Xerox PARC during its formative period. For Taylor, collaboration was critical to the success of his products. Taylor and the rest of his team at PARC garnered insights through group creativity, and Taylor often emphasized the group component of his teams’ work at Xerox PARC.62

While Lick and Taylor poured the foundation, a group of scientists built on that, recognizing that there was an applied psychology to humans interacting with computers. A “user-centered” framework began to emerge at Stanford and PARC; this framework was eventually articulated in the 1983 book The Psychology of Human-Computer Interaction63 by Stuart Card, Thomas Moran, and Allen Newell. Though the book predates the widespread presence of personal computing—let alone the Internet—it tightly described human behavior in the context of interacting with a computer system.

The user is not an operator. He does not operate the computer; he communicates with it to accomplish a task. Thus, we are creating a new arena of human action: communication with machines rather than operation of machines. (Emphasis theirs)64

The Psychology of Human-Computer Interaction argued that psychological principles should be used in the design phase of computer software and hardware in order to make them more compatible with the skills, knowledge, capabilities, and biases of their users.65 While computers are, ultimately, tools for humans to use, they also need to be designed in a way that would enable users to effectively work with them. In short, the fundamental idea that we have to understand how people are wired and then adapt the machine (i.e., the computer) to better fit the user arose from Card, Moran, and Newell.

Allen Newell also had a hand in some of the earliest AI systems in existence; he saw the computer as a digital representation of human problem-solving processes.66 Newell’s principal interest was in determining the structure of the human mind, and he felt that structure was best modeled by computer systems. By building computers with complex hardware and software architectures, Newell intended to create an overarching theory of the function of the human brain.

Newell’s contributions to computer science were a byproduct of his goal of modeling human cognition. Nevertheless, he is one of the most important progenitors of AI, and he based his developments on psychological principles.

BOB: There’s an important dialog between psychologists who were trying to model the mind and brain and computer scientists who were trying to get computers to think.

GAVIN: Sometimes, it seems like the same people were doing both.

BOB: Right—the line between computer scientists and cognitive psychologists was blurred. But you had people like Newell and others who saw the complex architecture of computers as a way to understand the cognitive architecture of the brain.

GAVIN: This is a dance. On one hand, you have computer scientists building complex programs and hardware systems to mimic the brain, and on the other hand, you have psychologists who are trying to argue how to integrate a human into the system.

A simple example is the old “green screen” cathode-ray tube (CRT) monitor, where characters lit the screen up in green. One anecdote has the hardware technologists pulling their hair out because the psychology researchers argued that a move from all-caps to mixed-case type, with black characters on a white background, would be better from a human performance perspective. This was a debate because the hardware technology required to make it easier for the human was vastly different from a green-screen CRT. Even with this story, you can imagine how having computer scientists and psychologists in the same room advanced the field.

BOB: It’s really the basis of where we are today. Even though computers and brains don’t work the same way, the work of people like Allen Newell created insights on both sides. Especially on the computing side, conceptualizing a computer as being fundamentally like a brain helped make a lot of gains in computing.

GAVIN: Psychology and computer science can work hand in hand.

BOB: Ideally, they would. But it doesn’t always happen that way. For instance, in today’s world, most companies are hiring computer scientists to do natural language processing and eschewing linguists or psycholinguists. Language is more than a math problem.

The point: Psychology and computing should go hand in hand. In the past, computer scientists with a psychology background generated new, creative insights.

Bouncing back from failure

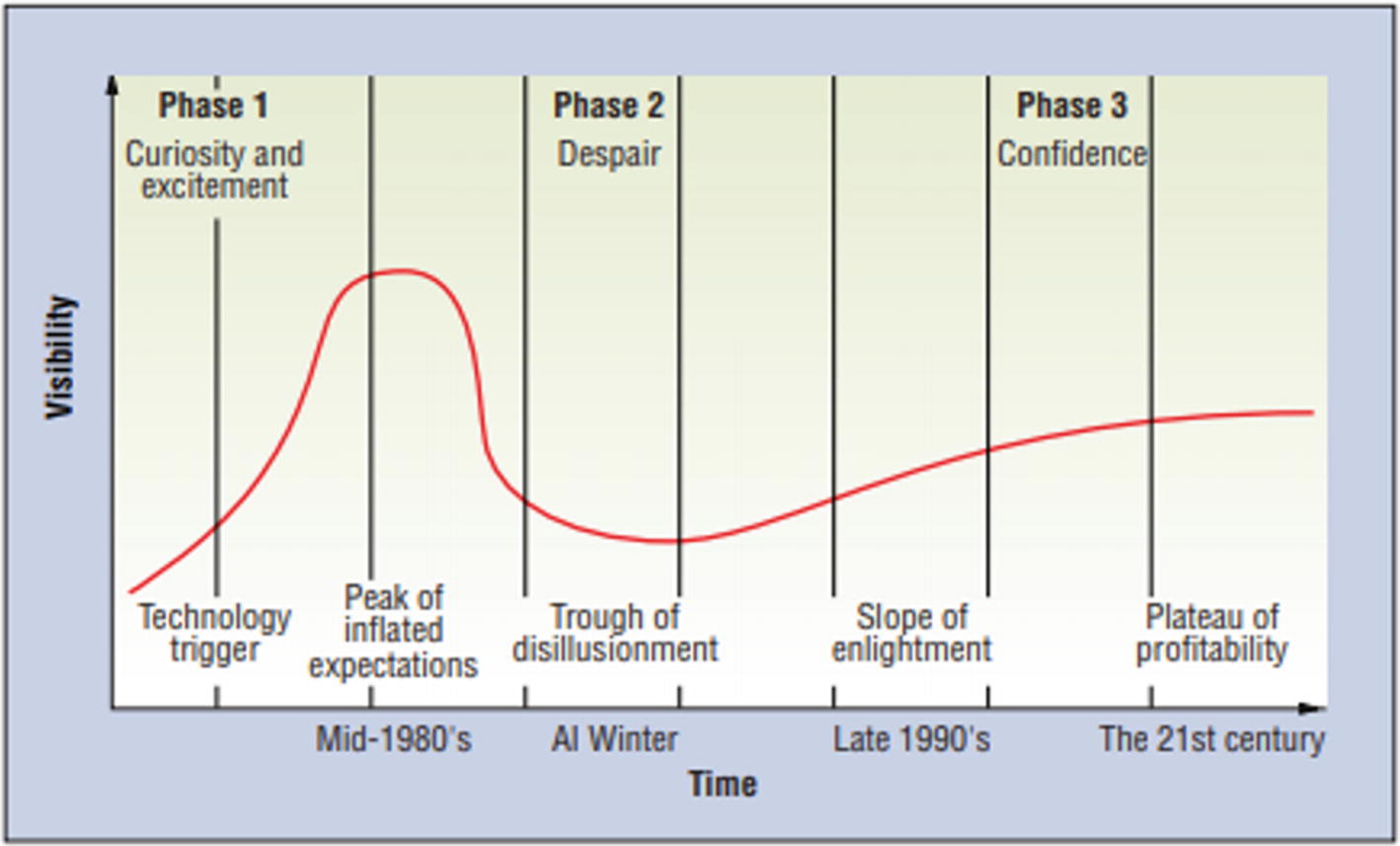

The hype cycle for new technology

AI’s gradual climb back to solvency after the AI winter of the late 1980s is a story of rebirth that can also provide lessons for us. One of the key components of this rebirth was a type of AI called neural networks, which we alluded to earlier. Neural networks actually date back to at least the 1950s,68 but they became popular in the 1990s, in the wake of the AI winter, as a means to continue AI research under a different name.69 They brought a newfound focus on a property of intelligence that Schank emphasized in 1991. Schank argued that “intelligence entails learning,”70 implying that true AI needs to be able to learn in order to be intelligent. While expert systems had many valuable capabilities, they were rarely capable of machine learning. Artificial neural networks offered more room for machine learning capabilities.

Artificial neural networks, sometimes simply called neural networks, are a type of AI system that is loosely based on the architecture of the brain, with signals sent between artificial neurons in the system. The system features layers of nodes that receive information; each node computes a weighted sum of its inputs and, if that sum crosses a threshold, passes information on to the next layer of nodes, and so forth.71
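The layered, threshold-based flow of information can be sketched in a few lines of Python. This is a simplified illustration, not how modern networks are built: the weights and thresholds below are hand-picked assumptions (nothing is learned), and the node simply outputs 1 or 0.

```python
def layer_output(inputs, weights, thresholds):
    """Each node sums its weighted inputs and fires (1) only if the sum reaches its threshold."""
    outputs = []
    for node_weights, threshold in zip(weights, thresholds):
        total = sum(w * x for w, x in zip(node_weights, inputs))
        outputs.append(1 if total >= threshold else 0)
    return outputs


def forward(inputs, layers):
    """Pass a signal through the layers in order; each layer's output feeds the next."""
    signal = inputs
    for weights, thresholds in layers:
        signal = layer_output(signal, weights, thresholds)
    return signal


# Hand-picked (not learned) weights for illustration.
# Hidden node 0 acts as OR, hidden node 1 as AND; the output node fires
# when OR is on and AND is off, which is the exclusive-or of the two inputs.
XOR_NET = [
    ([[1, 1], [1, 1]], [1, 2]),  # hidden layer: two nodes, thresholds 1 and 2
    ([[1, -1]], [1]),            # output layer: one node, threshold 1
]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, forward(pair, XOR_NET))
```

Running the loop shows the output node firing only when exactly one input is on, something no single threshold node can do by itself, which hints at why layering matters.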

Neural networks come broadly in two types: supervised and unsupervised. Supervised neural networks are trained on a relevant dataset for which the researchers have already identified correct conclusions. If they are asked to group data, they will do so based on the criteria they have learned from the data on which they were trained. Unsupervised neural networks are given no guidance about how to group data or what correct groupings might look like. If asked to group data, they must generate groupings on their own.
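The difference lies in what information the learner is given, and that can be shown without a neural network at all. The sketch below is an assumption-laden stand-in: it uses two very simple non-neural methods (a nearest-average classifier and a two-group clustering routine) on made-up one-dimensional data, purely to contrast learning with labels against learning without them.

```python
def fit_with_labels(points, labels):
    """Supervised: the labels say which group each training point belongs to.
    Learns one average value per label."""
    sums, counts = {}, {}
    for x, label in zip(points, labels):
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict_label(averages, x):
    """Assign a new point to the label whose learned average is closest."""
    return min(averages, key=lambda label: abs(averages[label] - x))


def group_without_labels(points, iterations=10):
    """Unsupervised: no labels are given. Split the points into two groups by
    repeatedly assigning each point to the nearer center and re-averaging."""
    low, high = min(points), max(points)  # starting guesses for the two centers
    for _ in range(iterations):
        group_low = [x for x in points if abs(x - low) <= abs(x - high)]
        group_high = [x for x in points if abs(x - low) > abs(x - high)]
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return group_low, group_high


# Made-up measurements for illustration.
POINTS = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
LABELS = ["short", "short", "short", "long", "long", "long"]

averages = fit_with_labels(POINTS, LABELS)
print(predict_label(averages, 4.0))   # uses criteria learned from labeled data
print(group_without_labels(POINTS))   # groupings the routine found on its own
```

The supervised routine can name its answer ("long") because humans supplied the names. The unsupervised routine can only report that two clusters exist; deciding what they mean is left to a person.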

Neural networks also had significant grounding in psychological principles. The work of David Rumelhart exemplifies this relationship. Rumelhart, who worked closely with UX pioneer Don Norman (among many others), was a mathematical psychologist whose work as a professor at the University of California, San Diego was similar to Newell’s. Rumelhart focused on modeling human cognition in a computer architecture, and his work was important to the advancement of neural networks, specifically back propagation, which enabled machines to “learn” if exposed to many (i.e., thousands of) instances and non-instances of a stimulus and response.72

Feigenbaum said that “the AI field…tends to reward individuals for reinventing and renaming concepts and methods which are well explored.”73 Neural networks are certainly intended to solve the same sorts of problems as expert systems: they are targeted at applying the capabilities of computer technologies to qualitative problems, rather than the usual quantitative problems. (Humans are very good at qualitative reasoning; computers are not good at all in this space.) Supervised neural networks in particular could be accused of being a renamed version of expert systems, since the training data they rely on could be conceived of as a knowledge base and the neuron-inspired architecture as an inference engine.

There is some truth to the concept that AI’s comeback is due to its new name; this helped its re-adoption in the marketplace. After all, once a user (individual, corporate, or government) decides that “expert systems,” for example, do not work for them, it is unlikely that they’ll want to try anything called “expert systems” for a long time afterward. If we want to reintroduce AI into their vocabularies, we must have some way of indicating to these users that a technology is different enough from its predecessor to be worth giving another chance. The rise of a new subcategory of AI with a new name seems to have been enough to do that.

However, slapping a new name on a similar technology is not enough to regain users’ trust on its own. The technology must be different enough from its predecessor for the renaming to seem apt. Neural networks were not simply a renamed, slightly altered version of expert systems. They have radical differences in both their architecture and their capabilities, especially those neural networks that adjust the weights of their artificial neurons according to how effectively those neurons produce an accurate result (i.e., “back propagation”). This is just the sort of learning Schank believes is essential to AI.
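The core idea of adjusting weights according to error can be sketched with a single artificial neuron. This is a deliberately reduced illustration, not full back propagation: it trains one neuron with the so-called delta rule, whereas back propagation applies the same error-driven adjustment through multiple layers. The task (learning logical OR), the learning rate, and the number of passes are all assumptions chosen for the example.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """The neuron's output: a squashed weighted sum of its inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_neuron(examples, epochs=2000, learning_rate=1.0):
    """Start with no knowledge (all zeros) and nudge the weights after every example."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            output = predict(weights, bias, inputs)
            # How wrong the output was, scaled by how sensitive the neuron is right now.
            delta = (output - target) * output * (1.0 - output)
            # Move each weight a little in the direction that reduces the error.
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias


# Instances and non-instances of logical OR: (inputs, correct answer).
OR_EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train_neuron(OR_EXAMPLES)
for inputs, target in OR_EXAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs)))
```

No one typed in a rule for OR. The neuron arrived at workable weights only by being shown examples and corrected, which is the contrast with the hand-built knowledge base of an expert system.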

Norman and the rise of UX

As AI morphed, so did user experience. The timing of the rise of neural networks (in the early 1990s) roughly coincides with Don Norman’s coining of the term “user experience” in 1993. Where HCI was originally focused heavily on the psychology of cognitive, motor, and perceptual functions, UX is defined at a higher level—the experiences that people have with things in their world, not just computers. HCI seemed too confining for a domain that now included toasters and door handles. Moreover, Norman, among others, championed the role of beauty and emotion and their impact on the user experience. Socio-technical factors also play a big part. So UX casts a broader net over people’s interactions with stuff. That’s not to say that HCI is/was irrelevant; it was just too limiting for the ways in which we experience our world.

But where is this leading? As of today, UX continues to grow because technologies build on each other and the world is getting increasingly complex.74 We come into contact daily with things we have no mental model for, interfaces that present unique features, and experiences that are richer and deeper than they’ve ever been. These new products and services take advantage of new technology, but how do people learn to interact with things that are new to the world? These new interactions with new interfaces can be challenging for adoption.

More and more, those interfaces contain AI algorithms. A child growing up a decade from now may find it archaic to have to type into a computer when they learn from the very beginning that an Alexa with AI natural language understanding can handle so many (albeit mundane) requests. We may not recognize when our user experience is managed by an AI algorithm. Whenever a designer/developer surfaces the user interface to an AI system, there is an experience to evaluate. The perceived goodness of that interface (the UX) may determine the success of that application.

As UX evolves from HCI, it becomes more relevant to AI.

Ensuring success for AI-embedded products

For those who code, design, manage, market, maintain, fund, or simply have an interest in AI, an understanding of the evolution of the field and the somewhat parallel path of UX is necessary. Before moving on to Chapter 3, which will address some of today’s verticals of AI investment, a pause to register an observation is in order: there is a distinct possibility that another AI winter is on the horizon.

The hype is certainly here. There is a tremendous amount of money going into AI. Commercials touting the impressive feats of some new application come at us daily. Whole colleges are devoting resources, faculty, students, and even buildings toward AI. But as described earlier, hype can often be followed by a trough.

In many ways, we need to return to Licklider’s perspective that there exists a symbiosis between the human and the machine. Each has something to contribute. Each will be more successful if there is an understanding about who does what, when, and how. For AI to succeed, to avoid another winter, it needs good UX.

AI is at an important crossroads. In order to understand how AI can achieve success, we must set one key assumption: let’s assume the underlying code (the algorithms) works, that it is functional and purposeful. Let’s assume also that AI can deliver on the promise and opportunity. The challenge is whether success will follow. Know that success does not simply happen; it needs to be carefully developed and properly placed. Google was not the first search engine. Facebook was not the first social media network. There are many factors that make one product a success and another a historical footnote.

The missing element is not the speed of AI or even the possible uncovering of patterns previously unknown. It is whether the product you build can take advantage of the insight or idea and be successful. The key position put forth is that AI is here, but much around it needs to be shaped, developed, and made more usable. The moment has come for the two fields that developed in parallel to converge.

BOB: At what point does our story of the computer scientist and psychologist merge? The term HCI itself embodies not just computers but the interaction with humans.

GAVIN: Again, the dance follows the AI timeline. From the beginning, there was convergence on the future of computing and how it may integrate with humanity. Neural networks brought the idea that computer networks might mimic the brain. Programmers built AI systems, and cognitive psychologists focused on making technology work for people.

BOB: The time is now. We started our careers over 25 years ago pleading for a budget to make the technology “user friendly” or “usable.” Today, the value of a good product experience is not just nice to have, but clearly linked to the brand experience and tied to company value.

GAVIN: People speak about the Apple brand with high reverence. Much time and effort went into the design of not only the product (e.g., iMac, iPod or iPhone), but the design of the brand experience. The Apple brand almost transcends a singular product. Perhaps out of necessity, businesses recognize the value of the brand experience—as AI embeds itself into new products, good design matters. Focus on the experience is where the emphasis needs to be. No one cares about the AI algorithm or if an unsupervised neural net was used. People care about good experiences. Or more aptly, people pay for good experiences.

The point: HCI’s time is now. AI technology is at a point where the differentiator for success is the user experience.

Conclusion: Where we’re going

In the next chapter, we will explore AI at the 30,000-foot level. We will describe core elements of how AI works so we can explore areas where we can apply UX to improve AI outcomes.

It is important to note that the emphasis will not be on detailed technical information; in fact, let’s assume the AI algorithms at the heart of it all work just fine. What can we do as product managers, marketers, researchers, UX practitioners, or even technophiles to understand the potential gaps and opportunities for nonprogrammers and non-data scientists to impact product success? The next chapter looks at the areas where AI is emerging and identifies where a better experience would make a difference.