When we look at the ground we have covered so far in this book, we see that AI and UX share some common DNA. Both started with the advent of computers, and both were driven by a desire to create a better world. We saw how UX evolved from a need to bring the information age closer to everyone.1 AI grew similarly, with some fits and starts, and is now part of mainstream conversation.

The benefits AI can bring are legion. However, there is a risk of another AI winter for reasons that have less to do with potential and more to do with perception. For many people, there is still a hesitance, even a resistance, to adopting AI. Perhaps it is the influence of sci-fi movies that have planted images of Skynet and the Terminator in our minds, or simply fear of what we don't understand. AI has an image problem, and the risk remains that people will become disillusioned with AI again.

We believe that AI is ready. AI is more accessible than ever before, not just to the big industry players but to many others, from startups to avid technophiles who can experiment with AI tools. As a result, AI is being embedded into new product ideas across almost every industry.

But AI needs to be more than technology. The prescription for success is not just to embed AI into the product but also to deliver a solid user experience. We believe that this is the key.

BOB: Let’s get something straight. No company wants to build a product with a terrible experience.

GAVIN: But think about it. You don't have to work too hard to remember experiences where you caught yourself saying, "What were they thinking when they made this?"

BOB: Several years ago, I used to give a talk that was more on the foundations of UX. Every application’s user interface presents an experience. What we need to realize is that there is a designer behind the application and it’s the designer’s choice as to what that experience is: it can be amazing or a dud.

GAVIN: I don’t really think that programmers wake up in the morning and say, “I’m gonna make things just a little harder for those users.” But here’s the thing: if programmers don’t set out to create bad user experiences, why are there so many bad user experiences?

BOB: Yup, that is a paradox. There are a lot of reasons why so many products have such mediocre experiences—cost, awareness of users, time, laziness, and so on.

GAVIN: I would say though that most product owners underestimate how hard it is to design good experiences.

BOB: Luckily, technology has advanced to where microwaves and clock radios no longer default to flashing “12:00.” Fixing the time after a power outage was so annoying. But, just this last Christmas, I spent hours trying to set up new gadgets for my house only to scream out in frustration.

GAVIN: I believe experiences matter. A product can promote the most amazing features, but, thinking of the AI-UX principles, is the interaction intuitive when I set up or use the product? Do I trust that the product works? Think of products you love and use every day. How much of that love is because the user experience is frictionless and enjoyable?

BOB: Technology has become a commodity. What can set a product apart is good design. The same logic applies to AI-enabled products.

The point: Designing for simplicity is hard; it takes commitment.

What makes a good experience?

Was it easy to order?

Was it easy to set up?

If you used instructions, did they help? (Were they even necessary?)

Could you get the product to work quickly?

Did it work like you thought it would?

Do you still use it or does it sit idle after a month?

What were they [designers, engineers, and product people] thinking when they designed this? It doesn’t work the way I think.

Again, manufacturers do not set out to make products that disappoint, but such products exist. Why? Sometimes the simple answer is that the product creators did not spend enough time on the true need. Put another way, they built the product because they found the technology so compelling that they assumed everyone else would be captivated by it too.

Often users find novel ways to use products far different from what the company or organization expected.

GAVIN: I believe watches have always been fashionable, but now many of us don’t use watches to tell time anymore. We use them as fashion accessories. The functionality of my watch has been replaced by my mobile phone.

BOB: And your phone tends to be more accurate than your old Timex watch too!

GAVIN: This is a powerful example because the ubiquity of mobile phones really changed behavior. Think about early mobile phones. Some phones let you set an alarm as a feature. Now, you can set multiple alarms on your phone. I can even say, “Hey, Google, set an alarm at 7 a.m.” And she replies, “Got it. Alarm set for 7 a.m. tomorrow.”

BOB: More to the point, did the early phone manufacturers think that their product would decrease sales of physical alarm clocks or lead people to wear watches less often than they did 20 years ago?

The point: Even the most seemingly mundane features can change behaviors and change markets for products. When people use a product, their expectations and behaviors change in unanticipated ways.

Understanding the user

How do we better understand how people use products? A better understanding of the user experience is the answer. The ultimate purpose of user-centered design (UCD) is to build products and services around users and their needs.

We have been involved in the research and design of all manner of applications and products for decades, and we have seen many different phases and approaches to user interface design. The approach we have seen succeed most often is user-centered design.

User-centered design (UCD) places user needs at the core. At each stage of the design process, design teams focus on the user and the user’s needs. This involves a variety of research techniques to understand the user, and the findings inform product design.

UCD2 advocates putting the user and the user’s needs first during each phase of the design process. While it seems obvious that one would design with the user in mind, the reality is that surprisingly few development efforts strictly follow this process end to end.

Research matters

It is hard to disagree with the idea that any product is better if the intended user has a good experience. Designing these seamless experiences is the result of hard work that maps what the user expects and needs into the design and interaction models for the product.

User research is the key method for capturing the needs of the intended user and integrating these insights into the product design.

BOB: In order for UCD to really work, user research is needed. I have heard many creative and design directors say that they “know what the user needs!” But the reality is that evidence describing user needs is better than belief, however adamantly it is stated.

GAVIN: And at the very least, designers should be humble as they recognize that iteration can only make things better. Test early versions with prospective users. Get feedback early and often. Don’t be afraid to be wrong. Know that the design will be better with this feedback.

BOB: And let someone else do the research. Let an objective party evaluate the initial designs. It is too easy to fall in love with something that you have poured time and energy into building. The product becomes “your baby,” and let’s face it—it is hard to hear someone call your baby ugly. You might ignore the criticism, or you might get defensive to protect it.

GAVIN: But this is the best thing to hear—especially early in the design process. The parts that are “ugly” appear as confusion or outright frustration when naive users interact with your product. Learn from what users think and improve the design.

The point: Make mistakes faster by having users interact with early-stage designs. Then, improve and repeat.

Does research really matter?

If I had asked people what they wanted, they would have said faster horses.

The question “does research really matter?” places people into two groups: those who believe true innovation comes from gifted visionaries and those who believe that understanding what people think matters to sound design.

To a researcher, this is not even a good question, because it is leading (i.e., it steers the answer in a particular direction and is biased). While some people might be truly visionary, the reality is that all too often research supplies the evidence that drives innovation: it reveals the need that can be filled. Identifying this need and designing around it is where user research is best suited.

BOB: When I think of Henry Ford’s often-recited adage, I shake my head. Rhetorically, it implies that insight cannot be obtained from the user. If Henry Ford had asked the question, he would not have made the Model T.

GAVIN: I think Henry Ford would have said this in the mid-1920s. Think of The Great Gatsby, which was set at around the same time.

BOB: Whether in the book or in its theatrical interpretations, automobiles were prominent.

GAVIN: Exactly. Where were the horses? In The Great Gatsby, no one talked about horses. Driving and owning an automobile was a key theme.

BOB: Remember, Henry Ford did not invent the automobile. He invented a conveyor belt system to make cars more efficiently.

GAVIN: And that is why Henry Ford never said that quote.3 What he did say was, “Any color, so long as it is black.”

The point: While great innovations can indeed come from gifted designers, obtaining user feedback on designs early and often is a recipe for success.

Objectivity in research and design


The UX lens

Many readers of this book may not have the skill (or inclination!) to debug Python code, but we believe that there is much more to AI than the code. Sufficient attention is usually given to the AI engine proper. AI-enabled products can benefit by shifting attention to everything around the AI engine. How can we improve everything else?

BOB: A common output of AI is a number, or score, such as 0.86: a value between 0 and 1. Let’s take your credit card fraud example. You’re out to dinner and make a payment using your credit card…

GAVIN: So, as my payment transaction is processing, an AI program is analyzing it and thinking about fraud. In this example, the AI outputs 0.86, which means the transaction could be fraudulent (i.e., the algorithm treats anything above 0.8 as likely fraud).

BOB: That is the extent of AI. The outcome was 0.86. But the experience with the AI-enabled fraud detection product is an alert to my phone in the form of a text message.

GAVIN: It could read, “WARNING. POTENTIAL FRAUD DETECTED ABOVE 0.80. CODE F00BE1DB.”

BOB: Or someone could spend time designing a better interaction. The message could be much more friendly and provide the user with a call to action, such as “To authorize this purchase, Reply Yes.”

The point: Work on what touches the user, such as messaging and user interactions. What AI provides can be quite amazing, but what makes the product a success is the experience.
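To make the split between the AI output and the experience around it concrete, here is a minimal Python sketch. It assumes a model has already produced a score between 0 and 1 and uses the 0.8 threshold from the dialog; the `Transaction` fields and both function names are hypothetical. Both functions make exactly the same decision from the same score; only the message the cardholder sees differs.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed threshold, taken from the dialog: scores above 0.8 are treated as likely fraud.
FRAUD_THRESHOLD = 0.8


@dataclass
class Transaction:
    merchant: str  # hypothetical fields, for illustration only
    amount: float


def raw_alert(score: float) -> Optional[str]:
    """Alert written from the engine's point of view: accurate but unfriendly."""
    if score > FRAUD_THRESHOLD:
        return (f"WARNING. POTENTIAL FRAUD DETECTED ABOVE {FRAUD_THRESHOLD:.2f}. "
                "CODE F00BE1DB.")
    return None


def friendly_alert(score: float, txn: Transaction) -> Optional[str]:
    """Same decision, phrased for the cardholder with a clear call to action."""
    if score > FRAUD_THRESHOLD:
        return (f"Did you just spend ${txn.amount:.2f} at {txn.merchant}? "
                "To authorize this purchase, reply YES.")
    return None


if __name__ == "__main__":
    dinner = Transaction(merchant="Bistro", amount=42.0)
    print(raw_alert(0.86))
    print(friendly_alert(0.86, dinner))
```

For a score of 0.86 on a $42.00 dinner at a made-up restaurant called Bistro, `friendly_alert` returns "Did you just spend $42.00 at Bistro? To authorize this purchase, reply YES." The AI engine is untouched; all of the improvement lives in the layer that talks to the user.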

We think AI can be seen through the lens of how we look at the user experience of any product or application. AI is no different. To be successful, it must have the essential elements of utility, usability, and aesthetics.

So what are the principles and what is that process?4

Key elements of UX

UX is not one thing for all users. It is multivariate. The various constituent parts, which we introduce next, combine to provide the user with an experience that is beneficial and maybe even delightful.

Utility/functionality

Probably the most important thing that defines any application is what it does; we call this “utility” or “functionality” or “usefulness.” Basically, is there a perceived functional benefit? In more formal terms, is the application (tool) fit for the purpose for which it was designed? Does it do what the user needs? A hammer is good for pounding nails, not so good for putting on makeup. To be useful, any application needs to have the features and functions that a user expects and needs. These are table stakes for a successful product.

BOB: Let’s go back to the car example with a natural language voice assistant. The table stakes here are that the AI needs to reliably understand human speech. In a car, that could mean touchless controls like “call mom,” “turn on the heater,” “find a destination on the navigation system,” and so on.

GAVIN: Earlier speech recognition in cars was very command driven and typically the user needed to know the exact voice command to say. Do you say, “Place call…” or “Make call…” or “Dial…” or “I want to call…” or even “Can you please call…”

BOB: The concept of using your voice has a benefit because the driver can keep their eyes on the road. But, too often, the rigid structure required the driver to remember the phrase. And, as the driver guesses commands, random noise can interfere. The driver might have been correct on the command, but the external noise caused the system to reply, “Sorry, I did not understand.”

GAVIN: Instead, a better user experience would be to design an interaction that accepts many alternative commands in the presence of typical ambient noises found in cars.

The point: Designing frictionless, effortless interactions lets users experience the utility and functionality. Early attempts to put AI-based speech recognition in cars saw poor usage, possibly not because voice activation lacked benefit but because drivers found it difficult to engage.
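The difference between a rigid command grammar and a forgiving one can be sketched in a few lines of Python. This is a deliberately simplified stand-in: production voice assistants use trained language-understanding models rather than string matching, and nothing here addresses the ambient-noise problem. The phrase lists and function names are hypothetical; the idea is only that several phrasings of the same request resolve to the same intent.

```python
import re
from typing import Optional

# Hypothetical phrasings a driver might use to start a call. A rigid system
# accepts exactly one of these; a more forgiving design accepts all of them.
CALL_PREFIXES = (
    "place a call to", "place call to", "make a call to", "make call to",
    "i want to call", "i would like to call", "call", "dial", "phone",
)
# Hypothetical politeness and filler phrases stripped before matching.
FILLERS = ("can you", "could you", "please")


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation, remove filler phrases, collapse spaces."""
    text = re.sub(r"[^a-z' ]", " ", utterance.lower())
    for filler in FILLERS:
        text = re.sub(rf"\b{filler}\b", " ", text)
    return " ".join(text.split())


def parse_call_intent(utterance: str) -> Optional[str]:
    """Return the contact to call, or None if this is not a call request."""
    text = normalize(utterance)
    for prefix in CALL_PREFIXES:
        if text.startswith(prefix + " "):
            contact = text[len(prefix) + 1:].strip()
            return contact or None
    return None


if __name__ == "__main__":
    for phrase in ("Call Mom", "Dial Mom", "Can you please call Mom?",
                   "I want to call Mom", "Turn on the heater"):
        print(phrase, "->", parse_call_intent(phrase))
```

The first four phrasings all resolve to the contact "mom," while "Turn on the heater" is correctly left for some other intent. The driver no longer has to remember which one phrase the system was built around.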

Usability

Utility and usability are often conflated, but they are independent constructs. Let’s consider a seemingly absurd example: steering a car. We all can use a steering wheel to turn a car to the right or to the left. But if you think about it, there are other ways in which a car could be controlled: a keyboard where you would type “turn right,” a joystick, or a remote control. There is a difference between a function and how that function is implemented, and some implementations are more usable than others.

Usability is defined as the extent to which a product enables the user to perform the functions for which it is designed efficiently, effectively, and safely.

Because car manufacturers aligned to a steering wheel, people who know how to drive can rent a car and, after some seat and mirror adjustments, drive a make and model of a car they have never driven before with a high degree of proficiency. These are standards that help guide how people interact with different instantiations of a system.

But what about new systems, or systems requiring new controls? How do you create an interaction that is usable?

1. Early-stage research (exploratory or discovery) to capture user expectations and understand what people think.
2. Construction of a prototype.
3. Formative research, such as a usability test, to identify areas of confusion and gaps preventing a satisfactory experience.
4. Iterative design and more usability tests to further refine the product.

A usability test is a qualitative study where intended users who are naive to the product are given context and asked to complete tasks for which the product was designed. Behaviors and reactions are observed. A usability test typically involves small sample sizes and feeds into an iterative design process.

More detail will be provided in the Principles of UCD section later in this chapter.

BOB: Apple said swiping and pinching on a phone are natural gestures that people just intuitively know how to perform.

GAVIN: Really? I remember playing with my first iPhone in 2007. I saw the commercials by AT&T. That is where I learned to swipe and pinch.

BOB: Those commercials might have been the most expensive user manuals ever made!

GAVIN: Apple has patented scores of gestures that it calls natural. You have to take a look at Apple’s patents like “multi-touch gesture dictionary.”5 My favorites are Apple’s patents for print and save. For print, you put three fingers together, say, at the center, and branch out to form the points of a triangle. But to save, you do the opposite. Three fingers stay apart like points on a triangle and move inwards to the center. How is this something people know?

BOB: If these gestures were so natural, then why did Apple go to the trouble of patenting so many?

GAVIN: This is how myths are made. There is a 2009 study that describes the gestures that people know.6 But we did studies on multi-touch mobile phones in 2006 and 2007 where I saw people struggle. By 2009, people may have learned and adapted, but the claim that gestures have always been known and are natural is hard for me to reconcile with what I saw in the lab on the LG Chocolate, Palm, and first iPhone.

The point: Not everything is natural or springs to mind when someone interacts with technology. Research and design go hand in hand to make the experience usable.

There are many products that have the same functions, but those functions are rendered in different ways, some more usable than others. An obvious example is booking a seat on an airline. Many sites will sell the exact same seat, but they all do it a little differently, and some do it better than others. The function or purpose is the same, but the usability is different.

UX, AI, and trust

For a moment, let’s consider one of our AI-UX principles: trust. One of the reasons that AI often fails to deliver for users is that the outputs are off point. That is, we can’t trust what we see or hear. The two dimensions of UX discussed so far (utility and usability) speak directly to trust. If we build an app that lacks sufficient utility or suffers from poor usability, users will fail to trust the app and abandon it. The same will happen with an AI app.

We find Alexa to have a lot of utility in some specific areas: giving the time, playing the news, telling the weather, and so on. And for these tasks, Alexa is also very usable. Thus, in a narrow but important way, Alexa serves the user well. Yet there are tens of thousands of skills available. We have tried dozens of skills that we thought would be useful, but we deleted them quickly because they were not usable. When Bob tried a new skill to get sports scores, it launched his Roomba by mistake; he appreciated the clean floor, but the Roomba was not what he had asked for. It’s aggravating at best.

Admittedly, designing for voice is hard, and often the design fails on usability in two ways: the natural language processing is simply not robust enough to handle many cues, and the voice designers have not done a good job of designing the dialog. Many of these voice skills also fail on utility because they do not sufficiently anticipate the users and the use cases. Thus, Alexa is relegated to doing little more than setting a timer or gathering items for a shopping list.

BOB: We both started out in telecommunications, back when phones were wired and could survive a hurricane. Even when the power went out in the neighborhood, your phone usually still worked. Back when we were at Ameritech, we did an internal study on dialing. What we found was that people were about 98% accurate when dialing any single digit.

GAVIN: That is pretty accurate, but when dialing seven or now ten digits to complete a call, an error can occur. Doing the math, it happens maybe once or twice every dozen or so times you manually enter a phone number.

BOB: Precisely. Even with what seems to be a highly practiced task, errors happen. Who can say they have never made a mistake when dialing a number from a piece of paper?
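Gavin’s “doing the math” can be spelled out. Assuming each digit is dialed correctly with probability 0.98, independently of the other digits (a simplifying assumption), the chance of at least one error in an n-digit number is:

```latex
\begin{aligned}
P(\text{at least one error}) &= 1 - 0.98^{n} \\
n = 7:\quad 1 - 0.98^{7} &\approx 1 - 0.868 = 0.132 \\
n = 10:\quad 1 - 0.98^{10} &\approx 1 - 0.817 = 0.183
\end{aligned}
```

Over a dozen calls, that works out to roughly 12 × 0.132 ≈ 1.6 misdials for seven-digit numbers and 12 × 0.183 ≈ 2.2 for ten-digit numbers, consistent with “once or twice every dozen or so.”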

GAVIN: Imagine a voice assistant that is always listening for that wake word. Even with a high accuracy rate, mistakes happen.

BOB: When a person makes a mistake, we say, “Oh, I fat-fingered the number.” But humans are less forgiving of machines. If machines make “fat-finger” errors, we are more apt to say, “This thing sucks!”

The point: Because people have such a low tolerance for mistakes made by machines, trust is very important to the success of any AI-enabled product.

Trust has a very powerful influence on perceived success. Consider the challenge posed by self-driving cars. In 2017, there were over 6.4 million vehicle crashes in the United States. That is a crash roughly every five seconds by human drivers. According to Stanford University, 90% of motor vehicle accidents are due to human error.7 What is the potential benefit of autonomous vehicles? In California, there are 55 companies with self-driving car permits to conduct trials. From 2014 to 2018, there were a total of 54 accidents involving these autonomous vehicles, and according to an Axios report, all but one were due to human driver error, not the AI.8
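The “crash every five seconds” figure follows from dividing the number of seconds in a year by the number of crashes:

```latex
\frac{365 \times 24 \times 3600\ \text{s}}{6.4 \times 10^{6}\ \text{crashes}}
  = \frac{31{,}536{,}000\ \text{s}}{6{,}400{,}000\ \text{crashes}}
  \approx 4.9\ \text{s per crash}
```

Because the count is “over” 6.4 million, the true interval is slightly shorter still.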

But the problem is that humans have trust issues with autonomous vehicles. According to a AAA study, 73% of respondents expressed lack of trust in the technology’s safety.9 However, the thesis of the user experience playing a pivotal role in user acceptance still holds. “Having the opportunity to interact with partially or fully automated vehicle technology will help remove some of the mystery for consumers and open the door for greater acceptance,” said Greg Brannon, AAA’s director of automotive engineering and industry relations. The AAA study further stressed that “experience seems to play a pivotal role in how drivers feel about automated vehicle technology, and that regular interaction with advanced driver assistance systems (ADAS) components like lane keeping assistance, adaptive cruise control, automatic emergency braking and self-parking, considered the building blocks for self-driving vehicles, significantly improve consumer comfort level.”

Experience and trust matter. Positive experiences with AI-enabled driving features can improve trust in more advanced AI technologies.

Weirdness

As AI-enabled products proliferate, more information becomes available for the system to analyze. As AI thinks about your data, how should it engage without making things weird?

By weird, we mean situations that can make human-to-AI interactions uncomfortable, awkward, or unusual.

GAVIN: Imagine your daily commute in your car. There is a lot of data that can be collected about your driving habits, from what you are listening to on the radio to your speed to route taken—all time and date stamped. The car even knows if you are wearing your seatbelt.

BOB: And based on the key fob you are using, it can probably distinguish between different drivers. All of this data is collected passively in the background while you drive to work.

GAVIN: Now, suppose an AI system could recognize patterns in your behavior. Logically, it could use this insight to be proactive and save you time and effort. For example, if there is a major traffic jam on your normal route to work, it could inform you and even suggest an alternative route.

BOB: I would expect there is high value in this suggestion based on AI constantly thinking about me and my commute to work. This would be a frictionless experience for the user.

GAVIN: But it could suggest a lot of things from my patterns. Perhaps I go to the gym every other day after work. It could ask if I want directions to the gym. But where is the line on what to suggest? What if I go to places that I would rather the AI not make suggestions about?

AI making a recommendation could freak me out. There needs to be a “weirdness scale” to designate what is appropriate and helpful from what is downright creepy.

The point: AI has the potential to offer suggestions based on personal patterns of behaviors and habits, but where is the line between what is acceptable and unacceptable? This is a good example of why AI and UX need to be linked.

A weirdness scale

The concept of a continuum from inappropriate to appropriate actions is not about precision; it is about bringing a user-centered mindset to what AI should and should not do. This weirdness scale would ground the product team in reflecting on the appropriateness of AI suggestions. Patterns formed from user behaviors, both explicit (e.g., a driver entering an exact address for directions) and passive (e.g., the car enters a shopping area and parks for 45 minutes before heading home), are target-rich opportunities for an AI system. Whether or not to trigger an action can be shaped and guided through a UX lens.

When a pattern is identified and your AI product is ready to offer a recommendation, the event can be called a trigger. The actions that follow can be shaped with the user’s needs at the center.

Having a concept such as this weirdness scale during the design stage of any AI-enabled product has value. It can set the perspective for the team that there are triggers that should and should not be acted upon. It establishes for the product team that there are boundaries that should be considered. This can lead to interactions with the user to help refine the AI engine by capturing the times when the user explicitly pressed or said “No” to the recommendation. The team can brainstorm and anticipate the possible triggers to illustrate the breadth of the scale and to define “guardrails” that should be recognized.
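One way a team might encode a weirdness scale during design is sketched below in Python. Everything in it is hypothetical: the five-point scale, the ratings a team would assign to each trigger, the stricter ceiling for passive (inferred) triggers, and the rule that a trigger is retired after two explicit “No” responses. It is meant to show the shape of the guardrails described above, not a prescribed implementation.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict


class Weirdness(IntEnum):
    """Hypothetical five-point scale: 1 is clearly helpful, 5 is downright creepy."""
    HELPFUL = 1
    ACCEPTABLE = 2
    BORDERLINE = 3
    UNCOMFORTABLE = 4
    CREEPY = 5


@dataclass
class Trigger:
    name: str
    source: str           # "explicit" (e.g., address entered) or "passive" (e.g., inferred stop)
    weirdness: Weirdness  # rating the product team assigns during design


@dataclass
class SuggestionPolicy:
    max_weirdness: Weirdness = Weirdness.ACCEPTABLE  # guardrail chosen by the team
    max_declines: int = 2                            # retire a trigger after this many "No"s
    declines: Dict[str, int] = field(default_factory=dict)

    def ceiling_for(self, trigger: Trigger) -> Weirdness:
        # Assumption: passive (inferred) triggers are held one level stricter.
        if trigger.source == "passive":
            return Weirdness(max(self.max_weirdness - 1, Weirdness.HELPFUL))
        return self.max_weirdness

    def should_suggest(self, trigger: Trigger) -> bool:
        if trigger.weirdness > self.ceiling_for(trigger):
            return False
        return self.declines.get(trigger.name, 0) < self.max_declines

    def record_response(self, trigger: Trigger, accepted: bool) -> None:
        # Explicit "No" answers are captured so the system learns where the line is.
        if not accepted:
            self.declines[trigger.name] = self.declines.get(trigger.name, 0) + 1


if __name__ == "__main__":
    policy = SuggestionPolicy()
    reroute = Trigger("traffic_reroute", "passive", Weirdness.HELPFUL)
    gym = Trigger("gym_directions", "passive", Weirdness.ACCEPTABLE)
    print(policy.should_suggest(reroute))  # True
    print(policy.should_suggest(gym))      # False
```

With these made-up settings, the traffic reroute from the dialog is offered, while unprompted gym directions (inferred from a passive pattern) stay below the line. If the driver declines the reroute twice, it stops being offered. The value is less in the code than in forcing the team to rate each trigger and write the guardrails down.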

When you consider the “Big Brother” fears that AI systems are always watching or listening (even though there is little evidence that voice assistants are actually “always listening”10), the team designing an AI-enabled product must take care to improve trust and, with it, adoption and usage. This is not about what AI can predict but about the team’s responsibility to design the product from an AI-UX perspective.

AI has the potential to offer suggestions based on your habits, but where is the line between what is acceptable and unacceptable? This is a good example of why AI and UX need to be linked, and drawing that line is in the hands of the team making the product.

Aesthetics/emotion

As humans, we prefer to use things that are aesthetically pleasing.11 Imagine two websites that have the same features and same controls (i.e., same utility and same usability), but one has a better rendering than the other. Most people would prefer the one that looks nicer. There is some evidence to show that under these exact conditions, users will judge the better-looking site as being more usable, even though it has exactly the same functions and controls (the so-called aesthetic usability effect). The emotion from using a product has a profound effect on our perception of it.

The aesthetic usability effect describes how users perceive products that are more visually pleasing as more usable.

Clearly, designing to a high standard for the look and feel of a product or application is important. The problem comes when companies think that all they need to do is provide a beautiful product, ignoring the utility and usability dimensions. The glitz may sell, but users will remember and punish that product in the market, especially when it comes time to make buying decisions again.

GAVIN: When I go to the Consumer Electronics Show where tens of thousands of products are showcased, I always find it curious how companies try to capture your attention with the shiny new tech.

BOB: What strikes me as fascinating is the push to anthropomorphize products. Is there really a reason for these AI applications to be rendered with faces? But the reason they are, I think, is the designer wanted to evoke emotions in the user. Interacting with a plastic object that has big eyes and a mouth that speaks might be less intimidating than an orb that speaks.

GAVIN: There is nothing wrong with trying to tap into the emotional factor with aesthetically pleasing, anthropomorphic talking heads.

BOB: But, recognize that there is a UX hierarchy at play. Getting the foundation sound is key. Consider the usability of the application. Does it do as intended? Is there a perceived usefulness? These are the things that have to be done right because how something looks can only take you so far.

GAVIN: The experience has to be good, or you are just dressing up another mediocre product. Know that the aesthetic usability effect only takes a product so far.

The point: Focus on getting the foundation right, and then apply the visual treatment to make the product stand out and set it apart from the rest.

UX and the relationship to branding

This is a good place to inject an issue that we think about a lot: branding and its relationship to UX.

Brand perception and user experience are interconnected. Sometimes “brand” covers a lot of sins in the user experience;12 sometimes a poor user experience damages the brand’s value. We’ve come to realize just how influential a brand is to experience. We’ve seen amazing products with no brand value get discounted and mediocre products from respected brands get lauded. Maybe it does not need to be said, but the most useful and usable products don’t always win. If brand marketers push something that the product does not deliver, the market will react. Trust is eroded when the promises exceed the reality.

As AI is increasingly commercialized in products, branding issues surface. Just saying something has a “powerful AI engine” does not guarantee a sale. It needs more; it needs UX.

BOB: UX intersects with marketing whether we like it or not. And one of the things that we have learned from marketing and branding is that it’s easy to sell the sizzle, but you have to deliver the steak too.

GAVIN: That’s why getting the user experience right is so important. Brand marketing can tell us how easy something will be or how a product will change our lives, but unless we actually experience that, it’s all just talk.

BOB: Yep, UX is a delivery on that brand promise. If the user doesn’t experience what the brand marketer sold them, then that mismatch will undermine the credibility of the brand.

GAVIN: AI has the same problem. Lots of hype, but until it delivers in a meaningful way to change lives, it will be just the sizzle without the steak.

The point: Designing a product is more than just the product itself. The landscape is littered with failures. Build a better product experience, one that allows AI to show what it can do.

Principles of UCD

The key principle of user-centered design is that it focuses the design and development of a product on the user and the user’s needs. There are certainly business and technical aspects to engineering and launching a product, and the UCD approach does not disregard or discount the needs of the business or the technical requirements. However, the UCD process places emphasis squarely on the user, because tradeoffs at the business and technical level for timelines, specifications, and budgets are a given. The UCD focus ensures that the user is not easily cast aside.

So let’s turn to the key components: users, environment, and tasks.

The users

The importance of understanding the user’s goals, capabilities, knowledge, skills, behaviors, attitudes, and so on cannot be overstated. There may also be multiple user groups. Descriptions of each group should be documented (sometimes these descriptions are expressed as “personas”). One trap we see is that designers and developers assume they know the user, or assume they are just like the user. Many designs fail because the description of the user was nonspecific, nonexistent, or just wrong.

Define the intended user beyond simply a market description or segment. Construct a persona describing the user’s knowledge, goals, capabilities, and limitations, and include user scenarios that both define the experience to be built and set “guardrails” of things to avoid.
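A persona can also be kept as a structured record, which makes it harder to leave a section quietly blank. The Python sketch below is one hypothetical way to do that; the field names mirror the elements listed in the paragraph above (knowledge, goals, capabilities, limitations, and scenarios with guardrails), and the example commuter persona is invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Scenario:
    description: str
    guardrails: List[str] = field(default_factory=list)  # things the design must avoid


@dataclass
class Persona:
    name: str
    segment: str  # the market description on its own is not enough
    goals: List[str] = field(default_factory=list)
    knowledge: List[str] = field(default_factory=list)
    capabilities: List[str] = field(default_factory=list)
    limitations: List[str] = field(default_factory=list)
    scenarios: List[Scenario] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """Name every persona section that is still empty."""
        sections = {
            "goals": self.goals,
            "knowledge": self.knowledge,
            "capabilities": self.capabilities,
            "limitations": self.limitations,
            "scenarios": self.scenarios,
        }
        return [name for name, value in sections.items() if not value]

    def all_guardrails(self) -> List[str]:
        """Collect the things to avoid across all scenarios."""
        return [g for s in self.scenarios for g in s.guardrails]


if __name__ == "__main__":
    thin = Persona(name="Commuter", segment="Suburban drivers")
    print(thin.gaps())  # a segment alone leaves every section empty
```

A persona that consists only of a segment reports every section as a gap, which is exactly the “nonspecific or nonexistent” description of the user that the text warns against.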

The environment

Where do users interact with the product, and under what conditions? Environment includes the locations, times, ambient sounds, temperatures, and so on. For instance, certain environments (i.e., contexts) are better suited to some modalities (such as hands-free) than others. An app used on a treadmill while running will have different design characteristics than an app used for banking. What this means is that the user experience extends beyond what the user sees on the screen. The UX is the whole environment: the totality of the context of use. Registering for a new application that uses a mobile phone for two-factor authentication, for instance, assumes the user has access to the phone; the design must account for the possibility that the phone is not present or cannot be used. The whole process is part of the UX. The instruction guide that comes with a new smartphone is part of the UX. These things are not disconnected in the mind of the user, but they are often handled by different groups in the organization.

Develop use cases against the environments of use to identify further user needs. This could also illuminate the points where passive and explicit user data can be used to trigger additional user benefits.

GAVIN: In 2017, France’s official state railway company, SNCF, wanted to build an AI chatbot ticket application.13 The design team captured the conversations between travelers and ticket agents. They trained the AI’s grammar system to model the experience observed.

BOB: But when they tested the chatbot prototype with users, it failed. When customers encountered the blank text field, they began with, “Hi. I would like to buy a ticket.” Users expected to engage the chatbot in a friendly and conversational manner.

GAVIN: So, the chatbot expected, “I would like to buy a ticket from Paris to Lyon today at 10:00 a.m.” That was exactly how customers had been observed speaking at the ticket window.

BOB: Yep, they never encountered a friendly and conversational experience when they observed transactions at the ticket window!

GAVIN: Ah, this is Paris, right? There was probably a line of customers all waiting impatiently. If a customer walked up and said, “Hi. I would like to buy a ticket from Paris to Lyon or maybe Normandy... What times are available?” those in the queue would be visibly angry. So the next person would walk right up and make the request as precise and efficient as possible!

BOB: Exactly! The environment of use was different. A chatbot could be used at one’s leisure without the pressure from a queue of Parisian travelers!

The point The environment of use can change user interactions dramatically. Luckily, testing identified the issue and the team was able to retrain the chatbot.

The tasks

Tasks are what people do to accomplish their goals, and analyzing them involves breaking down the steps that users will take. Depositing a check using a mobile app requires me to log in, navigate to the right place, enter amounts, take pictures, and a lot more. Tasks can be expressed as a task analysis or a journey map (if detailed enough). The application requirements can then be built from a full explication of the tasks.
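A task analysis is naturally hierarchical: a goal breaks into subtasks, which break into concrete steps. As a minimal sketch, the check-deposit example can be represented as a tree and flattened into the ordered steps a user performs. The `Task` class is hypothetical, and the substeps beyond those named in the text (user ID, password, front and back photos, confirmation) are illustrative guesses.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One node in a hierarchical task analysis: a goal broken into subtasks."""
    name: str
    subtasks: list["Task"] = field(default_factory=list)


def leaf_steps(task: Task) -> list[str]:
    """Flatten the hierarchy, depth first, into the concrete steps a user performs."""
    if not task.subtasks:
        return [task.name]
    steps = []
    for sub in task.subtasks:
        steps.extend(leaf_steps(sub))
    return steps


# Illustrative breakdown of the mobile check-deposit task.
deposit = Task("Deposit a check", [
    Task("Log in", [Task("Enter user ID"), Task("Enter password")]),
    Task("Navigate to mobile deposit"),
    Task("Enter amount"),
    Task("Photograph check", [Task("Photograph front"), Task("Photograph back")]),
    Task("Confirm deposit"),
])

print(leaf_steps(deposit))
```

Counting the leaf steps gives a rough first measure of task burden that later designs can try to reduce.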

Use cases are well defined in product development processes. With AI, where the system can presumably learn and identify new opportunities, AI-enabled products now also have AI-originated use cases that the product team did not define. This gives tasks an added dimension that needs to be managed.

While much of this can seem elementary, it amazes us how few organizations take the time to describe users, environments, and tasks. Getting to this detail requires user research14 and must be documented in the specifications for the design and development team. The three key elements of UCD form the foundation of knowledge, but the process is what delivers a successful product or application that allows AI to show what it can do.

Processes of UCD

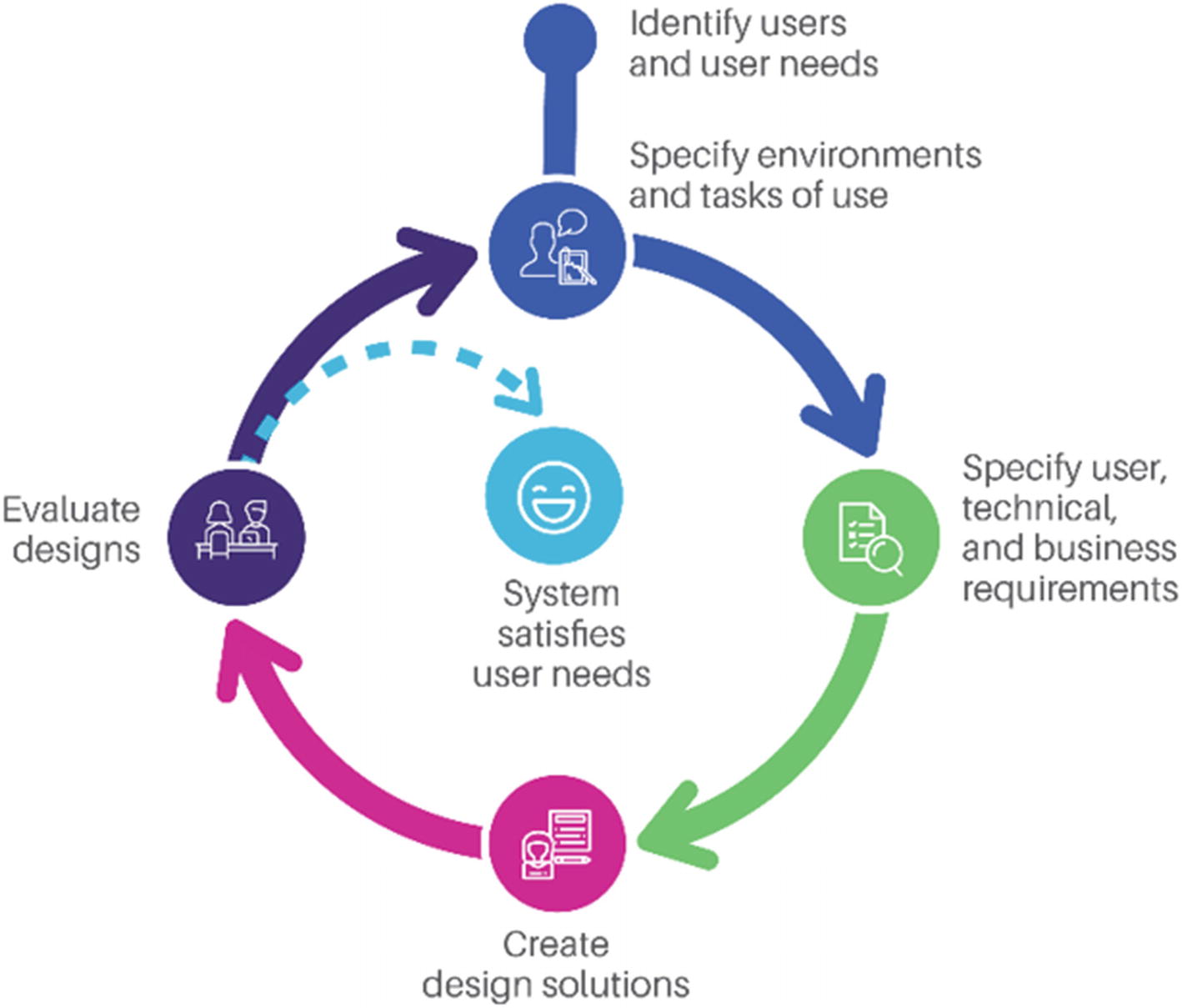

Figure 5-1. Typical UCD processes ensure the users and their needs feed into the process and then cycle through design and evaluation processes.

Building the user interface from the research comes next.16 Design should begin with rough sketches so that the team does not become too wedded to early ideas.17 Be willing to throw out a dozen ideas before settling on one. Draw the wireframes and map out the interactions: do they make sense? Do they get users closer to their goals?

Once design begins, conduct usability tests with paper prototypes. Refine them successively with representative users doing typical tasks. Later, develop digital prototypes to test dynamic interactions, getting the design closer to the final look and feel. Countless books and websites describe methods for UI design, usability testing, and iterative prototyping, so we’re not going to cover them here. Suffice it to say, usability testing is the single most important component of perfecting the user interface.

As Figure 5-1 illustrates, this is an iterative process, a successive approximation to a system that meets the needs of the user. Once the design is evaluated, based on user feedback, one might adjust the requirements, decide on different ways to break down the tasks, or change the interface controls. A lot can change.

One key point is that we advocate applying graphical and visual treatments only at the end. Graphical treatment, which is what most people think of as design, comes only after we have nailed the interaction models in the prototype designs. Color, shape, size, and other visual attributes all support the underlying design. In construction, you can’t paint the house before the walls go up. In application development, you should not create pretty diagrams first. Design begins in the field with research, not in Photoshop.

A best practice at the outset of design is to describe tangibly what success looks like as it relates to the user needs. For instance, you might set a goal that 95% of users get through the registration page in 2 minutes without error. These targets on critical tasks provide measurable progress that the development team can aim for. More importantly, meeting these targets during usability testing is what breaks us out of the iterative design loop and moves us on to technical development.
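Checking a usability-test round against a target like the example goal (95% of users through registration in 2 minutes without error) is simple arithmetic. A minimal sketch, assuming each participant's session is recorded as a completion time in seconds and an error count; the `Session` class and the data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    """One participant's attempt at the task in a usability test."""
    seconds: float
    errors: int


def meets_target(sessions, max_seconds=120, target_rate=0.95):
    """Return (success rate, whether the rate meets the target).

    A session counts as a success only if it finishes within the time
    limit AND has zero errors, matching the example goal in the text.
    """
    if not sessions:
        raise ValueError("no sessions to evaluate")
    successes = sum(1 for s in sessions if s.seconds <= max_seconds and s.errors == 0)
    rate = successes / len(sessions)
    return rate, rate >= target_rate


# Hypothetical round: 19 of 20 participants succeed.
sessions = [Session(95, 0)] * 19 + [Session(150, 1)]
rate, ok = meets_target(sessions)
print(f"{rate:.0%} succeeded; target met: {ok}")
```

With small test samples, a single participant can swing the rate by several points, so teams often look at the trend across rounds as well as the latest number.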

The real benefit of the UCD model is that through progressive improvements documented in usability testing, the organization can have much higher confidence in the success of the final deliverable than if there were no, or only casual, feedback from users. Done well, applying the UCD model will make applications useful, usable, learnable, forgiving, and enjoyable.

UCD is agnostic to the content; it can, and should, be applied to AI applications.

A cheat sheet for AI-UX interactions

Table 5-1. Guidelines for AI-UX interactions from Microsoft and the University of Washington

| # | Guideline | Description |
|---|---|---|
| 1 | Make clear what the system can do. | Help the user understand what the AI system is capable of doing. |
| 2 | Make clear how well the system can do what it can do. | Help the user understand how often the AI system may make mistakes. |
| 3 | Time services based on context. | Time when to act or interrupt based on the user’s current task and environment. |
| 4 | Show contextually relevant information. | Display information relevant to the user’s current task and environment. |
| 5 | Match relevant social norms. | Ensure the experience is delivered in a way that users would expect, given their social and cultural context. |
| 6 | Mitigate social biases. | Ensure the AI system’s language and behaviors do not reinforce undesirable and unfair stereotypes and biases. |
| 7 | Support efficient invocation. | Make it easy to invoke or request the AI system’s services when needed. |
| 8 | Support efficient dismissal. | Make it easy to dismiss or ignore undesired AI system services. |
| 9 | Support efficient correction. | Make it easy to edit, refine, or recover when the AI system is wrong. |
| 10 | Scope services when in doubt. | Engage in disambiguation or gracefully degrade the AI system’s services when uncertain about a user’s goals. |
| 11 | Make clear why the system did what it did. | Enable the user to access an explanation of why the AI system behaved as it did. |
| 12 | Remember recent interactions. | Maintain short-term memory and allow the user to make efficient references to that memory. |
| 13 | Learn from user behavior. | Personalize the user’s experience by learning from their actions over time. |
| 14 | Update and adapt cautiously. | Limit disruptive changes when updating and adapting the AI system’s behaviors. |
| 15 | Encourage granular feedback. | Enable the user to provide feedback indicating their preferences during regular interaction with the AI system. |
| 16 | Convey the consequences of user actions. | Immediately update or convey how user actions will impact future behaviors of the AI system. |
| 17 | Provide global controls. | Allow the user to globally customize what the AI system monitors and how it behaves. |
| 18 | Notify users about changes. | Inform the user when the AI system adds or updates its capabilities. |

Consider developing and applying a set of guidelines, such as those in Table 5-1, when designing your product’s AI and user interactions. These guidelines cover a wide range of topic areas with UX implications.
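Some of these guidelines translate fairly directly into behavior. Guideline 10, "Scope services when in doubt," can be sketched as confidence-based gating: act when the system is confident, ask the user to disambiguate when several interpretations are plausible, and fall back to general help when it has no good guess. This is a minimal sketch assuming the AI returns (intent, confidence) pairs; the function name and both thresholds are illustrative assumptions, not values from the guidelines.

```python
def choose_action(candidates, act_threshold=0.8, ask_threshold=0.4):
    """Decide how an assistant responds, given (intent, confidence) guesses.

    - Confident: act on the top intent.
    - Unsure between options: ask the user to disambiguate.
    - Very unsure: degrade gracefully (offer general help instead).
    Thresholds are illustrative and would need tuning in usability testing.
    """
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    if not ranked or ranked[0][1] < ask_threshold:
        return ("fallback", None)
    top_intent, top_conf = ranked[0]
    if top_conf >= act_threshold:
        return ("act", top_intent)
    # Offer the plausible options rather than guessing.
    options = [intent for intent, conf in ranked if conf >= ask_threshold]
    return ("disambiguate", options)


print(choose_action([("book ticket", 0.9), ("cancel ticket", 0.05)]))
print(choose_action([("book ticket", 0.5), ("cancel ticket", 0.45)]))
print(choose_action([("book ticket", 0.2)]))
```

Where the thresholds sit is itself a UX decision: set too low, the system acts on wrong guesses; set too high, it pesters users with clarifying questions.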

A UX prescription for AI

We have looked at what some of the problems with AI have been in the past. We have also investigated why similar problems might manifest again in the future and why UX offers an effective solution to these problems. Now we will look at the “how”: how can we use UX to avoid some of the pitfalls of AI?

The answer lies in advancing a framework for incorporating UX sensibilities into AI.

We are going to illustrate the goal by example. Let’s say we want to build a smart chatbot into a desktop application, say, an electronic health record (EHR) used by doctors, to offer in-context help or clinical support. The first step is to understand whether this is a need that users really have. If so, what do we know about those users?

Understanding users, environments, and tasks

First, we’d want to understand our users, so we’d conduct user interviews to learn how they use their EHR today. This will uncover the users’ mental model of how they interact with the EHR. The interviews will identify workflows that set the stage for how AI could integrate seamlessly into the daily tasks users already perform. Moreover, the research will uncover existing difficulties and inefficiencies in the EHR, which can be opportunities where AI can be beneficial.

- What are the things you did in your job earlier this week?
- Ideally, shadow and observe actual activities; watch the tasks users do and capture the workflow, with emphasis on the different sources of information and manual effort.
- Watch how the user navigates and interacts with systems.
- Pay attention to the types of help used.
- Who are the various users? Do they all need/want help for the same things? In the same ways?

To avoid being too influenced by a small set of users, in a case like this, we might use the qualitative interviews and observations to develop a survey to get more information on how users would be best supported by an EHR chatbot. The survey would collect data about the characteristics of the user, where help is needed in the EHR, under what circumstances help might be welcomed, and so on. Often we will take the quantitative data about users and identify groups with similar characteristics using multivariate statistical methods. These groups form the basis of personas, and we will carry out further, more detailed, interviews with people who fall into those groups to flesh out the personas. Our emphasis through all this is to get at the knowledge, skills, expertise, and behaviors of the users to know how best to develop the application to serve them.
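The text does not name a specific multivariate method for grouping survey respondents. k-means clustering is one common choice, used here purely as an illustration: it assigns each respondent to the nearest group center and then moves each center to the mean of its members, repeating until the groups settle. A minimal pure-Python sketch; the two survey features (hours in the EHR per day, help requests per week) and the data are hypothetical.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group respondents (numeric feature tuples) into k clusters with plain k-means."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct respondents
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members;
        # keep the old centroid if a cluster ends up empty.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical respondents: (hours in the EHR per day, help requests per week).
respondents = [(1, 2), (1.5, 1), (2, 2), (8, 9), (9, 8), (8.5, 9.5)]
labels, centers = kmeans(respondents, k=2)
print(labels)
```

In practice, survey features are usually standardized first so that no single scale dominates the distances, and the resulting groups are starting points for persona interviews, not personas in themselves.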

Note that after doing these interviews, we may discover that the proposed chatbot is not a good solution and that users would be better aided in other ways.

Next, we move on to understanding the contexts of use: the environments. EHRs are used in exam rooms, in hospitals, at reception desks, in homes, and in many more places. Knowing what users do in each of these settings is important for determining the kind of context-sensitive help the chatbot should offer. If the chatbot offers the same kind of help to all users in all contexts, it is unlikely to be very useful. Information about the environments can often be gathered in the same interviews as those for the users. Better still would be to include some questions in the preceding questionnaire to get a feeling for the environments, and then to go and observe the users in the actual use environments. Seeing how users use the application and all the supporting materials and procedures in context is essential to documenting requirements. This means going to the clinic and watching as many representative users as possible use (and get frustrated with) the application.

Last, while gathering information about the users and their environments, data about the specific tasks people do can be gathered. If we know what each group’s goals are in using the EHR and learn about the environments, then we can begin to identify the various steps in the process to support them.

Underlying all this is the AI model, which, presumably trained on thousands of cases, knows the interaction sequences (e.g., screens viewed), user inputs, error messages, and so on that occur. The AI model can infer where users might need the support of an intelligent bot. While these inferences are probably directionally correct, they can only be refined with the knowledge gathered from users. Perfecting these models may require tuning through crowdsourcing of the inputs and outputs. (More on this in the next section.)
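The text leaves the model unspecified. As a deliberately crude stand-in for "inferring where users might need support," the sketch below scans a hypothetical interaction log of (session, screen, event) records and flags screens where errors recur across several distinct sessions. The log format, the function name, and the threshold are all assumptions; a learned model would use far richer signals, and either way its guesses still need checking against user research.

```python
from collections import Counter


def struggle_hotspots(events, min_sessions=2):
    """Return screens where at least `min_sessions` distinct sessions hit an error.

    `events` is an iterable of (session_id, screen, event_type) tuples, a
    hypothetical log format. Screens are ordered from most to least affected.
    """
    sessions_with_error = {}
    for session, screen, kind in events:
        if kind == "error":
            # Count each session once per screen, however many errors it hit.
            sessions_with_error.setdefault(screen, set()).add(session)
    counts = Counter({screen: len(ids) for screen, ids in sessions_with_error.items()})
    return [screen for screen, n in counts.most_common() if n >= min_sessions]


events = [
    (1, "orders", "view"), (1, "orders", "error"), (1, "orders", "error"),
    (2, "orders", "error"), (2, "notes", "error"),
    (3, "orders", "error"), (3, "notes", "view"),
]
print(struggle_hotspots(events))
```

Counting sessions rather than raw errors keeps one unlucky user from dominating the result.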

Applying the UCD process

Armed with this background on users, environments, and tasks, the team can begin initial design. This design should start with the interaction model, controls, and objects, but at a rough level. Recall that the purpose is to help users by monitoring their behavior and offering advice or support when the chatbot detects trouble. Design specifically when the chatbot should trigger and interrupt the user with important information or when decisions need to be made. Set the guardrails defining when and how the chatbot interacts.

This is very tricky and potentially dangerous: distracting someone during a critical task in an application like an EHR might cause the user to forget to enter critical information.
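One way to express such a guardrail is an explicit interruption policy: urgent messages (for example, a safety alert) always get through, while non-urgent advice is held while the user is in a task the team has marked as critical and released once the user leaves it. This is a minimal sketch; the `InterruptionPolicy` class, the task names, and the urgent/non-urgent split are hypothetical, and which tasks count as critical is exactly what the user research should decide.

```python
from dataclasses import dataclass, field


@dataclass
class InterruptionPolicy:
    """Hypothetical guardrail deciding whether the chatbot may speak up now."""
    critical_tasks: set[str]
    queued: list[str] = field(default_factory=list)

    def offer(self, message: str, urgent: bool, current_task: str) -> list[str]:
        """Return the messages to show immediately (possibly none)."""
        if urgent or current_task not in self.critical_tasks:
            return [message]
        # Non-urgent advice waits until the critical task is finished.
        self.queued.append(message)
        return []

    def task_changed(self, new_task: str) -> list[str]:
        """Release held messages once the user is out of every critical task."""
        if new_task in self.critical_tasks:
            return []
        released, self.queued = self.queued, []
        return released


policy = InterruptionPolicy(critical_tasks={"medication order entry"})
print(policy.offer("Tip: a faster note template exists", urgent=False,
                   current_task="medication order entry"))
print(policy.offer("Possible allergy conflict", urgent=True,
                   current_task="medication order entry"))
print(policy.task_changed("chart review"))
```

Making the policy explicit also makes it testable: in usability sessions, the team can check whether held tips still feel relevant by the time they are released.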

The design team will go through a series of concepts, perhaps on paper or crude digital prototypes (e.g., using PowerPoint). It is here that the iterative design, test, revise, test cycle takes over. The design is put through usability testing with representative users doing representative tasks. Giving users the chance to experience how the chatbot interface will respond to actions will be essential in successively refining its behavior.

The important thing to note is that, up to this point, we have assumed that the algorithms are sound and that the underlying AI is appropriate. During user testing, not only the experience of interacting with the chatbot but also the AI-based content should be tested. The point is that once the design cycle begins, all aspects of the user experience should be in play. User testing begins cheap and fast and often ends with a larger-scale, more formal user test.

GAVIN: Microsoft unleashed Clippy on the world in the mid-1990s in an attempt to make Office applications friendlier. It had a rules-based engine that would detect if you needed help and intervene.

BOB: Yeah, but it would totally stop my work and make me respond to it. It quickly became hated by most of us. It’s even a punchline now. People have a nostalgia for it!

GAVIN: What’s interesting though is that despite the annoying experience, it had another side. Microsoft ignored their own research—many women thought that Clippy was male, too male. And not only that, he was a creepy male.19 The thing is, men did not feel this emotion—but women did.

BOB: Clippy was an anthropomorphized agent gone wrong. It could never deliver the kind of support or build the kind of rapport with the user that was intended. He resurfaced over the years, most recently in Microsoft Teams, but that was crushed in very short order!20

GAVIN: Yeah, Clippy’s become an exemplar of how not to annoy your users. So many poor decisions!

The point User research can identify potential trouble and allow for course corrections to solve issues.

In a development like this, the measures of success are perhaps a little harder to come by. Clearly, user measures of utility and usability are high on the list. We see this today in many applications—users get asked after many interactions how many stars they would give the quality of service. Other objective measures can also be taken. If this is supposed to be a user assistant, we could measure whether the number of successful actions after the chatbot interaction has increased or not. It is important to have these measures established so that we know we have succeeded in the design and can proceed with detailed programming.
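To judge whether the share of successful actions really increased after chatbot interactions, rather than drifting by chance, one standard option (our choice; the text does not name a method) is a two-proportion z-test comparing success rates with and without the chatbot. A minimal sketch; the counts are hypothetical, and the normal approximation assumes reasonably large samples.

```python
import math


def two_proportion_z(success_a, total_a, success_b, total_b):
    """Two-proportion z statistic comparing the success rates of groups A and B.

    Positive z means group B's rate is higher. |z| > 1.96 roughly
    corresponds to p < 0.05 (two-sided) under the normal approximation.
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("both groups all succeeded or all failed; z is undefined")
    return (p_b - p_a) / se


# Hypothetical: 140/200 successful actions without the chatbot, 170/200 after it.
z = two_proportion_z(140, 200, 170, 200)
print(round(z, 2))
```

A statistically reliable increase is necessary but not sufficient; the team still decides in advance how large an improvement counts as success.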

What else can UX offer? Better datasets

We’ve spent a lot of time talking about the benefits of UX and the UCD process to AI. But UX may benefit AI in other ways too.

User experience draws on various disciplines within psychology. One important thing that psychology, and therefore user experience, can bring to AI is a set of capabilities for collecting better data.

Giving AI better datasets

As we discussed in Chapter 4, one of the biggest drawbacks for AI is getting the right data. Many UX researchers are trained in research methods to collect and measure human performance data. UX researchers can assist AI researchers in the collection, interpretation, and use of human-behavior datasets for incorporation into AI algorithms.

What is the process by which this is done?

Identify the objective

The first task is to understand what the AI researchers really need. What is the objective? What constitutes a good sample case? How much variability across cases is acceptable? What are the core cases, and what are the edge cases? So, if we wanted to get 10,000 pictures of people smiling, is there an objective definition of a smile? Does a wry smile work? With teeth, without teeth? What age ranges of subjects? Gender? Ethnicity? Facial hair or clean shaven? Different hairstyles? And so on. The AI researchers need to clearly define both the in and out cases and have all parties agree on them.
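Writing the agreed in and out cases down as an explicit specification makes them checkable during collection. A minimal sketch for the smiling-pictures example: the spec values (which smile types count, the age range) and the field names are hypothetical decisions the team would have to make, and anything the spec does not cover is routed to human review rather than guessed.

```python
# Hypothetical inclusion spec for a "people smiling" image set.
SPEC = {
    "smile_types": {"open", "closed"},  # with or without teeth both count
    "excluded_smile_types": {"wry"},    # an agreed out-of-scope case
    "age_range": (18, 80),              # inclusive
}


def classify(sample: dict, spec: dict = SPEC) -> str:
    """Return 'in', 'out', or 'review' for one candidate sample."""
    smile = sample.get("smile_type")
    age = sample.get("age")
    if smile is None or age is None:
        return "review"  # missing metadata: route to a person rather than guess
    if smile in spec["excluded_smile_types"]:
        return "out"
    low, high = spec["age_range"]
    if not low <= age <= high:
        return "out"
    if smile in spec["smile_types"]:
        return "in"
    return "review"  # a smile type nobody has ruled on yet: an edge case to discuss


print(classify({"smile_type": "open", "age": 30}))
print(classify({"smile_type": "smirk", "age": 30}))
```

The "review" pile is the useful by-product: it surfaces the edge cases the team has not yet agreed on.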

Collect data

Next, do the necessary planning for data collection. One of the strengths of UX researchers is the ability to construct and execute large-scale research programs that involve human subjects. Collecting masses of behavioral data face to face, efficiently and effectively, is not a core expertise of many AI researchers. In contrast, much of the practice of user research is about setting the conditions necessary to get unbiased data. Being able to recruit a sample, obtain facilities, get informed consent, instruct participants, and collect, store, and transmit data is essential. Furthermore, UX researchers can collect all the necessary metadata and attach it to the examples for additional support. UX researchers are practiced in sorting, collecting, and categorizing data, as evidenced by a skill set that includes qualitative coding and the many tools that support these types of analysis.

Do further tagging

After initial data collection, it may be necessary to organize and execute a crowdsourcing program such as Amazon’s Mechanical Turk21 to further augment the data collected so far. For instance, if we were to collect voice samples of how someone orders a decaf, skim, extra-hot, triple-shot latte in a noisy coffee shop, there could be several properties that would be of interest for each sample. In such cases, we might engage multiple researchers, or coders, to review each sample, transcribe the samples, and judge their clarity and completeness. These coders would then have to resolve any observed differences to ensure the cleanliness of the coding.

BOB: We recently did a study where we had to get representative users to enter voice commands into a mobile device in a way they would normally speak to a colleague.

GAVIN: Yes, we sampled more than a thousand people through an on-line survey, gave them scenarios, and they had to respond with the commands. They spoke the commands into their mobile phone. We were dealing with all sorts of accents, so the voice recognition was a challenge.

BOB: Yeah, and there was lots of ambient noise. The speech then had to be converted to text. There was a lot of really good content and voice capture, but there was also gibberish. But, that’s realistic.

GAVIN: Ultimately, there were dozens of coders who listened to the recordings and checked the speech to text transcriptions in order to tune the AI algorithms. It was hard work, but necessary to make sure that the AI engines get it right in the future.

The point AI-enabled apps often need a lot of humans to curate the input.

Well-constructed and executed research programs can help protect against the limitations that may be present in existing databases used to train AI algorithms. Custom datasets help avoid inconclusive, useless, or incorrect data whose limitations might not be immediately obvious. UX researchers are well positioned to help ML scientists collect clean datasets for the training and testing of AI algorithms.

Similarly, one increasing objection to AI is that it has been trained on biased data; we touched on this earlier. By controlling the sample from which the data are collected, researchers can reduce that bias. We once did a large-scale study (n=5000) for a company that wanted to collect videos of people doing day-to-day activities. One of the key criteria for them was to get a representative sample of the population (by age, gender, ethnicity, and so on) so that their facial recognition algorithms could be trained on better data and produce more accurate output.
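Controlling the sample usually means setting recruitment quotas and tracking progress against them during collection. A minimal sketch of quota tracking for one dimension (age band), assuming a planned total of 5,000 like the study above; the target shares and current counts are hypothetical, not census figures, and a real study would cross several dimensions (age by gender by ethnicity, for example).

```python
def quota_gaps(recruited, targets, total):
    """How many more participants each group still needs (0 once met).

    recruited: counts already recruited per group, e.g. {"18-34": 900}
    targets:   desired share of the final sample per group (sums to 1.0)
    total:     planned final sample size
    """
    return {group: max(0, round(share * total) - recruited.get(group, 0))
            for group, share in targets.items()}


# Hypothetical target shares by age band, for a planned sample of 5,000.
targets = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
recruited = {"18-34": 1600, "35-54": 1200, "55+": 400}
print(quota_gaps(recruited, targets, total=5000))
```

Here the youngest band is already over quota while the oldest band lags far behind, which tells recruiters where to focus next.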

Where does this leave us?

Find the why

We opened the book with a “Hobbit’s tale” suggesting AI and UX have been on quite a journey, one filled with hype and periods of winter. The potential of AI is immense. In the not too distant future, we will witness AI integrating into almost every industry to bring forth better health, liberation from mundane or dangerous jobs, and advances beyond what can be imagined. However, we believe that success will come more readily, and failures may be avoided, if more attention is placed on the user experience, specifically on improving the AI-UX principles of context, interaction, and trust. We believe AI-enabled products should not be focused solely on technology. As a solution, we recommend using a UCD process: one that places the human, who is the beneficiary of AI’s progress, at the core. We would like to end with one additional suggestion: build AI-enabled products with a purpose. Find the why that gives AI its purpose and drives the design to greater success.

GAVIN: You know, I started my research career at UCSF studying brainwaves. I placed electrodes on people’s heads and recorded electrical impulses from the brain when the participant heard a “boop” or a “beep” or when they lifted their index finger.

BOB: You were a lab technician doing basic research!

GAVIN: Exactly. The year was 1991, and during one research session, the participant finished, and as he left, he shook my hand and said, “I really hoped this research goes to find a cure.” I smiled and said, “I hope so as well.” When the participant left, I was devastated.

The participant was HIV+, and at the time, antiretroviral treatments were still experimental. I thought that in 10 years, this 21-year-old, bright-eyed, and energetic person might have full-blown AIDS and would probably pass away before a cure was found.

BOB: You were doing basic research. Comparing his brainwave activity to see if it resembled an Alzheimer’s patient or alcoholic’s when a “beep” is heard…

GAVIN: Or when an index finger is raised…

BOB: We still haven’t found a cure for any of those three diseases and that was almost 30 years ago.

GAVIN: You could say that was the day I lost my purpose. I moved into UX research where I hoped research would have more direct impact for me.

Fast forward 10 years. I was with a patient doing a study on a prototype for an auto injection device. I remember the patient needed to pause and stand up because she had such terribly fused joints from her disease. At the end of the session, she did not shake my hand like the HIV patient. She gave me a hug.

BOB: I have never received a hug in a research session before!

GAVIN: Me either! I was surprised. She looked at me and said, “You don’t get it, do you?” I shook my head. She continued, “Look at my hands and see how hard it is for me to hold things. Currently, I have to reconstitute this medicine. The process is so complicated that I have to do it all on my kitchen table. I have to syringe one drug and mix with another precise amount. Wait and then inject. Again, my hands barely work, but this therapy stops the progression and it is a miracle drug for me.”

I said, “The purpose is to understand how it works for you. We want to understand how we can make the experience match your expectations so you can have a safe and effective dose.”

She replied, “So I can use this new device for the first time correctly? I can walk into the bathroom and be done in a minute?”

I said, “Exactly.”

She shook her head and said, “You still don’t get it. You see, my current process is so complex that I do it on the kitchen table and it takes forever. Well, my 5-year-old daughter sits and watches her mother struggle to take her miracle medicine. Now, with this device, I can take my medicine discreetly in a bathroom.”

“Well, you are not just making this safe and effective for people like me,” she said. “You are changing the way a daughter looks at her mother! My daughter does not have to watch her mom struggle with her medicine and shoot up to live.”

BOB: Wow. When a product is done well and is appreciated, the reasons can be broader than imagined.

The device’s purpose for this patient is powerful.

GAVIN: I found my purpose. Companies also need to find purpose within a product and let it help drive better design.

The point Have a strong understanding of how the product’s use will affect the user experience. Who will be affected? Identify situations of use that can amplify the user benefit. A product’s purpose will be found there. This is the “why” that will drive the product team to design a compelling experience rather than falling prey to the hype surrounding the technology.

If AI doesn’t work for people, it doesn’t work.