Chapter 12. AI Readiness and Maturity

As part of creating an AI strategy, and in order to successfully pursue and generate real value from AI initiatives, companies must have a certain degree of AI readiness and maturity. I have created an AI Readiness Model that breaks AI readiness into four categories, and I have also created three models related to maturity.

In this chapter, we go over AI readiness and AI maturity, and their respective models in detail. Recall that the AIPB Assessment Component defines three categories: readiness, maturity, and key considerations. This chapter focuses on the first two categories; Chapter 13 looks at key considerations.

You should assess all three categories during the initial assess phase of the AIPB Methodology Component, which should result in an assessment strategy. This strategy should identify AI readiness and maturity gaps, along with a plan to fill them, and should also address the key considerations to take into account and plan for when pursuing AI initiatives.

Let’s begin our discussion with the concept of AI readiness.

AI Readiness

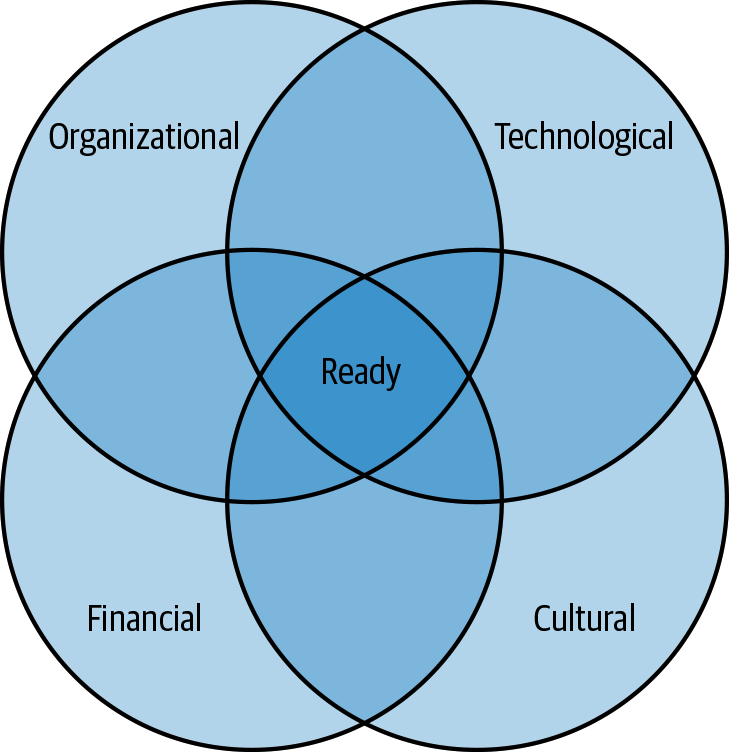

Figure 12-1 shows the AI Readiness Model that I created, a simplified version of which was first introduced in Chapter 3.

Figure 12-1. The AI Readiness Model

These four categories—organizational, technological, financial, and cultural—when combined, are the primary factors contributing to an organization’s readiness and ability to execute a successful AI initiative, and therefore to capitalize on the opportunity and benefit from the initiative as intended. AI readiness is complicated, as you’ll see, and there are many things to consider.

Although being “ready” in every way presented in this chapter is the ideal scenario, companies certainly should not wait to pursue AI initiatives until they have achieved it. In reality, companies are often never able to accomplish everything discussed in the AI readiness parts of this chapter, but the more gaps you can identify and fill, the better.

Let’s discuss each readiness category in turn.

Organizational

I break the organizational category of AI readiness into four subcategories: organizational structure, leadership, and talent; vision and strategy; adoption and alignment; and sponsorship and support.

Organizational structure, leadership, and talent

Organizational structure, leadership, and talent are important components of AI readiness. Specifically, the organization should be structured so that it has strong leadership around data and advanced analytics, ideally at the highest executive level. This person often has the title chief AI officer (CAIO), chief analytics officer (CAO), chief data officer (CDO), or something similar. In my opinion, this data and advanced analytics leadership is so critical that I consider it a hard prerequisite for developing and executing any AI vision and strategy; in fact, it is the only hard prerequisite in this chapter. I will note, however, that this is often easier said than done. Few leaders or managers are available to fill such roles, and certain projections indicate that the shortage of such people will grow significantly over time.

Again, in my opinion, AI, machine learning, and data science should sit organizationally only under an executive leadership role with the requisite expertise, such as those mentioned above (the role doesn’t need a C-level title). I personally don’t recommend organizing AI, machine learning, and data science under software engineering or other product development disciplines. Leaders and managers in these disciplines will likely not have the requisite expertise, and therefore will not be able to make critical decisions involving key AI considerations and trade-offs, or the other matters discussed so far.

In general, most people who are not data scientists do not have the background and experience needed to understand everything involved in data science and advanced analytics. Just as virtually all technology companies have a CTO, a company that is serious about taking advantage of its data with techniques including AI and machine learning should have an equivalent analytics leader. This role is as important as any other C-level functional leader, if not more so for companies that are serious about becoming more data-driven or data-informed.

Further, and most important, data and advanced analytics leadership is critical for bringing the right AI expertise and strategic direction to the table at the highest level of the company. Developing an AI vision and strategy also requires ensuring that everyone has a shared vision and understanding of both, that expectations are properly set and managed (which can be very challenging given the scientific nature of the work, as discussed), that initiative progress is communicated effectively, and that the right opportunities are pursued. This includes determining whether AI is the right tool for the job. AI could be a sledgehammer when you only need a pushpin. An analytics leader with the appropriate expertise can help determine whether AI will solve a given problem or provide a certain UX in a unique and warranted way.

Without this organization, the burden and responsibilities fall on individual contributors, regardless of whether they have the appropriate leadership or business skills. In enterprises that lack this organization, the following typically happens. The CEO, CTO, or someone else asks an AI or machine learning practitioner, before an AI project begins and before the practitioner has accessed and explored the available data, “What exactly will I get, how much will it cost, how long will it take, and what exact data is needed to ensure mitigated risks, timely delivery, and ultimate success?” (Sound familiar from the last chapter?)

The practitioner responds that they’ll need to explore and analyze the data, and then experiment with many different approaches to try to achieve the best performance possible. The executive’s response is something like, “Great, so what exactly am I going to get, how much will it cost, how long will it take, and what exact data is needed to ensure mitigated risks, timely delivery, and ultimate success?” Data scientists are already unicorn-ish enough as it is. Let’s not ask them to also have business leadership and strategy skills that they might not have. Ensure that you have the appropriate data and advanced analytics leadership in place, and organize your business accordingly.

This is a perfect segue into the characteristics and responsibilities of an effective data and advanced analytics leader. Most data scientists and machine learning engineers need direction. It is highly unlikely that you’ll get the outcome you want simply by handing data to these folks and telling them to have at it. In the worst case, hiring data scientists without also having an expert in data science and advanced analytics to lead and manage them may doom the effort from the start.

This person should have strong and demonstrable skills in effective communication, data science, advanced analytics, and stakeholder management. They should also have the critical soft skills that we discussed earlier in the book. AI is a very dynamic field that is changing and becoming more advanced every day. It is crucial to have somebody who is able to keep pace with trends and state-of-the-art techniques in the field, and who also has the ability to determine how to utilize this information to create the best AI vision and strategies possible while keeping initiatives on track for ongoing success.

Given the complexity and scientific nature of AI and machine learning, this leader must be able to properly communicate and educate on complex topics that are scientific in nature, in an easy-to-understand way, and in the context of business and what matters most to executives. This person must understand and avoid the “curse of knowledge,” a form of bias that causes people to assume that lesser-informed people have the background to understand something as well as they do. The goal is to make no assumptions and present complex information with childlike clarity.

This person must also be excellent at providing insight into AI initiatives and project status and, most important, at properly managing expectations. Expectation management is a critical skill, particularly for the scientific initiatives associated with data science and advanced analytics.

In addition to the responsibilities already covered, this person should also be responsible for data and analytics P&L (or just analytics if there’s a separate data-specific organizational structure), performing strategic assessments (such as those defined by AIPB) and developing associated strategies, talent hiring and development, tooling and best practices, and more. Lastly, talent is also a critical component of AI readiness, which we’ll discuss again in Chapter 13.

Vision and strategy

Creating an AI vision and strategy is a core part of AIPB and has been an ongoing theme in this book. We have also discussed the concept of generating a shared vision and understanding and its importance in being able to produce successful AI initiatives.

Here, we examine vision and strategy development through the lens of AI readiness, as some amount of readiness is needed both to create them and to execute them successfully. With the appropriate analytics leadership in place, a company should be able to develop a benefit-driven AI vision and strategy. At a high level, and as previously discussed, the vision covers the why, how, and what for a particular AI initiative to benefit both people and business. The strategy, on the other hand, is the plan for executing the vision to make it a reality.

Both of these involve creating appropriate business cases and individual use cases for key strategic initiatives. The business case is the business-level why, which should outline the vision, goals, and potential ROI for a given initiative. Business cases can also include identification and development of potential new business models, products, and services, and/or capturing greater market share and expanding into new markets.

After you’ve established one or more business cases, the next step is to identify and specify individual use cases for specific AI solutions. This means identifying the users who will benefit from the solution (or specific features), defining why (benefit) and how (user flow) the user will interact with the solution to accomplish a certain task, and determining what the outcome will be for all potential user interactions in a given scenario.

In the field of product management, and assuming a product vision has been created, a technology-based solution strategy is manifested as a prioritized product roadmap and backlog of ideas. The solution strategy should take into account any assessments and corresponding strategies, as well. For cases in which an AI solution is either standalone or will be deployed as part of another application (e.g., to automate a process, augment human intelligence, or integrate with an existing mobile or web-based application), the strategy will involve generating a product roadmap, with the initial focus usually on first building an MVP or comparable entity (e.g., prototype, proof of concept [PoC], or pilot) to test the riskiest assumptions, validate the product–market fit, and verify usage as intended.

For needs that are time sensitive or require timed coordination, and for which an AI solution must be developed, tested, and deployed to a production environment, you must keep in mind the scientific, empirical, and nondeterministic nature of data science and advanced analytics tasks, as previously discussed. This is important because it can affect the ability to create estimations and deliverable timelines. It also results in a certain amount of budget uncertainty because it is impossible to know in advance the exact resources needed (e.g., cloud compute for model training and optimization) and time required to achieve the desired result. This all requires a shared vision and understanding, along with proper expectation management among key stakeholders. Again, increased maturity, as we’ll discuss soon, will help decrease general uncertainty.

Adoption and alignment

Having developed an AI vision and strategy and thus having established the goals and plan to successfully execute key AI initiatives, the next step is to gain company-wide adoption and alignment around both, which means it must become a “shared” vision and strategy.

New initiatives, especially those involving data from across a company, can require buy-in, participation, and resources from senior executives and multiple LoB owners. Business owners might include the CEO, head of marketing, head of product, and head of sales, for example. Adoption means that all relevant stakeholders commit to the initiative and take ownership of a certain aspect of it and its overall success.

Adoption typically results from stakeholders understanding the vision, value (benefits), and potential ROI. Stakeholders who understand these things are more likely to be interested in adopting initiatives.

Adoption is not enough, however. Alignment is required, as well. Alignment means that all stakeholders not only share the same vision, understanding, and strategy, but are also aligned on what is needed to execute on the strategy, what to expect throughout the process, and ultimately on making the solution and its intended benefits a reality. This includes alignment around who is responsible for what, potential phases and milestones, key deliverables, and timing of involvement.

Sponsorship and support

Given the proper organizational structure and leadership and a shared vision and strategy that has company-wide adoption and alignment, the next step is to establish initiative sponsorship and support.

Sponsorship means having key stakeholders commit to providing the resources (e.g., money, data, people) necessary to ensure initiative success, when they are needed. Support means providing ongoing help in defining requirements, getting tasks done (e.g., providing access to data), answering questions, collaborating as needed, properly setting expectations, and communicating progress.

Technological

I break the technological category of AI readiness into three subcategories: infrastructure and technologies, support and maintain, and data readiness and quality (the “right” data).

Infrastructure and technologies

The infrastructure and technologies component of AI readiness refers to having the appropriate technology-based resources and processes in place, which includes cloud infrastructures and services (e.g., AWS and GCP), DevOps and site reliability engineering (infrastructure as code; tools for build, integration, and deployment; scalability), oversight of regulations and compliance (e.g., Europe’s General Data Protection Regulation [GDPR]), and effective software development processes and methodologies (e.g., Agile, Kanban, CI/CD). This category also includes the people required to set up infrastructure components and who can design solutions built on top of them. Note that some new infrastructure development might be required for new AI initiatives.

Infrastructure can also include data warehouse and/or data lake setup and maintenance, including all data acquisition; ingestion; integration; extract, transform, and load (ETL); extract, load, and transform (ELT); and data pipeline–related processes.

Technologies in the context of AI readiness refer to having core competence and expertise in using the requisite technologies for any given AI project. In the context of AI applications, the technologies category includes common programming languages (e.g., Python, R, Java), software tools, packages, and libraries (e.g., Jupyter Notebooks, TensorFlow, scikit-learn, Spark), version-control systems (e.g., Git), testing tools and methods (e.g., A/B testing), and databases and data platforms (e.g., PostgreSQL, Hadoop, MongoDB).

This list is nonexhaustive and not small, and that’s part of the reason why building software solutions is difficult.

Support and maintain

After any AI solution (and software solutions in general) is developed, it needs to be supported and maintained. These solutions should be regularly monitored to ensure proper functioning and health.

Supporting software involves creating processes to capture bug reports with an appropriate severity level (e.g., critical, high, medium, low), to solicit and capture customer feedback and new feature requests, and to handle customer support requests. When any of these items is captured, a system should be in place to track progress if action is to be taken, and to provide visibility so that progress and status updates can be communicated to whoever submitted the request.

Also, there are usually multiple levels, or tiers, of support that represent increasing degrees of escalation as needed to resolve a certain issue. Finally, support might be governed by a service-level agreement (SLA), and therefore must adhere to specific response and resolution times or face certain penalties.
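To make the severity and SLA ideas concrete, here is a minimal Python sketch of tracking whether a support ticket met its first-response target. The severity levels come from the text above; the `SupportTicket` class and the response-time targets in `SLA_RESPONSE_HOURS` are hypothetical placeholders for illustration, not recommended values, and real SLAs define their own terms (including resolution times and penalties, which this sketch ignores).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical first-response targets (in hours) per severity level.
# Placeholder values for illustration only; real SLAs define their own terms.
SLA_RESPONSE_HOURS = {"critical": 1, "high": 4, "medium": 24, "low": 72}


@dataclass
class SupportTicket:
    ticket_id: str
    severity: str  # one of the keys in SLA_RESPONSE_HOURS
    opened_at: datetime
    first_response_at: Optional[datetime] = None

    def response_deadline(self) -> datetime:
        """Latest time a first response can be sent without breaching the SLA."""
        return self.opened_at + timedelta(hours=SLA_RESPONSE_HOURS[self.severity])

    def is_breached(self, now: datetime) -> bool:
        """True if the first response came (or is still pending) after the deadline."""
        responded_at = self.first_response_at or now
        return responded_at > self.response_deadline()


opened = datetime(2024, 1, 1, 9, 0)
late = SupportTicket("T-1", "high", opened, first_response_at=opened + timedelta(hours=5))
pending = SupportTicket("T-2", "critical", opened)
print(late.is_breached(now=opened + timedelta(hours=6)))        # True
print(pending.is_breached(now=opened + timedelta(minutes=30)))  # False
```

A ticket with no response yet is judged against the current time, so an unanswered critical ticket flips to breached as soon as its one-hour window passes; that is the kind of signal that would trigger escalation to the next support tier.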

Maintaining a solution means addressing anything related to support or enhancements, making appropriate changes or improvements, and deploying them to production. In addition, programming languages and software (e.g., libraries, frameworks) are usually updated regularly. Code bases should therefore also be updated regularly to take advantage of the newest versions of these languages, libraries, and frameworks, which usually offer improvements such as bug fixes and performance and security enhancements.

Maintenance also includes reducing technical debt and improving nonfunctional requirements such as scalability, reliability, and maintainability. Software development teams should always be allotted time (usually 20%) to work continuously on this type of maintenance. As with support, systems and processes should be in place to carry out this work effectively on an ongoing basis.

Data readiness and quality (the “right” data)

Data readiness and quality was discussed at length earlier in the book. Having the “right” data is a critical element of the technological category of AI readiness. Recall from Chapter 4 that I use the phrase “data readiness and quality” to collectively refer to the following:

- Adequate data amount
- Adequate data depth
- Well-balanced data
- Highly representative and unbiased data
- Complete data
- Clean data

If you need a refresher, refer to Chapter 4.
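Some of these criteria can be checked automatically before a project starts. The following Python sketch is a hypothetical illustration, not a method from Chapter 4: it checks only amount, balance, and completeness for a list of records, and the function name `data_readiness_report` and all thresholds are assumptions. Depth, representativeness and bias, and cleanliness generally require domain judgment and are not checked here.

```python
from collections import Counter


def data_readiness_report(rows, label_key, min_rows=1000,
                          min_minority_share=0.2, max_missing_share=0.05):
    """Rough checks for three of the data readiness and quality criteria.

    rows: list of dicts, one per record, all assumed to share the same keys.
    All thresholds are hypothetical defaults, not recommendations.
    """
    report = {}

    # Adequate data amount: enough records overall.
    report["adequate_amount"] = len(rows) >= min_rows

    # Well-balanced data: share of the least common label value.
    # A single label value (or none) is treated as not balanced.
    counts = Counter(r[label_key] for r in rows if r.get(label_key) is not None)
    if len(counts) >= 2:
        minority_share = min(counts.values()) / sum(counts.values())
    else:
        minority_share = 0.0
    report["well_balanced"] = minority_share >= min_minority_share

    # Complete data: share of missing (None) values across all fields.
    total_fields = sum(len(r) for r in rows)
    missing = sum(1 for r in rows for v in r.values() if v is None)
    missing_share = missing / total_fields if total_fields else 1.0
    report["complete"] = missing_share <= max_missing_share

    return report


rows = [{"age": 30, "churned": "yes"}] * 15 + [{"age": None, "churned": "no"}] * 35
print(data_readiness_report(rows, "churned", min_rows=50))
# {'adequate_amount': True, 'well_balanced': True, 'complete': False}
```

In the example, the label split is 30/70, which passes the hypothetical balance threshold, but 35% of all field values are missing, so the data fails the completeness check.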

Financial

I break the financial category of AI readiness into three subcategories: budgeting, competing investments, and prioritization.

Budgeting

Budgeting in this context refers to the money allocated for resources such as people and technology, both of which cost money.

AI initiatives therefore cost money as well, and you need to budget for these costs. In the context of AI readiness, this means that money should be earmarked for making a company’s AI vision and strategy a reality. One very common challenge is that a given AI initiative might require budget from multiple business owners, something that companies aren’t necessarily set up to handle easily. Getting buy-in and commitment across business functions to contribute budget to the same initiative is a cross-functional effort that can be very challenging, and it is therefore closely related to the sponsorship and support element of AI readiness. Part of the reason is that LoB incentives differ. Another is that the potential value and ROI might not be easily attributable, in a quantified way, to a specific LoB, which means that the P&L implications for that LoB might be unclear or undesirable and can create barriers to progress. Potential benefits and ROI should be considered at the company-wide level, and this should apply to any cross-functional form of innovation and transformation.

Suppose that marketing is championing a new AI initiative and is therefore willing to allocate budget to it. The initiative might also require a certain amount of infrastructure and people’s time from the IT department. Different business functions are usually incentivized differently, and as a result they have differing priorities and initiatives. This can create alignment, priority, and budgeting challenges. This is one of the reasons having a strong leader in data and analytics is critical. This person should be tasked with generating the shared vision and understanding that we’ve talked about, and therefore help people across business functions see the value to their departments and, more important, to the company as a whole, and ultimately get the necessary buy-in and participation (e.g., money, time, and resources).

Competing investments and prioritization

Depending on the size of a company and its internal departments, there are usually many different potential initiatives to prioritize within each of those departments, in addition to the high-priority company-wide initiatives that need to be addressed. This all results in competition for resources; as a result, financial decisions and prioritization are required around competing investments.

Nothing seems to secure funding for a given initiative better than a compelling argument about how much money the initiative will generate. In other words, presenting convincing ROI estimates is very powerful when possible, and that includes crafting a very effective story about how a given initiative can generate the proposed ROI. Storytelling is often a key skill here, and, again, having a data and advanced analytics leader is critical.
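As a simple illustration of comparing competing investments, the Python sketch below ranks candidate initiatives by estimated ROI, computed here as net gain divided by cost. The initiative names and dollar figures are entirely hypothetical, and this simple ROI definition is an assumption rather than a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    estimated_cost: float  # total investment required
    estimated_gain: float  # projected return attributable to the initiative

    @property
    def roi(self) -> float:
        """Simple ROI: net gain divided by cost."""
        return (self.estimated_gain - self.estimated_cost) / self.estimated_cost


def prioritize(initiatives):
    """Return initiatives ordered from highest to lowest estimated ROI."""
    return sorted(initiatives, key=lambda i: i.roi, reverse=True)


# Hypothetical candidates competing for the same budget.
candidates = [
    Initiative("churn prediction", estimated_cost=200_000, estimated_gain=500_000),
    Initiative("dashboard refresh", estimated_cost=50_000, estimated_gain=60_000),
    Initiative("demand forecasting", estimated_cost=300_000, estimated_gain=900_000),
]
for i in prioritize(candidates):
    print(f"{i.name}: ROI {i.roi:.0%}")
# demand forecasting: ROI 200%
# churn prediction: ROI 150%
# dashboard refresh: ROI 20%
```

Point estimates like these hide the uncertainty inherent in scientific, nondeterministic AI work, so in practice it may help to present a range (for example, conservative and optimistic gain estimates) alongside the story of how the ROI would be generated.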

Cultural

I break the cultural category of AI readiness into four subcategories: scientific innovation and disruption, gut-to-data driven, action ready, and data democratization. Cultural readiness is largely about creating a culture, mindset, and established set of processes that enable and foster pursuit of data-driven initiatives such as those associated with AI and machine learning.

Scientific innovation and disruption

Companies are often not set up or incentivized for innovation and disruption. Many companies are mostly incentivized and driven by short-term, quarter-by-quarter gains such as company profits and growth. This usually results in incremental thinking and action, and means that significant progress and improvement can take a long time.

Creating a culture of innovation and disruption is very important in terms of AI readiness for developing and executing an AI vision and strategy. In incremental-thinking companies, creating this culture can be very challenging, and there are usually many forces opposing it. Creating this culture begins with big-picture, long-term, and high-risk-versus-reward thinking. It also begins with a willingness to be agile and experimental.

Keep in mind that you might have very agile competitors that have prioritized and embraced a culture of innovation and disruption from day one. Sticking to the status quo and thinking in small incremental steps is the quickest way to fall behind and not remain competitive.

Also, large enterprise companies often have a “buy versus build” mentality—the attitude of “Why invest time, money, and resources into customized advanced analytics, for example, when I can just buy a product like a popular CRM right off the shelf, right?” Wrong.

When I’ve tried to sell people on the idea of data science and advanced analytics, this objection has come up time and time again. I hear things like, “But XYZ already has an analytics dashboard.” This might be true, but third-party tools that have built-in analytics cater to the masses, are very generic, and are not customized to your business or needs. If you’re looking for shallow insights, that’s the analytics solution for you. But if you want differentiated, very deep, and actionable insights, and want the ability to make predictions and have optimal actions automatically taken for you, look elsewhere. In fact, AI and machine learning represent opportunities that are well beyond, and even unachievable with, traditional analytics, and are universally applicable throughout business. You should approach AI and machine learning as a new core business competency in the same way that marketing and sales are. The more you think of your company as a data company, and data as a core advantage, the better off your company will be.

Another thing that many don’t realize is that by generating deeper insights, you can more easily develop new ideas and strategies around potential new business models, services, and products. You can also find innovative ways to capture new markets and expand your current market, again in ways that are unachievable with traditional analytics.

Companies that embrace scientific innovation and disruption have the best chance of differentiating themselves while also generating competitive advantage. AI represents a massive opportunity in this respect. Innovation creates barriers to entry, provides protection against commoditization, and ultimately increases your chances of long-term success. As of 2018, seven of the top ten publicly traded companies by market capitalization were technology companies, and they had recently replaced major incumbents that previously held top-ten positions, including ExxonMobil, General Electric, Wells Fargo, and Wal-Mart.1

Gut-to-data driven

Physical phenomena such as gravity, motion, and electricity are often described as following the path of least resistance. Humans are no different, and as a result, decision making is often driven entirely by experience and historical precedent (for instance, what happened in similar situations in the past), simple analytics, and gut feel. Although many decisions have been made successfully this way, incorporating data into decision making can significantly improve success rates and outcomes. Analytics-based decision making also enables predictions of the potential impact (e.g., ROI) of a given decision. In other words, it makes it easier to understand and plan for likely outcomes in advance.

The concepts of being data driven and data informed are highly applicable here. In some cases it is neither necessary nor possible for decisions to be made solely based on data (data driven), but we should definitely incorporate data and analytics, when available, into the process of making all important decisions (data informed). The outcomes will almost certainly be better.

Becoming a data-driven organization requires a cultural and mindset shift, along with a data democratization shift. People can’t make data-driven or data-informed decisions without having access to the “right” data as well as the ability to properly analyze, derive insights, and take actions from such data. Companies must invest heavily in functionally appropriate talent (e.g., analysts, data scientists, and AI and machine learning engineers) for this purpose. This is again a cultural shift in terms of seeing the value of the investment into data and analytics and its potential return and prioritizing it accordingly.

Action ready

Being “action ready” means being committed to prioritizing the generation of actionable insights and then being willing to take the actions (e.g., making decisions, augmenting intelligence, automating processes) needed to realize the potential value and benefits. This is a cultural matter, and its importance cannot be overstated. For any given goal such as increasing revenue, companies can create different initiatives to help accomplish the goal and often have multiple levers that can be pulled, as well. This includes the ability to create new and innovative levers that don’t yet exist (i.e., products, features, services). There is not much point in generating highly actionable deep insights if nobody is willing to pull any of the levers that these insights suggest.

I won’t delve into more detail on the types of actions that can be taken here, because that list would be massive, very company specific, and also industry and business function specific in many cases. The point is that if your company is going to become culturally ready to pursue generating deep actionable insights, become culturally ready to take appropriate action, as well.

Data democratization

Data is not very useful if it is siloed and unavailable. Many companies build silos around entire departments, and especially around each department’s data. LoB owners often have an “it’s my data” mentality and approach. Let’s be clear: it’s the company’s data, and data is most effective when combined with other data and when it is accessible by anyone who can benefit from it, without violating data governance rules, particularly those concerning privacy and security.

Tear down silos and democratize data. This is critical. I’m not suggesting that you run out tomorrow and build a data warehouse or data lake (I’ve definitely seen this become a barrier to advanced analytics adoption, as well), but work hard to ensure that everyone within the company who can benefit from data has access to it. Beyond that, consider making data available externally as well (ethically, of course, and without the aforementioned violations).

In an MIT Sloan Management Review article, “Analytics As a Source of Business Innovation,” the authors discuss how Bridgestone wants to transform its business by taking advantage of shared third-party data (e.g., data from car manufacturers) to sell proactively, encouraging and reminding consumers to come in for tire inspections, new tires, and other services before a problem develops; in other words, predictive maintenance. Tire manufacturers currently have no way to know how many miles a given set of tires has been driven on a given car. Data democratization could change this, and the idea is applicable to many industries and companies.

Like many things, data is better democratized than siloed and restricted. Getting to this point requires a cultural shift as well as the gut-to-data-driven shift discussed earlier. LoB owners, and any employee trying to make effective, company-improving decisions, should instinctively turn to relevant data to gain insights and produce outcomes.

Now let’s switch gears and discuss AI maturity in the context of AIPB and assessments.

AI Maturity

AI maturity is highly relevant to successfully developing and executing an AI vision and strategy. I have created multiple maturity-related models that we cover in this section, two of which I first introduced in Chapter 3.

I break AI maturity into two subcategories: data maturity and analytics maturity. This is because data-specific and analytics-specific roles, processes, and tools can differ, and so can the corresponding levels of maturity for each.

Data maturity means having an increasingly advanced data capturing, collection, processing, integration, and analytics-optimized storage foundation in place (data pipelines and infrastructure). Analytics maturity refers to applying increasingly advanced analytics to existing and new data, which ranges from simple and traditional analytics (e.g., statistical analysis, visualization, descriptive analytics, and business intelligence) to more complex advanced analytics (e.g., AI, machine learning, predictive analytics, prescriptive analytics). To keep things simple in this discussion, and unless otherwise noted, I use the term “AI maturity” to include both data maturity and analytics maturity.

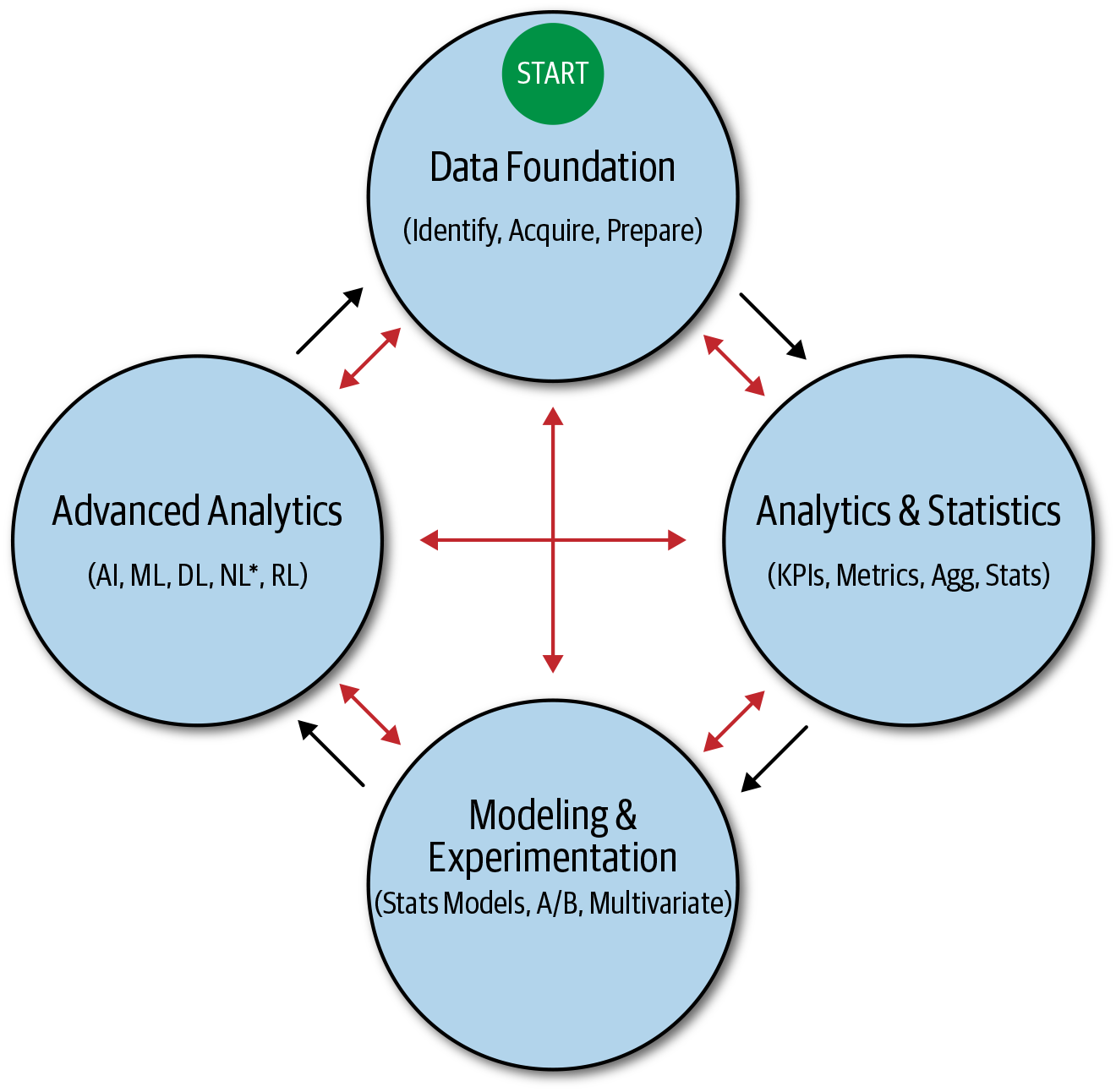

Before discussing AI maturity as it relates specifically to data and analytics, let’s first discuss a more general Technical Maturity Mixture Model that I’ve created, as shown in Figure 12-2. This model provides the criteria for measuring the degree of maturity at each level of sophistication covered in the AI Maturity Model (Figure 12-3) that follows.

In general, I define technical maturity as a mixture of individual maturity characteristics (i.e., maturity measurement criteria). Specifically, technical maturity is a collective measure (mixture) of the level of experience, technical sophistication, and technical competency with a given technical field or technology (in this case, AI) at a given point in time. Figure 12-2 shows this.

Figure 12-2. The Technical Maturity Mixture Model

Experience represents the collective amount of experience that the relevant team has with the technical field or technology involved. Technical sophistication is a measure of the team's ability to use advanced and state-of-the-art tools and techniques related to that field or technology (e.g., deep learning, reinforcement learning, natural language understanding). Technical sophistication is usually directly tied to the experience of the team and its individual members (for example, perhaps only one team member knows how to use reinforcement learning techniques). Finally, technical competency is a measure of the team's ability to successfully execute and deliver on related initiatives and projects.

The proportion that each maturity characteristic contributes to the collective measure (mixture) of technical maturity is somewhat subjective and changes constantly as technology advances. Here is a formula I've created to indicate that, according to this model, increased technical maturity results in increased certainty and confidence (remember scientific innovation and the TCPR Model?) and, ultimately, better outcomes and success:

- ↑ Maturity = ↑ Certainty and Confidence = Better Outcomes and Success!

Using the technical maturity mixture from the previous model as the measurement criteria, maturity can be measured progressively across a predefined number of field-specific (in this case, AI) levels of sophistication. Figure 12-3 shows a model I've created to illustrate this in terms of AI maturity.

Figure 12-3. The AI Maturity Model

The model shows the starting point as building a data foundation to fuel progressively increasing levels of analytics sophistication, as indicated by the green arrows. Building a data foundation means identifying, acquiring, and preparing the “right” data, which we discussed earlier in the book. Acquiring data includes capturing and integrating data, potentially from many different sources and systems.

After a data foundation is either partially or entirely built, we can measure AI maturity as a progression from traditional analytics and statistics, to more sophisticated modeling and experimentation, and finally to advanced analytics, which includes state-of-the-art AI and machine learning techniques.

Similar to Maslow changing his mind about his hierarchy requiring a strict and ordered progression, I don’t think that it’s mandatory to gain complete competency at every level of maturity according to this model before moving to the next level, and particularly before beginning to use AI and machine learning. Also, the red arrows indicate that the outputs of each level of sophistication can influence or power one or more of the other levels in some way. For example, all three analytics-specific levels can create data output that can be integrated into your data foundation. Likewise, the outcomes resulting from AI and machine learning applications should be understood using traditional analytics and statistics, particularly in terms of the solution’s impact on key success metrics and KPIs.

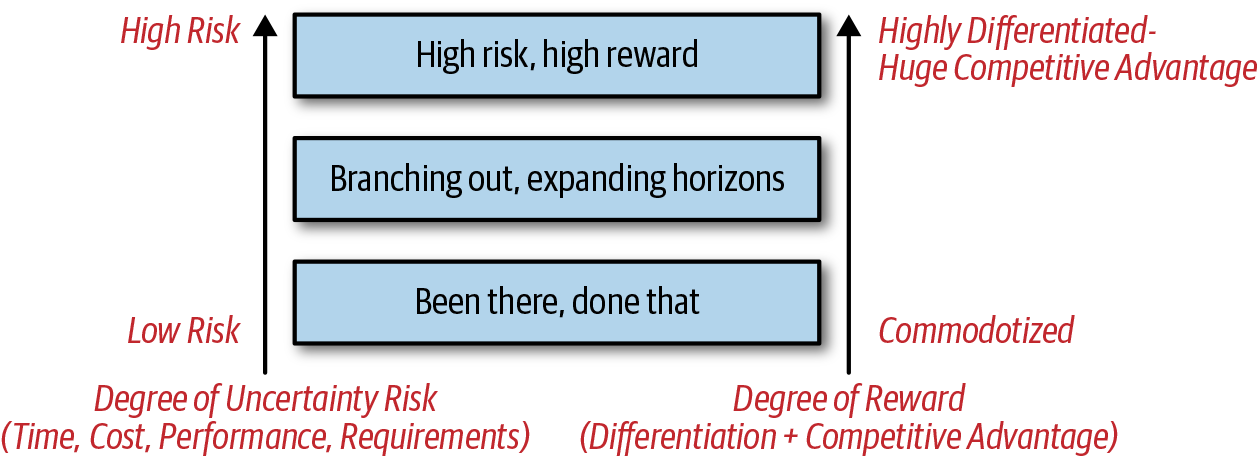

Figure 12-4 shows the final maturity model that I’ve created, the Innovation Uncertainty Risk versus Reward Model, which is highly relevant to AI maturity. It presents a nontechnical, strategic, and business-focused perspective.

Figure 12-4. Innovation Uncertainty Risk versus Reward Model

The model shows technology-based maturity (again, AI in this case) as a function of innovation uncertainty risk versus reward, where the uncertainty concerns time, cost, performance, and requirements (again, the TCPR Model!), and the reward is differentiation and competitive advantage.

Time and cost uncertainty should be self-explanatory, as already discussed. Performance uncertainty refers to error-based performance (e.g., accuracy) in AI and machine learning applications where the solution might be, for example, a predictive model, although note that not all such applications are error based. In contrast, performance for more deterministic applications, such as a mobile app, is KPI or UX based; for example, conversions, customer retention, or customer delight. Finally, requirements uncertainty concerns the data, features, and techniques required to achieve target performance.

Notice that I've intentionally omitted from the model specific data and analytics subject areas (e.g., ETL, A/B testing, AI), as well as any specific technologies. I've done this because what is emerging or state-of-the-art in data and analytics today might be commoditized, automated, or obsolete tomorrow. Also, maturity is a moving target and is specific to each technical area; that is, your maturity with business intelligence might differ considerably from your maturity with AI techniques such as deep learning.

In terms of analytics maturity level, "been there, done that" represents low uncertainty risk, which makes it relatively easy to estimate time, cost, performance, and requirements for a specific project. This results from having the requisite experience, sophistication, and competency to effectively eliminate uncertainty and the resulting risk. It also means that the technology being used, and the resulting outcomes, might be commoditized and unable to generate significant differentiation and competitive advantage (minimal rewards, if any).

BI is a good example, although, as is often the case, it's not that simple. Certain industries are generally very slow to make the data and analytics cultural shifts covered earlier in the context of AI readiness. In those industries, significant BI competency can still create an appreciable competitive advantage.

“Branching out, expanding horizons” is the process of building on existing data and analytics experience, sophistication, and competency to increase the level of maturity for either or both. This usually means that uncertainty risk is increased because there are new areas of exploration, experimentation, and unpredictable outcomes; or, put another way, scientific innovation. It also means that there is greater potential for generating increased differentiation and competitive advantage.

The final category, “high risk, high reward,” is as it sounds. It represents taking risks and gambling, venturing into the great unknown, pushing boundaries, and any other comparable way of thinking about pursuing true scientific innovation to reap potentially huge rewards. This means pioneering and leading with emerging and state-of-the-art technology instead of following.

It also means assuming a large amount of uncertainty risk, with the upside of potentially massive rewards. The way venture capitalists operate is a great example. Most of their investments are expected to fail, but those that succeed usually do so in a huge way. For successful VCs, the returns from the few successes more than cover the losses from the greater number of failures. R&D programs at pharmaceutical companies work the same way.

A final note on this model. At a glance, it seems to indicate that low uncertainty risk always implies commoditized data and analytics technologies and competency, and that high uncertainty risk always implies the opposite. In reality, it is not that simple and depends on many factors. For example, some tech giants (e.g., Google, Amazon) have a great deal of experience, sophistication, and competency with certain AI and machine learning techniques, which therefore carry low uncertainty risk for them, and yet those techniques are nowhere near commoditized in the general market. The model is therefore a relative one, and it applies mostly to the majority of companies outside the AI-forward tech giants.

Summary

We divided AI readiness into four categories (organizational, technological, financial, and cultural), as shown by the AI Readiness Model. Although companies are unlikely to be completely "ready" in every category, you should pursue AI initiatives nonetheless. We also defined AI maturity in terms of several concepts and three models: the Technical Maturity Mixture Model, the AI Maturity Model, and the Innovation Uncertainty Risk versus Reward Model.

Focusing too heavily on mandatory, progressive steps toward data and analytics maturity before moving forward is like erecting barriers to entry, some of which can take a very long time to break down (e.g., building a data warehouse). Get started now, and make decisions based on needs and desired outcomes, not on your data and analytics maturity.

The key is to assess your AI readiness and AI maturity as a subphase of the assess phase of the AIPB Methodology Component, and to identify gaps, along with a plan to fill them, as you go. This becomes part of your assessment strategy, which is an important part of your overall AI strategy. To help you complete your AIPB assessments and create that strategy, we next discuss AI key considerations.