Chapter 3. AIPB Core Components

This chapter continues to develop our understanding of how to innovate using AIPB in order to create better human experiences and business success. You’ll recall that the AIPB Framework consists of a North Star, benefits pseudocomponent, and four core components. Figure 3-1 provides a refresher of this.

We’ve already discussed the North Star and benefits pseudocomponent in the last chapter, so let’s begin this chapter by discussing a relevant analogy and then look at the four AIPB core components in detail: experts, assessment, methodology, and outputs. We also discuss the concept of the flipped classroom, which is an important element in approaching the innovation process in new, more efficient and effective ways.

Before diving into the AIPB core components, let’s first discuss Agile development as an analogy to certain characteristics of AIPB.

An Agile Analogy

You are probably familiar with the Agile software development movement and associated methodologies such as Scrum and Kanban. Agile was created to fill gaps and solve many problems previously experienced with the waterfall approach to building technology products. AIPB analogously intends to fill gaps and improve upon existing business and innovation frameworks.

Figure 3-1. The AIPB Framework

Agile is based on the Agile Manifesto and the four Agile software development values that the manifesto defines:

- Individuals and interactions over processes and tools
- Working software over comprehensive documentation
- Customer collaboration over contract negotiation
- Responding to change over following a plan

These four values are the basis on which all Agile principles and methodologies are built (interestingly, the values are phrased in terms of the how and what and not the why). That aside, the benefits pseudocomponent of AIPB is analogous to Agile’s “four values,” and it is meant to be the foundation on which everything else is built.

Continuing with our analogy, the experts component of AIPB is analogous to the roles defined in Scrum;1 the Assessment Component is analogous to assessments conducted by Scrum teams;2 the Methodology Component is analogous to recurring Scrum meetings (aka ceremonies);3 and, finally, the outputs component is analogous to the “product artifacts” defined by Scrum.4

Both Agile and AIPB share a focus on people and process. One way that Agile differs from AIPB is that Agile does not necessarily involve all of the people required for innovation initiatives from concept to launch, nor does it represent the innovation process end to end.

Agile omits most of the vision and strategy aspects of innovation, for example, and focuses more on actual development, deployment, and maintenance based on an existing product roadmap. That said, Agile methods are very useful, and I’m a big fan, particularly of Kanban. I personally recommend Kanban for product development, which we can easily incorporate into the build methodology phase of AIPB (covered later in this chapter).

Now let’s discuss each of AIPB’s core components.

Experts Component

You must assemble the appropriate experts when pursuing AI initiatives. You should also bring them together during each AIPB methodology phase to collaborate and help ensure maximum success.

Often the people making critical product decisions (such as filling in canvases or SWOT lists) do not have all the requisite expertise. Or, they aren’t able to properly empathize with the target market; that is, they’re not the customer or user, and therefore view things more from the business perspective, even if they don’t think that’s the case.

The key takeaway, and a differentiator of the AIPB Framework, is that the right experts must collaborate in the appropriate AIPB Methodology phases. Consensus is not required, but expert ideas, opinions, and perspectives are. From there, the initiative owner must make the final decisions by taking all expertise into account. For product initiatives, this responsibility is usually best handled by a product manager, and over time it will likely be handled more often by data product managers, a new product management role tailored to data and analytics.

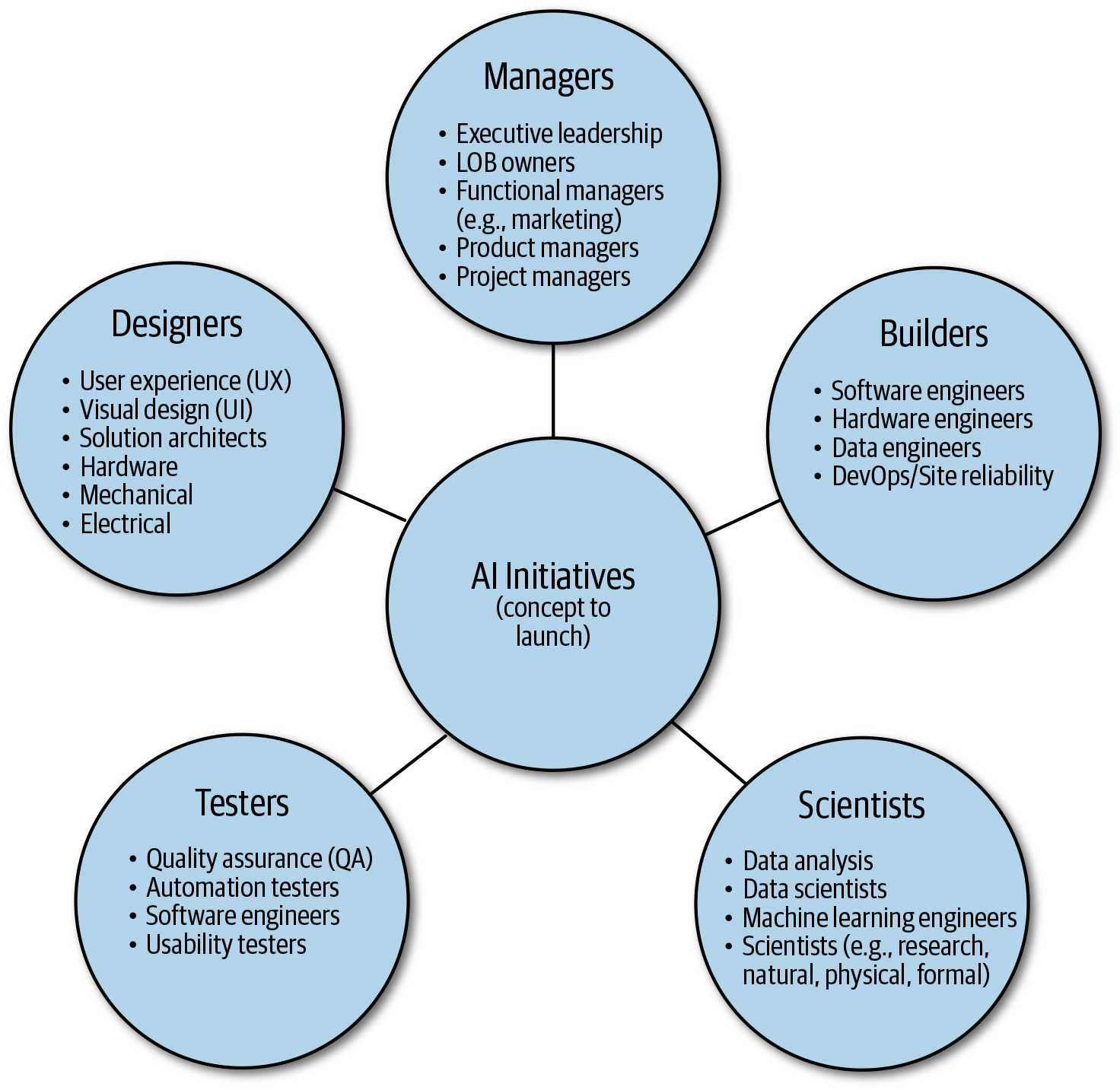

So who are these experts? AIPB establishes five groups of experts: managers, designers, builders, testers, and scientists. Certain people might fall into one or more categories based on a given assessment task or methodology phase of the framework. Figure 3-2 shows these categories with some example roles in each.

Figure 3-2. AIPB Experts

Let me first explain why I chose the categories builders and scientists, how they differ from each other, and how they differ from traditional functional designations.

You might have seen The LEGO Movie. I highly recommend watching it if you haven’t. The movie is centered on a character named Emmet Brickowski who is a construction worker. Construction workers are builders who build objects with LEGO bricks by following instructions, just as one does after purchasing a LEGO kit. This is essentially the same as software engineers writing code based on product requirements and designs, or hardware assemblers who build physical objects based on specifications, materials, and designs. I refer to this group as builders—those that build from instructions that they’ve been given in one or more forms.

The movie also designates another group called master builders. Master builders are those people who are able to use their imagination to develop ideas and create instructions for building new innovative objects, without requiring explicit instructions themselves. These new ideas and objects can become building blocks upon which even more new ideas and objects are built, just as with real LEGO objects. Developing new ideas and outcomes as well as validating and optimizing them might require exploration, hypothesis development, and experimentation. Traditional scientists (and data scientists), mathematicians, and engineers fit perfectly in this category, for example. I refer to this group as scientists.

Scientists historically had no instructions on which to base their new ideas, hypotheses, and discoveries other than the laws, theorems, and empirically determined results established by previous scientists. These historic findings help scientists by providing guidance and a foundation on which to base new ideas and discoveries, but they do not provide explicit instructions to achieve a specific new discovery.

The scientist as the master builder must use their expertise and imagination for that. More specifically, new scientific discoveries come by way of one or more of the following: building on previous science, conducting experiments (thought, empirical, or both), and making real-world or lab-based observations. In all cases, the scientists aren’t sure what they will find in advance; rather, they are guided by a set of initial ideas and hypotheses.

Given the statistical, probabilistic, and scientific nature of AI and machine learning, the term scientists is the most apropos. Innovation means creating something new by definition, and therefore by default there are no instructions on how to achieve a certain outcome. Innovation requires expertise and imagination combined with strategies to validate key assumptions, mitigate risks, and achieve an intended result. Going a step further, scientific innovation—as compared to more deterministic innovation involving less uncertainty—is a key differentiator and very certainly can generate competitive advantage. The fact that visionaries and scientific innovators can and have created successful outcomes in the face of significant uncertainty is what makes them great.

So to recap, some questions simply do not have answers without exploration and experimentation. That’s exactly the concept behind Lean and Agile product development, as well. Create an MVP of what you think best addresses a given need and has the highest chance of achieving product-market fit, and then iteratively experiment and test versions in order to reach the optimal solution—a solution that was not known in advance. This is how the scientific method works and thus why I use the term scientists.

One final note: data scientists, machine learning engineers, and AI researchers aren’t the only scientists in this process. User experience (UX) designers and others can also be considered scientists in some cases. The distinguishing factor is simply recognizing that some sort of hypothesis and test or experiment is required to make discoveries and find answers. For example, usability tests are conducted by UX researchers for product evaluation and to determine whether users understand how to use a certain product or product feature. This is experimental by nature and requires the test results in order to gain insights and drive actionable, data-driven changes to the product. There is no way to know the results in advance of the actual test. AI-based scientific innovation is no different and therefore requires a scientific mindset and one or more scientists to participate in the process. This requires a change in mindset similar to switching from waterfall to Agile.

Designers and design in general are also critical components of any technology that people interact with in some way. Designers are thus also a very important category of experts recognized by AIPB. We discuss the importance of design, particularly in the context of AI solutions, in much greater detail later in this book.

Testers are another very important group of experts recognized by AIPB. Solution quality and minimization of liability and risk are paramount with any technology solution; I would argue more so in most cases involving data and analytics-centric solutions such as those using AI. The importance of achieving maximum quality with minimal risk, and of the testers and other AIPB experts who ensure it, will become abundantly clear in later chapters.

Finally, managers are also a very important group of experts recognized by AIPB, especially for leading and managing AI initiatives. Many managers, including executives, might not be domain-specific experts or technical subject matter experts (SMEs), but they should be experts in the following:

- assembling purpose-built teams
- setting goals
- creating strategies
- managing risks
- making key decisions
- delegating work
- providing direction and guidance
- providing autonomy
- facilitating collaboration and alignment
- properly setting expectations
- keeping initiatives on track
- providing needed resources (e.g., budgets)
- ensuring both initiative and business success

As with any of my former IndyCar racing teams, winning happens when everyone does their part and does it very well. Any one person can lose a race, but it takes everyone on the team working together at the highest level of performance possible and without mistakes to win.

Now let’s turn our attention to the two process-related components of AIPB: assessment and methodology. Rather than discuss the outputs component separately, we look at the appropriate outputs as we go for each phase of the Methodology Component.

AIPB Process Categories and Recommended Methods

AIPB is meant to be modular, as mentioned previously. Certain methods and frameworks are time-tested and have proven to be both effective and efficient. This means that whatever collaborative (not consensus-based) process and methods get you to the best answers and outputs are the right ones.

Recall from Chapter 2 the AIPB process categories and some of my recommended methods, which I present again here for reference in this chapter.

- Assessment (e.g., gap analysis, competency analysis)
- Ideation and vision development (e.g., design thinking, brainstorming, five whys)
- Business and product strategy (e.g., SWOT, Porter’s five forces, cost-benefit analysis (CBA), the Product-Market Fit Pyramid)
- Roadmap prioritization (e.g., cost of delay, CD3, Kano model, importance versus satisfaction)
- Requirements elicitation (e.g., design thinking, interviews)
- Product design (e.g., design thinking, UX design, human-centered design)
- Product development (e.g., Agile, Kanban, GABDO, continuous delivery)
- Product evaluation, validation, and optimization (e.g., MVP/prototyping, success metrics, KPIs, usability testing)
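To make one of the roadmap prioritization methods listed above concrete, here is a minimal Python sketch of CD3 (cost of delay divided by duration), which ranks work by how much value is lost per unit of delay relative to how long the work takes. The `Initiative` class, the initiative names, and all of the numbers are hypothetical and exist only for illustration; real estimates would come from the appropriate experts.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    """A roadmap candidate with rough, hypothetical estimates."""
    name: str
    cost_of_delay_per_week: float  # estimated value lost for each week of delay
    duration_weeks: float          # estimated time needed to deliver

    @property
    def cd3(self) -> float:
        """Cost of delay divided by duration; higher scores go first."""
        return self.cost_of_delay_per_week / self.duration_weeks


def prioritize(initiatives: list[Initiative]) -> list[Initiative]:
    """Return the initiatives ordered from highest to lowest CD3 score."""
    return sorted(initiatives, key=lambda item: item.cd3, reverse=True)


# Made-up candidates and estimates, purely for illustration.
candidates = [
    Initiative("churn prediction", cost_of_delay_per_week=20_000, duration_weeks=8),
    Initiative("search ranking", cost_of_delay_per_week=15_000, duration_weeks=3),
    Initiative("demand forecasting", cost_of_delay_per_week=30_000, duration_weeks=20),
]

for item in prioritize(candidates):
    print(f"{item.name}: CD3 = {item.cd3:,.0f}")
```

In this made-up example, the initiative with the largest cost of delay (demand forecasting) ranks last because it also takes the longest to deliver, which is the trade-off CD3 is meant to surface.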

I list my recommended process categories and methods where appropriate in this chapter, but I mostly omit the details of existing methods (e.g., SWOT) given the bigger picture focus of this book. You’re encouraged to do further research as needed. Lastly, my recommendations are based on my experience and what I’ve found to be most effective. There might be perfectly valid alternatives that I haven’t tried that can be applied to the Methodology Component phases, and the appropriate experts should choose what to use based on their experience and expertise. The end result being correct is what matters most. AIPB is modular and also expert driven!

Assessment Component

Pursuing AI initiatives and innovation in general requires that we identify and address certain gaps and key considerations, either partially or fully. This is the basis of the AIPB Assessment Component: to assess any gaps and key considerations and determine a strategy to address them. I break this into three categories, as follows:

- AI readiness
- AI maturity
- AI key considerations

The Assessment Component and its three categories are represented as the first AIPB Methodology Component phase (discussed shortly) and should be addressed very early by any company planning on undergoing an applied AI transformation. These assessments are so important that they are represented as a separate core component in AIPB. Making the appropriate assessments and developing strategies, collectively referred to by AIPB as an “assessment strategy,” based on the findings will help ensure initiatives don’t fail and, more importantly, that they’re set up to win from the start.

Note that developing strategies and addressing gaps around readiness, maturity, and key considerations should not be considered a hard prerequisite for moving forward with AI, machine learning, or innovation in general. Rather, performing the assessment and developing your assessment strategy should be done first. In my opinion, it’s necessary for most companies to begin with AI now rather than later and work on filling gaps and addressing key considerations along the way.

AI Readiness and Maturity

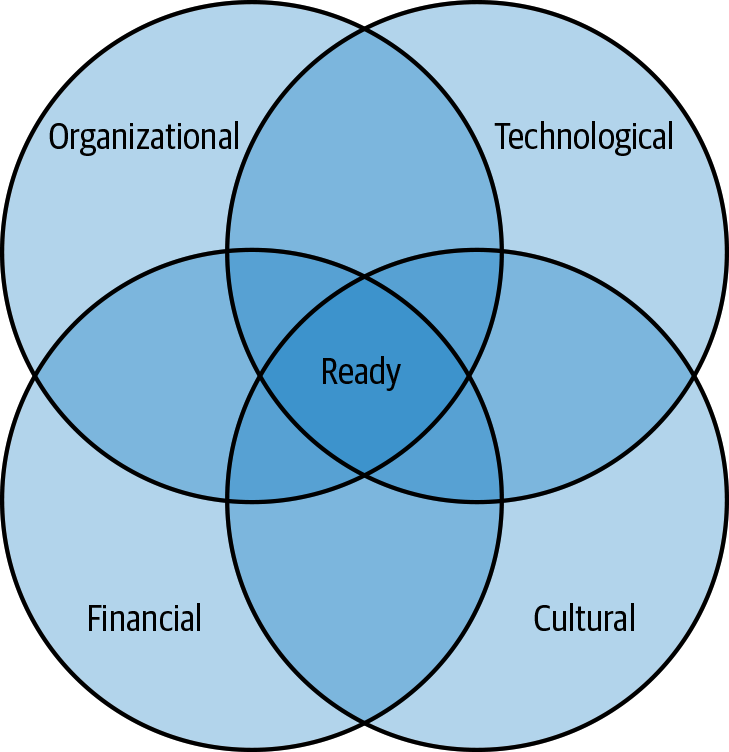

Readiness versus maturity? For me, readiness means being ready in certain ways before getting started with something. Figure 3-3 shows the AI Readiness Model that I created in which I organize AI readiness into four categories. I cover this model in depth in Chapter 12.

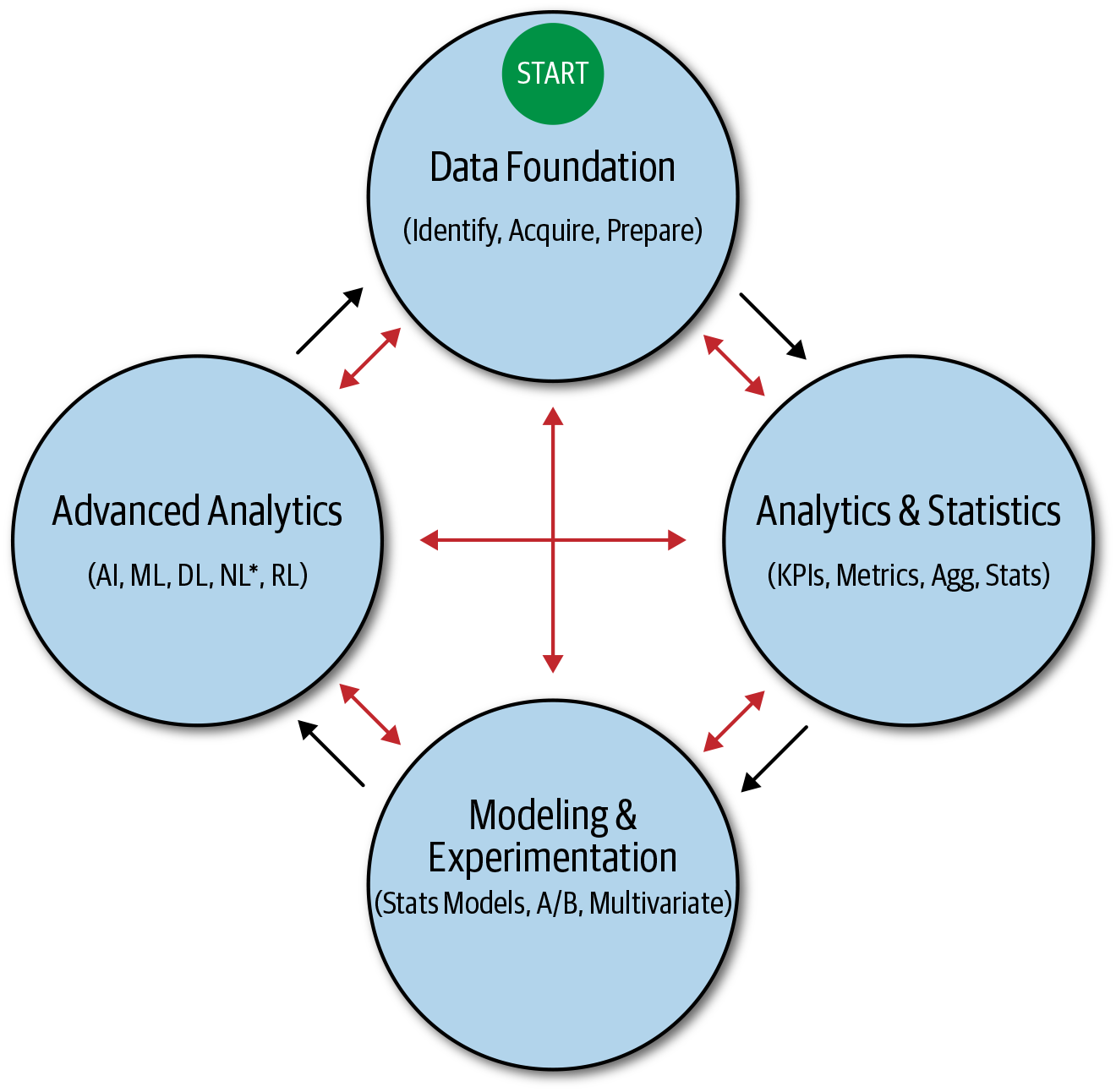

Maturity, on the other hand, represents one or more measures of progression. Maturity in the context of technology is usually discussed in terms of levels of technical sophistication, but I characterize it in several ways, as a few models that I have created illustrate. Combined and applied specifically to AI, these models represent the way that I define AI maturity.

Figure 3-3. The AI Readiness Model

Two of these models are shown as a preview here. The first, shown in Figure 3-4, is a maturity model that represents analytics sophistication, and the second, shown in Figure 3-5, represents technical maturity, in general, as a collective measure (mixture) of the level of experience, technical sophistication, and technical competency around a given technical field or technology at a given point in time.

Figure 3-4. The AI Maturity Model

AI readiness and maturity, the basis to assess both, and all of these models are covered in much greater detail in Part III of this book, as are AI-related key considerations for which we must account. For now, the key takeaway is that readiness and maturity are both a process and a journey. You don’t need to have a data warehouse or an extract, transform, and load (ETL) system built before pursuing an AI or machine learning task, but you definitely need to identify gaps, create appropriate strategies, and start getting more AI “ready” to begin advancing your AI maturity plan today.

Figure 3-5. The Technical Maturity Mixture Model

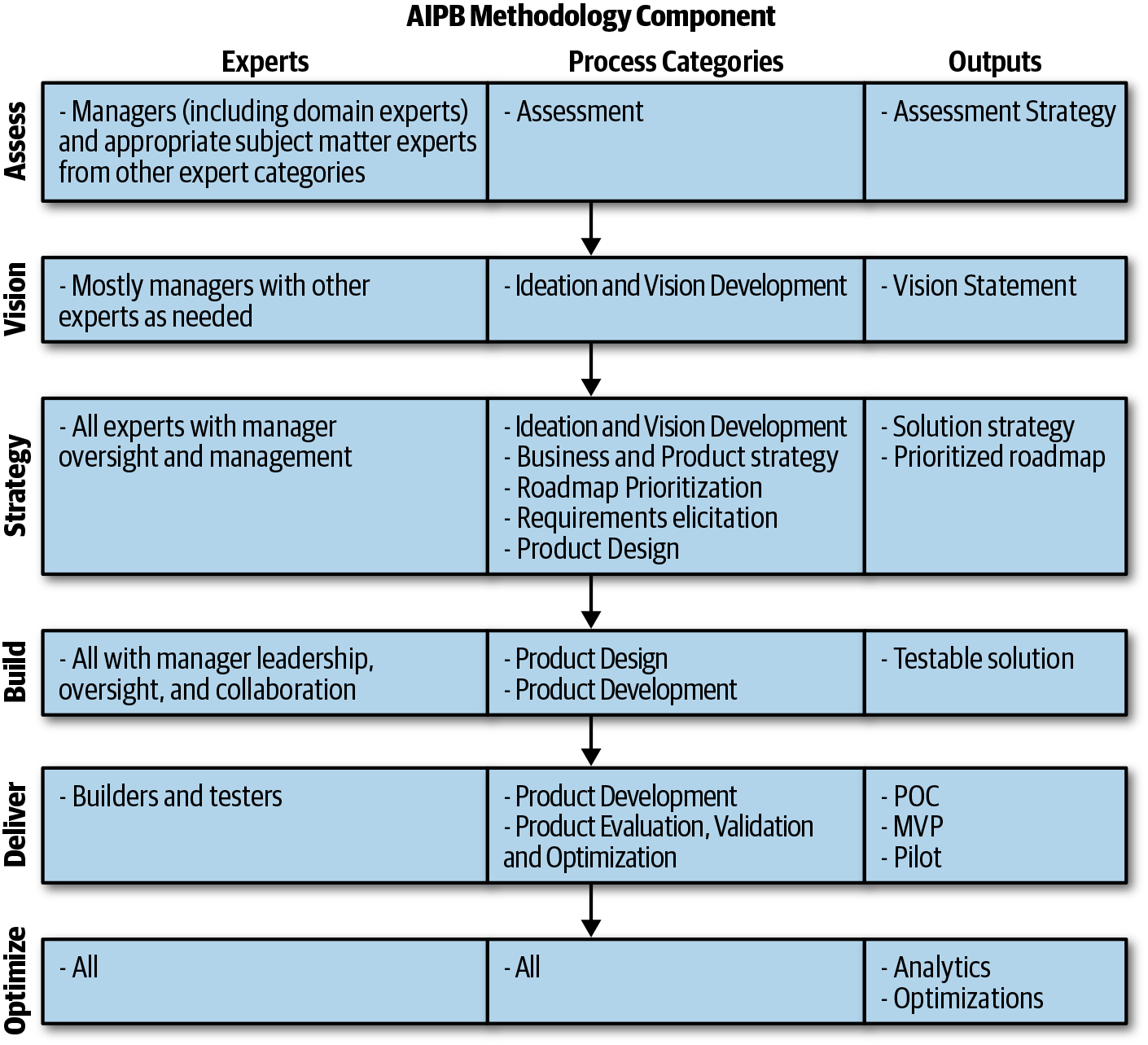

Methodology Component

Let’s move on to the Methodology Component of AIPB, which consists of six iterative phases: assess, vision, strategy, build, deliver, and optimize. Although represented as separate components in AIPB, I’ve logically combined the discussion of methodology and outputs in this section because each of the methodology phases has a specific output. Figure 3-6 shows each methodology phase, along with corresponding and recommended experts, process categories, and outputs, which we cover throughout this section.

Before diving into each phase, let’s remember the North Star of AIPB, which is a focus on people and business, with the specific goals of creating better human experiences and business success. This is critical because this framework (and book) is about pursuing AI that benefits both people and business, not just business, and definitely not AI that will harm people in any way. This aspect of AIPB is critical and must guide everything else.

Figure 3-6. AIPB Methodology phases

We also talk about the experts that I think should be collaboratively involved in each phase. I use the high-level designation in most cases (e.g., designer, scientist) and leave it up to you to decide who in your company is the best suited “expert” in that discipline to participate in the given phase. I present some example roles, as well.

For all of the methodology phases and generating the appropriate output for each, the approach should be different from what many existing frameworks usually suggest. This begins with using a flipped classroom, collaborative, interactive, and/or design thinking-style approach. It’s not about creating paper sheets, bullet point lists, and filling in boxes; rather, it’s about posing questions and answering them. It really doesn’t matter how you arrive at the answers, just that you arrive at them and they’re the right ones, or at least as right as possible. It is also paramount that these answers can be effectively communicated to any stakeholder as needed.

Depending on the phase, the questions might be about goals, benefits, outcomes, people, risks, costs, trade-offs, considerations, assumptions, strategies, techniques, or gaps. Regardless, the output of each phase should include explanations in plain English that are highly explainable to anyone, regardless of expertise and background. For many executives, this is a necessity.

For certain phases, the answers to the right questions in an easy-to-understand verbal form should be all that’s needed to generate a shared understanding and also set expectations properly. From my experience, bullet point lists and other forms of documentation often fall woefully short in this respect.

So on that note, let’s dive into the phases of the AIPB methodology and the outputs of each phase. Keep in mind that all phases can be iterative and participate in a feedback loop; in other words, the outputs of downstream phases might suggest iterations of previous phases to drive continuous improvements and maximize outcomes.
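The iterative nature of the phases can be pictured with a small Python sketch. It assumes, purely for illustration, that when a downstream phase sends feedback to an earlier phase, that earlier phase and everything between it and the current phase are revisited in order. That rule, the `Phase` enum, and the `phases_to_revisit` helper are hypothetical and are not prescribed by AIPB.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The six methodology phases, in their stated order."""
    ASSESS = 1
    VISION = 2
    STRATEGY = 3
    BUILD = 4
    DELIVER = 5
    OPTIMIZE = 6


def phases_to_revisit(revisit_from: Phase, current: Phase) -> list[Phase]:
    """List the phases to rerun when `current` sends feedback to `revisit_from`.

    Assumption for this sketch: every phase from the revisited one
    up to and including the current one is rerun in order.
    """
    if revisit_from > current:
        raise ValueError("feedback can only point to the current or an earlier phase")
    return [phase for phase in Phase if revisit_from <= phase <= current]


# Example: something learned during the build phase calls the vision into question.
for phase in phases_to_revisit(Phase.VISION, Phase.BUILD):
    print(phase.name.lower())
```

Under this assumption, feedback from the build phase to the vision phase means reworking the vision, then the strategy, and then returning to the build.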

Assess

To recap, the Assessment Component includes assessments of the following:

- AI readiness
- AI maturity
- AI key considerations

Following are some questions around these categories that your assessments should answer:

- What are the gaps in my company’s readiness for AI?
- Is my company able to pursue scientific innovation (i.e., be agile, exploratory, and experimental)?
- How would I characterize my company’s data and analytics maturity? What about AI maturity? What gaps do I need to address?
- What are the AI-specific key considerations that we should understand and address?
- What are the critical assumptions and potential risks we need to understand and test?

From the AIPB process categories, the assessment category is applicable to answering these questions. Assessments should result in a plan—that is, a strategy. As such, the outputs of this component are defined by AIPB as an assessment strategy, which includes strategies for filling gaps related to AI readiness and maturity as well as strategies for addressing AI key considerations. Neither should be considered hard requirements to move forward as discussed, but they shouldn’t just sit on a piece of paper as a bullet point list of gaps and considerations either. Turning assessments into an assessment strategy is critical; it helps planning and, most importantly, helps to avoid potential failure while ensuring maximum success.
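One lightweight way to keep assessment findings from remaining a bare list is to record each finding alongside the strategy meant to address it and to flag any finding that lacks one. The following Python sketch illustrates that idea. The `Finding` structure, the helper function, and the example findings are all hypothetical; AIPB does not prescribe a format for the assessment strategy.

```python
from dataclasses import dataclass
from typing import Optional

# The three assessment categories named in the text.
CATEGORIES = ("AI readiness", "AI maturity", "AI key considerations")


@dataclass
class Finding:
    """A gap or consideration found during assessment (hypothetical structure)."""
    category: str
    description: str
    strategy: Optional[str] = None  # plan for addressing the finding, if one exists

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def without_strategy(findings: list[Finding]) -> list[Finding]:
    """Return the findings that are still just list entries with no plan."""
    return [finding for finding in findings if not finding.strategy]


# Made-up findings, purely for illustration.
findings = [
    Finding(
        "AI readiness",
        "No shared access to customer data",
        strategy="Pilot a governed data-sharing agreement for one team",
    ),
    Finding("AI maturity", "Analytics limited to descriptive reporting"),
]

for finding in without_strategy(findings):
    print(f"Needs a strategy: [{finding.category}] {finding.description}")
```

Note that the check only reports findings without a plan. It does not block anything, which is consistent with the point that addressing gaps is not a hard prerequisite for moving forward.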

Many AI initiatives fail, as already discussed. I would argue that most do so either because of a lack of assessment strategy, as we’ve defined it, or because of a lack of vision, strategy, and the ability to execute, as we cover next. In either case, don’t wait until it’s too late to identify and address potential points of failure.

Lastly, given the business-level and strategic nature of these assessments, the experts involved should be mostly managers (including domain experts) and appropriate SMEs. This includes executive leadership, functionally appropriate executives and managers (e.g., CAIO, CDO, CAO, VP AI/Data Science, Director AI/Data Science), and appropriate individual contributor team leads brought in as needed for specific expertise and analysis.

Vision

In my opinion, a vision for a new business, product, service, or feature is the high-level why, how, and what. The why can be expressed in terms of goals, benefits, or outcomes. The why can be driven by solving a specific problem, meeting a certain need, or eliminating a given pain point, but it can also be driven by wanting to create better human experiences without requiring a problem, need, or pain point. The goal is to define the “right” why’s, which can mean using a technique like the five whys to get there.

A great example is the swipe and pinch touch interactions that were introduced by products such as the first iPhone. I don’t think many people would have considered them a solution to a problem per se, nor do I think many people ever thought that type of interaction was even a possibility at the time. That said, as soon as people experienced those touch interactions, they became a delighter for the masses. Further, many people viewed these new interaction possibilities as solutions to problems that they didn’t even realize they had. Delighters can fuel a great vision in the same way that solving problems and eliminating pain points can.

Returning to AIPB’s emphasis on people and business, the key is to define the why for both. For businesses, the why is most commonly framed as a business case: how will it help the business in terms of goals, KPIs, objectives, ROI, or some similar measure. This is where most frameworks or models stop.

AIPB must also define the why for the people who are beneficiaries of the solution, who can be customers, end users, or internal employees. There are very few, if any, innovative technology applications that don’t have an impact on a human in one way or another. Even full-blown automation affects people, although not always in a beneficial way if it is used to eliminate jobs without any job reassignment, retraining, or reskilling. We examine the topic of automation and job displacement in a later chapter, but for now keep in mind that this book is about how we can use AI to benefit people and businesses, and that includes beneficial augmented intelligence, job reassignment, retraining, and reskilling, as needed.

So, the key then is to define the business case and also the intended benefit for people who will experience the AI solution directly, regardless of whether the AI solution takes the form of insights, recommendations, predictions, augmented intelligence, optimization, or automation. All of these forms of AI can benefit stakeholders, so the goal is to identify who the stakeholders are and how they benefit.

So how do you formulate your AI vision and what is the final output? Let’s begin by asking the right questions:

- In which of the following am I most interested? Achieving a company goal, reaching a specific KPI target, solving a problem, eliminating a pain point, creating better human experiences, saving lives, maximizing patient outcomes, or helping people with disabilities, for example?
- If I develop an AI-based solution based on the answer to the previous question, how will it benefit my business? How will it benefit people?
- What AI opportunities will help me make these benefits a reality (assuming more than one), and how do I choose and prioritize them?
- At a high level, what will the solution be?
- At a high level, how will I be able to make this solution a reality and a success?
- What is the potential ROI?

You’ll notice I use the phrase “at a high level” for a couple of the questions. The reason is that we have not yet gone through the process of actually creating a strategy for making your vision a reality, and therefore we might not know many of the considerations, risks, details, designs, and so on that we’ll discover along the way. We’ve also not yet gone through the exercise of determining the desirability, viability, and feasibility of a promising idea. Vision development is a starting point of an iterative process, and, as I mentioned earlier, it might need to be modified and improved based on downstream activities. This is perfectly fine and, if anything, will help ensure success.

In terms of answering these questions and producing the desired output of the vision phase, I recommend managers (which includes domain experts) as the primary experts to participate in the process; for example, executive leadership as well as functionally appropriate executives and managers. You also should bring in other experts as needed (particularly AI practitioners from the scientists category), and the process should be collaborative and interactive, involving high-level assessment, ideation, and prioritization because there might be multiple great opportunities.

For the AIPB methodology vision phase, and from the AIPB process categories, I recommend the ideation and vision development category. Many of the recommended methods in this category are commonly and successfully employed by product managers and UX folks.

The output of the vision phase should be a people and business-focused vision statement that specifies the answers to the questions posed in an easy-to-understand way that can be delivered as an elevator pitch. It should start with why and describe the purpose and goals of the AI application (for both people and business), how it will be built, and what the solution will be.

A great example of something similar is Amazon’s “Working Backwards” customer-centric methodology.5 It’s an approach designed to work backwards from the customer in order to come up with product ideas, as opposed to the other way around.

The output of the process is a one-page internal press release written by a product manager that announces the not-yet-existent finished product. The idea is that the press release must indicate who the target customer is, what benefits the product will provide to them, how existing products fail, and how the new product will be better than the existing alternatives.

Ian McAllister from Amazon notes:

“If the benefits listed don’t sound very interesting or exciting to customers, then perhaps they’re not (and shouldn’t be built). Instead, the product manager should keep iterating on the press release until they’ve come up with benefits that actually sound like benefits. Iterating on a press release is a lot less expensive than iterating on the product itself (and quicker!).”

Ian McAllister, Director

McAllister suggests writing these press releases in what he calls “Oprah-speak.” In other words, write it in the way you would imagine Oprah Winfrey explaining it to her audience.

Although not exactly phrased in terms of the type of vision statement that I’m recommending for AIPB, it’s certainly a great example. I especially like his term “Oprah-speak”; that is, if Oprah can deliver the vision to an audience and have everyone understand it, it’s probably well built. I provide an example of developing an AIPB vision at the end of Part II.

Strategy

With an AI vision in the form of a vision statement in place, the next step is to develop an AI strategy. Developing an AI strategy (or a data and analytics strategy in general) is something with which I find many companies, executives, and managers struggle.

AI applied in the real world is relatively new, not generally well understood, and surrounded by excessive marketing hype. It also needs a certain amount of technical expertise that is in short supply, and truly understanding it and developing a strategy based on it requires accepting uncomfortable concepts such as nondeterminism and uncertainty. These are some of the many reasons why AI leadership is critical to successfully developing a vision and strategy around AI.

So, what exactly do I mean by AI strategy? In the context of AIPB, an AI strategy is the plan for execution of your AI vision in order to make it a successful reality. It also represents a plan for executing the end-to-end process of taking an idea through to becoming a continually optimized solution in production. An AI strategy guided by AIPB should take the form of a solution strategy and prioritized roadmap.

The solution strategy (i.e., the plan) should define the people, processes, and resources (e.g., tools) required to do the following:

- Make your AI vision a successful reality
- Execute your assessment strategy initiatives while executing your AI strategy
- Iteratively execute the Five Ds (discussed shortly)

The purpose of the AIPB solution strategy is to successfully execute your AI vision by helping to develop an AIPB prioritized roadmap. It’s also to ensure successful downstream AIPB Methodology phases, outputs, and resulting AI-initiative benefits and outcomes. Having the ability to create and execute a successful AI vision and strategy is a critical part of pursuing AI initiatives.

The actual format of the solution strategy is less important than the strategy itself. We can materialize it as one or more documents, diagrams, whiteboards, or any other format as long as it serves its purpose and gets the job done. A prioritized roadmap, on the other hand, should have a well-defined format, as we’ll later discuss.

From a product strategy, design, and development methodology perspective, I use my own version of a model that some refer to as the Five Ds for describing the iterative and end-to-end process of building successful AI products and product features. Figure 3-7 shows the stages of the Five Ds.

Note that actually performing some of the work outlined by the Five Ds (e.g., design, develop, deliver) is covered by certain downstream methodology phases of AIPB, so the purpose of the Five Ds during the strategy phase of AIPB is to develop a plan for successfully executing these downstream phases.

Figure 3-7. The Five Ds

To create a solution strategy as part of your AI strategy, answer the following questions for each stage of the Five Ds. They are posed in the context of the AI solution defined by your vision.

Discover:

- What are the why’s, goals, needs, and/or pain points?
- How does the solution create better human experiences and business success?
- Who are the stakeholders and beneficiaries of the solution around which your vision is based?
- What are the solution’s business and individual use cases?
- What does the potential market look like (market analysis) and how is your solution differentiated from your competitors (competitor analysis)?
- How can you ensure that your solution will achieve the intended benefits for both people and business?
- When applicable, how can you ensure that the solution is highly delightful, usable, and sticky (see Chapters 7 and 8)?
- How complete will the solution be: design prototype, PoC/pilot/MVP, or full solution?

Define:

-

What data will be used? Does the data already exist? Do you need to generate any new data? If yes, by what mechanism (e.g., augmentation methods, IoT)? Are there any external data sources that would be beneficial (through purchase or that are publicly available)?

-

Who owns each of the data sources and what steps are necessary to get access?

-

What data pipeline (access, ingestion, ETL, processing, integration, storage), if any, is required?

-

How will the data be prepared (cleaned, validated, transformed, labeled) for your solution?

-

What platform or tools (e.g., Amazon Web Services [AWS] or Google Cloud Platform [GCP]) will be used to train, validate, and optimize models or perform any other AI and machine learning tasks?

-

What software programming languages, architecture, and technologies are required for your solution (tech stack)? This includes firmware and embedded software such as those involved in IoT, and edge and fog computing.

-

What hardware, materials, and manufacturing are required for your solution?

-

What are the software and hardware requirements for your solution (both functional and nonfunctional) on which all designs and working software/hardware will be based? Note that software requirements should follow an Agile approach, whereas hardware requirements should be more waterfall-esque because, as they say, measure twice, cut once.

Design:

-

What software and hardware designs are required for your solution? This includes visual designs, mechanical designs, and any other assets associated with designing a UX (e.g., information architecture, interaction and flow design, user journey, use cases) or physical object.

-

What technical diagrams, charts, graphics, or schematics are needed?

Develop:

-

What hypotheses do you have, and with what AI and machine learning software, algorithms, and techniques will you explore and experiment? Remember, this is scientific innovation.

-

How will you turn all requirements and designs into a high-quality, successful reality?

-

How will you ensure that you follow software and hardware development best practices?

-

What Agile software development methodology will you use (e.g., Lean, Kanban, Scrum)?

-

What Continuous Integration/Continuous Delivery (CI/CD) and general release mechanisms will you use?

-

What version control technology (e.g., Git) and branching strategy will you use?

-

How will you test everything to ensure maximum quality?

-

How will you test usability and gauge other aspects of the UX?

-

How will you measure success, what metrics will you use, and what tracking mechanisms must you build into the solution?

Deliver:

-

How will you deploy your solution to a production environment so that it is being used by actual beneficiaries in the real world?

-

How will you monitor the health of the solution and address any issues?

-

How will you continue to learn from new data and make data-driven improvements to the solution over time?

-

How will you ensure that the intended benefits of the solution are being realized by both people and your business (efficacy)?

-

How will you monitor the efficacy of the solution and address any issues (e.g., stale models; aka model drift)?

-

How will you scale the solution as needed, and ensure that all other nonfunctional requirements are being met?

Your solution strategy is all about making your AI vision a successful reality. This begins by performing discovery in order to guide the prioritization and definition of the best solution (prioritized roadmap), which then guides how you design, build, deliver, monitor, and improve the solution.

Note that some of the discovery questions given here were also included in our discussion of developing your AI vision. There is some overlap. The initial discovery and understanding from the higher-level vision phase will guide your strategy development and increase granularity in terms of discovery and definition.

From a product management perspective, a product vision and strategy are manifested as a prioritized product roadmap that consists of a plan to deliver a working solution through continuous delivery (CD), product releases, or both. The product roadmap can be either time constrained, based on product development team estimates, or rough, and not necessarily based on fixed time and effort estimates.

The latter is more appropriate with increasing levels of pioneering, innovation, and uncertainty (i.e., scientific innovation) and depends on your company’s degree of AI readiness and maturity. Statistics- and probability-based technologies are scientific, empirical, and nondeterministic by nature, and this certainly applies to using state-of-the-art and emerging AI techniques.

Developing an end-to-end, AI-based innovation strategy in the forms of a solution strategy and prioritized roadmap requires expertise across most functional areas of a business. This means that all AIPB expert categories are applicable as needed, with oversight and management as the responsibility of the manager group of experts (especially product managers given the prioritized roadmap output).

For the AIPB methodology strategy phase, and from the AIPB process categories, I recommend the following:

-

Ideation and vision development

-

Business and product strategy

-

Roadmap prioritization

-

Requirements elicitation

-

Product design

I provide an example of creating an AIPB strategy in Chapter 14.

Now let’s move on to discussing the final three phases of the AIPB methodology, beginning with executing the strategy and actually building the solution. Because these three phases are more tactical in nature, we cover them only at an overview level here; the remainder of the book focuses on developing an AI vision and strategy, subjects that are better suited to the book’s target audience.

Build

The build phase centers on software, hardware, and analytics product development, and includes design, development, and testing at a high level. It assumes all discovery and definition work has been completed and a prioritized roadmap is available to guide the build phase process.

In reality, all phases discussed so far tend to be iterative, and as much as people try, some requirements, use cases, and other defining aspects are usually missed or not fully understood at the outset (Agile, not waterfall). This means that some amount of discovery and definition might continue during the build process. Also, and most important, I recommend an Agile approach where the Five Ds process is carried out on a more granular (feature-level), as-you-go basis, as opposed to performing discovery and definition for an entire roadmap at once (which is more waterfall). A competent product manager and product development team can facilitate this process, and Kanban is my recommended development methodology for this phase.

After you have developed a prioritized roadmap and Agile requirements, design, development, and testing work should follow. Requirements should include both functional and nonfunctional requirements. Functional requirements specify how the solution should work, look, and feel, whereas nonfunctional requirements specify solution requirements around scalability, reliability, and maintainability, for example.

Testing includes software, quality assurance (QA), and user acceptance testing (UAT). Note that any development that does not require designs (e.g., data preparation, exploratory analytics, predictive modeling) can begin in parallel with design work on other features.

All experts are involved in the build phase of AIPB—managers, designers, builders, testers, and scientists—with manager involvement centered mostly on leadership, oversight, and collaboration. Product-related roles such as product manager represent the primary management roles from the managers group of experts for the build phase, although other stakeholders in management will continue to participate in the build process through demos, feedback gathering, and status updates.

For the AIPB methodology build phase, and from the AIPB process categories, I recommend the product design and product development categories. Methods, techniques, and best practices associated with these two tend to evolve or change relatively often over time, so the experts involved should make decisions and provide guidance accordingly.

The output of the build phase is a testable solution that represents either part of the solution or the entire solution. The output can be from one or multiple categories such as designs, software, hardware, models, and algorithms. The key point is that the output is in a testable state.

Testable design outputs include all relevant design assets such as wireframes, mockups, interactive prototypes, and flow diagrams. Software and hardware outputs include working functionality that can be executed and demonstrated to stakeholders. Analytics outputs can include insights, data visualizations, reports, descriptive analytics, predictive models, or other AI-powered software, models, and engines (e.g., recommendations, natural language processing).

As soon as each roadmap item is built in accordance with the requirements and is successfully tested for maximum quality, it is delivered, either continuously (continuous delivery) or as a release, which we discuss next.

Deliver

The AIPB deliver phase is about delivering high-quality, working solutions to a production environment; that is, an environment in which actual beneficiaries (business, users, customers) will take advantage of them. In the case of automation, the solution is deployed to automate a real process involving real-world data.

Deploying working solutions to production environments involves specialized experts and processes. As in the build phase, the result of the deployment process must meet both functional and nonfunctional requirements. Functionally, the solution must look and work as expected after it’s deployed. This is usually verified with certain production tests (e.g., smoke tests, QA, UAT). Nonfunctionally, the solution must successfully operate under the real-world conditions to which it will be subjected; for example, the solution must be able to scale as needed and remain highly available.

The experts involved in the deliver phase of AIPB include mostly builders and testers. For the AIPB methodology deliver phase, and from the AIPB process categories, I recommend the product development and product evaluation, validation, and optimization categories. As in the build phase, experts should employ the latest methods, techniques, and best practices.

The output of the deliver phase is a working solution. It will likely be minimalistic for new innovations, and represent an MVP, PoC, or pilot. I would recommend an Agile and Lean approach as a key strategy in order to ensure iterative validation, pivots, and success as quickly as possible. This means building an MVP first to test the riskiest assumptions, validate product-market fit, ensure that the solution actually accomplishes its goals, and better understand aspects of the UX such as usability and delight (concepts we discuss later in the book). You can continue to build on and improve the MVP with subsequent updates.

The deployed solution should have active health monitoring, logging, and tracking (e.g., tagging, event capture) with which to detect issues and perform analytics. Monitoring and analytics should provide data-driven insights into the health of the solution, whether the solution is providing the intended benefits (to both people and business!), whether and to what extent it is achieving the intended KPIs (e.g., success metrics, ROI), and whether all nonfunctional needs are being met (e.g., scalability).
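As a rough illustration of the tracking and KPI analysis just described, the following Python sketch logs usage events as JSON lines and derives a simple click-through KPI from them. The logger name, the event names, and the `track` and `click_through_rate` helpers are all hypothetical and chosen only for illustration; a real deployment would more likely rely on a dedicated analytics or monitoring platform.

```python
import json
import logging
import time
from collections import Counter

# Hypothetical logger name; in practice this would feed your logging pipeline.
logger = logging.getLogger("solution.events")


def track(event: str, **properties) -> str:
    """Serialize one usage event as a JSON line, log it, and return the line."""
    record = {"event": event, "ts": time.time(), **properties}
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line


def click_through_rate(lines, shown="recommendation_shown",
                       clicked="recommendation_clicked") -> float:
    """Compute clicks divided by impressions from captured event lines."""
    counts = Counter(json.loads(line)["event"] for line in lines)
    return counts[clicked] / counts[shown] if counts[shown] else 0.0


# Usage: capture a few events, then compute the KPI from them.
captured = [
    track("recommendation_shown", user="u1"),
    track("recommendation_shown", user="u2"),
    track("recommendation_clicked", user="u1"),
]
rate = click_through_rate(captured)
```

Keeping events as structured records, rather than free-form log text, is what makes it practical to compute KPIs from them later.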

One specific and AI-related item to closely monitor is stale models and model drift. Markets, trends, fads, environments (e.g., economic), competitors, interests, and people change constantly. Data changes constantly as a result, which means that models trained and optimized to a certain performance level on yesterday’s data might not perform well on tomorrow’s data. AI-based solutions typically require ongoing retraining and improvement; or, put another way, continued data gathering, knowledge development, and ongoing learning. This is best facilitated by developing a data feedback loop in which new data generated by the solution is regularly fed back into the system for updated learning and performance improvement.
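One common way to watch for this kind of drift is to compare the distribution of a model input or score in recent production data against the distribution seen at training time. The sketch below uses the population stability index (PSI) for that comparison. It is one possible technique rather than a method prescribed by AIPB, and the function names, the bin count, and the 0.25 alert threshold (a widely quoted rule of thumb, not a guarantee) are assumptions.

```python
import math
from typing import Sequence


def population_stability_index(reference: Sequence[float],
                               recent: Sequence[float],
                               bins: int = 10,
                               eps: float = 1e-6) -> float:
    """PSI between a reference (training-time) sample and a recent sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate case: all reference values are identical

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def needs_retraining(reference, recent, threshold: float = 0.25) -> bool:
    """Flag the model for retraining when drift exceeds the assumed threshold."""
    return population_stability_index(reference, recent) > threshold


# Usage: yesterday's data versus data that has shifted upward.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]
drifted = needs_retraining(training_scores, production_scores)
```

A check like this could run on a schedule as part of the data feedback loop, with a positive result triggering retraining on the newly gathered data.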

After you deploy a solution to a real-world environment and are continuously monitoring and analyzing it, as discussed, you need to direct your attention to optimization, the topic of the next section.

Optimize

The optimize phase is the final phase of the AIPB Methodology Component. After you have delivered an AI-based solution with appropriate monitoring and tracking, and you have determined that the solution is worth developing further (e.g., to achieve product-market fit), you should optimize it for better performance and user delight.

Health monitoring and logging should indicate whether any functional or nonfunctional issues need to be fixed or optimized. You should regularly address any signs of stale models and model drift with ongoing learning and performance optimization using a prebuilt data feedback loop. You should regularly analyze KPIs and metrics, both to determine the efficacy of the solution relative to the intended vision and goals and to discover patterns and insights that indicate areas of further improvement and lift. You should also regularly solicit, capture, and analyze user, customer, and stakeholder feedback in order to further improve and optimize the solution.

Experimentation and testing are also powerful optimization tools that carry the added benefit of helping to determine cause and effect. Correlation does not imply causation, and predictive models are usually unable to uncover all causes leading to specific effects or outcomes. Experimentation techniques such as A/B and multivariate testing are well suited both to continued optimization through strategic experiments and to gaining a deeper causal understanding. These techniques can also be used to compare the efficacy of different AI models and, more generally, to compare AI solutions against the status quo.
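To make the A/B comparison concrete, here is a minimal sketch of a two-proportion z-test, one standard way to judge whether the difference in conversion rate between a control group (the status quo) and a treatment group (say, a new AI model) is likely to be more than chance. The function name and the example counts are hypothetical, and the test assumes reasonably large, independent samples.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the conversion rates of B versus A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Usage: status quo (A) versus AI-powered variant (B), with made-up counts.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
significant = p < 0.05  # assumed significance level
```

Because users are randomly assigned to each variant, a significant result supports a causal reading of the difference, which is something a predictive model fit to observational data cannot offer on its own.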

Finally, AI and machine learning are rapidly evolving and advancing fields. This is true in all aspects, including research, software, algorithms, and hardware (e.g., deep learning optimized processors such as Graphics Processing Units [GPUs] and Tensor Processing Units [TPUs]). Optimization of AI and machine learning deployments is not only related to better model performance (e.g., predictive accuracy), but also to hardware and training cost reductions, increases in training speeds, reduced computational complexity and resources required, and faster and automated analytics.

The experts involved in the optimize phase of AIPB include everyone. The optimize phase is based on literally everything upstream of it, and any and all aspects can likely be optimized or at least improved. This includes potential involvement of all of the process categories and associated methods from the AIPB process categories. In fact, I’m a huge proponent of kaizen, a Japanese word for improvement that has come to mean continuous improvement and was made famous by the Lean manufacturing movement and the renowned Toyota Production System (TPS).

The output of the optimize phase is a solution whose intended benefit and outcome performance is well understood and continuously improved in all possible ways; for example, goals, KPIs, lift, user delight, benefits, and performance. Put another way, the outputs are effective analytics and data-driven continuous optimizations.

The Flipped Classroom

Recall that the unique value of AIPB lies in its North Star, benefits, structure, and approach. We’ve already discussed the North Star, benefits, and structure, so now let’s discuss the recommended approach.

There are many collaborative and interactive techniques (e.g., brainstorming, prioritization) that are employed by skilled educators and practitioners of various disciplines, particularly very good product managers. One method I especially recommend when executing AIPB, and in general, is the concept of a flipped classroom.

The flipped classroom “flips” the traditional in-class versus out-of-class work, and has students review instructional material (e.g., lectures, readings) outside of class (i.e., the time traditionally used for doing homework, research, and project work) and often online. This allows class time to be much more productive and effective through discussions, collaboration, projects, and other forms of actual “doing” as opposed to just listening and being lectured at. At the risk of sounding obvious, actually doing gets things done, and provides better learning, experience, and understanding.

With the proper approach, this is easily translated to work environments, as well. Rather than schedule a meeting and spend a good part of it explaining methods, techniques, and subject matter details, have attendees review all of that beforehand so that the meeting is purely focused on getting things done. For example, if the goal is to create a prioritized roadmap of goal-aligned initiatives, have attendees and participants review the prioritization concepts and techniques to be used ahead of time. You can answer any questions people have up front, but the majority of collaboration time should be actual selection and prioritization, with the output being a prioritized roadmap that will drive next steps.

Additionally, people regularly send meeting invites to colleagues with absolutely zero description, agenda, or any other information that would otherwise assist them in understanding the purpose and goals of the meeting. Part of the flipped classroom approach here is to never do that. There’s a great TED talk by David Grady that goes over this called “How to save the world (or at least yourself) from bad meetings.” Always let people know exactly what the purpose of the meeting is, what you’ll be covering, the agenda, what the outcomes should be, and anything else that’s relevant. This matters.

Lastly, people might argue that they’re busy and don’t have enough time outside of the meeting to review suggested materials, or might simply not do their part beforehand. Those who have not reviewed the materials in advance are usually obvious based on their questions or lack of understanding of what is to be done and the goals of the session. In the end, you can’t force people and maybe they are truly too busy, but the end result is what is too often seen in the real world: unproductive and ineffective meetings, time wasted, slow progress, and sometimes failure. This might require a cultural shift, but it’s worth it.

Summary

AIPB is a unique AI-based innovation framework that emphasizes the benefits of innovation for both people and business. It has many benefits as discussed, and these benefits combined with the framework’s unique North Star (better human experiences and business success), structure, and approach are its key differentiators. AIPB is meant to be a one-stop shop for AI vision, strategy, execution, and optimization for maximum success.

AIPB requires that the appropriate experts are included and collaborate effectively throughout all phases of end-to-end AI-based innovation. Each phase is modular in that the process categories and associated methods employed should be those recommended either by me or by the experts involved, based on their experience, expertise, and current best practices. The outputs of each phase should drive innovation toward the end goal, which is AI-powered better human experiences and business success. For the latest AIPB information and resources, visit https://aipbbook.com.

Now let’s turn our focus to understanding AI and machine learning at a high and nontechnical level, followed by a close look at the ways that AI is being used in the real world today and the vast potential it presents for tomorrow.

1 For example, scrum master, product owner, team member, and stakeholder. These roles are meant to bring together the voice of the business, voice of the customer (or user), domain expertise, and technical expertise when working collaboratively on a scrum team. This collaboration is used to determine the desirability, viability, and feasibility for any given product or product feature and to execute the Agile product development process itself.

2 For example, technical feasibility and sprint retrospectives.

3 For example, sprint planning (tasking and estimation), daily scrum (aka daily standup), sprint review (software demo and feedback gathering), and sprint retrospective.

4 For example, product roadmap, product and sprint backlogs, and a release plan.