CHAPTER 7

Storage and Archiving in the AWS Cloud

In this chapter, you will

• Learn how Amazon Elastic Block Storage (EBS) provides block-level storage volumes for use with your EC2 instances

• See how Amazon Simple Storage Service (S3) provides object storage in the AWS cloud

• Understand Amazon S3 Glacier, a storage service optimized for infrequently accessed data that’s ideal for data archiving and backup storage

• Learn how Amazon Elastic File System (EFS) provides scalable file storage for your EC2 instances

• Learn about Amazon Snowball, a service that helps you transport large quantities of data to and from AWS with the help of an AWS-provided storage appliance

• Learn about the Amazon Storage Gateway, a service that connects your on-premise software appliances with AWS S3 storage

This chapter discusses the various storage options offered by AWS. These storage options are wide ranging—from object storage in the cloud, to an elastic file system that lets you access an AWS-based file system as a local file system.

Overview of AWS Storage Options

AWS offers a broad range of storage options that offer plenty of flexibility. You can move between various storage tiers and storage types at any time. Your goal is to choose the AWS storage option that suits your organization’s needs, including cost, availability, durability, and performance considerations. Before you consider the various storage alternatives offered by AWS, you must identify your storage requirements.

Identifying Your Storage Requirements

There’s no one AWS storage type that suits all workloads. Different storage types are geared toward different purposes, such as durability, speed of access, cost efficiency, and security. You must therefore understand the performance profiles of your workloads and choose a specific storage type that matches each type of workload that you manage.

Following are the key factors that determine the type of storage required for a workload:

• Performance You can measure performance in terms of throughput, or IOPS (I/O per second). Throughput (measured in megabytes per second) is the amount of data transferred in a set time period; IOPS relates to the latency or speed of data access. Some workloads require a high throughput, while others require a high IOPS. AWS offers storage types that are optimized for either, so you can pick the right storage for your needs.

• Availability This criterion measures the ability of the storage volumes to return data when it’s requested.

• Frequency of data access AWS offers storage types designed for frequent, less frequent, or infrequent access to data.

• Data durability If your data is critical and must be stored indefinitely, you must choose the storage type that meets these requirements. Transient data such as clickstream and Twitter data, for example, doesn’t require high durability, because it’s not critical data.

• Data sensitivity Some types of data are critical from a business point of view, and others are critical from a security or regulatory point of view.

• Cost of storage Probably one of the most important criteria in choosing the storage type is the cost of storing data. Your storage budget, the importance of the data that you store, and the speed with which you must retrieve the data are pertinent factors in evaluating the storage cost factor.

Choosing the right AWS storage service means that you evaluate these key storage-related factors, keeping in mind your budget, compliance and regulatory requirements, and your application requirements for availability, durability, and performance of the data storage.

AWS Storage Categories

AWS offers three basic storage service categories: object, block, and file. Each of these storage services satisfies a different set of storage requirements, thus providing you a way to choose the storage service, or services, that work best for you.

Object Storage

Amazon S3 is an all-purpose object storage option that offers the highest level of data durability and availability in the AWS cloud. Object storage means that the files that you store in this type of storage are saved as single objects, and not as data blocks. If you store a file sized 40MB, it’s saved as a single, 40MB-sized object in S3.

As you’ll learn in the next section, block storage is provided as mountable storage volumes that you can format and use. Object storage is completely different from block storage in this regard: With block storage, you don’t have direct access to the object storage volumes in S3; instead, you use APIs to make calls to the S3 service to read, write, update, or delete data that you store in S3 buckets. (I describe buckets and the objects that you store in them in the sections “Buckets” and “Objects,” later in the chapter.)

As with most AWS object storage types, S3 is a regional service. The data that you store in S3 is automatically replicated across several data centers in different availability zones (AZs). This is a true advantage of S3 storage, because if you had to provide the same level of data durability on your own, you’d need to store your data in multiple data centers.

A key difference in the way you work with S3 and EBS (block storage) is that with S3, there’s no need for you to provision the storage. Use whatever storage you need, and AWS bills you for that storage volume. This is much simpler than provisioning several EBS volumes ahead of time and having to pay for all those volumes, regardless of your usage of those volumes.

S3 comes in three storage tiers for hot, warm, and cold data. To optimize your storage costs, you can move data between the three storage tiers based on how your data changes over time. Hot data is data that your applications and services request quite frequently. Cold data is data that you access infrequently or rarely (such as archived data and backup data). Warm data falls in between the hot and cold data types, in terms of cost and the speed of access. The hotter data costs the most to store but is much faster to access. The reverse is true of the coldest data—it’s the cheapest to store but slowest in access time.

S3 is ideal for storing unstructured data such as media content. Here’s how the three S3 storage tiers differ:

• Amazon S3 Standard Delivers low latency and high throughput and is ideal for storing frequently accessed data, such as cloud applications, gaming, dynamic web sites, and data analytics.

• Amazon S3 Standard – Infrequent Access (Amazon S3 Standard – IA) Ideal for less frequently accessed data, such as backup data. It’s cheaper than S3 Standard, but it also costs more to retrieve or transfer data.

• Amazon Glacier Ideal for storing data long-term—for example, storing data for meeting compliance and regulatory requirements. There are various methods to retrieve data from Glacier that differ in their speed and cost of access. You can’t retrieve data that you store on Glacier immediately; retrieval can take a few minutes to several hours. So use Glacier when you don’t need to retrieve the data often and you can afford to wait a long time to view it. In addition, content can’t be searched or served directly from Glacier storage, unlike other S3 storage types.

Here’s the pricing structure for S3 storage as of February 2019, based on the US East (N. Virginia) prices (costs may vary across AWS regions):

As you can tell, there’s an inverse relationship between the speed of access and its cost. AWS charges you only for usage of S3 storage, so it doesn’t bill you on the basis of your provisioned S3 storage. Take advantage of this by using S3 wherever possible.

Block Storage

Amazon EBS is block-level storage that you can use with EC2 instances. Files are stored in block storage volumes (similar to hard drives), and data is divided into chunks, or blocks, of a certain size, such as 512-byte blocks. A file of 20KB in size could be chunked into 40 blocks, for example. The OS tracks each of the data blocks independently of the other blocks.

The block storage that your provision with EBS is in the form of disk drives that are mountable to EC2 instances. The drives come unformatted, and you can format them and specify their block sizes. Although the Amazon Machine Image (AMI) you may use determines things such as the block size, you can make changes to a drive by specifying attributes such as the format and the file size of the disk drives.

An EBS volume can be a boot volume or a data volume. You can make the same EBS volume serve as both a boot and a data volume, but this means that you’ll need to shut down the instance when resizing the volume. Many EC2 servers are stateless, so there’s no need to maintain separate boot and data volumes for those instances.

Although a boot volume (root device volume) is automatically destroyed when you terminate an instance, additional EBS volumes that you’ve attached to an instance (data volumes) are independent of the instance lifecycle, and thus stay intact after instance termination if the Delete on Termination option is not selected. Once you attach the block storage to an instance, you can copy, share, resize, restore, and detach the drives as you’d do with any other disk drive.

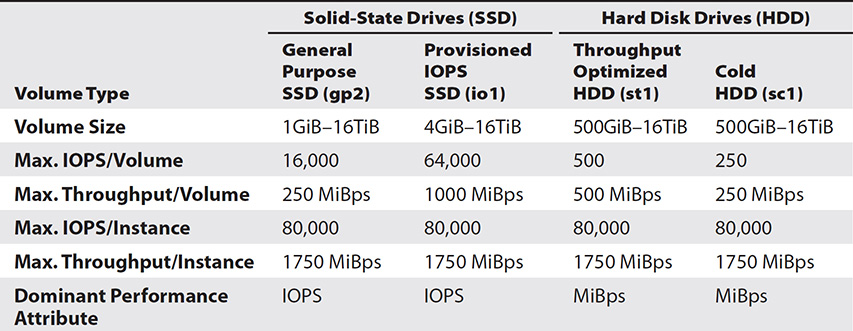

EBS storage is meant for storing data that requires both long-term persistence and quick access. There are two types of block stage: solid-state drive (SSD) and hard disk drive (HDD). Here are the differences between the two EBS block storage types:

• SSD storage is best for transactional workloads, where you need high performance with a high IOPS. You can choose from the following SSD types:

• SSD Provisioned IOPS SSD (io1) If your workloads are latency sensitive and require a minimum guaranteed IOPS, the io1 SSD volumes are right for you. These storage volumes require you to pay separately for the Provisioned IOPS you ask for.

• EBS General Purpose SSD (gp2) The gp2 SSD volumes offer a cost and performance balance and are meant for general use where you don’t need high levels of Provisioned IOPS. The gp2 volumes cost less than the io1 volumes.

• HDD storage disks are designed for throughput-heavy workloads such as data warehouses. As with Provisioned IOPS SDD, you have two choices:

• Throughput Optimized HDD (st1) The st1 volumes are optimized for frequently accessed workloads.

• Cold HDD (sc1) The sc1 volumes are designed for infrequently accessed workloads.

Here’s the pricing structure for EBS storage as of February 2019, based on the US East (N. Virginia) prices:

Not only is EBS Provisioned IOPS storage more expensive, but you pay for the EBS volumes that you provision, regardless of the extent of your usage. Therefore, you must consider using these volumes only if your applications strongly demand the Provisioned IOPS.

File Storage

Amazon EFS provides scalable file storage for your EC2 instances without your having to provision storage. Big data, content management, and web serving applications can benefit from EFS file storage. Its fast file synchronization capability enables you to synchronize your on-premise files to EFS at speeds that are up to five times faster than normal Linux copy mechanisms.

In the same N. Virginia region as in the previous two cases (S3 and EBS), EFS storage costs you $0.30 per GB-month. EFS is, therefore, generally more expensive than EBS and S3, but you don’t need to provision storage; instead, you can simply use EFS for scalable file storage.

In Chapter 3, I discussed the instance store as a storage option. The instance store is also a block storage type but is often referred to as ephemeral storage because it’s best for temporary work, not for persistent storage such as EBS. Instance storage, unlike EBS storage volumes, isn’t separate from the instance. It’s located on the same hardware as an instance and its cost is included in that of the instance per hour. This means that when you stop the instance, the instance volume isn’t available and all data on it is lost. You can run a root volume on the instance store instead of an EBS volume, but if you do, you can’t really stop the instance, as a stop terminates the instance.

Unlike EBS volumes, which you can attach to all EC2 instance types, some instance types don’t support instance stores; they support only EBS volumes. Also, unlike EBS volumes, you can choose an instance store–based storage volume only when creating an instance, but not later. Finally, any encryption that you want to perform for the data on instance store volumes is at the OS level, and you’re fully responsible for it.

Now that you have a basic idea of the various AWS storage options, it’s time to dive into the details of each of the key storage choices.

Amazon Elastic Block Storage

Amazon Elastic Block Storage (EBS) provides block storage volumes that you can attach to your EC2 instances. You can use the volumes like any other physical hard drives. The EC2 instance can format the EBS volume with a file system such as ext3 or ext4 and use the volume as it would any local physical drive. Once you attach the EBS volumes to an EC2 instance, they persist independently from the instance, meaning that they’ll remain intact after you terminate the instance, unlike instance store volumes, which are ephemeral.

AWS recommends that you use EBS volumes when you require quick access and want to store data for long periods. Thus, EBS volumes work well for storing file systems and databases, and they are the best choice when you need raw, unformatted block-level storage. EBS volumes are appropriate for applications that perform random reads and writes and for throughput-heavy applications that perform massive continuous reads or writes.

An EBS volume and the EC2 instance to which you attach the volume must belong to the same AZ. You can attach multiple EBS volumes of varying sizes to an EC2 instance, but you can attach an EBS volume only to one EC2 instance at any time.

Benefits of Amazon EBS Storage

EBS storage volumes offer several benefits:

• Persistent storage EBS volumes live independently of the EC2 instance to which you attach the volumes. However, you continue to pay for the volumes even if you terminate the instance. Data on an EBS volume stays intact through instance starts and terminations. The data remains persistently on the volumes until you delete the data. When you delete an EBS volume, AWS will zero-out (wipe out all data from the disk) the volume before assigning it to other AWS accounts. By default, the volumes are detached from an instance upon instance termination, and you can reattach them to another EC2 instance, if you want.

• Dynamic changes If you use a current generation EBS volume by attaching it to a current generation EC2 instance type, you can increase the instance size, the Provisioned IOPS capacity, and the instance type while the volume continues to be attached to the EC2 instance.

• Snapshots for backups You can create backups of EBS volumes in the form of snapshots and store the snapshots in Amazon S3 in multiple AZs. You pay for the S3 storage charges based on the EBS volume size. EBS backups are incremental backups, which means that for newer snapshots, you pay only for the data that’s beyond the volume’s original size.

• Replication AWS automatically replicates an EBS volume in the AZ in which you create the volume.

• Encryption You can encrypt your EBS volumes to satisfy various data-at-rest encryption requirements or regulations. The snapshots that you make of an encrypted EBS volume are automatically encrypted. To protect your sensitive data, it’s a best practice to encrypt data or use EBS-encrypted volumes.

Enhancing EBS Performance and Best Practices

For best performance, AWS recommends that you use an EBS-optimized instance. EBS optimization offers the best performance for EBS volumes by minimizing the network contention between EBS I/O and the other traffic flowing to and from an EC2 instance. Depending on the EC2 instance type, an EBS-optimized instance provides a dedicated bandwidth that’s between 425 Mbps and 14,000 Mbps.

You can choose from various EBS volume types, each of which offers a performance delivery that’s guaranteed to be very near to its baseline and burst (when workload is heavy) performance if you attach it to an EBS-optimized instance. For instance, General Purpose (gp2) EBS volumes will deliver performance that’s within 10 percent of their baseline and burst performance, 99 percent of the time, throughout a year. So if you want guaranteed disk performance to meet the needs of your critical applications, use EBS-optimized EC2 instances.

In addition to configuring EBS-optimized instances, you may want to consider following these best practices for getting the most of your EBS storage volumes:

• Instead of attaching just a single EBS volume to an instance, consider stringing together multiple EBS volumes in a RAID 0 configuration to take advantage of the maximum bandwidth for the instance.

• For the st1 and sc1 EBS volume types (described in the following section), consider increasing the read-ahead setting to 1MB to achieve the maximum throughput for your read-heavy workloads. This setting is ideal for workloads with large sequential I/Os (but detrimental to workloads that consist of many small, or random, I/Os).

• If you create an EBS snapshot from an EBS backup, it pays to initialize the volume ahead of time by accessing all the blocks in the volume before making the volume accessible to users and applications. This strategy avoids a significant latency hit when an application first accesses a data block on the restored EBS volume.

EBS Volume Types

Amazon EBS offers the following EBS volume types:

• General Purpose SSD (gp2)

• Provisioned IOPS SSD (io1)

• Throughput Optimized HDD (st1)

• Cold HDD (sc1)

• Magnetic (standard, a previous-generation type)

I discuss the first four volume types in this chapter. These four EBS volume types differ in performance and price, and you should choose the volume that best fits your performance and cost requirements. The main difference among the instance types is their price/performance ratio.

Throughput and I/O per second (IOPS) are the key performance attributes of a disk. Throughput is the rate at which data is processed. For the two HDD volume types, which are throughput oriented, the dominant performance attribute is mebibyte per second (MiBps). IOPS are a unit of measure (the operations are measured in kibibytes, or KiB) representing input/output operations per second. For the two SSD volume types, the dominant performance attribute is IOPS.

The four volume types fall under two broad categories:

• SSD-backed volumes For workloads that involve heavy read/write operations of small I/O size and whose primary performance attribute is IOPS. They can offer a maximum of 64,000 IOPS, whereas the HDD storage type can offer 500 IOPS at best.

• HDD-backed volumes For large workloads where high throughput (MiBps) is more important than IOPS.

Before we delve into the performance details of the four types of EBS volumes, you need to understand two key concepts that play a role here: the bucket and credit model, which involves burst performance, and throughput and I/O credits.

Bucket and Credit Model: I/O and Throughput Credits and Burst Performance

When evaluating the four volume types, you should consider two important interrelated concepts: burst performance and I/O and throughput credits, which together are referred to as the bucket and credit model. Of the four volume types, three instance types—gp2 (General Purpose SSD), st1 (Throughput Optimized HDD), and sc1 (Cold HDD)—use the bucket and credit model for performance.

The performance of a volume depends directly on the size of the volume. The larger the volume, the higher the baseline performance level in terms of IOPS or throughput, depending on the type of disk. Often, a disk volume is expected to support a sustained increase in IOPS or throughput required by the workload. For example, a database may require a high throughput when you’re performing a scheduled backup. You obviously don’t want to pay the cost of a more powerful volume type just because your workload may spike at times. Ideally, your volumes should be able to support the higher throughput or IOPS for short periods of time by being able to burst through their provisioned performance levels when the workload demands it.

AWS provides I/O and throughput credits to enable a volume to burst occasionally through its baseline performance. I/O and throughput credits represent the ability of a volume to acquire additional capability to burst beyond its baseline performance to reach the higher performance level that you require for a short period. There is a maximum on the number of IOPS or throughput for the burst performance. Each volume receives an initial I/O credit balance and the volumes add to their I/O at a certain rate per second, based on the volume size.

For a volume type with IOPS as the dominant performance attribute (such as gp2), burst performance provides the ability to go above their minimum IOPS for an extended period. Once the volume uses up its credit balance, the maximum IOPS performance stays at the baseline IOPS performance level. At this point, the volume starts acquiring (earning) I/O credits. The IOPS performance of the volume can again exceed the baseline performance if its I/O demands fall below the baseline level, and as a result, more credits are added to its I/O credit balance. Larger drives accumulate I/O credits faster since their baseline performance is higher.

For a volume type with throughput (MiBps) as its dominant performance attribute (such as st1), burst performance means the volume can support higher throughput than the baseline throughput of the volume. Once a volume uses up all its I/O or throughput credit balance (the maximum IOPS or throughput), the volume reverts to its base IOPS or throughput performance.

Now that you’ve learned the key concepts of throughput credits and burst performance, you’re ready to learn the details of the four types of EBS storage volumes. It’s easy to analyze the performance characteristics of the various volume types by focusing on the SSD and HDD drives separately. As I go through the various volume types, I describe the bucket and credit model in greater detail where it’s relevant.

Hard Disk Drives

HDD-backed volumes are throughput optimized and are ideal for large workloads where you are focusing on throughput rather than IOPS. There are two types of HDD: Throughput Optimized HDD (st1) and Cold HDD (sc1).

Throughput Optimized HDD (st1) Throughput Optimized HDD storage provides low-cost HDD volumes (magnetic storage) designed for frequently accessed, throughput-heavy sequential workloads such as those you encounter when working with data warehouses or big data environments such as a Hadoop cluster. You measure performance for a st1 volume in terms of throughput, and not IOPS. Throughput Optimized HDD volume sizes range from 500GiB to 16TiB.

As with the gp2 volume type (discussed in the following sections), st1 volumes rely on the burst bucket performance model, with the volume size determining both the baseline throughput, the rate at which throughput credits accrue, and the burst throughput of the volume. Remember that an HDD volume (such as st1) bases its bursting on throughput (measured in MiBps), and not on IOPS, as an SSD volume (such as gp2), which uses IOPS as the criterion for bursting.

You can calculate the available throughput of an st1 volume with the following formula:

Throughput = (size of the volume) × (credit accrual rate per TiB)

Let’s take, for example, a small st1 volume, sized 1TiB. Its base throughput is 40 MiBps, meaning that it can fill its credit bucket with I/O credits at the rate of 40 MiBps. The maximum size of its credit bucket is 1TiB worth of credits. You can calculate the baseline throughput of a 5TB-sized volume thus:

Throughput = 5 × 40 MiBps / 1TiB = 200 MiBps

The baseline, or base throughput, ranges from 20 MiBps to 500 MiBps for volumes ranging from 0.5 to 16TiB. The maximum of 500 MiBps is reached by a volume sized 12.5TiB:

12.5TiB × 40 MiBps / 1TiB = 500 MiBps

Burst throughput starts at 250 MiBps per tebibyte (TiB) and is capped at 500 MiBps. You will hit the cap at a volume size of 2TiB:

TiB × 250 MiBps / 1TiB = 500 MiBps

Figure 7-1 shows the relationship between st1 volume size and throughput. You can see that both burst throughput and base throughput max out at 500 MiBps.

Figure 7-1 Relationship between an st1 volume’s size and its throughput

Cold HDD (sc1) Cold HDD (sc1) offers the lowest-cost HDD volumes; it’s designed for workloads that you access infrequently. These volumes are ideal where cost, rather than the access speed, is your main objective.

The sc1 type volume ranges in size from 0.5TiB to 16TiB. The sc1 volume type uses a burst-bucket performance model and works like the st1 model discussed earlier. For a small 1TiB sc1 volume, the credit bucket fills at the rate of 12 MiBps, with a maximum of 1TiB of credits. Its burst throughput is capped at 80 MiBps.

You use the same formula as for the st1 volume type to calculate the baseline throughput for sc1. To figure out the baseline throughput for the largest-sized sc1 type volume:

16 TiB × 12 MiBps / 1TiB = 192 MiBps

The maximum burst throughput is capped at 250 MiBps, and you reach this level with a 3.125TiB volume as shown here:

TiB × 80 MiBps / 1TiB = 250 MiBps

Choosing Between the st1 and sc1 HDD Volume Types The scan time for reading data is a critical performance indicator for both HDD types (st1 and sc1). Scan time is the time it takes to complete a full volume scan and is a function of the volume size and throughput of the volume:

scan time = volume size / throughput

Regardless of the volume size, the scan times for the sc1 HDD type are much longer (almost twice as long) than the scan times for the st1 HDD type. If you often need to support workloads that involve many full volume scans, it’s a good idea to go for st1. The sc1 volume type is suitable for workloads that you access infrequently, and where scan performance isn’t important.

Finally, for both HDD types st1 and sc1, the throughput is the smaller of the volume throughput and the instance throughput.

Solid-State Drives

Solid-state drives (SSDs) are designed for high performance. There are two types of SSD-backed volumes: General Purpose SSD (gp2) and Provisioned IOPS SSD (io1).

General Purpose SSD (gp2) General Purpose SSD (gp2) volumes offer a balance between price and performance and are suitable for many workloads, such as interactive applications that require low latency, as well as large development and test databases.

The gp2 volumes offer very fast performance, with single-digit millisecond latencies. A gp2 volume can range in size from 1GiB to 16TiB. The volumes have a baseline performance that scales linearly at 3 IOPS/GiB and can burst to a maximum of 3000 IOPS for extended period of time. A 100GiB gp2 volume, then, has a baseline of 300 IOPS (3×100), and a 1TiB volume has a 3000 IOPS baseline performance. The minimum performance is 100 IOPS (at 33.33GiB and smaller sized volumes) and the maximum IOPS is 16,000, offered by large gp2 volumes sized at 5334GiB and higher, up to a maximum size of 16TiB. A gp2 volume delivers 90 percent of its provisioned performance 99 percent of the time.

A key point that you must understand here is that when you attach multiple EBS volumes to an EC2 instance, the maximum amount of performance for that instance is calculated as the combined performance of all the EBS volumes that you’ve attached to the instance. As Table 7-1 shows, the maximum IOPS for gp2 volumes is 16,000 IOPS. You can attain this performance with a single, large gp2 volume sized 16GiB (the maximum size for a gp2 volume), or you can cobble together a set of four 4TiB-sized gp2 volumes and stripe them as a single RAID 0 device, giving you a logical gp2 volume sized 16TiB, with a combined performance of 64,000 IOPS.

Each gp2 volume comes with an initial I/O credit balance of 5.4 million I/O credits. For smaller (under 1TiB) gp2 volumes, these I/O credits can sustain a maximum burst performance of 3000 IOPS for 30 minutes. The volumes earn I/O credits at the baseline performance rate of 3 IOPS per GiB of volume size. A 500GiB-sized volume will thus have a baseline performance of 1500 IOPS.

When a gp2 volume requires more IOPS than its baseline performance level, it draws down the I/O credits it has accumulated in its credit balance. A volume can use its I/O credits to burst to a maximum of 3000 IOPS. For example, a volume sized 500GiB has a baseline performance of 1500 IOPS and offers a maximum burst duration of 1 hour at 3000 IOPS.

Figure 7-2 shows how both baseline performance and burst IOPS are based on the size of a gp2 volume.

Figure 7-2 Volume size and maximum IOPS for a gp2 EBS volume

A volume’s ability to burst beyond its baseline performance is based on the I/O credits that the volume accumulates at a rate of 3 credits per GiB of provisioned storage per second. Once the burst performance window opens, the volume consumes the credits at the rate of 3 credits per IOPS. A volume can accumulate a maximum of 54 million credits, which means that it can burst for a maximum of 3000 IOPS for 30 minutes. After the volume exhausts all its credits, its performance falls back to its baseline performance.

Burst performance and I/O credits are applicable only to volumes under 1000GiB. For these volumes, burst performance is allowed to exceed their baseline performance. A 16TiB-sized volume (the largest-sized volume in the gp2 volume type) has a baseline performance of 16,000 IOPS, which is greater than the maximum burst performance, and, therefore, it always has a positive I/O credit balance. This maximum 16,000 IOPS baseline performance is for volumes sized 5334GiB and higher. For all volumes sized 5334GiB to 16,384MiB (the 16TiB maximum volume size for a gp2 volume), the baseline performance remains at the maximum level of 16,000 IOPS.

You can use the following formula to calculate the burst duration of a volume, which is directly related to volume size:

burst duration = credit balance / burst IOPS – 3 (volume size)

Provisioned IOPS SSD (io1) Provisioned IOPS SSD (io1) volumes are the most expensive as well as the highest-performance storage volumes and are ideal for missional-critical business applications such as online transaction processing databases that rely on low latency operations, as well as high throughput–demanding large NoSQL databases such as Cassandra and MongoDB.

Minimum and Maximum IOPS It’s a good idea to mention the minimum and maximum IOPS you can specify for a Provisioned IOPS volume. AWS recommends that you specify a minimum of 2 IOPS for each GiB of a Provisioned IOPS volume. This is to ensure adequate performance and minimize latency. So, if you’re provisioning a gp2 volume sized 6000GiB, you assure that the volume has a performance of at least 12,000 IOPS.

There’s also a maximum amount of IOPS that you can provision for a Provisioned IOPS volume. You can specify a maximum of 50 IOPS per GiB unto a maximum of 20,000 IOPS per volume. So, you can provision a 200GiB-sized gp2 volume for 10,000 IOPS. You can provision a gpc2 volume sized 400GiB or higher for the maximum amount of IOPS.

EC2 Instance Maximum IOPS and Throughput There’s a practical limit to the number of IOPS and throughput that you can provision for a single EC2 instance. The maximum IOPS a single EC2 instance can handle is set at 65,000 IOPS. If you need more IOPS than this for your applications, you must provision multiple instances.

As with IOPS, there’s also a practical limit on the throughput a single EC2 instance can handle. If you turn on EBS optimization for a volume, the instance can achieve a 12 GiBps maximum throughput.

You can specify from 100 to 64,000 IOPS per a single Provisioned IOPS SSD volume. The maximum ratio of Provisioned IOPS to volume size is 50. This means that a 200GiB io1 volume can be provisioned with a maximum of 10,000 IOPS. As mentioned earlier, the maximum IOPS you can provision is 64,000 IOPS per volume, Thus, for a volume that’s 1280GiB or larger, you can provision up to the 64,000 IOPS maximum, since 1280GiB × 50 IOPS = 64,000 IOPS.

Provisioned IOPS SSD volumes range in size from 4GiB to 16TiB, with the 16TiB-sized volume offering a maximum of 32,000 IOPS. This volume type doesn’t use the bucket and credit performance model like the gp2 volumes. Instead, it lets you specify the IOPS you require, and EBS will deliver that performance. If you’re using a gp2 volume and find that your workloads require IOPS of greater than 16,000 IOPS (maximum offered by a gp2 volume type) for long periods, you may consider the Provisioned IOPS volume type.

Comparing the EBS Volume Types

Table 7-1 shows the performance storage characteristics of each of the four EBS volume types.

Table 7-1 EBS Volume Types and Their Performance and Storage Characteristics

Now that you know a bit about IOPS and throughput performance, and IOPS and throughput–related bursting behaviors of the various EBS volume types, let’s compare several aspects of the EBS volume types. We’ll look at cost, maximum throughput, and maximum IOPS.

Pricing and Cost A Provisioned IOPS volume is the most expensive storage volume type, since it not only costs more per GB-month of storage, but you also must pay an additional $0.05 per Provisioned IOPS per month (at current prices). So if you use a 10,000 IOPS volume, it’s going to cost you $650 a month on top of the storage cost per month.

If you focus just on the cost per GB-month, the Cold HDD (sc1) volume type may look like the best choice. However, there’s a minimum size of 500GiB for a sc1 volume. If you’re storing only 20GiB of data, then you’ll be paying for a lot more storage than you need.

Maximum Throughput You can compute throughput as a product of the IOPS offered by a volume type and the packet size. Both Throughput Optimized HDD (st1) and Cold HDD (sc1) have very small maximum IOPS (500 for st1 and 250 for sc1), and thus have a small maximum throughput, especially for small random writes. A General Purpose SSD (gp2) volume, on the other hand, offers the highest throughput for such small random writes, at 16,000 IOPS. With a maximum IOPS of 64,000 a Provisioned IOPS SSD volume (io1) offers four times more maximum throughput that a gp2 volume (1000MiB versus 250MiB).

Maximum IOPS Table 7-1 showed the maximum IOPS for all the volume types. EBS specifies the IOPS ratings based on 16KB-sized packets for the SSD volumes and 1MB packets for the HDD volumes. You can go to a maximum packet size of 256KB for SSD volumes and 1MB for HDD volumes.

The difference in the sizing of the packets for the SSD and HDD volumes helps SSD volumes efficiently process a large number of small packets, while HDD handles fewer large packets more efficiently.

Packet size has a huge bearing on the IOPS for a volume and could lead to a lower IOPS than the rated IOPS for the volume. For example, a 100GiB-sized gp2 volume is rated at 300 IOPS if the packet size is 16KB. The 300 random read/write operations are sustained only if the packet size remains at or under 16KB. Should the packet size increase, say to an average of 64KB, the 100GiB volume can support read/write operations at the rate of only 75 IOPS.

Managing Amazon EBS

To use an EBS volume to store your data persistently, you must create the volume and attach it to an EC2 instance. In this section, I’ll show you how to perform common EBS-related administrative tasks such as the following:

• Create an EBS volume

• Attach an EBS volume to an EC2 instance

• Make an EBS volume available for use

• Detach an EBS volume

• Delete an EBS volume

Creating an EBS Volume

You can create and attach an EBS volume when launching an EC2 instance or create the volume and attach it to an existing instance. To keep latency low, however, EBS doesn’t permit attaching volumes to an instance across AZs. This means that both the instance and the volume that you’d like to attach to it must be located in the same AZ. Here are the key things to know regarding the creation of EBS volumes:

• You can create encrypted EBS volumes as long as the EC2 instance type supports Amazon EBS encryption.

• You can create and attach an EBS volume at instance creation time through the specification of block device mapping.

• You can restore an EBS volume from snapshots (see note).

• You can tag the volumes when creating them to support inventory tracking.

Exercise 7-1 walks you through the steps for creating an EBS volume from the console. You can also create an EBS volume from the command line with the create-volume command. Following is an example that shows how to create a 500GiB General Purpose (SSD) EBS volume:

$ aws ec2 create-volume –size 500 –region us-east-1 –availability-zone us-east-1a –volume-type gp2

You must specify the volume size, volume type, region, and the AZ when creating an EBS volume. You can specify several optional parameters, such as encryption, which will encrypt the EBS volume. If provisioning an io1 volume type, you should specify the IOPS.

The previous example showed how to create a brand-new volume from scratch, but you can also create an EBS volume from a snapshot that you’ve stored in S3. You must specify the snapshot ID if you want to restore your EBS volume from the snapshot. The following example shows how to create an EBS volume (Provisioned IOPS) from a snapshot:

$ aws ec2 create-volume –size 500 –region us-east-1 –availability-zone us-east-1a –snapshot-id snap-084028835991ca12d –volume-type io1 –iops 1000

The volume type is io1 (Provisioned IOPS SSD), and the iops attribute specifies that you want this volume to support 1000 IOPS.

When you create an EBS volume from an EBS snapshot, the volume loads lazily, meaning that the EC2 instance can start accessing the volume and all its data before all the data is transferred from S3 (snapshot) to the new EBS volume.

Attaching an EBS Volume to an EC2 Instance

As long as an EC2 instance is in the same AZ as an EBS volume, you can attach the volume to an instance. You can attach multiple EBS volumes to a single EC2 instance. If the volume is encrypted, the instance must support EBS encryption. Exercise 7-2 shows how to attach an EBS volume to an EC2 instance using the console. You can also attach the volume from the command line, with the attach-volume command:

$ aws ec2 attach-volume –volume-id vol-1234567890abcdef0 --instance-id 01283ef334d86420 --device /dev/sdf

In this command, you specify the volume-id attribute to represent the volume you want to attach, and the instance-id attribute to specify the EC2 instance to which you want to attach the volume. You attach the volume to the instance as the device, /dev/sdf.

You can view the EBS volumes attached to an EC2 instance by opening the EC2 console and selecting Instances in the navigation pane. To do so from the command line, run this command:

$ aws ec2 describe-volumes –region us-west-2

After creating an EBS volume and attaching it to an EC2 instance, you can modify the volume by increasing the volume type, increasing the volume size, or changing the volume’s IOPS settings. You can make these changes to a volume that’s attached to an instance as well as a detached EBS volume. AWS starts charging you at the new volume’s rate once you start the change in a volume’s settings.

Once you attach an EBS volume to an EC2 instance, it isn’t ready for use. You must first format and mount the drive, as I explain in the next section.

Making an EBS Volume Available for Use

Before an EC2 instance can start using the EBS volume you’ve attached to it, you must format the volume with a file system and mount the volume. This is so because initially the instance sees only a block volume with no file system on it. Once you format and mount the volume, the instance can use it the same way it does a regular hard disk drive.

Follow these steps to format and mount an EBS volume to make it available for use on a Linux system:

1. Connect to the EC2 instance to which you’ve attached the new EBS volume through SSH.

2. Although you name the EBS volume something like /dev/sdf, it could be attached with a different name such as /dev/xvdh or /dev/hdf, depending on the OS of the EC2 instance. Run the lsblk command to view the available disk devices and their mount points; you can figure out the correct device name to specify later on:

$ lsblk

3. If this is a volume you’ve restored from an EBS snapshot, you may not want to create a file system for the device because it already has one (unless you want to overwrite the existing file system). If this is a new volume, which is a raw block device, you must create a file system on the device. Run the mkfs command to create the file system on the device. Here’s the general format of the mkfs command to create an ext4 file system:

$ sudo mkfs -t ext4 device_name

In our case, the command is as follows:

$ sudo mkfs -t ext4 /dev/xvdf

4. Create a mount point directory for the volume with the mkdir command:

$ sudo mkdir /dev/xvdf /mydata

This command creates the directory /mydata, where you can write and read files after you mount the volume.

5. Add an entry for the new device to the /etc/fstab file, so the EBS volume is mounted automatically after a system reboot. Just add the following line at the end of the /etc/fstab file:

/dev/xvdf /data ext4 defaults 1 2

6. To make sure that your entry to the /etc/fstab file is correct, unmount the new device and mount all file systems listed in the /etc/fstab file (with the mount -a command):

$ sudo umount /mydata

$ sudo mount -a

7. Assign proper file permissions for the new volume mount to ensure that your applications and users can write to and read from the new EBS volume you’ve made available for use.

8. To find out how much disk space is available on this EBS volume, run the following Linux command:

$ df -hT /dev/xvda1

If you modify an EBS volume by increasing its size, you must extend the volume’s file system so it can use the increased storage capacity.

Attaching an EBS Volume to an Instance

In the previous section, I showed how to take a raw EBS volume, format it, and mount it to an EC2 instance. If you already have an EBS volume available to you, you can simply attach it to an instance, as long as both the volume and the instance are in the same AZ.

You can attach an EBS volume from the EC2 console or from the command line with the attach-volume command, as shown here (you must obtain the volume ID and the instance ID first):

$ aws ec2 attach-volume –volume-id vol-1234567890abcdef0 --instancce-id i-01474ef662b89480 –device /dev/sdf

This command attaches an EBS volume to a running or stopped instance and makes the volume available to the instance with the device name that you specify (/dev/sdf in this example).

Detaching and Deleting an EBS Volume

You can disassociate an EBS volume from an EC2 instance by detaching the volume from the instance. You can also delete an EBS volume from your account.

There are two ways to detach an EBS volume for an EC2 instance:

• Terminate the instance The EBS volume is automatically detached from the instance when you terminate an instance.

• Unmount the volume If the EC2 instance is running, you must unmount the volume on the instance.

From the command line, run the detach-volume command to detach a volume from an instance. As you can see in the following example, to detach a volume, you need to specify the volume-id attribute, and there’s no need to specify the instance name:

$ aws ec2 detach-volume –volume-id vol-1234567890abcdef0

Encrypting EBS Volumes

EBS offers encryption for most EC2 instance types. Creating an encrypted EBS volume and attaching it to an EC2 instance not only protects the data that you store on the EBS volume but offers several other encryption-related benefits.

Encrypting an EBS volume will encrypt the following:

• Data you store on the volume

• Data that flows between that volume and the EC2 instance to which you’ve attached it

• Snapshots that you create from that volume

• EBS volumes that you create from the snapshots that you make of that volume

EBS encryption uses AWS Key Management Service (KMS) customer master keys (CMKs) to encrypt volumes and snapshots. Unless you specify a custom CMK, AWS creates and manages a unique CMK for you in each AWS region where you own AWS resources. AWS uses the industry-standard AWS-256 encryption algorithm when it encrypts your EBS volumes with a data key. It stores the data key with the encrypted data on the volume, after first encrypting the key with your CMK. All snapshots and EBS volumes that you create from those snapshots share the same data key.

You can transfer data normally between encrypted and unencrypted EBS volumes (volume-level encryption). The snapshot of an unencrypted volume is by definition unencrypted. However, you can encrypt a volume’s data by applying encryption to a copy of a snapshot that you’ve made of an unencrypted EBS volume.

You can also encrypt data at the client level by incorporating encryption at the OS level of the EC2 instance. When you do this, you fully manage the encryption, including the storage and availability of the encryption keys. AWS doesn’t manage encryption keys for you in this case, as it does in the case of volume-level encryption.

EBS Monitoring and EBS CloudWatch Events

EBS sends notifications based on CloudWatch Events. These events can be volume events such as creating a volume, deleting a volume, and attaching a volume. Other EBS-related events include EBS snapshot events, such as creating a snapshot and copying a snapshot.

You learned about Amazon Lambda in Chapter 4. You can employ Amazon Lambda functions to handle CloudWatch Events and to help automate the workflow of a data backup. A CloudWatch Events rule that matches a new event can route the event to a Lambda function that you create that handles that event. Suppose, for example, that you’ve created a Lambda function that copies an EBS snapshot to a different region. You make this Lambda function available in the CloudWatch console. When EBS emits a createSnapshot event, CloudWatch invokes the Lambda function, which automatically copies the snapshot created in, say, the us-east-1 region to the us-east-2 region.

For all volumes except Provisioned IOPS volumes, CloudWatch provides metrics such as the number of bytes read/written, the read and write I/O per second, idle time, queue length, and other metrics, every five minutes. For Provisioned IOPS volumes, the metrics also include the throughput percentage, which shows the percentage of throughput a volume is using, compared to the throughput that you’ve provisioned. If the throughput percentage is low on a regular basis, it’s an indication that you’ve overprovisioned the IOPS for the volume, so you’re paying more than you need to for that volume. For volumes such as gp2, sc1, and st1, you also get burst balance metrics, which show the burst bucket credits still available to the volume.

Backing Up EBS Volumes with Snapshots

A point-in-time EBS snapshot helps you back up data you store on the volume. A snapshot makes a copy of an EBS volume. When you create a snapshot of an EBS volume, the snapshot is automatically stored for you in Amazon S3. EBS stores the snapshots at the regional level in multiple AZs in Amazon S3. Since a snapshot is linked to the region where you create it, you must start the snapshot process in the region where you need to store copies of your data. You can use EBS snapshots for backing up data or to save data before you terminate an instance. The maximum number of EBS snapshots you can take in your AWS account is 10,000, by default.

Although EBS volumes can survive a loss of a disk, because they’re redundant arrays, the volumes are located in only one AZ. If the AZ in which a volume lives becomes unavailable, your applications won’t be able to access the data stored in the volume. Maintaining EBS snapshots enables you to survive an AZ failure.

How EBS Snapshots Work

To minimize the time to create a snapshot every time you back up the same volume, and to save on storage costs (by avoiding data duplication), EBS snapshots are incremental in nature. Incremental snapshots capture only the changes in a volume over time. The first snapshot you take of an EBS volume is a full snapshot that captures all the data on the volume. The second snapshot captures only the changed data blocks of the volume since the full backup of the volume. Subsequent snapshots copy and store only the amount of data that has changed since the previous incremental backup.

You can use an EBS snapshot to create a new EBS volume that will be a replica of the backed-up EBS volume. A point-in-time snapshot means that with this snapshot, you can restore the data up to this moment to a new EBS volume.

When you start creating a new EBS volume from a snapshot, you don’t need to wait for all the data to load from the snapshot. Instead, you can start using the new volume immediately. Any data that you access that isn’t already on the new volume is immediately downloaded from Amazon S3, while the download continues uninterrupted in the background. You can take a new snapshot of a volume even if a previous snapshot of the volume is in the pending state. However, the volume may take a performance hit until the snapshots complete.

EBS automatically encrypts the snapshots of an encrypted volume. Any volumes that you create from an encrypted snapshot are also automatically encrypted. You can make copies of snapshots and share them across AWS accounts. You can choose to encrypt a snapshot that’s unencrypted during a copy of the unencrypted snapshot.

Creating EBS Snapshots

Unlike instance store storage, data on EBS persists, and most of the time, the data is critical. A snapshot helps you take a point-in-time backup of an EBS volume; it’s a backup of an EBS volume that AWS stores in S3. You can create a new EBS volume using data stored in a snapshot by specifying the snapshot’s ID.

AWS stores all snapshots in Amazon S3 at the regional level, across multiple AZs. Although the snapshots are stored in S3, they’re not like other S3 objects because they contain only the data that has changed since the previous snapshot was created. You can’t access your snapshots from the S3 console or APIs; instead, you must access them through the EC2 console and APIs.

Creating a snapshot could take some time and depends on the size of the source EBS volume. You can create up to five snapshots concurrently. Exercise 7-3 shows how to create an EBS snapshot from the console. You can create a snapshot from the command line by executing the create-snapshot command:

$ aws ec2 create-snapshot –volume-id vol-1234567890abcdef0 –description "Test snapshot of an EBS volume"

You can view snapshot information by going to the Amazon EC2 console and selecting Snapshots in the navigation pane. Or, from the command line, run the describe-snapshots command to view your snapshots:

$ aws ec2 describe-snapshots –owner-ids 01234567890

This command shows all the snapshots that are available to you, including the private snapshots that you own, private snapshots owned by other AWS accounts to which you have create volume permissions, and public snapshots that are available for all AWS accounts.

EBS snapshots use a lazy loading strategy that lets you use the snapshot immediately after you create it, even before S3 has loaded all the data in the snapshot. If your applications try to access data that hasn’t been loaded on the snapshot yet, the volume downloads the required chunk of data from Amazon.

Sharing EBS Snapshots and Making a Snapshot Public

You can share your EBS snapshots with other AWS accounts to enable other users to create EBS volumes with those snapshots. Although you can’t make an encrypted snapshot publicly available, you can publicly share your unencrypted snapshots. If you share an encrypted snapshot, remember that you must also share the custom CMK that you’ve used to encrypt the snapshot.

You must modify a snapshot’s permissions to enable sharing. The following example shows how to execute a modify-snapshot-attribute command to modify a snapshot attribute to grant the CreateVolumePermission for a specific AWS user:

$ aws ec2 modify-snapshot-attribute –snapshot-id snap-1234567890abcdef0 –attribute CreateVolumePermission –operation-type add –user-ids 123456789023

The modify-snapshot-attribute command also enables you to make a snapshot public. Just replace the -user-ids attribute with –group-name all.

Deleting a Snapshot

Deleting an EBS volume doesn’t delete its snapshots. You must explicitly delete any snapshots you don’t need. You can delete an EBS volume if you decide that you no longer need the volume. You can do so from the EC2 console or by running the delete-volume command:

$ aws ec2 delete-volume –volume-id vol-1234567890abcdef0

If you think you may need to use this volume later, you can create a snapshot of the EBS volume before deleting it.

Earlier, I explained that snapshots are incremental in nature, with each subsequent snapshot after the first snapshot storing only the data that has changed since the previous snapshot. When you use incremental backups for a database such as Oracle, you must retain all the incremental backups to restore the database. EBS snapshots work differently, in that you need to retain only the latest snapshot to restore the EBS volume. Snapshots contain only the changed data and reference the data from earlier snapshots.

Let’s look at a simple example to explain the unique nature of an EBS snapshot and what happens when you delete and restore a snapshot. This sequence of events shows how you can restore an EBS volume from a later snapshot, even after removing one or more earlier snapshots. In this example, I create two snapshots: Snapshot A and Snapshot B. The EBS volume for which I am creating a snapshot has 10GiB of data.

1. I create the first snapshot, Snapshot A, which is a full copy of the 10GiB EBS volume.

2. By the time I take the next snapshot (an incremental snapshot), 2GiB of data has changed. Therefore, the snapshot I create now, Snapshot B, copies and stores only the 2GiB of changed data. Snapshot B also references the other 8GiB of data that was copied and stored in Snapshot A in step 1.

3. I delete Snapshot A. EBS moves the 8GiB of data stored in Snapshot A to Snapshot B after the deletion of Snapshot A. As a result, Snapshot B now has all 10GiB of data (its own 2GiB, plus the 8GiB data moved from the deleted Snapshot A).

4. I restore the EBS volume for which I took the snapshots. I can restore all 10GiB stored in Snapshot B.

From the storage cost point of view, you still pay for storing all 10GiB of data, since the 8GiB of data from the deleted snapshot (Snapshot A) continues to be stored in Snapshot B.

Creating an AMI from a Snapshot

You can create an AMI from the snapshot of the root volume by adding metadata and registering the snapshot as an AMI. For a Linux AMI, you can do this by running the register-image command from the AWS CLI. Or, you can do the same thing from the EC2 console by selecting Elastic Block Store | Snapshots | Actions | Create Image | Create Image From EBS Snapshot.

Amazon S3: Object Storage in the Cloud

Amazon Simple Storage Service lives up to its name. It is an easy-to-use web service interface that you use to store and retrieve data from anywhere on the Web. S3 is primarily designed for handling read workloads, and you can use it to store data such as video clips and data for big data workflows. It is a scalable, fast, inexpensive, and reliable data storage infrastructure and offers 99.999999999 percent durability and 99.99 percent availability of objects over a year. It also automatically detects and repairs any lost data, through built-in redundancy.

Here are some key facts to remember about S3:

• You store S3 objects (data and metadata) in logical containers called buckets and manage permissions on the resources through access control and authentication policies.

• You can upload and download data using standards-based REST- and SOAP-based interfaces (APIs).

• You can store an infinite amount of data in S3 buckets.

• S3 is a regional service that offers you a high parallel throughput and stores your data within a region for compliance requirements.

• AWS automatically replicates the data that you store in S3 to at least three different data centers.

• You can configure access policies to limit who is authorized to view the data that you store in S3.

• Your S3 storage costs include the cost of the storage for the data you keep in S3, plus charges for the API calls you make to move data into and out of S3.

You can use S3 APIs to perform operations such as the following:

• Create a bucket

• Read from and write to an object

• Delete an object

• List the keys contained in a bucket

S3 Basic Entities and Key Concepts

The key entities in S3 are buckets and objects. To store data such as photos, videos, or documents in S3, your first step is to create a bucket (in any AWS region); then you can upload your objects (files that contain text, photos, and so on) to that bucket. The following sections describe buckets, objects, and other S3 concepts.

Buckets

A bucket in which you store objects is a globally unique (across all AWS accounts) entity. Although you access individual buckets and the content that you store in those buckets from the Web with an URL, your data isn’t exposed to the general public because of the security features built into S3 that require the right permissions to access the objects.

Bucket Naming Rules Bucket names must comply with DNS naming conventions. Here are the key naming rules to which you must adhere:

• A bucket name must consist of only lowercase characters, numbers, periods, and dashes. Each of a bucket’s labels must start with a lowercase character or number.

• The bucket names cannot use underscores, end with a dash, have two or more consecutive periods, or have a dash next to a period.

• A bucket name cannot be formatted as an IP address.

Addressing the Objects in a Bucket Let’s look at an example. Suppose I create a bucket named samalapati1 and store an object named photos/kitten.jpg in it. I can address this object with the following unique URL:

http://samalapati1.s3.amazonaws.com/photos/kitten.jpg

S3 offers two differently styled URLs to access buckets: a virtual-hosted-style URL and a path-style URL. When you use a virtual-hosted-style URL, you specify the bucket name as part of the domain name, as in this example:

http://samalapati1.s3.amazonaws.com

When you use a path-style URL, there’s no need to specify the bucket name as part of the domain:

http://s3.amazonaws.com/samalapati1

Buckets and Hierarchies A bucket is a flat container for objects. Buckets may look like directories, but they don’t have a hierarchical file organization. You can, however, create a logical file system hierarchy by using key names to stand for folders. For example, here’s a bucket with four objects, each with its own key name:

sample1.jpg

photos/2019/Jan/sample2.jpg

photos/2019/Feb/sample3.jpg

photos/2019/Mar/sample4.jpg

Based on the key names, this implies the following logical folder structure:

• sample1.jpg: this is the root of this bucket.

• sample2.jpg object: this is located in the photos/2019/Jan subfolder.

• sample3.jpg object: this is located in the photos/2019/Feb subfolder.

• sample4.jpb object: this is located in the photos/2019/Mar subfolder.

Buckets are useful for organizing the S3 namespace. They also identify the account to be charged for the storage and data transfer to S3. You can control access to S3 data at the bucket level and aggregate S3 usage at the bucket level.

The Delimiter and Prefix Parameters An S3 bucket doesn’t work like a Linux directory, with files stored inside a directory. Objects within a bucket are laid out flatly and alphabetically. Unlike Linux, where a directory is a file, in S3, everything is an object, and you identify each object by its key.

You can use the delimiter and prefix parameters to make S3 buckets work like a directory. The two parameters limit the results returned by a list operation. The prefix parameter limits the response to the keys that begin with the prefix you specify. The delimiter parameter helps the list command roll up all the keys with a common prefix into a single summary list result.

You can also use slash (/) as a delimiter. Here’s an example that shows how the delimiter and prefix parameters help. This example stores data for different cities:

North America/Canada/Quebec/Montreal

North America/USA/Texas/Austin

Instead of trying to manage a flat key namespace such as this, you can use the delimiter and prefix parameters in a list operation. So to list all the states in the United States, you can set the delimiter='/' and the prefix='North America/USA/'. A list operation with a delimiter enables you to browse the data hierarchy at just one level by summarizing the keys nested at the lower levels. If, for example, an S3 object myobject has the prefix myprefix, the S3 key would be myprefix/myobject.

Objects

Objects are the data that you store in S3; objects consist of data and metadata. Two items help you uniquely identify an object: a key (the object’s name) and a version ID. Metadata, which is a set of key-value pairs, is helpful in describing the data, with entities such as the Content-Type and the date in which the object was last modified. You can use the standard metadata as well as configure custom metadata for a bucket.

You place objects in S3 and access those objects using standard HTTP REST verbs (also called methods) such as GET, POST, PUT, PATCH, and DELETE. These five verbs correspond to the create, read, update, and delete operations, respectively. You use these verbs with their corresponding actions in S3.

Keys

A key uniquely identifies an object within a bucket. A bucket, key, and version ID uniquely identify an object in the entire Amazon S3 storage. You can uniquely identify every object in Amazon S3 with the following four entities:

• Web service endpoint

• Bucket name

• Key

• Version (optional)

Consider the URL http://mydocs.s3.amazonaws.com/2018-12-28/AmazonS3.wsdl. In this URL,

• S3.amazonaws.com is the web service endpoint.

• mydocs is the bucket name.

• 2018-12-28/AmazonS3.wsdl is the key.

Note that there’s no version entity (which is optional) in this URL.

High Availability and Durability of Data

S3 provides high availability for the data you store in it, which means that the data is almost always available to you when you need it. In addition, S3 ensures data durability, which means that the data is resilient to any storage or other types of failures. S3 offers high availability by replicating your data on multiple servers. S3 Standard storage delivers a guaranteed availability of 99.99 percent. AWS guarantees this through an SLA that penalizes AWS if the availability of your data goes below 99.99 percent in any month (roughly equal to a downtime of 44 minutes). S3 offers a data durability of 99.999999999 for your data, making it very unlikely that you’ll ever lose data. This is why AWS recommends that you store your EBS snapshots in S3.

The S3 Data Consistency Model

S3 offers eventual consistency. A consistent read offers reads that aren’t stale, but with a potentially higher read latency and lower throughput. Eventually consistent reads offer a lower read latency and higher read throughput, but at the cost of making stale reads possible.

When you make a PUT request and it’s successful, your data is safe in S3. However, S3 takes time to replicate data to all the servers that have a copy of this data, so you may see a situation where the following is true:

• Following a PUT operation, an immediate read operation of the object may return the older data, and a listing of the objects may not show the new object.

• After a DELETE operation, an immediate listing of the keys might still list the deleted object, and an immediate attempt to read the data might return data that you’ve deleted.

When you PUT a new object in an S3 bucket, the S3 service provides read-after-write consistency for the PUT. S3 offers eventual consistency for overwrite PUTs and DELETEs. An update to a single key is atomic; a read following a PUT to an existing key (update) won’t return corrupt or partial data.

S3 Storage Classes

Each object in S3 is associated with one of six storage classes: STANDARD, REDUCED_REDUNDANCY, INTELLIGENT_TIERING, STANDARD_IA, ONEZONE_IA, and Glacier. Your choice of the storage class depends on your use cases. Some types of storage are ideal for storing data that you frequently access, and others are best for less frequently used data. Objects that you store in the STANDARD, RRS, STANDARD_IA, and ONEZONE_IA storage classes are available for real-time access. Glacier objects aren’t accessible in real-time; you must first restore the objects before accessing them. Not all storage classes offer the same levels of durability.

Storage Classes for Frequently Accessed Objects

S3 offers two storage classes for frequently accessed objects stored in S3.

STANDARD Storage Class This default storage class offers millisecond access to your data. AWS stores STANDARD S3 data durably by replicating it across a minimum of three geographically separated data centers to ensure a guaranteed SLA of eleven 9’s (99.999999999 percent) durability. There’s is no minimum storage duration for the S3 objects that you store in the STANDARD storage class.

REDUCED_REDUNDANCY (RRS) Storage Class This storage class is designed for storing non-critical data (such as thumbnails and other processed data that you can easily reproduce). This class stores data at a lower level of redundancy than S3 STANDARD storage, with “only” four 9’s (99.99 percent) durability for RRS data, and it stores the data in only two data centers. RRS costs less than STANDARD S3 storage because of the lower durability of its data. If you’re storing mission-critical data, RRS isn’t a good choice, but if you can easily reproduce the data should you lose it, it is an appropriate choice because of its cost effectiveness—and the considerably high availability (about 400 times more durability than a typical disk drive) that it offers.

Storage Classes for Frequently and Infrequently Accessed Objects

The INTELLIGENT_TIERING storage class is designed for storing long-lived data with changing or unknown access patterns. It stores objects in two access tiers: one optimized for frequent access and a lower-cost tier for data that you infrequently access. This storage class automatically moves data to the most cost-effective storage class based on the data access patterns.

S3 monitors the access pattern of objects that you store in this storage class and moves any object that hasn’t been accessed in 30 days to the (cheaper) infrequent access tier. The INTELLIGENT_TIERING autotiering of data ensures that the most cost-effective storage access tier is used, even if you can’t predict your future access patterns.

This storage class is suitable for objects that are larger than 128KB that you intend to retain for longer than 30 days. Smaller objects are charged at the frequent access tier rates. If you delete objects before 30 days, you are still charged for 30 days.

Storage Classes for Infrequently Used Data

You can choose from three different storage classes for data that you access infrequently. Here are the key differences among the STANDARD_IA, ONEZONE_IA, and Glacier storage classes:

STANDARD_IA Storage Class Just like the STANDARD storage class, STANDARD_IA stores data in multiple AZs. This makes STANDARD_IA resilient because it can withstand the loss of an AZ. If your data cannot be easily (or never) re-created, use STANDARD_IA storage class. It is designed for long-lived, infrequently accessed, noncritical data, such as database backup archives. Suppose, for example, that you run a web site that stores historical photos, with most images being requested only a couple of times a year. STANDARD_IA storage would be a cost-effective and highly available choice for storing this type of data. You can serve the images directly from STANDARD_IA by hosting your web site in S3. There’s is no minimum storage duration for the S3 objects that you store in the STANDARD_IA storage class.

ONEZONE_IA Storage Class ONEZONE_IA stores objects in a single AZ, so it is cheaper to store data in this class than in STANDARD_IA. However, the data isn’t resilient, and you can lose data when the only AZ is lost for any reason. Use this storage class for data that can be easily re-created. ONEZONE_IA requires a minimum storage duration of 30 days.

Glacier Storage Class Designed for archiving data, Glacier offers the same resiliency as the STANDARD storage class. You cannot, however, access the objects that you store in real-time. The Glacier storage class requires a minimum duration of 90 days.

Storage Class Cost and Durability

Here are some guidelines regarding cost and durability for the various storage classes.

RRS is cheaper than STANDARD S3 storage but is significantly less durable. It’s the only storage class that offers less than 99.999999999 percent durability. If, however, you know you’re going to retrieve the data frequently (a few times a month or more), it’s cheaper to stay with STANDARD S3 storage because it costs more to retrieve data from the storage classes designed for infrequent access. If you infrequently access data, choose a storage class other than RRS, such as STANDARD_IA, ONEZONE_IA, or Glacier.

Another cost consideration is the length of time for which you store the data. Infrequently accessed data storage classes such as STANDARD_IA have a minimum storage requirement of 30 days, meaning that AWS bills you for 30 days, even if you store the data for only a day or two. Although STANDARD_IA may be cheaper than STANDARD S3, this minimum billing structure makes it more expensive than storing short-term data in STANDARD S3 storage.

Glacier is the cheapest of all the storage classes, but it’s best only if you almost never retrieve the data that you store. Unlike all the other storage classes, with Glacier, you must wait three to five hours to retrieve data; other storage classes enable you to retrieve your data in milliseconds. The minimum storage duration for Glacier is 90 days.

S3 and Object Lifecycle Management

Lifecycle policy rules apply actions to a group of objects. Using lifecycle management policies for S3 objects is a best practice that reduces your S3 storage costs and optimizes performance by removing unnecessary objects from your S3 buckets. There is no additional cost for setting up lifecycle policies.

You can configure two types of lifecycle policies:

• Transition actions These actions define when an object is moved to a different storage class. You can configure a lifecycle transition policy to automatically migrate objects stored in one S3 storage class to another (lower cost) storage class, based on the age of the data. You may, for example, specify that a set of S3 objects be moved to the STANDARD_IA storage class 30 days after their creation. You may also specify that a set of objects be moved to long-term Glacier storage class a year after their creation date.

• Expiration actions You can specify when an object can expire. A lifecycle expiration policy automatically removes objects from your account based on their age. For example, you can configure a policy that specifies that incomplete multipart uploads be deleted based on the age of the upload.

You can use the Amazon S3 Analytics feature to help you determine how you should transition your S3 data to the right storage class. S3 Analytics performs a storage class analysis of your storage access patterns. This helps you determine when you can transition data stored in less frequently accessed but more expensive STANDARD S3 storage to cheaper STANDARD_IA storage, which is designed for storing infrequently accessed data. The best way to benefit from storage class analysis is by filtering the storage analysis results by object groups, rather than by a single object.

S3 Multipart Uploads

When creating a new, large S3 object or copying a large object, you can use the Multipart Upload API to upload the object in parts, in any order you choose. To improve throughput, you can upload several parts in parallel. Once you upload all the parts of an object, S3 puts together all the parts to create the object. You can retransmit a part if the transmission of that part fails.

Amazon S3 recommends that you consider using a Multipart Upload when an object’s size reaches 100MB instead of attempting to upload the object in a single operation. The maximum object size for a Multipart Upload is 5TB, and the maximum number of parts per upload is 10,000.

Making Requests for S3 Data

Amazon S3 is a REST service, and, therefore, you send requests to the service via the REST API or—to make your life easy—via the AWS SDK wrapper libraries that wrap the S3 REST API. Your request can be anonymous or authenticated. An authenticated request must include a signature value that AWS partly generates from your AWS access keys (access key ID and secret access key).

You send an S3 request to an S3 service endpoint, a URL that acts as an entry point to a web service. Most AWS services offer a regional endpoint. When you create a new Amazon Virtual Private Cloud (VPC) endpoint for S3, you associate a subnet with the endpoint. When you do this, it adds a route to the endpoint in the subnet’s route table.

Here’s an example entry point for the DynamoDB service:

https://dynamodb.us-west-2.amazonaws.com

Using Amazon Athena to Query S3 Data

Amazon Athena is an interactive query service that enables you to query data in S3 via standard SQL statements. For example, if your developers want to query data from a set of CSV files that you’ve stored in S3, they can use Athena instead of going to the trouble of developing a program for querying the data. Athena is a serverless offering, meaning that there’s no infrastructure or anything else for you to maintain; you pay just for the queries that you execute against your S3 data.

Hosting a Static Web Site on Amazon S3

You can host static web sites in S3 that can contain client-side, but not server-side, scripting. To host a static web site, you must first configure an S3 bucket for web site hosting, and then upload your web content to that bucket. You must enable the bucket with public read access, so everyone in the world can read objects in that bucket.

There are three steps in setting up a static web site in S3 from the S3 bucket properties settings:

1. Enable static web site hosting in the S3 bucket properties.

2. Select the Make Public option for permissions on the bucket’s objects.

3. Upload an index document to the S3 bucket.

Your static web site is available to your users at AWS region–specific endpoints of the bucket you’ve designated for web site hosting. Once you create a bucket and configure it as a web site, URLs such as the following examples provide users access to your web site content.

In this case, the bucket is called examplebucket and it’s being created in the us-west-2 region. This URL returns the default index document that you configure for your web site:

http://examplebucket.s3-website-us-west-2.amazonaws.com/

This URL returns the photo1.jpg object that you stored at the root level of the bucket:

http://examplebucket.s3-website-us-west-2.amazonaws.com/photo123.jpg

The two examples use an S3 web site endpoint, but you can use your own custom domain when configuring a bucket for web site hosting, by adding your web site configuration to the bucket. For example, you can use a custom domain such as example.com to serve content from your web site. S3, along with Route 53, supports hosting of websites at the root domain, so your users can access your site by going to either http://www.example.com or http://example.com.

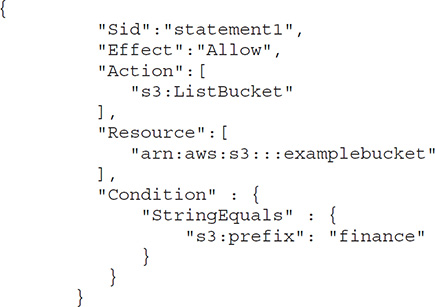

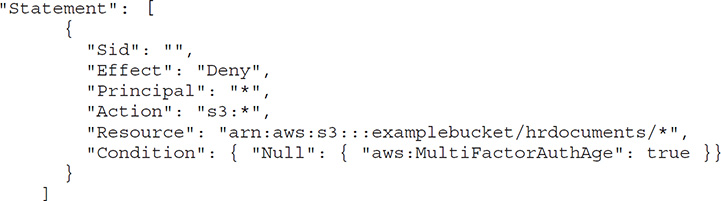

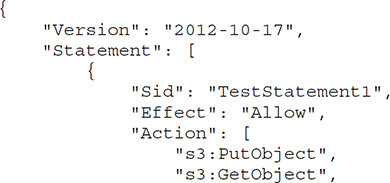

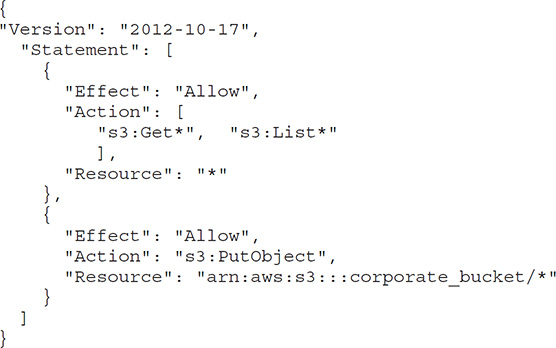

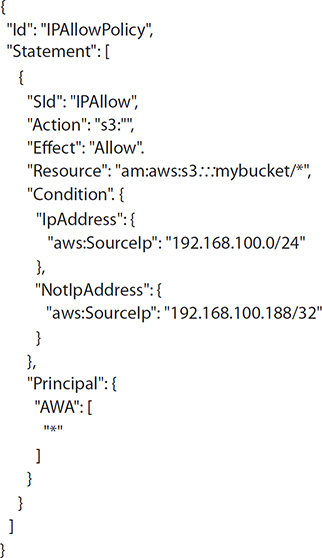

Managing Access to Your S3 Resources

Only the resource owner (an AWS account) has permissions to access buckets, objects, and related subresources such as lifecycle and web site configuration. By default, all S3 resources are private, and only the object owner has permissions to access them. However, the owner can choose to share objects with others by creating a pre-signed URL using the owner’s security credentials. Pre-signed URLs are valid for a specific duration and enable the owner to grant time-bound permissions to a user to view, modify, delete, upload, or download objects from and to the owner’s bucket. For example, the AWS account owner can create an access policy to grant an IAM user in the AWS account PUT Object permissions to enable that user to upload objects to the owner’s S3 bucket.

You can grant permissions to individual users and accounts, to everyone (called anonymous access), or to all users who authenticate successfully with AWS credentials. When would you grant anonymous access to an S3 bucket? A good use case is when you configure the S3 bucket as a static web site. In this case, you grant the GET Object permission to everyone to make the bucket’s objects public.