Chapter 7

Applications: Acoustics and Psychoacoustics Combined

Chapter Contents

- 7.1 Spatial and 3D Audio Reproduction

- 7.2 Critical Listening Room Design

- 7.2.1 Loudspeaker Arrangements for Critical Listening

- 7.2.2 IEC Listening Rooms

- 7.2.3 Energy–Time Considerations

- 7.2.4 Reflection-Controlled Rooms

- 7.2.5 The Absorption Level Required for Reflection-Free Zones

- 7.2.6 The Absorption Position for Reflection-Free Zones

- 7.2.7 Nonenvironment Rooms

- 7.2.8 The Diffuse-Reflection Room

- 7.2.9 Summary

- 7.3 Pure-Tone and Speech Audiometry

- 7.4 Psychoacoustic Testing

- 7.5 Filtering and Equalization

- 7.6 Public-Address Systems

- 7.6.1 Reverberation

- 7.6.2 The Effect of Reverberation on Intelligibility

- 7.6.3 The Effect of More Than One Loudspeaker on Intelligibility

- 7.6.4 The Effect of Noise on Intelligibility

- 7.6.5 Requirements for Good Speech Intelligibility

- 7.6.6 Achieving Speaker Directivity

- 7.6.7 A Design Example: How to Get It Right

- 7.6.8 More Than One Loudspeaker and Delays

- 7.6.9 Objective Methods for Measuring Speech Quality

- 7.7 Noise-Reducing Headphones

- 7.8 “Mosquito” Units and “Teen Buzz” Ringtones

- 7.9 Audio Coding Systems

- 7.9.1 The Archetypical Audio Coder

- 7.9.2 What Exactly Is Information?

- 7.9.3 The Signal Redundancy Removal Stage

- 7.9.4 The Entropy Coding Stage

- 7.9.5 How Do We Measure Information?

- 7.9.6 How Do We Measure the Total Information?

- 7.9.7 Entropy Coding

- 7.9.8 The Psychoacoustic Quantization Stage

- 7.9.9 Quantization and Adaptive Quantization

- 7.9.10 Psychoacoustic Noise Shaping

- 7.9.11 Psychoacoustic Quantization

- 7.10 Cochlea Implants

- 7.11 Sonification

- 7.12 Summary

- Bibliography

- Further Reading

So far in this book, we have considered acoustics and psychoacoustics as separate topics. However, real applications often require the combination of the two, because although the psychoacoustics tells us how we might perceive the sound, we need the acoustic description of sound to help create physical or electronic solutions to the problem. The purpose of this chapter is to give the reader a flavor of the many applications that make use of acoustics and psychoacoustics in combination. Of necessity, these vignettes are brief and do not cover all the possible applications. However, we have tried to cover areas we feel are important and of interest. The level of detail also varies, but in all cases, we have tried to provide enough detail for the reader to be able to read and understand the more advanced texts and references that we provide, and any that the reader may discover themselves, for further reading. The rest of this chapter will cover listening room design, audiometry, psychoacoustic testing, filtering and equalization, public-address systems, noise-reducing headphones, acoustical social control devices and last, but by no means least, audio coding systems.

7.1 Spatial and 3D Audio Reproduction

Spatial and so-called 3D audio reproduction is an essential aspect of the enjoyment of sound, whether it is on a personal player via headphones, at home, or in the cinema. All of these systems rely on the application of acoustics and psychoacoustics to present an illusion of specific sound directions that are called “phantom images” to mimic real sound sources such as singers or musical instruments. They also attempt to give the listener an illusion of being in a space, which is often described as its “ambience.”

A variety of systems are currently in use or being introduced as technology improves. They can be broadly categorized into two separate approaches.

- 1. Systems that try to provide the necessary directional cues directly via the reproduction method. This is the approach adopted by conventional stereo, surround systems and the new 3D reproduction methods.

- 2. Systems that try to reproduce the acoustic sound field that the listener would experience if they were there. This method covers ambisonics, wave-field synthesis and binaural stereo.

Historically, the first category formed the first practical systems with the second category following closely behind, and this section will give a brief description of how they work. To actually fully go into all the details of these systems, including the many approaches to producing and recording the necessary content, would take several additional books! The references and recommended reading at the end of the chapter can help you get additional detail.

7.1.1 Stereo

The simplest such system is stereophonic reproduction, which uses two spaced loudspeakers to provide the illusion of sound sources in front of the listener. Superficially this would only provide intensity cues to give the illusion of direction to sound coming from two speakers due to the head shading above 1kHz, as discussed in Section 2.6.2. However, this would be incorrect, as demonstrated by Blumlein in 1931.

Blumlein showed that the coherent addition of the acoustic signals arriving at the ears from both loudspeakers, as shown in Figure 7.1, produced a phase shift that mimicked the effect of the interaural time delay cue. In essence the signals from both speakers arrive at both ears, with equal amplitudes, because at low frequencies, they diffract easily around the head. Due to the relative delay caused by the speaker angles, the sine waves from the contralateral (farther away) speaker are delayed with respect to the ipsilateral (closer) one. As we know from Chapter 1, in Section 1.3.1, adding two sine waves of different amplitudes and phase forms a single one with a different amplitude and phase. The resultant phase of the these combined sine waves at the two ears will be a function of the relative speaker amplitudes. Blumlein showed that this mimics the effect of the ITD cue. How this works is shown in Figures 7.2(a) to 7.2(c), which show the effect of adding two sine waves with different amplitudes that emanate from left and right speakers at each ear. Three different cases are shown:

Figure 7.2c The Phase Shift Produced when Both Speakers Are Driven, Resulting in an Intermediate ITD

- 1. Only one speaker driven: This is shown in Figure 7.2(a). In this case, only the left speaker is providing the signal to both ears. Thus the phase shift between the two ears is identical to the phase shift caused by a sound source at the left speaker’s position.

- 2. Both speakers driven equally: This is shown in Figure 7.2(b). In this case, both speakers are providing identical signals to both ears. The composite wave due to the sum of the two signals from the speakers at each ear are identical. Thus the phase shift between the two ears is zero. This is identical to the phase shift due to a sound source that is central between the two speaker’s positions.

- 3. Both speakers driven with different amplitudes: This is shown in Figure 7.2(c). In this case, both speakers are providing different signals to both ears. The composite wave due to the sum of the two signals from the speakers at each ear is no longer identical. This makes the phase shift between the two ears somewhere between the first two cases. This phase shift will be dependent on the relative amplitudes of the two loudspeakers, because this determines the composite wave’s amplitude and phase. The resulting phase shift is identical to the phase shift due to a sound source that is between the center position and the physical loudspeaker positions.

Note that there is some amplitude variation in the composite ear signals that depends on the relative amplitudes and phases of the loudspeaker signals. This has to be compensated for in the controls of the relative amplitudes of the speaker, the pan pots.

If one assumes ITD calculation based on the geometry shown in Figure 7.1, one obtains the following equation.

| (7.1) |

- where θphantom is the angle with respect to the normal of the virtual image,

- L and R are the amplitudes of the left and right speaker signals, respectively,

- and θloudspeakers is the angle with respect to the normal of the loudspeakers.

This is the well-known law of sines.

Figure 7.3 shows the predicted angle as a function of the relative levels of the left and right loudspeakers for a θloudspeakers = 30°. This corresponds to a total angle between the loudspeakers, which is the recommended angle for ideal stereophonic listening.

The net result is that amplitude stereo can give a convincing directional image of a set of sound sources because it provides both intensity and delay cues. Critically, this requires that both the loudspeakers are heard by both ears at low frequencies. This is something that does not occur when listening to conventional stereo recordings on headphones and will be discussed later.

One way of recording this acoustically is via a pair of crossed figure-of-eight microphones, as shown in Figure 7.4. As a sound source moves across the sound stage, the amplitudes of the signals in each microphone have a cosine/sine relationship, as shown in Figure 7.5. Thus at extreme left or right the signal appears only in one speaker, whereas when the source is in the center, the signal appears equally in both speakers. Incidentally, this cosine/sine law is the ideal amplitude law used in pan pots in mixing desks for the purpose of positioning monophonic sources in a stereo image. Of course, different microphone layouts may be used to introduce phase shifts. Due to the effect of coherent addition of the two speaker signals at the ears at low frequencies, there is some trading between delay and amplitude. This can be used to achieve a variety of creative effects to the stereo image.

7.1.2 5.1 Surround Systems

A related form of spatial reproduction is 5.1 surround, which is used in film and video presentations. Here the objective is to provide not only clear dialogue but also stereo music and sound effects, as well as a sense of ambience. The typical speaker layout is shown in Figure 7.6.

Here in addition to the conventional stereo speakers, there are some additional ones to provide the additional requirements. These are as follows:

- Center dialogue speaker: The dialogue is replayed via a single central speaker, because this has been found to give better speech intelligibility than a stereo presentation. Interestingly, the fact that the speech is not in stereo is not noticeable because the visual cue dominates so that we hear the sound coming from the person speaking on the screen even if their sound is coming from a different direction. For music, this speaker may be used to help anchor the front image or support the two stereo speakers.

- Surround speakers: The ambient sounds and sound effects are diffused via rear-mounted speakers. Originally they were not supposed to provide directional effects and were deliberately designed and fed signals that minimized their correlation with each other and the front speakers. The effect of this was to fool the hearing system into perceiving the sound as all around with no specific direction. In modern surround systems, they are also used to provide directional effects in addition to the ambience.

- Low-frequency effects: This is the “0.1” channel in the 5.1 system. It is required because many of the sound effects used in film and video, such as explosions and punches, have substantial low-frequency and subsonic content. Thus, a specialized speaker is needed to reproduce these sounds properly. Note: this speaker was never originally intended to reproduce music signals. However, their presence in many surround music systems is now used to provide general low-frequency content as a “subwoofer.” This does have the advantage of providing a means of dealing with the effects of low-frequency modes on the sound, as these speakers can be placed in an “optimum” position. It also allows the use of multiple bass speakers and appropriate processing to combat the effects of modes. In addition, it can permit the use of more compact speakers for the other channels, as they do not have to handle the lowest frequencies.

All these signals can be encoded into and derived from a two-channel format using a technique called matrixing. However, this results in a considerable loss of separation between channels, but it was the only way to achieve it when only two channel distribution formats such as vinyl discs or stereo film tracks were available. Better performance is obtained from a multichannel formant, and with the advent of digital distribution formats that can provide discrete channels, this has become the norm, although some systems also use the matrixing technique to derive additional speaker feeds for more complex reproduction layouts.

7.1.3 Three-Dimensional Surround Systems

In recent years, additional speakers have been added to 5.1 surround systems. These systems are often designated 7.1, 9.1, 11.2 and so on to designate the extra speakers. The first digit specifies the number of full-range speakers actually used. Thus a 7.1 system would have two additional speakers compared to a 5.1 system, a 9.1 system four additional full-range speakers and so on. The figure after the decimal point denotes the number of low-frequency speakers used. So 7.1 would describe a system with 7 full-range speakers and 1 low-frequency speaker, whereas an 11.2 describes a system with 11 full-range speakers and 2 low-frequency speakers. Note that in many cases, the extra low-frequency channel is a duplicate of the existing low-frequency channel and not a different low-frequency feed. Having two low-frequency speakers allows one to place them in a way that deals with the inevitable modal issues that happen in real rooms at low frequencies. The necessary signals for these extra speakers may be derived from an existing 5.1 system via the technique of matrixing, or they can be provided by separate dedicated channels. It is also possible to conceive of systems that use a combination of matrixing and additional channels. Although some standard arrangements have been proposed, there is still an element of flux in these systems.

The purpose of these extra channels is to improve the surround-sound experience for the listener so that they have more consistent localization all the way around themselves. In addition, some arrangements can be used to give an illusion of source height, which can give the listener a more realistic sense of the environment the reproduced sound is in. For example, a 7.1 system places two additional speakers to the left and right of the listener (called the left and right surrounds) and moves the rear speakers farther around the back (called the left and right back surrounds) as shown in Figure 7.7. By adding two more speakers, 9.1 systems allow one to have some height loudspeakers (left and right front height), and 11.1 systems would allow two additional-height speakers to the rear. Clearly the situation can get very confusing! Material is now available that has been recorded for 9.1 systems with height, and the results can give a great sense of the space the recording was made in.

7.1.4 Ambisonics

Another form of spatial sound presentation is called ambisonics. This is a three-dimensional sound reproduction system that tries to simulate the sound field at a given point in the room. The original and simplest system does this by recognizing that at a given point, there are the component of pressure at the point, velocity in the left-to-right direction, velocity in the front-to-back direction and velocity in the down-to-up direction. These components can be measured via the use of an omnidirectional pressure microphone and three figure-of-eight pressure-gradient or velocity microphones, which are oriented in the three orthogonal directions, as shown in Figure 7.8.

This gives four channels of information that represent the first-order sound field at the recording point. This sound field can be reproduced in a room via an array of speakers. The minimum recommended is eight, and a typical arrangement is shown in Figure 7.9.

Unlike stereo, however, no particular speaker is assigned to a particular direction, such as left, right and so forth. Instead, all the speakers work in concert to provide the necessary sound field. That is, all the speakers work together to provide a pressure component and, by feeding appropriate levels and phases of the other signals to the loudspeakers, all cooperate to synthesize the necessary velocity components. As an example, the front-to-back component would be synthesized by feeding equal levels of the front-to-back signal to speakers 1 and 2 at the same level but in antiphase to speakers 3 and 4. Speakers 5 to 8 would receive no signal because they fall on the null of the figure-of-eight polar pattern for front-to-back sounds. Note that these weightings are only true for the speaker arrangement shown in Figure 7.9, and if other dimension ratios are used, then the weightings required change, because they are determined by the shape of the polar patterns of the different spherical harmonic components. The system can be simplified by simply removing channels; thus a two dimensional sound field requires only three channels, and the system becomes stereo if only two channels are used.

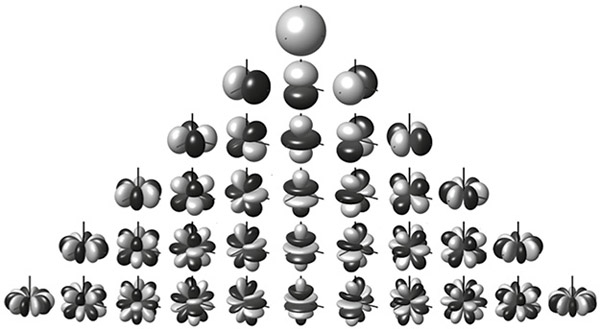

In principle, it is possible to extend the system to sample acceleration components and higher-order changes, in addition to the velocity components, to provide greater accuracy. The effective microphone patterns required for the different orders are shown in Figure 7.10. This results in the ability to produce more complex sound fields to give better localization accuracy. These higher-order ambisonic systems are now used for a variety of purposes and have been proposed as an intermediate format for production, because it is comparatively easy to mix down from an ambisonic format into the perplexing variety of different surround-sound formats.

7.1.5 Wavefield Synthesis

Another approach to reproducing spatial sound is to try to reproduce the radiated sound field using the Huygens-Fresnel Principle, which states that any possible wavefront shape can be made up from elemental spherical waves as shown in Figure 7.11.

As a conventional loudspeaker radiates spherical waves at wavelengths that are large compared to its size, this naturally suggests the possibility of synthesizing an arbitrary sound field using a large number of speakers in an array, as shown in Figure 7.12. If one can accurately produce the desired sound field using an array of speakers on the boundary of the reproduction area, the directional characteristics of the recorded sources and environment will be preserved. In theory, this could produce a sound field that is indistinguishable from the original sound field. However, in practice, there are some limitations, which are as follows.

- 1. Speaker size and spacing: To achieve perfect wavefront reconstruction, one needs to have speakers whose spacing, and therefore size, is less than half the wavelength of the highest frequency to be reproduced. For 20kHz, this would require a speaker size of less than 8.6 mm! If the spacing is larger than this, which in general is necessary for practical speaker size, then spatial aliasing occurs just as it does in array loudspeakers used for public-address systems, as described in Appendix A6.1.2. This causes distortions in the reproduced wavefront that degrades the spatial accuracy of the reconstruction. Much of the work on wavefield synthesis is directed to methods of reducing these effects for practical speaker sizes and technologies.

- 2. Reproduction environment: To be successful, the only sound in the space must be that which is radiated by the loudspeakers. Any reflecting boundary will result in spurious wavefronts that will degrade the accuracy of the wavefront reconstruction. Ideally, the array should be in an anechoic room to avoid this.

- 3. Finite array size: The Huygens-Fresnel Principle assumes that elemental spherical waves are present on an infinitely long boundary. This means that the sharp boundaries, or changes in direction that would be need to form a practical array, can cause problems. Figure 7.13 shows a wavefield synthesis installation in the anechoic room at the university of Salford. Note that it is an octagonal arrangement to reduce these end effects.

- 4. Large numbers of loudspeaker signals: As well as a large number of loudspeakers, there is also the same number of loudspeaker signals. Each of these is unique, and recording or generating them requires a lot of effort. These limitations make it difficult to use wavefield synthesis as a recording format. However, some virtual-reality and acoustic programs provide outputs that are representations of the necessary speaker feeds and so can be used in conjunction with wavefield synthesis as an auralization method.

- 5. Plane reproduction only: Because of the large number of speakers required, most wavefield synthesis setups are only in the horizontal plane. Theoretically, there is no reason for this limitation. But practically, the number of speakers and associated signals would be enormous for a three-dimensional system.

Wavefield synthesis can provide realistic sound fields, but the large amount of speakers and processing required limit its broader application with current technology. In niche applications, though, they can provide a highly realistic soundfield.

7.1.6 Binaural Stereo

For recorded sound that is to be presented on headphones, binaural stereo reproduction is more appropriate. This works by recording the sound using microphones that are located on either a real head or a “dummy” head. This recording is then presented to the listener’s ears via headphones, thus giving the listener a direct representation of the sound they would have heard in the recording venue. Because the sound is recorded at the ears, dummy or real, the full effect of head shading, interaural delay and pinna reflections is accounted for in the recording. This can result in amazingly realistic recordings, especially if your own ears are used. However, if other people’s ears are used, the effect is less realistic, because a given person learns the responses of their own head and ears. In fact, it seems that reasonably simple approximations to a head, which just handle the effect of head shading and interaural delay, provide the most benefit, probably because they feed less-confusing direction cues to the listener.

The other problem with binaural presentation is that the stereo image does not change as the listener moves their head. This is not a natural effect and is one of the reasons that binaural signals, especially those from normal-intensity stereo sources, appear within the head. In order to avoid this effect, it would be necessary to detect the head movement and adjust the signals to the two ears to take account of the change in head direction. When this is done, the sounds are properly externalized, and the effect can be quite dramatic!

This type of processing is required in virtual-reality systems, in which sound sources need to remain associated with their visual cue. One way of achieving this is to measure the effective filter response to the two ears for different directions, as shown in Figure 7.14. These are known as head related transfer functions (HRTFs). These filter functions can then be used directly to “pan” a monaural source to a particular direction in the binaural stereo perspective. Because it is difficult to interpolate between HRTFs for different angles, these functions have to be stored as a large table of data in both azimuth and elevation. If a recorded binaural signal is to be processed, the difference between the HRTF for zero angle and the actual angle of the head must be used to correct for head movement.

Normal stereo signals do not reproduce correctly on headphones, and binaural recordings do not reproduce correctly on normal stereo equipment. This is because in the former, the effect of the head is removed from the listening environment, whereas in the latter, the effect of the head is applied twice, once in the recording and once in the listening. It is possible to convert the signals from one format to the other by making use of the head-related transfer functions.

To convert normal stereo signals into signals suitable for binaural presentation, it is necessary to filter the left and right channels with the HRTFs associated with the positions of left and right speakers, as shown in Figure 7.15. Note that four filters are involved, because one must model not only the transfer function to each ear but also the cross-coupling of the loudspeakers between the two ears.

To convert binaural signals into a form suitable for normal stereo presentation, it is necessary to cancel out the HRTFs associated with the positions of left and right speakers, as shown in Figure 7.16. This involves using two filters that are the inverse of the HRTF to each ear and two other filters that model the cross-coupling between the two ears and subtract the effect. Clearly this is only going to be possible for a particular speaker layout and a forward-facing listener with no head movement. However, this technique is used in some proprietary spatial-enhancement systems. It has also been used in television and computer game sound reproduction to provide “virtual surround” by using the HRTFs to synthesize the surround speakers from the forward stereo ones. In this situation, not only are the speaker locations known, as they are built into the set, but the head is effectively clamped in position due to the need to watch the picture. In this sort of situation, the technique can be very effective.

7.1.7 Summary

This section has described some of the methods used to produce spatial sound. Some are more appropriate for domestic use than others, but the challenge of producing a realistic spatial impression in reproduced sound is ongoing.

7.2 Critical Listening Room Design

Although designing rooms for music performance is important, we often listen to recorded sound in small spaces. We listen to music and watch television and movies in both stereo and surround in rooms that are much smaller than the recording environments. If one wishes to evaluate the sound in these environments, it is necessary to make them suitable for this purpose. In Chapter 6, we have seen how to analyze existing rooms and predict their performance. We have also examined methods for improving their acoustic characteristics. However, is there anything else we can do to make rooms better for the purpose of critically listening to music? There are a variety of approaches to achieving this, and this section examines optimal speaker placement, IEC rooms, room energy evolution, LEDE rooms, nonenvironment rooms and diffuse-reflection rooms.

7.2.1 Loudspeaker Arrangements for Critical Listening

Before we examine specific room designs, let us first examine the optimum speaker layouts for both stereo and 5.1 surround systems. The reason for doing this is that most modern room designs for critical listening need to know where the speakers will be in order to be designed. It is also pretty pointless to have a wonderful room if the speakers are not in an optimum arrangement.

Figure 7.17 shows the optimum layout for stereo speakers. They should form an equilateral triangle with the center of the listening position. If one has a greater angle than this, the center phantom image becomes unstable—the so-called hole-in-the-middle effect. Clearly, having an angle of less than 60° results in a narrower stereo image.

5.1 surround systems are used in film and video presentations. Here, the objective is to provide both clear dialog and stereo music and sound effects, as well as a sense of ambience. The typical speaker layout was shown earlier in Figure 7.6. Here, in addition to the conventional stereo speakers, there are some additional ones to provide the additional requirements, as explained earlier.

More recently, systems using six or more channels have also been proposed and implemented; for more information, see Rumsey (2001).

As we shall see later, the physical arrangement of loudspeakers can significantly affect the listening room design.

7.2.2 IEC Listening Rooms

The first type of critical listening room is the IEC (International Electrotechnical Commission) listening room (IEC, 2003). This is essentially a conventional room that meets certain minimum requirements: a reverberation time that is flat and between 0.3 and 0.6 seconds above 200 Hz, a low noise level, an even mode distribution and a recommended floor area. In essence, this is a standardized living room that provides a consistent reference environment for a variety of listening tasks. It is the type of room that is often used for psychoacoustic testing, as it provides results that correlate well with that which is experienced in conventional domestic environments. This type of room can be readily designed using the techniques discussed in Chapter 6.

However, for critically listening to music mixes and the like, something more is required, and these types of rooms will now be discussed. All of them don’t control only reverberation but also the time evolution and level of early reflections. They also all take advantage of the fact that the speakers are in specific locations to do this and very often have an asymmetric acoustic that is different for the listener and the loudspeakers. Although there are many different implementations, they fall into three basic types: reflection-controlled rooms, nonenvironment rooms and diffuse-reflection rooms. As they all control the early reflections within a room, we shall look at them first.

7.2.3 Energy–Time Considerations

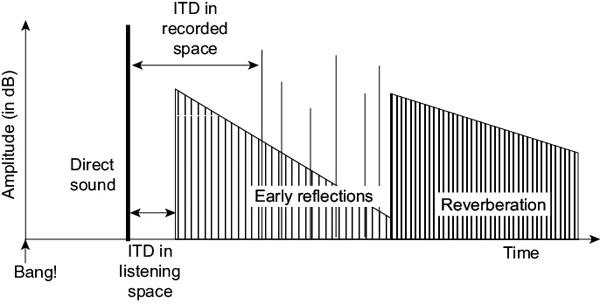

A major advance in acoustical design for listening to music has arisen from the realization that as well as reverberation time, the time evolution of the first part of the sound energy buildup in the room matters, that is, the detailed structure and level of the early reflections, as discussed in Chapter 6. As it is mostly the energy in the sound that is important as regards perception, the detailed evolution of the sound energy as a function of time in a room matters. Also, there are now acoustic measurement systems that can measure the energy–time curve of a room directly, thus allowing a designer to see what is happening within the room at different frequencies rather than relying on a pair of “golden ears.” An idealized energy–time curve for a typical room is shown in Figure 7.18. It has three major features:

- A gap between the direct sound and first reflections. This happens naturally in most spaces and gives a cue as to the size of the space. The gap should not be too long—less than 30 ms—or the early reflections will be perceived as echoes. Some delay, however, is desirable, as it gives some space for the direct sound and so improves the clarity of the sound, but a shorter gap does add “intimacy” to the space.

- The presence of high-level diffuse early reflections, which come to the listener predominantly from the side, that is, lateral early reflections. This adds spaciousness and is easier to achieve over the whole audience in a shoebox hall rather than a fan-shaped one. The first early reflections should ideally arrive at the listener within 20 ms of the direct sound. The frequency response of these early reflections should ideally be flat, and this, in conjunction with the need for a high level of lateral reflections, implies that the side walls of a hall should be diffuse reflecting surfaces with minimal absorption.

- A smoothly decaying diffuse reverberant field that has no obvious defects and no modal behavior and whose time of decay is appropriate to the style of music being performed. This is hard to achieve in practice, so a compromise is necessary in most cases. For performing acoustic music, a gentle bass rise in the reverberant field is desirable to add “warmth” to the sound, but in studios this is less desirable.

7.2.4 Reflection-Controlled Rooms

For the home listener or a sound engineer in the control room of a studio, the ideal would be an acoustic that allows them to “listen through” the system to the original acoustical environment that the sound was recorded in. Unfortunately, the room in which the recorded sound is being listened to is usually much smaller than the original space, and this has the effect shown in Figure 7.19. Here, the first reflection the listener hears is due to the wall in the listening room and not the acoustic space of the sound that has been recorded. Because of the precedence effect, this reflection dominates, and the replayed sound is perceived as coming from a space the size of the listening room, which is clearly undesirable. What is required is a means of making the sound from the loudspeakers appear as if it is coming from a larger space by suppressing the early reflections from the nearby walls, as shown in Figure 7.20. Examples of this approach are “live end dead end” (LEDE) (Davies and Davies, 1980), “reflection-free zone” (RFZ) (D’Antonio and Konnert, 1984) and controlled-reflection rooms (Walker, 1993, 1998).

One way of achieving this is to use absorption, as shown in Figure 7.21. The effect can also be achieved by using angled or shaped walls, as shown in Figures 7.22 and Figure 7.23. This is known as the “controlled-reflection technique” because it relies on the suppression of early reflections in a particular area of the room to achieve a larger initial time-delay gap. This effect can only be achieved over a limited volume of the room unless the room is made anechoic, which is undesirable. The idea is simple: by absorbing or reflecting away the first reflections from all walls except the farthest one away from the speakers, the initial time delay gap is maximized. If this gap is larger than the initial time-delay gap in the original recording space, the listener will hear the original space and not the listening room.

However, this must be achieved while satisfying the need for even diffuse reverberation, and so the rear wall in such situations must have some explicit form of diffusion structure on it to assure this. The initial time-delay gap in the listening should be as large as possible, but it is clearly limited by the time it takes sound to get to the rear wall and back to the listener. Ideally this gap should be 20 ms, but it should not be much greater, or it will be perceived as an echo. In most practical rooms, this requirement is automatically satisfied, and initial time-delay gaps in the range of 8 ms to 20 ms are achieved.

Note that if the reflections are redirected rather than being absorbed, there will be “hot areas” in the room where the level of early reflections is higher than normal. In general, it is often architecturally easier to use absorption rather than redirection, although this can sometimes result in a room with a shorter reverberation time.

7.2.5 The Absorption Level Required for Reflection-Free Zones

In order to achieve a reflection-free zone, it is necessary to suppress early reflections, but by how much? Figure 7.24 shows a graph of the average level that an early reflection has to be at in order to disturb the direction of a stereo image. From this, we can see that the level of the reflections must be less than about 15 dB to be subjectively inaudible. Allowing for some reduction due to the inverse square law, this implies that there must be about 10 dB, or α = 0.9, of absorption on the surfaces contributing to the first reflections. In a domestic setting, it is possible to get close to the desired target using carpets and curtains, and bookcases can form effective diffusers, although persuading the other occupants of the house that carpets or curtains on the ceiling are chic can be difficult. In a studio, more extreme treatments can be used. However, it is important to realize that the overall acoustic must still be good and comfortable, that it is not anechoic and that, due to the wavelength range of audible sound, this technique is only applicable at mid to high frequencies, where small patches of treatment are significant with respect to the wavelength.

7.2.6 The Absorption Position for Reflection-Free Zones

Figure 7.25 shows one method of working out where absorption should be placed in a room to control early reflections. By imagining the relevant walls to be mirrors, it is possible to create “image rooms” that show the direction of the early reflections. By defining a reflection-free space around the listening position and by drawing “rays” from the image speaker sources, one can see which portions of the wall need to be made absorbent, as shown in Figure 7.26. This is very straightforward for rectangular rooms but a little more complicated for rooms with angled walls. Nevertheless, this technique can still be used. It is applicable for both stereo and surround systems, the only real difference being the number of sources.

In Figure 7.25, the rear wall is not treated, because normally some form of diffusing material would be placed there. However, absorbing material could be so placed, in the places determined by another image room created by the rear wall, if these reflections were to be suppressed. One advantage of this technique is that it also shows places where absorption is unnecessary. This is useful because it shows you where to place doors and windows that are difficult to make absorptive. To minimize the amount of absorption needed, one should make the listening area as small as possible, because larger reflection-free volumes require larger absorption patches. The method is equally applicable in the vertical as well as the horizontal direction.

7.2.7 Nonenvironment Rooms

Another approach to controlling early reflections, which is used in many successful control rooms, is the “nonenvironment” room. These rooms control both the early reflections and the reverberation. However, although they are quite dead acoustically, they are not anechoic. Because for users in the room there are some reflections from the hard surfaces, there are some early reflections that make the room nonanechoic. However, sound that is emitted from the speakers is absorbed and is never able to contribute to the reverberant field. How this is achieved is shown in Figure 7.27.

These rooms have speakers, which are flush-mounted in a reflecting wall, and a reflecting floor. The rear wall is highly absorbent, as are the side walls and ceiling. The combined effect of these treatments is that sound from the loudspeakers is absorbed instead of being reflected so that only the direct sound is heard by the listener, except for a floor reflection. However, the presence of two reflecting surfaces does support some early reflections for sources away from the speakers. This means that the acoustic environment for people in the room, although dead, is not oppressively anechoic. Proponents of this style of room say that the lack of anything but the direct sound makes it much easier to hear low-level detail in the reproduced audio and provides excellent stereo imaging. This is almost certainly due to the removal of any conflicting cues in the sound, as the floor reflection has very little effect on the stereo image.

These rooms require wide-band absorbers, as shown in Figure 7.27. These absorbers can take up a considerable amount of space. As one can see in Figure 7.27, the absorbers can occupy more than 50% of the volume. However, it is possible to use wide-band membrane absorbers, as discussed in Chapter 6, with a structure similar to that shown in Figure 6.48 with a limp membrane in place of the perforated sheet. Using this type of absorber, it is possible to achieve sufficient wide-band absorption with a depth of 30 cm, which allows this technique to be applied in much smaller rooms whose area is approximately 15 m2. Figure 7.28 shows a typical nonenvironment room implementation: “the Lab” at the Liverpool Music House.

Because nonenvironment rooms have no reverberant field, there is no reverberant room support for the loudspeaker level, as discussed in Section 6.1.7. Only the direct sound is available to provide sound level. In a normal domestic environment, as discussed in Chapter 6, the reverberant field is providing most of the sound power and is often about 10 dB greater than the direct sound. Thus in a nonenvironment room, one must use either 10 times the power amplifier level or specialist loudspeaker systems with a greater efficiency to reproduce the necessary sound levels (Newell, 2008).

7.2.8 The Diffuse-Reflection Room

A novel approach to controlling early reflections is not to try to suppress or redirect them but instead diffuse them. This results in a reduced reflection level but does not absorb them.

In general, most surfaces absorb some of the sound energy, and so the reflection amplitude is weakened by the reflecting surface. Therefore, the level of direct reflections will be less than that which would be predicted by the inverse square law due to surface absorption. The amount of energy or power removed by a given area of absorbing material will depend on the energy or power per unit area striking it. As the sound intensity is a measure of the power per unit area, this means that the intensity of the sound reflected is reduced in proportion to the absorption coefficient. Therefore, the intensity of the early reflection is given by:

| (7.1) |

From Equation 7.1, which is Equation 1.18 with the addition of the effect of surface absorption, it is clear that the intensity reduction of a specular early reflection is inversely proportional to the distance squared.

Diffuse surfaces, on the other hand, scatter sound in other directions than the specular. In the case of an ideal diffuser, the scattered energy polar pattern would be in the form of a hemisphere. A simple approach to calculating the effect of this can be to model the scattered energy as a source whose initial intensity is given by the incident energy. Thus, for an ideal scatterer, the intensity of the reflection is given by the product of the equation describing the intensity from the source and the one describing the sound intensity radiated by the diffuser. For the geometry shown in Figure 7.29, this is given by:

| (7.2) |

The factor 2 in the second term represents the fact that the diffuser only radiates into half a hemisphere and therefore has a Q of 2. From Equation 7.2, one can see that the intensity of a diffuse reflection is inversely proportional to the distance to the power of 4. This means that the intensity of an individual diffuse reflection will be much smaller than that of a specular reflection from the same position.

So diffusion can result in a reduction of the amplitude of the early reflection from a given point. However, there will also be more reflections due to the diffusion arriving at the listening position from other points on the wall, as shown in Figure 7.30. Surely this negates any advantage of the technique? A closer inspection of Figure 7.30 reveals that although there are many reflection paths to the listening point, they are all of different lengths and hence time delays. The extra paths are also all of a greater length than the specular path, shown dashed in Figure 7.30. Furthermore, the phase-reflection diffusion structure will add an additional temporal spread to the reflections. As a consequence, the initial time-delay gap will be filled with a dense set of low-level early reflections instead of a sparse set of higher-level ones, as shown in Figure 7.31. Of particular note is that even with no added absorption, the diffuse-reflection levels are low enough in amplitude to have no effect on the stereo image, as shown earlier in Figure 7.24.

Figure 7.29 The Geometry for Calculating the Intensity of an Early Reflection from a Diffuse Surface

The effect of this is a large reduction of the comb-filtering effects that high-level early reflections cause. This is due to both the reduction in amplitude due to the diffusion and the smoothing of the comb filtering caused by the multiplicity of time delays present in the sound arriving from the diffuser. As these comb-filtering effects are thought to be responsible for perturbations of the stereo image (Rodgers, 1981), one should expect improved performance even if the level of the early reflections is slightly higher than the ideal.

The fact that the reflections are diffuse also results in an absence of focusing effects away from the optimum listening position, and this should result in a more gradual degradation of the listening environment away from the optimum listening position. Figure 7.32 shows the intensity of the largest diffuse side-wall reflection relative to the largest specular side-wall reflection as a function of room position for the speaker position shown. From this figure, we can see that over a large part of the room, the reflections are less than 15 dB below the direct sound.

Figure 7.33 shows one of the few examples of such a room. The experience of this room is that one is unaware of sound reflection from the walls: it sounds almost anechoic, yet it has reverberation. Stereo and multichannel material played in this room has images that are stable over a wide listening area, as predicted by theory. The room is also good for recording in, as the high level of diffuse reflections and the acoustic mixing it engenders, as shown in Figure 7.30, help integrate the sound emitted by acoustic instruments.

7.2.9 Summary

In this section, we have examined various techniques for achieving a good acoustic environment for hearing both stereo and multichannel music. However, the design of a practical critical listening room requires many detailed considerations regarding room treatment, sound isolation, air conditioning and so on that are covered in more detail in Newell (2008).

7.3 Pure-Tone and Speech Audiometry

In this section, a number of acoustic and psychoacoustic principles are applied to the clinical measurement of hearing ability. Hearing ability is described in Chapter 2 and summarized in Figure 2.10 in terms of the frequency and amplitude range typically found. But how can these be measured in practice, particularly in the clinic, where such information can provide medical professionals with critical data for the treatment of hearing problems?

The ability to detect sound and the ability to discriminate between sounds are the two aspects of hearing that can be detrimentally affected by age, disease, trauma or noise-induced hearing loss. The clinical tests that are available for the diagnosis of these are

- sound detection: pure-tone audiometry

- sound discrimination: speech audiometry

Pure-tone audiometry is used to test a subject’s hearing threshold at specific frequencies approximately covering the speech hearing range (see Figure 2.10). These frequencies are spaced in octaves as follows: 125 Hz, 250 Hz, 500 Hz, 1 kHz, 2 kHz, 4 kHz and 8 kHz. The range of sound levels that are tested usually start 10 dB below the average threshold of hearing, and they can rise to 120 dB above it; recall that the average threshold of hearing varies with frequency (see Figure 2.10).

A clinical audiometer is set up to make diagnosis straightforward and quick and easy to explain to patients. Because the threshold of hearing is a nonuniform curve and therefore not an easy reference to use on an everyday basis in practice, a straight line equating to the average threshold of hearing is used instead to display the results of a hearing test on an audiogram. A dBHL (hearing level) scale is defined for hearing testing, which is the number of dBs above the average threshold of hearing.

Figure 7.34 shows a blank audiogram that plots frequency on the x-axis (the octave values between 125 Hz and 8 kHz inclusive as shown earlier) against dBHL between −10 dBHL and +120 dBHL on the y-axis. Note that the dBHL scale increases downward to indicate greater hearing loss (a higher amplitude or greater dBHL value needed for the sound to be detected). The 0 dBHL (threshold of hearing) line is thicker than the other lines to give a visual focus on the average threshold of hearing as a reference against which measurements can be compared.

A pure-tone audiometer has three main controls: (1) frequency, (2) output sound level and (3) a spring-loaded output key switch to present the sound to the subject. When the frequency is set, the level is automatically altered to take account of the average threshold of hearing, which enables the output sound level control to be calibrated in dBHL directly. The output sound level control usually works in 5 dB steps and is calibrated in dBHL. It is vitally important that the operator is aware that an audiometer can produce very high sound levels that could do permanent damage to a normal hearing system (see Section 2.5). When testing a subject’s hearing, a modest level around +30 dBHL should be used to start with, which can be increased if the subject cannot hear it.

The spring-loaded output key is used to present the sound, thereby giving the operator control of when the sound is being presented and removing any pattern of presentation that might allow the subject to predict when to expect the next sound. Such unpredictability adds to the overall power of the test, but in the context of hearing measurement, it is particularly important when hearing is being tested in the context of, at one extreme, a legal claim for damages being made for hearing loss due to noise-induced hearing loss or, at the other, a health screening for normal hearing as part of a job interview.

When a sound is heard, the subject is asked to press a button, which illuminates a lamp or light-emitting diode (LED) on the front panel of the audiometer. The subject should be visible to the tester, but the subject should not be able to see the controls. When carrying out an audiometric test, local sound levels should be below the levels defined in BS EN ISO 8253–1, which are shown for the test frequencies in Figure 7.35. Generally, the local level should be below 35 dBA.

During audiometry, test signals are presented in one of two ways:

- air conduction

- bone conduction

For air-conduction audiometry, sound is presented acoustically to the outer ear and thereby tests the complete hearing system. Three types of air-conduction transducers are available:

- 1. circumaural headphones

- 2. supra-aural headphones

- 3. ear canal–insert earphones

Circumaural headphones surround and cover the pinna (see Figure 2.1) completely, thereby providing a degree of sound isolation. Supra-aural headphones rest on the pinna and are the more traditional type in use, but they are not particularly comfortable since they press quite heavily on the pinna in order to keep the distance between the transducer itself and the tympanic membrane constant. Both circum- and supra-aural headphones can be uncomfortable and somewhat awkward, and they can in certain circumstances deform the ear canal. As an alternative, ear canal–insert earphones that have a disposable foam tip can be used that will not distort the ear canal. They have the added advantage that less sound leaks to the other ear, which reduces the need to consider presenting a masking signal to it. There are, however, situations, such as infected or obstructed ear canals, in which the use of ear canal insert earphones is not appropriate.

For bone-conduction audiometry, sound is presented mechanically using a bone vibrator that is placed just behind but not touching the pinna on the bone protrusion known as the mastoid prominence. It is held in place with an elastic headband. The sound presented when using bone conduction bypasses the outer and middle ears since it vibrates the temporal bone in which the cochlea lies directly. Thus it can be used to assess inner function and the presence or otherwise of what is known as sensorineural hearing loss with no hindrance from any outer- or middle-ear disorder. Bone conduction is carried out in the same way as air conduction audiometry except that only frequencies from 500 Hz to 4 kHz are used due to the limitations of the bone-conduction transducers themselves. When a bone-conduction measurement is being made for a specific ear, it is essential that the other ear is masked using noise. Specific audiometric guidelines exist for the use of masking.

The usual audiometric procedure for air or bone-conduction measurements (recalling the one difference for bone conduction that the frequencies used are from 500 Hz to 4 kHz only) is to test frequencies (in the following order: 1 kHz, 2 kHz, 4 kHz, 8 kHz, 500 Hz, 250 Hz, 125 Hz, 1 kHz). (Note that the starting frequency is 1 kHz, which is a mid frequency in the hearing range and is therefore likely to be heard by all subjects to give them confidence at the start and end of a test.) If the retest measurement at 1 kHz has changed by more than 5 dB, other frequencies should be retested and the most sensitive value (lowest dBHL value) recorded. When testing one ear, consideration will be given as to whether masking should be presented to the other ear to ensure that only the test ear is involved in the trial. This is especially important when testing the poorer ear.

Tests are started at a level that can be readily heard (usually around +30 dBHL), which is presented for 1 to 3 s using the output key switch and then involves watching for the subject to light the lamp or LED. If this does not happen, the level is increased in 5-dB steps (5 dB being a minimum practical value to enable tests to be carried out in a reasonable time)—presenting the sound and awaiting a response each time. Once a starting level has been established, the sound level is changed using the “10 down, 5 up” method as follows:

- 1. Reduce the level in 10-dB steps until the sound is not heard.

- 2. Increase the level in 5-dB steps until the sound is heard.

- 3. Repeat 1 and 2 until the subject responds at the same level at least 50% of the time, defined as two out of two, two out of three or two out of four responses.

- 4. Record the threshold as the lowest level heard.

There are a number of degrees of hearing loss, which are defined in Table 7.1. These descriptions are used to provide a general conclusion about a subject’s hearing, and they should be interpreted as such. They consist of a single value that is the average dBHL value across frequencies 250 Hz to 4 kHz. The values are used to provide a general guideline as to the state of hearing, and it must be remembered that there could be one or more frequencies for which the hearing loss is worse than the average.

Consider, for example, the audiogram for damaged hearing given in Figure 2.19. Here, the average dBHL value for frequencies 250 Hz to 4 kHz would be {((10 + 5 + 5 + 15 + 60)/5) = 19 dBHL}, which indicates “no hearing handicap” (see Table 7.1), which is clearly not the case.

The upper part of Figure 7.36 shows audiograms for a young adult with normal healthy hearing within both ears based on air- and bone-conduction tests in the left and right ears (a key to the symbols used on audiograms is given in the figure). Notice that the bone- and air-conduction results lie in the same region (in this case < 20 dBHL) and a summary statement of “no hearing handicap” (see Table 7.1) would be entirely appropriate in this case. Pure-tone audiometry is the technique that enables the normal deterioration of hearing with age, or presbycusis (see Section 2.3 and Figure 2.11) to be monitored.

The lower part of Figure 7.36 shows example audiograms for two hearing-loss conditions. The audiogram in the lower-left position shows a conductive hearing loss in the left ear because the bone-conduction plot is normal, but the air-conduction plot shows a significant hearing loss that would be termed a “moderate hearing loss” (see Table 7.1). This indicates a problem between the outside world and the inner ear, and a hearing aid, tailored to the audiogram, could be used to correct for the air-conduction loss.

The audiogram in the lower-right position shows the effect on hearing of congenital rubella syndrome, which can occur in a developing fetus of a pregnant woman who contracts rubella (German measles) from about 4 weeks before conception to 20 weeks into pregnancy. One possible effect on the infant is profound hearing loss (> 95 dBHL—see Table 7.1), which is sensorineural (note that both the air- and bone-conduction results lie in the same region, indicating an inner-ear hearing loss). Sadly, there is no known cure; in this example, a hearing aid would not offer much help because there is no usable residual hearing above around 500 Hz.

Hearing loss |

Band in dBHL |

Effect on hearing speech |

|---|---|---|

Normal |

below 20 |

Speech heard easily |

Mild |

20 to 40 |

Quiet speech difficult to hear with competing acoustic noise |

Moderate |

41 to 70 |

Moderately loud speech difficult to hear with competing acoustic noise |

Severe |

71 to 95 |

Loud speech difficult to hear with competing acoustic noise |

Profound |

over 95 |

Almost no speech understood |

Pure-tone audiometry tests a subject’s ability to detect different frequencies, and the dBHL values indicate the extent to which the subject’s hearing is reduced at different frequencies. It thus indicates those frequency regions in which a subject is perhaps less sensitive than normally hearing listeners. This could, for example, be interpreted in practice in terms of timbral differences between specific musical sounds that might not be heard or vowel or other speech sounds that might be difficult to perceive. However, pure-tone audiometry does not provide a complete test of a subject’s hearing ability to discriminate between different sounds. Discrimination of sounds does start with the ability to detect the sounds, but it also requires appropriate sound processing to be available. For example, if the critical bands (see Section 2.2) are widened, they are less able to separate the components of complex sounds—the most important to us being speech. In order to test hearing discrimination, speech audiometry is employed that makes use of spoken material.

Speech audiometry is carried out for each ear separately and tests speech-discrimination performance against the pure-tone audiograms for each ear and normative data. When testing one ear, consideration will be given as to whether masking should be presented to the other ear to ensure that only the test ear is involved in the trial. This is particularly important when testing the poorer ear.

Speech audiometry involves the use of an audiometer and speech material that is usually recorded on audio compact disc (CD). Individual single-syllable words such as bus, fun, shop are played to the subject, who is asked to repeat them, providing part words if that is all they have heard. Each spoken response is scored phonetically in terms of the number of correct sounds in the response (for example, if bun or boss was the response for bus, the subject would score two out of three). Words are presented in sets of 10, and if a total phonetic score of 10% or better is achieved for a list, the level is reduced by 10 dB and a new set of 10 words is played, repeating the process until the score falls below 10%. The speech reception threshold (SRT) is the lowest level at which a 10% phonetic score can be achieved. The speech discrimination score (SDS) is the percentage of single-syllable words that can be identified at a comfortable loudness level.

The results from speech audiometry indicate something about the ability to discriminate between sounds, whereas pure-tone audiometry indicates ability to detect the presence of particular frequency components. Clearly detection ability is basic to being able to make use of frequency components in a particular sound, but how a listener might make use of those components depends on their discrimination ability. Discrimination will change if, for example, a listener’s critical bands are widened, which can result in an inability to separate individual components. This could have a direct effect on pitch, timbre and loudness perception. In addition, the ability to hear separately different instruments or voices in an ensemble might be impaired—something that could be very debilitating for a conductor, accompanist or recording engineer.

7.4 Psychoacoustic Testing

Knowledge of psychoacoustics is based on listening tests in order to find out how humans perceive sounds in terms, for example, of pitch, loudness and timbre. Direct measurements are not possible in this context, since direct connections cannot be made for ethical as well as practical reasons, and, in many cases, there is a cognitive dimension (higher-level processing) that is unique to each and every listener. Our knowledge of psychoacoustics therefore is based on listening tests, and this section presents an overview of procedures that are typically used in practice. Apart from offering this as a background to the origins of the psychoacoustic information presented in this book, it also enables readers to think through aspects of the creation of their own listening tests to progress psychoacoustic knowledge in the future.

When carrying out a psychoacoustic test, it is important to note that the responses will be from the opinions of listeners; that is, they will be subjective, whereas an objective test involves a direct physical measurement such as dB SPL, Hz or spectral components. There is no right answer to a subjective test since it is the opinion of a particular listener, and each listener will have an opinion that is unique; the process of psychoacoustic testing is to collect these listener opinions in a nonjudgmental manner. Subjective testing is unlike objective testing, in which direct measurements can be made of physical quantities such as sound pressure level, sound intensity level or fundamental frequency; in a subjective test, a listener is asked to offer an opinion in answer questions such as “Which sound is louder?” “Does the pitch rise or fall?” “Are these two sounds the same or different?” “Which chord is more in tune?” or “Which version do you prefer?”

Psychoacoustic testing involves careful experimental design to ensure that the results obtained can be truly attributed to whatever aspect of the signal is being used as the controlled variable. This process is called controlled experimentation. A starting point for experimental design may well be a hunch or something we believe to be the case from our own listening experience or from anecdotal evidence. A controlled experiment allows such listening experiences to be carefully explored in terms of which aspects of a sound affect them and how. Psychologists call a behavioral response, such as a listening experience, the dependent variable, and those aspects that might affect it are called the independent variables. Properly controlled psychoacoustic testing involves controlling all the independent variables so that any effects observed can be attributed to changes in the specific independent variable being tested.

7.4.1 Psychoacoustic Experimental Design Issues

One experimental example might be to explore what aspects of sound affect the perception of pitch. The main dependent variable would be f0, but other aspects of sound can affect the perceived pitch such as loudness, timbre and duration (see Section 3.2). Experimentally, it would also be very appropriate to consider other issues that might affect the results—some of which may not initially seem obvious—such as the fact that hearing abilities of the subjects can vary with age (see Section 2.3) and general health or that perhaps subjects’ hearing should be tested (see Section 7.2).

The way in which sounds are presented to subjects can also make a difference, since the use of loudspeakers would mean that the acoustics of the room will alter the signals arriving at each ear (see Chapter 6), whereas the use of headphones would not. There may be background acoustic noise in the listening room that could affect the results, and this may even be localized, perhaps to a ventilation outlet. Subjects can become tired (listener fatigue) or distracted or may perform better at different times of the day. The order in which stimuli are presented can have an effect—perhaps of alerting the listener to specific features of the signal, which prepares them better for a following stimulus. These are all potential independent variables and would need proper controlling.

Part of the process of planning a controlled experiment is thinking through such aspects (the ones given here are just examples and are not presented as a definitive list) before carrying out a full test. It is common to try a pilot test with a small number of listeners to check the test procedure and the presence of any additional independent variables. Some independent variables can be controlled by ensuring they remain constant (for example, the ventilation might be turned off, and measures could be taken to reduce background noise). Others can be controlled through the test procedure (for example, any learning effect could be explored by playing the stimuli in a different order to different subjects or asking each listener to take the test twice, with the stimulus order being reversed the second time).

7.4.2 Psychoacoustic Rating Scales

For many psychoacoustic experiments, the request to be given to the listeners is straightforward. In the pitch example, one might ask listeners to indicate which of two stimuli has the highest pitch or whether the pitch of a single stimulus was changing. In experiments in which the objective is to establish the nature of change in a sound, such as whether one synthetic sound is more natural than another, it is not so easy. A simple “yes” or “no” would not be very informative since it would not indicate the nature of the difference. A number of rating scales have been produced that are commonly used in such cases in which the listener is invited to choose the point on the scale that best describes what they have heard. Some examples are given in what follows.

When speech signals are rated subjectively by listeners, perhaps for the evaluation of the signal provided by a mobile phone or the output from a speech-synthesis system, it is usually the quality of the signal that is of interest. The number of listeners is important, since each will have a personal opinion, and it is generally suggested that at least 16 are used to ensure that statistical analysis of the results is sufficiently powerful. However, the greater the number of listeners, the more reliable the results are. It is also most appropriate to use listeners who are potential users of whatever system might result from the work and listeners who are definitely not experts in the area. A number of rating scales exist for the evaluation of the quality of a speech signal, and the following are examples:

- absolute category-rating (ACR) test

- degradation category-rating (DCR) test

- comparison category-rating (CCR) test

The absolute category-rating (ACR) test requires listeners to respond with a rating from the five-point ACR rating scale shown in Table 7.2. The results from all the listeners are averaged to provide a mean opinion score (MOS) for the signals being tested. Depending on the purpose of the test, it might be of more interest to present the results of the listening test in terms of the percentage of listeners that rated the presented sounds in one of the categories such as good or excellent or poor or bad.

A comparison is requested of listeners in a degradation category-rating (DCR) test, and this usually involves a comparison between a signal before and after some form of processing has been carried out. The assumption here is that the processing is going to degrade the original signal in some way, for example after some sort of coding scheme such as MP3 (see Section 7.8) has been applied, where one would never expect the signal to be improved. Listeners use the DCR rating scale (see Table 7.2) to evaluate the extent to which the processing has degraded the signal when comparing the processed version with the unprocessed original. The results are analyzed in the same way as for the ACR test, and these are sometimes referred to as the degradation mean opinion score (DMOS).

Rating |

ACR description—MOS |

DCR description—DMOS |

|---|---|---|

5 |

Excellent |

Degradation not perceived |

4 |

Good |

Degradation perceived but not annoying |

3 |

Fair |

Degradation slightly annoying |

2 |

Poor |

Degradation annoying |

1 |

Bad |

Degradation very annoying |

In situations in which the processed signal could be evaluated as being either better or worse, a comparison category-rating (CCR) test can be used. Its rating scale is shown in Table 7.3, and it can be seen that it is a symmetric two-sided scale. Listeners are asked to rate the two signals presented in terms of the quality of the second signal relative to the first. The CCR test might be used if one is interested in the effect of a signal-processing methodology being applied to an audio signal, such as noise reduction, in terms of whether it has improved the original signal.

7.4.3 Speech Intelligibility: Articulation Loss

Psychoacoustic experiments may be used to define thresholds of perception and rating scales for small degradations, such as the quality of the sound. However, at the other end of the quality scale is the case in which the degradation, due to noise distortion, reverberation and so on is so severe that it affects the intelligibility of speech. This is also measured and defined by the results of psychoacoustic experiments, but in these circumstances, instead of annoyance, the dependent variable is the proportion of the words that are actually heard correctly.

Two parameters are found to be important by those who work on speech: the “intelligibility” and the “quality” or “naturalness” of the speech. Both reflect human perception of the speech itself, and while they are most directly measured subjectively with panels of human listeners, research is being carried out to make equivalent objective measurements of these because of the problems of setting up listening experiments and the inherent inter- and intralistener variability. The relationship between intelligibility and naturalness is not fully understood. Speech that is unintelligible would usually be judged as being unnatural. However, muffled, fast or mumbling speech is natural but less intelligible, and speech that is highly intelligible may or may not be unnatural.

Subjective measures of intelligibility are often based on the use of lists of words that rhyme, differing only in their initial consonant. In a diagnostic rhyme test (DRT), listeners fill in the leading consonants on listening to the speech, and often the possible consonants will be indicated. In a modified rhyme test (MRT), each test consists of a pair-wise comparison of acoustically close initial consonants such as feel—veal, bowl—dole, fought—thought, pot—tot. The DRT identifies quite clearly in what area a speech system is failing, giving the designers guidance on where they might make modifications. DRT tests are widely accepted for testing intelligibility, mainly because they are rigorous, accurate and repeatable. Another type of testing is “logatom” testing.

In logatom testing, nonsense words such as “shesh” and “bik” are placed into a carrier phrase such as “Can con buy < logatom, e.g., “shesh” here > also” to ensure that they are all pronounced with the same inflection. The listener then has to identify the nonsense word and write it down. Using nonsense words has the advantage of removing the higher language processing that we use to resolve words with degraded quality and so provides a less biased measure. The errors listeners make show how the system being tested damages the speech, such as particular letter confusions, and provides a measure of intelligibility. Typically lists of 50 or 100 logatoms are used as a compromise between accuracy and fatigue, as discussed earlier. Although in theory any consonant-vowel-consonant may be used, it has been the authors’ experience that rude or swear words must be excluded, because the talker usually cannot pronounce them with the same inflection as normal words.

All of these tests result in a measure of the number of correctly identified words. This, as a percentage of the total, can be used as a measure of intelligibility or articulation loss, respectively. As consonants are more important in Western languages than vowels, this measure is often focused on just the consonants to form a measure called %ALcons (articulation loss; consonants) which is the percentage of consonants that are heard incorrectly. If this is greater than 15%, the intelligibility is considered to be poor. Although articulation loss is specific to speech—an important part of our auditory world—much music also relies on good articulation for its effect.

Subjective testing is a complex subject that could fill a complete book just on its own! For more details, see Bech and Zacharov (2006).

Rating |

Description |

|---|---|

3 |

Much better |

2 |

Better |

1 |

Slightly better |

0 |

About the same |

−1 |

Slightly worse |

−2 |

Worse |

−3 |

Much worse |

7.5 Filtering and Equalization

One of the simplest forms of electronic signal processing is to filter the signal in order to remove unwanted components. For example, often low-frequency noises, such as ventilation and traffic rumble, need to be removed from the signal picked up by the microphone. A high-pass filter would accomplish this, and mixing desks often provide some form of high-pass filtering for this reason. High frequencies also often need to be removed to either ameliorate the effects of noise and distortion or remove the high-frequency components that would cause alias distortion in digital systems. This is achieved via the use of a low-pass filter. A third type of filter is the notch filter, which is often used to remove tonal interference from signals. Figure 7.37 shows the effect of these different types of filter on the spectrum of a typical music signal.

In these cases, the ideal would be to filter the signal in a way that minimized any unwanted subjective effect on the desired signal. Ideally, in these cases, the timbre of the sound being processed should not change after filtering, but in practice, there will be some effects. What are these effects and how can they be minimized in the light of acoustic and psychoacoustic knowledge?

The first way of minimizing the effect is to recognize that many musical instruments do not cover the whole of the audible frequency range. Few instruments have a fundamental frequency that extends to the lowest frequency in the audible range, and many of them do not produce harmonics or components that extend to the upper frequencies of the audible range. Therefore, in theory, one can filter these instruments such that only the frequencies present are passed with no audible effect. In practice, this is not easily achieved for two reasons:

- Filter shape: Real filters do not suddenly stop passing signal components at a given frequency. Instead, there is a transition from passing the signal components to attenuating them, as shown in Figure 7.38. The cutoff frequency of a filter is usually expressed as the point at which it is attenuating the signal by 3 dB relative to the pass-band; see Figure 7.38. Thus if a filter’s cutoff is set to a given frequency, there will be a region within the pass-band that affects the amplitude of the frequency components of the signal. This region can extend as far as an octave away from the cutoff point. Therefore, in practice, the filter’s cutoff frequency must be set beyond the pass-band that one would expect from a simple consideration of the frequency range of the instruments in order to avoid any tonal change due to change in frequency content caused by the filter’s transition region. As the order of the filter increases, both the slope of the attenuation as a function of frequency and the sharpness of the cutoff increase; this reduces the transition region effects but, unfortunately, increases the time domain effects.

- Time domain effects: Filters also have a response in the time domain. Any form of filtering that reduces the bandwidth of the signal will also spread it over a longer period of time. In most practical filter circuits, these time domain effects are most pronounced near the cutoff frequency and become worse as the cutoff becomes sharper. Again, as in the case of filter shape, these effects can extend well into the pass-band of the filter. Note that even the notch filter has a time response, which gets longer as the notch bandwidth decreases. Interestingly, particular methods of digital filtering are particularly bad in this respect because they result in time domain artifacts that precede the main signal in their output. These artifacts are easily unmasked and so become subjectively disturbing. Again, the effect is to require that the filter cutoff be set beyond the value that one would expect from a simple consideration of the frequency range of the instruments.

Because of these effects, the design of filters that achieve the required filtering effect without subjectively altering the timbre of the signal is difficult.

The second way of minimizing the subjective effects is to recognize that the ear uses the spectral shape as a cue to timbre. Therefore, the effect of removing some frequency components by filtering may be partially compensated by enhancing the amplitudes of the frequency components nearby, as discussed in Chapter 5. Note that this is a limited effect and cannot be carried too far. Figure 7.39 shows how a filter shape might be modified to provide some compensation. Here a small amount of boost, between 1 dB and 2 dB, has been added to the region just before cutoff in order to enhance the amplitude of the frequencies near those that have been removed.

7.5.1 Equalization and Tone Controls