13

Future Testing Challenges

We have seen many aspects of systems-of-systems testing, although we have only scratched the surface. Many challenges lie ahead and, as Niels Bohr is said to have remarked, “Predictions are very difficult, especially if they concern the future.” Nevertheless, let us attempt this perilous exercise and list some of these challenges below.

13.1. Technical debt

Technical debt is a reality in any development project. It appears because we make choices, sometimes take shortcuts or postpone updates. Technical debt is not just a problem for developers; it exists at all levels, including testing. Here is a non-exhaustive list of common forms of technical debt:

– Specifications, requirements or user stories that are ambiguous, contradictory, missing or obsolete, and which have not been reviewed (or removed if obsolete) before being provided to the development and test teams. These functionalities will have to be reviewed, corrected or – in the case of obsolete elements – removed, and then retested, resulting in additional costs.

– Code, architecture of components and microservices, modularity and coupling of components, databases (relational or NoSQL), organization of data with or without orchestrators, distribution of components between the frontend, the backend and the cloud, etc. Any modification of the architecture and the organization which underlies the system will require modifications and tests, therefore additional costs.

– Absent test conditions or test cases for requirements considered minor, resulting in a discrepancy between the developed system and the test actions planned to validate it. Parts of the system will remain untested.

– Lack of traceability from the requirements or user stories to the test cases that validate them, resulting in the design – and execution – of certain duplicate tests and the non-coverage of other functionalities, requirements or user stories.

– Non-automation of certain tests considered minor or relating to internal technical components. This can be intentional (e.g. difficulty in automating) or due to a lack of time or resources. These components cannot be tested automatically, which increases the risk of regressions or false negatives.

– Design of fragile tests (e.g. hard-coded constants and data, non-parameterized capture and replay, etc.). This is often due to a test automation policy – for functional or non-functional tests – that does not take into account the specific needs of automated tests (e.g. association of test cases with components within DevOps solutions, limitation of regression tests based on impact analyses, etc.). Test automation should be managed exactly like a development project.

– Anomalies not retested after their corrections, and/or non-automation of these retests for inclusion in smoke test campaigns. Some anomalies are corrected and deployed without being retested (e.g. urgent correction or patch), which may cause side effects. It is important to maintain the level of test automation as close as possible to the functionalities of the developed system.

– Tests no longer working due to interface or code changes. These tests may be disabled during test execution campaigns, or may generate incorrect results that are ignored and that can hide other faulty tests. Often, the absence of upward traceability to the requirements makes it impossible to identify which requirements are no longer validated.
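The traceability problems listed above (uncovered requirements, duplicate tests, disabled tests hiding gaps) can be illustrated with a minimal sketch. The function name `analyze_traceability`, the identifiers `REQ-n` and `TC-n`, and the rule that two tests tracing to the same set of requirements are duplicate candidates are all assumptions made for illustration, not a prescribed method.

```python
from collections import defaultdict


def analyze_traceability(requirements, test_links, disabled_tests=frozenset()):
    """Return (uncovered requirements, groups of possibly duplicate tests).

    requirements   : iterable of requirement identifiers
    test_links     : dict mapping a test identifier to the requirements it validates
    disabled_tests : tests that exist but are not executed (e.g. broken tests)
    """
    # Map each requirement to the ACTIVE tests that validate it.
    covering = defaultdict(set)
    for test_id, req_ids in test_links.items():
        if test_id in disabled_tests:
            continue
        for req_id in req_ids:
            covering[req_id].add(test_id)

    uncovered = sorted(r for r in requirements if not covering[r])

    # Tests tracing to exactly the same requirements are duplicate candidates.
    by_requirements = defaultdict(list)
    for test_id, req_ids in test_links.items():
        by_requirements[frozenset(req_ids)].append(test_id)
    duplicates = sorted(sorted(ids) for ids in by_requirements.values() if len(ids) > 1)

    return uncovered, duplicates


if __name__ == "__main__":
    reqs = ["REQ-1", "REQ-2", "REQ-3"]
    links = {"TC-1": ["REQ-1"], "TC-2": ["REQ-1"], "TC-3": ["REQ-2"]}
    print(analyze_traceability(reqs, links, disabled_tests={"TC-3"}))
```

In this hypothetical data set, disabling `TC-3` silently removes the only validation of `REQ-2`, which only the upward traceability reveals.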

13.1.1. Origin of the technical debt

Technical debt arises from decisions – intentional or not – that seem reasonable in the short term. Many reasons – often valid at the time – are at the origin of these technical debts, such as:

– developments including many updates over time;

– beginning of developments when the requirements have not been correctly and exhaustively validated, in order to save time but often entailing obligations for corrections (or refactoring) in the future;

– business pressures pushing for rapid delivery of imperfect solutions, with a lack of understanding – by the business – of the implications of these decisions;

– lack of modularity and strong coupling of functions making the system and its architecture inflexible, lack of knowledge on the part of the developers resulting in code that is not elegant and therefore difficult to maintain;

– lack of consistent test suites encouraging quick but risky bug fixes;

– missing documentation and poorly documented code; the work to create the documentation will constitute a technical debt;

– lack of collaboration and knowledge sharing in the organization;

– development of multiple branches – for individual customers – generating technical debt to merge the branches within a common core;

– delays in refactoring, rendering parts of the code useless or difficult to maintain;

– non-alignment with standards, including industry standards (tools, frameworks, etc.), or even change of tool or frameworks, requiring rewrites (which are all technical debts);

– lack of ownership, or outsourced developments that require internal teams to rewrite or refactor the code;

– last minute changes with potential impacts throughout the project but lack of budget or sufficient time to document and test these changes.

It is necessary to identify and measure this technical debt, and then to treat it in a sufficiently short time. Technical debt must be seen at the level of the system-of-systems, and also at the level of each of the systems, subsystems and software that make up this system-of-systems.

Warning: sooner or later, technical debt will have a ratchet effect on the level of quality accepted by users and customers. Reducing this debt will then have a financial cost and cause significant delays. Agile methods propose to limit technical debt through code refactoring. Unfortunately, this refactoring is rarely carried out by designers during iterations, and customers do not want refactoring-focused iterations that add no new functionality.

13.1.2. Technical debt elements

Among the elements entering into the concept of technical debt we have (non-exhaustive list):

– Updates to system-related documentation, whether requirements, specifications, technical architecture definition, test-related documentation, etc.

– Elements not executed, related to processes – design or test – incomplete or not carried out for one reason or another (e.g. unit tests not executed to successfully meet delivery deadlines).

– The non-correction of anomalies or their postponement to an undefined later date. This leads to a decrease in the quality of the software, and also an impact on future developments and their tests.

– A lack of analysis of the root causes of defects and the location of anomalies. The absence of root cause analysis means that these causes cannot be corrected and that other anomalies of the same type will recur (a flagrant lack of efficiency). Fault location analysis identifies components that statistically contain the most anomalies and may need to be corrected quickly.

– The absence of metrics and/or measures, which makes it impossible to improve forecasts. Here, it is the continuous improvement process that suffers from this lack of information: for example, when maintainability (or testability) is not measured in relation to the number of function points of the software.

13.1.3. Measuring technical debt

Technical debt is difficult to measure; often it is not even measured. Failure to take this into account will result in an accumulation of debts which will impact the product in the short term. Measuring the technical debt therefore makes it possible to identify the increase in this debt and to determine whether this debt is becoming too high.

Because technical debt can come from a variety of sources, it needs to be measured in multiple places. One way to measure the technical debt is to calculate the spent cost and the – planned – cost of correction and reconcile it with the optimized design cost to reach a Technical Debt Ratio (TDR) according to the formula:
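A commonly used form of this ratio, stated here as an assumption since other definitions exist, divides the cost of correction (already spent plus planned) by the optimized design cost:

```latex
\mathrm{TDR} = \frac{C_{\text{correction}}}{C_{\text{design}}} \times 100\%
\qquad\text{with}\qquad
C_{\text{correction}} = C_{\text{spent}} + C_{\text{planned}}
```

The symbols $C_{\text{spent}}$, $C_{\text{planned}}$ and $C_{\text{design}}$ are illustrative names for the three costs named in the paragraph above.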

A high ratio reflects a poor level of quality. This measurement should be done for the requirements (user stories, specifications, architecture, etc.), for the code and for the tests.

We can also identify the presence of technical debt by focusing on the following elements:

– increase in design and development costs;

– decline in quality and increase in the number of anomalies;

– increase in Time-to-Value, and increased delays between deliveries;

– reduced team velocity;

– too much cyclomatic complexity;

– etc.
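Cyclomatic complexity, the one indicator in this list that can be computed directly from the code, can be approximated in a few lines. The sketch below is a rough estimate (one plus the number of decision points per function) built on Python's standard `ast` module; it is not the algorithm of any of the tools cited in this chapter, and the `SAMPLE` code is invented for the example.

```python
import ast

# Node types counted as one decision point each (a simplifying assumption).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                  ast.IfExp, ast.comprehension)


def cyclomatic_complexity(source):
    """Approximate McCabe complexity per function: 1 + decision points.

    Decisions inside nested functions are also counted in the enclosing
    function, which is acceptable for a rough indicator.
    """
    result = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = 1
            for child in ast.walk(node):
                if isinstance(child, DECISION_NODES):
                    score += 1
                elif isinstance(child, ast.BoolOp):
                    # "a and b and c" adds two extra paths
                    score += len(child.values) - 1
            result[node.name] = score
    return result


SAMPLE = '''
def classify(x, y):
    if x > 0 and y > 0:
        return "both"
    for i in range(3):
        if i == x:
            return "match"
    return "none"
'''

if __name__ == "__main__":
    print(cyclomatic_complexity(SAMPLE))
```

Tracking such a value per component over successive deliveries gives a trend, which is usually more informative than any single threshold.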

Some tools pride themselves on measuring the technical debt of software (e.g. SQUORE, SonarQube, Kiuwan, etc.), but we also need to assess whether the technical debt is in the organization’s processes.

13.1.4. Reducing technical debt

As with financial debt, the cost of technical debt increases over time. In the short term, the decision to postpone an activity may seem sensible, but there is rarely time to carry out these activities later. These undone activities therefore accumulate, like debt in the financial world.

These debts will have to be repaid. One way to reduce this technical debt is to ensure – for example, during the test closure activities of an iteration or a level – that no anomaly remains unresolved. Any unresolved anomaly carried over to the next iteration (or version) should be treated as a priority in that iteration. Another way to reduce technical debt – once it has been detected – is to dedicate part of the resources allocated to a campaign (or a sprint) to repaying the technical debt associated with the requirements or User Stories affected by this campaign or sprint. This solution focuses the elimination of the debt on the components that undergo modifications, without worrying about the components that do not evolve. This limits the effort to components and areas undergoing changes.

Development teams deal with their technical debt in refactoring phases; test teams must deal with their own technical debt in each campaign and during each sprint. In an Agile or DevOps development framework, testing activities should be considered as important as development activities. Indeed, the identification of a failure – by a test case becoming “failed” during a campaign – can delay the production launch, especially when implementing “Continuous Testing” within the framework of DevOps.
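As a minimal sketch of this second approach, the following code reserves a share of the sprint capacity for debt and spends it only on components modified in the sprint. The 20% share, the smallest-item-first ordering and all item names are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DebtItem:
    name: str
    component: str
    effort_days: float


def plan_debt_repayment(debt_items, changed_components,
                        sprint_capacity_days, debt_share=0.2):
    """Select debt items on components changed in this sprint, within a budget.

    debt_share is the fraction of sprint capacity reserved for debt
    (an illustrative default, not a recommended value).
    """
    budget = sprint_capacity_days * debt_share
    candidates = sorted(
        (d for d in debt_items if d.component in changed_components),
        key=lambda d: d.effort_days,  # assumption: cheapest items first
    )
    selected, used = [], 0.0
    for item in candidates:
        if used + item.effort_days <= budget:
            selected.append(item)
            used += item.effort_days
    return selected, used


if __name__ == "__main__":
    backlog = [
        DebtItem("update billing spec", "billing", 2.0),
        DebtItem("automate login retest", "auth", 3.0),
        DebtItem("document legacy export", "export", 4.0),
        DebtItem("fix fragile billing test", "billing", 1.0),
    ]
    chosen, used = plan_debt_repayment(backlog, {"billing", "auth"}, 20)
    print([d.name for d in chosen], used)
```

The `export` item is never selected here because its component is not modified in the sprint, which mirrors the idea of limiting the effort to areas undergoing changes.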

Reducing technical debt is a major challenge and will become more so with systems-of-systems and complex systems.

In the context of software testing, “Technical Debt” will be considered as all things that are not (or have not been) done. For example, the non-execution of certain activities allowing anomalies to pass, which are detected late or by the customers after delivery, or the fact of not writing the documentation of the software or system developed. It will be necessary to perform these activities and their cost will increase over time.

13.2. Systems-of-systems specific challenges

Systems-of-systems are subject to particular challenges including:

– long lifespan of the systems and the software composing them, involving:

- the obligation to keep test environments and equipment in configurations that could become obsolete, in order to keep test environments representative of production,

- the need to have maintenance and test teams that are kept for many years, so as not to lose the functional and technical knowledge related to these applications,

- the need to have up-to-date and exhaustive documentation, in order to allow a transfer of knowledge to the personnel who will be involved in future maintenance;

– obligation to manage multiple software configurations (including OS compatibility, interactions between applications, flow definitions); these aspects of configuration management – both software and hardware (for EIS) – involve managing a very large number of versions, especially if continuous delivery methods are in place;

– evolution of requirements and risks, for each of the components and elements, each of the applications, each subsystem and each system;

– absence of a single referent with knowledge of the entire system-of-systems, specialization of knowledge on each of the systems without taking into account possible interactions;

– loss of knowledge when individuals leave without the documentation having been updated;

– obsolescence of components (HW/SW);

– management of interfaces and their evolutions;

– evolution of standards;

– ability to perform end-to-end (E2E) tests;

– separate evolution of components (including hardware, operating systems, other software), replaceability of components; addition of extra layers (display on tablets, evolution of multi-tier systems).

13.3. Correct project management

For many years, the Standish Group has regularly published the “Chaos Report”, and the number of failed or seriously overdue or overbudget projects remains globally stable (close to 70%, of which 19% failed). The improvements identified over the years relate mainly to small projects – less than 10,000 function points – which can be properly mastered by Agile teams. We have confirmation – in Jones (2018) – that large projects (10,000 function points and more) such as systems-of-systems have a failure rate greater than 30%, which can go up to 47.5% for systems with 100,000 function points and more.

The need for good project management is obvious, but the organizational and technical complexity of systems-of-systems demands mastery of all aspects of project management: appropriate project management tools, metrics and reliable periodic measurements are needed to obtain an impartial view of the progress and quality of the project and of each of its components, and to anticipate hazards. This implies putting in place best practices in project management, such as those proposed by the PMBOK (from the Project Management Institute) or by PSP/TSP, developed at Carnegie Mellon University by Watts S. Humphrey (Humphrey 1998; Humphrey and Thomas 2010), and implementing effective project management tools that take into account the management of critical paths and critical chains.

Some Agile advocates will consider that projects should be self-managing, but that does not seem appropriate for systems-of-systems projects given the interplay between a sequential view for higher levels and an agile and reactive view for lower levels. A SAFe type solution could be suitable by integrating periodic deliveries (Release Trains) comprising Agile sprints in a sequential environment. However, the requirements for hardware artifacts make implementing Agile solutions quite problematic.

The specifics related to testing software, systems with preponderant software and systems-of-systems are important and not covered in most project management frameworks, nor taught in most IT project managers’ courses. To limit the number of failures of large-scale projects, aspects related to testing (reviews, inspections, static analyses, coverage of quality characteristics, etc.) must be mastered, and this requires the adaptation of current initial training as well as specific continuing education.

13.4. DevOps

DevOps is a rising trend in the industry. As mentioned previously, it is a Lean version of agility whose objective is to streamline exchanges between development and operations via rapid and constant feedback to development teams (e.g. via the automation of tests) and automation of deployment activities (design of test environments and deployments).

The purpose of Lean is to limit loss of value. Among the losses, we have:

– loss of time, waiting times before an activity. Therefore, the grouping of several evolutions before their tests becomes a factor of loss of value; the sooner developers are made aware of their anomalies, the sooner they will be able to fix them and understand the source of these anomalies. This involves both build automation and test automation aspects;

– the accumulation of potential losses – technical debt – such as anomalies, even minor ones, not corrected and postponed to a later delivery;

– material losses, such as the need to rewrite tests or code.

One of the losses in value is the time lost between the creation of defects – during development – and the detection of these defects during testing. Early detection and correction of defects provides information to developers before costs rise too high.

In a systems-of-systems framework, the main DevOps challenges include:

– the quality and completeness of the documentation, whether we are talking about the description of requirements, functionalities, Epics, Features or User Stories, architecture or development documentation, or even the description of tests; this information – as well as the data allowing traceability from the requirements to the execution of the tests – is essential to maintain the consistency of the systems;

– execution of regression tests at each test level and for each iteration, taking into account the needs for automation of these tests, maintenance of previous tests (deletion or modification);

– the aspects of versioning and configuration management, in order to control the various hardware, parametric or software configurations of systems-of-systems at customers;

– the management of test data for each of the environments, and the potential coexistence of different versions of the same data;

– the need for multiple environments to run the tests for each test level, the maintenance of these environments and their upgrading;

– the difficulty of obtaining environments for system integration testing (SIT) and system testing of the system-of-systems, insofar as these environments must be as representative as possible and their management – including configuration management, evolution management and anomaly management – is complex and costly.

13.4.1. DevOps ideals

In Kim (2019), the five DevOps ideals are defined as follows:

– locality and simplicity, in order to avoid internal complexity, whether in the code or in our organizations;

– focus, flow and joy, so that our daily work is pleasant, non-repetitive and allows us to deliver something quickly (and that we can have a quick return on these deliveries);

– improvement of daily work, so that each day is better than the day before, and therefore, that coming to work is a pleasure rather than an obligation;

– psychological safety, that is, avoiding a culture in which the fear of reporting bad news leads people to hide it;

– customer focus, where we constantly ask ourselves whether what we are doing really brings value to our customer.

These ideals are interesting and ambitious in a world where many organizations are struggling to survive. Given the extensive interweaving of companies and the use of umbrella companies to limit the number of suppliers (and allow revenue to be extracted without providing real added value), these ideals seem “idealistic”, even though they are completely valid in smaller organizations.

13.4.2. DevOps-specific challenges

As seen previously, DevOps offers to automate integration (CI for Continuous Integration), deployment (CD for Continuous Deployment) and therefore also testing (CT for Continuous Testing). Such automation makes it possible to reduce delays and ensure a level of quality, but it assumes that the automated verification and validation activities – and their reporting – are reliable. It will therefore be necessary to consider the use of techniques such as fault injection or statistical analysis of test results and production feedback.
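Fault injection, in its simplest form, consists of deliberately introducing a known defect and checking that the automated checks report it. The sketch below illustrates the idea on a toy example; `apply_discount`, `run_checks` and the injected fault are hypothetical stand-ins, not part of any real pipeline.

```python
def apply_discount(price, percent):
    """Function under test (hypothetical business rule)."""
    return price - price * percent / 100


def faulty_discount(price, percent):
    """Injected fault: the discount is added instead of subtracted."""
    return price + price * percent / 100


def run_checks(func):
    """Tiny stand-in for an automated suite: True when every check passes."""
    return all([
        func(100, 0) == 100,
        func(100, 10) == 90,
        func(200, 50) == 100,
    ])


def weak_checks(func):
    """A suite that only exercises the 0% case and cannot see the fault."""
    return func(100, 0) == 100


def suite_detects_fault(suite, original, mutant):
    """A suite is credible for this fault if it passes on the original
    implementation and fails on the version containing the injected fault."""
    return suite(original) and not suite(mutant)


if __name__ == "__main__":
    print(suite_detects_fault(run_checks, apply_discount, faulty_discount))
    print(suite_detects_fault(weak_checks, apply_discount, faulty_discount))
```

A suite that stays green on the faulty version, like `weak_checks` here, reports success without providing evidence of quality, which is precisely the reliability concern raised above.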

An important aspect to manage in the context of DevOps is the separation of the technical aspect from the business aspect. DevOps, continuous integration, and continuous delivery focus on delivering software components. The regression test cases they will implement will focus – as with TDD, ATDD and BDD – on the operation of the software component, but not directly on the behavior of the system beyond the component. Failures – regressions – may appear in functional elements that are not directly in contact with the modified component (cases of remote regression). It will therefore be necessary to plan automated test campaigns to identify remote regressions.
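One possible input for planning such campaigns is an impact analysis over the dependency graph: every component that depends, directly or transitively, on the modified one is a candidate for regression testing. The sketch below assumes that a dependency map is available; the component names are invented for the example.

```python
from collections import deque


def impacted_components(depends_on, modified):
    """Return all components that directly or transitively depend on `modified`.

    depends_on maps each component to the components it uses.
    """
    # Invert the edges: for each component, who depends on it?
    dependents = {}
    for component, dependencies in depends_on.items():
        for dependency in dependencies:
            dependents.setdefault(dependency, set()).add(component)

    # Breadth-first traversal from the modified component.
    seen = {modified}
    queue = deque([modified])
    while queue:
        current = queue.popleft()
        for nxt in dependents.get(current, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {modified}


if __name__ == "__main__":
    graph = {
        "invoicing": ["pricing"],
        "reporting": ["invoicing"],
        "pricing": ["catalog"],
        "search": ["catalog"],
        "auth": [],
    }
    # "reporting" never calls "pricing" directly but is still impacted.
    print(sorted(impacted_components(graph, "pricing")))
```

Such an analysis only finds regressions along declared dependencies; interactions through shared data or shared environments still require end-to-end campaigns.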

13.5. IoT (Internet of Things)

The IoT represents an interesting challenge for systems-of-systems testing. Sometimes called “web 3.0”, the IoT covers telemetry and communications between connected objects for various uses, including in critical areas such as health and safety. We may also include areas such as home automation (any intrusion into our privacy may have an impact on our security and on the confidentiality of our personal data), production (e.g. refineries, power plants, etc.) or the transport of energy, without this list being exhaustive. For example, sensors placed on certain parts of our vehicle send information to a central unit to notify us in real time of its status, and also to notify the manufacturer or our mechanic in the event of a breakdown or failure. All these aspects of telemetry are grouped under the term IoT.

We can clearly see that objects will be developed by different organizations and that communications will use different standards, or different versions of the same standards. The information exchanged by these objects will vary between object and connector types. As the objects are connected via radio links (Wi-Fi, Bluetooth, ZigBee, Z-Wave, NFC, etc.), users are not always aware of the information exchanged – sometimes without their knowledge – nor of the impacts that these objects can have on their daily life (especially in the event of malfunction).

Potential failures, and the ways in which defects can be exploited for unauthorized purposes, must be identified and analyzed as exhaustively as possible, and mitigation or even securing activities must be considered.

The combination of functional paths – valid and exceptional – is almost unlimited here, and the data exchange media are many and varied (RFID, QR Codes, ShotCodes, SMS, GPS-type virtual tags, etc.). Sensors can provide medical data (blood sugar measurement for diabetics) or geographical location, and may or may not interact with social networks (e.g. sending targeted advertising messages, monitoring the quality of service in a store, etc.). This involves integrating components with varying levels of security into networks, which can weaken or compromise the security of the other network elements. Similarly, the use of voice detectors and analyzers (e.g. in vehicles or at home) implies that conversations can be listened to and analyzed, and therefore that data can be extracted and used for good or ill.

As part of the end-to-end testing needed to validate systems-of-systems, one of the challenges will be to generate enough consistent test data to ensure that trends within a valid range of data are detected (e.g. to detect leaks or wear), to identify any data outside valid ranges, and to identify losses of connection with the sensors, which could indicate sensor or communication failures. It is indeed necessary to distinguish a display failure from the absence of data to display.

The rapid evolution of techniques and the appearance of new objects, new functionalities and new exchanges of – confidential – data mean that the identification of new attacks will be more difficult. Similarly, the use of central servers, or the ability of manufacturers to impose – without the knowledge of system users – automatic updates including usage limitations or changes to contractual conditions, is becoming widespread and can be one of the vectors of a global attack on the systems-of-systems to which the IoT objects are connected, or even an open door to regressions or unwanted side effects.
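The three situations described above (a trend inside the valid range, values outside the range, and loss of connection) can be expressed as checks on a series of timestamped readings. The sketch below is deliberately naive: the trend is taken as the difference between the last and first valid values, and every threshold and reading is an invented assumption.

```python
def check_sensor_series(readings, valid_min, valid_max, max_gap_s, drift_limit):
    """Analyze a list of (timestamp_seconds, value) pairs sorted by time.

    Returns out-of-range readings, gaps between consecutive readings longer
    than max_gap_s (possible sensor or communication failure), and a naive
    trend alert based on the first and last valid values.
    """
    out_of_range = [(t, v) for t, v in readings
                    if not (valid_min <= v <= valid_max)]
    gaps = [
        (t0, t1)
        for (t0, _), (t1, _) in zip(readings, readings[1:])
        if t1 - t0 > max_gap_s
    ]
    valid = [v for _, v in readings if valid_min <= v <= valid_max]
    drift = valid[-1] - valid[0] if len(valid) >= 2 else 0.0
    return {
        "out_of_range": out_of_range,
        "gaps": gaps,
        "drift": drift,
        "trend_alert": abs(drift) > drift_limit,
    }


if __name__ == "__main__":
    # Hypothetical pressure readings: slow leak, one spike, one silent period.
    pressure = [(0, 5.0), (10, 4.9), (20, 4.8), (30, 9.9), (70, 4.6), (80, 4.5)]
    print(check_sensor_series(pressure, valid_min=0.0, valid_max=8.0,
                              max_gap_s=15, drift_limit=0.4))
```

A test data set for end-to-end validation would need to contain each of these three patterns, so that a missing alert can be attributed to the system under test rather than to the data.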

13.6. Big Data

The concept of Big Data is often associated with the use of predictive analytics, behavioral analytics or other analytical methods that extract value from very large volumes of data. The use of the Internet and of surveillance cameras (with facial recognition) allows the storage of very large volumes of information on users: their behavior, their interactions in social networks, their browsing profiles, their consumption patterns and their purchases (including medicines). All this information can be analyzed by algorithms in order to identify trends.

These algorithms should be checked and validated before being used. However, the only way to validate them, and to ensure that they do not provide erroneous information, is to test them. To do this, it will be necessary to design datasets that represent behaviors matching the profiles sought, and also behaviors that do not match them, or only partially, in order to determine the point at which the algorithm fails.

Using mathematical analysis, statistics and other concepts to infer laws (e.g. regression, nonlinear relationships and causality of effects) is a way to reveal relationships and dependencies, and even to predict results and behaviors. It seems risky to make such predictions if the sample is not statistically representative and, even if it is representative, such predictions are not certainties and come with no guarantees.

It is clear that the complexity of these algorithms will require testers whose knowledge of statistics, human behavior, etc. is at least equal to that of the designers of these algorithms. The way the test data are created could also influence the results obtained.

13.7. Services and microservices

More and more systems are developed including microservices or APIs that allow communication between systems with data transfer. These exchanges use interfaces based on protocols, such as HTTP or HTTPS, to allow access to data servers, web servers or mobile applications. When a system uses data or content from multiple sources, it is sometimes referred to as a Mashup.

The challenges identified include, among others, the following aspects:

– evolutions of services, microservices or APIs, from the identification of these evolutions and the updating of systems, to the management of user regressions and security breaches;

– use of services in security-critical applications when the transmission medium (the web and TCP/IP) does not guarantee that all the data will be transmitted (asynchronous transmissions vs synchronous transmissions);

– increase in dependencies on services, functionalities or protocols developed by third parties for which the level of reliability is not adequately guaranteed, or is not even known (e.g. the Log4j flaw), which leads to fragility of the system-of-systems;

– confidentiality of transferred data, slowdown and security vulnerabilities due to coding format conversions.

Refactoring or modifying the architectures of systems-of-systems can lead to the merging or separation of components. This will have an impact on testing, particularly in the context of DevOps (CI/CD) and the validation of functional and/or technical impacts (see also Ford et al. 2021).

13.8. Containers, Docker, Kubernetes, etc.

These solutions seem to allow for simple fixed configurations, but require significant additional software layers that can slow down execution or increase dependencies, configuration management overhead, and development, maintenance and testing complexity. Although these solutions make it possible to put code and unit tests in the same component, they do not remove the need for complete functional tests of subsystems and systems.

Indeed, even though each element is stable and reliable, changes in its use or its environment within a system-of-systems can lead to risks or behaviors that were not foreseen during the initial design. One example is the “Dirty Pipe” flaw (CVE-2022-0847) in the Linux kernel, which allows an unprivileged process to overwrite data in read-only files and, in a container context, to modify files from an image shared with other containers.

It is therefore, here too, necessary to ensure that the documentation of the components is up to date, whether it is the documentation of the interfaces, the functionalities, the code and the tests, and that everything is correctly traced from and towards requirements.

13.9. Artificial intelligence and machine learning (AI/ML)

Artificial intelligence has nothing to do with human intelligence but more with a machine repeating what it has learned (usually by repetition). Once the system has learned correctly (via the machine learning function), it must be able to use this learning to determine choices, make decisions, etc.

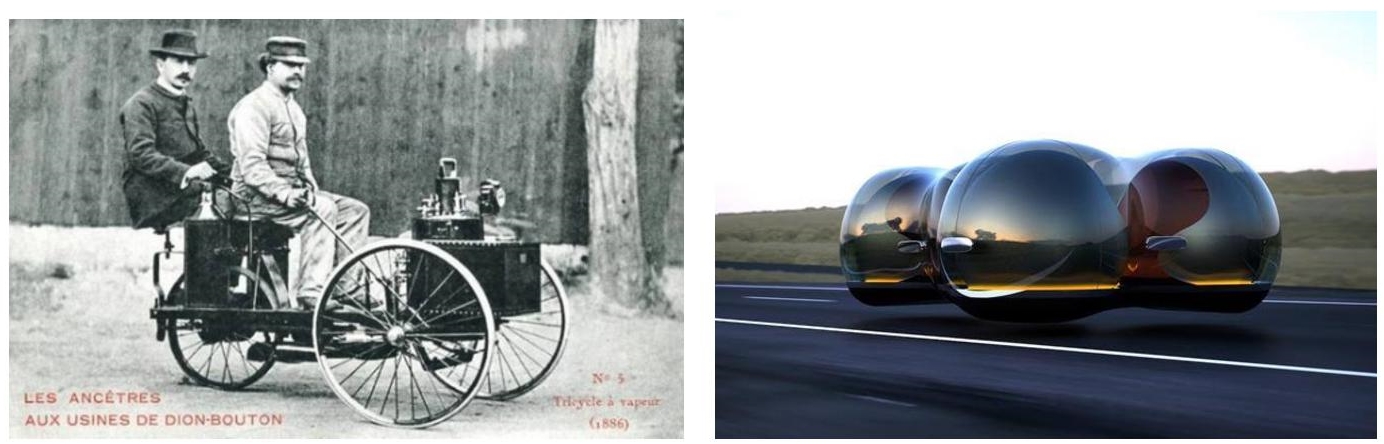

We understand that two aspects are fundamental: on the one hand, the quality of the data that will be used to teach the system (the sample and its size) and, on the other hand, the quality of the algorithm and its capacity to focus on the important elements of the samples by disregarding unnecessary elements. Let us compare with how a child learns a concept. What defines an automobile? Do we need wheels and if so how many? Do we need a roof, doors, steering system, etc.? Must the wheels be on the ground (is an overturned automobile still an automobile?)? We see that many parameters come into play. A machine should compare these two images below and determine that they are both automobiles.

Figure 13.1 Images of automobiles

Let us take an example to illustrate the difficulty linked to the validation of the algorithm: a tank detection system was shown (ML aspect) many images of allied or potentially enemy tanks. Image-based tests validated that the system correctly distinguished “friends” from “enemies”. The system was therefore installed and tested on a shooting range. It classified ALL the tanks it identified as “enemy”. Why? In fact, the images of “friendly” tanks had been taken in different conditions from those of “enemy” tanks (which were closer to field conditions), and therefore the system considered that tanks in the field were always enemy tanks. I do not know if this example is real or not, but it shows the difficulty in determining whether or not what the algorithm has selected is correct. Autonomous driving systems may likewise be confronted with changes in their environment that become too complex for them to manage (see article from CNN1 and YouTube2).
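The mechanism behind the tank anecdote can be reproduced with a toy learner. The sketch below trains a one-feature threshold classifier (a "decision stump") on invented data in which the background brightness happens to separate the two classes perfectly, while the only tank-related feature does not. The feature names, values and the learner itself are illustrative assumptions and do not describe the system in the anecdote.

```python
def train_stump(samples):
    """Pick the (feature, threshold) pair that best separates the training set.

    samples: list of (features_dict, label), label being "friend" or "enemy".
    Rule learned: predict "friend" when features[feature] >= threshold.
    """
    best = None  # (correct_count, feature, threshold)
    for feature in samples[0][0]:
        for threshold in sorted({features[feature] for features, _ in samples}):
            correct = sum(
                ("friend" if features[feature] >= threshold else "enemy") == label
                for features, label in samples
            )
            if best is None or correct > best[0]:
                best = (correct, feature, threshold)
    return best[1], best[2]


def predict(features, feature, threshold):
    return "friend" if features[feature] >= threshold else "enemy"


# Friendly tanks photographed in bright conditions, enemy tanks in dark ones.
TRAINING = [
    ({"turret_ratio": 0.60, "brightness": 0.90}, "friend"),
    ({"turret_ratio": 0.40, "brightness": 0.80}, "friend"),
    ({"turret_ratio": 0.70, "brightness": 0.85}, "friend"),
    ({"turret_ratio": 0.50, "brightness": 0.20}, "enemy"),
    ({"turret_ratio": 0.30, "brightness": 0.30}, "enemy"),
    ({"turret_ratio": 0.65, "brightness": 0.25}, "enemy"),
]

# Friendly tanks seen in the field, where the light is poor.
FIELD_FRIENDLY = [
    {"turret_ratio": 0.60, "brightness": 0.30},
    {"turret_ratio": 0.40, "brightness": 0.20},
]

if __name__ == "__main__":
    feature, threshold = train_stump(TRAINING)
    print("learned rule uses:", feature, ">=", threshold)
    print([predict(f, feature, threshold) for f in FIELD_FRIENDLY])
```

The learner scores perfectly on its training images, yet the rule it has learned concerns the photograph and not the tank, so tests drawn from the same image collection would not reveal the problem.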

Questions for testers to ask themselves will include:

– How can we validate the proposed sample?

– Can we identify examples that invalidate the sample, or can we demonstrate a failure in the algorithm?

– Is the testers’ knowledge of statistics sufficient? How do we make sure that what we read is correct?

It is very likely that the validation of algorithms requires advanced knowledge in algorithms, statistics and/or mathematics, therefore collaboration with other specialists (see also Smith (2022)).

13.10. Multi-platforms, mobility and availability

In addition to the development of connected objects, we also have a multitude of smartphones and computers that can connect to applications – and therefore to systems and systems-of-systems – either directly (via a wired connection) or indirectly (via radio connections, etc.). This forces applications to manage multiple simultaneous remote connections (e.g. the same account connected from a PC and a smartphone), and also connections that may change during a transaction (e.g. connection to different relays for a transaction carried out on a moving smartphone).

Applications and systems will have to take into account evolutions to be designed in parallel on various operating systems (e.g. Windows + Linux + Android + iOS + …) so that the same application, the same system-of-systems, is accessible from these various platforms. If the user interfaces are provided via a browser, it will then be necessary to ensure that the application or the system-of-systems delivers the same user experience regardless of the platform and the browser, and also regardless of the geographical location and the network reception level. If the application is of the thick-client type, optional application modules – depending on the performance level of the network used – could be considered.

We realize that the test activities will be more complex as it will be necessary to combine functional aspects with elements of geolocation, variation in the quality of networks (including 3G, 4G, 5G, etc.), different browsers, responsive displays, etc.

We are again faced with the complexity of systems-of-systems: multiple software publishers and uncoordinated developments that can lead to side effects.

Smartphones can be emulated, and the applications they run can be tested on these emulators. However, emulators do not always behave identically to the system they emulate, so it will also be necessary to perform tests on the physical devices themselves. Similarly, writing directions (left to right, right to left), the characters used for Sanskrit, Chinese, Korean and Japanese, among others, and their encoding (via Unicode) can generate specific behaviors that need to be tested. In some cases the “€” symbol appears to be converted to “$”, which can lead to differences in the amounts shown on invoices.
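A related mechanism can be reproduced in a few lines: when two systems do not agree on the character encoding, a currency symbol silently changes. The sketch below uses only the Python standard library; it does not reproduce the exact “€” to “$” case mentioned above, and the helper names `round_trip` and `has_right_to_left` are illustrative assumptions.

```python
import unicodedata

def round_trip(text, write_encoding, read_encoding):
    """Encode with one codec and decode with another, as two mismatched systems would."""
    return text.encode(write_encoding).decode(read_encoding, errors="replace")

def has_right_to_left(text):
    """Return True if the text contains characters with a right-to-left direction."""
    return any(unicodedata.bidirectional(ch) in ("R", "AL") for ch in text)

amount = "100 €"

# Same codec on both sides: the symbol survives.
print(round_trip(amount, "utf-8", "utf-8"))

# ISO-8859-15 writer, ISO-8859-1 reader: the euro sign becomes the generic currency sign.
print(round_trip(amount, "iso8859_15", "latin_1"))

# UTF-8 writer, Windows-1252 reader: one symbol becomes three characters.
print(round_trip(amount, "utf-8", "cp1252"))

# Right-to-left content is a candidate for dedicated display tests.
print(has_right_to_left("שלום"), has_right_to_left("hello"))
```

Checks of this kind are cheap to automate at each interface where text crosses from one system to another, which is where such conversions usually occur.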

The availability of networks and features may also be limited depending on whether the user is roaming and depending on the country. Some countries limit the use of applications, or monitor the information exchanged. It is therefore recommended to act with caution regarding the use of connected systems and systems-of-systems which can become victims of attacks via uncontrolled or poorly controlled network connections.

13.11. Complexity

The number of conditions to be taken into account in systems-of-systems will keep increasing, and so will their complexity. The difficulty that our testers will have to face will be the creation of increasingly complex test environments.

Separating systems into subsystems helps limit complexity, but it also hides interactions between subsystems that may not have been anticipated. For example, an antenna positioned on an aircraft may obstruct the opening of a door; the operation of one piece of equipment can interfere with another (one of the causes of the loss of HMS Sheffield during the Falklands conflict).

Complex systems are inherently more hazardous. The frequency of the hazards can be modified, but their presence characterizes such complex systems (and by extension systems-of-systems). In complex systems and systems-of-systems, it is usually the combination of minor failures that leads to catastrophic failures; it is extremely rare that a single failure is sufficient to cause the entire system to fail. In the same way, complex systems, given their complexity, will never be tested exhaustively and will operate with latent failures, or in degraded mode. However, their multiple redundancies will allow nominal operation despite the presence of numerous failures.

Increasingly, systems tend to be optimized because of their complexity. This optimization makes them more fragile and less robust. Increasing redundancy increases the robustness of these systems and therefore their resilience to failures.
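The effect of chaining many components, and of adding redundancy, can be illustrated with the classic availability formulas. This is a simplified sketch that assumes independent failures, which is precisely what unanticipated interactions between subsystems can invalidate; the figures are hypothetical.

```python
def series_availability(availabilities):
    """Availability of a chain in which every component must work."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result

def redundant_availability(availability, copies):
    """Availability of `copies` independent redundant units, of which one is enough."""
    return 1.0 - (1.0 - availability) ** copies

# Ten components at 99% each, all required: the chain is noticeably weaker.
print(f"{series_availability([0.99] * 10):.4f}")

# The same component duplicated: the pair is far more available than one unit.
print(f"{redundant_availability(0.99, 2):.4f}")
```

The first figure shows how individually minor weaknesses combine at system level; the second shows why redundancy allows nominal operation despite latent failures.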

13.12. Unknown dependencies

Log4j is a Java logging library that is part of the Apache Logging Services project. On December 9, 2021, a zero-day vulnerability in this component, reported by the Alibaba Cloud Security Team, was made public; it has since been widely publicized and exploited by many hackers. This vulnerability clearly shows that we have less and less control over all the software we use, and that we depend on an increasing number of other software components that can open doors to malicious activities. The Log4j vulnerability potentially affects millions of servers on which tens of millions of applications and users depend.

In the context of systems-of-systems, these vulnerabilities inherited from other software will increase, and may concern many systems. It is difficult to ensure the security testing of the servers and applications on which our systems depend, and it is complicated to keep track of all the new vulnerabilities discovered every day. Ensuring that these new vulnerabilities are not present in our systems-of-systems and – in case they are present – ensuring that all systems are properly updated is a long-term job.

This is made doubly difficult because vulnerability patches must fix the discovered flaw without opening new ones. In the case of Log4j, the corrections published for the initial vulnerability (CVE-2021-44228) led to the discovery of further vulnerabilities (CVE-2021-45046 and CVE-2021-45105) in the patched versions.
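Part of this long-term job can be automated by comparing an inventory of deployed components with published advisories. The sketch below is deliberately simplified: the inventory and its service names are hypothetical, the fix versions are those of the Log4j 2.x main line as we understand them and should be checked against the official advisories, and releases back-ported for older Java versions are ignored.

```python
# First main-line 2.x release fixing each CVE (assumption to verify against official advisories).
FIXED_IN = {
    "CVE-2021-44228": (2, 15, 0),
    "CVE-2021-45046": (2, 16, 0),
    "CVE-2021-45105": (2, 17, 0),
}

def parse_version(text):
    """Turn '2.14.1' into (2, 14, 1) so that versions compare numerically."""
    return tuple(int(part) for part in text.split("."))

def open_cves(version):
    """Return the CVEs not yet fixed in the given main-line 2.x version."""
    current = parse_version(version)
    return sorted(cve for cve, fixed in FIXED_IN.items() if current < fixed)

# Hypothetical inventory: system name -> deployed log4j-core version
inventory = {
    "billing-service": "2.14.1",
    "customer-portal": "2.16.0",
    "gateway": "2.17.1",
}

for system, version in inventory.items():
    print(system, version, open_cves(version))
```

The "customer-portal" entry illustrates the point made above: a system patched against the first vulnerability can still be exposed to one discovered in the patch itself.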

13.13. Automation of tests

Test automation is often perceived as the only solution to quickly perform many tests to validate all the combinations of values and actions to be tested. However, test automation raises many questions that are too frequently underestimated or evaded:

– What does test automation really bring?

– What return on investment can be expected from test automation?

– How can end-to-end tests be correctly implemented, often on heterogeneous environments, with multiple technologies?

– What are the maintenance costs of keeping automated tests up to date, and what does it cost to reduce technical debt?

– Once the tests are automated, how can we ensure that the trust we place in them is justified (how can we avoid false negatives)?

– Are the scripts and data easily portable to another tool when the current tool becomes obsolete?

– Knowing that it is impossible to be exhaustive in the design and execution of tests, how can we ensure the quality of the deliverables provided?

In the following, we propose some lines of thought in response to these questions.

13.13.1. Unrealistic expectations

We have seen in this book that software testing is not a trivial activity, and it is unrealistic to think that the installation of a tool (or several) will replace in the short term the intelligence and the capacities of a smart, properly trained human. Adding an extra layer of complexity – automated testing tools – to a layer of interlocking activities, under budget and time constraints, with cross-dependencies, will always have an impact.

Among the often unrealistic expectations of managers and some pseudo-experts, we find:

– Forgetting the general principles of the test as defined among others in Homès (2012), such as the impossibility of testing exhaustively or the obligation to verify the results obtained in relation to the expected results.

– The implementation of test tools would provide a methodology to improve the quality of the deliverables. This expectation is absurd: if our processes are chaotic, there is no reason to think that a tool – no matter how good – will change the way we work. At most, we will gain the possibility of automating the chaos.

– Testing tools make testing easier and run more tests in less time. This expectation forgets the time needed to learn the tool, the development of frameworks (e.g. creation of keyword-driven test engines) and the maintenance needed when the test targets evolve (e.g. changes to the user interface or in functionality).

– The implementation of a keyword-driven testing framework should not be limited to a single software, but should be linked to similar frameworks focused on other software, components, equipment, subsystems, etc. to enable end-to-end functional testing. As the systems are developed by separate organizations, it is unlikely that these frameworks will be compatible within a test environment of sufficient size to replicate the complete system-of-systems.

– In a system-of-systems, software, software-relevant equipment, subsystems and systems are tested via interfaces that must be usable by humans. The majority of tools are not capable of reproducing human actions (e.g. pushing a button, pulling and/or turning a potentiometer, etc.), so only the computer translations of these actions are reproduced (conversion of the physical action into sequences of digital impulses). The real human feeling is not taken into account.

– Confusion in the use of the term “test” through the use of design techniques like TDD, ATDD, BDD, etc. where the term “testing” is used to refer to automated verification of specifications instead of “independent verification”. This use of the same term for two different meanings leads to confusion for non-specialists.

13.13.2. Difficult to reach ROI

Return on investment is always difficult to measure. The formula itself is simple, (gains – costs)/costs, but its inputs are hard to establish. In addition, the duration should be considered because the return on investment is never instantaneous. Let us take a few examples:

– If the duration taken into account is only that of development, the gains can only be measured in terms of identifying defects, insofar as these defects can be measured financially. This is difficult because the impact of failures on users must be calculated as a risk (impact * frequency) and – as the software is not yet in production – the frequency cannot yet be measured, nor the financial amount of the impact for customers.

– If the duration taken into account is the total duration of the life of the product developed, maintenance costs must be added to the initial cost throughout the life of the product. Admittedly, it will be easier to measure the impact of failures, but improvement actions, rewrites, etc. will take place which (1) will impact the calculations and (2) can only be calculated a posteriori, therefore too late.

– If the cost of a defect is defined from the outset according to the moment when it is injected and the moment when it is discovered (and corrected or not), this introduces an a priori assumption that distorts the calculation: how can we justify the cost assigned to an anomaly discovered during a specification review compared with the same anomaly discovered during system testing?

– If we focus on regression tests, how can we justify their cost if they do not discover new failures? How can we estimate the value of the trust that stakeholders can put in the development of the product?

We also realize that measuring ROI would require a stable reference: the real cost of failures. However, such a benchmark is not easy to determine. What is the cost of a human life (e.g. in the case of critical failures of a system-of-systems resulting in the death of one or more people)? Should the loss of market value of the company publishing the system be considered (even though the failure of the system is not the only factor in the company’s valuation)? Should we consider the reduction in maintenance and evolution costs that can be obtained with a system that is more easily maintainable, scalable and testable? How can this be measured, over what period should it be taken into account, and how can it be justified to managers whose vision is mainly limited to quarterly or annual financial results?
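The influence of the duration on the ROI formula, (gains – costs)/costs, and the risk calculation (impact * frequency) can be made concrete with a short calculation. All the amounts below are hypothetical, and the function names are illustrative; the sketch simply assumes constant yearly gains and maintenance costs, which real projects rarely enjoy.

```python
def roi(gains, costs):
    """Return on investment: (gains - costs) / costs."""
    return (gains - costs) / costs

def risk_exposure(impact, frequency):
    """Risk expressed as impact multiplied by frequency."""
    return impact * frequency

def cumulative_roi(initial_cost, yearly_maintenance, yearly_gain, years):
    """ROI of an automation effort at the end of each year, maintenance included."""
    results = []
    for year in range(1, years + 1):
        costs = initial_cost + yearly_maintenance * year
        gains = yearly_gain * year
        results.append(roi(gains, costs))
    return results

# Hypothetical yearly gain: failures avoided, valued as impact * frequency.
yearly_gain = risk_exposure(impact=35_000, frequency=2)

for year, value in enumerate(
    cumulative_roi(initial_cost=100_000, yearly_maintenance=20_000,
                   yearly_gain=yearly_gain, years=4),
    start=1,
):
    print(f"year {year}: ROI = {value:+.2f}")
```

With these figures the ROI is negative in the first year and only becomes positive in the third, which shows why the period chosen for the calculation changes the conclusion.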

13.13.3. Implementation difficulties

Correctly and efficiently implementing one – or several – test tools can only be envisaged with a few weeks or months of preparation, so it is not a task that can be carried out in a hurry during the project. In addition to determining the ROI we want to achieve, we must identify the characteristics – functional or technical – that we plan to automate. Are we planning to measure performance, maintainability or regressions? Do we want to test on a particular environment or on several environments? Each of these goals will require a different tool. Evaluating and selecting among the various tools available, installing them and learning how to use them, and adapting current test methods and techniques to them will take time that we must anticipate from the start of the project.

If we identify that the project is falling behind schedule, deciding to implement tools will probably not bring short-term improvements to the project. Quite the contrary: think about training actions, learning about the tool, automation of tests already designed, creation of reports, etc. to put in place before being able to benefit from the use of any tool, especially if it is on the critical path of the project.

13.13.3.1. Multiple test environments

In a system-of-systems, it is common for each subsystem, each piece of software-intensive equipment, to use a different operating system and different development languages. It will therefore be necessary to acquire specific tools for each environment.

Specific test environments should be considered to avoid side effects between development, integration and system testing for each application. Therefore, in addition to the multiplicity of environments identified above, we must also consider the various environments for a given software, namely – at a minimum – a development environment, an environment for integration and integration tests, an environment for system testing and an acceptance test environment. In addition, we could have a pre-production or factory acceptance environment before delivery to the system-of-systems. Each of these environments can benefit from the implementation of automated testing tools. Differences in environment and organization must also be considered.

We realize that each of the test environments – automated or not – will have to be placed under proper configuration management to ensure careful tracking of versions and identification of anomalies. Similarly, the production teams will have to be able to manage multiple versions of software products in order to control the correction of anomalies and carry the fixes over to all the other affected versions of the software.

13.13.3.2. Interactions between environments

Because a system-of-systems involves exchanges between its various components and systems, it will be necessary to provide, between the test environments, exchange channels that represent as faithfully as possible the characteristics of the exchanges between the systems. For the “system” and “acceptance” test levels of the system-of-systems, this includes simulating the latency and bandwidth limitations of communications between systems. If the systems are moving rapidly relative to each other, it will be necessary to consider whether Doppler-induced frequency shifts should be applied. If communications are carried by radio, the impact of jamming must be considered, along with the need for frequency hopping to limit the effect of such countermeasures. If we wish to guard against the impact of an EMP (electromagnetic pulse), it will be necessary to consider optical communications, for example by laser.

Each of these interactions between environments will have to be either simulated or considered with real equipment, which does not facilitate test automation activities.
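When real equipment is not available, some of these characteristics can be approximated in a test harness. The sketch below models a link by its latency and bandwidth and computes a first-order Doppler shift for a radio carrier; the class and function names, and all numerical values, are illustrative assumptions, and the model ignores jitter, packet loss and protocol overhead.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT_MPS = 299_792_458.0

@dataclass(frozen=True)
class SimulatedLink:
    """A communication channel reduced to two parameters."""
    latency_s: float        # one-way delay in seconds
    bandwidth_bps: float    # usable throughput in bits per second

    def transfer_time(self, payload_bytes: int) -> float:
        """Time for a payload to reach the other end of the link."""
        return self.latency_s + (payload_bytes * 8) / self.bandwidth_bps

def doppler_shift_hz(carrier_hz: float, closing_speed_mps: float) -> float:
    """First-order Doppler shift; positive when the systems approach each other."""
    return carrier_hz * closing_speed_mps / SPEED_OF_LIGHT_MPS

# Hypothetical satellite-like link: 250 ms latency, 64 kbit/s.
link = SimulatedLink(latency_s=0.25, bandwidth_bps=64_000)
print(link.transfer_time(payload_bytes=8_000))

# Hypothetical 1 GHz carrier, systems closing at 300 m/s.
print(round(doppler_shift_hz(1e9, 300.0), 2))
```

Test scripts can then assert that a timeout or a receiver tolerance in the system under test is compatible with the values produced for the worst expected link.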

13.13.3.3. Test reproducibility

To simplify the execution of tests, we will want to rerun, on one environment, the tests that were executed on another. Differences in settings between environments will likely make this difficult (especially if those settings are not synchronized or driven from a central point). Another obstacle to the reproducibility of test scripts stems from the diversity of tools and of test script designs across components and test levels. The absence of common tools and a common organization makes this complex. The TTCN-3 standard provides a common notation for tests and therefore makes it possible to share tests between various components or levels.
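Before rerunning tests on another environment, the differences in settings can at least be made visible. A minimal sketch, assuming each environment can export its settings as a flat dictionary (the keys and values below are hypothetical):

```python
MISSING = "<missing>"

def settings_drift(reference, other):
    """Return {key: (reference_value, other_value)} for settings that differ."""
    keys = sorted(reference.keys() | other.keys())
    return {
        key: (reference.get(key, MISSING), other.get(key, MISSING))
        for key in keys
        if reference.get(key, MISSING) != other.get(key, MISSING)
    }

# Hypothetical exports from two test environments
integration = {"db_version": "14.2", "timeout_s": 30, "locale": "fr_FR"}
system_test = {"db_version": "14.2", "timeout_s": 60, "feature_x": True}

for key, (ref, other) in settings_drift(integration, system_test).items():
    print(f"{key}: integration={ref} system_test={other}")
```

An empty result does not guarantee reproducibility, but a non-empty one gives a concrete list of differences to explain before a failed rerun is blamed on the software.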

13.13.4. Think about maintenance

Test automation should be treated as a development project like any other, perhaps even as a more complex one, since its reference (the systems to be tested) can vary, whereas in a software development project the requirements generally do not change much. It follows that, just as for any software project, maintenance and evolution activities will be necessary for the automated tests throughout the life of the software to be tested. Without this maintenance, the tests will quickly fall out of step with the developed system.

As the test effort is often distributed between each of the co-contractors and sub-contractors, this implies that each of the stakeholders must maintain the test assets (environments, tools, automated test scripts, test cases, test data, etc.) as well as their documentation, and this for the entire life of the system-of-systems (or at least its warranty period). This will prevent an increase in technical debt.

It will therefore be necessary to also think about the maintenance test actions to be implemented when:

– a system, equipment or product evolves (addition, modification or deletion of components), in order to identify the impacts on the system-of-systems as a whole;

– the system-of-systems environment evolves (e.g. modification of interface supports, operating systems, etc.), in order to ensure that the system is maintained in operational condition after these evolutions;

– the system-of-systems is scrapped, to ensure that the functionality of the old system-of-systems is properly carried over to the new one.

13.13.5. Can you trust your tools and your results?

Once we have ensured that we have tooled test scripts and automated processes supported by tools, we tend to trust the results provided by these tests and rerun them periodically, daily or every iteration. Often the only thing checked will be the result: “pass” or “fail”. In case of failures, the test team will verify the causes of these failures and have the code corrected or the test scripts corrected, which is often a heavy workload. It is necessary to question the level of confidence that can be given to automated tests and the results obtained.

Tooled tests will only find the defects for which they are designed. They can produce “false negatives”, that is, fail to identify faults that are present in the code. These faults can then be found by users, in production. We will therefore have a false sense of security.

The tools can also produce “false positives”, that is, report as defects elements that are not defects. Such reports generate a workload – searching for a nonexistent anomaly – and have a negative impact on the confidence that we have in our tests and in the application.

To verify the quality of the tests designed and their ability to identify faults, it is recommended to perform fault seeding: known faults are introduced into the code and, once all the automated processes have run, we check whether these faults have been identified (and eliminated). If the seeded faults have not been identified and removed, this implies that the automated processes have flaws that will need to be corrected.
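The principle of fault seeding can be shown on a toy example. The discount rule, the seeded variants and the two test suites below are all hypothetical; in a real project the faults would be injected into the actual code, often with a mutation testing tool.

```python
def price_with_discount(price_cents, quantity):
    """Reference implementation: 10% off for 10 items or more."""
    total = price_cents * quantity
    return total * 90 // 100 if quantity >= 10 else total

# Seeded faults: deliberately wrong variants of the reference implementation.
def seeded_boundary(price_cents, quantity):
    total = price_cents * quantity
    return total * 90 // 100 if quantity > 10 else total   # '>' instead of '>='

def seeded_rate(price_cents, quantity):
    total = price_cents * quantity
    return total * 80 // 100 if quantity >= 10 else total  # wrong discount rate

def seeded_no_discount(price_cents, quantity):
    return price_cents * quantity                           # discount forgotten

def weak_suite(fn):
    """Automated checks under evaluation; True means every check passed."""
    return fn(1000, 1) == 1000 and fn(1000, 20) == 18000

def strong_suite(fn):
    """Same checks plus the boundary value."""
    return weak_suite(fn) and fn(1000, 10) == 9000

def undetected_faults(suite, seeded_faults):
    """Names of the seeded faults that the suite let through (false negatives)."""
    return [fault.__name__ for fault in seeded_faults if suite(fault)]

SEEDED = [seeded_boundary, seeded_rate, seeded_no_discount]
print(undetected_faults(weak_suite, SEEDED))
print(undetected_faults(strong_suite, SEEDED))
```

Both suites report “pass” on the reference implementation, yet only the second one detects every seeded fault: the pass/fail result alone says nothing about the ability of the tests to find defects.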

13.14. Security

Testing security in systems-of-systems and in software in general is difficult to implement because we will only seek to cover vulnerabilities that have already been found (e.g. among competitors, based on recognized repositories, etc.). Security vulnerabilities not known to date will not be tested, except by malicious hackers. A technical watch to identify “zero day” flaws and remedy them will be useful for each of the co-contractors of the system-of-systems.

It may also be necessary to think about security in terms of intellectual (or industrial) property security, national security and the defense of national interests. These are elements very often present in systems-of-systems. It is necessary in such cases to turn to our security officer.

13.15. Blindness or cognitive dissonance

Blindness happens when our beliefs collide with the reality on the ground. Technical or procedural beliefs are like religious beliefs and lead to the same reactions of denial or self-confirmation. It is unfortunate that, in a field that claims to be scientific, the use of statistical and tangible evidence is not more frequent and more widespread. We can visualize this aspect of beliefs in the use of methods or tools that claim to be applicable to all projects whatever they are (size, domain, criticality, etc.) when nothing proves it. We therefore come to refuse reality, to cling to certain non-representative cases where the belief applies, or to seek excuses to refute arguments contrary to our convictions, as explained by Syed (2015).

Blindness in software development and testing is generally characterized by persistent optimism and a refusal to see reality, to measure oneself against a known benchmark. In the case of systems-of-systems and software testing, this translates into various ways:

– Planning test campaigns without allowing for the correction of detected anomalies and the re-execution of the test scenarios affected by them. This results in tests being executed until the day before delivery, and therefore in defects potentially being identified until that date. There will be no time left to test defect fixes and the risk of introducing anomalies into production will be increased.

– Not ensuring that the test execution prerequisites (datasets, environments and flows, requirements repositories, data expected for comparison, etc.) are present: this implies that the test activities will be an uninterrupted sequence of jerks and stops. Tests will start and then be forced to stop in the absence of one or more prerequisites, which will impact the schedule.

– Thinking that the development teams can design code without defects, and therefore that it is impossible to predict the number of anomalies that the code could (should) contain. This common blindness can be removed quickly during testing by using metrics to identify and display trends.

– This same blindness also applies to testers: testers can also make mistakes, whether in test design, data selection or test execution and reporting. The implementation of traceability makes it possible to limit some of the oversights.

– Thinking that multitasking is a solution that allows us to focus on one task when another is blocked. This approach forgets that individuals need to concentrate to be effective. Any change of task leads to a more or less long period during which the individual must change context and focus and is therefore no longer as effective.

– Thinking that the corrections will be made without generating new defects and therefore will not need to be retested. Each of us knows that it is when we are rushed and stressed, and when we take shortcuts, that we make mistakes (an application of Murphy’s law). However, developers are often in a hurry to deliver bug fixes. This results in an increased risk of errors, and therefore of the introduction of other defects. This amounts to thinking that the reduced delays, the absence of detailed analyses and the complexity of the defects to be corrected will have no impact on the quality (more precisely the lack of quality) of the software corrections.

A potential solution to this aspect of blindness and cognitive dissonance can be to question yourself at every moment. That is, not to rely on opinions but to rely on statistically representative data, rather than trying to use this data to confirm a belief or an opinion.

13.16. Four truths

Among the elements that should not be lost sight of in the testing of complex systems and systems-of-systems, we also note four recurring truths:

– people are more important than material;

– quality is more important than quantity;

– good tests – and good testers – cannot be mass-produced;

– competent test teams cannot be created after the emergency arises.

How should we understand these truths?

13.16.1. Importance of individuals

Hardware, test environments, test tools and processes are all subject to failures and limitations. Only individuals can improve, adapt or evolve rapidly. Individuals can take initiatives, identify obstacles and avoid them, provided that they have sufficient knowledge and autonomy to do so, but tools cannot. The success of many projects relies on a small number of motivated – and motivating – individuals instilling confidence and the desire to excel in their teams.

In the context of software testing and more specifically system-of-systems testing, the workload is often very heavy, the impacts and constraints are multiple, the stress levels are high and the deadlines are short. If we are able to select motivated and motivating individuals, to leave them the ability to express themselves, the benefits we will derive will be much greater than the difference in salary level compared to less competent individuals.

13.16.2. Quality versus quantity

It is not necessary to have a plethora of testers to properly test components, products, systems or systems-of-systems. We must have the right people, covering the technical and functional needs, with the right level of expertise. Remember that the difference between a beginner and an expert is often a ratio of the order of 1 to 10: an expert will often be 10 times more effective (and efficient) than a beginner. Although experts are rarer and more expensive, they are rarely 10 times more expensive than beginners.

The aspect of quality versus quantity also relates to the tools: some tools are open-source and/or free, but do the functionalities they provide justify the loss of time or the increase in workload compared with a commercial tool? This may need to be checked as part of our system-of-systems project.

Quality is also seen in the processes: often the processes are unnecessarily complex – especially when they rely on automated tools – and waste more time than they save. For example, defect management tools are able to ensure traceability to and from the requirements, test cases and components, products or systems under test, but if the workload needed to fill in the information is too heavy, this information will not be filled in and… the expected benefits will not be present.

13.16.3. Training, experience and expertise

As mentioned earlier, the difference between a beginner and an expert is often a ratio of 1 to 10, where an expert takes 10 times less time than a beginner to complete the same job. Ten thousand hours of practice is often cited as what defines an “expert”.

Reaching a level of expertise implies having practiced in several fields, used several tools, products or systems, and having been trained in several techniques or methods in order to select the most appropriate to the needs. According to Anders Ericsson of Florida State University (author of Peak: Secrets from the New Science of Expertise), several factors come into play: it would take 20,000 to 25,000 hours of practice (i.e. from 12 to 17 years, much more than the 10,000 hours commonly accepted), together with deliberate practice and a constant search for improvement in all aspects of a field, for a person to become truly expert in that field. A person who has simply continued to do the same thing for years cannot claim to be an expert.

Training is a solution to increase the skills of individuals if they are quickly put into practice. Training should be adapted to the needs of individuals on their project, for example, by being accompanied by specific operating methods for ongoing projects.

Training is not a substitute for experience – far from it. The examples used during the training are selected to exercise an element relevant to the training. They rarely make it possible to model the complete level of complexity of a system-of-systems. Aspects related to workload and to the quality or volume of the data to be entered are often only seen after a few weeks or months of use.

Experience matters, whether positive or negative. A negative experience – on a project that ends badly – is beneficial if it allows the individual to identify the causes of project failure and avoid them on future projects.

13.16.4. Usefulness of certifications

Many certifications are starting to appear regarding testing methods and software testing tools. The author founded the French Software Testing Board (CFTL) in 2005 and participated in the foundation of the ISTQB. At that time software testing was not taught and anyone could call themselves a tester. The implementation of certifications (foundation level and advanced level) for testers has made it possible to organize a certain hierarchy and to clarify things.

The main interest of certifications – for testers – is to ensure common terminology and basic knowledge. It is an illusion to think that 21 hours of training will be enough to teach all the intricacies of software testing. We have been able to see the emergence of a real training “business” where organizations benefit from the financial manna of training subsidized by the state, on the one hand, and where others assume the right to measure a level of knowledge for a financial contribution. In the same vein, while a definition of knowledge levels was necessary at the time, the proliferation of niche certifications (performance, Artificial Intelligence, Automotive, Usability, Automation, Gaming industry, Acceptance, Mobiles, etc.) as well as the proliferation of “expert” level certifications demonstrate a mercantile tendency rather than an objective of improving knowledge and test practices.

As testing is not “sexy” and is generally forgotten in computer engineering training courses, this mercantile view will probably continue to prevail, at the expense of the quality of the software and systems produced.

13.17. Need to anticipate

More and more immediate answers are sought instead of anticipating problems upstream. New methodologies, such as Agility, take this to the extreme by offering sprint durations of two to three weeks. This implies that the majority of individuals will work with a two- or three-week horizon and will not anticipate much longer term.

As in the construction of buildings, it is necessary to design infrastructures and have an overall plan if we do not wish to have a system-of-systems unable to adapt or develop in the future. An optimal architecture, a well-organized system does not evolve organically and we do not have time to consider several parallel developments of systems to then select the best (see Darwinism).

Let us take an example: if we want to invest in an automation solution – an important job, sometimes profitable and always long – it is necessary to define the short-, medium- and long-term objectives that we want to achieve with this automation. This may be to save time when executing test campaigns, but will imply an additional workload for each sprint: it will be necessary to automate the tests that are designed currently, and also to automate all the old tests that have been designed in previous sprints. We realize that a horizon of two or three weeks will be too short. Similarly, timeframes for purchasing, sourcing, training and setting up automation tools and processes need to be considered. Here too, a three-week horizon may be unrealistic.

The purpose of anticipation is to make it possible to propose minor but early adjustments to avoid major adjustments later. It will therefore be necessary to have a long-term vision, both of the objectives and of the risks/constraints. This anticipation is often the prerogative of experienced individuals.

13.18. Always reinvent yourself

The evolutions of methodologies, the increase in interactions between the various systems and components as well as between individuals and companies, mean that the “good practices” identified here and in the works cited in reference will have to be adapted to meet specific needs of the project: a solution that worked in one environment may not be suitable for another environment.

This implies two things:

– First, intimately know all the techniques and methods, their advantages and their weaknesses, their prerequisites and their deliverables, etc. This involves continuing to learn, both in one’s main area (testing and quality), and also in all related areas (development, team management, hardware and software environments, software packages, methods, etc.) in order to control their impact on the proposed solution.

– Second, continually question yourself, trying to identify how the solutions implemented can be improved to achieve the intended objective. The need for a quick gain on a particular goal can lead us to implement a solution that is sub-optimal in the long run, even though it was the best available given the constraints of the moment.

We will need to constantly measure failures and analyze their root causes, as independently as possible, to learn from them. We could take inspiration from aeronautics and the words of Chesley Sullenberger (see note 3): “Everything we know in aviation, every rule and every procedure we have, we know because someone died.” This approach allows us to improve ourselves, improve the profession and improve the products we test.

13.19. Last but not least

The contractual aspects and the contractual follow-up by both the customer and the supplier are important and will always be a serious challenge in the case of systems-of-systems.

We only need to look at major information system projects and their myriad problems to realize that the solutions are not simple, despite the involvement of very large IT services companies (ESNs) or leading manufacturers:

– Integration of a CRM with complex legacy systems, in an international context, with multiple co-contractors, where unit tests are not carried out – or not carried out correctly – by the offshore development teams, and where the integration focuses on fast releases and on correcting only critical and blocking anomalies (while trying to push the others to a later iteration), which generates an ever-growing technical debt.

– Integration of a CRM in an insurance group, with priorities and operating modes that differ from one member of the group to another, with pressure from decision-making bodies pushing for rapid delivery, and with development and test teams that are not sized to deal with the complexity of the interfaces, the interactions and the management rules that vary between members of the group.

– Integration of an ERP in a complex system including legacy, production, distribution and 3D modeling systems accessed via the Internet, automatic tracking of order progress, as well as the entire logistics, supply and manufacturing chain. The integrator was well known, but showed no awareness of the impact or the complexity of the tests needed to ensure correct operation.

– Integration of several payroll systems for the military and civil servants, with the use of subcontracted personnel in countries where labor is cheaper, but without considering that these countries are not necessarily allies, etc. This initially resulted in payroll issues for the military (e.g. if the word “OPEX” – for external operation – is present, then pay is reduced to zero), then in the buyout of the lead ESN's work by its competitor (for a symbolic euro?).

– Design of military ships integrating systems and subsystems from national and international sources, covered by non-proliferation and/or export control treaties, where the co-contractors must ensure that they do not lose their advantages (intellectual property, trade secrets, etc.), and where the involvement of various government policies is significant.

The common point of these projects seems to be the disconnect between the evaluations made by the designers and those made by the people in charge of the contractual aspects of the project. The blissful optimism on both sides, the obstinate refusal to consider the problems and the tendency to pass them on to another actor combine to generate impressive additional costs and fiascos.

Notes

- 1 https://edition.cnn.com/2021/05/17/tech/waymo-arizona-confused/index.html.

- 2 https://www.youtu.be/trWrEWfhTVg.

- 3 Pilot of US Airways Flight 1549 on January 15, 2009 that landed in the Hudson River in New York after losing both engines.