2

Testing Process

Test processes are nested within the set of processes of a system-of-systems. More specifically, they prepare and provide evidence to substantiate compliance with requirements and provide feedback to project management on the progress of test process activities. If CMMI is used, process areas other than VER and VAL will be involved: PPQA (Process and Product Quality Assurance), PMC (Project Monitoring and Control), REQM (Requirements Management), CM (Configuration Management), TS (Technical Solution), MA (Measurement and Analysis), etc.

These processes will all be involved to some degree in the testing processes. Indeed, the test processes break down the requirements, whether or not they are defined in documents describing the conformity needs, together with the way in which these requirements will be demonstrated (type of IADT proof). They split the types of demonstration according to the levels of test and integration (system, subsystem, sub-subsystem, component, etc.) and into static types (analysis and inspection, for static checks during design) or dynamic types (demonstration and test, during the integration and testing of subsystems, systems and systems-of-systems). Similarly, the activities of the test process report information and progress metrics to project management (the CMMI PMC process area, Project Monitoring and Control) and are affected by the decisions handed down by this management.

The processes described in this chapter apply to a test level and should be repeated at each test level, for each piece of software or equipment containing software. Any modification to the interfaces and/or the performance of a component interacting with the component(s) under test requires an impact analysis and, if necessary, an adaptation of the test activities (including about the test) and of the evidence to be provided to show the conformity of the component (or system, subsystem, equipment or software) to its requirements. Each test level should coordinate with the other levels to limit the repeated execution of tests on the same requirements.

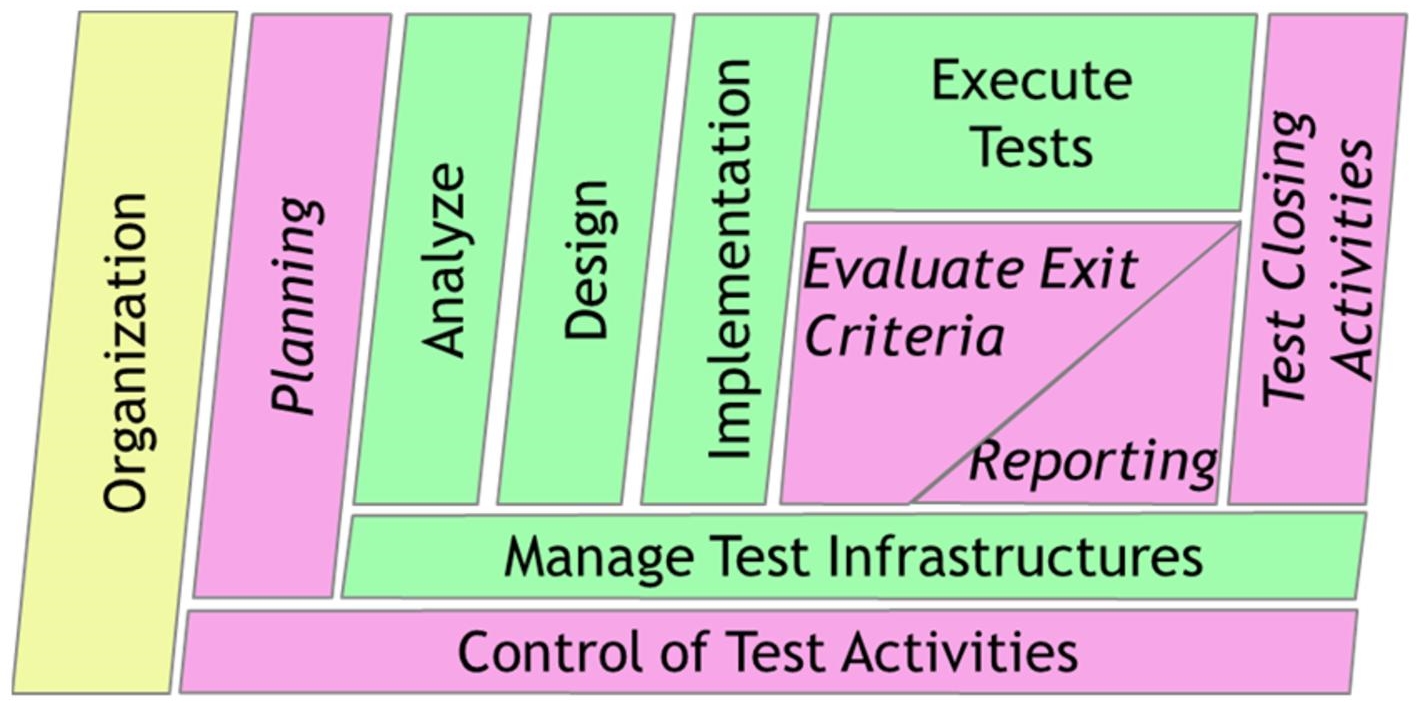

The ISO/IEC/IEEE29119-1 standard describes the following processes, grouping these in organizational processes (in yellow), management processes (pink) and dynamic processes (green).

Figure 2.1 Test processes.

Defined test processes are repeatable at each test level of a system-of-systems:

– the general organization of the test level;

– planning of level testing activities;

– control of test activities;

– analysis of needs, requirements and user stories to be tested;

– design of the test cases applicable to the level;

– implementation of test cases with automation and provision of test data;

– execution of designed and implemented test cases, including the management of anomalies;

– evaluation of test execution results and exit criteria;

– reporting;

– closure of test activities, including feedback and continuous improvement actions;

– infrastructure and environment management.

An additional process can be defined: the review process, which can be carried out several times at a test level, both on the input deliverables and on the deliverables produced by each of the processes of the level. Review activities can occur within each defined test process.

The proposed test processes are applicable regardless of the development mode (Agile or sequential). In the case of an Agile development mode, the testing processes must be repeated for each sprint and for each level of integration in a system-of-systems.

The processes must complement each other and – even if they may partially overlap – it must be ensured that the processes are completed successfully.

2.1. Organization

Objectives:

– develop and manage organizational needs, in accordance with the company’s test policy and the test strategies of higher levels;

– define the players at the level, their responsibilities and organizations;

– define deliverables and milestones;

– define quality targets (SLA, KPI, maximum failure rate, etc.);

– ensure that the objectives of the test strategy are addressed;

– define a standard RACI matrix.

Actor(s):

– CPI (R+A), CPU/CPO (I), developers (C+I);

– experienced “test manager” having a pilot role of the test project (R).

Prerequisites/inputs:

– calendar and budgetary constraints defined for the level;

– actors and subcontractors envisaged or selected;

– repository of lessons learned from previous projects.

Deliverables/outputs:

– organization of level tests;

– high-level WBS with the main tasks to be carried out;

– initial definition of test environments.

Entry criteria:

– beginning of the organization phase.

Exit criteria:

– approved organizational document (ideally a reduced number of pages).

Indicators:

1) efficiency: writing effort;

2) coverage: traceability to the quality characteristics identified in the project test strategy.

Points of attention:

– ensure that the actors and meeting points (milestones and level of reporting) are well defined.

2.2. Planning

Objective:

– plan test activities for the project, level, iteration or sprint considering existing issues, risk levels, constraints and objectives for testing;

– define the tasks (durations, objectives, inputs and outputs, responsibilities, etc.) and their sequencing;

– define the exit criteria (desired quality level) for the level;

– identify the necessary prerequisites and resources (environment, personnel, tools, etc.);

– define measurement indicators and frequencies, as well as reporting.

Actor(s):

– CPI (R+A), CPU/CPO (I), developers (C+I);

– experienced tester acting as “test manager”, in charge of managing the test project (R);

– testers (C+I).

Prerequisites/inputs:

– information on the volume, workload and deadlines of the project;

– information on available environments and interfaces;

– objectives and scope of testing activities.

2.2.1. Project WBS and planning

Objective:

– plan test activities for the project or level, iteration or sprint considering existing status, risk levels, constraints and objectives for testing;

– define the tasks (durations, objectives, inputs and outputs, responsibilities, etc.) and their sequencing;

– define the exit criteria (desired quality level) for the level;

– identify the necessary prerequisites and resources (environment, personnel, tools, etc.);

– define measurement indicators and frequencies, as well as reporting.

Actor(s):

– CPI (R+A), CPU/CPO (I), developers (C+I);

– experienced tester acting as “test manager”, in charge of managing the test project (R);

– testers (C+I).

Prerequisites/inputs:

– REAL and project WBS defined in the investigation phase;

– lessons learned from previous projects (repository of lessons learned).

Deliverables/outputs:

– master test plan, level test plan(s);

– level WBS (or TBS for Test Breakdown Structure), detailing – for the applicable test level(s) – the tasks to be performed;

– initial definition of test environments.

Entry criteria:

– start of the investigation phase.

Exit criteria:

– test plan approved, all sections of the test plan template are completed.

Indicators:

1) efficiency: writing effort vs. completeness and size of the deliverables provided;

2) coverage: coverage of the quality characteristics selected in the project Test Strategy.

Points of attention:

– ensure that test data (for interface tests, environment settings, etc.) will be well defined and provided in a timely manner;

– collect lessons learned from previous projects.

Deliverables/outputs:

– master test plan, level test plan(s);

– level WBS, detailing – for the test level(s) – the tasks to be performed;

– detailed Gantt of test projects – each level – with dependencies;

– initial definition of test environments.

Entry criteria:

– start of the investigation phase.

Exit criteria:

– approved test plan, all sections of the applicable test plan template are completed.

Indicators:

1) efficiency: writing effort;

2) coverage: coverage of the quality characteristics selected in the project’s test strategy.

Points of attention:

– ensure that test data (for interface testing, environment settings, etc.) will be well defined and provided in a timely manner.

2.3. Control of test activities

Objective:

– throughout the project: adapt the test plan, processes and actions, based on the contingencies and indicators reported by the test activities, so as to enable the project to achieve its objectives;

– identify changes in risks, implement mitigation actions;

– provide periodic reporting to the CoPil (steering committee) and the CoSuiv (monitoring committee);

– escalate issues if needed.

Actor(s):

– CPI (A+I), CPU/CPO (I), developers (I);

– test manager with a test project manager role (R);

– testers (C+I) [provide indicators];

– CoPil CoNext (I).

Prerequisites/inputs:

– risk analysis, level WBS, project and level test plan.

Deliverables/outputs:

– periodic indicators and reporting for the CoPil and CoSuiv;

– updated risk analysis;

– modification of the test plan and/or activities to allow the achievement of the “project” objectives.

Entry criteria:

– project WBS, level WBS.

Exit criteria:

– end of the project, including end of the software warranty period.

Indicators:

– dependent on testing activities.

2.4. Analysis

Objective:

– analyze the repository of information (requirements, user stories, etc. usable for testing) to identify the test conditions to be covered and the test techniques to be used. A risk or requirement can be covered by more than one test condition. A test condition is something – a behavior or a combination of conditions – that may be interesting or useful to test.

Actor(s):

– testers, test analysts, technical test analysts.

Prerequisites/inputs:

– initial definition of test environments;

– requirements and user stories (depending on the development method);

– acceptance criteria (if available);

– analysis of prioritized project risks;

– level test plan with the characteristics to be covered, the level test environment.

Deliverables/outputs:

– detailed definition of the level test environment;

– test file;

– prioritized test conditions;

– requirements/risks traceability matrix – test conditions.

Entry criteria:

– validated and prioritized requirements;

– risk analysis.

Exit criteria:

– each requirement is covered by the required number of test conditions (depending on the RPN of the requirement).

Indicators:

1) Efficiency:

- number of prioritized test conditions designed,

- updated traceability matrix for extension to test conditions.

2) Coverage:

- percentage of requirements and/or risks covered by one or more test conditions,

- for each requirement or user story analyzed, implementation of traceability to the planned test conditions,

- percentage of requirements and/or risks (by risk level) covered by one or more test conditions.
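
The coverage indicators above can be computed directly from the traceability matrix. The following is a minimal sketch, assuming the matrix is available as a simple mapping; the requirement identifiers, risk levels and the names `TRACEABILITY`, `coverage_by_risk` and `overall_coverage` are hypothetical and only serve the illustration.

```python
from collections import defaultdict

# Hypothetical traceability data: requirement id -> (risk level, linked test conditions).
TRACEABILITY = {
    "REQ-1": ("high", ["TC-1", "TC-2"]),
    "REQ-2": ("high", []),
    "REQ-3": ("medium", ["TC-3"]),
    "REQ-4": ("low", []),
}


def coverage_by_risk(traceability):
    """Return {risk level: percentage of requirements with at least one test condition}."""
    total = defaultdict(int)
    covered = defaultdict(int)
    for risk, conditions in traceability.values():
        total[risk] += 1
        if conditions:
            covered[risk] += 1
    return {risk: 100.0 * covered[risk] / total[risk] for risk in total}


def overall_coverage(traceability):
    """Percentage of all requirements covered by one or more test conditions."""
    covered = sum(1 for _, conditions in traceability.values() if conditions)
    return 100.0 * covered / len(traceability)


if __name__ == "__main__":
    print(coverage_by_risk(TRACEABILITY))
    print(overall_coverage(TRACEABILITY))
```

Reporting the percentage per risk level, rather than only the overall figure, makes it visible when a high-risk requirement is still uncovered even though the global coverage looks acceptable.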

2.5. Design

Objective:

– convert test conditions into test cases and identify test data to be used to cover the various combinations. A test condition can be converted into one or more test cases.

Actor(s):

– testers, test technicians.

Prerequisites/inputs:

– prioritized test conditions;

– requirements/risks traceability matrix – test conditions.

Deliverables/outputs:

– prioritized test cases, definition of test data for each test case (input and expected);

– prioritized test procedures, taking into account the execution prerequisites;

– requirements/risks traceability matrix – test conditions – test cases.

Entry criteria:

– test conditions defined and prioritized;

– risk analysis.

Exit criteria:

– each test condition is covered by one or more test cases (according to the RPN);

– partitions and typologies of test data defined for each test;

– defined test environments.

Indicators:

1) Efficiency:

- number of prioritized test cases designed,

- updated traceability matrix for extension to test cases.

2) Coverage:

- percentage of requirements and/or risks covered by one or more test cases designed.
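
To illustrate how one test condition can be converted into several test cases with their data partitions, here is a minimal sketch. It assumes a hypothetical condition of the form "the value must lie within a closed range" and picks one representative value per partition; the names `DesignedCase` and `cases_from_partitions` and the identifier `COND-7` are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignedCase:
    """A designed test case: traced condition, input data and expected result."""
    condition_id: str
    input_value: int
    expected_valid: bool


def cases_from_partitions(condition_id, lower, upper):
    """Return one representative case per partition: below, inside and above [lower, upper]."""
    return [
        DesignedCase(condition_id, lower - 1, False),
        DesignedCase(condition_id, (lower + upper) // 2, True),
        DesignedCase(condition_id, upper + 1, False),
    ]


if __name__ == "__main__":
    for case in cases_from_partitions("COND-7", 18, 65):
        print(case)
```

Each generated case keeps the identifier of the condition it derives from, which is what allows the traceability matrix to be extended from test conditions to test cases.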

2.6. Implementation

Objective:

– describe the test cases in detail, if necessary;

– define the test data for each of the test cases generated by the test design activity;

– automate the test cases that need to be automated;

– set up the test environments.

Actor(s):

– testers, test automators, data and systems administrators.

Prerequisites/inputs:

– prioritized test cases;

– risk analysis.

Deliverables/outputs:

– automated or non-automated test scripts, test scenarios, test procedures;

– test data (input data and expected data for comparison);

– traceability matrix of requirements to risks – test conditions – test cases – test data.

Entry criteria:

– prioritized test cases, defined with their data partitions.

Exit criteria:

– test data defined for each test;

– test environments defined, implemented and verified.

Indicators:

1) Efficiency:

- number of prioritized test cases designed with test data,

- updated traceability matrix for extension to test data,

- number of test environments defined, implemented and verified vs. number of environments planned in the test strategy.

2) Coverage:

- percentage of test environments ready and delivered,

- coverage of requirements and/or risks by one or more test cases with data,

- coverage of requirements and/or risks by one or more automated test cases.

2.7. Test execution

Objective:

– execute the test cases on the elements of the application to be tested, as delivered by the development team;

– identify defects and write anomaly sheets;

– report monitoring and coverage information.

Actor(s):

– testers, test technicians.

Prerequisites/inputs:

– system to be tested is available and managed in delivery (configuration management), accompanied by a delivery sheet.

Deliverables/outputs:

– anomaly sheets filled in for any identified defect;

– test logs.

Entry criteria:

– testing environment and resources (including testing tools) available for the level, and tested;

– anomaly management tool available and installed;

– test cases and test data available for the level;

– component or application to be tested available and managed in delivery (configuration management);

– delivery sheet provided.

Exit criteria:

– coverage of all test cases for the level.

Indicators:

1) Efficiency:

- percentage of tests passed, skipped (not run) and failed, by level of risk,

- percentage of test environment availability for test execution,

- test execution workload achieved vs. planned.

2) Coverage:

- percentage of requirements/risks tested with at least one remaining defect,

- percentage of requirements/risks tested without any defect remaining.
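
As an illustration of the first efficiency indicator, the sketch below computes the percentage of tests per status for each risk level. The execution log format, the status names and the function `status_percentages` are assumptions made for the example; a real test management tool would expose this data in its own format.

```python
from collections import Counter

# Hypothetical execution log: (test case id, risk level, status).
RESULTS = [
    ("T1", "high", "passed"),
    ("T2", "high", "failed"),
    ("T3", "high", "passed"),
    ("T4", "low", "skipped"),
    ("T5", "low", "passed"),
]


def status_percentages(results):
    """Return {risk level: {status: percentage of that level's tests}}."""
    per_level = {}
    for _, risk, status in results:
        per_level.setdefault(risk, Counter())[status] += 1
    report = {}
    for risk, counts in per_level.items():
        total = sum(counts.values())
        report[risk] = {s: round(100.0 * n / total, 1) for s, n in counts.items()}
    return report


if __name__ == "__main__":
    print(status_percentages(RESULTS))
```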

2.8. Evaluation

Objective:

– identify whether the test execution results show that the execution campaign will be able to achieve the objectives;

– ensure that the acceptance criteria defined for the requirements or user stories are met;

– if scope or quality changes impact testing, identify and select the mitigation actions.

Actor(s):

– person responsible for project testing activities.

Prerequisites/inputs:

– definition of acceptance criteria;

– project load estimation data;

– actual usage data of project loads;

– progress data (coverage, deadlines, anomalies, etc.) of the project.

Deliverables/outputs:

– progress graphs, identification of trends;

– progress comments (identification of causes and proposals for mitigation).

Entry criteria:

– start of the project.

Exit criteria:

– end of the duration of each test task and of the test campaign;

– complete coverage achieved for the features or components to be tested.

Indicators:

1) Efficiency:

- workload used, anomalies identified (including priority and criticality) and level of coverage achieved, compared against the objectives defined during planning.

2) Coverage:

- all the activities planned for the test task or for the test campaign have been carried out,

- to be defined based on the planned objectives and their achievement for each requirement or user story.

2.9. Reporting

Objective:

– provide project stakeholders with relevant and accurate information, as soon as possible, on the status of the test campaign and the components tested;

– if the changes in scope or quality impact the tests, identify and suggest mitigation actions with an estimate of costs and deadlines;

– identify whether the test execution campaign achieved the objectives.

Actor(s):

– person responsible for project testing activities.

Prerequisites/inputs:

– definition of reporting objectives;

– project load estimation data;

– actual usage data of project loads;

– progress data (coverage, deadlines, anomalies, etc.) of the project.

Deliverables/outputs:

– progress graphs, identification of trends;

– progress comments (identification of causes and proposals for mitigation).

Entry criteria:

– start of the project.

Exit criteria:

– end of the project.

Indicators:

1) Efficiency:

- can be determined by stakeholder satisfaction questionnaires.

2) Coverage:

- to be determined based on the achievement of reporting objectives.

2.10. Closure

Objective:

– ensure that all planned deliverables have been correctly delivered;

– identify lessons learned and feedback from the project;

– archive the deliverables used as input to, and produced by, the test activities.

Actor(s):

– test manager, test analysts, technical test analysts, testers, test technicians.

Prerequisites/inputs:

– end of the test project.

Deliverables/outputs:

– lessons learned and feedback.

Entry criteria:

– the application has been delivered to the next stage.

Exit criteria:

– all test articles are properly archived;

– feedback and lessons learned have been archived and distributed.

Indicators:

1) Efficiency:

- duration of the feedback meeting; measurement of gains per lesson learned.

2) Coverage:

- number of items archived,

- number of feedback/lessons learned, archived and distributed.

2.11. Infrastructure management

Objective:

– ensure that the infrastructures are available to allow the proper execution of all processes;

– manage infrastructure (backups, restores, etc.) throughout testing activities.

Actor(s):

– infrastructure manager.

Prerequisites/inputs:

– description of the test infrastructures for each level and each component.

Deliverables/outputs:

– description of infrastructure;

– equipment purchase orders or rental of equipment;

– description of the management processes for each infrastructure.

Entry criteria:

– identification of a test activity requiring an infrastructure.

Exit criteria:

– infrastructure components are under configuration management, archived or stored, and retrievable;

– testing activities using the infrastructure are completed.

Indicators:

1) efficiency: actual availability time of the infrastructure.

2.12. Reviews

Objective:

– identify defects, as early as possible, in deliverables, to avoid the introduction of defects in other deliverables or in the code. In general, a review or inspection is composed of an individual work phase and a sharing meeting. NOTE.– test deliverables may also be subject to review or inspection.

Actor(s):

– CPI (A+I), CPU/CPO (I), developers (I);

– experienced tester acting as “test manager”, in charge of managing the test project (R);

– testers (A) participate in reviews and inspections.

Prerequisites/inputs:

– risk analysis;

– identification of deliverables to be reviewed or inspected;

– (optionally) review or inspection checklists, depending on submitted deliverable;

– project and level test plan, project review plan.

Deliverables/outputs:

– defects found in the deliverable;

– review efficiency and effort indicators.

Entry criteria:

– deliverable available, with a “sufficient” level of completeness. NOTE.– this must be a sufficiently advanced draft, but not the finished document.

Exit criteria:

– review completed, end of sharing meeting.

Indicators:

– dependent on test activities:

1) Efficiency:

- number of defects identified/review or inspection effort.

2) Coverage:

- document(s) submitted for review/set of deliverables.
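
Both review indicators are simple ratios. A minimal sketch follows, assuming effort is measured in hours and deliverables are identified by name; the function names and units are choices made for the illustration only.

```python
def review_efficiency(defects_found, effort_hours):
    """Number of defects identified per hour of review or inspection effort."""
    if effort_hours <= 0:
        raise ValueError("effort_hours must be positive")
    return defects_found / effort_hours


def review_coverage(reviewed, deliverables):
    """Percentage of the set of deliverables that was submitted for review."""
    deliverables = set(deliverables)
    if not deliverables:
        raise ValueError("no deliverables to review")
    return 100.0 * len(set(reviewed) & deliverables) / len(deliverables)


if __name__ == "__main__":
    print(review_efficiency(12, 8))
    print(review_coverage(["plan", "spec"], ["plan", "spec", "design", "manual"]))
```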

Two books provide extensive guidance on reviews, their advantages and how to set them up: Gilb and Graham (1993) and Wiegers (2010).

2.13. Adapting processes

Nothing prevents us from modifying the processes mentioned, from splitting a process into several parts or from adding additional processes. Splitting or adding additional processes should not cause major problems, other than increasing the complexity of the project. On the other hand, think twice before deleting a process: they complement each other, some provide data used by others, so the impacts of a deletion can be significant and underestimated.

Any adaptation must consider:

– the main objective of testing, which is to find defects as early as possible and at the lowest cost;

– the test policy and test strategy objectives that must be met;

– the objectives of the process, which must be maintained (e.g. deliverables for other processes, reporting);

– the test level at which the process is applied, which affects the actors of the process and the delays before the next phase.

2.14. RACI matrix

A RACI matrix aims to identify the actions, actors and responsibilities of each. In general, the responsibilities are:

– R: responsible (in charge of carrying out the action);

– A: approver (usually there is only one A per action);

– C: consulted;

– I: informed.

| CPI | CPU | Dev | TM | TA | Tst | Infra | PO | Copil | |

| Planning | R+A | I | C+I | R | C+I | C+I | I | ||

| Control | A+I | I | I | R | C+I | C+I | I | I | |

| Analysis | R | A | (A) | ||||||

| Design | R | A | (A) | ||||||

| Implementation | R+I | A | A | ||||||

| Execution | R | R+A | A | ||||||

| Reporting | R+A | A | I | I | |||||

| Closure | R | A | A | ||||||

| Infrastructure | R+A | ||||||||

| Reviews | A+I | I | A+I | R+I | A | A | R | I |

Above we see an example of a RACI matrix for a test level. Other test activities or processes could be added, such as (non-exhaustive list):

– the design and provision of test data;

– the preparation and testing of deliveries in the following stages;

– acceptance of the quality level.
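
A RACI matrix can also be held as data and checked automatically, for instance to confirm that every action has someone responsible and a single approver. The sketch below is an illustration under assumptions: it encodes only the Planning and Control rows, using the actor lists given in sections 2.2 and 2.3, and the dictionary layout and the function names `roles` and `check_raci` are hypothetical.

```python
# Partial matrix built from the actor lists of sections 2.2 and 2.3.
# The dictionary layout is an assumption made for this sketch.
RACI = {
    "Planning": {
        "CPI": "R+A", "CPU/CPO": "I", "Developers": "C+I",
        "Test manager": "R", "Testers": "C+I",
    },
    "Control": {
        "CPI": "A+I", "CPU/CPO": "I", "Developers": "I",
        "Test manager": "R", "Testers": "C+I",
    },
}


def roles(cell):
    """Split a cell such as 'R+A' into the set of its role letters."""
    return set(cell.split("+")) if cell else set()


def check_raci(matrix):
    """Return a list of problems: actions with no R, or without exactly one A."""
    problems = []
    for action, row in matrix.items():
        responsible = [actor for actor, cell in row.items() if "R" in roles(cell)]
        approvers = [actor for actor, cell in row.items() if "A" in roles(cell)]
        if not responsible:
            problems.append(f"{action}: no responsible (R)")
        if len(approvers) != 1:
            problems.append(f"{action}: expected one approver (A), found {len(approvers)}")
    return problems


if __name__ == "__main__":
    print(check_raci(RACI))
```

The "exactly one approver" rule reflects the usual convention stated above; a project that deliberately allows several approvers for an action would relax that check.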

2.15. Automation of processes or tests

2.15.1. Automate or industrialize?

Automating is often seen as a synonym for industrializing, and therefore as a magic solution to achieve economies of scale or to limit quality variations between deliveries, as in the industrial world. However, if substantial savings are desired, it is necessary to identify the processes generating unnecessary losses or expenses. These “losses” can be seen according to Lean practices as loss of time (e.g. delays) or loss of material (e.g. corrections, rewriting, etc.). An analysis of the effectiveness of the processes implemented will therefore be necessary.

Similarly, test automation can improve quality and timeliness in the short term but increase workload and degrade schedules in the longer term: the synchronization of automated test cases between the various components of the system-of-systems, the maintenance of the automated test cases and the need for traceability to the requirements will generate an ever-growing workload. This effect is one of the risks identified in the Lean approach by Poppendieck and Poppendieck (2015).

2.15.2. What to automate?

To have an effective return on investment from the end-to-end test automation of a system-of-systems, it is necessary to define the desired objectives of this automation activity. From there, objectives can be defined concerning the tool(s) to be used. Once chosen, the use and effectiveness of these tools will have to be validated – by a pilot project – with a comparison of the benefits obtained with the expected benefits. Only then can the automation begin.

The choice of test cases and procedures to automate will vary according to the objectives defined for the system-of-systems, the hardware and software components to be integrated and made to interact, and the ability of the test tools to interact with each other. The choice of tests should be guided by a checklist such as the following:

- Is the test case likely to be repeated many times?

- Does the execution of the task require the support of more than one individual?

- How much time can be saved with this automation?

- Is the task on the critical path?

- Are the requirements to be verified by the task stable, with little risk or with little chance of being impacted by significant changes?

- Is the task common to other projects or can it bring added value as part of a collaborative effort?

- Is the task particularly vulnerable to human error?

- Is the task long or does it contain significant periods of inactivity between test steps?

- Is the task very repetitive?

- Does the task require specialist knowledge, and are the skills needed to perform it in high demand by other activities?
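
One way to apply this checklist consistently is to turn the yes/no questions into a weighted score and rank the candidate test cases. The sketch below is only an illustration: the item names paraphrase the questions above, the weights are arbitrary assumptions to be calibrated per project, and the time-saving question is left out because it calls for an estimate rather than a yes/no answer.

```python
# Checklist items paraphrased from the questions above.
# The weights are illustrative assumptions, not recommended values.
CHECKLIST = {
    "repeated_often": 3,
    "needs_several_people": 1,
    "on_critical_path": 2,
    "stable_requirements": 3,
    "shared_across_projects": 1,
    "prone_to_human_error": 2,
    "long_or_idle_periods": 1,
    "scarce_specialist_skills": 1,
}


def automation_score(answers):
    """Sum the weights of every checklist item answered 'yes' (True)."""
    unknown = set(answers) - set(CHECKLIST)
    if unknown:
        raise KeyError(f"unknown checklist items: {sorted(unknown)}")
    return sum(weight for item, weight in CHECKLIST.items() if answers.get(item))


def rank_candidates(candidates):
    """Sort candidate names by descending score; ties are ordered by name."""
    scored = [(automation_score(answers), name) for name, answers in candidates.items()]
    return [name for _, name in sorted(scored, key=lambda pair: (-pair[0], pair[1]))]


if __name__ == "__main__":
    candidates = {
        "login regression": {"repeated_often": True, "stable_requirements": True},
        "one-off migration": {"prone_to_human_error": True},
    }
    print(rank_candidates(candidates))
```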

One activity that can be automated easily is performing static code analysis. These scans can happen automatically when a developer introduces code into the configuration repository. The important thing here is to define and adapt the code verification rules to the applicable standards. The result of the code analysis can be sent directly to the developer allowing them to immediately correct the identified defects.
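
As a minimal sketch of such an automated check, the following uses Python's standard `ast` module to flag one rule: `except:` clauses that do not name an exception type. The rule itself, the function name `find_bare_excepts` and the sample code are assumptions chosen for the illustration; connecting the check to the configuration repository (for example through a commit hook or a CI job) is outside the scope of the sketch.

```python
import ast


def find_bare_excepts(source, filename="<string>"):
    """Return (filename, line) for every `except:` clause that names no exception type."""
    tree = ast.parse(source, filename=filename)
    return [
        (filename, node.lineno)
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


# Hypothetical code submitted by a developer; it is parsed, never executed.
SAMPLE = """\
try:
    risky()
except:
    pass
"""

if __name__ == "__main__":
    for name, line in find_bare_excepts(SAMPLE):
        print(f"{name}:{line}: bare 'except:' clause")
```

Because the source is only parsed and never run, a check of this kind is safe to execute on every submission and can return its findings to the developer within seconds.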

The decision to automate unit tests and to implement TDD- or ATDD-type tests mainly follows from the development model chosen for the project. If TDD is implemented as a development aid technique, it will identify regressions and facilitate development. However, neither TDD nor ATDD is to be confused with unit testing activities, which aim to cover instructions, branches or loops in order to find faults in them independently.

Performance testing requires automation, and load profiles, as well as the combination of test cases and user typologies, have a very significant impact. Considering performance testing only after functional testing fails to anticipate poor performance, whereas budgeting performance for transactions (and their components) and using low-level performance testing enable faults to be identified as early as possible in the production cycle.
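
Budgeting a transaction and its components can be sketched as follows. All figures, component names and function names are hypothetical: the idea is simply that the end-to-end response-time budget is split between components, so that each component can be checked against its own share in low-level tests, long before the whole chain is integrated.

```python
# Hypothetical budget, in milliseconds, for one end-to-end transaction and its components.
TRANSACTION_BUDGET_MS = 500
COMPONENT_BUDGETS_MS = {
    "gateway": 50,
    "order_service": 200,
    "database": 150,
    "network": 100,
}


def check_budget_allocation(total_ms, components):
    """The component budgets must not add up to more than the transaction budget."""
    return sum(components.values()) <= total_ms


def over_budget(measured_ms, components):
    """Return {component: overrun in ms} for each measurement exceeding its budget."""
    return {
        name: measured - components[name]
        for name, measured in measured_ms.items()
        if measured > components[name]
    }


if __name__ == "__main__":
    print(check_budget_allocation(TRANSACTION_BUDGET_MS, COMPONENT_BUDGETS_MS))
    print(over_budget({"gateway": 40, "database": 180}, COMPONENT_BUDGETS_MS))
```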

Other activities can also benefit from the use of tools, such as requirements management, traceability to development and testing, component configuration management (including testing) and technical testing (security, usability, etc.).

2.15.3. Selecting what to automate

To effectively identify which tests to automate, we should first define the desired goals of test automation. Then, we will have to make these objectives measurable (with target KPIs and means of measurement) and validate the achievement of these objectives through a pilot project.
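
Making the objectives measurable can be as simple as recording a target and a direction for each KPI, then comparing the pilot's measurements against them. The sketch below assumes three hypothetical KPIs and invented target values; `unmet_objectives` is an illustrative name, not an established API.

```python
# Hypothetical KPI targets for an automation pilot: name -> (target, "min" or "max").
TARGETS = {
    "regression_run_hours": (4.0, "max"),
    "automated_case_share_pct": (60.0, "min"),
    "script_maintenance_hours_per_sprint": (8.0, "max"),
}


def unmet_objectives(measured, targets):
    """Return the sorted names of KPIs whose pilot measurement misses its target."""
    unmet = []
    for name, (target, kind) in targets.items():
        value = measured[name]
        achieved = value <= target if kind == "max" else value >= target
        if not achieved:
            unmet.append(name)
    return sorted(unmet)


if __name__ == "__main__":
    pilot = {
        "regression_run_hours": 3.5,
        "automated_case_share_pct": 45.0,
        "script_maintenance_hours_per_sprint": 8.0,
    }
    print(unmet_objectives(pilot, TARGETS))
```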

The choices will have to consider the maturity of the components, the test tools, the platforms to be tested, as well as the ability to update all these elements. We have too often witnessed automation failures because the tests were not updated, were undocumented or poorly documented, were managed by a single person (with loss of knowledge if that person leaves) or were too dependent on user interfaces.