Chapter 1. The Birth and Evolution of Automated Testing

An effective test program, incorporating the automation of software testing, involves a mini-development life cycle of its own. Automated testing amounts to a development effort involving strategy and goal planning, test requirement definition, analysis, design, development, execution, and evaluation activities.

1.1 Automated Testing

“We need the new software application sooner than that.” “I need those new product features now.” Sound familiar?

Today’s software managers and developers are being asked to turn around their products within ever-shrinking schedules and with minimal resources. More than 90% of developers have missed ship dates. Missing deadlines is a routine occurrence for 67% of developers. In addition, 91% have been forced to remove key functionality late in the development cycle to meet deadlines [1]. A Standish Group report supports similar findings [2]. Getting a product to market as early as possible may mean the difference between product survival and product death—and therefore company survival and death.

Businesses and government agencies also face pressure to reduce their costs. A prime path for doing so consists of further automating and streamlining business processes with the support of software applications. Business management and government leaders who are responsible for application development efforts do not want to wait a year or more to see an operational product; instead, they are specifying software development efforts focused on minimizing the development schedule, which often requires incremental software builds. Although these incremental software releases provide something tangible for the customer to see and use, the need to combine the release of one software build with the subsequent release of the next build increases the magnitude and complexity of the test effort.

In an attempt to do more with less, organizations want to test their software adequately, but within a minimum schedule. To accomplish this goal, organizations are turning to automated testing. A convenient definition of automated testing might read as follows: “The management and performance of test activities, to include the development and execution of test scripts so as to verify test requirements, using an automated test tool.” The automation of test activities provides its greatest value in instances where test scripts are repeated or where test script subroutines are created and then invoked repeatedly by a number of test scripts. Such testing during development and integration stages, where reusable scripts may be run a great number of times, offers a significant payback.
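The payoff from reusable subroutines can be shown with a minimal Python sketch. The `FakeApp` class, the `login_as` helper, and both test scripts are hypothetical stand-ins for an application under test and a tool's script library. The point is only that two scripts share one subroutine, so a change to the login steps is made in a single place.

```python
class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.user = None
        self.orders = []

    def login(self, name, password):
        # Accept any non-empty password in this illustration.
        if password:
            self.user = name
        return self.user is not None

    def place_order(self, item):
        if self.user is None:
            raise RuntimeError("not logged in")
        self.orders.append(item)
        return len(self.orders)


def login_as(app, name="tester", password="secret"):
    """Shared subroutine invoked by every script that needs a session."""
    if not app.login(name, password):
        raise AssertionError(f"login failed for {name}")
    return app


def test_single_order():
    app = login_as(FakeApp())
    assert app.place_order("widget") == 1


def test_two_orders():
    app = login_as(FakeApp())
    app.place_order("widget")
    assert app.place_order("gadget") == 2


if __name__ == "__main__":
    for script in (test_single_order, test_two_orders):
        script()
        print(f"{script.__name__}: passed")
```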

Performing integration testing with an automated test tool across successive incremental software builds provides great value. Each new build brings a considerable number of new tests, but also reuses previously developed test scripts. Given the continual changes and additions to requirements and software, automated software testing serves as an important control mechanism to ensure the accuracy and stability of the software through each build.

Regression testing at the system test level represents another example of the efficient use of automated testing. Regression tests seek to verify that the functions provided by a modified system or software product perform as specified and that no unintended change has occurred in the operation of the system or product. Automated testing allows for regression testing to be executed in a more efficient manner. (Details and examples of this automated testing efficiency appear throughout this book.)
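A regression check can be sketched as a comparison of the modified build's outputs against baseline results recorded from a previously accepted build. In the hypothetical Python example below, `BASELINE` and `compute_total_build2` are invented for illustration. In practice the baseline would be captured from the application under test rather than hard-coded.

```python
import math

# Results recorded from the previously accepted build (hypothetical values).
BASELINE = {100: 108.0, 250: 270.0, 0: 0.0}


def compute_total_build2(amount):
    """Hypothetical function from the modified build: adds 8% tax."""
    return round(amount * 1.08, 2)


def run_regression(func, baseline):
    """Re-run every baseline input and report (input, expected, actual) mismatches."""
    failures = []
    for given, expected in baseline.items():
        actual = func(given)
        if not math.isclose(actual, expected, abs_tol=1e-9):
            failures.append((given, expected, actual))
    return failures


if __name__ == "__main__":
    print(run_regression(compute_total_build2, BASELINE))
```

Here the modified build reproduces every baseline value, so the list of failures is empty. Substituting a faulty build, such as `lambda a: round(a * 1.07, 2)`, makes the function report the mismatched inputs 100 and 250.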

To understand the context of automated testing, it is necessary to describe the kinds of tests that are typically performed during the various stages of the application development life cycle. Within a client-server or Web environment, the target system spans more than just a software application. Indeed, it may perform across multiple platforms, involve multiple layers of supporting applications, involve interfaces with a host of commercial off-the-shelf (COTS) products, utilize one or more different types of databases, and involve both front-end and back-end processing. Tests within this environment can include functional requirement testing, server performance testing, user interface testing, unit testing, integration testing, program module complexity analysis, program code coverage testing, system load performance testing, boundary testing, security testing, memory leak testing, and many more types of assessments.

Automated testing can now support these kinds of tests because the functionality and capabilities of automated test tools have expanded in recent years. Such testing can be performed more efficiently and repeatably than manual testing. Automated test capabilities continue to increase to keep pace with the growing demand for faster, less expensive production of better applications.

1.2 Background on Software Testing

The history of software testing mirrors the evolution of software development itself. For the longest time, software development focused on large-scale scientific and Defense Department programs coupled with corporate database systems developed on mainframe or minicomputer platforms. Test scenarios during this era were written down on paper, and tests targeted control flow paths, computations of complex algorithms, and data manipulation. A finite set of test procedures could effectively test a complete system. Testing was generally not initiated until the very end of the project schedule, when it was performed by personnel who were available at the time.

The advent of the personal computer injected a great deal of standardization throughout the industry, as software applications could now primarily be developed for operation on a common operating system. The introduction of personal computers gave birth to a new era and led to the explosive growth of commercial software development, where commercial software applications competed rigorously for supremacy and survival. Product leaders in niche markets survived and computer users adopted the surviving software as de facto standards. Increasingly, on-line systems replaced batch-mode operation. The test effort for on-line systems required a different approach to test design, because job streams could be called in nearly any order. This capability suggested the possibility of a huge number of test procedures to support an endless number of permutations and combinations.

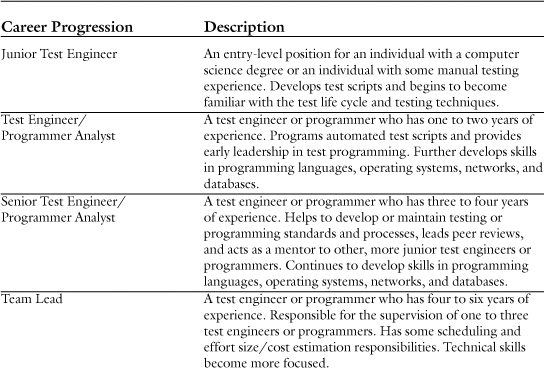

The client-server architecture takes advantage of specialty niche software by employing front-end graphical user interface (GUI) application development tools and back-end database management system applications, as well as capitalizing on the widespread availability of networked personal computers. The term “client-server” describes the relationship between two software processes. In this pairing, the client computer requests a service that a server computer performs. Once the server completes the required function, it sends the results back to the client. Although client and server operations could run on a single machine, they usually run on separate computers connected by a network. Figure 1.1 provides a high-level diagram of a client-server architecture.

Figure 1.1. Client-Server Architecture

The popularity of client-server applications introduces new complexity into the test effort. The test engineer is no longer exercising a single, closed application operating on a single system, as in the past. Instead, the client-server architecture involves three separate components: the server, the client, and the network. Interplatform connectivity increases the potential for errors, as few client-server standards have been developed to date. As a result, testing is concerned with the performance of the server and the network, as well as the overall system performance and functionality across the three components.

Coupled with the new complexity introduced by the client-server architecture, the nature of GUI screens presents some additional challenges. The GUI replaces character-based applications and makes software applications usable by just about anyone by alleviating the need for a user to know detailed commands or understand the behind-the-scenes functioning of the software. GUIs involve the presentation of information in user screen windows.

The user screen windows contain objects that can be selected, thereby allowing the user to control the logic. Such screens, which present objects as icons, can be altered in appearance in an endless number of ways. The size of the screen image can be changed, for example, and the screen image’s position on the monitor screen can be changed. Any object can be selected at any time and in any order. Objects can change position. This approach, referred to as an event-driven environment, is much different from the mainframe-based procedural environment.

Given the nature of client-server applications, a significant degree of randomness exists in the way an object may be selected. Likewise, objects may be selected in several different orders. There are generally no clear paths within the application; rather, modules can be called and exercised through a seemingly endless number of paths. As a result, test procedures cannot readily exercise all possible functional scenarios. Test engineers must therefore focus their test activity on the portion of the application that exercises the majority of system requirements and on ways that the user might potentially use the system.

With the widespread use of GUI applications, screen capture and playback of screen navigation scenarios became an attractive way to test applications. Automated test tools that provide this capability were introduced into the market to meet this need and slowly built up momentum. Although test scenarios and scripts were still generally written down using a word-processing application, the use of automated test tools nevertheless increased. The more complex test effort required greater and more thorough planning. Personnel performing the test were required to be more familiar with the application under test and to have specific skills relevant to the platforms and network, as well as to the automated test tools being used.

Automated test tools supporting screen capture and playback have since matured and expanded in capability. Different kinds of automated test tools with specific niche strengths continue to emerge. In addition, automated software testing has increasingly become a programming exercise, although it continues to involve traditional test management functions such as requirements traceability, test planning, test design, and test scenario and script development.

1.3 The Automated Test Life-Cycle Methodology (ATLM)

The use of automated test tools to support the test process is proving to be beneficial in terms of product quality and minimizing project schedule and effort (see “Case Study: Value of Test Automation Measurement,” in Chapter 2). To achieve these benefits, test activity and test planning must be initiated early in the project. Thus test engineers need to be included during business analysis and requirements activities and be involved in analysis and design review activities. These reviews can serve as effective testing techniques, preventing subsequent analysis/design errors. Such early involvement allows the test team to gain an understanding of the customer needs to be supported, which will aid in developing an architecture for the appropriate test environment and generating a more thorough test design.

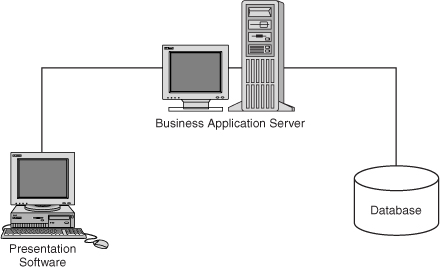

Early test involvement not only supports effective test design, which is a critically important activity when utilizing an automated test tool, but also provides early detection of errors and prevents migration of errors from requirement specification to design, and thence from design into code. This kind of error prevention reduces cost, minimizes rework, and saves time. The earlier in the development cycle that errors are uncovered, the easier and less costly they are to fix. Cost is measured in terms of the amount of time and resources required to correct the defect. A defect found at an early stage is relatively easy to fix, has no operational impact, and requires few resources. In contrast, a defect discovered during the operational phase can involve several organizations, can require a wider range of retesting, and can cause operational downtime. Table 1.1 outlines the cost savings of error detection through the various stages of the development life cycle [3].

Table 1.1. Prevention Is Cheaper Than Cure

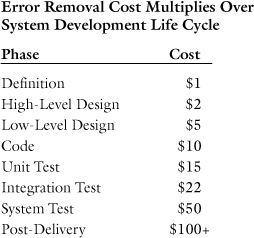

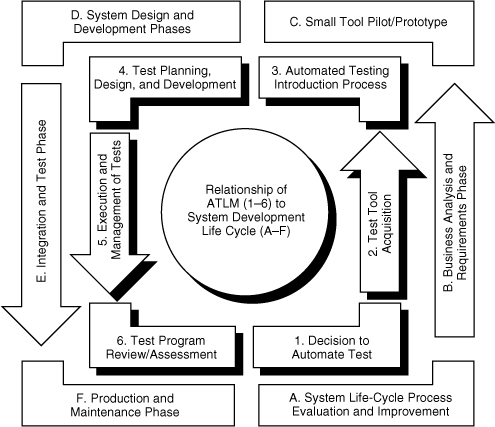

The Automated Test Life-Cycle Methodology (ATLM) discussed throughout this book and outlined in Figure 1.2 represents a structured approach for the implementation and performance of automated testing. The ATLM approach mirrors the benefits of modern rapid application development efforts, which engage the user early and throughout the analysis, design, and development of each software version, built in an incremental fashion.

Figure 1.2. Automated Test Life-Cycle Methodology (ATLM)

In adhering to the ATLM, the test engineer becomes involved early in the system life cycle, from business analysis through the requirements, design, and development of each software build. This early involvement enables the test team to conduct a thorough review of requirements specification and software design, more completely understand business needs and requirements, design the most appropriate test environment, and generate a more rigorous test design. An auxiliary benefit of using a test methodology, such as the ATLM, that parallels the development life cycle is a close working relationship between software developers and test engineers, which fosters greater cooperation and makes possible better results during unit, integration, and system testing.

Early test involvement is significant because requirements or use cases constitute the foundation or reference point from which test requirements are defined and against which test success is measured. A system or application’s functional specification should be reviewed by the test team. Specifically, the functional specifications must be evaluated, at a minimum, using the criteria given here and further detailed in Appendix A.

• Completeness. Evaluate the extent to which the requirement is thoroughly defined.

• Consistency. Ensure that each requirement does not contradict other requirements.

• Feasibility. Evaluate the extent to which a requirement can actually be implemented with the available technology, hardware specifications, project budget and schedule, and project personnel skill levels.

• Testability. Evaluate the extent to which a test method can prove that a requirement has been successfully implemented.

Test strategies should be determined during the functional specification/requirements phase. Automated tools that support the requirements phase can help produce functional requirements that are testable, thus minimizing the effort and cost of testing. With test automation in mind, the product design and coding standards can provide the proper environment to get the most out of the test tool. For example, the development engineer could design and build testability into the application code. Chapter 4 further discusses building testable code.

The ATLM, which is invoked to support test efforts involving automated test tools, incorporates a multistage process. This methodology supports the detailed and interrelated activities that are required to decide whether to employ an automated testing tool. It considers the process needed to introduce and utilize an automated test tool, covers test development and test design, and addresses test execution and management. The methodology also supports the development and management of test data and the test environment, and describes a way to develop test documentation that accounts for problem reports. The ATLM provides a structured process for approaching and executing testing. This structured approach is necessary to help steer the test team away from several common test program mistakes:

• Implementing the use of an automated test tool without a testing process in place, which results in an ad hoc, nonrepeatable, nonmeasurable test program.

• Implementing a test design without following any design standards, which results in the creation of test scripts that are not repeatable and therefore not reusable for incremental software builds.

• Attempting to automate 100% of test requirements, when the tools being applied do not support automation of all tests required.

• Using the wrong tool.

• Initiating test tool implementation too late in the application development life cycle, without allowing sufficient time for tool setup and test tool introduction (that is, without providing for a learning curve).

• Involving test engineers too late in the application development life cycle, which results in poor understanding of the application and system design and thereby incomplete testing.

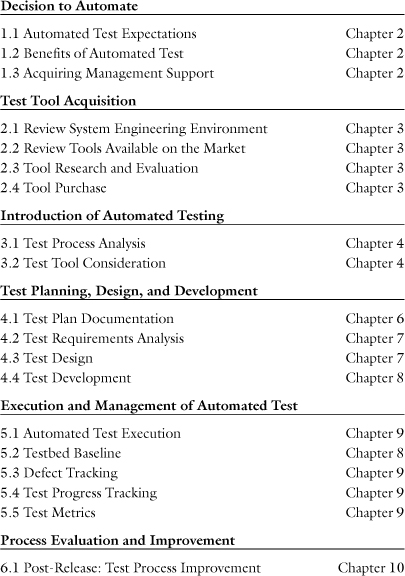

The ATLM is geared toward ensuring successful implementation of automated testing. As shown in Table 1.2, it includes six primary processes or components. Each primary process is further composed of subordinate processes as described here.

Table 1.2. ATLM Process Hierarchy

1.3.1 Decision to Automate Test

The decision to automate test represents the first phase of the ATLM. This phase is addressed in detail in Chapter 2, which covers the entire process that goes into the automated testing decision. The material in Chapter 2 is intended to help the test team manage automated testing expectations and outlines the potential benefits of automated testing, if implemented correctly. An approach for developing a test tool proposal is outlined, which will be helpful in acquiring management support.

1.3.2 Test Tool Acquisition

Test tool acquisition represents the second phase of the ATLM. Chapter 3 guides the test engineer through the entire test tool evaluation and selection process, starting with confirmation of management support. As a tool should support most of the organization’s testing requirements whenever feasible, the test engineer will need to review the systems engineering environment and other organizational needs. Chapter 3 reviews the different types of tools available to support aspects of the entire testing life cycle, enabling the reader to make an informed decision with regard to the types of tests to be performed on a particular project. It next guides the test engineer through the process of defining an evaluation domain to pilot the test tool. After completing all of those steps, the test engineer can make vendor contact to bring in the selected tool(s). Test personnel then evaluate the tool, based on sample criteria provided in Chapter 3.

1.3.3 Automated Testing Introduction Phase

The process of introducing automated testing to a new project team represents the third phase of the ATLM. Chapter 4 outlines the steps necessary to successfully introduce automated testing to a new project, which are summarized here.

Test Process Analysis

Test process analysis ensures that an overall test process and strategy are in place and are modified, if necessary, to allow successful introduction of automated testing. The test engineer defines and collects test process metrics so as to allow for process improvement. Test goals, objectives, and strategies must be defined, and the test process must be documented and communicated to the test team. In this phase, the kinds of testing applicable for the technical environment are defined, as well as tests that can be supported by automated tools. Plans for user involvement are assessed, and test team personnel skills are analyzed against test requirements and planned test activities. Early test team participation is emphasized, supporting refinement of requirements specifications into terms that can be adequately tested and enhancing the test team’s understanding of application requirements and design.

Test Tool Consideration

The test tool consideration phase includes steps in which the test engineer investigates whether incorporating automated test tools or utilities into the test effort would benefit a project, given the project testing requirements, available test environment and personnel resources, the user environment, the platform, and product features of the application under test. The project schedule is reviewed to ensure that sufficient time exists for test tool setup and development of the requirements hierarchy; potential test tools and utilities are mapped to test requirements; test tool compatibility with the application and environment is verified; and workaround solutions are investigated for incompatibility problems surfaced during compatibility tests.

1.3.4 Test Planning, Design, and Development

Test planning, design, and development is the fourth phase of the ATLM. These subjects are further addressed in Chapters 6, 7, and 8, and are summarized here.

Test Planning

The test planning phase includes a review of long-lead-time test planning activities. During this phase, the test team identifies test procedure creation standards and guidelines; the hardware, software, and network resources required to support the test environment; test data requirements; a preliminary test schedule; performance measurement requirements; a procedure to control test configuration and environment; and a defect tracking procedure and associated tracking tool.

The test plan incorporates the results of each preliminary phase of the structured test methodology (ATLM). It defines roles and responsibilities, the project test schedule, test planning and design activities, test environment preparation, test risks and contingencies, and the acceptable level of thoroughness (that is, test acceptance criteria). Test plan appendixes may include test procedures, a description of the naming convention, test procedure format standards, and a test procedure traceability matrix.
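A test procedure traceability matrix can be kept as a simple mapping from requirements to the procedures that verify them. The Python sketch below assumes hypothetical identifiers (`REQ-001`, `TP-10`, and so on) and shows how the matrix exposes requirements that no procedure yet covers.

```python
REQUIREMENTS = ["REQ-001", "REQ-002", "REQ-003", "REQ-004"]

# Each test procedure lists the requirements it verifies (hypothetical IDs).
PROCEDURES = {
    "TP-10": ["REQ-001"],
    "TP-11": ["REQ-001", "REQ-002"],
    "TP-12": ["REQ-004"],
}


def build_matrix(requirements, procedures):
    """Invert procedure->requirements into requirement->procedures."""
    matrix = {req: [] for req in requirements}
    for proc, reqs in procedures.items():
        for req in reqs:
            if req not in matrix:
                raise KeyError(f"{proc} traces to unknown requirement {req}")
            matrix[req].append(proc)
    return matrix


def untested(matrix):
    """Requirements that no test procedure verifies yet."""
    return [req for req, procs in matrix.items() if not procs]


if __name__ == "__main__":
    matrix = build_matrix(REQUIREMENTS, PROCEDURES)
    for req, procs in matrix.items():
        print(f"{req}: {', '.join(procs) or '(none)'}")
    print("Not yet covered:", untested(matrix))
```

Rejecting references to unknown requirements keeps the matrix honest when requirement IDs are renamed or withdrawn.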

Setting up a test environment is part of test planning. The test team must plan, track, and manage test environment setup activities for which material procurements may have long lead times. It must schedule and monitor environment setup activities; install test environment hardware, software, and network resources; integrate and install test environment resources; obtain and refine test databases; and develop environment setup scripts and testbed scripts.

Test Design

The test design component addresses the need to define the number of tests to be performed, the ways the tests will be approached (for example, the paths or functions), and the test conditions that need to be exercised. Test design standards need to be defined and followed.
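As one illustration of deriving test conditions and counting tests, the sketch below applies boundary-value analysis (boundary testing is among the test types mentioned earlier) to a hypothetical integer field that is valid from 1 to 99. Both the technique and the field are chosen only for illustration; a real test design would draw its conditions from the requirements.

```python
def boundary_values(low, high):
    """Boundary-value test conditions for an integer field valid in [low, high]."""
    # Values at and just inside each boundary; duplicates collapse when low == high.
    valid = sorted(v for v in {low, low + 1, high - 1, high} if low <= v <= high)
    # Values just outside each boundary, which the application should reject.
    invalid = [low - 1, high + 1]
    return valid, invalid


def accepts_quantity(q):
    """Hypothetical field validation in the application under test."""
    return 1 <= q <= 99


if __name__ == "__main__":
    valid, invalid = boundary_values(1, 99)
    print(f"{len(valid) + len(invalid)} test conditions")
    for q in valid:
        assert accepts_quantity(q), q
    for q in invalid:
        assert not accepts_quantity(q), q
```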

Test Development

For automated tests to be reusable, repeatable, and maintainable, test development standards must be defined and followed.

1.3.5 Execution and Management of Tests

The test team must execute test scripts and refine the integration test scripts, based on a test procedure execution schedule. It should also evaluate test execution outcomes so as to avoid false positives and false negatives. System problems should be documented in system problem reports, and efforts should be made to help developers understand and replicate system and software problems. Finally, the team should perform regression tests and all other tests and track problems to closure.

1.3.6 Test Program Review and Assessment

Test program review and assessment activities need to be conducted throughout the testing life cycle, thereby allowing for continuous improvement activities. Throughout the testing life cycle and following test execution activities, metrics need to be evaluated and final review and assessment activities need to be conducted to allow for process improvement.

1.4 ATLM’s Role in the Software Testing Universe

1.4.1 ATLM Relationship to System Development Life Cycle

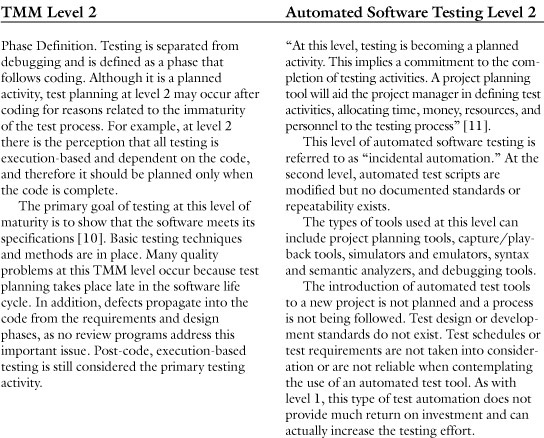

For maximum test program benefit, the ATLM approach needs to be pursued in parallel with the system life cycle. Figure 1.3 depicts the relationship between the ATLM and the system development life cycle. Note that the system development life cycle is represented in the outer layer of Figure 1.3. The process evaluation phase is displayed in the bottom right-hand corner of the figure. During this phase of the system life cycle, the evaluation of improvement possibilities often determines that test automation is a valid approach toward improving the testing life cycle. The associated ATLM phase is called the decision to automate test.

Figure 1.3. System Development Life Cycle—ATLM Relationship

During the business analysis and requirements phase, the test team conducts test tool acquisition activities (ATLM step 2). Note that test tool acquisition can take place at any time, but preferably when system requirements are available. Ideally, during the automated testing introduction process (ATLM step 3), the development group supports this effort by developing a pilot project or small prototype so as to iron out any discrepancies and conduct lessons learned activities.

Test planning, design, and development activities (ATLM step 4) should take place in parallel with the system design and development phase. Although some test planning will already have taken place at the beginning of and throughout the system development life cycle, it is finalized during this phase. Execution and management of tests (ATLM step 5) takes place in conjunction with the integration and test phase of the system development life cycle. System testing and other testing activities, such as acceptance testing, take place once the first build has been baselined. Test program review and assessment activities (ATLM step 6) are conducted throughout the entire life cycle, though they are finalized during the production and maintenance phase of the system development life cycle.

1.4.2 Test Maturity Model (TMM)—Augmented by Automated Software Testing Maturity

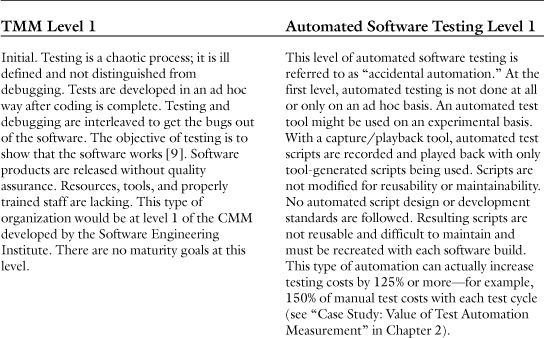

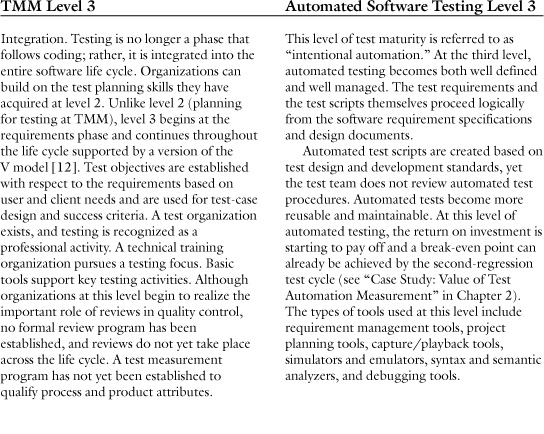

Test teams that implement the ATLM will make progress toward levels 4 and 5 of the Test Maturity Model (TMM). The TMM is a testing maturity model that was developed by the Illinois Institute of Technology [4]; it contains a set of maturity levels through which an organization can progress toward greater test process maturity. This model lists a set of recommended practices at each level of maturity above level 1. It promotes greater professionalism in software testing, similar to the intention of the Capability Maturity Model for software, which was developed by the Software Engineering Institute (SEI) at Carnegie Mellon University (see the SEI Web site at http://www.sei.cmu.edu/).

1.4.2.1 Correlation Between the CMM and TMM

The TMM was developed as a complement to the CMM [5]. It was envisioned that organizations interested in assessing and improving their testing capabilities would likely be involved in general software process improvement. To have directly corresponding levels in both maturity models would logically simplify these two parallel process improvement drives. This parallelism is not entirely present, however, because both the CMM and the TMM level structures are based on the individual historical maturity growth patterns of the processes they represent. The testing process is a subset of the overall software development process; therefore, its maturity growth needs support from the key process areas (KPAs) associated with general process growth [6–8]. For this reason, any organization that wishes to improve its testing process through implementation of the TMM (and ATLM) should first commit to improving its overall software development process by applying the CMM guidelines.

Research shows that an organization striving to reach a particular level of the TMM must be at least at the same level of the CMM. In many cases, a given TMM level needs specific support from KPAs in the corresponding CMM level and the CMM level beneath it. These KPAs should be addressed either prior to or in parallel with the TMM maturity goals.

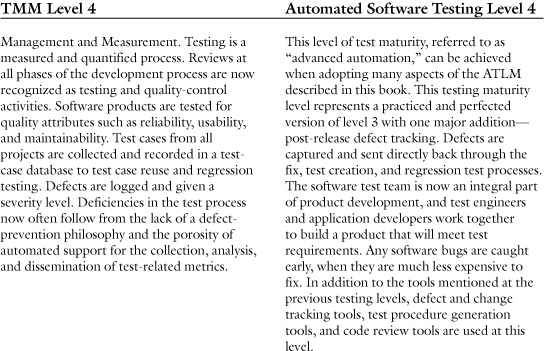

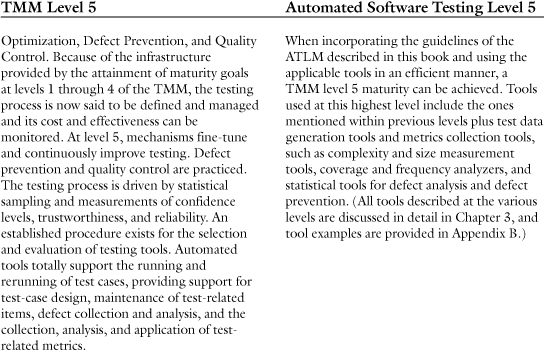

The TMM adapts well to automated software testing, because effective software verification and validation programs grow out of development programs that are well planned, executed, managed, and monitored. A good software test program cannot stand alone; it must be an integral part of the software development process. Table 1.3 lists TMM levels 1 through 5 in the first column, together with the corresponding automated software testing maturity levels 1 through 5 in the second column. Column 2 addresses test maturity as it specifically pertains to automated software testing.

Table 1.3. Testing Maturity and Automated Software Testing Maturity Levels 1–5

The test team must determine, based on the company’s environment, the TMM maturity level that best fits the organization and the applicable software applications or products. The level of testing should be proportional to the complexity of the design, and the testing effort should not be more complex than the development effort.

1.4.3 Test Automation Development

Modularity, shared function libraries, use of variables, parameter passing, conditional branching, loops, arrays, subroutines—this is now the universal language of not only the software developer, but also the software test engineer.

Automated software testing, as conducted with today’s automated test tools, is a development activity that includes programming responsibilities similar to those of the developer of the application under test. Whereas manual testing is an activity that is often tacked on to the end of the system development life cycle, efficient automated testing emphasizes that testing should be incorporated from the beginning of the system development life cycle. Indeed, the development of an automated test can be viewed as a mini-development life cycle. Like software application development, automated test development requires careful design and planning.

Automated test engineers use automated test tools that generate code while developing test scripts that exercise a user interface. The generated code is written in a third-generation language, such as BASIC, C, or C++. This code (which makes up the automated test scripts) can be modified and reused as automated test scripts for other applications in less time than it would take the test engineer to generate new scripts through the automated test tool interface. Also, through the use of programming techniques, scripts can be set up to perform such tasks as testing different data values, testing a large number of different user interface characteristics, or performing volume testing.

As in software development, the software test engineer has a set of (test) requirements, needs to develop a detailed blueprint (design) for efficiently exercising all required tests, and must develop a product (test procedures/scripts) that is robust, modular, and reusable. The resulting test design may call for the use of variables within the test script programs to read in the values of a multitude of parameters that should be exercised during test. It also might employ looping constructs to exercise a script repeatedly or use conditional statements to execute a statement only under a specific condition. The script might take advantage of application programming interface (API) calls or use .dll files and reuse libraries. In addition, the software test engineer wants the test design to quickly and easily accommodate changes to the software developer’s product. The software developer and the software test engineer therefore have similar missions. If their development efforts are successful, the fruit of their labor is a reliable, maintainable, and usable system.
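The variables, loops, and conditional statements described above can be sketched as a small data-driven script. In this hypothetical Python example, the rows that would normally live in a separate data file are held in a string, and `app_login` stands in for the application function under test. The script loops over each parameter row and uses a conditional to decide pass or fail.

```python
import csv
import io

# Rows that would normally live in a separate data file (hypothetical values).
TEST_DATA = """username,password,expect_success
alice,correct-horse,yes
bob,,no
carol,wrong,no
"""

# Hypothetical account table inside the application under test.
VALID_USERS = {"alice": "correct-horse"}


def app_login(username, password):
    """Hypothetical stand-in for the application's login function."""
    return password != "" and VALID_USERS.get(username) == password


def run_data_driven(data_text):
    results = []
    # Loop: one pass through the same script per parameter row.
    for row in csv.DictReader(io.StringIO(data_text)):
        expected = row["expect_success"] == "yes"
        actual = app_login(row["username"], row["password"])
        # Conditional: record pass or fail for this row.
        status = "pass" if actual == expected else "FAIL"
        results.append((row["username"], status))
    return results


if __name__ == "__main__":
    for user, status in run_data_driven(TEST_DATA):
        print(f"{user}: {status}")
```

Adding a new test case then means adding a data row, not writing a new script.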

Again much like the software developer, the test engineer builds test script modules that are designed to be robust, repeatable, and maintainable. The test engineer takes advantage of the native scripting language of the automated test tool to reuse and modify test scripts so that they perform a virtually unlimited number of tests. This versatility is needed because modern on-line and GUI-based applications involve job streams that can be processed in nearly any order. Such flexibility translates into a requirement for test cases that can support a very large number of screen and data permutations and combinations.
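A quick way to see how screen orderings and data values multiply is to generate the combinations programmatically. The screen names and data values below are hypothetical; the point is that even three screens and three input values already yield 3! × 3 = 18 distinct test cases.

```python
from itertools import permutations, product

# HYPOTHETICAL screens a user may visit in any order, and HYPOTHETICAL
# data values for a single input field.
SCREENS = ["Customer", "Search", "Order"]
DATA_VALUES = ["valid", "blank", "too_long"]

def generate_cases(screens, values):
    """Yield every (screen order, data value) combination as one test case."""
    for order, value in product(permutations(screens), values):
        yield {"order": order, "data": value}

if __name__ == "__main__":
    cases = list(generate_cases(SCREENS, DATA_VALUES))
    print(len(cases))  # 3! orders x 3 values = 18
```

Generating cases this way lets a single scripted routine drive all of them, rather than recording each permutation by hand.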

Test scripts may need to be coded to perform checks on the application’s environment. Is the LAN drive mapped correctly? Is an integrated third-party software application up and running? The test engineer may need to create separate, reusable files that contain constant values or maintain variables. Test script code that is reused by a number of different test scripts may need to be saved in a common directory or utility file as a global subroutine, that is, shared code available to the entire test team.
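A shared environment-check subroutine of the kind described above might look like the following sketch. The drive path and the host/port of the third-party service are hypothetical placeholders, and "service is up" is approximated here as "a TCP connection can be opened," which is an assumption; a real check might query the application itself.

```python
import os
import socket

# HYPOTHETICAL environment settings; in practice these would live in a
# shared constants file used by the whole test team.
SHARED_DRIVE = r"S:\testdata"            # hypothetical mapped LAN drive
THIRD_PARTY_HOST = ("localhost", 5432)   # hypothetical integrated service

def drive_is_mapped(path: str) -> bool:
    """Return True if the expected shared directory is reachable."""
    return os.path.isdir(path)

def service_is_up(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the service can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def check_environment() -> list[str]:
    """Shared pre-test check: return a list of problems (empty if OK)."""
    problems = []
    if not drive_is_mapped(SHARED_DRIVE):
        problems.append(f"shared drive not mapped: {SHARED_DRIVE}")
    if not service_is_up(*THIRD_PARTY_HOST):
        problems.append(f"service not reachable: {THIRD_PARTY_HOST}")
    return problems
```

Each test script would call `check_environment()` first and stop with a clear message if the list is non-empty, so environment problems are not mistaken for application defects.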

Maintainability is as important to the test engineering product (test scripts) as it is to the software developer’s work product (that is, the application under test). It is a given that the software product under development will change. Requirements are modified, user feedback stipulates change, and developers alter code by performing bug fixes. The test team’s test scripts need to be structured in ways that can support global changes. A GUI screen change, for example, may affect hundreds of test scripts. If the GUI objects are maintained in a file, the changes can be made in that file, and the test team needs to make the change in only one place. As a result, the corresponding test scripts are updated collectively.
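The "one place" idea can be illustrated with a simple object map. This sketch assumes a hypothetical mapping from logical object names to physical identifiers (the `id=...` strings are invented); commercial tools keep such maps in their own file formats, but the principle is the same.

```python
# HYPOTHETICAL GUI object map: logical names used by test scripts, mapped to
# the physical identifiers the tool uses to find each object. In practice
# this would be kept in one shared file.
GUI_MAP = {
    "login.user_field": "id=txtUserName",
    "login.ok_button": "id=btnOK",
}

def locate(logical_name: str) -> str:
    """Look up the physical identifier for a logical object name."""
    return GUI_MAP[logical_name]

def login_script() -> list[str]:
    """Hypothetical test script: returns the actions it would perform."""
    return [f"type 'tester' into {locate('login.user_field')}",
            f"click {locate('login.ok_button')}"]

# A developer renames the OK button; only the map entry changes, and every
# script that refers to 'login.ok_button' picks up the new identifier.
GUI_MAP["login.ok_button"] = "id=btnSignIn"
```

Because scripts never hard-code `id=btnOK`, the rename costs one edit regardless of how many scripts click that button.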

As noted earlier, client-server applications give users considerable freedom in which objects they select, and just as much freedom in the order in which they select them. No fixed paths exist within the application; rather, modules can be called and exercised through an almost unlimited number of paths. As a result, test procedures cannot practically exercise all possible functional scenarios. Test engineers must therefore focus their test activity on the portion of the application that exercises the majority of system requirements.
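One way to decide which procedures to run first is to rank them by how many not-yet-covered requirements each adds. The sketch below uses a greedy set-cover heuristic; that heuristic, the procedure IDs, the requirement IDs, and the 80% target are all assumptions for illustration, not a method prescribed by this chapter.

```python
# HYPOTHETICAL mapping from test procedures to the requirement IDs each covers.
COVERAGE = {
    "TP-01": {"R1", "R2", "R3"},
    "TP-02": {"R3", "R4"},
    "TP-03": {"R5"},
    "TP-04": {"R1", "R4", "R5", "R6"},
}

def select_procedures(coverage, target_fraction=0.8):
    """Greedily pick procedures until target_fraction of requirements is covered.

    Returns (chosen procedure IDs in selection order, set of covered requirements).
    """
    all_reqs = set().union(*coverage.values())
    covered, chosen = set(), []
    remaining = dict(coverage)
    while remaining and len(covered) < target_fraction * len(all_reqs):
        # Pick the procedure that adds the most not-yet-covered requirements.
        best = max(remaining, key=lambda p: len(remaining[p] - covered))
        if not remaining[best] - covered:
            break  # no remaining procedure adds coverage
        chosen.append(best)
        covered |= remaining.pop(best)
    return chosen, covered

if __name__ == "__main__":
    chosen, covered = select_procedures(COVERAGE)
    print(chosen, sorted(covered))
```

With this data, TP-04 is chosen first (four new requirements), then TP-01 (two more), after which all six requirements are covered.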

Software testing with an automated test tool that has its own scripting language requires software test engineers to apply analytical skills and to perform more of the same design and development tasks that software developers carry out. Test engineers with a manual test background, upon finding themselves on a project incorporating one or more automated test tools, may need to follow the lead of test team personnel who have more experience with the test tool or significantly more programming experience. These more experienced test engineers should assume leadership roles in test design and test script programming, as well as in the establishment of test script programming standards and frameworks. These same seasoned test professionals need to act as mentors for more junior test engineers. Chapter 5 provides more details on test engineers’ roles and responsibilities.

In GUI-based client-server system environments, test team personnel need to act as system engineers as well as software developers. Knowledge and understanding of network software, routers, LANs, various client-server operating systems, and database software are useful in this regard. In the future, the skills required of the software developer and the software test engineer will continue to converge. Junior systems engineering personnel, including network engineers and system administrators, may see the software test engineering position as a way to expand their software engineering capability.

1.4.4 Test Effort

“We have only one week left before we are supposed to initiate testing. Should we use an automated test tool?” Answering yes to this question is not a good idea. Building an infrastructure of automated test scripts so that testing can be executed quickly requires considerable investment in test planning and preparation. Perhaps the project manager has been overheard making a remark such as, “The test effort is not going to be that significant, because we’re going to use an automated tool.” The test team needs to be cautious when it encounters statements to this effect. As described in Table 1.3, ad hoc implementation of automated testing can actually increase testing costs by 125%, or even 150%, of manual test costs with each test cycle.

Many software professionals perceive that the use of automated test tools makes the software test effort less significant in terms of person-hours, or at least less complex in terms of planning and execution. In reality, the savings from automated test tools take time to accrue. In fact, the first time a test team uses a particular automated test tool, it may realize little or no savings.
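Why savings take time can be made concrete with a simple break-even calculation: automation pays back only after the per-cycle savings have covered the up-front investment. The sketch below assumes a deliberately simplified cost model (a fixed setup effort plus a constant effort per cycle for each approach), and the hour figures are hypothetical.

```python
import math

def break_even_cycles(setup_hours, manual_hours_per_cycle, automated_hours_per_cycle):
    """Smallest number of test cycles at which cumulative automated effort
    (setup plus per-cycle execution and maintenance) is no greater than
    cumulative manual effort. Returns None if automation never pays back."""
    saving_per_cycle = manual_hours_per_cycle - automated_hours_per_cycle
    if saving_per_cycle <= 0:
        return None  # e.g., ad hoc automation that costs more per cycle
    return math.ceil(setup_hours / saving_per_cycle)

if __name__ == "__main__":
    # HYPOTHETICAL figures: 200 hours to build the script infrastructure,
    # 40 hours per manual cycle, 15 hours per automated cycle.
    print(break_even_cycles(200, 40, 15))  # 200 / 25 = 8 cycles
```

Under these assumed numbers the first seven cycles cost more than manual testing; only from the eighth cycle onward does automation come out ahead, and if per-cycle automated effort exceeds manual effort it never does.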

The use of automated tools may increase the scope and breadth of the test effort within a limited schedule and help displace manual, mundane, and repetitive efforts, which are both labor-intensive and error-prone. Automated testing allows test engineers to focus their skills on the more challenging tasks. Nevertheless, automated testing introduces a new level of complexity that a project’s test team may not have experienced previously. Expertise in test script programming is required but may be new to the test team, and few members of the team may have coding experience. Even when the test team is familiar with one automated test tool, the tool required for a new project may differ.

For a test engineer or test lead on a new project, it is important to listen for the level of expectations communicated by the project manager or other project personnel. As outlined in Chapter 2, the test engineer will need to carefully monitor and influence automated test expectations. Without such assiduous attention to expectations, the test team may suddenly find the test schedule reduced or test funding cut to levels that do not support sufficient testing. Other consequences of ignoring this aspect of test management include the transfer to the test team of individuals with insufficient test or software programming skills or the rejection of a test team request for formal training on a new automated test tool.

Although the use of automated test tools provides the advantages outlined in Section 1.1, automated testing represents an investment that requires careful planning, a defined and structured process, and competent software professionals to execute and maintain test scripts.

1.5 Software Testing Careers

“I like to perform a variety of different work, learn a lot of things, and touch a lot of different products. I also want to exercise my programming and database skills, but I don’t want to be off in my own little world, isolated from others, doing nothing but hammering out code.” Does this litany of desirable job characteristics sound familiar?

Software test engineering can provide an interesting and challenging work assignment and career. Moreover, there is high demand in the marketplace for test engineering skills. The evolution of automated test capabilities has given birth to many new career opportunities for software engineers. This trend is further boosted by U.S. quality standards and software maturity guidelines that place a greater emphasis on software test and other product assurance disciplines. A review of computer-related classified job ads in the weekend newspaper clearly reveals the ongoing explosion in demand for automated software test professionals. Software test automation, as a discipline, remains in its infancy, and the number of test engineers with automated test experience presently cannot keep pace with demand.

Many software engineers are choosing careers in the automated test arena for two reasons: (1) the different kinds of tasks involved and (2) the variety of applications to which they are introduced. Experience with automated test tools can also provide a career lift. It gives software engineers a broader set of skills and may provide a competitive career development edge. Likewise, developing automated testing skills may be just what an aspiring college graduate needs to break into a software engineering career.

“How do I know whether I would make a good test engineer?” you might ask. If you are already working as a software test engineer, you might pause to question whether a future in the discipline is right for you. Good software developers have been trained to make things work and to work around problems if necessary. The test engineer, on the other hand, needs to be able to make things fail, but also requires a developer’s mentality to develop work-around solutions when necessary, especially during the construction of test scripts.

Test engineers need to be structured, attentive to detail, and organized, and, given the complexities of automated testing, they should possess a creative, forward-planning mindset. Because test engineers work closely and cooperatively with software developers, they need to be both assertive and poised when working through trouble reports and issues with developers.

Given the complexity of the test effort in a client-server or multitier environment, test engineers should have a broad range of technical skills. Test engineers, and test teams for that matter, need experience across multiple platforms, multiple layers of supporting applications, interfaces to other products and systems, different types of databases, and application languages. On top of this, in an automated test environment the test engineer needs to know the script programming language of the primary automated test tool.

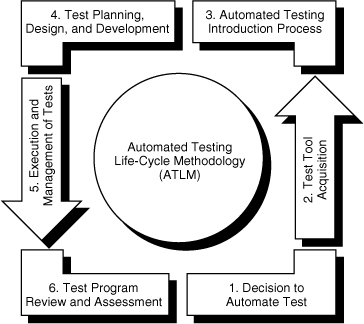

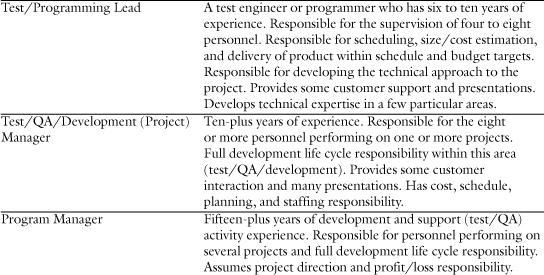

What might be a logical test career development path? Table 1.4 outlines a series of progressive steps possible for an individual performing in a professional test engineering capacity. This test engineer career development program is further described in Appendix C. This program identifies the different kinds of skills and activities at each stage or level and indicates where would-be test engineers should focus their time and attention so as to improve their capabilities and boost their careers. Individuals already performing in a management capacity can use this program as a guideline on how to approach training and development for test team staff.

Table 1.4. Test Career Progression

Because today’s test engineer needs to develop a wide variety of skills and knowledge in areas that include programming languages, operating systems, database management systems, and networks, the ambitious software test engineer has the option of moving from the test arena into other disciplines. For example, he or she might work in software development, system administration, network management, or software quality assurance.

Fortunately for the software test engineering professional, manual testing is gradually being replaced by tests involving automated test tools. Manual testing will not be replaced entirely, however, because some activities that only a human can perform will persist, such as inspecting the results of an output report.

As noted earlier, manual testing is labor-intensive and error-prone, and it does not support the same kind of quality checks that are possible through the use of an automated test tool. The introduction of automated test tools may replace some manual test processes with a more effective and repeatable testing environment. This gives the professional test engineer more time to achieve greater depth and breadth of testing, focus on problem analysis, and verify proper performance of software following modifications and fixes. Combined with the opportunity to perform programming tasks, this flexibility promotes test engineer retention and improves test engineer morale.

In the future, the software test effort will continue to become more automated and the kinds of testing available will continue to grow. These trends will require that software test engineering personnel become more organized and technically more proficient. The expansion of automation and the proliferation of multitier systems will require that test engineers have both software and system skills. In addition, software test engineering will offer many junior software engineers a place to start their software careers.

An article in Contract Professional magazine described a college student in Boston who learned an automated test tool while in school and landed a software test engineering position upon graduation. The student stated that she enjoyed her job because “you perform a variety of different work, learn a lot of things, and touch a lot of different products” [13]. The article also noted that software test engineers are in high demand, crediting this demand to the “boom in Internet development and the growing awareness of the need for software quality.”

It is likely that more universities will acknowledge the need to offer training in the software test and software quality assurance disciplines. Some university programs already offer product assurance degrees that incorporate training in test engineering and quality assurance. The state of Oregon has provided funding of $2.25 million to establish a master’s degree program in quality software engineering in the greater Portland metropolitan area [14]. Additionally, North Seattle Community College offers two-year and four-year degree programs with automated testing curricula, as well as individual courses on automated testing (see its Web site at http://nsccux.sccd.ctc.edu/).

For the next several years, the most prominent software engineering environments will involve GUI-based client-server and Web-based applications. Automated test activity will continue to become more important, and the breadth of test tool coverage will continue to expand. Many people will choose careers in the automated test arena—many are already setting off on this path today.

Chapter Summary

• The incremental release of software, together with the development of GUI-based client-server or multitier applications, introduces new complexity to the test effort.

• Organizations want to test their software adequately, but within a minimum schedule.

• The rapid application development approach calls for repeated cycles of coding and testing.

• The automation of test activities provides its greatest value in instances where test scripts are repeated or where test script subroutines are created and then invoked repeatedly by a number of test scripts.

• The use of automated test tools to support the test process is proving to be beneficial in terms of product quality and minimizing project schedule and effort.

• The Automated Testing Life-cycle Methodology (ATLM) includes six components and represents a structured approach with which to implement and execute testing.

• Early life-cycle involvement by test engineers in requirements and design review (as emphasized by the ATLM) bolsters test engineers’ understanding of business needs, increases requirements testability, and supports effective test design and development—a critically important activity when utilizing an automated test tool.

• Test support for finding errors early in the development process provides the most significant reduction in project cost.

• Personnel who perform testing must be more familiar with the application under test and must have specific skills relevant to the platforms and network involved as well as the automated test tools being used.

• Automated software testing, using automated test tools, is a development activity that includes programming responsibilities similar to those of the developer of the application under test.

• Building an infrastructure of automated test scripts so that testing can be executed quickly requires a considerable investment in test planning and preparation.

• An effective test program, incorporating the automation of software testing, involves a development life cycle of its own.

• Software test engineering can provide an interesting and challenging work assignment and career, and test engineering skills are in high demand in the marketplace.

References

1. CenterLine Software, Inc. Survey. 1996. CenterLine is a software testing tool and automation company in Cambridge, Massachusetts.

2. http://www.standishgroup.com/chaos.html.

3. Littlewood, B. How Good Are Software Reliability Predictions? Software Reliability Achievement and Assessment. Oxford: Blackwell Scientific Publications, 1987.

4. Burnstein, I., Suwanassart, T., Carlson, C.R. Developing a Testing Maturity Model, Part II. Chicago: Illinois Institute of Technology, 1996.

6. Paulk, M., Weber, C., Curtis, B., Chrissis, M. The Capability Maturity Model: Guidelines for Improving the Software Process. Reading, MA: Addison-Wesley, 1995.

7. Paulk, M., Curtis, B., Chrissis, M., Weber, C. “Capability Maturity Model, Version 1.1.” IEEE Software July 1993: 18–27.

8. Paulk, M., et al. “Key Practices of the Capability Maturity Model, Version 1.1.” Technical Report CMU/SEI-93-TR-25. Pittsburgh, PA: Software Engineering Institute, 1993.

10. Gelperin, D., Hetzel, B. “The Growth of Software Testing.” CACM 1988;31:687–695.

12. Daich, G., Price, G., Ragland, B., Dawood, M. “Software Test Technologies Report.” STSC, Hill Air Force Base, Utah, August 1994.

13. Maglitta, M. “Quality Assurance Assures Lucrative Contracts.” Contract Professional Sept./Oct. 1997.

14. Bernstein, L. Pacific Northwest Software Quality Conference (1997). TTN On-Line Edition Newsletter December 1997.