Chapter 3. Introduction to Industrial Network Security

Information in this Chapter:

• The Importance of Securing Industrial Networks

• The Impact of Industrial Network Incidents

• Examples of Industrial Network Incidents

• APT and Cyber War

Securing an industrial network, although similar in many ways to standard enterprise information security, presents several unique challenges. Because industrial systems are built for reliability and longevity, the systems and networks used are easily outpaced by the tools employed by an attacker. An industrial control system may be expected to operate without pause for months or even years, and its overall life expectancy may be measured in decades. Attackers, by contrast, have easy access to new exploits and can employ them at any time. Security considerations and practices have also lagged, largely for the same reason: the systems used predate modern network infrastructures, and so they have always been secured physically rather than digitally.

Because of the importance of industrial networks and the potentially devastating consequences of an attack, new security methods need to be adopted. As can be seen in real-life examples of industrial cyber sabotage (see the section “Examples of Industrial Network Incidents”), our industrial networks are being targeted. Furthermore, they are the target of a new threat profile that utilizes more sophisticated and targeted attacks than ever before.

The Importance of Securing Industrial Networks

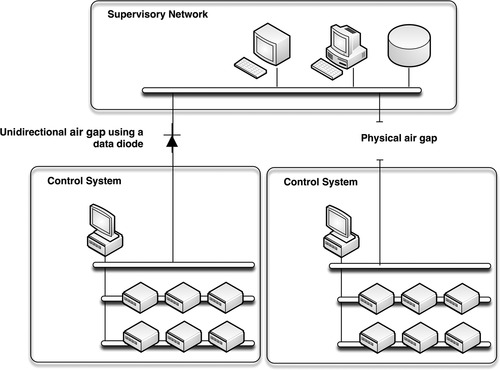

The need to improve the security of industrial networks cannot be overstated. Many industrial systems are built using legacy devices, in some cases running legacy protocols that have evolved to operate in routable networks. Before the proliferation of Internet connectivity, web-based applications, and real-time business information systems, energy systems were built for reliability. Physical security was always a concern, but information security was not a concern, because the control systems were air-gapped—that is, physically separated with no common system (electronic or otherwise) crossing that gap, as illustrated in Figure 3.1.

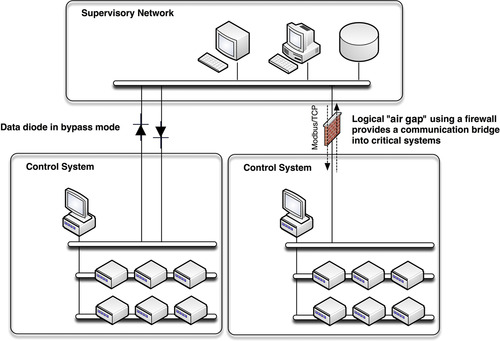

Ideally, the air gap would still exist, and it would still apply to digital communication, but in reality it does not. As the business operations of industrial networks evolved, so did the need for real-time information sharing. Because the required information originated on the other side of the air gap, a means to bypass the gap had to be found. Typically, a firewall was used for this purpose, blocking all traffic except what was absolutely necessary to improve the efficiency of business operations.

The problem is that—regardless of how justified or well intended the action—the air gap no longer exists, as seen in Figure 3.2. There is now a path into critical systems, and any path that exists can be found and exploited.

Security consultants at Red Tiger Security presented research in 2010 that clearly indicates the current state of security in industrial networks. Penetration tests were performed on approximately 100 North American electric power generation facilities, resulting in more than 38,000 security warnings and vulnerabilities. 1 Red Tiger was then contracted by the Department of Homeland Security (DHS) to analyze the data in search of trends that could be used to help identify common attack vectors and, ultimately, to help improve the security of these critical systems against cyber attack.

1.J. Pollet, Red Tiger, Electricity for free? The dirty underbelly of SCADA and smart meters, in: Proc. 2010 BlackHat Technical Conference, Las Vegas, NV, July 2010.

The results were presented at the 2010 BlackHat conference and revealed a security climate that was lagging behind other industries. The average time between the public disclosure of a vulnerability and its discovery in a control system was 331 days: almost an entire year. Worse still, some vulnerabilities were over 1100 days old, more than 3 years past their respective “zero-day.”2

2.Ibid.
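The age metric behind these figures is simple arithmetic: for each finding, count the days between the public disclosure of a vulnerability and its discovery in a control system, then average over all findings. The following Python sketch shows that calculation. The Finding record, the identifiers, and the dates are hypothetical placeholders for illustration, not data from the Red Tiger study, and the study's exact methodology may differ.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass(frozen=True)
class Finding:
    """A hypothetical assessment finding (illustrative only)."""
    vuln_id: str
    disclosed: date   # date the vulnerability was publicly disclosed
    discovered: date  # date it was found still present in the control system

    @property
    def age_days(self) -> int:
        # Days the vulnerability had been public when it was discovered.
        return (self.discovered - self.disclosed).days


def average_age(findings: list[Finding]) -> float:
    """Mean age, in days, across all findings."""
    return mean(f.age_days for f in findings)


def older_than(findings: list[Finding], days: int) -> list[Finding]:
    """Findings that had been public for more than `days` days."""
    return [f for f in findings if f.age_days > days]


# Hypothetical sample data.
findings = [
    Finding("VULN-A", date(2009, 1, 15), date(2009, 12, 1)),
    Finding("VULN-B", date(2007, 3, 1), date(2010, 3, 10)),
]
print(average_age(findings))                            # 712.5
print([f.vuln_id for f in older_than(findings, 1100)])  # ['VULN-B']
```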

What does this tell us? It tells us that there are known vulnerabilities that can give hackers and cyber criminals entry into our control networks. A vulnerability that has been publicly disclosed for almost a year has almost certainly been incorporated into open source penetration testing tools such as Metasploit and BackTrack, making its exploitation fairly easy and available to a wide audience.

It should not be a surprise that there are well-known vulnerabilities within control systems. Control systems are by design very difficult to patch. When access to outside networks and the Internet is intentionally limited (or, even better, eliminated), simply obtaining patches can be difficult. Because reliability is paramount, actually applying patches once they are obtained can also be difficult and is often restricted to planned maintenance windows. The result is that there will almost always be unpatched vulnerabilities, although reducing the window from an average of 331 days to a weekly or even monthly maintenance window would be a huge improvement.

The Impact of Industrial Network Incidents

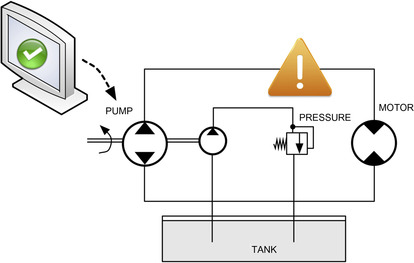

Industrial networks are responsible for process and manufacturing operations of almost every scale, and as a result the successful penetration of a control system network can directly impact those processes. Consequences could range from relatively benign disruptions, such as halting an operation (taking a facility offline) or altering an operational process (changing the formula of a chemical process or recipe), all the way to deliberate acts of sabotage intended to cause harm. For example, manipulating the feedback loop of certain processes could cause pressure within a boiler to build beyond safe operating parameters, as shown in Figure 3.3. Cyber sabotage could result in the loss of critical services (blackouts, unavailability of vaccines, etc.), injury or loss of life, or even catastrophic explosions.

Safety Controls

To avoid catastrophic failures, most industrial networks employ automated safety systems. However, many of these safety controls employ the same messaging and control protocols used by the industrial control network’s operational processes, and in some cases, such as certain fieldbus implementations, the safety systems are supported directly within the same communications protocols as the operational controls, on the same physical media (see Chapter 4, “Industrial Network Protocols,” for details and security concerns of industrial control protocols).

Although safety systems are extremely important, they have also been used to downplay the need for heightened security of industrial networks. However, research has shown that real consequences can occur in modeled systems. Simulations performed by the Sandia National Laboratories showed that simple Man-in-the-Middle (MITM) attacks could be used to change values in a control system and that a modest-scale attack on a larger bulk electric system using targeted malware (in this scenario, targeting specific control system front end processors) was able to cause significant loss of generation. 3

3.M.J. McDonald, G.N. Conrad, T.C. Service, R.H. Cassidy, SANDIA Report SAND2008-5954, Cyber Effects Analysis Using VCSE Promoting Control System Reliability, Sandia National Laboratories Albuquerque, New Mexico and Livermore, California, September 2008.

The European research team VIKING (Vital Infrastructure, Networks, Information and Control Systems Management) is currently investigating threats of a different sort. The Automatic Generation Control (AGC) of electric utilities operates as a fully closed loop: the control process completes within the logic of the SCADA system, without human intervention or control. Rather than breaching a control system through the manipulation of an HMI, VIKING’s research investigates whether manipulating the loop’s input data could alter normal control loop functions, ultimately causing a disturbance. 4

4.A. Giani, S. Sastry, K.H. Johansson, H. Sandberg, The VIKING Project: An Initiative on Resilient Control of Power Networks, Department of Electrical Engineering and Computer Sciences, University of California at Berkeley, and School of Electrical Engineering, Royal Institute of Technology (KTH), Berkeley, CA, 2009.
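The concern is easy to see even in a generic loop. The Python sketch below simulates a hypothetical first-order process under proportional-integral (PI) control; it is not a model of AGC, and the gains and process coefficients are arbitrary illustrative values. An attacker who adds a constant bias to the sensor reading never touches the controller or the HMI, yet the controller dutifully drives the measured value to the setpoint, so the true process value settles at the setpoint minus the bias.

```python
def simulate(setpoint: float, steps: int, sensor_bias: float = 0.0,
             kp: float = 0.5, ki: float = 0.1,
             a: float = 0.9, b: float = 0.1) -> tuple[float, float]:
    """Simulate a hypothetical process x[k+1] = a*x[k] + b*u[k] under PI control.

    The controller sees only the measurement x + sensor_bias, so a nonzero
    bias models falsified input data. Returns (true value, measured value).
    """
    x = 0.0         # true process value
    integral = 0.0  # accumulated error for the integral term
    for _ in range(steps):
        measured = x + sensor_bias
        error = setpoint - measured
        integral += error
        u = kp * error + ki * integral  # PI control output
        x = a * x + b * u               # process responds to the output
    return x, x + sensor_bias


true_ok, seen_ok = simulate(100.0, 500)
true_bad, seen_bad = simulate(100.0, 500, sensor_bias=5.0)
print(round(true_ok, 3), round(seen_ok, 3))    # 100.0 100.0
print(round(true_bad, 3), round(seen_bad, 3))  # 95.0 100.0
```

In both runs the controller observes a value of 100, so nothing in the measured data reveals that the biased process is actually running 5 units low.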

Tip

When establishing a cyber security plan, think of security and safety as two entirely separate entities. Do not assume that security leads to safety or that safety leads to security. If an automated safety control is compromised by a cyber attack (or otherwise disrupted), the necessity of having a strong digital defense against the manipulation of operations becomes even more important. Likewise, a successful safety policy should not rely on the security of the networks used. By planning for both safety and security controls that operate independently of one another, both systems will be inherently more reliable.

Consequences of a Successful Cyber Incident

A successful cyber attack on a control system can either

• delay, block, or alter the intended process, for example, by altering the amount of energy produced at an electric generation facility.

• delay, block, or alter information related to a process, for example, by preventing a bulk energy provider from obtaining production metrics that are used in energy trading or other business operations.

The end result could be penalties for regulatory non-compliance or the financial impact of lost production hours due to misinformation or denial of service. An incident could impact the control system in almost any way, from taking a facility offline or disabling or altering safeguards to causing life-threatening incidents within the plant, up to and including the release or theft of hazardous materials or direct threats to national security. 5 The possible damages resulting from a cyber incident vary depending upon the type of incident, as shown in Table 3.1.

5.K. Stouffer, J. Falco, K. Scarfone, National Institute of Standards and Technology, Special Publication 800-82 (Final Public Draft), Guide to Industrial Control Systems (ICS) Security, Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology Gaithersburg, MD and Intelligent Systems Division, Manufacturing Engineering Laboratory, National Institute of Standards and Technology Gaithersburg, MD, September 2008.

Examples of Industrial Network Incidents

Over the past decade, there have been numerous incidents, outages, and other failures that have been attributed to cyber attacks. In 2000, a disgruntled man in Australia who had been rejected for a government job was accused of using a radio transmitter to alter electronic data within a sewerage pumping station, causing the release of over two hundred thousand gallons of raw sewage into nearby rivers. 6

6.T. Smith, The Register. Hacker jailed for revenge sewage attacks. < http://www.theregister.co.uk/2001/10/31/hacker_jailed_for_revenge_sewage/>, October 31, 2001 (cited: November 3, 2010).

In 2007, there was the Aurora Project: a controlled experiment by the Idaho National Laboratory (INL) that successfully demonstrated that a generator could be destroyed via a cyber attack. The vulnerability allowed hackers (in this case, white-hat security researchers at INL) to open and close the breakers of a diesel generator out of sync, causing an explosive failure. In September 2007, CNN reported on the experiment, bringing the security of our power infrastructure into the popular media. 7

7.J. Meserve, CNN.com. Sources: Staged cyber attack reveals vulnerability in power grid. < http://articles.cnn.com/2007-09-26/us/power.at.risk_1_generator-cyber-attack-electric-infrastructure>, September 26, 2007 (cited: November 3, 2010).

The Aurora vulnerability remains a concern today. The North American Electric Reliability Corporation (NERC) first issued an alert on Aurora in June 2007, a few months before CNN’s report, and has since issued additional alerts, as recently as October 2010, that provide clear mitigation strategies for dealing with the vulnerability. 8

8.North American Electric Reliability Corporation, Press Release: NERC Issues AURORA Alert to Industry, October 14, 2010.

In 2008, the agent.btz worm began infecting U.S. military machines and was reportedly carried into CENTCOM’s classified network on a USB thumb drive later that year. Although the CENTCOM breach, reported by CBS’ 60 Minutes in November 2009, was widely publicized, the specifics are difficult to ascertain and the damages and intentions remain highly speculative. 9

9.CBS News, Cyber war: sabotaging the system. < http://www.cbsnews.com/stories/2009/11/06/60minutes/main5555565.shtml>, November 8, 2009 (cited: November 3, 2010).

Not to be confused with the Aurora Project is Operation Aurora, an attack that hit Google and other companies in late 2009 and put the spotlight on the sophisticated new arsenal of cyber war. Operation Aurora used a zero-day exploit in Internet Explorer to deliver a payload designed to exfiltrate protected intellectual property. It changed the threat landscape from denial of service attacks and malware designed to damage or disable networks to targeted attacks designed to operate without disruption, remain stealthy, and steal information undetected. Aurora consisted of multiple individual pieces of malware, which combined to establish a hidden presence on a host and then communicate over a sophisticated command and control (C2) channel, using a custom encrypted protocol on port 443 that mimicked common SSL-encrypted HTTPS traffic. 10 Although neither the CENTCOM breach nor Operation Aurora targeted industrial networks specifically, they exemplified the evolving nature of threats. In other words, Aurora demonstrated the existence of Advanced Persistent Threats (APTs), just as a more recent worm demonstrated the existence of targeted cyber weapons and the machinations of cyber war.

10.McAfee Threat Center, Operation Aurora. < http://www.mcafee.com/us/threat_center/operation_aurora.html> (cited: November 4, 2010).

This later worm, of course, is Stuxnet: the new weapon of cyber war, which came to light in 2010 after infecting industrial control systems. Any speculation over the possibility of a targeted cyber attack against an industrial network has been settled by this extremely complex and intelligent collection of malware. Stuxnet is a tactical nuclear missile in the cyber war, and it was not a shot across the bow: it hit its mark and left behind proof that extremely complex and sophisticated attacks can and do target industrial networks. The worst-case scenario has now been realized: industrial vulnerabilities have been targeted and exploited by an APT.

Although Stuxnet was first encountered in June 2009, widespread discussion of it did not begin until the summer of 2010, after an Industrial Control Systems Cyber Emergency Response Team (ICS-CERT) advisory was issued. 11 Stuxnet uses four zero-days in total to infect and spread. It looks for Siemens SIMATIC WinCC and PCS 7 programs and then uses default SQL account credentials to infect connected Programmable Logic Controllers (PLCs) by injecting a rootkit via the Siemens fieldbus protocol, Profibus. Stuxnet then looks for automation devices using a frequency converter that controls the speed of a motor. If it finds a controller operating within a range of 800–1200 Hz, it attempts to sabotage the operation. 12

11.Industrial Control Systems Cyber Emergency Response Team (ICS-CERT), ICSA-10-238-01—STUXNET MALWARE MITIGATION, Department of Homeland Security, US-CERT, Washington, DC, August 26, 2010.

12.E. Chien, Symantec. Stuxnet: a breakthrough. < http://www.symantec.com/connect/blogs/stuxnet-breakthrough>, November 2010 (cited: November 16, 2010).

Although little was known at first, Siemens responded effectively, quickly issuing a security advisory as well as a tool for the detection and removal of Stuxnet. Stuxnet drew the attention of the mass media through the fall of 2010 for being the first threat of its kind: a sophisticated, blended threat that actively targets SCADA systems. It immediately raised the industry’s awareness of advanced threats and illustrated exactly why industrial networks need to dramatically improve their security measures.

Dissecting Stuxnet

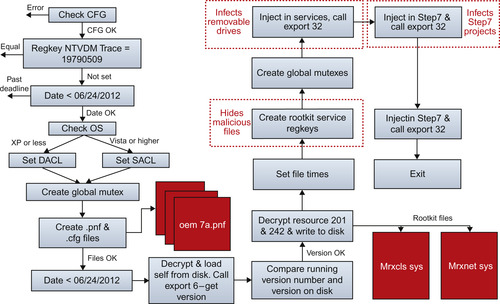

Stuxnet is very complex, as can be seen in Figure 3.4. It was used to deliver a payload targeting a specific control system. It is the first known industrial control system rootkit. It can self-update even when cut off from C2 (which is necessary should it find its way into a truly air-gapped system). It is able to inject code into the ladder logic of PLCs, alter the operations of the PLC, and hide itself by reporting false information back to the HMI. It adapts to its environment. It uses system-level, hard-coded authentication credentials that were not publicly disclosed. It was signed with legitimate digital certificates using stolen private keys. There is no doubt about it at this time: Stuxnet is an advanced new weapon in the cyber war.

Figure 3.4 Stuxnet’s Infection Processes. 13

13.N. Falliere, L.O. Murchu, E. Chien, Symantec, W32.Stuxnet Dossier, Version 1.3, October 2010. Courtesy of Symantec.

What It Does

The full extent of what Stuxnet is capable of doing is not known at the time of this writing. What we do know is that Stuxnet does the following: 14

14.N. Falliere, L.O. Murchu, E. Chien, Symantec. W32.Stuxnet Dossier, Version 1.1, October 2010.

• Infects Windows systems using a variety of zero-day exploits and stolen certificates, and installs a Windows rootkit on compatible machines.

• Attempts to bypass behavior-blocking and host intrusion protection technologies that monitor LoadLibrary calls, using special processes to load any required DLLs, including injection into preexisting trusted processes.

• Typically infects by injecting the entire DLL into another process and only exports additional DLLs as needed.

• Before attempting to inject its initial payload, checks that its host is running a compatible version of Windows, checks whether the host is already infected, and checks for installed Anti-Virus software.

• Spreads laterally through infected networks, using removable media, network connections, and/or Step 7 project files.

• Looks for target industrial systems (Siemens WinCC SCADA). When found, it uses hard-coded SQL authentication within the system to inject code into the database, infecting the system in order to gain access to target PLCs.

• Injects code blocks into the target PLCs that can interrupt processes, inject traffic onto the Profibus, and modify the PLC output bits, effectively establishing itself as a hidden rootkit that can inject commands to the target PLCs.

• Uses infected PLCs to watch for specific behaviors by monitoring Profibus (the industrial network protocol used by Siemens; see Chapter 4, “Industrial Network Protocols,” for more information on Profibus).

• If certain frequency controller settings are found, Stuxnet will throttle the frequency settings from 1410 Hz to 2 Hz in a cycle.

• It includes the capabilities to remove itself from incompatible systems, lie dormant, reinfect cleaned systems, and communicate peer to peer in order to self-update within infected networks.

What we do not know at this point is the full extent of the damage that could be caused by the malicious code inserted into the PLC. Subtle changes to set points over time could go unnoticed while causing failures down the line; the PLC logic could be used to exfiltrate additional details of the control system (such as command lists); or the code could do just about anything else. Because Stuxnet has exhibited the capability to hide itself and lie dormant, its end goal is still a mystery.
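One defensive response to slow set point drift is to periodically compare the values configured in each controller against an approved baseline. The Python sketch below assumes that the observed values were obtained through a trusted path that an attacker cannot spoof (Stuxnet, after all, fed false data to the HMI); how those values are read is out of scope, and the tag names and tolerances are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Approved value for one set point (hypothetical)."""
    value: float
    tolerance: float  # maximum allowed absolute deviation


def check_setpoints(baseline: dict[str, Baseline],
                    observed: dict[str, float]) -> list[str]:
    """Return a human-readable finding for every deviation from the baseline."""
    findings = []
    for tag, ref in sorted(baseline.items()):
        if tag not in observed:
            findings.append(f"{tag}: missing")
        elif abs(observed[tag] - ref.value) > ref.tolerance:
            findings.append(f"{tag}: {observed[tag]} outside {ref.value} +/- {ref.tolerance}")
    # Set points present in the controller that were never approved.
    for tag in sorted(observed.keys() - baseline.keys()):
        findings.append(f"{tag}: not in approved baseline")
    return findings


approved = {
    "BOILER1.PRESSURE_SP": Baseline(value=150.0, tolerance=2.0),
    "PUMP3.SPEED_SP": Baseline(value=1450.0, tolerance=10.0),
}
current = {"BOILER1.PRESSURE_SP": 158.5, "PUMP3.SPEED_SP": 1452.0, "VALVE9.POS_SP": 40.0}
for line in check_setpoints(approved, current):
    print(line)
```

Comparing against a stored baseline, rather than against the previous reading, is what catches slow drift: each small change may look harmless relative to the last value, but the cumulative deviation from the approved value does not.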

Lessons Learned

Because Stuxnet is such a sophisticated piece of malware, there is a lot that we can learn from dissecting it and analyzing its behavior. How did we detect Stuxnet? Largely because it was so widespread. Had it been deployed more tactically, it might have gone unnoticed: altering PLC logic and then removing itself from the WinCC hosts that were used to inject those PLCs. How will we detect the next one? The truth is that we may not, and the reason is simple: our “barrier-based” methodologies do not work against cyber attacks that are this well researched and funded. They are delivered via zero-days, which means we do not detect them until they have been deployed, and they infect areas of the control system that are difficult to monitor.

So what do we do? We learn from Stuxnet and change our perception and attitude toward industrial network security (see Table 3.2). We adopt a new “need to know” mentality of control system communication. If something is not explicitly defined, approved, and allowed to communicate, it is denied. This requires understanding how control system communications work, establishing that “need to know” in the form of well-defined security enclaves, establishing policies and baselines around those enclaves that can be interpreted by automated security software, and whitelisting everything.
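At its core, this “need to know” policy is a default-deny lookup: a communication is permitted only if it matches an explicitly approved entry. The Python sketch below illustrates that logic for network flows between enclaves. The enclave names and policy entries are hypothetical; the ports are the registered defaults for Modbus/TCP (502) and DNP3 (20000).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A network communication between two enclaves."""
    src_enclave: str
    dst_enclave: str
    protocol: str
    port: int


# Hypothetical policy: the complete list of approved communication paths.
APPROVED_FLOWS = frozenset({
    Flow("control", "control", "modbus-tcp", 502),
    Flow("scada", "control", "dnp3", 20000),
})


def decide(flow: Flow, approved: frozenset = APPROVED_FLOWS) -> str:
    """Default deny: anything not explicitly approved is denied."""
    return "permit" if flow in approved else "deny"


print(decide(Flow("scada", "control", "dnp3", 20000)))          # permit
print(decide(Flow("corporate", "control", "modbus-tcp", 502)))  # deny
```

The policy never lists what to block; a new or unexpected flow, such as one opened by malware, is denied simply because nobody approved it.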

It can be seen in Table 3.2 that additional security measures need to be considered in order to address new “Stuxnet-class” threats that go beyond the requirements of compliance mandates and current best-practice recommendations. New measures include Layer 7 application session monitoring to discover zero-day threats and to detect covert malware communications. They also include more clearly defined security policies to be used in the adoption of policy-based user, application, and network whitelisting to control behavior in and between enclaves (see Chapter 7, “Establishing Secure Enclaves”).
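Application-layer whitelisting applies the same default-deny idea inside the session. As a minimal illustration, assuming Modbus/TCP traffic, the Python sketch below reads the function code from each request (it follows the 7-byte MBAP header) and raises an alert unless that code is approved for the source and destination pair. The device names and the policy are hypothetical, and a real monitor would also track sessions, register ranges, and responses.

```python
import struct

# Hypothetical policy: Modbus function codes each source may send to each PLC.
ALLOWED_FUNCTIONS = {
    ("hmi-01", "plc-07"): {1, 3},            # read coils, read holding registers
    ("eng-ws-01", "plc-07"): {1, 3, 6, 16},  # may also write registers
}


def inspect_modbus(src: str, dst: str, frame: bytes) -> str:
    """Check one Modbus/TCP request against the function-code whitelist."""
    if len(frame) < 8:  # 7-byte MBAP header plus at least the function code
        return "alert: truncated frame"
    _transaction, protocol_id, _length, _unit = struct.unpack(">HHHB", frame[:7])
    if protocol_id != 0:  # Modbus/TCP uses protocol identifier 0
        return "alert: unexpected protocol identifier"
    function_code = frame[7]
    if function_code not in ALLOWED_FUNCTIONS.get((src, dst), set()):
        return f"alert: function {function_code} not allowed from {src} to {dst}"
    return "ok"


# Read Holding Registers (function 3): 10 registers starting at address 0.
read_req = bytes.fromhex("000100000006" "01" "03" "0000" "000a")
# Write Single Register (function 6): write 0x00ff to register 1.
write_req = bytes.fromhex("000200000006" "01" "06" "0001" "00ff")
print(inspect_modbus("hmi-01", "plc-07", read_req))   # ok
print(inspect_modbus("hmi-01", "plc-07", write_req))  # alert: function 6 not allowed from hmi-01 to plc-07
```

Because the check is based on what is approved rather than on known attack signatures, it can flag a never-before-seen misuse, such as an unexpected write from an HMI, without prior knowledge of the exploit that caused it.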

Tip

Before Stuxnet, the axiom “to stop a hacker, you need to think like a hacker” was often used, meaning that in order to successfully defend against a cyber attack you need to think in terms of someone trying to penetrate your network. This philosophy still has merit, the only difference being that now the “hacker” can be thought of as having a much greater knowledge of control systems, as well as significantly more resources and motivation. In the post-Stuxnet world, imagine that you are building a digital bunker in the cyber war, rather than simply defending a network, and aim for the best possible defenses against the worst possible attack.

Night Dragon

In February 2011, McAfee announced the discovery of a series of coordinated attacks against oil, energy, and petrochemical companies. The attacks, which originated primarily in China, were believed to have begun in 2009 and had operated continuously and covertly for the purpose of information extraction, 15 as is indicative of an APT.

15.Global Energy Cyberattacks: “Night Dragon,” McAfee Foundstone Professional Services and McAfee Labs, Santa Clara, CA, February 10, 2011.

Night Dragon is further evidence of how an outside attacker can (and will) infiltrate critical systems. Although the attack did not result in sabotage, as was the case with Stuxnet, it did involve the theft of sensitive information. The intended use of this information is unknown at this time; the information that was stolen could be used for almost anything, and for a variety of motives. The attack began with SQL injection against corporate web servers, which were then used to access intranet servers. Using standard tools, attackers gained additional usernames and passwords to enable further infiltration of internal desktop PCs and servers. Night Dragon established command and control servers as well as Remote Administration Toolkits (RATs), primarily to extract e-mail archives from executive accounts. 16 It is important to note that although the industrial control systems of the target companies were not affected, important information could have been obtained regarding the design and operation of those systems, which could be used in a later, more targeted attack. As with any APT, Night Dragon is surrounded with uncertainty and supposition. After all, APT is an act of cyber espionage: one that may or may not develop into a more targeted cyber war.

16.Ibid.

APT and Cyber War

The terms APT and cyber war are often used interchangeably, and they can be related, but they differ enough in their intent to justify distinct classifications of modern, sophisticated network threats.

Although both are of concern to industrial networks, it is important to understand their differences and intentions, so that they can be better addressed. It is also important to understand that both are types of threat behaviors that consist of various exploits and are not specific exploits or pieces of malware themselves. That is, “APT” classifies a group of exploits (delivery) to infect a network with malicious code (the payload) that is designed to accomplish a specific goal (information theft). Cyber war similarly classifies a threat that can include distinct delivery mechanisms to deliver payloads of various intents. 17 Although both can utilize similar techniques, exploits, and even common code, the differences between APT and cyber war at a higher level distinguish one from another, as can be seen in Table 3.3.

17.J. Pollet, Red Tiger, Understanding the advanced persistent threat, in: Proc. 2010 SANS European SCADA and Process Control Security Summit, Stockholm, Sweden, October 2010.

Just as APT and cyber war differ in intent, they can also differ in their targets, as seen in Table 3.4. Again, the methods used to steal intellectual property for profit and the methods used to steal intellectual property to sabotage an industrial system can be the same. However, by determining the target of attack, insight into the nature of the attack can be inferred. The difference is a subtle one and is made here in an attempt to highlight the level of severity and sophistication that should be considered when securing industrial networks. That is, blended attacks designed to be persistent and undetected represent the APT, while these same blended and stealthy attacks can be weaponized and used for cyber sabotage. APT can be used to obtain information that is later used to construct new zero-day exploits. APT can also be used to obtain information necessary to design a targeted payload—such as the one used by Stuxnet—that can be delivered using those exploits. In other words, the methods, intentions, and impact of cyber war should be treated as even more sophisticated than the APT.

The Advanced Persistent Threat

The Advanced Persistent Threat, or APT, has earned broad media attention in recent years. The high publicity of the Aurora Project and Stuxnet increased awareness of new threat behaviors both within and outside of the information security communities. Incident researchers such as Exida (http://www.exida.com), Lofty Perch (http://www.loftyperch.com), and Red Tiger Security (http://www.redtigersecurity.com) specialize in the incident response of APT; organizations such as RISI (Repository of Industrial Security Incidents; http://www.securityincidents.org) have been developed to catalogue incident behavior; and regulatory and CERT organizations have issued warnings for APTs, including alerts from the North American Electric Reliability Corporation (NERC) for both Aurora and Stuxnet that required direct action from its member electric utilities, with clear penalties for noncompliance. 18

18.North American Electric Reliability Corporation, NERC Releases Alert on Malware Targeting SCADA Systems, September 14, 2010.

With all of this attention, a lot has been learned about how APTs function. One differentiator of an APT is a shift from broad, untargeted attacks to more directed attacks that focus on determining specifics about the target network. APTs spread, learn, and exfiltrate information through covert communications channels. Often, an APT relies upon outside C2, although in some cases, such as Stuxnet, APTs are capable of operating in isolation. 19

19.J. Pollet, Red Tiger, Understanding the advanced persistent threat, in: Proc. 2010 SANS European SCADA and Process Control Security Summit, Stockholm, Sweden, October 2010.

Another differentiator of APT from normal malware or hack attempts is an attempt to remain hidden and to proliferate within a network, leading to the persistence of the threat. This typically includes a tiered infection model, where increasingly sophisticated methods of covert communication are established. The most basic will operate in an attempt to obtain information from the target, whereas one or more increasingly sophisticated mechanisms will remain dormant. This tiered model increases the persistence of the threat, where the more difficult to detect infections only awaken after the removal of the initial APT. In this way, “cleaned” machines can remain infected. This is one reason why it is important to thoroughly investigate an APT before attempting disinfection, as the initially detected threat may be easier to deal with, while higher-level programs remain dormant. 20

20.K. Harms, Mandiant, Keynote on advanced persistent threat, in: Proc. 2010 SCADA Security Scientific Symposium (S4), Miami, FL, January 2010.

The end result of the APT’s relentless, layered approach is the deliberate exfiltration of data. Stolen proprietary information can provide anything from increased competitiveness in manufacturing and design (making the data valuable on the black market) to direct financial benefit achieved through the theft of financial resources and records. Highly classified information may also be valuable for the development of further, more sophisticated APTs, or even weaponized threats for use in cyber sabotage and cyber warfare.

Common APT Methods

The methods used by APT are diverse. Within industrial networks, incident data has been analyzed, and specific attacker profiles have been identified. The attacks themselves tend to be fairly straightforward, using Open Source Intelligence (OSINT) to facilitate social engineering, targeted spear phishing (customized e-mails designed to trick readers into clicking on a link, opening an attachment, or otherwise triggering malware), malicious attachments, removable media such as USB drives, and malicious websites as initial infection vectors. 21 APT payloads (the malware itself) range from freely available kits such as Webattacker and torrents, to commercial malware such as Zeus (ZBOT), Ghostnet (Ghostrat), Mumba (Zeus v3), and Mariposa. Malware delivery is typically obfuscated to avoid detection by Anti-Virus and other detection mechanisms. 22

21.J. Pollet, Red Tiger, Understanding the advanced persistent threat, in: Proc. 2010 SANS European SCADA and Process Control Security Summit, Stockholm, Sweden, October 2010.

22.Ibid.

Once a network is infected, APT strives to operate covertly and may attempt to deactivate or circumvent Anti-Malware software, establish backdoor channels, or open holes in firewalls. 23 Stuxnet, for example, attempts to avoid discovery by bypassing host intrusion detection and also by removing itself from systems that are incompatible with its payload. 24

23.Ibid.

24.N. Falliere, L.O. Murchu, E. Chien, Symantec. W32.Stuxnet Dossier, Version 1.1, October 2010.

Because the techniques used are for the most part common infection vectors and known malware, what is so “advanced” about the APT? One area where APT is often very sophisticated is in the knowledge of its target—known information about the target and the people associated with that target. For example, highly effective spear phishing may utilize knowledge of the target corporation’s organization structure (e.g., a mass e-mail that masquerades as a legitimate e-mail from an executive within the company), or of the local habits of employees (e.g., a mass e-mail promising discounted lunch coupons from a local eatery). 25

25.J. Pollet, Red Tiger, Understanding the advanced persistent threat, in: Proc. 2010 SANS European SCADA and Process Control Security Summit, Stockholm, Sweden, October 2010.
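The executive-impersonation example above can be partly screened for at the mail gateway. The sketch below is a hypothetical heuristic, not a product feature: it assumes a single corporate domain (`example-utility.com` is a placeholder) and a hand-maintained set of executive names, and flags any message whose display name matches an executive while the actual sending address is external.

```python
from email.utils import parseaddr

# Hypothetical values: replace with your own corporate domain and the
# names of executives most likely to be impersonated.
CORPORATE_DOMAIN = "example-utility.com"
EXECUTIVE_NAMES = {"jane smith", "robert jones"}


def is_possible_executive_spoof(from_header: str) -> bool:
    """Flag a From: header whose display name matches a known executive
    while the real address is outside the corporate domain."""
    display_name, address = parseaddr(from_header)
    # Normalize case and collapse repeated whitespace in the display name.
    name = " ".join(display_name.lower().split())
    domain = address.rpartition("@")[2].lower()
    return name in EXECUTIVE_NAMES and domain != CORPORATE_DOMAIN
```

For example, `'"Jane Smith" <jane.smith.ceo@gmail.com>'` would be flagged, while the same name sent from the corporate domain would not. An attacker can evade an exact-name match with variants such as “J. Smith,” so this is at best one signal among many.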

Cyber War

Unlike APT, where the initial infections typically come from focused yet simple exploits such as spear phishing (the “advanced” moniker comes from the behavior of the threat after infection), the threats associated with cyber war trend toward more sophisticated delivery mechanisms and payloads. 26 Stuxnet, for example, used multiple zero-day exploits for infection. Developing even one zero-day requires resources: the financial resources to purchase commercial malware or the intellectual resources with which to develop new malware. Stuxnet prompted intense speculation about its source and intent, at least partly because of the resources required to deliver the worm through so many zero-days. Stuxnet also used “insider intelligence” to focus on its target control system, which again implies that its creators had significant resources: either they had access to an industrial control system with which to develop and test their malware, or they knew enough about how such a control system was built to develop it in a simulated environment.

26.Ibid.

One possibility is that the developers of Stuxnet used stolen intellectual property (the primary target of the Advanced Persistent Threat) to develop a more weaponized piece of malware. In other words, APT is a logical precursor to cyber war. In the case of Stuxnet, this remains pure speculation: at the time of this writing, the creators of Stuxnet are unknown, as is their intent.

Two important inferences can be drawn from comparing APT and cyber warfare. The first is that cyber warfare is higher in sophistication and in consequence, mostly because of the attacker’s greater resources and an ultimate goal of destruction rather than profit. The second is that many industrial networks offer a cyber attacker less opportunity for profit than other networks do. If the industrial network you are defending is largely responsible for commercial manufacturing, signs of an APT are likely evidence of attempted intellectual property theft. If the industrial network you are defending is critical and could potentially impact lives, signs of an APT could mean something larger, and extra caution should be taken when investigating and mitigating these attacks.

Emerging Trends in APT and Cyber War

Through the analysis of known cyber incidents, several trends can be identified in how APT and cyber attacks are being performed. These include, but are not limited to, shifts in the initial infection vectors, in the qualities of the malware used, in its behavior, and in how it infects and spreads.

Threats have been trending “up the stack” for some time, with exploits moving away from network-layer and protocol-layer vulnerabilities and toward application-specific exploits. Even more recent trends show the targeted applications shifting away from Microsoft software products toward the almost ubiquitously deployed Adobe Portable Document Format (PDF) and its associated software products.

Web-based applications are also used heavily both for infections and for C2. Social networks such as Twitter, Facebook, Google Groups, and other cloud services are ideal for both because they are widely used, highly accessible, and difficult to monitor. Many companies actually embrace social networking for marketing and sales purposes, often to the extent that these services are allowed open access through corporate firewalls.

The malware itself, of course, is also evolving. There is growing evidence among incident responders and forensics teams of deterministic malware and even the emergence of mutating bots. Stuxnet, again, is a good example: it contains robust logic and will operate differently depending upon its environment. It will spread, attempt to inject PLC code, communicate via C2, lie dormant, or awaken depending upon changes to its environment.

Evolving Vulnerabilities: The Adobe Exploits

Adobe Portable Document Format (PDF) exploits are an example of the shifting attack paradigm from lower-level protocol and OS exploits to the manipulation of application contents. At a very high level, these exploits abuse the PDF format’s ability to call and execute code in order to run malicious code, either by calling a malicious website or by embedding the code directly within the PDF file. It works like this:

• An e-mail carries a compelling, properly targeted spear-phishing message with a .pdf attachment.

• This PDF uses a feature, specified in the PDF format, known as a “Launch action.” Security researcher Didier Stevens successfully demonstrated that Launch actions can be exploited and can be used to run an executable embedded within the PDF file itself. 27

27.D. Stevens, Escape from PDF. < http://blog.didierstevens.com/2010/03/29/escape-from-pdf>, March 2010 (cited: November 4, 2010).

• The malicious PDF also contains an embedded file named Discount_at_Pizza_Barn_Today_Only.pdf, which has been compressed inside the PDF file. Despite its .pdf extension, this attachment is actually an executable file, and it will execute if the PDF is opened and the attachment is run.

• The PDF uses the JavaScript function exportDataObject to save a copy of the attachment to the user’s PC.

• When this PDF is opened in Adobe Reader (JavaScript must be enabled), the exportDataObject function causes a dialog box to be displayed asking the user to “Specify a file to extract to.” The default file name is that of the attachment, Discount_at_Pizza_Barn_Today_Only.pdf. The exploit relies on the user’s naïveté or confusion to get the file saved.

• Once the exportDataObject function has completed, the Launch action is run. The Launch action is used to execute the Windows command interpreter (cmd.exe), which searches for the previously saved executable attachment Discount_at_Pizza_Barn_Today_Only.pdf and attempts to execute it.

• A dialog box warns the user, and the command runs only if the user clicks “Open.”
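Each step above leaves a recognizable marker in the file: a /Launch action, JavaScript (/JavaScript or /JS), an /EmbeddedFile, and the exportDataObject call. The sketch below triages a PDF by counting those markers, loosely in the spirit of Didier Stevens’ pdfid tool; the keyword list is an assumption, not an exhaustive signature set. It decodes #xx hex escapes in PDF names (e.g., /J#61vaScript), a common obfuscation trick, but it does not inflate compressed (FlateDecode) streams, so JavaScript hidden inside a compressed stream would be missed.

```python
import re
from collections import Counter

# Assumed keyword list covering the Launch-action attack described above.
SUSPICIOUS_NAMES = {"/Launch", "/JavaScript", "/JS", "/EmbeddedFile",
                    "/OpenAction", "/AA"}
NAME_TOKEN = re.compile(rb"/[A-Za-z0-9#._+-]+")
HEX_ESCAPE = re.compile(rb"#([0-9A-Fa-f]{2})")


def _decode_name(token: bytes) -> str:
    # PDF names may hide characters as #xx hex escapes (e.g. /J#61vaScript).
    decoded = HEX_ESCAPE.sub(lambda m: bytes([int(m.group(1), 16)]), token)
    return decoded.decode("latin-1")


def triage_pdf(data: bytes) -> Counter:
    """Count suspicious PDF name objects and exportDataObject calls.

    Only uncompressed content is inspected."""
    hits = Counter()
    for token in NAME_TOKEN.findall(data):
        name = _decode_name(token)
        if name in SUSPICIOUS_NAMES:
            hits[name] += 1
    calls = data.count(b"exportDataObject")
    if calls:
        hits["exportDataObject"] = calls
    return hits
```

A nonzero count for /Launch together with /EmbeddedFile or exportDataObject is a strong reason to quarantine the attachment for closer analysis rather than deliver it.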

The hack has been used to spread known malware, including ZeusBot. 28 Although it does require user interaction, PDF files are extremely common, and when combined with a quality spear-phishing attempt, this attack can be very effective.

28.M86 Security Labs, PDF “Launch” Feature Used to Install Zeus. < http://www.m86security.com/labs/traceitem.asp?article=1301>, April 14, 2010 (cited: November 4, 2010).

Another researcher chose to infect a benign PDF with a different /Launch hack that redirected the user to a website, but noted that it could just as easily have been an exploit pack and/or an embedded Trojan binary. The dialog box used to warn users can also be modified, increasing the likelihood that even a normally cautious user will execute the file. 29

29.J. Conway, Sudosecure.net. Worm-Able PDF Clarification. < http://www.sudosecure.net/archives/644>, April 4, 2010 (cited: November 4, 2010).

Antisocial Networks: A New Playground for Malware

Social networking sites are increasingly popular, and they represent a serious risk to industrial networks. How can something as benign as Facebook or Twitter be a threat to an industrial network? By design, social networking sites make it easy to find and communicate with people, and people are subject to social engineering exploitation just as networks are subject to protocol and application exploits.

At the most basic level, they are a source of personal information and of end-user trust, both of which can be exploited directly or indirectly. At a more sophisticated level, social networks can be used actively by malware as a C2 channel. Fake accounts posing as “trusted” coworkers can lead to even more information sharing, or can trick the user into clicking on a link to a malicious website that infects the user’s laptop with malware. That malware could mine even further information, or it could be carried into a “secure” facility to impact an industrial network directly.

Although no direct evidence links the rise in web-based malware to social networking adoption, the correlation is strong enough that any good security plan should accommodate social networking, especially in industrial networks. According to Cisco, “Companies in the Pharmaceutical and Chemical vertical were the most at risk for web-based malware encounters, experiencing a heightened risk rating of 543 percent in 2Q10, up from 400 percent in 1Q10. Other higher risk verticals in 2Q10 included Energy, Oil, and Gas (446 percent), Education (157 percent), Government (148 percent), and Transportation and Shipping (146 percent).”30

30.Cisco Systems, 2Q10 Global Threat Report, 2010.

Apart from being a direct infection vector, social networking sites can be used by more sophisticated attackers to formulate targeted spear-phishing campaigns, such as the “pizza delivery” exercise. Through no direct fault of the social network operators (most have adequate privacy controls in place), users may post personal information about where they work, what their shift is, who their boss is, and other details that can be used to engineer a social exploitation. Spear phishing is already a proven tactic; combined with the additional trust associated with social networking communities, it is easier and even more effective.

Tip

Security awareness training is an important part of building a strong security plan, but it can also be used to assess current defenses. Conduct this simple experiment to both increase awareness of spear phishing and gauge the effectiveness of existing network security and monitoring capabilities:

1. Create a website using a free hosting service that displays a security awareness banner.

2. For this exercise, create a Gmail account using the name (modified if necessary) of a group manager, HR director, or the CEO of your company, disclosing this activity to that individual in advance and obtaining the necessary permissions. Assume the role of an attacker with no inside knowledge of the company: look for executives who are quoted in press releases or listed in other public documents. Alternatively, use the Social Engineering Toolkit (SET), a tool designed to “perform advanced attacks against the human element,” to perform a more thorough social engineering penetration test. 31

31.Social Engineering Framework, Computer based social engineering tools: Social Engineer Toolkit (SET). < http://www.social-engineer.org>.

3. Again, play the part of the attacker and use either SET or outside means such as Jigsaw.com or other business intelligence websites to build a list of e-mail addresses within the company.

4. Send an e-mail to the group from the fake “executive” account, asking recipients to read the attached article in preparation for an upcoming meeting.

5. Perform the same experiment on a different group, using an e-mail address originating from a peer (again, obtain necessary permissions). This time, attempt to locate a pizza restaurant local to your corporate offices, using Google map searches or similar means, and send an e-mail with a link to an online coupon for buy-one-get-one-free pizza.

Track your results to see how many people clicked through to the offered URL. Did anyone validate the “from” in the e-mail, reply to it, or question it in any way? Did anyone outside of the target group click through, indicating a forwarded e-mail?

Finally, with the security monitoring tools that are currently in place, is it possible to effectively track the activity? Is it possible to determine who clicked through (without looking at web logs)? Is it possible to detect abnormal patterns or behaviors that could be used to generate signatures, and detect similar phishing in the future?
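One way to answer “who clicked through” without digging through web logs is to give each recipient a unique token in the exercise URL. The sketch below tallies results under that assumption; the token scheme, the `(token, observed_user)` click records, and the idea that your monitoring tools can record the identity of whoever actually clicked are all hypothetical and should be adapted to your environment. Use it only for authorized awareness exercises.

```python
from dataclasses import dataclass, field


@dataclass
class PhishingResults:
    targeted: int
    # Recipients whose unique link was used at least once.
    clicked: set = field(default_factory=set)
    # Clickers who were not in the target group (suggests forwarding).
    outside_clickers: set = field(default_factory=set)

    @property
    def click_rate(self) -> float:
        return len(self.clicked) / self.targeted if self.targeted else 0.0


def tally_clicks(token_to_recipient: dict, click_log: list) -> PhishingResults:
    """token_to_recipient: hypothetical per-recipient URL token -> address.
    click_log: (token, observed_user) pairs from your monitoring tools."""
    targets = set(token_to_recipient.values())
    results = PhishingResults(targeted=len(targets))
    for token, user in click_log:
        recipient = token_to_recipient.get(token)
        if recipient is not None:
            results.clicked.add(recipient)
        if user not in targets:
            results.outside_clickers.add(user)
    return results
```

Counting distinct recipients rather than raw clicks keeps repeat visits from inflating the click rate, while the `outside_clickers` set directly answers the forwarded-e-mail question.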

The best defense against a social attack continues to be security awareness and situational awareness: the first helps prevent a socially engineered attack from succeeding by establishing best-practice behaviors among personnel; the second helps to detect if and when a successful breach has occurred, where it originated, and where it may have spread to—in order to mitigate the damage and correct any gaps in security awareness and training.

Caution

Always inform appropriate personnel of any security awareness exercise to avoid unintended consequences and/or legal liability, and NEVER perform experiments of this kind using real malware. Even if performed as an exercise, the collection of actual personal or corporate information could violate your employment policy or even state, local, or federal privacy laws.

Finally, social networks can also be used as a C2 channel between deployed malware and a remote server. One case of Twitter being used to deliver commands to a bot is the @upd4t3 channel, first detected in 2009, which uses standard 140-character tweets to link to base64-encoded URLs that deliver infostealer bots. 32

32.J. Nazario, Arbor Networks. Twitter-based Botnet Command Channel. < http://asert.arbornetworks.com/2009/08/twitter-based-botnet-command-channel>, August 13, 2009 (cited: November 4, 2010).

This use of social networking is difficult to detect: it is not feasible to scour these sites individually for such activity, and there is no reliable way to predict what the C2 commands may look like or where they might be found. In the case of @upd4t3, application session analysis of social networking traffic could detect the base64 encoding once a session was initiated. The easiest way to block this type of activity, of course, is to block access to social networking sites completely from inside industrial networks. However, the wide adoption of these sites within the enterprise (for legitimate sales, marketing, and even business intelligence purposes) makes it highly likely that any threat originating from or directly exploiting social networks can and will compromise the business enterprise.
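The session-analysis idea above can be illustrated with a small content check: find runs of base64 characters in a post’s text, decode them, and report any that turn out to be URLs. This is a sketch under stated assumptions (the 16-character minimum run length is an arbitrary noise threshold, and the input is assumed to be post text already extracted from a session), not a description of how the @upd4t3 channel was originally detected.

```python
import base64
import binascii
import re

# Candidate base64 runs: 16+ characters from the base64 alphabet plus
# optional padding. The length threshold is an assumption to limit noise.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def find_encoded_urls(text: str) -> list:
    """Return URLs hidden as base64 strings inside a post's text."""
    urls = []
    for run in B64_RUN.findall(text):
        try:
            decoded = base64.b64decode(run, validate=True).decode("ascii")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid base64, or decodes to non-ASCII bytes
        if decoded.startswith(("http://", "https://")):
            urls.append(decoded)
    return urls
```

Ordinary long words either fail to decode or decode to something that is not a URL, so they are skipped; only text that decodes cleanly to an http(s) address is reported.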

Cannibalistic Mutant Underground Malware

More serious than the 1984 New World Pictures film about cannibalistic humanoid underground dwellers, the newest breed of malware is a real threat. It is malware with a mind: using conditional logic to direct activity based on its surroundings until it finds itself in the perfect conditions in which it will best accomplish its goal (spread, stay hidden, deploy a weapon, etc.). Again, Stuxnet’s goal was to find a particular industrial process control system: it spread widely through all types of networks, and only took secondary infection measures when the target environment (SIMATIC) was found. Then, it again checked for particular PLC models and versions, and if found it injected process code into the PLC; if not, it lay dormant.

Malware mutations are also already in use. At a basic level, Stuxnet will update itself in the wild (even without a C2 connection), through peer-to-peer checks with others of its kind: if a newer version of Stuxnet bumps into an older version, it updates the older version, allowing the infection pool to evolve and upgrade in the wild. 33

33.J. Pollet, Red Tiger, Understanding the advanced persistent threat, in: Proc. 2010 SANS European SCADA and Process Control Security Summit, Stockholm, Sweden, October 2010.

Further mutation behavior involves self-destruction of certain code blocks with self-updates of others, effectively morphing the malware and making it more targeted as well as more difficult to detect. Mutation logic could include checking for the presence of other well-known malware and adjusting its own profile to utilize similar ports and services, knowing that this new profile will go undetected. In other words, malware is getting smarter and it is harder to detect.
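Why does mutation make detection harder? Many simple defenses match exact file hashes, and a cryptographic hash changes completely when even one byte changes. The sketch below uses harmless placeholder bytes (not real malware) to show an exact-hash blocklist catching a known sample but missing a variant that differs by a single byte.

```python
import hashlib


def matches_blocklist(sample: bytes, blocklist: set) -> bool:
    """Exact-hash signature check, as used by simple blocklists."""
    return hashlib.sha256(sample).hexdigest() in blocklist


# Placeholder bytes standing in for a known malware sample.
known_sample = b"MZ" + bytes(range(256)) * 4
blocklist = {hashlib.sha256(known_sample).hexdigest()}

# A "mutated" variant that differs from the original by one flipped bit.
variant = known_sample[:-1] + bytes([known_sample[-1] ^ 0x01])

print(matches_blocklist(known_sample, blocklist))  # True
print(matches_blocklist(variant, blocklist))       # False
```

Similarity-based techniques such as fuzzy hashing (e.g., ssdeep) and behavior-based monitoring are commonly used to complement exact-hash signatures for this reason.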

Still to Come

Infection mechanisms, attack vectors, and malware payloads continue to evolve. We can expect to see greater sophistication of the individual exploits and bots, as well as more sophisticated blends of these components. Because advanced malware is expensive to develop (or acquire), however, it is reasonable to expect new variations or evolutions of existing threats in the short term, rather than additional “Stuxnet-level” revolutions. Understanding how existing exploits might be fuzzed or enhanced to avoid detection can help plan a strong defense strategy.

What we can assume is that threats will continue to grow in size, sophistication, and complexity. 34 We can also assume that new zero-day vulnerabilities will be used for one or more stages of an attack (infection, propagation, and execution). Also assume that attacks will become more focused, attempting to avoid detection through minimized exposure. Stuxnet spreads easily through many systems and only fully activates within certain environments; if a similar attack were less promiscuous and more tactically inserted into the target environment, it would be much more difficult to detect.

34.Ibid.

In early 2011, additional vulnerabilities and exploits specifically targeting SCADA systems were developed and released publicly, including the broadly publicized exploits developed by two separate researchers in Italy and Russia. The “Luigi Vulnerabilities,” identified by Italian researcher Luigi Auriemma, included 34 total vulnerabilities against systems from Siemens, Iconics, 7-Technologies, and DATAC. 35 Additional vulnerabilities and exploit code, including nine zero-days, were released by the Russian firm Gleg as part of the Agora+ exploit pack for the CANVAS toolkit. 36

35.D. Peterson, Italian researcher publishes 34 ICS vulnerabilities. Digital Bond. < http://www.digitalbond.com/2011/03/21/italian-researcher-publishes-34-ics-vulnerabilities/>, March 21, 2011 (cited: April 4, 2011).

36.D. Peterson, Friday News and Notes. < http://www.digitalbond.com/2011/03/25/friday-news-and-notes-127>, March 25, 2011 (cited: April 4, 2011).

Luckily, many tools are already available to defend against these sophisticated attacks, and the results can be very positive when they are used appropriately in a blended, sophisticated defense based upon “Advanced Persistent Diligence.”37

37.Ibid.

Defending Against APT

As mentioned in Chapter 2, “About Industrial Networks,” the security practices that are recommended herein are aimed high, and this is because the threat environment in industrial networks has already shifted to these types of APTs, if not outright cyber war. These recommendations are built around the concept of “Advanced Persistent Diligence” and a much higher than normal level of situational awareness. This is because APT is evolving specifically to avoid detection by known security measures. 38

38.Ibid.

Advanced Persistent Diligence requires a strong Defense-in-Depth approach, both in order to reduce the available attack surface exposed to an attacker, and in order to provide a broader perspective of threat activity for use in incident analysis, investigation, and response. That is, because APT is evolving to avoid detection even through advanced event analysis, it is necessary to examine more data about network activity and behavior from more contexts within the network. 39

39.US Department of Homeland Security, US-CERT, Recommended Practice: Improving Industrial Control Systems Cybersecurity with Defense-In-Depth Strategies, Washington, DC, October 2009.

More traditional security recommendations are not enough, because the active network defense systems such as firewalls, UTMs, and IPS are no longer capable of blocking the same threats that carry with them the highest consequences. APT threats can easily slide through these legacy cyber defenses.

Having situational awareness of what is attempting to connect to the system, as well as what is going on within the system, is the only way to start to regain control of the system. This includes information about systems and assets, network communication flows and behavior patterns, organizational groups, user roles, and policies. Ideally, this level of analysis will be automated and will provide an active feedback loop that allows IT and OT security professionals to successfully mitigate a detected APT.
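Industrial networks tend to have stable, predictable communication patterns, which makes a learned baseline of “who talks to whom” a useful starting point for this kind of awareness. The sketch below assumes flows have already been extracted (for example, from flow records or packet captures) into simple (source, destination, port, protocol) tuples; the addresses and the choice of flow key are illustrative assumptions. It reports any observed flow that never appeared during the baseline period.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    dst: str
    dst_port: int
    protocol: str


def new_flows(baseline: set, observed: list) -> list:
    """Return observed flows absent from the baseline, in first-seen
    order and without duplicates."""
    seen = set()
    alerts = []
    for flow in observed:
        if flow not in baseline and flow not in seen:
            seen.add(flow)
            alerts.append(flow)
    return alerts


# Hypothetical baseline: an HMI polling a PLC over Modbus/TCP (port 502).
baseline = {Flow("10.0.1.5", "10.0.2.10", 502, "tcp")}
observed = [
    Flow("10.0.1.5", "10.0.2.10", 502, "tcp"),
    Flow("10.0.1.5", "203.0.113.7", 443, "tcp"),  # unexpected outbound
    Flow("10.0.1.5", "203.0.113.7", 443, "tcp"),
]
print(new_flows(baseline, observed))
```

A real deployment would feed these alerts into the broader correlation and analysis described above rather than treating every new flow as an incident.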

Responding to APT

Ironically, the last thing that you should do upon detecting an APT is to clean the system of infected malware. This is because, as mentioned under section “Advanced Persistent Threats,” there may be subsequent levels of infection that exist, dormant, that will be activated as a result. Instead, a thorough investigation should be performed, with the same sophistication as the APT itself.

First, logically isolate the infected host so that it can no longer cause any harm. Allow the APT to communicate over established C2 channels, but isolate the host from the rest of your network, and remove all access between that host and any sensitive or protected information. Collect as much forensic detail as possible in the form of system logs, captured network traffic, and supplement where possible with memory analysis data. By effectively sandboxing the infected system, important information can be gathered that can result in the successful removal of an APT.

In summary, when you suspect that you are dealing with an APT, approach the situation with diligence and perform a thorough investigation:

• Always monitor everything: collect baseline data, configurations, and firmware for comparison.

• Analyze available logs to help identify scope, infected hosts, propagation vectors, etc.

• Sandbox and investigate infected systems.

• Analyze memory to find memory-resident rootkits and other threats living in user memory.

• Reverse engineer detected malware to determine its full scope and to identify additional attack vectors and possible propagation.

• Retain all information for disclosure to authorities.
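The first item in the list above, collecting baseline configurations and firmware for comparison, can be supported with a simple hash manifest. The sketch below assumes that configurations and firmware images have been exported as files into a directory; the directory layout is hypothetical. It records a SHA-256 digest per file and reports what was modified, removed, or added since the baseline was taken.

```python
import hashlib
from pathlib import Path


def hash_file(path: Path) -> str:
    """SHA-256 of a file, read in chunks so large firmware images fit."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file under root (relative path) to its SHA-256 digest."""
    return {str(p.relative_to(root)): hash_file(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def compare_manifests(baseline: dict, current: dict) -> dict:
    """Report files that changed, disappeared, or appeared."""
    return {
        "modified": sorted(k for k in baseline.keys() & current.keys()
                           if baseline[k] != current[k]),
        "missing": sorted(baseline.keys() - current.keys()),
        "added": sorted(current.keys() - baseline.keys()),
    }
```

The baseline manifest itself should be stored somewhere the attacker cannot reach (for example, alongside the clean images on trusted offline media); otherwise a compromised host could simply rewrite it.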

Note

Information collected from an infected and sandboxed host may prove valuable to legal authorities, and depending upon the nature of your industrial network you may be required to report this information to a governing body.

Depending on the severity of the APT, a “bare metal reload” may be necessary, where a device is completely erased and reduced to a bare, inoperable state. The host’s hardware must then be reimaged completely. For this reason, clean versions of operating systems and/or asset firmware should be kept in a safe, clean environment. This can be accomplished using secure virtual backup environments, or via secure storage on trusted removable media that can then be stored in a locked cabinet.

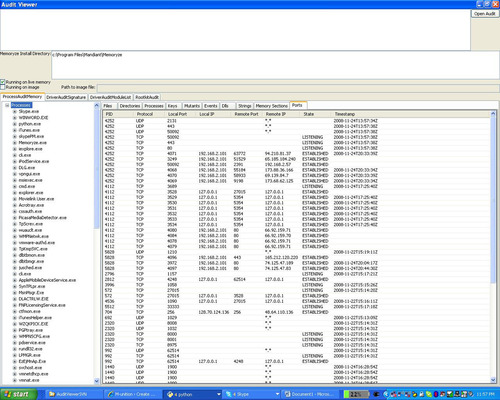

Free tools such as Mandiant’s Memoryze, shown in Figure 3.5, can help you to perform a deep forensic analysis on infected systems. This can help to determine how deeply infected a system might be, by detecting memory-resident rootkits. Memoryze and other forensics tools are available at http://www.mandiant.com.

Figure 3.5 Mandiant’s Memoryze: A Memory Forensic Package. 40

40.Screenshot, Mandiant’s Memoryze memory analysis software. < http://www.mandiant.com> (cited: October 26, 2010).

Tip

If you think you have an APT, you should know that there are security firms that are experienced in investigating and cleaning APT. Many such firms further specialize in industrial control networks. These firms can help you deal with infection as well as provide an expert interface between your organization and any governing authorities that may be involved.

Summary

Industrial networks are important and vulnerable, and there are potentially devastating consequences of a cyber incident. Examples of real cyber incidents—from CENTCOM to Aurora to Stuxnet—have grown progressively more severe over time, highlighting the evolving nature of threats against industrial systems. The attacks are evolving into APTs, and the intentions are evolving from information theft to industrial sabotage and the disruption of critical infrastructures.

Securing industrial networks requires a reassessment of our security practices, realigning them to a better understanding of how industrial protocols and networks operate (see Chapter 4, “Industrial Network Protocols,” and Chapter 5, “How Industrial Networks Operate”), as well as a better understanding of the vulnerabilities and threats that exist (see Chapter 6, “Vulnerability and Risk Assessment”).
