13

Present and Defend: First Mock

In this chapter, you will continue creating an end-to-end solution for your first full mock scenario. You covered most of the scenario in Chapter 12, Practice the Review Board: First Mock, but several topics remain. In this chapter, you will continue analyzing and solving each of the shared requirements and then practice creating some presentation pitches.

To give you the closest possible experience to the real-life exam, you will work through a practice Q&A session and defend, justify, and change the solution based on the judges’ feedback.

After reading this chapter, reread the scenario and try to solve it on your own.

In this chapter, you are going to cover the following topics:

- Continue analyzing the requirements and creating an end-to-end solution

- Presenting and justifying your solution

By the end of this chapter, you will have completed your first mock scenario. You will be more familiar with the complexity of full mock scenarios and be better prepared for the exam. You will also learn to present your solution in the limited time given and justify it during the Q&A.

Continue Analyzing the Requirements and Creating an End-to-End Solution

You covered the business process requirements earlier in Chapter 12, Practice the Review Board: First Mock. By the end of that chapter, you had a good understanding of the potential data model and landscape. You have already marked some objects as potential LDVs.

Next, there is a set of data migration requirements that you need to solve. Once you have solved them, you will have an even better understanding of the potential data volumes in each object of your data model, which will help you create an LDV mitigation strategy.

Start with the data migration requirements.

Analyzing the Data Migration Requirements

The requirements in this section might impact some of your diagrams, such as the landscape architecture diagram and the data model.

Some candidates prefer to create diagrams to explain their proposed data migration strategy. These diagrams are considered optional for the CTA review board exam; therefore, no such diagrams will be created in this book. Feel free to create additional diagrams if they will help you organize and explain your thoughts. Otherwise, it is time to start with the first shared data migration requirement.

PPA would like to migrate the data from all its current customer and rental management applications to the new system.

This is a predictable requirement, especially considering that PPA has already expressed its intention to retire all five legacy rental management applications. However, keep in mind that these five applications might be based on different technologies, use different database types, and belong to different generations. The following requirements contain more details. Move on to the next one, which begins with the following:

PPA is aware that the current data contains many redundancies and duplicates and is looking for your guidance to deal with the situation.

PPA has struggled with the quality of its data, especially redundancies. How can you help them to achieve the following?

- Dedupe their existing data

- Give a quality score to each record to help identify records of poor quality (such as fractured address details or a non-standard phone format)

- Prevent duplicates from being created in the future

Salesforce’s native duplicate rules can help to prevent the creation of duplicate records. They have their limitations but are a decent tool for simple and moderate-complexity scenarios.

However, that does not answer the first two requirements. Deduplicating can get complicated, especially in the absence of strong attributes (such as an email address or phone number in a specific format). Data such as addresses and names is considered weak because it can be provided in multiple formats. Moreover, names can be spelled in various ways and can contain non-Latin characters.

In such cases, you need a tool capable of executing fuzzy-logic matching rather than exact matching (Salesforce’s duplicate rules provide a limited level of that). You might also need to give weights to each attribute to help with the merge logic.
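To make weighted fuzzy matching concrete, here is a minimal Python sketch. It assumes hypothetical attribute weights and a hypothetical 0.85 match threshold, and it uses the standard library's `difflib.SequenceMatcher` as a stand-in for the far more sophisticated matching algorithms a commercial MDM tool would provide. It is illustrative only.

```python
from difflib import SequenceMatcher

# Hypothetical weights: strong attributes (email, phone) count more than weak ones.
WEIGHTS = {"email": 0.4, "phone": 0.3, "name": 0.2, "address": 0.1}


def normalize(field: str, value: str) -> str:
    """Remove cosmetic differences (case, spacing, phone punctuation) before comparing."""
    if field == "phone":
        return "".join(ch for ch in value if ch.isdigit())
    return " ".join(value.lower().split())


def similarity(field: str, a: str, b: str) -> float:
    """Fuzzy similarity from 0.0 to 1.0; a missing value on either side scores 0.0."""
    a, b = normalize(field, a), normalize(field, b)
    if not a or not b:
        return 0.0
    return SequenceMatcher(None, a, b).ratio()


def match_score(rec_a: dict, rec_b: dict) -> float:
    """Weighted sum of per-attribute similarities (the weights sum to 1.0)."""
    return sum(
        weight * similarity(field, rec_a.get(field, ""), rec_b.get(field, ""))
        for field, weight in WEIGHTS.items()
    )


def is_probable_duplicate(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    return match_score(rec_a, rec_b) >= threshold
```

With these weights, two records that share an email address and phone number but spell the street differently ("1 High St" versus "1 High Street") still exceed the threshold. In practice, the weights and threshold would be tuned against a sample of PPA's real data.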

Moreover, you need a tool capable of reparenting any related records to the merged customer record. In this case, this is relevant to historical rental records. If multiple customer records are merged into one, you need all their related rental records to point to the newly created golden record.
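The reparenting step can be sketched as follows. The `Rental` type and its field names are hypothetical, and a real MDM tool or migration job would perform this update against the target system rather than in memory.

```python
from dataclasses import dataclass


@dataclass
class Rental:
    rental_id: str
    customer_id: str  # lookup to the customer record that owns the rental


def merge_customers(golden_id: str, duplicate_ids: set, rentals: list) -> int:
    """Reparent every rental of the merged duplicates onto the golden record.

    Returns how many rentals were moved.
    """
    losers = set(duplicate_ids) - {golden_id}  # never treat the survivor as a duplicate
    moved = 0
    for rental in rentals:
        if rental.customer_id in losers:
            rental.customer_id = golden_id
            moved += 1
    return moved


rentals = [Rental("R1", "C1"), Rental("R2", "C2"), Rental("R3", "C3"), Rental("R4", "C4")]
moved = merge_customers("C1", {"C2", "C3"}, rentals)
```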

Calculating each record’s quality might also require some complex logic as it involves comparing multiple records against predefined criteria and updating some records accordingly. The criteria could be as simple as a predefined phone format and as complex as an entire sub-process.
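A minimal sketch of a rule-based quality score is shown below. The rules (an E.164-style phone format, four mandatory address fields, and an email address) and their point deductions are purely illustrative assumptions; PPA's actual criteria would come from its own data governance process and would likely be configured in the MDM tool.

```python
import re

# Hypothetical rule: phone numbers must look like E.164, e.g. +447700900123.
E164 = re.compile(r"^\+[1-9]\d{7,14}$")
ADDRESS_FIELDS = ("street", "city", "postal_code", "country")


def quality_score(record: dict) -> int:
    """Return a 0-100 score; each failed check deducts illustrative points."""
    score = 100
    if not E164.match(record.get("phone", "")):
        score -= 30  # non-standard phone format
    missing = [f for f in ADDRESS_FIELDS if not record.get(f)]
    score -= 15 * len(missing)  # fractured or incomplete address details
    if not record.get("email"):
        score -= 10  # no strong attribute for future matching
    return max(score, 0)
```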

All these capabilities can be custom developed, assuming you have the time and budget, or simply purchased and configured. Buying and configuring an existing tool would generally save a lot of money (considering the overall ROI calculation).

As a CTA, you are expected to come up with the most technically accurate solution. In this case, it makes no sense to delay the entire program for 9-18 months until the tool is custom developed and tested. It makes a lot of sense to propose a battle-tested MDM tool that can be configured to meet all these requirements.

You are expected to name the proposed product during the review board, just as you would in real life. You can suggest a tool such as Informatica. However, you do not necessarily need to specify the exact sub-product name, as these names change frequently.

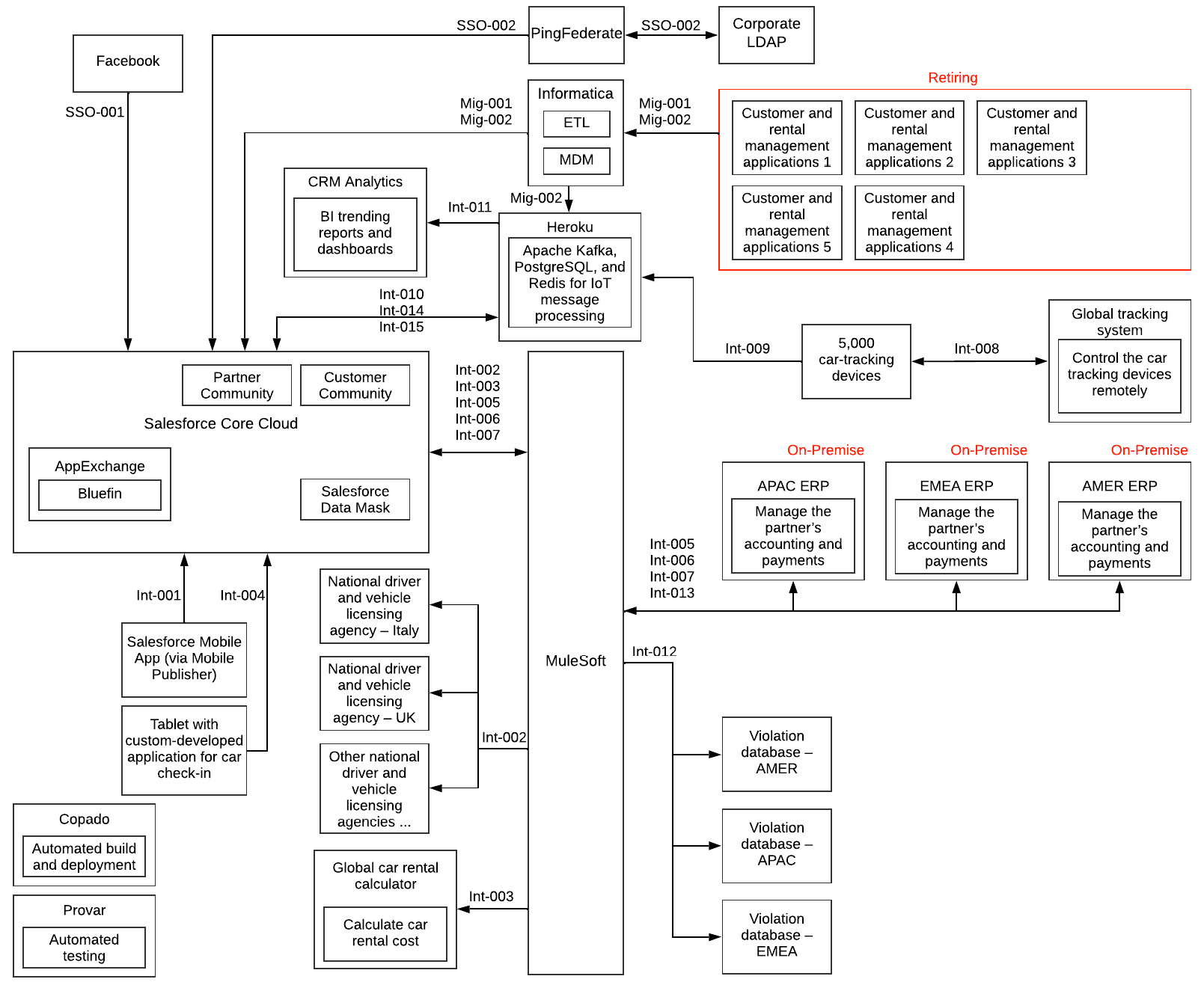

Update your landscape architecture diagram and your integration interfaces to indicate the new migration interface, then move on to the next requirement.

PPA has around 50 million rental records in each of its current rental management applications.

Most of them are stored for historical purposes as PPA wants to keep rental records for at least 10 years. PPA would like to continue allowing its sales and support agents to access these records in the new system.

PPA has around 250 million rental records (50 x 5) across its five legacy systems. Migrating all that data to Salesforce will turn the Order object into an LDV. Not to mention, the OrderItem object will contain at least an equal number of records.

It is fair to assume that most of these rental records are closed and are kept only to comply with PPA’s policy. Therefore, you can migrate the active Order and OrderItem records to Salesforce while migrating any historical records to Heroku.
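The split can be expressed as a simple routing rule. This sketch assumes hypothetical legacy status values that mark an order as closed; OrderItem records simply follow their parent Order, so an order and its line items always land in the same store.

```python
from collections import Counter

CLOSED_STATUSES = {"Completed", "Cancelled"}  # hypothetical legacy status values


def order_target(order: dict) -> str:
    """Active orders migrate to Salesforce; closed, historical orders go to Heroku."""
    return "heroku" if order["status"] in CLOSED_STATUSES else "salesforce"


def plan(orders: list, items: list) -> tuple:
    """Return per-target counts for orders and for their line items."""
    targets = {o["id"]: order_target(o) for o in orders}
    order_counts = Counter(targets.values())
    # Each OrderItem goes wherever its parent Order goes.
    item_counts = Counter(targets[i["order_id"]] for i in items)
    return order_counts, item_counts


orders = [
    {"id": "O1", "status": "Completed"},
    {"id": "O2", "status": "Active"},
    {"id": "O3", "status": "Cancelled"},
]
items = [{"order_id": "O1"}, {"order_id": "O1"}, {"order_id": "O2"}]
order_counts, item_counts = plan(orders, items)
```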

PPA would still like its sales and support agents to access historical records. Yet, instead of copying them to Salesforce, you can simply retrieve them on the fly using Salesforce Connect and External Objects.

Note

Heroku offers the Heroku External Objects feature on top of Heroku Connect, which provides an easy-to-consume Open Data Protocol (OData) wrapper for Heroku PostgreSQL databases.

You need to add the External Objects to the data model. You also need to update the landscape architecture diagram and the integration interfaces list to indicate the new migration interface and the new integration interface between Heroku and Salesforce.

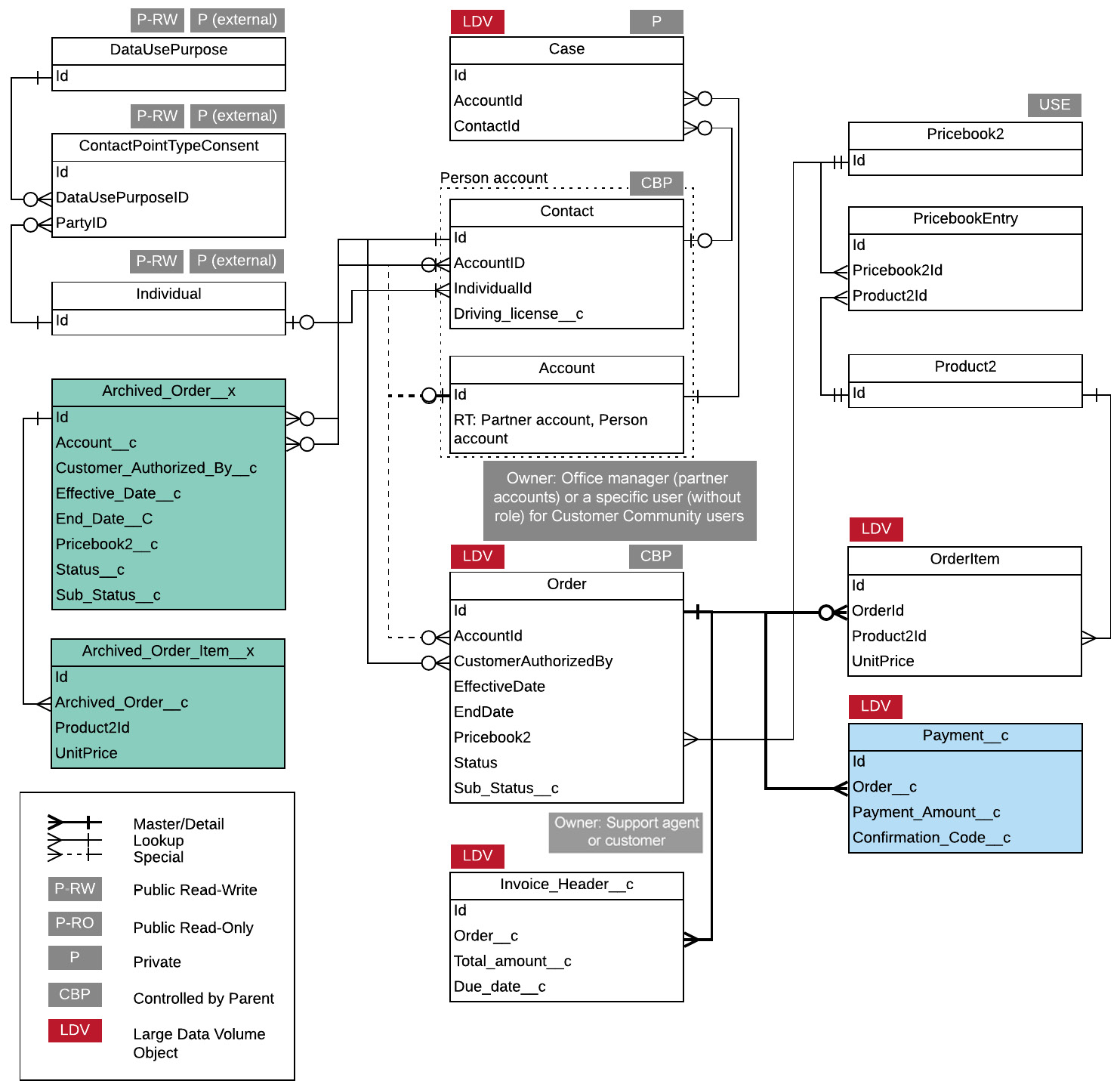

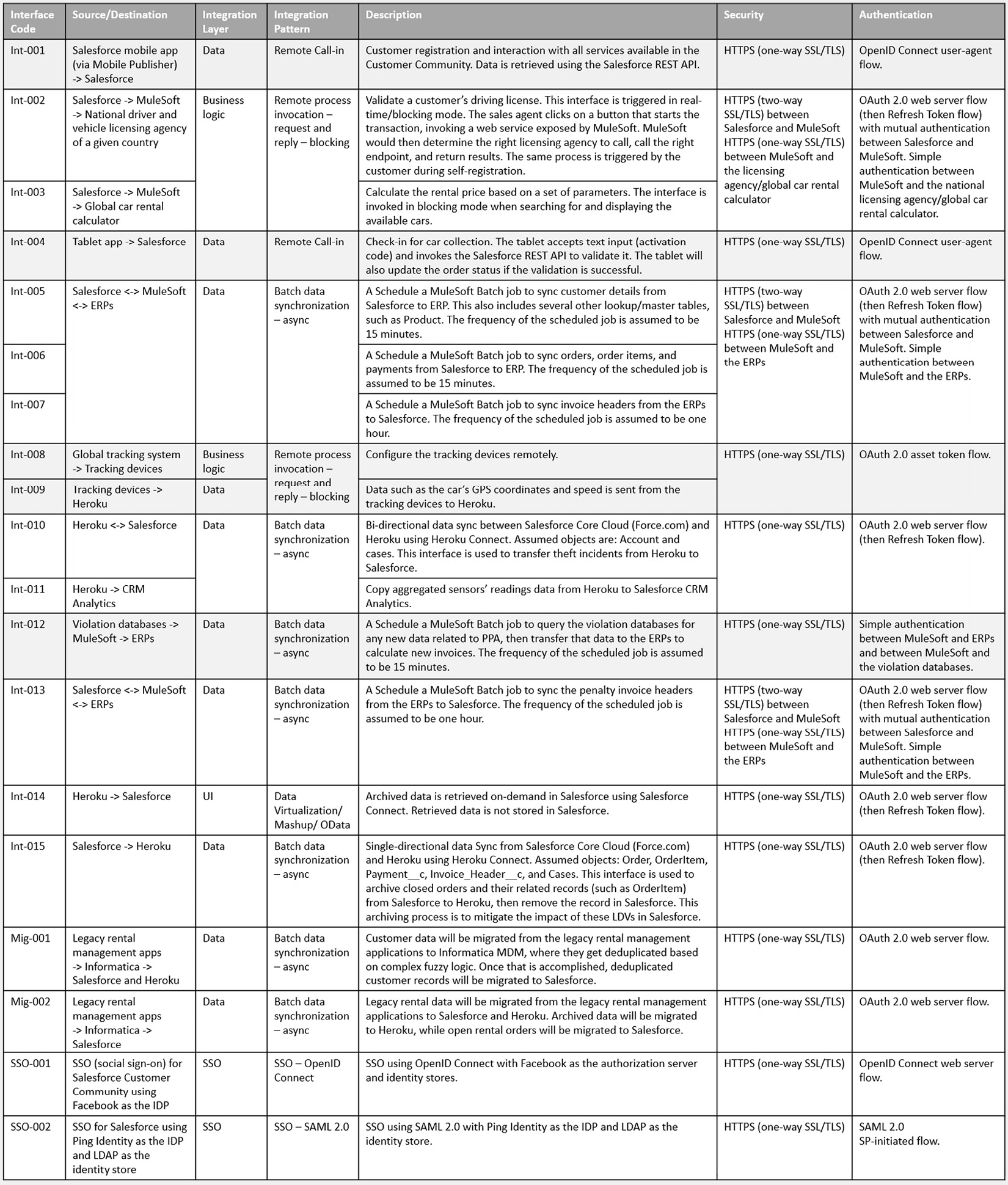

Next, update your diagrams. Your data model should look like this:

Figure 13.1 – Data model (third draft)

This requirement did not explicitly ask you to explain your data migration strategy. Nevertheless, you are expected to do so in the exam. You need to explain the following:

- How are you planning to get the data from their data sources?

- How would you eventually load the data into Salesforce? What are the considerations that the client needs to be aware of and how can potential risks be mitigated? For example, who is going to own the newly migrated records? How can you ensure that all records have been migrated successfully? How can you ensure you do not violate any governor limits during the migration?

- What is the proposed migration plan?

- What is your migration approach (big bang versus ongoing accumulative approach)? Why?
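Two of these questions, staying within platform limits and proving that every record arrived, can be illustrated with a short sketch. It assumes each migrated record carries its legacy ID (for example, in an external ID field) and leaves the batch size as a parameter; check the current Bulk API limits before choosing a real value.

```python
from typing import Iterator


def chunks(records: list, batch_size: int) -> Iterator[list]:
    """Split a load into fixed-size batches so no single request exceeds a batch limit."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def reconcile(source_ids: set, loaded_ids: set) -> dict:
    """Compare legacy IDs in the source with those loaded into the target."""
    return {
        "missing": sorted(source_ids - loaded_ids),     # never arrived
        "unexpected": sorted(loaded_ids - source_ids),  # loaded but not in the source
        "complete": source_ids == loaded_ids,
    }
```

A reconciliation report like this, produced per object and per legacy system, gives PPA objective evidence that the migration succeeded before the legacy applications are retired.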

You came across a similar challenge in Chapter 7, Designing a Scalable Salesforce Data Architecture. You need to formulate a comprehensive data migration pitch to include in your presentation that answers all the preceding questions clearly. You will do that later on in this chapter.

Before that, you need to review your LDV mitigation strategy. You have penciled in several objects as potential LDVs, but you still need to do the math to confirm whether they can be considered LDVs or not. Once you do, you need to craft a mitigation strategy. You will tackle this challenge next.

Reviewing Identified LDVs and Developing a Mitigation Strategy

Have a look at the penciled-in LDVs in the data model (Figure 13.1) and validate whether the identified objects indeed qualify to be considered LDVs.

You know that PPA has around one million registered users globally and an average of 10 million car rentals every year. This means that the Order object will grow by 10 million records annually. The object qualifies to be considered an LDV. Moreover, the OrderItem object will have at least 10 million more records. Reasonably, it may contain even more, assuming that 50% of rentals would include an additional line item besides the car rental itself, such as an insurance add-on.

This means roughly 15 million or more new OrderItem records every year. On top of that, the Payment__c object will contain at least one record per Order. Invoice_Header__c will also contain a minimum of one record per Order (some rentals might get more than one invoice due to penalties or required car maintenance). You will also have at least one Case record created per rental (for the inspection feedback).
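The arithmetic behind this conclusion fits in a few lines. The figures come from the scenario (10 million rentals per year) and from the assumption above that half of the rentals carry one extra line item; every other object is counted at its one-record-per-rental minimum.

```python
ANNUAL_RENTALS = 10_000_000
ADD_ON_RATE = 0.5  # assumption: 50% of rentals include one extra line item


def annual_growth(rentals: int = ANNUAL_RENTALS, add_on_rate: float = ADD_ON_RATE) -> dict:
    """Minimum new records per year for each object."""
    return {
        "Order": rentals,
        "OrderItem": int(rentals * (1 + add_on_rate)),
        "Payment__c": rentals,         # at least one payment per order
        "Invoice_Header__c": rentals,  # at least one invoice per order
        "Case": rentals,               # at least one inspection-feedback case per rental
    }


growth = annual_growth()
total = sum(growth.values())  # 55,000,000 new records per year, at minimum
```

Even at these minimums, the five objects together grow by about 55 million records a year, before any migrated history is counted.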

A large number of Order, OrderItem, Case, Payment__c, and Invoice_Header__c records could impact the speed of CRUD operations. They are all considered LDVs, and therefore you need a mitigation strategy.

Considering that the challenge you are dealing with is related to CRUD efficiency, a solution such as skinny tables would not add much value. Custom indexes would help with read operations only. Divisions could help, but they would add unnecessary complexity. In comparison, the most suitable option here is to offload some of the non-essential data to off-platform storage.

Archiving data in that way ensures that these LDV objects remain as slim as possible. Your archiving strategy could include all closed Order and Case records.

You can archive your data to Salesforce Big Objects, external storage such as a data warehouse, or a platform capable of handling a massive number of records, such as AWS or Heroku. You already have Heroku in the landscape, so the decision is easy in this case. You can use Heroku Connect to transfer the data to a Heroku PostgreSQL database. From there, transfer the archived data to a second PostgreSQL database that is exposed to Salesforce Connect via Heroku Connect and Heroku External Objects. Finally, delete the archived records from the first PostgreSQL database and from Salesforce.
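The order of operations in this archiving flow matters: records should be deleted only after they are confirmed in the archive. The sketch below models the three stores as Python dictionaries purely for illustration; in reality, Heroku Connect performs the sync, and the copy, verification, and deletion would run as a scheduled job. The `is_closed` predicate is a hypothetical stand-in for PPA's archiving criteria.

```python
from typing import Callable


def archive_closed(salesforce: dict, synced_db: dict, archive_db: dict,
                   is_closed: Callable[[dict], bool]) -> list:
    """Move closed records out of Salesforce into the archive database.

    salesforce: records in the Salesforce org
    synced_db:  the first Heroku PostgreSQL database, kept in sync by Heroku Connect
    archive_db: the database exposed back to Salesforce via Heroku External Objects
    """
    # Step 1: Heroku Connect syncs Salesforce records into the first database.
    synced_db.update({rid: dict(rec) for rid, rec in salesforce.items()})

    # Step 2: copy closed records into the archive database.
    candidates = [rid for rid, rec in synced_db.items() if is_closed(rec)]
    for rid in candidates:
        archive_db[rid] = dict(synced_db[rid])

    # Step 3: delete only the records verified in the archive.
    archived = [rid for rid in candidates if archive_db.get(rid) == synced_db[rid]]
    for rid in archived:
        del synced_db[rid]
        salesforce.pop(rid, None)
    return sorted(archived)


sf = {"O1": {"status": "Closed"}, "O2": {"status": "Open"}}
synced, archive = {}, {}
moved = archive_closed(sf, synced, archive, lambda r: r["status"] == "Closed")
```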

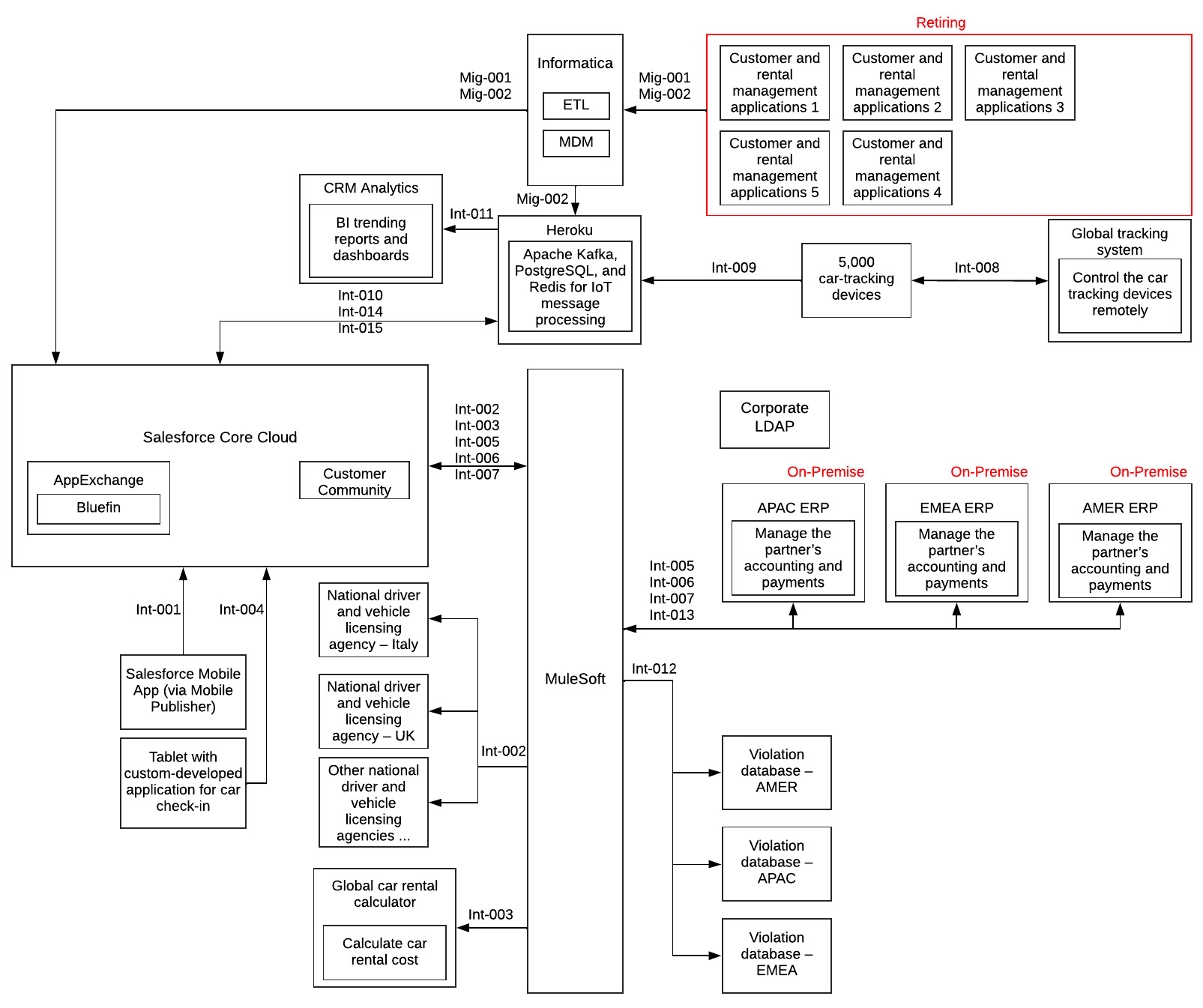

Update your landscape diagram and add another integration interface between Heroku and Salesforce. Your landscape architecture diagram should look like the following:

Figure 13.2 – Landscape architecture (fourth draft)

Update your list of integration interfaces and move on to the next set of requirements, which is related to accessibility and security.

Analyzing the Accessibility and Security Requirements

The requirements in the accessibility and security section are usually the second most difficult to tackle after the business process requirements. Unlike the business process requirements, they tend not to be lengthy, but they are more technically challenging.

You might need to adjust your data model, role hierarchy, and possibly your actors and licenses diagram based on the proposed solution for the accessibility and security requirements. The good news is that there is a limited number of possible sharing and visibility capabilities available within the platform. You have a small pool of tools to choose from. However, you must know each of these tools well to select the correct combination.

Start with the first shared requirement, which begins with the following:

Customer accounts should be visible to office managers, sales agents, and service agents worldwide.

You should take enough time to analyze these requirements. They could seem easy at first glance, but if you do not thoroughly consider all possible challenges, there is a chance you will find yourself stuck with a solution that violates the governor limits. Changing such solutions on the fly during the Q&A is challenging. Analyze these requirements thoroughly to avoid such instances.

Considering that the requirement indicates that some records will be visible to some users but not others, the OWD of the Account object should be Private.

This will restrict access to accounts not owned by the user. You will need to figure out a mechanism to share all accounts with the mentioned user set (which is literally all internal users except technicians) while allowing the technicians to access customer accounts in their country only.

There are multiple ways to solve this. You need to select something that is easy to maintain and scalable and does not pose a threat to the governor limits.

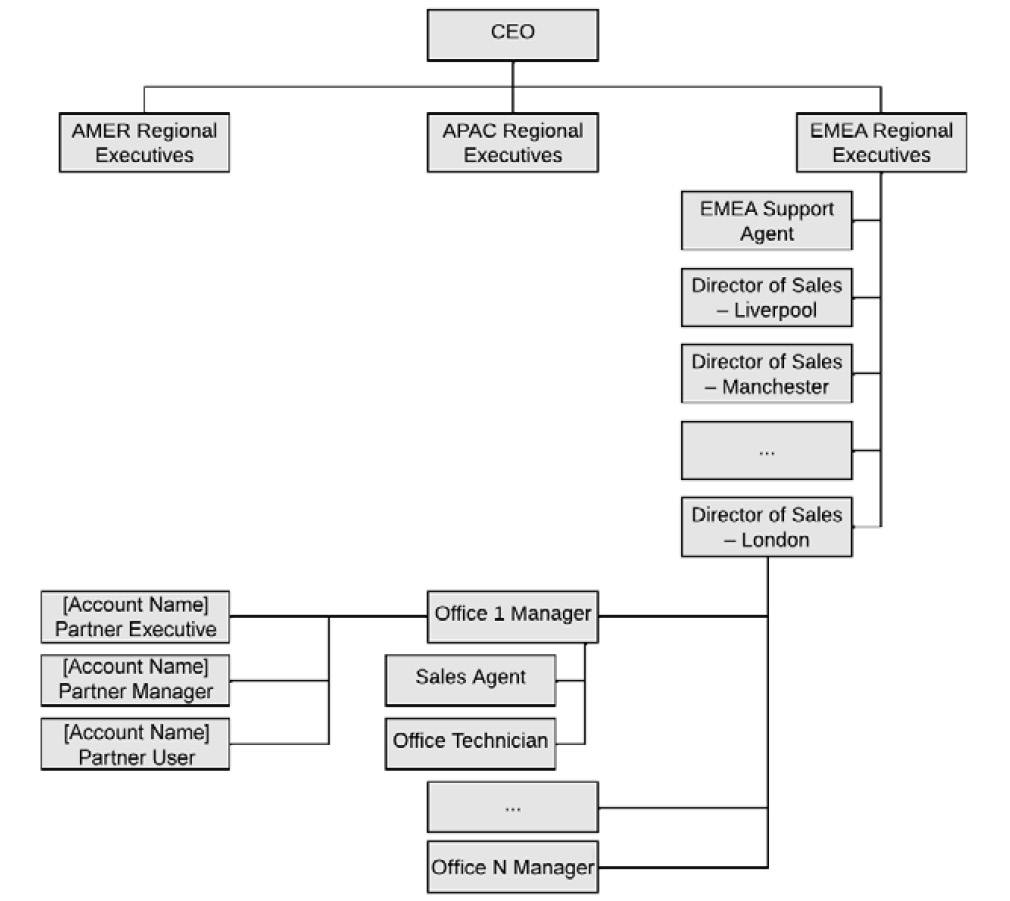

Based on the role hierarchy you developed in Chapter 12, Practice the Review Board: First Mock, you can come up with the following solution:

- You can introduce two roles underneath the office manager, representing the sales reps and technicians working in that office.

- Owner-based sharing rules rely on the account owner, and customer accounts might be owned by multiple users in different roles. Criteria-based sharing rules are more suitable for this requirement. You can configure a set of criteria-based sharing rules to share all accounts with sales agents, which will automatically grant all users above them in the role hierarchy access to the customer accounts.

- You can configure another set of criteria-based sharing rules to share the customer accounts with technicians from a particular country.

Sound good? Not really.

Note

You have a limited number of criteria-based sharing rules that can be defined for any object: a maximum of 50 per object. You can view the latest limitations at the following link: https://packt.link/OoGuu.

PPA operates only across 10 countries. However, there are multiple cities covered in each country and various offices in each city. The number of sharing rules required to share customer accounts with the sales agent role could easily go beyond 50.

You can do the math, but even if the number falls a bit short of 50, you are still creating a solution that is at the edge of the governor limit. This cannot be considered a scalable solution.

However, you have another tool that you can utilize in addition to role hierarchy and sharing rules to solve this challenge. You can create a public group for all sales agents. This will be hard to maintain if you choose to add all the sales agent users to the group (imagine the efforts associated with adding/removing users upon joining or leaving the company). However, you can add roles instead.

By selecting and adding all the sales agent roles to the group and ensuring that the Grant Access Using Hierarchies checkbox for that group is checked, you will have an easy-to-maintain, scalable solution. You will need just one criteria-based sharing rule to share all customer account records with this public group. This will ensure that all sales agents and anyone above them in the role hierarchy have access to these accounts.

You can follow a similar approach for technicians, except that you would need 10 different public groups, one per country. You would also need 10 criteria-based sharing rules. This is not very scalable, but it is well below the governor limit. You can also highlight that you might need to switch to Apex-based sharing if the company continues expanding to other countries.
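The effect of adding roles (rather than users) to a public group with Grant Access Using Hierarchies enabled can be modeled in a few lines. The role names are hypothetical and only two offices are shown; the point is that a new office only needs its sales agent role added to the group, not a new sharing rule.

```python
# Hypothetical role tree: child role -> parent role.
PARENT = {
    "Regional Exec EMEA": "CEO",
    "Office Manager London": "Regional Exec EMEA",
    "Sales Agent London": "Office Manager London",
    "Technician London": "Office Manager London",
    "Office Manager Paris": "Regional Exec EMEA",
    "Sales Agent Paris": "Office Manager Paris",
    "Technician Paris": "Office Manager Paris",
}


def ancestors(role: str) -> set:
    """All roles above the given role in the hierarchy."""
    found = set()
    while role in PARENT:
        role = PARENT[role]
        found.add(role)
    return found


def roles_with_access(group_roles: set, grant_via_hierarchy: bool = True) -> set:
    """Roles that can see records shared with a public group made of roles."""
    access = set(group_roles)
    if grant_via_hierarchy:
        for role in group_roles:
            access |= ancestors(role)
    return access


SALES_AGENTS_GROUP = {r for r in PARENT if r.startswith("Sales Agent")}
access = roles_with_access(SALES_AGENTS_GROUP)  # one sharing rule targets this group
```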

Update your role hierarchy if needed; it should now look like this:

Figure 13.3 – Role hierarchy (final)

Now, move on to the next requirement.

Support incidents should be visible to support agents and their managers only.

You can follow an approach similar to the one used in the previous requirement. You can create a public group, ensure the Grant Access Using Hierarchies checkbox is checked, and add all support agent roles to the group. You will also need to set the Case object’s OWD to Private, then create a criteria-based sharing rule to share Case records of a specific type with this public group. Keep in mind that once you set the Account object’s OWD to Private, you have no choice but to set the Case object to Private too.

The criteria-based sharing rule will grant the support agents, their managers, and everyone above them in the role hierarchy access to the Case records.

If you had multiple other roles between the regional execs and the CEO, you would likely need to disable the Grant Access Using Hierarchies checkbox and use different public groups for support agents and their managers.

Only office technicians can update the car status to indicate that it is out of service and requires repairs.

You have utilized the Product object to model cars. In this case, you need to add a custom field to indicate the car status; you can call it Car_status__c. You can then set the Field-Level Security (FLS) of that field to read-only for all profiles except the technicians, who will be granted this edit privilege.

This is an easy point to collect. However, none of the diagrams show FLS settings. Therefore, you need to ensure you call the solution out during the presentation.

Update your data model diagram and move on to the next requirement.

Office technicians should not be able to view the customer’s driving license number.

This is another requirement that can be solved using FLS. This time, you need to make the Driving_license__c field on the Contact object completely inaccessible/invisible to users with the technician profile.

Move on to the next requirement.

Once a theft incident is detected, only the support agents trained for that type of incident, their managers, and regional executives can view the incident’s details.

You used the Case object to model the theft incident in your solution. How can you assign Cases to the right teams based on skills?

Salesforce Omni-Channel provides a skills-based routing capability. You can also define a specific queue for theft incidents and add all trained support agents to the queue. You can then use standard Case Assignment Rules to assign Cases of a particular type to this queue.

So, which approach should you follow? Both would serve the purpose; however, you need to show that you know the most appropriate tool to use from your toolbox. As a simple rule of thumb, the two approaches are different in the following way:

- Queues are suitable to represent a single skill. If you needed to represent multiple skills, you would need to define multiple queues. Users can be assigned to multiple queues, but the Case record would only be assigned to one of these queues.

- Skills-based routing would consider all skills required to fulfill a particular Case. It then identifies the users who possess these skills and assigns the Case record to one of them.
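The difference can be modeled conceptually as follows. The agents and skills are hypothetical, and this is not how Omni-Channel is implemented (real skills-based routing also considers factors such as agent capacity and availability); it only contrasts single-skill queues with multi-skill matching.

```python
AGENT_SKILLS = {  # hypothetical agents and their skills
    "alice": {"theft", "english"},
    "bob": {"theft", "french"},
    "carol": {"breakdown", "english"},
}


def queue_members(queue_skill: str) -> list:
    """Queue model: one queue per skill; the Case is assigned to the whole queue."""
    return sorted(a for a, skills in AGENT_SKILLS.items() if queue_skill in skills)


def skills_based_candidates(required: set) -> list:
    """Skills-based model: only agents holding every required skill qualify."""
    return sorted(a for a, skills in AGENT_SKILLS.items() if required <= skills)
```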

The former approach is simpler to set up and is good enough to fulfill this requirement, so you can use queue-based assignment here. Users higher in the role hierarchy will get access to these records by default due to your role hierarchy’s setup.

The regional executive team should have access to all regional data, including the driver’s behavior, such as harsh braking.

The regional executive team will, by default, get access to all data in their region due to the proposed role hierarchy.

The driver’s behavior data resides in Heroku and CRM Analytics. The regional executives would require the CRM Analytics license. You penciled that earlier in Figure 12.2, on the actors and licenses diagram. Now, you have confirmed it. Update your actors and licenses diagram and move on to the next requirement.

Office managers should be able to see the details of the partner accounts in their own country only.

Office managers own partner accounts. They can see the records they own regardless of the OWD settings. However, the requirement here indicates that they should be able to view all partner accounts in their country, even if a different office manager owns them.

You can utilize country-based public groups just like you did with other previous requirements and add all that country’s office manager roles to it. The only difference here is that you can utilize owner-based sharing rules as the partner accounts will always be owned by an office manager.

This owner-based sharing rule will be simple: share all account records owned by a member of this public group with all the group members. You will end up with 10 owner-based sharing rules, which is way below the governor limit.

Partners should be able to log in to Salesforce using Salesforce-managed credentials only.

You can use a single community for customers and partners, but that is an unusual setup. They would likely expect a completely different UI, services, and user experience.

You can propose a separate community for partners that does not have an external authentication provider. This will ensure that partners will be able to log in to Salesforce using Salesforce-managed credentials only. Thus, the requirement is fulfilled.

Update your landscape architecture diagram to include a Partner Community and move on to the next requirement.

Customers can self-register on the online portal and mobile application.

You went through the entire customer registration business process in Chapter 12, Practice the Review Board: First Mock. To enable customer self-registration, you must turn that setting on in the community setup.

Again, this is a straightforward point to collect. However, precisely because it is so simple, it is also easy to miss.

Customers should also be able to log in to the online portal and the mobile application using their Facebook accounts.

Customers and partners will have two separate communities. The Customer Community can be configured to have Facebook as an authentication provider (the exact name of Salesforce’s functionality is Auth. Provider). You must be clear in describing your social sign-on solution and mention the name of the functionality. You also need to explain how you can use the registration handler to manage user provisioning, updating, and linking with existing Salesforce users.

The registration handler is an Apex class that implements the RegistrationHandler interface. When the user is authenticated by the authentication provider (for example, Facebook), a data structure containing several values is passed back to Salesforce and received by the registration handler class associated with the authentication provider.

The class can then parse this payload and execute Apex logic to determine whether a new user needs to be provisioned or an existing user needs to be updated. The email address is a common attribute to uniquely identify the user (in this case, it acts as the federated identifier for your Community users). However, the scenario might ask to utilize a different attribute, such as the phone number. You should expect such requirements during the Q&A too.

Therefore, you need to practice creating a registration handler and try these scenarios out. The payload you receive from an authentication provider contains several standard attributes, but it can also include custom attributes. These attributes can be used to enrich the user definition in Salesforce. In some cases, one of these attributes could be the desired federated identifier for a given enterprise.
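In Salesforce, this logic lives in an Apex class that implements the `Auth.RegistrationHandler` interface, whose `createUser` and `updateUser` methods receive the provider's user data. The Python sketch below only models the provision-or-update decision and the choice of federated identifier; the `UserData` fields loosely mirror the provider payload and are illustrative, and keeping users in a dictionary is a simplification.

```python
from dataclasses import dataclass, field


@dataclass
class UserData:
    """Illustrative subset of what an auth provider such as Facebook returns."""
    identifier: str
    email: str
    first_name: str
    last_name: str
    attributes: dict = field(default_factory=dict)  # custom attributes, if any


def federation_key(data: UserData, key: str) -> str:
    """Return the attribute used as the federated identifier (email by default)."""
    if key == "email":
        return data.email.strip().lower()
    return data.attributes[key]  # e.g. a phone number, if the scenario requires it


def handle_login(data: UserData, users: dict, key: str = "email") -> str:
    """Update the matching user if one exists; otherwise provision a new one."""
    fed_id = federation_key(data, key)
    if fed_id in users:
        users[fed_id].update(first_name=data.first_name, last_name=data.last_name)
        return "updated"
    users[fed_id] = {"email": data.email, "first_name": data.first_name,
                     "last_name": data.last_name}
    return "created"
```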

Needless to say, you should be prepared to draw the sequence diagram for this authentication flow. You learned about the OAuth 2.0/OpenID Connect web server flow earlier in Chapter 4, Core Architectural Concepts: Identity and Access Management.

Note

The judges might ask you to explain/draw the sequence for layered flows (where more than one flow is combined), for example, a Community user connecting from a Salesforce Mobile Publisher app and using social sign-on to authenticate. In this example, the layered flows are the OAuth 2.0 (or OpenID Connect) User-Agent flow and the OpenID Connect web server flow.

Update your landscape diagram and your integration interfaces by adding a new single sign-on (SSO) interface, then move on to the next requirement.

PPA employees who are logged in to the corporate network should be able to automatically log in to Salesforce without the need to provide their credentials again.

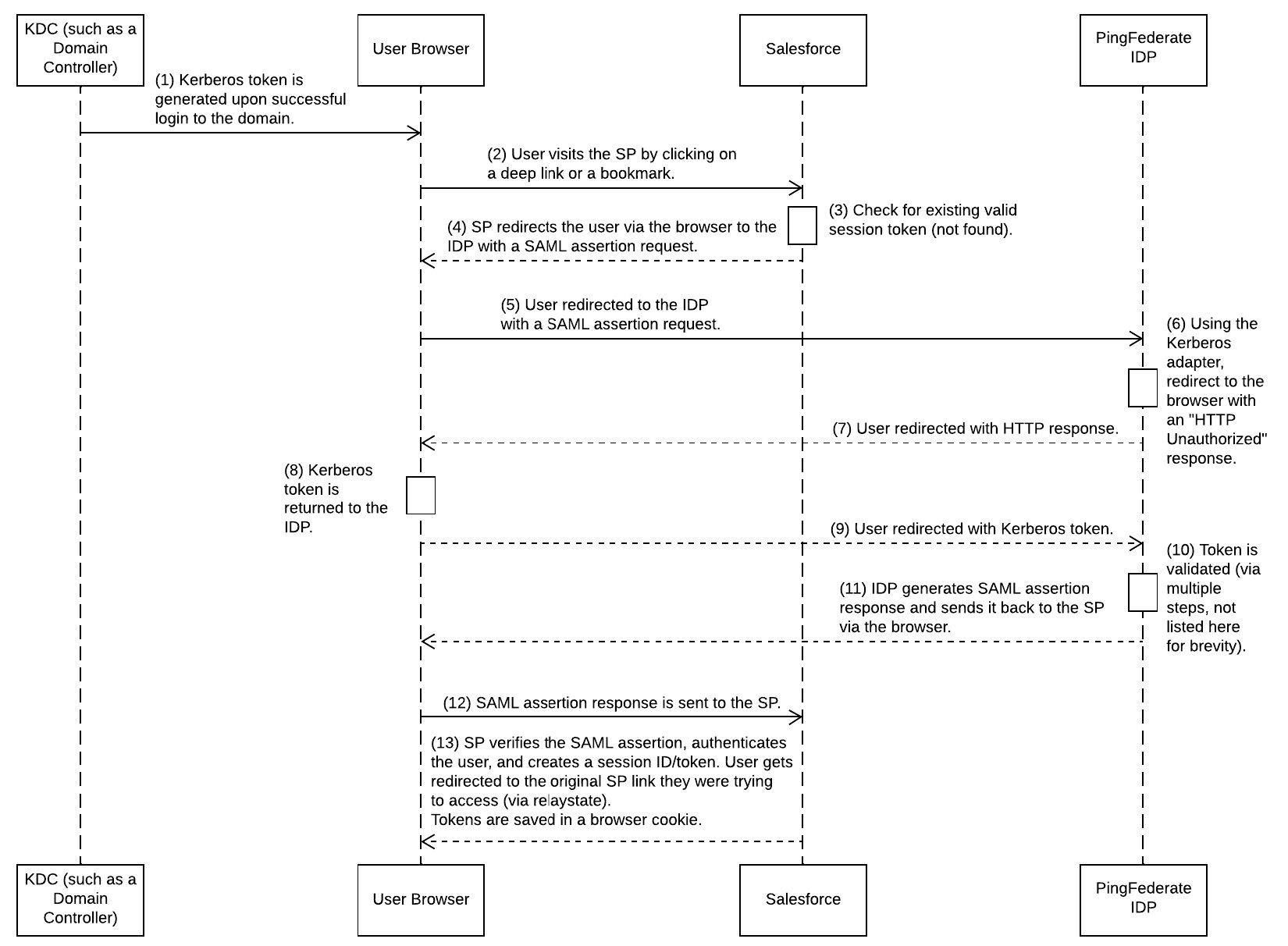

This requirement should point you directly to an authentication protocol you came across in Chapter 4, Core Architectural Concepts: Identity and Access Management, which is used over networks (such as a local enterprise network) to provide SSO capabilities. The protocol referred to is Kerberos.

Once a user logs in to the corporate network (which is basically what an employee would do first thing in the morning when they open their laptop/desktop and sign into the domain), the following simplified steps take place:

- A ticket/token is issued by a Key Distribution Center (KDC) once the user logs in to the domain from their laptop/desktop. This token is issued transparently without any user interaction and stored on the user’s machine (in a specific cache).

- This cached token is then used by the browser whenever there is a need to authenticate to any other system. You need to update some settings in the browser itself to enable this (such as enabling Integrated Windows Authentication). These configurations are not in the scope of this book.

To clarify what a KDC is, in a Windows-based network, the KDC is the Domain Controller (DC).

Identity providers such as PingFederate have adapters for Kerberos, which allow them to facilitate the request, receipt, and validation of a Kerberos token (the terms ticket and token are used interchangeably in Kerberos). For example, PingFederate IDP (the full name of the product from Ping Identity) operates in the following way:

- When the client starts an SP-initiated SSO by clicking on a deep link (or bookmark) or starts an IDP-initiated SSO by clicking on a link from a specific page, an authentication request is sent to PingFederate.

- Using the Kerberos adapter, PingFederate responds to the browser with HTTP 401 Unauthorized and a WWW-Authenticate: Negotiate header, challenging it to present a Kerberos token.

- The browser (if configured correctly) will return the Kerberos token to PingFederate.

- Once PingFederate receives the token, its domain is validated against the settings stored in PingFederate’s adapter.

- Upon successful validation, PingFederate extracts the Security Identifiers (SIDs), domain, and username from the ticket, generates a SAML assertion, and then passes it to the SP.

- The SP (Salesforce in this case) receives the assertion and authenticates the user based on it.
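The challenge/response part of this sequence can be followed in a toy sketch. It is not how PingFederate validates tickets: a real Kerberos ticket is opaque and must be validated cryptographically, whereas here the "ticket" is simply the base64 encoding of `user@REALM`. The `PPA.LOCAL` domain and the shape of the returned assertion are hypothetical.

```python
import base64

ALLOWED_REALM = "PPA.LOCAL"  # hypothetical domain configured in the Kerberos adapter


def idp_handle(headers: dict) -> tuple:
    """Toy IdP endpoint showing the Negotiate challenge/response sequence.

    Real validation needs the service key shared with the KDC; this only
    decodes a fake "user@REALM" token so the control flow can be followed.
    """
    auth = headers.get("Authorization", "")
    if not auth.startswith("Negotiate "):
        # No ticket yet: challenge the browser to send its Kerberos token.
        return 401, {"WWW-Authenticate": "Negotiate"}
    user, _, realm = base64.b64decode(auth[len("Negotiate "):]).decode().partition("@")
    if realm != ALLOWED_REALM:
        # The token's domain does not match the adapter settings.
        return 403, {}
    # Validation passed: a greatly simplified stand-in for the SAML assertion.
    return 200, {"assertion": {"subject": user, "domain": realm}}
```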

It is worth mentioning that PingFederate would be integrated with LDAP, which is PPA’s identity store as per the scenario. You could have used Active Directory Federation Services (ADFS) if PPA used Active Directory (AD). However, ADFS does not currently support LDAP.

The following sequence diagram (a modified version of the SAML SP-initiated flow in Chapter 4, Core Architectural Concepts: Identity and Access Management) explains this flow. Please note that this diagram is simplified and does not describe in detail the way Kerberos works:

Figure 13.4 – SAML SP-initiated flow with Kerberos

Be prepared to draw and explain this sequence diagram, similar to any other authentication flow you covered in Chapter 4, Core Architectural Concepts: Identity and Access Management.

Update your landscape diagram and your integration interfaces by adding another SSO interface, then move on to the next requirement, which starts with the following:

PPA employees can log in to Salesforce from outside the corporate network using the LDAP credentials.

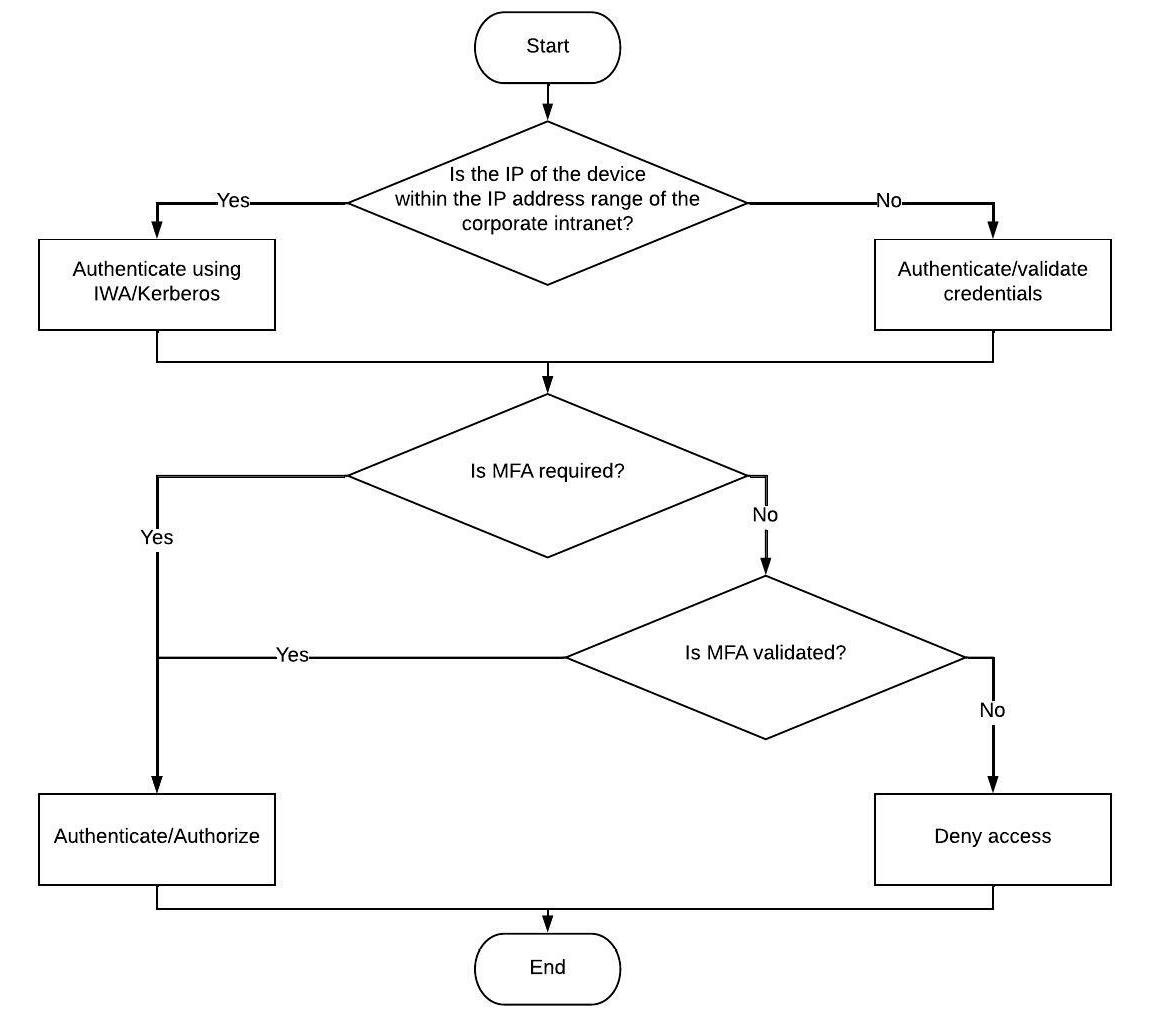

The good news is that both requested features are supported by tools such as PingFederate. PingFederate has a feature called adaptive authentication and authorization, which delivers a set of functionalities, including reading the user’s IP address to determine whether the user is inside or outside the corporate network and driving different behavior accordingly.

You can configure PingFederate to request multi-factor authentication (MFA) if the user is logging in from an IP outside the corporate network range. The following simple flowchart illustrates this logic:

Figure 13.5 – Flowchart explaining PingFederate’s adaptive authentication and authorization with MFA
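The decision in this flowchart boils down to an IP range check. In practice, it is configured in PingFederate rather than coded; the Python sketch below only illustrates the logic, and the corporate CIDR ranges are hypothetical placeholders for PPA's real network ranges.

```python
import ipaddress

# Hypothetical corporate ranges; replace with PPA's real internal/egress CIDRs.
CORPORATE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def requires_mfa(client_ip: str) -> bool:
    """MFA is required only when the login comes from outside the corporate ranges."""
    addr = ipaddress.ip_address(client_ip)
    return not any(addr in net for net in CORPORATE_NETWORKS)
```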

You can use a mobile application such as PingID to receive and resolve the second factor of authentication (for example, a text message). Add the mobile application to your landscape. You do not need to add a new integration interface for it as it will communicate with PingFederate rather than with Salesforce.

This concludes the accessibility and security requirements. Congratulations! You have now covered nearly 85% of the scenario (in terms of complexity). You still have a few more requirements to solve, but you have passed most of the tricky topics. Keep pushing forward. The next target is the reporting requirements.

Analyzing the Reporting Requirements

The reporting requirements could occasionally impact your data model, particularly when deciding to adopt a denormalized data model to reduce the number of generated records. Reporting could also drive the need for additional integration interfaces or raise the need for some specific licenses. Go through the requirements shared by PPA and solve them one at a time.

The regional executive team would like a report that shows relations between customers’ driving behavior and particular times of the year across the past 10 years.

This report needs to deal with a vast amount of data. The raw data for drivers’ behavior is in Heroku. You can use a tool such as CRM Analytics to create the desired report. You already have it in the landscape architecture. The Int-011 integration interface can be used to transfer data from Heroku to CRM Analytics. You can then expose dashboards within the Salesforce Core Cloud if needed.

Move on to the next requirement, which starts with the following:

The regional executive team would like a trending monthly report that shows the pick-up and drop-off locations of each rental.

Reporting requirements that indicate a need for trending reports should point you toward one of these capabilities:

- Reporting snapshots: A Salesforce Core Cloud feature that enables users to report on historical data stored on the platform. Users can save standard report results in a custom object, then schedule a job to run specific reports and populate the mapped fields on the custom object with data. Once that is done, users can report on the custom object that contains the report results rather than on the original data.

- Analytics/Business intelligence tools: These tools are designed to work with enormous datasets. They provide more flexibility to drill into the details and analyze their patterns. Moreover, these tools can deal with data on and off the Salesforce Platform.
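Conceptually, a reporting snapshot appends dated copies of report results to a custom object so that trends can be reported later, even after the source records change or are archived. The Python sketch below models that idea only; real snapshots are configured declaratively, and the field names and figures here are hypothetical (drop-off locations would work the same way as pick-up locations).

```python
from collections import defaultdict
from datetime import date


def run_snapshot(store: list, run_date: date, report_rows: list) -> None:
    """Append the current report results to the snapshot store, stamped with the run date."""
    for row in report_rows:
        store.append({"snapshot_date": run_date, **row})


def monthly_trend(store: list, location_field: str) -> dict:
    """Total rentals per (month, location) across every stored snapshot."""
    trend = defaultdict(int)
    for row in store:
        month = row["snapshot_date"].strftime("%Y-%m")
        trend[(month, row[location_field])] += row["rentals"]
    return dict(trend)


snapshots = []  # stand-in for the custom object that receives snapshot rows
run_snapshot(snapshots, date(2024, 1, 31), [{"pickup": "London", "rentals": 120},
                                            {"pickup": "Paris", "rentals": 80}])
run_snapshot(snapshots, date(2024, 2, 29), [{"pickup": "London", "rentals": 150}])
trend = monthly_trend(snapshots, "pickup")
```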

Which one would you pick for this requirement?

If you pick reporting snapshots, then be prepared to adjust your solution if a judge decides to raise the challenge during Q&A and asks you to provide more capabilities to drill into the data or augment the data stored in Salesforce with data archived on Heroku.

A better, more scalable, and safer option would be to pick an analytics tool, such as CRM Analytics.

Again, CRM Analytics is already in both your landscape and your list of interfaces. You can now confirm that the regional executive team members will need the CRM Analytics license. Update your actors and licenses diagram, then move on to the following requirement.

Customers should be able to view a full history of their rental bookings, including bookings from the past three years.

The data required for this report exists mostly outside the platform. As a reminder, open orders will be in Salesforce while completed orders will be archived to Heroku.

Try not to complicate things for yourself by designing a custom solution that mixes data from both sources and displays it in a single interface. Go with simple, out-of-the-box functionality.

You can simply display two different lists: one with open orders and another with completed orders. The first is retrieved directly from Salesforce, while the other is retrieved using External Objects (Salesforce Connect). The Int-014 integration interface can be utilized for this.

There is one more thing to consider. So far, you have been planning to use a principal user to authenticate to Heroku for the Int-014 interface. That means all the data the principal user can access will be returned, which is normally all the available data.

To expose such an interface to end customers, you need to follow one of these two approaches:

- Switch the authentication policy to per-user. This will shift the responsibility of controlling who sees what to Heroku. Heroku must have a comprehensive module to determine the fields, objects, and records each user can see or retrieve from its database. This approach is achievable but would require custom development as Heroku does not come with an advanced sharing and visibility module similar to the one in Salesforce Core.

- Develop a custom Lightning component on top of the External Objects to filter the retrieved records using the current user’s ID. This will ensure the user can only view the records that they are supposed to see. However, this is not a secure approach as a bug in the Lightning component could reveal records that should not be displayed.

Moreover, because the security measures are applied in the UI layer rather than the data layer, there is a chance that an experienced attacker could find a way to access records they are not allowed to see.

You can propose the second option if you can communicate the associated risks and considerations. This is the approach you will use for this scenario. You will also need to explain why you preferred it over the first option (for example, to save the development efforts on Heroku).
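The key difference between the two approaches is where the filtering happens. The Python sketch below is a simplified model, not Salesforce Connect or Lightning code. `HEROKU_BOOKINGS`, `query_as_principal`, `component_view`, and `query_per_user` are hypothetical names. With principal authentication, every archived booking reaches the component before the current user's ID is applied, so that filter is the only safeguard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArchivedBooking:
    booking_id: str
    customer_user_id: str  # hypothetical field linking the booking to a customer user


# Hypothetical stand-in for the completed bookings archived on Heroku (exposed via Int-014)
HEROKU_BOOKINGS = [
    ArchivedBooking("B-1", "U-100"),
    ArchivedBooking("B-2", "U-200"),
    ArchivedBooking("B-3", "U-100"),
]


def query_as_principal():
    """Principal authentication: the external source returns every record it holds."""
    return list(HEROKU_BOOKINGS)


def component_view(current_user_id):
    """Option two: the custom component filters AFTER retrieval (UI-layer security).
    A bug in this single line would expose other customers' bookings."""
    return [b for b in query_as_principal() if b.customer_user_id == current_user_id]


def query_per_user(current_user_id):
    """Option one: the data source applies visibility itself, so only the caller's
    records ever leave Heroku. Heroku would need custom code to do this."""
    return [b for b in HEROKU_BOOKINGS if b.customer_user_id == current_user_id]


print(len(query_as_principal()))  # every row reaches the UI layer
print([b.booking_id for b in component_view("U-100")])
```

Both functions return the same records for a correctly written filter. The risk described above lies entirely in the fact that `component_view` receives everything first.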

Customers should also be able to view their full trip history for each completed rental.

This is a challenge similar to the one in the previous requirement. This is good news because you can propose a similar solution.

The full trip history data is also stored on Heroku. You can develop a Lightning component that is displayed when the user clicks a Display Trip button next to a particular rental record. When the component is displayed, it retrieves the External Objects’ data, filters the records by the user ID, and displays them in the UI.

Remember that you must explain all of that during your presentation. It is not enough to simply say that you will develop a Lightning component that fulfills this requirement. You must explain how it is going to be used and how it will operate. After all, this is what your client would expect from you.

Now, move on to the next and final reporting requirement.

Partners should be able to view a monthly report showing the repair jobs completed for PPA.

This is a straightforward requirement. The repair jobs are represented as Cases. Partner users can simply use standard reports and dashboards to display data of the Cases they have access to, which are the Case records assigned to one of their users.

That concludes the requirements of the reporting section. The last part of the scenario normally lists requirements related to the governance and development life cycle. Keep your foot on the gas pedal and tackle the next set of requirements.

Analyzing the Project Development Requirements

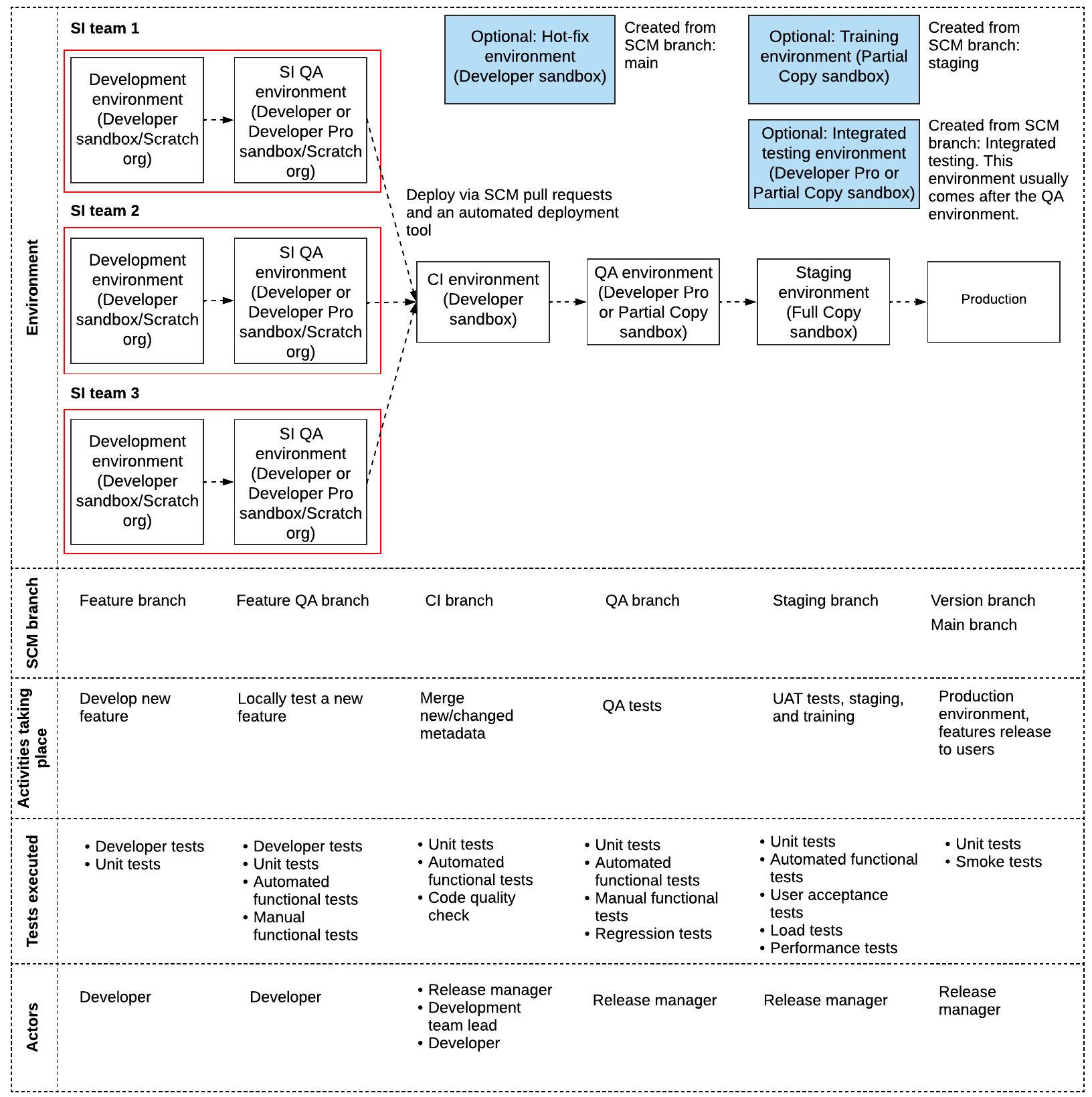

This part of the scenario would generally focus on the tools and techniques used to govern the development and release process in a Salesforce project. Previously, you created some nice artifacts in Chapter 10, Development Life Cycle and Deployment Planning, such as Figure 10.3 (Proposed CoE structure), Figure 10.4 (Proposed DA structure), and Figure 10.7 (Development life cycle diagram (final)).

These artifacts will come in handy at this stage. Try to memorize them and get comfortable drawing and explaining them. You will now go through the different requirements shared by PPA and see how you can put these artifacts into action to resolve some of the raised challenges. Start with the first requirement, which begins with the following:

PPA is planning to have three different Service Implementers (SIs), delivering different functionalities across parallel projects.

The best way to resolve such situations is to implement source-driven development using a multi-layered development environment setup. CI/CD also reduces the chances of conflicts by ensuring all developers are working on the latest possible code base (it might not be the very latest, but it is the closest possible).

In Chapter 10, Development Life Cycle and Deployment Planning, you covered the rationale and benefits of this setup. Keep in mind that this is something you need to explain during your presentation. It is not enough to draw the diagram or throw buzzwords around without showing enough practical knowledge about them.

You also saw a sample development life cycle diagram, Figure 10.7 (Development life cycle diagram (final)). This can serve as the basis for scenarios such as this one. You have three different SIs here, so it makes sense to have three different threads/teams in the diagram.

Your diagram could look like the following:

Figure 13.6 – Development life cycle diagram (final)

You would also need an automated build and deployment tool. There are open-source solutions such as Jenkins and tools built specifically for Salesforce environments such as Copado and AutoRABIT. Select one and add it to your landscape. Also, remember to add an automated testing tool such as Selenium or Provar.

Move on to the next requirement, which begins with the following:

Historically, SIs used to follow their own coding standards while dealing with other platforms.

This is an interesting requirement. The challenge here is related to the lack of standardization and communication across the different SI teams.

Would CI/CD solve this? Not really. It can improve things a bit with automated code scanning and controlled merging, but to significantly improve standardization, communication, reuse, and overall quality, you need to introduce a Salesforce CoE.

You can also introduce a design authority (DA), which brings together functional and technical resources, as another organizational structure. It ensures every single requirement is discussed in detail, validated, and approved before any development effort starts.

In Chapter 10, Development Life Cycle and Deployment Planning, you learned about the value and structure of each of these. Figure 10.3 (Proposed CoE structure) and Figure 10.4 (Proposed DA structure) can also be handy to visualize the two structures.

You are unlikely to be asked to draw the CoE or DA structures, but most likely you will be asked about the value and the challenges they address. If you are already on a project that has both, that is excellent news. Otherwise, do not be shy about proposing this to your stakeholders. This structure brings tangible benefits to the project and, at the same time, helps develop the skills of its members. It is a win-win for everyone.

PPA has a new platform owner who joined from another company that also used Salesforce.

Based on her experience at her previous workplace, the newly joined platform owner reported that bugs were fixed in UAT on several occasions but then showed up again in production. Experiences like this shape many people’s judgment of the platform’s agility and its ability to support modern release management concepts. Usually, the root cause is a poor implementation of a sound concept. In other cases, such as this one, it appears to be a poor environment management strategy and release cycle.

When a bug is fixed in UAT, UAT’s code base falls out of sync with the code base in lower environments. The next time a feature is promoted from a lower-level environment (such as a development environment), it could easily overwrite the changes made previously in UAT. Eventually, when this is pushed to production, the bugs that were resolved come back.

You could simply say that this symptom is happening because the client did not use a proper CI/CD release management strategy and probably relied on mechanisms such as change sets. However, this is not considered a good enough answer. Instead of using buzzwords, you are expected to explain, educate, and justify in detail. This is how a CTA should respond during the review board and in real life.

This issue could be resolved in one of three ways:

- After fixing the UAT bug, every lower environment should refresh its code base to get this new change. This requires a high level of discipline and organization.

- When promoting code from a lower environment, avoid overwriting existing, conflicting changes in UAT. Instead, resolve the conflicts with the development team. This is not always easy and can become difficult when dealing with point-and-click features such as Salesforce Flows.

- Fix the bug on a hotfix branch and environment, merge the branch into UAT, then promote it to production. The hotfix branch is also used to refresh all lower-level environment branches. This option sounds very similar to the first, but the difference is that it is more trackable and easier to govern. You still need an adequate level of discipline and organization.
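A toy Python model can show why fixing bugs directly in UAT brings them back, and how the hotfix approach prevents it. It is a deliberate simplification. Each environment is a dictionary mapping a component name to a version, promotion blindly overwrites the target, and real merge and conflict handling is ignored. The component names and versions are hypothetical.

```python
def promote(source, target):
    """Naive promotion: each component in the source overwrites the target's copy."""
    target.update(source)


def back_merge(hotfix, *lower_envs):
    """Refresh lower environments with the hotfix branch's changes."""
    for env in lower_envs:
        env.update(hotfix)


def fix_in_uat():
    prod = {"BookingFlow": "v1"}
    uat, dev = dict(prod), dict(prod)
    uat["BookingFlow"] = "v1-fix"   # bug fixed directly in UAT
    dev["NewFeature"] = "v1"        # meanwhile, a developer builds a new feature
    promote(dev, uat)               # dev still holds the old BookingFlow and overwrites the fix
    promote(uat, prod)
    return prod


def fix_on_hotfix_branch():
    prod = {"BookingFlow": "v1"}
    uat, dev = dict(prod), dict(prod)
    hotfix = {"BookingFlow": "v1-fix"}  # fix made on a dedicated hotfix branch
    promote(hotfix, uat)
    promote(uat, prod)
    back_merge(hotfix, dev)             # lower environments pick up the fix
    dev["NewFeature"] = "v1"
    promote(dev, uat)
    promote(uat, prod)
    return prod


print(fix_in_uat())            # {'BookingFlow': 'v1', 'NewFeature': 'v1'}
print(fix_on_hotfix_branch())  # {'BookingFlow': 'v1-fix', 'NewFeature': 'v1'}
```

The first option (refreshing every lower environment after the fix) leads to the same end state as `back_merge`. The hotfix branch simply makes that step explicit and traceable.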

Release management tools such as Copado make this process easier by offering a Graphical User Interface (GUI) and point-and-click features rather than relying purely on a Command-Line Interface (CLI).

You must answer such requirements with enough details, but do not get carried away. Keep an eye on the timer and ensure you leave enough time to cover other topics, whether this is taking place during the presentation or Q&A.

Move on to the final requirement in this section, which starts with the following:

PPA would like to release the solution in Italy first, then roll the same solution out in other countries based on a pre-agreed schedule.

You have already explained the value of the CoE structure in these situations. It is the best organizational structure to ensure everyone is involved, sharing their ideas and feedback, and aware of the planned features and roadmap. This is where conflicting business requirements are detected and resolved. The CoE will aim to create a unified process across all countries.

In addition, you need the right delivery methodology to ensure a smooth rollout of the solution. The CoE and DA will ensure that requirements are gathered, validated, and solutioned in the right way to guarantee high quality. They will also ensure that features are developed to accommodate the slight process variations between countries. A 100% unified global process is a hard target to aim for. Your solution should be designed to accommodate and embrace slight variations in a scalable manner.

Consider the following when choosing the right delivery methodology:

- A rigid waterfall methodology will ensure a clear scope with a detailed end-to-end solution. However, it also creates a wide gap between the development team and the business that will eventually use the solution. Moreover, it takes longer to realize value.

- An agile methodology ensures that the business is closer to the developed solution. This increases adoption and enables quick feedback. However, without a clear vision, this could easily create a solution that is a perfect fit for one country but unfit for others. This would be difficult to maintain even in the short term.

- A hybrid methodology ensures you get the best of both worlds with minor compromises. You get a relatively clear scope during the blueprinting phase with an end-to-end solution covering 70%-80% of the scope. It is not as rigid and well defined as you would get in a waterfall methodology, but it mitigates many risks from day one.

The solution gets further clarified during the sprints’ refinement activities. With the DA structure in place, you ensure that every user story’s solution is challenged and technically validated by the right people before you start delivering it.

Again, keep an eye on the timer while explaining such requirements. Some candidates get carried away while explaining this part, mostly describing personal experiences. Also, remember that you have very limited time. Your primary goal is to pass the review board.

Many candidates run out of steam at this stage of the presentation, which is totally understandable considering the mental and emotional efforts required up to this point. However, you need to keep your composure. You are close to becoming a CTA. Do not lose your grip on the steering wheel just yet.

That concludes the requirements of the project development section. The next section is usually limited and contains other general topics and challenges. Maintain your momentum and continue to the next set of requirements.

Analyzing the Other Requirements

The other requirements section in a full mock scenario could contain further requirements about anything else that has not yet been covered by the other sections. There is usually a limited number of requirements in this section.

Your experience and broad knowledge of the Salesforce Platform and its ecosystem will play a vital role at this stage. Next, explore the shared requirement.

PPA wants to ensure that data in development environments is always anonymized.

This is an essential requirement today, considering all the data privacy regulations. As a CTA, you should be ready not only to answer this question but also to raise it proactively with your client.

Not all users would or should have access to production data. Even those who do are likely restricted to a specific set of records. While developing new features or fixing bugs in the different development sandboxes, it is crucial to ensure that the data used by developers and testers is test data. Even when dealing with staging environments (where the data is supposed to be as close as possible to production), you still need to ensure that the data is anonymized, pseudonymized, or even deleted. Here is a brief description of these three options:

- Anonymization: Works by changing and scrambling the contents of fields so that they become uninterpretable. For example, a contact with the name Rachel Greene could become hA73Hns#d$, and an email address could become an unreadable value such as JA7ehK23.

- Pseudonymization: Converts a field into a readable value unrelated to the original. For example, a contact with the name Rachel Greene could become Mark Bates, and an email address could be replaced with a different, equally readable but unrelated address.

- Deletion: This is more obvious; this approach simply empties the target field.
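The three techniques can be illustrated with a minimal Python sketch. It is not how Salesforce Data Mask or any third-party tool works internally. The lookup name lists, the salt value, and the `example.invalid` domain are hypothetical. The pseudonymization functions are deterministic (keyed by a salt), so the same source value always maps to the same replacement. This keeps related records consistent across a sandbox.

```python
import hashlib
import secrets
import string

# Hypothetical lookup lists used to generate readable pseudonyms
FIRST_NAMES = ["Mark", "Anna", "Luca", "Sofia"]
LAST_NAMES = ["Bates", "Rossi", "Bianchi", "Conti"]


def anonymize(value):
    """Anonymization: replace each character with a random one (unreadable, not reversible)."""
    alphabet = string.ascii_letters + string.digits + "#$%"
    return "".join(secrets.choice(alphabet) for _ in value)


def pseudonymize_name(value, salt):
    """Pseudonymization: map a name to a readable, unrelated name.
    The same value and salt always produce the same pseudonym."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).digest()
    first = FIRST_NAMES[digest[0] % len(FIRST_NAMES)]
    last = LAST_NAMES[digest[1] % len(LAST_NAMES)]
    return f"{first} {last}"


def pseudonymize_email(value, salt):
    """Pseudonymization for emails: a readable address on a reserved, non-routable domain."""
    token = hashlib.sha256((salt + value.lower()).encode("utf-8")).hexdigest()[:10]
    return f"user.{token}@example.invalid"


def delete_value(_value):
    """Deletion: empty the field."""
    return None


print(anonymize("Rachel Greene"))
print(pseudonymize_name("Rachel Greene", salt="sandbox-2024"))
```

With such a small name list, different people can map to the same pseudonym. A real masking job would use much larger lookup sets.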

Developer and Developer Pro sandboxes contain a copy of your production org’s metadata, but not the data. However, Partial Copy sandboxes contain the metadata and a selected set of data that you define. Full Copy sandboxes contain a copy of all your production data and metadata.

Your strategy here could be to do the following:

- Ensure that data used in the Developer and Developer Pro sandboxes is always purposely created test data.

- Ensure that any data in Partial or Full Copy sandboxes is anonymized or pseudonymized. You can achieve that using tools such as Salesforce Data Mask (which is a managed package), custom-developed ETL jobs, custom-developed Apex code, or third-party tools such as Odaseva or OQCT.

Note

You can find out more details about Salesforce Data Mask at the following link: https://packt.link/pTX3n.

Propose Salesforce Data Mask for PPA and add it to your landscape architecture diagram. Your landscape architecture diagram should look like the following:

Figure 13.7 – Landscape architecture (final)

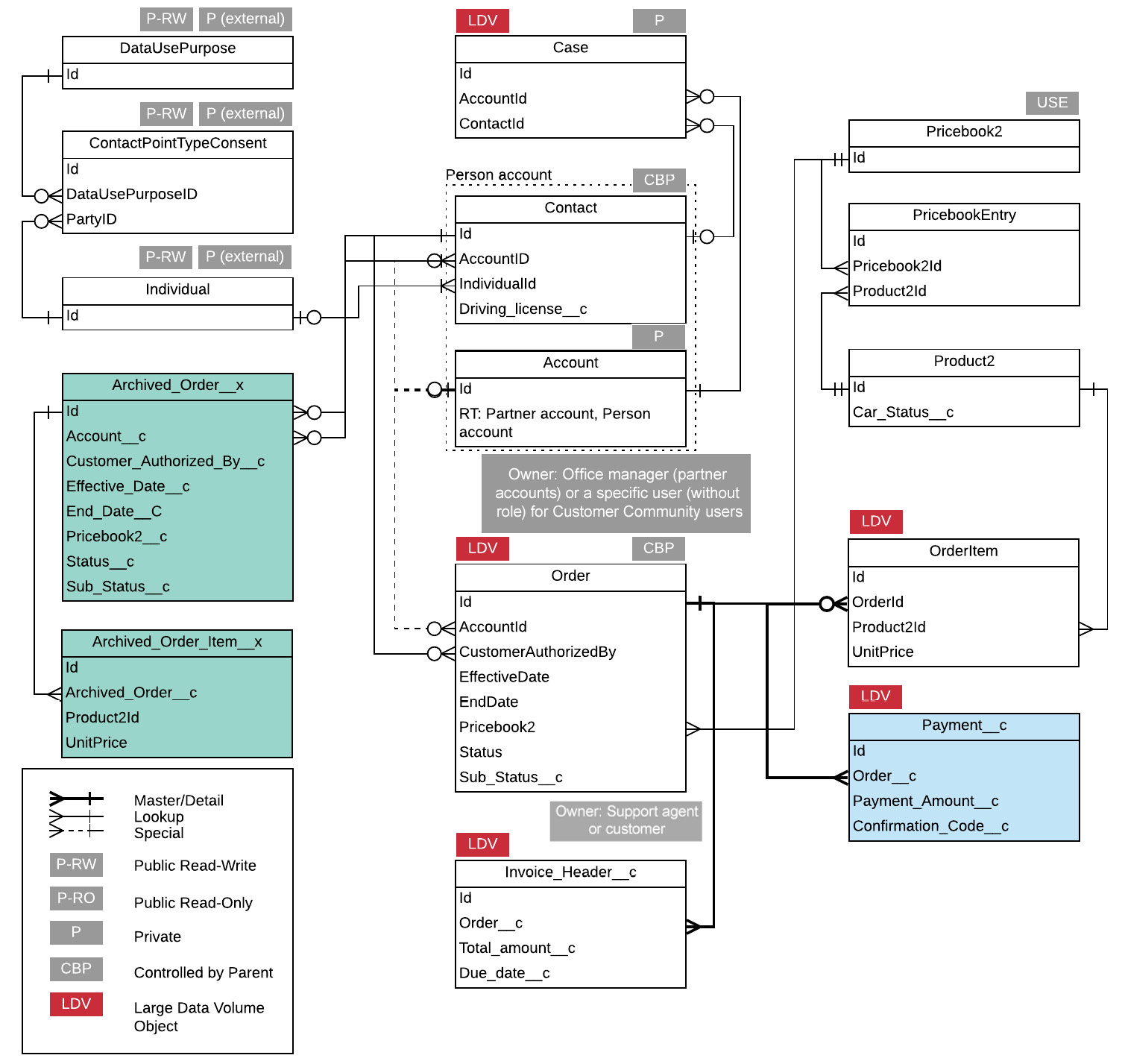

Your list of integration interfaces should look like this:

Figure 13.8 – Integration interfaces (final)

Your data model diagram should look like the following:

Figure 13.9 – Data model (final)

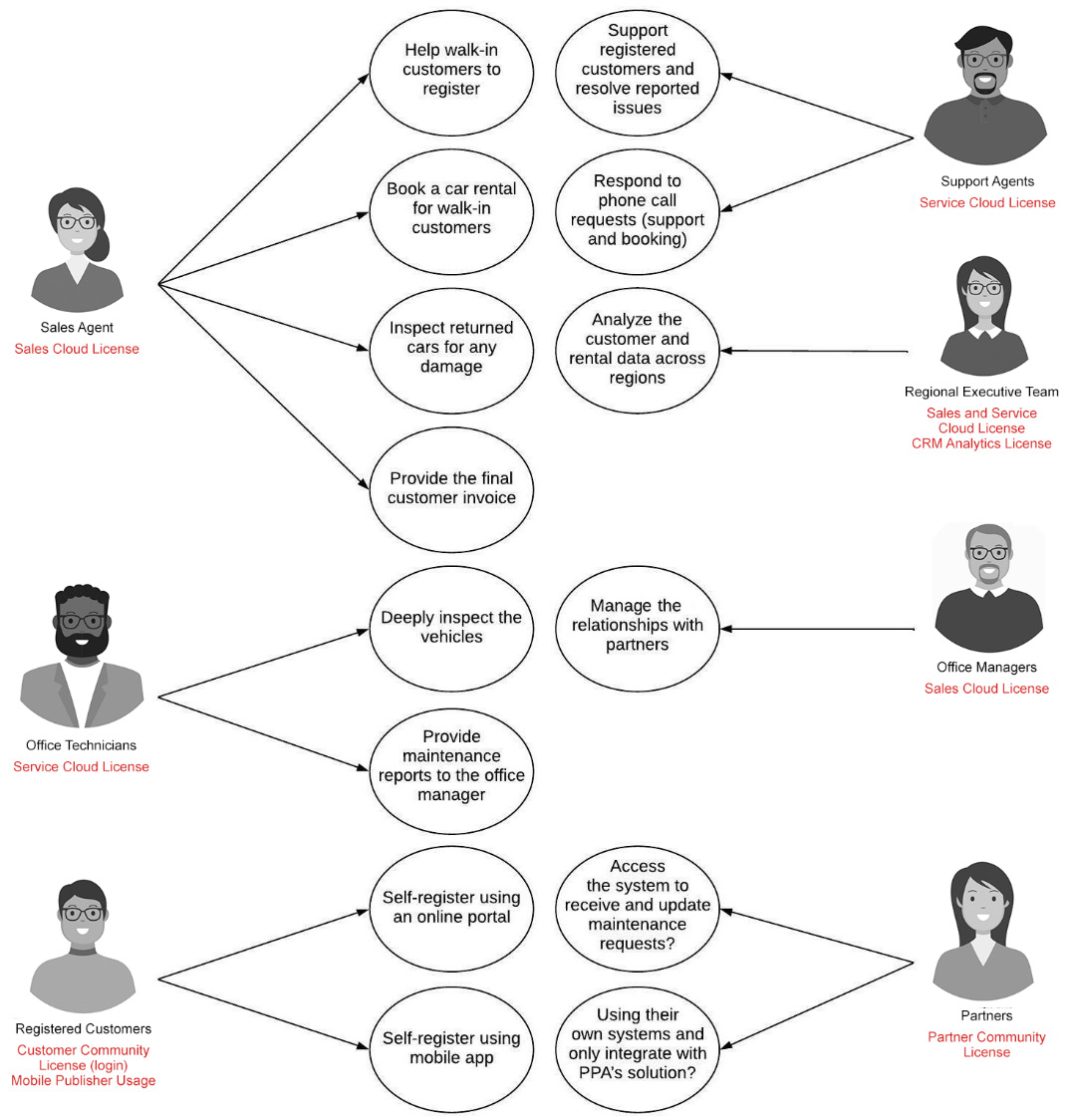

Finally, your actors and licenses diagram should look like the following:

Figure 13.10 – Actors and licenses (final)

Note that you have updated some of the penciled-in licenses for some actors. You have also upgraded the technician license from Salesforce Platform to Salesforce Service Cloud to allow technicians to create and work with the Case object.

Congratulations! You have managed to complete the scenario and create a scalable and secure end-to-end solution. Now you need to pair it with a strong, engaging, and attractive presentation to secure as many marks as possible, leaving only a few gaps to close during the Q&A.

Ready for the next stage? Move on to it.

Presenting and Justifying Your Solution

It is now time to create your presentation. During the review board, you will have to do this on the go, as creating the solution and supporting artifacts will likely consume the full three hours.

Therefore, you need to develop your presentation and time management skills to ensure you describe the solution with enough details in the given timeframe. You will learn some time-keeping techniques in Appendix, Tips and Tricks, and the Way Forward.

In previous chapters, you saw several examples of presentation pitches that dived into the right level of detail. This time, you will focus on shaping an actual review board presentation. You will also create presentation pitches for the following topics: the overall solution and artifact introduction, one of the business processes, and the data migration and LDV mitigation strategy. This chapter will cover the customer registration process as it is the most complex and lengthy.

You will then hold your ground and defend and justify your solution, knowing that you might need to adjust the solution based on newly introduced challenges. Start by understanding the structure and strategy of the presentation.

Understanding the Presentation Structure

During the review board presentation, you need to engage and captivate your audience. In addition, you need to help them keep up with you.

You could create a fantastic pitch (using slides or other tools) that describes the solution from end to end, mixing business processes with accessibility requirements and data migration, and present it in an engaging but not very organized way, firing out a solution whenever the related topic comes to your mind.

This will make it very challenging for the judges to keep up with you. You could present a solution for a requirement on page 7 of the exam scenario, followed by a solution for a requirement on page 3, then two more on page 5. It is indeed the judges’ responsibility to give you points for the solutions you provide, but always try to help them by being organized. After all, the ability to walk an audience through a solution in an organized fashion is a real soft skill that you need in real life.

You should divide your presentation into two main parts:

- Structured part: In this part, you describe specific pre-planned elements of your solution. You usually start by introducing the overall solution and the artifacts you created. Then, you move on to describe the proposed solution for the business processes, followed by an explanation of your LDV mitigation and data migration strategies. You can add more elements to this part as you see appropriate.

You need to plan which elements and requirements you will be covering in this part before you start your presentation. This will help you budget time for it and ensure you have enough left for the next part.

- Catch-all part: This is where you go through the requirements one by one and call out those that you haven’t covered in the first part. This will ensure you do not miss any points, particularly the easy ones.

In the following sections, you will be creating sample presentation pitches for the structured part. Start with an overall introduction to the solution and artifacts.

Introducing the Overall Solution and Artifacts

Start by introducing yourself; this should not be more than a single paragraph. It should be as simple as the following:

Then proceed with another short paragraph that briefly describes the company’s business. This will help you to get into presentation mode and break the ice. You can simply utilize a shortened version of the first paragraph in the scenario, such as:

You should not take more than a minute to present yourself and set the scene. Now you can start explaining PPA’s key needs in a short paragraph. At this stage, you should be utilizing your landscape architecture diagram. Remember to use your diagrams; this is an extremely important thing to practice.

At this stage, you should be pointing out the relevant sections of your diagrams to help the judges visualize your solution. Even if you are doing this using a PowerPoint presentation, use your mouse cursor and flip between the slides. Do not be under the impression that a professional presentation should go in one direction only. That does not apply to this kind of presentation. Your pitch could be as follows:

That should be enough as a high-level introduction to the solution. You have introduced the problem and the key elements of the solution and given some teasers for what is to be discussed.

Now you have the audience’s attention. You are showing them that you are not here to answer a question but to give them a presentation on the solution they will be investing in. Remember, they are PPA’s CXOs today.

Remember to call out your org strategy (and the rationale behind it) during your presentation. You can then proceed by explaining the licenses they need to invest in. Switch to the actors and licenses diagram. If you are doing the presentation in person, move around; do not just stand in one place. You should radiate confidence. Moving around always does the trick. Your presentation could be as follows:

That concludes the overall high-level solution presentation. This part should take no more than five minutes to cover from end to end.

Next, move on to the most significant part of your presentation, where you will explain how your solution will solve the shared business processes.

Presenting the Business Processes’ End-to-End Solution

You are still in the structured part of your presentation. For each business process, you will use the relevant business process diagram you created. In addition, you will be using the landscape architecture diagram, the data model, and the list of interfaces to explain your solution’s details. You can start this part with a short intro, such as the following:

Next, you will go through a sample presentation for the first business process.

Customer Registration

You have already planned a solution for this process and created a business process diagram; see Figure 12.4 (Customer registration business process diagram). You will use this diagram to tell the story and explain this process’s end-to-end solution. Your pitch could be as follows:

You should be pointing to the right integration interface on your list, the objects in your data model, and the other different elements in your landscape architecture diagram, such as MuleSoft and the national driver and vehicle licensing agency systems.

You can be a bit creative with your presentation and bring forward a requirement from the accessibility and security requirements section. You could add the following:

You have seeded some questions, such as the full description of the OAuth 2.0 web server flow. If the judges feel a need to get more details, they will come back to this point during the Q&A.

This is a lengthy business process, but presenting its solution should not take more than five minutes. The other business processes should take less time. Practice the presentation using your own words and keep practicing until you are comfortable delivering a similar pitch in no more than five minutes. Time management is crucial for this exam.

Move on to the next element of your structured presentation.

Presenting the LDV Mitigation Strategy

After presenting the business processes, the next set of requirements in the scenario is related to data migration. Before proceeding with that, prepare your pitch for the LDV objects mitigation strategy:

That explains your LDV mitigation strategy and rationale. Proceed with explaining the data migration strategy.

Presenting the Data Migration Strategy

Stick with the four points listed earlier to explain the data migration strategy. You need to explain how you will get the migration data, how to load it into Salesforce, and what your migration plan and approach look like. Start to put that together:

That covers the data migration strategy and plan. You must mention these details to collect this domain’s points. Again, remember to keep an eye on the timing. You should not consume more than 30-35 minutes of your valuable 45 minutes to cover the structured part of your presentation. This will leave you with 10-15 minutes for the catch-all part, which is very important to avoid losing any easy points. Proceed to that part and see how it works.

Going Through the Scenario and Catching All Remaining Requirements

At this part of the presentation, you will go through the requirements one by one and ensure you’ve covered each one.

If you are doing an in-person review board, you will receive a printed version of the scenario and a set of pens and markers. As you read and solution the scenario, highlight or underline the requirements. If you have a solution in mind, write it next to the requirement. This will help you during the catch-all part of the presentation.

If you are doing a virtual review board, you will get access to a digital version of the scenario. You will have access to MS PowerPoint and Excel, and you will be able to copy requirements to your slides/sheets and then use those during the presentation. The catch-all part of the presentation is not attractive, as it feels like you are going through questions and answering them. But it is a pragmatic and effective way to ensure you never miss a point. Also, remember to help the judges keep up with you.

Next, you will cover two examples from the accessibility and security requirements section.

Support incidents should be visible to support agents and their managers only.

You have already created a solution for this requirement. You can use the following way to present it. Firstly, you need to help the judges keep up with you by calling out the location of the requirement, such as the following:

You do not need to do that for every requirement. Just do it whenever you feel the need or when you move to a new set of requirements.

Proceed with the presentation as usual:

You can create a public group, ensure its Grant Access Using Hierarchies checkbox is checked (so that managers above the group members in the role hierarchy also get access), and add all support agent roles to the group. You will also need to set the Case object’s OWD to Private, then create a criteria-based sharing rule to share Case records of a specific type with this public group.
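The access logic behind this design can be modeled in a few lines of Python. It is a simplified sketch, not the platform's sharing engine. It assumes the checkbox in question is the public group's Grant Access Using Hierarchies option. It uses a hypothetical role hierarchy, hypothetical users, and a hypothetical "Support Incident" Case type. It also ignores profiles, permission sets, and View All permissions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    case_id: str
    case_type: str
    owner: str


# Hypothetical role hierarchy (child role -> parent role) and users
ROLE_PARENT = {
    "Support Agent": "Support Manager",
    "Support Manager": None,
    "Technician": "Workshop Manager",
    "Workshop Manager": None,
    "Integration": None,
}
USER_ROLE = {"alice": "Support Agent", "maria": "Support Manager",
             "tom": "Technician", "walt": "Workshop Manager", "sys": "Integration"}

GROUP_MEMBER_ROLES = {"Support Agent"}  # public group members
GRANT_ACCESS_USING_HIERARCHIES = True   # the group's checkbox
SHARED_CASE_TYPE = "Support Incident"   # sharing rule criteria


def roles_above(role):
    """Yield every role above the given one in the hierarchy."""
    parent = ROLE_PARENT.get(role)
    while parent:
        yield parent
        parent = ROLE_PARENT.get(parent)


def effective_group_roles():
    """Group members plus, if the checkbox is set, every role above them."""
    roles = set(GROUP_MEMBER_ROLES)
    if GRANT_ACCESS_USING_HIERARCHIES:
        for role in GROUP_MEMBER_ROLES:
            roles.update(roles_above(role))
    return roles


def can_view(user, case):
    if case.owner == user:
        return True  # record owners always have access
    # OWD Private: no baseline access, but users above the owner's role inherit it
    if USER_ROLE[user] in roles_above(USER_ROLE[case.owner]):
        return True
    # Criteria-based sharing rule: Cases of the matching type are shared with the group
    return case.case_type == SHARED_CASE_TYPE and USER_ROLE[user] in effective_group_roles()


incident = Case("C-1", "Support Incident", "sys")
print({user: can_view(user, incident) for user in USER_ROLE})
```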

Remember that you still need to explain the solution from end to end, with enough details, and use your diagrams. Being in the catch-all part does not mean you skip that. Move on to the next example.

Only office technicians can update a car’s status to indicate that it is out of service and requires repairs.

You should explain the solution for such requirements in less than 20 seconds. It can be as simple as the following:

Use your own words but keep it short and concise.

The catch-all part will ensure you never miss a requirement. For example, this book has intentionally left one requirement without a solution. Have you spotted it?

It was mentioned at the beginning of the scenario and is easy to miss. This approach ensures you catch even easy-to-miss requirements like this one. Proceed with creating a solution for it now.

Some partners are open to utilizing PPA’s solution, while others expressed their interest in using their own systems and only integrating with PPA’s solution.

The solution can be straightforward, such as the following:

That concludes the presentation. The next part is the Q&A, that is, the stage where the judges will ask questions to fill in any gaps that you have missed or not clearly explained during the presentation.

Justifying and Defending Your Solution

Now move on to the Q&A. Relax and be proud of what you have achieved so far. You are very close to achieving your dream.

During the Q&A, you should also expect the judges to change some requirements to test your ability to solution on the fly. This is all part of the exam. Next, you will see some example questions and answers.

The judges might decide to probe for more details on one topic, such as the proposed solution for IoT. The question could be as follows:

Avoid losing your composure and assuming the worst straight away. The judge could have simply missed parts of your presentation and wanted to make sure you understand the platform’s limitations. Or they could simply be trying to put you under pressure to see how you respond.

Ensure that you fully understand the question. If you do not, ask them to repeat it. Take a few seconds to organize your thoughts if needed. Your answer could be as follows:

A common and costly mistake is to assume that the audience already knows the limitations (because they are all CTAs) and therefore skip them. Imagine that you are presenting to the client’s CXOs, not to CTA judges from Salesforce.

You do not want to bother the CXOs with the exact number of API calls allowed per day. But you should clearly communicate the limits a solution could potentially violate and explain how your solution will honor those limits.

Explore another question raised by the judges:

On some occasions, you might lack the knowledge of how to set up a particular third-party product. But you still have your broad architectural knowledge, which lets you reason your way to a valid answer.

In Chapter 3, Core Architectural Concepts: Integration and Cryptography, you came across a similar challenge with ETL tools, while in Chapter 11, Communicating and Socializing Your Solution, you encountered that challenge again with MuleSoft.

The same principle can be extended to identity providers (IdPs) such as PingFederate or Okta. Either a VPN connection or an agent application is a valid way to connect them (depending on each product’s finer details). Also, some of these tools offer a password sync agent that can synchronize password changes from LDAP (or AD) to the tool’s own database (remember, passwords are always hashed). Your answer could be as follows:

You do not need to worry that you do not know how to configure PingFederate or the firewall. Remember that you are not expected to know how to configure all the tools under the sun. But you are expected to know that a cloud application cannot communicate directly with an application hosted behind a firewall without specific arrangements and tools. You covered that in detail in Chapter 3, Core Architectural Concepts: Integration and Cryptography.

Explore another question raised by the judges:

This is an expected question. How are you planning to handle it? If you want to ensure that you collect all points, you must draw the sequence diagram and explain it. You learned about these two flows in detail in Chapter 4, Core Architectural Concepts: Identity and Access Management. The diagram will not be repeated in this chapter. Make sure to memorize all the authentication flows covered in this book.

Explore one last question raised by the judges:

This is one of the requirements that test your ability to solution on the fly. At the same time, it tests your platform knowledge. Your answer could be as follows:

That concludes the Q&A stage. You experienced different types of questions that could be raised and confidently defended your solution or adjusted it if needed. Try to keep your answers short, concise, and to the point. This will give more time for the judges to cover topics that you might have missed.

Summary

In this chapter, you continued developing the solution for your first full mock scenario. You encountered more challenging accessibility and security requirements. You created comprehensive LDV mitigation and data migration strategies. You tackled challenges with reporting and had a good look at the release management process along with its supporting governance model.

You then looked into specific topics and created an engaging presentation pitch that described the proposed solution from end to end. You used the diagrams to help explain the architecture more clearly and practiced the Q&A stage using various potential questions.

In the next chapter, you will tackle the second full mock scenario. It is as challenging as the first, but it has a slightly trickier data model. You will experience a new set of challenges and learn how to overcome them. Nothing prepares you better than a good exercise, and you will have plenty up next.