In this chapter we will look at the different types of evaluation form (also known as ‘happy sheets’) and their use and applicability, linked to Kirkpatrick’s famous evaluation model. We will identify when to use them and when not to; and examine why some people are reluctant to complete them and how we can challenge their mindset.

Trainers must begin with desired results and then determine what behaviour is needed to accomplish them. Then trainers must determine the attitudes, knowledge, and skills that are necessary to bring about the desired behaviour(s). The final challenge is to present the training programme in a way that enables the participants not only to learn what they need to know but also to react favorably to the programme. Donald Kirkpatrick, 1998

It is that time of the day, when the evaluation forms are handed out and the facilitator either tries to make small talk or just keeps very quiet, waiting with bated breath to see if it has all been worth it.

As mentioned in the last chapter, evaluation forms can be given out at the beginning and completed as the workshop develops. However, on the whole, forms tend to be handed out at the end. The reality is that most people want to get away at the end of the day to catch their train or bus. The evaluation form is rushed through, and a few ticks here and there aren’t given much thought.

Now, I don’t know about you, but when I have worked hard all day to enable the group to have a positive learning experience, I expect a little more focus on a process that should be given higher priority than many organisations give it. It is mainly the facilitator that looks through the ticks and comments and breathes a sigh of relief when there is nothing really challenging in the feedback.

Nothing great was ever achieved without enthusiasm. Ralph Waldo Emerson

To evaluate or not?

That is the question. . . An evaluation takes time and can cost money. The cost can be attributed to the time spent by an individual to collate and enter all the data onto a spreadsheet, for example. A decision needs to be made whether it is worthwhile to evaluate at all.

The greater the cost of the workshop, the more important it is to evaluate the effectiveness of the decision to spend the amount and the value that the workshop has given to the participants. If the workshop is likely to be repeated, then again, it is worthwhile to evaluate so that improvements can be made if necessary.

He that will not reflect is a ruined man. Asian proverb

Also, how many people will be attending the workshop? If the number is high in proportion to the size of the organisation, then it is important that value for money is assessed.

A colleague showed me a model she uses to help her decide whether to evaluate a particular session or workshop. There is a range of factors, each scored on a scale of 1–10 (see next page). If the total score is more than 25, it will be worthwhile evaluating the session or workshop. If a particular factor scores 7 or more, then think about whether that factor should be assessed in greater detail.
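The scoring rule just described can be sketched in a few lines of Python. This is only an illustration: the factor names below are hypothetical, since the factors in the colleague's model are not listed here, and the function name `should_evaluate` is my own.

```python
def should_evaluate(scores, total_threshold=25, detail_threshold=7):
    """Apply the rule above: a total of more than 25 means evaluate;
    any factor scoring 7 or more may deserve a closer look."""
    for factor, score in scores.items():
        if not 1 <= score <= 10:
            raise ValueError(f"{factor!r} must be scored from 1 to 10, got {score}")
    total = sum(scores.values())
    worthwhile = total > total_threshold
    needs_detail = [f for f, s in scores.items() if s >= detail_threshold]
    return total, worthwhile, needs_detail


# Hypothetical factors and scores, for illustration only.
example_scores = {
    "cost of workshop": 8,
    "likelihood of repeat": 6,
    "proportion of staff attending": 4,
    "link to organisational goals": 9,
}

total, worthwhile, needs_detail = should_evaluate(example_scores)
print(total, worthwhile, needs_detail)
```

With these made-up scores the total is 27, so the workshop would be evaluated, and the two factors scoring 7 or more would be candidates for more detailed assessment.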

It really all depends on what the organisation wants measured and/or costed. There are different types of cost:

- Direct cost – these are the costs that are incurred only if the workshop is run.

- Indirect cost – these costs are variable and part of supporting the workshop.

| Direct costs | Indirect costs |
|---|---|
| The venue | Flipcharts/pens |
| An external facilitator (including travel expenses) | Projector |
| Refreshments | Administrative support |
| Resources (materials) | Room clearance/cleaning |

Once these costs are included, a workshop’s total cost is often doubled.

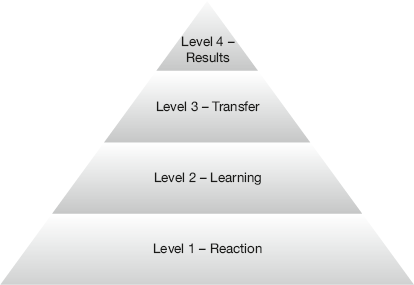

Kirkpatrick’s model of evaluation

Professor Donald Kirkpatrick (1959) developed the most famous model of evaluation, containing four levels (see Figure 11.1). (See www.kirkpatrickpartners.com for further information.)

Figure 11.1 Kirkpatrick’s model of evaluation

Source: Based on data from Kirkpatrick (1959)

Reaction

This is normally the reaction of learners immediately after the workshop. They complete the evaluation forms based on content, objectives, the facilitator’s effectiveness and resources or materials used.

| Pros | Cons |
|---|---|
| Provides immediate data. | Provides data on how people ‘felt’. |
| High completion rate if completed on the day. | Data can be rushed. |

Learning

This stage measures what people have learnt. To measure this effectively there needs to be some pre- and post-workshop questionnaires completed, to calculate any change in attitude, improvement in knowledge or reduction in skills gaps. Some feedback from their manager/colleagues after the event can give further data on whether the individual has added value to the team/department since the workshop.

| Pros | Cons |
|---|---|
| Measures if the new skill or knowledge has made a positive difference. | Collating the post-workshop evidence can be time consuming. |
| Can validate the success of the learning intervention. | Learners may not be able to apply what they learnt in the workshop back in the workplace. |

Transfer of learning

This is normally undertaken three to six months after the workshop. It measures how the learner has transferred the learning into the workplace after a longer time period.

| Pros | Cons |
|---|---|
| Can really measure the impact of the workshop with detailed data. | Can be costly. |
| Line manager role crucial – buy-in here can add longer-term value in development. | Can take a large amount of time to complete and validate the data. |

Results

This measures the impact of the workshop on organisational effectiveness. It could be that the organisation has increased sales, reduced complaints in customer service or managed staff performance more effectively. This stage is very difficult to measure. Correlating an organisation’s success with a workshop or workshops is not easy as there may be several other contributing factors.

Other models of note

Although there are many models of evaluation, the two that I grew up with, apart from Kirkpatrick, were the following:

- The CIRO model (Warr, Bird and Rackham, 1970).

- Five level evaluation (Hamblin, 1974).

The CIRO model

| Context | Inputs | Reactions | Outcomes |
|---|---|---|---|
| Were the needs analysed accurately at the outset? Why was this particular workshop chosen? What were the learning objectives? Does it link to the organisation’s culture? | What resources were available to support the workshop? Did we choose the right participants? | Did the workshop achieve what it set out to do? (Feedback from the participants.) | Did the outcomes match the original objectives? Has the workshop been a success? |

Source: Based on data from Warr, Bird and Rackham (1970)

Five level evaluation

| Level | Key question |
|---|---|
| Level 1 – reactions | What did the participants think of the workshop? |
| Level 2 – learning | Did the participants learn what was intended? |
| Level 3 – job behaviour | Has the participant transferred the learning to the workplace? |
| Level 4 – organisational/departmental | Has the workshop assisted organisational/departmental goals? |
| Level 5 – ultimate value | Has the workshop impacted on profit/customer service/public perception? |

Source: Based on data from Hamblin (1974)

Evaluation examples

There are a number of ways that you can collect information to evaluate your workshop. For this book we will be focusing on evaluating the workshop on the day – level 1, reaction – so that you can design a questionnaire that works for you. Ideally line managers should be involved in the whole process but, in my experience, this rarely happens. We must presume that they don’t want to be or that the organisation does not think it is necessary.

The forms can vary depending on the organisation and its culture and the type of workshop you have run. If you do get a say in the design of an evaluation form, always attempt to tailor it specifically to the subject. Generic forms are used by organisations for consistency in collating the data, but sometimes you cannot measure like with like as the subjects are so different.

Some forms allow you to circle a few words about how you felt about the workshop (see example overleaf).

This is acceptable as long as it is followed up with questions unpacking what they meant by their selection. Too often, participants circle a word and there is no follow-up question. (Refer to the open and closed questioning skills in Chapter 1.)

There are many forms with an opening question of ‘What were your personal objectives before attending the workshop?’ This will not work unless the organisation has a clear learning strategy and each individual has sat down with their line manager to have this discussion. This rarely happens, which makes the question redundant, yet organisations still ask it.

Some styles prefer the participant to have free rein to write just how they think the workshop benefited them. The example below is a simple four-quadrant model on an A4 sheet.

| | |
|---|---|
| What I have learnt today | How it applies to my role |
| What I am going to do differently | Follow-up action |

The design of your questionnaire can be a mix of open and closed questions, usually with some rating scale questions.

Examples of rating scale questions

How useful was the information received today? (1 is high, 5 is low)

What is the problem with this question and style?

Too often, the rating headings are not defined. For example, if the rating headings are ‘excellent’, ‘good’, ‘satisfactory’ and ‘poor’, it is going to be open to interpretation as a ‘good’ for one person may be a ‘satisfactory’ for another. Defining the levels is important.

It is the same when there is just a number – usually stated as, ‘If 1 is high and 5 is poor, complete the following assessment.’ It is a very personal scoring system and more clarity should be given, as everyone has different standards.

When asking how useful was the information received, there is no clarity around the words ‘useful’ and ‘information’. Are people measuring the same thing? Probably not.

At level 1, we are really seeing the reaction to the workshop and what people have learnt. We tend to capture feedback on the admin processes, the lunch, the room, the facilitator, the handouts, etc. For example, what has just happened? How was the experience for you?

Three styles that I particularly like are as follows. They have been used alongside other evaluation forms but they work for me – and, more importantly, the participants and the organisation.

Stop/start/continue

This is very simple. Each participant is given an A4 sheet at the beginning of the day with three sections split horizontally. They complete it as the workshop progresses.

They make notes after each session as to what they have learnt by completing the sheet.

Pre- and post-workshop

The second form that is very effective for measuring learning gaps is one that is given out at the start. They complete one section before the workshop commences and then complete another at the end, using their own rating scale.

The following example is taken from a ‘Managing Change’ workshop:

- Complete column A before the workshop commences.

- Complete columns B and C after the workshop finishes.

- Add up column totals and compare pre- and post-workshop ability.

This can show a learning shift for a participant. It can also show how effective the workshop has been in helping others to attain the skills required to implement – in this case – the upcoming changes.
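The arithmetic behind the column totals can be sketched as follows. The form itself is not reproduced here, so several things in this Python sketch are assumptions: the three statements are hypothetical, the ratings are invented, a higher number is taken to mean greater ability, and column C is treated as the participant's post-workshop rating to be compared with the pre-workshop rating in column A.

```python
# Each row: (statement, column A, column B, column C).
# Statements and ratings are hypothetical, for illustration only.
responses = [
    ("Explaining the reasons for a change", 3, 2, 6),
    ("Handling resistance from the team", 4, 4, 7),
    ("Planning the steps of a change", 2, 3, 5),
]


def column_totals(rows):
    """Add up each of the three columns across all statements."""
    totals = {"A": 0, "B": 0, "C": 0}
    for _statement, a, b, c in rows:
        totals["A"] += a
        totals["B"] += b
        totals["C"] += c
    return totals


totals = column_totals(responses)
# Assumed comparison: post-workshop total (C) minus pre-workshop total (A).
shift = totals["C"] - totals["A"]
print(totals, shift)
```

Because participants use their own rating scale, the size of the shift is most meaningful for each individual rather than as a comparison between participants.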

Commitment plan

The last choice is called a ‘commitment plan’ – this idea was formulated in 2004 by W. Leslie Rae and full details of the plan can be seen at www.businessballs.com/trainingevaluationtools.pdf. At the end of a workshop a commitment plan should be completed based on what has been learnt or what participants have been reminded of. When learning is applied on their return to work, the new skills and knowledge develop, reinforcing new abilities, and the organisation benefits from improved performance. Learning without meaningful follow-up and application is largely forgotten and wasted. The plan is in two parts.

Firstly, participants complete three to five key areas of learning from the day. An example is shown in the table below.

| | Item | How to implement | When I will implement |
|---|---|---|---|
| 1 | Be more assertive in managing the team | Make decisions and stick with them | In the next month |
| 2 | Improve my communication skills | Get feedback from other managers | Next week |
| 3 | To listen more effectively | Get feedback when I talk over people | Commencing immediately |

The second part of the plan is to take each one individually and then to build an in-depth approach to achieving your commitments. Obviously this takes time, so get participants to complete at least one action point at the workshop and then complete the others with their line manager back in the workplace.

Questions that need to be answered in the second part are given in full at www.businessballs.com/trainingevaluationtools.pdf (page 7).

Whatever the reasons you and/or the organisation may have for evaluation, make sure that you are measuring the right things. You need to know the purpose of the training, its context and the objectives before any evaluation methodology can begin. Ask why the evaluation is taking place. What will it measure? How will it be measured? What are you going to do with the results?

Convincing participants

All too rarely do we make the participants understand the importance of evaluation and their role in helping the organisation meet its challenges of the future. By having a short session at the outset on evaluation and its importance, you have more chance of getting buy-in. However, it is challenging to get a positive message across when the culture is seen as one that does nothing with the evaluation once collated. It is just a process to them.

Few people see the results of the evaluation outside HR or L&D departments. The participants have completed the forms and yet they will be the last to know – or they will never know!

With advancement in technology accelerating at increasing speed, many evaluations are completed online. Participants have an email waiting for them after the workshop and they have to complete it within a certain timeframe. The data can be collated and put into a report within seconds and the organisation can evaluate the success of the workshop.

Once the evaluation data is collated, as well as sending it to HR or another department in the organisation, send the report to the participants who completed it in the first place. It is a courtesy to do so and elevates the priority an organisation gives to evaluation.

- Identify whether it is beneficial to evaluate the workshop.

- If so, know what it is you are measuring and why, and at what level.

- Evaluation begins when the workshop starts.

- Use a mix of open/closed questions and rating scales.

- Always define what each point on a rating scale means.

- Share the evaluation results with the participants.