Chapter 4

Communication and Network Security

This chapter covers the following topics:

Secure Network Design Principles: Concepts covered include the OSI and TCP/IP models.

IP Networking: Concepts discussed include common TCP/UDP ports, logical and physical addressing, network transmission, and network types.

Protocols and Services: Protocols and services discussed include ARP, DHCP, DNS, FTP, HTTP, ICMP, IMAP, LDAP, NAT, NetBIOS, NFS, PAT, POP, CIFS/SMB, SMTP, SNMP, and multilayer protocols.

Converged Protocols: Protocols discussed include FCoE, MPLS, VoIP, and iSCSI.

Wireless Networks: Concepts covered include wireless techniques, WLAN structure, WLAN standards, and WLAN security.

Communications Cryptography: Concepts discussed include link encryption, end-to-end encryption, email security, and Internet security.

Secure Network Components: Components discussed include operation of hardware, transmission media, network access control devices, endpoint security, and content-distribution networks.

Secure Communication Channels: Topics discussed include voice, multimedia collaboration, remote access, data communications, and virtualized networks.

Network Attacks: Concepts discussed include cabling attacks, network component attacks, ICMP attacks, DNS attacks, email attacks, wireless attacks, remote attacks, and other attacks.

Sensitive data must be protected from unauthorized access when the data is at rest (on a hard drive) and in transit (moving through a network). Moreover, sensitive communications of other types such as emails, instant messages, and phone conversations must also be protected from prying eyes and ears. Many communication processes send information in a form that can be read and understood if captured with a protocol analyzer or sniffer.

The Communication and Network Security domain addresses a broad array of topics including network architecture, components, and secure communication channels. This domain carries an average weight of 14% of the exam, which is the second-highest weight of the eight domains. So, pay close attention to the many details in this chapter!

In the world of communication today, you should assume that your communications are being captured regardless of how unlikely you think that might be. You should also take steps to protect or encrypt the transmissions so they will be useless to anyone capturing them. This chapter covers the protection of wired and wireless transmissions and of the network devices that perform the transmissions, as well as some networking fundamentals required to understand transmission security.

Foundation Topics

Secure Network Design Principles

To properly configure communication and network security, security professionals must understand secure network design principles. They need to know how to ensure that a network is set up properly and will need minimal reconfiguration in the future. To use secure network design principles, security professionals must understand the OSI and TCP/IP models.

OSI Model

A complete understanding of networking requires an understanding of the Open Systems Interconnection (OSI) model. Created in the 1980s by the International Organization for Standardization (ISO) as a part of its mission to create a protocol set to be used as a standard for all vendors, the OSI model breaks the communication process into layers. Although the ensuing protocol set did not catch on as a standard (Transmission Control Protocol/Internet Protocol [TCP/IP] was adopted), the model has guided the development of technology since its creation. It also has helped generations of students understand the network communication process between two systems.

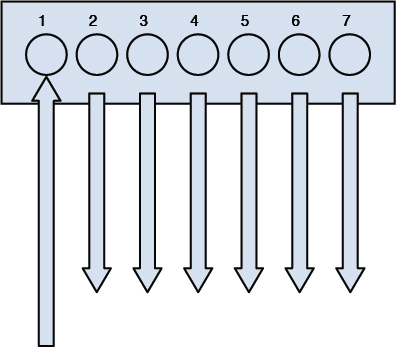

The OSI model breaks up the process into seven layers, or modules. The benefits of doing this are

It breaks up the communication process into layers with standardized interfaces between the layers, allowing for changes and improvements on one layer without necessitating changes on other layers.

It provides a common framework for hardware and software developers, fostering interoperability.

This open systems architecture is owned by no vendor, and it acts as a blueprint or model for developers to work with. Various protocols operate at different layers of this model. A protocol is a set of communication rules two systems must both use and understand to communicate. Some protocols depend on other protocols for services, and as such, these protocols work as a team to get transmissions done, much like the team at the post office that gets your letters delivered. Some people sort, others deliver, and still others track lost shipments.

The OSI model and the TCP/IP model, explained in the next section, are often both used to describe the process called packet creation, or encapsulation. Until a packet is created to hold the data, it cannot be sent on the transmission medium.

With a modular approach, it is possible for a change in a protocol or the addition of a new protocol to be accomplished without having to rewrite the entire protocol stack (a term for all the protocols that work together at all layers). The model has seven layers. This section discusses each layer’s function and its relationship to the layer above and below it in the model. The layers are often referred to by their number with the numbering starting at the bottom of the model at Layer 1, the Physical layer.

The process of creating a packet, or encapsulation, begins at Layer 7, the Application layer, rather than at Layer 1, so we discuss the process starting at Layer 7 and work down the model to Layer 1, the Physical layer, where the packet is sent out on the transmission medium.

Application Layer

The Application layer (Layer 7) is where the encapsulation process begins. This layer receives the raw data from the application in use and provides services, such as file transfer and message exchange, to the application (and thus the user). An example of a protocol that operates at this layer is Hypertext Transfer Protocol (HTTP), which is used to transfer web pages across the network. Other examples of protocols that operate at this layer are DNS (name queries), FTP (file transfers), and SMTP (email transfers). The Dynamic Host Configuration Protocol (DHCP) and DHCP for IPv6 (DHCPv6) also operate at this layer.

The user application interfaces with these application protocols through a standard interface called an application programming interface (API). The Application layer protocol receives the raw data and places it in a container called a protocol data unit (PDU). When the process gets down to Layer 4, these PDUs have standard names, but at Layers 5–7 we simply refer to the PDU as “data.”

Presentation Layer

The information that is developed at Layer 7 is then handed to Layer 6, the Presentation layer. Each layer makes no changes to the data received from the layer above it. It simply adds information to the developing packet. In the case of the Presentation layer, information is added that standardizes the formatting of the information if required.

Layer 6 is responsible for the manner in which the data from the Application layer is represented (or presented) to the Application layer on the destination device (explained more fully in the section “Encapsulation and De-encapsulation”). If any translation between formats is required, it will take care of it. It also communicates the type of data within the packet and the application that might be required to read it on the destination device.

This layer consists of two sublayers: the common application service element (CASE) sublayer and the specific application service element (SASE) sublayer. CASE provides services to the Application layer and requests services from the Session layer. SASE supports application-specific services.

Session Layer

The Session layer, or Layer 5, is responsible for adding information to the packet that makes a communication session between a service or application on the source device possible with the same service or application on the destination device. Do not confuse this process with the one that establishes a session between the two physical devices. That occurs not at this layer but at Layers 3 and 4. This session is built and closed after the physical session between the computers has taken place.

The application or service in use is communicated between the two systems with an identifier called a port number. This information is passed on to the Transport layer, which also makes use of these port numbers.

Transport Layer

The protocols that operate at the Transport layer (Layer 4) work to establish a session between the two physical systems. The service provided can be either connection-oriented or connectionless, depending on the transport protocol in use. The “TCP/IP Model” section (TCP/IP being the most common standard networking protocol suite in use) discusses the specific transport protocols used by TCP/IP in detail.

The Transport layer receives all the information from Layers 7, 6, and 5 and adds information that identifies the transport protocol in use and the specific port number that identifies the required Layer 7 protocol. At this layer, the PDU is called a segment because this layer takes a large transmission and segments it into smaller pieces for more efficient transmission on the medium.

Network Layer

At Layer 3, or the Network layer, information required to route the packet is added. This is in the form of a source and destination logical address (meaning one that is assigned to a device in some manner and can be changed). In TCP/IP, this is in terms of a source and destination IP address. An IP address is a number that uniquely differentiates a host from all other devices on the network. It is based on a numbering system that makes it possible for computers (and routers) to identify whether the destination device is on the local network or on a remote network. Any time a packet needs to be sent to a different network or subnet (IP addressing is covered later in the chapter), it must be routed and the information required to do that is added here. At this layer, the PDU is called a packet.

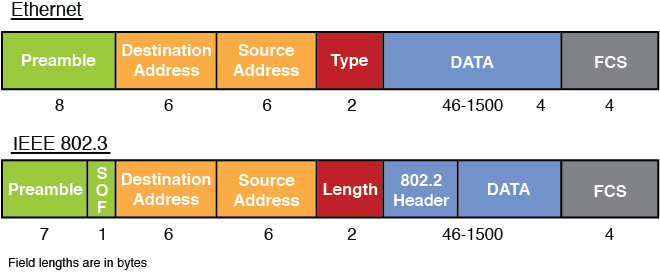

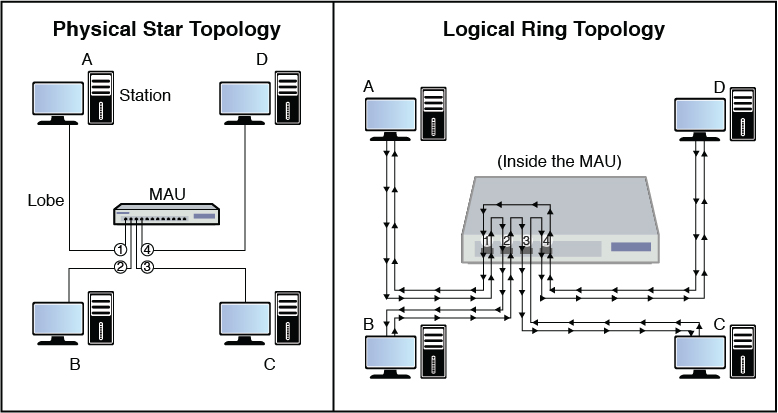

Data Link Layer

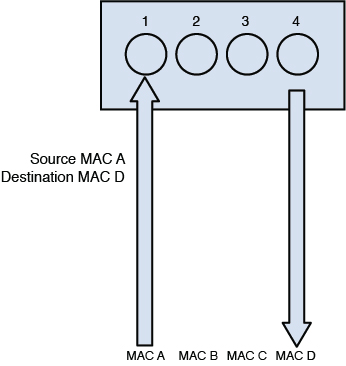

The Data Link layer, or Layer 2, is responsible for determining the destination physical address. Network devices have logical addresses (IP addresses) and the network interfaces they possess have a physical address (a media access control [MAC] address), which is permanent in nature. When the transmission is handed off from routing device to routing device, at each stop this source and destination address pair changes, whereas the source and destination logical addresses (in most cases IP addresses) do not. This layer is responsible for determining what those MAC addresses should be at each hop (router interface) and adding them to this part of the packet. The later section “TCP/IP Model” covers how this resolution is performed in TCP/IP. After this is done, we call the PDU a frame.

In some networks, the Data Link layer is discussed as including the media access control (MAC) and logical link control (LLC) sublayers. In the Data Link layer, the IEEE 802.2 LLC protocol can be used with all of the IEEE 802 MAC layers.

Something else happens that is unique to this layer. Not only is a Layer 2 header placed on the packet but also a trailer at the “end” of the frame. Information contained in the trailer is used to verify that none of the data contained has been altered or damaged en route.

Physical Layer

Finally, the packet (or frame, as it is called at Layer 2) is received by the Physical layer (Layer 1). Layer 1 is responsible for turning the information into bits (ones and zeros) and sending it out on the medium. The way in which this is accomplished can vary according to the media in use. For example, in a wired network, the ones and zeros are represented as electrical charges. In wireless, they are represented by altering the radio waves. In an optical network, they are represented with light.

The ability of the same packet to be routed through various media types is a good example of the independence of the layers. As a PDU travels through different media types, the physical layer will change but all the information in Layers 2–7 will not. Similarly, when a frame crosses routers or hops, the MAC addresses change but none of the information in Layers 3–7 changes. The upper layers depend on the lower layers for various services, but the lower layers leave the upper layer information unchanged.

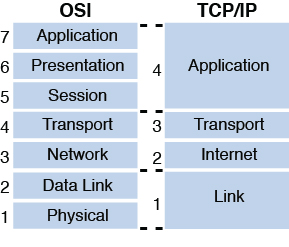

Figure 4-1 shows common protocols mapped to the OSI model. The next section covers another model that perhaps more accurately depicts what happens in a TCP/IP network. Because TCP/IP is the standard now for transmission, comparing these two models is useful. Although they have a different number of layers and some of the layer names are different, they describe the same process of packet creation or encapsulation.

TCP/IP Model

The protocols developed when the OSI model was developed (sometimes referred to as OSI protocols) did not become the standard for the Internet. The Internet as we know it today has its roots in a wide area network (WAN) developed by the Department of Defense (DoD), with TCP/IP being the protocol developed for that network. The Internet is a global network of public networks and Internet service providers (ISPs) throughout the world.

This model bears many similarities to the OSI model, which is not unexpected because they both describe the process of packet creation or encapsulation. The difference is that the OSI model breaks the process into seven layers, whereas the TCP/IP model breaks it into four. If you examine them side by side, however, it becomes apparent that many of the same functions occur at the same layers, while the TCP/IP model combines the top three layers of the OSI model into one and the bottom two layers of the OSI model into one. Figure 4-2 shows the two models next to one another.

The TCP/IP model has only four layers and is useful to study because it focuses its attention on TCP/IP. This section explores those four layers and their functions and relationships to one another and to layers in the OSI model.

Application Layer

Although the Application layer in the TCP/IP model has the same name as the top layer in the OSI model, the Application layer in the TCP/IP model encompasses all the functions performed in Layers 5–7 in the OSI model. Not all functions map perfectly because both are simply conceptual models. Within the Application layer, applications create user data and communicate this data to other processes or applications on another host. For this reason, it is sometimes also referred to as the process-to-process layer.

Examples of protocols that operate at this layer are SMTP, FTP, SSH, and HTTP. These protocols are discussed in the section “Protocols and Services,” later in this chapter. In general, however, these are usually referred to as higher layer protocols that perform some specific function, whereas protocols in the TCP/IP suite that operate at the Transport and Internet layers perform location and delivery service on behalf of these higher layer protocols.

A port number identifies to the receiving device these upper layer protocols and the programs on whose behalf they function. The number identifies the protocol or service. Many port numbers have been standardized. For example, Domain Name System (DNS) is identified with the standard port number 53. The “Common TCP/UDP Ports” section covers these port numbers in more detail.

Transport Layer

The Transport layers of the OSI model and the TCP/IP model perform the same function, which is to open and maintain a connection between hosts. This must occur before the session between the processes, described in the Application layer section, can occur. In TCP/IP it can be done in two ways: connectionless and connection-oriented. A connection-oriented transmission means that a connection will be established before any data is transferred, whereas in a connectionless transmission this is not done. One of two different Transport layer protocols is used for each process. If a connection-oriented transport protocol is required, Transmission Control Protocol (TCP) is used. If the process will be connectionless, User Datagram Protocol (UDP) is used.

Application developers can choose to use either TCP or UDP as the Transport layer protocol used with the application. Regardless of which transport protocol is used, the application or service will be identified to the receiving device by its port number and the transport protocol (UDP or TCP).

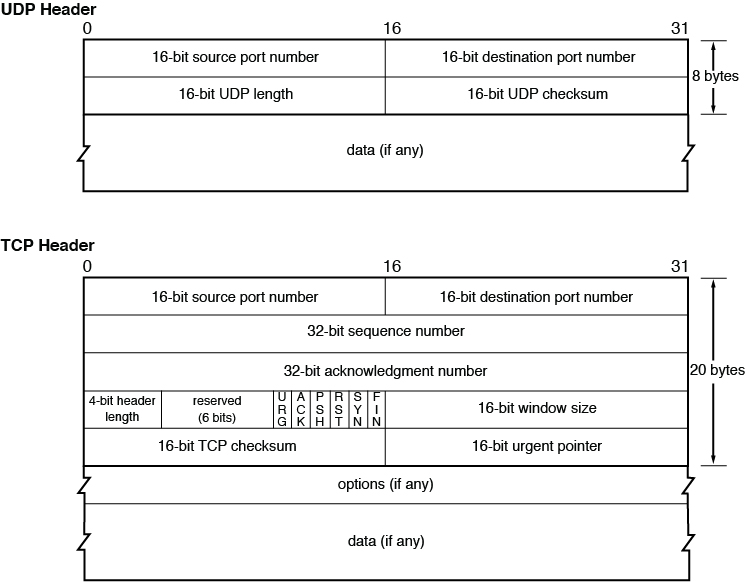

Although TCP provides more functionality and reliability, the overhead required by this protocol is substantial when compared to UDP. This means that a much higher percentage of the packet consists of the header when using TCP than when using UDP. This is necessary to provide the fields required to hold the information needed to provide the additional services. Figure 4-3 shows a comparison of the sizes of the two respective headers.

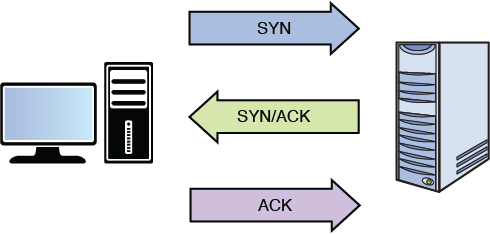
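To make the overhead comparison concrete, the following minimal sketch computes the share of a transport PDU that is taken up by the header. It assumes the minimum header sizes (20 bytes for TCP without options and 8 bytes for UDP); the function name and the 100-byte payload are hypothetical and chosen only for illustration.

```python
# Minimum transport header sizes in bytes (TCP without options).
TCP_HEADER_BYTES = 20
UDP_HEADER_BYTES = 8


def header_overhead(header_bytes: int, payload_bytes: int) -> float:
    """Return the fraction of the PDU that is occupied by the header."""
    return header_bytes / (header_bytes + payload_bytes)


# Hypothetical 100-byte payload, used only to compare the two protocols.
payload = 100
tcp_share = header_overhead(TCP_HEADER_BYTES, payload)
udp_share = header_overhead(UDP_HEADER_BYTES, payload)
print(f"TCP header share: {tcp_share:.1%}")
print(f"UDP header share: {udp_share:.1%}")
```

For any payload size, the TCP share is the larger of the two, and the gap is most noticeable when payloads are small.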

When an application is written to use TCP, a state of connection is established between the two hosts before any data is transferred. This occurs using a process known as the TCP three-way handshake. This process is followed exactly, and no data is transferred until it is complete. Figure 4-4 shows the steps in this process. The steps are as follows:

The initiating computer sends a packet with the SYN flag set (one of the fields in the TCP header), which indicates a desire to create a connection.

The receiving host acknowledges receiving this packet and indicates a willingness to create a state of connection by sending back a packet with both the SYN and ACK flags set.

The first host acknowledges completion of the connection process by sending a final packet back with only the ACK flag set.

Figure 4-4 TCP Three-Way Handshake
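The three steps can be sketched as a short simulation. This is a toy model rather than a real TCP implementation: the `Segment` class, the function name, and the host names are hypothetical, and only the flags described in the steps are represented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A toy TCP segment that records only the sender and its flags."""
    sender: str
    flags: frozenset


def three_way_handshake(client: str, server: str) -> list:
    """Return the three segments exchanged during the handshake, in order."""
    return [
        Segment(client, frozenset({"SYN"})),         # step 1: request a connection
        Segment(server, frozenset({"SYN", "ACK"})),  # step 2: acknowledge and agree
        Segment(client, frozenset({"ACK"})),         # step 3: confirm completion
    ]


for step, seg in enumerate(three_way_handshake("host-a", "host-b"), start=1):
    print(step, seg.sender, sorted(seg.flags))
```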

So what exactly is gained by using the extra overhead to use TCP? The following are examples of the functionality provided with TCP:

Guaranteed delivery: If the receiving host does not specifically acknowledge receipt of each packet, the sending system will resend the packet.

Sequencing: In today’s routed networks, the packets might take many different routes to arrive and might not arrive in the order in which they were sent. A sequence number added to each packet allows the receiving host to reassemble the entire transmission using these numbers.

Flow control: The receiving host has the capability of sending the acknowledgement packets back to signal the sender to slow the transmission if it cannot process the packets as fast as they are arriving.
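As an illustration of sequencing, the sketch below reassembles a transmission whose pieces arrive out of order. It is a simplification: real TCP sequence numbers count bytes rather than whole segments, and the function name and sample data are hypothetical.

```python
def reassemble(segments):
    """Rebuild the byte stream from (sequence_number, data) pairs.

    Segments may arrive in any order. A duplicate sequence number
    (for example, from a retransmission) is ignored.
    """
    unique = {}
    for seq, data in segments:
        unique.setdefault(seq, data)  # keep the first copy of each segment
    return b"".join(unique[seq] for seq in sorted(unique))


# Segments arriving out of order, with segment 2 received twice.
arrived = [(2, b"lo, "), (1, b"hel"), (3, b"world"), (2, b"lo, ")]
message = reassemble(arrived)
print(message)
```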

Many applications do not require the services provided by TCP or cannot tolerate the overhead required by TCP. In these cases the process will use UDP, which sends on a “best effort” basis with no guarantee of delivery. In many cases some of these functions are provided by the Application layer protocol itself rather than relying on the Transport layer protocol.

Internet Layer

The Transport layer can neither create a state of connection nor send using UDP until the location and route to the destination are determined, which occurs on the Internet layer. The four protocols in the TCP/IP suite that operate at this layer are

Internet Protocol (IP): Responsible for putting the source and destination IP addresses in the packet and for routing the packet to its destination.

Internet Control Message Protocol (ICMP): Used by the network devices to send messages regarding the success or failure of communications and used by humans for troubleshooting. When you use the ping or traceroute/tracert commands, you are using ICMP.

Internet Group Management Protocol (IGMP): Used when multicasting, which is a form of communication whereby one host sends to a group of destination hosts rather than a single host (called a unicast transmission) or to all hosts (called a broadcast transmission). There are three versions of IGMP. Version 2 adds two query types: general query and group-specific query. Version 3 adds membership query.

Address Resolution Protocol (ARP): Resolves the IP address placed in the packet to a physical address (called a MAC address in Ethernet).

The relationship between IP and ARP is worthy of more discussion. IP places the source and destination IP addresses in the header of the packet. As we saw earlier, when a packet is being routed across a network, the source and destination IP addresses never change but the Layer 2 or MAC address pairs change at every router hop. ARP uses a process called the ARP broadcast to learn the MAC address of the interface that matches the IP address of the next hop. After it has done this, a new Layer 2 header is created. Again, nothing else in the upper layer changes in this process, just Layer 2.
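The hop-by-hop behavior described above can be sketched as follows. This is a toy model under stated assumptions: the MAC addresses are made up, the IP addresses come from ranges reserved for documentation, the function name is hypothetical, and details such as ARP lookups and per-hop changes to the IP header's time-to-live field are omitted.

```python
def forward(packet, path):
    """Yield the frame sent on each link of the path.

    packet: dict with 'src_ip', 'dst_ip', and 'payload'.
    path:   list of (sending_mac, receiving_mac) pairs, one per link.
    """
    for src_mac, dst_mac in path:
        # A new Layer 2 header is built for every link. The Layer 3
        # packet carried inside the frame is left unchanged.
        yield {"src_mac": src_mac, "dst_mac": dst_mac, "packet": packet}


packet = {"src_ip": "192.0.2.10", "dst_ip": "198.51.100.20", "payload": "hi"}
path = [
    ("aa:aa:aa:aa:aa:01", "bb:bb:bb:bb:bb:01"),  # source host to first router
    ("bb:bb:bb:bb:bb:02", "cc:cc:cc:cc:cc:01"),  # first router to second router
    ("cc:cc:cc:cc:cc:02", "dd:dd:dd:dd:dd:01"),  # second router to destination
]
frames = list(forward(packet, path))
for frame in frames:
    print(frame["src_mac"], "->", frame["dst_mac"], frame["packet"]["dst_ip"])
```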

That brings up a good point concerning the mapping of ARP to the TCP/IP model. Although we generally place ARP on the Internet layer, the information it derives from this process is placed in the Link layer or Layer 2, the next layer in our discussion.

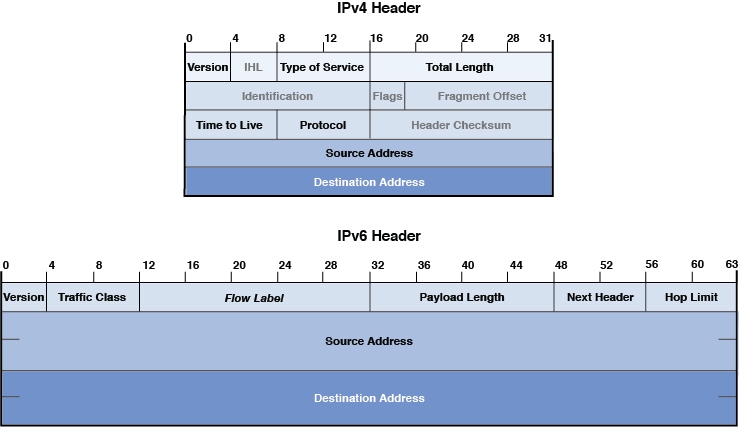

Just as the Transport layer added a header to the packet, so does the Internet layer. One of the improvements made by IPv6 is the streamlining of the IP header. Although the same information is contained in the header and the header is larger, it has a much simpler structure. Figure 4-5 shows a comparison of the two.

Link Layer

The Link layer, also called the Network Access layer, of the TCP/IP model provides the services provided by both the Data Link and the Physical layers in the OSI model. The source and destination MAC addresses are placed in this layer’s header. A trailer is also placed on the packet at this layer with information in the trailer that can be used to verify the integrity of the data.

This layer is also concerned with placing the bits on the medium, as discussed in the section “OSI Model,” earlier in this chapter. Again, the exact method of implementation varies with the physical transmission medium. It might be in terms of electrical impulses, light waves, or radio waves.

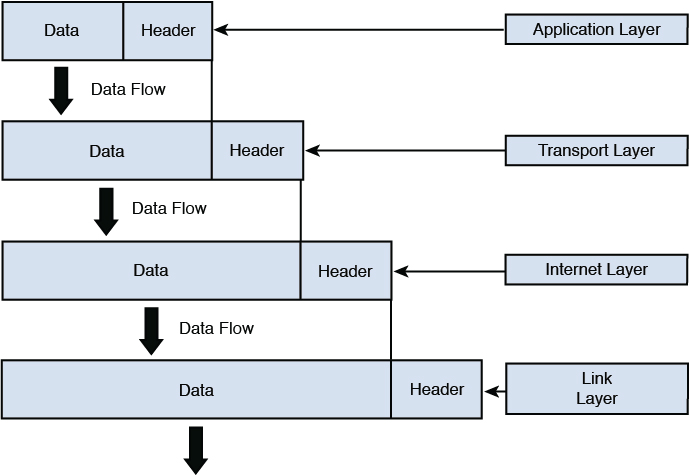

Encapsulation and De-encapsulation

In either model, as the packet is created, information is added in a header at each layer, and a trailer is placed on the packet before transmission. This process is called encapsulation. Intermediate devices, such as routers and switches, read only the layers of concern to that device (Layer 2 for a switch and Layer 3 for a router). The ultimate receiver strips off the headers layer by layer, making use of the information placed in each header by the corresponding layer on the sending device. This process is called de-encapsulation. Figure 4-6 shows a visual representation of encapsulation.
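A minimal sketch of the two processes follows. It assumes placeholder byte strings in place of real headers and a real frame check sequence; the constant and function names are hypothetical.

```python
# Placeholder markers that stand in for real protocol headers and the trailer.
L2, L3, L4, TRAILER = b"[L2]", b"[L3]", b"[L4]", b"[FCS]"


def encapsulate(data: bytes) -> bytes:
    """Wrap application data in toy Layer 4, 3, and 2 headers plus a trailer."""
    segment = L4 + data            # Transport layer header -> segment
    packet = L3 + segment          # Network layer header -> packet
    return L2 + packet + TRAILER   # Data Link header and trailer -> frame


def de_encapsulate(frame: bytes) -> bytes:
    """Strip the trailer and headers in the reverse order they were added."""
    if not (frame.startswith(L2) and frame.endswith(TRAILER)):
        raise ValueError("not a valid toy frame")
    packet = frame[len(L2):-len(TRAILER)]
    if not packet.startswith(L3):
        raise ValueError("missing Layer 3 header")
    segment = packet[len(L3):]
    if not segment.startswith(L4):
        raise ValueError("missing Layer 4 header")
    return segment[len(L4):]


frame = encapsulate(b"GET /")
print(frame)
print(de_encapsulate(frame))
```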

IP Networking

Now that you understand secure design principles and the OSI and TCP/IP models, it is time to delve more deeply into IP networking. The Internet Protocol (IP) is the main communications protocol in the TCP/IP suite and is responsible for relaying datagrams across network boundaries. This section covers common TCP/UDP ports, logical and physical addressing, network transmission, and network types.

Common TCP/UDP Ports

When the Transport layer learns from the Application layer the port number of the service or application required on the destination device, it records that number in the header as either a TCP or UDP port number. Both UDP and TCP use 16 bits in the header to identify these ports. These port numbers are software based or logical, and there are 65,536 possible numbers (0 through 65,535). Port numbers are assigned in various ways, based on three ranges:

System, or well-known, ports (0–1023)

User ports (1024–49151)

Dynamic and/or private ports (49152–65535)

System ports are assigned by the Internet Engineering Task Force (IETF) for standards-track protocols, as per RFC 6335. User ports can be registered with the Internet Assigned Numbers Authority (IANA) and assigned to the service or application using the “Expert Review” process, as per RFC 6335. Dynamic ports are used by source devices as source ports when accessing a service or application on another machine. For example, if computer A is sending an FTP packet, the destination port will be the well-known port for FTP and the source will be selected by the computer randomly from the dynamic range.
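The three ranges can be expressed as a short classifier. This is an illustrative sketch; the function name and the returned labels are hypothetical.

```python
def port_range(port: int) -> str:
    """Classify a port number into one of the three ranges."""
    if not 0 <= port <= 65535:
        raise ValueError("port numbers fit in 16 bits: 0 through 65535")
    if port <= 1023:
        return "system"
    if port <= 49151:
        return "user"
    return "dynamic"


# 21 is the FTP control port; 50000 is an arbitrary example source port.
for port in (21, 8080, 50000):
    print(port, port_range(port))
```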

The combination of the destination IP address and the destination port number is called a socket. The relationship between these two values can be understood if viewed through the analogy of an office address. The office has a street address, but the address also must contain a suite number as there could be thousands (in this case 65,536) of suites in the building. Both are required to get the information where it should go.

As a security professional, you should be aware of well-known port numbers of common services. In many instances, firewall rules and access control lists (ACLs) are written or configured in terms of the port number of what is being allowed or denied rather than the name of the service or application. Table 4-1 lists some of the more important port numbers. Some use more than one port.

Table 4-1 Common TCP/UDP Port Numbers

| Application Protocol | Transport Protocol | Port Number |
|---|---|---|
| Telnet | TCP | 23 |
| SMTP | TCP | 25 |
| HTTP | TCP | 80 |
| SNMP | TCP and UDP | 161 and 162 |
| FTP | TCP and UDP | 20 and 21 |
| FTPS | TCP | 989 and 990 |
| SFTP | TCP | 22 |
| TFTP | UDP | 69 |
| POP3 | TCP and UDP | 110 |
| DNS | TCP and UDP | 53 |
| DHCP | UDP | 67 and 68 |
| SSH | TCP | 22 |
| LDAP | TCP and UDP | 389 |
| NetBIOS | TCP and UDP | 137 (UDP), 138 (UDP), and 139 (TCP) |
| CIFS/SMB | TCP | 445 |
| NFSv4 | TCP | 2049 |
| SIP | TCP and UDP | 5060 |
| XMPP | TCP | 5222 |
| IRC | TCP and UDP | 194 |
| RADIUS | TCP and UDP | 1812 and 1813 |
| rlogin | TCP | 513 |
| rsh and RCP | TCP | 514 |
| IMAP | TCP | 143 |
| HTTPS | TCP and UDP | 443 |
| RDP | TCP and UDP | 3389 |
| AFP over TCP | TCP | 548 |

Logical and Physical Addressing

During the process of encapsulation at Layer 3 of the OSI model, IP places source and destination IP addresses in the packet. Then at Layer 2, the matching source and destination MAC addresses that have been determined by ARP are placed in the packet. IP addresses are examples of logical addressing, and MAC addresses are examples of physical addressing. IP addresses are considered logical because these addresses are administered by humans and can be changed at any time. MAC addresses on the other hand are assigned permanently to the interface cards of the devices when the interfaces are manufactured. It is important to note, however, that although these addresses are permanent, they can be spoofed. When this is done, however, the hacker is not actually changing the physical address but rather telling the interface to place a different MAC address in the Layer 2 headers.

This section discusses both address types with a particular focus on how IP addresses are used to create separate networks or subnets in the larger network. It also discusses how IP addresses and MAC addresses are related and used during a network transmission.

IPv4

IPv4 addresses are 32 bits in length and can be represented in either binary or in dotted-decimal format. The number of possible IP addresses using 32 bits can be calculated by raising the number 2 (the number of possible values in the binary number system) to the 32nd power. The result is 4,294,967,296, which on the surface appears to be enough IP addresses. But with the explosion of the Internet and the increasing number of devices that require an IP address, this number has proven to be insufficient.

Due to the eventual exhaustion of the IPv4 address space, several methods of preserving public IP addresses (more on that in a bit, but for now these are addresses that are legal to use on the Internet) have been implemented, including the use of private addresses and network address translation (NAT), both discussed in the following sections. The ultimate solution lies in the adoption of IPv6, a newer system that uses 128 bits and allows for enough IP addresses for each man, woman, and child on the planet to have as many IP addresses as the entire IPv4 numbering space. IPv6 is discussed later in this section.

IP addresses that are written in dotted-decimal format, the format in which humans usually work with them, have four fields called octets separated by dots or periods. Each field is called an octet because when we look at the addresses in binary format, we devote 8 bits in binary to represent each decimal number that appears in the octet when viewed in dotted-decimal format. Therefore, if we look at the address 216.5.41.3, four decimal numbers are separated by dots, where each would be represented by 8 bits if viewed in binary. The following is the binary version of this same address:

11011000.00000101.00101001.00000011

There are 32 bits in the address, 8 in each octet.
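The conversion from dotted-decimal to binary can be checked with a few lines of code. This is a small sketch; the function name is hypothetical.

```python
def to_binary(dotted: str) -> str:
    """Convert a dotted-decimal IPv4 address to dotted-binary notation."""
    octets = [int(part) for part in dotted.split(".")]
    if len(octets) != 4 or any(not 0 <= octet <= 255 for octet in octets):
        raise ValueError("expected four octets, each between 0 and 255")
    # Each octet is written as exactly 8 bits, padded with leading zeros.
    return ".".join(format(octet, "08b") for octet in octets)


binary = to_binary("216.5.41.3")
print(binary)  # 11011000.00000101.00101001.00000011
```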

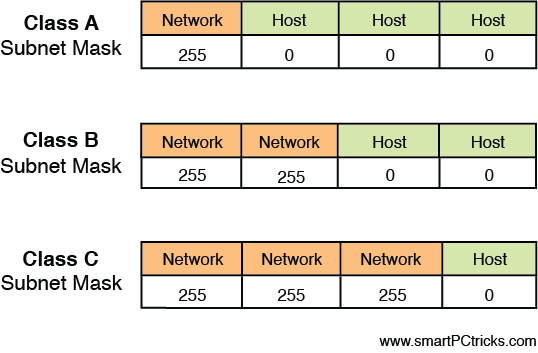

The structure of IPv4 addressing lends itself to dividing the network into subdivisions called subnets. Each IP address also has a required companion value called a subnet mask. The subnet mask is used to specify which part of the address is the network part and which part is the host. The network part, on the left side of the address, determines on which network the device resides, whereas the host portion on the right identifies the device on that network. Figure 4-7 shows the network and host portions of the three default classes of IP address.

When the IPv4 system was first created, there were only three default subnet masks. This yielded only three sizes of networks, which later proved to be inconvenient and wasteful of public IP addresses. Eventually a system called Classless Inter-Domain Routing (CIDR) was adopted. CIDR allows subnet masks other than the three defaults, which makes it possible to divide the major classful networks into smaller subnets. CIDR is beyond the scope of the exam but it is worth knowing about. You can find more information about how CIDR works at https://searchnetworking.techtarget.com/definition/CIDR.

IP Classes

Classful subnetting (pre-CIDR) created five classes of networks. Each class represented a range of IP addresses. Table 4-2 shows the five classes. Only the first three (A, B, and C) are used for individual network devices. The other ranges are for special use.

Table 4-2 Classful IP Addressing

| Class | Range | Mask | Initial Bit Pattern of First Octet | Network/Host Division |
|---|---|---|---|---|
| Class A | 0.0.0.0–127.255.255.255 | 255.0.0.0 | 0 | net.host.host.host |
| Class B | 128.0.0.0–191.255.255.255 | 255.255.0.0 | 10 | net.net.host.host |
| Class C | 192.0.0.0–223.255.255.255 | 255.255.255.0 | 110 | net.net.net.host |
| Class D | 224.0.0.0–239.255.255.255 | Used for multicasting | | |
| Class E | 240.0.0.0–255.255.255.255 | Reserved for research | | |

As you can see, the key value that changes as you move from one class to another is the value of the first octet (the one on the far left). What might not be immediately obvious is that as you move from one class to another, the dividing line between the host portion and network portion also changes. This is where the subnet mask value comes in. When the mask is overlaid with the IP addresses (thus we call it a mask), every octet in the subnet mask where there is a 255 is a network portion and every octet where there is a 0 is a host portion. Another item to mention is that each class has a distinctive pattern in the leading bits of the first octet. For example, any IP address whose first octet begins with a 0 bit must be in Class A, as indicated in Table 4-2.

The significance of the network portion is that two devices must share the same values in the network portion to be in the same network. If they do not, they cannot communicate directly; their traffic must pass through a router.
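The two ideas above (class membership by first octet, and network membership by mask) can be sketched in a few lines of Python. The function names `address_class` and `same_network` are hypothetical helpers written for this illustration; the class boundaries follow the ranges in Table 4-2.

```python
import ipaddress

def address_class(address: str) -> str:
    """Return the classful class (A through E) based on the first octet."""
    first_octet = int(address.split(".")[0])
    if first_octet < 128:
        return "A"
    if first_octet < 192:
        return "B"
    if first_octet < 224:
        return "C"
    if first_octet < 240:
        return "D"
    return "E"

def same_network(a: str, b: str, mask: str) -> bool:
    """True when both addresses share the same network portion under the mask."""
    mask_int = int(ipaddress.IPv4Address(mask))
    net_a = int(ipaddress.IPv4Address(a)) & mask_int  # keep only network bits
    net_b = int(ipaddress.IPv4Address(b)) & mask_int
    return net_a == net_b
```

For example, `same_network("192.168.1.10", "192.168.2.10", "255.255.255.0")` is false because the third octet belongs to the network portion under that mask and differs between the two addresses.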

Public Versus Private IP Addresses

The initial solution used (and still in use) to address the exhaustion of the IPv4 space involved the use of private addresses and NAT. Three ranges of IP addresses were set aside to be used only within private networks and are not routable on the Internet. RFC 1918 set aside the private IP address ranges in Table 4-3 to be used for this purpose. Because these addresses are not routable on the public network, they must be translated to public addresses before being sent to the Internet. This process, called NAT, is discussed in the next section.

Table 4-3 Private IP Address Ranges

| Class | Range |
|---|---|
| Class A | 10.0.0.0–10.255.255.255 |
| Class B | 172.16.0.0–172.31.255.255 |
| Class C | 192.168.0.0–192.168.255.255 |
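A quick way to check whether an address falls in one of the private ranges in Table 4-3 is to test it against the three blocks written in prefix notation. This is a minimal sketch using Python's standard `ipaddress` module; the helper name `is_rfc1918` is an assumption for illustration.

```python
import ipaddress

# The three private blocks from Table 4-3, written in prefix notation.
RFC1918_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),       # 10.0.0.0 to 10.255.255.255
    ipaddress.ip_network("172.16.0.0/12"),    # 172.16.0.0 to 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),   # 192.168.0.0 to 192.168.255.255
]

def is_rfc1918(address: str) -> bool:
    """True when the address belongs to one of the private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in RFC1918_RANGES)
```

Note that 172.32.0.1 is not private even though it looks similar to the Class B private block; the range ends at 172.31.255.255.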

NAT

Network address translation (NAT) is a service that can be supplied by a router or by a server. The device that provides the service stands between the local area network (LAN) and the Internet. When packets need to go to the Internet, the packets go through the NAT service first. The NAT service changes the private IP address to a public address that is routable on the Internet. When the response is returned from the Internet, the NAT service receives it, translates the address back to the original private IP address, and sends it back to the originator.

This translation can be done on a one-to-one basis (one private address to one public address), but to save IP addresses, usually the NAT service will represent the entire private network with a single public IP address. This process is called port address translation (PAT). This name comes from the fact that the NAT service keeps the private clients separate from one another by recording their private address and the source port number (usually a unique number) selected when the packets were built.
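The bookkeeping behind PAT can be sketched as a small translation table. This is a deliberately simplified model, not how any particular router implements it: the class name `PatTable`, the starting port 49152, and the example addresses are all assumptions, and real devices also track the protocol, the destination, and session timeouts.

```python
import itertools

class PatTable:
    """Toy port address translation table for a single public IP address."""

    def __init__(self, public_ip: str, first_port: int = 49152):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self._out = {}    # (private_ip, private_port) -> public_port
        self._back = {}   # public_port -> (private_ip, private_port)

    def outbound(self, private_ip: str, private_port: int):
        """Translate an outgoing flow, reusing an existing mapping if present."""
        key = (private_ip, private_port)
        if key not in self._out:
            public_port = next(self._next_port)
            self._out[key] = public_port
            self._back[public_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port: int):
        """Map a reply arriving on public_port back to the private client."""
        return self._back.get(public_port)
```

Two clients that happen to pick the same source port still receive distinct public ports, which is what lets one public address represent many private hosts.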

Allowing NAT to represent an entire network (perhaps thousands of computers) with a single public address has been quite effective in saving public IP addresses. However, many applications do not function properly through NAT, and thus it has never been seen as a permanent solution to resolving the lack of IP addresses. That solution is IPv6.

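NAT is not inherently compatible with IP Security (IPsec, discussed later in this chapter) because NAT modifies packet headers, which invalidates the integrity checks that IPsec computes over them. Extensions such as NAT Traversal (NAT-T), which encapsulates IPsec traffic in UDP, were designed to allow IPsec to work through NAT.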

Security professionals need to understand stateful NAT, static versus dynamic NAT, and APIPA.

Stateful NAT

Stateful NAT (SNAT) implements two or more NAT devices to work together as a translation group. One member provides network translation of IP address information. The other member uses that information to create duplicate translation table entries. If the primary member that provides network translation fails, the backup member can then become the primary translator. It is called stateful NAT because it maintains a table about the communication sessions between internal and external systems. Figure 4-8 illustrates an example of a SNAT deployment.

Static Versus Dynamic NAT

NAT operates in two modes: static and dynamic. With static NAT, an internal private IP address is mapped to a specific external public IP address. This is a one-to-one mapping. With dynamic NAT, multiple internal private IP addresses are given access to multiple external public IP addresses. This is a many-to-many mapping.

APIPA

Automatic Private IP Addressing (APIPA) assigns an IP address to a device if the device is unable to communicate with the DHCP server and is primarily implemented in Windows. The range of IP addresses assigned is 169.254.0.1 to 169.254.255.254 with a subnet mask of 255.255.0.0.

When a device is configured with an APIPA address, it is only able to communicate with other APIPA-configured devices on the same subnet. It is unable to communicate with non-APIPA devices on the same subnet or with devices on a different subnet. If a technician notices that a device is configured with an APIPA address, a communication problem exists between the device and the DHCP server. The cause could range from a bad network interface card or controller (NIC) or cable to a failure of the router or the DHCP server.
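A troubleshooting script could flag APIPA addresses automatically. The following is a minimal sketch, assuming the address has already been read from the interface by some other means; `looks_like_apipa` is a hypothetical helper name.

```python
import ipaddress

# The APIPA block: 169.254.0.0 with a mask of 255.255.0.0.
APIPA_NETWORK = ipaddress.ip_network("169.254.0.0/16")

def looks_like_apipa(address: str) -> bool:
    """True when the address falls inside the APIPA block."""
    return ipaddress.ip_address(address) in APIPA_NETWORK
```

A true result suggests the device never received a lease, so the next step is to check the NIC, the cabling, and the path to the DHCP server.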

MAC Addressing

All the discussion about addressing thus far has been addressing that is applied at Layer 3, which is IP addressing. At Layer 2, physical addresses reside. In Ethernet, these are called MAC addresses. They are called physical addresses because these 48-bit addresses expressed in hexadecimal are permanently assigned to the network interfaces of devices. Here is an example of a MAC address:

01-23-45-67-89-ab

As a packet is transferred across a network, at every router hop and then again when it arrives at the destination network, the source and destination MAC addresses change. ARP resolves the next hop address to a MAC address using a process called the ARP broadcast. MAC addresses are unique. This comes from the fact that each manufacturer has a different set of values assigned to it at the beginning of the address called the organizationally unique identifier (OUI). Each manufacturer ensures that it assigns no duplicate within its OUI. The OUI is the first three bytes of the MAC address.
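To make the OUI split concrete, the following sketch separates a MAC address into its first three bytes (the OUI) and its last three bytes (the portion assigned by the manufacturer). The helper name `split_mac` is an assumption for this example.

```python
import string

def split_mac(mac: str):
    """Return (oui, device_part) for a MAC written with '-' or ':' separators."""
    octets = mac.replace(":", "-").lower().split("-")
    valid = len(octets) == 6 and all(
        len(o) == 2 and set(o) <= set(string.hexdigits) for o in octets
    )
    if not valid:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    # First three bytes identify the manufacturer; the rest identify the device.
    return "-".join(octets[:3]), "-".join(octets[3:])
```

Applied to the sample address shown earlier, `split_mac("01-23-45-67-89-ab")` returns `("01-23-45", "67-89-ab")`.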

Network Transmission

Data can be communicated across a variety of media types, using several possible processes. These communications can also have a number of characteristics that need to be understood. This section discusses some of the most common methods and their characteristics.

Analog Versus Digital

Data can be represented in various ways on a medium. On a wired medium, the data can be transmitted in either analog or digital format. Analog represents the data as sound and is used in analog telephony. Analog signals differ from digital in that there is an infinite number of possible values. If we look at an analog signal on a graph, it looks like a wave going up and down. Figure 4-9 shows an analog waveform compared to a digital one.

Digital signaling, on the other hand, which is the type used in most computer transmissions, does not have an infinite number of possible values, but only two: on and off. A digital signal shown on a graph exhibits a square-wave pattern, as shown in Figure 4-9. Digital signals are usually preferable to analog because they are more reliable and less susceptible to noise on the line. Transporting more information on the same line at a higher quality over a longer distance than with analog is also possible.

Asynchronous Versus Synchronous

When two systems are communicating, they not only need to represent the data in the same format (analog/digital) but they must also use the same synchronization technique. This process tells the receiver when a specific communication begins and ends so two-way conversations can happen without talking over one another. The two types of techniques are asynchronous transmission and synchronous transmission.

With asynchronous transmissions, the systems use start and stop bits to communicate when each byte is starting and stopping. This method also uses parity bits for the purpose of ensuring that each byte has not changed or been corrupted en route. This introduces additional overhead to the transmission.

Synchronous transmission uses a clocking mechanism to sync up the sender and receiver. Data is transferred in a stream of bits with no start, stop, or parity bits. This clocking mechanism is embedded into the Layer 2 protocol. It uses a different form of error checking (cyclic redundancy check or CRC) and is preferable for high-speed, high-volume transmissions. Figure 4-10 shows a visual comparison of the two techniques.

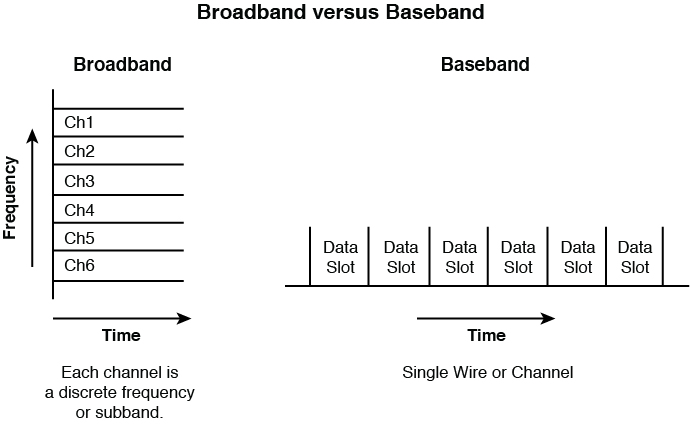
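The overhead of asynchronous transmission is easy to see when one byte is framed by hand. The sketch below assumes conventions common on serial links but not stated in the text: a start bit of 0, a stop bit of 1, data bits sent least significant bit first, and even parity. The function names are hypothetical.

```python
def frame_byte(value: int) -> list:
    """Frame one byte as: start bit, 8 data bits (LSB first), even parity, stop bit."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte")
    data_bits = [(value >> i) & 1 for i in range(8)]
    parity = sum(data_bits) % 2   # even parity: total number of 1s becomes even
    return [0] + data_bits + [parity] + [1]

def parity_ok(frame: list) -> bool:
    """Check even parity over the 8 data bits plus the parity bit."""
    return sum(frame[1:10]) % 2 == 0
```

Each 8-bit byte costs 11 bits on the wire under these assumptions, which is the additional overhead that synchronous transmission avoids by relying on a shared clock and a CRC instead.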

Broadband Versus Baseband

All data transfers use a communication channel. Multiple transmissions might need to use the same channel. Sharing this medium can be done in two different ways: broadband or baseband. The difference is in how the medium is shared.

In baseband, the entire medium is used for a single transmission, and then multiple transmission types are assigned time slots to use this single circuit. This is called time division multiplexing (TDM). Multiplexing is the process of using the same medium for multiple transmissions. The transmissions take turns rather than sending at the same time.

Broadband, on the other hand, divides the medium into different frequencies, a process called frequency division multiplexing (FDM). This has the benefit of allowing true simultaneous use of the medium.

An example of broadband transmission is Digital Subscriber Line (DSL), where the phone signals are sent at one frequency and the computer data at another. This is why you can talk on the phone and use the Web at the same time. Figure 4-11 illustrates these two processes.

Unicast, Multicast, and Broadcast

When systems are communicating in a network, they might send out three types of transmissions. These methods differ in the scope of their reception as follows:

Unicast: Transmission from a single system to another single system. It is considered one-to-one.

Multicast: Transmission from a single system to all members of a group called a multicast group. It is considered one-to-many.

Broadcast: A transmission sent by a single system to all systems in the network. It is considered one-to-all.

Figure 4-12 illustrates the three methods.
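For IPv4, the destination address alone usually reveals which of the three transmission types is in use. The following sketch classifies a destination relative to a local network; the helper name `transmission_type` and the sample addresses are assumptions for illustration.

```python
import ipaddress

def transmission_type(destination: str, local_network: str) -> str:
    """Classify an IPv4 destination as unicast, multicast, or broadcast."""
    ip = ipaddress.IPv4Address(destination)
    net = ipaddress.IPv4Network(local_network)
    if ip.is_multicast:   # 224.0.0.0 through 239.255.255.255 (Class D)
        return "multicast"
    # Either the subnet's directed broadcast or the limited broadcast address.
    if ip == net.broadcast_address or str(ip) == "255.255.255.255":
        return "broadcast"
    return "unicast"
```

On the 192.168.1.0/24 network, for instance, 192.168.1.255 is the broadcast address, 224.0.0.5 is a multicast group address, and 192.168.1.20 is an ordinary unicast destination.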

Wired Versus Wireless

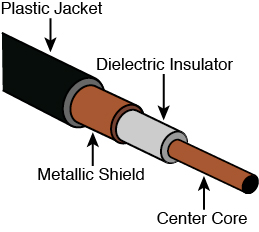

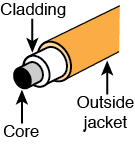

As you probably know by now, not all transmissions occur over a wired connection. Even within the category of wired connections, the way in which the ones and zeros are represented can be done in different ways. In a copper wire, the ones and zeros are represented with changes in the voltage of the signal, whereas in a fiber optic cable, they are represented with manipulation of a light source (lasers or light-emitting diodes [LEDs]).

In wireless transmission, radio waves or light waves are manipulated to represent the ones and zeros. When infrared technology is used, this is done with infrared light. With wireless LANs (WLANs), radio waves are manipulated to represent the ones and zeros. These differences in how the bits are represented occur at the Physical and Data Link layers of the OSI model. When a packet goes from a wireless section of the network to a wired section, these two layers are the only layers that change.

When a different physical medium is used, typically a different Layer 2 protocol is called for. For example, while the data is traveling over the wired Ethernet network, the 802.3 standard is used. However, when the data gets to a wireless section of the network, it needs a different Layer 2 protocol. Depending on the technology in use, it could be either 802.11 (WLAN) or 802.16 (WiMAX).

The ability of the packet to traverse various media types is just another indication of the independence of the OSI layers because the information in Layers 3–7 remains unchanged regardless of how many Layer 2 transitions must be made to get the data to its final destination.

IPv6

IPv6 was developed to more cleanly address the issue of the exhaustion of the IPv4 space. Although private addressing and the use of NAT have helped to delay the inevitable, the use of NAT introduces its own set of problems. The IPv6 system uses 128 bits so it creates such a large number of possible addresses that it is expected to suffice for many, many years.

IPv6 addresses look different than IPv4 addresses because they use a different format and use the hexadecimal number system, so there are letters and numbers in them such as you would see in a MAC address. There are eight fields separated by colons, not dots. Here is a sample IPv6 address:

2001:0000:4137:9e76:30ab:3035:b541:9693

Many of the security features that were add-ons to IPv4 (such as IPsec) have been built into IPv6, increasing its security. Moreover, while DHCP can be used with IPv6, IPv6 provides a host the ability to locate its local router, configure itself, and discover the IP addresses of its neighbors. Finally, broadcast traffic is completely eliminated in IPv6 and replaced by multicast communications.

Table 4-4 shows the differences between IPv4 and IPv6.

Table 4-4 Differences Between IPv4 and IPv6 (Adapted from NIST SP 800-119)

| Property | IPv4 | IPv6 |
|---|---|---|
| Address size and network size | 32 bits, network size 8–30 bits | 128 bits, network size 64 bits |
| Packet header size | 20–60 bytes | 40 bytes |
| Header-level extension | Limited number of small IP options | Unlimited number of IPv6 extension headers |
| Fragmentation | Sender or any intermediate router allowed to fragment | Only sender may fragment |
| Control protocols | Mixture of non-IP (ARP), ICMP, and other protocols | All control protocols based on ICMPv6 |
| Minimum allowed MTU | 576 bytes | 1280 bytes |
| Path MTU discovery | Optional, not widely used | Strongly recommended |
| Address assignment | Usually one address per host | Usually multiple addresses per interface |
| Address types | Use of unicast, multicast, and broadcast address types | Broadcast addressing no longer used; use of unicast, multicast, and anycast address types |
| Address configuration | Devices configured manually or with host configuration protocols like DHCP | Devices configure themselves independently using stateless address autoconfiguration (SLAAC) or use DHCP |

NIST SP 800-119

NIST Special Publication (SP) 800-119 provides guidelines for the secure deployment of IPv6. According to this SP, organizations planning the deployment of IPv6 should consider the following during the planning process:

IPv6 is a new protocol that is not backward compatible with IPv4.

In most cases IPv4 will still be a component of an IT infrastructure. As such, even after the deployment of IPv6, organizations will require mechanisms for IPv6 and IPv4 co-existence.

IPv6 can be deployed just as securely as IPv4, although it should be expected that vulnerabilities within the protocol, as well as with implementation errors, will lead to an initial increase in IPv6-based vulnerabilities. As a successor to IPv4, IPv6 does incorporate many of the lessons learned by the IETF for IPv4.

IPv6 has already been deployed and is currently in operation in large networks globally.

To overcome possible obstacles associated with deploying IPv6, organizations should consider the following recommendations:

Encourage staff to increase their knowledge of IPv6 to a level comparable with their current understanding of IPv4.

Plan a phased IPv6 deployment utilizing appropriate transition mechanisms to support business needs; don’t deploy more transition mechanisms than necessary.

Plan for a long transition period with dual IPv4/IPv6 co-existence.

Organizations that are not yet deploying IPv6 globally should implement the following recommendations:

Block all IPv6 traffic, native and tunneled, at the organization’s firewall. Both incoming and outgoing traffic should be blocked.

Disable all IPv6-compatible ports, protocols, and services on all software and hardware.

Begin to acquire familiarity and expertise with IPv6, through laboratory experimentation and/or limited pilot deployments.

Make organization web servers, located outside of the organizational firewall, accessible via IPv6 connections. This will enable IPv6-only users to access the servers and aid the organization in acquiring familiarity with some aspects of IPv6 deployment.

Organizations that are deploying IPv6 should implement the following recommendations to mitigate IPv6 threats:

Apply an appropriate mix of different types of IPv6 addressing (privacy addressing, unique local addressing, sparse allocation, and so on) to limit access and knowledge of IPv6-addressed environments.

Use automated address management tools to avoid manual entry of IPv6 addresses, which is prone to error because of their length.

Develop a granular ICMPv6 (ICMP for IPv6) filtering policy for the enterprise. Ensure that ICMPv6 messages that are essential to IPv6 operation are allowed, but others are blocked.

Use IPsec to authenticate and provide confidentiality to assets that can be tied to a scalable trust model (an example is access to Human Resources assets by internal employees that make use of an organization’s public key infrastructure [PKI] to establish trust).

Identify capabilities and weaknesses of network protection devices in an IPv6 environment.

Enable controls that might not have been used in IPv4 due to a lower threat level during initial deployment (implementing default deny access control policies, implementing routing protocol security, and so on).

Pay close attention to the security aspects of transition mechanisms such as tunneling protocols.

Ensure that IPv6 routers, packet filters, firewalls, and tunnel endpoints enforce multicast scope boundaries and make sure that Multicast Listener Discovery (MLD) packets are not inappropriately routable.

Be aware that switching from an environment in which NAT provides IP addresses to unique global IPv6 addresses could trigger a change in the Federal Information Security Management Act (FISMA) system boundaries.

The following sections on IPv6 are adapted from NIST SP 800-119. For more information on IPv6 beyond what is provided here, please refer to NIST SP 800-119.

IPv6 Major Features

According to NIST SP 800-119, IPv6 has many new or improved features that make it significantly different from its predecessor. These features include extended address space, autoconfiguration, header structure, extension headers, IPsec, mobility, quality of service, route aggregation, and efficient transmission.

Extended Address Space

Each IPv4 address is 32 bits long and is written as four decimal numbers representing 8-bit octets and separated by decimal points or periods. An example address is 172.30.128.97. Each IPv6 address is 128 bits long (as defined in RFC 4291) and is written as eight 16-bit fields in colon-delimited hexadecimal notation (an example is fe80:43e3:9095:02e5:0216:cbff:feb2:7474). This new 128-bit address space provides an enormous number of unique addresses, 2¹²⁸ (or 3.4 × 10³⁸) addresses, compared with IPv4’s 2³² (or 4.3 × 10⁹) addresses.
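The size difference between the two address spaces is simple arithmetic on the address lengths, which the following short Python sketch works out. The variable names are chosen only for this example.

```python
ipv4_total = 2 ** 32    # every possible 32-bit address
ipv6_total = 2 ** 128   # every possible 128-bit address

print(f"IPv4: {ipv4_total:.1e} addresses")
print(f"IPv6: {ipv6_total:.1e} addresses")

# IPv6 has 96 more address bits, so the space is 2**96 times larger.
ratio_bits = (ipv6_total // ipv4_total).bit_length() - 1
print(f"IPv6 space is 2**{ratio_bits} times the IPv4 space")
```

The printed figures round to 4.3 × 10⁹ for IPv4 and 3.4 × 10³⁸ for IPv6.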

Autoconfiguration

Essentially plug-and-play networking, IPv6 Stateless Address Auto-configuration is one of the most interesting and potentially valuable addressing features in IPv6. This feature allows devices on an IPv6 network to configure themselves independently using a stateless protocol. In IPv4, hosts are configured manually or with host configuration protocols like DHCP; with IPv6, autoconfiguration takes this a step further by defining a method for some devices to configure their IP addresses and other parameters without the need for a server. Moreover, it also defines a method, renumbering, whereby the time and effort required to renumber a network by replacing an old prefix with a new prefix are vastly reduced.

Header Structure

The IPv6 header is much simpler than the IPv4 header and has a fixed length of 40 bytes (as defined in RFC 2460). Even though this header is twice as long as the minimum IPv4 header, much of the header is taken up by two 16-byte IPv6 addresses, leaving only 8 bytes for other header information. This allows for faster processing of packets and greater protocol flexibility.
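The 40-byte figure can be verified by packing the fixed header fields with Python's `struct` module. This is a sketch for illustration only, not a packet generator: the function name `build_ipv6_header`, the default hop limit of 64, and the documentation-range addresses used below are assumptions.

```python
import ipaddress
import struct

def build_ipv6_header(src, dst, payload_length, next_header=59, hop_limit=64,
                      traffic_class=0, flow_label=0):
    """Pack the fixed IPv6 header fields into bytes."""
    # First 32 bits: version (4 bits) | traffic class (8 bits) | flow label (20 bits)
    first_word = (6 << 28) | (traffic_class << 20) | flow_label
    return struct.pack(
        "!IHBB16s16s",          # network byte order: 4 + 2 + 1 + 1 + 16 + 16 bytes
        first_word,
        payload_length,
        next_header,            # 59 means "no next header"
        hop_limit,
        ipaddress.IPv6Address(src).packed,
        ipaddress.IPv6Address(dst).packed,
    )

header = build_ipv6_header("2001:db8::1", "2001:db8::2", payload_length=0)
print(len(header))        # total header length in bytes
print(len(header) - 32)   # bytes left after the two 16-byte addresses
```

The Next Header field in the packed result is the same field that identifies any extension header that follows.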

Extension Headers

An IPv4 header can be extended from 20 bytes to a maximum of 60 bytes, but this option is rarely used because it impedes performance and is often administratively prohibited for security reasons. IPv6 has a new method to handle options, which allows substantially improved processing and avoids some of the security problems that IPv4 options generated. IPv6 RFC 2460 defines six extension headers: hop-by-hop option header, routing header, fragment header, destination options header, authentication header (AH), and encapsulating security payload (ESP) header. Each extension header is identified by the Next Header field in the preceding header.

Mandatory IPsec Support

IP Security (IPsec) is a suite of protocols for securing IP communications by authenticating the sender and providing integrity protection plus, optionally, confidentiality for the transmitted data. This is accomplished through the use of two extension headers: ESP and AH. The negotiation and management of IPsec security protections and the associated secret keys are handled by the Internet Key Exchange (IKE) protocol. IPsec is a mandatory part of an IPv6 implementation; however, its use is not required. IPsec is also specified for securing particular IPv6 protocols (e.g., Mobile IPv6 and OSPFv3 [Open Shortest Path First version 3]).

Mobility

Mobile IPv6 (MIPv6) is an enhanced protocol supporting roaming for a mobile node, so that it can move from one network to another without losing IP-layer connectivity (as defined in RFC 3775). Mobile IPv6 uses IPv6’s vast address space and Neighbor Discovery (RFC 4861) to solve the handover problem at the network layer and maintain connections to applications and services if a device changes its temporary IP address. Mobile IPv6 also introduces new security concerns such as route optimization (RFC 4449) where data flow between the home agent and mobile node will need to be appropriately secured.

Quality of Service

IP (for the most part) treats all packets alike, as they are forwarded with best-effort treatment and no guarantee for delivery through the network. TCP adds delivery confirmations but has no options to control parameters such as delay or bandwidth allocation. Quality of Service (QoS) offers enhanced policy-based networking options to prioritize the delivery of information. Existing IPv4 and IPv6 implementations use similar QoS capabilities, such as Differentiated Services and Integrated Services, to identify and prioritize IP-based communications during periods of network congestion. Within the IPv6 header two fields can be used for QoS, the Traffic Class and Flow Label fields. The new Flow Label field and enlarged Traffic Class field in the main IPv6 header allow more efficient and finer grained differentiation of various types of traffic. The new Flow Label field can contain a label identifying or prioritizing a certain packet flow such as Voice over IP (VoIP) or videoconferencing, both of which are sensitive to timely delivery. IPv6 QoS is still a work in progress and security should be given increased consideration in this stage of development.

Route Aggregation

IPv6 incorporates a hierarchical addressing structure and has a simplified header allowing for improved routing of information from a source to a destination. The large amount of address space allows organizations with large numbers of connections to obtain blocks of contiguous address space.

Contiguous address space allows organizations to aggregate addresses under one prefix for identification on the Internet. This structured approach to addressing reduces the amount of information Internet routers must maintain and store and promotes faster routing of data. Additionally, it is envisioned that IPv6 addresses will primarily be allocated only from ISPs to customers. This will allow for ISPs to summarize route advertisements to minimize the size of the IPv6 Internet routing tables.

Efficient Transmission

IPv6 packet fragmentation control occurs at the IPv6 source host, not at an intermediate IPv6 router. With IPv4, a router can fragment a packet when the maximum transmission unit (MTU) of the next link is smaller than the packet it has to send. The router does this by slicing a packet to fit into the smaller MTU and sends it out as a set of fragments. The destination host collects the fragments and reassembles them. All fragments must arrive for the higher-level protocol to get the packet. Therefore, when one fragment is missing or an error occurs, the entire transmission has to be redone.

In IPv6, a host uses a procedure called Path Maximum Transmission Unit Discovery (PMTUD) to learn the path MTU size and eliminate the need for routers to perform fragmentation. The IPv6 Fragment Extension Header is used when an IPv6 host wants to fragment a packet, so fragmentation occurs at the source host, not the router, which allows efficient transmission.
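The arithmetic of fragmentation can be sketched as follows. The function computes how a payload would be split for a given MTU; it assumes a 20-byte IPv4 header with no options by default, and the name `fragment_sizes` is hypothetical. The same calculation applies at an IPv6 source host if `header_len` is set to 48 (the 40-byte fixed header plus an 8-byte Fragment extension header).

```python
def fragment_sizes(payload_len, mtu, header_len=20):
    """Return (offset_in_bytes, data_length, more_fragments) for each fragment."""
    # Every fragment except the last must carry a multiple of 8 data bytes,
    # because the fragment offset field counts in 8-byte units.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small for the header")
    fragments = []
    offset = 0
    while offset < payload_len:
        size = min(max_data, payload_len - offset)
        more = offset + size < payload_len   # False only on the final fragment
        fragments.append((offset, size, more))
        offset += size
    return fragments
```

A 4000-byte payload crossing a link with a 1500-byte MTU yields three fragments, and the loss of any one of them forces the whole packet to be sent again.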

IPv4 Versus IPv6 Threat Comparison

Based on the threat comparison between IPv4 and IPv6, the following actions are recommended to mitigate IPv6 threats during the deployment process:

Apply different types of IPv6 addressing (privacy addressing, unique local addressing, sparse allocation, etc.) to limit access and knowledge of IPv6-addressed environments.

Assign subnet and interface identifiers randomly to increase the difficulty of network scanning.

Develop a granular ICMPv6 filtering policy for the enterprise. Ensure that ICMPv6 messages that are essential to IPv6 operation are allowed, but others are blocked.

Use IPsec to authenticate and provide confidentiality to assets that can be tied to a scalable trust model (an example is access to Human Resources assets by internal employees that make use of an organization’s PKI to establish trust).

Identify capabilities and weaknesses of network protection devices in an IPv6 environment.

Enable controls that might not have been used in IPv4 due to a lower threat level during initial deployment (implementing default deny access control policies, implementing routing protocol security, etc.).

Pay close attention to the security aspects of transition mechanisms such as tunneling protocols.

On networks that are IPv4-only, block all IPv6 traffic.

IPv6 Addressing

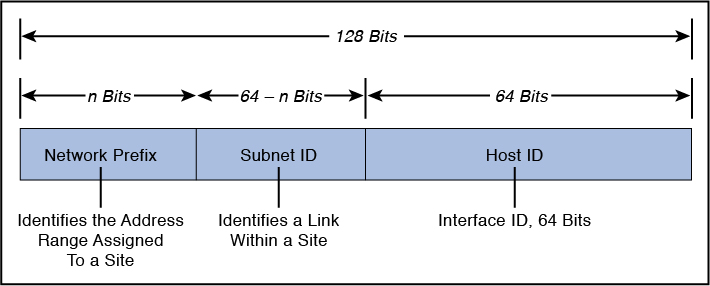

According to NIST SP 800-119, IPv6 addresses are 128 bits long and are written in what is called colon-delimited hexadecimal notation. An IPv6 address is composed of eight distinct numbers representing 16 bits each and written in base-16 (hexadecimal or hex) notation. The valid hex digits are 0 through 9 and A through F and together with the colon separator are the only characters that can be used for writing an IPv6 address. A comparison of IPv4 and IPv6 addressing conventions is illustrated in Figure 4-13.

An example of an IPv6 address is

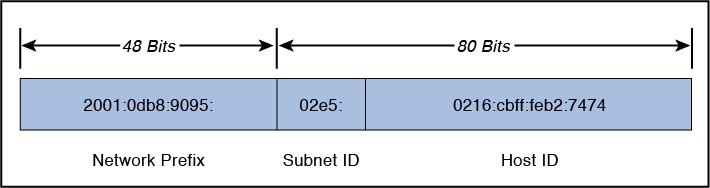

2001:0db8:9095:02e5:0216:cbff:feb2:7474

Note that the address contains eight distinct four-place hex values, separated by colons. Each of these values represents 16 bits, for a total of 128 bits in the entire address.

IPv6 addresses are divided among the network prefix, the subnet identifier, and the host identifier portions of the address. The network prefix is the high-order bits of an IP address, used to identify a specific network and, in some cases, a specific type of address. The subnet identifier (subnet ID) identifies a link within a site. The subnet ID is assigned by the local administrator of the site; a single site can have multiple subnet IDs. This is used as a designator for the network upon which the host bearing the address is resident. The host identifier (host ID) of the address is a unique identifier for the node within the network upon which it resides. It is identified with a specific interface of the host. Figure 4-14 depicts the IPv6 address format with the network prefix, subnet identifier, and host identifier.

There is no subnet mask in IPv6, although the slash notation used to identify the network address bits is similar to IPv4’s subnet mask notation. The IPv6 notation appends the prefix length and is written as a number of bits with a slash, which leads to the following format: IPv6 address/prefix length. The prefix length specifies how many of the address’s left-most bits comprise the network prefix. An example address with a 32-bit network prefix is 2001:0db8:9095:02e5:0216:cbff:feb2:7474/32.
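Python's standard `ipaddress` module understands the address/prefix-length notation directly, so the example address can be split into its network prefix and the remaining bits as a quick check. The variable names are assumptions for this sketch.

```python
import ipaddress

iface = ipaddress.ip_interface("2001:0db8:9095:02e5:0216:cbff:feb2:7474/32")

prefix = iface.network                 # the network the address belongs to
remaining_bits = 128 - prefix.prefixlen

print(prefix)           # network prefix in compressed form
print(prefix.prefixlen) # number of left-most bits in the prefix
print(remaining_bits)   # bits left for the subnet ID and host ID
```

With a 32-bit prefix, only the first two groups (2001:0db8) identify the network; the other 96 bits are left to the local administrator.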

Quantities of IPv6 addresses are assigned by the international registry services and ISPs based in part upon the size of the entity receiving the addresses. Large, top-tier networks may receive address allocations with a network prefix of 32 bits as long as the need is justified. In this case, the first two groupings of hex values, separated by colons, comprise the network prefix for the assignee of the addresses. The remaining 96 bits are available to the local administrator primarily for reallocation of the subnet ID and the host ID. The subnet ID identifies a link within a site, which can have multiple subnet IDs. The host ID within a network must be unique and identifies an interface on a subnet for the organization, similar to an assigned IPv4 address. Figure 4-15 depicts an IPv6 address with 32 bits allocated to the network prefix.

Government, educational, commercial, and other networks typically receive address allocations from top-tier ISPs with a network prefix of 48 bits (/48), leaving 80 bits for the subnet identifier and host identifier. Figure 4-16 depicts an IPv6 address with 48 bits allocated to the network prefix.

Subnets within an organization often have network prefixes of 64 bits (/64), leaving 64 bits for allocation to hosts’ interfaces. The host ID should use a 64-bit interface identifier that follows EUI-64 (Extended Unique Identifier) format when a global network prefix is used (001 to 111), except in the case when multicast addresses (1111 1111) are used. Figure 4-17 depicts an IPv6 address with 64 bits allocated to the network prefix.
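One common way to build a 64-bit interface identifier is the modified EUI-64 procedure described in RFC 4291: the 48-bit MAC address is split in half, the bytes FF and FE are inserted in the middle, and the universal/local bit of the first byte is inverted. The sketch below follows that procedure; the helper name and the sample MAC address are assumptions chosen so that the result matches the host portion of the example address used in this section.

```python
def mac_to_interface_id(mac: str) -> str:
    """Derive a modified EUI-64 interface identifier from a 48-bit MAC address."""
    octets = [int(part, 16) for part in mac.replace("-", ":").split(":")]
    if len(octets) != 6:
        raise ValueError("expected six octets")
    octets[0] ^= 0x02                                  # invert the universal/local bit
    eui64 = octets[:3] + [0xFF, 0xFE] + octets[3:]     # insert FF:FE in the middle
    groups = []
    for i in range(0, 8, 2):
        word = (eui64[i] << 8) | eui64[i + 1]          # combine two bytes into 16 bits
        groups.append(f"{word:04x}")
    return ":".join(groups)
```

For the hypothetical MAC address 00:16:cb:b2:74:74, the function returns 0216:cbff:feb2:7474, which is the low-order 64 bits of 2001:0db8:9095:02e5:0216:cbff:feb2:7474.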

Shorthand for Writing IPv6 Addresses

According to NIST SP 800-119, IPv6 addresses do not lend themselves to human memorization due to their length. Administrators of IPv4 networks typically can recall multiple IPv4 network and host addresses; remembering multiple IPv6 network and host addresses is more challenging. The notation for IPv6 addresses may be compressed and simplified under specific circumstances.

One to three zeros that appear as the leading digits in any colon-delimited hexadecimal grouping may be dropped. This simplifies the address and makes it easier to read and to write. For example:

2001:0db8:0aba:02e5:0000:0ee9:0000:0444/48

becomes

2001:db8:aba:2e5:0:ee9:0:444/48

It is important to note that trailing zeros may not be dropped, because they have intrinsic place value in the address format.

Further efficiency is gained by combining all-zero portions of the address. Any colon-delimited portion of an address containing all zeros may be compressed so that nothing appears between the leading and trailing colons. For example:

2001:0db8:0055:0000:cd23:0000:0000:0205/48

becomes

2001:db8:55:0:cd23::205/48

In this example, the sixth and seventh 16-bit groupings contain all zeros; they were compressed by eliminating the zeros completely, as well as the colon that divided the two groupings. Nevertheless, compressing an address by removing one or more consecutive colons between groups of zeros may only be done once per address. The fourth 16-bit grouping in the example also contains all zeros, but in the condensed form of the address, it is represented with a single zero. A choice had to be made as to which group of zeros was to be compressed. The example address could be written

2001:db8:55::cd23:0:0:205/48

but this is not as efficient as

2001:db8:55:0:cd23::205/48

It is important to note that both of the addresses in the preceding paragraph are properly formatted, but the latter address is shorter. Compression is just a convention for writing addresses; it does not affect how an address is used, and it makes no difference whether compression falls within the network prefix, host identifier, or across both portions of the address.
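The shorthand rules can be checked with Python's standard `ipaddress` module, which exposes both the compressed and the fully expanded form of an address. This is a small sketch using the example addresses from this section (without the /48 suffix); the variable names are assumptions.

```python
import ipaddress

full = "2001:0db8:0055:0000:cd23:0000:0000:0205"
addr = ipaddress.IPv6Address(full)

# Leading zeros dropped and the longest run of all-zero groups collapsed to "::".
print(addr.compressed)

# The expanded form restores every dropped zero.
print(addr.exploded)

# Both ways of writing the address refer to the same 128-bit value.
alternate = ipaddress.IPv6Address("2001:db8:55::cd23:0:0:205")
print(addr == alternate)
```

The module picks the longer run of zeros for compression, producing the shorter of the two valid forms discussed above.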

IPv6 Address Types

According to NIST SP 800-119, IPv6 addressing differs from IPv4 in several ways aside from the address size. In both IPv4 and IPv6, addresses specifically belong to interfaces, not to nodes. However, because IPv6 addresses are not in short supply, interfaces often have multiple addresses. IPv6 addresses consist of a network prefix in the higher order bits and an interface identifier in the lower order bits. Moreover, the prefix indicates a subnet or link within a site, and a link can be assigned multiple subnet IDs.

Many IPv6 address ranges are reserved or defined for special purposes by the IETF’s IPv6 standards and by IANA. Table 4-5 lists the major assignments and how to identify the different types of IPv6 address from the high-order bits.

Table 4-5 IPv6 Address Types (Copied from NIST SP 800-119)

| Address Type | IPv6 Notation | Uses |
|---|---|---|
| Embedded IPv4 address | ::FFFF/96 | Prefix for embedding IPv4 address in an IPv6 address |
| Loopback | ::1/128 | Loopback address on every interface |
| Global unicast | 2000::/3 | Global unicast and anycast (allocated) |
| Global unicast | 4000::/2–FC00::/9 | Global unicast and anycast (unallocated) |
| Teredo | 2001:0000::/32 | Teredo |
| Nonroutable | 2001:DB8::/32 | Nonroutable. Documentation purposes only |
| 6to4 | 2002::/16 | 6to4 |
| 6Bone | 3FFE::/16 | Deprecated. 6Bone testing assignment, 1996 through mid-2006 |
| Link-local unicast | FE80::/10 | Link-local unicast |
| Reserved | FEC0::/10 | Deprecated. Formerly Site-local address space, unicast and anycast |
| Local IPv6 address | FC00::/7 | Unicast Unique local address space, unicast and anycast |
| Multicast | FF00::/8 | Multicast address space |

IPv6 uses the notion of address types for different situations. These different address types are defined below:

Unicast addresses: Addresses that identify one interface on a single node; a packet with a unicast destination address is delivered to that interface.

Multicast addresses: RFC 4291 defines a multicast address as “An identifier for a set of interfaces (typically belonging to different nodes). A packet sent to a multicast address is delivered to all interfaces identified by that address.” Although multicast addresses are common in both IPv4 and IPv6, in IPv6 multicasting has new applications. The single most important aspect of multicast addressing under IPv6 is that it enables fundamental IPv6 functionality, including neighbor discovery (ND) and router discovery. Multicast addresses begin with FF00::/8. They are intended for efficient one-to-many and many-to-many communication. The IPv6 standards prohibit sending packets from a multicast address; multicast addresses are valid only as destinations.

Anycast addresses: Addresses that can identify several interfaces on one or more nodes; a packet with an anycast destination address is delivered to one of the interfaces bearing the address, usually the closest one as determined by routing protocols. Anycast addressing was introduced as an add-on for IPv4, but it was designed as a basic component of IPv6.

The format of anycast addresses is indistinguishable from unicast addresses.

Broadcast addressing is a common attribute of IPv4, but is not defined or implemented in IPv6. Multicast addressing in IPv6 meets the requirements that broadcast addressing formerly fulfilled.
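Identifying an address type from its high-order bits, as Table 4-5 describes, amounts to a longest-prefix match. The following is a minimal sketch under stated assumptions: the function name `classify` and the labels are hypothetical, only some of the table's rows are included, and the embedded IPv4 prefix is written out in full as `::ffff:0:0/96` (the IPv4-mapped prefix) so that the parser accepts it.

```python
import ipaddress

# (prefix, label) pairs taken from Table 4-5; labels are illustrative only.
PREFIXES = [
    ("::ffff:0:0/96", "Embedded IPv4 (IPv4-mapped)"),
    ("::1/128", "Loopback"),
    ("2000::/3", "Global unicast"),
    ("2001::/32", "Teredo"),
    ("2001:db8::/32", "Documentation (nonroutable)"),
    ("2002::/16", "6to4"),
    ("fe80::/10", "Link-local unicast"),
    ("fec0::/10", "Site-local (deprecated)"),
    ("fc00::/7", "Unique local"),
    ("ff00::/8", "Multicast"),
]
NETWORKS = [(ipaddress.IPv6Network(prefix), label) for prefix, label in PREFIXES]


def classify(address):
    """Return the label of the most specific listed prefix containing the address."""
    addr = ipaddress.IPv6Address(address)
    matches = [(net.prefixlen, label) for net, label in NETWORKS if addr in net]
    if not matches:
        return "Unassigned or other"
    # The longest prefix is the most specific match
    # (for example, 2001:db8::/32 lies inside 2000::/3).
    return max(matches)[1]


for sample in ["::1", "fe80::1", "fd00::1", "ff02::1", "2001:db8::1", "2002::1"]:
    print(sample, "->", classify(sample))
```

Choosing the longest matching prefix matters because several special-purpose ranges, such as Teredo and 6to4, sit inside the broader global unicast block.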

IPv6 Address Scope

According to NIST SP 800-119, in the original design for IPv6, link-local, site-local, and global addresses were defined; later, it was realized that site-local addresses were not well enough defined to be useful. Site-local addresses were abandoned and replaced with unique local addresses. Older implementations of IPv6 may still use site-local addresses, so IPv6 firewalls need to recognize and handle site-local addresses correctly.

The IPv6 standards define several scopes for meaningful IPv6 addresses:

Interface-local: This applies only to a single interface; the loopback address has this scope.

Link-local: This applies to a particular LAN or network link; every IPv6 interface on a LAN must have an address with this scope. Link-local addresses start with FE80::/10. Packets with link-local destination addresses are not routable and must not be forwarded off the local link.

Link-local addresses are used for administrative purposes such as neighbor and router discovery.

Site-local: This scope was intended to apply to all IPv6 networks of a single logical entity, such as the network within an organization. Addresses with this scope start with FEC0::/10. They were intended not to be globally routable but potentially routed between subnets within an organization. Site-local addresses have been deprecated and replaced with unique local addresses.

Unique local unicast: This scope is meant for a site, campus, or enterprise’s internal addressing. It replaces the deprecated site-local concept. Unique local addresses (ULAs) may be routable within an enterprise. Use of unique local addresses is not yet widespread.

Global: The global scope applies to the entire Internet. These are globally unique addresses that are routable across all publicly connected networks.

Embedded IPv4 unicast: The IPv6 specification has the ability to leverage existing IPv4 addressing schemes. The transition to IPv6 will be gradual, so two special types of addresses have been defined for backward compatibility with IPv4: IPv4-compatible IPv6 addresses (rarely used and deprecated in RFC 4291) and IPv4-mapped IPv6 addresses. Both allow the protocol to derive addresses by embedding IPv4 addresses in the body of an IPv6 address. An IPv4-mapped IPv6 address is used to represent the addresses of IPv4-only nodes as an IPv6 address, which allows an IPv6 node to use this address to send a packet to an IPv4-only node.

IPv6 makes use of addresses other than those shown above. The unspecified address consists of all zeros (0:0:0:0:0:0:0:0 or simply ::) and may be the source address of a node soliciting its own IP address from an address assignment authority (such as a DHCPv6 server). IPv6-compliant routers never forward a packet with an unspecified address. The loopback address is used by a node to send a packet to itself. The loopback address, 0:0:0:0:0:0:0:1 (or simply ::1), is defined as being interface local.

IPv6-compliant hosts and routers never forward packets with a loopback destination.
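The special addresses and scopes described above can be inspected with Python's standard `ipaddress` module. This is a minimal sketch for illustration; the helper names `describe` and `to_ipv4_mapped` are hypothetical, and 192.0.2.10 is a documentation address chosen as sample input.

```python
import ipaddress


def describe(address):
    """Report which special categories an IPv6 address falls into."""
    addr = ipaddress.IPv6Address(address)
    return {
        "unspecified": addr.is_unspecified,  # the all-zeros address (::)
        "loopback": addr.is_loopback,        # ::1
        "link_local": addr.is_link_local,    # FE80::/10
        "site_local": addr.is_site_local,    # FEC0::/10 (deprecated)
        "multicast": addr.is_multicast,      # FF00::/8
    }


def to_ipv4_mapped(ipv4_text):
    """Embed an IPv4 address in the low-order 32 bits after 16 one bits."""
    v4 = ipaddress.IPv4Address(ipv4_text)
    return ipaddress.IPv6Address((0xFFFF << 32) | int(v4))


mapped = to_ipv4_mapped("192.0.2.10")
# The embedded IPv4 address can be recovered from the mapped form.
recovered = mapped.ipv4_mapped

print(describe("::"))
print(describe("fe80::1"))
print(recovered)
```

An ordinary IPv6 address has no embedded IPv4 address, so its `ipv4_mapped` attribute is `None`.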

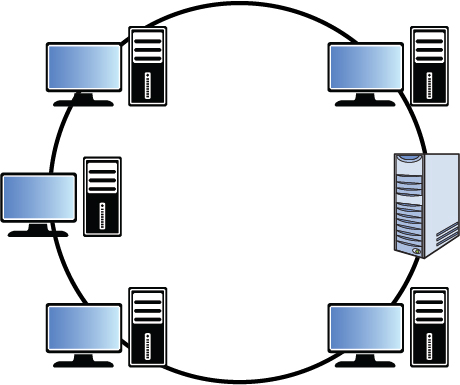

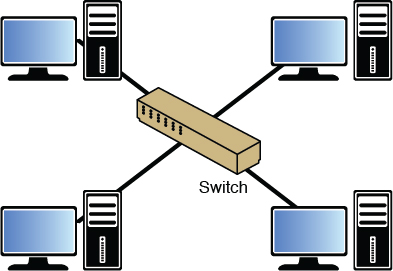

Network Types

So far we have discussed network topologies and technologies, so now let’s look at a third way to describe networks: network type. Network type refers to the scope of the network. Is it a LAN or a WAN? Is it a part of the internal network, or is it an extranet? This section discusses and differentiates all these network types.

LAN

First let’s talk about what makes a local area network (LAN) local. Although classically we think of a LAN as a network located in one location, such as a single office, referring to a LAN as a group of systems that are connected with a fast connection is more correct. For purposes of this discussion, that is any connection over 10 Mbps.

That might not seem very fast to you, but it is when compared to a WAN. Even a T1 connection is only 1.544 Mbps. Using this as our yardstick, if a single campus network has a WAN connection between two buildings, then the two networks are considered two LANs rather than a single LAN. In most cases, however, networks in a single campus are not connected with a WAN connection, which is why a LAN is usually defined as a network in a single location.

Intranet

Within the boundaries of a single LAN, there can be subdivisions for security purposes. The LAN might be divided into an intranet and an extranet. The intranet is the internal network of the enterprise. It is considered a trusted network and typically houses sensitive information and systems, so it should receive maximum protection with firewalls and strong authentication mechanisms.

Extranet

An extranet is a network logically separate from the intranet where resources that will be accessed from the outside world are made available. Access might be granted to customers, business partners, and the public in general. All traffic between this network and the intranet should be closely monitored and securely controlled. Nothing of a sensitive nature should be placed in the extranet.

MAN

A metropolitan area network (MAN) is a type of LAN that encompasses a large area such as the downtown of a city. In many cases it is a backbone that is provided for LANs to hook into. Three technologies are usually used in a MAN:

Fiber Distributed Data Interface (FDDI)

Synchronous Optical Networking (SONET)

Metro Ethernet

FDDI and SONET rings, which both rely on fiber cabling, can span large areas, and businesses can connect to the rings using T1, fractional T1, or T3 connections. FDDI uses a double ring with fault tolerance built in. SONET is also self-healing, meaning it has a double ring with a backup line if a line goes bad.

Metro Ethernet is the use of Ethernet technology over a wide area. It can be pure Ethernet or a combination of Ethernet and other technologies such as the ones mentioned in this section. Traditional Ethernet (the type used on a LAN) is less scalable. Metro Ethernet is often combined with Multiprotocol Label Switching (MPLS) technology, which is capable of carrying packets of various types, including Ethernet.

Less capable MANs often feed into MANs of higher capacity. Conceptually, you can divide the MAN architecture into three sections: customer, aggregation, and core layer. The customer section is the local loop that connects from the customer to the aggregation network, which then feeds into the high-speed core. The high-speed core connects the aggregation networks to one another.

WAN

WANs are used to connect LANs and MANs together. Many technologies can be used for these connections. They vary in capacity and cost, and access to these networks is purchased from a telecommunications company. The ultimate WAN is the Internet, the global backbone to which all MANs and LANs are connected. However, not all WANs connect to the Internet because some are private, dedicated links to which only the company paying for them has access.

WLAN

A wireless local area network (WLAN) allows devices to connect wirelessly to each other via a wireless access point (WAP). Multiple WAPs can work together to extend the range of the WLAN. WLAN technologies are discussed in more detail later in this chapter.

SAN

A storage area network (SAN) provides a connection to data storage devices through a technology like Fibre Channel or iSCSI, both of which are discussed in more detail later in this chapter.

CAN

A campus area network (CAN) includes multiple LANs but is smaller than a MAN. A CAN could be implemented on a hospital or local business campus.

PAN

A personal area network (PAN) includes devices, such as computers, telephones, tablets, and mobile phones, that are in close proximity with one another. PANs are usually implemented using Bluetooth, Z-Wave, Zigbee, or Infrared Data Association (IrDA).

Protocols and Services

Many protocols and services have been developed over the years to add functionality to networks. In many cases these protocols reside at the Application layer of the OSI model. These Application layer protocols usually perform a specific function and rely on the lower layer protocols in the TCP/IP suite and protocols at Layer 2 (like Ethernet) to perform routing and delivery services.

This section covers some of the most important of these protocols and services, including some that do not operate at the Application layer, focusing on the function and port number of each. Port numbers are important to be aware of from a security standpoint because in many cases port numbers are referenced when configuring firewall rules. In cases where a port or protocol number is relevant, it will be given as well.

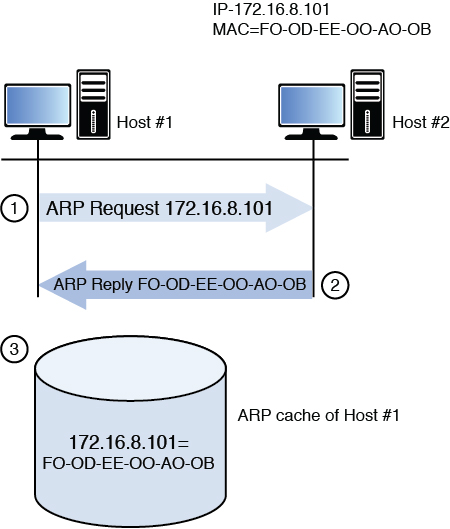

ARP/RARP

Address Resolution Protocol (ARP), one of the protocols in the TCP/IP suite, operates at Layer 3 of the OSI model. The information it derives is utilized at Layer 2, however. ARP’s job is to resolve the destination IP address placed in the header by IP to a Layer 2 or MAC address. Remember, when frames are transmitted on a local segment the transfer is done in terms of MAC addresses, not IP addresses, so this information must be known.

Whenever a packet is sent across the network, at every router hop and again at the destination subnet, the source and destination MAC address pairs change but the source and destination IP addresses do not. The process that ARP uses to perform this resolution is called an ARP broadcast.

First an area of memory called the ARP cache is consulted. If the MAC address has been recently resolved, the mapping will be in the cache and a broadcast is not required. If the record has aged out of the cache, ARP sends a broadcast frame to the local network that all devices will receive. The device that possesses the IP address responds with its MAC address. Then ARP places the MAC address in the frame and sends the frame. Figure 4-18 illustrates this process.
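The cache-then-broadcast sequence described above can be modeled in a few lines. This is a toy simulation, not a real ARP implementation: the class `ArpCache`, the function `resolve`, the 60-second aging limit, and the sample addresses are all assumptions made for illustration, and the "broadcast" is simply a loop over a dictionary that stands in for the hosts on the local segment.

```python
class ArpCache:
    """Toy ARP cache: maps an IP address to (MAC address, time stored)."""

    def __init__(self, max_age_seconds=60.0):
        self.max_age = max_age_seconds
        self._entries = {}

    def lookup(self, ip, now):
        entry = self._entries.get(ip)
        if entry is None:
            return None
        mac, stored = entry
        if now - stored > self.max_age:
            # The record has aged out of the cache.
            del self._entries[ip]
            return None
        return mac

    def store(self, ip, mac, now):
        self._entries[ip] = (mac, now)


def resolve(ip, cache, segment, now):
    """Return (mac, used_broadcast) for the requested IP address."""
    mac = cache.lookup(ip, now)
    if mac is not None:
        return mac, False  # cache hit, no broadcast required
    # Simulated broadcast: every host on the segment sees the request,
    # but only the host that owns the IP address replies.
    for host_ip, host_mac in segment.items():
        if host_ip == ip:
            cache.store(ip, host_mac, now)
            return host_mac, True
    return None, True  # nobody on the local segment owns that address


# Hypothetical local segment using documentation addresses.
segment = {
    "192.0.2.10": "00:00:5e:00:53:0a",
    "192.0.2.20": "00:00:5e:00:53:14",
}
cache = ArpCache(max_age_seconds=60.0)

first = resolve("192.0.2.20", cache, segment, now=0.0)     # broadcast needed
second = resolve("192.0.2.20", cache, segment, now=10.0)   # served from cache
third = resolve("192.0.2.20", cache, segment, now=100.0)   # entry aged out
print(first, second, third)
```

Passing the current time in as a parameter keeps the sketch deterministic; a real stack would read the system clock and send actual frames.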

Reverse ARP (RARP) resolves MAC addresses to IP addresses.

In IPv6 networks, the functionality of ARP is provided by the Neighbor Discovery Protocol (NDP).

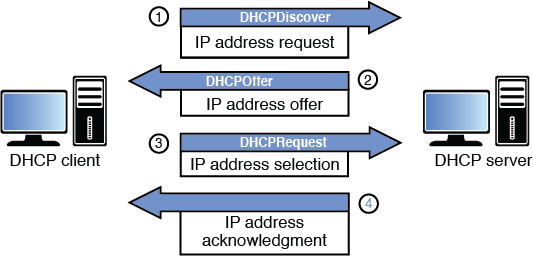

DHCP/BOOTP

Dynamic Host Configuration Protocol (DHCP) is a service that can be used to automate the process of assigning an IP configuration to the devices in the network. A DHCP server uses the Bootstrap Protocol (BOOTP) to perform its functions. Manual configuration of an IP address, subnet mask, default gateway, and DNS server is not only time-consuming but also fraught with opportunity for human error. Using DHCP not only automates this process but also avoids the network problems that such errors cause.