Chapter 11. Endpoint Telemetry and Analysis

This chapter covers the following topics:

• Understanding Host Telemetry

• Endpoint Security Technologies

The focus of this chapter is on understanding how analysts in a security operations center (SOC) can use endpoint telemetry for incident response and analysis. This chapter covers how to collect and analyze telemetry from Windows, Linux, and macOS systems, as well as mobile devices.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz allows you to assess whether you should read this entire chapter thoroughly or jump to the “Exam Preparation Tasks” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 11-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes and Review Questions.”

Table 11-1 “Do I Know This Already?” Foundation Topics Section-to-Question Mapping

1. Which of the following are useful attributes you should seek to collect from endpoints? (Select all that apply.)

a. IP address of the endpoint or DNS host name

b. Application logs

c. Processes running on the machine

d. NetFlow data

2. SIEM solutions can collect logs from popular host security products, including which of the following? (Select all that apply.)

a. Antivirus or antimalware applications

b. CloudLock logs

c. NetFlow data

d. Personal (host-based) firewalls

3. Which of the following are useful reports you can collect from Cisco ISE related to endpoints? (Select all that apply.)

a. Web server log reports

b. Top Application reports

c. RADIUS Authentication reports

d. Administrator Login reports

4. Which of the following is not true about listening ports?

a. A listening port is a port held open by a running application in order to accept inbound connections.

b. Seeing traffic from a known port will always identify the associated service.

c. Listening ports use values that can range between 1 and 65,535.

d. TCP port 80 is commonly known for Internet traffic.

5. A traffic substitution and insertion attack does which of the following?

a. Substitutes the traffic with data in a different format but with the same meaning

b. Substitutes the payload with data in the same format but with a different meaning

c. Substitutes the payload with data in a different format but with the same meaning

d. Substitutes the traffic with data in the same format but with a different meaning

6. Which of the following is not a method for identifying running processes?

a. Reading network traffic from a SPAN port with the proper technology

b. Reading port security logs

c. Reading traffic inline with the proper technology

d. Using port scanner technology

7. Which of the following statements is not true about host profiling?

a. Latency is a delay in throughput detected at the gateway of the network.

b. Throughput is typically measured in bandwidth.

c. In a valley there is an unusually low amount of throughput compared to the normal baseline.

d. In a peak there is a spike in throughput compared to the normal baseline.

8. Which of the follow best describes Windows process permissions?

a. User authentication data is stored in a token that is used to describe the security context of all processes associated with the user.

b. Windows generates processes based on super user–level security permissions and limits processes based on predefined user authentication settings.

c. Windows process permissions are developed by Microsoft and enforced by the host system administrator.

d. Windows grants access to all processes unless otherwise defined by the Windows administrator.

9. Which of the following is a true statement about stacks and heaps?

a. Heaps can allocate a block of memory at any time and free it at any time.

b. Stacks can allocate a block of memory at any time and free it at any time.

c. Heaps are best when you know exactly how much memory you should use.

d. Stacks are best when you don’t know how much memory to use.

10. What is the Windows Registry?

a. A list of registered software on the Windows operating system

b. Memory allocated to running programs

c. A database used to store information necessary to configure the system for users, applications, and hardware devices

d. A list of drivers for applications running on the Windows operating system

11. Which of the following is a function of the Windows Registry?

a. To register software with the application provider

b. To load device drivers and startup programs

c. To back up application registration data

d. To log upgrade information

12. Which of the following statements is true?

a. WMI is a command standard used by most operating systems.

b. WMI cannot run on older versions of Windows such as Windows 7.

c. WMI is a defense program designed to prevent scripting languages from managing Microsoft Windows computers and services.

d. WMI allows scripting languages to locally and remotely manage Microsoft Windows computers and services.

13. What is a virtual address space in Windows?

a. The physical memory allocated for processes

b. A temporary space for processes to execute

c. The set of virtual memory addresses that reference the physical memory object a process is permitted to use

d. The virtual memory address used for storing applications

14. What is the difference between a handle and pointer?

a. A handle is an abstract reference to a value, whereas a pointer is a direct reference.

b. A pointer is an abstract reference to a value, whereas a handle is a direct reference.

c. A pointer is a reference to a handle.

d. A handle is a reference to a pointer.

15. Which of the following is true about handles?

a. When Windows moves an object such as a memory block to make room in memory and the location of the object is impacted, the handles table is updated.

b. Programmers can change a handle using Windows API.

c. Handles can grant access rights against the operating system.

d. When Windows moves an object such as a memory block to make room in memory and the location of the object is impacted, the pointer to the handle is updated.

16. Which of the following is true about Windows services?

a. Windows services function only when a user has accessed the system.

b. The Services Control Manager is the programming interface for modifying the configuration of Windows Services.

c. Microsoft Windows services run in their own user session.

d. Stopping a service requires a system reboot.

17. Which process type occurs when a parent process is terminated and the remaining child process is permitted to continue on its own?

a. Zombie process

b. Orphan process

c. Rogue process

d. Parent process

18. A zombie process occurs when which of the following happens?

a. A process holds its associated memory and resources but is released from the entry table.

b. A process continues to run on its own.

c. A process holds on to associated memory but releases resources.

d. A process releases the associated memory and resources but remains in the entry table.

19. What is the best explanation of a fork (system call) in Linux?

a. When a process is split into multiple processes

b. When a parent process creates a child process

c. When a process is restarted from the last run state

d. When a running process returns to its original value

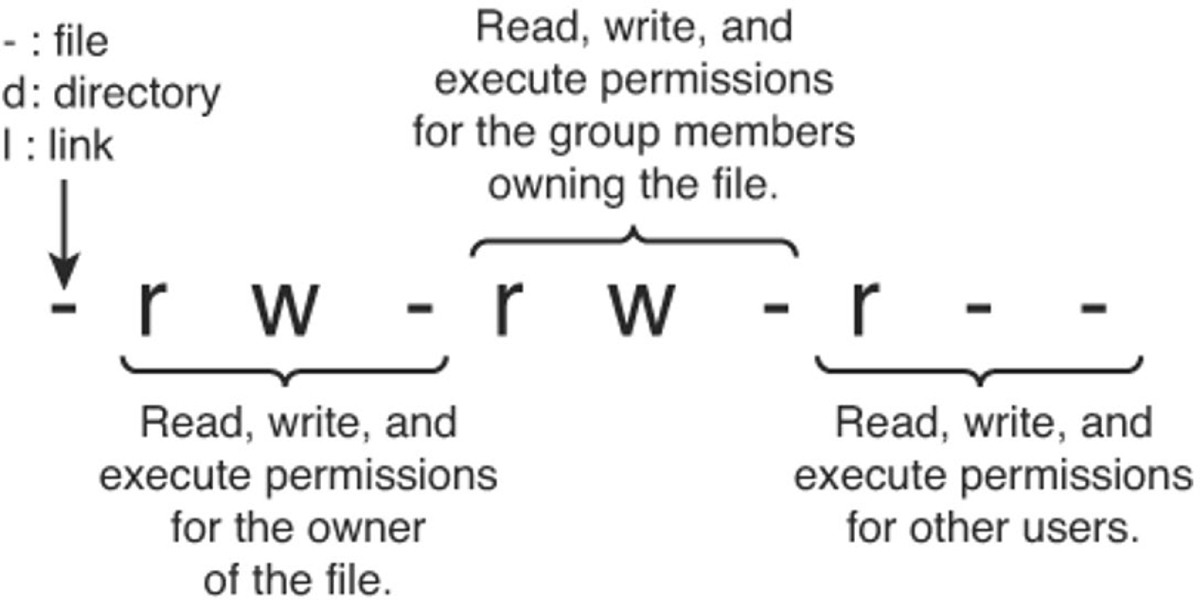

20. Which of the following gives permissions to the group owners for read and execute; gives the file owner permission for read, write, and execute; and gives all others permissions for execute?

a. -rwx-rx-x

b. -rx-rwx-x

c. -rx-x-rwx

d. -rwx-rwx-x

21. Which is a correct explanation of daemon permissions?

a. Daemons run at root-level access.

b. Daemons run at super user–level access.

c. Daemons run as the init process.

d. Daemons run at different privileges, which are provided by their parent process.

22. Which of the following is not true about symlinks?

a. A symlink will cause a system error if the file it points to is removed.

b. Showing the contents of a symlink will display the contents of what it points to.

c. An orphan symlink occurs when the link that a symlink points to doesn’t exist.

d. A symlink is a reference to a file or directory.

23. What is a daemon?

a. A program that manages the system’s motherboard

b. A program that runs other programs

c. A computer program that runs as a background process rather than being under direct control of an interactive user

d. The only program that runs in the background of a Linux system

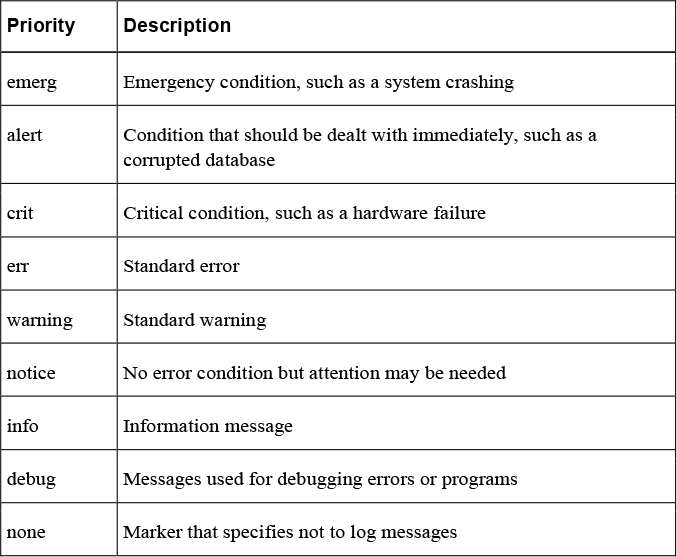

24. Which priority level of logging will be sent if the priority level is err?

a. err

b. err, warning, notice, info, debug, none

c. err, alert, emerg

d. err, crit, alert, emerg

25. Which of the following is an example of a facility?

a. marker

b. server

c. system

d. mail

26. What is a Trojan horse?

a. A piece of malware that downloads and installs other malicious content from the Internet to perform additional exploitation on an affected system.

b. A type of malware that executes instructions determined by the nature of the Trojan to delete files, steal data, and compromise the integrity of the underlying operating system, typically by leveraging social engineering and convincing a user to install such software.

c. A virus that replicates itself over the network infecting numerous vulnerable systems.

d. A type of malicious code that is injected into a legitimate application. An attacker can program a logic bomb to delete itself from the disk after it performs the malicious tasks on the system.

27. What is ransomware?

a. A type of malware that compromises a system and then often demands a ransom from the victim to pay the attacker in order for the malicious activity to cease, to recover encrypted files, or for the malware to be removed from the affected system

b. A set of tools used by attackers to elevate their privilege to obtain root-level access to completely take control of the affected system

c. A type of intrusion prevention system

d. A type of malware that doesn’t affect mobile devices

28. Which of the following are examples of free antivirus or antimalware software? (Select all that apply.)

a. McAfee Antivirus

b. Norton AntiVirus

c. ClamAV

d. Immunet

29. Host-based firewalls are often referred to as which of the following?

a. Next-generation firewalls

b. Personal firewalls

c. Host-based intrusion detection systems

d. Antivirus software

30. What is an example of a Cisco solution for endpoint protection?

a. Cisco ASA

b. Cisco ESA

c. Cisco AMP for Endpoints

d. Firepower Endpoint System

31. Which of the following are examples of application file and folder attributes that can help with application whitelisting? (Select all that apply.)

a. Application store

b. File path

c. Filename

d. File size

32. Which of the following are examples of sandboxing implementations? (Select all that apply.)

a. Google Chromium sandboxing

b. Java virtual machine (JVM) sandboxing

c. HTML CSS and JavaScript sandboxing

d. HTML5 “sandbox” attribute for use with iframes

Foundation Topics

Understanding Host Telemetry

Telemetry from user endpoints, mobile devices, servers, and applications is crucial when protecting, detecting, and reacting to security incidents and attacks. The following sections describe several examples of this type of telemetry and their use.

Logs from User Endpoints

Logs from user endpoints can help you not only for attribution if they are part of a malicious activity but also for victim identification. However, how do you determine where an endpoint and user are located? If you do not have sophisticated host or network management systems, it’s very difficult to track every useful attribute about user endpoints. This is why it is important what type of telemetry and metadata you collect as well as how you keep such telemetry and metadata updated and how you perform checks against it.

The following are some useful attributes you should seek to collect:

• Location based on just the IP address of the endpoint or DNS host name

• Application logs

• Processes running on the machine

You can correlate those with VPN and DHCP logs. However, these can present their own challenges because of the rapid turnover of network addresses associated with dynamic addressing protocols. For example, a user may authenticate to a VPN server, drop the connection, reauthenticate, and end up with a completely new address.

The level of logs you want to collect from each and every user endpoint depends on many environmental factors, such as storage, network bandwidth, and also the ability to analyze such logs. In many cases, more detailed logs are used in forensics investigations.

For instance, let’s say you are doing a forensics investigation on an Apple macOS device; in that case, you may need to collect hard evidence on everything that happened on that device. If you monitor endpoint machines daily, you will not be able to inspect and collect information about the device and the user in the same manner you would when doing a forensics investigation. For example, for that same Mac OS X machine, you may want to take a top-down approach while investigating files, beginning at the root directory, and then move into the User directory, which may have a majority of the forensic evidence.

Another example is dumping all the account information on the system. Mac OS X contains a SQLite database for the accounts used on the system. This includes information such as email addresses, social media usernames, and descriptions of the items.

On Windows, events are collected and stored by the Event Logging Service. This service keeps events from different sources in event logs and includes chronological information. On the other hand, the type of data that will be stored in an event log depends on system configuration and application settings. Windows event logs provide a lot of data for investigators. Some items of the event log record, such as Event ID and Event Category, help security professionals get information about a certain event. The Windows Event Logging Service can be configured to store granular information about numerous objects on the system. Almost any resource of the system can be considered an object, thus allowing security professionals to detect any requests for unauthorized access to resources.

Typically, what you do in a security operations center (SOC) is monitor logs sent by endpoint systems to a security information and event management (SIEM) system. You already learned one example of a SIEM: Splunk.

A SIM mainly provides a way to digest large amount of log data, making it easy to search through collected data. SEMs are designed to consolidate and correlate large amounts of event data so that the security analyst or network administrator can prioritize events and react appropriately. SIEM solutions can collect logs from popular host security products, including the following:

• Personal firewalls

• Intrusion detection/prevention systems

• Antivirus or antimalware

• Web security logs (from a web security appliance)

• Email security logs (from an email security appliance)

• Advanced malware protection logs

There are many other host security features, such as data-loss prevention and VPN clients. For example, the Cisco AnyConnect Secure Mobility Client includes the Network Visibility Module (NVM), which is designed to monitor application use by generating IPFIX flow information.

The AnyConnect NVM collects the endpoint telemetry information, including the following:

• The endpoint device, irrespective of its location

• The user logged in to the endpoint

• The application that generates the traffic

• The network location the traffic was generated on

• The destination (FQDN) to which this traffic was intended

The AnyConnect NVM exports the flow records to a collector (such as the Cisco Stealthwatch system). You can also configure NVM to get notified when the VPN state changes to connected and when the endpoint is in a trusted network. NVM collects and exports the following information:

• Source IP address

• Source port

• Destination IP address

• Destination port

• A universally unique identifier (UUID) that uniquely identifies the endpoint corresponding to each flow

• Operating system (OS) name

• OS version

• System manufacturer

• System type (x86 or x64)

• Process account, including the authority/username of the process associated with the flow

• Parent process associated with the flow

• The name of the process associated with the flow

• An SHA-256 hash of the process image associated with the flow

• An SHA-256 hash of the image of the parent process associated with the flow

• The DNS suffix configured on the interface associated with the flow on the endpoint

• The FQDN or host name that resolved to the destination IP on the endpoint

• The total number of incoming and outgoing bytes on that flow at Layer 4 (payload only)

Mobile devices in some cases are treated differently because of their dynamic nature and limitations such as system resources and restrictions. Many organizations use mobile device management (MDM) platforms to manage policies on mobile devices and to monitor such devices. The policies can be applied using different techniques—for example, by using a sandbox that creates an isolated environment that limits what applications can be accessed and controls how systems gain access to the environment. In other scenarios, organizations install an agent on the mobile device to control applications and to issue commands (for example, to remotely wipe sensitive data). Typically, MDM systems include the following features:

• Mandatory password protection

• Jailbreak detection

• Remote wipe

• Remote lock

• Device encryption

• Data encryption

• Geolocation

• Malware detection

• VPN configuration and management

• Wi-Fi configuration and management

The following are a few MDM vendors:

• AirWatch

• MobileIron

• Citrix

• Good Technology

MDM solutions from these vendors typically have the ability to export logs natively to Splunk or other third-party reporting tools such as Tableau, Crystal Reports, and QlikView.

You can also monitor user activity using the Cisco Identity Services Engine (ISE). The Cisco ISE reports are used with monitoring and troubleshooting features to analyze trends and to monitor user activities from a central location. Think about it: identity management systems such as the Cisco ISE keep the keys to the kingdom. It is very important to monitor not only user activity but also the activity on the Cisco ISE itself.

The following are a few examples of user and endpoint reports you can run on the Cisco ISE:

• AAA Diagnostics reports provide details of all network sessions between Cisco ISE and users. For example, you can use user authentication attempts.

• The RADIUS Authentications report enables a security analyst to obtain the history of authentication failures and successes.

• The RADIUS Errors report enables security analysts to check for RADIUS requests dropped by the system.

• The RADIUS Accounting report tells you how long users have been on the network.

• The Authentication Summary report is based on the RADIUS authentications. It tells the administrator or security analyst about the most common authentications and the reason for any authentication failures.

• The OCSP Monitoring Report allows you to get the status of the Online Certificate Status Protocol (OCSP) services and provides a summary of all the OCSP certificate validation operations performed by Cisco ISE.

• The Administrator Logins report provides an audit trail of all administrator logins. This can be used in conjunction with the Internal Administrator Summary report to verify the entitlement of administrator users.

• The Change Configuration Audit report provides details about configuration changes within a specified time period. If you need to troubleshoot a feature, this report can help you determine if a recent configuration change contributed to the problem.

• The Client Provisioning report indicates the client-provisioning agents applied to particular endpoints. You can use this report to verify the policies applied to each endpoint to verify whether the endpoints have been correctly provisioned.

• The Current Active Sessions report enables you to export a report with details about who was currently on the network within a specified time period.

• The Guest Activity report provides details about the websites that guest users are visiting. You can use this report for security-auditing purposes to demonstrate when guest users accessed the network and what they did on it.

• The Guest Accounting report is a subset of the RADIUS Accounting report. All users assigned to the Activated Guest or Guest Identity group appear in this report.

• The Endpoint Protection Service Audit report is based on RADIUS accounting. It displays historical reporting of all network sessions for each endpoint.

• The Mobile Device Management report provides details about integration between Cisco ISE and the external mobile device management (MDM) server.

• The Posture Detail Assessment report provides details about posture compliancy for a particular endpoint. If an endpoint previously had network access and then suddenly was unable to access the network, you can use this report to determine whether a posture violation occurred.

• The Profiled Endpoint Summary report provides profiling details about endpoints that are accessing the network.

Logs from Servers

![]()

Just as you do with endpoints, it is important that you analyze server logs. You can do this by analyzing simple syslog messages or more specific web or file server logs. It does not matter whether the server is a physical device or a virtual machine.

For instance, on Linux-based systems, you can review and monitor logs stored under /var/log. Example 11-1 shows a snippet of the syslog of a Linux-based system where you can see postfix database messages on a system running the GitLab code repository.

Example 11-1 Syslog on a Linux system

Sep 4 17:12:43 odin postfix/qmgr[2757]: 78B9C1120595: from=<gitlab@odin>, size=1610, nrcpt=1 (queue active) Sep 4 17:13:13 odin postfix/smtp[5812]: connect to gmail-smtp-in.l.google.com[173.194.204.27]:25: Connection timed out Sep 4 17:13:13 odin postfix/smtp[5812]: connect to gmail-smtp-in.l.google.com[2607:f8b0:400d:c07::1a]:25: Network is unreachable Sep 4 17:13:43 odin postfix/smtp[5812]: connect to alt1.gmail-smtp-in.l.google.com[64.233.190.27]:25: Connection timed out Sep 4 17:13:43 odin postfix/smtp[5812]: connect to alt1.gmail-smtp-in.l.google.com[2800:3f0:4003:c01::1a]:25: Network is unreachable Sep 4 17:13:43 odin postfix/smtp[5812]: connect to alt2.gmail-smtp-in.l.google.com[2a00:1450:400b:c02::1a]:25: Network is unreachable

You can also check the audit.log for authentication and user session information. Example 11-2 shows a snippet of the auth.log on a Linux system, where the user (omar) initially typed his password incorrectly while attempting to connect to the server (odin) via SSH.

Example 11-2 audit.log on a Linux System

Sep 4 17:21:32 odin sshd[6414]: Failed password for omar from 192.168.78.3 port 52523 ssh2 Sep 4 17:21:35 odin sshd[6422]: pam_ecryptfs: Passphrase file wrapped Sep 4 17:21:36 odin sshd[6414]: Accepted password for omar from 192.168.78.3 port 52523 ssh2 Sep 4 17:21:36 odin sshd[6414]: pam_unix(sshd:session): session opened for user omar by (uid=0) Sep 4 17:21:36 odin systemd: pam_unix(systemd-user:session): session opened for user omar by (uid=0)

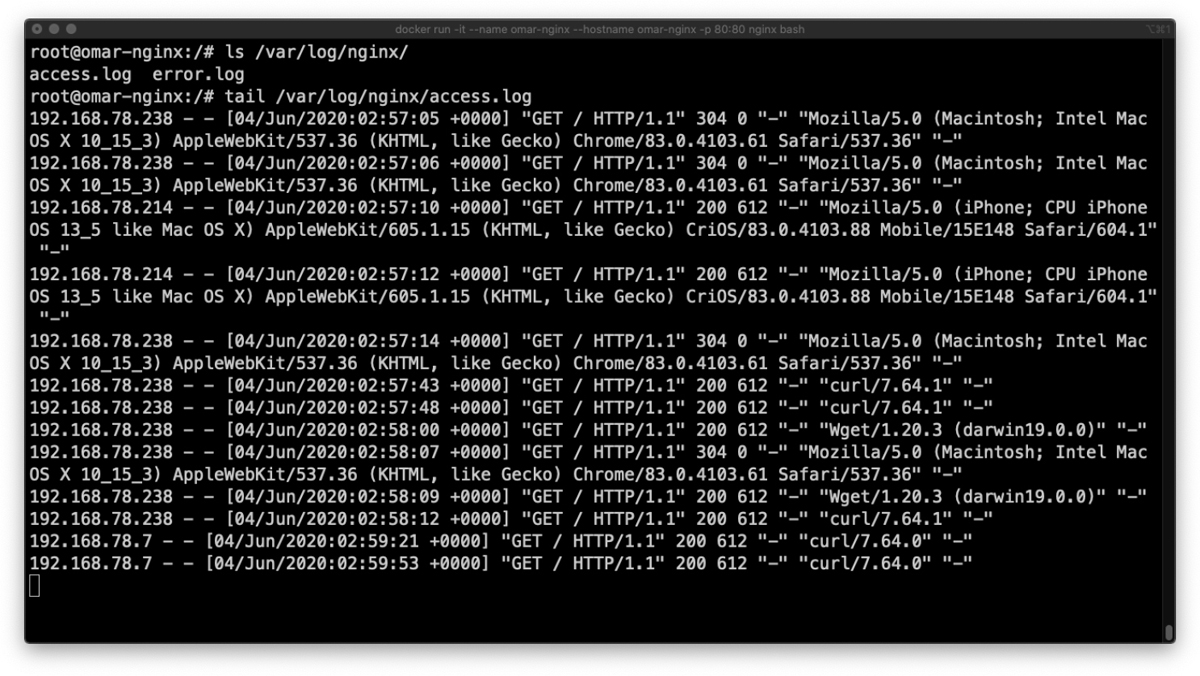

Web server logs are also important and should be monitored. Of course, the amount of activity on these logs can be overwhelming—thus the need for robust SIEM and log management platforms such as Splunk, Naggios, and others. Example 11-3 shows a snippet of a web server (Apache httpd) log.

Example 11-3 Apache httpd Log on a Linux System

192.168.78.167 - - [02/Apr/2022:23:32:46 -0400] “GET / HTTP/1.1” 200 3525 “-” “Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36” 192.168.78.167 - - [02/Apr/2022:23:32:46 -0400] “GET /icons/ubuntu-logo.png HTTP/1.1” 200 3689 “http://192.168.78.8/” “Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36” 192.168.78.167 - - [02/Apr/2022:23:32:47 -0400] “GET /favicon.ico HTTP/1.1” 404 503 “http://192.168.78.8/” “Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36” 192.168.78.167 - - [03/Apr/2022:00:37:11 -0400] “GET / HTTP/1.1” 200 3525 “-” “Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36”

Host Profiling

Profiling hosts on the network is similar to profiling network behavior. This capability can be valuable in identifying vulnerable systems, internal threats, what applications are installed on hosts, and so on. We touch on how to view details directly from the host; however, the main focus is on profiling hosts as an outside entity by looking at a host’s network footprint.

Let’s start by discussing how to view data from a network host and the applications it is using.

Listening Ports

The first goal when looking at a host on a network, regardless of whether the point of a view is from a system administrator, penetration tester, or malicious attacker, is identifying which ports on the host are “listening.” A listening port is a port held open by a running application in order to accept inbound connections. From a security perspective, this may mean a vulnerable system that could be exploited. A worst-case scenario would be an unauthorized active listening port to an exploited system permitting external access to a malicious party. Because most attackers will be outside your network, unauthorized listening ports are typically evidence of an intrusion.

Let’s look at the fundamentals behind ports: Messages associated with application protocols use TCP or UDP. Both of these protocols employ port numbers to identify a specific process to which an Internet or other network message is to be forwarded when it arrives at a server. A port number is a 16-bit integer that is put in the header appended to a specific message unit. Port numbers are passed logically between the client and server transport layers and physically between the transport layer and the IP layer before they are forwarded on. This client/server model is typically seen as web client software. An example is a browser communicating with a web server listening on a port such as port 80. Port values can range between 1 and 65,535, with server applications generally assigned a valued below 1024.

The following is a list of well-known ports used by applications:

• TCP 20 and 21: File Transfer Protocol (FTP)

• TCP 22: Secure Shell (SSH)

• TCP 23: Telnet

• TCP 25: Simple Mail Transfer Protocol (SMTP)

• TCP and UDP 53: Domain Name System (DNS)

• UDP 69: Trivial File Transfer Protocol (TFTP)

• TCP 79: Finger

• TCP 80: Hypertext Transfer Protocol (HTTP)

• TCP 110: Post Office Protocol v3 (POP3)

• TCP 119: Network News Protocol (NNTP)

• UDP 161 and 162: Simple Network Management Protocol (SNMP)

• TCP 443: Secure Sockets Layer over HTTP (HTTPS)

Note

These are just industry guidelines, meaning administrators do not have to run these services over these ports. Typically, administrators will follow these guidelines; however, these services can run over other ports. The services do not have to run on the known port to service list.

There are two basic approaches for identifying listening ports on the network. The first approach is accessing a host and searching for which ports are set to a listening state. This requires a minimal level of access to the host and being authorized on the host to run commands. This could also be done with authorized applications that are capable of showing all possible applications available on the host. The most common host-based tool for checking systems for listening ports on Windows and Linux systems is the netstat command. An example of looking for listening ports using the netstat command is netstat -na, as shown in Example 11-4.

Example 11-4 Identifying Open Ports with netstat

# netstat -na Active Internet connections (servers and established) Proto Recv-Q Send-Q Local Address Foreign Address State tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN tcp 0 912 10.1.2.3:22 192.168.88.12:38281 ESTABLISHED tcp6 0 0 :::53 :::* LISTEN tcp6 0 0 :::22 :::* LISTEN

In Example 11-4 the host is “listening” to TCP port 53 and 22 (in IPv4 and in IPv6). A Secure Shell connection from another host (192.168.88.12) is already established and shown in the output of the command.

Another host command to view similar data is the lsof -i command, as demonstrated in Example 11-5. In Example 11-5, Docker containers are also running web applications and listening on TCP ports 80 (http) and 443 (https).

Example 11-5 Identifying Open Ports with lsof

# lsof -i COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME systemd-r 647 systemd-resolve 12u IPv4 15295 0t0 UDP localhost:domain systemd-r 647 systemd-resolve 13u IPv4 15296 0t0 TCP localhost:domain (LISTEN) sshd 833 root 3u IPv4 18331 0t0 TCP *:ssh (LISTEN) sshd 833 root 4u IPv6 18345 0t0 TCP *:ssh (LISTEN) docker-pr 10470 root 4u IPv6 95596 0t0 TCP *:https (LISTEN) docker-pr 10482 root 4u IPv6 95623 0t0 TCP *:http (LISTEN)

A second and more reliable approach to determining what ports are listening from a host is to scan the host as an outside evaluator with a port scanner application. A port scanner probes a host system running TCP/IP to determine which TCP and UDP ports are open and listening. One extremely popular tool that can do this is the nmap tool, which is a port scanner that can determine whether ports are listening, plus provide many other details. The nmap command nmap-services will look for more than 2200 well-known services to fingerprint any applications running on the port.

It is important to be aware that port scanners provide a best guess, and the results should be validated. For example, a security solution could reply with the wrong information, or an administrator could spoof information such as the version number of a vulnerable server to make it appear to a port scanner that the server is patched. Newer breach detection technologies such as advanced honey pots attempt to attract attackers that have successfully breached the network by leaving vulnerable ports open on systems in the network. They then monitor those systems for any connections. The concept is that attackers will most likely scan and connect to systems that are found to be vulnerable, thus being tricked into believing the fake honey pot is really a vulnerable system.

If attackers are able to identify a server with an available port, they can attempt to connect to that service, determine what software is running on the server, and check to see if there are known vulnerabilities within the identified software that potentially could be exploited, as previously explained. This tactic can be effective when servers are identified as unadvertised because many website administrators fail to adequately protect systems that may be considered “nonproduction” systems yet are still on the network. An example would be using a port scanner to identify servers running older software, such as an older version of Apache HTTPd, NGINX, and other popular web servers and related frameworks that have known exploitable vulnerabilities. Many penetration arsenals such as Metasploit carry a library of vulnerabilities matching the results from a port scanner application. Another option for viewing “listening” ports on a host system is to use a network device such as a Cisco IOS router. A command similar to netstat on Cisco IOS devices is show control-plan host open-ports. A router’s control plane is responsible for handling traffic destined for the router itself versus the data plane being responsible for passing transient traffic.

A best practice for securing any listening and open ports is to perform periodic network assessments on any host using network resources for open ports and services that might be running and are either unintended or unnecessary. The goal is to reduce the risk of exposing vulnerable services and to identify exploited systems or malicious applications. Port scanners are common and widely available for the Windows and Linux platforms. Many of these programs are open-source projects, such as nmap, and have well-established support communities. A risk evaluation should be applied to identified listening ports because some services may be exploitable but wouldn’t matter for some situations. An example would be a server inside a closed network without external access that’s identified to have a listening port that an attacker would never be able to access.

The following list shows some of the known “bad” ports that should be secured:

• 1243/tcp: SubSeven server (default for V1.0-2.0)

• 6346/tcp: Gnutella

• 6667/tcp: Trinity intruder-to-master and master-to-daemon

• 6667/tcp: SubSeven server (default for V2.1 Icqfix and beyond)

• 12345/tcp: NetBus 1.x

• 12346/tcp: NetBus 1.x

• 16660/tcp: Stacheldraht intruder-to-master

• 18753/udp: Shaft master-to-daemon

• 20034/tcp: NetBus Pro

• 20432/tcp: Shaft intruder-to-master

• 20433/udp: Shaft daemon-to-master

• 27374/tcp: SubSeven server (default for V2.1-Defcon)

• 27444/udp: Trinoo master-to-daemon

• 27665/tcp: Trinoo intruder-to-master

• 31335/udp: Trinoo daemon-to-master

• 31337/tcp: Back Orifice

• 33270/tcp: Trinity master-to-daemon

• 33567/tcp: Backdoor rootshell via inetd (from Lion worm)

• 33568/tcp: Trojaned version of SSH (from Lion worm)

• 40421/tcp: Masters Paradise Trojan horse

• 60008/tcp: Backdoor rootshell via inetd (from Lion worm)

• 65000/tcp: Stacheldraht master-to-daemon

One final best practice we’ll cover for protecting listening and open ports is implementing security solutions such as firewalls. The purpose of a firewall is to control traffic as it enters and leaves a network based on a set of rules. Part of the responsibility is protecting listening ports from unauthorized systems—for example, preventing external attackers from having the ability to scan internal systems or connect to listening ports. Firewall technology has come a long way, providing capabilities across the entire network protocol stack and the ability to evaluate the types of communication permitted. For example, older firewalls can permit or deny web traffic via port 80 and 443, but current application layer firewalls can also permit or deny specific applications within that traffic, such as denying YouTube videos within a Facebook page, which is seen as an option in most application layer firewalls. Firewalls are just one of the many tools available to protect listening ports. Best practice is to layer security defense strategies to avoid being compromised if one method of protection is breached.

The list that follows highlights the key concepts covered in this section:

• A listening port is a port held open by a running application in order to accept inbound connections.

• Ports use values that range between 1 and 65,535.

• Netstat and nmap are popular methods for identifying listening ports.

• Netstat can be run locally on a device, whereas nmap can be used to scan a range of IP addresses for listening ports.

• A best practice for securing listening ports is to scan and evaluate any identified listening port as well as to implement layered security, such as combining a firewall with other defensive capabilities.

Logged-in Users/Service Accounts

Identifying who is logged in to a system is important for knowing how the system will be used. Administrators typically have more access to various services than other users because their job requires those privileges. Employees within Human Resources might need more access rights than other employees to validate whether an employee is violating a policy. Guest users typically require very little access rights because they are considered a security risk to most organizations. In summary, best practice for provisioning access rights is to enforce the concept of least privilege, meaning to provision the absolute least number of access rights required to perform a job.

People can be logged in to a system in two ways. The first method is to be physically at a keyboard logged in to the system. The other method is to remotely access the system using something like a Remote Desktop Connection (RDP) protocol. Sometimes the remote system is authorized and controlled, such as using a Citrix remote desktop solution to provide remote users access to the desktop, whereas other times it’s a malicious user who has planted a remote-access tool (RAT) to gain unauthorized access to the host system. Identifying post-breach situations is just one of the many reasons why monitoring remote connections should be a priority for protecting your organization from cyber breaches.

A few different approaches can be used to identify who is logged in to a system. For Windows machines, the first method involves using the Remote Desktop Services Manager suite. This approach requires the software to be installed. Once the software is running, an administrator can remotely access the host to verify who is logged in.

Another tool you can use to validate who is logged in to a Windows system is the PsLoggedOn application. For this application to work, it has to be downloaded and placed somewhere on your local computer that will be remotely checking hosts. Once it’s installed, simply open a command prompt and execute the following command:

C:PsToolspsloggedon.exe \HOST_TO_CONNECT

You can use Windows PowerShell to obtain detailed information about users logged in to the system and many other statistics that can be useful for forensics and incident response activities. Similarly to the psloggedon.exe method, you can take advantage of PowerShell modules like Get-ActiveUser available, which is documented at www.powershellgallery.com/packages/Get-ActiveUser/1.4/.

Note

The PowerShell Gallery (www.powershellgallery.com) is the central repository for PowerShell modules developed by Microsoft and the community.

For Linux machines, various commands can show who is logged in to a system, such as the w command, who command, users command, whoami command, and the last “user name” command. Example 11-6 shows the output of the w command. Two users are logged in as omar and root.

Example 11-6 Using the Linux w Command

$w 21:39:12 up 2 days, 19:18, 2 users, load average: 1.06, 0.95, 0.87 USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT root tty1 - 18:29 0.00s 0.02s 0.00s ssh 10.6.6.3 omar pts/2 10.6.6.3 21:36 0.00s 0.01s 0.00s w

Example 11-7 shows the output of the who, users, last, and lastlog Linux commands. In Example 11-7, you can see the details on the users root and omar, as well as when they logged in to the system using the last command. The lastlog command reports the most recent login of all users or of a given user. In Example 11-17, the lastlog command displays information on all users in the system.

Example 11-7 The who and users Linux Commands

$who root tty1 Jun 3 18:29 omar pts/2 Jun 3 21:36 (10.6.6.3) $users omar root $last omar pts/2 10.6.6.3 Wed Jun 3 21:36 still logged in root tty1 Wed Jun 3 18:29 still logged in wtmp begins Wed Jun 3 18:29:09 2020 $lastlog Username Port From Latest root tty1 Wed Jun 3 18:29:09 +0000 2020 daemon **Never logged in** bin **Never logged in** sys **Never logged in** sync **Never logged in** games **Never logged in** man **Never logged in** lp **Never logged in** mail **Never logged in** news **Never logged in** uucp **Never logged in** proxy **Never logged in** www-data **Never logged in** backup **Never logged in** list **Never logged in** irc **Never logged in** gnats **Never logged in** nobody **Never logged in** syslog **Never logged in** systemd-network **Never logged in** systemd-resolve **Never logged in** messagebus **Never logged in** postfix **Never logged in** _apt **Never logged in** sshd **Never logged in** uuidd **Never logged in** chelin pts/2 10.6.6.45 Tue Jun 2 11:22:41 +0000 2020 omar pts/2 10.6.6.3 Wed Jun 3 21:36:53 +0000 2020

Each option shows a slightly different set of information about who is currently logged in to a system. One command that displays the same information on a Windows system is the whoami command.

Many administrative tools can be used to remotely access hosts, so the preceding commands can be issued to validate who is logged in to the system. One such tool is a virtual network computing (VNC) server. This method requires three pieces. The first part is having a VNC server that will be used to access clients. The second part is having a VNC viewer client installed on the host to be accessed by the server. The final part is an SSH connection that is established between the server and client once things are set up successfully. SSH can also be used directly from one system to access another system using the ssh “remote_host” or ssh “remote_username@remote_host” command if SSH is set up properly. There are many other applications, both open source and commercial, that can provide remote desktop access service to host systems.

It is important to be aware that validating who is logged in to a host can help identify when a host is compromised. According to the kill chain concept, attackers that breach a network will look to establish a foothold through breaching one or more systems. Once they have access to a system, they will seek out other systems by pivoting from system to system. In many cases, attackers want to identify a system with more access rights so they can increase their privilege level, meaning gaining access to an administration account, which typically can access critical systems. Security tools that include the ability to monitor users logged in to systems can flag whether a system associated with an employee accesses a system that’s typically only accessed by administrator-level users, thus indicating a concern for an internal attack through a compromised host. The industry calls this type of security breach detection, meaning technology looking for post-compromise attacks.

The following list highlights the key concepts covered in this section:

• Employing least privilege means to provision the absolute minimum number of access rights required to perform a job.

• The two methods to log in to a host are locally and remotely.

• Common methods for remotely accessing a host are using SSH and using a remote-access server application such as VNC.

Running Processes

Now that we have covered identifying listening ports and how to check users that are logged in to a host system, the next topic to address is how to identify which processes are running on a host system. A running process is an instance of a computer program being executed. There’s lots of value in understanding what is running on hosts, such as identifying what is consuming resources, developing more granular security policies, and tuning how resources are distributed based on QoS adjustments linked to identified applications. We briefly look at identifying processes with access to the host system; however, the focus of this section is on viewing applications as a remote system on the same network.

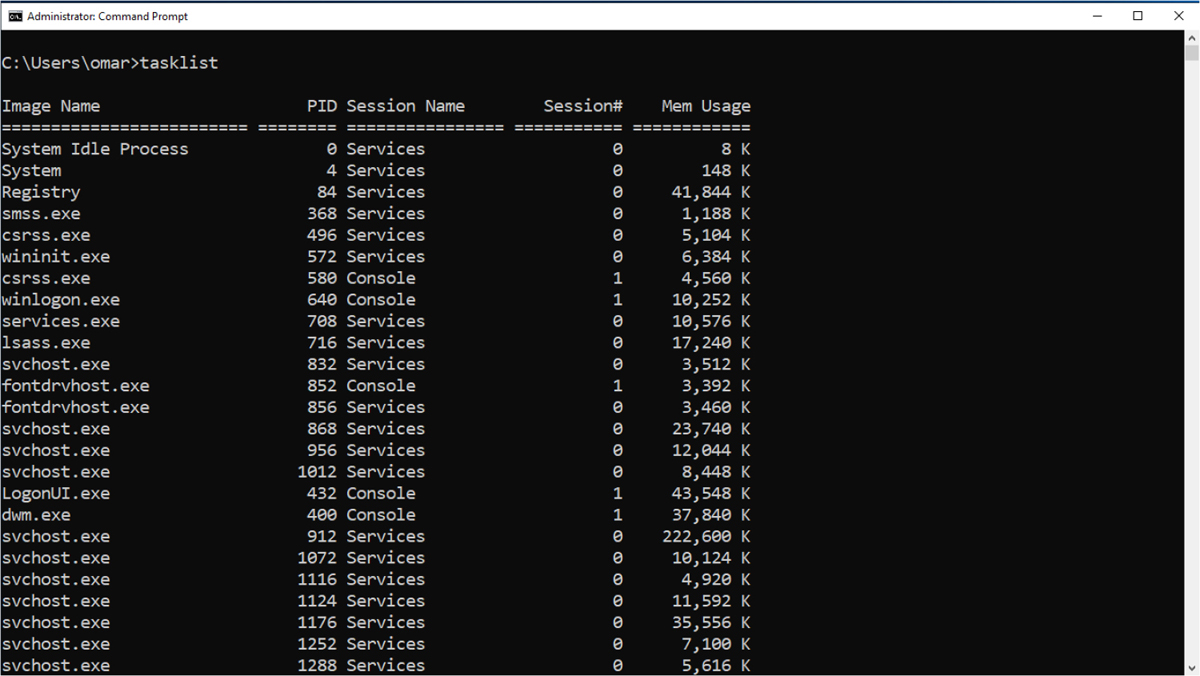

In Windows, one simple method for viewing the running processes when you have access to the host system is to open the Task Manager by pressing Ctrl+Shift+Esc, as shown in Figure 11-1.

Figure 11-1 Windows Task Manager

A similar result can be achieved using the Windows command line by opening the command terminal with the cmd command and issuing the tasklist command, as shown in Figure 11-2.

Figure 11-2 Running the tasklist Command on the Windows Command Line

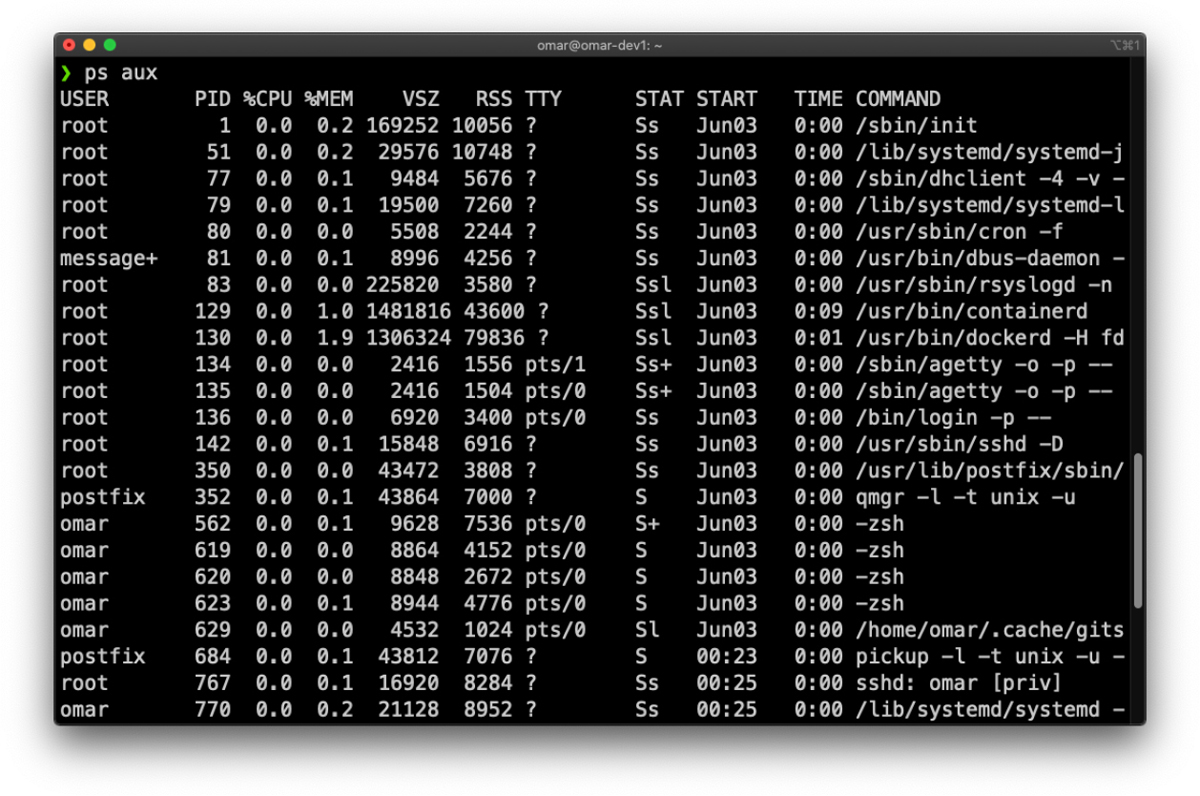

For Linux systems, you can use the ps -e command to display a similar result as the Windows commands previously covered. Figure 11-3 shows executing the ps -e command to display running processes on a macOS system.

Figure 11-3 Using the ps -e Command on a macOS System

You can use the ps -u user command to see all the processes that a specific user is running. For instance, you can use the ps -u root command to see every process running as root. Similarly, you can use the ps -u omar command to see all the processes that the user omar has launched on a system.

These approaches are useful when you can log in to the host and have the privilege level to issue such commands. The focus for the CyberOps Associate exam is identifying these processes from an administrator system on the same network versus administrating the host directly. This requires evaluation of the hosts based on traffic and available ports. There are known services associated with ports, meaning that simply seeing a specific port being used indicates it has a known associated process running. For example, if port 25 shows SMTP traffic, it is expected that the host has a mail process running.

Identifying traffic from a host and the ports being used by the host can be handled using methods we previously covered, such as using a port scanner, having a detection tool inline, or reading traffic from a SPAN port.

Applications Identification

An application is software that performs a specific task. Applications can be found on desktops, laptops, mobile devices, and so on. They run inside the operating system and can be simple tasks or complicated programs. Identifying applications can be done using the methods previously covered, such as identifying which protocols are seen by a scanner, the types of clients (such as the web browser or email client), and the sources they are communicating with (such as what web applications are being used).

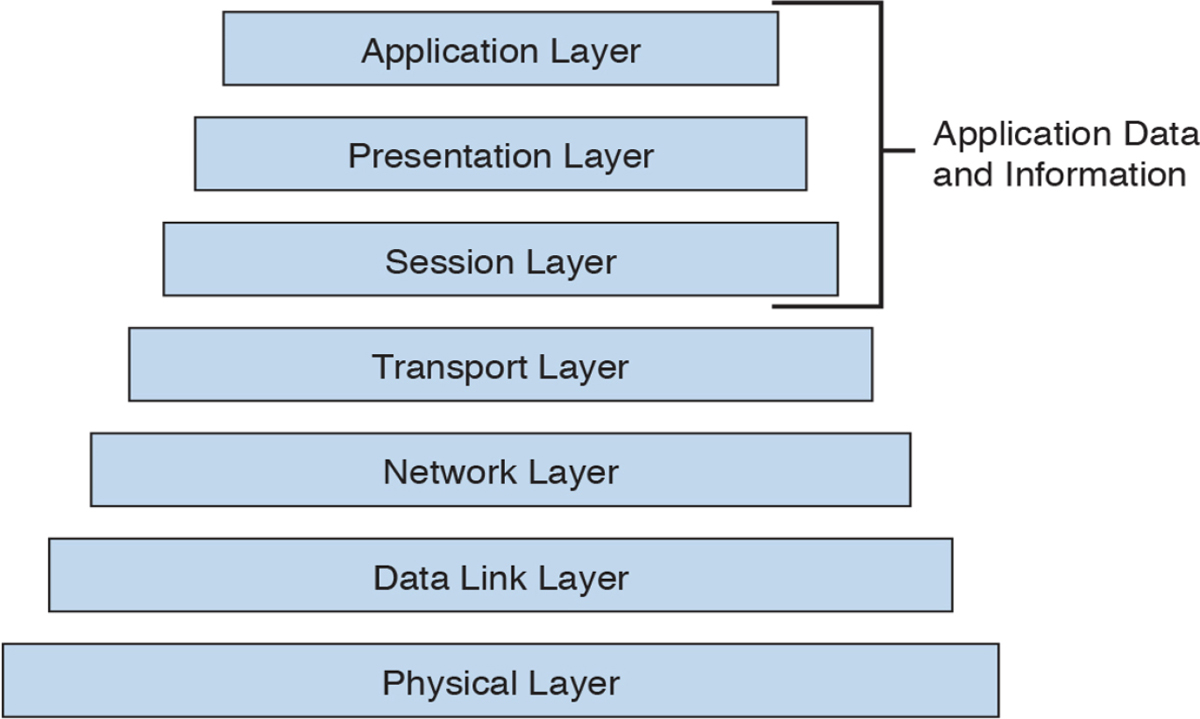

Note

Applications operate at the top of the OSI and TCP/IP layer models, whereas traffic is sent by the transport and lower layers, as shown in Figure 11-4.

Figure 11-4 Representing the OSI and TCP/IP Layer Models

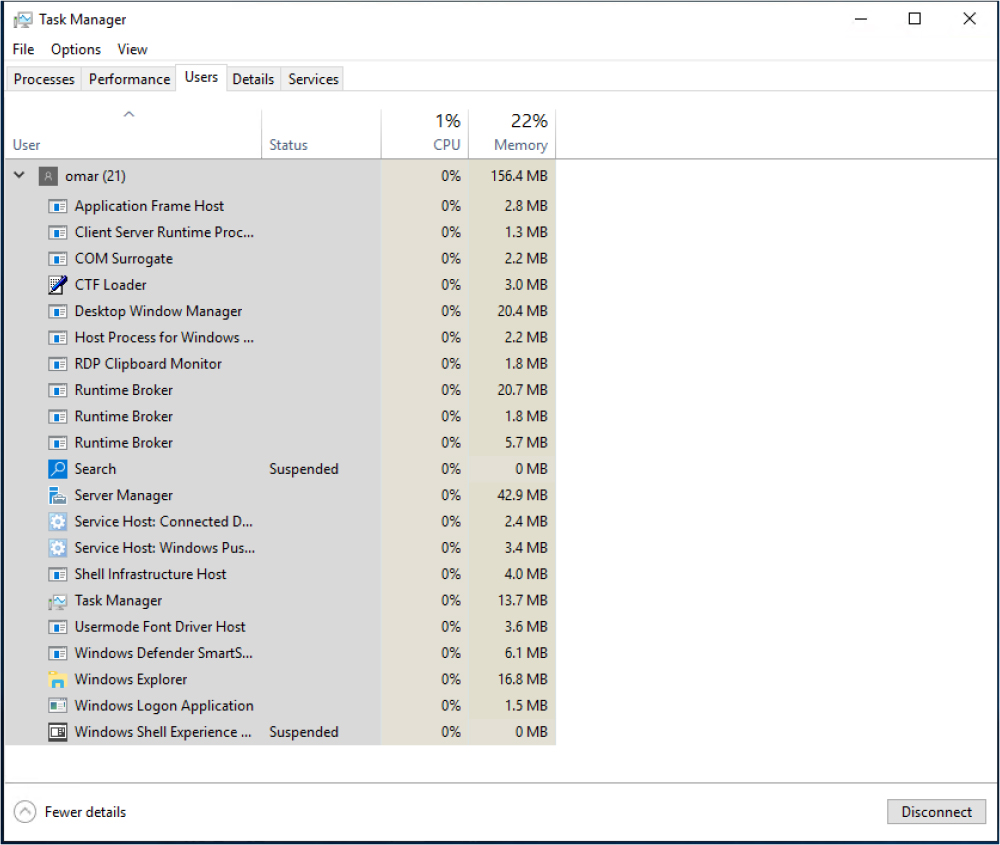

To view applications on a Windows system with access to the host, you can use the same methods we covered for viewing processes. The Task Manager is one option, as shown in Figure 11-5. In this example, notice that only applications owned or run by the user omar are displayed.

Figure 11-5 Windows Task Manager Showing Applications by User

For macOS systems, you can use the Activity Monitor tool, as shown in Figure 11-6.

Figure 11-6 macOS Activity Monitor

Once again, these options for viewing applications are great if you have access to the host as well as the proper privilege rights to run those commands or applications; however, let’s look at identifying the applications as an outsider profiling a system on the same network.

The first tool to consider is a port scanner that can also interrogate for more information than port data. Nmap version scanning can further interrogate open ports to probe for specific services. This tells nmap what is really running versus just the ports that are open. For example, running nmap -v could display lots of details, including the following information showing which port is open and the identified service:

PORT STATE SERVICE 80/tcp open http 631/tcp open ipp 3306/tcp open mysql

A classification engine available in Cisco IOS and Cisco IOS XE software that can be used to identify applications is Network-Based Application Recognition (NBAR). It works by enabling an IOS router interface to map traffic ports to protocols as well as recognize traffic that doesn’t have a standard port, such as various peer-to-peer protocols. NBAR is typically used as a means to identify traffic for QoS policies; however, you can use the show ip nbar protocol-discovery command to identify what protocols and associated applications are identified by NBAR.

Many other tools with built-in application-detection capabilities are available. Most content filters and network proxies can provide application layer details, such as Cisco’s Web Security Appliance (WSA).

Even NetFlow can have application data added when using a Cisco Stealthwatch Flow Sensor. The Flow Sensor adds detection of 900 applications while it converts raw data into NetFlow.

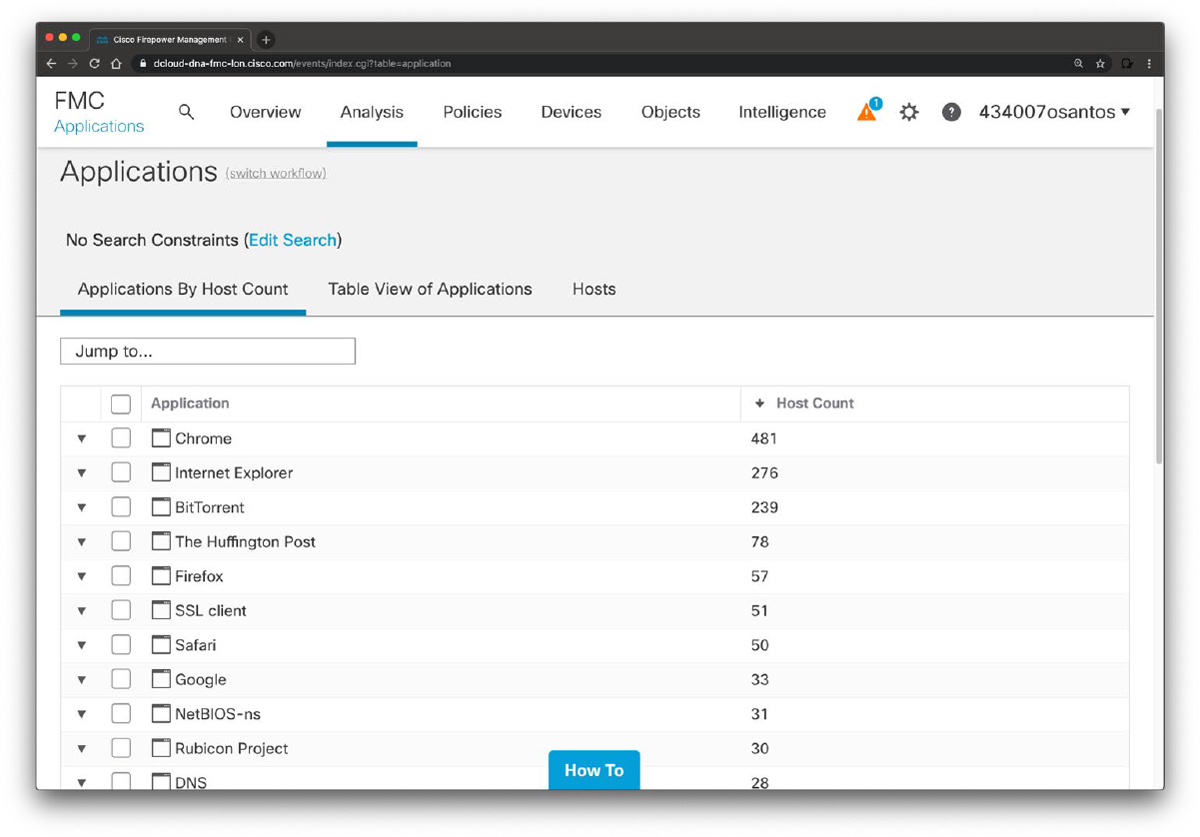

Application layer firewalls also provide detailed application data, such as Cisco Firepower Management Center (FMC), which is shown in Figure 11-7.

Figure 11-7 Firepower Management Center Application Statistics

You can use the Cisco Firepower Management Center (FMC) to view a table of detected applications, as shown in Figure 11-8. Then you can manipulate the event view depending on the information you are looking for as part of your incident response activities.

Figure 11-8 Firepower Detected Applications Table

In summary, networks tools that can detect application layer data must have access to network traffic both to and from a host, such as being inline or off a SPAN port. Examples of tools that can detect application layer data are content filters, application layer firewalls, and tools that have custom application-detection capabilities built in. Also, network scanning can be used to evaluate the ports on host and link traffic to known associated applications.

The following list highlights the key concepts covered in this section:

• An application is software that performs a specific task.

• Applications operate at the top of the OSI and TCP/IP layer models, whereas traffic is sent by the transport layer.

• NBAR in Cisco IOS devices can be used to identify applications.

• Network tools that can detect application layer data must have access to network traffic both to and from a host, such as being inline or off a SPAN port.

Now that we’ve covered profiling concepts, let’s explore how to analyze Windows endpoint logs and other artifacts.

Analyzing Windows Endpoints

In 1984 Microsoft introduced Windows as a graphical user interface (GUI) for Microsoft DOS. Over time, Windows has matured in stability and capabilities with many releases, ranging from Windows 3.0 back in 1990 to the current Windows release. More current releases of Windows have offered customized options; for example, Windows Server was designed for provisioning services to multiple hosts, and Windows Mobile was created for Windows-based phones and was not as successful as other versions of Windows.

The Windows operating system architecture is made up of many components, such as the control panel, administrative tools, and software. The control panel permits users to view and change basic system settings and controls. This includes adding hardware and removing software as well as changing user accounts and accessibility options. Administrative tools are more specific to administrating Windows. For example, System Restore is used for rolling back Windows, and Disk Defragment is used to optimize performance. Software can be various types of applications, from the simple calculator application to complex programing languages.

The CyberOps Associate exam doesn’t ask for specifics about each version of Windows; nor does it expect you to know every component within the Windows architecture. That would involve a ton of tedious detail that is out of scope for the learning objectives of the certification. The content covered here targets the core concepts you are expected to know about Windows. We start with how applications function by defining processes and threads.

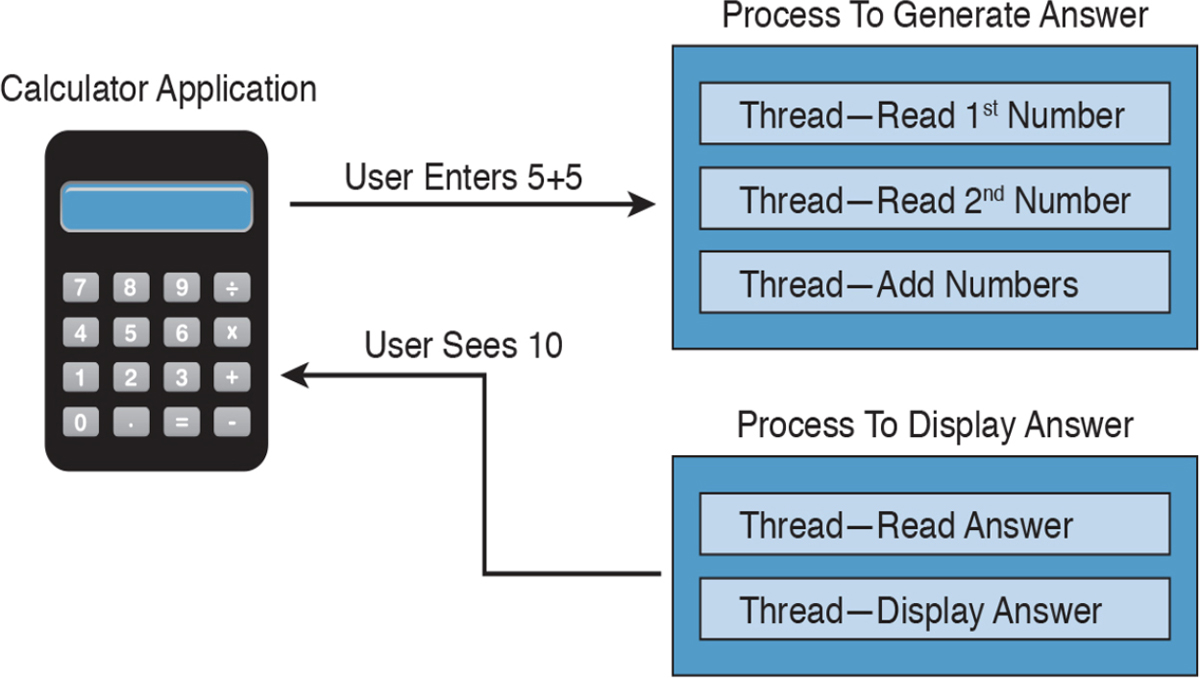

Windows Processes and Threads

![]()

Let’s first run through some technical definitions of processes and threads. When you look at what an application is built from, you will find one or more processes. A process is a program that the system is running. Each process provides the required resources to execute a program. A process is made up of one or more threads, which are the basic units an operating system allocates process time to. A thread can be executed during any part of the application runtime, including being executed by another thread. Each process starts with a single thread, known as the primary thread, but can also create additional threads from any of its threads.

For example, the calculator application could run multiple processes when a user enters numbers to be computed, such as the process to compute the math as well as the process to display the answer. You can think of a thread as each number being called while the process is performing the computation that will be displayed by the calculator application. Figure 11-9 shows this relationship from a high-level view.

Figure 11-9 Calculator Process and Thread Example

Processes can be grouped together and managed as a unit called a job object, which can be used to control the attributes of those processes. Grouping processes together simplifies impacting those processes because any operation performed on a specific job object will impact all associated processes. A thread pool is a group of worker threads that efficiently execute asynchronous callbacks for the application. This is done to reduce the number of application threads and to manage the worker threads. A fiber is a unit of execution that is manually scheduled by an application. Threads can schedule multiple fibers; however, fibers do not outperform properly designed multithreaded applications.

Although these are the foundation concepts to be aware of, it is more important to understand how these items are generally used within Windows for security purposes. Knowing that a Windows process is a running program is important, but it’s equally as import to understand that processes must have permission to run. This keeps processes from hurting the system as well as unauthorized actions from being performed. For example, the process to delete everything on the hard drive should have some authorization settings to avoid killing the computer.

Windows permissions are based on access control to process objects tied to user rights. This means that super users such as administrators will have more rights than other user roles. Windows uses tokens to specify the current security context for a process. This can be accomplished using the CreateProcessWithTokenW function.

Authentication is typically used to provision authorization to a user role. For example, you would log in with a username and password to authenticate to an access role that has specific user rights. Windows would validate this login attempt, and if authentication is successful, you will be authorized for a specific level of access. Windows stores user authentication data in a token that describes the security context of all processes associated with the user role. This means administrator tokens would have permission to delete items of importance, whereas lower-level user tokens would provide the ability to view but not be authorized to delete.

Figure 11-10 ties this token idea to the calculator example, showing processes creating threads. The basic idea is that processes create threads, and threads validate whether they can run using an access token. In this example, the third thread is not authorized to operate for some reason, whereas the other two are permitted.

Figure 11-10 Adding Tokens to the Threads Example

It is important to understand how these components all work together when developing applications and later securing them. Threats to applications, known as vulnerabilities, could be abused to change the intended outcome of an application. This is why it is critical to include security at all stages of application development to ensure these and other application components are not abused. The next section reviews how processes and threads work within Windows memory.

The following list highlights the key process and thread concepts:

![]()

• A process is a program that the system is running and is made of one or more threads.

• A thread is a basic unit that an operating system allocates process time to.

• A job is a group of processes.

• A thread pool is a group of worker threats that efficiently execute asynchronous callbacks for the application.

• Processes must have permission to run within Windows.

• You can use a Windows token to specify the current security context for a process using the CreateProcessWithTokenW function.

• Windows stores data in a token that describes the security context of all processes associated with a particular user role.

Memory Allocation

Now that we have covered how applications function, let’s look at where they are installed and how they run. Computer memory is any physical device capable of storing information in a temporary or permanent state. Memory can be volatile or nonvolatile. Volatile memory is memory that loses its contents when the computer or hardware storage device loses power. RAM is an example of volatile memory. That’s why you never hear people say they are saving something to RAM. It’s designed for application performance.

You might be thinking that there isn’t a lot of value for the data stored in RAM; however, from a digital forensics viewpoint, the following data could be obtained by investigating RAM. (In case you’re questioning some of the items in the list, keep in mind that data that is encrypted must be unencrypted when in use, meaning its unencrypted state could be in RAM. The same goes for passwords!)

• Running processes: Who is logged in

• Passwords in cleartext: Unencrypted data

• Instant messages: Registry information

• Executed console commands: Attached devices

• Open ports: Listening applications

Nonvolatile memory (NVRAM), on the other hand, holds data with or without power. EPROM would be an example of nonvolatile memory.

Note

Memory and disk storage are two different things. Computers typically have anywhere from 1 GB to 16 GB of RAM, but they can have hundreds of terabytes of disk storage. A simple way to understand the difference is that memory is the space that applications use when they are running, whereas storage is the place where applications store data for future use.

Memory can be managed in different ways, referred to as memory allocation or memory management. In static memory allocation a program allocates memory at compile time. In dynamic memory allocation a program allocates memory at runtime. Memory can be assigned in blocks representing portions of allocated memory dedicated to a running program. A program can request a block of memory, which the memory manager will assign to the program. When the program completes whatever it’s doing, the allocated memory blocks are released and available for other uses.

Next up are stacks and heaps. A stack is memory set aside as spare space for a thread of execution. A heap is memory set aside for dynamic allocation (that is, where you put data on the fly). Unlike a stack, a heap doesn’t have an enforced pattern for the allocation and deallocation of blocks. With heaps, you can allocate a block at any time and free it at any time. Stacks are best when you know ahead of time how much memory is needed, whereas heaps are better for when you don’t know how much data you will need at runtime or if you need to allocate a lot of data. Memory allocation happens in hardware, in the operating system, and in programs and applications.

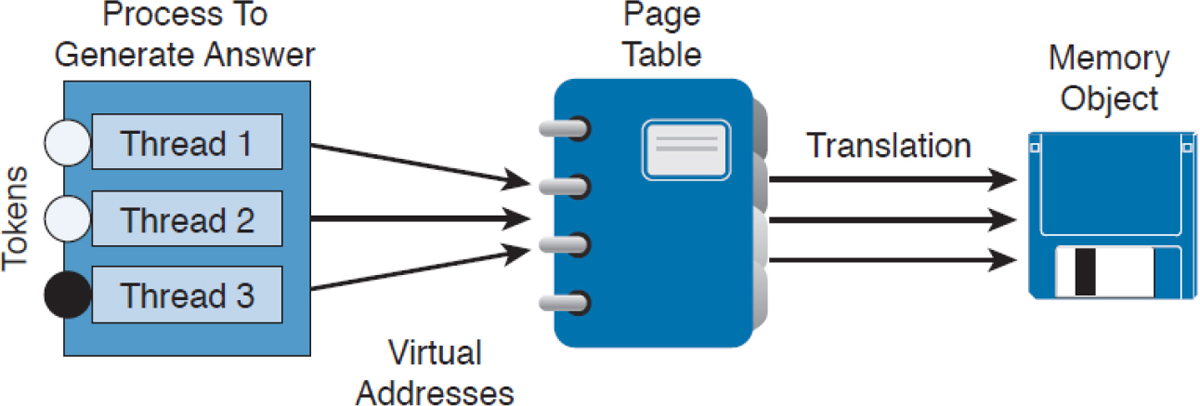

Processes function in a set of virtual memory known as virtual address space. The virtual address space for each process is private and cannot be accessed by other processes unless it is specifically shared. The virtual address does not represent the actual physical location of an object in memory; instead, it’s simply a reference point. The system maintains a page table for each process that is used to reference virtual memory to its corresponding physical address space. Figure 11-11 shows this concept using the calculator example, where the threads point to a page table that holds the location of the real memory object.

Figure 11-11 Page Table Example

The virtual address space of each process can be smaller or larger than the total physical memory available on the computer. A working set is a subset of the virtual address space of an active process. If a thread of a process attempts to use more physical memory than is currently available, the system will page some of the memory contest to disk. The total amount of virtual address space available to process on a specific system is limited by the physical memory and free space on the hard disks for the paging file.

We next touch on a few other concepts of how Windows allocates memory. The ultimate result is the same, but the approach for each is slightly different. VirtualAlloc is a specialized allocation of OS virtual memory system; it allocates straight into virtual memory by reserving memory blocks. HeapAlloc allocates any size of memory requested, meaning it allocates by default regardless of size. Malloc is another memory allocation option, but it is more programming focused and not Windows dependent. It is not important for the CyberOps Associate exam to know the details of how each memory allocation option functions. The goal is just to have a general understanding of memory allocation.

The following list highlights the key memory allocation concepts:

![]()

• Volatile memory is memory that loses its contents when the computer or hardware storage device loses power.

• Nonvolatile memory (NVRAM) holds data with or without power.

• In static memory allocation a program allocates memory at compile time.

• In dynamic memory allocation a program allocates memory at runtime.

• A heap is memory that is set aside for dynamic allocation.

• A stack is the memory that is set aside as spare space for a thread of execution.

• A virtual address space is the virtual memory that is used by processes.

• A virtual address is a reference to the physical location of an object in memory. A page table translates virtual memory into its corresponding physical addresses.

• The virtual address space of each process can be smaller or larger than the total physical memory available on the computer.

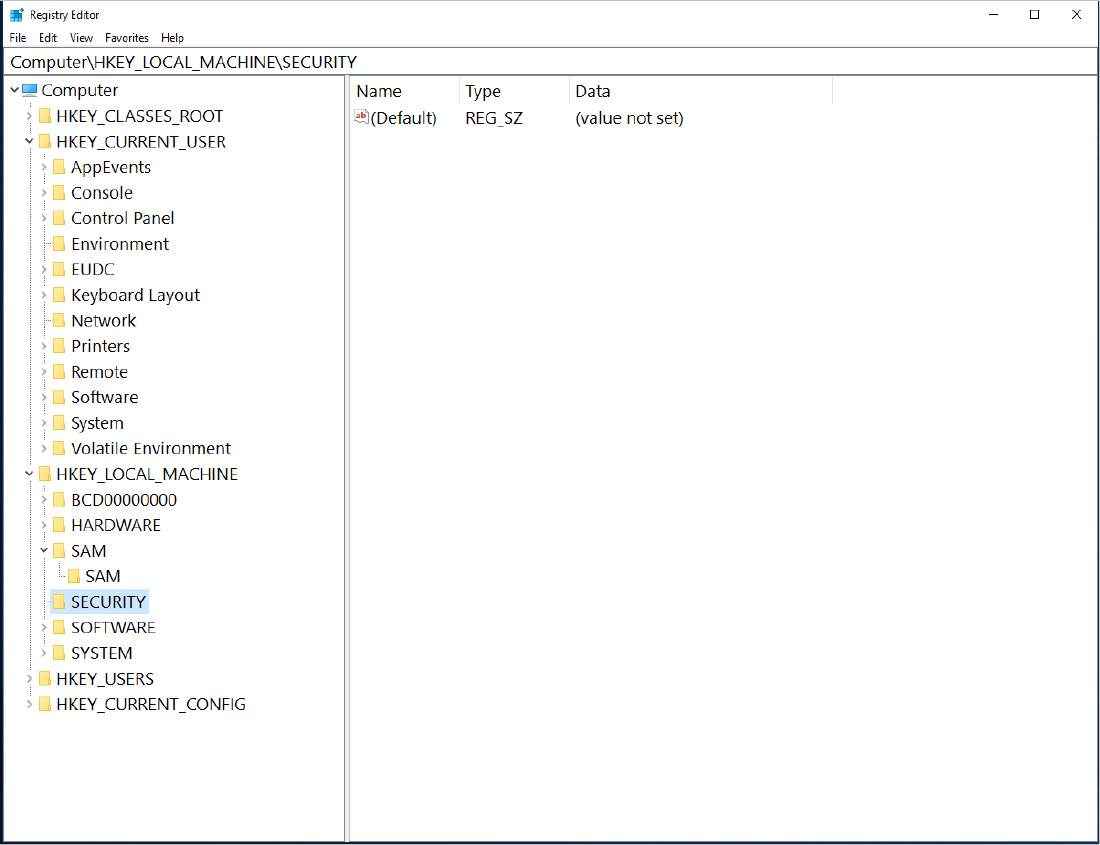

The Windows Registry

Now that we have covered what makes up an application and how it uses memory, let’s look at the Windows Registry. Essentially, anything performed in Windows refers to or is recorded into the Registry. Therefore, any actions taken by a user reference the Windows Registry. The Windows Registry is a hierarchical database for storing the information necessary to configure a system for one or more users, applications, and hardware devices.

Some functions of the Windows Registry are to load device drivers, run startup programs, set environment variables, and store user settings and operating system parameters. You can view the Windows Registry by typing the command regedit in the Run window. Figure 11-12 shows a screenshot of the Registry Editor window.

Figure 11-12 Windows Registry Editor

The Registry is like a structured file system. The five hierarchal folders on the left are called hives and begin with HKEY (meaning the handle to a key). Two of the hives are real locations: HKEY_USERS (HKU) and HKEY_LOCAL_MACHINE (HKLM). The remaining three are shortcuts to branches within the HKU and HKLM hives. Each of the five main hives is composed of keys that contain values and subkeys. Values pertain to the operation system or applications within a key. The Windows Registry is like an application containing folders. Inside an application, folders hold files. Inside the Windows Registry, the hives hold values.

The following list defines the functions of the five hives within the Windows Registry:

• HKEY_CLASSES_ROOT (HKCR): HKCR information ensures that the correct program opens when it is executed in Windows Explorer. HKCR also contains further details on drag-and-drop rules, shortcuts, and information on the user interface. The reference location is HKLMSoftwareClasses.

• HKEY_CURRENT_USER (HKCU): HKCU contains configuration information for any user who is currently logged in to the system, including user folders, screen colors, and control panel settings. The reference location for a specific user is HKEY_USERS. The reference for general use is HKU.DEFAULT.

• HKEY_CURRENT_CONFIG (HCU): HCU stores information about the system’s current configuration. The reference for HCU is HKLMConfigprofile.

• HKEY_LOCAL_MACHINE (HKLM): HKLM contains machine hardware-specific information that the operating system runs on. This includes a list of drives mounted on the system and generic configurations of installed hardware and applications. HKLM is a hive that isn’t referenced from within another hive.

• HKEY_USERS (HKU): HKU contains configuration information of all user profiles on the system. This includes application configurations and visual settings. HKU is a hive that isn’t referenced from within another hive.

Some interesting data points can be gained from analyzing the Windows Registry. All registries contain a value called LastWrite time, which is the last modification time of a file. This value can be used to identify the approximate date and time an event occurred. Autorun locations are Registry keys that launch programs or applications during the boot process. Autorun is extremely important to protect because it could be used by an attacker for executing malicious applications. The most recently used (MRU) list contains entries made due to actions performed by the user. The purpose of the MRU list is to contain items in the event the user returns to them in the future. Think of the MRU list as how a cookie is used in a web browser. The UserAssist key contains a document of what the user has accessed.

Network settings, USB devices, and mounted devices all have Registry keys that can be pulled up to identify activity within the operating system. Having a general understanding of Windows registration should be sufficient for questions found on the CyberOps Associate exam.

The following list highlights the key Windows registration concepts:

![]()

• The Windows Registry is a hierarchical database used to store information necessary to configure the system for one or more users, applications, and hardware devices.

• Some functions of the Registry are to load device drivers, run startup programs, set environment variables, and store user settings and operating system parameters.

• The five main folders in the Windows Registry are called hives. Three of these hives are reference points inside of another primary hive.

• Hives contain values pertaining to the operation system or applications within a key.

Windows Management Instrumentation

The next topic focuses on managing Windows systems and sharing data with other management systems. Windows Management Instrumentation (WMI) is a scalable system management infrastructure built around a single, consistent, standards-based, extensible, object-oriented interface. Basically, WMI is Microsoft’s approach to implementing Web-Based Enterprise Management (WBEM), which is a tool used by system management application developers for manipulating system management information. WMI uses the Common Information Model (CIM) industry standard to represent systems, applications, networks, devices, and other managed components. CIM is developed and maintained by the Distributed Management Task Force (DMTF).

It is important to remember that WMI is only for computers running Microsoft Windows. WMI comes preinstalled on all supported versions of Windows. Figure 11-13 shows a Windows computer displaying the WMI service.

Figure 11-13 Windows Computer Showing the WMI Service

The purpose of WMI is to define a set of proprietary environment-independent specifications used for management information that’s shared between management applications. WMI allows scripting languages to locally and remotely manage Microsoft Windows computers and services. The following list provides examples of what WMI can be used for:

• Providing information about the status of local or remote computer systems

• Configuring security settings

• Modifying system properties

• Changing permissions for authorized users and user groups

• Assigning and changing drive labels

• Scheduling times for processes to run

• Backing up the object repository

• Enabling or disabling error logging

Using WMI by itself doesn’t provide these capabilities or display any data. You must pull this information using scripts and other tools. WMI can be compared to the electronics data of a car, where the car dashboard is the tool used to display what the electronics are doing. Without the dashboard, the electronics are there, but you won’t be able to interact with the car or obtain any useful data. An example of WMI would be using a script to display the time zone configured on a Windows computer or issuing a command to change the time zone on one or more Windows computers.

When considering Windows security, you should note that WMI could be used to perform malicious activity. Malicious code could pull sensitive data from a system or automate malicious tasks. An example would be using WMI to escalate privileges so that malware can function at a higher privilege level if the security settings are modified. Another attack would be using WMI to obtain sensitive system information.

There haven’t been many WMI attacks seen in the wild; however, Trend Micro published a white paper on one piece of WMI malware called TROJ_WMIGHOST.A. So although such attacks are not common, they are possible. WMI requires administrative permission and rights to be installed; therefore, a best practice to protect systems against this form of exploitation is to restrict access to the WMI service.

The following list highlights the key WMI concepts:

![]()

• WMI is a scalable system management infrastructure built around a single, consistent, standards-based, extensible, object-oriented interface.

• WMI is only for Windows systems.

• WMI comes preinstalled on many Windows systems. For older Windows versions, you may need to download and install it.

• WMI data must be pulled in with scripting or tools because WMI by itself doesn’t show data.

Handles

In Microsoft Windows, a handle is an abstract reference value to a resource. Putting this another way, a handle identifies a particular resource you want to work with using the Win32 APIs. The resource is often memory, an open file, a pipe, or an object managed by another system. Handles hide the real memory address from the API user while permitting the system to reorganize physical memory in a way that’s transparent to the program.

Handles are like pointers, but not in the sense of dereferencing a handle to gain access to some data. Instead, a handle is passed to a set of functions that can perform actions on the object that the handle identifies. In comparison, a pointer contains the address of the item to which it refers, whereas a handle is an abstract of a reference and is managed externally. A handler can have its reference relocated in memory by the system without it being invalidated, which is impossible to do with a pointer because it directly points to something (see Figure 11-14).

Figure 11-14 Calculator Example Showing Handles

An important security concept is that a handle not only can identify a value but also associate access rights to that value. Consider the following example:

int fd = open(“/etc/passwd”, O_RDWR);

In this example, the program requests to read the system password file “/etc/passwd” in read/write mode (noted as 0_RDWR). This means the program asks to open this file with the specified access rights, which are read and write. If this is permitted by the operating system, it will return a handle to the user. The actual access is controlled by the operating system, and the handle can be looked at as a token of that access right provided by the operating system. Another outcome could be the operating system denying access, which means not opening the file or providing a handle. This shows why handles can be stored but never changed by the programmer; they are issued and managed by the operating system and can be changed on the fly by the operating system.

Handles generally end with .h (for example, WinDef.h) and are unsigned integers that Windows uses to internally keep track of objects in memory. When Windows moves an object, such as a memory block, to make room in memory and thus impacts the location of the object, the handles table is updated. Think of a handle as a pointer to a structure Windows doesn’t want you to directly manipulate. That is the job of the operating system.

One security concern with regard to handles is a handle leak. This occurs when a computer program requests a handle to a resource but does not free the handle when it is no longer used. The outcome of this is a resource leak, which is similar to a pointer causing a memory leak. A handle leak could happen when a programmer requests a direct value while using a count, copy, or other operation that would break when the value changes. Other times it is an error caused by poor exception handling. An example would be a programmer using a handle to reference some property and proceeding without releasing the handle. If this issue continues to occur, it could lead to a number of handles being marked as “in use” and therefore unavailable, causing performance problems or a system crash.

The following list highlights the key handle concepts:

![]()

• A handle is an abstract reference value to a resource.

• Handles hide the real memory address from the API user while permitting the system to reorganize physical memory in a way that’s transparent to the program.

• A handle not only can identify a value but also associate access rights to that value.

• A handle leak can occur if a handle is not released after being used.

Services

The next topic to tackle is Windows services, which are long-running executable applications that operate in their own Windows session. Basically, they are services that run in the background. Services can automatically kick off when a computer starts up, such as the McAfee security applications shown in Figure 11-15, and they must conform to the interface rules and protocols of the Services Control Manager.

Figure 11-15 Windows Services Control Manager

Services can also be paused and restarted. Figure 11-15 shows some services started under the Status tab. You can see whether a service will automatically start under the Startup Type tab. To view the services on a Microsoft Windows system as shown in Figure 11-15, type Services in the Run window. This brings up the Services Control Manager.

Services are ideal for running things within a user security context, starting applications that should always be run for a specific user, and for long-running functionality that doesn’t interfere with other users who are working on the same computer. An example would be monitoring whether storage is consumed past a certain threshold. The programmer could create a Windows service application that monitors storage space and set it to automatically start at bootup so it is continuously monitoring for the critical condition. If the user chooses not to monitor her system, she could open the Services Control Manager and change the startup type to Manual, meaning it must be manually turned on. Alternatively, she could just stop the service. The services inside the Services Control Manager can be started, stopped, or triggered by an event. Because services operate in their own user account, they can operate when a user is not logged in to the system, meaning that the storage space monitoring application could be set to automatically run for a specific user or for any other users, including when no user is logged in.

Windows administrators can manage services using the Services snap-in, Sc.exe, or Windows PowerShell. The Services snap-in is built into the Services Management Console and can connect to a local or remote computer on a network, thus enabling the administrator to perform some of the following actions:

• View installed services

• Start, stop, or restart services

• Change the startup type for a service

• Specify service parameters when available

• Change the startup type

• Change the user account context where the service operates

• Configure recovery actions in the event a service fails

• Inspect service dependencies for troubleshooting

• Export the list of services

Sc.exe, also known as the Service Control utility, is a command-line version of the Services snap-in. This means it can do everything the Services snap-in can do as well as install and uninstall services. Windows PowerShell can also manage Windows services using the following commands, also called cmdlets:

• Get-Service: Gets the services on a local or remote computer

• New-Service: Creates a new Windows service

• Restart-Service: Stops and then starts one or more services

• Resume-Service: Resumes one or more suspended (paused) services

• Set-Service: Starts, stops, and suspends a service, and changes its properties