The more we think about the cloud, the more we learn that the same challenges that existed on-premise have simply morphed in the cloud. Yes, the risk surface has changed. Yes, the exposure has changed. And yes, the ownership and the environment have changed. Yet we are still talking about privileged access management, identity management, vulnerability management, patch management, and credential and secrets security. The disciplines we have worked with for years are the same. However, how we implement the controls, provide management, and perform detailed monitoring is different in the cloud. Therefore, it is important to keep the cybersecurity basics in mind and simply think about new ways of applying them in the cloud. The mitigation strategies that follow are the most effective for cloud-based attack vectors, and they are extensions of what we have been doing for years. The more things change, the more they stay the same.

Privileged Access Workstations

One of the most common methods for protecting the cloud is to secure all administrative access to the cloud computing environments used for management. Typical workstations used by individuals with privileged credentials are appealing targets for threat actors. This is because those credentials can be stolen and then used for attacks and subsequent lateral movement. Since these are real credentials, the attack is much harder to detect. Therefore, the best practice for protecting administration of the cloud is to provide a dedicated asset (physical or virtual machine) exclusively for privileged access to the cloud. Such an asset is called a Privileged Access Workstation (PAW).

In a typical environment, an identity (user) is provided a dedicated PAW for cloud administration and unique credentials and/or secrets to perform tasks linked to the asset and user. If access using those secrets is attempted from non-PAW resources or on another PAW, it can be an indicator of compromise.
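The detection rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a product integration: it assumes a hypothetical inventory (`PAW_ASSIGNMENTS`) that maps each privileged identity to its dedicated PAW, and a simplified authentication event record (`AuthEvent`). A real privileged access management or SIEM platform would supply this data from its own model.

```python
from dataclasses import dataclass

# Hypothetical assignment of each privileged identity to its dedicated PAW.
PAW_ASSIGNMENTS = {
    "alice-admin": "paw-001",
    "bob-admin": "paw-002",
}


@dataclass
class AuthEvent:
    user: str         # identity whose credentials were presented
    source_host: str  # host the authentication originated from


def is_suspicious(event: AuthEvent, assignments: dict[str, str]) -> bool:
    """Flag use of PAW-bound credentials from anywhere but the assigned PAW.

    Covers both cases in the text: a non-PAW source and a different PAW.
    Identities with no assignment are flagged as well, since in this model
    they should not hold cloud administration credentials at all.
    """
    assigned = assignments.get(event.user)
    return assigned is None or event.source_host != assigned


if __name__ == "__main__":
    events = [
        AuthEvent("alice-admin", "paw-001"),  # expected: her assigned PAW
        AuthEvent("alice-admin", "paw-002"),  # another user's PAW
        AuthEvent("bob-admin", "laptop-17"),  # non-PAW resource
    ]
    for e in events:
        if is_suspicious(e, PAW_ASSIGNMENTS):
            print(f"Possible indicator of compromise: {e.user} from {e.source_host}")
```

An event flagged this way is a candidate indicator of compromise to route into alerting and investigation, not proof of an attack.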

Operationally, when logging into their PAWs, users still should not have direct access to the cloud. A privileged access management solution should broker the session, monitor activity, and inject managed credentials (to obfuscate them from the user) to enable them to perform their mission securely. When this is set up correctly, all these steps are completely transparent to the end user and only take a few seconds to complete automatically. This approach is a security best practice. Therefore, solutions that provide privileged access management are crucial to managing privileged access through PAWs, especially when connecting to the cloud.

A properly configured PAW:

- Uses dedicated assets (physical or virtual) that are hardened and monitored for all activity.
- Operates with the concept of least privilege and operationalizes application allow and block listing (formerly application whitelisting and blacklisting).
- Is installed on modern hardware that supports a TPM (Trusted Platform Module), preferably version 2.0 or higher, to support the latest biometrics and encryption.
- Is managed for vulnerabilities, with automated, timely patch management.
- Requires MFA for authentication into sensitive resources.
- Operates on a dedicated or trusted network and never on the same network as potentially insecure devices.
- Uses only a wired network connection. Wireless communications of any type are not acceptable for PAWs.
- Uses physical tamper cables to prevent theft of the device, especially if the PAW is a laptop in a high-traffic area.

The following should never be permitted on a PAW:

- Browsing the Internet, regardless of the browser
- Email and messaging applications
- Activity over insecure network connectivity, such as Wi-Fi or cellular
- Use with USB storage media or unauthorized USB peripherals
- Remote access into the PAW from any workstation
- Applications or services that would undermine security best practices or create new vulnerabilities
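The PAW requirements and prohibitions above lend themselves to an automated configuration check. The sketch below assumes a hypothetical inventory record (`PawConfig`) and a hypothetical set of prohibited application categories (`PROHIBITED_APPS`). The field names are illustrative and would need to be mapped to whatever your endpoint management tooling actually reports.

```python
from dataclasses import dataclass, field


@dataclass
class PawConfig:
    # Hypothetical inventory fields; adapt to your endpoint management data.
    dedicated: bool
    tpm_version: float  # 0.0 if no TPM is present
    mfa_required: bool
    wireless_enabled: bool
    usb_storage_allowed: bool
    inbound_remote_access: bool
    installed_apps: set[str] = field(default_factory=set)


# Hypothetical application categories the text says a PAW must not run.
PROHIBITED_APPS = {"web-browser", "email-client", "messaging-client"}


def audit_paw(cfg: PawConfig) -> list[str]:
    """Return human-readable findings; an empty list means no issues found."""
    findings = []
    if not cfg.dedicated:
        findings.append("asset is not dedicated to privileged access")
    if cfg.tpm_version < 2.0:
        findings.append("TPM 2.0 or higher not present")
    if not cfg.mfa_required:
        findings.append("MFA is not required")
    if cfg.wireless_enabled:
        findings.append("wireless networking is enabled")
    if cfg.usb_storage_allowed:
        findings.append("USB storage is allowed")
    if cfg.inbound_remote_access:
        findings.append("remote access into the PAW is enabled")
    # Sorted so the report order is deterministic.
    for app in sorted(cfg.installed_apps & PROHIBITED_APPS):
        findings.append(f"prohibited application installed: {app}")
    return findings


if __name__ == "__main__":
    cfg = PawConfig(True, 2.0, True, True, False, False, {"email-client"})
    for finding in audit_paw(cfg):
        print(finding)
```

A check like this complements, rather than replaces, the hardening and monitoring a PAW already requires; it simply makes drift from the intended configuration visible.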

To streamline this approach and avoid using two physical computers, many organizations leverage virtualization technologies (from VMware, Microsoft, Parallels, Oracle, etc.) that allow a single asset to run a PAW side by side with the base operating system. The primary system is used for daily productivity tasks, and the virtual machine serves as the PAW. When using this approach, it is preferable to virtualize both the daily productivity environment and the PAW on a hardened OS for better segmentation, although this may not always be practical. At a minimum, the PAW, not the daily productivity environment, should be the virtualized system, isolated from the host OS (no clipboard sharing, file transfer, etc.).

Access Control Lists

An access control list (ACL) is a security mechanism used to define who or what has access to your assets, buckets, network, storage, etc., and the permissions model for the object. An ACL consists of one or more entries that explicitly allow or deny access based on an account, role, or service in the cloud. An entry gives a specified entity the ability to perform specific actions.

Each ACL entry consists of two components:

Permission: Defines which actions can be performed (e.g., read, write, create, delete).

Scope: Defines who or what can perform the specified actions (e.g., a specific account or role).

For example, to restrict access to data held in a cloud storage bucket, you could create two entries. In one entry, you would give “Read” permission to a scope of authorized accounts that can view the data.

In the other entry, you would give “Write” and/or “Delete” permissions to the scope of accounts responsible for maintenance.

The end result is a set of ACL entries that restrict access to the data contained in the storage bucket on a “need to know” basis. While this example is simplistic for an enterprise cloud-based application, it forms the basis for our ACL discussion and for protecting assets in the cloud.
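The two-entry storage bucket example can be modeled with a small default-deny evaluator. This is a simplified sketch under stated assumptions: it models only allow entries (anything not granted is denied), uses hypothetical role names, and does not reproduce any cloud service provider’s actual ACL or policy semantics, which also support explicit deny entries, conditions, and inheritance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AclEntry:
    permissions: frozenset[str]  # actions granted, e.g. {"read"}
    scope: frozenset[str]        # accounts or roles the entry applies to


def is_allowed(acl: list[AclEntry], principal: str, action: str) -> bool:
    """Default deny: allow only if some entry grants `action` to `principal`."""
    return any(principal in e.scope and action in e.permissions for e in acl)


# Hypothetical bucket ACL mirroring the two-entry example in the text.
bucket_acl = [
    AclEntry(frozenset({"read"}), frozenset({"analyst-role", "auditor-role"})),
    AclEntry(frozenset({"write", "delete"}), frozenset({"maintenance-role"})),
]

if __name__ == "__main__":
    print(is_allowed(bucket_acl, "analyst-role", "read"))
    print(is_allowed(bucket_acl, "analyst-role", "delete"))
```

Note that the maintenance role in this sketch can write and delete but not read, which is exactly the kind of separation a "need to know" ACL makes explicit.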

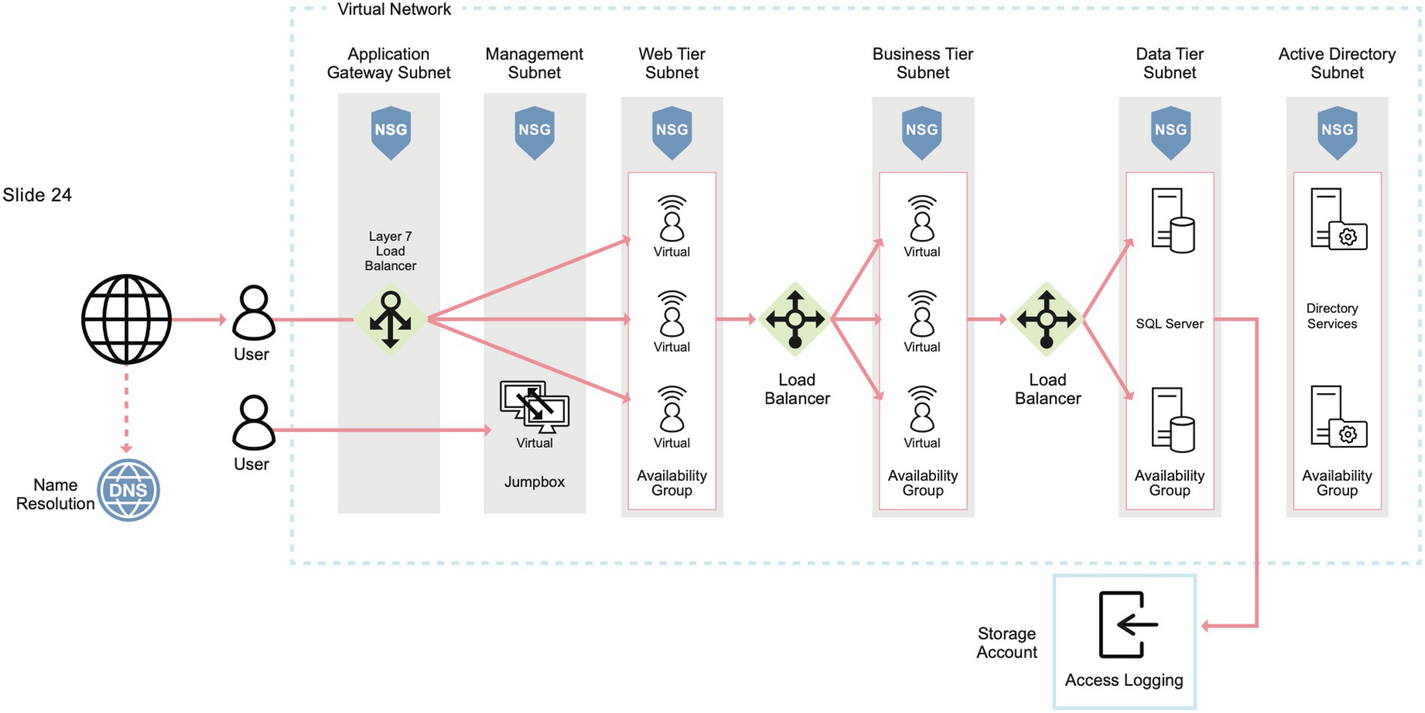

N-tier cloud-based web application (components: Name Resolution, Application Gateway Subnet, Management Subnet, Web Tier Subnet, Business Tier Subnet, Data Tier Subnet, Active Directory Subnet, and Storage Account)

Using this architecture as an example, ACLs should be applied to prevent:

1. Network traffic from jumping over tiers without passing through the appropriate assets
2. Connections from the Internet from communicating directly with any layer other than the load balancer
3. Traffic from inappropriate nations or known hostile IP addresses from reaching the application, by adding geolocation ACLs (using IP and geolocation services) to the load balancer itself
4. Inappropriate communications within a tier, which could enable lateral movement or inappropriate application-to-application access
5. Communications from any layer to the virtual environment management resources or virtual network
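The ACL goals for the n-tier architecture above can be expressed as an allowlist of permitted tier-to-tier flows, with anything else flagged for review. This sketch assumes hypothetical tier names loosely based on the diagram (the application gateway plays the load balancer role), treats each flow as a connection initiation (return traffic is assumed to be handled by stateful rules), and ignores ports and protocols, which a real ACL would also specify. It also flags all intra-tier traffic, which is stricter than the text requires.

```python
from typing import Optional

# Hypothetical tier names; "internet" represents any external source.
ALLOWED_FLOWS = {
    ("internet", "app-gateway"),
    ("app-gateway", "web"),
    ("web", "business"),
    ("business", "data"),
}
MANAGEMENT_TIERS = {"management"}


def classify_flow(src: str, dst: str) -> Optional[str]:
    """Return None for an expected flow, otherwise a reason to alert on it."""
    if (src, dst) in ALLOWED_FLOWS:
        return None
    if src == "internet":
        return "Internet traffic bypassing the application gateway"
    if dst in MANAGEMENT_TIERS:
        return "access to management resources"
    if src == dst:
        return "intra-tier traffic (possible lateral movement)"
    return "tier jumping or unexpected route"


if __name__ == "__main__":
    for src, dst in [("web", "business"), ("web", "data"), ("internet", "data"), ("web", "web")]:
        reason = classify_flow(src, dst)
        print(f"{src} -> {dst}: {reason or 'allowed'}")
```

Because every permitted route is enumerated, any flow that falls outside the set is, by construction, a deviation from the architecture worth alerting on.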

With these in mind, all network traffic should always follow a predictable route, and any inappropriate activity should be monitored via alerts, logs, and events for potential indicators of compromise. That is, if any asset is compromised, a threat actor will typically attempt to deviate from the established architecture and acceptable network traffic to compromise the cloud environment. This is almost always true except for flaws in the web application itself that might disclose or leak data due to a poorly developed application. This has been covered in the “Web Services” section of Chapter 6.

Access control lists are your first and best security tool for restricting access in the cloud, where access and network traffic should always follow a predefined route and originate only from specific sources. Considering that the cloud has no true perimeter, that only certain IP addresses are exposed to the Internet, and that traditional network architectures are virtualized, ACLs help maintain these conceptual boundaries and enable complex architectures far beyond what was ever possible on-premise.
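Restricting traffic to specific sources reduces to evaluating a source address against allow and deny ranges. The following Python sketch uses the standard `ipaddress` module. The ranges are hypothetical documentation prefixes, and the rule that deny entries take precedence over allow entries is an assumption for illustration, since evaluation order differs between cloud service providers. A geolocation ACL would work the same way, with the deny ranges sourced from an IP geolocation service.

```python
import ipaddress

# Hypothetical ranges using documentation prefixes (RFC 5737 and RFC 3849).
ALLOWED_SOURCES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8:1::/48"),
]
BLOCKED_SOURCES = [
    ipaddress.ip_network("198.51.100.128/25"),  # e.g. a known hostile sub-range
]


def permit(source: str) -> bool:
    """Deny entries win; anything not explicitly allowed is denied.

    Membership tests between an IPv4 address and an IPv6 network simply
    return False, so mixed address families are handled safely.
    """
    addr = ipaddress.ip_address(source)
    if any(addr in net for net in BLOCKED_SOURCES):
        return False
    return any(addr in net for net in ALLOWED_SOURCES)


if __name__ == "__main__":
    for src in ["198.51.100.10", "198.51.100.200", "203.0.113.5", "2001:db8:1::42"]:
        print(src, "permit" if permit(src) else "deny")
```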

Hardening

Harden, harden, harden your assets. If we are not clear on this recommendation, harden, harden, harden everything in your cloud environment and everything that is connected and performs administration. (Batman learned this when fighting Superman.) While this may seem like the most basic recommendation, improper hardening accounts for some of the most basic attack vectors in the cloud.

First, let’s have a quick refresher on hardening. As covered in Chapter 6, asset hardening refers to the process of reducing weaknesses in your assets by changing custom and default settings and using tools to provide a stricter security posture. It is a necessary step that should be taken before assets are placed live on a network and ready for production in the cloud. Doing so avoids runtime issues caused by applying hardening later and eliminates loopholes that threat actors can exploit based on services, features, or default configurations present in an operating system, application, or asset.

Next, what does good cloud hardening entail? It is not the same as the controls used for traditional on-premise servers and workstations, since environmental conditions are different. Hardening for virtual machines does mirror on-premise counterparts, but hardening of containers, serverless environments, and virtual infrastructures has different characteristics due to the cloud service provider’s actual implementation of technology and lack of end-user physical access, like USB ports and drive bays.

Hardening standards for cloud environments and virtual machines

| Platform | Standard | Description |
|---|---|---|
| Center for Internet Security (CIS): www.cisecurity.org/cis-benchmarks/ | | |
| AWS | CIS Benchmarks for Amazon Web Services Foundations | AWS Foundation is a comprehensive set of management and security solutions for cloud and hybrid assets. |
| AWS | CIS Benchmark for Amazon Web Services Three-tier Web Architecture | The three-tier architecture is the most popular implementation of a multitier architecture and consists of a single presentation tier, logic tier, and data tier. |
| AWS | CIS Benchmark for AWS End User Compute Services | End-user computing (EUC) refers to computer systems and platforms that help nonprogrammers create applications. |
| Azure | CIS Benchmarks for Microsoft Azure Foundations | Azure Foundation is a comprehensive set of security, governance, and cost management solutions for Azure. This offering is underpinned by the Microsoft Cloud Adoption Framework for Azure. |
| Azure | CIS Benchmark for Microsoft Office 365 Foundations | Microsoft 365 is a Software as a Service (SaaS) solution that includes Microsoft Office and other services, such as email and collaboration, from Microsoft’s Azure cloud services. |
| GCP | CIS Benchmarks for Google Cloud Platform Foundation | The Google Cloud Platform Foundation is a comprehensive set of back-end and consumer solutions for security and management of assets in GCP. |
| GCP | CIS Benchmark for Google Workspace Foundations | Google Workspace is a collection of cloud computing, productivity, and collaboration tools, software, and products developed and marketed by Google. |
| Other | NIST 800-144: https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-144.pdf | Guidelines on Security and Privacy in Public Cloud Computing |
| Other | NIST 800-53 rev 5: https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-53r5.pdf | Security and Privacy Controls for Information Systems and Organizations |
| Other | ISO 27017: www.itgovernance.co.uk/shop/product/isoiec-27017-2015-standard | Information Technology – Security Techniques – Code of Practice for Information Security Controls based on ISO/IEC 27002 for Cloud Services Standard |
| Other | ISO 27018: www.itgovernance.co.uk/shop/product/isoiec-27018-2019-standard | Information technology – Security techniques – Code of practice for protection of personally identifiable information (PII) in public clouds acting as PII processors |
| Other | FedRAMP: www.fedramp.gov | The Federal Risk and Authorization Management Program (FedRAMP) provides a standardized approach to security authorizations for cloud service offerings used by government entities. |

While these represent some of the best third-party hardening recommendations, it is extremely important to note that almost all of the cloud service providers also provide hardening guidelines for their own technology stacks. These guidelines will specify what settings to change, native tools to use, and auditing capabilities to apply for their unique features, per solution.

In practice, hardening of cloud assets is a collection of tools, techniques, and best practices to reduce weaknesses in technology applications, systems, infrastructure, hypervisors, and other areas, independent of the cloud service provider. The goal of asset hardening is to reduce security risk by eliminating potential attack vectors and shrinking the system’s attack surface. By removing superfluous programs, accounts, functions, applications, open ports, permissions, access, etc., you give threat actors and malware fewer opportunities to gain a foothold within your cloud environment.
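One concrete way to shrink the attack surface is to compare what is actually listening on an asset against an approved baseline and treat everything else as a removal candidate. This sketch assumes the observed listeners have already been collected by your own tooling and represented as a hypothetical port-to-service mapping. The same set-difference approach applies equally to accounts, installed packages, or enabled services.

```python
# Hypothetical approved baseline for a web-tier virtual machine: port -> service.
APPROVED_LISTENERS = {443: "https"}


def surplus_listeners(observed: dict[int, str], approved: dict[int, str]) -> dict[int, str]:
    """Return listeners not in the approved baseline (candidates to disable or block).

    A port that is approved but running an unexpected service is also reported.
    """
    return {port: svc for port, svc in observed.items() if approved.get(port) != svc}


if __name__ == "__main__":
    # Hypothetical observation gathered by your own inventory tooling.
    observed = {22: "ssh", 443: "https", 8080: "http-alt"}
    for port, svc in sorted(surplus_listeners(observed, APPROVED_LISTENERS).items()):
        print(f"Remove or block: {svc} on port {port}")
```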

Word cloud of hardening in the cloud (terms: Database, Container, Machine, Operating, Server, Virtual, System, Serverless, Application, Network, Hypervisor)

Although the principles of asset hardening are universal, specific tools and techniques vary depending on the type of hardening you carry out. Asset hardening is needed throughout the technology life cycle, from initial installation through configuration, maintenance, and support to final assessment at end-of-life decommissioning. Systems hardening is also a requirement of compliance initiatives, such as PCI DSS, HIPAA, and many others. A hardening program for cloud assets should address the following areas:

Audit: Carry out a comprehensive audit of your existing cloud technology. Use penetration testing, vulnerability scanning, configuration management, and other security auditing tools to find flaws in the system and prioritize fixes. Conduct system hardening assessments against resources, using industry standards from NIST, Microsoft, CIS, DISA, etc.

Strategy: You do not need to harden all your systems at once, but they should be hardened before going into production. Therefore, create a strategy and plan based on the type of asset and the risks that are identified within your technology ecosystem, and use a phased approach to remediate the most significant flaws to ensure any remaining issues can be addressed in a timely manner or granted an exception based on the risk they represent.

Security Updates: Ensure that you have an automated and comprehensive vulnerability and patch management system in place to identify incorrect asset settings, as well as missing security patches. This will help remediate settings that may weaken your implementation as well as vulnerabilities that can be used in conjunction with an existing weakness that has not been mitigated.

Network: Ensure that cloud-based security controls are properly configured and that all rules are regularly audited, secure remote access points and users, block any unused or unneeded open network ports (ACLs), disable and remove unnecessary protocols and services, and encrypt network traffic.

Applications: Remove any components or functions you do not need, restrict access to applications based on user roles and context (such as with application control), and remove all sample files and default passwords. Application passwords should then be managed via a privileged access management solution that enforces password best practices (password rotation, length, etc.). Hardening of applications should also entail inspecting integrations with other applications and systems and removing, or reducing, unnecessary integration components and privileges.

Database: Create administrative restrictions on what users can do in a database, such as by controlling privileged access; turn on node checking to verify applications and users; encrypt database information both in transit and at rest; enforce secure passwords; implement role-based access control (RBAC) privileges; and remove unused accounts.

Virtual Machines (Operating Systems): Apply operating system updates; remove unnecessary drivers, file sharing, libraries, software, services, and functionality; encrypt local storage; tighten registry and other system permissions; log all activity, errors, and warnings; and implement privileged user controls. One very important note: operating system hardening for physical machines on-premise is nearly identical to hardening for virtual machines in the cloud, except for any hardening that governs communications from the virtual machine to (or through) the hypervisor, such as shared files and the clipboard. That hardening will come from the hypervisor or cloud service provider’s hardening guidelines for their platform. For everything else, you can look for guidance from the operating system manufacturer or source, the US Department of Defense (DoD), the Center for Internet Security (CIS), etc. The biggest difference is in controls that pertain to physical access hardening.

Identity and Account Management: Enforce least privilege by removing unnecessary accounts (such as orphaned accounts, default accounts, and unused accounts) and privileges throughout your entire cloud environment.
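The Audit and Identity and Account Management steps above can be partially automated by comparing current settings to a baseline and flagging unused accounts. In this Python sketch, the setting names in `BASELINE` are hypothetical stand-ins for whatever CIS, DISA, or cloud service provider guidance you adopt, and the 90-day idle threshold in `stale_accounts` is an assumed policy value, not a standard.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Optional

# Hypothetical baseline; real setting names come from the benchmark you adopt.
BASELINE: dict[str, Any] = {
    "ssh_password_auth": False,
    "disk_encryption": True,
    "audit_logging": True,
}


def config_deviations(current: dict[str, Any], baseline: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Map each deviating setting to (expected, actual). Missing settings report None."""
    return {
        key: (expected, current.get(key))
        for key, expected in baseline.items()
        if current.get(key) != expected
    }


def stale_accounts(last_login: dict[str, Optional[datetime]], now: datetime,
                   max_idle_days: int = 90) -> list[str]:
    """Return accounts never used, or idle longer than max_idle_days (assumed policy)."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(name for name, seen in last_login.items() if seen is None or seen < cutoff)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(config_deviations({"ssh_password_auth": True, "disk_encryption": True}, BASELINE))
    print(stale_accounts({"svc-legacy": None, "ops-admin": now - timedelta(days=3)}, now))
```

Reporting both the expected and actual value for each deviation makes it easier to prioritize fixes or document an approved exception, as the Strategy step recommends.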

Done properly, hardening provides the following benefits:

Targeted Functionality: With fewer unneeded programs and less functionality present after hardening, there is less risk of operational issues, misconfigurations, incompatibilities, resource issues, and, thus, compromise.

Improved Security: A reduced attack surface translates into a lower risk of data breaches, unauthorized access, systems compromise, and even malware.

Compliance and Auditability: Fewer running processes, services, and accounts, coupled with a less complex environment, means auditing the environment will usually be more transparent and straightforward. Only what is needed is truly executing.

Finally, with any attack vector mitigation, test, test, and test your hardening. It is not uncommon for hardening to break an application or disable a service required by a web application in the cloud. It is a bad idea to harden the environment only after it enters production. Hardening should be tested throughout development and quality assurance to verify any exceptions and to document the risk of not hardening them. This is not only a good security practice, but many regulatory bodies also require it.

Vulnerability Management

Like everything else we have been discussing, vulnerability management is a security best practice across any environment, but there are key differences and implications to understand when applying it in the cloud. These differences stem mainly from the methods used for a vulnerability assessment. If you are looking for a good definition of vulnerability management and vulnerability assessment, and the security best practices for each, please reference Asset Attack Vectors by Haber and Hibbert (2018). In the cloud, vulnerability assessments are typically performed using one of the following methods:

1. Network Scanning: Target an asset based on network address or host name to perform a vulnerability scan.
   - Authenticated: Using appropriate privileged credentials, a network scan authenticates into an asset using remote access to perform a vulnerability assessment.
   - Unauthenticated: Using only network-exposed services, a network scan attempts to identify vulnerabilities based on open ports, running services, TCP fingerprints, exploit code, and services that output version information explicitly (like version 4.20) or implicitly through the formatting of strings.
2. Agent Technology: Agent technology is typically small, lightweight software installed with system or root privileges to assess for vulnerabilities across the entire asset and then report the results to a local file or centrally to a vulnerability management system.
3. Backup or Offline Assessments: Using templates, image backups, or other offline asset storage, assets are unhardened (remote access enabled and a privileged account enabled or created) in a controlled environment and subjected to a vulnerability assessment using authenticated network scanning technology. This provides accurate results as long as the image being assessed is recent, unmodified, and can be powered on during assessments. Duplicating an entire environment for this purpose is typically done in quality assurance or a lab.
4. API Side Scanning Technology: This modern approach to vulnerability assessments uses the API that a cloud service provider offers for managing an asset and leverages it to enumerate the file system, processes, and services within the asset for vulnerabilities. This approach works because the hypervisor in a cloud service provider typically has complete access to the asset via an API, allowing vulnerability assessment signatures to be applied for positive identification. Since this is an identification process only, API side scanning can use read-only API accounts, in contrast to authenticated network scanning, which typically needs administrator or root access. API side scanning also does not require special remote access services to be enabled.
5. Binary Inspection: Distinct from source code analysis, binary inspection is a newer technique that assesses a compiled binary for vulnerabilities. It can identify binaries that contain open source vulnerabilities and is designed to target compiled applications obtained from third parties that may be part of your build process and cloud offering. This technique helps identify vulnerabilities early to expedite remediation plans and to hold the source accountable for the open source components it consumes.
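Unauthenticated scanning often infers vulnerability from an exposed version string, as described for network scanning above. The sketch below shows that inference and why it is fragile. It assumes a hypothetical product name (`ExampleHTTPd`), banner format, and first fixed version, and it cannot tell whether a vendor backported a fix without changing the version string, which is one reason unauthenticated results alone cannot confirm that an asset is truly vulnerable.

```python
import re
from typing import Optional

# Hypothetical signature: product name -> first fixed version (older is vulnerable).
SIGNATURES = {"ExampleHTTPd": (4, 21)}

# Matches "Product/1.2.3" anywhere in a banner.
VERSION_RE = re.compile(r"(?P<product>[A-Za-z][\w-]*)/(?P<version>\d+(?:\.\d+)*)")


def parse_banner(banner: str) -> Optional[tuple[str, tuple[int, ...]]]:
    """Extract (product, version tuple) from a banner, or None if absent."""
    m = VERSION_RE.search(banner)
    if not m:
        return None
    version = tuple(int(part) for part in m.group("version").split("."))
    return m.group("product"), version


def likely_vulnerable(banner: str) -> Optional[bool]:
    """True/False when a signature applies; None when the banner is inconclusive."""
    parsed = parse_banner(banner)
    if parsed is None:
        return None
    product, version = parsed
    fixed_in = SIGNATURES.get(product)
    if fixed_in is None:
        return None
    # Tuple comparison orders versions numerically: (4, 20) < (4, 21).
    return version < fixed_in


if __name__ == "__main__":
    print(likely_vulnerable("Server: ExampleHTTPd/4.20 (Unix)"))
```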

Each of these methods has limitations in the cloud:

- Authenticated network scanning requires a host to be unhardened to allow remote access in the cloud, which is undesirable.
- Unauthenticated network scanning does not provide sufficient results to determine whether an Internet-exposed asset is truly vulnerable to a known attack.
- Outside of a subset of operating systems that run in virtual machines (mainstream Windows, Linux, and a few others), agent technology may not be compatible with your cloud-based virtual machines, especially if you use custom builds.
- Agent technology generally has operating system dependencies that may need to become part of your build process, introducing unnecessary risk and costs when enabled.
- Agent technology typically does not operate well in container or serverless environments.
- Network scanning and agent technologies consume network bandwidth and CPU on an asset to identify vulnerabilities. If you have many assets and pay your cloud service provider based on resource consumption, each scan likely carries a significant cost.
- Binary inspection is currently limited to compiled open source technology on Linux and Windows and cannot assess compiled proprietary libraries.

As we have stated, vulnerability management in the cloud is the same as on-premise. The goal is to find the risks, but the techniques for an assessment may be different. Another difference is in MTTR (Mean Time to Repair) or, in this case, Mean Time to Remediate. Since the risk surface is larger in the cloud, especially for Internet-facing assets, high and critically rated vulnerabilities should have a tighter SLA (service-level agreement) for remediation using patch and verification methods.
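A tighter remediation SLA is only useful if it is measured. The following sketch computes mean time to remediate for closed findings and lists findings that exceeded their SLA. The per-severity values in `SLA_DAYS` are hypothetical placeholders for your own policy, and the `Finding` record is a simplified assumption about what your vulnerability management system exports.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical remediation SLAs in days, tighter for higher severities.
SLA_DAYS = {"critical": 7, "high": 14, "medium": 30, "low": 90}


@dataclass
class Finding:
    severity: str                          # key into SLA_DAYS
    detected: datetime
    remediated: Optional[datetime] = None  # None while still open


def mean_time_to_remediate(findings: list[Finding]) -> Optional[timedelta]:
    """Average detection-to-remediation time over closed findings only."""
    durations = [f.remediated - f.detected for f in findings if f.remediated is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


def sla_breaches(findings: list[Finding], now: datetime) -> list[Finding]:
    """Findings, open or closed, whose time to remediate exceeded their SLA."""
    return [
        f for f in findings
        if (f.remediated or now) - f.detected > timedelta(days=SLA_DAYS[f.severity])
    ]
```

Open findings are measured against the current time, so an unpatched critical vulnerability starts counting as a breach as soon as its SLA window passes, not only when it is eventually closed.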

Finally, as discussed throughout this book, vulnerability and patch management should be your highest priority in the cloud, along with credential and secrets management, to mitigate and remediate cloud attack vectors.

Penetration Testing

While penetration testing (pen testing for short) may not sound like a cloud attack vector mitigation strategy, it plays a crucial role in the security of your cloud applications. By definition, penetration testing is an authorized simulation of a cyberattack on a computer system, cloud assets, or other technology performed to evaluate the overall security of the system and its ability to thwart attacks. Penetration testing should never be confused with a vulnerability assessment since, in lieu of using signatures to detect a vulnerability, penetration testing uses active exploit code to prove that a vulnerability can be leveraged (exploited) in an attack. The process is performed to identify exploitable vulnerabilities, including the potential for unauthorized parties to gain access to the system’s features, data, runtime, configuration, and, potentially, lateral movement into assets that are more critical to the organization.

Penetration tests themselves can be conducted live, in production assets, or in a lab to test the system’s resiliency. Security issues that the penetration test discovers should be remediated before a production deployment or in accordance with the service-level agreements based on the criticality of the original vulnerability leveraged.

Individuals who conduct penetration testing are known as ethical hackers or white hats, and they help determine the risk to a system before a threat actor (black hat) finds and exploits the weakness.

Penetration tests should be conducted periodically. They should occur at least once a year, but preferably much more frequently. Most compliance initiatives require at least one pen test a year, but this is typically insufficient to truly assess a system for risk, especially when changes occur more frequently. For every organization, the goal should be continuous penetration testing to obtain a real-time assessment of the risks for any environment.

Penetration tests should be conducted with every major solution release or when significant features or changes to the code base have occurred.

The penetration test results are highly sensitive and should be handled with care. After all, they provide a blueprint on how to hack your systems.

Penetration testing results will help support the security controls needed for many cloud compliance initiatives, like SOC and ISO.

Unless you are in the Fortune 100, penetration tests typically should not be performed by company employees. As a security best practice, license a reputable third-party organization to perform required tests.

Penetration testing service companies should be changed frequently, about every year or after an assessment of a system is complete, to provide a fresh perspective on the attack vectors and to ensure that testers don’t become complacent. And yes, complacency really does happen when ethical hackers are asked to assess the same system repeatedly.

Most cloud service providers require notification of when a test is going to occur and from what source IP addresses, so the penetration test is not mistaken for an actual attack. Each cloud service provider has a different process for this notification, and the time periods allowed for active testing vary. Make sure this is well known and communicated before the start of any testing. In some cases, a cloud service provider may consider testing without notification a material breach of contract.

Penetration testing your cloud systems, applications, and cloud service providers is a critical component of your cloud attack vector protection strategy. The results will help your organization determine how a threat actor may breach your organization and the ability of your assets to notify and thwart a potential attack. The logged results can serve as indicators of compromise for real-world future attacks and symptoms for other related security issues.

Never forget that penetration testing is one of the most valuable tools in your arsenal for proving, in the real world, that your cloud environment has been properly (with reasonable confidence) implemented to safeguard your business, data, and applications.

Patch Management

One commonality between the cloud and on-premises technology is patch management. While there are literally dozens of ways of applying patches to cloud resources – from templates to instances and agents – the simple fact is that vulnerabilities will always be identified, and the best way to remediate them is to apply a security patch via patch management. Patch management solutions are designed to apply security updates and solution patches, regardless of asset type. If it is software, it should be able to be patched. Some solutions provide automatic updates natively, while others require a third-party solution for features and coverage.

Patch management typically covers the following types of updates:

Critical Updates: A widely released fix for a code-specific (open source) or product-specific, security-related vulnerability. Critical updates are the most severe and should be applied as soon as possible to protect the resource.

Definition Updates: Signature or audit updates that deployed solutions need periodically to perform their intended mission or function. Antivirus definitions and agent-based vulnerability assessment technologies are examples of solutions that depend on these types of signature updates.

Drivers: Non-security-related driver updates to fix a bug, improve functionality, or support changes to the device, operating system, or integrations.

Security Updates: A widely released fix for a product-specific, security-related vulnerability that is noncritical. These updates can be rated up to a “High” and should be scheduled for deployment during normal patch or remediation intervals. These updates can affect commercial or open source solutions.

Cumulative/Update Rollups: A cumulative update provides the latest updates for a specific solution, including bug fixes, security updates, and drivers all for a specific point in time. The purpose is to bundle all the updates in a single patch to cover a specific time period or version, for simplification or ease of deployment.

General Updates: General bug fixes and corrections that can be applied and which are not security related. These updates are typically related to performance, new features, or other non-security runtime issues.

Upgrades: Major and minor (based on version number) operating system or application upgrades that can be automated for deployment. In the cloud, these are rarely done in production, but rather to the templates and virtual machines that may be a part of your DevOps pipeline.
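The categories above map naturally to different deployment urgencies. This sketch groups pending patches by a hypothetical deployment target: critical updates immediately, security updates in the next patch window, and upgrades into templates and the pipeline rather than production, following the guidance in the list. The windows assigned to definition, driver, cumulative, and general updates are assumptions for illustration, as are the patch identifiers.

```python
# Hypothetical mapping from the patch categories above to a deployment target.
DEPLOYMENT_TARGET = {
    "critical": "immediate",                   # apply as soon as possible
    "security": "next patch window",           # normal patch/remediation interval
    "definition": "automatic",                 # assumed: signatures update on their own schedule
    "driver": "next maintenance window",       # assumed
    "cumulative": "next maintenance window",   # assumed
    "general": "next maintenance window",      # assumed
    "upgrade": "template / pipeline rebuild",  # rarely applied in production
}

# Targets ordered from most to least urgent.
URGENCY_ORDER = [
    "immediate",
    "automatic",
    "next patch window",
    "next maintenance window",
    "template / pipeline rebuild",
]


def plan_deployment(pending: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (patch_id, category) pairs by deployment target, most urgent first.

    Raises KeyError for an unknown category so nothing is silently skipped.
    """
    buckets: dict[str, list[str]] = {target: [] for target in URGENCY_ORDER}
    for patch_id, category in pending:
        buckets[DEPLOYMENT_TARGET[category]].append(patch_id)
    # Drop empty targets but keep urgency order (dicts preserve insertion order).
    return {target: ids for target, ids in buckets.items() if ids}


if __name__ == "__main__":
    pending = [
        ("patch-101", "general"),
        ("patch-102", "critical"),
        ("image-7", "upgrade"),
        ("patch-103", "security"),
    ]
    for target, ids in plan_deployment(pending).items():
        print(f"{target}: {', '.join(ids)}")
```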

Patch management in the cloud differs from on-premise patch management in several important ways:

Patch management solutions on-premise typically require an asset to have an operating system with an agent installed to apply a patch. Assets in the cloud may not have agents installed or may not even be capable of having agents installed.

Patching running resources in the cloud may not be possible in production, except for virtual machines, which would follow a change control process similar to the one used on-premise. For assets other than virtual machines, patching in the cloud should be part of the DevOps pipeline, with redeployment of the solution, if needed.

When possible, consider using the native patch management features of the cloud service provider for the services they offer. It is in the cloud service provider’s best interest to maintain a secure environment. The vast majority of CSPs provide easy-to-use tools to ensure the products they offer are simple to maintain and secure.

Mitigating controls or virtual patches are possible in the cloud to mitigate a vulnerability for a short period, giving you time to formulate or test a potential patch for your systems. If, for any reason, a critical or high-impact vulnerability patch cannot be applied immediately, consider mitigating controls or a third-party vendor’s virtual patch as a stopgap solution. Note: a mitigation is a setting or policy change to lower the risk, whereas a remediation is the actual application of a security patch that fixes the vulnerability.
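When patching happens in the DevOps pipeline rather than in production, a release gate can enforce it. The sketch below assumes a hypothetical `ImageFinding` record produced by whatever scanner assesses your image, and an assumed policy that unmitigated critical and high findings fail the build. Findings covered by a mitigating control or virtual patch pass the gate but are returned for follow-up, because, as noted above, a mitigation is not a remediation.

```python
from dataclasses import dataclass

# Assumed policy: these severities block a release unless mitigated.
BLOCKING_SEVERITIES = {"critical", "high"}


@dataclass
class ImageFinding:
    vuln_id: str     # hypothetical identifier from your scanner
    severity: str    # "critical", "high", "medium", or "low"
    mitigated: bool  # a mitigating control or virtual patch is in place


def release_gate(findings: list[ImageFinding]) -> tuple[bool, list[str], list[str]]:
    """Return (passed, blockers, follow_up) for a candidate image.

    blockers: unmitigated blocking findings that must be patched before release.
    follow_up: mitigated blocking findings that still need a real patch.
    """
    blocking = [f for f in findings if f.severity in BLOCKING_SEVERITIES]
    blockers = [f.vuln_id for f in blocking if not f.mitigated]
    follow_up = [f.vuln_id for f in blocking if f.mitigated]
    return not blockers, blockers, follow_up


if __name__ == "__main__":
    passed, blockers, follow_up = release_gate([
        ImageFinding("VULN-1", "critical", mitigated=True),
        ImageFinding("VULN-2", "low", mitigated=False),
    ])
    print(passed, blockers, follow_up)
```

In a CI system, the calling step would typically exit with a nonzero status when `passed` is false so the pipeline stage fails and the patched image is rebuilt.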

In the end, patch management in the cloud is the same as patch management on-premise. You must apply patches in a timely fashion and, potentially, with high urgency due to the risk surface. How a patch is deployed differs, however, because agent-based patch management may not be available and because DevOps pipelines are used. This means you may not patch in production but instead release a new or patched version as part of your DevOps pipeline. Both approaches should be well understood and part of your strategic plan to remediate the threats from cloud attack vectors. Also, remember to monitor and measure your patch management approach to look for improvements and to optimize how quickly your organization can close a security gap. As stated earlier, good asset management can help ensure that coverage is truly complete.

IPv6 vs. IPv4

Internet Protocol (IP) is a standardized protocol that allows resources to find and connect to each other over a network. IPv4 (version 4) was originally designed in the early 1980s, before the growth and expansion of the Internet that we know today. Note: IPv4 has a long development history that is worth reading about if you are interested in its original roots in military communications. Its successor, IPv6 (version 6), offers several advantages:

Improved connectivity, integrity, and security

The ability to support web-capable devices

Support for native end-to-end encryption

Backward compatibility to IPv4

Beyond the limited number of public addresses available under IPv4 (a limitation that Network Address Translation, or NAT, can solve in many cases, both in the cloud and on-premise), IPv6's extended address range improves scalability. It also adds security by making host scanning and identification more challenging for threat actors.
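To put "more challenging host scanning" in perspective, the following sketch uses Python's standard `ipaddress` module. It compares the size of a typical IPv4 /24 subnet with a typical IPv6 /64 subnet and estimates how long a brute-force sweep would take.

- The documentation prefixes are illustrative.
- The one-million-probes-per-second rate is an assumption.
- Address space alone does not stop an attacker who learns addresses another way, such as from DNS records.

```python
# Compare a typical IPv4 /24 with a typical IPv6 /64 and estimate how long a
# brute-force sweep of every address would take at an assumed probe rate.
import ipaddress

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def scan_time_years(network: str, probes_per_second: float) -> float:
    """Years to probe every address in the network once."""
    size = ipaddress.ip_network(network).num_addresses
    return size / probes_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = 1_000_000   # assumed: one million probes per second
    for net in ("192.0.2.0/24", "2001:db8::/64"):   # documentation prefixes
        size = ipaddress.ip_network(net).num_addresses
        print(net, size, f"{scan_time_years(net, rate):.3g} years")
```

The /24 holds 256 addresses and is swept almost instantly. The single /64 holds 2^64 (about 1.8 × 10^19) addresses, which at the assumed rate would take roughly 585,000 years.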

To start, IPv6 can run end-to-end encryption (IPsec) natively. While this technology was retrofitted onto IPv4, it remains a discretionary feature there and is not always properly implemented. Today, encryption and integrity checking on IPv4 are most commonly used in virtual private networks (VPNs). In IPv6, they are a standard component, which makes them available for all connections and supported by all modern, compatible resources. As a simple benefit, IPv6 makes man-in-the-middle attacks more challenging to conduct, regardless of where it is deployed. Because encryption is built in, it virtually eliminates an entire class of attacks.

IPv6 also provides stronger security for address resolution, in contrast to the DNS attack vector we discussed earlier. The Secure Neighbor Discovery (SEND) protocol can provide cryptographic confirmation, at the time of connection, that a host is who it claims to be. This renders Address Resolution Protocol (ARP) poisoning and similar spoofing attacks nearly moot or, at the very least, much more difficult for a threat actor to conduct. In contrast, a threat actor can manipulate IPv4 to redirect traffic between two hosts, allowing them to tamper with the packets or at least sniff the communications. IPv6 makes it very difficult for this type of attack to succeed.
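SEND (RFC 3971) relies on Cryptographically Generated Addresses (CGAs, RFC 3972). In a CGA, the interface identifier of an IPv6 address is derived from a hash of the owner's public key, so a host can prove that it owns its address. The sketch below is deliberately simplified:

- It supports only the lowest security parameter (Sec = 0) and omits extension fields.
- It uses placeholder bytes instead of a real DER-encoded RSA key.
- It skips the RSA signature check that SEND performs on each Neighbor Discovery message.

```python
# Simplified Cryptographically Generated Address (CGA) sketch following the
# RFC 3972 layout with Sec = 0 (no Hash2 work factor) and no extension
# fields. Real SEND also verifies an RSA signature on every Neighbor
# Discovery message with the public key; that step is omitted here.
import hashlib
import ipaddress
import os
from typing import Tuple


def _cga_parameters(modifier: bytes, prefix: bytes, collision_count: int,
                    public_key: bytes) -> bytes:
    # modifier (16 bytes) | subnet prefix (8) | collision count (1) | public key
    return modifier + prefix + bytes([collision_count]) + public_key


def _interface_id(params: bytes, sec: int = 0) -> bytes:
    hash1 = hashlib.sha1(params).digest()[:8]   # leftmost 64 bits of SHA-1
    # Write Sec into the 3 leftmost bits and clear the u and g bits (6 and 7).
    first = (sec << 5) | (hash1[0] & 0x1C)
    return bytes([first]) + hash1[1:]


def generate_cga(subnet: str, public_key: bytes) -> Tuple[ipaddress.IPv6Address, bytes]:
    """Derive an address in a /64 from the owner's public key."""
    network = ipaddress.IPv6Network(subnet)
    if network.prefixlen != 64:
        raise ValueError("a CGA is formed from a /64 subnet prefix")
    prefix = network.network_address.packed[:8]
    params = _cga_parameters(os.urandom(16), prefix, 0, public_key)
    return ipaddress.IPv6Address(prefix + _interface_id(params)), params


def verify_cga(address: ipaddress.IPv6Address, params: bytes) -> bool:
    """Check that the address really was derived from these CGA parameters."""
    packed = address.packed
    if len(params) < 25 or params[24] > 2:      # collision count must be 0, 1, or 2
        return False
    if params[16:24] != packed[:8]:             # prefix must match the address
        return False
    if (packed[8] >> 5) != 0:                   # this sketch only handles Sec = 0
        return False
    expected = _interface_id(params)
    # Compare Hash1 with the interface ID, ignoring the Sec, u, and g bits.
    return (expected[0] & 0x1C) == (packed[8] & 0x1C) and expected[1:] == packed[9:]
```

For example, `generate_cga("2001:db8:1:2::/64", b"hypothetical-public-key")` returns an address and its parameters, and `verify_cga` succeeds only for those parameters. An attacker who substitutes their own key produces a different hash and fails verification.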

Put simply, IPv6, when properly implemented, is far more secure than IPv4. It should be your primary protocol when architecting solutions in the cloud, as well as for hybrid implementations. The biggest drawback is making sure all your assets, including security solutions like firewalls, can communicate correctly using IPv6. Threats targeting hybrid environments where IPv4 and IPv6 are plumbed together represent a significant risk for an organization. A pure IPv6 implementation is best, although it requires its own tools and carries an administrative learning curve.

As you begin an IPv4 to IPv6 conversion, exercise caution when using tunneling while both protocols are active. Tunnels do provide connectivity between IPv4 and IPv6 components, or enable partial IPv6 in segments of your network that are still based on IPv4, but they can also introduce unnecessary security risks. Keep tunnels to a minimum and use them only where necessary. Tunneling also makes network security systems less likely to identify attacks, because encapsulated and encrypted traffic is inherently harder to inspect.
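One practical way to keep tunnels visible is to flag tunneled IPv6 at the perimeter, so it can be allowed only where intended and inspected. The following sketch parses a raw IPv4 header and flags two cases:

- IP protocol 41: IPv6 encapsulated in IPv4, used by 6in4, 6to4, and ISATAP.
- UDP port 3544: the well-known Teredo port.

It is a simplified illustration, not a production parser. The packet-building helper is a hypothetical test aid, and port-based Teredo detection is only a heuristic.

```python
# Sketch: flag IPv4 packets that carry tunneled IPv6 so they can be restricted
# to approved tunnels and sent for inspection. IP protocol 41 is IPv6
# encapsulated in IPv4 (6in4, 6to4, ISATAP); UDP port 3544 is the well-known
# Teredo port, so that check is only a heuristic.
import struct
from typing import Optional

IPPROTO_IPV6_ENCAP = 41
IPPROTO_UDP = 17
TEREDO_PORT = 3544


def tunnel_type(packet: bytes) -> Optional[str]:
    """Label tunneled IPv6 carried in an IPv4 packet, or None if not detected."""
    if len(packet) < 20 or (packet[0] >> 4) != 4:
        return None                                  # too short or not IPv4
    header_len = (packet[0] & 0x0F) * 4              # IHL counts 32-bit words
    protocol = packet[9]
    if protocol == IPPROTO_IPV6_ENCAP:
        return "ipv6-in-ipv4"
    if protocol == IPPROTO_UDP and len(packet) >= header_len + 4:
        src_port, dst_port = struct.unpack_from("!HH", packet, header_len)
        if TEREDO_PORT in (src_port, dst_port):
            return "teredo"
    return None


def build_ipv4_packet(protocol: int, payload: bytes = b"") -> bytes:
    """Hypothetical test helper: minimal 20-byte IPv4 header (checksum left 0)."""
    header = struct.pack("!BBHHHBBH4s4s",
                         0x45, 0, 20 + len(payload), 0, 0, 64, protocol, 0,
                         bytes([192, 0, 2, 1]), bytes([198, 51, 100, 1]))
    return header + payload


if __name__ == "__main__":
    print(tunnel_type(build_ipv4_packet(IPPROTO_IPV6_ENCAP)))   # ipv6-in-ipv4
    udp = struct.pack("!HHHH", 50000, TEREDO_PORT, 8, 0)
    print(tunnel_type(build_ipv4_packet(IPPROTO_UDP, udp)))     # teredo
    print(tunnel_type(build_ipv4_packet(6)))                    # None (plain TCP)
```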

Consider the entire environment and architecture. A network architecture under IPv6 can be more difficult to understand and document than one under IPv4. Duplicating your existing setup and just swapping the IP addresses will not deliver the best results. Therefore, redesign your network to optimize the implementation. This is especially true when you consider all the components affected by IPv6, including the cloud, DMZ, LAN, and public-facing addresses (which will normally still need to be reachable over IPv4).
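Redesigning rather than duplicating can start with an addressing plan. The sketch below uses Python's `ipaddress` module to assign one /64 subnet per zone. It assumes a hypothetical /48 site allocation from the 2001:db8::/32 documentation range and a hypothetical list of zones. Public-facing services would still be dual-stacked for IPv4 clients.

```python
# Hypothetical IPv6 addressing plan: one /64 per zone carved from a /48 site
# allocation in the 2001:db8::/32 documentation range.
import ipaddress
from itertools import islice
from typing import Dict, List


def plan_zones(site_prefix: str, zones: List[str]) -> Dict[str, ipaddress.IPv6Network]:
    """Assign consecutive /64 subnets from the site prefix to each zone, in order."""
    site = ipaddress.IPv6Network(site_prefix)
    if site.prefixlen > 64:
        raise ValueError("site prefix must be /64 or shorter")
    subnets = list(islice(site.subnets(new_prefix=64), len(zones)))
    if len(subnets) < len(zones):
        raise ValueError("not enough /64 subnets for every zone")
    return dict(zip(zones, subnets))


if __name__ == "__main__":
    for zone, net in plan_zones("2001:db8:abcd::/48", ["cloud", "dmz", "lan"]).items():
        print(zone, net)
    # cloud 2001:db8:abcd::/64
    # dmz 2001:db8:abcd:1::/64
    # lan 2001:db8:abcd:2::/64
```

Giving each zone its own /64 keeps firewall rules and documentation aligned with zones rather than with legacy IPv4 ranges.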

Confirm that your entire environment is compatible and up to date, and that your plan for the switch is documented and tested. This is not like flipping a light switch. Confirmation includes a complete asset inventory for the cloud and hybrid environment. It is easy to miss a network device or security tool that individuals rely on during the process, especially for basics like vulnerability and patch management. Such gaps create an unnecessary, escalating risk over time. Therefore, do not enable IPv6 until you are ready. Test, test, and test your IPv6 conversion; a new installation, rather than a conversion, is always preferred.
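A readiness review can be expressed as a simple check over the asset inventory that blocks the switch while any gap remains. This is a minimal sketch. The record fields are hypothetical; in practice, the answers come from your asset management system, vendor documentation, and testing.

```python
# Sketch of an IPv6 readiness check over an asset inventory. The fields are
# hypothetical; in practice they come from asset management, vendor
# documentation, and testing.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Asset:
    name: str
    supports_ipv6: bool
    vuln_scanned_over_ipv6: bool
    patch_managed_over_ipv6: bool


def readiness_gaps(inventory: List[Asset]) -> List[Tuple[str, str]]:
    """List (asset, reason) pairs that should block enabling IPv6."""
    gaps = []
    for asset in inventory:
        if not asset.supports_ipv6:
            gaps.append((asset.name, "no IPv6 support"))
        if not asset.vuln_scanned_over_ipv6:
            gaps.append((asset.name, "not covered by vulnerability scanning over IPv6"))
        if not asset.patch_managed_over_ipv6:
            gaps.append((asset.name, "not covered by patch management over IPv6"))
    return gaps


def ready_to_enable_ipv6(inventory: List[Asset]) -> bool:
    """An empty inventory is not 'ready': you cannot verify what you have not found."""
    return bool(inventory) and not readiness_gaps(inventory)
```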

Privileged Access Management (PAM)

Finally, it’s time to have a detailed discussion about privileged access management (PAM). Protecting an asset in the cloud entails far more than security patches, proper configurations, and hardening. Consider the damage a single, compromised privileged account could inflict upon your organization from both a monetary perspective and a reputation perspective; Equifax,2 Duke Energy3 (based on a third-party software vendor), Yahoo,4 and Oldsmar Water Treatment,5 to name just a few, were each severely impacted by breaches that involved the exploitation of inadequately controlled privileged access. The impacts ranged from hits to company stock prices and executive bonuses, to the changing of acquisition terms, to even hindering the ability to do basic business, like accepting payments.

A compromised privileged password does have a monetary value on the dark web for a threat actor to purchase, but it also has a price that can be associated with an organization in terms of risk. What is the value and risk if that password is compromised and its protected contents exposed to the wild? Such an incident can have a significant, negative influence on the overall risk score for the asset and company.

A database of personally identifiable information (PII) is quite valuable, and blueprints or trade secrets have an even higher value if they are sold to the right buyer (or government). My point is simple: privileged accounts have a value (some have immense value), and the challenge is not just about securing them but also about identifying where they exist in the first place. Our asset management discussion highlighted this in detail. If you can discover where privileged accounts exist, you can measure their risk and then monitor them for appropriate usage. Any inappropriate access can be highlighted, using log management or a SIEM, and properly escalated for investigation, if warranted. This is an integral part of the process and procedures we have been discussing.

Some privileged accounts are worth much more than others, based on the risk they represent and the value of the data they can access. A domain administrator account is of higher value than a local administrator account with a unique password (although that may be good enough to leverage for future lateral movement). The domain administrator account gives a threat actor access to everything, everywhere, in contrast to a unique administrative account that only provides access to a single asset.

Treating every privileged account the same is not a good security practice. You could make the same argument for a database administrative account vs. a restricted account used with ODBC for database reporting. While both accounts are privileged, owning the database is much more valuable than just extracting the data.

Identify crown jewels (sensitive data and systems) within the environment. This will help form the backbone for quantifying risk. If you do not have this currently mapped out, it is an exercise worth pursuing as a part of your data governance and asset management plan.

Discover all privileged accounts using an asset inventory scanner. You can accomplish this with free tools or with an enterprise PAM solution. Also, consider your vulnerability management (VM) scanner. Many VM scanners can perform account enumeration and identify the privileges and groups for an account.

Map the discovered accounts to crown jewel assets. Based on business functions, this can be done by hostname, subnets, AD queries, zones, or other logical groupings. These should be assigned a criticality in your asset database and linked to your vulnerability management program.

Measure the risk of the asset. This can be done using basic critical/high/medium/low ratings, but it should also consider the crown jewels present and any other risks, like assessed vulnerabilities. Each of these metrics will help weight the asset score. If you are looking for a standardized starting place, consider CVSS6 and environmental metrics.

Finally, overlay the discovered accounts. The risk of the asset will help determine how likely a privileged account is to be compromised (via vulnerabilities and corresponding exploits) and can help prioritize asset remediation outside of the account mapping.
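The five steps above can be sketched as a small scoring pipeline. Everything here is an assumption for illustration:

- the criticality weights, and the doubling for crown jewels and for unmanaged accounts;
- the account scope weights, which reflect that a domain administrator reaches far more than a local account;
- the record fields.

A real program would take CVSS environmental scores and asset criticality from your vulnerability management and asset databases.

```python
# Sketch of the five steps: crown jewels, discovered accounts, mapping,
# asset risk, and overlay. All weights and fields are assumptions.
from dataclasses import dataclass
from typing import Dict, List, Tuple

CRITICALITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}
SCOPE_WEIGHT = {"local": 1, "database": 2, "domain": 3}   # wider reach, higher value


@dataclass
class Asset:
    hostname: str
    criticality: str     # low / medium / high / critical
    crown_jewel: bool    # hosts sensitive data or systems
    max_cvss: float      # highest open CVSS score on the asset (0.0-10.0)


@dataclass
class Account:
    name: str
    hostname: str        # asset the account was discovered on
    scope: str           # local / database / domain
    managed: bool        # under PAM control (vaulted, rotated, monitored)


def asset_risk(asset: Asset) -> float:
    """Criticality weight (doubled for crown jewels) plus open vulnerability severity."""
    base = CRITICALITY_WEIGHT[asset.criticality] * (2 if asset.crown_jewel else 1)
    return base + asset.max_cvss


def prioritize(assets: List[Asset],
               accounts: List[Account]) -> Tuple[List[Tuple[str, float]], List[str]]:
    """Rank mapped accounts by risk; also return accounts on unknown assets."""
    by_host: Dict[str, Asset] = {a.hostname: a for a in assets}
    ranked, unmapped = [], []
    for acct in accounts:
        asset = by_host.get(acct.hostname)
        if asset is None:
            unmapped.append(acct.name)   # an inventory gap is itself a finding
            continue
        score = asset_risk(asset) * SCOPE_WEIGHT[acct.scope]
        if not acct.managed:
            score *= 2                   # assumed penalty for unmanaged access
        ranked.append((acct.name, score))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked, unmapped
```

An unmanaged database administrator account on a crown-jewel server with a critical vulnerability rises to the top. A managed reporting account on the same server ranks far lower, consistent with not treating every privileged account the same.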

In the real world, a database with sensitive information may have critical vulnerabilities from time to time, specifically in between patch cycles. This window, however, should be kept as short as possible. The asset may still present a high risk when patch remediation occurs if the privileged account used for patching is unmanaged. This risk can only be mitigated if the privileges and sessions are monitored and controlled. Criticality can be determined either by the vulnerabilities or by the presence of unrestricted, unmanaged, and undelegated privileged access. Therefore, inadequately managed privileged accounts, especially unprotected ones and ones with stale, guessable, reused, or even default passwords, are simply unacceptable in your cloud environments. Thus, implementing and maturing privileged access management plays a foundational role, not only in mitigating cloud attack vectors but also in unlocking the potential of the cloud.
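Several of the unacceptable conditions named above can be checked automatically once accounts are discovered. This is a hedged sketch. The record fields and the 90-day rotation policy are assumptions. Reuse is detected by comparing fingerprints (for example, keyed hashes held by a vault), never plaintext passwords. Guessable passwords would need a separate strength check.

```python
# Sketch: flag privileged accounts with stale, reused, or vendor-default
# passwords. The record fields and the 90-day rotation policy are
# assumptions; "guessable" passwords need a separate strength check.
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List

MAX_PASSWORD_AGE = timedelta(days=90)   # assumed rotation policy


@dataclass
class PrivilegedAccount:
    name: str
    password_last_rotated: date
    password_fingerprint: str   # e.g., a keyed hash held by the vault, never plaintext
    is_vendor_default: bool


def audit(accounts: List[PrivilegedAccount], today: date) -> Dict[str, List[str]]:
    """Map each non-compliant account name to the list of issues found."""
    reuse = Counter(a.password_fingerprint for a in accounts)
    findings: Dict[str, List[str]] = {}
    for account in accounts:
        issues = []
        if today - account.password_last_rotated > MAX_PASSWORD_AGE:
            issues.append("stale")
        if account.is_vendor_default:
            issues.append("default")
        if reuse[account.password_fingerprint] > 1:
            issues.append("reused")
        if issues:
            findings[account.name] = issues
    return findings
```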

Vendor Privileged Access Management (VPAM)

One of the most interesting things about information technology is that there are rarely truly original ideas that are game changers for cybersecurity. Often, the next best thing follows the same security best practices we have been engaging with for years, and the new, hot solution is built upon prior art. In fact, one could argue that most modern cybersecurity solutions are simply derivations of previous solutions with incremental improvements in detection, runtime, installation, usage, etc., to solve the same problems that have plagued organizations for years. While some may have innovative approaches that are even patentable, in the end, they are doing the same thing. This is true for antivirus, vulnerability management, intrusion detection, log monitoring, etc.

Vendor: A person or company offering something for sale (services, software, or a tangible product) to another person or entity. In many cases, the offering requires installation, maintenance, or other services to ensure its success over a period of time. A vendor does not need to be the manufacturer of the offering, but it is the entity that actually performs the sale of the solution. Warranties and liability can vary based on terms and conditions from the vendor and manufacturer. When applied to cybersecurity, the manufacturer typically supplies updates, while the vendor may assist with the installation, if contracted to.

Privileged Access Management: PAM consists of the cybersecurity strategies and technologies for exerting control over the elevated (“privileged”) access (local or remote) and permissions for identities, users, accounts, processes, and systems across an environment, whether on-premise or in the cloud. By moderating the appropriate level of privileged access controls, PAM helps entities reduce their attack surface and prevent, or at least mitigate, the damage arising from attacks using accounts with excessive privileges and remote access.

Remote Access: Remote access enables access to an asset, such as a computer, network device, or cloud infrastructure, from a remote location. This allows an identity to operate remotely while having a near-seamless experience using the asset to complete a designated task or mission.

Zero Trust: The underlying philosophy behind zero trust is to implement technology based on “never trust, always verify.” This concept implies that no identity or asset should allow authentication unless the context of the request is verified and actively monitored for appropriate behavior during a session. This includes implementing zero-trust security controls whether access originates from a trusted network segment or from an untrusted environment. The management of policies governing access and the monitoring of behavior are performed in a secure control plane, while access itself is performed in the data plane.

Vendor privileged access management allows third-party identities (vendors) to access your assets remotely, using the principle of least privilege and without ever needing to know the credentials required for connectivity. In fact, the credentials needed for access are ephemeral, potentially instantiated just in time, and all access is monitored for appropriate behavior.
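To illustrate ephemeral, just-in-time credentials, the following is a hypothetical broker, not any vendor's API. It works as follows:

- It mints a random token scoped to one vendor and one asset for a short window (15 minutes, an assumed value).
- It refuses use of the token against any other asset.
- It discards tokens once they expire.

A real VPAM solution would also inject the credential into a brokered session and record that session.

```python
# Hypothetical just-in-time credential broker (not a real product API).
# Tokens are random, short-lived, and bound to one vendor and one asset,
# so the vendor never holds a standing password for the asset.
import secrets
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Grant:
    vendor: str
    asset: str
    expires_at: float


@dataclass
class EphemeralCredentialBroker:
    ttl_seconds: float = 15 * 60                     # assumed 15-minute window
    _grants: Dict[str, Grant] = field(default_factory=dict, init=False, repr=False)

    def issue(self, vendor: str, asset: str, now: Optional[float] = None) -> str:
        """Mint a single-asset token that expires after ttl_seconds."""
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)
        self._grants[token] = Grant(vendor, asset, now + self.ttl_seconds)
        return token

    def authorize(self, token: str, asset: str, now: Optional[float] = None) -> bool:
        """Allow access only to the granted asset and only before expiry."""
        now = time.time() if now is None else now
        grant = self._grants.get(token)
        if grant is None:
            return False
        if now >= grant.expires_at:
            del self._grants[token]                  # expired grants are discarded
            return False
        return grant.asset == asset                  # least privilege: one asset only

    def revoke(self, token: str) -> None:
        """End access immediately, for example when the vendor's task is done."""
        self._grants.pop(token, None)
```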

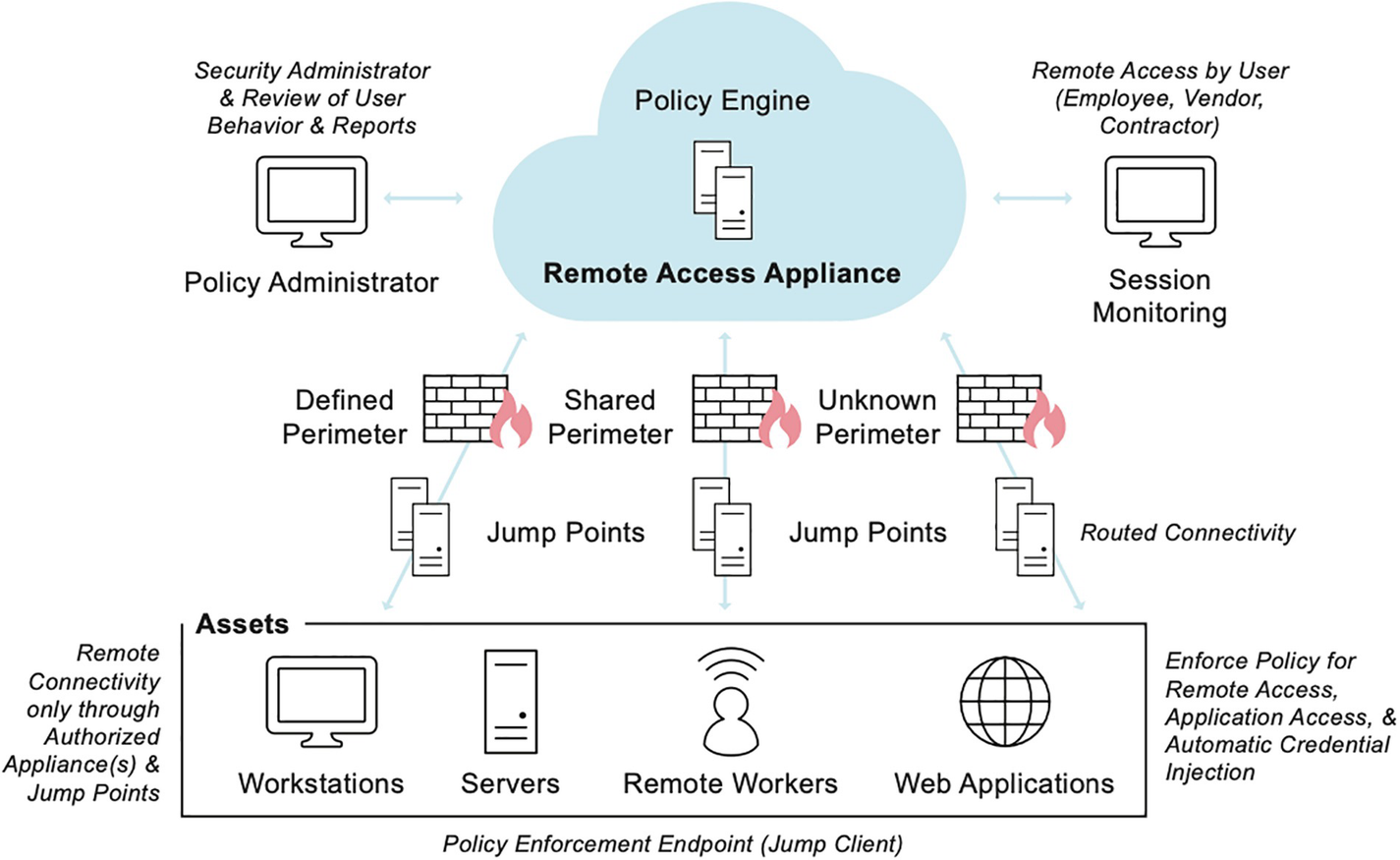

A model diagram of Vendor Privileged Access Management, which uses the cloud to provide the control plane for authorized remote access. It permits deployment in the data plane wherever the organization needs remote access or administration.

Reference architecture for VPAM

As the work-from-anywhere (WFA) movement continues and you look to modernize your vendor remote access, consider VPAM. It is one new solution category that takes the best of breed from many existing solutions and unifies them to solve significant challenges of vendor remote access – especially with regard to privileges. In fact, when using VPAM, entities do not need to bolt together multiple solution providers to achieve the desired results. VPAM vendors can provide vendor privileged remote access out of the box, based on a SaaS solution (control plane), with rapid deployment (data plane). And, as a sci-fi reminder, the prefix code for the USS Reliant (NCC-1864) was simply 16309, a five-digit numerical code that allowed complete remote control of a starship. Vendors should never have such simplified administrative access.

VPAM can be used for vendors, contractors, or remote employees who need access to assets on-premise, in the cloud, or located within hybrid environments.

Multi-factor Authentication (MFA)

While we have been focusing on passwords as the primary form of authentication with credentials (single factor), other authentication techniques are needed to secure the cloud properly. As a security best practice and required by many regulatory authorities, multi-factor authentication (MFA) techniques are the standard to secure access. MFA provides an additional layer (beyond username + password combinations) that makes it more challenging to compromise an identity. Thus, MFA is always recommended when securing sensitive assets.

The premise for MFA (two-factor authentication is a subset of MFA) is simple. In addition to a traditional username and password credential, a “passcode” or other evidence is needed to validate the user. This is more than just a PIN code; it is best implemented when you have something physical to reference or provide as “proof” of your identity. The delivery and randomization of “proof” vary from technology to technology and from vendor to vendor. This proof typically takes the form of knowledge (something the user knows that is unique to them), possession (something they physically have that is unique to them), and inherence (something they are, such as a physical characteristic).

The use of multiple authentication factors provides important additional protection around an identity. An unauthorized threat actor is far less likely to supply all the factors required for correct access when MFA is in place. During authentication, if any one of the factors is incorrect (for example, when only two of three criteria match), the user’s identity is not verified with sufficient confidence, and access to the asset protected by multi-factor authentication is denied. Common examples of these factors include the following:

A physical device or software, like a phone app or USB key, that produces a secret passcode, re-randomized regularly.

A temporary secret code known only to the end user, like a PIN that is transmitted via a communication medium, like email or text at the time of requested authentication.

A physical characteristic that can be digitally analyzed for uniqueness, like a fingerprint, typing speed, facial recognition, or voice pattern with keywords. These are called biometric authentication technologies.
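The first example above, a device or app producing a regularly re-randomized passcode, is commonly implemented with the time-based one-time password algorithm (TOTP, RFC 6238), which builds on HOTP (RFC 4226). The sketch assumes the common defaults of HMAC-SHA1, 30-second steps, and six digits, and it accepts one step of clock drift. In practice, follow your MFA vendor's settings.

```python
# TOTP (RFC 6238) sketch built on HOTP (RFC 4226), using the common defaults
# of HMAC-SHA1, 30-second time steps, and 6 digits.
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP: HMAC-SHA1 over an 8-byte big-endian counter, dynamically truncated."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """TOTP: HOTP where the counter is the number of elapsed time steps."""
    at = time.time() if at is None else at
    return hotp(secret, int(at // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the current code or one from +/- `window` steps (clock drift)."""
    at = time.time() if at is None else at
    if len(submitted) != digits or not submitted.isdigit():
        return False                     # fixed length: no shortened guesses
    current = int(at // step)
    return any(
        hmac.compare_digest(hotp(secret, counter, digits), submitted)
        for counter in range(max(0, current - window), current + window + 1)
    )


if __name__ == "__main__":
    secret = b"12345678901234567890"              # RFC test secret, never reuse
    print(totp(secret, at=59, digits=8))          # 94287082 (RFC 6238 test vector)
    print(verify_totp(secret, "287082", at=75))   # True
```

The code changes every 30 seconds and is derived from a secret stored on the device. A stolen password alone is therefore not enough to authenticate.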

MFA is an identity-specific layer for authentication. Once a user is validated, their assigned privileges are no different, unless policies explicitly require multi-factor authentication to obtain step-up privileges. For example, if credentials are compromised in a traditional username and password model, a threat actor could authenticate against any target that will accept them, locally or remotely. With MFA, an additional factor is required, possibly including physical presence. Yet once you are validated, lateral movement is still possible from your initial location (barring any segmentation technology or policy), unless you must validate again (step-up) to perform a specific privileged task, like authenticating to another remote asset. The difference is solely your starting point for authentication.
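Step-up authentication can be expressed as a small policy check. Routine actions ride on the existing session, but privileged actions require a fresh MFA verification. This is a hypothetical sketch. The action names and the five-minute freshness window are assumptions, not a specific product's behavior.

```python
# Hypothetical step-up policy: routine actions ride on the existing session,
# but privileged actions need a fresh MFA verification. The action names and
# the five-minute freshness window are assumptions.
from dataclasses import dataclass
from typing import Optional

STEP_UP_WINDOW_S = 5 * 60
PRIVILEGED_ACTIONS = {"connect_remote_asset", "modify_iam_policy", "read_secret"}


@dataclass
class Session:
    user: str
    authenticated_at: float
    last_mfa_at: Optional[float] = None   # time of the most recent MFA check


def requires_step_up(session: Session, action: str, now: float) -> bool:
    """True if the user must complete MFA again before this action."""
    if action not in PRIVILEGED_ACTIONS:
        return False
    if session.last_mfa_at is None:
        return True
    return now - session.last_mfa_at > STEP_UP_WINDOW_S


def record_mfa(session: Session, now: float) -> None:
    """Call after a successful MFA challenge."""
    session.last_mfa_at = now
```

Without such a policy, a validated session can move laterally with whatever privileges it already holds. With it, each privileged hop requires the factors again.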

MFA must have all the security conditions met from an entry point, while traditional credentials do not, unless augmented with other security attributes. A threat actor can leverage credentials within a network to laterally move from asset to asset, while changing credentials as needed. Unless the multi-factor system itself is compromised, or they possess an identity’s complete multi-factor challenge and response, the hacker cannot successfully target a multi-factor host for authentication. Hence, there must always be an initial entry point for starting a multi-factor session. Once a session has been initiated, using credentials is the easiest method for a threat actor to continue a privileged attack and accomplish lateral movement.

The continued use of MFA in the cloud is always recommended for step-up authentication, unless you blend it with additional security layers, like Single Sign On (SSO).

Single Sign On (SSO)

Before we dive into Single Sign On, let’s expand on basic authentication. As we have stated, authentication models for end users can be single factor (1FA), two-factor (2FA), or multi-factor (MFA discussed previously). Single-factor authentication is typically based on a simple username and password combination. (Yes – we know, we have stated this multiple times, but you will not forget this based on our repetition, or when it appears on a test). Two-factor is based on something you have and something you know. This includes, for instance, a username and password from single factor (something you know) and a mobile phone or two-factor key fob (something you have). Multi-factor authentication is one step above and uses additional attributes, like biometrics, to validate an identity for authentication.

The flaw with all these authentication models is the “something you know” part, traditionally a password. It can be shared, stolen, hacked, etc., and it is the biggest risk to a business when it is compromised. In fact, to manage a cloud environment, you would probably have to remember dozens of unique passwords or use a password manager. LastPass,7 for example, caused large-scale panic over a purported massive breach of primary passwords. Fortunately, the reports turned out not to be true, but they did create fears of similar cloud-based cyberattacks using credentials.8

If authentication is for cloud assets, the implications of an unauthorized identity gaining access could be devastating, especially for clients using this type of service for Internet-facing assets. Therefore, it is important for organizations to mitigate the issue by replacing passwords (something you know) with something you know that can be changed but cannot be shared or hacked as easily as a password.

Next, consider how many passwords you have for work. Most organizations have hundreds of applications, and if you have embraced digital transformation, you now have hundreds or thousands of cloud-based vendors supplying these applications. This potentially means credentials – username (most likely your email address) and a password – for each one. As a security best practice, you should not reuse your password, which means you have potentially hundreds of unique passwords to remember. This is not humanly possible. We previously mentioned password managers and their potential risks, but a better approach is SSO, especially when combined with MFA.

So, what is SSO? Single Sign On is an authentication model that allows a user to authenticate once, to a defined set of assets, without the need to reauthenticate for a specified period of time, as long as they are operating from the same trusted source. When 2FA or MFA is also applied for the validation, there is a high degree of confidence that the identity is who they say they are. When these methods achieve this high confidence, the associated applications in the defined set can be accessed, without the user having to remember unique passwords for each one over and over again. This SSO model negates the need for a password manager.

Now apply this SSO model to the cloud and all your users. Notice I did not say identities, since SSO is typically only used for human authentication, and not machine-to-machine authentication. From a trusted workstation, an end user authenticates once and can use all the applications they need until they log out, reboot, change the IP address or geolocation, etc. If their primary password is compromised, then only that password needs to be changed, and not passwords for the potentially hundreds of applications hosted by SSO. In fact, if Single Sign On is implemented correctly within an organization, the same end user cannot (and should not be able to) log directly into an application without using the SSO solution. The SSO user should not even know the passwords for the applications operating under SSO. That is all managed by the SSO solution itself, regardless of whether it is injecting credentials or using SAML.

Single Sign On enables a single place to authenticate for all business applications and a single place for credential management. With this in mind, please consider these dos and don’ts for your SSO implementation into the cloud:

Harden your SSO implementation based on the vendor’s best practices.

Enable SSO controls that terminate sessions or automatically log off the user based on asset and user behavioral changes. This includes system reboots, IP address changes, geolocation changes, detected applications, missing security patches, etc.

Select a vendor for SSO that supports open standards, like SAML, and can accommodate legacy applications based on your business needs.

Monitor all SSO authentication logs for inappropriate access, including authentication attempts occurring simultaneously from different geolocations.

Consider a screen monitoring (recording) solution as an add-on for SSO, when sensitive systems are accessed, to ensure user behavior is appropriate.

Identify all applications in scope of your SSO deployment, and ensure they support SAML or another appropriate, secure technology.

Verify that the identity directory services are accurate and up to date.

Consider user privileges when granting SSO access and honor the principle of least privilege.

Enforce session timeouts globally or based on the sensitivity of individual applications.

Don’t allow SSO from untrusted or unmanaged workstations. This includes BYOD, unless absolutely needed, since the security state of the device cannot be measured or managed by your information technology and security team.

Don’t implement SSO using only single-factor authentication. That is just a bad idea since a single, compromised password could expose all delegated applications for that user.

Don’t allow SSO, ever, from end-of-life operating systems and devices that cannot have the latest security patches applied.

Don’t allow end users to perform password resets on managed SSO applications.

Don’t allow personally identifiable changes to an end user’s profile within an application, like account name and password. Changes of this nature can break SSO integration or be used to compromise an application for additional attack vectors.

And DON’T use SSO for your most sensitive privileged accounts – keep them separate. Consider using a PAW and manage the workstation and credentials separately. No one should be able to authenticate once and have a wide variety of privileged access.

Do not allow direct access to an application that can bypass SSO.
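Several of the dos and don'ts above translate directly into session checks that an SSO policy engine would evaluate on each request. The session is terminated when any of the following occurs:

- the device reboots;
- its IP address or geolocation changes;
- the device is unmanaged or missing patches;
- a per-application timeout expires.

This is a minimal sketch. The context fields, application names, and timeout values are hypothetical.

```python
# Sketch of SSO session checks: end the session on a reboot, IP address
# change, or geolocation change, on an unmanaged or unpatched device, or
# when a per-application timeout expires. The context fields, application
# names, and timeouts are hypothetical.
from dataclasses import dataclass
from typing import Dict

DEFAULT_TIMEOUT_S = 8 * 3600
APP_TIMEOUTS_S: Dict[str, int] = {"payroll": 15 * 60, "wiki": 8 * 3600}


@dataclass(frozen=True)
class DeviceContext:
    boot_id: str           # changes on every reboot
    ip_address: str
    country: str           # coarse geolocation
    managed: bool          # enrolled in IT/security management (not BYOD)
    patches_current: bool


@dataclass
class SsoSession:
    user: str
    started_at: float
    bound_context: DeviceContext   # context captured at sign-in


def session_valid(session: SsoSession, current: DeviceContext,
                  app: str, now: float) -> bool:
    """Return False when the session must be terminated and re-authenticated."""
    if not (current.managed and current.patches_current):
        return False                            # untrusted or unpatched device
    if current != session.bound_context:
        return False                            # reboot, IP, or geolocation change
    timeout = APP_TIMEOUTS_S.get(app, DEFAULT_TIMEOUT_S)
    return now - session.started_at <= timeout  # sensitivity-based timeout
```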

While Single Sign On sounds like a solution to the majority of your authentication problems for users in the cloud, a poor implementation can make it a bigger risk than having no SSO at all. When done correctly, SSO forms a foundation for centrally monitoring, managing, and logging all end-user access, and even for consolidating directory services. And note, we have only been discussing end-user access. Remember, SSO does not apply to machine-based identities, and organizations should not include administrative accounts in SSO unless additional security controls are in place. If SSO for administration is needed, consider a privileged access management solution with full session recording and behavioral monitoring.
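Centralized SSO logs make detections such as "impossible travel" straightforward: the same user authenticating from two places too far apart for the elapsed time, including simultaneous logins. This is a hedged sketch, and the log fields and both thresholds are assumptions:

- 900 km/h is roughly airliner speed.
- Hops under 100 km are ignored because IP geolocation is imprecise.

```python
# Sketch of "impossible travel" detection over centralized SSO authentication
# logs. The log fields and both thresholds are assumptions: 900 km/h is
# roughly airliner speed, and short hops are ignored because IP geolocation
# is imprecise.
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

MAX_SPEED_KMH = 900.0
MIN_DISTANCE_KM = 100.0
EARTH_RADIUS_KM = 6371.0


@dataclass
class AuthEvent:
    user: str
    timestamp: float   # seconds since the epoch
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def impossible_travel(events: List[AuthEvent]) -> List[Tuple[AuthEvent, AuthEvent]]:
    """Pairs of consecutive logins per user that imply implausible travel."""
    alerts = []
    last_seen: Dict[str, AuthEvent] = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        prev = last_seen.get(event.user)
        if prev is not None:
            distance = haversine_km(prev.lat, prev.lon, event.lat, event.lon)
            hours = (event.timestamp - prev.timestamp) / 3600
            if distance > MIN_DISTANCE_KM and (hours == 0 or distance / hours > MAX_SPEED_KMH):
                alerts.append((prev, event))
        last_seen[event.user] = event
    return alerts
```

For example, a login from New York followed one hour later by a login from London (about 5,570 km) is flagged. A London login followed by Paris many hours later is not.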

Identity As a Service (IDaaS)

The discussion on MFA and SSO depends on having a valid identity and account relationship. On-premise, for many organizations, identity management starts with Microsoft’s Active Directory and is linked with multiple, disparate solutions using identity governance and administration (IGA) solutions. In the cloud, the proposition is the same, but just like everything else, it is different enough to have substantive implications.

There is a strong need for identity governance to support cloud-based solutions, but also to be compatible with, or even to replace, on-premise technology. The complexity of having multiple directory services is highly undesirable for any organization. This complexity can create challenges around performing a certification for appropriate access, or providing an attestation that proves the scope of access within an organization (based on role, application, or even data type) is accurate. This is where Identity as a Service (IDaaS) has stepped in. It uses the cloud to solve a fundamental problem in the cloud for identity management and governance. IDaaS offers several benefits:

A single source of authority for all identities used for forensics, indicators of compromise, logging, certifications, and attestations, without the need to reconcile the same account across multiple directory services.

The joiner, mover, or leaver process for identity governance is greatly simplified, including the recording of all changes to an identity throughout its life cycle.

Identifying rogue, orphaned, or shadow IT identities requires auditing only one directory service (apart from any local accounts). This also minimizes the need for local accounts.

A consolidated solution can reduce the costs and workforce required to maintain and service multiple directory services.

If a security incident requires modifications to an identity and its associated accounts, changes are needed only in a single location vs. manual changes across multiple directory services, some of which could be missed.
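With a single authoritative directory, the search for orphaned or shadow accounts in downstream applications reduces to a set difference. This minimal sketch uses hypothetical application names and account IDs. Comparing IDs case-insensitively is an assumption about how your applications store them.

```python
# Sketch: with one authoritative directory, finding orphaned or shadow
# accounts in downstream applications becomes a set difference.
# Application names and account IDs are hypothetical; IDs are compared
# case-insensitively as an assumption.
from typing import Dict, Iterable, List


def orphaned_accounts(directory_ids: Iterable[str],
                      app_accounts: Dict[str, Iterable[str]]) -> Dict[str, List[str]]:
    """Map each application to accounts that have no matching directory identity."""
    authoritative = {identity.lower() for identity in directory_ids}
    findings: Dict[str, List[str]] = {}
    for app, accounts in app_accounts.items():
        orphans = sorted({account.lower() for account in accounts} - authoritative)
        if orphans:
            findings[app] = orphans
    return findings


if __name__ == "__main__":
    directory = ["alice@example.com", "bob@example.com"]
    apps = {
        "crm": ["alice@example.com", "carol@example.com"],  # carol has left
        "hr": ["BOB@example.com"],
    }
    print(orphaned_accounts(directory, apps))   # {'crm': ['carol@example.com']}
```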

Managing identities in the cloud is crucial to all remote access, automation, DevOps pipelines, administrative access, maintenance, backups, etc.; any one of these can be abused as an attack vector, as we have been discussing. Therefore, managing identities with a solution in the cloud, one that is made for the cloud, represents the best model and architecture to ensure that the management of identities, and all their associated accounts, does not become an attack vector in itself.

Cloud Infrastructure Entitlement Management (CIEM)

Cloud Infrastructure Entitlement Management (CIEM, but pronounced “Kim” – but not Ensign Harry Kim) is the next generation of solutions for discovering and managing permissions and entitlements, evaluating access, and implementing least privilege in the cloud. The goal of CIEM is to tackle the shortcomings of current identity and access management (IAM) solutions while addressing the need for identity management in cloud-native solutions. While the CIEM concept can be applied to organizations utilizing a single cloud, the primary benefit is a standardized approach that extends across multiple cloud and hybrid cloud environments, continuously enforces the principle of least privilege, and measures entitlement risk in a uniform manner.

CIEM solutions can address cloud attack vectors by ensuring the principle of least privilege is consistently and rigorously applied across a cloud and multicloud environment. Least privilege in the cloud entails enabling only the necessary privileges, permissions, and entitlements for a user (or machine identity) to perform a specific task. Privileged access should also be ephemeral or finite in nature.

CIEM is a new class of solutions, built entirely in the cloud and for the cloud, allowing organizations to discover, manage, and monitor entitlements in real time and model the behavior of every identity across multiple cloud infrastructures, including hybrid environments. The technology is designed to provide alerts when a risk or inappropriate behavior is identified and to enforce least privilege policies for any cloud infrastructure, with automation to change policies and entitlements. This makes it simple for a solution owner to apply the same policies across what have traditionally been incompatible cloud resources.

Provides a consolidated and standardized view for identity management in multicloud environments and allows the granular monitoring and configuration of permissions and entitlements.

The cloud provides a dynamic infrastructure for assets to be constructed and torn down based on demand and workload. If overly provisioned, the management of identities for these use cases can lead to excessive risk. CIEM provides an automated process to ensure that all access is appropriate, regardless of the state in a workflow.

Identity and access management (IAM) solutions that cloud providers offer are designed to work only within the provider’s own cloud. When organizations use multiple providers, instrumenting policies and runtime to manage them becomes a burden due to the inherent dissimilarities. CIEM solves this problem by logically enumerating the differences and providing a single view of entitlements, with actionable guidance for enforcing least privilege.

Mismanagement of identities in the cloud can lead to excessive risk. A security incident is bound to happen without a proactive approach to managing cloud identities and their associated entitlements. This is especially true if an identity is over-provisioned. Implementing management and the concept of least privilege for these identities can lower risk for the entire environment.
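To make the "single view" across dissimilar provider models concrete, the sketch below normalizes two real permission string formats into one model:

- AWS IAM actions such as `s3:GetObject`;
- Azure RBAC actions such as `Microsoft.Storage/storageAccounts/read`.

The read/write/admin classification is a simplified heuristic assumed for illustration; it is not a complete mapping of either provider's permission model. Service names are left in each provider's own terms.

```python
# Sketch: normalize entitlements from two providers into one model so that
# least-privilege checks run the same way everywhere. The read/write/admin
# classification is a simplified heuristic, not a complete mapping of
# either provider's permission model, and service names are left native.
from dataclasses import dataclass
from typing import Iterable, List, Tuple

AWS_READ_PREFIXES = ("Get", "List", "Describe")


@dataclass(frozen=True)
class Entitlement:
    cloud: str
    identity: str
    service: str
    level: str   # "read", "write", or "admin"


def from_aws(identity: str, action: str) -> Entitlement:
    """Normalize an AWS IAM action such as 's3:GetObject' or 's3:*'."""
    if action == "*":
        return Entitlement("aws", identity, "*", "admin")
    service, verb = action.split(":", 1)
    if verb == "*":
        level = "admin"
    elif verb.startswith(AWS_READ_PREFIXES):
        level = "read"
    else:
        level = "write"
    return Entitlement("aws", identity, service.lower(), level)


def from_azure(identity: str, action: str) -> Entitlement:
    """Normalize an Azure RBAC action such as 'Microsoft.Storage/storageAccounts/read'."""
    if action == "*":
        return Entitlement("azure", identity, "*", "admin")
    provider = action.split("/", 1)[0]              # e.g., "Microsoft.Storage"
    operation = action.rsplit("/", 1)[-1]           # e.g., "read", "write", "*"
    service = provider.split(".", 1)[-1].lower()    # e.g., "storage"
    if operation == "*":
        level = "admin"
    elif operation == "read":
        level = "read"
    else:
        level = "write"
    return Entitlement("azure", identity, service, level)


def admin_identities(entitlements: Iterable[Entitlement]) -> List[Tuple[str, str]]:
    """One cross-cloud view of identities holding admin-level entitlements."""
    return sorted({(e.cloud, e.identity) for e in entitlements if e.level == "admin"})
```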

When CIEM is used with adjacent privileged access management solutions, the management of secrets, passwords, least privilege, and remote access can all be unified to ensure that any gaps in entitlements or privileges are addressed and access is right-sized to the use cases/needs.

With increasing momentum behind digital transformation strategies, the use of cloud environments has exceeded the basic capabilities of legacy on-premise PAM and IAM solutions. Those solutions were never designed to manage the cloud or the dynamic nature of resources in the cloud. CIEM is fast becoming a must-have solution for managing cloud identities, alongside PAM.

Account and Entitlements Discovery: Your CIEM implementation should inventory all identities and entitlements and appropriately classify them. This is performed in real time to adjust for the dynamic nature of cloud environments and the ephemeral properties of resources in the cloud.

Multicloud Entitlements Reconciliation: As workloads expand across cloud environments, organizations must reconcile accounts and entitlements and identify which ones are unique per cloud and which ones are shared, using a uniform model to simplify management.

Entitlements Enumeration: Based on discovery information, entitlements can be reported, queried, audited, and managed by entitlement type, permission, and user. This allows information to be pivoted to meet objectives and to manage identities and entitlements based on classification.

Entitlements Optimization: Based on real-time discovery, the operational usage of entitlements helps identify over-provisioning and which identities can be optimized for least privilege access, based on empirical usage.

Entitlements Monitoring: Real-time discovery also affords the ability to identify any changes in identities and entitlements, thus providing alerting and detection of inappropriate changes that could be a liability for the environment, processes, and data.

Entitlements Remediation: Based on all the available data, CIEM can recommend and, in most cases, fully automate the removal of identities and associated entitlements that violate established policies or require remediation to enforce least privilege principles.
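The optimization and remediation steps above can be illustrated with a small, hypothetical Python sketch: permissions that have not been used within an observation window are proposed for removal, leaving the empirically used set. The 90-day window, the permission names, and the function names are assumptions for illustration; real CIEM products derive usage from provider activity logs and apply far richer policy.

```python
from datetime import datetime, timedelta, timezone


def unused_entitlements(granted, last_used, now, window_days=90):
    """Permissions never used, or not used since `now - window_days` (window is an assumption)."""
    cutoff = now - timedelta(days=window_days)
    return {p for p in granted if p not in last_used or last_used[p] < cutoff}


def remediation_plan(identity, granted, last_used, now, window_days=90, auto=False):
    """Propose a right-sized grant; `auto` mimics fully automated removal vs. a recommendation."""
    remove = unused_entitlements(granted, last_used, now, window_days)
    return {
        "identity": identity,
        "keep": set(granted) - remove,
        "remove": remove,
        "action": "apply" if auto else "recommend",
    }


# Example: a service account granted three permissions but using only one recently.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
granted = {"storage:read", "storage:write", "iam:create_user"}
last_used = {
    "storage:read": now - timedelta(days=3),
    "storage:write": now - timedelta(days=200),
}
proposal = remediation_plan("report-service", granted, last_used, now)
```

The `auto` flag reflects the difference between recommending a change and fully automating it; which one is appropriate depends on how much disruption an organization will accept if a rarely used permission turns out to be needed.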

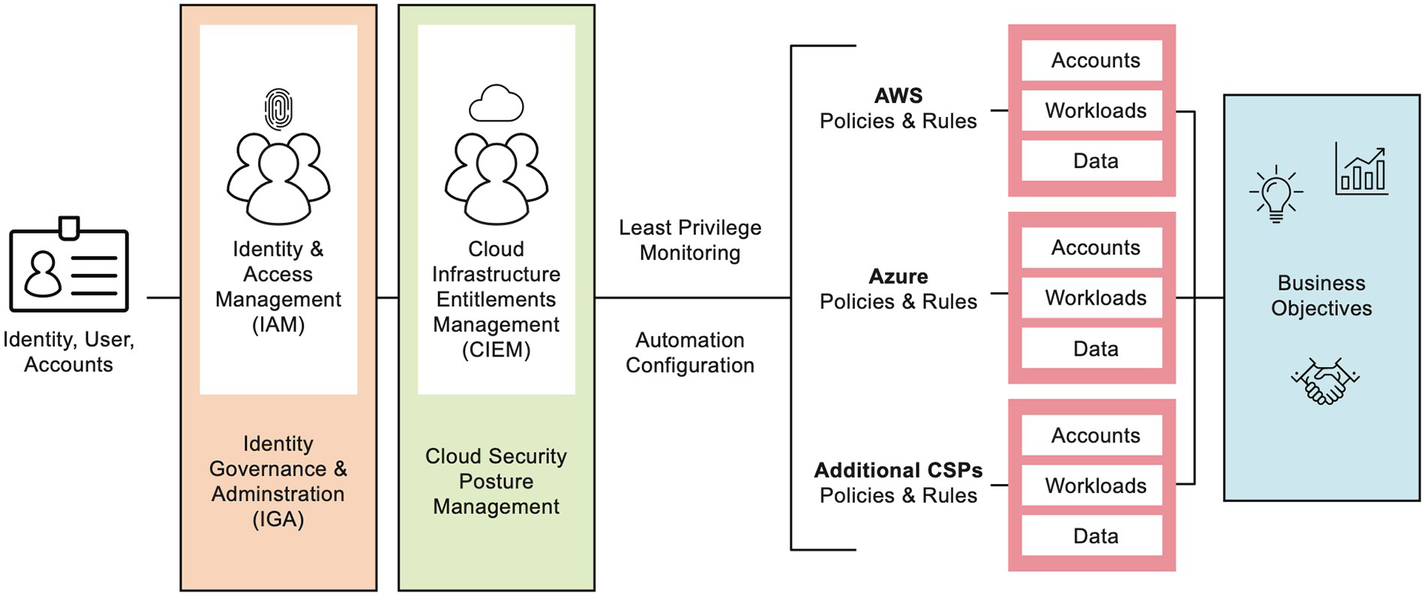

A model diagram of the typical Cloud Infrastructure Entitlement Management solution structure. The parts are User, Identity Access Management, Cloud Security Posture Management, different policies under AWS, Azure, and additional CSPs, and the business objectives.

Architecture for a CIEM solution

API-based connectors to enumerate identities and entitlements per cloud instance and vendor

Database for storage of current and historical identities, entitlements, and remediation policies

Policy engine for identifying threats, changes, and inappropriate identity and entitlement creation and assignments

User interface for managing the solution and aggregating multicloud information into a single view

The primary reason CIEM succeeds where legacy on-premise solutions fall short is its API-based connectors, which operate in real time. CIEM continuously assesses the state of identities and entitlements, evaluates them with a policy engine, and supports automation tailored to identify risks in cloud environments. This allows a user to see attributes for all cloud providers in a single interface using common terminology (much of which has been previously discussed). On-premise technology generally relies on batch-driven discovery over the network using agents, IP addresses, or asset lists resolved through DNS. API-based discovery returns far more complete and accurate results than network scanning, which is prone to errors.
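The four architectural components and the continuous assessment loop described above might fit together roughly as follows. This is a minimal Python sketch under stated assumptions: `CloudConnector`, `StaticConnector`, `EntitlementStore`, and the single `wildcard-permission` rule are hypothetical names, and `StaticConnector` stands in for a real provider API call so the example runs offline. The user interface is omitted.

```python
from abc import ABC, abstractmethod


class CloudConnector(ABC):
    """API-based connector; one subclass per cloud provider (hypothetical interface)."""

    provider = "unknown"

    @abstractmethod
    def list_entitlements(self):
        """Return a set of (identity, permission) pairs currently granted."""


class StaticConnector(CloudConnector):
    """Offline stand-in for a provider API call, so the sketch can run anywhere."""

    def __init__(self, provider, grants):
        self.provider = provider
        self._grants = set(grants)

    def list_entitlements(self):
        return set(self._grants)


class EntitlementStore:
    """The 'database' component: keeps current and historical snapshots."""

    def __init__(self):
        self.history = []

    def record(self, snapshot):
        self.history.append(snapshot)

    def previous(self):
        return self.history[-2] if len(self.history) > 1 else set()


# Policy engine: each rule returns True when a (provider, identity, permission) entry violates policy.
RULES = {
    "wildcard-permission": lambda entry: entry[2] == "*",
}


def assess(store, connectors):
    """One pass of the continuous loop: collect, store, diff, and evaluate new grants."""
    snapshot = {(c.provider, ident, perm) for c in connectors for ident, perm in c.list_entitlements()}
    store.record(snapshot)
    added = snapshot - store.previous()
    removed = store.previous() - snapshot
    findings = [(name, entry) for entry in sorted(added) for name, rule in RULES.items() if rule(entry)]
    return added, removed, findings
```

Running `assess` on a schedule (or on provider change events) and diffing each snapshot against the previous one is what turns discovery into the monitoring and alerting capabilities listed earlier; here a newly granted `*` permission would be reported as a finding on the next pass.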

Customer Identity and Access Management (CIAM)

Customer Identity and Access Management (CIAM) is a subset of the larger identity access management (IAM) market and focuses on managing the identities of customers (external to the business) who need access to corporate websites, cloud portals, and online applications. Instead of managing identities and accounts in every instance of a company's software application, the identity is managed in a CIAM service, making it reusable. The most significant difference between CIAM and business (internal) IAM is that CIAM enables consumers of the service to manage their own accounts, profile data, and what information can, and should, be shared with other organizations.

In many ways, CIAM begins to fulfill the mission of BYOI (bring your own identity), but only for the consumer world. In our opinion, it is only a matter of time before this enters the business world, in lieu of repeatedly filing our banking information and employment history details.

As a solution, CIAM is how companies give their end consumers access to their digital resources, as well as how they govern, collect, analyze, market, and securely store data for consumers that interact with their services. CIAM is a practical implementation that blends security, customer experience, and analytics to aid consumers and businesses alike. CIAM is designed to protect sensitive data from exploitation or exfiltration and to help organizations comply with regional data privacy laws.

With this definition in mind, building a homegrown CIAM solution is typically out of reach for most organizations because of the complexity, data privacy, and security requirements of such a vast undertaking. Therefore, many organizations license CIAM as a Service to meet their business objectives in the cloud.

1. Scalability: Traditional cloud or on-premise IAM solutions manage thousands of identities associated with employees, contractors, vendors, and machine accounts, using role-based access to static lists of resources and applications. In contrast, CIAM may need to scale to millions of identities, depending on the consumer implementation. While this demand may burst based on events or seasonal activity, CIAM must scale to meet it, natively leveraging cloud-based features to operate correctly. Fixed implementations of CIAM generally cannot scale appropriately when high transactional loads are placed on the system.

2. Single Sign-On (SSO): SSO allows an identity to authenticate to one application and automatically be authenticated to additional applications based on trust inherited from the initial application. A common SSO example is Google G Suite (now Google Workspace). Once authenticated, an identity has access to YouTube, Google Drive, and other Google applications hosted on the Alphabet platform. SSO is a feature of federated identities, and this implementation is designed for consumers to use these services transparently while managing their own account and profile. In effect, consumers are the administrators of their own information, in contrast to IAM in an organization, which is managed by human resources and the information technology team.

3. Multi-factor Authentication (MFA): MFA is designed to mitigate the risks of single-factor authentication (username and password) by adding another factor to the authentication process. Because passwords alone are a prime target for threat actors, this additional factor can be provided using various methods. Typical CIAM implementations of MFA can use:

A one-time PIN sent via SMS to the user's mobile device, or via email

A confirmation email with a unique URL or PIN

A dedicated MFA mobile application, like Microsoft Authenticator or Google Authenticator

A biometric credential, like a fingerprint or face recognition leveraging a trusted device's embedded technology

An automated voice call requiring a response via keypad or voice to confirm the action

4. Identity Management: Identity management in your solution needs to be centralized and scalable, with distinct self-service features for end users (consumers). Centralized identity management eliminates data silos and data duplication and helps drive compliance by simplifying data mapping and data governance. All information about an identity is in a central location for management, security, auditing, and analytics processing. And if an identity needs to be deleted, CIAM solutions can appropriately mark it for removal without leaving orphaned information behind. This is a major concern for data privacy requirements like GDPR (covered in the following).

5. Security and Compliance: Data privacy laws like GDPR and CCPA are fundamentally changing how organizations collect, store, process, and share personally identifiable information. These legal obligations have a substantial impact on how you implement and manage your CIAM solution. As discussed, centralized identity management provides a foundation for understanding where all of a user's sensitive information resides. Depending on the specific requirement, security and compliance can be managed by:

Allowing organizations to provide users, upon request, with copies of their data.

Allowing organizations to provide users, upon request, with audit records of how their data is being used.

Ensuring initiatives like MFA are being implemented against the appropriate identity and not another record, location, or directory service for the same identity.

Implementing “reasonable” and/or “appropriate” security measures to safeguard all identities.

Ensuring all identity information is appropriately stored and encrypted, since it is centralized.

Auditing access to all identity-related information for forensics and other compliance initiatives, like PCI and HIPAA.

A properly implemented CIAM solution will help meet all of these objectives, which are common to many regional compliance initiatives.

6. Data Analytics: One of the primary objectives of CIAM is to provide a consolidated analytics view of usage and behaviors based on federated (and sometimes unfederated, such as guest users that are not centrally managed) identities. This single view of consumers provides myriad business advantages and also helps organizations meet auditing requirements for data privacy because of centralization and confidence in the security model. Automation and analytics can help drive a customized experience, allow for targeted marketing, drive higher retention rates, and provide business insight into critical consumer trends. If properly analyzed and correlated against variables outside the organization, this data can be a significant competitive advantage.
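Dedicated authenticator applications, such as those listed under MFA, commonly generate time-based one-time passwords as defined in RFC 6238 (TOTP), which builds on the HMAC-based algorithm in RFC 4226 (HOTP). The Python sketch below shows the core calculation with HMAC-SHA1, 30-second steps, and six digits, which are common defaults; the `verify` helper and its one-step clock-drift allowance are illustrative assumptions, not a specific CIAM vendor's implementation. It omits secret provisioning, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the count of `step`-second intervals since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None, step: int = 30, drift: int = 1) -> bool:
    """Accept a code from the current step or +/- `drift` steps to tolerate clock skew (assumed policy)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + k * step, step, len(submitted)), submitted)
        for k in range(-drift, drift + 1)
    )
```

Because the server and the user's device share only the secret and the current time, no code travels over SMS or email, which is one reason authenticator applications are often preferred over the SMS option. `hmac.compare_digest` is used so the comparison does not leak timing information.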

Ergo (and not Star-Lord's father Ego), a well-implemented CIAM solution can achieve the goal of centralized, data-rich consumer profiles that function as a single source of truth about identities and their behavior for consumption by third parties.

Cloud Security Posture Management (CSPM)

Cloud Security Posture Management (CSPM) is a product and market segment designed to identify cloud configuration anomalies and compliance risks associated with cloud deployments of any type. CSPM solutions are designed to operate in real time (or as near real time as possible), continuously monitoring cloud infrastructure for risks, threats, and indicators of compromise stemming from misconfigurations, vulnerabilities, and inappropriate hardening. As discussed, these are three critical areas to manage to mitigate cloud attack vectors. Therefore, the primary goal is to enforce your organization's risk tolerance policy for these three security disciplines.

CSPM as a solution category was originally named by Gartner,9 a leading IT research and advisory firm. CSPM solutions use agent and agentless technology (cloud APIs) to compare a cloud environment against policies and rules for best practices and known security risks. If a threat is identified, some solutions can mitigate the risk automatically, while others focus on alerting and documentation for manual intervention.

While using Robotic Process Automation (RPA) to remediate a potential issue is considered more advanced, false positives, denial of service, account lockouts, or other undesirable effects can occur if remediation interferes with the normal workload. This is where risk tolerance becomes an important factor in choosing between notification, automatic remediation, or a hybrid model that requires manual approval before automation runs.
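The choice between notification, automatic remediation, and approval-gated automation can be expressed as a policy keyed on severity. The Python sketch below evaluates two hypothetical configuration rules against a simplified, provider-neutral description of resources and routes each finding according to an example risk-tolerance mapping; the rule names, resource fields, and the mapping itself are assumptions, and a real CSPM would read configuration through each provider's APIs.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    resource_id: str
    rule: str
    severity: str


# Hypothetical rules over a simplified, provider-neutral resource description.
RULES = {
    "storage-public-access": lambda r: r.get("type") == "bucket" and r.get("public", False),
    "storage-encryption-disabled": lambda r: r.get("type") == "bucket" and not r.get("encrypted", True),
}
SEVERITY = {"storage-public-access": "high", "storage-encryption-disabled": "medium"}

# Example risk-tolerance policy: how each severity is handled.
RESPONSE_POLICY = {"high": "auto_remediate", "medium": "approval_required", "low": "notify"}


def assess(resources):
    """Evaluate every resource against every rule and collect violations."""
    return [
        Finding(r["id"], name, SEVERITY[name])
        for r in resources
        for name, rule in RULES.items()
        if rule(r)
    ]


def route(findings, policy=RESPONSE_POLICY):
    """Decide notification, automatic remediation, or approval-gated automation per finding."""
    return [(f.resource_id, f.rule, policy[f.severity]) for f in findings]
```

A more risk-averse organization could pass a policy that maps every severity to `"notify"`, trading slower remediation for protection against the lockouts and outages that automated fixes can cause.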

Automatically detects and remediates cloud misconfigurations in real time (or near real time)

Provides a catalog and reference inventory of best practices for different cloud service providers and their services, including how they should be hardened using native recommendations or third-party recommendations, such as those from the Center for Internet Security (CIS)

Provides a reference map of current runtime and dormant configuration to established compliance frameworks and data privacy regulations

Provides monitoring for storage buckets, encryption, account permissions, and entitlements for the entire instance and reports back findings based on compliance violations and actual risk

Inspects the environment in real time (or near real time) for publicly disclosed vulnerabilities in operating systems, applications, or infrastructure

Performs file integrity monitoring of sensitive files to ensure they are not tampered with or exfiltrated

Operates and supports multiple cloud service providers, including all the major vendors listed in Chapter 5
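File integrity monitoring, listed above, usually comes down to comparing cryptographic digests of sensitive files against a known-good baseline. The following is a minimal Python sketch, assuming SHA-256 digests and a baseline captured while the system was trusted; the function names are hypothetical, and a real agent would also protect the baseline itself from tampering. Note that digest comparison detects modification or deletion; by itself it does not reveal whether a file was read or copied.

```python
import hashlib
from pathlib import Path


def fingerprint(paths):
    """Map each monitored path to its SHA-256 hex digest, or None if the file is missing."""
    digests = {}
    for p in map(Path, paths):
        digests[str(p)] = hashlib.sha256(p.read_bytes()).hexdigest() if p.is_file() else None
    return digests


def compare(baseline, current):
    """Return (changed, missing): files whose digest differs from the baseline, and files that disappeared."""
    changed = sorted(p for p, h in baseline.items() if current.get(p) is not None and current[p] != h)
    missing = sorted(p for p, h in baseline.items() if h is not None and current.get(p) is None)
    return changed, missing
```

In practice, `fingerprint` would run on a schedule, and any non-empty result from `compare(baseline, fingerprint(paths))` would raise an alert for investigation.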

CSPM tools play an essential role in securing a cloud environment by reducing the attack vectors available to a threat actor. It is also important to note that some of these tools are specific to a cloud service provider and will not operate across other cloud environments unless the solution provider has explicitly added support. For instance, some tools may detect anomalies in AWS or Azure, but not in GCP.

As a mitigation strategy for cloud attack vectors, all organizations should consider some form of CSPM solution above and beyond their traditional security tools. This is one place where the best practices have stayed the same, but the tool has evolved to solve the problem.

Cloud Workload Protection Platform (CWPP)

Cloud Workload Protection Platform (CWPP) is another solution category coined by Gartner.10 It is a workload-centric security solution that focuses on the protection requirements of workloads in the cloud and on the automation present in modern enterprise cloud and multicloud environments.

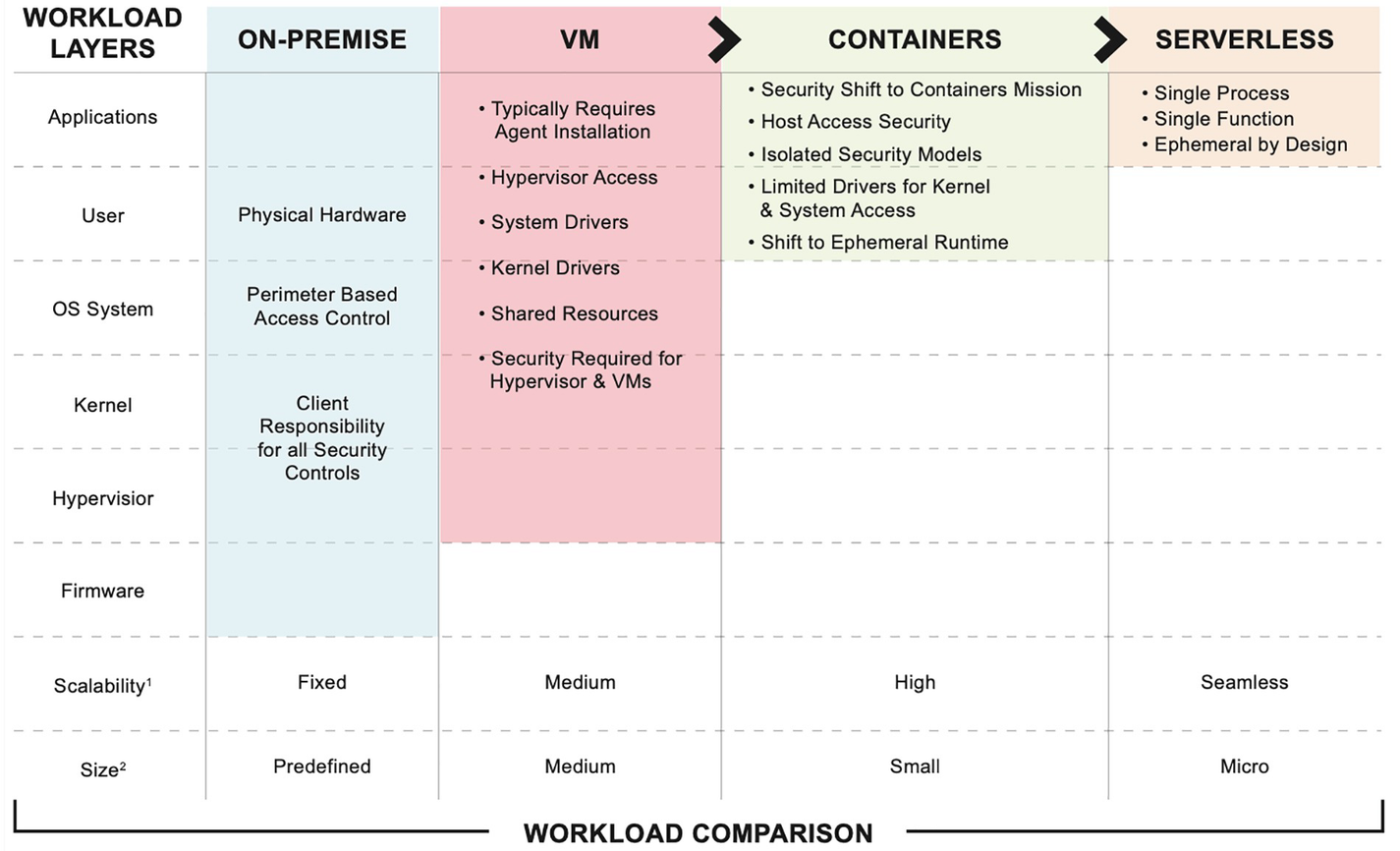

A model diagram of the transition of computer resources from on-premise systems to those hosted in the cloud and those without servers. The phases are On-Premise, VM, Containers, and Serverless.

Evolution of computing resources from on-premise to cloud and serverless environments