CHAPTER 7

Operations, Management, and Orchestration in the Cloud

The first words in the title of this chapter refer to the means of supporting the Operations and Management (OA&M) of the Cloud infrastructure. While the practice of operations and management has been fairly well understood and even partly standardized, the word “orchestration” remains somewhat ambiguous, one of the most misused words in the industry. Yet the concept of orchestration is critical to Cloud Computing. Our first task is to clarify this concept.

Things were simpler in the 19th and 20th centuries,1 when orchestration simply referred to the task, performed by a composer, of writing a score for an ensemble of musical instruments (typically a symphonic orchestra). The same word has also referred to the musical discipline—taught in conservatories as part of a composition curriculum—of writing for orchestra. The discipline catalogs the musical characteristics (range, timbre, technical difficulties, and idioms) of the representatives of various groups of instruments (strings, woodwind, brass, and percussion)—the subject also referred to as instrumentation—and teaches how different individual instruments may be combined or juxtaposed to achieve the sound color and balance envisioned by a composer. It should be noted that the physical characteristics of the instruments employed in the modern symphonic orchestra have been largely standardized, and orchestra performers have been trained according to this standard. That makes the instrumentation part rather precise (in specifying, for example, which trill is easy to play on a trombone, and which one is impossible to play). On the other hand, the orchestration proper—that is, the part that deals with combining the sound qualities of various instruments to achieve new effects—can do nothing more beyond listing a few generic principles and then bringing in various examples from the work of masters to illustrate the effects created. If one follows these examples as rules, one cannot create new effects. Yet, great composers (notably Richard Wagner in the 19th century and Maurice Ravel and Igor Stravinsky in the 20th century) have revolutionized orchestration by discovering new and striking sound combinations that fit their respective artistic visions. Once their music became known and accepted, their scores became a new source for teaching orchestration. We refer interested readers to an excellent book on orchestration [1], from which at least one author has learned much.

The meaning of orchestration in Cloud Computing is not that dissimilar from that of its original counterpart in music. In the Cloud, the “instruments” are the resources described in the previous chapters. The word “instruments” refers to both physical resources (i.e., hosts, storage, and networking devices) and software resources (hypervisors and various operating systems), all of which are “played” with the single purpose of introducing and supporting Cloud services.

Two NIST Cloud architecture publications [2, 3] address this subject with the following definition:

“Service Orchestration refers to the composition of system components to support the Cloud providers' activities in arrangement, coordination and management of computing resources in order to provide Cloud services to Cloud Consumers.”

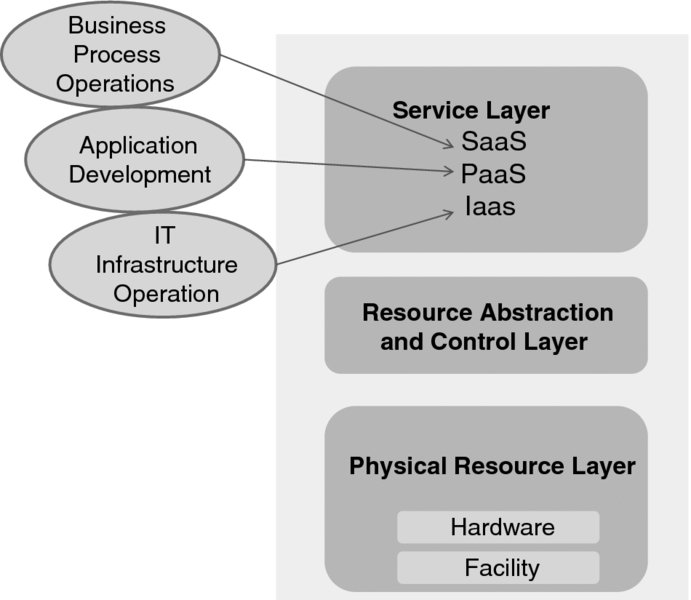

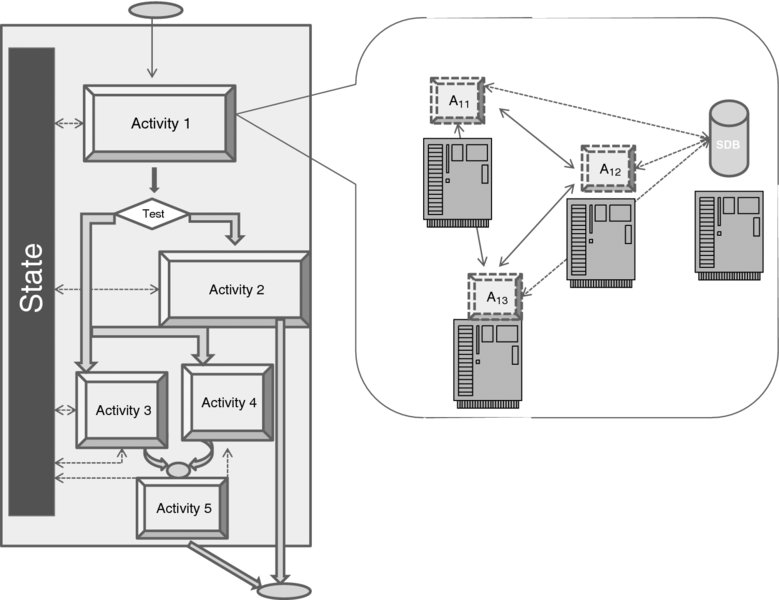

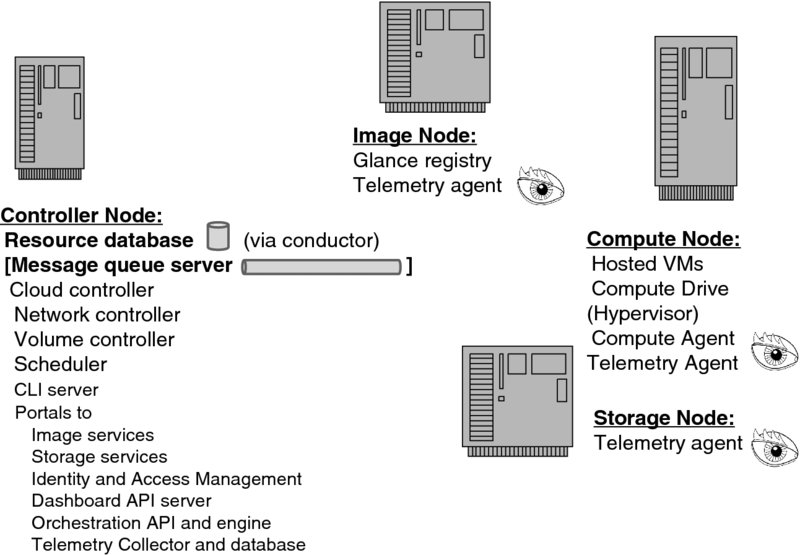

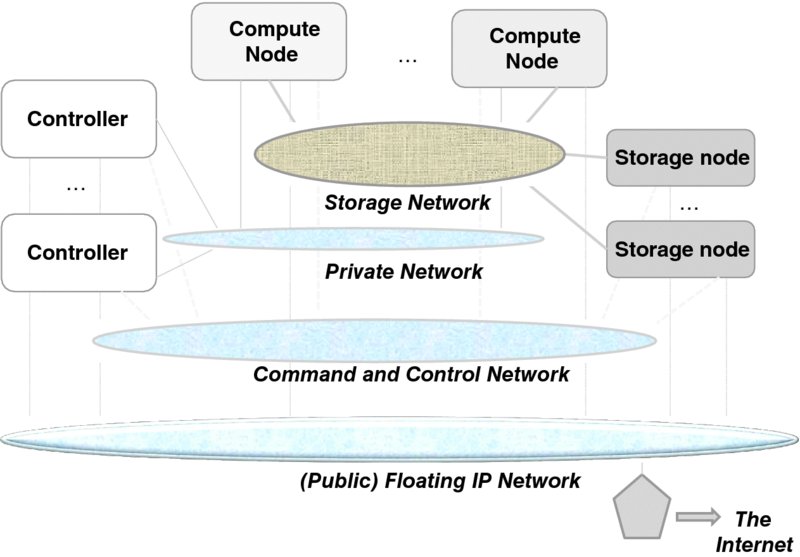

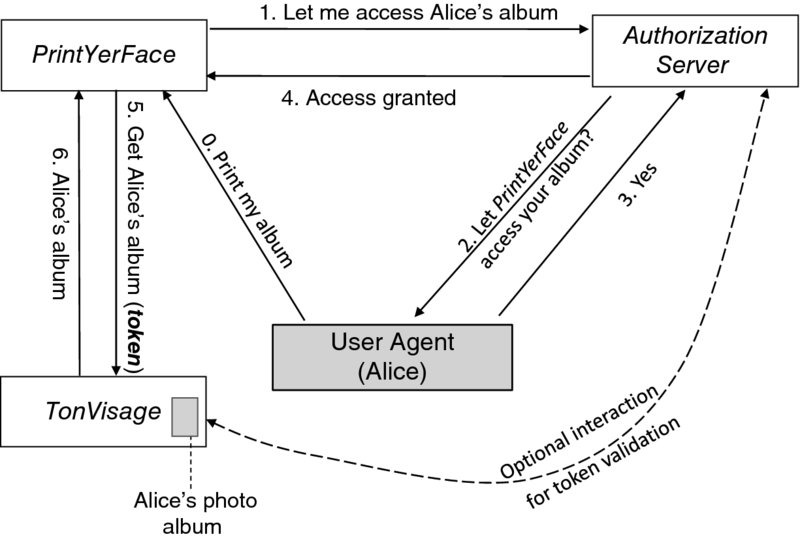

The NIST Cloud Computing reference architecture [3] illustrates the task of orchestration (depicted here in Figure 7.1).

Figure 7.1 Service orchestration (after NIST SP 500-292).

The NIST three-layered model represents the grouping of the components that a Cloud provider deals with. On the top is the service layer where the service-access interfaces are defined. The middle layer contains the system components that provide and manage access to the physical computing resources through software. The control aspect here relates to resource allocation, access control, and monitoring. NIST refers to these as “the software fabric that ties together the numerous underlying physical resources and their software abstractions to enable resource pooling, dynamic allocation, and measured service.” At the bottom of the stack is the physical resource layer, which includes all hardware resources—computers, storage components, and networks, but also “facility resources, such as heating, ventilation and air conditioning (HVAC), power, communications, and other aspects of the physical plant.”

How exactly these resources are coordinated is not specified in [3]; we will provide some “under-the-hood” views later in this chapter. (In particular, we will extend the above model in Section 7.3.) What is important is that the NIST description underlines the distinction between service orchestration and service management tasks performed by a Cloud provider. Service management includes “all of the service-related functions that are necessary for the management and operation of those services required by or proposed to Cloud consumers.” The three service management categories are business support, provisioning and configuration, and portability and interoperability.

In the business support category are the tasks of customer-, contract-, and inventory management, as well as accounting and billing, reporting and auditing, and pricing and rating. The actual operation of the Cloud is the subject of the provisioning and configuration category, whose tasks include provisioning, resource change, monitoring and reporting, metering, and SLA management. Finally, data transfer, VM image migration, and the all-encompassing application and service migration are the tasks of portability and interoperability, accomplished through the unified management interface.

To understand what is actually involved, it is necessary to distinguish the components and observe the respective evolution of each of them separately.

In the first section of this chapter we discuss the evolution of the concept of orchestration in the enterprise (i.e., IT) industry—where the concept was actually born at the turn of the century.

In the second section, we review the discipline of network and operations management, with the emphasis on the evolution of the operations support systems. Note that, the word “network” aside, network management is a purely software matter. Network management is used both in the enterprise and telecommunications industries, but naturally it originated in the telecom world. In the context of this discussion we will also review several widely implemented standards.

The third section of this chapter synthesizes the above concepts in the context of Cloud, where hosted services (along with the appropriate orchestration tools) have been offered to an enterprise by the Cloud provider (who naturally needs its own tools to orchestrate the services). As might be expected, there is not much in the way of history here, let alone standards; but the history is being made right now, at the time of this writing, and it is happening very fast with multiple open-source initiatives!

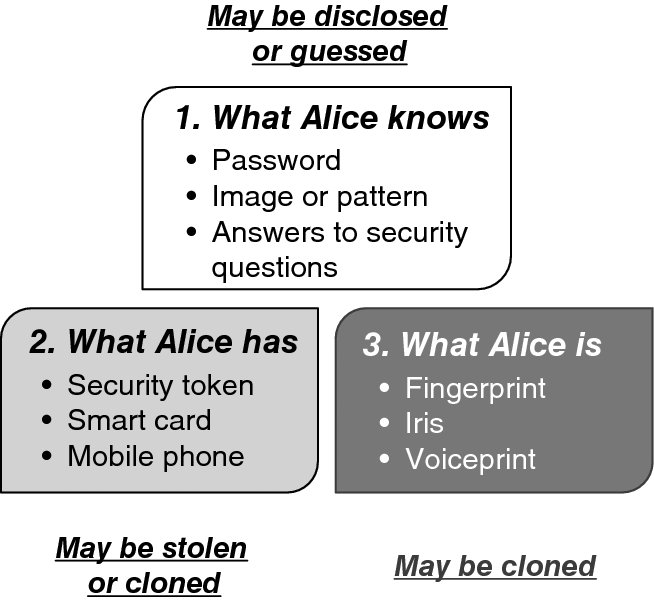

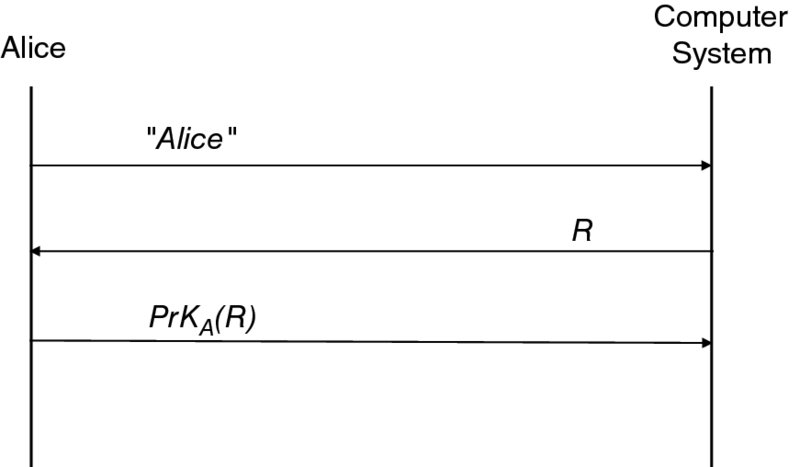

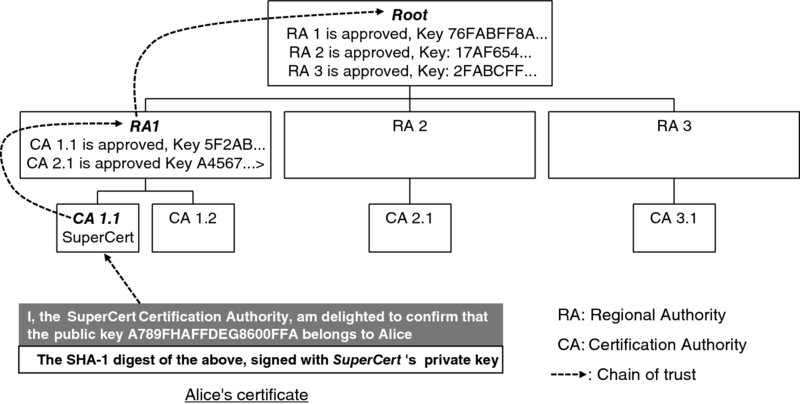

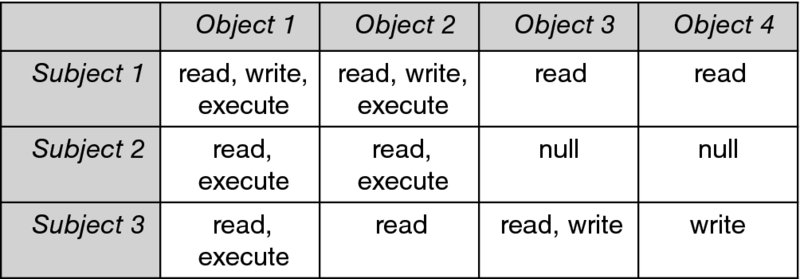

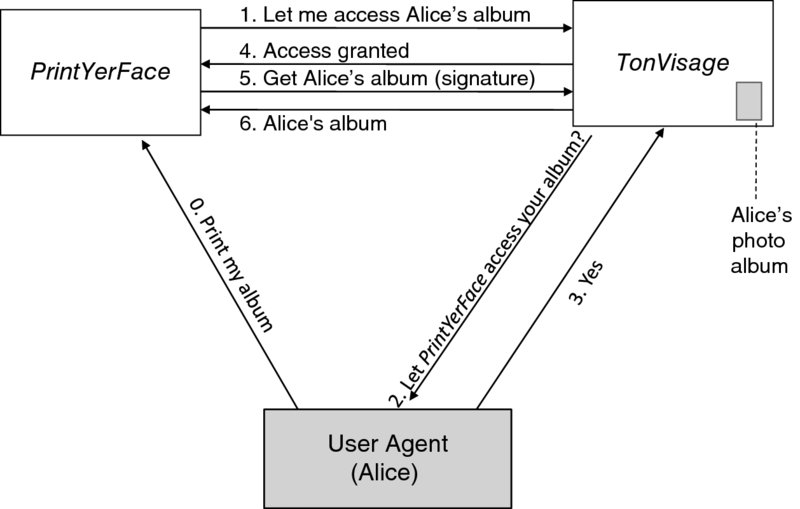

The fourth and last section of this chapter deals with the subject of identity and access management. We have mentioned that before, and we repeat it now: the success of security in general and identity management in particular has been considered by many the single most important matter that the industry needs to deal with. Needless to say, the authors—who have been working on this very subject for more than a decade—subscribe to this view.

7.1 Orchestration in the Enterprise

The origin of the term dates back to the information technology movement developed in the early 2000s. The movement has been known as Service-Oriented Architecture (SOA). As we will see later in this section, SOA “died” in 2009—at least this is when its obituary was written—but the overarching idea and the objectives of the movement are still alive and well!

The major motivation was to break the old model of developing and maintaining monolithic applications2 by harnessing modularity and enabling distributed processing.

Of course, modularity has been the holy grail of software since at least the 1960s, and much has been accomplished in the years that have passed. ALGOL-60—the progenitor of all structured high-level programming languages used to date—provided the mechanisms for modules to have independent variable naming so that they could interact with one another only through clearly defined parameter-based interfaces. Once the interfaces were defined, the modules could be developed independently (by the programmers who, presumably, never needed even to talk to one another). These modules could then be compiled and the resulting object code stored in libraries, which would eventually be linked with the main-line application code. One essential point here is that one—presumably better-performing—module could always substitute for another as long as both modules adhered to the same interface.

In the 1980s the evolution of this paradigm forked into three independent developments, which influenced the service orchestration concept.

The first development, fostered by the Unix operating system shell interface, provided programmers with powerful means to execute a set of self-contained programs, without any need for compilation or linking with the main-line code. These programs could even be arranged so that one fed its output into another (through the pipe interface). It is important to underline that, unlike with the previous job-control language environments provided by other operating systems, the shell environment was really a well-thought-through collaborative programming platform. Anyone can write a new “command,” compile it, and make it available to others. Furthermore, the same “command” name can be shared by different modules as long as they are stored in different directories. The set of directories to fetch a module from is indicated by an environmental variable, which can be changed on the fly.3 And, again, the shell programs don't need to be recompiled when changed, because they did not need to be compiled in the first place—they are being interpreted. Finally, a module invoked in a shell script can be written in any language (including shell itself).

The second development, called object-oriented programming, significantly simplified the interface to the modules (previously thought of as procedures). Whereas previously programmers needed to understand every detail of the data structures on which library procedures operated, with object-oriented programming the data structures have become encapsulated along with the methods (i.e., the procedures) that perform operations on them. Only methods are visible to a programmer, who therefore no longer needs to care about the data structures. The latter can be quite complex,4 but a programmer who uses an object does not need to understand this complexity; only the programmer who implements the object class (an equivalent of a type that defines a data structure) does. With that, the objects instantiated to a given class started to be thought of as services.5 The first object-oriented language, SIMULA, was actually developed in 1967—at about the same time as ALCOL-67, and it was a natural superset of ALGOL, created solely for the purpose of simulating systems. (It was, in fact, used in simulating complex hardware systems.) Since every system consists of “black boxes”—some of the same type (or class, in object-oriented parlance), the paradigm was born naturally. Of course, the objective of SIMULA was modeling rather than effective code reuse. It took about 20 years to standardize SIMULA, the task carried out by the SIMULA Standards Group (1) and completed in 1986. By the time that was done, in 1983, a new language—C++—was released by a Bell Labs researcher, Dr. Bjarne Stroustrup, who had quietly worked on it since 1979. C++ borrowed much from SIMULA, but it has been based on (and, in fact, compiled to) the C language, which was designed as a systems programming language (or, in other words, allowed a programmer to cut corners unceremoniously in order to work closely with the hardware at hand). It is this efficiency—combined with the full implementation of the object-oriented paradigm—that made C++ so popular. It has been ratified by ISO as the ISO/IEC 14882 standard. An earlier version was issued in 1998, but the present standard in force is ISO/IEC 14882:2011, which is known in the industry as C++11. There is a much recommended book [4] on the subject, issued by its inventor and first developer. C++ is also a progenitor of a plethora of other popular interpreted object-oriented languages, notably Java, designed for an increasingly lighter-weight application development (vs. system programming, in which C++ still rules).

From a programmer's point of view, the new object-oriented languages have implemented parametric polymorphism (a feature that allows programmers to define subroutines with both a flexible number of parameters and—to some extent—flexible typing of parameters). This has significantly improved the flexibility of the interface between the program that uses the service and the program that provides a service. The interface is called the Application Programmer's Interface (API).

The third development was distributed computing. For a comprehensive monograph, we highly recommend [5]. As part of this development, much has been researched and standardized in the way of remote execution. An essential objective here was to shield a programmer from the complex (and often tedious) detail of keeping track of the actual physical distribution of the computing resources. To this end, a programmer should even be unaware of the actual distribution. For all practical purposes, the API had to look exactly as the one already provided by operating systems or any application library—the interface being that of a procedure call (or, in the object-oriented model, the method invocation).

With this objective in view, the Remote Procedure Call (RPC) model has been developed. In this model, a programmer writes a local procedure call—what else other than local could it be, anyway?—but the underlying software “transfers” the call to another machine by means of an application protocol. The model was primarily intended for client/server interactions, where the client program invokes “remote” procedures on the server. The issues here are non-trivial (consider passing parameters by reference from a client to a server, or crash recovery—especially on the server side).

Aside from the algorithmic part covering concurrent execution, the industrial infrastructure has been developed for advertising the services6 provided by the objects across the machines and for accessing such services. To this end, more than one infrastructure has been developed, as quite a few standards organizations and fora were involved. These included ISO/IEC, ITU-T, Object Management Group (OMG), and—later, with the success of the World-Wide Web—the World-Wide Web Consortium (W3C) and the Organization for the Advancement of Structured Information Standards (OASIS), to name just a few.7

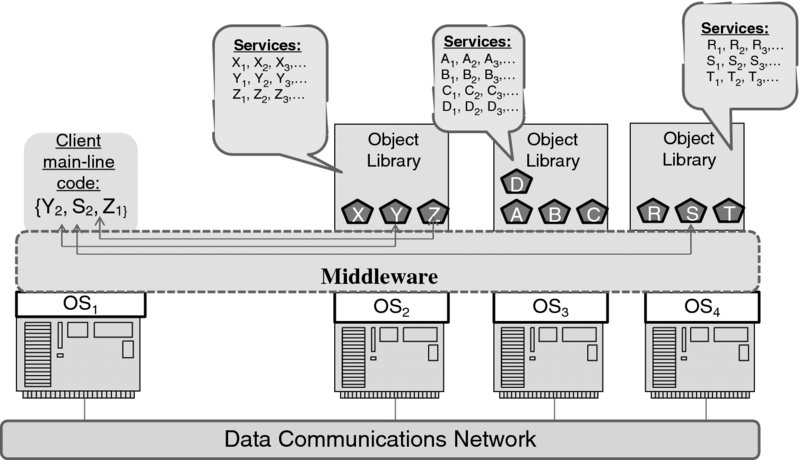

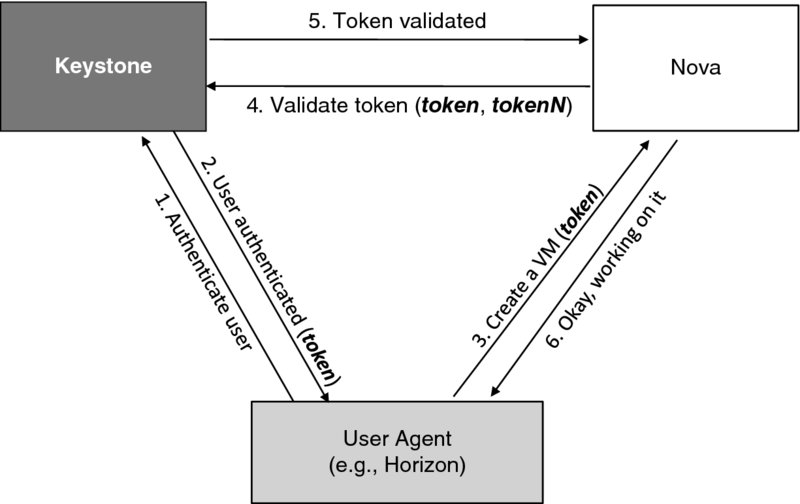

The common model that shaped up around the mid-1990s is depicted in Figure 7.2.

Figure 7.2 Distributed object-oriented computing model.

Here, the client program can invoke remotely the methods of various objects residing across the infrastructure. The infrastructure may include different machines, which run different operating systems. The only requirement of a “physical” nature is that these computers be interconnected through a data network.

Each object library can, of course, be implemented in its own language. The mechanisms of remote invocation have been largely invariant to both the original language and the operating system on which the respective code is to be executed. This has been achieved through middleware, which provides its own primitives (local API) to insulate the programming environment from the operating systems and thus ensure universal portability. As the environment also allowed the object libraries to advertise their services, some models included the concept of a service broker, whose job was to match the client service requirements to various service providers' libraries.

One disturbing development that took place in the mid-1990s, when all eyes were focused on the Internet and the World-Wide Web, was the en-masse rejection of the standards that had been written before then (and most standards that enabled the infrastructure had been written before then). This was not necessarily bad for the standards people, who suddenly got new and exciting jobs; nor was it bad for the new standards fora that mushroomed and pushed aside the older organizations, which had been struggling with completing the standards based on technology that was labeled “old” almost overnight. It was also good for people who had fresh ideas on how to “simplify” programming, because the ebb of fashion propelled many an untested idea into standards. Technology was moving fast, and few companies—and fora that depended on them—risked being left behind. Eventually, when the bubble burst, they were left behind anyway, but the fast development of untested technology proved to be ruinous even for the technology itself!

At the root of the problem was a truly religious aspect of the new wave of distributed processing technology: it was believed that all application-layer protocols had to be ASCII-text-based. The truth is that the Simple Mail Transfer Protocol (SMTP) was indeed ASCII-text-based, which was advantageous at a time when most terminals were teletype-like and using ASCI text was helpful in testing and debugging (and also for breaking in by hackers, although that most certainly was not a design objective!). Similarly, the main protocol of the Web—the Hyper-Text Transfer Protocol (HTTP), initially had to deal only with the transfer of the ASCII-encoded (HTML) files. Since the amount of protocol-related data was small compared with the payload, text-based encoding was justified. But these decisions, which were necessary—or at least justifiable at a time when SMTP and then HTTP were being developed—later somehow became interpreted as the maxim that all Internet application protocols must be text-encoded. The maxim soon became a belief, joining other false beliefs (such as that IPv6 is “better for security” than IPv4). As the application protocols grew, the absurdity of applying the maxim became evident. Not only has the amount of data become huge, but parsing it became a problem for real-time protocols. In fact, the new version of HTTP [7] presently developed in the IETF HTTPbis working group uses binary encoding, providing the following explanation for the change: “…HTTP/1.1 header fields are often repetitive and verbose, which, in addition to generating more or larger network packets, can cause the small initial TCP congestion window to quickly fill.…Finally, this encapsulation also enables more scalable processing of messages through use of binary message framing.”

The effect of text encoding on distributed object-oriented computing first manifested itself in abandoning the Abstract Syntax Notation (ASN.1) encoding standard—which required compilation into a binary format8—in favor of the Extensible Markup Language (XML).9 Nothing is wrong with XML, but using it indiscriminately can be catastrophic.10 Even though HTTP itself provided a mechanism for remote API access, W3C decided to develop an RPC mechanism that ran on top of HTTP. Hence a new protocol—SOAP, the acronym originally expended as Simple Object Access Protocol.11 “The word simple proved to be a misnomer, and so the expansion of the acronym was dropped in SOAP version 1.2.12 SOAP became quite fashionable, and the complex SOA infrastructure was developed on top of it.

While SOAP was (and still is) used as a remote procedure call mechanism, its serialization in the XML format made it perform much worse than the RPC in the Common Object Request Broker Architecture (CORBA) developed by OMG.13 That alone required extra work (and extra standards) for embedding binary objects, but what has proven worse is that SOAP competed directly with the HTTP since it used HTTP as transport. Although, strictly speaking, running on top of the HTTP was not a requirement, the default SOAP/HTTP binding took off, in part because that ensured firewall traversal. (The reader may remember the April 1 RFC mentioned earlier—here is an example of the stuff of a rather cynical joke suddenly materializing as reality.) The result was not only a political confrontation (no one wants his or her application protocol to be a mere transport for someone else's application protocol!) but also a dilemma: either accept the strict client–server structure of the HTTP, in which every communication must be started by a client, and which therefore makes server notifications impossible to implement14 or invent more and more mechanisms to make up for the limitation. But the most serious argument against the RPC approach in general, and SOAP in particular, was that the remote procedure call—as a concept—could not easily adapt to the structure of the Web, which involves midboxes—proxies and caches. As it happened, the industry went on inventing more mechanisms and adding more complexity.

Finally, there was a revolt against SOAP in the industry, with the “native” Web discipline called REpresentation State Transfer (REST). The REST API won, at least for Web access.15 We will discuss the REST principles in the Appendix. For now, we only mention that turning toward the REST style has become necessary because of the “API” in “REST API”—it is something of a misnomer in that it does not involve procedure calls per se. Instead, the programmer writes the application-layer PDUs, the protocol being—for all practical purposes—HTTP. There is no REST standard; REST is merely a style, as we will see later.

Talking about standards, CORBA has been around, and so have SOAP and a few others which may have made fewer headlines. One should never forget Andrew Tanenbaum's aphorism: “The nice thing about standards is that you have so many to choose from!”

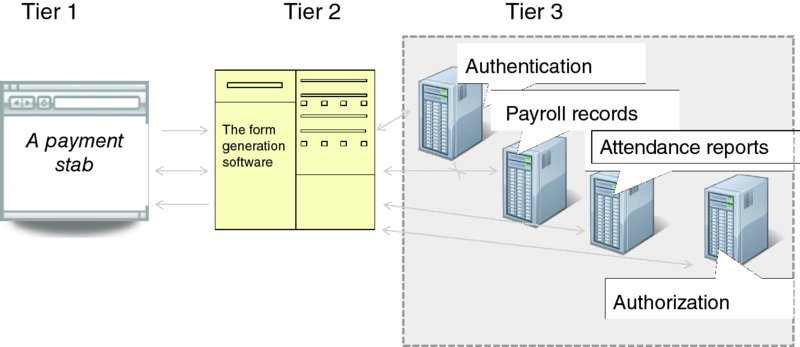

In the case of REST vs. RPC though, the division of labor is rather straightforward, owing to the widely implemented three-tier model, which emerged in the enterprise and has become the model of choice for providing software-as-a-service. In this model, Tier 1(a client) issues HTTP-based queries, Tier 2 (a server) provides the business processes logic and data access, and Tier 3 (often a set of hosts running database software) provides the actual data. It is the REST paradigm that is used by clients to access the front end (the second tier) of the service delivery infrastructure; the back-end communications may use RPC and other distributed processing mechanisms.

In the example of Figure 7.3, the client requests a pay stub for a particular employee from the Tier-2 server. The server, in turn, generates the form after querying the corporate databases that contain the payroll records and attendance reports. Of course, this act is performed only after authenticating the user who had requested this information and ensuring that the user is authorized to receive it. (Another example is the now ubiquitous Web-based e-mail service. A Web client speaks REST with the Tier-2 server, which uses the actual mail–client protocols to send and receive e-mail messages from an SMTP mail server.)

Figure 7.3 An example of the three-tier enterprise model.

Competing standards and non-interworking implementations aside, the advantages for modularity brought about by the architecture and mechanisms outlined so far are clear: nothing could be made more modular than the infrastructure that provided buckets of ready-to-execute service modules, which, on top of everything, could be invoked from anywhere. But its promise went even further—it was expected to reduce IT costs by making programming so easy as to allow the very people who define the business do it, thus eliminating their dependency on in-house specialized software development.

An industry effort to deliver on the promise came in the form of the SOA, which introduced the term orchestration.

7.1.1 The Service-Oriented Architecture

To begin with, we note that there has been much misunderstanding in the industry on what “SOA” means. At the beginning of his authoritative monograph [8], Thomas Erl writes: “I cannot recall any one term causing as much confusion as ‘service-oriented.’ Its apparent ambiguity has led vendors, IT professionals, and the media to claim their own interpretations. This, of course, makes grasping the meaning of a technical architecture labeled as ‘service-oriented’ all the more difficult.”

This is exactly the problem: interpretation. It is a truism, of course, that a vision (in the case of the SOA the vision being remote execution of API-defined services on a distributed computing platform) can be implemented in different ways, which may not necessarily interwork with one another. But once something is specified in detail to ensure a unique interpretation, it risks being labeled “an implementation.”

To this end, the SOA specifications were piling up. First, W3C produced the XML-based Web Services Description Language (WSDL16) for “describing network services as a set of endpoints operating on messages containing either document-oriented or procedure-oriented information.” The WSDL was supposed to be abstract and extensible so as to bind to any protocol, but the specification centered on one binding—specifically binding with SOAP 1.1 over a subset of HTTP.

The standard for the next necessary SOA component—the registry to enable publication and subsequent discovery—was developed by OASIS in the form of the (also XML-based) Universal Description, Discovery and Integration (UDDI) standard.17

Yet another set of SOA components addressed quality of service (a concept which, in this context, has nothing to do with the QoS in data communications), which also included a set of parameters for security (built on the OASIS Security Assertion Markup Language (SAML) standard), reliability, policy assertion, and orchestration per se. The standard for the latter, the Web Services Business Process Execution Language (WSBPEL),18 was produced by OASIS based on an earlier specification created by the joint efforts of IBM, Microsoft, and BEA, in turn inspired by the IBM Web Services Flow Language (WSFL) and Microsoft XLANG.

In a nutshell, WSBPEL uses XML-encoded facilities to specify business process requirements in a manner similar to that used in specialized programming languages to specify the execution of concurrent processes. Both provide facilities for describing parallel activities and dealing with exceptions. A number of other WS specifications were laid out to deal with management and coordination as part of the broad quality-of-service discipline.

Unfortunately, the SOA effort was not the success it had promised to be. By the end of 2005, the UDDI standard alone contained over 400 pages—something few developers had time to deal with, especially since the specifications were filled with the arguably unnecessary new terminology, as is the wont of many standards documents. In December 2005, the SOA World Magazine (http://soa.sys-con.com) published an article19 commenting on the decisions made by IBM, Microsoft, and SAP to close their UDDI registries.

On January 9, 2009, Anne Thomas Manes, a Burton Group analyst, wrote in her blog an obituary for the SOA.20 Citing the impact of the recession (and the resulting refusal of IT organizations to spend more money on the SOA), Ms Manes noted that “SOA fatigue has turned into SOA disillusionment. Business people no longer believe that SOA will deliver spectacular benefits.” The blog though was by no means derisive—it characterized the situation as “tragic for the IT industry” because “service-orientation is a prerequisite for rapid integration of data and business processes” and expressed the need to develop it for the SOA “survivors”—web mash-ups and SaaS. To this end, the blog actually suggested that it is the term “SOA” that is dead, while “the requirement for service-oriented architecture is stronger than ever.”

This was the common sentiment in the industry at that time. When one author googled “why SOA failed,” over three million results came up. On the business side, the blame was almost uniformly laid on the lack of resolve in the IT industry to change. The business people in turn blamed the proponents of the SOA for failing to communicate the importance of the SOA to the business. “Shortage of talent” was yet another explanation, and there were many more.

In our opinion, the SOA history was similar to that of the OSI in the late 1980s. In fact, the fates of the OSI and the SOA are strikingly familiar in at least three aspects, one of which is that both have produced sound metaphors and foundation architectures, which survived the test of time. The second similarity is that both the OSI and SOA standards were challenged by the Internet community. Just as the SOAP-based SOA was declared dead, the REST paradigm was picking up. The third aspect is a fundamental change in the way things were done: The Internet connected private networks and enabled partial outsourcing of networking; the appearance of the Cloud enabled outsourcing of IT services.

Four years later, in an article21 in InfoWorld magazine, David Linthicum noted that SOA practices are absolutely necessary in the Cloud. Perhaps the problem with SOA in the 2000s was the problem with a specific solution to SOA. Furthermore, the SOA referred to earlier was about the application development within the enterprise. In the context of the Cloud, we need a much broader definition. This is why we chose in this book not to describe SOA in any detail. The major surviving SOA concept is that of workflows.

7.1.2 Workflows

In describing a task—any task—one lists all the activities that are involved in carrying the task to completion. Some of these activities may run in parallel; others need to wait for the completion of prerequisite activities. A workflow is a specification that defines and orders all the activities within a task. Naturally, to automate a task involving a distributed system, its workflow must be defined in such a way that it is executable in a distributed environment.

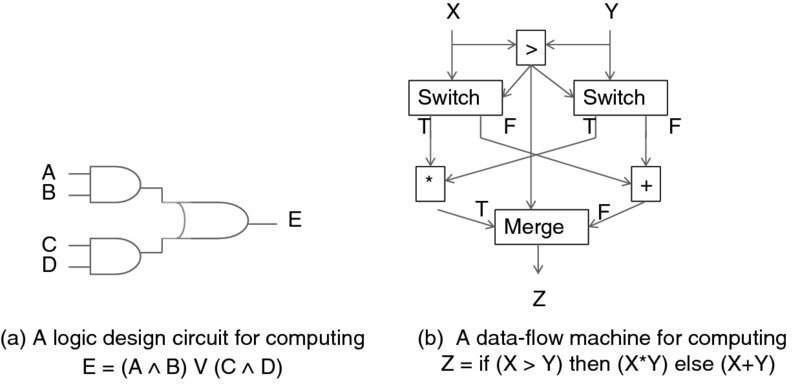

In a way, the whole development of computing has been based on workflows. Hardware is built based on the discipline of logic design, dealing with building circuits by connecting the building blocks—the logic gates—that perform basic operations. Figure 7.4(a) depicts such a circuit.

Figure 7.4 Flow-based computing examples.

In the 1980s there was a research movement to build workflow-based computers—then called data-flow machines—from elementary blocks. The blocks are chained as specified by a directed graph, and each block is activated when it receives a message (token). A 1986 MIT memorandum [9] describes the model and issues involved in developing such machines. Figure 7.4(b) (after [9]) gives an example of a data-flow machine that calculates a conditional expression.

In a way, the data-flow machines were workflows built in hardware. It might have developed this way, had it not been for the industry's realization that standardized, ubiquitous computing platforms provided the means for a much more economical (and arguably more flexible) software implementation approach. The trick was to develop software building blocks that could be mixed and matched just as the pieces of silicone could.

Before the introduction of structured computer languages, the algorithms were specified using flowcharts. The flowcharts were suitable for a single-process specification, but proved to be quite unwieldy for describing parallel activities in distributed processing. This is where software-based implementation of data-flow machines—which is, again, what workflows are really all about—helped.

One example, which came from the authors' personal experience, was service creation in telephony. The intelligent network technology, already referred to in this book, was developed in the late 1980s through the 1990s. Its major objective was to enable rapid development of telephony services. “Rapid” meant that the service developers—while being blissfully unaware of the network structure and the distributed nature of the processing—could put services together with the help of a graphical interface by simply chaining icons. Each icon represented a service-independent building block (such as queue_call or translate_number). Thus, a complex 800-number service, which involved time- and location-based translation, playing announcements and gathering input, and so on, could be programmed in minutes. Of course, the execution of each service-independent building block was in itself a complex distributed activity. Yet, since it was contained in a ready module, the service programmer was not concerned with that complexity. In modern terms, each service was programmed as a workflow. An attempt was even made to coordinate the call establishment with billing and charging processes. (As no standard was developed, several service-creation environments existed, but it was not trivial to merge them. In the mid-1990s the authors researched the means of unifying several such environments in AT&T, reporting on the results in [10].)

Figure 7.5 elucidates the general concept of workflow specification and execution. On the left-hand side, a workflow program is represented as a directed graph of activities. (This almost looks like a flowchart, although, as we will see, there is a significant difference.) Specifically, Activity 1 is the first such activity, which starts the workflow. Each activity—with the exception of the last, terminal, activity—has an output directed toward the next activity, said to consume the output, in the chain. In principle, activities can loop back, although the figure does not display such an example.

Figure 7.5 Workflow as a directed graph of activities.

Once an activity completes, the next activity can be selected via a conditional test. In our example, the test block determines whether Activity 2 is to start after Activity 1 and consume its output. If not, two activities—Activity 3 and Activity 4—are to execute concurrently. The example demonstrates that it is possible to synchronize the execution of both activities, by making the checkpoint (CP) wait for the completion of both activities before filtering their respective outputs to Activity 5. Supporting concurrency makes a workflow specification different from that of a flowchart. Another difference is that a workflow specification maintains its explicit state (depicted in a block on the left), which is read and updated by all activities.

So far we have discussed only a specification of a workflow. The execution is a different matter altogether, and it is explained by the right-hand side of Figure 7.5, which expands Activity 1. As we can see, here it is executed by three processes—A11, A12, and A13—which run on three different machines. The state database is maintained (in this example) on yet another machine. Of course, it is non-essential that A11, A12, and A13 run on separate hosts—they could be distributed between two hosts or even run on the same host; nor is it essential that they are processes rather than threads within a single process. The ingenious part of the arrangement is that the choice of the execution host and the form of the execution is absolutely flexible—it is left to the run-time environment. Similarly, the location of the state database (which, in fact, may also be distributed) is irrelevant as long as it meets the performance requirements. To increase reliability, and also to improve performance, the state database may be replicated. Soon, we will see this principle applied to the design of OpenStack.

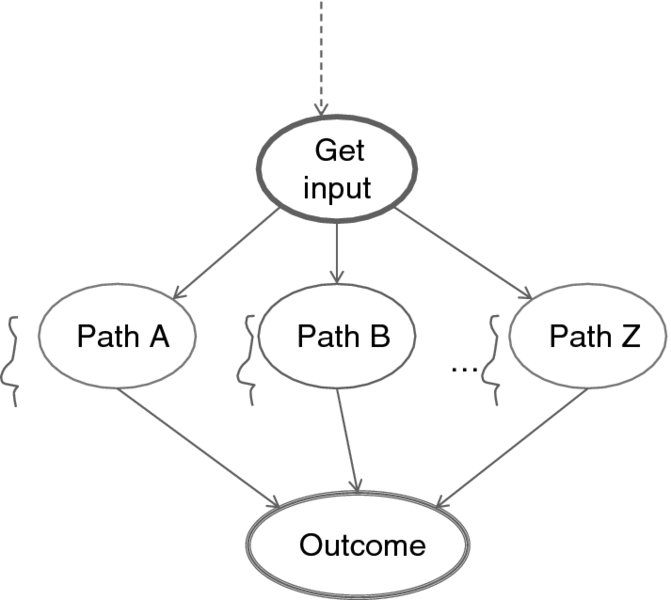

One other aspect of improving performance is workflow optimization. If a workflow specification language is formally defined (so that it can be parsed), it is possible to apply the compiler theory to eliminate redundancies and—most important—optimize scheduling of parallel activities. But it is also possible to analyze the performance of a workflow statistically, as it repeats, to discover performance problems. This approach, illustrated in Figure 7.6, is called path analysis, and it is particularly useful in the workflows that implement diagnostic tools.

Figure 7.6 Path analysis.

Starting from some place within a workflow where an input has been gathered there may be several ways—represented by paths through the workflow graph—to achieve an outcome. An inference that among the paths A, B, …, Z the path B is a shortcut in terms of the execution time may very well suggest to the workflow designer that other paths be eliminated to streamline the workflow.

There is a significant volume of literature and a number of products related to workflows. We refer only to a few examples.

An earlier grid-related research project, GridAnt, is described in [11] along with a survey of the then-existing commercial products. A research paper [12] provides an overview of several workflow optimization algorithms and proposes an extended one (the Boolean verification algorithm) that deals with the workflows that contain conditional branches and cycles.

As far as products are concerned, the Microsoft Windows Workflow Foundation is described on a dedicated site.22 This site also contains an excellent tutorial.

Amazon provides the Amazon Simple Workflow Service (AWS) API along with the AWS Flow Framework to invoke these APIs23 from user programs. A developer needs to specify coordination logic (sequencing, timing, and failure response) as well as the code for each step of the workflow. To this end, a library of commonly used programming patterns in support of coordination logic is also available.24

So far we have discussed the generic use of workflows in applications. Later in this chapter we will return to this subject, but we will narrow the focus of the discussion to the application of workflows to the specific task of Cloud orchestration. Yet, before doing so, we need to review the concepts and techniques of network and operations management.

7.2 Network and Operations Management

As we noted earlier, the discipline of network management predates that of data communications. It started with telephone networks, and it has been driven solely by automation. As the telecommunication network equipment evolved from human-operated switching boards to computer-controlled switches that processed call requests automatically, the need to control individual calls morphed into the need to control the equipment that controlled the calls. Furthermore, with the introduction of time-division multiplexing, the operation of the transmission equipment itself has become complex enough to warrant real-time monitoring and administration activities.

In the Bell Telephone System [13],25 as in all other major telephone companies, these activities—commonly called network-related operations—were part of the overall company operations, which included provision of services to the customer, service administration, and maintenance operation. Incidentally, we are not mentioning these for purely historical (or even historic) reasons—these activities remain at the heart of the Cloud today! Interestingly enough, a good deal of software technology concepts, as we see them today, were developed to streamline network operations.

The administrative processes were initially performed manually, but during the magic 1970s they had been increasingly moving to computerized processing. Separate systems were developed—one for each piece of equipment to be administered. Initially, Bell System was purchasing various mainframes to host the operations support software, but when the DEC PDP-11 line became available (as did the Unix operating system, which was first developed for PDP-11), its minicomputers were used for the development of the Operations Support Systems (OSSs).26 Ultimately, because the Unix operating system could run on any computer, the particular choice of hardware became less and less relevant. In the late 1980s, the largest part of the software research and development in Bell Laboratories27 was dedicated entirely to the design of OSSs.

OSSs required more or less the same capabilities that any business administration would, but on a much larger scale because the telecom domain contained thousands of pieces of autonomous computer-based equipment (not to mention hundreds of millions of individual telephone lines!), further governed by various business processes and US government regulations.

In the 1980s, the objective of the OSS development was to have a universal OSS which would govern all activities, but this was a tall order.28

To begin with, the business activities were disconnected across the company. When a telephone service was requested, it had to be processed by the business office. Sure enough, a customer record was created in one or another database—most likely in several—but it could not reach the local switch's database automatically. According to a Bell Labs anecdote of the time, an operator of the switching exchange management system, which was accessed through a dedicated terminal, needed to turn in a swivel chair to use another terminal to log into the order system to read the customer order record and then turn back to retype the information into the switching system. Apparently, this is when the term “swivel chair integration” was coined.

To be precise, the independent operations support systems were—and largely still remain in the telecom world—as follows:

- Trunks integrated record-keeping system.

- Plug-in inventory control system.

- Premises information system.29

- Total network data system.

- Switching control data system.

- Central office equipment engineering system.

- A number of facility network planning systems.

In addition, in the 1970s AT&T developed a central network management system, which was showcased at the Network Operations Center, Bedminster, NJ.30 There, the updates from all over the network were displayed on a wall-sized map of the United States, indicating the state of the network. The network managers, working at individual terminals, were able to take corrective action when necessary. This was the first decisive step toward network (vs. element) management. In the second half of the 1980s, central network traffic management systems were developed by AT&T's Network Systems division for sale to regional operating companies and abroad.31

Back to the unified OSS vision. Again, the major obstacle in its way was that in Bell System alone multiple systems had evolved separately, without any common platform.32 Rewriting all this software was out of the question, but even if a decision were made to rewrite it, there was still no standard which different vendors could implement. As the vision was built around the ISDN technology, in which telephony services were combined with data communications services, the first step was (naturally) to integrate the management of the data communications network. The latter had morphed into a discipline of its own, starting with the ISO OSI network management project with its five-item framework. This framework is still all-encompassing; we describe it in the next section.

7.2.1 The OSI Network Management Framework and Model

The first aspect of the framework is configuration management, and it is concerned with the multitude of parameters whose values need to be maintained within specified ranges on all the devices in the network. The values of some parameters may be changed directly by the network owner; others can be read-only.33

The second aspect of the framework deals with fault management. The word “fault” broadly refers to any abnormal condition in the network. One big design task here, of course, is to define clearly all events that correspond to changes from “abnormal” to normal. Another design task is to select those events that are worthy of being detected on the one hand, and on the other hand to ensure that the reporting of these events does not overwhelm the system's processing power. A typical event constitutes a change of a parameter value beyond a certain threshold. The change is (often) logged and reported in real time through an alarm mechanism. (Recalling an earlier discussion of the computer architecture and operating system, this situation is very similar to a CPU interrupt flag being raised by a device, and, indeed, just as an operating system needs to supply an interrupt handling routine, so does the network management system needs to supply a proper operating procedure.) Note that in order to detect a change (as well as to react to it), the configuration management mechanisms need to be invoked.

The third aspect of the framework is performance management. This, again, relies on the configuration management mechanism to measure the utilization of the network resources in real time. A longer-term part of this activity is capacity planning. It is pretty obvious that when a given resource becomes overwhelmed so as to affect the overall network performance, it may be high time to replace it with a larger one (but determining which resources contribute to a bottleneck is a complex problem). As replacing or beefing up the equipment is often expensive, effective capacity planning can save much money.

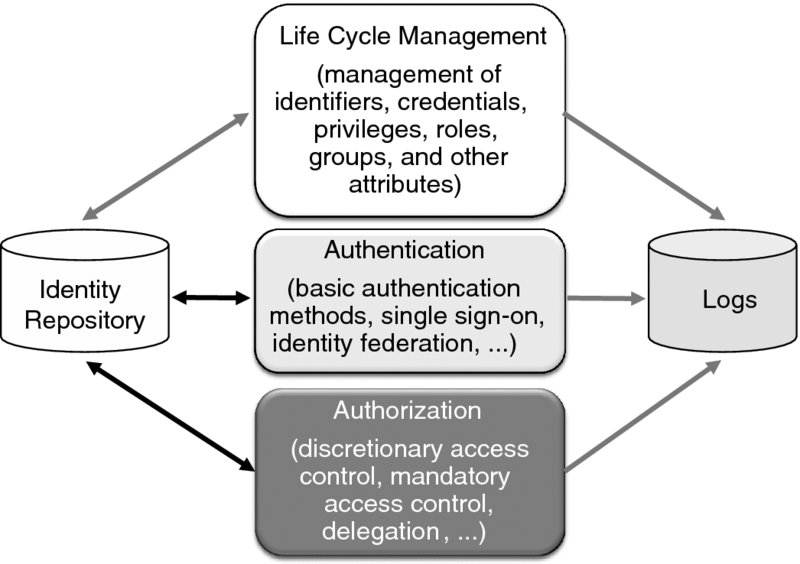

The fourth aspect is identity and access management, addressed in the last section of this chapter. In a nutshell, the task of access management is to ensure that every single attempt to learn any information about the network—or to change anything in it—is captured and allowed to proceed only after it is determined that the attempting entity is properly authorized to do so. Typically, the attempts to access critical data are logged and otherwise processed through the fault management mechanisms.

The fifth and final aspect is accounting management. This involves the whole range of activities that deal with charging for the use of resources. In an enterprise network comprising several organizations, this may mean determining a proportion of the overall communications bill that each organization should pay. In an operator's network, this is the activity that determines the revenue.

While the framework has been clear—and it remains unchanged for the Cloud—the development of network management standards has proven to be rather erratic, with competing parallel activities carried by several organizations and still inconclusive results.

Historically, the above five aspects, spelled out in a different order—Fault, Configuration, Accounting, Performance, and Security—and thus known by the acronym FCAPS, formed the basis for the ISO work, later carried out jointly with ITU-T. In parallel, and according to the same model, the IETF was developing its own protocol series. We will briefly address both, but we start with the common basic model, as depicted in Figure 7.7.

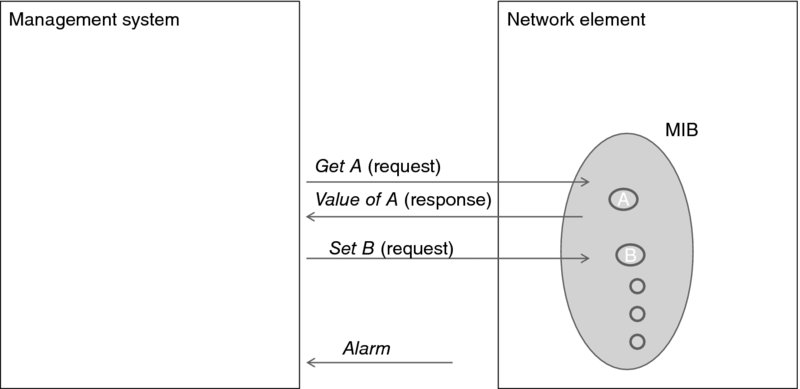

Figure 7.7 The basic network management model.

Each managed device is associated with the Management Information Base (MIB), which actually defines the configuration parameters. The management system may request (Get) the value of a parameter as well as change (Set) this value. Which values may be changed externally (and, if so, by whom) is part of a MIB specification. (There are also other capabilities with respect to the parameters—such as learning which parameters are defined within the MIB—and many nuances to defining the managed objects.) An alarm or trap message is a notification from the device, which can only be processed as an interrupt at the machine hosting the management system.

ITU has developed, jointly with ISO/IEC JTC 1, the Common Management Information Protocol (CMIP), defined in [15] and other ITU-T recommendations in the X.700 series. As CMIP was using the OSI application-layer services (such as the OSI Remote Operations Service Element) that were unavailable in the Internet, the IETF had decided to proceed with its own protocol, and here the development of the network management standards forked.

Based on CMIP and other modules, ITU-T has come up with a large set of specifications (the M.3000 series) called the Telecommunications Management Network (TMN), while the IETF has produced the so-called Simple Network Management Protocol (SNMP), now in its third version SNMPv3. The enterprise IT industry has deployed SNMP exclusively, while TMN is deployed in the telephone networks—notably in WorldCom, as reported in [16]. This divergence is rather unfortunate, as it has contributed to enlarging the difference between telephony and IT—the very difference that the network management standardization effort was supposed to eliminate!

The SNMP STD 62 standard was completed in 2002, reflecting more than 10 years of SNMP development. By 2003, when the IETF Internet Architecture Board had a workshop,34 SNMP was widely deployed, with some MIBs implemented on most IP devices. Hence the industry had obtained enough operational experience to understand the technology limitations. The major one was that SNMP dealt primarily with the device monitoring aspect of network management (as opposed to the configuration aspect).

We should stress that device monitoring was—and remains—an important function because it has provided, among other things, notifications (“traps”) of the state of physical equipment (such as a server board or a simple fan). Knowing that hardware works properly and detecting a malfunction as early as possible is the foundation of the operations discipline. In modern data centers, such SNMP traps are fed into specialized monitoring systems (such as Nagios)35 used as part of the modern solutions based on the development operations (devops) methodology.

Although the use of SNMP for configuring devices was not unheard of (after all, the protocol explicitly supports changing device parameters via a SET method!), many standard MIB modules lacked writable objects. With SNMP, it is not easy to identify configuration objects, and, as RFC 3535 documented, the naming system itself seemed to be in the way of playing back a previous configuration of a reconfigured system. But even if all MIBs were perfect, SNMP is, too low level for network operators—who lamented that not much had been done in the way of developing a bird's-eye view of application building blocks.

Nor might the development of such building blocks help, as the SNMP software started to reach its performance limits. Retrieving routing tables, for example, proved to be very slow. Another set of problems was caused by the objective of keeping things simple (as the “S” in SNMP might indicate). Sure enough, the protocol was simple enough—compared with CMIP—but this has merely left the complexity to the developers to deal with. Now it was the network management application that was supposed to checkpoint the state of SNMP transactions,36 and be prepared to roll a device back into a consistent state. Designing such an application required significant experience with distributed processing, and even for the experts it was by no means a simple task. This was at cross purposes with the plan to make network management applications “easy” to develop (i.e., cobbled by non-programmers from some elementary building blocks). More generally, as RFC 3535 states, there was “often a semantic mismatch between the task-oriented view of the world usually preferred by operators and the data-centric view of the world provided by SNMP. Mapping from a task-oriented view to the data-centric view often requires some non-trivial code.”

And then the “simplicity” resulted in under-specification, which hindered interoperability:

“Several standardized MIB modules lack a description of high-level procedures. It is often not obvious from reading the MIB modules how certain high-level tasks are accomplished, which leads to several different ways to achieve the same goal, which increases costs and hinders interoperability.”

Part of the problem with the ineffectiveness of SMNP with respect to configuration management is the very model in which the network manager (presumed to be “intelligent”) deals with a “dumb device.” Initially, the devices (a modem is a good example of one) were indeed not programmable, but by the late 1990s the situation had changed drastically. To appreciate the difference, consider what happened to the concept of a home network, which evolved from a bulky modem, connecting a computer to a telephone line, to an Ethernet LAN hub (although relatively few people had this in their homes), and then to the present WiFi base-station router with built-in firewalls and a NAT box. Beside the obvious differences, here is a fairly subtle one: the complexity introduced with all this equipment required that configuration changes be made according to a specific policy.

For more detail on SNMP, we refer the reader to the next chapter.

7.2.2 Policy-Based Management

The IETF started to address the problem gradually, strictly on a specific need basis. The first such need was the policy configuration in support of QoS. Here, the device (typically, a router) is by no means dumb: its configuration needs to change continuously—in response to users' requirements– and so the management system needs to propagate the change into a device from a local copy. Here, the model introduced a new challenge—the need to maintain a synchronized state between the network manager and the device. Another challenge came from the potential interference among two or more network managers administering the same device.37 That case introduced the potential to corrupt the device with contradictory changes.

And then there is a need for policy-based management. While a device may have to change in response to users' requests, it is hardly acceptable to allocate network resources based only on user requests—that is, always give whatever one asks. Network providers wanted to have a mechanism that would enable granting a resource based on a set of policy rules. The decision on whether to grant the resource takes into account information about the user, the requested service, and the network itself.

Employing SNMP for this purpose was not straightforward, and so the IETF developed a new protocol, for communications between the network element and the Policy Decision Point (PDP)—where the policy-based decisions were made. The protocol is called Common Open Policy Service (COPS); we review it in the Appendix.

As an important aside, COPS has greatly influenced the Next-Generation telecommunications Network (NGN) standards, which have been developed since 2004 in both ETSI and ITU-T. NGN is characterized, among other things, by (1) the prevalent use of IP for end-to-end packet transfer and (2) the drive to convergence between wireline and wireless technologies.38

In contrast to specialized networks optimized for specific applications, NGN has been envisioned as a general multi-service network that would meet a wide range of application performance needs and security requirements. To this end, service control was to be separate from transport as well as from the mechanisms required to allocate and provide—often in real or near-real time—network resources to applications.

One specific set of such applications emerged to support the so-called triple-play services, which encompass Voice-over-IP (VoIP), IP television (IPTV), and Internet access. These applications required—and still require—special QoS treatment.

As we saw in Chapter 4, the performance needs of applications are characterized by four key parameters: bandwidth, packet loss, delay, and jitter (i.e., variation in delay), which determine the quality of service. Overall, the needs of the triple-play services are different with respect to QoS. For example, some popular data applications (such as e-mail and web access) require low to medium bandwidth and are quite relaxed as far as delay and jitter are concerned. In contrast, VoD flows have relaxed requirements on delay, but they do need high bandwidth and cannot tolerate much packet loss or jitter. VoIP, while tolerating some packet loss, needs much lower bandwidth than VoD, but it can tolerate neither long delay nor jitter.

In addition to the QoS-related resources, networks often need to grant other resources (e.g., IP addresses or service-related port numbers) to the endpoints and the processes that execute on them. As we may recall from Chapter 5, this specific need arose from NAT LSNAT deployment, which has been employed to hide the internal network topology. These diversified and already complex tasks were further complicated by the very structure of the NGN, which combines several network types, including Asynchronous Transfer Mode (ATM), Digital Subscriber Line (DSL), Ethernet, and fixed and mobile wireless access networks.

The key to fulfilling this complex duty was a dynamic, policy-based resource management framework, known as the Resource and Admission Control Functions (RACF), described in [18] (the ITU-T standard published in [19]). An important point to emphasize is that RACF was put in place to interwork the real-time processing with OSSs; RACF have both functions, and their protocols combine both sets of building blocks.

Even though RACF was influenced directly by COPS, its framework also relied on a number of IETF protocols other than COPS.39 Starting from their inception, the Third-Generation Partnerships—3GPP and 3GPP2—have been following and influencing the development of the IETF building blocks in support of the IP Multimedia Subsystem (IMS).40

While the Third-Generation Partnerships were focusing on the needs of wireless carriers, the ETSI Telecommunication and Internet Converged Services and Protocols for Advanced Networks (TISPAN) group embarked in 2003 on a project that dealt with fixed access. Its approach to resource management was reflected in its Resource and Admission Control Subsystem (RACS), published in [21].

We should emphasize again that the need to control Network Address and Port Translation (NAPT) and NAT traversal was an important driver for the ETSI work. When service providers started deploying VoIP, they discovered the complications—which we now know very well—caused by the end users being located behind NAT devices (as is the case for most broadband access users). This problem could be circumvented with session border controllers supporting hosted NAT traversal. Standalone session border controllers, however, do not fit well in the overall IMS approach. In contrast, the RACS model supports NAPT and hosted NAT traversal as part of policy enforcement under the control of a policy decision function that interfaces with IMS session control.

In 2004, ITU-T embarked on the RACF effort with the objective of preserving the separation of services and transport while enabling dynamic, application-driven, policy-based end-to-end QoS and resource control capabilities (in particular, resource reservation, admission and gate control, NAPT, and hosted NAT traversal within the network domain and at network boundaries. From its onset, the scope of the RACF included various types of access and core networks. To this end, RACF was the first attempt to create a flexible end-to-end resource management solution by blending (rather than replacing) the existing standardization results within a common framework.

As we can see, COPS has solved the problem of policy-based management, but it has not solved the problem of managing configurations effectively. Going back to RFC 3535 (which, as the reader may remember, reports on the 2002 IAB workshop), the prevailing complaint from the operators was the lack of a consistent, all-encompassing configuration discipline. Neither COPS nor the ever-growing IETF set of MIBs was helpful here.

Hence, one objective of the workshop was to determine how to refocus the IETF resources.41 The workshop made eight recommendations—both positive (which activities to focus on) and negative (which activities to stop). Of these, the only positive recommendation that enjoyed “strong consensus from both protocol developers and operators” was that “the IETF focus resources on the standardization of configuration management mechanisms.” Two other recommendations, apparently supported more by the operators than the protocol developers, were that the resources be spent “on the development and standardization of XML-based device configuration and management technologies” and not be spent on the HTML-over-HTTP-based configuration management.42

To the IETF's credit, it turned out to be quite nimble, responding decisively. In 2003, the NETCONF WG was created, and three years later it had published the first version of the NETCONF protocol. That was augmented in the next two years to incorporate notifications and several classical distributed processing and security mechanisms, and the protocol kept evolving for the next eight years. The present version of the base NETCONF protocol was published as RFC 624143 in June 2011. (Its extensions have been published in separate RFCs.) We review NETCONF in detail in the next chapter.

Meanwhile, the industry has created several configuration management tools, which have been used extensively in today's Cloud. In the rest of this section we review two well-known examples: Chef by Chef44 (formerly Opscode) and Puppet by Puppet Labs.45

With Chef, an administrator describes the structure of the distributed system (which might include web servers, load balancers, and back-end databases) using what is called recipes. These recipes describe how the entities within the structure are deployed, configured, and managed; they are stored at the Chef server. The Chef clients are installed on the respective nodes (which could be virtual machines). The job of the Chef clients is to keep the software on its respective node up to date, which it achieves by checking the compliance with the latest recipe installed at the Chef server and automatically updating the software as necessary. At the moment of this writing, the company provides free experimentation as a learning tool (and even limited free software distribution) at its website, which we highly recommend to the interested reader.

Puppet automates the same configuration tasks similarly, as it is also based on the client–server model. The main difference from Chef is in the specification method. The Puppet specification (which uses its own DSL) is declarative—it specifies the dependencies and the client ensures that these are followed. The Chef specification, in contrast, is procedural—written in the Ruby language. Just as Chef, Puppet is available as open source. There is an incisive article [22] comparing the two.

7.3 Orchestration and Management in the Cloud

We are ready to start putting together the pieces of the puzzle developed in this chapter and elsewhere in the book. The elements of the management of the physical elements of the data centers and the network interconnection have already been introduced. The piece that we have not touched on is the management of the life cycle of a Cloud service.

In addition to many technical aspects (such as creation and bootstrapping of images), here the business aspects enter the picture. An excellent introduction to the subject matter has been produced by the Distributed Management Task Force (DMTF)46 organization, and so we will use the definitions and concepts described in the DMTF white paper, Architecture for Managing Clouds.47 We address the life cycle of a service in the next section. The sections that follow review the orchestration and management in OpenStack.

We need to emphasize here that orchestration can be implemented at various levels. As we started this section with a musical simile, we will complete it with the same. Ultimately, in an orchestra each musical instrument needs to have its own part. These parts may be shared among the “clusters” of musicians (e.g., first or second violin sections), but ultimately the individual parts are combined into sections, and then into a single score—the overall composition that the conductor deals with.

In the extreme—and somewhat degenerate—case, each VM in the Cloud can be configured, monitored, relocated, and so on, manually by its own administrator. This task can be automated using tools (such as Chef or Puppet).48 This is where the VM (an “instrument”) is accompanied by its own score. At the next level, the whole infrastructure (the VMs along with the network components to interconnect them) can be orchestrated according to a uniform “score”—and this is what the OpenStack example will demonstrate. But things can go even further! At the top layer, a “score” can be written that combines business policies with the infrastructure specification. This can be achieved with the Topology and Orchestration Specification for Cloud Applications (TOSCA), an OASIS standard, which we will review in the Appendix.

7.3.1 The Life Cycle of a Service

The three entities involved here are the Cloud service provider, the Cloud service developer, and the Cloud service consumer.

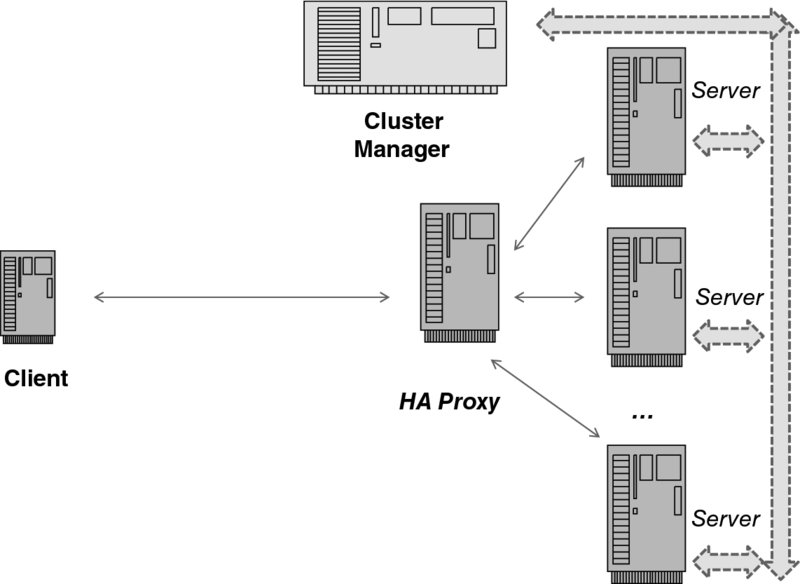

Suppose the Cloud service developer needs to create a (typical) web services infrastructure—say three identical servers and a load balancer along with a back-end database. Writing a program that issues individual requests to the Cloud service provider for creating all the instances—and networks—is problematic in more than one way.

First, suppose the instances for a load balancer and two servers have been created successfully, but creating the virtual machine for the third server has failed. What should the user program do? Deleting all other instances and restarting again is hardly an efficient course of action for the following reasons. From the service developer's point of view, this would greatly complicate the program (which is supposed to be fairly simple). From the service provider's point of view, this would result in wasting the resources which were first allocated and then released but never used.

Second, assuming that all instances have been created, a service provider needs to support elasticity. The question is: How can this be (a) specified and (b) effected? Suppose each of the three servers has reached its threshold CPU utilization. Then a straightforward solution is to create yet another instance (which can be deleted once the burst of activity is over), but how can all this be done automatically? To this end, perhaps, maybe not three but only two instances should have been created in the first place.

The solution adopted by the industry is to define a service in more general terms (we will clarify this with examples), so that the creation of a service is an atomic operation performed by the service provider—this is where orchestration first comes into the picture. And once the service is deployed, the orchestrator itself will then add and delete instances (or other resources) as specified in the service definition.

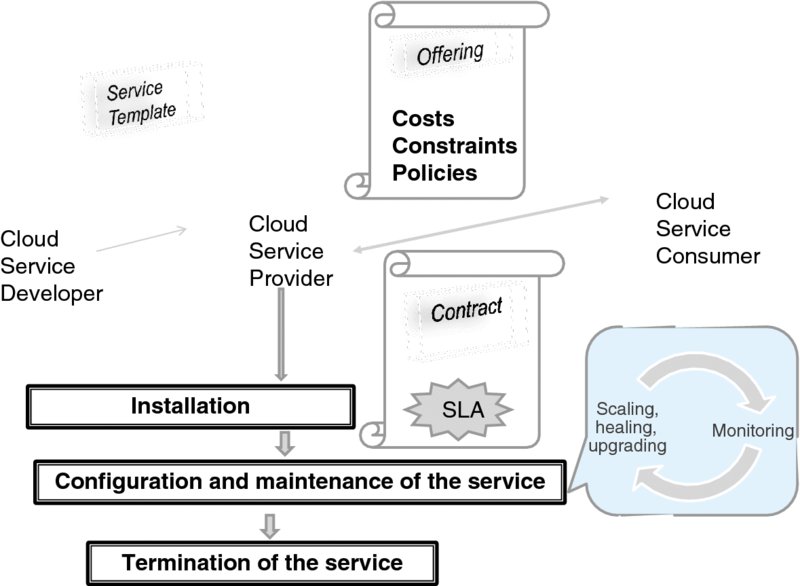

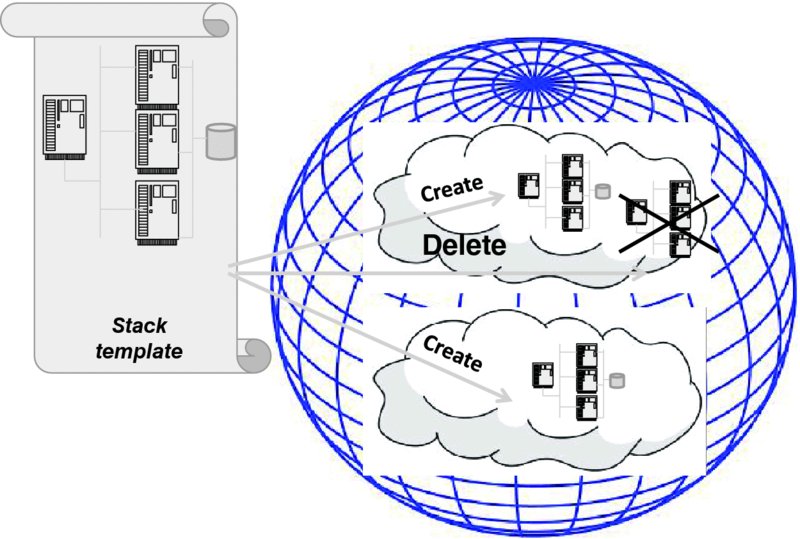

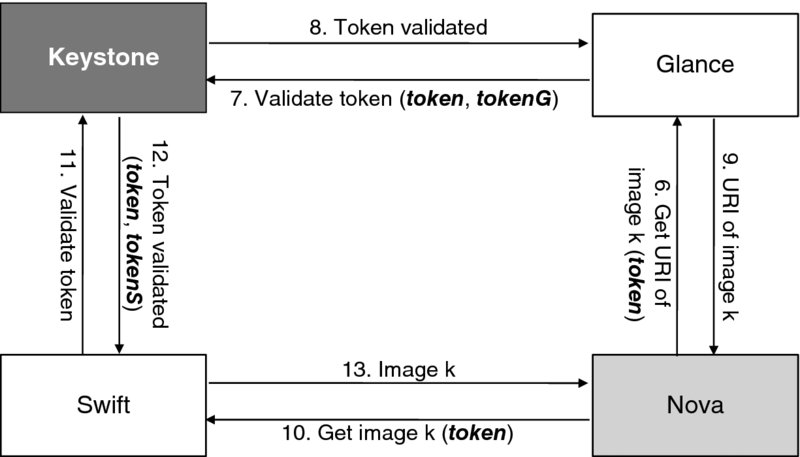

Hence the workflow depicted in Figure 7.8. The service developer defines the service in a template, which also specifies the interfaces to a service. The template (sometimes also called a recipe in the industry) specifies various resources: VM images, connectivity definitions, storage configuration, and so on.

Figure 7.8 The service life cycle.

The service provider creates an offering for a service consumer by augmenting this template with the constraints, costs, policies, and SLA. On accepting the offering, the consumer and provider enter into a contract, which contains, among other items, the SLA and a set of specific, measurable aspects of the SLA called Service-Level Objectives (SLOs).49

At this point, the provider may modify the template to fit the contract requirements. Based on the template, the provider then deploys (or provisions) the service instance. Provisioning involves committing the resources necessary to fulfill the contract.

Once deployed, the service is maintained until the contract is terminated and so the service ends and the resources committed to its support are redeployed. From the orchestration point of view, an essential part of service maintenance is monitoring. Here the relevant events are collected and acted on automatically so as to scale—up or down—the capacity or heal the service in case of a breakdown. Similarly, upgrades are handled automatically in this phase, too. The auto-scaling and auto-healing capabilities are two major functions of orchestration.

As we can see, the model implies that the business objectives and interface definitions be expressed (i.e., encoded) in some form. The formal language constructs for doing so are developed in the Telemanagement Forum (TMF).50 The synergies between the DMTF and the TMF have been explored in the joint DMTF/TMF White Paper, Cloud Management for Communications Service Providers.51

Let us start with onboarding.52 Here a service developer needs to specify which applications run on which virtual machines, what kinds of events an orchestrator needs to handle (and what exactly to do when such an event occurs), and what information to collect.

An application recipe (or template) describes the services that the application requires, each service further defined as a group of service instances (running on separate VMs). These are provided as file descriptors. Services are further specified in individual recipes that specify (a) the number of instances, (b) the hardware and software requirements, and (c) the life cycle events along with their “interrupt handlers,” which are the pointers to the respective scripts. In support of network and operations management, a recipe can also specify probes for monitoring and configuration management. In addition to pre-defined probes available to a service developer, the latter may plug in independent scripts. One aspect of Cloud management and orchestration is that a Cloud provider's resources that are needed to fulfill obligations to customers must be used optimally (as far as the cost is concerned). Optimization here is a complex task because of the many constraints, which include compliance with a customer's policies and various regulations.

The other aspect is providing a customer with the orchestration tools so the customer may control its own infrastructure. Ultimately, what is good for the goose is good for the gander: a provider may share some of its own orchestration tools with customers. Inasmuch as the orchestration involves interworking with business activities, employing workflow-supporting tools is becoming an expected feature. For instance, the VMware® vCenterTM OrchestratorTM provides53 a pre-built workflow library along with tools to design customized workflows. The new blocks for workflows can be created using a JavaScript-based scripting engine. The policy engine launches the appropriate workflows in response to external events (and according to defined policies).

Another important example—and in a way a benchmark for orchestrators—is the Amazon AWS CloudFormation service54, which provides a mechanism for managing the AWS infrastructure deployments. As we will see, the OpenStack orchestrator, Heat, has adopted the terminology as well as the template format of AWS CloudFormation, and in its early orchestration offer did much to interwork with the same tools and interfaces that AWS CloudFormation had given its users.

With the AWS CloudFormation, all resources and dependencies are declared in a template file. Each template defines a collection of resources pertinent to a service, along with the dependencies among them. The collection, which actually represents an infrastructure, is called a stack.55 The idea is that the multitude of resources within a given stack are treated as a single entity, which can be created (or deleted) with a single create or delete command.

Furthermore, when a template for a stack is updated, the stack gets updated (automatically), too. Furthermore, once a template is specified, the whole stack can be replicated or even moved into a different data center or even a different Cloud.

Figure 7.9 illustrates this concept. Here, the template defines the infrastructure that we discussed earlier: a load balancer distributing the traffic among three identical servers. To make the service look realistic, we also added the back-end database. Two networks are involved: one to be shared among the load balancer and the servers, and the other among the servers and the database. With a sequence of < ?TeX{? >create, delete, create< ?TeX}? > operations, the whole infrastructure is first created in one Cloud and later replicated in another. (Of course, this assumes that both Cloud providers support the same template. As we will see in the next section, the OpenStack project has achieved just that by creating a standard along with the software for implementing it!)

Figure 7.9 Operations on a stack (an example).

In line with the ideas outlined at the beginning of this section, we stress that when a stack is created (or deleted), all resources specified in a template are instantiated (or deleted) simultaneously. During the lifetime of a stack, the declared interdependencies among the resources are maintained automatically.

To begin with, Amazon deployed CloudFormation endpoints—with known URLs—across the world regions. Referring to the local geographic endpoint reduces latency. As we will see, some functional capabilities rely on the choice of endpoints.

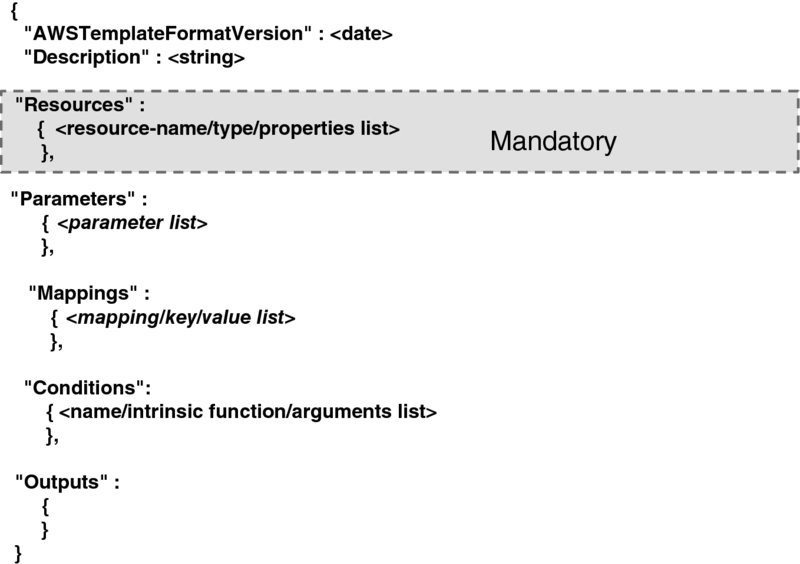

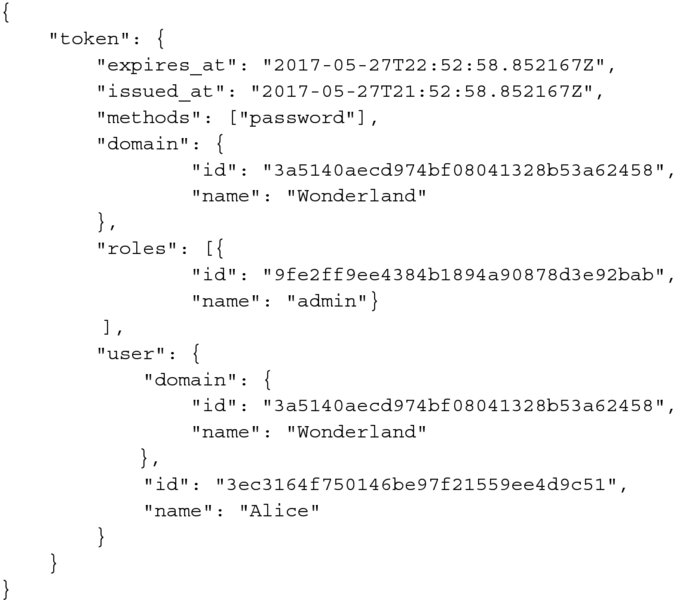

The template is written in the JavaScript Object Notation (JSON) format.56 In addition to the version and description fields, it has the following entries: resources, parameters, mappings, conditions, and outputs. We review them, in that order, with the help of Figure 7.10.

Figure 7.10 The AWS CloudFormation template.

The term resource refers to a VM instance or any other AWS pre-defined object (such as a security group, or an auto-scaling group—we will see specific examples soon).57 Each resource is assigned a resource name, which must be unique within a template. The resource type is another part of the resource specification. In addition, a set of resource properties associated with a resource may be declared, too, each declaration taking the form of a name/value pair. A property's value may be known only at run time, and so the template syntax allows the use of an intrinsic function instead of a static value. The resource entry is the only mandatory one; the rest are optional.

A parameter is just a name string, whose specification may list the conditions that constrain the values that parameters can take.

Mappings automate the parameters' value assignments. One can define a subset of parameter's values and associate it with a key. A typical example of a key is the name of a region; all region-specific values (e.g., current time or local regulations) are assigned to the respective parameters automatically.

Conditions are but a programmatic tool. These are Boolean functions that compare parameter values, either with one another or with constants. If the result of a comparison is positive, resources are created. All conditions are evaluated when a stack is (a) created or (b) updated (and only then).

Outputs are parameters declared specifically in support of the feedback mechanism. The end user can query the value of any output via a describe-stack command. Again, conditions can be employed to guide the value assignment.

Going back to our earlier example of a web service, we can see how a template may be constructed in support of auto-scaling— an orchestrator-provided service that enables elasticity. In AWS in particular, auto-scaling enables launching or terminating an instance according to user-defined policies as well as run-time characteristics (such as an application's “health” gauged through monitoring). Scaling can be achieved vertically, by changing the compute capacity of an instance, or horizontally, by changing the number of load-balanced instances. It is particularly the horizontal scaling that demonstrates the unique economic advantages of the Cloud environment: in the physical deployment, there is a need to keep additional servers on standby in anticipation of increased load—or actually load balance all of them, while they are under-utilized—but in the Cloud environment an additional server instance may be deployed on the fly, the moment the demand reaches a specified threshold. Conversely, when the demand drops sufficiently, a superfluous instance can be shut down. As a result, the expenditure for the extra resource is incurred only when the resource is needed.

A template58 for operating the environment that involves a group of web servers would specify under the Resource header a group of the type “AWS::AutoScaling:: AutoScalingGroup,” with the properties that list the availability zones, the configuration name (another resource, pointing to the image of the instance to launch), and both the minimum and a maximum size of the group.

If notification of the events to the operator (an interesting feature!) is desired, the notification topic can also be specified as a resource with the type “AWS::SNS::Topic,” which would refer to the appropriate resource—the endpoint (the operator's e-mail)—and specify the protocol (“email”). In this case, the common group specification would also list specific notification message strings (e.g., “instance launched,” “instance terminated,” or “error,” the latter also supplying an appropriate error code).

Next, the scale-up and scale-down policies can be specified, using the resource type “AWS::AutoScaling::ScalingPolicy”. The actual alarm event that triggers scaling up (or down) can be specified as the resource, too: “Type”: “AWS::CloudWatch::Alarm.” For instance, if the requirement for scaling up is a burst of CPU utlilization exceeding 80% for 5 minutes, the properties of the scaling-up alarm will include, using the “WS/EC2” namespace, “MetricName: CPUUtlilization,” “Period: 300,” and “Threshold: 90.” The “AlarmActions” will refer to the name of the scale-up policy defined above. The intrinsic function used here is “ComparisonOperator,” with the value “GreaterThanThreshold.”

Another resource that needs to be specified is the load balancer itself, of the type “AWS::ElasticLoadBalancing::LoadBalancer,” with properties that include the port number to listen to and—given that we deal with web servers—the instance port number and the protocol (HTTP).

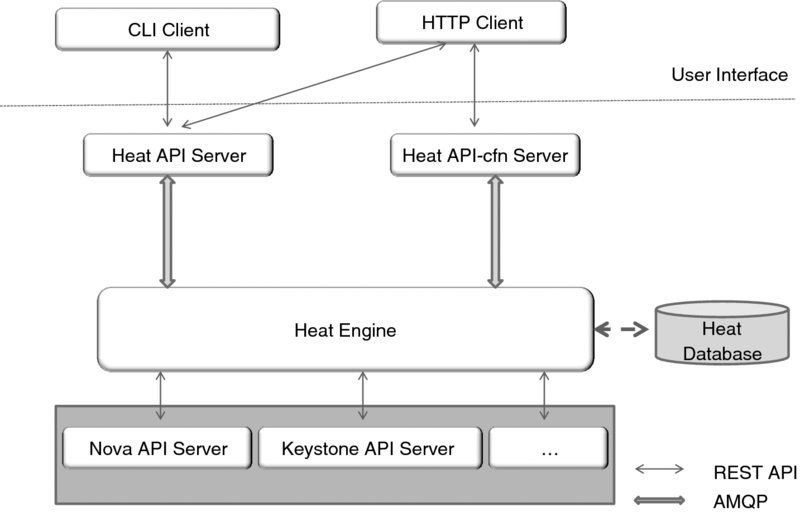

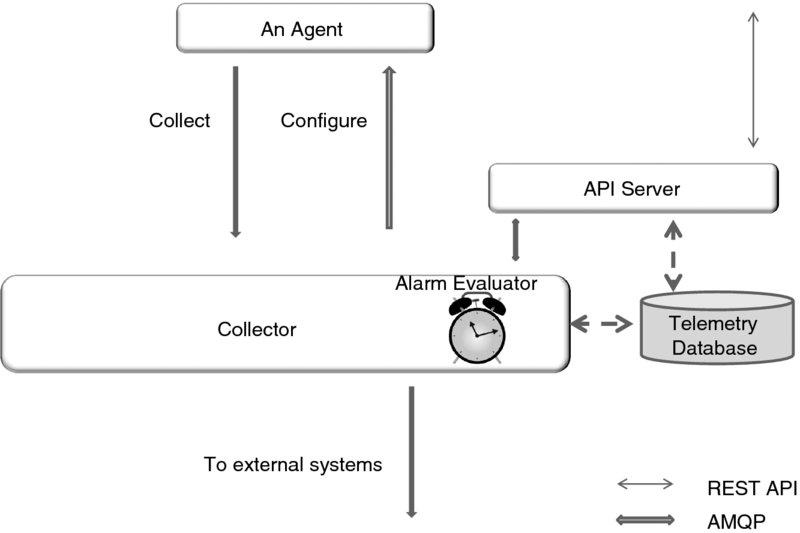

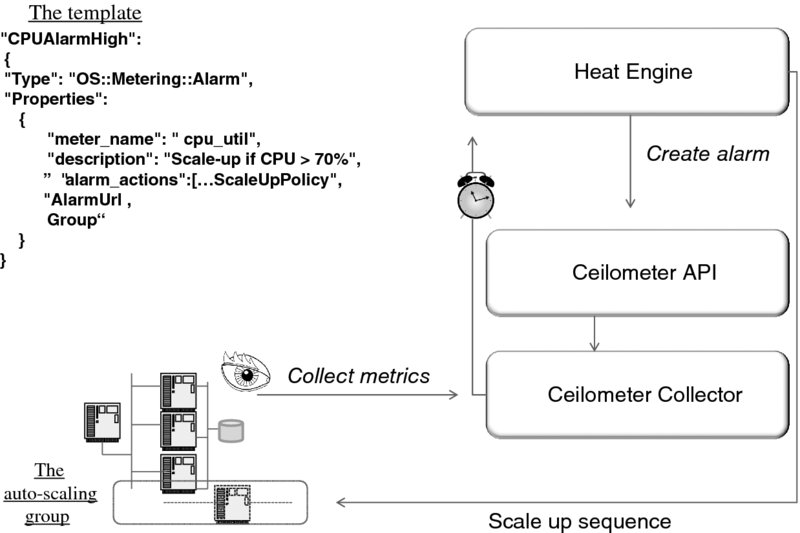

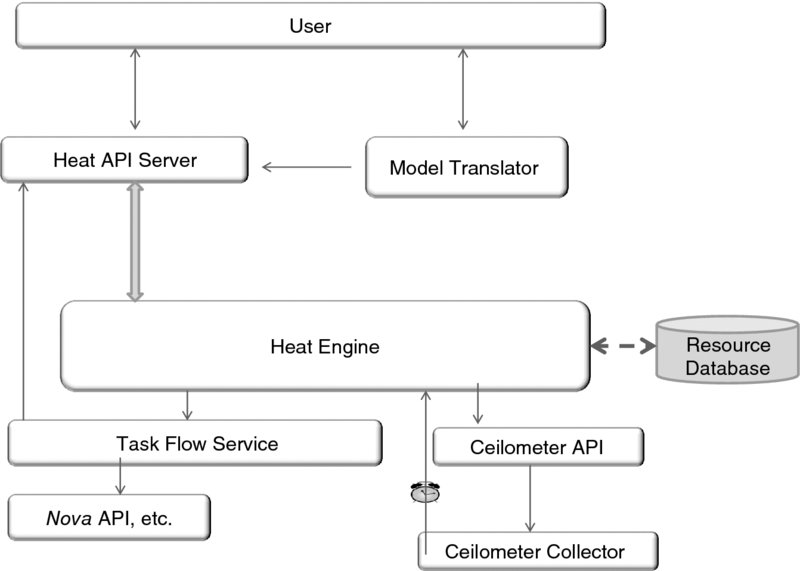

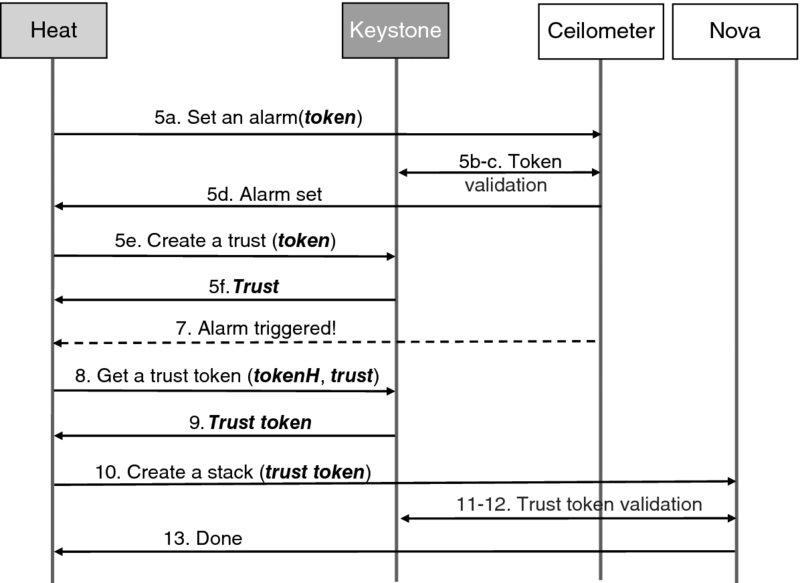

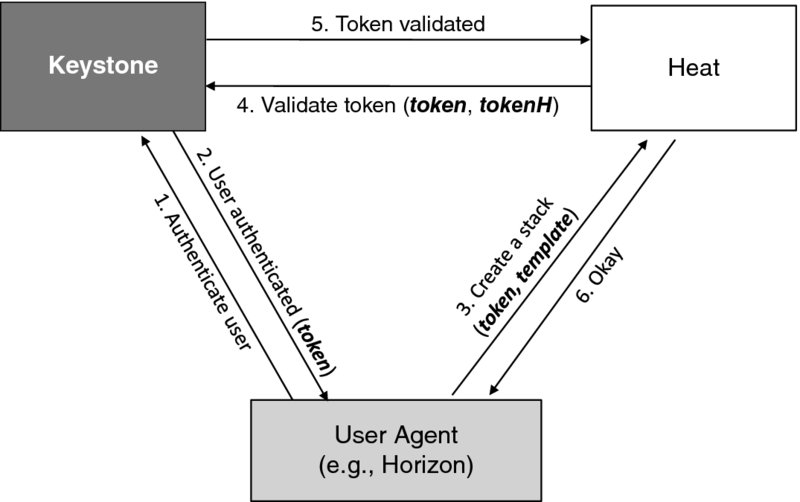

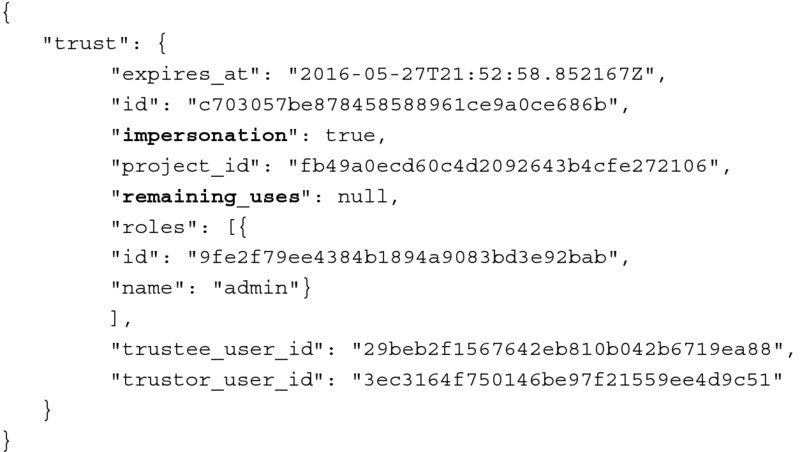

Last but not least, a resource describing the instance security group of the type “InstanceSecurityGroup” must be created. The typical use is enabling Secure Shell (SSH59)-based access to the front end, the load balancer, only.